Seeing the City Live: Bridging Edge Vehicle Perception and Cloud Digital Twins to Empower Smart Cities

Highlights

- Integration of real-time edge vehicle perception with a cloud-based digital twin enables accurate, live replication of dynamic urban traffic.

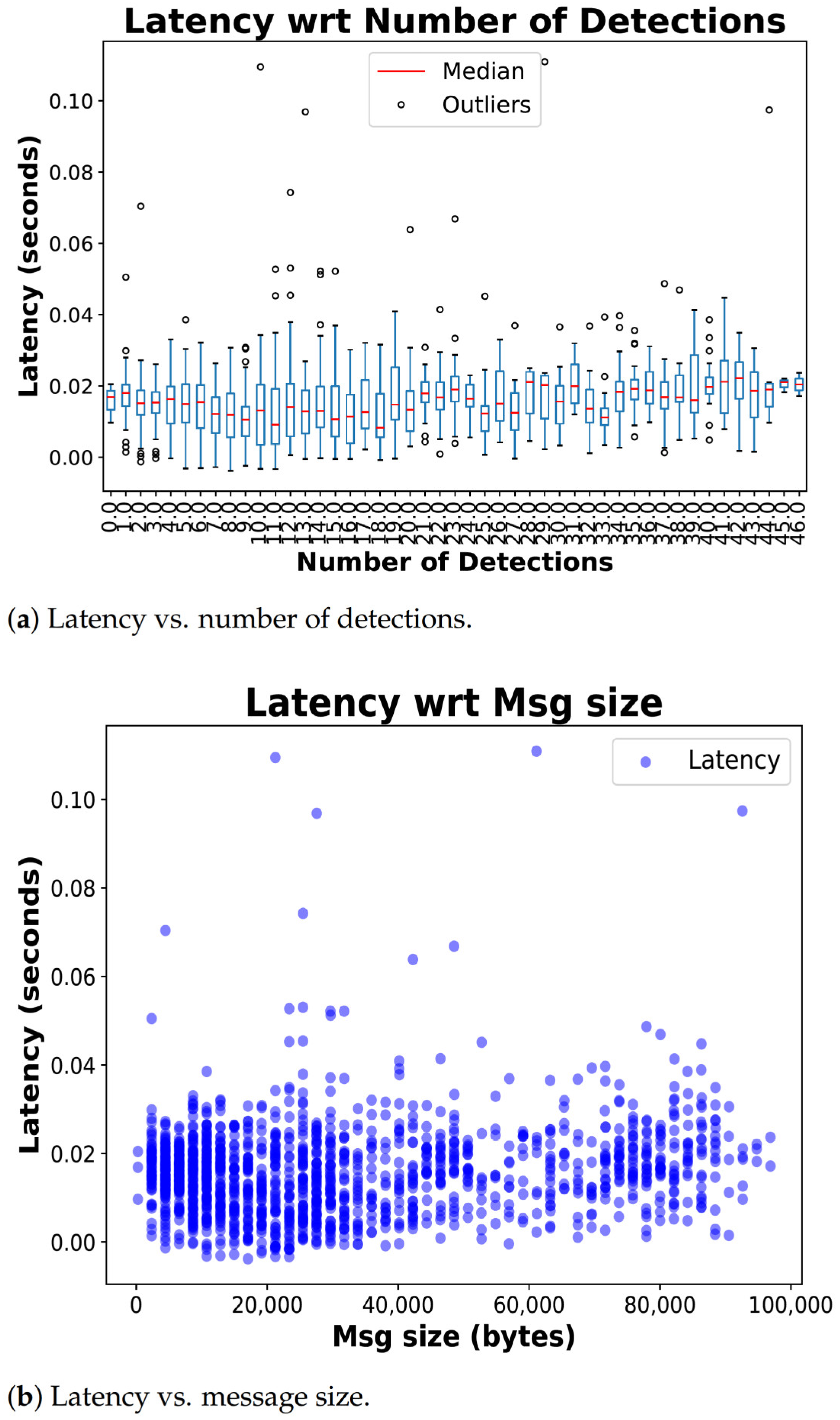

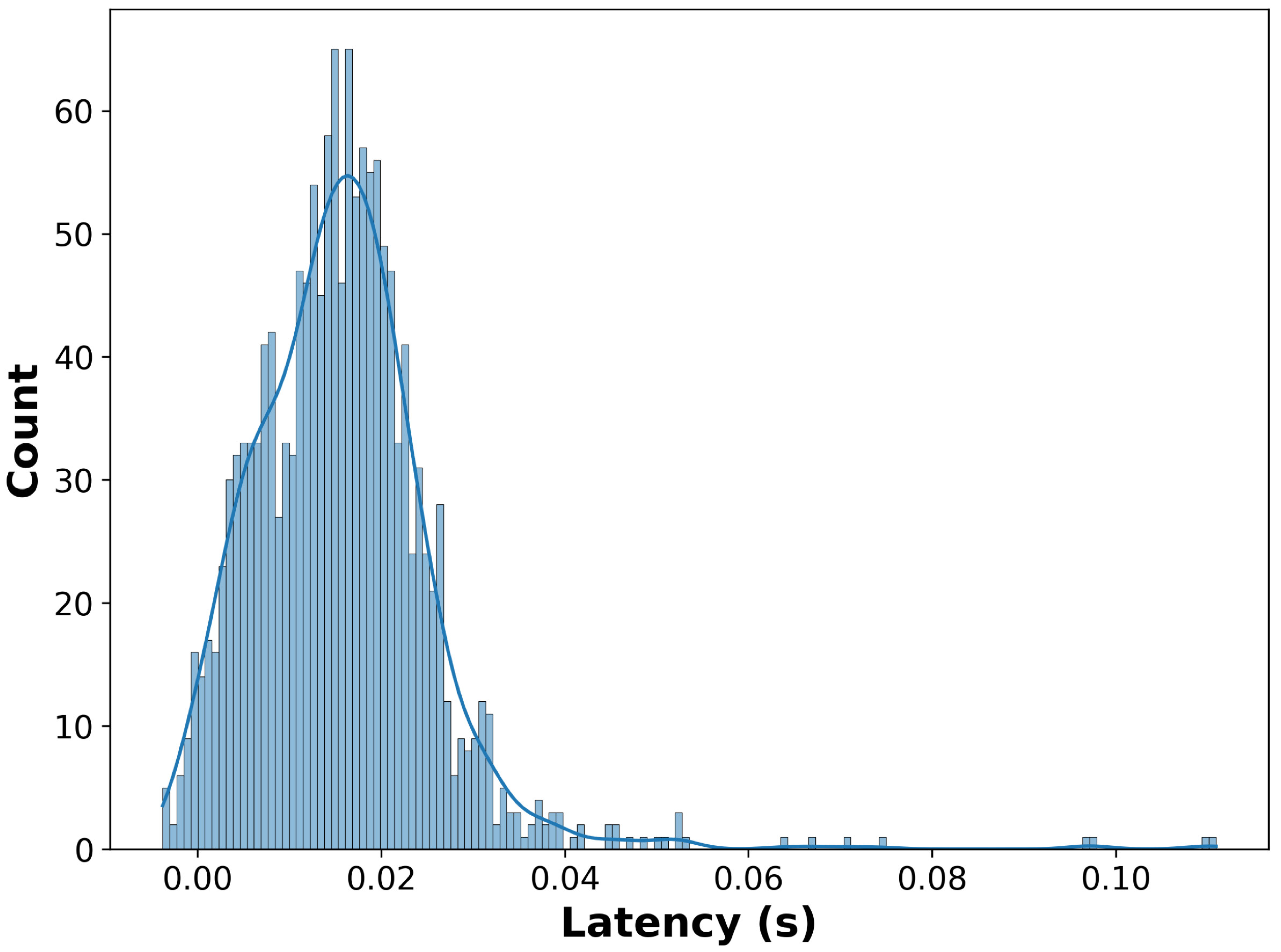

- Demonstration of a low-latency vehicle-to-cloud communication pipeline achieving minimum end-to-end delay for synchronized traffic monitoring.

- The proposed framework supports scalable, real-time intelligent transportation system (ITS) applications for smart city traffic visualization, predictive analytics, and cooperative mobility management.

- This end-to-end integration enhances traffic safety and efficiency by providing continuous, high-fidelity digital twin representations for proactive urban traffic management.

Abstract

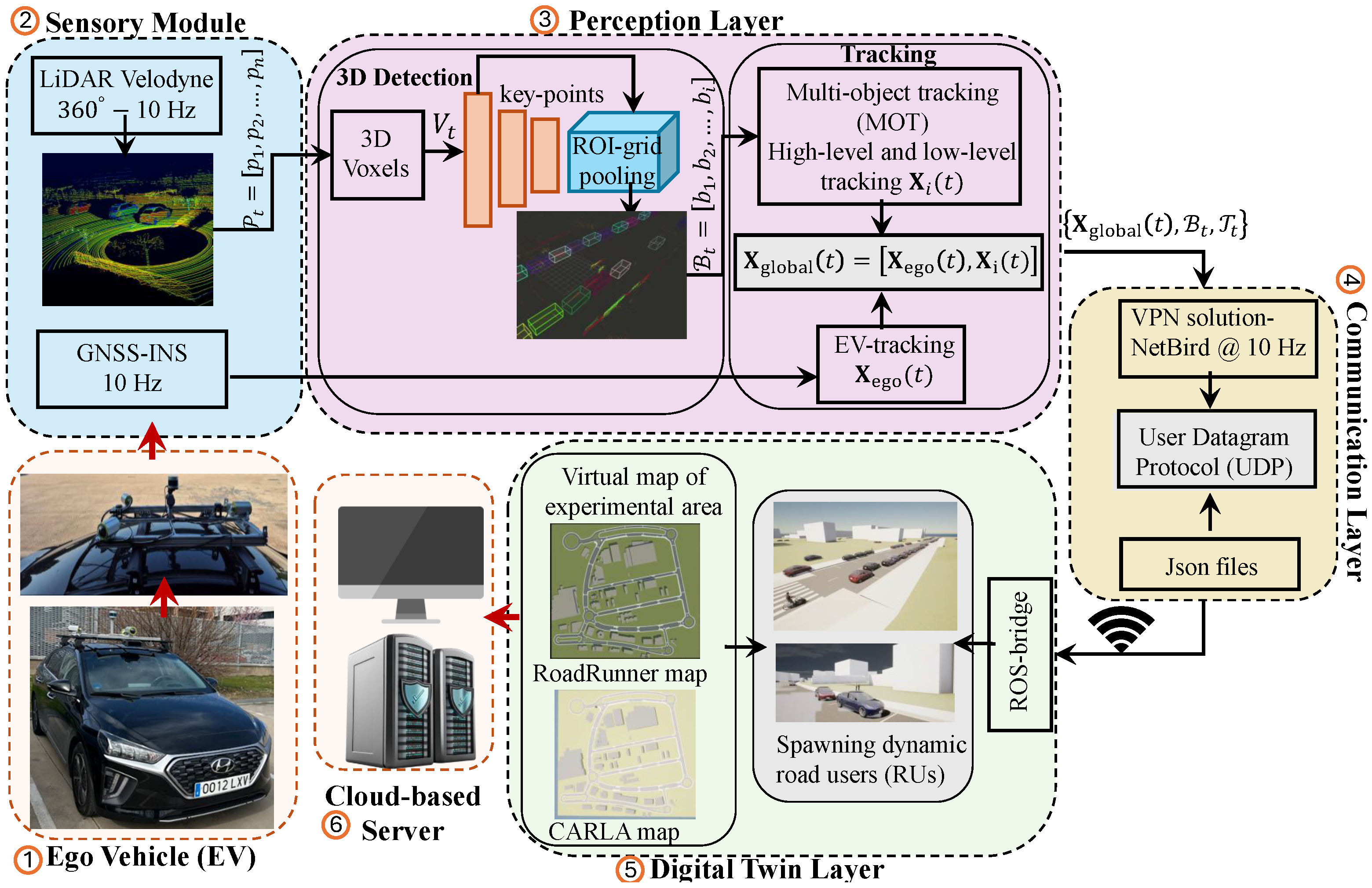

1. Introduction

- Design and deployment of a real-time multi-sensor perception and tracking system on an experimental EV, capable of detecting and tracking dynamic road users with high accuracy in complex urban environments.

- Development of a cloud-based, high-fidelity digital twin that replicates the real traffic environment in synchronization with real-world perception data, enabling city-scale situational awareness and safe virtual testing.

- Integration of edge-based perception with cloud-based simulation through a low-latency communication layer, enabling live projection of real-time dynamic transportation information into the digital twin for use in smart city traffic monitoring, incident management, and predictive control.

2. Related Work

2.1. Traffic Simulator and Digital Twins

2.2. Simulation Platforms for Transportation Digital Twins

2.3. Digital Twin Applications Using CARLA

2.4. Real-Time ITS Digital Twin Framework

3. Methodology

3.1. Real-Time Perception Layer

3.1.1. Stage 1: Velodyne LiDAR Sensor

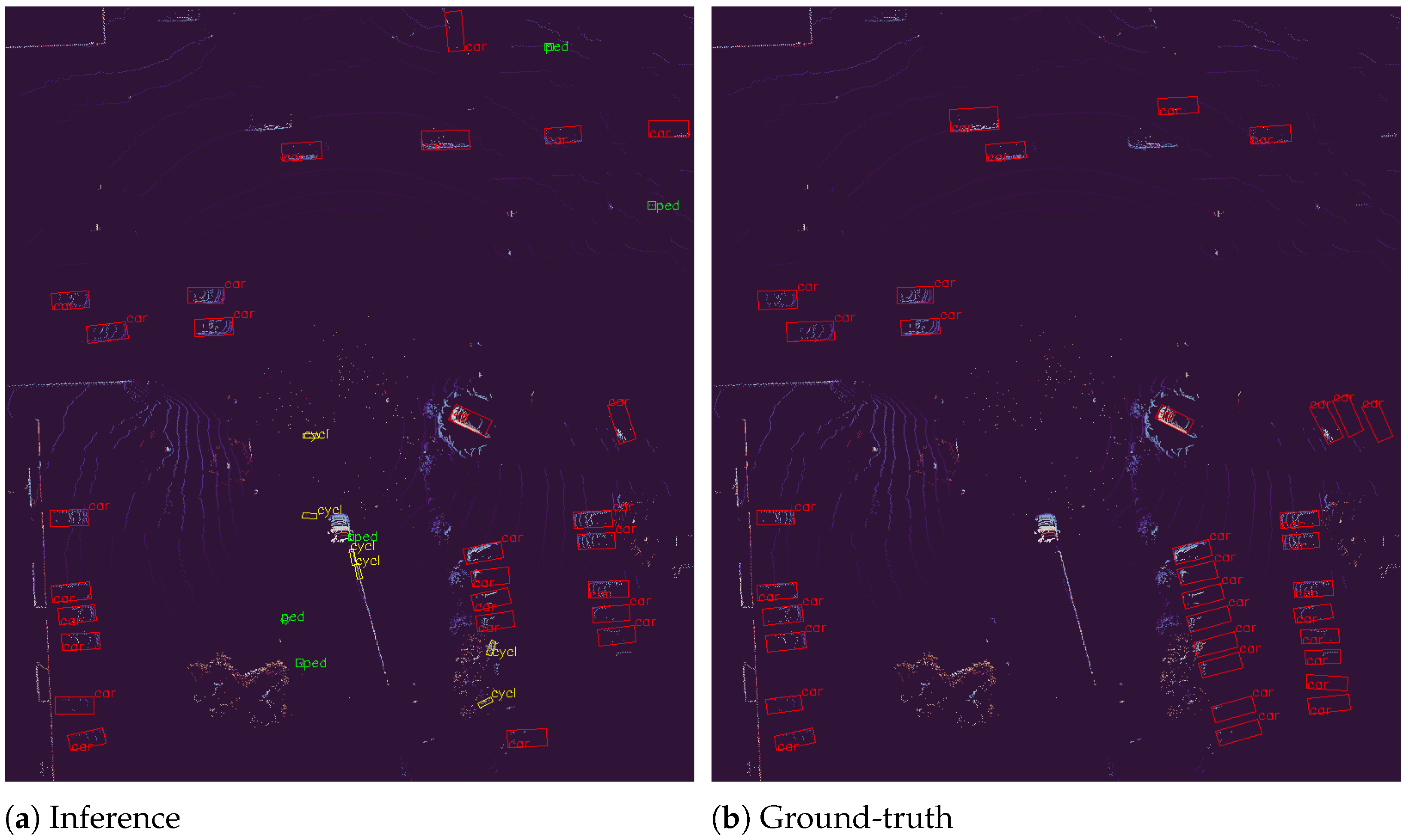

3.1.2. Stage 2: 3D Detection Model

3.1.3. Stage 3: Multi-Stage Tracking

- Ego Vehicle Tracking (Localization): Ego vehicle localization was performed using data from the GNSS-INS system installed on the UC3M vehicle. An Unscented Kalman filter (UKF) [7] was used to incorporate the vehicle motion equations and kinematic constraints for localization in global coordinates. This approach estimates the ego vehicle’s position precisely and smoothly, mitigating noise and transient errors in the raw satellite measurements. Accurate ego localization is critical for aligning new 3D detections with historical data.

  The ego vehicle’s state vector at time instant $t$ was estimated by the UKF and is defined as follows:

  $$\mathbf{x}_t = [x_t,\; y_t,\; z_t,\; \theta_t,\; v_{x,t},\; v_{y,t}]^\top$$

  where $(x_t, y_t, z_t)$ are the global positions in meters (east, north, height); $\theta_t$ is the vehicle’s global yaw (orientation) measured from the East axis (in radians); and $v_{x,t}$ and $v_{y,t}$ are velocities along the horizontal east-north plane of the GPS frame, respectively. In most driving conditions, $z_t$ remains close to zero because of constant elevation. However, retaining it in the state vector allows the tracking system to remain robust and generalizable.

  The process model assumes constant velocity and heading in the horizontal plane to predict future states at time instant $t+1$ as follows:

  $$
  \begin{aligned}
  x_{t+1} &= x_t + v_{x,t}\,\Delta t + w_x \\
  y_{t+1} &= y_t + v_{y,t}\,\Delta t + w_y \\
  z_{t+1} &= z_t + w_z \\
  \theta_{t+1} &= \theta_t + w_\theta \\
  v_{x,t+1} &= v_{x,t} + w_{v_x} \\
  v_{y,t+1} &= v_{y,t} + w_{v_y}
  \end{aligned}
  $$

  where $\Delta t$ is the time interval between updates, and $w_x, w_y, w_z, w_\theta, w_{v_x}, w_{v_y}$ are independent Gaussian noise terms modeling system uncertainty, i.e., $w_{(\cdot)} \sim \mathcal{N}(0, \sigma_{(\cdot)}^2)$.

  The sensitivity analysis of the UKF was performed following [37]. The process noise covariance matrix $Q$ and the measurement noise covariance matrix $R$ were configured using empirical noise characteristics derived from GPS and sensor data, which capture the true uncertainty in the measurements and process dynamics. This data-driven approach analyzes the statistical distribution of sensor errors and noise during operation, so that the covariance matrices reflect realistic conditions rather than arbitrary or theoretical values. For example, $R$ was set by examining the variance in GPS position and heading measurements, while $Q$ was designed to account for model uncertainties and expected variation in the dynamics. Such calibration ensures that the UKF maintains robust and consistent state estimation by appropriately balancing trust between predicted states and measured observations. This method provides a practical parameterization of the UKF.

- Multi-Object Tracking (MOT): This core component fuses real-time 3D object detections with ego pose filtering for consistent tracking of each object. The MOT framework is structured into two hierarchical stages: (i) a high-level association and management stage and (ii) a low-level state estimation stage for each dynamic object in the environment.

  High-Level MOT: The high-level MOT architecture maintains a tracker list $\mathcal{T} = \{\mathcal{T}_v, \mathcal{T}_o\}$, where $\mathcal{T}_v$ denotes the set of visible trackers and $\mathcal{T}_o$ is the set of occluded trackers. Associations between detections and the tracker list were performed using the Hungarian algorithm [38], with the L2 distance in position space as the association cost metric. The tracker for the i-th detected object is defined as a tuple:

  $$T_i = (\mathbf{s}_i,\; n^{\text{hit}}_i,\; n^{\text{miss}}_i,\; id_i)$$

  where $\mathbf{s}_i$ is the state vector of the detected object, $n^{\text{hit}}_i$ is the hit count indicating the number of successful associations, $n^{\text{miss}}_i$ is the miss count tracking consecutive frames without associations, and $id_i$ is a unique identifier.

  At each frame update, the tracker’s status update and removal decisions were computed according to $n^{\text{hit}}_i$, $n^{\text{miss}}_i$, and the predefined thresholds $\tau_{\text{hit}}$ and $\tau_{\text{miss}}$ as follows:

  $$
  T_i \in
  \begin{cases}
  \mathcal{T}_v, & n^{\text{hit}}_i > \tau_{\text{hit}} \text{ and } T_i \text{ was matched in recent frames} \\
  \text{removed}, & n^{\text{miss}}_i \ge \tau_{\text{miss}} \\
  \mathcal{T}_o, & \text{otherwise}
  \end{cases}
  $$

  A tracker is considered visible, i.e., belonging to $\mathcal{T}_v$, if its hit count consistently exceeds the threshold and it has been matched with detections in recent frames. Conversely, $T_i \in \mathcal{T}_o$ (occluded) if the tracker has not been matched in recent frames but its miss count remains below the removal threshold. The association process was carried out in two steps: first, 3D detections were matched to visible trackers $\mathcal{T}_v$, and then the remaining unmatched detections were compared with occluded trackers $\mathcal{T}_o$. Any detections still unmatched were used to initialize new trackers, which were initially placed in $\mathcal{T}_o$ (occluded) until their hit count surpassed the visible threshold. This multi-stage approach helps mitigate false positives and improves robustness against short-term occlusions.

  Low-Level MOT: The state vector for the i-th tracked object is defined as follows:

  $$\mathbf{s}_i = [\mathbf{p}_i^\top,\; \theta_i,\; \mathbf{d}_i^\top,\; \mathbf{v}_i^\top]^\top$$

  where $\mathbf{s}_i$ comprises the 3D position $\mathbf{p}_i = (x_i, y_i, z_i)$, orientation $\theta_i$, bounding box dimensions $\mathbf{d}_i = (l_i, w_i, h_i)$, and velocity components $\mathbf{v}_i$ of the detected object, expressed in the local coordinate frame of the onboard LiDAR sensor.

  The state vector corresponding to each tracker (regardless of its list) was provided as an input to the UKF. The UKF recursively estimates and updates $\mathbf{s}_i$ using the nonlinear process model:

  $$\mathbf{s}_{i,t+1} = f(\mathbf{s}_{i,t}, \mathbf{u}_t) + \mathbf{w}_t$$

  where $f(\cdot)$ denotes the system dynamics, $\mathbf{w}_t$ represents process noise, and $\mathbf{u}_t$ is the control input that encodes the change in the EV’s position. The UKF prediction step assumes that detected objects are static in the global frame, meaning that their positions do not change between frames. Instead, the relative positions of the detections are updated by incorporating the EV’s motion as a control input represented by $\mathbf{u}_t$ (as shown in Equation (13)). Since the EV state is derived from GPS parameters, integrating ego motion into the UKF ensures accurate temporal alignment of the tracked object states while mitigating drift caused by the movement of the EV itself. Additionally, the UKF smooths the estimates of object sizes over time, helping reduce noise introduced by raw sensor measurements.

  Since both detections and tracked states are referenced in the local LiDAR frame, the UKF state variables represent relative positions and motions, not absolute global coordinates. Details of the local-to-global coordinate transformation are provided in the following paragraph.

  Local-to-Global Coordinate Transformation: Once the detections have been filtered and tracked using the MOT framework, homogeneous transformations are applied to convert the local detections into the global coordinate frame. This process involves transforming the detections from the LiDAR frame to the GPS frame and subsequently to the global frame provided by the ego vehicle’s localization system. This sequence follows the chain rule for coordinate transformations as follows:

  $$\mathbf{T}^{\text{global}}_{\text{LiDAR}} = \mathbf{T}^{\text{global}}_{\text{GPS}}\;\mathbf{T}^{\text{GPS}}_{\text{LiDAR}}$$
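The constant-velocity, constant-heading prediction used for ego localization, and the data-driven choice of the measurement covariance, can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the state ordering `[x, y, z, yaw, v_x, v_y]`, the function names, and the idea of estimating a diagonal `R` from logged residuals are assumptions made for the example, and the sigma-point machinery of a full UKF is omitted.

```python
import numpy as np

# Assumed state layout for illustration: [x, y, z, yaw, v_x, v_y]
# (east, north, height in meters; yaw in radians; velocities in m/s).


def predict_state(state, dt):
    """Noise-free constant-velocity, constant-heading prediction over dt seconds."""
    x, y, z, yaw, vx, vy = state
    return np.array([x + vx * dt, y + vy * dt, z, yaw, vx, vy])


def empirical_measurement_covariance(residuals):
    """Build a diagonal R from per-channel sample variances.

    residuals: array of shape (n_samples, n_channels), e.g. logged errors in
    GPS position and heading (hypothetical calibration data).
    """
    residuals = np.asarray(residuals, dtype=float)
    return np.diag(residuals.var(axis=0, ddof=1))
```

In a UKF, `predict_state` would be applied to every sigma point, and the resulting spread, plus the process noise `Q`, would give the predicted covariance.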

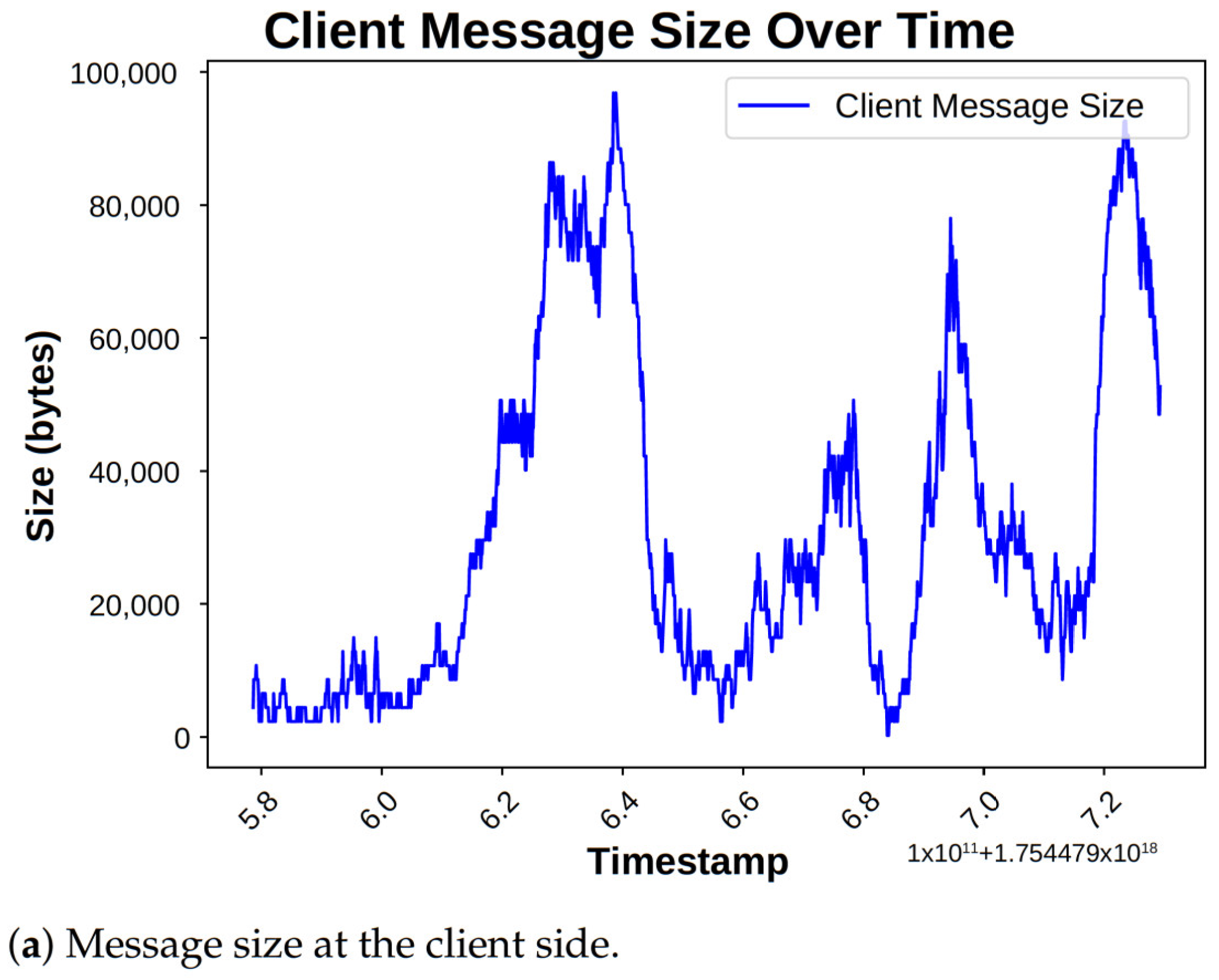

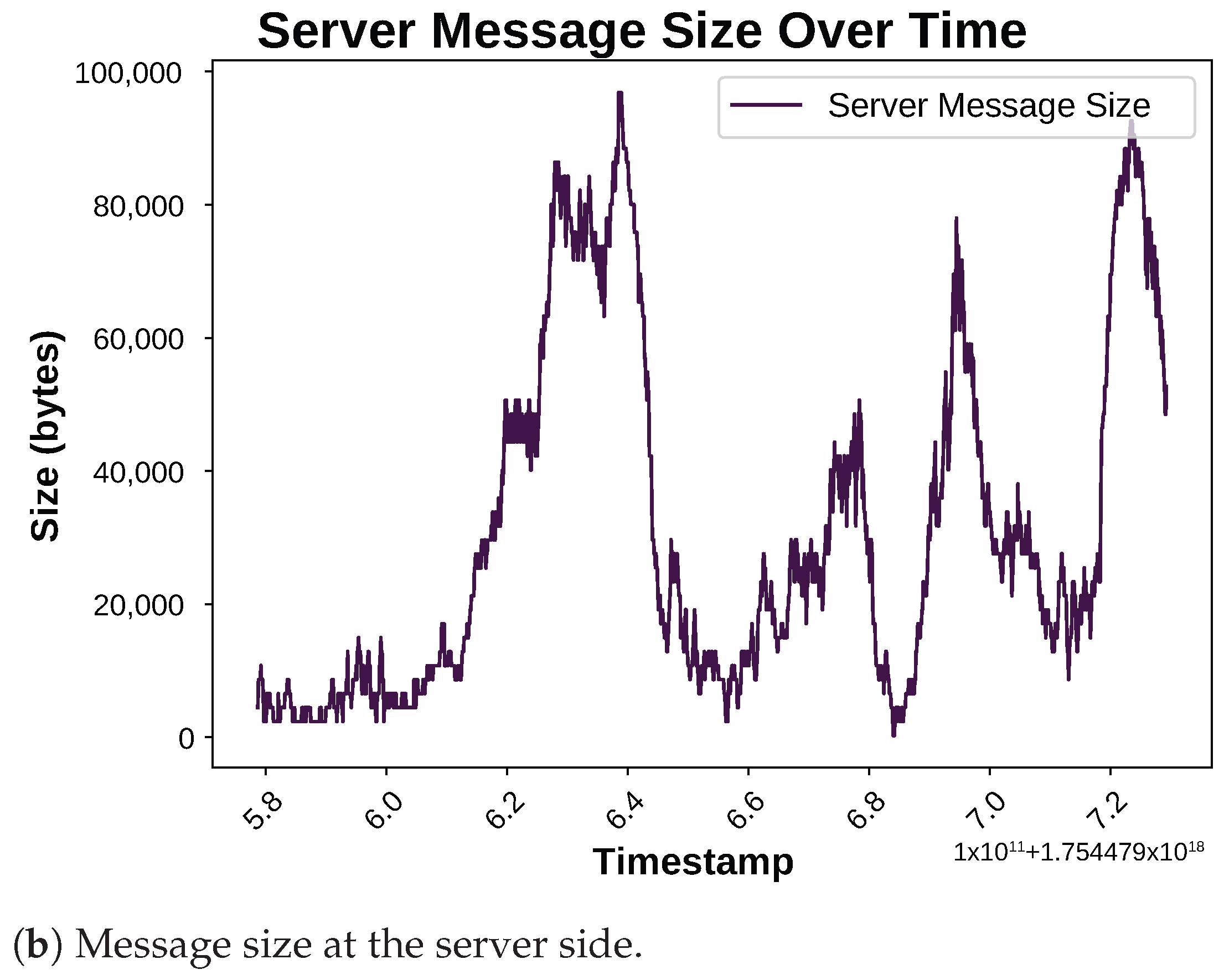
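The two-step association described for the high-level MOT stage (visible trackers first, then occluded trackers, then new tracks) can be sketched with SciPy's `linear_sum_assignment`, which solves the same assignment problem as the Hungarian algorithm. The gating distance `max_dist`, the function names, and the use of bare 3D positions as inputs are assumptions for this example; the source does not state whether a gate is applied.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def match(dets, tracks, max_dist):
    """Assignment on L2 distance; returns (pairs, unmatched detection indices)."""
    if len(dets) == 0 or len(tracks) == 0:
        return [], list(range(len(dets)))
    # cost[i, j] = Euclidean distance between detection i and tracker j
    cost = np.linalg.norm(dets[:, None, :] - tracks[None, :, :], axis=2)
    rows, cols = linear_sum_assignment(cost)
    # Assumed gate: discard assignments that are farther apart than max_dist
    pairs = [(int(r), int(c)) for r, c in zip(rows, cols) if cost[r, c] <= max_dist]
    matched = {r for r, _ in pairs}
    unmatched = [i for i in range(len(dets)) if i not in matched]
    return pairs, unmatched


def cascade_associate(dets, visible, occluded, max_dist=2.0):
    """Match detections to visible trackers, then leftovers to occluded ones."""
    dets = np.asarray(dets, dtype=float).reshape(-1, 3)
    visible = np.asarray(visible, dtype=float).reshape(-1, 3)
    occluded = np.asarray(occluded, dtype=float).reshape(-1, 3)

    vis_pairs, rest = match(dets, visible, max_dist)
    rest_idx = np.array(rest, dtype=int)
    occ_local, rest2 = match(dets[rest_idx], occluded, max_dist)
    # Map indices of the leftover subset back to the original detection indices
    occ_pairs = [(rest[r], c) for r, c in occ_local]
    new_tracks = [rest[i] for i in rest2]
    return vis_pairs, occ_pairs, new_tracks
```

Detections returned in `new_tracks` would start new trackers in the occluded list until their hit count passes the visible threshold, as described in the text.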

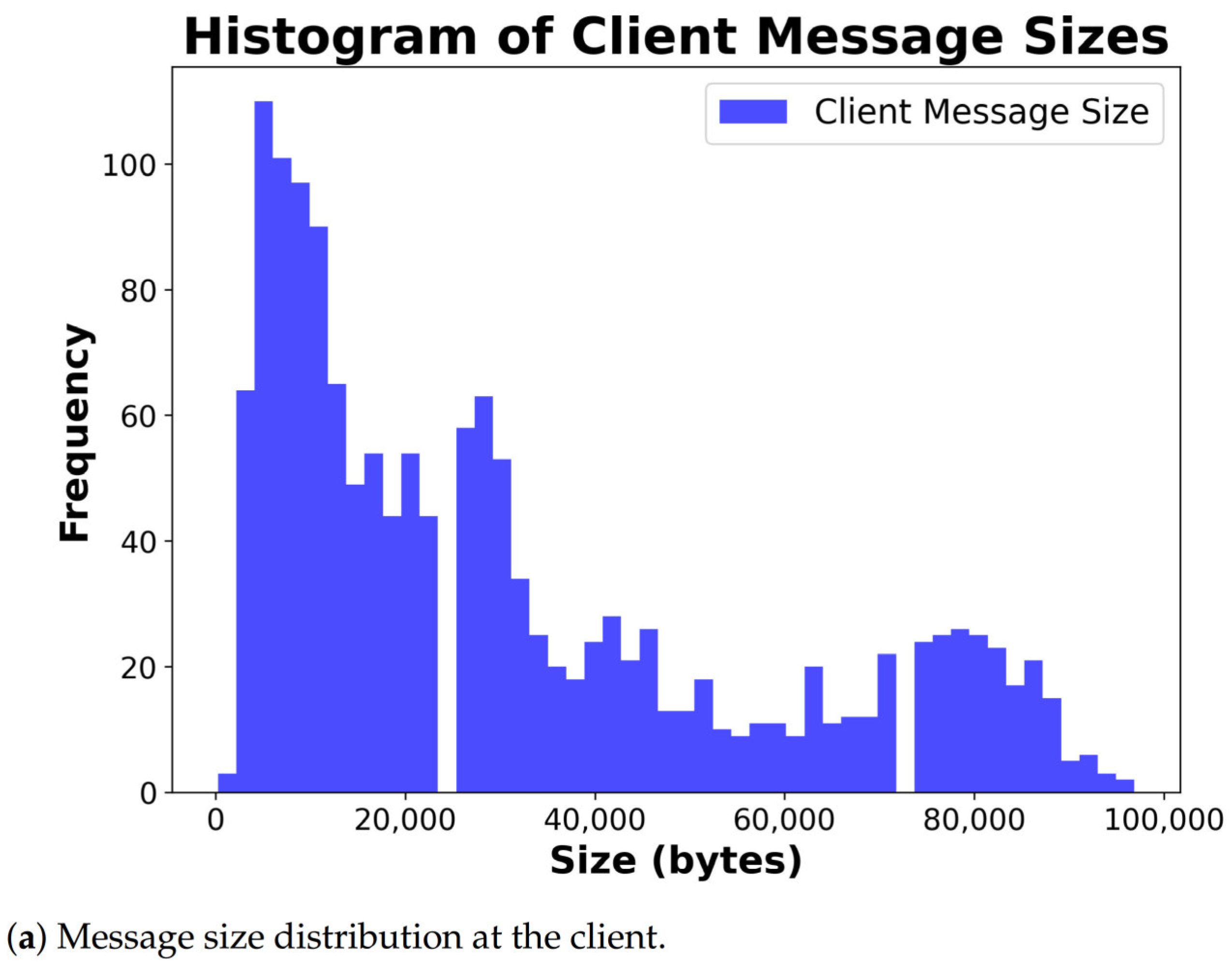

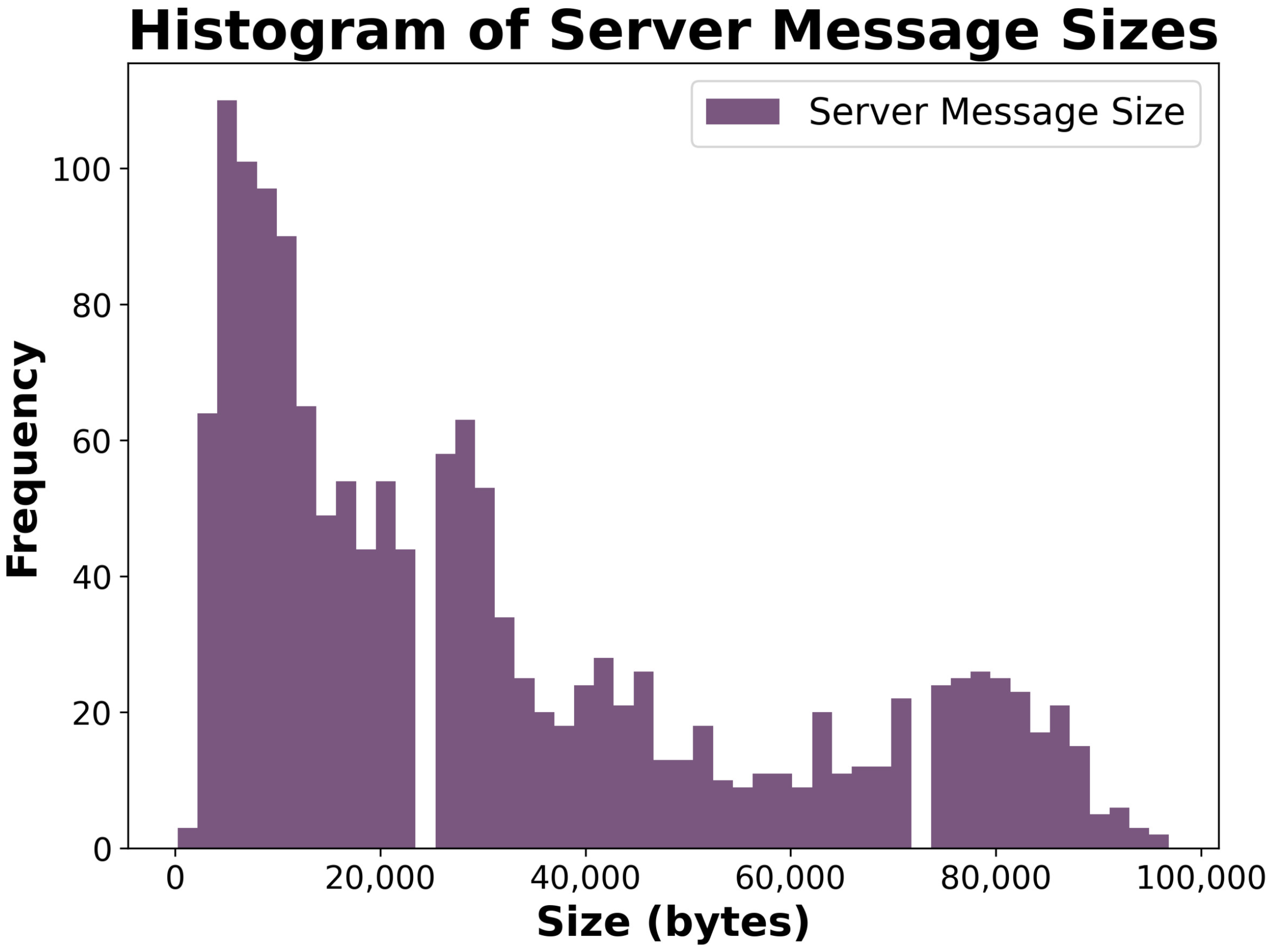
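The LiDAR-to-GPS-to-global chain can be illustrated with 4x4 homogeneous matrices. The sketch below assumes planar (yaw-only) rotations and hypothetical mounting and pose values; a real calibration would generally also include roll and pitch.

```python
import numpy as np


def pose_to_matrix(x, y, z, yaw):
    """4x4 homogeneous transform for a translation plus a rotation about the z-axis."""
    c, s = np.cos(yaw), np.sin(yaw)
    return np.array([
        [c, -s, 0.0, x],
        [s, c, 0.0, y],
        [0.0, 0.0, 1.0, z],
        [0.0, 0.0, 0.0, 1.0],
    ])


def lidar_to_global(points_lidar, T_gps_lidar, T_global_gps):
    """Map Nx3 points from the LiDAR frame to the global frame.

    T_gps_lidar: LiDAR pose expressed in the GPS frame (extrinsic calibration).
    T_global_gps: GPS pose expressed in the global frame (ego localization).
    """
    pts = np.asarray(points_lidar, dtype=float).reshape(-1, 3)
    homogeneous = np.hstack([pts, np.ones((len(pts), 1))])
    T_global_lidar = T_global_gps @ T_gps_lidar  # chain: LiDAR -> GPS -> global
    return (T_global_lidar @ homogeneous.T).T[:, :3]
```

For example, with the LiDAR mounted 1 m ahead of the GPS antenna and the vehicle at (100, 200) with a heading 90 degrees from the East axis, a detection 2 m in front of the LiDAR maps to roughly (100, 203, 0) in the global frame.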

3.2. Communication Layer

Security and Privacy Considerations

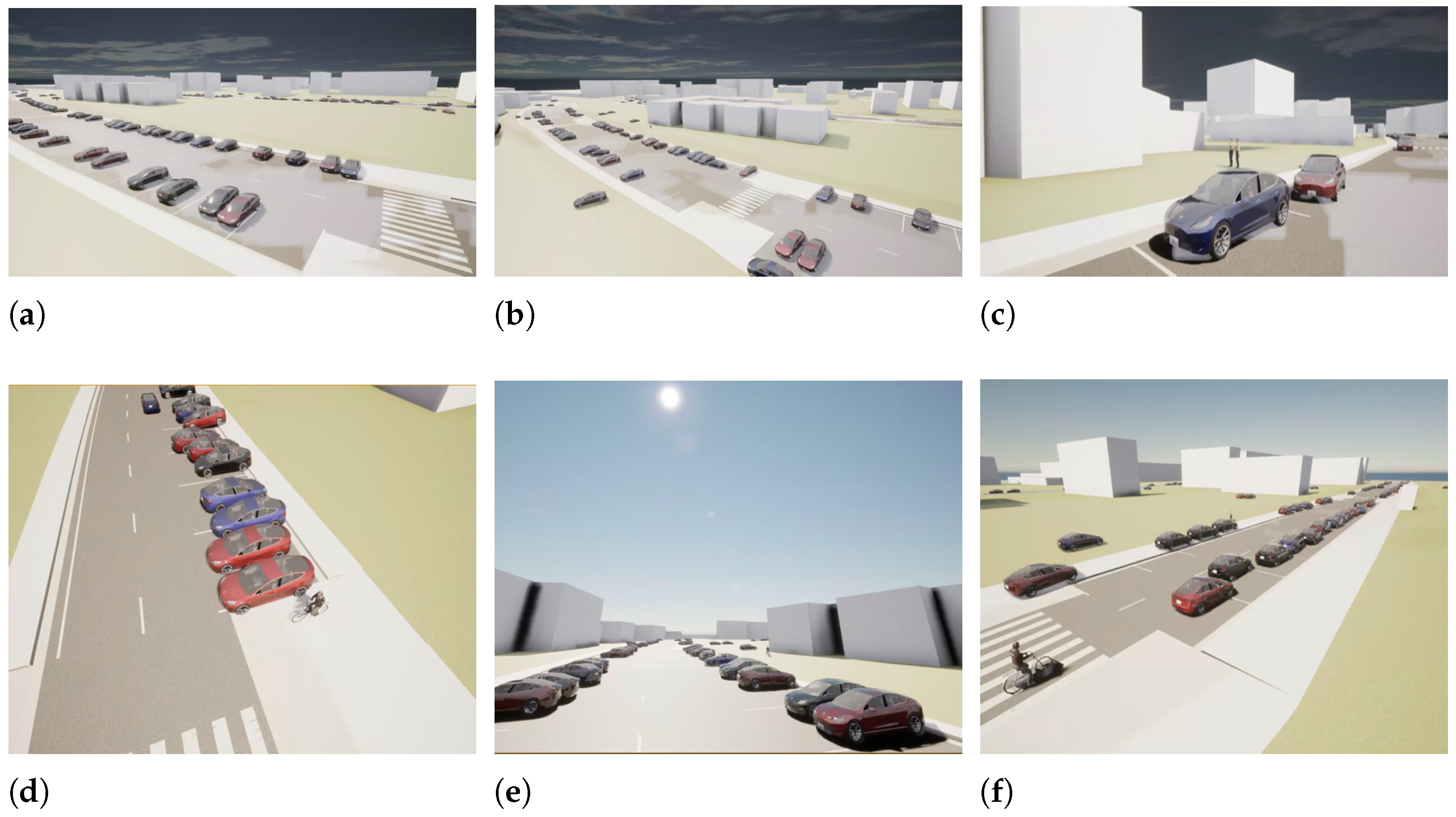

3.3. Digital Twin Layer

Algorithm 1. Spawning real-time detected objects into the digital twin of CARLA.
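The bookkeeping needed to keep twin actors in step with tracked objects (spawn on first sight, move on update, remove when stale) can be sketched as below. This is not a transcription of Algorithm 1: the `TwinSynchronizer` class, the `world` interface with `spawn`, `move`, and `destroy`, and the `max_missed` parameter are all hypothetical. With the CARLA Python API, these three operations would typically map to `World.try_spawn_actor`, `Actor.set_transform`, and `Actor.destroy`.

```python
from dataclasses import dataclass, field


@dataclass
class TwinSynchronizer:
    """Keeps one twin actor per track ID (illustrative, simulator-agnostic)."""

    world: object                      # must offer spawn(kind, pose), move(handle, pose), destroy(handle)
    max_missed: int = 5                # assumed number of frames an actor survives without updates
    actors: dict = field(default_factory=dict)   # track_id -> actor handle
    missed: dict = field(default_factory=dict)   # track_id -> frames since last update

    def update(self, tracks):
        """tracks: dict mapping track_id -> (kind, pose) in the twin's map frame."""
        for track_id, (kind, pose) in tracks.items():
            if track_id in self.actors:
                self.world.move(self.actors[track_id], pose)
            else:
                handle = self.world.spawn(kind, pose)
                if handle is None:     # spawning can fail, e.g. on a collision
                    continue
                self.actors[track_id] = handle
            self.missed[track_id] = 0

        # Age actors whose track was absent this frame and remove stale ones
        for track_id in list(self.actors):
            if track_id not in tracks:
                self.missed[track_id] += 1
                if self.missed[track_id] > self.max_missed:
                    self.world.destroy(self.actors.pop(track_id))
                    del self.missed[track_id]
```

Keeping a short grace period before destroying an actor avoids flicker in the twin when a track is briefly lost to occlusion.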

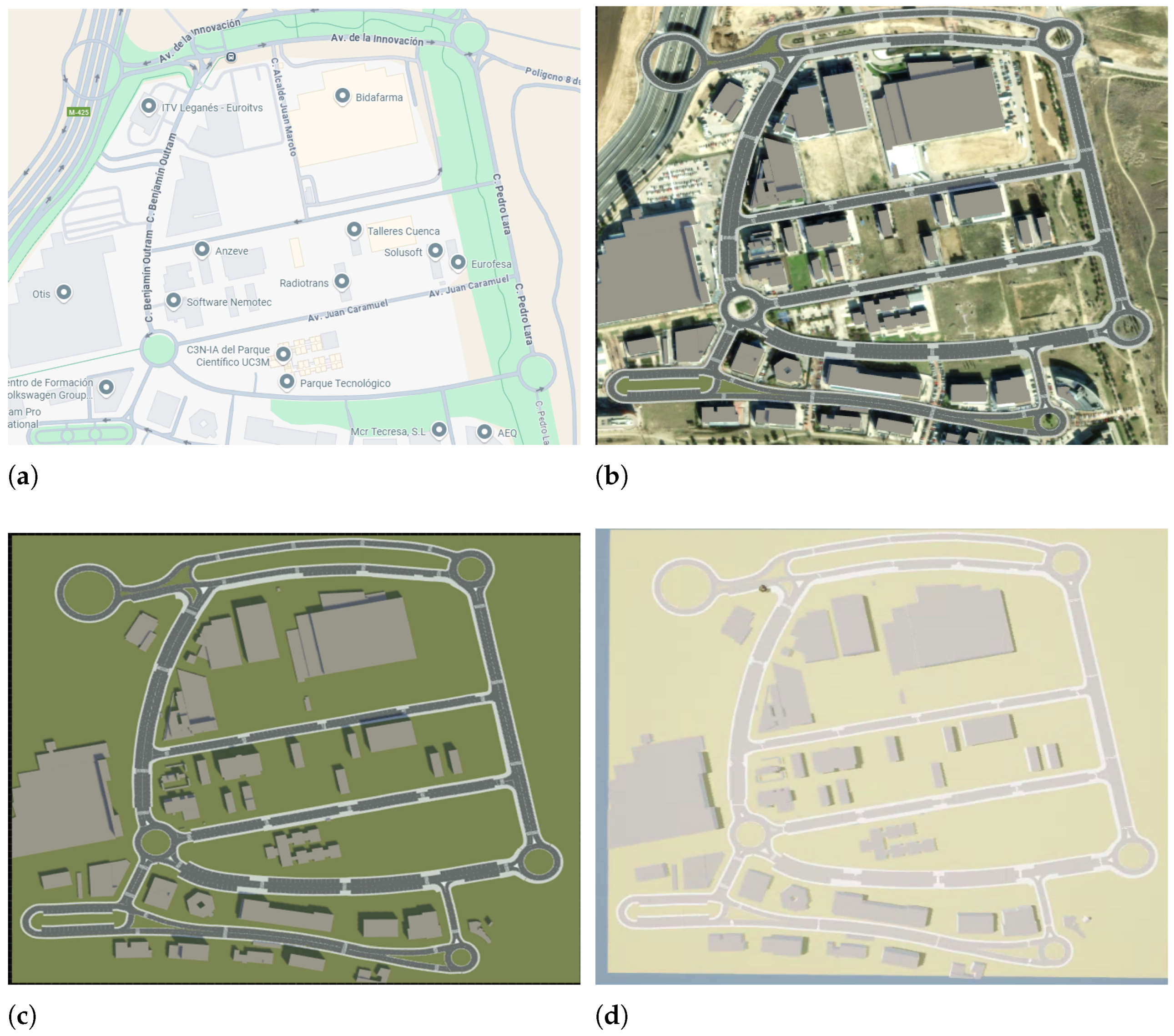

3.3.1. Map Creation from OpenStreetMap

3.3.2. Road and Traffic Infrastructure Modeling

3.3.3. Real-Time Object Spawning and Management

4. Experimental Results and Discussion

4.1. Experimental Area

4.2. Experimental Vehicle Hardware

4.2.1. Perception Layer

4.2.2. Communication Layer

4.3. Digital Twin Layer

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Elassy, M.; Al-Hattab, M.; Takruri, M.; Badawi, S. Intelligent transportation systems for sustainable smart cities. Transp. Eng. 2024, 16, 100252.

2. Jog, Y.; Singhal, T.K.; Barot, F.; Cardoza, M.; Dave, D. Need & gap analysis of converting a city into smart city. Int. J. Smart Home 2017, 11, 9–26.

3. Gupta, M.; Miglani, H.; Deo, P.; Barhatte, A. Real-time traffic control and monitoring. E-Prime Electr. Eng. Electron. Energy 2023, 5, 100211.

4. Bashir, A.; Mohsin, M.A.; Jazib, M.; Iqbal, H. Mindtwin AI: Multiphysics informed digital-twin for fault localization in induction motor using AI. In Proceedings of the 2023 International Conference on Big Data, Knowledge and Control Systems Engineering (BdKCSE), Sofia, Bulgaria, 2–3 November 2023; pp. 1–8.

5. Guo, Y.; Zou, K.; Chen, S.; Yuan, F.; Yu, F. 3D digital twin of intelligent transportation system based on road-side sensing. In Proceedings of the Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2021; Volume 2083, p. 032022.

6. Alemaw, A.S.; Slavic, G.; Iqbal, H.; Marcenaro, L.; Gomez, D.M.; Regazzoni, C. A data-driven approach for the localization of interacting agents via a multi-modal dynamic bayesian network framework. In Proceedings of the 2022 18th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Madrid, Spain, 29 November–2 December 2022; pp. 1–8.

7. Iqbal, H.; Sadia, H.; Al-Kaff, A.; Garcia, F. Novelty Detection in Autonomous Driving: A Generative Multi-Modal Sensor Fusion Approach. IEEE Open J. Intell. Transp. Syst. 2025, 6, 799–812.

8. Wolniak, R.; Turoń, K. Between Smart Cities Infrastructure and Intention: Mapping the Relationship Between Urban Barriers and Bike-Sharing Usage. Smart Cities 2025, 8, 124.

9. Fong, B.; Situ, L.; Fong, A.C. Smart technologies and vehicle-to-X (V2X) infrastructures for smart mobility cities. In Smart Cities: Foundations, Principles, and Applications; Wiley: Hoboken, NJ, USA, 2017; pp. 181–208.

10. Sadia, H.; Iqbal, H.; Hussain, S.F.; Saeed, N. Signal detection in intelligent reflecting surface-assisted NOMA network using LSTM model: A ML approach. IEEE Open J. Commun. Soc. 2024, 6, 29–38.

11. Sadia, H.; Iqbal, H.; Ahmed, R.A. MIMO-NOMA with OSTBC for B5G cellular networks with enhanced quality of service. In Proceedings of the 2023 10th International Conference on Wireless Networks and Mobile Communications (WINCOM), Istanbul, Turkiye, 26–28 October 2023; pp. 1–6.

12. Kotusevski, G.; Hawick, K.A. A review of traffic simulation software. Res. Lett. Inf. Math. Sci. 2009, 13, 35–54.

13. Wang, Z.; Gupta, R.; Han, K.; Wang, H.; Ganlath, A.; Ammar, N.; Tiwari, P. Mobility digital twin: Concept, architecture, case study, and future challenges. IEEE Internet Things J. 2022, 9, 17452–17467.

14. Rezaei, Z.; Vahidnia, M.H.; Aghamohammadi, H.; Azizi, Z.; Behzadi, S. Digital twins and 3D information modeling in a smart city for traffic controlling: A review. J. Geogr. Cart. 2023, 6, 1865.

15. Shibuya, K. Synchronizing Everything to the Digitized World. In The Rise of Artificial Intelligence and Big Data in Pandemic Society: Crises, Risk and Sacrifice in a New World Order; Springer: Singapore, 2022; pp. 159–174.

16. Kusari, A.; Li, P.; Yang, H.; Punshi, N.; Rasulis, M.; Bogard, S.; LeBlanc, D.J. Enhancing SUMO simulator for simulation based testing and validation of autonomous vehicles. In Proceedings of the 2022 IEEE Intelligent Vehicles Symposium (IV), Aachen, Germany, 4–9 June 2022; pp. 829–835.

17. Samuel, L.; Shibil, M.; Nasser, M.; Shabir, N.; Davis, N. Sustainable planning of urban transportation using PTV VISSIM. In Proceedings of the SECON’21: Structural Engineering and Construction Management; Springer: Cham, Switzerland, 2022; pp. 889–904.

18. Casas, J.; Ferrer, J.L.; Garcia, D.; Perarnau, J.; Torday, A. Traffic simulation with aimsun. In Fundamentals of Traffic Simulation; Springer: New York, NY, USA, 2010; pp. 173–232.

19. Axhausen, K.W.; Horni, A.; Nagel, K. The Multi-Agent Transport Simulation MATSim; Ubiquity Press: London, UK, 2016.

20. Kim, S.; Suh, W.; Kim, J. Traffic simulation software: Traffic flow characteristics in CORSIM. In Proceedings of the 2014 International Conference on Information Science & Applications (ICISA), Seoul, Republic of Korea, 6–9 May 2014; pp. 1–3.

21. Jiménez, D.; Muñoz, F.; Arias, S.; Hincapie, J. Software for calibration of transmodeler traffic microsimulation models. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), Rio de Janeiro, Brazil, 1–4 November 2016; pp. 1317–1323.

22. Grimm, D.; Schindewolf, M.; Kraus, D.; Sax, E. Co-simulate no more: The CARLA V2X Sensor. In Proceedings of the 2024 IEEE Intelligent Vehicles Symposium (IV), Jeju Island, Republic of Korea, 2–5 June 2024; pp. 2429–2436.

23. Stubenvoll, C.; Tepsa, T.; Kokko, T.; Hannula, P.; Väätäjä, H. CARLA-based digital twin via ROS for hybrid mobile robot testing. Scand. Simul. Soc. 2025, 378–384.

24. Zhou, Z.; Lai, C.C.; Han, B.; Hsu, C.H.; Chen, S.; Li, B. CARLA-Twin: A Large-Scale Digital Twin Platform for Advanced Networking Research. In Proceedings of the IEEE INFOCOM 2025—IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Rio de Janeiro, Brazil, 1–4 November 2016.

25. Isoda, T.; Miyoshi, T.; Yamazaki, T. Digital twin platform for road traffic using carla simulator. In Proceedings of the 2023 IEEE 13th International Conference on Consumer Electronics-Berlin (ICCE-Berlin), Berlin, Germany, 3–5 September 2023; pp. 47–50.

26. Park, H.; Easwaran, A.; Andalam, S. TiLA: Twin-in-the-loop architecture for cyber-physical production systems. In Proceedings of the 2019 IEEE 37th International Conference on Computer Design (ICCD), Abu Dhabi, United Arab Emirates, 17–20 November 2019; pp. 82–90.

27. Ding, Y.; Zou, J.; Fan, Y.; Wang, S.; Liao, Q. A Digital Twin-based Testing and Data Collection System for Autonomous Driving in Extreme Traffic Scenarios. In Proceedings of the 2022 6th International Conference on Video and Image Processing, Shanghai, China, 23–26 December 2022; pp. 101–109.

28. Zhang, H.; Yue, X.; Tian, K.; Li, S.; Wu, K.; Li, Z.; Lord, D.; Zhou, Y. Virtual roads, smarter safety: A digital twin framework for mixed autonomous traffic safety analysis. arXiv 2025, arXiv:2504.17968.

29. Xu, H.; Berres, A.; Yoginath, S.B.; Sorensen, H.; Nugent, P.J.; Severino, J.; Tennille, S.A.; Moore, A.; Jones, W.; Sanyal, J. Smart mobility in the cloud: Enabling real-time situational awareness and cyber-physical control through a digital twin for traffic. IEEE Trans. Intell. Transp. Syst. 2023, 24, 3145–3156.

30. Rose, A.; Nisce, I.; Gonzalez, A.; Davis, M.; Uribe, B.; Carranza, J.; Flores, J.; Jia, X.; Li, B.; Jiang, X. A cloud-based real-time traffic monitoring system with lidar-based vehicle detection. In Proceedings of the 2023 IEEE Green Energy and Smart Systems Conference (IGESSC), Long Beach, CA, USA, 13–14 November 2023; pp. 1–6.

31. Hu, C.; Fan, W.; Zeng, E.; Hang, Z.; Wang, F.; Qi, L.; Bhuiyan, M.Z.A. Digital twin-assisted real-time traffic data prediction method for 5G-enabled internet of vehicles. IEEE Trans. Ind. Inform. 2021, 18, 2811–2819.

32. Kušić, K.; Schumann, R.; Ivanjko, E. A digital twin in transportation: Real-time synergy of traffic data streams and simulation for virtualizing motorway dynamics. Adv. Eng. Inform. 2023, 55, 101858.

33. Kaytaz, U.; Ahmadian, S.; Sivrikaya, F.; Albayrak, S. Graph neural network for digital twin-enabled intelligent transportation system reliability. In Proceedings of the 2023 IEEE International Conference on Omni-layer Intelligent Systems (COINS), Berlin, Germany, 23–25 July 2023; pp. 1–7.

34. Teofilo, A.; Sun, Q.C.; Amati, M. SDT4Solar: A Spatial Digital Twin Framework for Scalable Rooftop PV Planning in Urban Environments. Smart Cities 2025, 8, 128.

35. Kourtidou, K.; Frangopoulos, Y.; Salepaki, A.; Kourkouridis, D. Digital Inequality and Smart Inclusion: A Socio-Spatial Perspective from the Region of Xanthi, Greece. Smart Cities 2025, 8, 123.

36. Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. Pv-rcnn: Point-voxel feature set abstraction for 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10529–10538.

37. Rhudy, M.; Gu, Y.; Gross, J.; Napolitano, M. Sensitivity analysis of EKF and UKF in GPS/INS sensor fusion. In Proceedings of the AIAA Guidance, Navigation, and Control Conference, Portland, OR, USA, 8–11 August 2011; p. 6491.

38. Kumar, H.; Saxena, V. Optimization and Prioritization of Test Cases through the Hungarian Algorithm. J. Adv. Math. Comput. Sci. 2025, 40, 61–72.

39. Kjorveziroski, V.; Bernad, C.; Gilly, K.; Filiposka, S. Full-mesh VPN performance evaluation for a secure edge-cloud continuum. Softw. Pract. Exp. 2024, 54, 1543–1564.

40. Xiao, P.; Shao, Z.; Hao, S.; Zhang, Z.; Chai, X.; Jiao, J.; Li, Z.; Wu, J.; Sun, K.; Jiang, K.; et al. Pandaset: Advanced sensor suite dataset for autonomous driving. In Proceedings of the 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 3095–3101.

41. Shi, S.; Wang, Z.; Shi, J.; Wang, X.; Li, H. From points to parts: 3d object detection from point cloud with part-aware and part-aggregation network. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2647–2664.

42. Yan, Y.; Mao, Y.; Li, B. Second: Sparsely embedded convolutional detection. Sensors 2018, 18, 3337.

43. Shi, S.; Wang, X.; Li, H. Pointrcnn: 3d object proposal generation and detection from point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 770–779.

44. Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. Pointpillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 12697–12705.

45. Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A convnet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11976–11986.

46. Liu, X.; Xue, N.; Wu, T. Learning auxiliary monocular contexts helps monocular 3d object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; Volume 36, pp. 1810–1818. Available online: https://ojs.aaai.org/index.php/AAAI/article/view/20074 (accessed on 18 November 2025).

47. Chen, W.; Zhao, J.; Zhao, W.L.; Wu, S.Y. Shape-aware monocular 3D object detection. IEEE Trans. Intell. Transp. Syst. 2023, 24, 6416–6424.

48. Lu, Y.; Ma, X.; Yang, L.; Zhang, T.; Liu, Y.; Chu, Q.; Yan, J.; Ouyang, W. Geometry uncertainty projection network for monocular 3d object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Nashville, TN, USA, 19–25 June 2021; pp. 3111–3121.

49. Chen, H.; Huang, Y.; Tian, W.; Gao, Z.; Xiong, L. Monorun: Monocular 3d object detection by reconstruction and uncertainty propagation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 10379–10388.

50. Liu, C.; Gu, S.; Van Gool, L.; Timofte, R. Deep line encoding for monocular 3d object detection and depth prediction. In Proceedings of the 32nd British Machine Vision Conference (BMVC 2021); BMVA Press: Glasgow, UK, 2021; p. 354.

51. Reading, C.; Harakeh, A.; Chae, J.; Waslander, S.L. Categorical depth distribution network for monocular 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 8555–8564.

52. Liu, Y.; Yixuan, Y.; Liu, M. Ground-aware monocular 3d object detection for autonomous driving. IEEE Robot. Autom. Lett. 2021, 6, 919–926.

53. Zhang, Y.; Lu, J.; Zhou, J. Objects are different: Flexible monocular 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 3289–3298.

54. Ma, X.; Zhang, Y.; Xu, D.; Zhou, D.; Yi, S.; Li, H.; Ouyang, W. Delving into localization errors for monocular 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 4721–4730.

55. Wang, T.; Xinge, Z.; Pang, J.; Lin, D. Probabilistic and geometric depth: Detecting objects in perspective. In Proceedings of the Conference on Robot Learning, PMLR. 2022, pp. 1475–1485. Available online: https://proceedings.mlr.press/v164/wang22i.html (accessed on 18 November 2025).

56. Pitropov, M.; Garcia, D.E.; Rebello, J.; Smart, M.; Wang, C.; Czarnecki, K.; Waslander, S. Canadian adverse driving conditions dataset. Int. J. Robot. Res. 2021, 40, 681–690.

| Software | Simulation Type | Key Features | Ease of Use | Integration Capabilities | Visualization Quality |
|---|---|---|---|---|---|
| SUMO [16] | Microscopic | Open-source, traffic flow modeling, supports large-scale networks | Moderate | Integration with CARLA and GIS tools | Moderate |
| PTV Vissim [17] | Microscopic | Multimodal traffic simulation, pedestrian modeling | Easy | High compatibility with ITS applications | High |
| Aimsun [18] | Microscopic and Mesoscopic | Real-time simulation, urban and regional network modeling | Moderate | Works with ITS and vehicle guidance systems | High |
| MATSim [19] | Agent-based and Macroscopic | Large-scale transport demand modeling (e.g., Berlin, Zurich) | Moderate | GIS-based map imports | Moderate |
| CORSIM [20] | Microscopic | Freeway and urban street simulation, intersection control | Easy | Limited integration | Moderate |
| TransModeler [21] | Microscopic and Mesoscopic | Multimodal simulation, congestion analysis | Moderate | ITS and traffic management systems | High |
| CARLA [22] | Microscopic | Autonomous driving focused, realistic 3D environments, sensor integration | Moderate | Co-simulation with SUMO and Unity | Very High |

| Aspect | Proposed Framework |
|---|---|
| Edge Processing | Real-time, onboard 3D detection and tracking |
| Communication | Low-latency UDP vehicle-to-cloud pipeline showing minimum delays |
| Digital Twin Synchronization | Continuous, precise, live synchronization using real perception data |
| Integration | Unified, extensible pipeline from edge-to-cloud |
| Smart City Impact | Designed for real-time traffic monitoring, predictive analytics, and smart city ITS deployments |

| Models | Car (Easy) | Car (Mod.) | Car (Hard) | Pedestrian (Easy) | Pedestrian (Mod.) | Pedestrian (Hard) | Cyclist (Easy) | Cyclist (Mod.) | Cyclist (Hard) |
|---|---|---|---|---|---|---|---|---|---|
| Part-A² [41] | 87.5 | 78.5 | 73.1 | 53.1 | 43.4 | 40.0 | 79.2 | 63.5 | 57.0 |
| SECOND [42] | 84.7 | 76.0 | 68.7 | - | - | - | - | - | - |
| PointRCNN [43] | 87.0 | 76.6 | 70.7 | 47.9 | 39.3 | 36.0 | 74.0 | 58.8 | 52.5 |
| PointPillars [44] | 82.5 | 74.3 | 68.8 | 51.4 | 41.9 | 38.8 | 77.1 | 58.6 | 51.9 |
| ConvNet [45] | 87.4 | 76.4 | 66.7 | 52.2 | 43.4 | 38.8 | 82.0 | 65.0 | 56.5 |
| Ours | 90.3 | 81.4 | 76.8 | 52.5 | 43.3 | 40.3 | 78.6 | 63.7 | 57.7 |

| Models | References | Easy | Moderate | Hard |
|---|---|---|---|---|
| MonoCon | [46] | 72.15 | 60.29 | 54.40 |
| Shape-aware | [47] | 73.53 | 62.68 | 56.61 |
| GUPNet | [48] | 71.17 | 59.32 | 52.14 |
| MonoRUn | [49] | 67.95 | 58.91 | 52.94 |
| DLE | [50] | 70.26 | 56.44 | 43.01 |
| CaDDN | [51] | 67.94 | 54.76 | 50.60 |
| Ground-aware | [52] | 69.42 | 54.47 | 42.51 |
| MonoFlex | [53] | 71.59 | 62.19 | 56.10 |
| MonoDLE | [54] | 67.23 | 59.98 | 54.35 |
| PGD | [55] | 66.33 | 55.88 | 49.35 |
| Ours | - | 74.8 | 63.8 | 59.2 |

| Classes | n Points Threshold | IoU Threshold | Score Threshold | Total Instances | TP | FP | FN | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|---|---|---|
| Car | 20 | 0.7 | 0.5 | 13,817 | 9858 | 1964 | 1995 | 0.834 | 0.832 | 0.832 |
| Pedestrian | 2 | 0.5 | 0.35 | 1587 | 900 | 373 | 386 | 0.707 | 0.700 | 0.703 |
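The precision, recall, and F1-score columns follow from the TP, FP, and FN counts through the standard definitions, which can be checked with a few lines of Python. The function name is illustrative only. Recomputing from the Car counts gives an F1-score near 0.833, so the tabulated 0.832 may have been truncated rather than rounded.

```python
def detection_metrics(tp, fp, fn):
    """Return (precision, recall, F1) from true/false positive and false negative counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Counts taken from the table above
car = detection_metrics(tp=9858, fp=1964, fn=1995)
pedestrian = detection_metrics(tp=900, fp=373, fn=386)
print([round(v, 3) for v in car])
print([round(v, 3) for v in pedestrian])
```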

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Iqbal, H.; Godoy, J.; Martin, B.; Al-kaff, A.; Garcia, F. Seeing the City Live: Bridging Edge Vehicle Perception and Cloud Digital Twins to Empower Smart Cities. Smart Cities 2025, 8, 197. https://doi.org/10.3390/smartcities8060197
