Feature-Level Vehicle-Infrastructure Cooperative Perception with Adaptive Fusion for 3D Object Detection

Abstract

Highlights

- The proposed feature-level vehicle-infrastructure cooperative perception (VICP) framework consistently outperforms state-of-the-art baselines on the DAIR-V2X-C dataset, achieving higher detection accuracy.

- Experiments show that regional feature reconstruction (RFR) delivers the largest gain, uncertainty-weighted fusion (UWF) improves robustness via adaptive uncertainty weighting, and context-driven channel attention (CDCA) enhances feature calibration.

- Cooperative perception effectively overcomes occlusion and blind-spot limitations of vehicle-centric systems.

- The proposed model provides a reference for scalable and generalizable deployment of cooperative perception within smart city infrastructure.

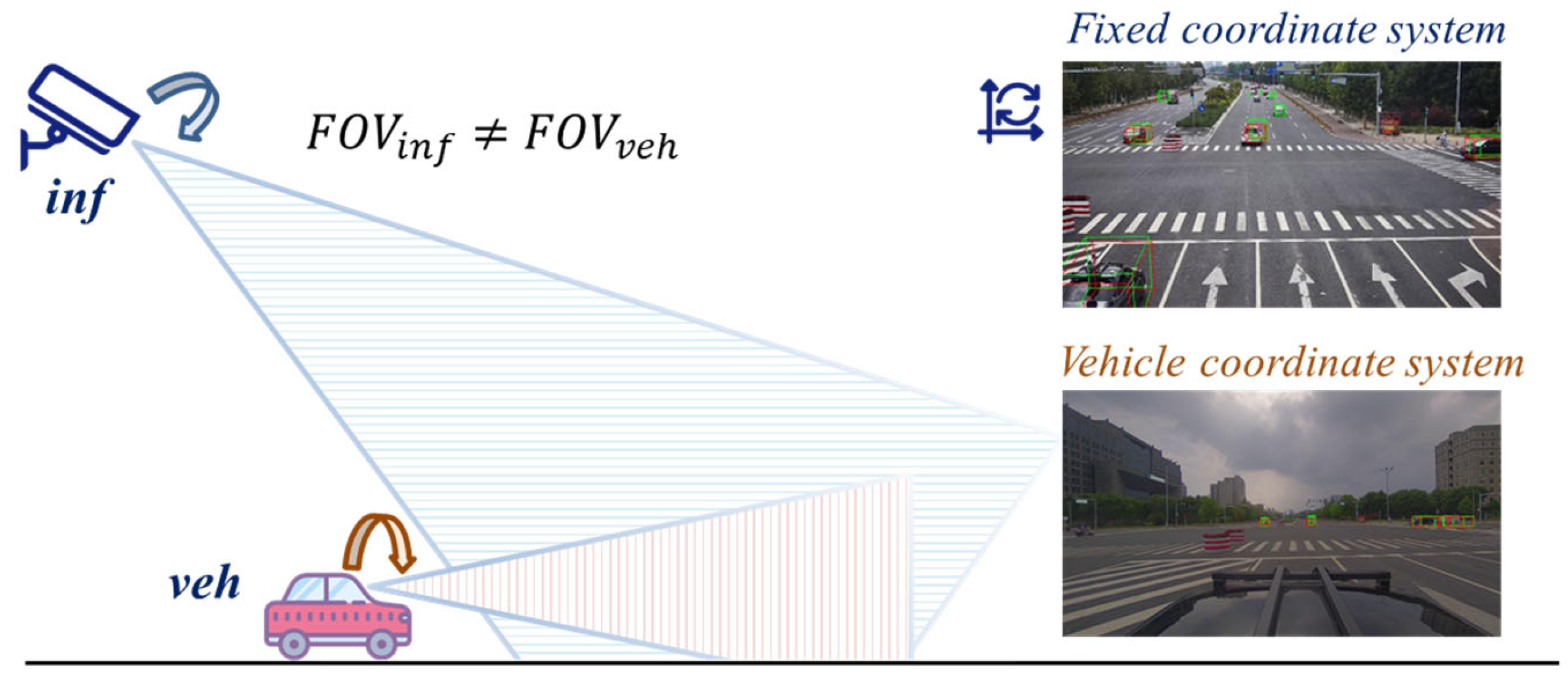

1. Introduction

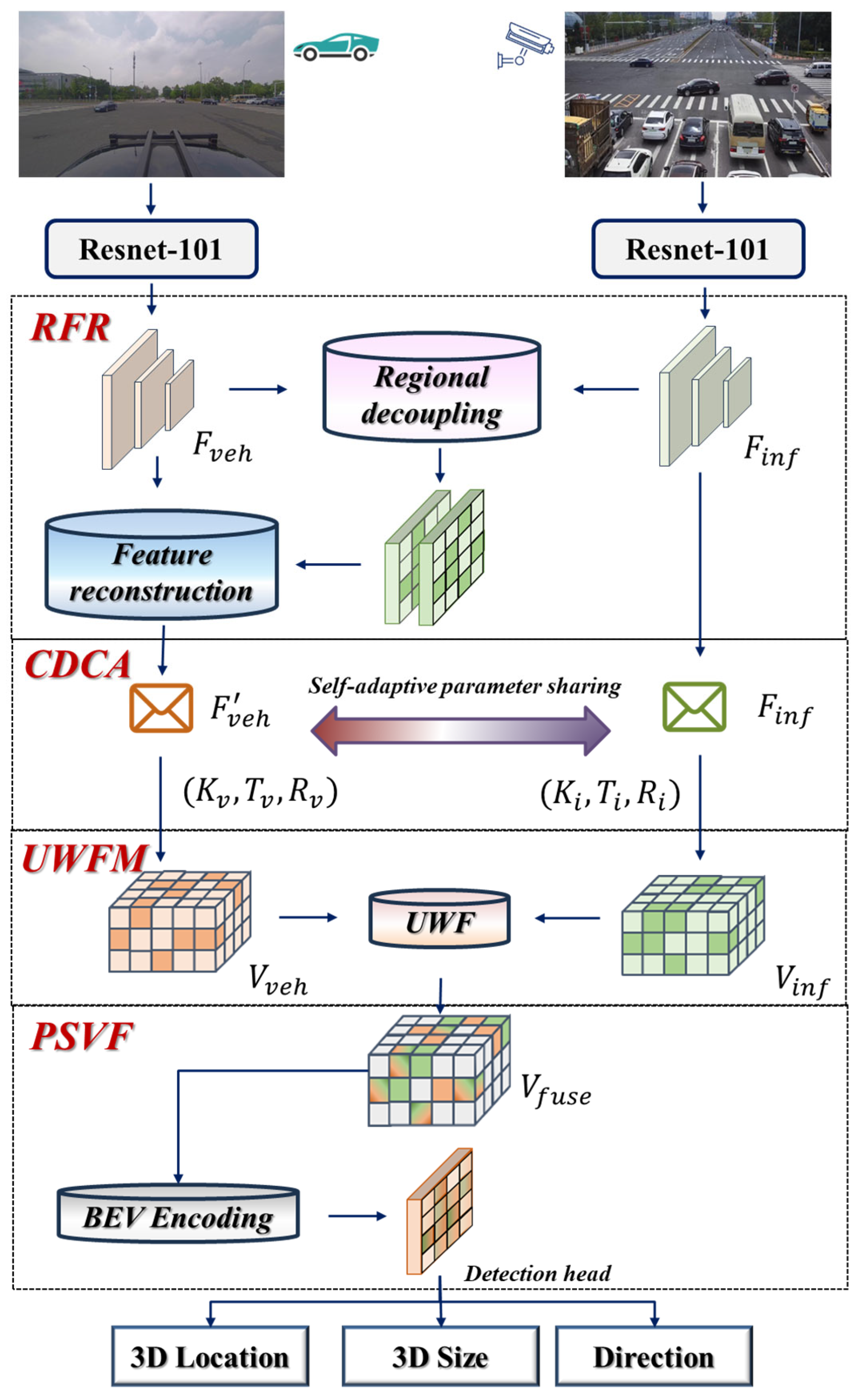

- A feature-level vehicle-infrastructure perception framework is proposed and experimentally validated, demonstrating superior occlusion handling and adaptability.

- A confidence-map-based regional feature reconstruction (RFR) module is proposed that decouples roadside features and uses deformable attention to reconstruct and augment onboard features, improving onboard representation and cross-view detection.

- A context-driven channel attention (CDCA) module is proposed to exploit global image-level context for adaptive, channel-wise recalibration of multi-scale features on both vehicle and roadside sensors, thereby eliminating reliance on external calibration parameters.

- An uncertainty-weighted fusion (UWF) mechanism is designed to estimate voxel-level uncertainty across heterogeneous feature sources and to allocate fusion weights dynamically based on confidence, significantly enhancing robustness under noise, occlusion, and projection errors.
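
The channel recalibration and uncertainty-weighted fusion ideas listed above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: `channel_recalibrate` swaps in a generic squeeze-and-excitation style gate (global average pooling followed by a small bottleneck) as a stand-in for CDCA, and `uncertainty_weighted_fusion` assumes the per-voxel log-variances are already given, whereas the paper estimates uncertainty inside the network. All function names, tensor shapes, and weight matrices here are hypothetical.

```python
import numpy as np


def channel_recalibrate(feat, w1, w2):
    """SE-style channel gate (assumed stand-in for CDCA).

    feat: (C, H, W) feature map; w1: (C_r, C); w2: (C, C_r).
    """
    context = feat.mean(axis=(1, 2))              # global context per channel, (C,)
    hidden = np.maximum(w1 @ context, 0.0)        # ReLU bottleneck, (C_r,)
    gate = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))   # sigmoid gate in (0, 1), (C,)
    return feat * gate[:, None, None]             # rescale each channel


def uncertainty_weighted_fusion(feats, log_vars):
    """Fuse S voxel feature volumes with inverse-variance weights.

    feats: (S, C, X, Y, Z); log_vars: (S, X, Y, Z), assumed to be predicted
    elsewhere. A softmax over -log_var equals normalised 1/variance weights.
    """
    scores = -log_vars                                    # low variance, high score
    scores = scores - scores.max(axis=0, keepdims=True)   # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=0, keepdims=True)
    fused = (weights[:, None] * feats).sum(axis=0)        # (C, X, Y, Z)
    return fused, weights


# Toy example: vehicle features are all 1 with variance 1,
# infrastructure features are all 3 with variance 3.
veh = np.ones((4, 2, 2, 1))
inf = np.full((4, 2, 2, 1), 3.0)
feats = np.stack([veh, inf])
log_vars = np.stack([np.zeros((2, 2, 1)), np.full((2, 2, 1), np.log(3.0))])
fused, weights = uncertainty_weighted_fusion(feats, log_vars)
```

In this toy case the more certain vehicle source receives weight 0.75 and the infrastructure source 0.25 in every voxel, so the fused value is 1.5. The design point is only that a noisier source is down-weighted per voxel rather than globally.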

2. Related Work

2.1. Vision-Based 3D Object Detection

2.2. Vehicle-Infrastructure Cooperative Perception

2.3. Channel Recalibration and Uncertainty-Driven Fusion

3. Methods

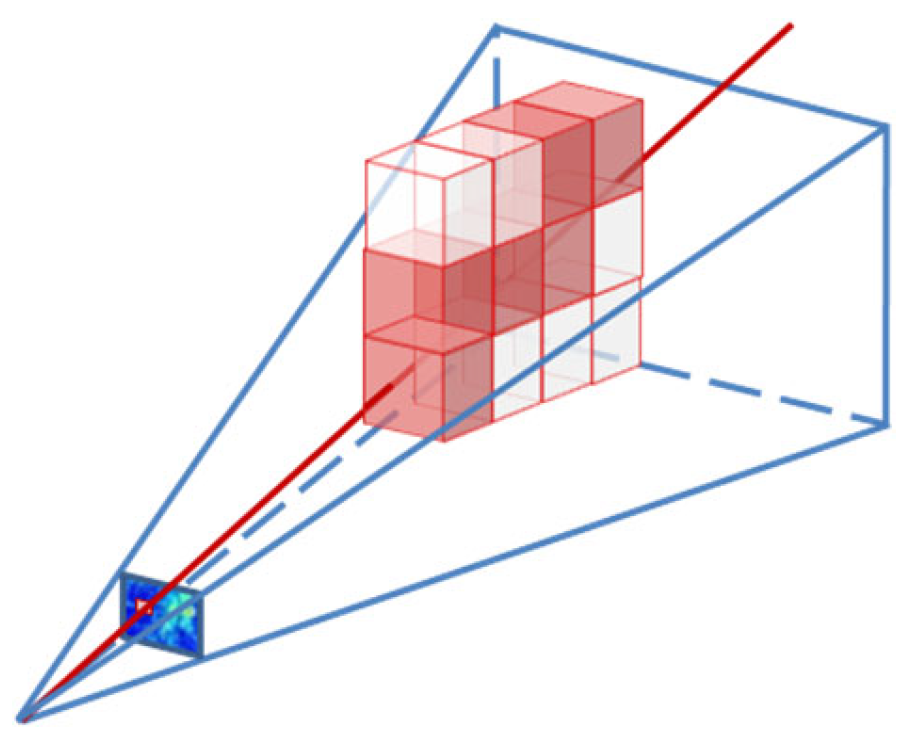

3.1. Feature Representation and Regional Feature Reconstruction

3.2. Context-Driven Channel Attention

3.3. Uncertainty-Weighted Fusion Mechanism

3.4. Point Sampling Voxel Fusion

4. Dataset Description and Experimental Setup

4.1. Dataset Description

4.2. Experimental Settings

4.3. Evaluation Metrics

5. Results and Analysis

5.1. Analysis of Comparative Experimental Results

5.2. Ablation Study

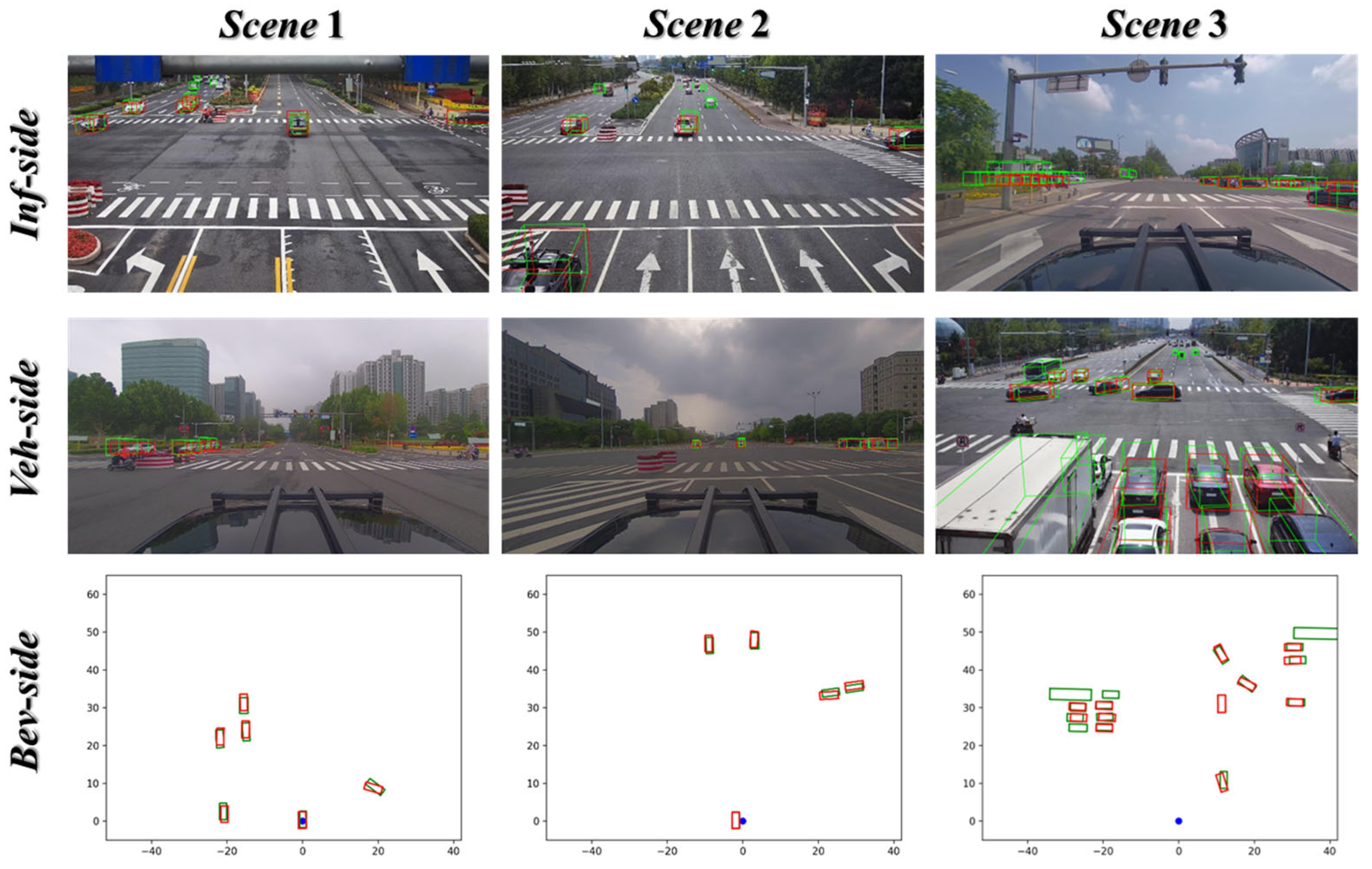

5.3. Visualization

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Type | Model | | | | |
|---|---|---|---|---|---|
| Single_Veh | EMIFF_Veh | 14.62 | 7.92 | 15.77 | 9.65 |
| | Ours_Veh | 14.78 | 8.26 | 15.99 | 9.61 |
| Single_Inf | EMIFF_Inf | 22.27 | 8.40 | 23.41 | 13.34 |
| | Ours_Inf | 21.34 | 8.28 | 23.12 | 13.05 |
| DL | EMIFF_Veh and Inf | 26.22 | 11.60 | 29.25 | 16.44 |
| | Ours_Veh and Inf | 26.65 | 12.08 | 29.86 | 16.73 |
| FL | EMIFF | 30.24 | 15.07 | 33.73 | 20.74 |
| | QUEST | 33.30 | 14.10 | 39.40 | 20.30 |
| | Ours | 31.01 | 15.76 | 33.38 | 21.02 |

| Backbone | | | | | Param | GFLOPS |
|---|---|---|---|---|---|---|
| VGG16_EMIFF | 28.12 | 14.27 | 30.45 | 17.05 | 138.36M | 152.30 |
| VGG16_Ours | 28.85 | 14.88 | 31.12 | 17.64 | 143.73M | 135.98 |
| Res50_EMIFF | 30.24 | 15.07 | 33.38 | 20.74 | 49.33M | 123.76 |
| Res101_EMIFF | 31.13 | 15.84 | 34.68 | 21.30 | 87.31M | 201.46 |
| Res50_Ours | 31.01 | 15.76 | 33.73 | 21.02 | 54.72M | 107.44 |
| Res101_Ours | 31.58 | 16.31 | 34.16 | 21.49 | 92.66M | 184.93 |
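
The cost trade-off in the backbone comparison above can be made explicit with a little arithmetic. The sketch below copies the parameter counts (in millions) and GFLOPS values from that table and computes, per backbone, the added parameters and the relative change in GFLOPS of the proposed model against EMIFF. The dictionary layout and the helper `relative_change` are illustrative assumptions, not part of the paper.

```python
# (parameters in millions, GFLOPS) copied from the backbone comparison table
table = {
    "VGG16": {"EMIFF": (138.36, 152.30), "Ours": (143.73, 135.98)},
    "Res50": {"EMIFF": (49.33, 123.76), "Ours": (54.72, 107.44)},
    "Res101": {"EMIFF": (87.31, 201.46), "Ours": (92.66, 184.93)},
}


def relative_change(new, old):
    """Percentage change of `new` with respect to `old`."""
    return 100.0 * (new - old) / old


summary = {}
for backbone, entry in table.items():
    params_old, gflops_old = entry["EMIFF"]
    params_new, gflops_new = entry["Ours"]
    summary[backbone] = (
        params_new - params_old,                  # extra parameters, millions
        relative_change(gflops_new, gflops_old),  # GFLOPS change, percent
    )

for backbone, (extra_params, gflops_pct) in summary.items():
    print(f"{backbone}: +{extra_params:.2f}M params, {gflops_pct:+.1f}% GFLOPS")
```

On these figures the proposed model adds roughly 5.4M parameters for each backbone while reducing GFLOPS by about 8 to 13 percent.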

| Model | Input Resolution | Backbone + FPN FPS ↑ | Total FPS ↑ | Total Latency (ms) ↓ |
|---|---|---|---|---|
| Res50_Ours | 1280 × 720 | 42.6 | 34.1 | 29.3 |
| | 1920 × 1080 | 33.4 | 26.8 | 37.3 |
| Res101_Ours | 1280 × 720 | 31.7 | 25.5 | 39.2 |
| | 1920 × 1080 | 25.6 | 21.3 | 46.9 |
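
The total latency column in the runtime table above is consistent with the usual per-frame relation, latency in milliseconds = 1000 / FPS. A short check, assuming that relation and using the total FPS and latency values copied from the table (the helper `latency_ms` is hypothetical):

```python
# (model, input resolution, total FPS, reported total latency in ms)
rows = [
    ("Res50_Ours", "1280 x 720", 34.1, 29.3),
    ("Res50_Ours", "1920 x 1080", 26.8, 37.3),
    ("Res101_Ours", "1280 x 720", 25.5, 39.2),
    ("Res101_Ours", "1920 x 1080", 21.3, 46.9),
]


def latency_ms(fps):
    """Per-frame latency in milliseconds for a given frames-per-second rate."""
    return 1000.0 / fps


checks = []
for model, resolution, fps, reported in rows:
    derived = round(latency_ms(fps), 1)
    checks.append((model, resolution, derived, reported))
    print(f"{model} @ {resolution}: derived {derived} ms, reported {reported} ms")
```

Each derived value agrees with the reported latency to within rounding, which suggests the latency column is the reciprocal of total FPS rather than an independent measurement.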

| RFR | Camera Parameter Embedding | CDCA | UWF | ||||
|---|---|---|---|---|---|---|---|
| √ | √ | 27.53 | 12.89 | 30.13 | 17.46 | ||
| √ | √ | 27.86 | 13.04 | 30.39 | 17.64 | ||
| √ | √ | √ | 31.03 | 15.18 | 33.85 | 21.07 | |
| √ | √ | 29.69 | 14.84 | 32.72 | 19.30 | ||
| √ | √ | √ | 31.58 | 16.31 | 34.16 | 21.49 | |

| Voxelization Strategy | Param | GFLOPS | Total FPS ↑ | Total Latency (ms) ↓ | | |
|---|---|---|---|---|---|---|
| Full-grid voxelization | 93.4 M | 189.7 | 22.4 | 47.5 | 15.89 | 21.27 |
| Uniform voxel sampling | 92.5 M | 186.2 | 25.1 | 42.8 | 16.02 | 21.33 |
| PSVF (ours) | 92.7 M | 184.9 | 31.7 | 39.2 | 16.31 | 21.49 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yu, S.; Peng, J.; Wang, S.; Wu, D.; Ma, C. Feature-Level Vehicle-Infrastructure Cooperative Perception with Adaptive Fusion for 3D Object Detection. Smart Cities 2025, 8, 171. https://doi.org/10.3390/smartcities8050171
