1. Introduction

Autonomous vehicles [1, 2] have achieved significant progress in recent years, with marked improvements in core subsystems such as perception, decision-making, and planning. These advancements offer revolutionary potential for transforming traffic systems, alleviating congestion, and enhancing traffic efficiency [3]. As key enablers of intelligent transportation systems, autonomous vehicles play a pivotal role in accelerating the transition toward smart cities. However, the complexity and unpredictability of real-world driving scenarios present significant challenges to autonomous driving systems. Of particular concern are corner cases, i.e., low-probability, high-risk scenarios [4], which result in frequent collisions involving autonomous vehicles [5, 6] and have emerged as a critical bottleneck hindering large-scale deployment. Ensuring the safe real-world operation of autonomous vehicles therefore requires a comprehensive scenario-based dataset to validate the functional reliability of autonomous driving systems [7].

However, the definition and quantification methods of corner cases have emerged as the primary challenge in the development of naturalistic driving corner case datasets. While low probability and high risk [8] are widely regarded as core attributes of corner cases, scholars have yet to reach a consensus on their formal criteria. Existing research has explored their characteristics through diverse methodological approaches. Bolte et al. [9] highlighted the inherent unpredictability of corner cases. Koopman et al. [10] analyzed the distinction between the concepts of an edge case and corner case, stating that “an edge case is a rare combination of normal parameters within the expected range; however, not all edge cases are corner cases. Only when the combination of parameters has a certain novelty can it be regarded as a corner case, which will create an emergency related to system behavior. If a Corner Case is not considered during system design and validation, the system will not be able to handle it well.” Pfeil et al. [11] discussed such cases through the lens of system-level anomalies arising from interactions among multiple scenario elements. Meanwhile, Zhang et al. [12] and Kowol et al. [13] emphasized the coupling effect between anomalous system states and rare behavioral patterns. Furthermore, Rosch et al. [14] believed that corner cases exhibit a strong correlation with the ego vehicle’s driving tasks. Synthesizing existing research, the common attributes of corner cases can be categorized into three features: (1) triggered by multi-dimensional scenario elements [10, 11]; (2) coexistence of low-probability occurrence and high-risk consequences [8, 12, 13]; and (3) strong correlation with the ego vehicle’s driving task [14]. The ambiguity in defining corner cases poses significant challenges to the development of a systematic corner case dataset.

Naturalistic driving data are the primary source for constructing scenario datasets. Current research has focused on data collection and annotation in conventional driving scenarios, which struggles to address the urgent need for corner cases in autonomous driving system functional testing. For instance, the NGSIM [15] and HighD [16] datasets collected vehicle trajectory data on highways via fixed cameras and drone aerial photography, respectively; these unobstructed collection methods improved trajectory accuracy. The Cityscapes [17] and Mapillary Vistas [18] datasets established comprehensive annotation systems for urban street environments using multi-source image data. The DrivingStereo [19], Argoverse [20], and ApolloScape [21] datasets enhanced scenario complexity by fusing multi-sensor data, with ApolloScape’s millimeter-level point cloud accuracy and nuScenes’s [22] multi-attribute 3D annotation system significantly advancing dataset quality. Although existing datasets excel in data volume, annotation quality, and scenario diversity, their scenario coverage remains limited: they predominantly target conventional scenarios and therefore contain only a minimal proportion of corner cases. Furthermore, these datasets lack systematic analysis of the correlations among scenario elements. Additionally, the heterogeneity in data formats hinders standardized testing processes, increasing complexity and time costs, which underscores the critical need to develop a standardized corner case dataset. A specific analysis of existing mainstream datasets is provided in Table 1.

To address the aforementioned challenges, this study proposes a quantitative framework for evaluating scenario marginality, incorporating both risk and rarity metrics. This framework facilitates the extraction of corner cases from naturalistic driving data collected from multiple sources. Furthermore, we propose a format conversion and standardization methodology to unify heterogeneous corner case data, which yields a corner case dataset featuring diverse interaction patterns and standardized formats. This study can not only improve testing efficiency for autonomous vehicles but also enhance their adaptability to diverse corner cases. The specific contributions of this paper are as follows:

(1) Leveraging naturalistic driving data from diverse sources, this study constructs a standardized corner case dataset specifically tailored for autonomous vehicle testing. The dataset exhibits diverse interaction patterns, unified formatting, and strong applicability.

(2) We develop a comprehensive theoretical framework for corner case data collection and extraction. This framework systematically leverages real-world naturalistic driving data resources, enabling the efficient extraction of high-value corner case data.

(3) By systematically investigating the intrinsic properties of corner cases, we propose a novel definition integrating low-probability and high-risk characteristics. Furthermore, we design a quantitative evaluation methodology incorporating risk and rarity metrics.

The remainder of this paper is organized as follows. Section 2 presents the methodological framework for constructing the corner case dataset. Section 3 introduces the classification scheme and storage format of the corner cases. Section 4 provides a comprehensive validation of the dataset’s applicability. Section 5 outlines the potential application directions of the dataset. Finally, Section 6 concludes this paper.

2. Methods

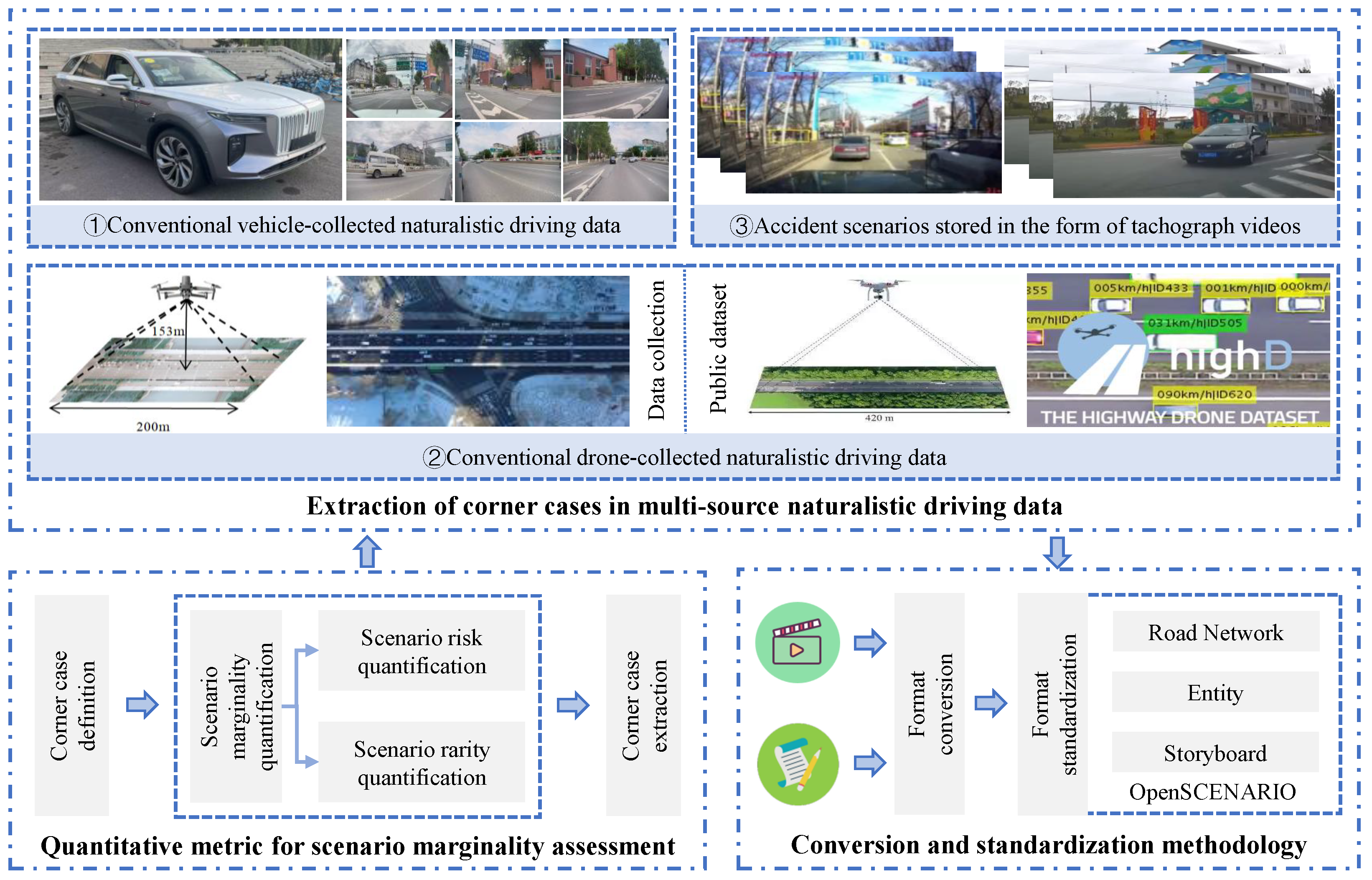

This paper proposes a systematic methodology for constructing a corner case dataset, comprising three core components: (1) a scenario marginality quantification method, (2) a multi-source data-driven corner case extraction framework, and (3) a format conversion and standardization approach. As illustrated in Figure 1, the proposed framework initially quantifies scenario marginality by integrating risk and rarity. Next, a vehicle-mounted data collection platform is developed to systematically gather conventional scenario data across diverse road types and environmental conditions. A drone collection platform is deployed to capture traffic flow data under varying weather conditions, supplemented with the HighD naturalistic driving dataset. Additionally, a corner case extraction framework is designed using tachograph-recorded accident videos from public datasets. By integrating these three data sources, a comprehensive set of widely distributed corner cases is extracted. Finally, we establish a unified format conversion and standardization methodology, organizing the extracted corner cases into a standardized scenario representation to improve applicability.

2.1. Quantitative Metric for Scenario Marginality Assessment

The development of a quantitative evaluation method for corner cases, rooted in their definition, serves as the foundation for extracting such cases from naturalistic driving datasets. As defined in this study, scenario marginality is evaluated quantitatively through integrated risk and rarity, effectively capturing the characteristics of corner cases: high risk and low probability.

2.1.1. Scenario Risk Quantification

Risk refers to the degree of potential danger in traffic scenarios, where a higher quantified risk value indicates a greater level of hazard. This metric is employed to describe the high-risk characteristics of corner cases. In this study, we employ the minimum time to collision (TTC) derived from time-series scenarios to quantify risk. If the TTC is negative, no immediate collision risk exists between vehicles. When the TTC is sufficiently large, the inter-vehicle risk remains low. As the TTC approaches zero from positive values, the scenario presents a critical risk. Building upon this, we develop a quantitative risk evaluation metric for traffic scenarios, which is defined by Equation (1), where the quantitative risk metric for traffic scenarios is computed using distinct TTC value ranges. A smaller positive TTC indicates a higher level of risk.
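As a minimal sketch of the quantity that feeds the risk metric, the minimum TTC of a car-following time series can be computed as below. The function names, the input layout (per-frame gap and speeds), and the choice to ignore non-positive TTC values when taking the minimum are assumptions made for illustration; the mapping from TTC ranges to the risk value in Equation (1) is not reproduced here.

```python
from typing import Sequence


def time_to_collision(gap_m: float, closing_speed_mps: float) -> float:
    """TTC = gap / closing speed; negative when the vehicles are separating."""
    if closing_speed_mps == 0.0:
        return float("inf")  # equal speeds: the gap never closes
    return gap_m / closing_speed_mps


def min_positive_ttc(
    gaps_m: Sequence[float],
    follower_speeds_mps: Sequence[float],
    leader_speeds_mps: Sequence[float],
) -> float:
    """Smallest positive TTC over a scenario (assumed convention, see lead-in)."""
    ttcs = [
        time_to_collision(gap, v_follower - v_leader)
        for gap, v_follower, v_leader in zip(
            gaps_m, follower_speeds_mps, leader_speeds_mps
        )
    ]
    positive = [ttc for ttc in ttcs if ttc > 0.0]
    # No positive TTC means the vehicles never approach each other.
    return min(positive) if positive else float("inf")
```

A scenario in which the follower is always slower than the leader yields an infinite minimum TTC under this convention, which corresponds to the "no immediate collision risk" case described above.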

2.1.2. Scenario Rarity Quantification

Risk should not be the sole criterion for assessing scenario marginality. The infrequency of such scenarios further challenges autonomous vehicles’ adaptation capabilities, often leading to abnormal behaviors. To address this limitation, we propose a rarity metric that quantifies the low-probability attributes inherent to corner cases.

Scenario rarity refers to the low probability of a scenario occurring, characterized by low-probability driving behaviors or scenario elements. This study dissects each temporal scenario into a set of fundamental driving behaviors T, such as following, cutting-in, on-ramp, and off-ramp maneuvers, and describes these behaviors through parametric modeling. Aligned with the hierarchical framework established by the PEGASUS project, scenarios are further decomposed into distinct scenario elements E (e.g., weather conditions and road geometry). The co-occurrence of low-probability driving behaviors and scenario elements across hierarchical levels induces a compounding effect, whereby their interaction exponentially diminishes the probability of scenario occurrence. The quantification of scenario rarity is mathematically formalized in Equation (2), in which the scenario rarity metric incorporates the driving behaviors T and scenario elements E through the probability of the ith driving behavior and the probability of the jth scenario element.

This study utilizes both collected and publicly available naturalistic driving datasets to analyze the probability of driving behaviors and scenario elements, thereby determining the specific probability value of each driving behavior and each scenario element. It is important to note that due to the sheer volume of naturalistic driving data, a representative subset is used to derive probability values for quantitative analysis. The dataset selected for analysis comprises 2000 scenarios, encompassing a diverse range of driving behaviors and scenario elements, sufficient to satisfy the statistical requirements for estimating probabilities.
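The probability estimation described above can be sketched as relative frequencies over a labeled sample of scenarios. The label strings and function names below are hypothetical, and the product in `joint_probability` is only one way to express the compounding effect of co-occurring rare behaviors and elements: it assumes independence and is not claimed to be the form of Equation (2).

```python
from collections import Counter
from math import prod
from typing import Dict, Iterable, Sequence


def empirical_probabilities(labels: Sequence[str]) -> Dict[str, float]:
    """Relative frequency of each label in a sample of annotated scenarios."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}


def joint_probability(
    behaviors: Iterable[str],
    elements: Iterable[str],
    p_behavior: Dict[str, float],
    p_element: Dict[str, float],
) -> float:
    """Product of behavior and element probabilities (independence assumed)."""
    return prod(p_behavior[b] for b in behaviors) * prod(
        p_element[e] for e in elements
    )


# Hypothetical sample: 8 behavior labels and 4 weather labels.
p_behavior = empirical_probabilities(["following"] * 6 + ["cut-in"] * 2)
p_element = empirical_probabilities(["sunny"] * 3 + ["fog"])
rare_combo = joint_probability(["cut-in"], ["fog"], p_behavior, p_element)
```

Under these illustrative counts, a cut-in in fog is far less probable than either attribute alone, which is the multiplicative shrinkage the text refers to.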

2.1.3. Scenario Marginality Quantification

The quantification of scenario marginality necessitates the integration of both scenario risk and rarity to capture the long-tail distribution of low-probability, high-risk corner cases, thereby deriving the scenario marginality quantification method shown in Equation (3), which yields the quantitative metric for scenario marginality, incorporating the combined impact of scenario risk and rarity.

2.2. Extraction of Corner Cases in Multi-Source Naturalistic Driving Data

This study leverages three distinct data sources: vehicle-collected naturalistic driving data, drone-collected naturalistic driving data, and accident data recorded in tachograph videos. By employing the proposed scenario marginality metric, this work systematically identifies and extracts corner cases from multi-source datasets.

2.2.1. Extraction of Corner Cases in Vehicle-Collected Naturalistic Driving Data

Vehicle-collected naturalistic driving data capture the dynamic interactions during the driving process, serving as a critical data form for dataset construction. In this paper, we develop a vehicle platform to collect naturalistic driving data and employ the scenario marginality quantification method to systematically analyze and extract corner cases.

The integration of high-precision visual sensors for naturalistic driving data acquisition represents a widely adopted methodology to enable centimeter-level scenario data collection and document the spatiotemporal dynamics of human–vehicle–road interactions. This study utilizes a Hongqi E-HS9 vehicle from China to construct an experimental data acquisition system, as shown in Figure 2. The vehicle integrates an OxTS RT3000 V3 combined GNSS/INS positioning system (manufactured by Oxford Technical Solutions Ltd., Middleton Stoney, UK), capturing three-dimensional coordinates, heading, and kinematic parameters. To achieve full circumferential video coverage, the vehicle is equipped with a Horizon MATRIX® MONO3 vision system (made by Horizon Robotics Inc., headquartered in Beijing, China), consisting of six high-precision cameras to provide 360° environmental monitoring. Under optimal lighting conditions, the system maintains a detection radius of 120 m. Through processing of MONO3-captured imagery, the MATRIX framework extracts trajectory information of surrounding traffic participants and lane geometry with centimeter-level positional accuracy. All experimental instruments are installed in positions that do not interfere with normal driving operations.

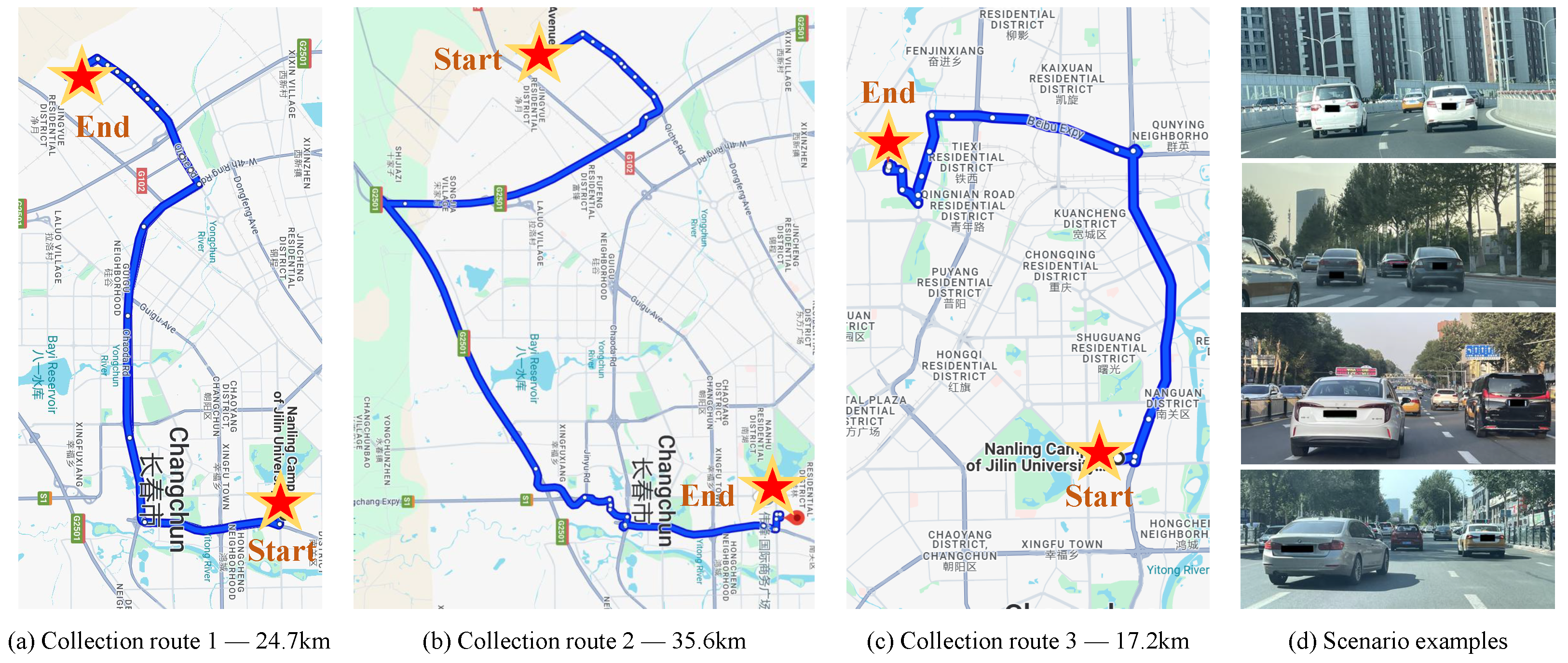

This study presents the collection of naturalistic driving data in Changchun, Jilin Province, China. Unlike mainstream naturalistic driving datasets, our methodology encompasses diverse road sections and environmental conditions (e.g., nighttime, daytime, and rainy weather). By strategically planning the data collection routes and designing the collection conditions, varying complexities of road sections and environmental conditions can be flexibly integrated to capture the intricate interaction behaviors of vehicles under the combined influence of multi-level scenario elements. We collected approximately 78 km of dynamic scenario data, covering diverse road types (e.g., straight roads, ramps, roundabouts, and expressways) and capturing driving behaviors such as car-following, lane-changing, and merging across varying weather conditions. The detailed collection routes and scenario examples are illustrated in Figure 3.

The naturalistic driving data collected using the real vehicle platform encompass diverse scenario types, a wide range of scenario elements, and heterogeneous driving behaviors. These data serve as a robust foundation for calculating driving behavior and scenario element probabilities across varying weather conditions and road segments. Following the definition of corner cases and the development of a quantitative evaluation method, such cases can be systematically extracted from the naturalistic driving dataset. This study establishes a scenario marginality threshold of 0.8, which not only excludes driving scenarios with insufficient risk but also accounts for the cumulative impact of scenario rarity on the quantification of marginality.
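The thresholding step can be illustrated with a short sketch. It assumes that a marginality score has already been computed for every scenario and that a scenario is retained when its score is greater than or equal to 0.8; whether the boundary value itself is included is not stated in the text, and the scenario identifiers are hypothetical.

```python
from typing import Dict, List

MARGINALITY_THRESHOLD = 0.8  # threshold value stated in the text


def extract_corner_cases(
    marginality_by_scenario: Dict[str, float],
    threshold: float = MARGINALITY_THRESHOLD,
) -> List[str]:
    """Return the ids of scenarios whose marginality meets the threshold.

    Using >= (rather than >) is an assumption of this sketch.
    """
    return sorted(
        scenario_id
        for scenario_id, score in marginality_by_scenario.items()
        if score >= threshold
    )


# Hypothetical scores for three recorded scenarios.
selected = extract_corner_cases(
    {"ramp_merge_017": 0.95, "following_203": 0.40, "cut_in_088": 0.80}
)
```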

2.2.2. Extraction of Corner Cases in Drone-Collected Naturalistic Driving Data

The naturalistic driving data collected via the drone-based methodology enables comprehensive coverage of road networks and facilitates the acquisition of complex traffic scenarios while preserving natural driving patterns. This study develops a drone-based data collection platform to capture scenario data across diverse weather conditions and integrates this dataset with the HighD [16] dataset, which was also acquired using drone-mounted sensors. By leveraging the proposed scenario marginality quantification method, this study enables the systematic extraction of corner case data.

This study utilizes the M30T drone to develop a drone-based data collection platform. As illustrated in Figure 4, naturalistic driving data were collected in Changchun, Jilin Province, China, specifically along the Yatai Street Expressway. Unlike mainstream drone-based datasets such as HighD, this work focuses on capturing naturalistic driving behavior on expressways under diverse meteorological conditions. The drone operated at an altitude of 153 m, monitoring a 200 m road segment, and recorded approximately 10 h of traffic flow scenario data. Examples of scenarios under varying weather conditions are presented in Figure 5.

The data collected via drone are in the form of traffic flow videos, which cannot be directly utilized for corner case extraction. In this study, the YOLOv8 algorithm [23] is employed to detect vehicles in the video, while the DeepSORT algorithm [24] is utilized for frame-by-frame vehicle tracking. The drone camera parameters are calibrated using pre-measured real-world coordinates, enabling the conversion of video-based scenario data into temporal trajectory points for subsequent extraction and analysis. The quantification method for drone-perspective corner cases is identical to the vehicle-perspective approach. To ensure consistency, the same scenario marginality threshold (0.8) is adopted.
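The conversion from pixel coordinates to real-world coordinates can be illustrated with a deliberately simplified stand-in for the calibration step. The sketch assumes a camera looking straight down on a flat road with negligible lens distortion, so that a similarity transform (scale, rotation, translation, plus a flip of the image y-axis) fitted from two pre-measured reference points is sufficient. The function name and reference coordinates are hypothetical, and the calibration actually used in the study may be more general.

```python
from typing import Callable, Tuple

Point = Tuple[float, float]


def fit_pixel_to_world(
    pixel_a: Point, world_a: Point, pixel_b: Point, world_b: Point
) -> Callable[[Point], Point]:
    """Fit world = s * p + t from two reference points using complex numbers.

    Each pixel (u, v) is encoded as p = u - i*v, which flips the downward
    image y-axis. The complex factor s carries scale and rotation, and t
    carries the translation.
    """
    pa = complex(pixel_a[0], -pixel_a[1])
    pb = complex(pixel_b[0], -pixel_b[1])
    wa, wb = complex(*world_a), complex(*world_b)
    scale_rotation = (wb - wa) / (pb - pa)
    offset = wa - scale_rotation * pa

    def to_world(pixel: Point) -> Point:
        w = scale_rotation * complex(pixel[0], -pixel[1]) + offset
        return (w.real, w.imag)

    return to_world


# Hypothetical references: 1000 px along the image x-axis span 100 m.
to_world = fit_pixel_to_world(
    (0.0, 0.0), (100.0, 50.0), (1000.0, 0.0), (200.0, 50.0)
)
x_m, y_m = to_world((500.0, 200.0))
```

With more than two reference points, or an oblique camera, a least-squares homography would be the more appropriate model.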

2.2.3. Extraction of Corner Cases in Accident Videos

Tachographs are devices commonly installed in modern vehicles, which can automatically trigger, identify, and store accident-related data, thereby aiding in determining accident liability. Consequently, numerous accident scenario datasets generated by tachographs, such as CCD [25] and A3D [26], have been established. Naturalistic driving datasets of accident scenarios provide researchers with numerous corner cases, which represent potential real-world hazards and hold significant practical value. However, these datasets often lack critical information, preventing their direct application to the testing of autonomous driving systems. This paper proposes a structured framework for extracting corner cases from these datasets, aiming to address this limitation. Accident videos recorded by tachographs often lack synchronized multi-sensor temporal data, complicating the accurate calibration of internal and external camera parameters. This limitation hinders the extraction of critical information from such videos. To address this, we propose an accident scenario restoration framework with a joint camera parameter calibration strategy as its core component, presented in Figure 6.

In the framework illustrated in Figure 6, the video is initially preprocessed to enhance quality. The Efficient Multistage Video Denoising (EMVD) [27] and Real-Time Intermediate Flow Estimation (RIFE) [28] algorithms are applied to perform denoising and enhancement, thereby optimizing the video data’s usability. Subsequently, the YOLOv8 algorithm [23] detects and identifies key traffic participants, while the DeepSORT [24] algorithm tracks them frame by frame to derive trajectory information in pixel coordinates. Next, the DroidCalib [29] algorithm calibrates camera parameters, enabling the transformation of trajectories from pixel coordinates to the world coordinate system. Finally, a data postprocessing pipeline is implemented, employing the Kalman filter [30] to predict missing trajectory points and the Savitzky–Golay (SG) filter to mitigate trajectory fluctuations, resulting in refined accident scenario trajectory data.
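The smoothing stage of the postprocessing pipeline can be sketched with the classical 5-point quadratic Savitzky–Golay convolution coefficients (−3, 12, 17, 12, −3)/35. The window length, the polynomial order, and the decision to leave the two samples at each end unchanged are assumptions of this sketch, since the text does not state the filter settings; the Kalman-based prediction of missing points is not shown.

```python
from typing import List, Sequence

# Standard 5-point quadratic/cubic Savitzky-Golay smoothing coefficients.
SG5_COEFFICIENTS = (-3.0, 12.0, 17.0, 12.0, -3.0)
SG5_NORMALIZER = 35.0


def savgol5(values: Sequence[float]) -> List[float]:
    """Smooth a 1D series with a 5-point Savitzky-Golay filter.

    The first and last two samples are copied unchanged (assumed edge rule).
    """
    smoothed = list(values)
    for i in range(2, len(values) - 2):
        window = values[i - 2 : i + 3]
        smoothed[i] = (
            sum(c * v for c, v in zip(SG5_COEFFICIENTS, window)) / SG5_NORMALIZER
        )
    return smoothed


# A quadratic profile is reproduced exactly; an isolated spike is damped.
quadratic = savgol5([float(x * x) for x in range(7)])
spike = savgol5([0.0, 0.0, 35.0, 0.0, 0.0])
```

Applying the filter separately to the x and y coordinates of each trajectory is one straightforward way to use it on 2D tracks.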

This study focuses on extracting key information from tachograph videos using established methodologies, specifically traffic participants’ interactive motion behaviors, and does not provide algorithmic implementation details. Notably, the marginality of scenarios derived from tachograph videos clearly exceeds the threshold of 0.8; thus, no further quantification of scenario marginality is required.

2.3. Conversion and Standardization Methodology

To enhance the compatibility and applicability of the corner case dataset, this study unifies the format of multi-source corner cases through conversion and standardization, thereby generating standardized scenario files compatible with simulation testing. A format conversion method is developed based on spatiotemporal alignment for corner case temporal trajectory data captured from vehicle and drone perspectives. Specifically, timestamp synchronization and coordinate system conversion are performed, aligning the drone’s coordinate system with the vehicle’s UTM framework and representing temporal data as sequential trajectory points. The scenario reproduction process is implemented using the RoadRunner Scenario platform. By analyzing temporal trajectory characteristics, integrating traffic participant motion logic, and incorporating road topology, we generated scenario description files compliant with the ASAM OpenSCENARIO 1.0 specification. A dual validation mechanism—combining XML Schema verification and logical consistency checks—is employed, ensuring scenario file executability and parametric scalability in simulation testing, thereby delivering a standardized corner case dataset for autonomous driving system validation.
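The spatiotemporal alignment described above can be sketched in two small steps: a planar rigid transform that places drone-frame points in a UTM frame, and linear interpolation that resamples a trajectory onto a shared clock. The function names, the origin and heading parameters, and the use of linear interpolation are assumptions for illustration; generation of the OpenSCENARIO files themselves is not shown.

```python
import math
from typing import List, Sequence, Tuple


def to_utm(
    point: Tuple[float, float],
    origin_utm: Tuple[float, float],
    heading_rad: float,
) -> Tuple[float, float]:
    """Rotate a local (x, y) point by heading_rad and translate to origin_utm."""
    x, y = point
    cos_h, sin_h = math.cos(heading_rad), math.sin(heading_rad)
    return (
        origin_utm[0] + cos_h * x - sin_h * y,
        origin_utm[1] + sin_h * x + cos_h * y,
    )


def resample(
    times: Sequence[float], values: Sequence[float], new_times: Sequence[float]
) -> List[float]:
    """Linearly interpolate values(times) at new_times.

    Both time sequences must be increasing, and new_times should lie
    within the range of times (values outside are extrapolated).
    """
    resampled = []
    j = 0
    for t in new_times:
        # Advance to the segment [times[j], times[j + 1]] that contains t.
        while j < len(times) - 2 and times[j + 1] < t:
            j += 1
        t0, t1 = times[j], times[j + 1]
        weight = (t - t0) / (t1 - t0)
        resampled.append(values[j] + weight * (values[j + 1] - values[j]))
    return resampled


# Hypothetical origin and heading for the drone frame.
east, north = to_utm((10.0, 0.0), (500000.0, 4850000.0), math.pi / 2)
synced = resample([0.0, 0.5, 1.0], [0.0, 5.0, 10.0], [0.25, 0.75])
```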

3. Data Records

We have shared the corner case dataset in a public repository (Figshare [

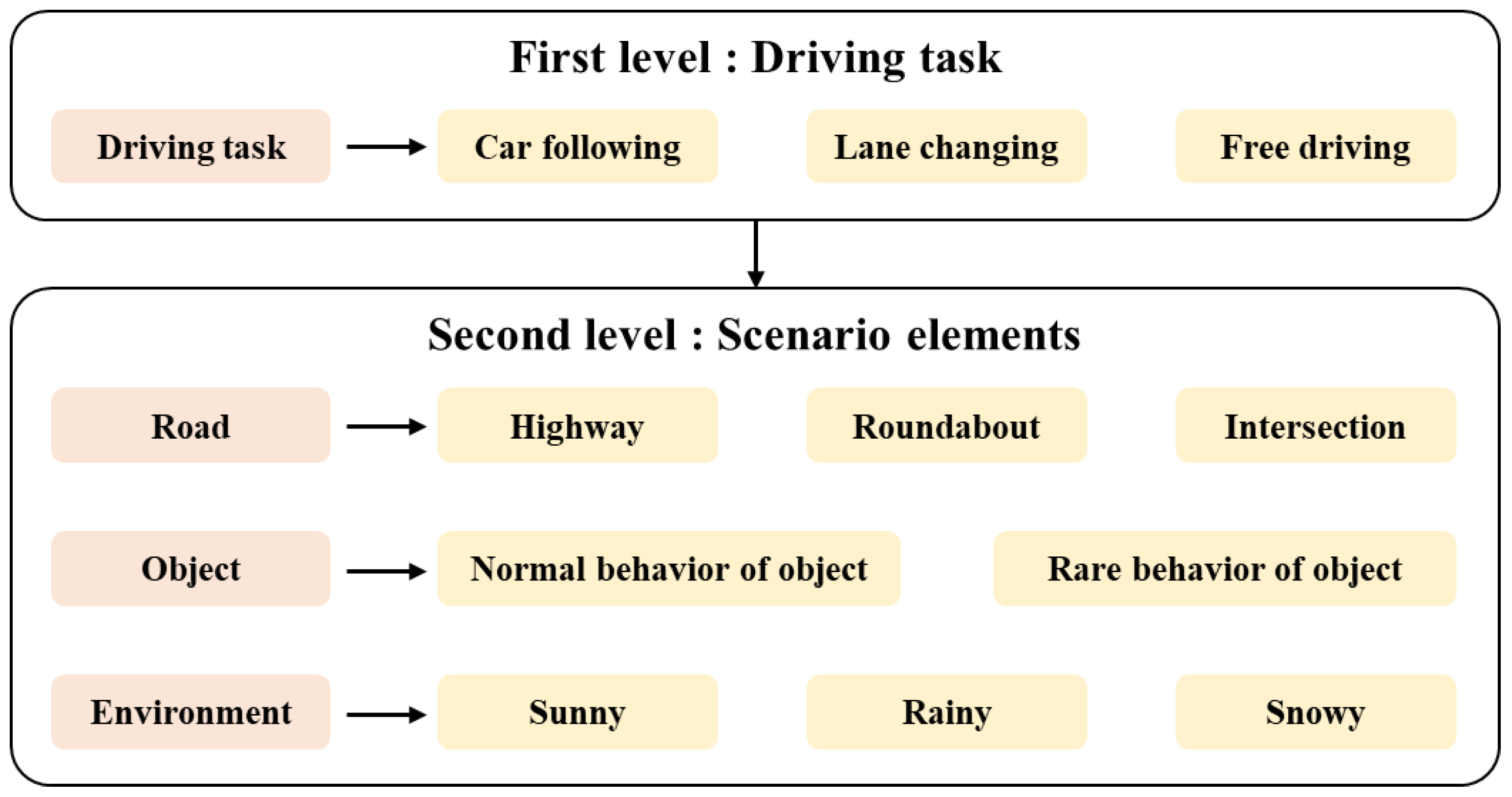

31]) and partitioned it into two directories. The corner case-openX directory contains standardized corner case data. To ensure structural clarity, the dataset is organized into subdirectories systematically labeled by corner case category. Each subfolder represents a distinct corner case category and includes OpenSCENARIO standard files in four formats: xosc, xodr, osgb, and genjson. The xosc file defines dynamic traffic scenarios, while the xodr file describes static road networks. The osgb file contains 3D visual models with road textures for rendering, and the genjson file archives scenario parameters and resource metadata. These files comprehensively encapsulate key information such as trajectory, velocity, road geometry, and environmental condition, enabling detailed annotation of corner cases through standardized representations. As per the corner case definition, such scenarios result from interactions between the ego vehicle’s driving task and scenario elements. We therefore classify corner cases through a dual framework combining driving task and scenario elements, as illustrated in

Figure 7, thereby enabling a detailed analysis of corner case elements.

The classification method for corner case scenarios is depicted in Figure 7. The ego vehicle’s driving task is designated as the first level, with its attributes comprising following, lane-changing, free-driving, and others. The second level comprises scenario elements, including road, object, and environment. Road attributes encompass scenarios such as highways, roundabouts, and urban intersections. Object attributes are categorized into normal and rare behaviors based on the scenario rarity quantification index. The rarity quantification index calculates the probability of occurrence; if the probability falls below 0.3, the behavior is classified as rare; otherwise, it is considered normal. Environmental attributes involve meteorological and lighting conditions, such as sunny, overcast, rainy, foggy, and low-light scenarios.
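The two-level classification can be expressed as a small data structure. The field names and the example attribute strings below are illustrative choices rather than the dataset's actual labels; the 0.3 probability boundary between rare and normal object behavior follows the rule stated above.

```python
from dataclasses import dataclass

RARE_PROBABILITY_THRESHOLD = 0.3  # below this, object behavior is "rare"


@dataclass(frozen=True)
class CornerCaseLabel:
    driving_task: str      # first level: following, lane-changing, ...
    road: str              # second level: highway, roundabout, ...
    object_behavior: str   # second level: "normal" or "rare"
    environment: str       # second level: sunny, rainy, foggy, ...


def classify(
    driving_task: str,
    road: str,
    environment: str,
    behavior_probability: float,
) -> CornerCaseLabel:
    """Build a label, deriving the object attribute from its probability."""
    if behavior_probability < RARE_PROBABILITY_THRESHOLD:
        object_behavior = "rare"
    else:
        object_behavior = "normal"
    return CornerCaseLabel(driving_task, road, object_behavior, environment)


label = classify("following", "highway", "foggy", behavior_probability=0.12)
```

Because the label is a frozen dataclass, it is hashable and can be used directly as a key when counting scenarios per category.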

In addition to the extracted corner case data within the public repository, a substantial volume of accident scenario videos exhibiting pronounced marginality characteristics—stored as tachograph recordings—are shared in the corner case-videos directory.

4. Validation of Corner Case Applicability

To verify the quality of the naturalistic driving corner case dataset presented in this study, we present a detailed analysis of the dataset. The interaction trajectories of some scenarios are depicted in Figure 8, while the corresponding corner case descriptions and classification information are summarized in Table 2.

Figure 8 and Table 2 reveal that the dataset contains a diverse range of naturalistic driving corner cases, encompassing varied road types, environmental conditions, and vehicle interaction behaviors. The scenario categorization follows a clear hierarchical structure, resulting in a rich variety of corner case types derived from the combination of driving tasks and scenario elements. To evaluate the effectiveness of the dataset proposed in this study, we randomly selected 10 scenarios from the constructed corner case dataset (ours) and from representative naturalistic driving datasets (HighD and NGSIM). The resulting scenario marginality scores are shown in Figure 9.

As shown in Figure 9, the constructed corner case dataset exhibits significantly higher scenario marginality compared to existing mainstream naturalistic driving datasets. Among the 10 randomly selected scenarios, the average scenario marginality is approximately 2.78 times that of the mainstream datasets, demonstrating the effectiveness of the dataset developed in this study. We quantify the distribution of corner cases across naturalistic driving data sources, as summarized in Table 3.

Table 3 reveals that corner case occurrences in the HighD dataset are extremely limited. By developing a data collection platform for targeted collection of naturalistic driving data, the proportion of corner cases is significantly increased. Specifically, drone-collected data increases the rarity of environmental scenario elements, thereby elevating the proportion of corner cases. By comparison, vehicle-collected data incorporates more diverse combinations of scenario elements, such as environment, road geometry, and vehicle behavior, and therefore exhibits a higher proportion of corner cases than drone-collected data. However, both proportions remain below 10%. In contrast, 100% of the scenarios extracted from accident video datasets are corner cases, as accident scenarios are inherently high-risk and meet the scenario marginality threshold.

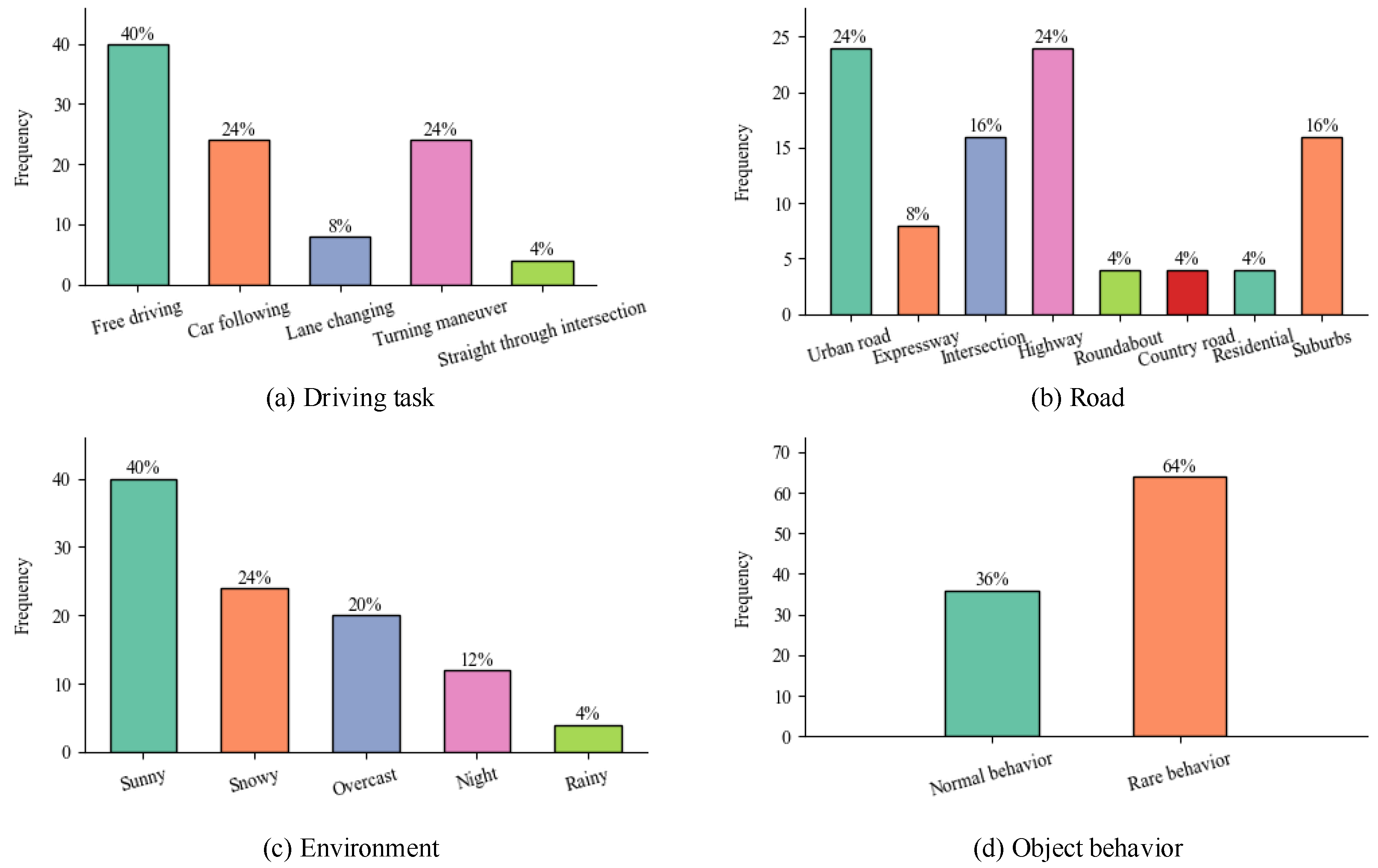

The statistical results demonstrate that extracting vehicle behavior data from accident videos represents a novel method for acquiring corner cases, enabling substantial reductions in data collection costs and enhanced data acquisition efficiency. This study further performs a statistical analysis of driving tasks, road types, environmental conditions, and object behavior patterns within the corner case dataset, as illustrated in Figure 10.

Figure 10 demonstrates that the diversity of the corner case dataset is well ensured, as it includes a wide range of driving behaviors, road conditions, environmental factors, and object behaviors. Notably, rare object behaviors constitute a larger proportion of the dataset, suggesting that such anomalies are more likely to cause failures in the ego vehicle’s response, thereby contributing to the occurrence of corner cases. In practical applications, corner cases must exhibit smooth and continuous trajectories, avoiding excessive fluctuations. The Savitzky–Golay (SG) algorithm is employed to smooth the trajectories, and the root mean square error (RMSE) is subsequently calculated between the smoothed and non-smoothed trajectories. A smaller RMSE value corresponds to a lower degree of trajectory fluctuation, thereby demonstrating stronger applicability. The speed information is evaluated using the same method, as detailed in Equation (4), where e quantifies the dataset’s fluctuation and is computed for both trajectory and speed data. A smaller e value indicates higher applicability of the corner case dataset. Equation (4) compares the raw data with the SG-smoothed data, and t denotes the total number of corner cases in the dataset.
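The fluctuation measure can be sketched as a plain RMSE between a raw series and its smoothed counterpart. The function below handles a single series (for example, one coordinate of one trajectory, or one speed profile); how the per-scenario values are aggregated over the t corner cases follows Equation (4) and is not reproduced here. The function name and the sample values are illustrative.

```python
import math
from typing import Sequence


def rmse(raw: Sequence[float], smoothed: Sequence[float]) -> float:
    """Root mean square error between a raw series and its smoothed version."""
    if len(raw) != len(smoothed) or len(raw) == 0:
        raise ValueError("series must be non-empty and of equal length")
    squared_error = sum((r - s) ** 2 for r, s in zip(raw, smoothed))
    return math.sqrt(squared_error / len(raw))


# Identical series give zero fluctuation; a constant 1 m offset gives 1 m.
no_fluctuation = rmse([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
unit_offset = rmse([0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 1.0, 1.0])
```

The result carries the unit of the input series, which is why the trajectory and speed metrics are reported in metres and metres per second, respectively.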

The calculated trajectory fluctuation metric for the naturalistic driving corner case dataset is 0.013 m, with a speed fluctuation metric of 0.021 m/s. These results demonstrate small fluctuations in both trajectory and speed data, with smooth corresponding curves, indicating high data quality. The trajectory characteristics are consistent with those of real-world driving data, making the dataset applicable for autonomous vehicle testing and validation. In summary, the high marginality, diversity, and applicability of the constructed corner case dataset have been comprehensively validated, demonstrating the effectiveness of the proposed dataset.

In this study, an Autonomous Emergency Braking (AEB) system equipped with YOLOv8 and DeepSORT perception algorithms was selected as the system under test. Scenarios suitable for evaluating the obstacle avoidance functionality of AEB were extracted from the constructed corner case dataset. The test focused on the AEB’s obstacle avoidance performance under corner case elements (e.g., nighttime and heavy fog) and short obstacle distances. Prior to AEB activation, the ego vehicle followed a naturalistic driving trajectory obtained through trajectory extraction. For comparison, an equal number of scenarios were randomly selected from mainstream naturalistic driving datasets (NGSIM and HighD). The obstacle avoidance success rates of the AEB system were then evaluated on both scenario sets to validate the effectiveness of the constructed corner case dataset. The statistical results of AEB’s avoidance success rates are presented in Table 4.

As shown in Table 4, the AEB system achieves a markedly low obstacle avoidance success rate on the corner case dataset constructed in this study. In most scenarios, the AEB system failed to effectively avoid collisions. This can be attributed to two main factors: first, the presence of challenging scenario elements such as nighttime and heavy fog imposes considerable difficulties on the perception system, preventing it from detecting hazardous vehicles within a safe distance; second, abnormal driving behaviors of the hazardous vehicles result in extremely compressed reaction distances, leaving the ego vehicle with insufficient time and space to avoid a collision.

In contrast, the AEB system achieved a success rate of 94.74% on the mainstream naturalistic driving dataset, indicating that most scenarios in these datasets do not adversely impact the AEB system and therefore fail to expose its functional limitations. These scenarios are largely ineffective for robust testing. This stark contrast further demonstrates the effectiveness of the proposed corner case dataset in revealing system weaknesses and supporting safety-critical evaluation.

5. Usage Notes

The corner case dataset presented in this study is stored in the OpenSCENARIO standard file format, which ensures high compatibility. This format features a standardized structure, including Road Network, Entity, and Storyboard, and enables a detailed description of the dynamic temporal scenarios. It is critical to note that all scenario data within the dataset are collected through manual vehicle driving. This section elaborates on the application method and practical value of the dataset.

For autonomous driving system test verification, the naturalistic driving dataset serves as a comprehensive repository of vehicle interaction behaviors. The scenario data corresponds to the concrete scenario tier within the hierarchical testing framework, which comprises “functional scenario, logical scenario, concrete scenario”. By analyzing interaction behaviors in concrete corner cases, scenario parameters can be generalized, thereby extending concrete scenarios into the logical scenario parameter space and constructing a corner case dataset with a pronounced long-tail distribution. This enables efficient validation of the autonomous driving system across diverse corner case types. For autonomous driving system algorithm training, the naturalistic driving dataset’s diverse interaction modalities offer real-world training samples for autonomous driving models. These data enable adversarial learning, reinforcement learning, and similar techniques to enhance algorithmic robustness under varying corner case conditions, constantly optimizing and improving the functional logic of the autonomous driving system algorithm.
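The step from a concrete scenario to a logical scenario parameter space can be illustrated as follows. The sketch widens each concrete parameter value into a range by a relative margin and then draws new concrete variants from those ranges. The parameter names, the 25% margin, and uniform sampling are hypothetical choices for illustration and do not describe a procedure specified in this paper.

```python
import random
from typing import Dict, Tuple

Range = Tuple[float, float]


def to_logical_ranges(
    concrete: Dict[str, float], relative_margin: float = 0.1
) -> Dict[str, Range]:
    """Widen each concrete parameter value into a (low, high) range."""
    ranges = {}
    for name, value in concrete.items():
        bounds = (value * (1.0 - relative_margin), value * (1.0 + relative_margin))
        ranges[name] = (min(bounds), max(bounds))  # keeps order for negatives
    return ranges


def sample_concrete(ranges: Dict[str, Range], rng: random.Random) -> Dict[str, float]:
    """Draw one concrete scenario variant uniformly from the logical ranges."""
    return {name: rng.uniform(low, high) for name, (low, high) in ranges.items()}


# Hypothetical parameters extracted from one concrete cut-in corner case.
logical = to_logical_ranges({"ego_speed_mps": 20.0, "cut_in_gap_m": 8.0}, 0.25)
variant = sample_concrete(logical, random.Random(0))
```

Each sampled variant could then be written back into the parameter fields of a scenario file and replayed in simulation.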