A Comprehensive Literature Review on Modular Approaches to Autonomous Driving: Deep Learning for Road and Racing Scenarios

Abstract

Highlights

- A comprehensive analysis of deep learning techniques for both on-road autonomous vehicles and autonomous racing cars, highlighting the distinct challenges and requirements of each context.

- The identification of critical challenges that future research must address to ensure safety and performance in autonomous systems.

- A detailed evaluation of planning methods and performance metrics, which points to opportunities for refining existing methodologies and identifies emerging research areas that can guide the development of more efficient, robust, and scalable autonomous driving technologies.

- The challenges identified in sensor fusion, environmental robustness, and computational efficiency imply that addressing these issues is critical to progress in autonomous systems.

1. Introduction

- This review is the first to compare state-of-the-art deep learning approaches for autonomous driving in on-road and racetrack scenarios.

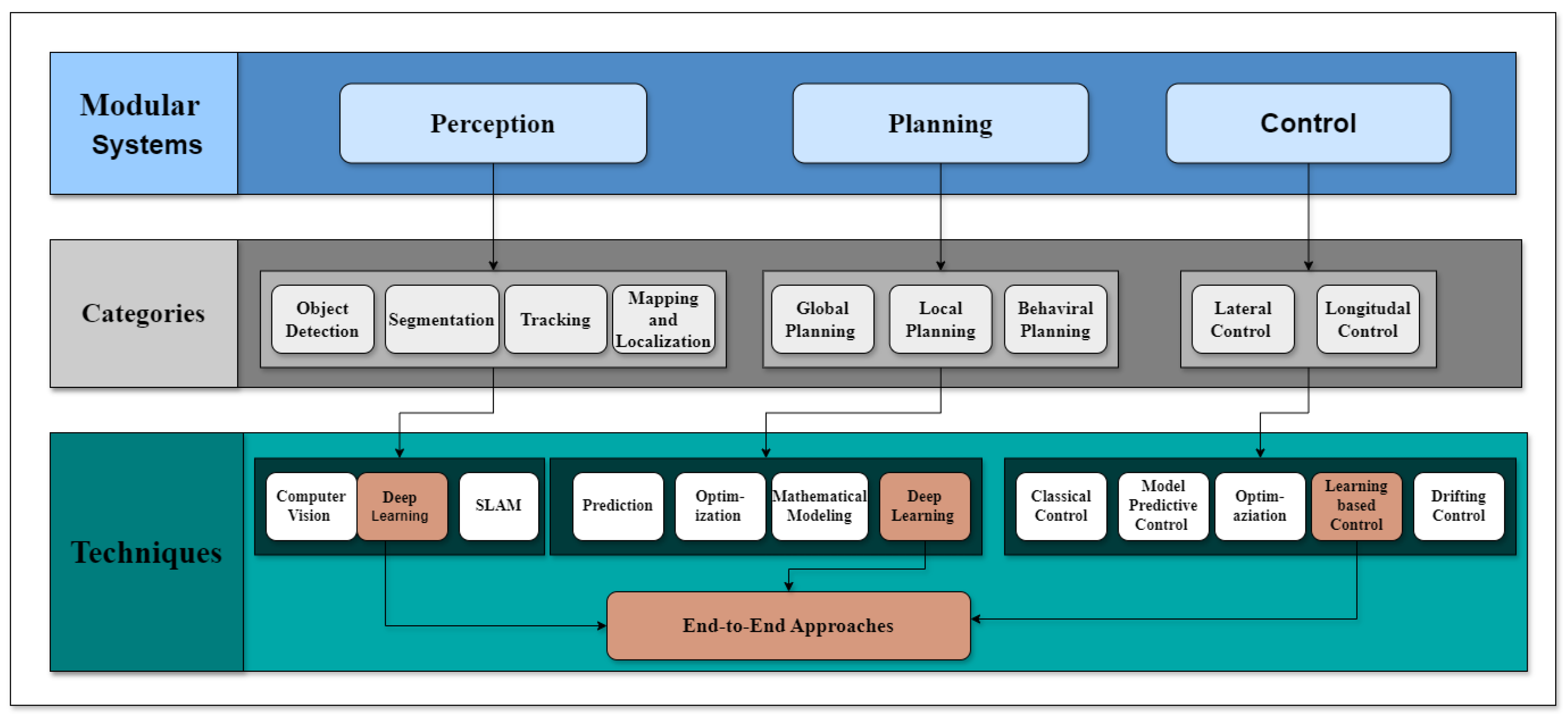

- We highlight existing deep learning approaches for modular systems, including perception, planning, control, end-to-end approaches, and safety.

- We also describe the benchmarks, simulation environments, and real-time platforms used in both scenarios.

- In addition, we discuss evaluation metrics and compare state-of-the-art performance across approaches.

- Finally, we outline open challenges and research directions that emerge from the state of the art.

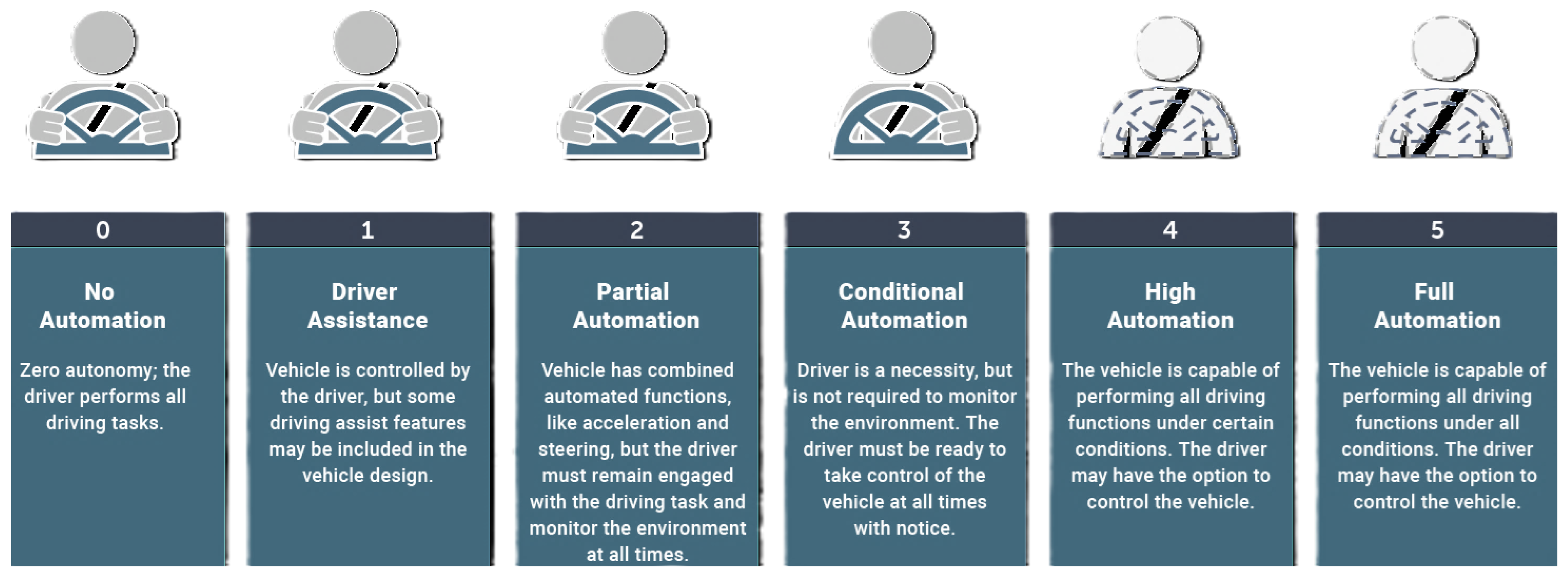

2. Background

2.1. On-Road Autonomous Vehicles

2.2. Autonomous Racing Cars

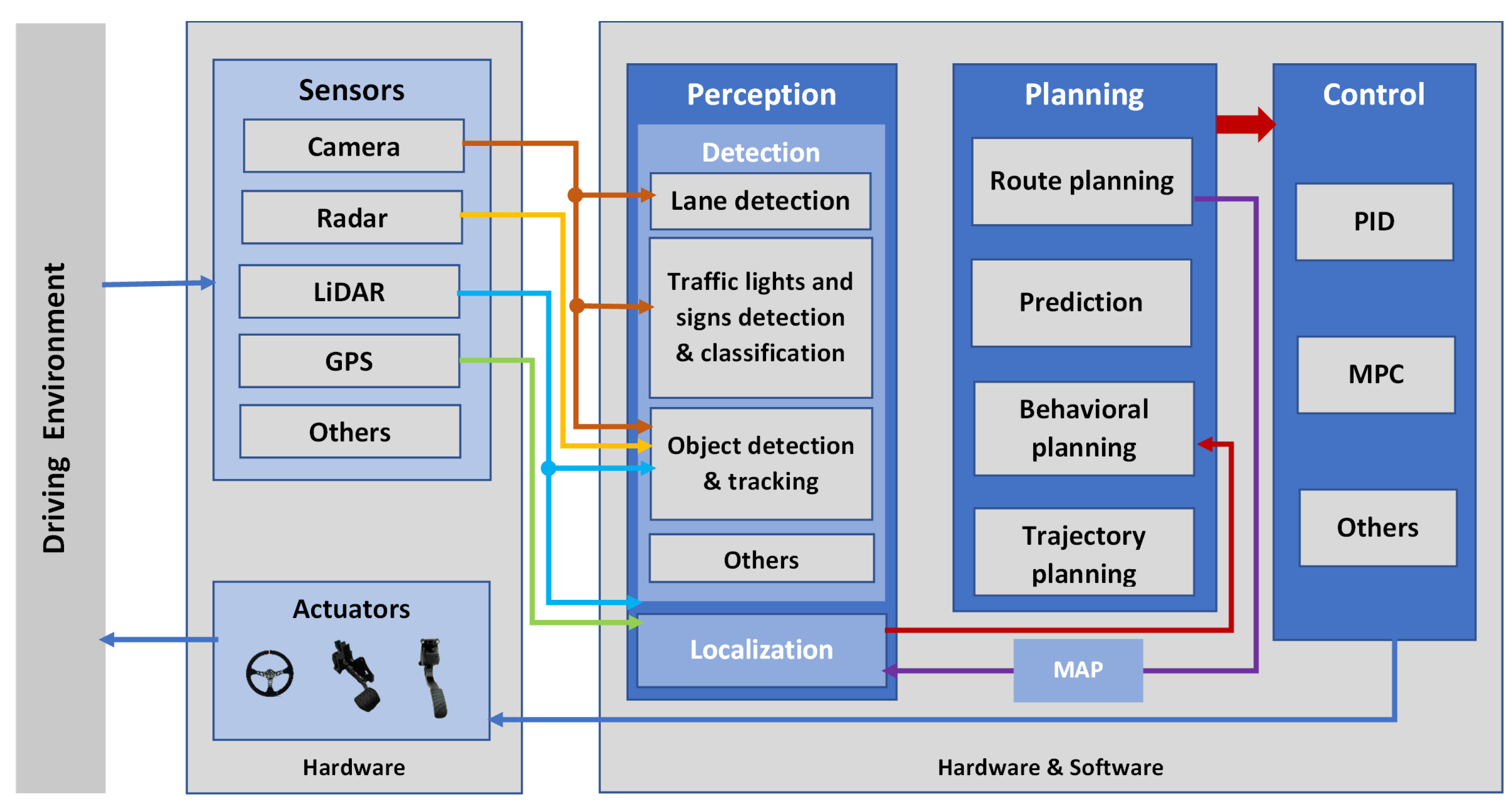

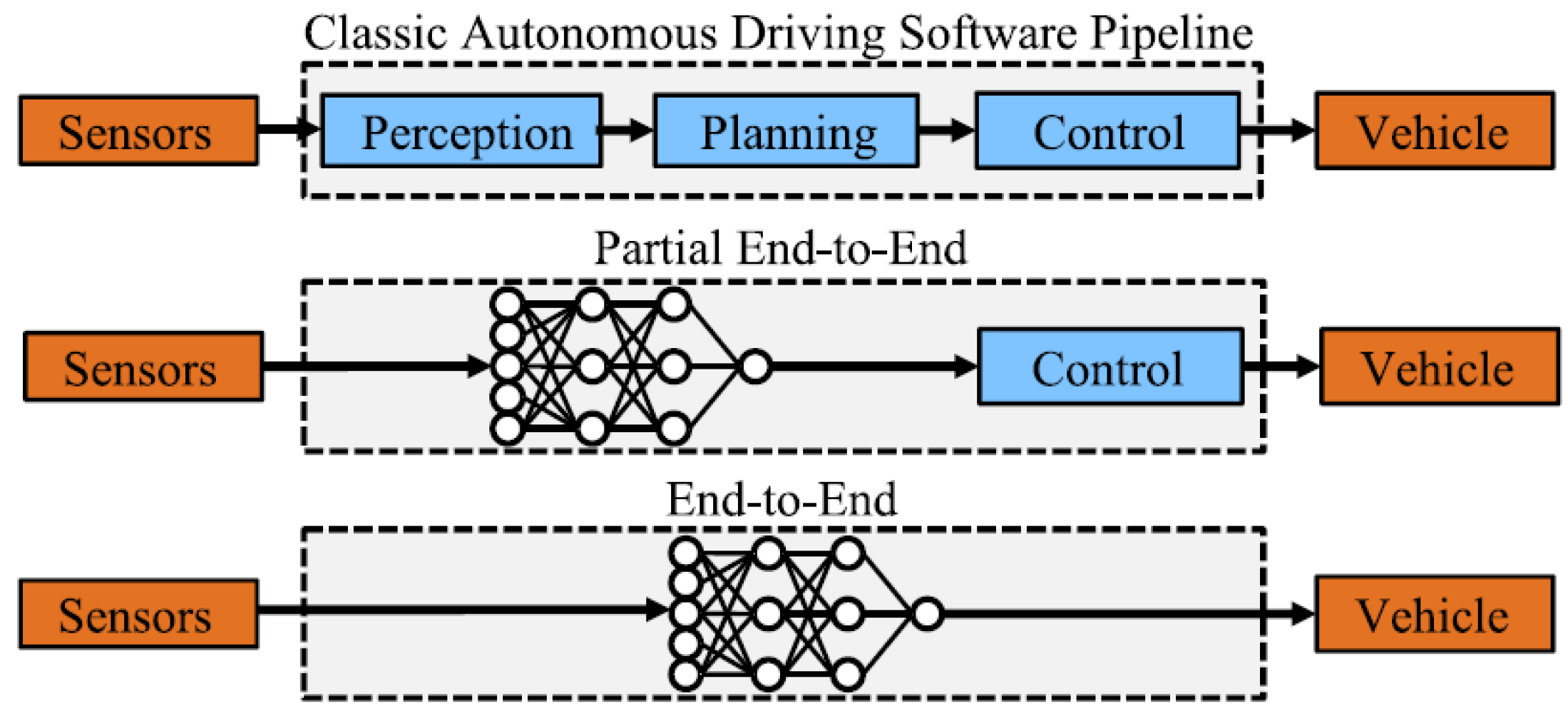

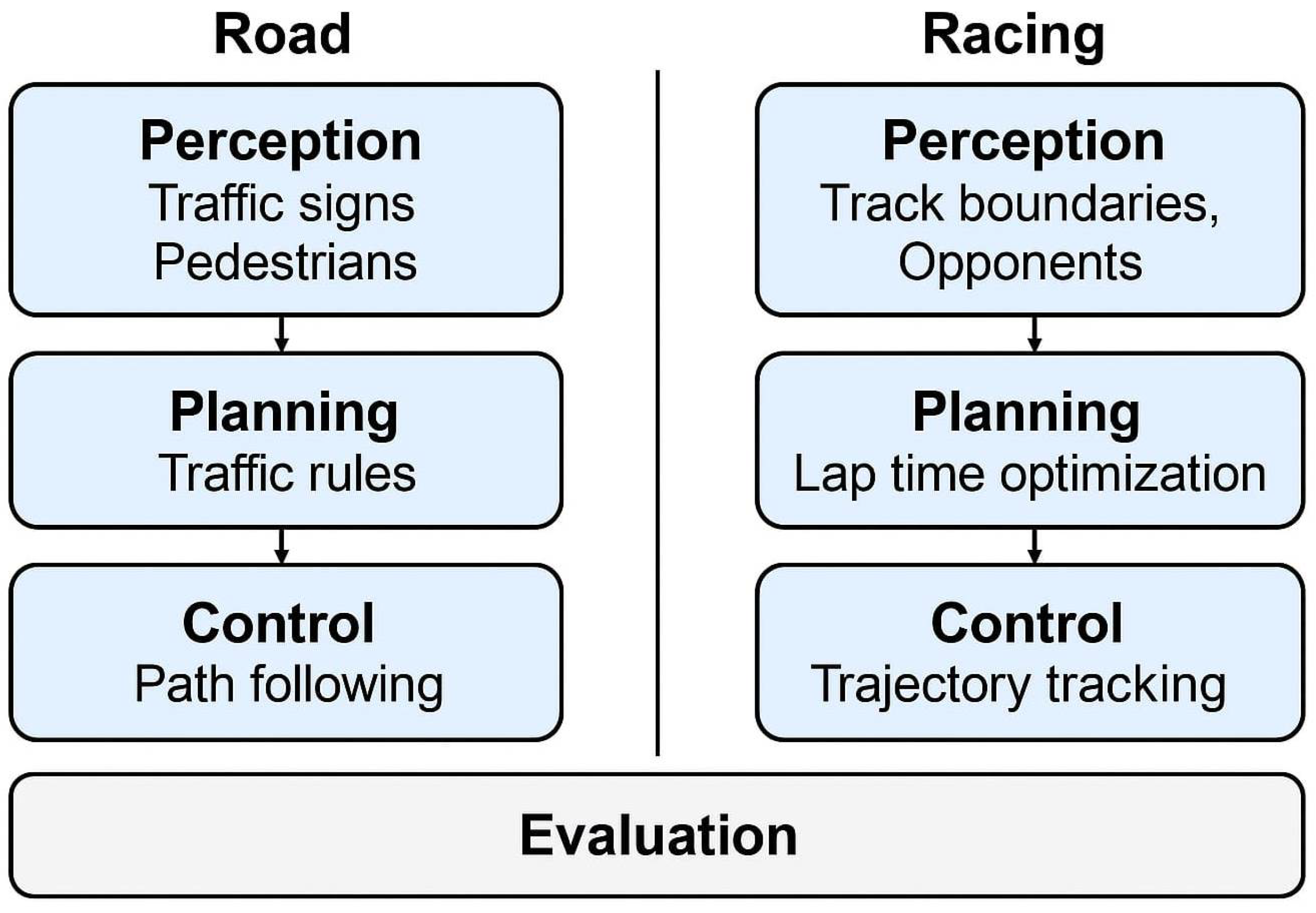

2.3. Modular System

3. Related Work

3.1. Perception-Focused Surveys

3.2. Planning and Trajectory Prediction

3.3. Control Systems

3.4. End-to-End Learning

3.5. Safety in Autonomous Driving

3.6. Large Language Models and Vision-Language Models

3.7. Simulation Modalities in Autonomous Driving Research

3.8. Comparative Context and Scope

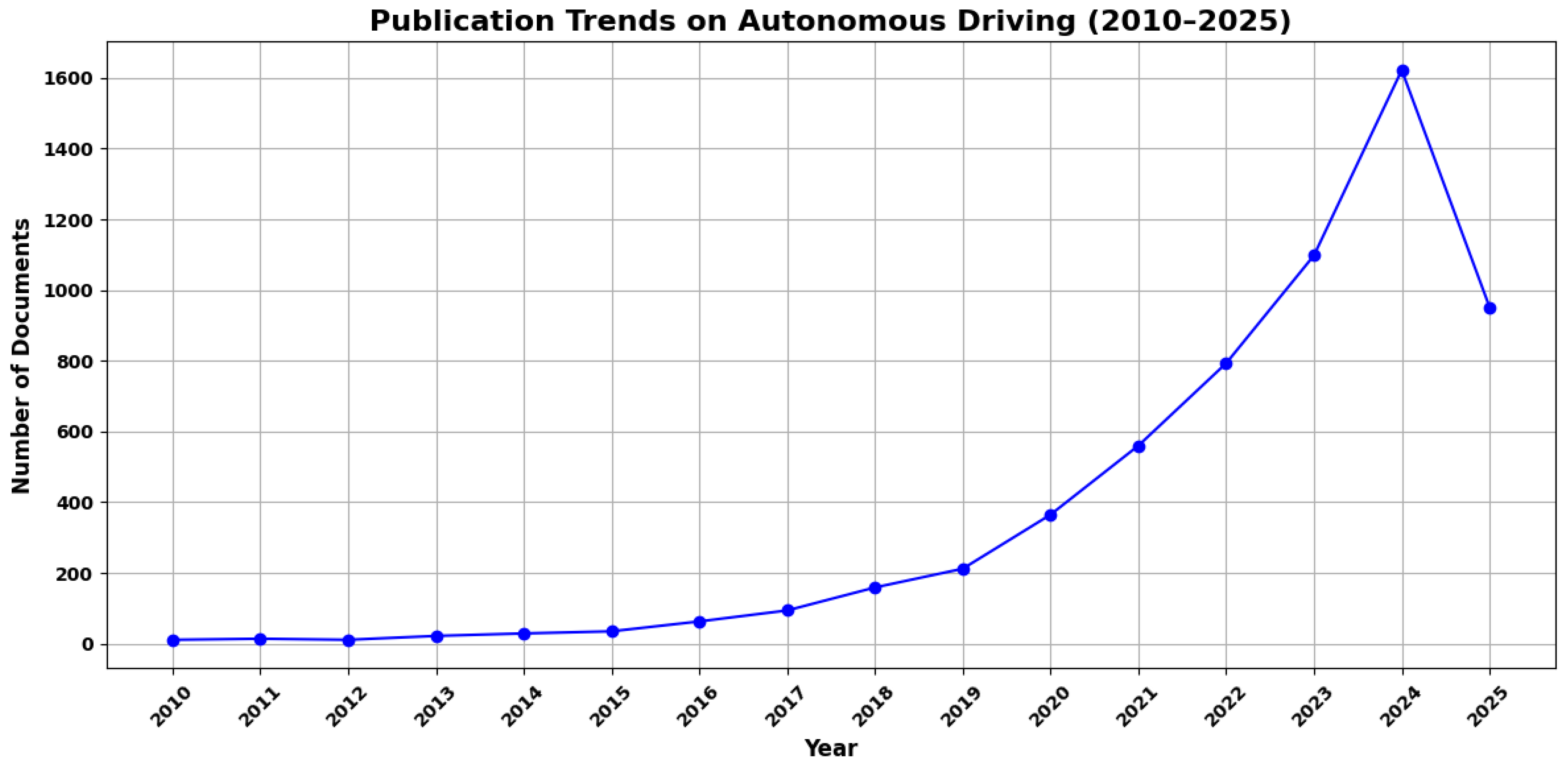

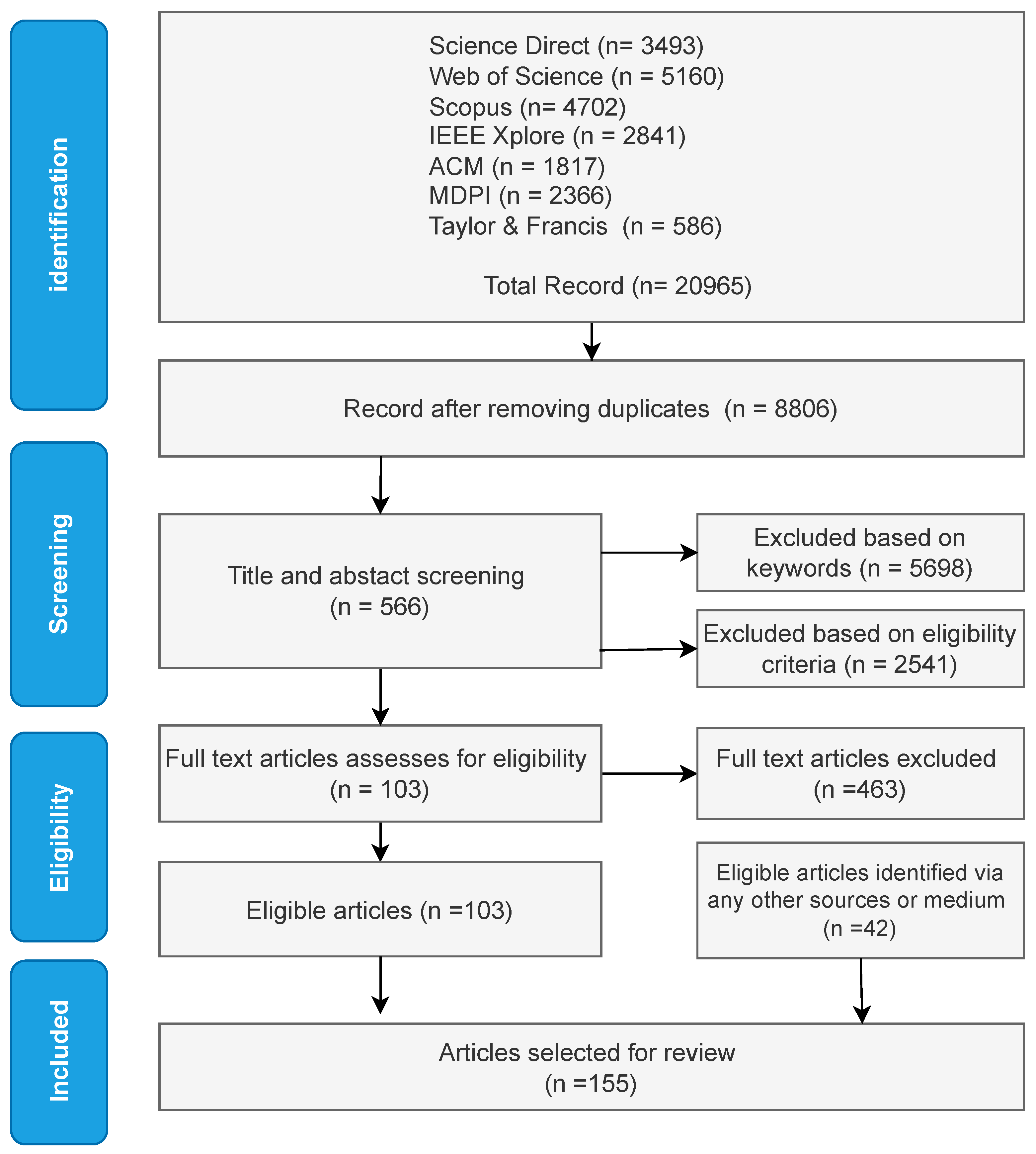

4. Materials and Methods

4.1. Research Motivations

4.2. Research Questions

- RQ1: What are the deep learning approaches used in modular autonomous driving systems on the road and in racing scenarios?

- RQ2: What safety and robustness techniques from machine learning and deep learning are used in autonomous driving on the road and in racing scenarios?

- RQ3: What are the existing datasets used for machine learning/deep learning techniques in autonomous driving?

- RQ4: What performance evaluation metrics are used to evaluate the modular system in autonomous driving on the road and in race scenarios?

4.3. Information Sources and Databases

4.4. Search Strategy and Key Terms

4.5. Eligibility Criteria and Quality Assessment

4.6. Data Extraction

4.7. Risk of Bias Assessment

4.8. Effect Measures

4.9. Reporting Bias Assessment

4.10. Registration and Protocol

5. Results

5.1. Characteristics of the Selected Studies

5.2. Synthesis of Results

6. Major Findings

6.1. RQ1: What Are the Deep Learning Approaches Used in Modular Autonomous Driving Systems on the Road and in Racing Scenarios?

6.1.1. Perception

- On-Road Perception Techniques

- Racing Car Perception Techniques

- Comparative Analysis and Challenges

6.1.2. Planning

- On-Road Path Planning Techniques

- Racing Car Planning Techniques

- Comparative Analysis and Future Research Directions

6.1.3. Control

- Lateral and Longitudinal Control in On-Road Vehicles

- High-Speed Control Strategies in Racing Cars

- Comparative Analysis and Challenges

6.1.4. End-to-End Approaches

- Fully End-to-End Systems: On-Road Scenarios

- Fully End-to-End Systems: Racing Scenarios

- Partially End-to-End Approaches and Hybrid Methods

- Comparative Analysis and Challenges

6.2. RQ2: What Are the Safety and Robustness Machine Learning/Deep Learning Techniques Used in Autonomous Driving on the Road and in Racing Scenarios?

- Safety in Road Scenarios

- Safety Concerns in Racing Scenarios

- Safety in Adversarial and Edge-Case Scenarios

6.3. RQ3: What Are the Existing Datasets Used for Machine Learning/Deep Learning Techniques in Autonomous Driving?

- Real-Time Platforms and Simulations: On-Road Vehicles

- Real-Time Platforms and Simulations: Racing Cars

6.4. RQ4: What Performance Evaluation Metrics Are Used to Evaluate the Modular System in Autonomous Driving on the Road and in Racing Scenarios?

7. Limitations of the Study

8. Real-Time Challenges and Future Directions

8.1. Real-Time Challenges for On-Road and Racing Scenarios

8.1.1. On-Road Autonomous Vehicles

8.1.2. Autonomous Racing Cars

8.2. Research Directions for On-Road and Racing Scenarios

8.2.1. Perception

8.2.2. Planning

8.2.3. Control

8.2.4. End-to-End Approach

8.3. Certainty of Evidence

9. Integration of State-of-the-Art Modules: A Case Study

- Perception module: The perception stage uses PointPillars [15], a 3D object detection framework that efficiently processes LiDAR point clouds for real-time object localization. Because it groups points into vertical columns (pillars) and processes them as a 2D pseudo-image, it achieves the low-latency obstacle detection on which downstream trajectory planning depends. For visual perception, YOLOv5 [94] provides robust, lightweight object detection from RGB cameras, adding redundancy to the multisensor fusion pipeline.

- Planning module: For the planning subsystem, we propose SCOUT [96], a spatiotemporal graph-based model that predicts trajectories with interaction awareness. SCOUT forecasts the future motion of surrounding agents, and these forecasts can then be used to select safe and dynamically feasible paths for the ego vehicle. Its graph-based structure explicitly models interactions among multiple agents, which makes it suitable for complex urban environments.

- Control module: The control layer incorporates robust adaptive learning control (RALC) [134], which is designed to maintain stability despite modeling inaccuracies and external disturbances. Because it combines learning-based disturbance estimation with classical feedback control, RALC is suited to conditions in which the vehicle dynamics are uncertain.

- Deployment environment: To test the system, we propose the CARLA simulator [45], which provides urban, suburban, and highway scenarios. Its simulated sensors (LiDAR, cameras, GPS, and IMU) support realistic sensor fusion as well as scenario-based system evaluation.
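
The case study names the modules but not the interfaces between them. The Python sketch below shows, under heavily simplified and hypothetical assumptions, how one perception, prediction, planning, and control cycle could pass data along the chain. None of the functions implements the cited methods: the `Agent` record is an assumed format for fused PointPillars/YOLOv5 detections, `predict` is a constant-velocity rollout standing in for SCOUT, `plan_speed` is a simple candidate-speed search, and `PISpeedController` is a classical PI loop that only marks where RALC would plug in. The 3 s horizon, 10 m gap, 25 m/s speed cap, and 3.5 m lane width are illustrative values, not figures from the reviewed studies.

```python
from dataclasses import dataclass
from typing import List, Tuple

Trajectory = List[Tuple[float, float]]


@dataclass
class Agent:
    """Fused perception output for one road user (hypothetical format).

    Position [m] and velocity [m/s] in the ego frame: x points forward along
    the ego lane, y points left. In the case study this record would be filled
    from PointPillars (LiDAR) detections fused with YOLOv5 (camera) detections.
    """
    x: float
    y: float
    vx: float
    vy: float


def predict(agents: List[Agent], horizon: float, dt: float) -> List[Trajectory]:
    """Constant-velocity rollout; a placeholder for an interaction-aware
    predictor such as SCOUT. Index 0 of each trajectory is the current state."""
    steps = int(round(horizon / dt))
    return [[(a.x + a.vx * k * dt, a.y + a.vy * k * dt) for k in range(steps + 1)]
            for a in agents]


def plan_speed(predictions: List[Trajectory], dt: float, v_max: float,
               min_gap: float, lane_half_width: float = 1.75) -> float:
    """Return the highest candidate cruise speed that keeps at least `min_gap`
    metres to every in-lane agent that starts ahead of the ego car.

    Simplifications (assumptions): the ego car drives straight along x and
    reaches the candidate speed immediately.
    """
    for i in range(10, -1, -1):  # candidates: v_max, 0.9 * v_max, ..., 0
        v = v_max * i / 10
        safe = True
        for traj in predictions:
            if traj[0][0] <= 0.0:  # agent starts behind the ego car: ignored here
                continue
            for k, (px, py) in enumerate(traj):
                if abs(py) <= lane_half_width and px - v * k * dt < min_gap:
                    safe = False
                    break
            if not safe:
                break
        if safe:
            return v
    return 0.0  # no candidate keeps the gap: request a stop


class PISpeedController:
    """Classical PI speed loop with acceleration saturation; a stand-in for a
    robust adaptive controller such as RALC, not an implementation of it."""

    def __init__(self, kp: float = 0.8, ki: float = 0.1, a_limit: float = 3.0):
        self.kp, self.ki, self.a_limit = kp, ki, a_limit
        self.integral = 0.0

    def command(self, v_ref: float, v: float, dt: float) -> float:
        """Return an acceleration command [m/s^2] clipped to +/- a_limit."""
        error = v_ref - v
        self.integral += error * dt
        accel = self.kp * error + self.ki * self.integral
        return max(-self.a_limit, min(self.a_limit, accel))


def pipeline_step(agents: List[Agent], ego_speed: float,
                  controller: PISpeedController, dt: float = 0.1,
                  horizon: float = 3.0, v_max: float = 25.0,
                  min_gap: float = 10.0) -> Tuple[float, float]:
    """One perception -> prediction -> planning -> control cycle."""
    predictions = predict(agents, horizon, dt)
    v_ref = plan_speed(predictions, dt, v_max, min_gap)
    return v_ref, controller.command(v_ref, ego_speed, dt)


if __name__ == "__main__":
    lead = Agent(x=30.0, y=0.0, vx=10.0, vy=0.0)  # slower car 30 m ahead
    v_ref, accel = pipeline_step([lead], ego_speed=20.0,
                                 controller=PISpeedController())
    print(v_ref, accel)  # 15.0 -3.0
```

With a slower vehicle 30 m ahead at 10 m/s and the ego car at 20 m/s, 15 m/s is the highest candidate speed that preserves the 10 m gap over the 3 s horizon, and the PI command saturates at the assumed 3 m/s² braking limit. Keeping each stage behind a narrow function boundary is what would allow a learned component (for example, SCOUT in place of `predict`) to be swapped in without changing the other modules.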

10. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AD | Autonomous Driving |
| ADAS | Advanced Driver-Assistance Systems |
| ADE | Average Displacement Error |
| AI | Artificial Intelligence |
| ANN | Artificial Neural Network |
| APF | Artificial Potential Field |
| AUC | Area Under the Curve |
| BAT | Behavior-Aware Trajectory prediction model |
| BRPPO | Balanced Reward-inspired Proximal Policy Optimization |
| CLIP | Contrastive Language–Image Pre-training |
| CNN | Convolutional Neural Network |
| DARPA | Defense Advanced Research Projects Agency |
| DBF | Distance Between Boundary Failures |
| DDPG | Deep Deterministic Policy Gradient |
| DIRL | Deep Imitative Reinforcement Learning |
| DLC | Deep Latent Competition |
| DMP | Dynamic Movement Primitives |
| DRL | Deep Reinforcement Learning |
| FCOS | Fully Convolutional One-Stage Object Detection |
| FDE | Final Displacement Error |
| FIM | Failure Prediction and Intervention Module |
| GAN | Generative Adversarial Network |
| GCN | Graph Convolutional Network |
| GNN | Graph Neural Network |
| GPS | Global Positioning System |
| GVQA | Graph Visual Question Answering |
| HIL | Hardware-in-the-Loop |
| HJ | Hamilton–Jacobi |
| HRL | Hierarchical Reinforcement Learning |
| IoU | Intersection over Union |
| IRL | Inverse Reinforcement Learning |
| L2R | Learn-to-Race |
| LiDAR | Light Detection and Ranging |
| LLM | Large Language Model |
| LMPC | Learning Model Predictive Control |
| LMVO | Learned Model Velocity Optimization |
| LPV | Linear Parameter Varying |
| LQNG | Linear-Quadratic Nash Game |
| LSTM | Long Short-Term Memory |
| MAE | Mean Absolute Error |
| MAPF | Modified Artificial Potential Field |
| MDP | Markov Decision Process |
| mIoU | Mean Intersection over Union |
| MLLM | Multimodal Large Language Model |
| MPC | Model Predictive Control |
| MPPI | Model Predictive Path Integral |
| MSE | Mean Squared Error |
| NHTSA | National Highway Traffic Safety Administration |
| RALC | Robust Adaptive Learning Control |
| R-CNN | Region-based Convolutional Neural Network |
| RL | Reinforcement Learning |
| RMSE | Root Mean Square Error |
| RPL | Residual Policy Learning |
| SAE | Society of Automotive Engineers |
| SLAM | Simultaneous Localization and Mapping |
| SSD | Single Shot MultiBox Detector |
| TBF | Mean Time Between Boundary Failures |
| TFR | Trajectory Fit Ratio |
| TrAAD | Traffic-Aware Autonomous Driving |
| V2V | Vehicle-to-Vehicle Communication |
| V2X | Vehicle-to-Everything Communication |
| VGG | Visual Geometry Group |
| VLM | Vision-Language Model |
| VLP | Vision-Language Planning |
| VNP | Versatile Network Pruning |
| YOLO | You Only Look Once |

References

- World Health Organization. Global Health Estimates 2019: Deaths by Cause, Age, Sex, by Country and by Region. 2020. Available online: https://injuryfacts.nsc.org/international/international-overview/ (accessed on 10 September 2024).

- Yasin, Y.; Grivna, M.; Abu-Zidan, F. Motorized 2–3 wheelers death rates over a decade: A global study. World J. Emerg. Surg. 2022, 17, 7.

- Francis, J.; Chen, B.; Ganju, S.; Kathpal, S.; Poonganam, J.; Shivani, A.; Vyas, V.; Genc, S.; Zhukov, I.; Kumskoy, M.; et al. Learn-to-Race Challenge 2022: Benchmarking Safe Learning and Cross-domain Generalisation in Autonomous Racing. arXiv 2022, arXiv:2205.02953.

- Zhao, J.; Zhao, W.; Deng, B.; Wang, Z.; Zhang, F.; Zheng, W.; Cao, W.; Nan, J.; Lian, Y.; Burke, A.F. Autonomous driving system: A comprehensive survey. Expert Syst. Appl. 2024, 242, 122836.

- Wadekar, S.N.; Schwartz, B.; Kannan, S.S.; Mar, M.; Manna, R.K.; Chellapandi, V.; Gonzalez, D.J.; Gamal, A.E. Towards End-to-End Deep Learning for Autonomous Racing: On Data Collection and a Unified Architecture for Steering and Throttle Prediction. arXiv 2021, arXiv:2105.01799.

- Bosello, M.; Tse, R.; Pau, G. Train in Austria, Race in Montecarlo: Generalized RL for Cross-Track F1tenth LIDAR-Based Races. In Proceedings of the 2022 IEEE 19th Annual Consumer Communications and Networking Conference (CCNC), Las Vegas, NV, USA, 8–11 January 2022; Volume 19, pp. 290–298.

- Anzalone, L.; Barra, P.; Barra, S.; Castiglione, A.; Nappi, M. An End-to-End Curriculum Learning Approach for Autonomous Driving Scenarios. IEEE Trans. Intell. Transp. Syst. 2022, 23, 19817–19826.

- Fernandes, D.; Silva, A.; Névoa, R.; Simões, C.; Gonzalez, D.; Guevara, M.; Novais, P.; Monteiro, J.; Melo-Pinto, P. Point-cloud based 3D object detection and classification methods for self-driving applications: A survey and taxonomy. Inf. Fusion 2021, 68, 161–191.

- Dickmanns, E.; Zapp, A. Autonomous High Speed Road Vehicle Guidance by Computer Vision. IFAC Proc. Vol. 1987, 20, 221–226.

- Thrun, S.; Montemerlo, M.; Dahlkamp, H.; Stavens, D.; Aron, A.; Diebel, J.; Fong, P.; Gale, J.; Halpenny, M.; Hoffmann, G.; et al. Stanley: The robot that won the DARPA Grand Challenge. J. Field Robot. 2006, 23, 661–692.

- Betz, J.; Betz, T.; Fent, F.; Geisslinger, M.; Heilmeier, A.; Hermansdorfer, L.; Herrmann, T.; Huch, S.; Karle, P.; Lienkamp, M.; et al. TUM Autonomous Motorsport: An Autonomous Racing Software for the Indy Autonomous Challenge. arXiv 2022, arXiv:2205.15979.

- Huang, Y.; Chen, Y. Autonomous Driving with Deep Learning: A Survey of State-of-Art Technologies. arXiv 2020, arXiv:2006.06091.

- Morooka, F.E.; Junior, A.M.; Sigahi, T.F.A.C.; Pinto, J.d.S.; Rampasso, I.S.; Anholon, R. Deep Learning and Autonomous Vehicles: Strategic Themes, Applications, and Research Agenda Using SciMAT and Content-Centric Analysis, a Systematic Review. Mach. Learn. Knowl. Extr. 2023, 5, 763–781.

- Golroudbari, A.A.; Sabour, M.H. Recent Advancements in Deep Learning Applications and Methods for Autonomous Navigation: A Comprehensive Review. arXiv 2023, arXiv:2302.11089.

- Jebamikyous, H.H.; Kashef, R. Autonomous Vehicles Perception (AVP) Using Deep Learning: Modeling, Assessment, and Challenges. IEEE Access 2022, 10, 10523–10535.

- Jiang, Y.; Hsiao, T. Deep Learning in Perception of Autonomous Vehicles. In Proceedings of the 2021 International Conference on Public Art and Human Development (ICPAHD 2021), Kunming, China, 24–26 December 2021; Atlantis Press: Dordrecht, The Netherlands, 2022; pp. 561–565.

- Delecki, H.; Itkina, M.; Lange, B.; Senanayake, R.; Kochenderfer, M.J. How Do We Fail? Stress Testing Perception in Autonomous Vehicles. arXiv 2022, arXiv:2203.14155.

- Gupta, A.; Anpalagan, A.; Guan, L.; Khwaja, A.S. Deep learning for object detection and scene perception in self-driving cars: Survey, challenges, and open issues. Array 2021, 10, 100057.

- Qian, R.; Lai, X.; Li, X. 3D Object Detection for Autonomous Driving: A Survey. Pattern Recognit. 2022, 130, 108796.

- Mao, J.; Shi, S.; Wang, X.; Li, H. 3D Object Detection for Autonomous Driving: A Review and New Outlooks. arXiv 2022, arXiv:2206.09474.

- Ma, X.; Ouyang, W.; Simonelli, A.; Ricci, E. 3D Object Detection from Images for Autonomous Driving: A Survey. arXiv 2022, arXiv:2202.02980.

- Large, N.L.; Bieder, F.; Lauer, M. Comparison of different SLAM approaches for a driverless race car. tm Tech. Mess. 2021, 88, 227–236.

- Abaspur Kazerouni, I.; Fitzgerald, L.; Dooly, G.; Toal, D. A survey of state-of-the-art on visual SLAM. Expert Syst. Appl. 2022, 205, 117734.

- Teng, S.; Hu, X.; Deng, P.; Li, B.; Li, Y.; Ai, Y.; Yang, D.; Li, L.; Xuanyuan, Z.; Zhu, F.; et al. Motion Planning for Autonomous Driving: The State of the Art and Future Perspectives. IEEE Trans. Intell. Veh. 2023, 8, 3692–3711.

- Abdallaoui, S.; Aglzim, E.H.; Chaibet, A.; Kribèche, A. Thorough Review Analysis of Safe Control of Autonomous Vehicles: Path Planning and Navigation Techniques. Energies 2022, 15, 1358.

- Li, S.; Shu, K.; Chen, C.; Cao, D. Planning and Decision-making for Connected Autonomous Vehicles at Road Intersections: A Review. Chin. J. Mech. Eng. 2021, 34, 133.

- Zhou, H.; Laval, J.; Zhou, A.; Wang, Y.; Wu, W.; Qing, Z.; Peeta, S. Review of Learning-Based Longitudinal Motion Planning for Autonomous Vehicles: Research Gaps Between Self-Driving and Traffic Congestion. Transp. Res. Rec. 2022, 2676, 324–341.

- Bachute, M.R.; Subhedar, J.M. Autonomous Driving Architectures: Insights of Machine Learning and Deep Learning Algorithms. Mach. Learn. Appl. 2021, 6, 100164.

- Khanum, A.; Lee, C.Y.; Yang, C.S. Involvement of Deep Learning for Vision Sensor-Based Autonomous Driving Control: A Review. IEEE Sensors J. 2023, 23, 15321–15341.

- Kalandyk, D. Reinforcement learning in car control: A brief survey. In Proceedings of the 2021 Selected Issues of Electrical Engineering and Electronics (WZEE), Rzeszow, Poland, 13–15 September 2021; pp. 1–8.

- Tampuu, A.; Matiisen, T.; Semikin, M.; Fishman, D.; Muhammad, N. A Survey of End-to-End Driving: Architectures and Training Methods. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 1364–1384.

- Le Mero, L.; Yi, D.; Dianati, M.; Mouzakitis, A. A Survey on Imitation Learning Techniques for End-to-End Autonomous Vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 14128–14147.

- Coelho, D.; Oliveira, M. A Review of End-to-End Autonomous Driving in Urban Environments. IEEE Access 2022, 10, 75296–75311.

- Huang, R.N.; Ren, J.; Gabbar, H.A. The Current Trends of Deep Learning in Autonomous Vehicles: A Review. J. Eng. Res. Sci. 2022, 1, 56–68.

- Razi, A.; Chen, X.; Li, H.; Wang, H.; Russo, B.; Chen, Y.; Yu, H. Deep learning serves traffic safety analysis: A forward-looking review. IET Intell. Transp. Syst. 2023, 17, 22–71.

- Muhammad, K.; Ullah, A.; Lloret, J.; Ser, J.D.; de Albuquerque, V.H.C. Deep Learning for Safe Autonomous Driving: Current Challenges and Future Directions. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4316–4336.

- Deng, Y.; Zhang, T.; Lou, G.; Zheng, X.; Jin, J.; Han, Q. Deep Learning-Based Autonomous Driving Systems: A Survey of Attacks and Defenses. arXiv 2021, arXiv:2104.01789v2.

- Hou, L.; Chen, H.; Zhang, G.K.; Wang, X. Deep Learning-Based Applications for Safety Management in the AEC Industry: A Review. Appl. Sci. 2021, 11, 821.

- Li, Y.; Katsumata, K.; Javanmardi, E.; Tsukada, M. Large Language Models for Human-like Autonomous Driving: A Survey. arXiv 2024, arXiv:2407.19280.

- Yang, Z.; Jia, X.; Li, H.; Yan, J. LLM4Drive: A Survey of Large Language Models for Autonomous Driving. arXiv 2024, arXiv:2311.01043.

- Cui, C.; Ma, Y.; Cao, X.; Ye, W.; Zhou, Y.; Liang, K.; Chen, J.; Lu, J.; Yang, Z.; Liao, K.D.; et al. A Survey on Multimodal Large Language Models for Autonomous Driving. arXiv 2023, arXiv:2311.12320.

- Zhou, X.; Liu, M.; Yurtsever, E.; Zagar, B.L.; Zimmer, W.; Cao, H.; Knoll, A.C. Vision Language Models in Autonomous Driving: A Survey and Outlook. arXiv 2024, arXiv:2310.14414.

- Cui, Y.; Huang, S.; Zhong, J.; Liu, Z.; Wang, Y.; Sun, C.; Li, B.; Wang, X.; Khajepour, A. DriveLLM: Charting the Path Toward Full Autonomous Driving With Large Language Models. IEEE Trans. Intell. Veh. 2024, 9, 1450–1464.

- Zheng, P.; Zhao, Y.; Gong, Z.; Zhu, H.; Wu, S. SimpleLLM4AD: An End-to-End Vision-Language Model with Graph Visual Question Answering for Autonomous Driving. arXiv 2024, arXiv:2407.21293.

- Kaur, R.; Arora, A.; Nayyar, A.; Rani, S. A survey on simulators for testing self-driving cars. Comput. Mater. Contin. 2021, 66, 1043–1062.

- Zhang, T.; Liu, H.; Wang, W.; Wang, X. Virtual Tools for Testing Autonomous Driving: A Survey and Benchmark of Simulators, Datasets, and Competitions. Electronics 2024, 13, 3486.

- Shen, Y.; Chandaka, B.; Lin, Z.; Zhai, A.; Cui, H.; Forsyth, D.; Wang, S. Sim-on-Wheels: Physical World in the Loop Simulation for Self-Driving. IEEE Robot. Autom. Lett. 2023, 8, 8192–8199.

- Caleffi, F.; Rodrigues, L.; Stamboroski, J.; Pereira, B. Small-scale self-driving cars: A systematic literature review. J. Traffic Transp. Eng. 2024, 11, 170–188.

- Fremont, D.; Kim, E.; Pant, Y.; Seshia, S.; Acharya, A.; Bruso, X.; Wells, P.; Lemke, S.; Lu, Q.; Mehta, S. Formal Scenario-Based Testing of Autonomous Vehicles: From Simulation to the Real World. In Proceedings of the 23rd International Conference on Intelligent Transportation, Rhodes, Greece, 20–23 September 2023; Volume 108, pp. 1211–1230.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Howe, M.; Bockman, J.; Orenstein, A.; Podgorski, S.; Bahrami, S.; Reid, I. The Edge of Disaster: A Battle Between Autonomous Racing and Safety. arXiv 2022, arXiv:2206.15012.

- Zhou, Y.; Wen, S.; Wang, D.; Meng, J.; Mu, J.; Irampaye, R. MobileYOLO: Real-Time Object Detection Algorithm in Autonomous Driving Scenarios. Sensors 2022, 22, 3349.

- Cai, Y.; Luan, T.; Gao, H.; Wang, H.; Chen, L.; Li, Y.; Sotelo, M.A.; Li, Z. YOLOv4-5D: An Effective and Efficient Object Detector for Autonomous Driving. IEEE Trans. Instrum. Meas. 2021, 70, 3065438.

- Mahaur, B.; Mishra, K.; Kumar, A. An improved lightweight small object detection framework applied to real-time autonomous driving. Expert Syst. Appl. 2023, 234, 121036.

- Yang, M.; Fan, X. YOLOv8-Lite: A Lightweight Object Detection Model for Real-time Autonomous Driving Systems. IECE Trans. Emerg. Top. Artif. Intell. 2024, 1, 1–16.

- Jia, X.; Tong, Y.; Qiao, H.; Li, M.; Tong, J.; Liang, B. Fast and accurate object detector for autonomous driving based on improved YOLOv5. Sci. Rep. 2023, 13, 9711.

- Ranasinghe, P.; Muthukuda, D.; Morapitiya, P.; Dissanayake, M.B.; Lakmal, H. Deep Learning Based Low Light Enhancements for Advanced Driver-Assistance Systems at Night. In Proceedings of the 2023 IEEE 17th International Conference on Industrial and Information Systems (ICIIS), Peradeniya, Sri Lanka, 25–26 August 2023; pp. 489–494.

- Karagounis, A. Leveraging Large Language Models for Enhancing Autonomous Vehicle Perception. arXiv 2024, arXiv:2412.20230.

- Ananthajothi, K.; Satyaa Sudarshan, G.S.; Saran, J.U. LLM’s for Autonomous Driving: A New Way to Teach Machines to Drive. In Proceedings of the 2023 3rd International Conference on Mobile Networks and Wireless Communications (ICMNWC), Tumkur, India, 4–5 December 2023; pp. 1–6.

- Elhenawy, M.; Ashqar, H.I.; Rakotonirainy, A.; Alhadidi, T.I.; Jaber, A.; Tami, M.A. Vision-Language Models for Autonomous Driving: CLIP-Based Dynamic Scene Understanding. Electronics 2025, 14, 1282.

- Guo, Z.; Yagudin, Z.; Lykov, A.; Konenkov, M.; Tsetserukou, D. VLM-Auto: VLM-based Autonomous Driving Assistant with Human-like Behavior and Understanding for Complex Road Scenes. arXiv 2024, arXiv:2405.05885.

- Mohapatra, S.; Yogamani, S.; Gotzig, H.; Milz, S.; Mader, P. BEVDetNet: Bird’s Eye View LiDAR Point Cloud based Real-time 3D Object Detection for Autonomous Driving. In Proceedings of the 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 2809–2815.

- Zhou, Y.; Wen, S.; Wang, D.; Mu, J.; Richard, I. Object Detection in Autonomous Driving Scenarios Based on an Improved Faster-RCNN. Appl. Sci. 2021, 11, 11630.

- Shi, Y.; Guo, Y.; Mi, Z.; Li, X. Stereo CenterNet-based 3D object detection for autonomous driving. Neurocomputing 2022, 471, 219–229.

- An, K.; Chen, Y.; Wang, S.; Xiao, Z. RCBi-CenterNet: An Absolute Pose Policy for 3D Object Detection in Autonomous Driving. Appl. Sci. 2021, 11, 5621.

- Chen, Y.N.; Dai, H.; Ding, Y. Pseudo-Stereo for Monocular 3D Object Detection in Autonomous Driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 887–897.

- Nobis, F.; Geisslinger, M.; Weber, M.; Betz, J.; Lienkamp, M. A Deep Learning-based Radar and Camera Sensor Fusion Architecture for Object Detection. arXiv 2020, arXiv:2005.07431.

- Zhang, J.; Cao, J.; Chang, J.; Li, X.; Liu, H.; Li, Z. Research on the Application of Computer Vision Based on Deep Learning in Autonomous Driving Technology. arXiv 2024, arXiv:2406.00490.

- Zhao, X. Deep learning based visual perception and decision-making technology for autonomous vehicles. Appl. Comput. Eng. 2024, 33, 191–200.

- Chen, S.; Zhang, Z.; Zhong, R.; Zhang, L.; Ma, H.; Liu, L. A Dense Feature Pyramid Network-Based Deep Learning Model for Road Marking Instance Segmentation Using MLS Point Clouds. IEEE Trans. Geosci. Remote Sens. 2021, 59, 784–800.

- Shao, X.; Wang, Q.; Yang, W.; Chen, Y.; Xie, Y.; Shen, Y.; Wang, Z. Multi-Scale Feature Pyramid Network: A Heavily Occluded Pedestrian Detection Network Based on ResNet. Sensors 2021, 21, 1820.

- Liu, J.; Zhou, W.; Cui, Y.; Yu, L.; Luo, T. GCNet: Grid-like context-aware network for RGB-thermal semantic segmentation. Neurocomputing 2022, 506, 60–67.

- Bai, J.; Zhu, J.; Song, Y.; Zhao, L.; Hou, Z.; Du, R.; Li, H. A3t-gcn: Attention temporal graph convolutional network for traffic forecasting. ISPRS Int. J. Geo-Inf. 2021, 10, 485.

- Mseddi, W.; Sedrine, M.A.; Attia, R. YOLOv5 Based Visual Localization For Autonomous Vehicles. In Proceedings of the 2021 29th European Signal Processing Conference (EUSIPCO), Dublin, Ireland, 23–27 August 2021.

- Wenzel, P.; Wang, R.; Yang, N.; Cheng, Q.; Khan, Q.; von Stumberg, L.; Zeller, N.; Cremers, D. 4Seasons: A Cross-Season Dataset for Multi-Weather SLAM in Autonomous Driving. In Proceedings of the Pattern Recognition: 42nd DAGM German Conference, DAGM GCPR 2020, Tübingen, Germany, 28 September–1 October 2020; Akata, Z., Geiger, A., Sattler, T., Eds.; Springer: Cham, Switzerland, 2021; pp. 404–417.

- Gallagher, L.; Ravi Kumar, V.; Yogamani, S.; McDonald, J.B. A Hybrid Sparse-Dense Monocular SLAM System for Autonomous Driving. In Proceedings of the 2021 European Conference on Mobile Robots (ECMR), Bonn, Germany, 31 August–3 September 2021; pp. 1–8. [Google Scholar] [CrossRef]

- Zhang, T. Perception Stack for Indy Autonomous Challenge and Reinforcement Learning in Simulation Autonomous Racing. Technical Report No. UCB/EECS-2023-187, 2 May 2023. Available online: https://www2.eecs.berkeley.edu/Pubs/TechRpts/2023/EECS-2023-187.pdf (accessed on 16 July 2024).

- Balakrishnan, A.; Ramana, K.; Dhiman, G.; Ashok, G.; Bhaskar, V.; Sharma, A.; Gaba, G.S.; Masud, M.; Al-Amri, J.F. Multimedia Concepts on Object Detection and Recognition with F1 Car Simulation Using Convolutional Layers. Wirel. Commun. Mob. Comput. 2021, 2021, 5543720. [Google Scholar] [CrossRef]

- Teeti, I.; Musat, V.; Khan, S.; Rast, A.; Cuzzolin, F.; Bradley, A. Vision in adverse weather: Augmentation using CycleGANs with various object detectors for robust perception in autonomous racing. arXiv 2022, arXiv:2201.03246. [Google Scholar]

- Katsamenis, I.; Karolou, E.E.; Davradou, A.; Protopapadakis, E.; Doulamis, A.; Doulamis, N.; Kalogeras, D. TraCon: A novel dataset for real-time traffic cones detection using deep learning. arXiv 2022, arXiv:2205.11830. [Google Scholar]

- Strobel, K.; Zhu, S.; Chang, R.; Koppula, S. Accurate, Low-Latency Visual Perception for Autonomous Racing: Challenges, Mechanisms, and Practical Solutions. arXiv 2020, arXiv:2007.13971. [Google Scholar]

- Peng, W.; Ao, Y.; He, J.; Wang, P. Vehicle Odometry with Camera-Lidar-IMU Information Fusion and Factor-Graph Optimization. J. Intell. Robotic Syst. 2021, 101, 81. [Google Scholar] [CrossRef]

- Karle, P.; Fent, F.; Huch, S.; Sauerbeck, F.; Lienkamp, M. Multi-Modal Sensor Fusion and Object Tracking for Autonomous Racing. IEEE Trans. Intell. Veh. 2023, 8, 3871–3883. [Google Scholar] [CrossRef]

- Carranza-García, M.; Torres-Mateo, J.; Lara-Benítez, P.; García-Gutiérrez, J. On the Performance of One-Stage and Two-Stage Object Detectors in Autonomous Vehicles Using Camera Data. Remote Sens. 2021, 13, 89. [Google Scholar] [CrossRef]

- Liu, Y.; Diao, S. An automatic driving trajectory planning approach in complex traffic scenarios based on integrated driver style inference and deep reinforcement learning. PLoS ONE 2024, 19, e0297192. [Google Scholar] [CrossRef] [PubMed]

- Gupta, P.; Isele, D.; Bae, S. Towards Scalable and Efficient Interaction-Aware Planning in Autonomous Vehicles using Knowledge Distillation. arXiv 2024, arXiv:2404.01746. [Google Scholar]

- Hui, F.; Wei, C.; ShangGuan, W.; Ando, R.; Fang, S. Deep encoder–decoder-NN: A deep learning-based autonomous vehicle trajectory prediction and correction model. Phys. A Stat. Mech. Appl. 2022, 593, 126869. [Google Scholar] [CrossRef]

- Zhang, Z. ResNet-Based Model for Autonomous Vehicles Trajectory Prediction. In Proceedings of the 2021 IEEE International Conference on Consumer Electronics and Computer Engineering (ICCECE), Guangzhou, China, 15–17 January 2021; pp. 565–568. [Google Scholar] [CrossRef]

- Paz, D.; Zhang, H.; Christensen, H.I. TridentNet: A Conditional Generative Model for Dynamic Trajectory Generation. In Proceedings of the Intelligent Autonomous Systems 16; Ang, M.H., Jr., Asama, H., Lin, W., Foong, S., Eds.; Springer: Cham, Switzerland, 2022; pp. 403–416. [Google Scholar]

- Cai, P.; Sun, Y.; Wang, H.; Liu, M. VTGNet: A Vision-Based Trajectory Generation Network for Autonomous Vehicles in Urban Environments. IEEE Trans. Intell. Veh. 2021, 6, 419–429. [Google Scholar] [CrossRef]

- Liu, X.; Wang, Y.; Zhou, Z.; Nam, K.; Wei, C.; Yin, C. Trajectory Prediction of Preceding Target Vehicles Based on Lane Crossing and Final Points Generation Model Considering Driving Styles. IEEE Trans. Veh. Technol. 2021, 70, 8720–8730. [Google Scholar] [CrossRef]

- Zhang, Q.; Hu, S.; Sun, J.; Chen, Q.A.; Mao, Z.M. On Adversarial Robustness of Trajectory Prediction for Autonomous Vehicles. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 15159–15168.

- Wang, J.; Wang, P.; Zhang, C.; Su, K.; Li, J. F-Net: Fusion Neural Network for Vehicle Trajectory Prediction in Autonomous Driving. In Proceedings of the ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 4095–4099.

- Qu, L.; Dailey, M.N. Vehicle Trajectory Estimation Based on Fusion of Visual Motion Features and Deep Learning. Sensors 2021, 21, 7969.

- Lin, L.; Li, W.; Bi, H.; Qin, L. Vehicle Trajectory Prediction Using LSTMs with Spatial-Temporal Attention Mechanisms. IEEE Intell. Transp. Syst. Mag. 2022, 14, 197–208.

- Greer, R.; Deo, N.; Trivedi, M. Trajectory Prediction in Autonomous Driving With a Lane Heading Auxiliary Loss. IEEE Robot. Autom. Lett. 2021, 6, 4907–4914.

- Song, H.; Luan, D.; Ding, W.; Wang, M.Y.; Chen, Q. Learning to Predict Vehicle Trajectories with Model-based Planning. In Proceedings of the 5th Conference on Robot Learning, PMLR, London, UK, 8–11 November 2021; Faust, A., Hsu, D., Neumann, G., Eds.; Volume 164, pp. 1035–1045.

- Chen, X.; Xu, J.; Zhou, R.; Chen, W.; Fang, J.; Liu, C. TrajVAE: A Variational AutoEncoder model for trajectory generation. Neurocomputing 2021, 428, 332–339.

- Li, X.; Rosman, G.; Gilitschenski, I.; Vasile, C.I.; DeCastro, J.A.; Karaman, S.; Rus, D. Vehicle Trajectory Prediction Using Generative Adversarial Network With Temporal Logic Syntax Tree Features. IEEE Robot. Autom. Lett. 2021, 6, 3459–3466.

- Zhang, K.; Zhao, L.; Dong, C.; Wu, L.; Zheng, L. AI-TP: Attention-based Interaction-aware Trajectory Prediction for Autonomous Driving. IEEE Trans. Intell. Veh. 2023, 8, 73–83.

- Sheng, Z.; Xu, Y.; Xue, S.; Li, D. Graph-Based Spatial-Temporal Convolutional Network for Vehicle Trajectory Prediction in Autonomous Driving. IEEE Trans. Intell. Transp. Syst. 2022, 23, 17654–17665.

- Carrasco, S.; Llorca, D.; Fern, E.; Sotelo, M.A. SCOUT: Socially-COnsistent and UndersTandable Graph Attention Network for Trajectory Prediction of Vehicles and VRUs. In Proceedings of the 2021 IEEE Intelligent Vehicles Symposium (IV), Nagoya, Japan, 11–17 July 2021; pp. 1501–1508.

- Jo, E.; Sunwoo, M.; Lee, M. Vehicle Trajectory Prediction Using Hierarchical Graph Neural Network for Considering Interaction among Multimodal Maneuvers. Sensors 2021, 21, 5354.

- Li, F.J.; Zhang, C.Y.; Chen, C.P. Robust decision-making for autonomous vehicles via deep reinforcement learning and expert guidance. Appl. Intell. 2025, 55, 412.

- Liao, H.; Li, Z.; Shen, H.; Zeng, W.; Liao, D.; Li, G.; Li, S.E.; Xu, C. BAT: Behavior-Aware Human-Like Trajectory Prediction for Autonomous Driving. arXiv 2023, arXiv:2312.06371.

- Zhai, F.; Xu, H.; Chen, C.; Zhang, G. Deep Learning Based Approach for Human-like Driving Trajectory Planning. In Proceedings of the 2023 3rd International Conference on Robotics, Automation and Intelligent Control (ICRAIC), Los Alamitos, CA, USA, 22–24 December 2023; pp. 393–397.

- Cai, L.; Guan, H.; Xu, Q.H.; Jia, X.; Zhan, J. A novel behavior planning for human-like autonomous driving. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2025, 0, 09544070241310648.

- Pan, C.; Yaman, B.; Nesti, T.; Mallik, A.; Allievi, A.G.; Velipasalar, S.; Ren, L. VLP: Vision Language Planning for Autonomous Driving. arXiv 2024, arXiv:2401.05577.

- Cui, C.; Ma, Y.; Cao, X.; Ye, W.; Wang, Z. Drive as You Speak: Enabling Human-Like Interaction with Large Language Models in Autonomous Vehicles. arXiv 2023, arXiv:2309.10228.

- He, L.; Aouf, N.; Song, B. Explainable Deep Reinforcement Learning for UAV autonomous path planning. Aerosp. Sci. Technol. 2021, 118, 107052.

- Xu, C.; Zhao, W.; Chen, Q.; Wang, C. An actor-critic based learning method for decision-making and planning of autonomous vehicles. Sci. China E Technol. Sci. 2021, 64, 984–994.

- Cheng, Y.; Hu, X.; Chen, K.; Yu, X.; Luo, Y. Online longitudinal trajectory planning for connected and autonomous vehicles in mixed traffic flow with deep reinforcement learning approach. J. Intell. Transp. Syst. 2023, 27, 396–410.

- Luis, S.Y.; Reina, D.G.; Marín, S.L.T. A Multiagent Deep Reinforcement Learning Approach for Path Planning in Autonomous Surface Vehicles: The Ypacaraí Lake Patrolling Case. IEEE Access 2021, 9, 17084–17099.

- Naveed, K.B.; Qiao, Z.; Dolan, J.M. Trajectory Planning for Autonomous Vehicles Using Hierarchical Reinforcement Learning. In Proceedings of the 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 601–606.

- Yang, J.; Wang, P.; Yuan, W.; Ju, Y.; Han, W.; Zhao, J. Automatic generation of optimal road trajectory for the rescue vehicle in case of emergency on mountain freeway using reinforcement learning approach. IET Intell. Transp. Syst. 2021, 15, 1142–1152.

- Bayerlein, H.; Theile, M.; Caccamo, M.; Gesbert, D. Multi-UAV Path Planning for Wireless Data Harvesting With Deep Reinforcement Learning. IEEE Open J. Commun. Soc. 2021, 2, 1171–1187.

- Jain, A.; Morari, M. Computing the racing line using Bayesian optimization. arXiv 2020, arXiv:2002.04794.

- Ögretmen, L.; Chen, M.; Pitschi, P.; Lohmann, B. Trajectory Planning Using Reinforcement Learning for Interactive Overtaking Maneuvers in Autonomous Racing Scenarios. arXiv 2024, arXiv:2404.10658.

- Cleac’h, S.L.; Schwager, M.; Manchester, Z. LUCIDGames: Online Unscented Inverse Dynamic Games for Adaptive Trajectory Prediction and Planning. arXiv 2020, arXiv:2011.08152.

- Karle, P.; Török, F.; Geisslinger, M.; Lienkamp, M. MixNet: Structured Deep Neural Motion Prediction for Autonomous Racing. arXiv 2022, arXiv:2208.01862.

- Ghignone, E.; Baumann, N.; Boss, M.; Magno, M. TC-Driver: Trajectory Conditioned Driving for Robust Autonomous Racing - A Reinforcement Learning Approach. arXiv 2022, arXiv:2205.09370.

- Weaver, C.; Capobianco, R.; Wurman, P.R.; Stone, P.; Tomizuka, M. Real-time Trajectory Generation via Dynamic Movement Primitives for Autonomous Racing. In Proceedings of the 2024 American Control Conference (ACC), Toronto, ON, Canada, 8–9 July 2024; IEEE: New York, NY, USA, 2024; pp. 352–359.

- Evans, B.; Jordaan, H.W.; Engelbrecht, H.A. Autonomous Obstacle Avoidance by Learning Policies for Reference Modification. arXiv 2021, arXiv:2102.11042.

- Thakkar, R.S.; Samyal, A.S.; Fridovich-Keil, D.; Xu, Z.; Topcu, U. Hierarchical Control for Cooperative Teams in Competitive Autonomous Racing. arXiv 2022, arXiv:2204.13070.

- Trumpp, R.; Javanmardi, E.; Nakazato, J.; Tsukada, M.; Caccamo, M. RaceMOP: Mapless Online Path Planning for Multi-Agent Autonomous Racing using Residual Policy Learning. arXiv 2024, arXiv:2403.07129.

- Garlick, S.; Bradley, A. Real-Time Optimal Trajectory Planning for Autonomous Vehicles and Lap Time Simulation Using Machine Learning. arXiv 2021, arXiv:2102.02315.

- Kim, T.; Lee, H.; Hong, S.; Lee, W. TOAST: Trajectory Optimization and Simultaneous Tracking using Shared Neural Network Dynamics. arXiv 2022, arXiv:2201.08321.

- Chisari, E.; Liniger, A.; Rupenyan, A.; Gool, L.V.; Lygeros, J. Learning from Simulation, Racing in Reality. arXiv 2021, arXiv:2011.13332.

- Fuchs, F.; Song, Y.; Kaufmann, E.; Scaramuzza, D.; Durr, P. Super-Human Performance in Gran Turismo Sport Using Deep Reinforcement Learning. IEEE Robot. Autom. Lett. 2021, 6, 4257–4264.

- Zhang, R.; Hou, J.; Chen, G.; Li, Z.; Chen, J.; Knoll, A. Residual Policy Learning Facilitates Efficient Model-Free Autonomous Racing. IEEE Robot. Autom. Lett. 2022, 7, 11625–11632.

- Weiss, T.; Chrosniak, J.; Behl, M. Towards multi-agent autonomous racing with the Deepracing framework. In Proceedings of the International Conference on Robotics and Automation, Virtual Conference, 31 May–31 August 2020.

- Busch, F.L.; Johnson, J.; Zhu, E.L.; Borrelli, F. A Gaussian Process Model for Opponent Prediction in Autonomous Racing. arXiv 2022, arXiv:2204.12533.

- Brüdigam, T.; Capone, A.; Hirche, S.; Wollherr, D.; Leibold, M. Gaussian Process-based Stochastic Model Predictive Control for Overtaking in Autonomous Racing. arXiv 2021, arXiv:2105.12236.

- Tian, Z.; Zhao, D.; Lin, Z.; Flynn, D.; Zhao, W.; Tian, D. Balanced reward-inspired reinforcement learning for autonomous vehicle racing. In Proceedings of the 6th Annual Learning for Dynamics & Control Conference, PMLR, Oxford, UK, 15–17 July 2024; Abate, A., Cannon, M., Margellos, K., Papachristodoulou, A., Eds.; Volume 242, pp. 628–640.

- Trent Weiss, V.S.; Behl, M. DeepRacing AI: Agile Trajectory Synthesis for Autonomous Racing. arXiv 2020, arXiv:2005.05178.

- Gao, Z.; Yu, T.; Gao, F.; Zhao, R.; Sun, T. Human-like mechanism deep learning model for longitudinal motion control of autonomous vehicles. Eng. Appl. Artif. Intell. 2024, 133, 108060.

- Renz, K.; Chen, L.; Marcu, A.M.; Hünermann, J.; Hanotte, B.; Karnsund, A.; Shotton, J.; Arani, E.; Sinavski, O. CarLLaVA: Vision language models for camera-only closed-loop driving. arXiv 2024, arXiv:2406.10165.

- Elallid, B.B.; Bagaa, M.; Benamar, N.; Mrani, N. A reinforcement learning based autonomous vehicle control in diverse daytime and weather scenarios. J. Intell. Transp. Syst. 2024; in press.

- Yin, Y. Design of Deep Learning Based Autonomous Driving Control Algorithm. In Proceedings of the 2022 2nd International Conference on Consumer Electronics and Computer Engineering (ICCECE), Guangzhou, China, 14–16 January 2022; pp. 423–426.

- Li, X.; Liu, C.; Chen, B.; Jiang, J. Robust Adaptive Learning-Based Path Tracking Control of Autonomous Vehicles Under Uncertain Driving Environments. IEEE Trans. Intell. Transp. Syst. 2022, 23, 20798–20809.

- Xue, H.; Zhu, E.L.; Dolan, J.M.; Borrelli, F. Learning Model Predictive Control with Error Dynamics Regression for Autonomous Racing. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; IEEE: New York, NY, USA, 2024; pp. 13250–13256.

- Brunnbauer, A.; Berducci, L.; Brandstätter, A.; Lechner, M.; Hasani, R.M.; Rus, D.; Grosu, R. Model-based versus Model-free Deep Reinforcement Learning for Autonomous Racing Cars. arXiv 2021, arXiv:2103.04909.

- Du, Y.; Chen, J.; Zhao, C.; Liu, C.; Liao, F.; Chan, C.Y. Comfortable and energy-efficient speed control of autonomous vehicles on rough pavements using deep reinforcement learning. Transp. Res. Part C Emerg. Technol. 2022, 134, 103489.

- Xiao, Y.; Zhang, X.; Xu, X.; Liu, X.; Liu, J. Deep Neural Networks with Koopman Operators for Modeling and Control of Autonomous Vehicles. IEEE Trans. Intell. Veh. 2022, 8, 135–146.

- Fényes, D.; Németh, B.; Gáspár, P. A Novel Data-Driven Modeling and Control Design Method for Autonomous Vehicles. Energies 2021, 14, 517.

- He, S.; Xu, R.; Zhao, Z.; Zou, T. Vision-based neural formation tracking control of multiple autonomous vehicles with visibility and performance constraints. Neurocomputing 2022, 492, 651–663.

- Salunkhe, S.S.; Pal, S.; Agrawal, A.; Rai, R.; Mole, S.S.S.; Jos, B.M. Energy Optimization for CAN Bus and Media Controls in Electric Vehicles Using Deep Learning Algorithms. J. Supercomput. 2022, 78, 8493–8508.

- Wang, W.; Xie, J.; Hu, C.; Zou, H.; Fan, J.; Tong, W.; Wen, Y.; Wu, S.; Deng, H.; Li, Z.; et al. DriveMLM: Aligning Multi-Modal Large Language Models with Behavioral Planning States for Autonomous Driving. arXiv 2023, arXiv:2312.09245.

- Prathiba, S.B.; Raja, G.; Dev, K.; Kumar, N.; Guizani, M. A Hybrid Deep Reinforcement Learning For Autonomous Vehicles Smart-Platooning. IEEE Trans. Veh. Technol. 2021, 70, 13340–13350.

- Mushtaq, A.; Haq, I.U.; Imtiaz, M.U.; Khan, A.; Shafiq, O. Traffic Flow Management of Autonomous Vehicles Using Deep Reinforcement Learning and Smart Rerouting. IEEE Access 2021, 9, 51005–51019.

- Pérez-Gil, O.; Barea, R.; López-Guillén, E.; Bergasa, L.M.; Gómez-Huélamo, C.; Gutiérrez, R.; Díaz-Díaz, A. Deep Reinforcement Learning Based Control for Autonomous Vehicles in CARLA. Multimed. Tools Appl. 2022, 81, 3553–3576.

- Dong, J.; Chen, S.; Li, Y.; Du, R.; Steinfeld, A.; Labi, S. Space-weighted information fusion using deep reinforcement learning: The context of tactical control of lane-changing autonomous vehicles and connectivity range assessment. Transp. Res. Part C Emerg. Technol. 2021, 128, 103192.

- Peng, B.; Keskin, M.F.; Kulcsár, B.; Wymeersch, H. Connected autonomous vehicles for improving mixed traffic efficiency in unsignalized intersections with deep reinforcement learning. Commun. Transp. Res. 2021, 1, 100017.

- Muzahid, A.J.M.; Kamarulzaman, S.F.; Rahman, M.A.; Alenezi, A.H. Deep Reinforcement Learning-Based Driving Strategy for Avoidance of Chain Collisions and Its Safety Efficiency Analysis in Autonomous Vehicles. IEEE Access 2022, 10, 43303–43319.

- Zheng, L.; Son, S.; Lin, M.C. Traffic-Aware Autonomous Driving with Differentiable Traffic Simulation. arXiv 2023, arXiv:2210.03772.

- Folkestad, C.; Wei, S.X.; Burdick, J.W. Quadrotor Trajectory Tracking with Learned Dynamics: Joint Koopman-based Learning of System Models and Function Dictionaries. arXiv 2021, arXiv:2110.10341.

- Jain, A.; O’Kelly, M.; Chaudhari, P.; Morari, M. BayesRace: Learning to race autonomously using prior experience. arXiv 2020, arXiv:2005.04755.

- Evans, B.; Engelbrecht, H.A.; Jordaan, H.W. From Navigation to Racing: Reward Signal Design for Autonomous Racing. arXiv 2021, arXiv:2103.10098.

- Salvaji, A.; Taylor, H.; Valencia, D.; Gee, T.; Williams, H. Racing Towards Reinforcement Learning based control of an Autonomous Formula SAE Car. arXiv 2023, arXiv:2308.13088.

- Betz, J.; Zheng, H.; Liniger, A.; Rosolia, U.; Karle, P.; Behl, M.; Krovi, V.; Mangharam, R. Autonomous Vehicles on the Edge: A Survey on Autonomous Vehicle Racing. arXiv 2022, arXiv:2202.07008.

- Fu, D.; Li, X.; Wen, L.; Dou, M.; Cai, P.; Shi, B.; Qiao, Y. Drive Like a Human: Rethinking Autonomous Driving with Large Language Models. arXiv 2023, arXiv:2307.07162.

- Lee, D.; Liu, J. End-to-End Deep Learning of Lane Detection and Path Prediction for Real-Time Autonomous Driving. arXiv 2021, arXiv:2102.04738.

- Hwang, J.J.; Xu, R.; Lin, H.; Hung, W.C.; Ji, J.; Choi, K.; Huang, D.; He, T.; Covington, P.; Sapp, B.; et al. EMMA: End-to-End Multimodal Model for Autonomous Driving. arXiv 2024, arXiv:2410.23262.

- Hu, B.; Jiang, L.; Zhang, S.; Wang, Q. An Explainable and Robust Motion Planning and Control Approach for Autonomous Vehicle On-Ramping Merging Task Using Deep Reinforcement Learning. IEEE Trans. Transp. Electrif. 2024, 10, 6488–6496.

- Kalaria, D.; Lin, Q.; Dolan, J.M. Adaptive Planning and Control with Time-Varying Tire Models for Autonomous Racing Using Extreme Learning Machine. arXiv 2023, arXiv:2303.08235.

- Mammadov, M. End-to-end Lidar-Driven Reinforcement Learning for Autonomous Racing. arXiv 2023, arXiv:2309.00296.

- Cosner, R.K.; Yue, Y.; Ames, A.D. End-to-End Imitation Learning with Safety Guarantees using Control Barrier Functions. In Proceedings of the IEEE Conference on Decision and Control (CDC), Cancun, Mexico, 6–9 December 2022; IEEE: New York, NY, USA, 2022.

- Natan, O.; Miura, J. End-to-end Autonomous Driving with Semantic Depth Cloud Mapping and Multi-agent. IEEE Trans. Intell. Veh. 2023, 8, 557–571.

- Lee, H.; Choi, Y.; Han, T.; Kim, K. Probabilistically Guaranteeing End-to-end Latencies in Autonomous Vehicle Computing Systems. IEEE Trans. Comput. 2022, 71, 3361–3374.

- Nair, U.R.; Sharma, S.; Parihar, U.S.; Menon, M.S.; Vidapanakal, S. Bridging Sim2Real Gap Using Image Gradients for the Task of End-to-End Autonomous Driving. arXiv 2022, arXiv:2205.07481.

- Antonio, G.P.; Maria-Dolores, C. Multi-Agent Deep Reinforcement Learning to Manage Connected Autonomous Vehicles at Tomorrow’s Intersections. IEEE Trans. Veh. Technol. 2022, 71, 7033–7043.

- Agarwal, T.; Arora, H.; Schneider, J. Learning Urban Driving Policies Using Deep Reinforcement Learning. In Proceedings of the 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 607–614.

- Cui, J.; Qiu, H.; Chen, D.; Stone, P.; Zhu, Y. Coopernaut: End-to-End Driving With Cooperative Perception for Networked Vehicles. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 17252–17262.

- Kwon, J.; Khalil, A.; Kim, D.; Nam, H. Incremental End-to-End Learning for Lateral Control in Autonomous Driving. IEEE Access 2022, 10, 33771–33786.

- Schwarting, W.; Seyde, T.; Gilitschenski, I.; Liebenwein, L.; Sander, R.; Karaman, S.; Rus, D. Deep Latent Competition: Learning to Race Using Visual Control Policies in Latent Space. arXiv 2021, arXiv:2102.09812.

- Hsu, B.J.; Cao, H.G.; Lee, I.; Kao, C.Y.; Huang, J.B.; Wu, I.C. Image-Based Conditioning for Action Policy Smoothness in Autonomous Miniature Car Racing with Reinforcement Learning. arXiv 2022, arXiv:2205.09658.

- Cota, J.L.; Rodríguez, J.A.T.; Alonso, B.G.; Hurtado, C.V. Roadmap for development of skills in Artificial Intelligence by means of a Reinforcement Learning model using a DeepRacer autonomous vehicle. In Proceedings of the 2022 IEEE Global Engineering Education Conference (EDUCON), Tunis, Tunisia, 28–31 March 2022; pp. 1355–1364.

- Huch, S.; Sauerbeck, F.; Betz, J. DeepSTEP–Deep Learning-Based Spatio-Temporal End-To-End Perception for Autonomous Vehicles. arXiv 2023, arXiv:2305.06820.

- Aoki, S.; Yamamoto, I.; Shiotsuka, D.; Inoue, Y.; Tokuhiro, K.; Miwa, K. SuperDriverAI: Towards Design and Implementation for End-to-End Learning-based Autonomous Driving. arXiv 2023, arXiv:2305.10443.

- Tian, X.; Gu, J.; Li, B.; Liu, Y.; Wang, Y.; Zhao, Z.; Zhan, K.; Jia, P.; Lang, X.; Zhao, H. DriveVLM: The Convergence of Autonomous Driving and Large Vision-Language Models. arXiv 2024, arXiv:2402.12289.

- Xu, Y.; Hu, Y.; Zhang, Z.; Meyer, G.P.; Mustikovela, S.K.; Srinivasa, S.; Wolff, E.M.; Huang, X. VLM-AD: End-to-End Autonomous Driving through Vision-Language Model Supervision. arXiv 2024, arXiv:2412.14446.

- Zhang, Y. LIDAR–camera deep fusion for end-to-end trajectory planning of autonomous vehicle. J. Phys. Conf. Ser. 2022, 2284, 012006.

- Chen, L.; Hu, X.; Tang, B.; Cheng, Y. Conditional DQN-Based Motion Planning With Fuzzy Logic for Autonomous Driving. IEEE Trans. Intell. Transp. Syst. 2022, 23, 2966–2977.

- Prakash, A.; Chitta, K.; Geiger, A. Multi-Modal Fusion Transformer for End-to-End Autonomous Driving. arXiv 2021, arXiv:2104.09224.

- Weiss, T.; Behl, M. DeepRacing: A Framework for Autonomous Racing. In Proceedings of the 2020 Design, Automation & Test in Europe Conference & Exhibition (DATE), Grenoble, France, 9–13 March 2020; IEEE: New York, NY, USA, 2020.

- Zheng, H.; Betz, J.; Mangharam, R. Gradient-free Multi-domain Optimization for Autonomous Systems. arXiv 2022, arXiv:2202.13525.

- Song, Y.; Lin, H.; Kaufmann, E.; Duerr, P.; Scaramuzza, D. Autonomous Overtaking in Gran Turismo Sport Using Curriculum Reinforcement Learning. arXiv 2021, arXiv:2103.14666.

- Abrecht, S.; Hirsch, A.; Raafatnia, S.; Woehrle, M. Deep Learning Safety Concerns in Automated Driving Perception. IEEE Trans. Intell. Veh. 2024; early access.

- Wang, Y.; Jiao, R.; Zhan, S.S.; Lang, C.; Huang, C.; Wang, Z.; Yang, Z.; Zhu, Q. Empowering Autonomous Driving with Large Language Models: A Safety Perspective. arXiv 2024, arXiv:2312.00812.

- Chen, H.; Cao, X.; Guvenc, L.; Aksun-Guvenc, B. Deep-Reinforcement-Learning-Based Collision Avoidance of Autonomous Driving System for Vulnerable Road User Safety. Electronics 2024, 13, 1952.

- Yu, K.; Lin, L.; Alazab, M.; Tan, L.; Gu, B. Deep Learning-Based Traffic Safety Solution for a Mixture of Autonomous and Manual Vehicles in a 5G-Enabled Intelligent Transportation System. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4337–4347.

- Wan, L.; Sun, Y.; Sun, L.; Ning, Z.; Rodrigues, J.J.P.C. Deep Learning Based Autonomous Vehicle Super Resolution DOA Estimation for Safety Driving. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4301–4315.

- Karmakar, G.; Chowdhury, A.; Das, R.; Kamruzzaman, J.; Islam, S. Assessing Trust Level of a Driverless Car Using Deep Learning. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4457–4466.

- Zhu, Z.; Hu, Z.; Dai, W.; Chen, H.; Lv, Z. Deep learning for autonomous vehicle and pedestrian interaction safety. Saf. Sci. 2022, 145, 105479.

- Xing, Y.; Lv, C.; Mo, X.; Hu, Z.; Huang, C.; Hang, P. Toward Safe and Smart Mobility: Energy-Aware Deep Learning for Driving Behavior Analysis and Prediction of Connected Vehicles. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4267–4280.

- Chen, B.; Francis, J.; Herman, J.; Oh, J.; Nyberg, E.; Herbert, S.L. Safety-aware Policy Optimisation for Autonomous Racing. arXiv 2021, arXiv:2110.07699.

- Cai, P.; Wang, H.; Huang, H.; Liu, Y.; Liu, M. Vision-Based Autonomous Car Racing Using Deep Imitative Reinforcement Learning. IEEE Robot. Autom. Lett. 2021, 6, 7262–7269.

- Tearle, B.; Wabersich, K.P.; Carron, A.; Zeilinger, M.N. A Predictive Safety Filter for Learning-Based Racing Control. IEEE Robot. Autom. Lett. 2021, 6, 7635–7642.

- Tranzatto, M.; Dharmadhikari, M.; Bernreiter, L.; Camurri, M.; Khattak, S.; Mascarich, F.; Pfreundschuh, P.; Wisth, D.; Zimmermann, S.; Kulkarni, M.; et al. Team CERBERUS Wins the DARPA Subterranean Challenge: Technical Overview and Lessons Learned. arXiv 2022, arXiv:2207.04914.

| Criterion | Inclusion | Exclusion |
|---|---|---|
| Type of Study | Original research papers, review papers, technical reports, data papers | Posters, short papers, editorials, letters |
| Language | English | Non-English |
| Countries/Regions | Not restricted | - |
| Publication Year | January 2020 to December 2025 | Pre-2020 or post-2025 |
| Intervention | Machine learning/deep learning approaches | Approaches other than machine learning or deep learning |
| Scope | Deep learning approaches for modular autonomous driving systems | Any focus other than deep learning approaches for modular autonomous driving systems |

| Module | Components | Autonomous On-Road Vehicles | Autonomous Racing Cars |
|---|---|---|---|
| Perception | Sensor Fusion | Tracks pedestrians, vehicles, and road signs using cameras, LiDAR, and radar. | Detects track boundaries, other cars, and obstacles using cameras, LiDAR, radar, and GPS. |
| | Object Detection | Pedestrians, cyclists, road signs, other vehicles, traffic signals, and weather conditions. | Track features such as turns and barriers, as well as opponents. |
| | Lane and Road Recognition | Road lanes, lane markings, road edges, and drivable free space. | Track boundaries, racing lines, and potential off-track hazards. |
| Planning | Path Planning | Manages the trajectory while accounting for traffic rules, obstacles, and road geometry. | Computes the optimal trajectory, avoiding collisions and overtaking at high speeds. |
| | Decision-Making | Safe driving decisions, such as stopping at signals, changing lanes, and yielding to pedestrians. | Aggressive decision-making, such as high-speed overtaking, defending positions, and responding to track conditions. |
| | Speed Control | Balances fuel efficiency and safety based on traffic, road conditions, and speed limits. | Maximizes speed while maintaining control, particularly in corners and on straights. |
| Control | Steering Control | Smooth steering for safe navigation, considering curves, traffic, and road signs. | Makes precise, rapid steering adjustments to maintain the optimal racing line. |
| | Throttle & Braking Control | Adjusts throttle and braking to maximize fuel efficiency and safety, including emergency braking. | Precise throttle and braking to optimize lap times, especially in sharp corners and under acceleration. |
| | Stability and Traction Control | Ensures vehicle stability in varying weather and road conditions, minimizing skidding and loss of control. | Maximizes traction, especially during cornering, to maintain grip and minimize oversteer/understeer. |
| | Vehicle Safety | Monitors vehicle health and adapts for comfort and fuel efficiency. | Monitors vehicle performance in real time for performance optimization. |

| Platform | Reference | Approach | Module | Performance |
|---|---|---|---|---|
| Autonomous Vehicles | [85] | VAE + GRU and RL | Trajectory planning | Average route completion 100%; 6 collisions; 0 deadlocks; average running time 542.56 s |
| | [101] | Graph-based spatial-temporal CNN with GRU | Trajectory prediction | Average RMSE of 1.52 with all vehicles and 1.49 with one vehicle |
| | [102] | SCOUT: attention-based GCN | Interaction modeling and trajectory prediction | Average displacement error (ADE)/final displacement error (FDE) on the inD dataset: 0.46/1.03 |
| | [104] | SAC and VAE | Decision-making | Higher success rate than the compared methods |
| | [109] | LLMs | Motion planning and decision-making | - |
| Autonomous Racing Cars | [118] | RL | Trajectory planning for overtaking maneuvers | Success rate up to 92%; 1.5 ms per planning cycle |
| | [126] | ANN: feed-forward neural network | Optimal trajectory and lap time | MAE of ±0.27 (±0.11 at curvature points); 9000 times faster than traditional methods |
| | [125] | Multi-agent: artificial potential field (APF) planner with residual policy learning (RPL) | Path planning | Collision ratio of IC = 0.33% and IC = 0.42% |
| | [134] | Balanced reward-inspired proximal policy optimization (BRPPO) | Decision-making to navigate complex tracks | 0 collisions on all tracks |
| | [122] | Dynamic movement primitives (DMPs) | Trajectory generation | Mean lap time: acceleration-goal DMP M = 134.75 (SD = 0.85); velocity-goal DMP M = 136.87 (SD = 1.34) |
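
Several planning and prediction entries above report displacement-based metrics: RMSE for [101] and ADE/FDE for [102]. As a minimal sketch, assuming a single predicted trajectory sampled at the same time steps as the ground truth, these metrics can be computed as below. Benchmarks with multimodal predictors usually report minADE/minFDE (the minimum over K candidate trajectories), and individual papers may aggregate RMSE differently (for example per prediction horizon), so this illustrates the definitions rather than the exact protocol of the cited works. The function names and sample data are hypothetical.

```python
import math

Point = tuple[float, float]


def _step_errors(pred: list[Point], truth: list[Point]) -> list[float]:
    """Euclidean error at every time step (hypothetical helper)."""
    if not pred or len(pred) != len(truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    return [math.dist(p, t) for p, t in zip(pred, truth)]


def displacement_errors(pred: list[Point], truth: list[Point]) -> tuple[float, float]:
    """Return (ADE, FDE) for one predicted trajectory."""
    errors = _step_errors(pred, truth)
    ade = sum(errors) / len(errors)  # average error over all time steps
    fde = errors[-1]                 # error at the final time step only
    return ade, fde


def trajectory_rmse(pred: list[Point], truth: list[Point]) -> float:
    """Root mean square of the per-step Euclidean errors."""
    errors = _step_errors(pred, truth)
    return math.sqrt(sum(e * e for e in errors) / len(errors))


# Hypothetical three-step example, positions in metres.
truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
pred = [(0.0, 0.0), (1.0, 0.5), (2.0, 1.0)]
ade, fde = displacement_errors(pred, truth)
rmse = trajectory_rmse(pred, truth)
print(f"ADE={ade:.3f} m, FDE={fde:.3f} m, RMSE={rmse:.3f} m")
```

With per-step errors of 0, 0.5, and 1.0 m, this prints ADE=0.500 m, FDE=1.000 m, RMSE=0.645 m. RMSE exceeds ADE because squaring weights the late, larger errors more heavily.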

| Platform | Reference | Approach | Module | Performance |
|---|---|---|---|---|
| Autonomous Vehicles | [136] | Human-like neural network | Longitudinal motion control | Control style consistency and convergence rate |
| | [137] | VLM | Lateral control | Driving score (DS) 6.87, route completion (RC) 18.08, and infraction score (IS) 0.42 |
| | [138] | DRL with DQN | Vehicle control in difficult environments | Maximizes success rate while minimizing collision rate |
| | [139] | CNN with pre-training to mitigate overfitting | Lateral control: steering angle estimation | Improved training and generalization, preventing overfitting |
| | [140] | Robust adaptive learning control (RALC) | Uncertainty prediction and lateral tracking control | Tracking performance (lateral deviation and heading error) evaluated on a figure-eight track with an adhesion coefficient of 1 |
| Autonomous Racing Cars | [132] | Gaussian process model and model predictive control (MPC) | Control for safely overtaking opponents | Lateral/longitudinal error: mean 0.02/0.026, variance 0.006/0.006 |
| | [141] | Mathematical models | Learning MPC | Lap time at the 20th iteration (ILT-20): 5.0 |
| | [142] | Model-free DRL and Dreamer (model-based) for Sim2Real | Control | Lap time on four tracks |
| | [130] | ResRace: MAPF and model-free DRL | Control policy | Lap time on five tracks |
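
The CARLA-style scores reported for [137] are easy to misread: the driving score is not simply the product of the aggregate route completion and infraction score. In the CARLA Leaderboard, the driving score is computed per route as route completion multiplied by an infraction penalty (the product of one penalty coefficient per infraction), and only then averaged over routes. The mean DS therefore generally differs from mean RC × mean IS. This is consistent with the reported DS of 6.87 versus 18.08 × 0.42 ≈ 7.59. The sketch below illustrates the aggregation with hypothetical routes and made-up penalty coefficients, not the official leaderboard values.

```python
from math import prod
from statistics import mean


def route_scores(route_completion: float,
                 penalty_coefficients: list[float]) -> tuple[float, float, float]:
    """Return (RC, IS, DS) for a single route.

    RC: route completion in percent.
    IS: product of one coefficient per infraction (1.0 if there were none).
    DS: RC * IS, computed per route before any averaging.
    """
    infraction_score = prod(penalty_coefficients, start=1.0)
    return route_completion, infraction_score, route_completion * infraction_score


# Hypothetical routes: (route completion %, penalty coefficient per infraction).
routes = [
    (100.0, [0.6]),       # full route, one infraction (illustrative coefficient)
    (20.0, [0.5, 0.7]),   # partial route, two infractions (illustrative coefficients)
]
per_route = [route_scores(rc, coeffs) for rc, coeffs in routes]

mean_rc = mean(s[0] for s in per_route)
mean_is = mean(s[1] for s in per_route)
mean_ds = mean(s[2] for s in per_route)
print(f"mean DS = {mean_ds:.2f}; mean RC x mean IS = {mean_rc * mean_is:.2f}")
```

With these made-up numbers, the mean DS is 33.50, while mean RC × mean IS is 28.50. The per-route product rewards policies that avoid infractions on the routes they actually complete, which a product of global averages cannot capture.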

| Platform | Reference | Approach | Module | Performance |
|---|---|---|---|---|
| Autonomous Vehicles | [161] | LLMs | Perception to control | Ability to reason about and solve long-tail cases |
| | [162] | DSUNet (depthwise separable convolutions) | Planning: lane detection and path prediction | Static/dynamic MAE: estimated curvature 0.0046/0.0049; lateral offset 0.18/0.11 |
| | [44] | VLMs | Perception to planning | Accuracy 66.5 |
| | [7] | ShuffleNet V2 with proximal policy optimization (PPO), curriculum learning, and actor-critic | e2e: perception to control | Best: collision rate 63%, waypoint distance 2.98 m, speed 8.65 km/h, total reward 2025, and 374 timesteps |
| | [163] | Multimodal LLMs | Perception to planning | Average L2 error 0.29 m |
| | [164] | RL fused with optimization-based methods | Explainable and robust motion planning and control | Online computation time: PPO-based, a few milliseconds (very fast); optimization-based, 120–150 ms (very slow); proposed hybrid, < 100 ms (acceptable) |
| Autonomous Racing Cars | [165] | Extreme learning machine (ELM) | Planning and control | Optimal lap time 8.46 s; mean deviation from racing line 0.0832 m; violation time 0.46 s |
| | [166] | RL | e2e: perception to trajectory planning | Success rate around 80% |
| | [5] | Direct policy learning with CNN and LSTM | e2e: perception to control (steering angle and throttle) | Track 1/track 2: average lap time 84.19/142.4 at highest speeds of 70/60 mph; completed laps 65/50 |
| | [6] | CNN, transfer learning, DQN | e2e for Sim2Real | Lap time: AUT 23 s, BRC 56 s, GBR 48 s, MCO 42 s |
| | [167] | Imitation learning (IL): IRL | Trajectories to controllers | CBF values for safety guarantees |
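
The entry for [165] reports a mean deviation from the racing line. One plausible way to compute such a metric is sketched below. It assumes the racing line is a 2D polyline and the driven path is a list of sampled positions, and it averages each position's shortest Euclidean distance to the polyline. The cited work may instead use a signed lateral error in a curvilinear (Frenet) frame. The function names and data are hypothetical.

```python
import math

Point = tuple[float, float]


def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Shortest Euclidean distance from point p to the segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:  # degenerate segment: a and b coincide
        return math.dist(p, a)
    # Parameter of the orthogonal projection of p onto the infinite line,
    # clamped to [0, 1] so the closest point stays on the segment.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.dist(p, (ax + t * dx, ay + t * dy))


def mean_deviation(driven: list[Point], racing_line: list[Point]) -> float:
    """Average unsigned distance of driven positions to the racing-line polyline."""
    if not driven or len(racing_line) < 2:
        raise ValueError("need at least one position and a line of two or more points")
    segments = list(zip(racing_line, racing_line[1:]))
    total = sum(
        min(point_segment_distance(p, a, b) for a, b in segments) for p in driven
    )
    return total / len(driven)


racing_line = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)]  # hypothetical L-shaped line (m)
driven = [(2.0, 0.1), (9.0, -0.2), (10.3, 5.0)]         # hypothetical positions (m)
print(f"mean deviation = {mean_deviation(driven, racing_line):.4f} m")
```

The three positions lie 0.1, 0.2, and 0.3 m from the line, so the sketch prints a mean deviation of 0.2000 m. The brute-force search over every segment costs O(N·M). For full laps, a spatial index or a search window around the previous closest segment would be a natural refinement.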

| Dataset/Platform | Sensors | Purpose | Development | Type |
|---|---|---|---|---|
| CARLA | LiDAR, cameras | Perception, planning, control, sensor fusion, edge-case testing | Game-engine simulation with a virtual world and AI agents | Full simulation |
| LGSVL | LiDAR, cameras | Perception, localization, V2X interaction, control | Unity-based virtual world with AI agent simulation | |
| AirSim | LiDAR, cameras | Perception, reinforcement learning, control | Game-based simulation using Unreal Engine with drone/car agents | |
| KITTI | LiDAR, cameras | Perception (object detection, SLAM), sensor calibration | Real video data with AI agents making decisions on pre-recorded video | Semi-simulation |
| Waymo Open | LiDAR, cameras | Perception, planning evaluation, tracking | Real-world recordings processed with autonomous AI modules | |
| nuScenes | LiDAR, cameras | Sensor fusion, prediction, 3D object detection | Real-world dataset with annotated scenes used for training AI agents | |
| Duckietown | Cameras, GPS, IMU | Lane detection, control, end-to-end learning | Scale-model car with sensors running in a physical, scaled-down town | Semi-real |
| Tesla Autopilot | Cameras, radar | Perception, autopilot control, real-world planning | Real car with a sensor suite collecting data on actual roadways | Real-world |
| Waymo | LiDAR, cameras, radar | Perception, prediction, planning, localization, control | Autonomous vehicles operating and recording in real environments | |
| Cruise | LiDAR, cameras, radar | Mapping, decision-making, motion planning, control | AI-driven real vehicles collecting real-world data | |

| Datasets | Sensors | Purpose | Developed | Type |
|---|---|---|---|---|
| Sim4Racing | Cameras, IMU | End-to-end driving, high-speed control, reinforcement learning | Game engine simulation with virtual racing environment and AI agents | Full simulation |
| TORCS | Cameras, wheel encoders | Planning, lane keeping, control, speed optimization | Classic racing simulator used for training AI agents | Full simulation |
| DeepRacer (Sim) | Cameras, LiDAR | End-to-end policy learning, control | Cloud-based virtual simulation platform for reinforcement learning | Full simulation |
| FormulaTrainee | Video footage (real track) | Decision-making from video frames, imitation learning | AI agent trained using pre-recorded video of real race tracks | Semi-simulation |
| DriverNet | Dash cam videos | Lane following, control | Real driving video data | Semi-simulation |
| F1TENTH | Cameras, LiDAR, IMU | Planning, real-time obstacle avoidance, racing policy control | 1:10-scale car with onboard sensors operating in a real environment | Semi-Real |
| DonkeyCar | Cameras, IMU | End-to-end learning, behavioral cloning | DIY scale car platform with onboard sensors, trained on physical tracks | Semi-Real |
| Audi RS5 (AutoDrive) | Cameras, radar, LiDAR | Real-time planning, high-speed control, safety-critical navigation | Full-size car with sensor suite tested on race tracks | Real-World |
| Indy Autonomous Challenge | Cameras, LiDAR, GPS, IMU | Full autonomy in high-speed race scenarios, perception, planning | Full-size open-wheel race cars equipped with sensors in real competitions | Real-World |
| Roborace | Cameras, LiDAR, radar, GPS, IMU | High-speed autonomous racing, multi-agent interaction, real-time planning | Full-size electric race cars with autonomous control tested on real racing circuits | Real-World |
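Read top to bottom, the table's Type column appears to move from purely virtual environments toward full-size physical vehicles. As a minimal sketch, assuming that this ordering reflects increasing physical realism (an interpretation, not a claim stated in the table), the racing rows can be encoded as typed records so that platforms can be filtered by modality and sensor, for instance when planning a sim-to-real progression. The names `Modality`, `Platform`, `RACING_PLATFORMS`, `group_by_modality`, and `with_sensor` are hypothetical helpers introduced only for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import IntEnum


class Modality(IntEnum):
    """Type column of the table. ASSUMPTION: values are ordered from
    least to most physically realistic, following the table's row order."""
    FULL_SIMULATION = 1
    SEMI_SIMULATION = 2
    SEMI_REAL = 3
    REAL_WORLD = 4


@dataclass(frozen=True)
class Platform:
    name: str
    sensors: tuple[str, ...]
    modality: Modality


# Rows of the racing table (Purpose and Developed columns omitted for brevity).
RACING_PLATFORMS = [
    Platform("Sim4Racing", ("cameras", "IMU"), Modality.FULL_SIMULATION),
    Platform("TORCS", ("cameras", "wheel encoders"), Modality.FULL_SIMULATION),
    Platform("DeepRacer (Sim)", ("cameras", "LiDAR"), Modality.FULL_SIMULATION),
    Platform("FormulaTrainee", ("video footage",), Modality.SEMI_SIMULATION),
    Platform("DriverNet", ("dash cam videos",), Modality.SEMI_SIMULATION),
    Platform("F1TENTH", ("cameras", "LiDAR", "IMU"), Modality.SEMI_REAL),
    Platform("DonkeyCar", ("cameras", "IMU"), Modality.SEMI_REAL),
    Platform("Audi RS5 (AutoDrive)", ("cameras", "radar", "LiDAR"), Modality.REAL_WORLD),
    Platform("Indy Autonomous Challenge", ("cameras", "LiDAR", "GPS", "IMU"), Modality.REAL_WORLD),
    Platform("Roborace", ("cameras", "LiDAR", "radar", "GPS", "IMU"), Modality.REAL_WORLD),
]


def group_by_modality(platforms):
    """Map each modality to the platform names that use it, in table order."""
    groups = defaultdict(list)
    for p in platforms:
        groups[p.modality].append(p.name)
    return dict(groups)


def with_sensor(platforms, sensor, min_modality=Modality.FULL_SIMULATION):
    """Names of platforms that list `sensor` and sit at or above `min_modality`
    on the (assumed) realism ordering."""
    return [
        p.name
        for p in platforms
        if sensor in p.sensors and p.modality >= min_modality
    ]
```

For example, `with_sensor(RACING_PLATFORMS, "LiDAR", Modality.SEMI_REAL)` returns the LiDAR-equipped platforms at the Semi-Real level or above: F1TENTH, Audi RS5 (AutoDrive), Indy Autonomous Challenge, and Roborace. Using an `IntEnum` keeps the modality ordering explicit, so "at least this realistic" becomes a plain comparison.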

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hussain, K.; Moreira, C.; Pereira, J.; Jardim, S.; Jorge, J. A Comprehensive Literature Review on Modular Approaches to Autonomous Driving: Deep Learning for Road and Racing Scenarios. Smart Cities 2025, 8, 79. https://doi.org/10.3390/smartcities8030079