Smart City Community Watch—Camera-Based Community Watch for Traffic and Illegal Dumping

Abstract

Highlights

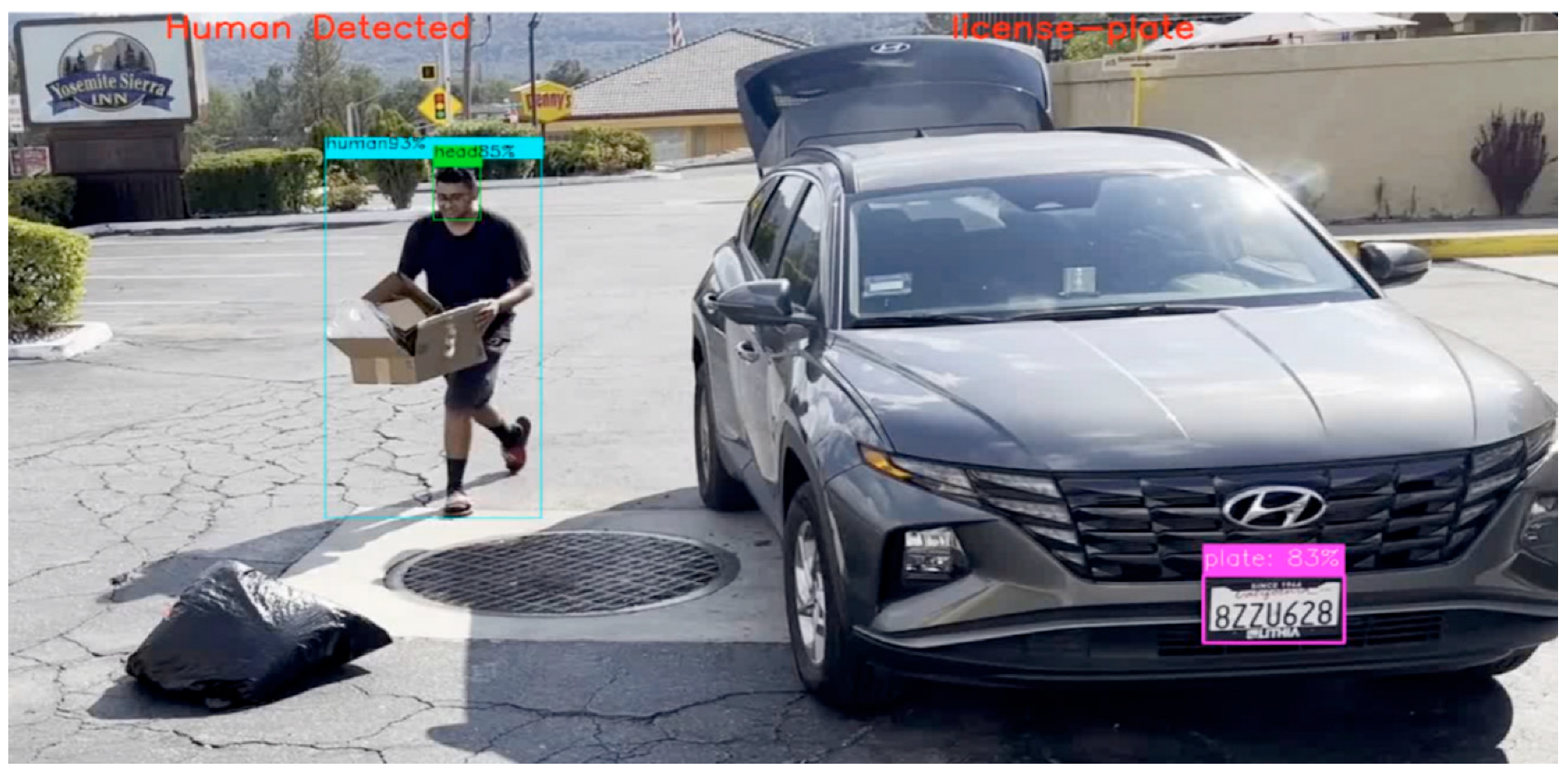

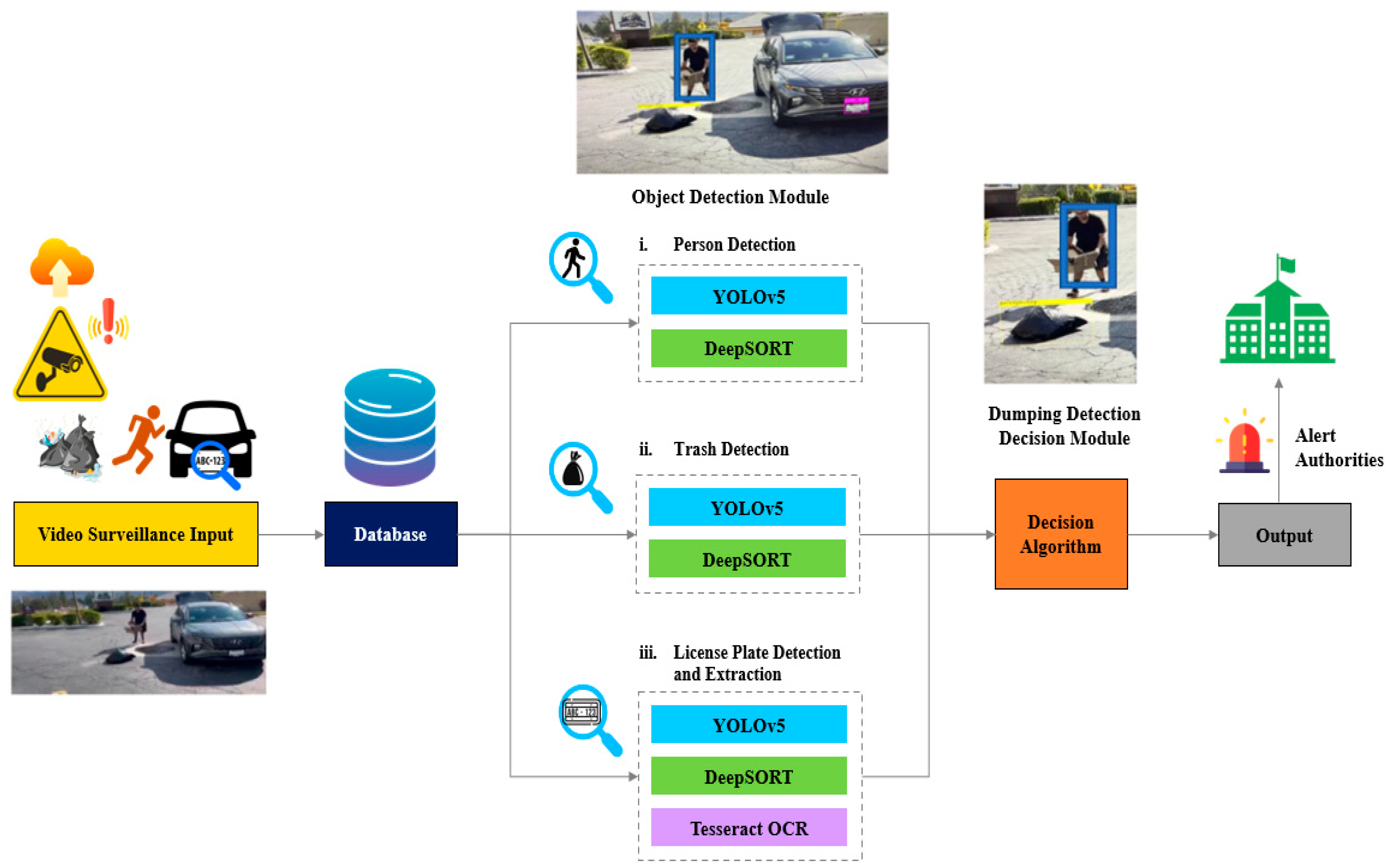

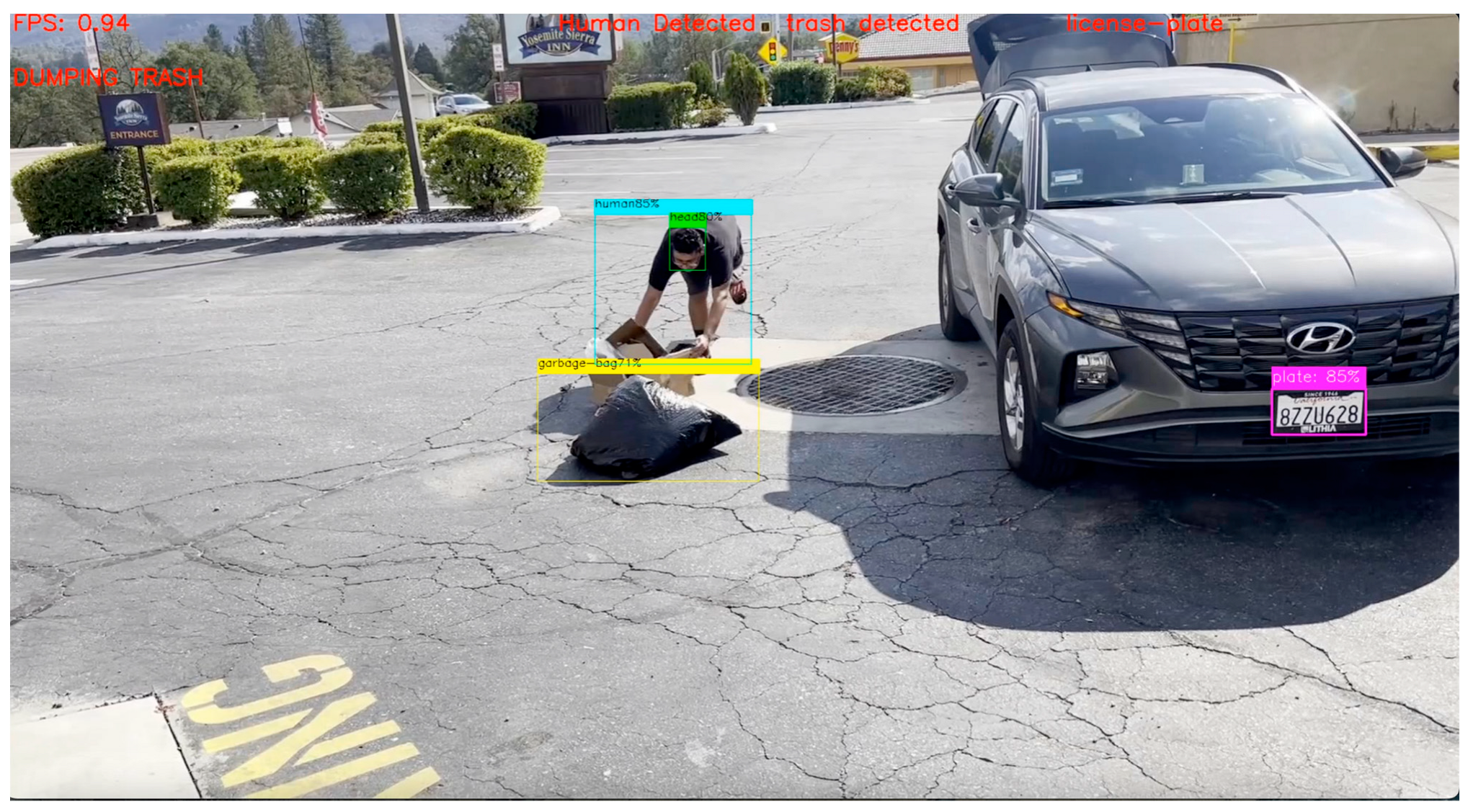

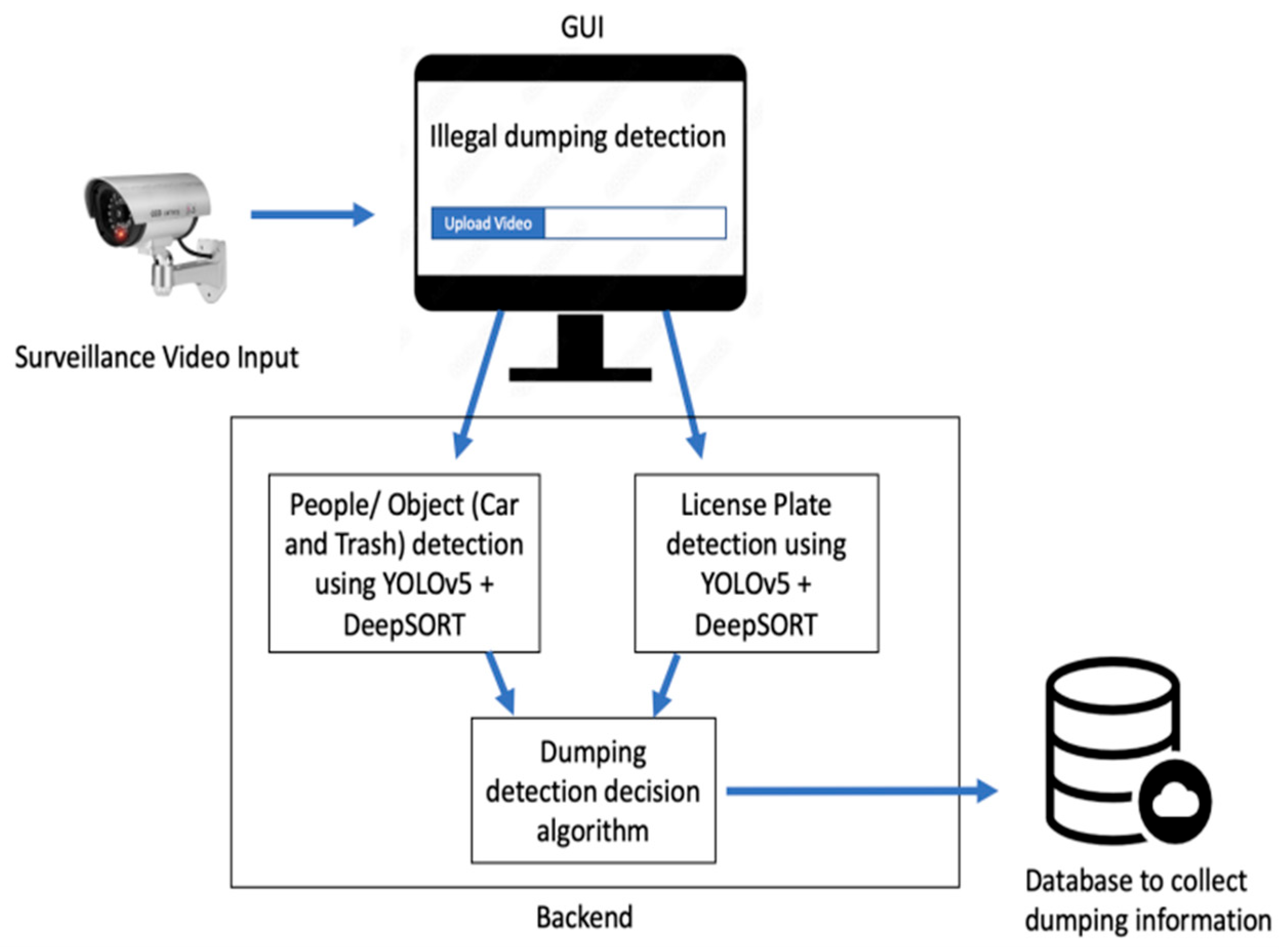

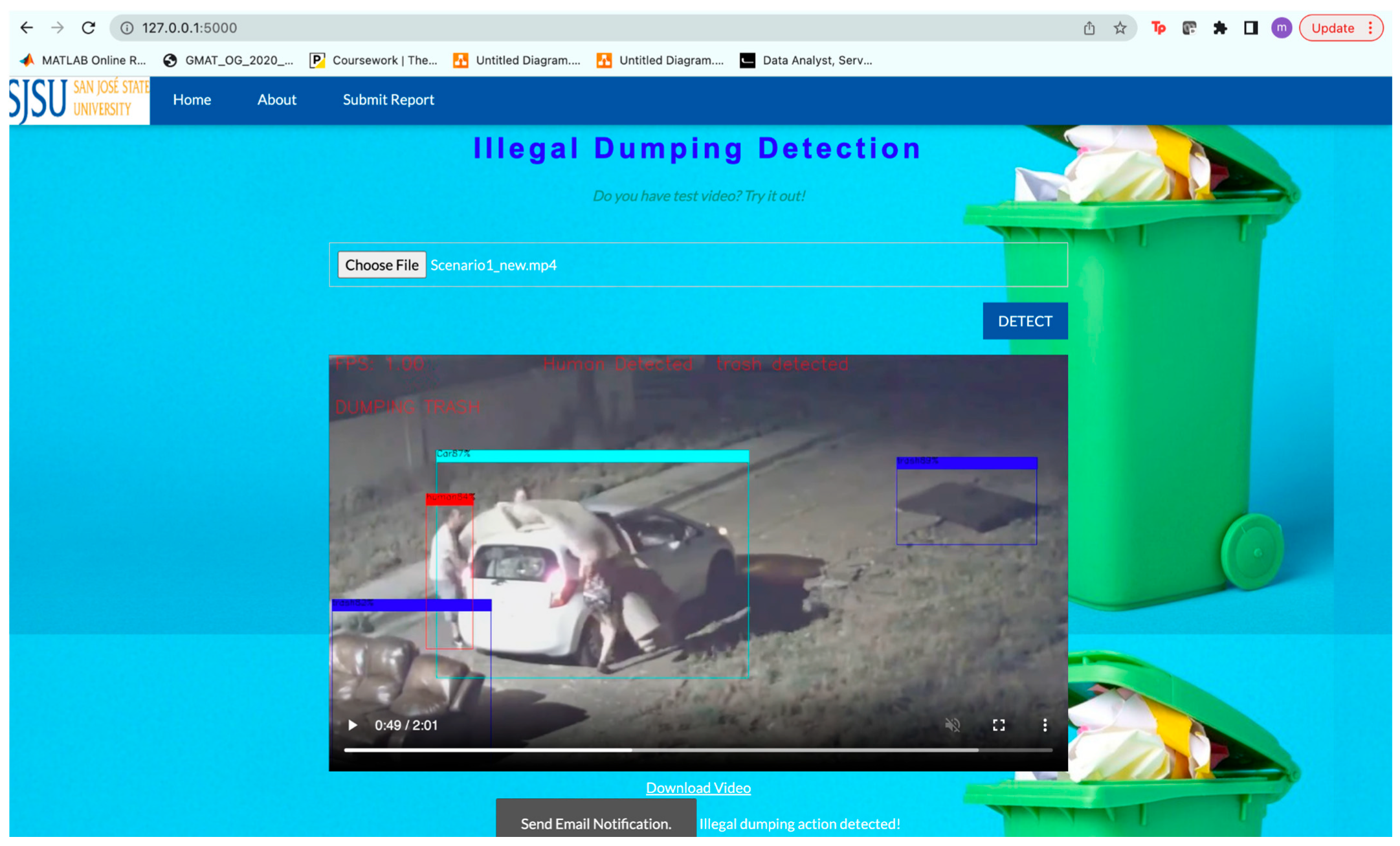

- The smart city community watch program developed using YOLOv5 for object detection and DeepSORT for multi-object tracking achieves 97% accuracy in detecting illegal dumping.

- The web-based application integrates person detection, trash detection, license plate detection and extraction, and a decision algorithm that helps government agencies monitor and effectively manage illegal dumping.

- With 97% detection accuracy and real-time detection, the YOLOv5- and DeepSORT-based solution can help reduce government expenditure on cleaning up illegal dumping.

- The solution can be integrated with smart city programs such as smart waste management initiatives, aid in effective and proactive public management, and promote public health.
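The highlights pair YOLOv5 detections with DeepSORT tracking. As a rough illustration of the association idea only, the sketch below matches existing tracks to new detections by the cosine distance between appearance embeddings and gates out pairs whose distance exceeds a threshold. Everything here is an assumption: embeddings are plain lists of floats with non-zero norm, `max_dist=0.3` is an arbitrary illustrative value, and a brute-force search over permutations stands in for the Hungarian algorithm, so it only suits a handful of objects. DeepSORT's Kalman filter, Mahalanobis gating, and matching cascade are omitted, and this is not the authors' implementation.

```python
import math
from itertools import permutations


def cosine_distance(a, b):
    """1 - cosine similarity between two appearance embeddings (non-zero norm assumed)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def associate(track_feats, det_feats, max_dist=0.3):
    """Match tracks to detections by minimum total cosine distance.

    Pairs farther apart than max_dist are gated: they receive a very large
    cost before the assignment and are discarded afterwards. The search is
    brute force over permutations, so it is only meant for small inputs.
    """
    gated_cost = max_dist + 1e5  # stand-in for an "infinite" cost
    cost = [[cosine_distance(t, d) for d in det_feats] for t in track_feats]
    cost = [[c if c <= max_dist else gated_cost for c in row] for row in cost]

    n_tracks, n_dets = len(track_feats), len(det_feats)
    if n_tracks <= n_dets:
        candidates = (list(zip(range(n_tracks), p))
                      for p in permutations(range(n_dets), n_tracks))
    else:
        candidates = (list(zip(p, range(n_dets)))
                      for p in permutations(range(n_tracks), n_dets))

    best_total, best_pairs = math.inf, []
    for pairs in candidates:
        total = sum(cost[t][d] for t, d in pairs)
        if total < best_total:
            best_total, best_pairs = total, pairs

    # Keep only pairs that passed the gate; everything else stays unmatched.
    return sorted((t, d) for t, d in best_pairs if cost[t][d] <= max_dist)


tracks = [[1.0, 0.0], [0.0, 1.0]]
detections = [[0.0, 1.0], [1.0, 0.1]]
print(associate(tracks, detections))  # [(0, 1), (1, 0)]
```

In the example, each track is paired with the detection whose embedding points in nearly the same direction, even though the detections arrive in a different order.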


1. Introduction

2. Related Work

2.1. Literature Survey

2.2. Technology and Solution Survey

3. Data Engineering

3.1. Data Collection

- Object detection;

- License plate detection;

- Action detection.

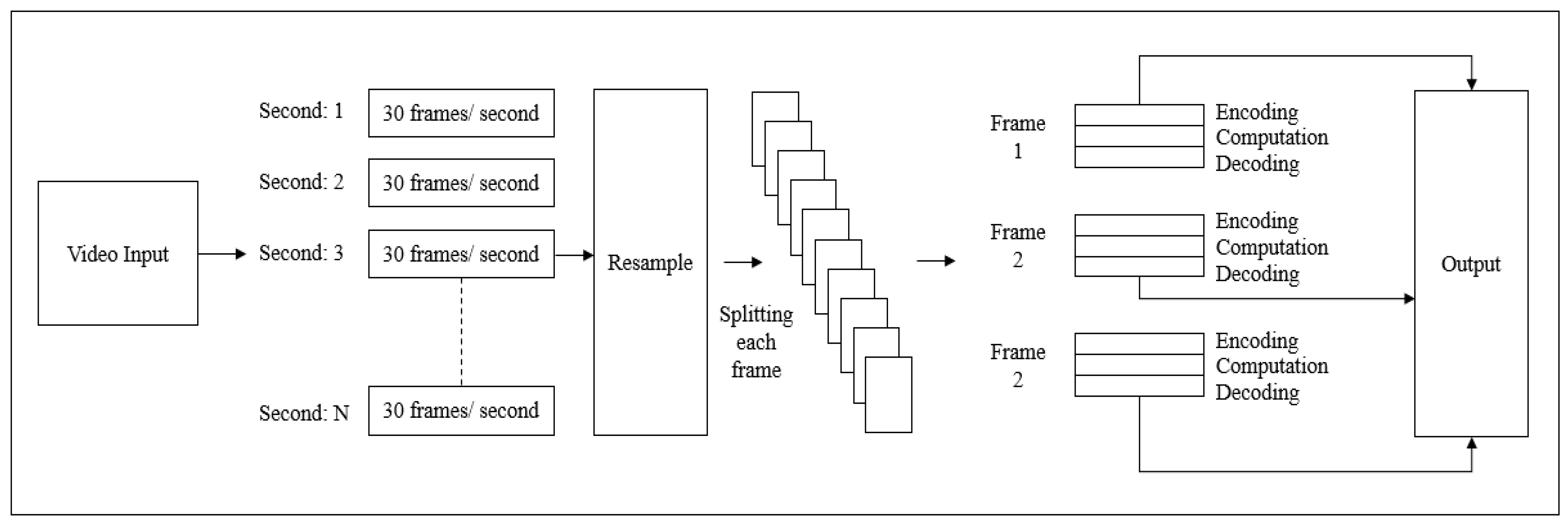

3.2. Data Pre-Processing

- Images were pre-processed using sharpening and augmentation techniques from the source;

- A random sample of the image data was taken and its bounding boxes were displayed to assess data quality. This made it possible to identify images that were of poor quality or did not contain the desired information.

- The vehicle’s type (car or motorcycle);

- The license plate layout (Brazilian or Mercosur);

- Text (e.g., ABC-1234);

- The (x, y) position of each of the four license plate corners in each image.

- Image resizing (1080 × 1080);

- Grayscale conversion;

- Gaussian blur and sharpness adjustments;

- Perspective transformation to simulate monitoring cameras mounted at various heights.
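A few of these pre-processing steps can be sketched in plain Python. This is a minimal illustration, assuming an image stored as rows of (R, G, B) tuples with 0-255 values. The luma weights (ITU-R BT.601), nearest-neighbour resizing, and the 3 × 3 Gaussian kernel are assumptions, since the source does not specify them; only the 1080 × 1080 target size comes from the list above, and the perspective adjustment is left out.

```python
# Assumed representation: a list of rows, each row a list of (R, G, B) tuples (0-255).


def to_grayscale(img):
    """Convert RGB pixels to luma using ITU-R BT.601 weights (an assumption)."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row]
            for row in img]


def resize_nearest(img, out_h, out_w):
    """Resize a 2-D pixel grid to out_h x out_w with nearest-neighbour sampling."""
    in_h, in_w = len(img), len(img[0])
    return [[img[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
            for y in range(out_h)]


# 3x3 integer approximation of a Gaussian kernel; the weights sum to 16.
KERNEL = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]


def gaussian_blur3(gray):
    """Blur a grayscale grid with KERNEL, repeating edge pixels at the border."""
    h, w = len(gray), len(gray[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            acc = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)  # clamp row index
                    xx = min(max(x + dx, 0), w - 1)  # clamp column index
                    acc += KERNEL[dy + 1][dx + 1] * gray[yy][xx]
            row.append(round(acc / 16))
        out.append(row)
    return out


frame = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (255, 255, 255)]]
gray = to_grayscale(frame)                      # [[76, 150], [29, 255]]
model_input = resize_nearest(gray, 1080, 1080)  # 1080 x 1080 grid
smoothed = gaussian_blur3(gray)
```

A production pipeline would more likely call an image library for these operations; the plain loops only make each step explicit.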

3.3. Training Data Preparation

4. Model Development

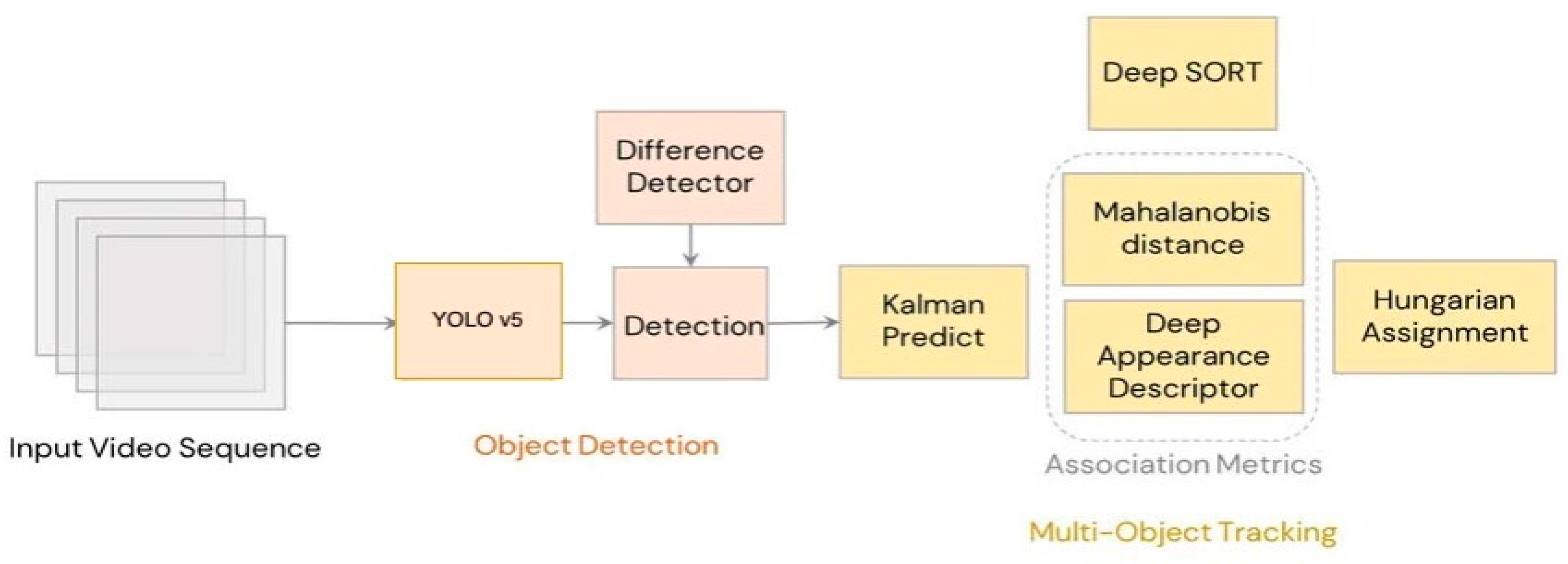

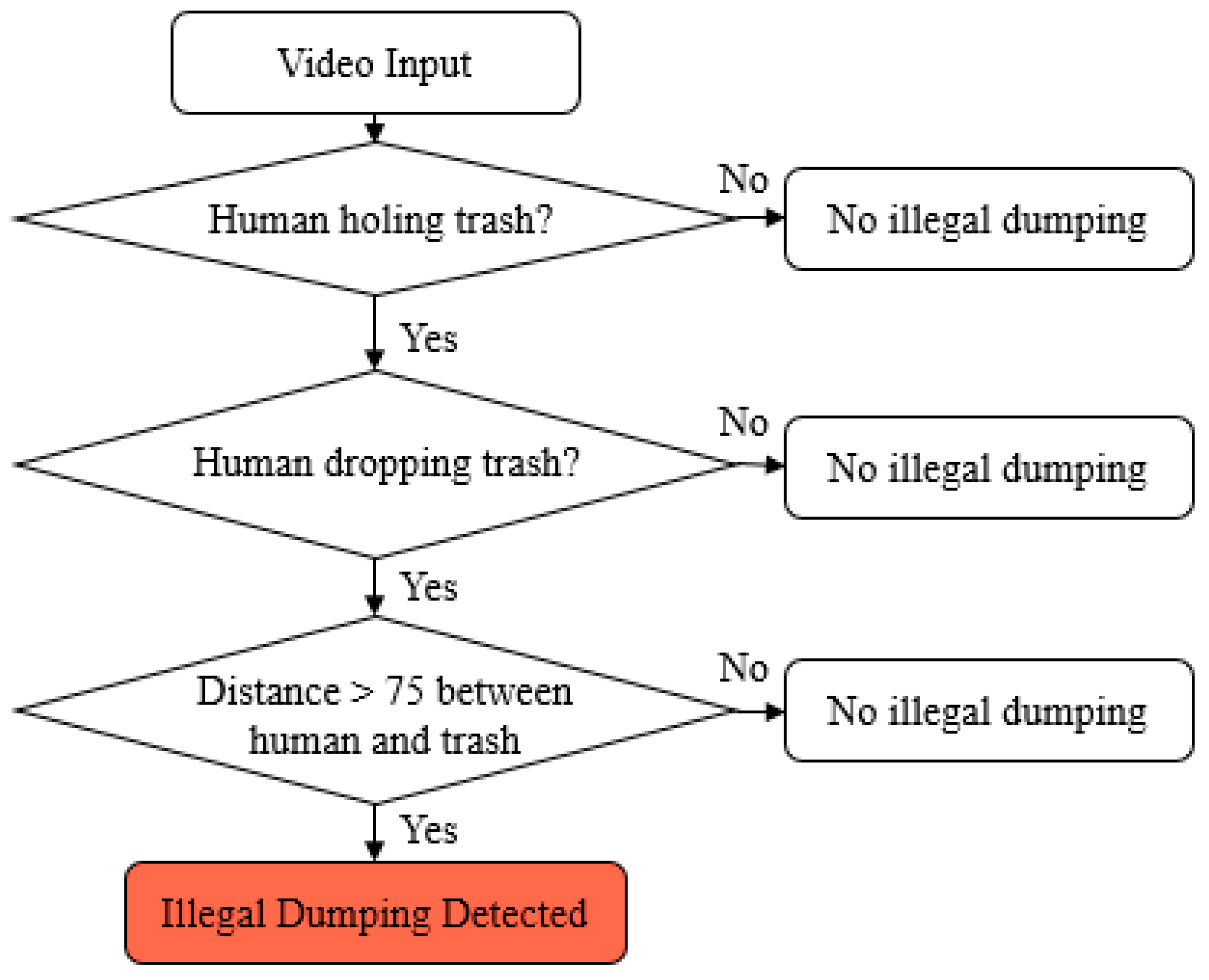

4.1. Model Proposal

4.2. Model Supports

4.3. Model Comparison

4.4. Model Evaluation

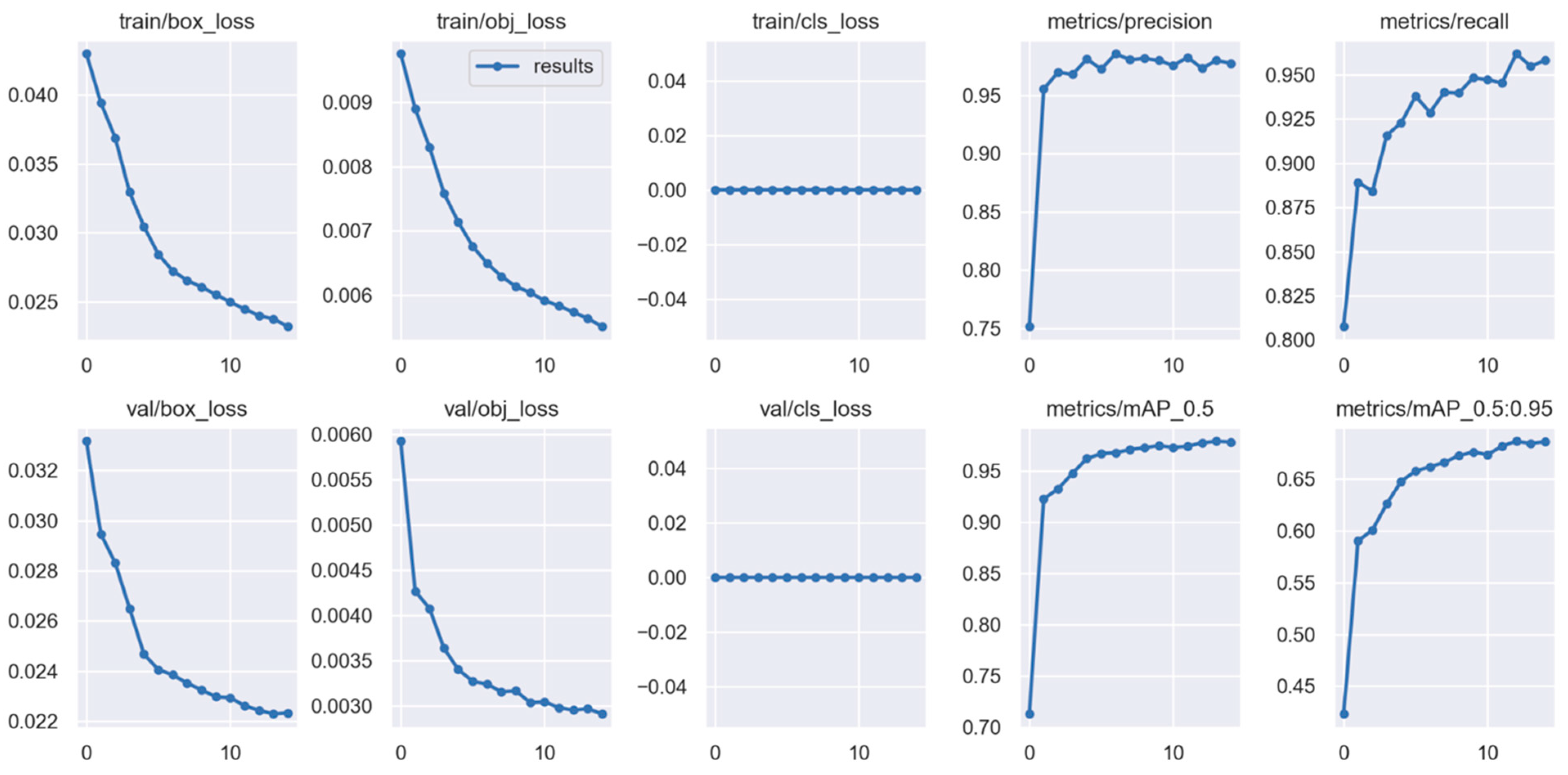

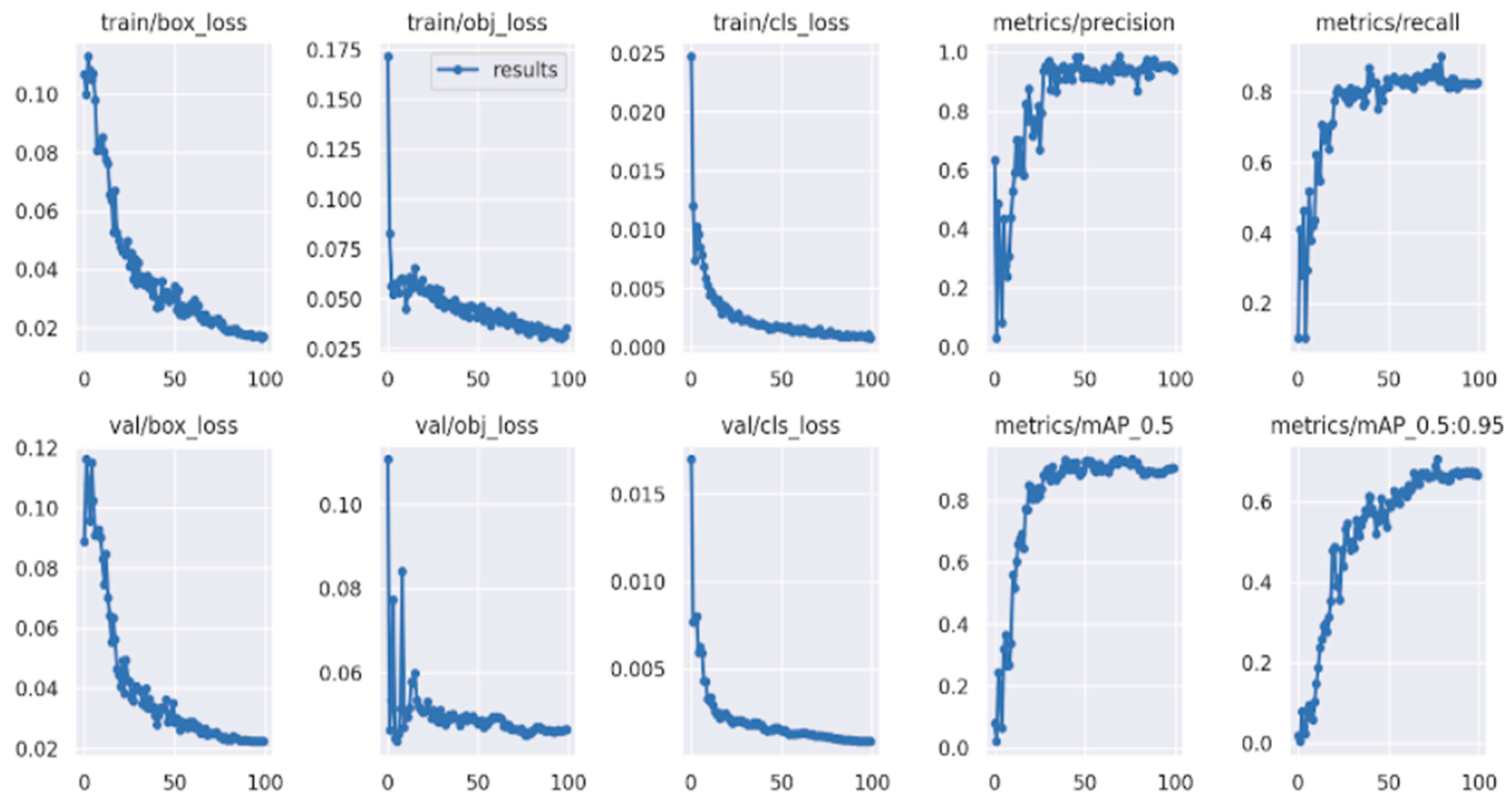

4.4.1. Performance Evaluation

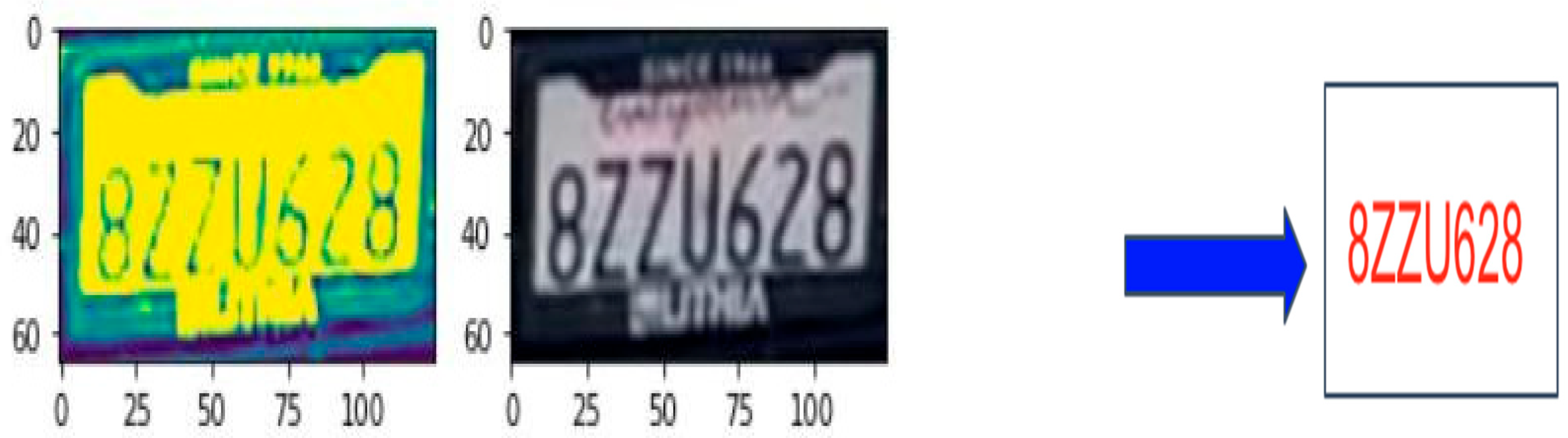

4.4.2. License Plate Detection and Recognition Using YOLOv5 and OCR
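Once YOLOv5 has localized a plate and OCR has read its characters, the raw string usually needs normalizing and validating before it is stored. The sketch below assumes the two layouts named in the dataset annotations: the older Brazilian format of three letters and four digits (e.g., ABC-1234) and the Brazilian Mercosur format of three letters, a digit, a letter, and two digits (e.g., ABC1D23). The function name `classify_plate` and its normalization rules are illustrative assumptions, not the authors' pipeline.

```python
import re

# Assumed layouts: legacy Brazilian (ABC-1234) and Brazilian Mercosur (ABC1D23).
BRAZILIAN = re.compile(r"[A-Z]{3}[0-9]{4}")
MERCOSUR = re.compile(r"[A-Z]{3}[0-9][A-Z][0-9]{2}")


def classify_plate(ocr_text):
    """Normalize raw OCR output; return (plate, layout), or None if it fits neither layout."""
    # Upper-case and keep only A-Z and 0-9 (drops hyphens, spaces, stray symbols).
    plate = re.sub(r"[^A-Z0-9]", "", ocr_text.upper())
    if BRAZILIAN.fullmatch(plate):
        return f"{plate[:3]}-{plate[3:]}", "Brazilian"
    if MERCOSUR.fullmatch(plate):
        return plate, "Mercosur"
    return None


print(classify_plate("abc-1234"))    # ('ABC-1234', 'Brazilian')
print(classify_plate(" ABC1D23\n"))  # ('ABC1D23', 'Mercosur')
```

A natural extension, not shown here, is to correct common OCR confusions such as O/0 or I/1 according to whether each position expects a letter or a digit.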

4.4.3. Frames per Second

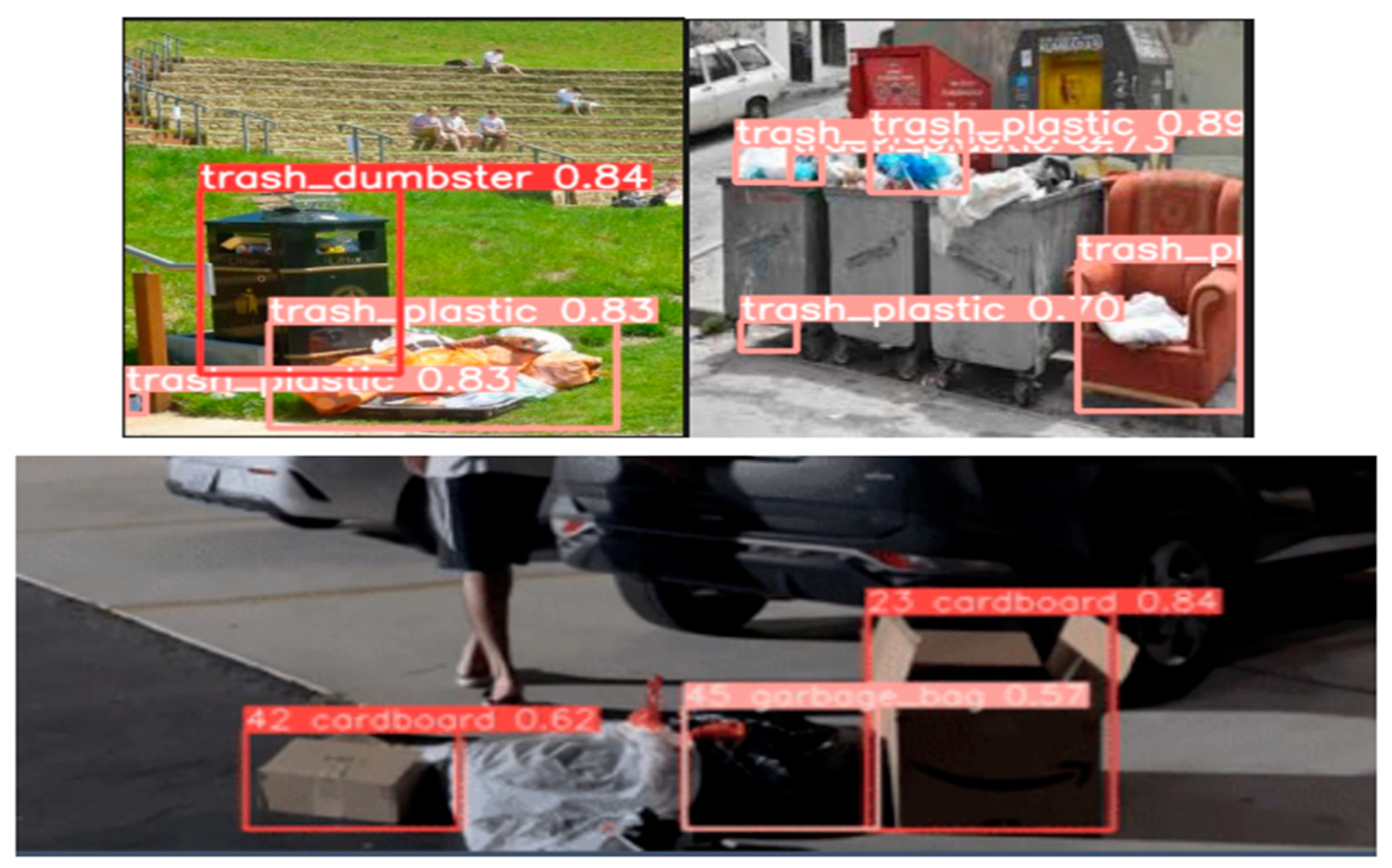

4.5. Experimental Results

4.5.1. License Plate Detection Model

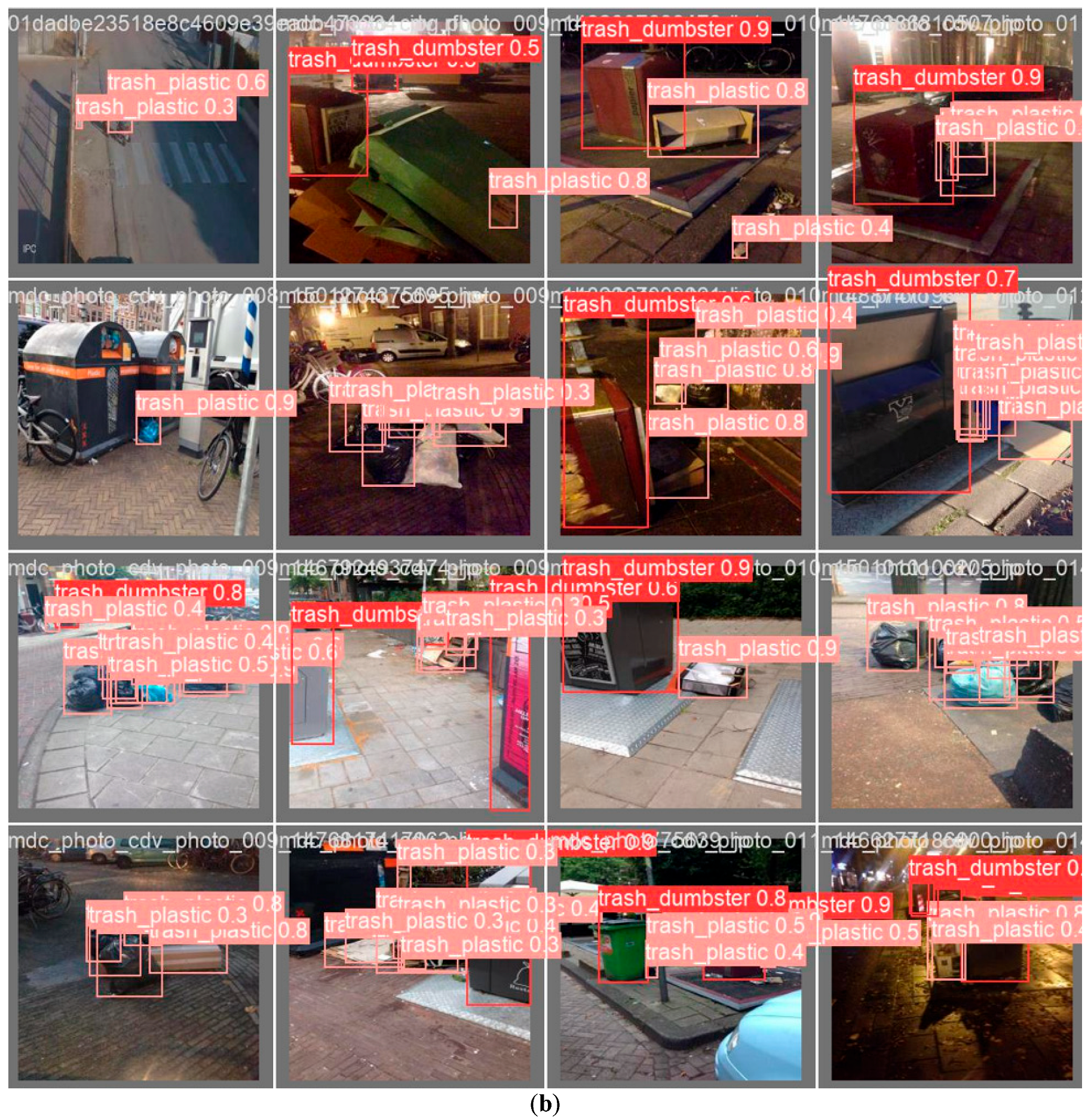

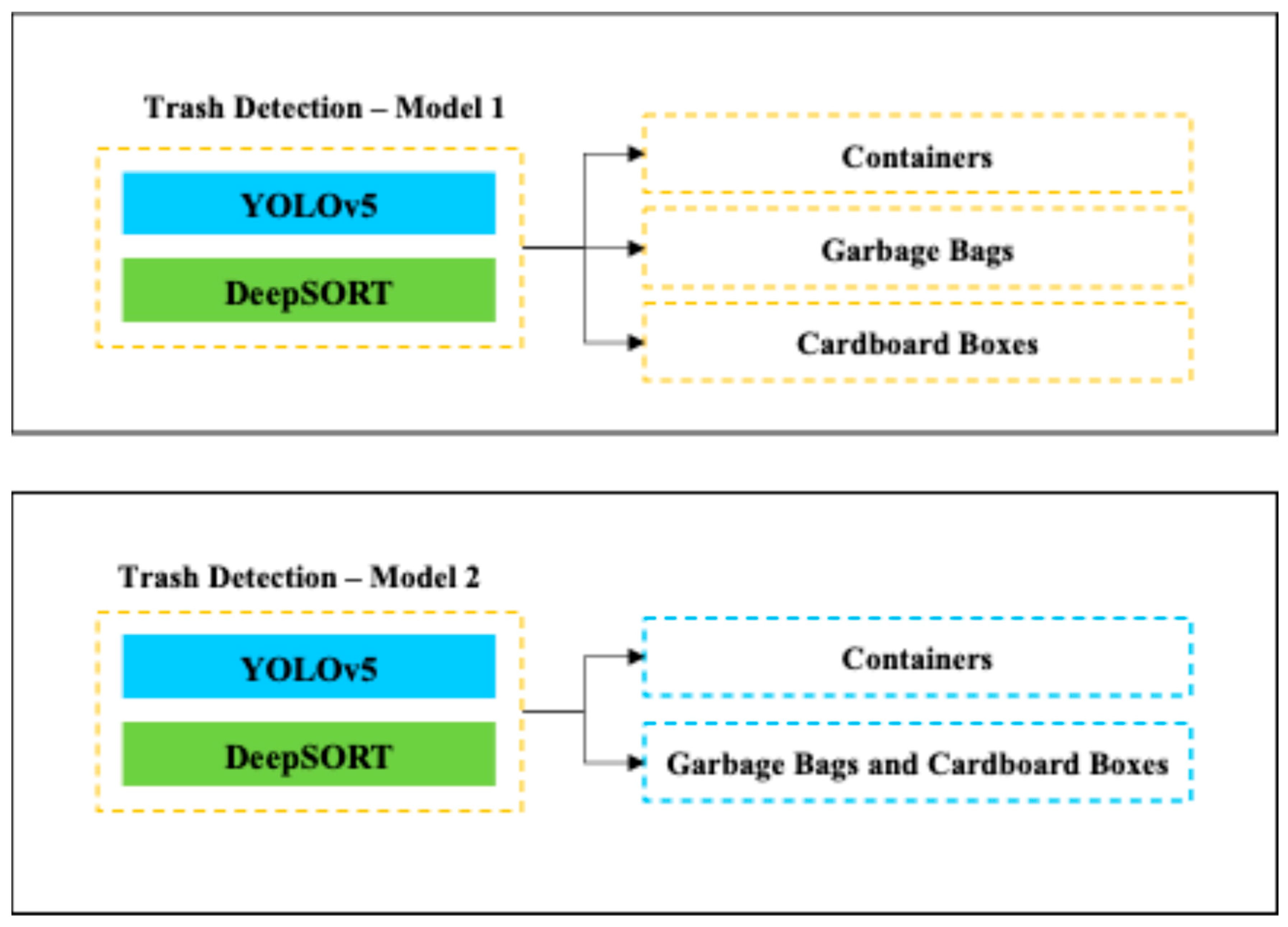

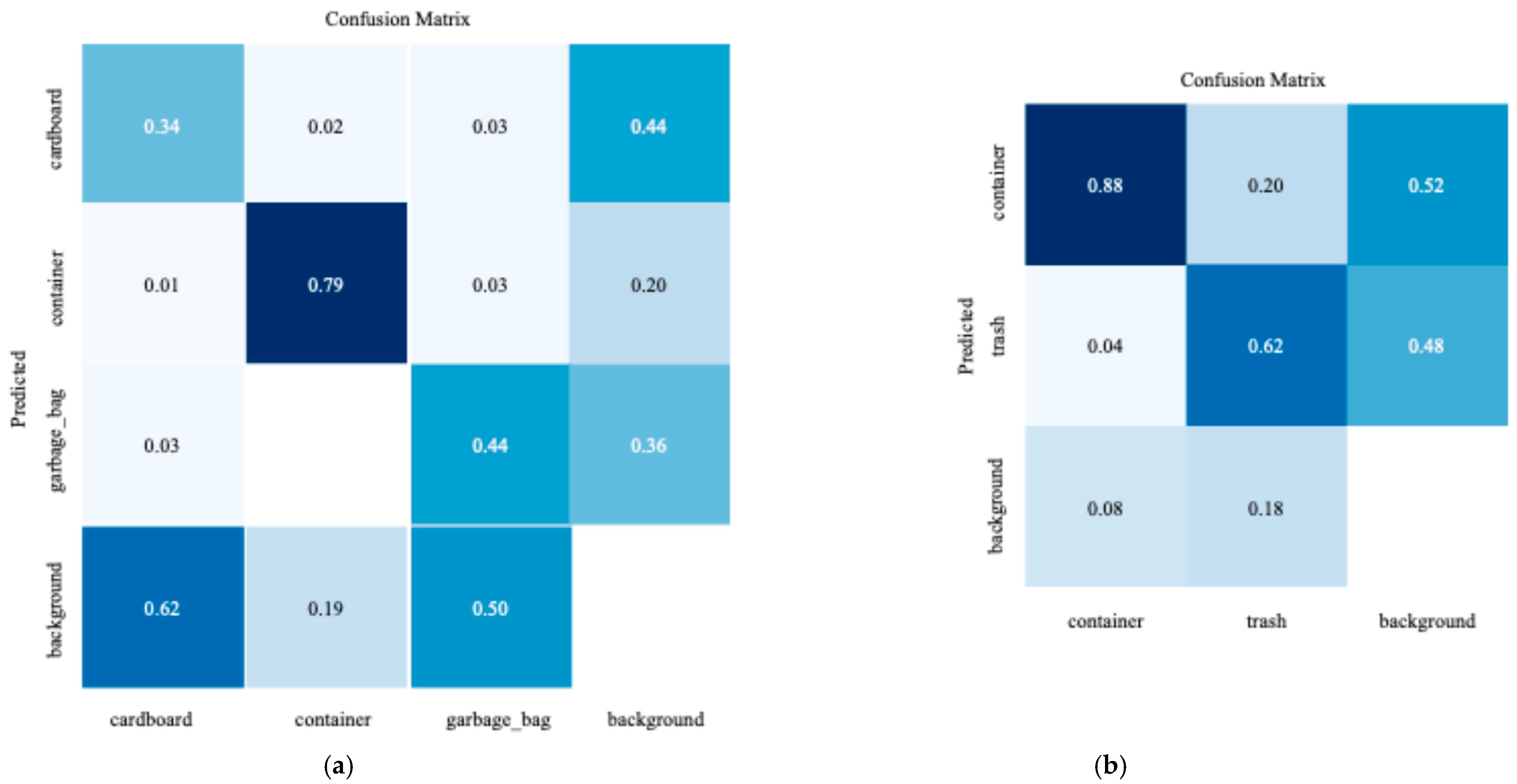

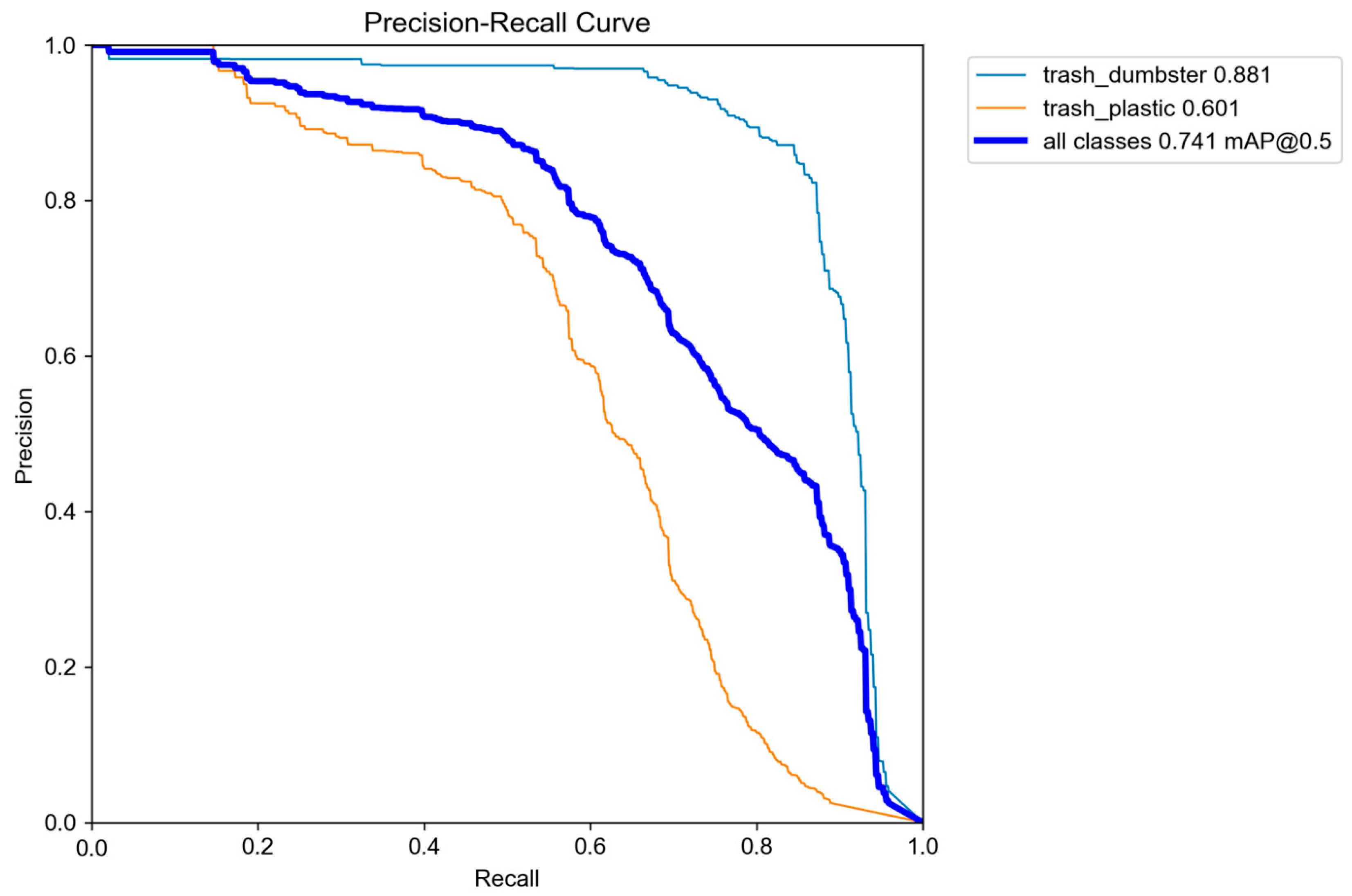

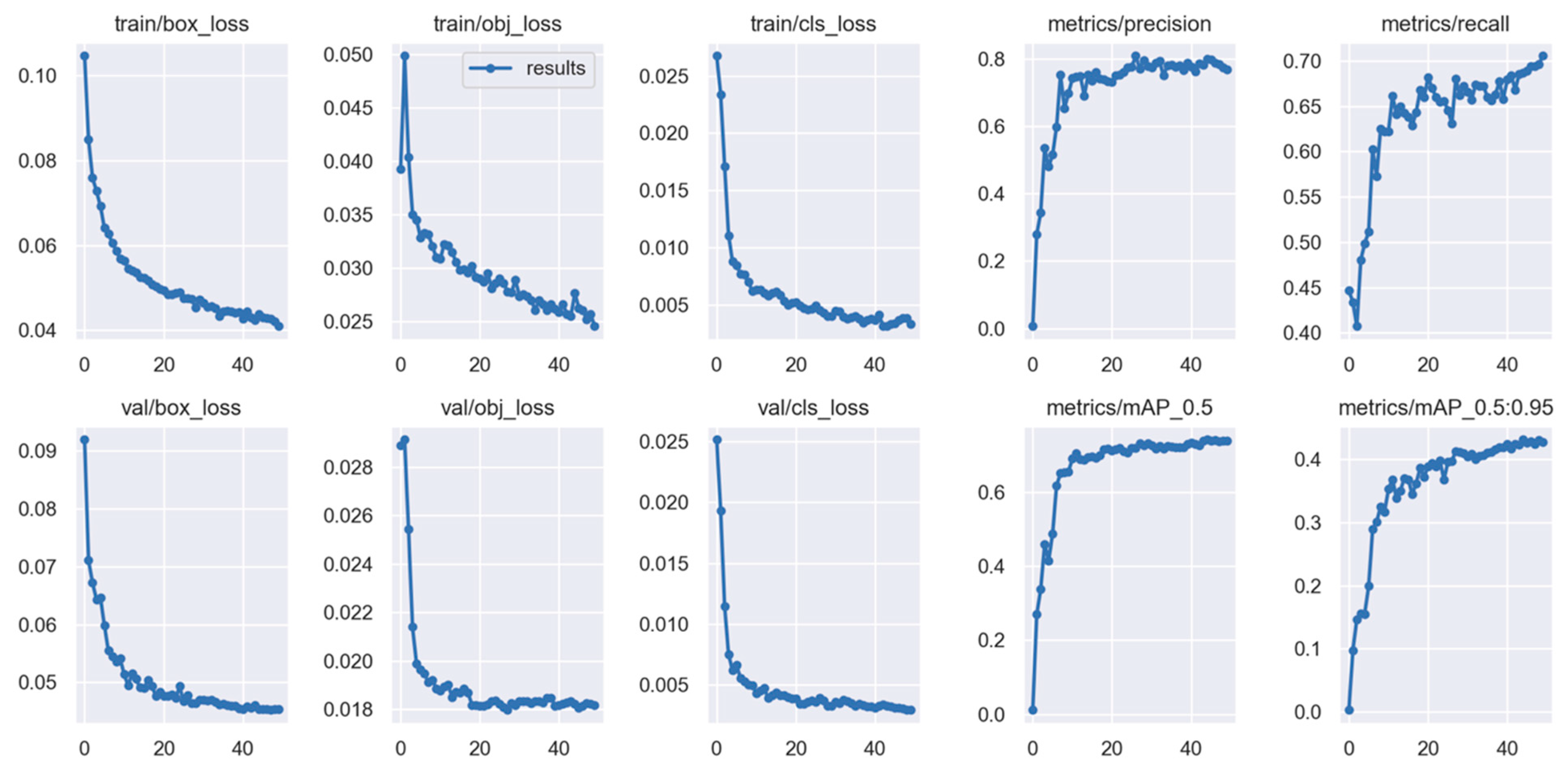

4.5.2. Trash Detection Model

4.5.3. Person Detection Model

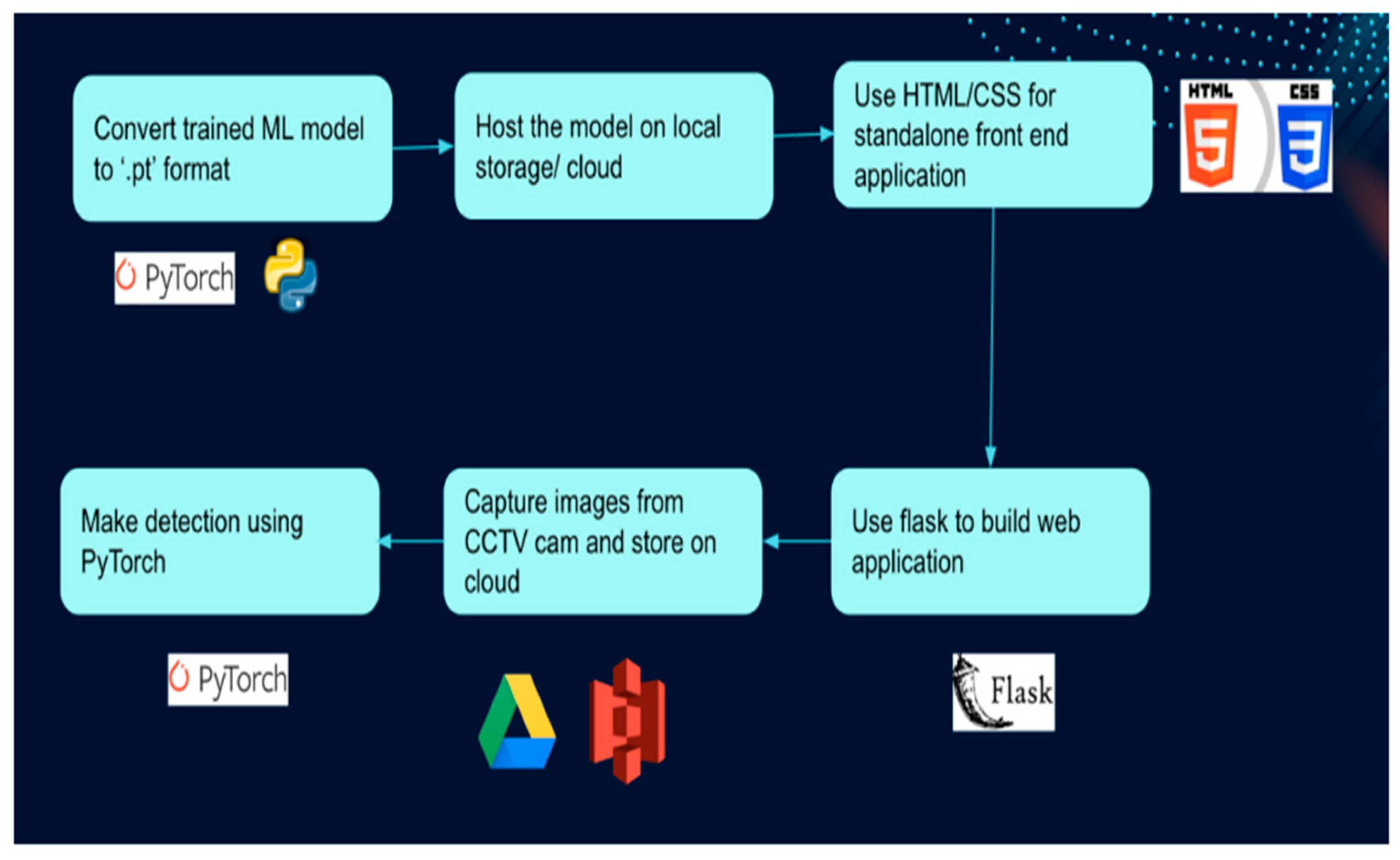

5. System Development

5.1. System Requirements Analysis

5.2. System Design

6. Conclusions

6.1. Summary

6.2. Recommendations for Future Work

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Ebi, K. 6 Cities That Are Fighting Trash with Technology (and Winning!). Smart Cities Council. 2016. Available online: https://www.smartcitiescouncil.com/article/6-cities-are-fighting-trash-technology-and-winning (accessed on 24 August 2023).

- Devesa, M.R.; Brust, A.V. Mapping Illegal Waste Dumping Sites with Neural-Network Classification of Satellite Imagery. arXiv 2021, arXiv:2110.08599.

- Karale, A.; Kayat, W.; Shiva, A.; Hopkins, D.; Nenkova, A. Cleaning Up Philly’s Streets: A Cloud-Based Machine Learning Tool to Identify Illegal Trash Dumping. Available online: https://fisher.wharton.upenn.edu/wp-content/uploads/2019/06/PhillyTrash.pdf (accessed on 10 May 2022).

- Akula, A.; Shah, A.K.; Ghosh, R. Deep Learning Approach for human action recognition in infrared images. Cogn. Syst. Res. 2018, 50, 146–154.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Bae, K.; Yun, K.; Kim, H.; Lee, Y.; Park, J. Anti-litter surveillance based on person understanding via multi-task learning. In Proceedings of the British Machine Vision Conference (BMVC), Virtual Event, 7–10 September 2020; pp. 1–13.

- Yun, K.; Kwon, Y.; Oh, S.; Moon, J.; Park, J. Vision-based Garbage Dumping Action Detection For Real-World Surveillance Platform. ETRI J. 2019, 41, 494–505.

- Matsumoto, S.; Takeuchi, K. The effect of community characteristics on the frequency of illegal dumping. Environ. Econ. Policy Stud. 2011, 13, 177–193.

- Youme, O.; Bayet, T.; Dembele, J.M.; Cambier, C. Deep learning and remote sensing: Detection of dumping waste using UAV. Procedia Comput. Sci. 2021, 185, 361–369.

- Begur, H.; Dhawade, M.; Gaur, N.; Dureja, P.; Gao, J.; Mahmoud, M.; Huang, J.; Chen, S.; Ding, X. An edge-based smart mobile service system for illegal dumping detection and monitoring in San Jose. In Proceedings of the 2017 IEEE SmartWorld, Ubiquitous Intelligence & Computing, Advanced & Trusted Computed, Scalable Computing & Communications, Cloud & Big Data Computing, Internet of People and Smart City Innovation (SmartWorld/SCALCOM/UIC/ATC/CBDCom/IOP/SCI), San Francisco, CA, USA, 4–8 August 2017.

- Coccoli, M.; De Francesco, V.; Fusco, A.; Maresca, P. A cloud-based cognitive computing solution with interoperable applications to counteract illegal dumping in Smart Cities. Multimed. Tools Appl. 2021, 81, 95–113.

- Dahi, I.; El Mezouar, M.C.; Taleb, N.; Elbahri, M. An edge-based method for effective abandoned luggage detection in complex surveillance videos. Comput. Vis. Image Underst. 2017, 158, 141–151.

- Sulaiman, N.; Jalani, S.N.H.M.; Mustafa, M.; Hawari, K. Development of Automatic Vehicle Plate Detection System. In Proceedings of the 2013 IEEE 3rd International Conference on System Engineering and Technology, Shah Alam, Malaysia, 19–20 August 2013.

- Torres, R.N.; Fraternali, P. Learning to identify illegal landfills through scene classification in aerial images. Remote Sens. 2021, 13, 4520.

- Sarker, N.; Chaki, S.; Das, A.; Alam Forhad, S. Illegal trash thrower detection based on HOGSVM for a real-time monitoring system. In Proceedings of the 2021 2nd International Conference on Robotics, Electrical and Signal Processing Techniques (ICREST), Dhaka, Bangladesh, 5–7 January 2021.

- Carolis, B.D.; Ladogana, F.; Macchiarulo, N. Yolo TrashNet: Garbage detection in video streams. In Proceedings of the 2020 IEEE Conference on Evolving and Adaptive Intelligent Systems (EAIS), Bari, Italy, 27–29 May 2020.

- Pedropro. Pedropro/Taco: Trash Annotations in Context Dataset Toolkit. GitHub. n.d. Available online: https://github.com/pedropro/TACO (accessed on 23 March 2022).

- Srivastava, S.; Divekar, A.V.; Anilkumar, C.; Naik, I.; Kulkarni, V.; Pattabiraman, V. Comparative analysis of Deep Learning Image Detection Algorithms. J. Big Data 2021, 8, 66.

- Laroca, R.; Cardoso, E.; Lucio, D.; Estevam, V.; Menotti, D. On the cross-dataset generalization in license plate recognition. In Proceedings of the 17th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Virtual Event, 6–8 February 2022.

- Laroca, R.; Severo, E.; Zanlorensi, L.A.; Oliveira, L.S.; Gonçalves, G.R.; Schwartz, W.R.; Menotti, D. A robust real-time automatic license plate recognition based on the YOLO detector. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–10.

- Common Objects in Context, COCO. Available online: https://cocodataset.org/#home (accessed on 13 May 2022).

- Trash Annotations in Context, tacodataset.org. Available online: http://tacodataset.org/ (accessed on 13 May 2022).

- Yolo Is Back! Version 4 Boasts Improved Speed and Accuracy. Synced. 13 August 2020. Available online: https://syncedreview.com/2020/04/27/yolo-is-back-version-4-boasts-improved-speed-and-accuracy/ (accessed on 23 September 2022).

- UFPR-3DFE Dataset—Laboratório Visão Robótica e Imagem. Laboratório Visão Robótica e Imagem—Laboratório de Pesquisa ligado ao Departamento de Informática, 24 September 2019. Available online: https://web.inf.ufpr.br/vri/databases/uf (accessed on 13 May 2022).

- Larxel. Car License Plate Detection. Kaggle. 1 June 2020. Available online: https://www.kaggle.com/andrewmvd/car-plate-detection (accessed on 23 March 2022).

- Sanyam. Understanding Multiple Object Tracking Using DeepSORT. LearnOpenCV. 11 November 2022. Available online: https://learnopencv.com/understanding-multiple-object-tracking-using-deepsort/ (accessed on 23 September 2022).

- Dabholkar, A.; Muthiyan, B.; Srinivasan, S.; Ravi, S.; Jeon, H.; Gao, J. Smart illegal dumping detection. In Proceedings of the 2017 IEEE Third International Conference on Big Data Computing Service and Applications (BigDataService), Redwood City, CA, USA, 6–9 April 2017.

- Optical Character Recognition (OCR): Definition & How to Guide. n.d. Available online: https://www.v7labs.com/blog/ocr-guide (accessed on 25 September 2022).

- Mccarthy, J. Object Tracking with Yolov5 and Sort. Medium. 27 July 2021. Available online: https://medium.com/@jarrodmccarthy12/object-tracking-with-yolov5-and-sort-589e3767f85c (accessed on 23 September 2022).

- Liu, Y. An improved faster R-CNN for object detection. In Proceedings of the 2018 11th International Symposium on Computational Intelligence and Design (ISCID), Hangzhou, China, 8–9 December 2018.

- Meel, V. Yolov3: Real-Time Object Detection Algorithm (Guide). Viso.ai. 2 January 2023. Available online: https://viso.ai/deep-learning/yolov3-overview/ (accessed on 23 September 2022).

- Orac, R. What’s New in Yolov4? Medium. 24 August 2021. Available online: https://towardsdatascience.com/whats-new-in-yolov4-323364bb3ad3#:~:text=YOLO%20recognizes%20objects%20more%20precisely,boundary%20box%20around%20the%20object (accessed on 23 September 2022).

- Rath, S. Yolov5—Fine Tuning & Custom Object Detection Training. LearnOpenCV. 28 November 2022. Available online: https://learnopencv.com/custom-object-detection-training-using-yolov5/#:~:text=YOLO%20is%20short%20for%20You,are%20four%20versions% (accessed on 25 September 2022).

- Evaluating Object Detection Models Using Mean Average Precision. KDnuggets. n.d. Available online: https://www.kdnuggets.com/2021/03/evaluating-object-detection-models-using-mean-average-precision.html#:~:text=To%20evaluate%20object%20detection%20models,model (accessed on 25 September 2022).

- Majumder, S. Object Detection Algorithms-R CNN vs. Fast-R CNN vs. Faster-R CNN. Medium. 4 July 2020. Available online: https://medium.com/analytics-vidhya/object-detection-algorithms-r-cnn-vs-fast-r-cnn-vs-faster-r-cnn-3a7bbaad2c4a (accessed on 23 September 2022).

- Bileschi, S. CBCL StreetScenes Challenge Framework. 27 March 2007. Available online: https://web.mit.edu/ (accessed on 13 May 2022).

- The Virat Video Dataset. VIRAT Video Data. n.d. Available online: https://viratdata.org/#getting-data (accessed on 23 March 2022).

- kevinlin311tw. Kevinlin311tw/ABODA: Abandoned Object Dataset. GitHub. n.d. Available online: https://github.com/kevinlin311tw/ABODA (accessed on 23 March 2022).

- Kinetics. Deepmind. n.d. Available online: https://deepmind.com/research/open-source/kinetics (accessed on 23 March 2022).

| Reference | Region | Purpose | Input Parameters | Model | Results |
|---|---|---|---|---|---|
| [3] | USA | Identify the timing and location of illegal dumping actions from closed-circuit television (CCTV) feeds | RGB images (Data: ImageNet dataset and Google Images, with equal proportions of images with and without trash) | ResNet | Accuracy of 60.3% |
| [4] | South Asia | Detect human actions for ambient assisted living (AAL) | IR images (Data: 5278 images sampled from thermal videos) | LeNet | Accuracy of 87.4% |
| [12] | Europe | Detect abandoned objects (AOs) using edge information | Video dataset (Data: PETS2007, AVSS2007, CDNET2014, ABODA) | Stable edge detection, clustering | Precision, recall, accuracy, F-measure |
| [13] | South Asia | Develop an Automatic Number Plate Recognition (ANPR) system | RGB images (Data: 50 images captured with a digital camera) | Optical character recognition (OCR), Radial Basis Function (RBF), Probabilistic Neural Network (PNN) | Accuracy |
| [14] | Europe | Identify illegal landfills through scene classification in aerial images | RGB images (Data: 3000 images provided by the Environmental Protection Agency of the Region of Lombardy (ARPA)) | ResNet50 and Feature Pyramid Network (FPN) | Precision of 88%, recall of 87% |
| [10] | USA | Develop an edge-based smart mobile service system for illegal dumping detection and monitoring | RGB images (Data: 9963 images with 24,640 annotated objects provided by the Environment Service Department in San Jose) | R-CNN with VGG, Inception V3 | Accuracy of 91.3% |
| [7] | East Asia | Detect garbage dumping actions in surveillance camera footage | RGB images (Data: COCO dataset with 330,000 images) | R-CNN, R-PCA, and CNN | Accuracy of 68.1% |
| [11] | Europe | Develop a cloud-based cognitive computing solution to counteract illegal dumping in smart cities | Video (Data: surveillance videos captured by the municipality and security agencies) | TrashNet DenseNet121, DenseNet169, InceptionResNetV2, MobileNet | Accuracy of 95.1% |
| [16] | All | Garbage detection in video streams | RGB images (Data: 2265 Google Images) | YOLOv3 | Precision of 68% |

| Model | License Plate Detection | Object Detection | Action Detection | Advantages | Disadvantages |
|---|---|---|---|---|---|
| ResNet [3,11,14] | x | | | | |
| FPN [14] | x | | | | |
| LeNet [4] | x | | | | |
| Inception [10] | x | | | | |
| R-CNN [8,10] | x | x | | | |
| 3D CNN [15] | x | x | | | |
| YOLO [16,17] | x | x | | | |

| Purpose | Reference | Details | Dataset | Model | Accuracy |
|---|---|---|---|---|---|
| CNN for object detection and classification | [19,20] | LP detection and character detection | UFPR-ALPR and SSIG SegPlate | YOLOv4-tiny and modified CR-NET | 78% |
| CNN for object detection | [16] | Garbage detection in video streams | Google Images | YOLOv3 | 68% |
| CNN for action detection | [7] | Detection of garbage dumping actions in surveillance camera footage | COCO dataset, self-collected | R-CNN, R-PCA, and CNN | 68% |
| Integrated illegal dumping detection model for classification | Our approach | Person detection, trash detection, LP detection, character detection, and decision algorithm | COCO, TACO, Waymo, UFPR-ALPR, authors' collected dataset | YOLOv5, DeepSORT, Tesseract OCR | 97% |
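The integrated approach combines person, trash, and license plate detections through a decision algorithm whose rules are not given in this section. A minimal rule of that kind might look like the sketch below, in which every name (`Track`, `dumping_events`) and threshold (`near_px=80.0` pixels, `linger_frames=60` frames) is hypothetical: a tracked trash object is flagged as dumped when it first appears close to a tracked person and is still visible a set number of frames after that person was last seen, and the plate of the nearest vehicle, if one was read, is attached to the event. This is an illustration, not the authors' algorithm.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Track:
    """Hypothetical summary of one tracked object (class from the detector, ID from the tracker)."""
    track_id: int
    label: str  # "person", "trash", or "car"
    positions: Dict[int, Tuple[float, float]] = field(default_factory=dict)  # frame -> box center
    plate: Optional[str] = None  # OCR result for vehicles, if any

    @property
    def first_frame(self) -> int:
        return min(self.positions)

    @property
    def last_frame(self) -> int:
        return max(self.positions)


def dumping_events(tracks: List[Track], near_px: float = 80.0,
                   linger_frames: int = 60) -> List[Tuple[int, int, Optional[str]]]:
    """Return (trash_id, person_id, plate) for trash that a person appears to leave behind."""
    people = [t for t in tracks if t.label == "person"]
    cars = [t for t in tracks if t.label == "car" and t.plate]
    events = []
    for trash in (t for t in tracks if t.label == "trash"):
        start = trash.first_frame
        drop = trash.positions[start]
        # Candidate owners: people within near_px of the trash in the frame it first appears.
        owners = [p for p in people
                  if start in p.positions and math.dist(p.positions[start], drop) <= near_px]
        if not owners:
            continue
        owner = min(owners, key=lambda p: math.dist(p.positions[start], drop))
        # The trash must remain in view for linger_frames after the owner was last seen.
        if trash.last_frame - owner.last_frame < linger_frames:
            continue
        # Attach the plate of the closest plate-bearing car visible in that frame, if any.
        nearby = [c for c in cars if start in c.positions]
        plate = None
        if nearby:
            plate = min(nearby, key=lambda c: math.dist(c.positions[start], drop)).plate
        events.append((trash.track_id, owner.track_id, plate))
    return events


person = Track(1, "person", {f: (100.0 + f, 200.0) for f in range(30)})
trash = Track(2, "trash", {f: (110.0, 210.0) for f in range(10, 120)})
car = Track(3, "car", {f: (150.0, 200.0) for f in range(40)}, plate="ABC-1234")
print(dumping_events([person, trash, car]))  # [(2, 1, 'ABC-1234')]
```

Both thresholds would need tuning to the camera geometry and frame rate, and the rule ignores occlusion, so a person briefly hidden behind a vehicle could look as if they had left.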

| Sub-Task | Dataset | Images/Videos | Description |
|---|---|---|---|
| Object detection | COCO [21] | 123,000 | The COCO dataset contains 330K images in total, of which more than 200K are labeled, with 1.5 million object instances across 80 object classes. |
| Action detection | TACO [17,22] | 1500 | The TACO dataset currently contains 1500 images of litter with 4784 annotations and 3746 images. |
| Action detection | Waymo [23] | 1000 | The Waymo dataset has 1000 images from vehicle cameras, captured during the day and at night, with high-quality labels for 4 object classes. |
| License plate detection | UFPR-ALPR [24] | 450 | The UFPR-ALPR dataset contains 4500 fully annotated images (nearly 30,000 LP characters) of 150 vehicles in real-world conditions in which both the vehicle and the camera (inside another vehicle) are moving. |
| Combined task | Authors' collected videos | 180 | Video dataset of dumping actions annotated for object detection, action detection, and license plate detection. |

| Model | Advantages | Disadvantages |
|---|---|---|
| CNN | Good for object classification | Slow and less accurate |
| Faster R-CNN [30] | Fast; uses a region proposal network (RPN) | Not suitable for real-time detection |
| YOLOv3 [31] | Real-time detection | Struggles to detect small objects |
| YOLOv4 [23,32] | High accuracy and speed | Lower accuracy and speed than YOLOv5 |
| YOLOv5 [33] | Highest accuracy and inference speed | Longer training time |

| Method | mAP@0.5 | mAP@0.5:0.95 | Precision | Recall | Batch Size | Input Resolution |
|---|---|---|---|---|---|---|
| YOLOv5 (medium) | 0.74 | 0.62 | 0.89 | 0.78 | 16 | 256 × 256 |
| YOLOv3-SPP | 0.69 | 0.54 | 0.81 | 0.75 | 16 | 416 × 416 |
| Faster R-CNN | 0.67 | 0.31 | 0.64 | 0.48 | 8 | 416 × 416 |
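The two mAP columns differ only in the IoU threshold a predicted box must reach against a ground-truth box to count as a true positive: mAP@0.5 uses 0.5, while mAP@0.5:0.95 averages AP over thresholds 0.50, 0.55, ..., 0.95 (and, with several classes, also over classes). The sketch below computes IoU and a single-class AP with all-point interpolation on hypothetical boxes. It is an illustration of the metric, not the evaluation code behind the table; COCO's official evaluator uses 101-point interpolation, so its numbers can differ slightly.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def average_precision(preds, gts, iou_thr):
    """All-point interpolated AP for a single class.

    preds: list of (confidence, box); gts: list of ground-truth boxes.
    """
    matched, hits = set(), []
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        # Match to the unmatched ground truth with the highest IoU >= iou_thr.
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if j not in matched and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched.add(best_j)
        hits.append(best_j >= 0)

    precisions, recalls, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / k)
        recalls.append(tp / len(gts) if gts else 0.0)

    # Sum recall increments weighted by the best precision at that recall or higher.
    ap, prev_recall = 0.0, 0.0
    for i, r in enumerate(recalls):
        if r > prev_recall:
            ap += (r - prev_recall) * max(precisions[i:])
            prev_recall = r
    return ap


def map_50_95(preds, gts):
    """Mean AP over IoU thresholds 0.50, 0.55, ..., 0.95 for one class."""
    thresholds = [0.5 + 0.05 * k for k in range(10)]
    return sum(average_precision(preds, gts, t) for t in thresholds) / len(thresholds)


# Hypothetical single-image example: two ground-truth objects, three predictions.
gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (21, 21, 31, 31)), (0.3, (50, 50, 60, 60))]
print(average_precision(preds, gts, 0.5))  # 1.0
print(round(map_50_95(preds, gts), 3))     # 0.7
```

The second prediction overlaps its target with an IoU of about 0.68, so it is a hit at thresholds up to 0.65 and a miss above them. This is why the mAP@0.5:0.95 column in the table is always lower than mAP@0.5: it rewards tight localization, not just detection.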

| Measure | Model 1 (3 Output Classes) | Model 2 (2 Output Classes) |
|---|---|---|
| Precision | 0.647 | 0.831 |
| Recall | 0.612 | 0.724 |
| mAP | 0.549 | 0.741 |
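Precision and recall in this table can be combined into a single number with the F1 score, their harmonic mean, F1 = 2PR / (P + R). F1 is not reported in the source; the snippet below only derives it from the published values as an illustration. On this combined measure, Model 2 also leads.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall values copied from the table.
models = {
    "Model 1 (3 classes)": (0.647, 0.612),
    "Model 2 (2 classes)": (0.831, 0.724),
}
for name, (p, r) in models.items():
    print(f"{name}: F1 = {f1_score(p, r):.3f}")
# Model 1 (3 classes): F1 = 0.629
# Model 2 (2 classes): F1 = 0.774
```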

| Module Name | Hardware/Software Environment | YOLO | DeepSORT | SAR | Total Time |
|---|---|---|---|---|---|
| Car and person detection and tracking | Google Colab Pro; GPU: Tesla P100-PCIE; memory: 16 GB | 0.039 s | 0.031 s | NA | 0.070 s |
| Garbage detection and tracking | Google Colab Pro; GPU: Tesla P100-PCIE; memory: 16 GB | 0.189 s | 0.029 s | NA | 0.218 s |
| License plate detection and recognition | Google Colab Pro; GPU: Tesla P100-PCIE; memory: 16 GB | 0.032 s | NA | 0.172 s (RobustScanner: 0.14 s) | 0.204 s |
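If the Total Time column is read as processing time per frame (the table does not say so explicitly, so this is an assumption), throughput follows as FPS = 1 / total time, and running modules one after another adds their times. The arithmetic below uses only the values in the table; the sequential-pipeline figure is a further assumption, since the modules might instead run in parallel or on different frames.

```python
# Per-stage times in seconds copied from the table: (YOLO, DeepSORT, SAR); None = NA.
stages = {
    "Car and person detection and tracking": (0.039, 0.031, None),
    "Garbage detection and tracking": (0.189, 0.029, None),
    "License plate detection and recognition": (0.032, None, 0.172),
}


def fps(total_seconds):
    """Frames per second for a given per-frame processing time."""
    return 1.0 / total_seconds


totals = {name: sum(t for t in times if t is not None) for name, times in stages.items()}
for name, total in totals.items():
    print(f"{name}: {total:.3f} s -> {fps(total):.1f} FPS")

# Assumed: all three modules run back to back on every frame.
pipeline = sum(totals.values())
print(f"Sequential pipeline: {pipeline:.3f} s -> {fps(pipeline):.1f} FPS")
```

This works out to roughly 14.3, 4.6, and 4.9 FPS for the three modules and about 2.0 FPS if all three ran back to back on each frame, which suggests that the garbage detection stage (0.189 s for YOLO alone) dominates the cost.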

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pathak, N.; Biswal, G.; Goushal, M.; Mistry, V.; Shah, P.; Li, F.; Gao, J. Smart City Community Watch—Camera-Based Community Watch for Traffic and Illegal Dumping. Smart Cities 2024, 7, 2232-2257. https://doi.org/10.3390/smartcities7040088
