1. Introduction

According to the World Health Organization (WHO), more than 70 million people are currently hearing impaired, and this number is increasing. It is estimated that by 2050, the number of people with hearing impairment and permanent hearing loss (deafness) could reach 2.5 billion [1]. This increase means that more than 700 million people worldwide will need hearing rehabilitation [1]. Sign language (SL) is a form of communication that people with hearing loss use to communicate their thoughts, feelings, and knowledge through gestures instead of verbal communication. Currently, several obstacles limit the use of SL: few hearing people learn it, government agencies have almost no employees who understand it, and sign language interpreters are very scarce. To address these problems, researchers are developing various types of human–machine interfaces (HMIs). Recent studies show that electromyography (EMG) provides significant advantages for gesture recognition [2,3,4].

EMG is a method of measuring the electrical activity of muscles. EMG signals can be recorded in two ways: invasively and non-invasively [5]. In the invasive method, the EMG signal is obtained by inserting an electrode into the muscle. In the non-invasive method, the EMG signal is obtained by placing surface electromyography (sEMG) electrodes on the skin. The main advantage of the non-invasive method is the ease of placing the electrodes on the skin.

Sign language recognition (SLR) plays an important role in simplifying human–machine interaction. Filipowska A. et al. [6] focused on recognizing 24 Polish sign language (PSL) gestures and game controls based on EMG signals. The data were collected using two different EMG sensors, BIOPAC MP36 and MyoWare 2.0. Machine learning algorithms such as logistic regression (LR), support vector machine (SVM), k-nearest neighbors (k-NN), and convolutional neural networks (CNNs) were used for classification [6]. CNNs achieved high accuracy (98.32% for BIOPAC, 95.53% for MyoWare). These results also showed that effective SLR is possible with cheaper sensors.

Tateno S. et al. [7] aimed to develop participant-independent SLR based on EMG signals to recognize 20 common American SL gestures. The muscle activity pattern of the EMG signal was analyzed, and a bilinear model was used to reduce individual differences in these signals. To save computational resources, the most important features were selected, and a long short-term memory (LSTM) network was used as the classifier for motion detection. The proposed approach achieved high-accuracy gesture recognition (94.5–100%) in real time across 20 participants.

Ruiliang Su et al. [8] collected data using wearable devices with accelerometers (ACC) and EMG sensors on both hands to identify 121 frequently used subwords in Chinese sign language. They proposed an accurate and reliable SLR system using a random forest (RF) classifier built on improved decision trees (DT). The proposed method achieved an average accuracy of 98.25%, and the RF algorithm was shown to perform stably even on poor-quality training samples. The approach demonstrated the feasibility of building a wearable and reliable EMG-ACC-based SLR system that can be applied in practice.

Currently, there are many datasets (DSs) designed for SLR using EMG, and information about them is presented in Table 1.

Table 1 summarizes the literature from 2015 to 2024. The studies in this table differ in the number of participants, sessions, and devices used to detect sign language from sEMG signals. Early studies (e.g., [9,15]) widely used the eight-channel Myo Armband sEMG device and involved relatively more subjects (10) and sessions (10), with classification accuracies ranging from 80% to 100%. By 2020, research had expanded to experiments with other devices (e.g., Delsys Trigno [10] and SS2LB [11]), which achieved high accuracies (81% and 95.48%) with classifiers such as RF and Linear Discriminant Analysis. Recent studies (e.g., [16] and our DS) are based on newer devices (Terylene and Biosignalsplux) and modern classification algorithms (CNN-CBAM and RF); although they use a relatively small number of channels (four and six), they achieve high accuracy (92.32% and 97%) [16]. The classifier reported in [16], at 92.32%, performed below the model we present. We attribute this to the large number of participants and the small number of channels in our study. This suggests that advances in muscle-mounted sensors and optimization of the number of channels give good results.

The practical significance of this research is explained, first of all, by the fact that a functional system was created based on a low-channel, compact, and energy-efficient EMG platform. The proposed solution uses only four EMG channels, which significantly reduces technical complexity, optimizes the use of hardware resources, and increases the overall efficiency of the system. Due to the low-channel approach, the stages of initial filtering, feature extraction, and classification of EMG signals can be performed in real time. This allows the system to be used on mobile devices or microcontroller-based platforms.

The compactness and energy efficiency of the system also allow it to be used in everyday life as a wearable device. Such devices are of great importance as a communication tool, especially for users with hearing or speech limitations. The proposed technology detects bioelectric signals that occur during the user’s hand movements, associates them with previously defined gestures, and provides output in the form of appropriate text or a synthesized voice. This process allows information to be transmitted without the need for any verbal or visual gestures from the user. Another important aspect is that the flexible algorithmic architecture of the system (signal preprocessing, feature extraction, and machine learning-based classification) allows it to be adapted to different user needs.

While different studies have investigated EMG-based sign language recognition, our work is distinguished by a large corpus of subject-specific data captured entirely from hearing-impaired individuals and by an optimized low-channel configuration that enables real-time inference at low power consumption. Taken together, these properties make the approach suitable for practical and scalable deployment, a combination rarely covered in previous work.

In this study, six sign words (fruit names: apple, pear, apricot, nut, cherry, raspberry) were recorded from 46 participants using a four-channel device for 3 weeks, with each action recorded 10 times.

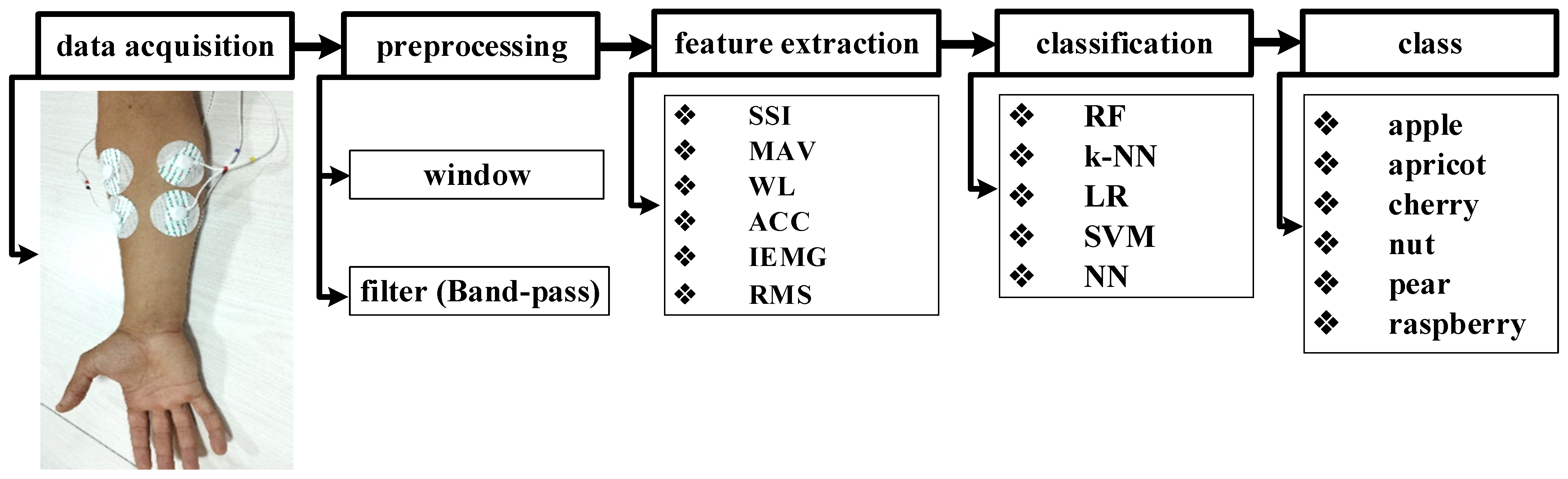

The general scheme for organizing the DS and classification is presented in Figure 1. This process is organized step-by-step. First, a dataset is formed. In the next stage, the raw signals are cleaned of various noises through preprocessing, and the necessary filtering operations are performed. After that, features are extracted. In the final stage, each sign action is assigned to the appropriate class using classification algorithms.

2. Dataset Organization

2.1. Device

Special tests were conducted to organize the DS. During the experiments, the device was adjusted to take into account the characteristics of the hand movements of people with hearing impairments.

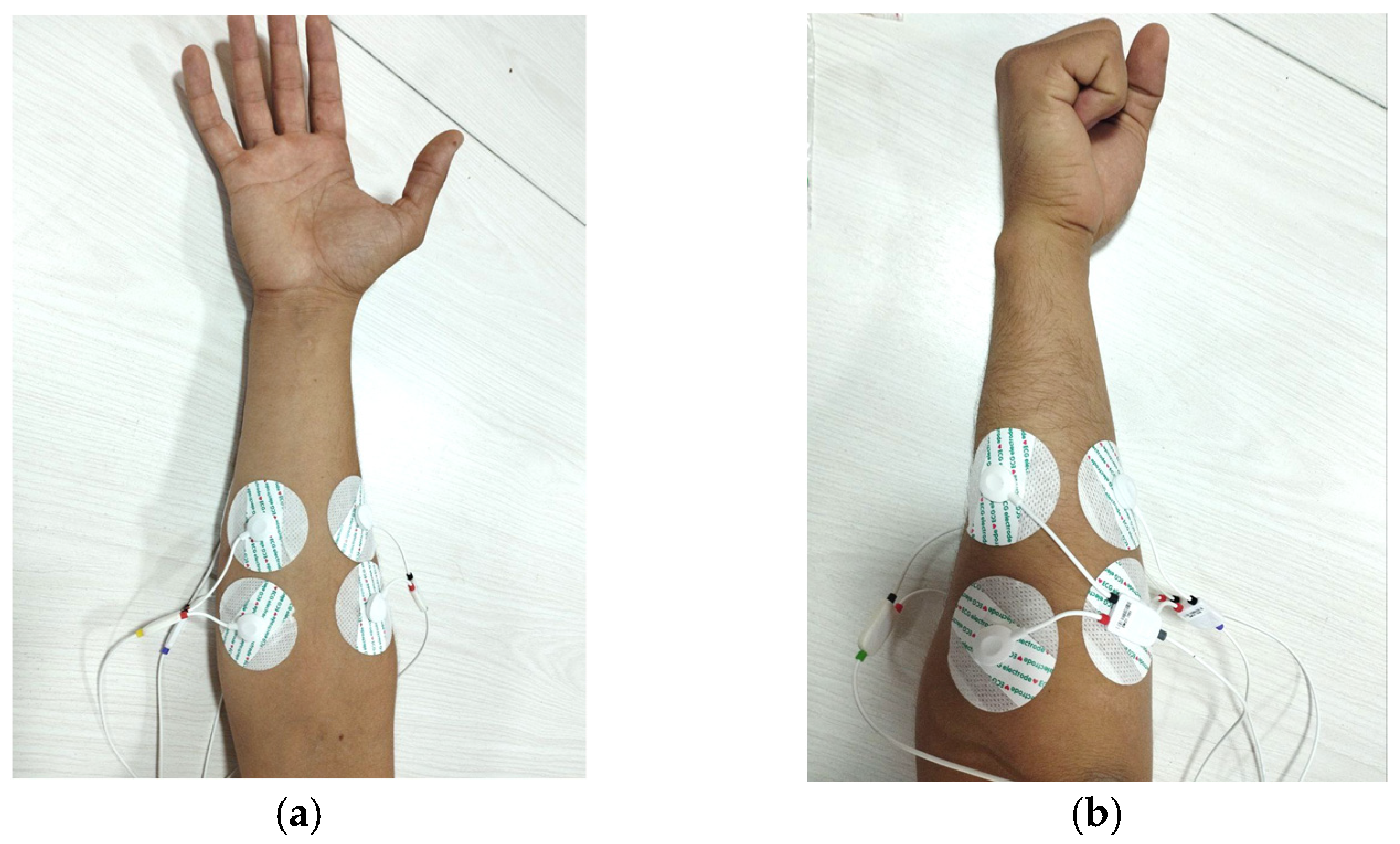

A four-channel Biosignalsplux EMG device developed by PLUX Wireless Biosignals (PLUX Wireless Biosignals S.A., Lisbon, Portugal) was used to record the EMG signal (Figure 2). The signal was sampled at 1000 Hz. The data were recorded non-invasively by placing electrodes on the skin. In the device shown in the figure, the ports marked 1–4 are the input channels. The port below port 4 is used to recharge the device.

Electrodes were used to record the signal and were placed over the innervation zones of the muscles (Figure 3).

The sensors of the four-channel Biosignalsplux EMG device were placed on the brachioradialis, flexor carpi radialis, extensor carpi ulnaris, and flexor carpi ulnaris muscles of the right hand, which are most active during gesture movements, in the order listed in Table 2 (Figure 3a,b).

2.2. DS Structure

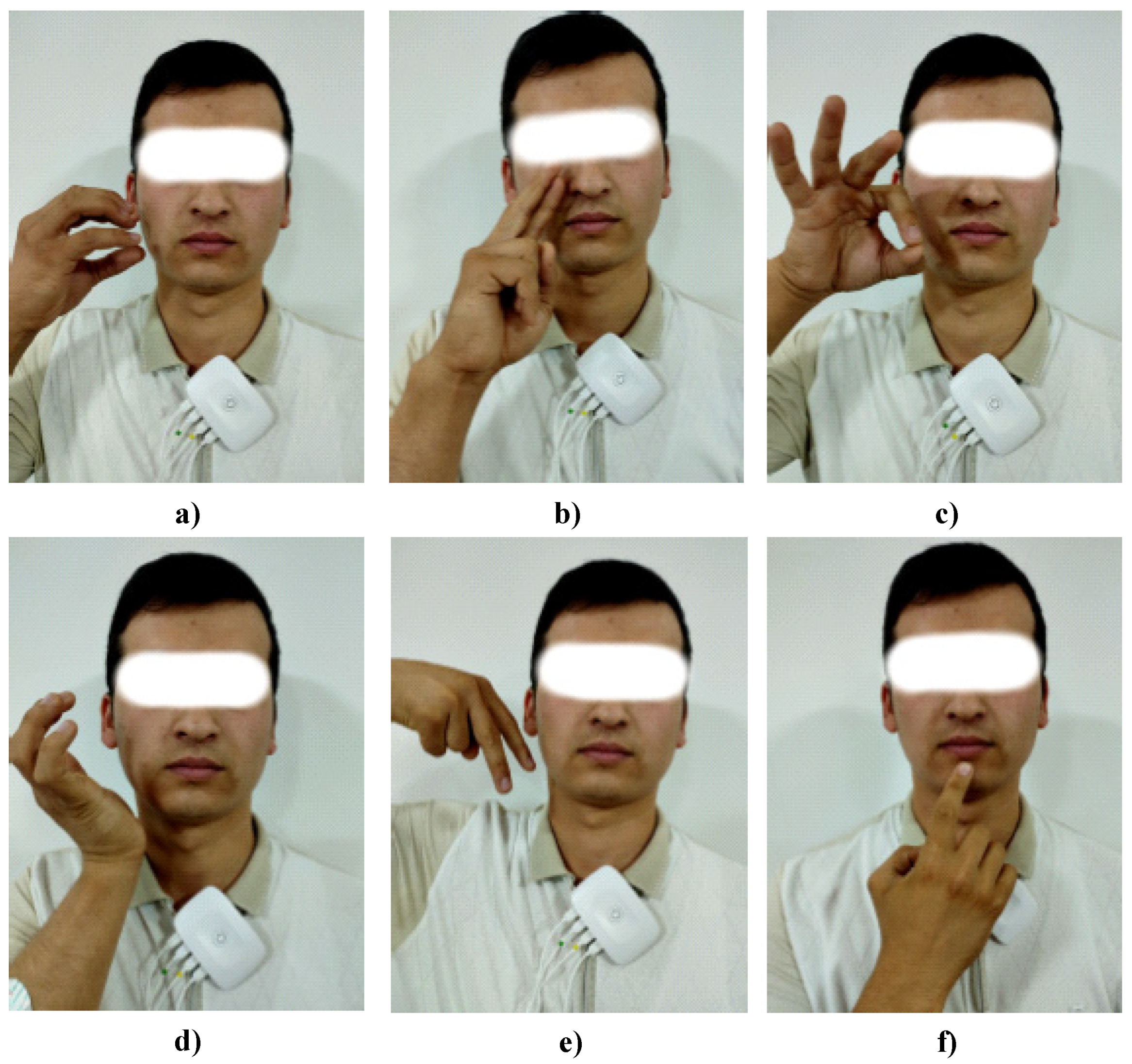

The study involved the recognition of gestural actions for the names of six fruits: apple, pear, apricot, nut, cherry, and raspberry (Figure 4). This choice was made primarily to ensure the reproducibility and stability of the EMG signals under the experimental conditions. Given the inherently noisy nature of the EMG signal, gestures were selected whose muscle activity differs reliably from one gesture to another. Therefore, it was considered appropriate to limit the model to six clearly distinct gestures, each with its own muscle activity pattern.

Each subject repeated the gestural actions 10 times. Each session was conducted once for 1 week. Thirty sessions were conducted over 3 weeks. The size of the DS was as follows:

The participants for the experiment were senior students of the “Karshi city specialized boarding school No. 17 for disabled children with special educational needs” (Uzbekistan). EMG signals were obtained with the consent of the subjects participating in the study. The experiment was conducted on 46 students, including 18 girls and 28 boys.

In contrast to other studies where most of the gesture samples had been either provided by healthy subjects or mimicked, the current dataset was built by individuals who normally work with sign language in their daily lives. This increased the linguistic and physiological validity of the EMG signals collected with this system, and promoted model generalizability in similar recognition tasks among the target population.

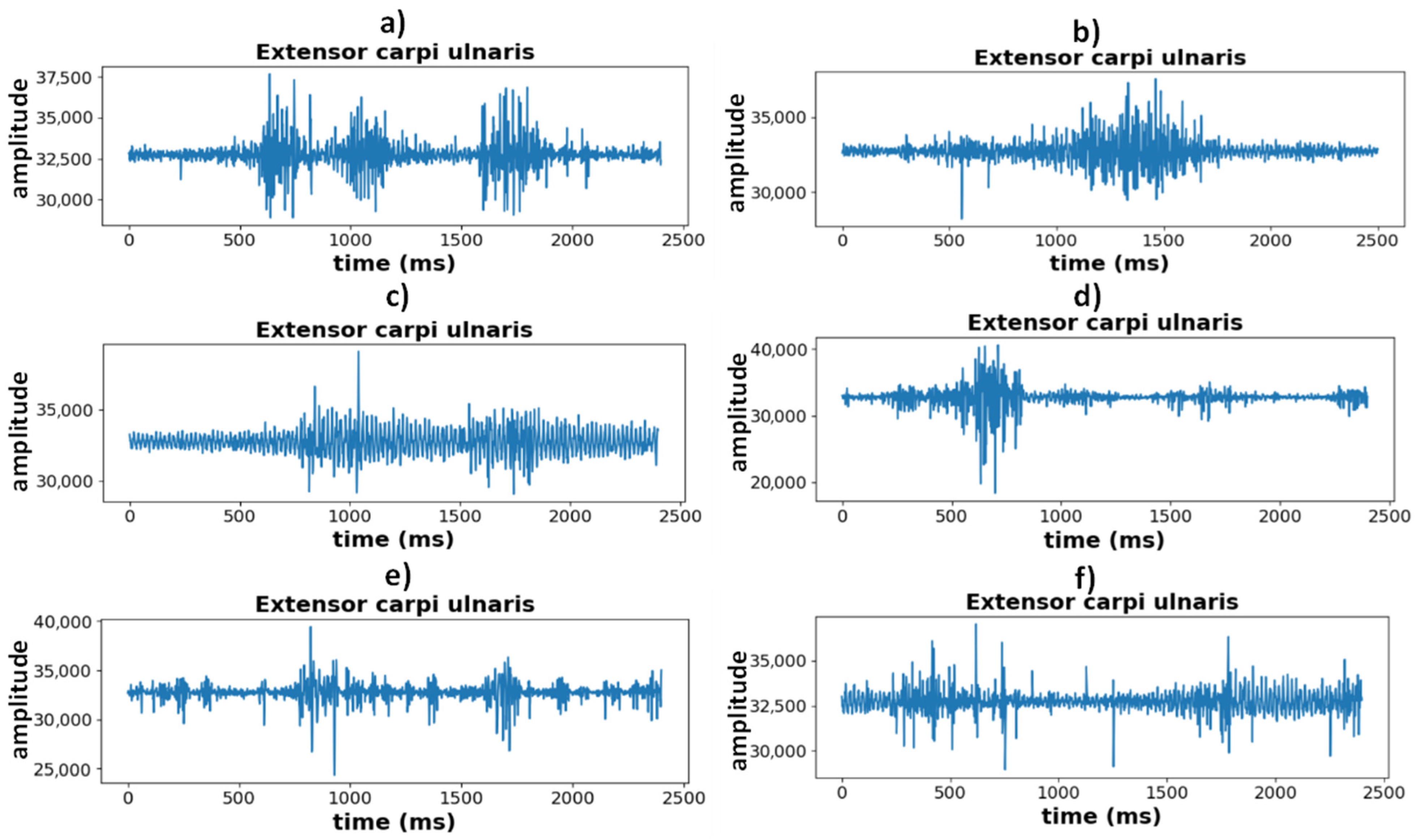

As an example, sample segments of the EMG signals recorded from the extensor carpi ulnaris muscle (channel 2) are visualized in Figure 5. This image shows the time course of the EMG signal corresponding to each movement.

A band-pass filter was used to eliminate noise and artifacts from the EMG signals. A systematic procedure was then used to find the best window size and overlap ratio: to ensure high classification performance, we experimented extensively with window lengths of 100 ms, 200 ms, and 300 ms and overlap values of 0%, 25%, and 50%. The classification accuracies of all algorithms for each window and overlap setting are given in Table 3.

The evaluation indicates that a window size of 200 ms with 0% overlap gives the best trade-off between accuracy and computational cost across all algorithms. In particular, the RF algorithm obtained 97% accuracy at 200 ms with no overlap, followed by kNN at 96%, NN at close to 92%, LR at 92%, and SVM at 90%. These high results are attributed to segment independence during classification: with 0% overlap, no window shares samples with another, which reduces the risk of poor generalization. All algorithms showed a drop in classification accuracy when the overlap was increased to 25% and 50%. For example, with a 200 ms window, the accuracy of the RF algorithm dropped from 97% at 0% overlap to 94% at 25% overlap and to 92% at 50% overlap. This trend is plausible because, as the overlap increases, segments become more similar to one another, and the repeated data harm the generalization power of the model. A higher overlap also produces more windows per recording and therefore a heavier computational load. It was concluded that the best trade-off between classification performance and computational cost is achieved by keeping the overlap at 0% (i.e., with non-overlapping windows).

Tests were similarly run with 100 ms and 300 ms windows, but the results showed poorer performance in comparison to the data with a 200 ms window for RF. The accuracies in the 100 ms windows were 88–90% and about 87–89% in the 300 ms window.

Based on these results, we fixed the working parameters for all subsequent experiments in the study: a 200 ms window and 0% overlap. This setting was found to be optimal for the accuracy of the classification results, computational efficiency, and overall stability of the system.
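The segmentation step described above can be illustrated with a minimal sketch. It assumes the 1000 Hz sampling rate and the 200 ms, 0% overlap setting reported in this section; the function name `segment_signal` and the choice to drop a trailing partial window are illustrative assumptions, not details taken from the study.

```python
def segment_signal(samples, fs_hz=1000, window_ms=200, overlap=0.0):
    """Split a 1-D sample sequence into fixed-length windows.

    Assumption: a trailing partial window is discarded.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    window_len = int(fs_hz * window_ms / 1000)            # samples per window
    step = max(1, int(round(window_len * (1.0 - overlap))))  # hop between window starts
    last_start = len(samples) - window_len
    return [samples[start:start + window_len]
            for start in range(0, last_start + 1, step)]


# One second of placeholder samples at 1000 Hz (not real EMG data).
signal = list(range(1000))
windows_no_overlap = segment_signal(signal, overlap=0.0)
windows_half_overlap = segment_signal(signal, overlap=0.5)
print(len(windows_no_overlap), len(windows_half_overlap))
```

With 0% overlap each sample belongs to exactly one window, whereas 50% overlap nearly doubles the number of windows to process, which is consistent with the computational argument made above.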

3. Feature Extraction and Classification

This section describes in detail the steps involved in generating a feature vector for detecting and classifying various sign language gestures using EMG signals. Experiments were also conducted using several modern and efficient classification algorithms that allow for automatic gesture discrimination based on these feature vectors, and the results were analyzed.

3.1. Signal Amplitude

EMG signals are characterized by a high level of variability. This variability is caused by several physiological and technical factors: the electrical resistance of the skin surface, the technical quality of the electrodes used, and the anatomical location of the muscle tendons and their innervation zones, all of which significantly affect the stability of these signals [17].

In modern scientific research, various preprocessing steps are being implemented in order to reduce such discrepancies, including signal filtering, segmentation, and signal amplitude normalization.

In this study, the amplitude values of the EMG signal in the resting state were used as a basis and were evaluated using the signal-to-noise ratio (SNR). This approach allows for the direct comparison of signals before and during movement. This plays an important role in the process of accurately distinguishing and classifying the different movements of sign language.

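The SNR formula is expressed as follows:

$$\mathrm{SNR} = 10 \log_{10}\left(\frac{P_{\mathrm{gesture}}}{P_{\mathrm{idle}}}\right)\ \mathrm{dB}$$

Here, $P_{\mathrm{gesture}}$ is the average signal power during active movement, and $P_{\mathrm{idle}}$ is the average signal power during an idle state.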

SNR is the ratio of the useful signal power to the noise power and is the main indicator for assessing the quality of EMG signals. The higher the SNR value, the cleaner and more accurate the signal. Signals with a high SNR are considered to be of good quality and suitable for analysis; signals with an average SNR are satisfactory, but the results should be treated with caution; signals with a low SNR are noisy and unreliable, and additional filtering is recommended.

As part of preprocessing, the SNR was systematically evaluated to ensure the integrity of the EMG recordings. Specifically, the SNR was calculated by comparing the signal power during the active muscle contraction phase to the power of the baseline noise segment captured immediately before the onset of each gesture. This approach provided a quantitative measure of signal clarity, helping to distinguish meaningful physiological activity from background noise. Trials with SNR values below the 5 dB threshold were classified as low-quality or noisy recordings and were excluded from the dataset. Filtering out such unreliable trials improved the robustness, consistency, and overall quality of the input data used for downstream machine learning-based classification, and thereby supported the performance and generalizability of the classification model.
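The trial-screening rule described above can be sketched as follows. This is a minimal illustration that assumes power is estimated as the mean of the squared samples of a zero-mean (already band-pass filtered) signal and that SNR is expressed in decibels; the function names and the toy amplitudes are hypothetical, and only the 5 dB threshold comes from the text.

```python
import math

SNR_THRESHOLD_DB = 5.0  # exclusion threshold stated in the text


def mean_power(samples):
    """Average power of a segment, assuming a zero-mean signal."""
    return sum(x * x for x in samples) / len(samples)


def snr_db(gesture_samples, idle_samples):
    """SNR in dB: active-contraction power relative to the pre-gesture baseline."""
    return 10.0 * math.log10(mean_power(gesture_samples) / mean_power(idle_samples))


def keep_trial(gesture_samples, idle_samples, threshold_db=SNR_THRESHOLD_DB):
    """Return True if the trial meets the SNR threshold and stays in the dataset."""
    return snr_db(gesture_samples, idle_samples) >= threshold_db


# Toy amplitudes (arbitrary units), not measured data.
idle = [0.1, -0.1, 0.1, -0.1]
strong_gesture = [1.0, -1.0, 1.0, -1.0]
weak_gesture = [0.15, -0.15, 0.15, -0.15]

print(round(snr_db(strong_gesture, idle), 2), keep_trial(strong_gesture, idle))
print(round(snr_db(weak_gesture, idle), 2), keep_trial(weak_gesture, idle))
```

In this toy case the strong gesture has ten times the amplitude of the baseline and passes the threshold, while the weak gesture falls below 5 dB and would be excluded.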

The SNR results, calculated from the average signal amplitudes in the four selected EMG channels for each gesture, are shown in Figure 6. The analysis revealed that some gestures showed significantly stronger electromyographic activity than others. In particular, the Cherry class had the highest SNR, averaging 15 dB, which indicates strong muscle activity during the gesture. The Nut class also showed a high electromyographic response, coming second with an average SNR of 13 dB. Figure 5 also shows that the EMG signals belonging to these classes are clear, have high amplitude, and contain little noise.

The Apple and Raspberry classes showed values of 10 dB and 8 dB, respectively, indicating a moderate level of muscle activity.

However, the SNR value was significantly lower for some gestures. For example, the Pear class showed an average SNR of 7 dB, while the Apricot class had the lowest value of 5 dB. This indicates that the signal-to-noise ratio of the EMG signals during these gestures was low and the muscle activity was relatively weak. Figure 5 visually shows that the EMG signals for these gestures are noticeably noisy.

3.2. Feature Extraction

The proposed DS has its own unique features that distinguish it from other existing EMG collections by several important parameters. In particular, the large number of subjects performing gestures in this collection, the repetition of each gesture movement several times, and the fact that the EMG signals are obtained from hearing-impaired people communicating in SL increase the reliability and analytical accuracy of the data. Therefore, this DS serves as an important source for extracting continuous electromyographic features characteristic of gestures and their effective classification.

Many scientific studies have been conducted on the detection and classification of movements or gestures using electromyographic signals. In this work, we use the following features, which have been proven effective in previous experiments [18]:

1. Simple Square Integral (SSI) is a time-domain feature that represents the total energy of the sEMG signal. SSI reflects the changes in amplitude and duration in the signal. It is calculated using the following formula:

$$\mathrm{SSI} = \sum_{i=1}^{N} x_i^{2}$$

where $x_i$ is the value of the EMG signal at the $i$th point, and $N$ is the total number of samples. SSI is particularly useful in detecting high-energy muscle activity, especially in analyzing the difference between static and dynamic movements.

2. Average Amplitude Change (AAC) refers to the average change between consecutive points in a signal:

$$\mathrm{AAC} = \frac{1}{N} \sum_{i=1}^{N-1} \left| x_{i+1} - x_i \right|$$

AAC is also used to assess dynamic changes in muscle activity and to identify high-frequency components. This method allows for amplitude-based analysis without directly analyzing the spectral composition of the signal.

3. Mean Absolute Value (MAV) is used to estimate the overall activity in the signal and indicates the intensity of the sEMG signal:

$$\mathrm{MAV} = \frac{1}{N} \sum_{i=1}^{N} \left| x_i \right|$$

This method is characterized by its low complexity, but reliable performance in real-time systems. MAV is effective in assessing the degree of muscle contraction, and describes the main amplitude components of the sEMG signal without filtering.

4. Integrated EMG (IEMG) is used to assess the overall activity in the signal and indicates the intensity of the movement in the sEMG signal:

$$\mathrm{IEMG} = \sum_{i=1}^{N} \left| x_i \right|$$

Although similar in appearance to MAV, IEMG provides a value accumulated over the total time and takes into account the time dimension along with the amplitude. This feature is used to assess the overall muscle strength characteristics of the sEMG segments.

5. Waveform Length (WL) reflects changes in the amplitude and frequency components of the signal and is calculated as follows:

$$\mathrm{WL} = \sum_{i=1}^{N-1} \left| x_{i+1} - x_i \right|$$

The WL feature indicates the complexity of the EMG signal, i.e., its inclusion of multi-frequency components and frequently changing amplitudes. It is a sensitive and informative feature, especially in distinguishing sEMG classes depending on the type of movement.

6. Root Mean Square (RMS) represents the statistical power of the signal amplitude:

$$\mathrm{RMS} = \sqrt{\frac{1}{N} \sum_{i=1}^{N} x_i^{2}}$$

The RMS method is widely used in EMG signal analysis due to its robustness against random noise and its effectiveness in providing a reliable quantitative measure of muscle strength. RMS serves as a consistent and objective indicator of muscle activation levels, making it especially useful in applications such as movement analysis and fatigue assessment.
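The six features listed above can be computed per window as in the following sketch. It assumes the standard time-domain definitions of SSI, AAC, MAV, IEMG, WL, and RMS (with AAC normalized by the window length N); the function names, the feature ordering, and the idea of concatenating the six features of each of the four channels into one 24-value vector are illustrative assumptions rather than details confirmed by the study.

```python
import math

# Assumed ordering of features inside the vector (illustrative only).
FEATURE_ORDER = ("SSI", "AAC", "MAV", "IEMG", "WL", "RMS")


def extract_features(window):
    """Compute six time-domain features for one window of one channel."""
    n = len(window)
    if n < 2:
        raise ValueError("window must contain at least two samples")
    ssi = sum(x * x for x in window)                            # total energy
    iemg = sum(abs(x) for x in window)                          # accumulated absolute amplitude
    wl = sum(abs(b - a) for a, b in zip(window, window[1:]))    # cumulative waveform length
    return {
        "SSI": ssi,
        "AAC": wl / n,             # average change between consecutive samples
        "MAV": iemg / n,           # mean absolute value
        "IEMG": iemg,
        "WL": wl,
        "RMS": math.sqrt(ssi / n),
    }


def feature_vector(channel_windows):
    """Concatenate the features of time-aligned windows, one window per channel."""
    vector = []
    for window in channel_windows:
        feats = extract_features(window)
        vector.extend(feats[name] for name in FEATURE_ORDER)
    return vector


features = extract_features([1.0, -2.0, 3.0, -4.0])   # toy samples, not EMG data
vector = feature_vector([[1.0, -2.0, 3.0, -4.0]] * 4)  # four channels
print(features, len(vector))
```

Because MAV and AAC are IEMG and WL divided by the window length, the pairs carry the same information when all windows have equal length; they are kept separate here only to mirror the list above.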

These features, in addition to expressing the statistical aspects of the signal over time, also reflect its amplitude, frequency, and complexity characteristics. In previous scientific studies, the classification results based on these parameters showed an accuracy of up to 99% [18]. However, these high results are mainly due to the small number of movements (only three gestures) and the limited number of subjects (20).

3.3. Classification

Instead of relying solely on traditional machine learning algorithms such as RF, more advanced architectures like CNNs and Transformers offer the potential to automatically and more deeply extract meaningful features from data. These deep learning (DL) models are especially advantageous when working with large-scale and high-quality datasets. However, several technical and practical limitations prevent their full and effective application in the context of our study:

CNNs and Transformers typically require large volumes of data to perform effectively. These models consist of millions of parameters, and when trained on small or moderately sized datasets, they are prone to overfitting and poor generalization. Our experiments were conducted using a low-channel (four-channel) sEMG system with a relatively limited dataset. As a result, the direct application of DL models is not well suited to our data constraints and is unlikely to yield optimal results [19].

CNNs are inherently designed to process two- or three-dimensional spatial data, such as images. In contrast, sEMG signals are one-dimensional, time-dependent bioelectrical signals, making them less compatible with CNNs in their raw form. Although transformations such as the Short-Time Fourier Transform (STFT) or Wavelet Transform can convert these signals into spectrotemporal representations suitable for CNN input, doing so introduces additional preprocessing steps and increases computational complexity. Moreover, research on the CNN-based classification of fruit-related hand gestures using sEMG remains limited, and the true effectiveness of CNNs in this area has not yet been fully explored [20].

Low-channel sEMG systems—such as those with only four electrodes—capture significantly less spatial and muscle activity information compared to high-density setups. Since DL models generally rely on rich and diverse feature sets, applying them to low-channel data often results in overparameterization, leading to suboptimal training outcomes.

One of the key challenges of using CNNs, and especially Transformer models, lies in their high computational requirements. Transformer models, in particular, are resource-intensive due to their multi-layer self-attention mechanisms. Our goal is to develop a real-time gesture recognition system that operates with minimal latency and computational overhead. Therefore, we opted for five conventional classification algorithms (RF, k-NN, LR, SVM, and NN), which are known for their efficiency, robustness, and ability to handle features with various structures [21,22,23,24,25].

During the training process, 80% of the available dataset was separated for training and the remaining 20% for testing purposes. This division allowed us to assess the generalization ability of the model and identify cases of overfitting.

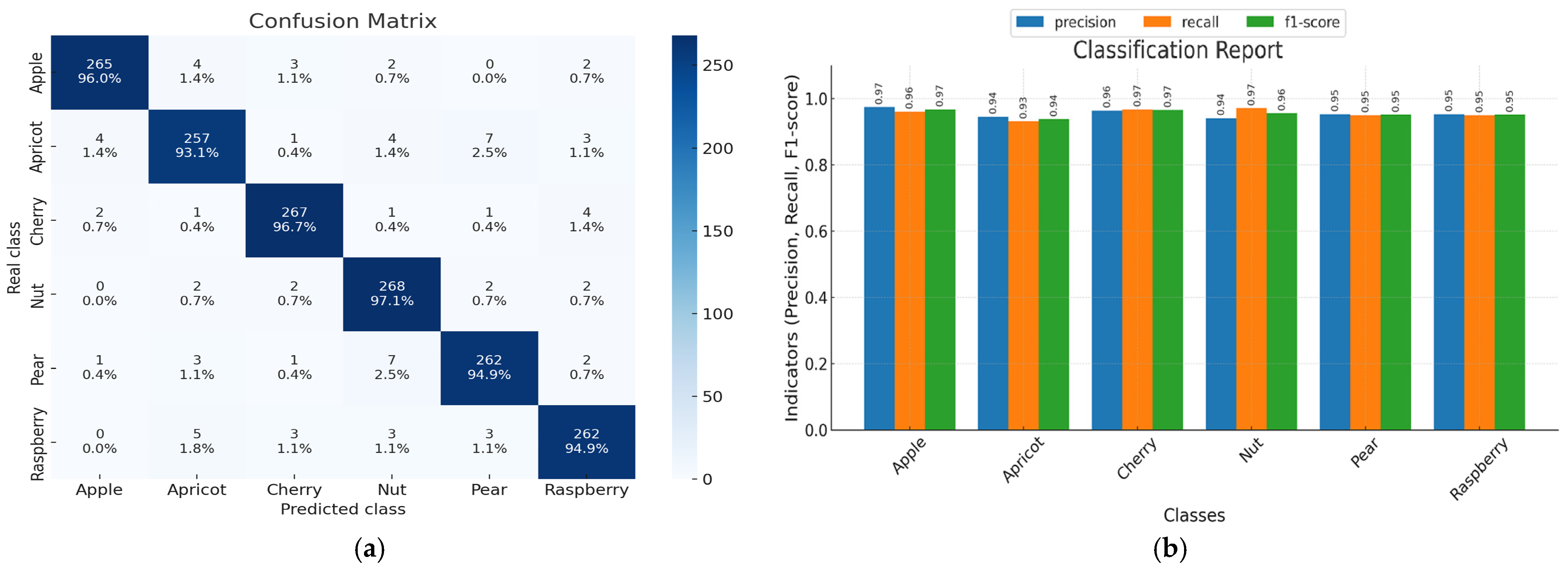

The confusion matrix and the classification report are tools for evaluating classification models [26]. They provide a visual representation of how a classification algorithm performs. The confusion matrix and classification report of the RF model used in the study are shown in Figure 7.

Evaluation of the classification model across all gesture classes showed high prediction accuracy. The Nut (97.1%) and Cherry (96.7%) classes achieved the highest accuracies, as the gestures representing them were the most clearly distinguished from the others.

The Apricot class, in contrast, showed the most misclassification, with an accuracy of 93.1%. The confusion matrix shows that some Apricot samples were incorrectly classified as Pear (7.2%) and Apple (1.4%), since these gestures share similar EMG signal characteristics.

This is further supported by the Precision, Recall, and F1-score metrics. For example, the F1-score for the Apricot class was approximately 0.93, suggesting slightly lower reliability for that gesture compared to the others. A joint reading of the confusion matrix and classification report can therefore be used to examine inter-class misclassification patterns and further refine model performance.
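To make the link between the confusion matrix and the per-class report concrete, the following sketch derives precision, recall (sensitivity), specificity, and F1-score for each class in a one-vs-rest manner. The function name and the three-class toy matrix are hypothetical and do not reproduce the values in Figure 7.

```python
def per_class_metrics(confusion):
    """Per-class metrics from a square confusion matrix.

    confusion[i][j] is the number of samples of true class i predicted as class j.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    metrics = []
    for k in range(n_classes):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                                # class k predicted as something else
        fp = sum(confusion[i][k] for i in range(n_classes)) - tp   # other classes predicted as k
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        specificity = tn / (tn + fp) if tn + fp else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics.append({"precision": precision, "recall": recall,
                        "specificity": specificity, "f1": f1})
    return metrics


# Toy three-class matrix (rows: true class, columns: predicted class).
toy_confusion = [
    [9, 1, 0],
    [2, 8, 0],
    [0, 0, 10],
]
report = per_class_metrics(toy_confusion)
for class_index, row in enumerate(report):
    print(class_index, {name: round(value, 3) for name, value in row.items()})
```

Reading a row of the matrix gives the recall of that class, while reading a column gives its precision, which is why a class that absorbs errors from a similar gesture loses precision even when its own samples are recognized well.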

A comprehensive statistical analysis was conducted to evaluate the reliability, accuracy, and generalization of the developed classification algorithms. In addition to the overall classification accuracy, other core diagnostic metrics (sensitivity, specificity, and F1-score, along with 95% Confidence Intervals (CIs)) were calculated for each model using the held-out test data. Sensitivity is the proportion of actual positive cases that the model correctly identifies, and specificity is the proportion of actual negative cases that it correctly rejects. The F1-score, as the harmonic mean of precision and recall, is a relatively stable metric in cases of imbalanced class distributions. To test the stability of these metrics, we derived confidence intervals by performing 1000-iteration non-parametric bootstrapping. The results showed significant differences in some key metrics, especially sensitivity and F1-score, between SVM and k-NN (p < 0.05), highlighting that certain classifiers are better than others at capturing discriminative patterns in windowed EMG signal data.
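The bootstrapping procedure mentioned above can be sketched as a percentile bootstrap over the held-out predictions. The 1000 iterations and the 95% level follow the text; the percentile method, the fixed seed, the use of accuracy as the example metric, and all names are assumptions made for illustration.

```python
import random


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def bootstrap_ci(y_true, y_pred, metric=accuracy, n_iterations=1000,
                 alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for a metric on held-out data."""
    rng = random.Random(seed)
    n = len(y_true)
    scores = []
    for _ in range(n_iterations):
        # Resample test items with replacement, keeping label/prediction pairs together.
        idx = [rng.randrange(n) for _ in range(n)]
        scores.append(metric([y_true[i] for i in idx], [y_pred[i] for i in idx]))
    scores.sort()
    lower = scores[int((alpha / 2) * n_iterations)]
    upper = scores[min(n_iterations - 1, int((1 - alpha / 2) * n_iterations))]
    return lower, upper


# Toy labels: 120 test items, 12 of which are misclassified.
y_true = [0, 1, 2] * 40
y_pred = list(y_true)
for i in range(0, 120, 10):
    y_pred[i] = (y_pred[i] + 1) % 3

point = accuracy(y_true, y_pred)
low, high = bootstrap_ci(y_true, y_pred)
print(point, low, high)
```

Any other metric with the same `(y_true, y_pred)` signature, such as a macro-averaged F1-score, could be passed as `metric` to obtain its interval in the same way.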

3.4. Results

Table 4 presents a statistical comparison of the five classifiers (SVM, RF, k-NN, NN, and LR) used for EMG-based gesture recognition. Three main performance metrics (sensitivity, specificity, and F1-score) were calculated for each model with their corresponding 95% Confidence Intervals (CIs) to indicate the robustness of the results across validation folds. RF achieved the best performance on all three metrics, with the highest F1-score (0.92 ± 0.012), which indicates that it captures complex patterns in EMG signals and generalizes well. SVM also delivered high sensitivity (0.88 ± 0.020) and specificity (0.90 ± 0.018), which likely reflects its robustness to extreme feature values and makes it an appropriate baseline model under these test conditions. In contrast, LR performed worse than SVM across all metrics, particularly F1-score (0.85 ± 0.017), suggesting that it is limited in modeling non-linear dependencies within EMG data.

The classification accuracy was calculated separately for each gesture class, and based on these results, a generalized assessment was made based on their statistical weights.

The experimental tests showed that, based on the selected feature set, all classifiers (RF, LR, SVM, NN, and kNN) demonstrated high performance in classifying gestures. However, the accuracy level for each gesture movement was different, and some differences were observed between these algorithms (Figure 8).

In addition to the standard random data split, cross-subject and cross-session validation protocols were employed to evaluate the robustness of the proposed model under more realistic implementation scenarios. Specifically, Leave-One-Subject-Out (LOSO) validation was conducted, where each participant was used as the test set in turn, while the remaining participants formed the training set. Similarly, cross-session validation was performed by training the model on data collected during weeks 1 and 2, and testing it on data from week 3.
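The two validation protocols described above can be expressed as index splits over the recorded samples. The sketch below assumes each sample carries a subject identifier and a recording week; the function names and the toy metadata are hypothetical, and the classifier training itself is omitted.

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject in turn."""
    for held_out in sorted(set(subject_ids)):
        train_idx = [i for i, s in enumerate(subject_ids) if s != held_out]
        test_idx = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train_idx, test_idx


def cross_session_split(week_ids, train_weeks=(1, 2), test_weeks=(3,)):
    """Train on the earlier recording weeks and test on the last one."""
    train_idx = [i for i, w in enumerate(week_ids) if w in train_weeks]
    test_idx = [i for i, w in enumerate(week_ids) if w in test_weeks]
    return train_idx, test_idx


# Toy metadata for six samples (not the real dataset layout).
subject_ids = [1, 1, 2, 2, 3, 3]
week_ids = [1, 3, 1, 2, 2, 3]

folds = list(leave_one_subject_out(subject_ids))
train_idx, test_idx = cross_session_split(week_ids)
print(folds[0])
print(train_idx, test_idx)
```

In both protocols no sample from the held-out subject or week appears in the training indices, which is the property that makes these estimates stricter than a random split.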

In both validation settings, the RF classifier consistently demonstrated high performance: the average accuracy reached 94% in cross-subject validation and 92% in cross-session validation (Table 5). These results highlight the model’s strong generalizability across different users and varying recording sessions.

4. Discussion

The number of device channels is important when recording EMG signals. Most studies used an eight-channel Myo Armband. However, the large number of channels in this device makes processing the incoming signals computationally demanding, which increases latency in real-time operation.

Current versions of sEMG devices have some limitations in being fully functional in real-world conditions, particularly due to wearability, inconvenience in electrode placement, and external factors affecting signal stability. However, sEMG technologies have developed significantly in recent years: for example, wireless, compact, and skin-integrated devices, as well as Myo Armband devices in the form of a bracelet, may be used as HMIs in the future without causing any discomfort to the user. In this work, we did not focus on ergonomic requirements, but rather on the initial stages of establishing a DS and the results so far.

This study used a four-channel Biosignalsplux device to create the dataset and achieved high classification performance. Previous reports on related approaches usually involved fewer subjects, were restricted to non-differentiated populations (healthy individuals), covered few recording sessions, or did not include continuous real-time assessment. By contrast, our system addresses these limitations by using realistic data collected from the target population together with practical hardware and efficient signal processing suited to real-world deployment.

The study was based on a low-channel sEMG fruit gesture recognition system. The protocol was deliberately restricted to a limited set of six fruit-related gestures (a semantic constraint, and not because these are typical signs in sign language) for an initial evaluation with minimum inter-class variability in terms of semantic or kinematic gesture differences. Running the experiments in this controlled environment allowed us to objectively assess whether the proposed methods can be applied at all and how well they work under certain conditions, while obtaining a set of experimental results that are only immediately generalizable to a larger dataset.

We are well aware that sign languages are naturally occurring, rich, and expressive systems with a large lexicon of signs in daily use, representing thousands of words and abstract concepts (deaf communities do not actually exploit many of the word classes defined by spoken language), which is significantly more than the scope of our current dataset. Nevertheless, the proposed approach was designed with scalability in mind. The feature extraction pipeline and classification model can be reused as the vocabulary grows, but they are still limited by the six (or possibly eight) classes that the existing low-channel hardware can handle. In this kind of system, it may be helpful to first identify broad gesture categories, such as hand shape or movement type, before moving on to the more detailed recognition of specific gestures.

Additionally, using techniques like transfer learning and customizing the system for individual users could make it easier to expand the gesture vocabulary without needing to retrain the model from scratch each time. Future work will also involve collecting data from a larger and more diverse group of participants. This will help improve the model’s ability to generalize across different people, regional sign language variations, and signing styles—making it more useful and reliable in real-world communication.

As the results of the study show, the highest accuracy was observed for the Cherry class, which reached 97% with the RF algorithm, and 93% and 96% with the NN and kNN algorithms, respectively.

The Nut class also had high accuracy, with the RF and kNN algorithms classifying it with 96.8% and 96% accuracy, respectively. In contrast, the lowest classification accuracy was observed for the Apricot class. The LR algorithm achieved 82% accuracy for this gesture, while the other models also achieved relatively low results: 85% (SVM), 87% (NN), 92% (kNN), and 93% (RF). The reason for the low classification results for the Apricot class is that, as shown in the confusion matrix in Figure 6, the EMG signal values recorded when this class was executed have some similarities to the EMG signal values recorded when the Nut, Pear, and Raspberry classes were executed.

In addition, the level of muscle activity during the Apricot gesture is much lower than for the other gestures. This directly affects the SNR of the EMG signal, and the analysis showed that the SNR value for the Apricot class was 5 dB. This low SNR contributed to the LR model reaching only 82% accuracy for this class. However, the RF model, which was found to be the most effective in our study, achieved an accuracy of 93% for the Apricot gesture. This result is considered sufficient for practical use, given the four channels and the simplicity of the system.
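To make the link between muscle activity and SNR concrete, the following minimal sketch computes an SNR in decibels. It assumes a common definition, the ratio of mean signal power during the gesture to mean signal power at rest, which may differ from the exact procedure used in this study. The function names and the sample values are hypothetical.

```python
import math


def mean_power(samples):
    """Mean squared amplitude of a sequence of samples."""
    return sum(x * x for x in samples) / len(samples)


def snr_db(active, rest):
    """SNR in dB, assuming SNR = 10 * log10(P_active / P_rest)."""
    return 10.0 * math.log10(mean_power(active) / mean_power(rest))


# Synthetic values for illustration only (not measured EMG data).
rest = [0.1, -0.1, 0.1, -0.1]          # baseline noise segment
strong = [1.0, -1.0, 1.0, -1.0]        # strong contraction
weak = [0.2, -0.2, 0.2, -0.2]          # weak contraction

print(round(snr_db(strong, rest), 2), round(snr_db(weak, rest), 2))
```

Under this definition, a gesture with weaker muscle activation over the same noise floor yields a lower SNR, which is consistent with the explanation given for the Apricot class.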

Overall, these results indicate that the complexity of the gestures and the differences in their electromyographic responses have a significant impact on classifier performance. The RF algorithm is an effective solution for analyzing muscle activity in EMG signals, as it provides high accuracy and works stably with noisy data. Since muscle activity in EMG signals is complex and varied, the ensemble-based approach of the RF algorithm is well suited to capturing this complexity. In addition, the RF model is able to identify the features that are most important for each class in the DS, which helps us to understand the relationship between classes more deeply. As a result, the RF classifier showed superior results compared to the other models for all gesture types.

The kNN model is characterized by its simplicity, but its results are not stable when the EMG signals of different classes are similar or contain noisy values. In our study, the classification accuracy of the kNN model was relatively low for the Apricot, Nut, and Pear classes because their EMG signal features lie close together.

The LR model relies mainly on linear relationships between the signal features. Although it is effective when classes of EMG signals can be separated by linear boundaries, on our study DS it performed worse than more powerful classifiers such as RF because of the complexity of the movements and the uncertainty of muscle activity.

The SVM model can work well on small datasets, but the complex and high-dimensional features of the EMG signal, as well as the similarities between different classes, limited the effectiveness of the SVM model in our study DS.

The NN model was also tested in our study and showed satisfactory results. However, due to the specific characteristics of electromyographic signals and the complex and uncertain distribution of muscle activity across gestures, the NN model gave a lower result than the RF model. Moreover, NN models typically demonstrate their full advantages on larger datasets with more complex structures. In general, this distribution of classification results was due to the characteristics of the EMG signal, the complex relationship between classes, and the ability of each model to work with this type of data.

5. Study Limitations and Future Work

Although the proposed system achieved promising results in gesture classification using a low-channel EMG device, the following limitations and future work should be noted.

In this study, real-world noise factors have not yet been comprehensively tested. Practical factors such as motion artifacts, electrode displacement, long-term signal stability, and gradual signal drift might have a significant influence on classification performance. Preprocessing techniques, including band-pass filtering and segmentation, were applied in our analysis, but participant characteristics such as age, sex, and cognitive function were not examined as separate factors in this study.

Although we recognize that work in this area is incremental, our study brings together various practical aspects (such as low-channel acquisition, real-time viability, and conspecific data) into a single reproducible platform. In future work, we intend to build on this foundation both in scope, by enlarging the vocabulary (e.g., adding words or phrases), and in flexibility, by integrating adaptable modeling (e.g., support for other MT approaches).

Overall, this research is an important step in the field of energy-efficient and compact gesture recognition systems based on EMG signals, and is a promising platform for creating highly effective communication tools for people with disabilities.

6. Conclusions

This article presents the recognition of six fruit names in SL using EMG signals. A total of 46 hearing-impaired participants took part in the DS collection process. An analysis of previous work in the field of gesture recognition was performed. The analysis revealed that the location of the electrodes, the number of participants and sessions during the DS collection process, and the number of gesture movements affect the classification accuracy. At the same time, unlike other studies, this study achieved a high recognition rate using a small number of channels (four channels).

Reducing the number of channels shortens the signal processing time, lowers the computational load, and allows for stable operation in real-time classification systems.

A signal-to-noise ratio analysis of the gesture movements performed in the study was conducted. In this analysis, the highest values were obtained for the Cherry and Nut classes, at 15 dB and 13 dB, respectively. The lowest value, 5 dB, was obtained for the Apricot gesture because of the low activity of the selected muscles during its execution. The EMG signals were also filtered to remove various types of noise and artifacts. In the next stage, the SSI, ACC, IEMG, WL, RMS, and MAV features of the EMG signal were selected, and the DS was created using these features. The RF, LR, SVM, NN, and kNN classification algorithms were used to recognize the six gesture movements, and they achieved accuracies ranging from 84% to 97%. Of these, the RF algorithm showed the best result, with an accuracy of 97%. Although these values are lower than those in other studies, the DS created here can be used in other studies or in HMI systems to detect gesture movements.
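As a rough illustration of the feature stage, the sketch below computes five of the listed time-domain features using their standard textbook definitions. ACC is left out because its definition is not restated in this section. The function names, the window contents, and the choice to concatenate the per-channel features into one vector are assumptions made for illustration, not a description of the exact pipeline used in the study.

```python
import math


def time_domain_features(window):
    """Standard time-domain EMG features for one channel window."""
    n = len(window)
    iemg = sum(abs(x) for x in window)       # integrated EMG
    ssi = sum(x * x for x in window)         # simple square integral
    wl = sum(abs(window[i + 1] - window[i])  # waveform length
             for i in range(n - 1))
    return {
        "IEMG": iemg,
        "MAV": iemg / n,                     # mean absolute value
        "SSI": ssi,
        "RMS": math.sqrt(ssi / n),           # root mean square
        "WL": wl,
    }


def feature_vector(channels):
    """Concatenate per-channel features (assumed layout: channel-major)."""
    order = ["IEMG", "MAV", "SSI", "RMS", "WL"]
    vector = []
    for window in channels:
        feats = time_domain_features(window)
        vector.extend(feats[name] for name in order)
    return vector


# Synthetic window for illustration only (not recorded EMG data).
demo = time_domain_features([1.0, -2.0, 3.0, -4.0])
four_channel = feature_vector([[1.0, -2.0, 3.0, -4.0]] * 4)
print(demo, len(four_channel))
```

With four channels and five features per channel, this layout gives a 20-element vector per window, which could then be passed to any of the classifiers discussed above.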