A Deep Learning Model for Forecasting Velocity Structures of the Loop Current System in the Gulf of Mexico

Abstract

1. Introduction

2. Method

2.1. Dataset

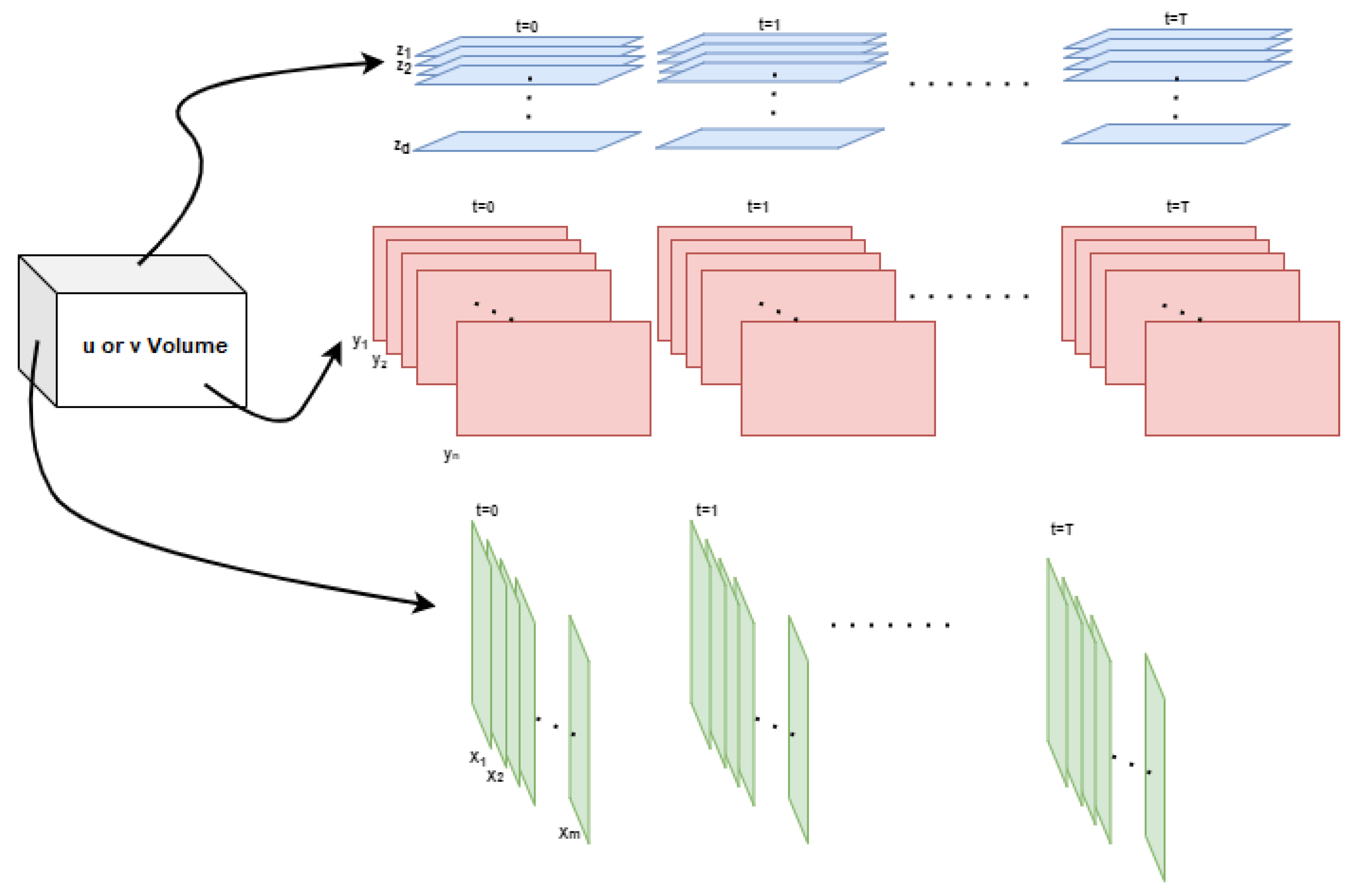

2.2. 4-Dimensional Tensor Slicing

2.3. Volume Slicing Induced Errors

- $\mu_x$ and $\sigma_x^2$ are the mean and variance of $x$, respectively.

- $\mu_y$ and $\sigma_y^2$ are the mean and the variance of $y$, respectively.

- $\sigma_{xy}$ is the covariance of $x$ and $y$.

- $c_1 = (k_1 L)^2$ and $c_2 = (k_2 L)^2$ are used to stabilize the ratio with a weak denominator.

- $L$ is the dynamic range of the gridded velocity values.

- $k_1 = 0.01$ and $k_2 = 0.03$ are the default values of the two scale factors.
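The quantities listed above are the terms of the standard structural similarity (SSIM) index of Wang, Bovik, Sheikh, and Simoncelli (2004). The following is a minimal sketch of how they combine, assuming that standard form and a single global window over two flattened velocity fields. The function name `ssim_global`, the use of population (not sample) statistics, and the single-window simplification are assumptions made for illustration and are not taken from the article, which may compute the index over local windows.

```python
from typing import Sequence


def ssim_global(x: Sequence[float], y: Sequence[float],
                dynamic_range: float,
                k1: float = 0.01, k2: float = 0.03) -> float:
    """Single-window SSIM between two equally sized, flattened fields.

    Hypothetical helper: follows the standard SSIM formula, with the
    whole field treated as one window (an illustrative simplification).
    """
    if len(x) != len(y) or len(x) == 0:
        raise ValueError("x and y must be non-empty and of equal length")
    n = len(x)

    # Means of the two fields.
    mu_x = sum(x) / n
    mu_y = sum(y) / n

    # Population variances and covariance.
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n

    # Stabilizing constants built from the dynamic range L and k1, k2.
    c1 = (k1 * dynamic_range) ** 2
    c2 = (k2 * dynamic_range) ** 2

    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


if __name__ == "__main__":
    field = [0.0, 1.0, 2.0, 3.0]
    reversed_field = [3.0, 2.0, 1.0, 0.0]
    print(ssim_global(field, field, dynamic_range=3.0))
    print(ssim_global(field, reversed_field, dynamic_range=3.0))
```

With identical inputs the numerator and denominator coincide, so the index is 1 up to floating-point rounding. Anti-correlated fields yield a negative covariance term and therefore a value well below 1.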

3. Deep Learning Prediction Model

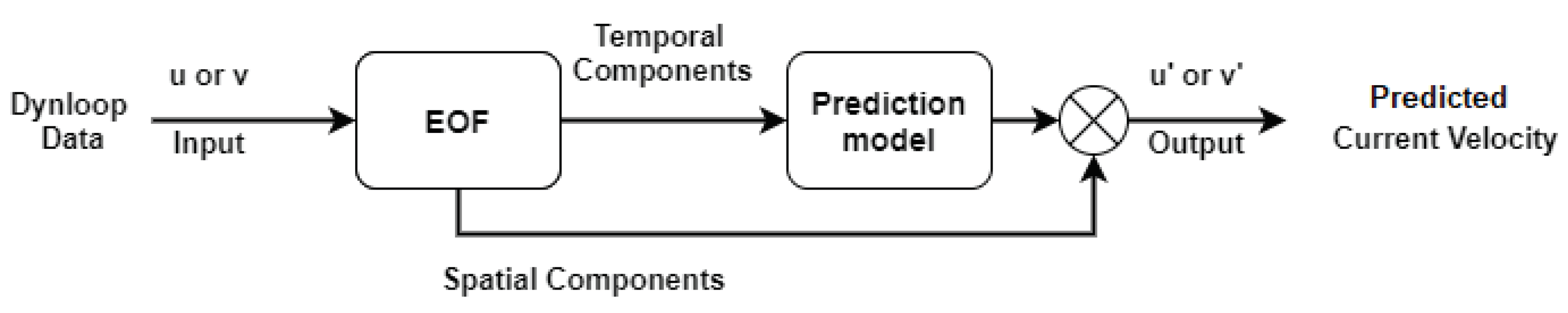

3.1. Empirical Orthogonal Functions

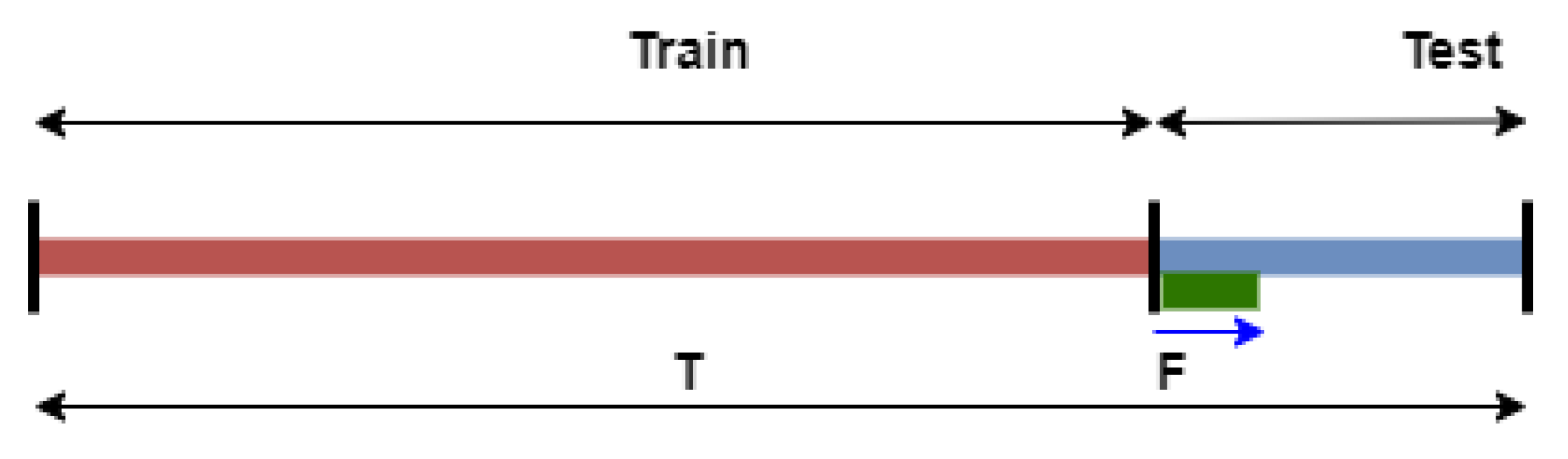

3.2. Deep Learning Model: Long Short-Term Memory Network

3.3. Prediction Procedure

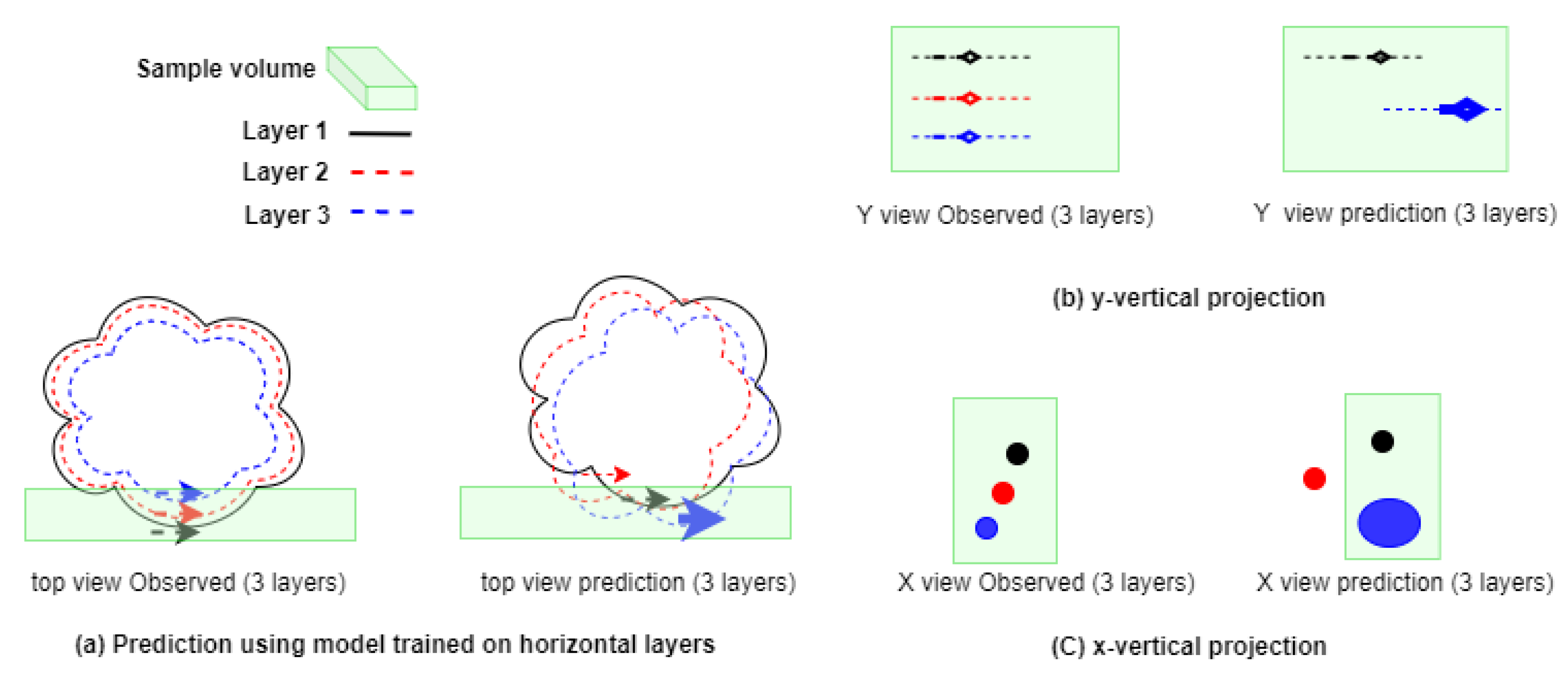

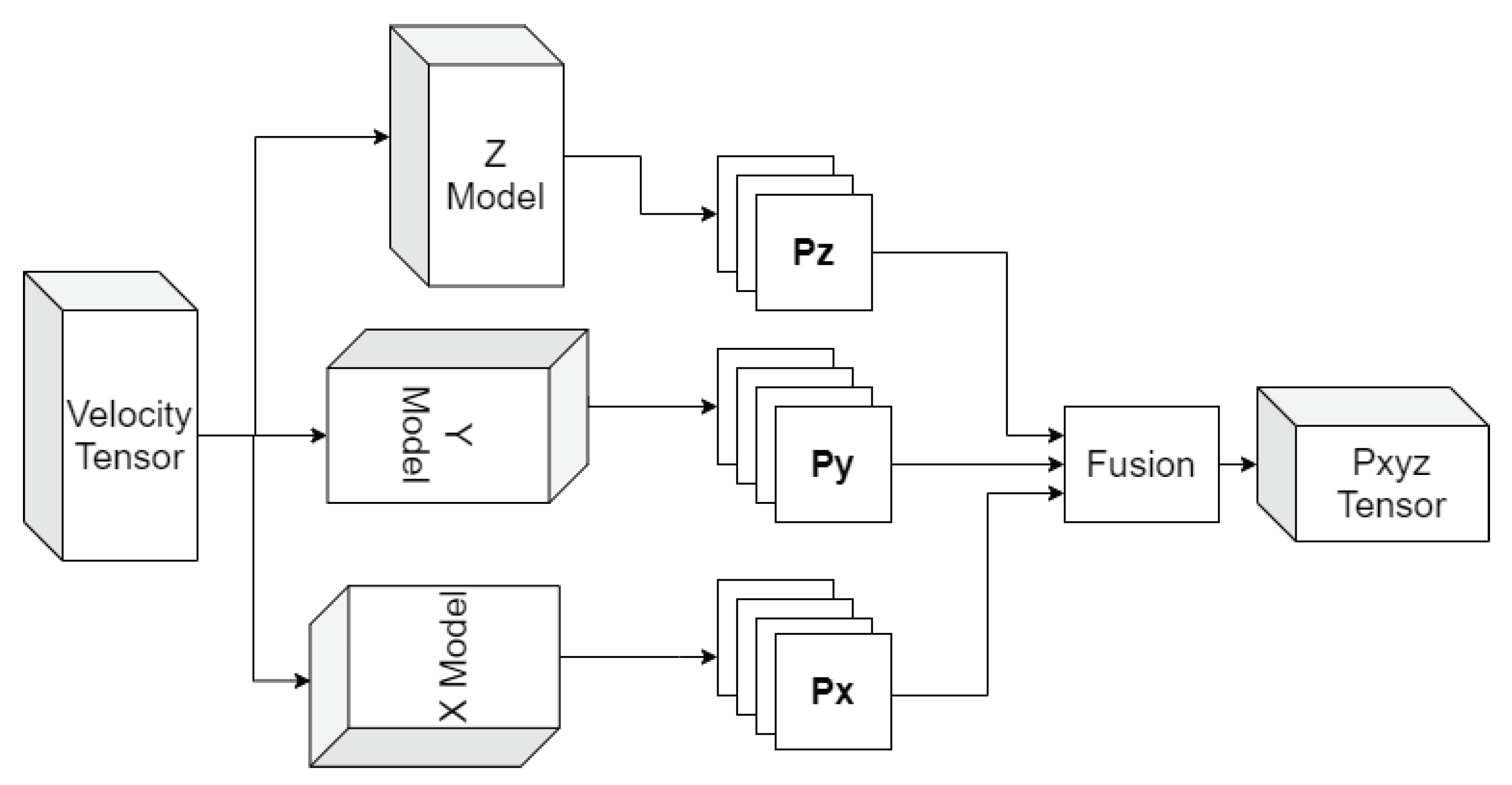

3.4. Layered Prediction Model Approach

4. Layered Prediction Experiments

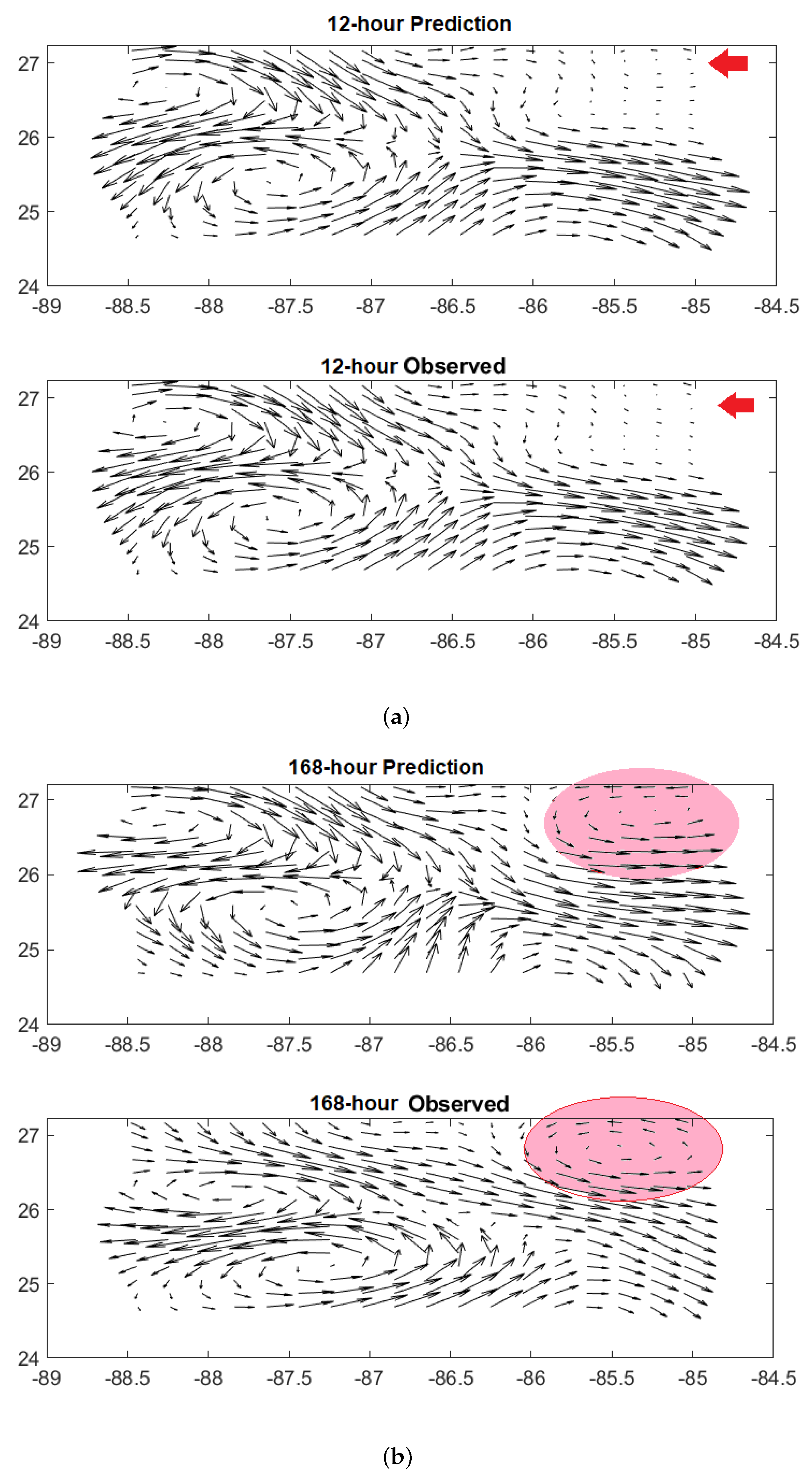

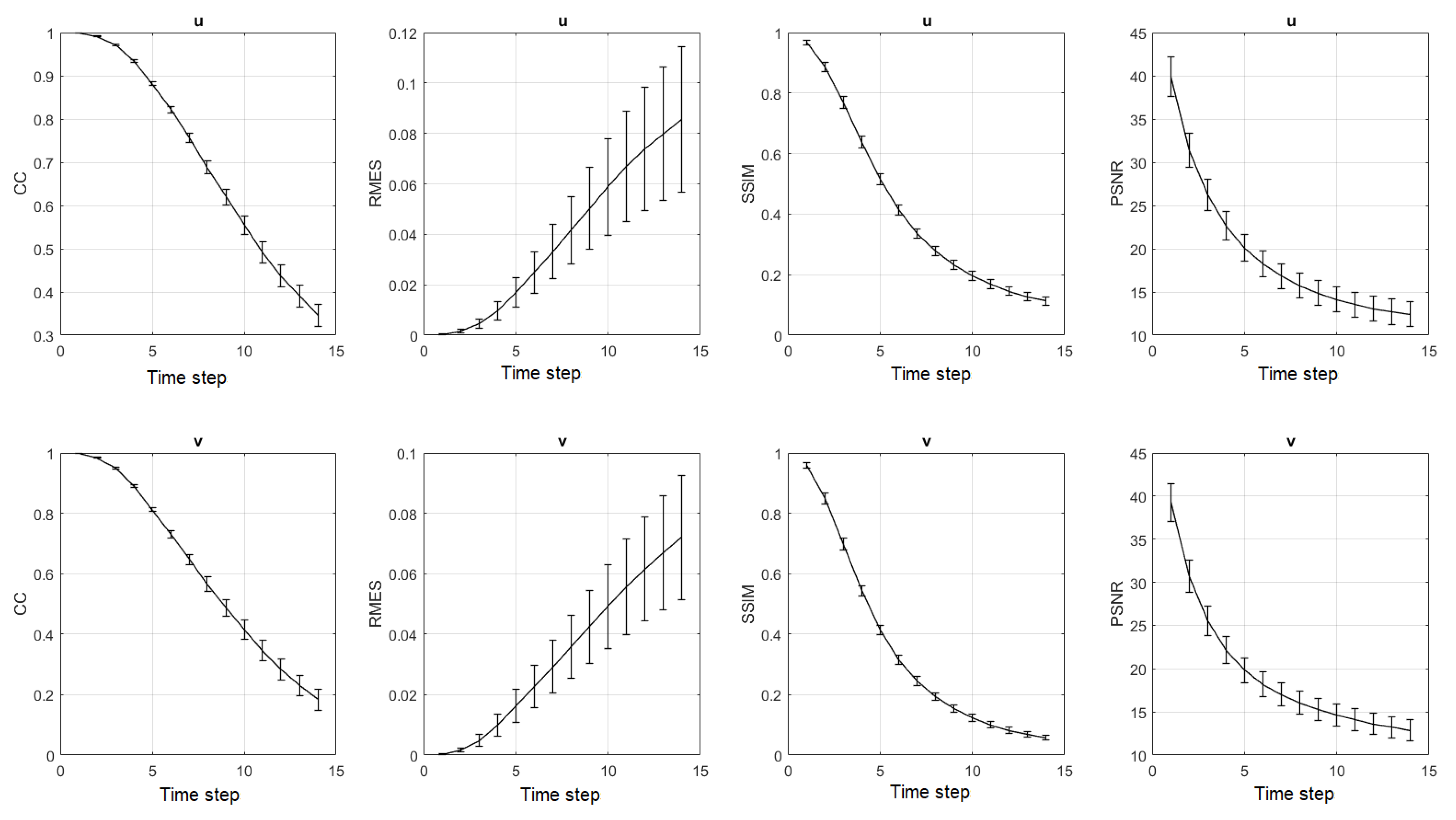

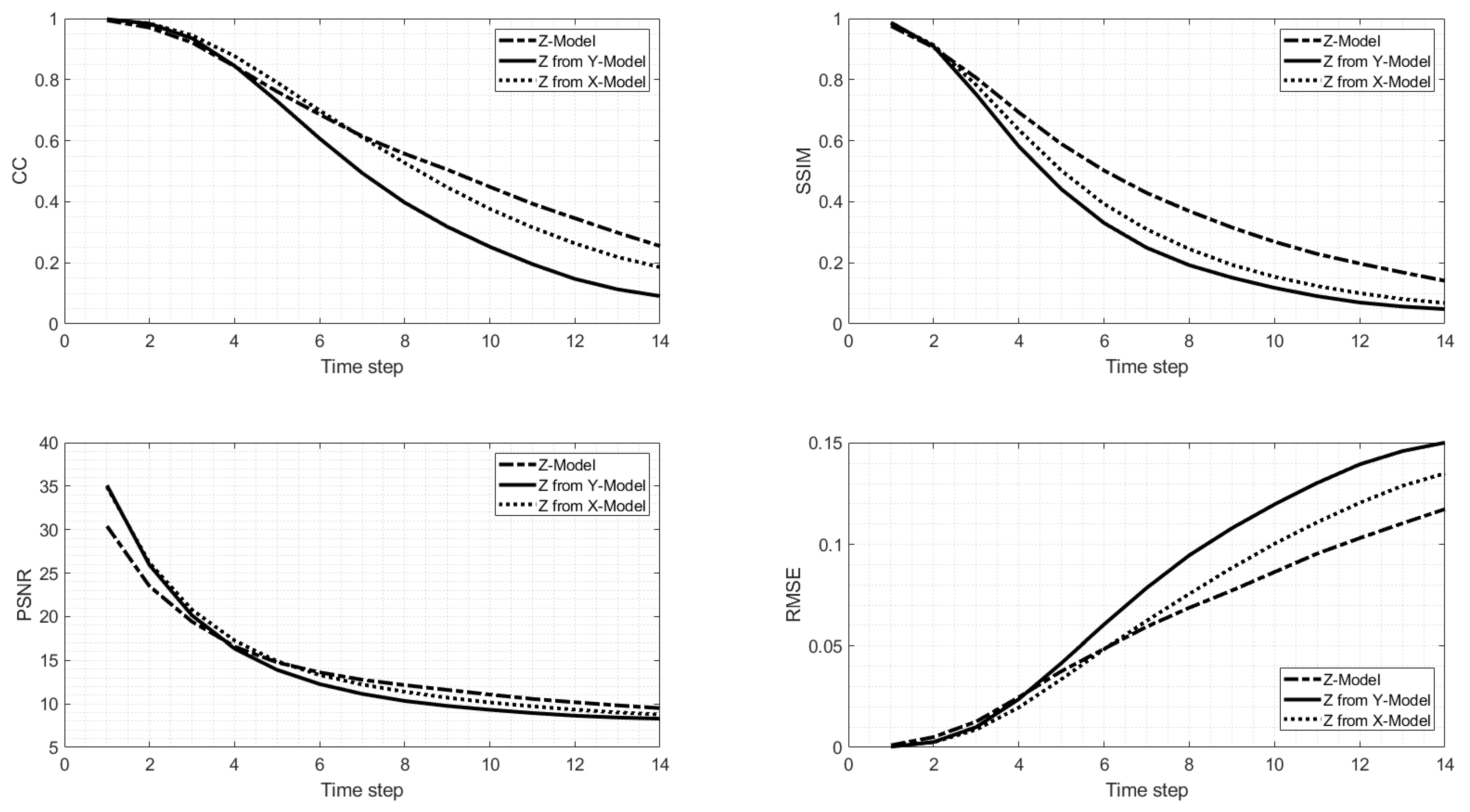

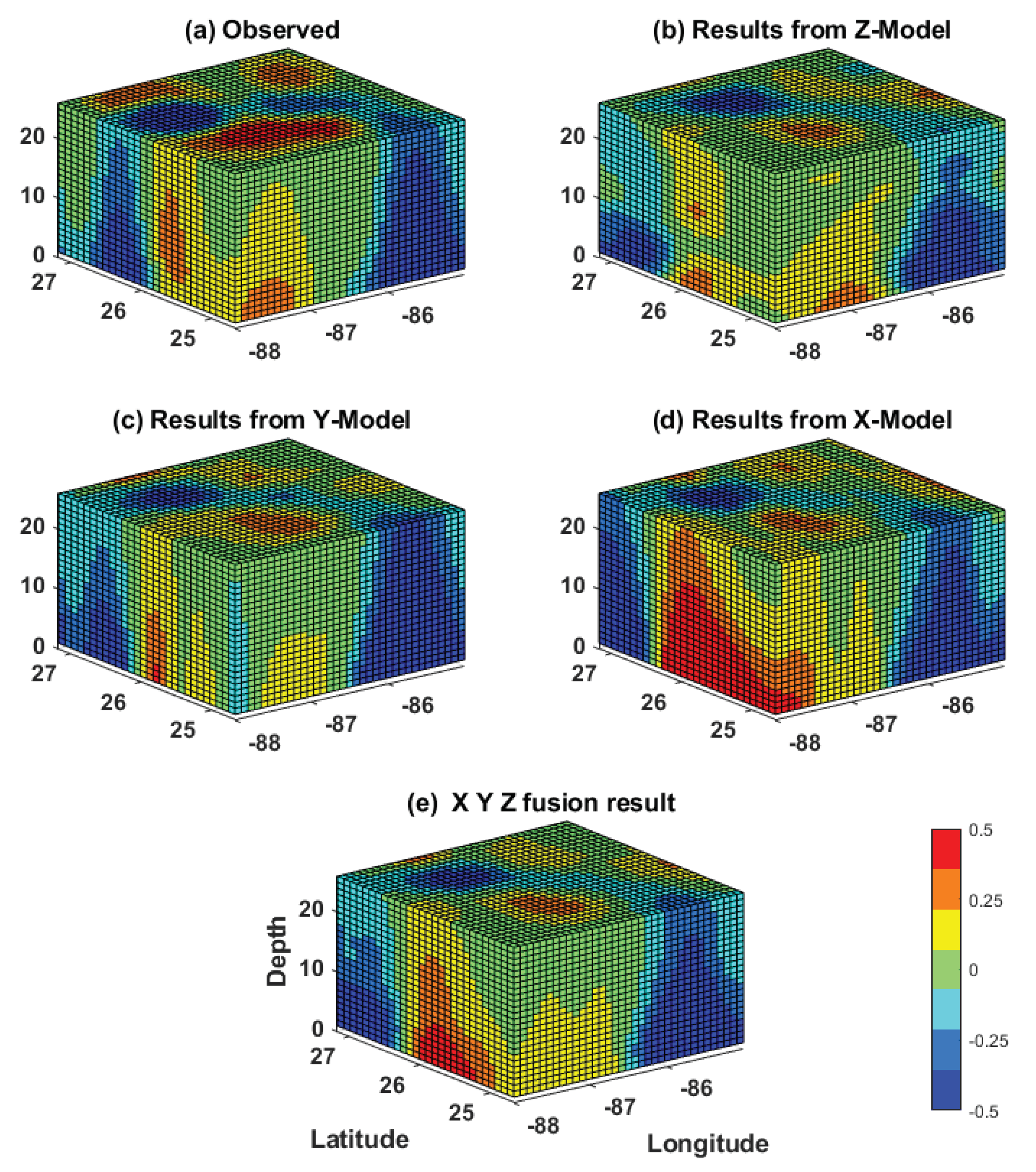

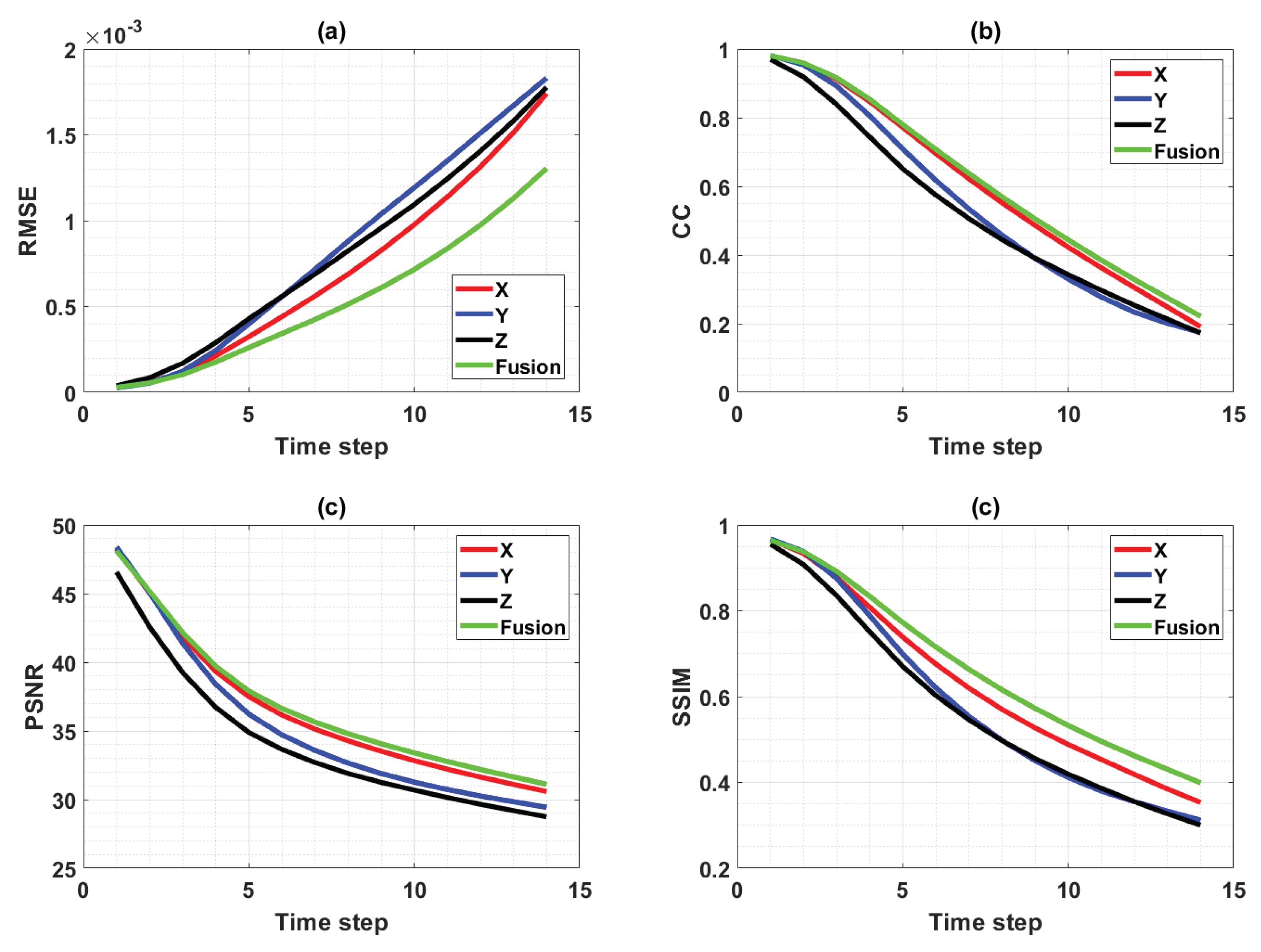

4.1. Model Z Velocity Predictions

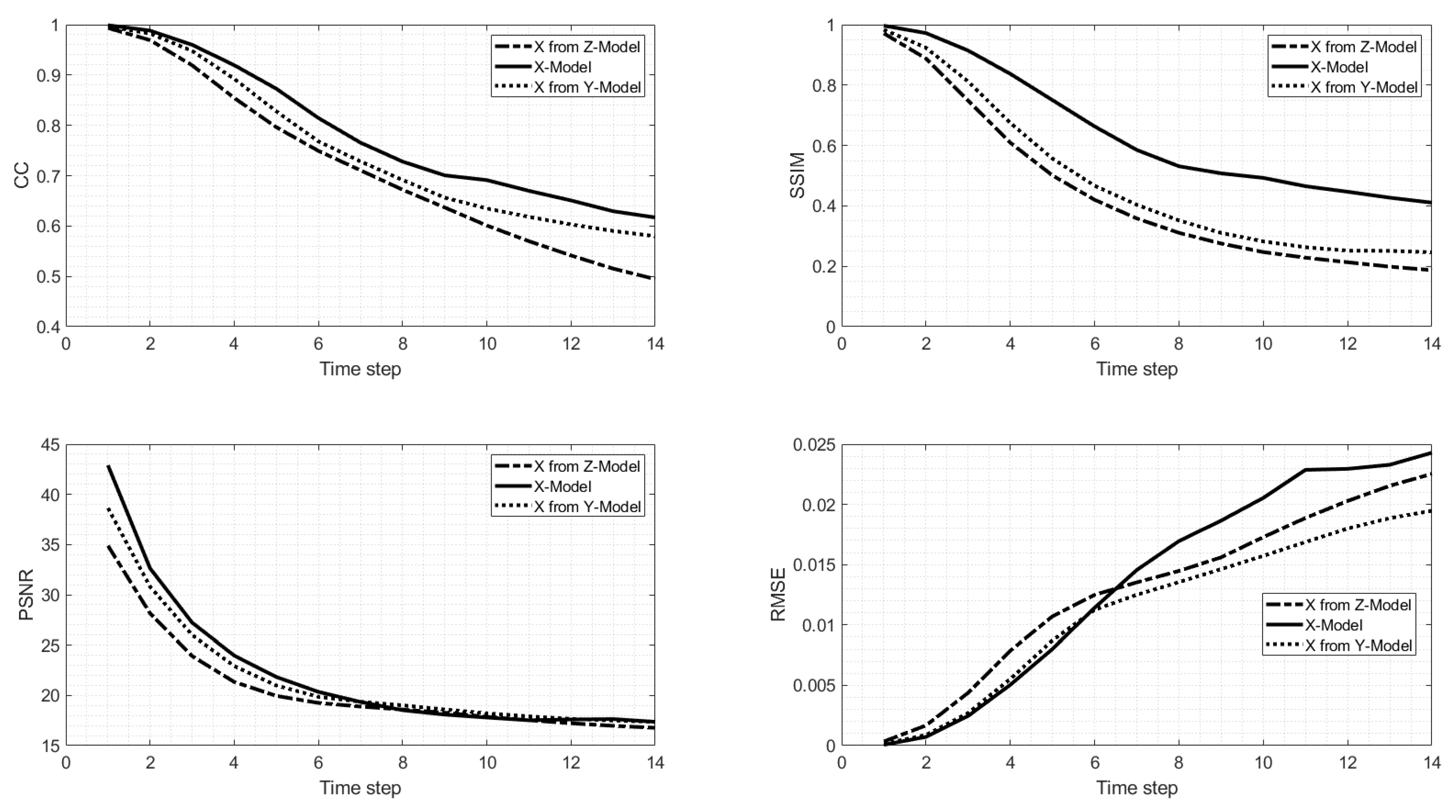

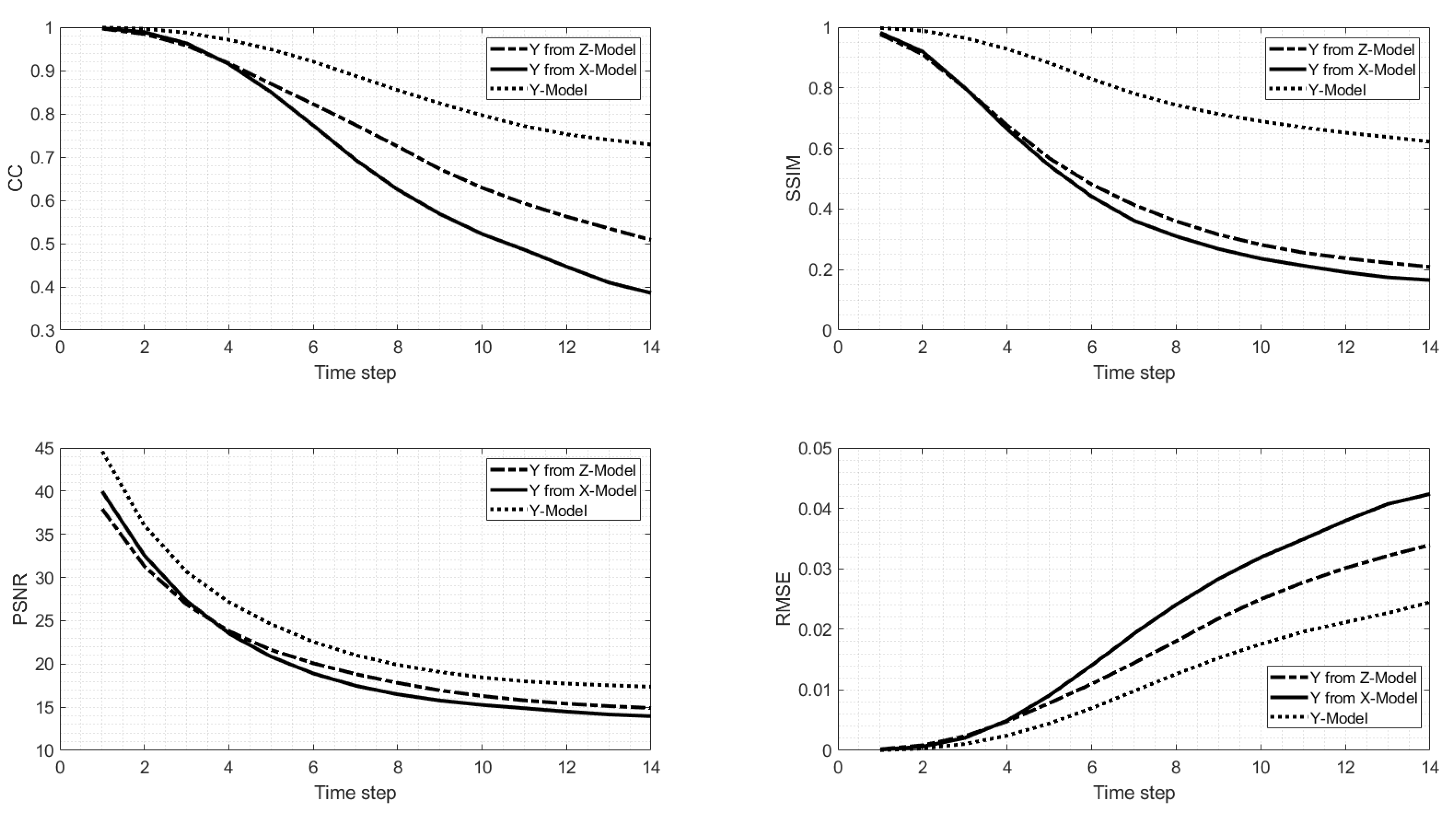

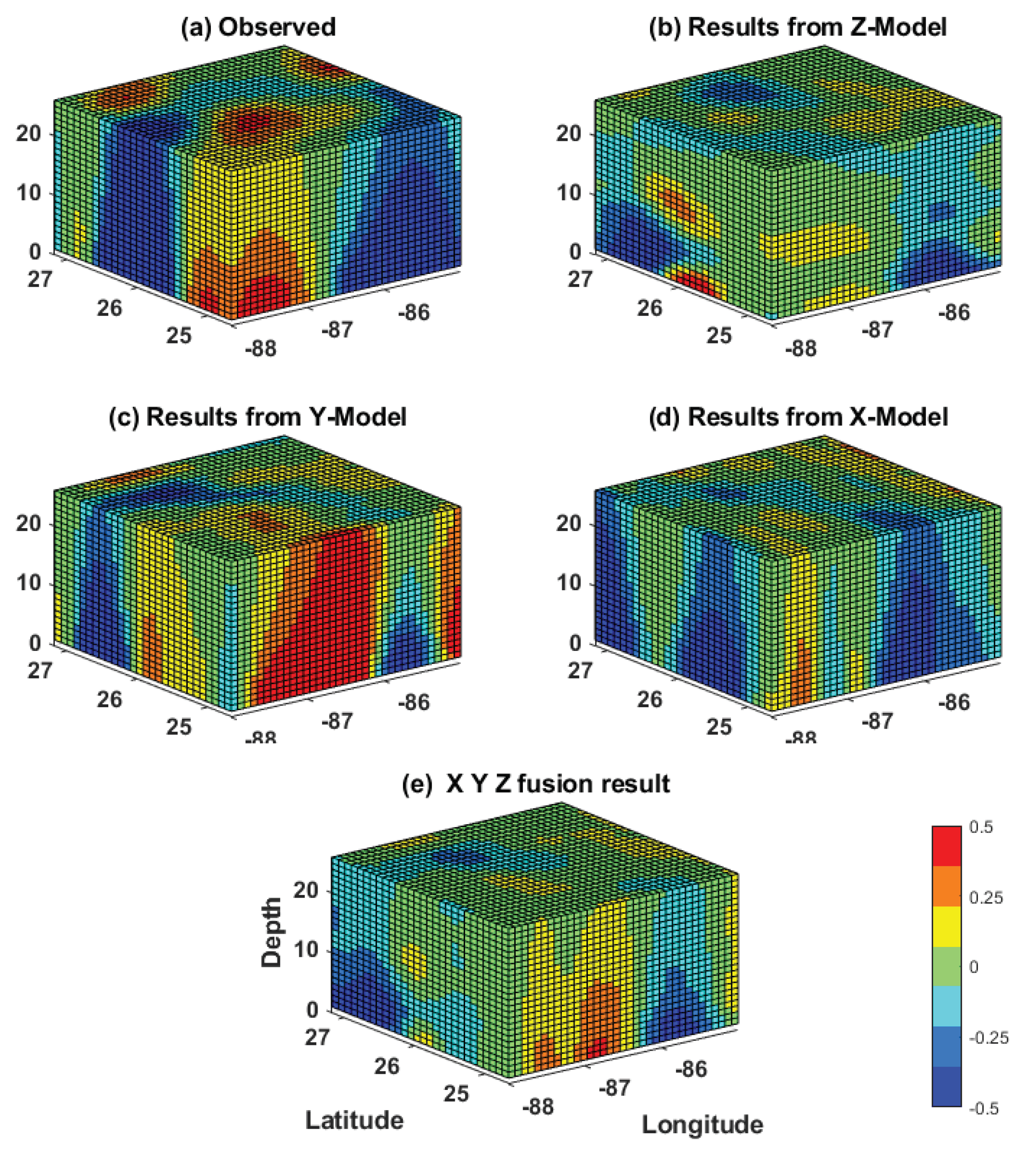

4.2. Directional Velocity Structure Prediction Dependency

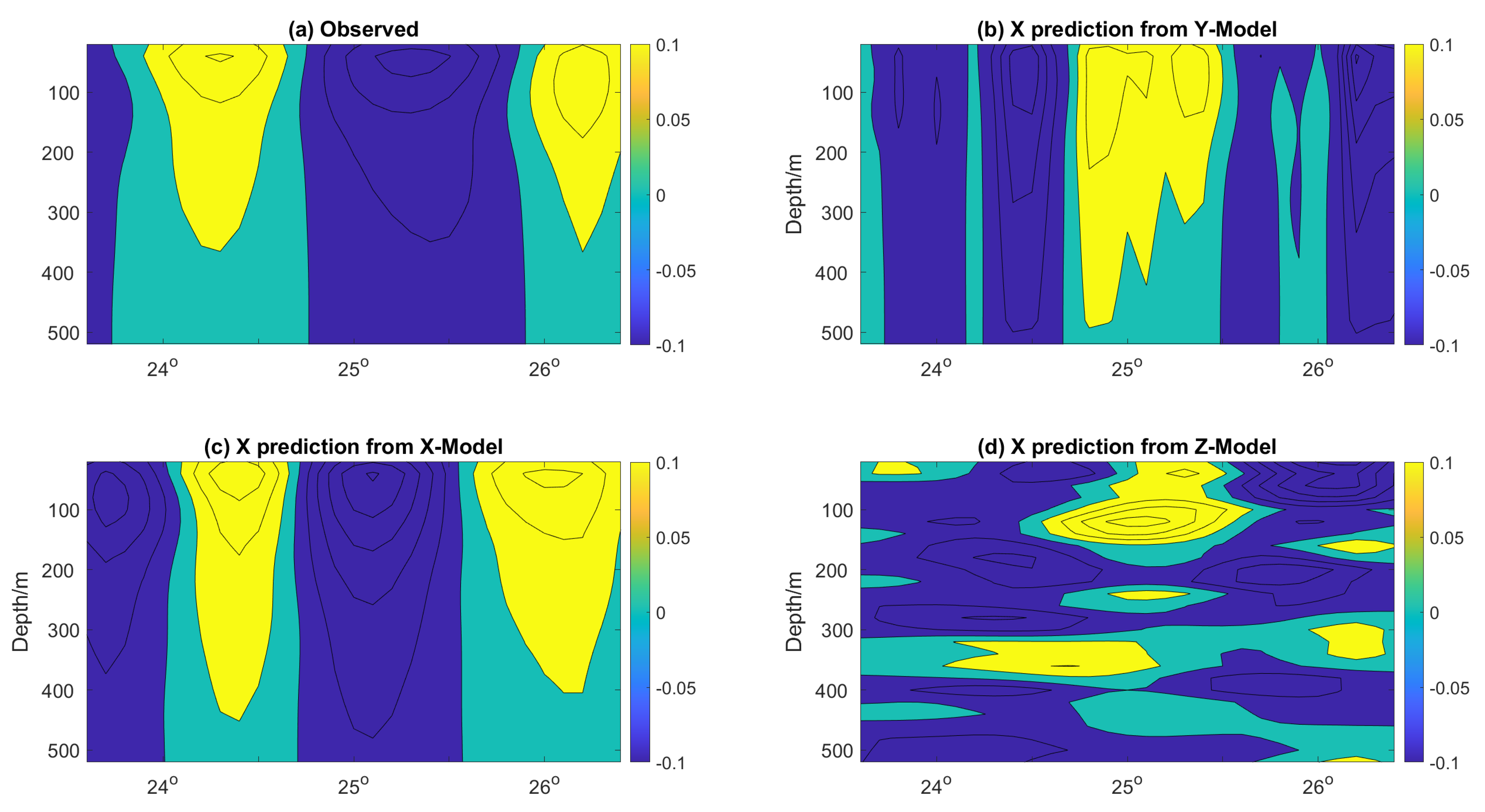

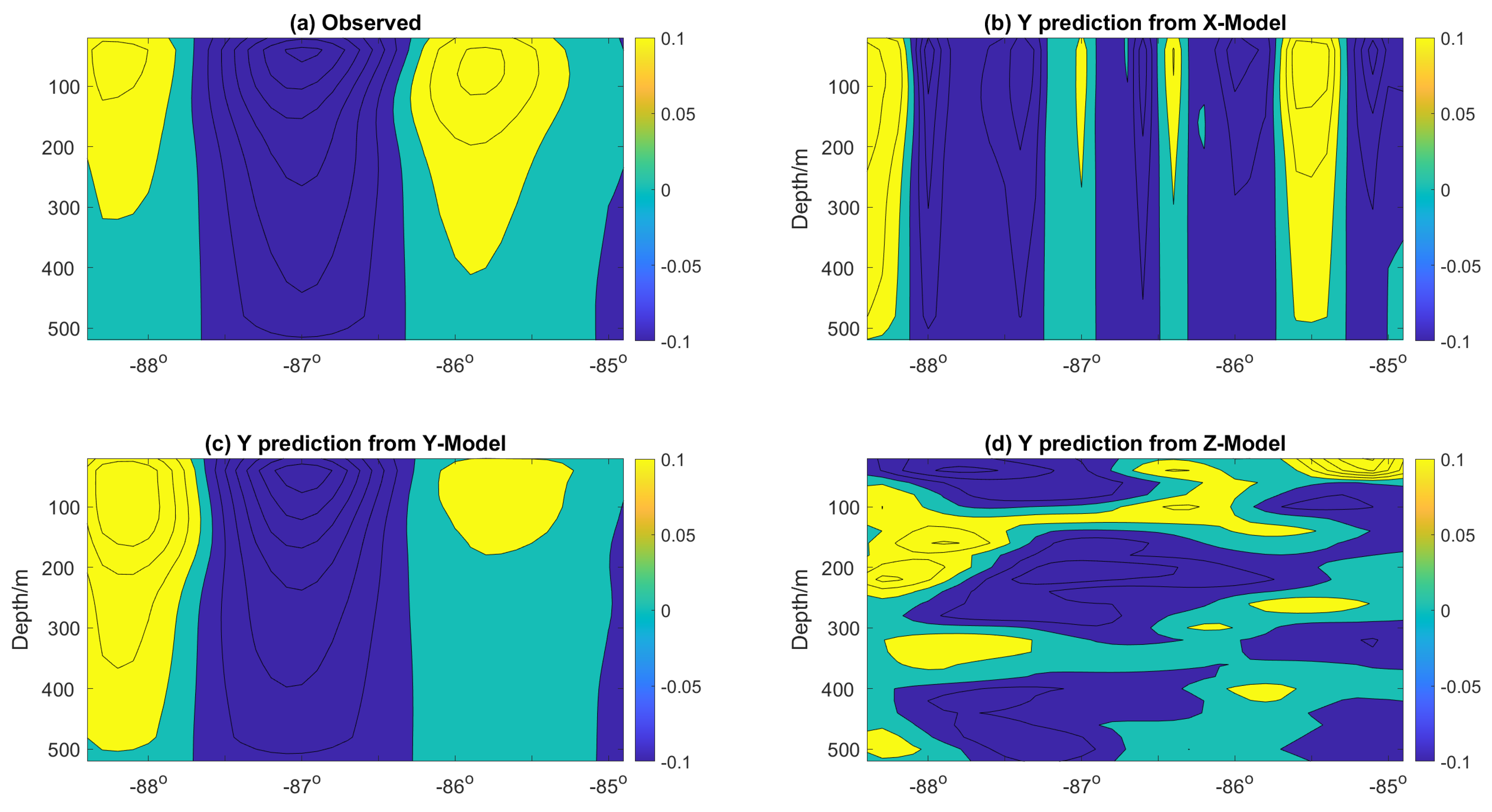

4.3. Vertical Velocity Structure Prediction

4.4. Fusion of the Models’ Predictions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Training Direction | Single Layer | All Layers |
|---|---|---|
| Z direction | 25.22 s | 649.43 s |
| Y direction | 26.32 s | 722.8 s |
| X direction | 25.16 s | 652.15 s |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Muhamed Ali, A.; Zhuang, H.; VanZwieten, J.; Ibrahim, A.K.; Chérubin, L. A Deep Learning Model for Forecasting Velocity Structures of the Loop Current System in the Gulf of Mexico. Forecasting 2021, 3, 934-953. https://doi.org/10.3390/forecast3040056