Abstract

The present study employs daily data made available by the STR SHARE Center covering the period from 1 January 2010 to 31 January 2020 for six Viennese hotel classes and their total. The forecast variable of interest is hotel room demand. As forecast models, (1) Seasonal Naïve, (2) Error Trend Seasonal (ETS), (3) Seasonal Autoregressive Integrated Moving Average (SARIMA), (4) Trigonometric Seasonality, Box–Cox Transformation, ARMA Errors, Trend and Seasonal Components (TBATS), (5) Seasonal Neural Network Autoregression (Seasonal NNAR), and (6) Seasonal NNAR with an external regressor (seasonal naïve forecast of the inflation-adjusted ADR) are employed. Forecast evaluation is carried out for forecast horizons h = 1, 7, 30, and 90 days ahead based on rolling windows. After conducting forecast encompassing tests, (a) mean, (b) median, (c) regression-based weights, (d) Bates–Granger weights, and (e) Bates–Granger ranks are used as forecast combination techniques. In the relative majority of cases (i.e., in 13 of 28), combined forecasts based on Bates–Granger weights and on Bates–Granger ranks provide the highest level of forecast accuracy in terms of typical measures. Finally, the employed methodology represents a fully replicable toolkit for practitioners in terms of both forecast models and forecast combination techniques.

1. Introduction

1.1. Motivation

With an average annual growth rate of +4.9% from 2018 to 2019, bednights in European cities grew more than twice as fast as bednights at the national level of the EU-28 countries over the same period [1]. The Austrian capital, Vienna, ranked eighth in terms of bednights out of 119 European cities with 18.6 mn bednights in 2019, which corresponded to a growth of +7.0% from 2018 to 2019 [1]. The current COVID-19 pandemic notwithstanding, these figures make the Austrian capital one of the most popular city destinations of Europe, for leisure and business travelers alike. Despite the increase in Airbnb and similar types of non-traditional accommodation in the city [2], the vast majority of tourists to Vienna stay in one of its 422 hotels with their 34,250 rooms (data as of 2019; [3]).

Accurate hotel room demand forecasts (particularly daily forecasts) are crucial for successful hotel revenue management (e.g., for revenue-maximizing pricing) in a fast-paced and competitive industry [4,5,6,7,8]. Besides their hotel’s absolute performance, (revenue) managers are typically also interested in the relative performance of their hotel with respect to the relevant peer group (also known as the competitive set; [9]) within or beyond the same destination: other hotels from the same hotel class, those that cater to the same type of travelers [10], or those belonging to the same hotel chain [11,12]. Therefore, daily hotel room demand data that are aggregated per hotel class constitute a particularly worthwhile data source for hotel room demand forecasting. Another advantage of aggregated data per hotel class is that these do not suffer from the lack of representativity that individual hotel-level data would.

The data for this study were generously made available by the STR SHARE Center (https://str.com/training/academic-resources/share-center (accessed on 26 November 2021)) in March 2020 and consist of the daily raw data from seven Trend Reports for Vienna for the period from 1 January 2010 to 31 January 2020 (i.e., 3683 daily observations) for the hotel classes ‘luxury’ (17 properties as of 31 January 2020), ‘upper upscale’ (31 properties), ‘upscale’ (54 properties), ‘upper midscale’ (76 properties), ‘midscale’ (58 properties), and ‘economy’ (142 properties). STR undertakes this classification primarily according to the hotels’ ADR (https://str.com/data-insights/resources/faq (accessed on 26 November 2021)). The Trend Reports also contain the hotel class ‘all’ (378 properties), i.e., the total of the aforementioned hotel classes. This corresponds to a coverage of approximately 90% of the 422 hotels operating in Vienna in 2019 [3].

Apart from conforming with the characteristics of the data (i.e., weekly and annual seasonal as well as other patterns in the daily data), all candidate forecast models have been selected based on the principles of parsimony and feasibility, so that practitioners (e.g., a revenue manager working in a particular hotel; [5]) can easily and in a timely manner produce and use them by employing mostly automated routines. Sorokina et al. [13] arrive at a similar conclusion. Sensibly, the creation and evaluation of one-day-ahead forecasts should be achievable in the course of one day; thereby, ruling out forecast models such as the Seasonal Autoregressive Integrated Moving Average model with an external regressor (SARIMAX; [14]) that are associated with an excessive computational burden. These principles also rule out complex recurrent neural network models such as the Long Short-Term Memory (LSTM) model [15] or the deep learning method proposed by Law et al. [16], which, in turn, has shown better predictive performance than neural network models or Support Vector Machines (SVM) for the case of Macau. Similar results have been found for the Kernel Extreme Learning Machine (KELM) proposed by Sun et al. [17] that has been successfully applied to data for Beijing.

Consequently, the Seasonal Naïve, the Error Trend Seasonal (ETS) model [18,19], the Seasonal Autoregressive Integrated Moving Average (SARIMA) model [14], the Trigonometric Seasonality, Box–Cox Transformation, ARMA Errors, Trend and Seasonal Components (TBATS) model [20], the Seasonal Neural Network Autoregression (Seasonal NNAR) model [21], and a variant of the latter model, the Seasonal NNAR model with an external regressor [21] were selected. As an external regressor, the seasonal naïve (i.e., 365-days-ahead) forecast of the inflation-adjusted Average Daily Rate (ADR), an important realized price measure in hotel revenue management [22,23], has been employed. In more general terms, own price has long been identified as one of the most important economic drivers of tourism demand [24].

As long as different forecast models contain different and useful information, combining them with different forecast combination techniques has been shown to yield even more accurate predictions [25]. To avoid any detrimental impact of underperforming forecast models, the two-step forecast combination procedure suggested by Costantini et al. [26] is employed. Step 1 of this procedure consists of forecast encompassing tests [27,28]. Only those models surviving these forecast encompassing tests are considered for forecast combination in step 2 of the procedure, which applies the forecast combination techniques listed below. These techniques also follow the aforementioned principles of parsimony and feasibility. Thus, they represent five “classical” techniques that have proven to be effective in a variety of empirically relevant forecasting situations [29,30,31,32]. These are the mean and the median forecast [28,33], regression-based weights [34], Bates–Granger weights [35], and Bates–Granger ranks [36].

1.2. Related Literature

Accurate demand forecasts are the basis of most business decisions in the tourism industry [24]. Tourism products and services are described as highly perishable: for instance, the lost revenue from an unsold hotel room cannot be regenerated. In addition, (leisure) tourism demand is highly sensitive to external shocks such as natural or human-made disasters [37]. Moreover, accurate hotel room demand forecasts are important for planning (e.g., staff scheduling, renovation periods) or balancing overbookings with “no shows” given limited capacities [38]. Therefore, improving the accuracy of tourism demand forecasts is consistently near the top of the agenda for both academics and industry practitioners. This continuous interest has also resulted in two tourism forecast competitions to date [39,40], the latter of which focuses specifically on forecasting tourism demand amid the COVID-19 pandemic: three teams took part in this competition, producing and evaluating forecasts for three different world regions, notably Africa [41], Asia and the Pacific [42], and Europe [43]. Specifically, during the COVID-19 pandemic, hybrid scenario forecasting (i.e., different quantitative forecasting scenarios coupled with expert judgment) has proven worthwhile [44].

Concerning tourism demand forecasting in general, Athanasopoulos et al. [45] evaluate the predictive accuracy of five hierarchical forecast approaches applied to domestic Australian tourism data. Using data from Hawaii, Bonham et al. [46] employ a vector error correction model to forecast tourism demand. Kim et al. [47] evaluate a number of univariate statistical models in producing interval forecasts for Australia and Hong Kong. Also using data from Hong Kong, Song et al. [48] develop and evaluate a time-varying parameter structural time series model. Andrawis et al. [49] explore the benefits of forecast combinations for tourism demand for Egypt. Gunter and Önder [50] assess various uni- and multivariate statistical models to forecast monthly tourist arrivals to Paris from various source markets. Athanasopoulos et al. [51] employ bagging (i.e., bootstrap aggregation) to improve the forecasting of tourism demand for Australia. Li et al. [52] use the Baidu index as a web-based leading indicator to forecast tourist volume within principal component analysis and neural network approaches. Finally, Panagiotelis et al. [53] employ Australian tourism flow data to empirically demonstrate their theoretical conclusion that bias correction before forecast reconciliation leads to higher predictive accuracy compared to using only one of these two approaches.

Pertaining to hotel room demand forecasting in particular, Rajopadhye et al. [6] employ the classical Holt–Winters exponential smoothing model. Haensel and Koole [4] forecast both single bookings as well as the aggregate booking curve based on daily data. Google search engine data are used as web-based leading indicators by Pan et al. [54] to predict hotel room demand. Teixeira and Fernandes [55] explore the predictive ability of different neural network models in comparison to univariate statistical models. Song et al. [56] show that hybrid approaches (i.e., a combination of statistical models and expert judgment) improve the forecast accuracy of hotel room demand forecasts for Hong Kong. Yang et al. [57] analyze the predictive ability of the traffic volume of the website of a destination management organization, another web-based leading indicator, for estimating hotel room demand. Guizzardi and Stacchini [58] investigate the usefulness of information on tourism supply in forecasting hotel arrivals. Pereira [5] investigates the ability of the TBATS model to accommodate multiple seasonal patterns simultaneously. Different Poisson mixture models are used by Lee [59] to improve short-term forecast accuracy. In addition, Guizzardi et al. [60] use ask price data from online travel agencies as a leading indicator for daily hotel room demand forecasting. Finally, only a few further tourism and hotel room demand forecasting studies based on daily data have been published to date, with the publications by Ampountolas [61], Bi et al. [62], Chen et al. [63], Schwartz et al. [64] and Zhang et al. [65,66] representing some noteworthy exceptions.

This short review of exemplary studies does not claim to be complete. However, it can be concluded that numerous quantitative forecast models (i.e., uni- and multivariate statistical models, machine learning models and, more recently, hybrids of these two), as well as forecast combination and aggregation techniques, have been applied to generate point, interval, and density forecasts in the ample tourism demand forecasting literature. Their precision has been evaluated using different forecast accuracy measures and statistical tests of superior predictive accuracy for a variety of destinations, source markets, sample periods, data frequencies, forecast horizons, and tourism demand measures. Jiao and Chen [67] or Song et al. [68] can be consulted for recent comprehensive reviews of this literature, as a more detailed review of the tourism forecasting literature lies beyond the scope of this study. However, these recent comprehensive reviews confirm the conventional wisdom yielded by earlier studies that there is no single best tourism demand forecast model able to produce tourism demand forecasts characterized by superior forecast accuracy on all occasions [69,70].

Besides past realizations of the tourism demand measure to allow for habit persistence, economic drivers of tourism demand such as own and competitor’s prices, tourist incomes, marketing expenditures, etc., and dummy variables capturing one-off events have been employed as predictors of tourism demand in multivariate forecast models [24]. For Vienna, with the exception of Smeral [71], almost all published tourism demand forecasting studies to date have been dedicated to web-based leading indicators as predictors, while employing monthly tourist arrivals aggregated at the city level as their tourism demand measure [72,73,74,75].

Therefore, the first contribution of this study lies in the first-time use of more disaggregated hotel class data with a daily frequency and an economic predictor—seasonal naïve forecast of the inflation-adjusted ADR—as an external regressor in one of the forecast models for this important European city destination. This also allows for the evaluation of one-day-ahead and one-week-ahead hotel room demand forecasts, which are crucial for hotel revenue management [5].

The second contribution is the thorough assessment of the accuracy of six forecast models and five forecast combination techniques in terms of four different forecast accuracy measures for seven hotel classes and four forecast horizons: daily, weekly, monthly, and quarterly, which correspond to different planning horizons in hotel revenue management, ranging from the aforementioned very short-term (one-day-ahead and one-week-ahead; [5]) to medium-term planning horizons. The relatively long sample period allows the evaluation of pseudo-ex-ante out-of-sample point forecasts based on rolling windows and at least 275 counterfactual observations (for $h = 90$), thereby being more robust to potential structural breaks compared to expanding windows [76]. This makes the methodology and the results of this study relevant for the post-COVID-19 period: once the impact of this severe structural break has vanished and the tourism industry has recovered, tourism and hotel room demand forecasting for normal times will become feasible again.

The third contribution is based on the provision of a fully replicable forecasting toolkit for practitioners in terms of both forecast models and forecast combination techniques that are based on mostly automated routines and, therefore, respect the principles of parsimony and feasibility. This toolkit also enables revenue managers working in smaller (boutique) hotels that do not belong to an international hotel chain (i.e., without access to a professional revenue management system; [13,38]) to employ the proposed methodology on their own hotel-level dataset to easily create and use reliable hotel room demand forecasts and, in the following, benchmark their own hotel against the performance of other hotels from the relevant peer group.

The fourth contribution of this study lies in its use of the seasonal naïve forecast of the inflation-adjusted ADR as an external regressor, which (a) makes the forecast evaluation completely ex-ante, thereby attending to a recent call in the tourism demand forecasting literature for more ex-ante forecasting [77], and (b) avoids any impact of unrelated general price level changes. Moreover, this variable is employed within the Seasonal NNAR model with an external regressor [21], which has not been used regularly in tourism demand forecasting to date.

Finally, the fifth contribution of this research is the first-time application of the two-step forecast combination procedure suggested by Costantini et al. [26] in a hotel room demand forecasting setting. While having become more popular in general tourism demand forecasting research—beginning with the pioneering contribution by Fritz et al. [78], Song et al. [68] count 17 studies on tourism demand forecast combination in their recent review study covering 211 key papers published between 1968 and 2018—very few studies have employed any type of forecast combination in a hotel room demand forecasting context; the research by Song et al. [56], Fiori and Foroni [79], as well as Schwartz et al. [80] being notable exceptions.

The remainder of this study is structured as follows. Section 2 describes the data. Section 3 presents the employed forecast models and forecast combination techniques. Section 4 lays out the forecasting procedure and presents and discusses the forecast evaluation results. Section 5 draws some overall conclusions including managerial implications and limitations. Supporting tables are provided in Appendix A.

2. Data

The forecast variable of interest in the data made available by the STR SHARE Center is hotel room demand (i.e., the number of rooms sold per day by hotel class), while the seasonal naïve (i.e., 365-days-ahead) forecast of the ADR (in euros) is employed as the external regressor in one of the forecast models (see Section 3), thereby ensuring that the forecasts produced by this model are ex-ante. Given a time span of more than ten years, and to avoid any impact of unrelated general price level changes, the ADR has been inflation-adjusted using Austria’s monthly Harmonized Index of Consumer Prices (HICP) obtained from Statistics Austria with 2015 as its base year. The temporal disaggregation of the HICP, which was necessary for inflation adjustment, was undertaken using the ‘tempdisagg’ package for R [81]. All further calculations were also performed in R [82] and RStudio [83], thereby drawing primarily on the functions implemented in the ‘forecast’ package [84,85].
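The inflation adjustment described above can be illustrated with a minimal sketch. It assumes that the monthly index is simply held constant within each month, which is a simplification and not the temporal disaggregation performed by the ‘tempdisagg’ package; the function name `deflate_adr` and all figures are hypothetical.

```python
from datetime import date


def deflate_adr(nominal_adr, hicp_by_month, base=100.0):
    """Convert nominal ADR values to base-year prices.

    nominal_adr:   dict mapping date -> nominal ADR in euros
    hicp_by_month: dict mapping (year, month) -> index level (base year = 100)
    Assumption: the monthly index applies unchanged to every day of the month.
    """
    return {
        day: adr * base / hicp_by_month[(day.year, day.month)]
        for day, adr in nominal_adr.items()
    }


# Made-up numbers for illustration only (not STR or Statistics Austria data)
adr = {date(2019, 1, 15): 121.0, date(2019, 2, 15): 105.0}
hicp = {(2019, 1): 110.0, (2019, 2): 105.0}
real_adr = deflate_adr(adr, hicp)
```

With these invented inputs, a nominal ADR of 121 euros at an index level of 110 corresponds to 110 euros in base-year prices.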

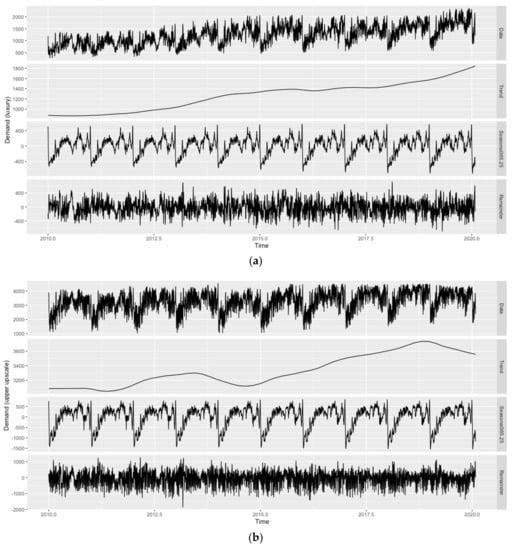

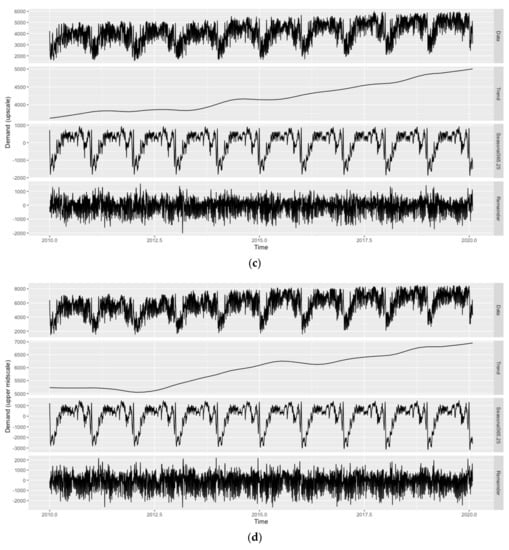

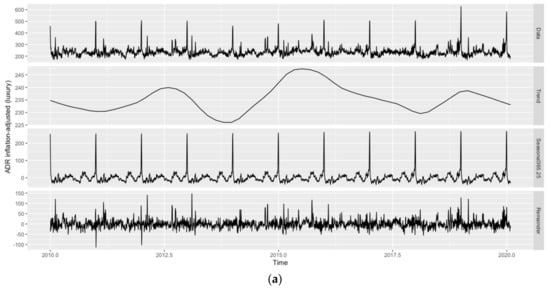

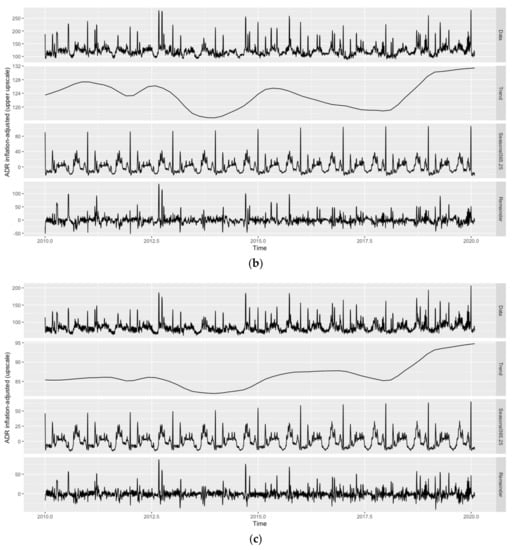

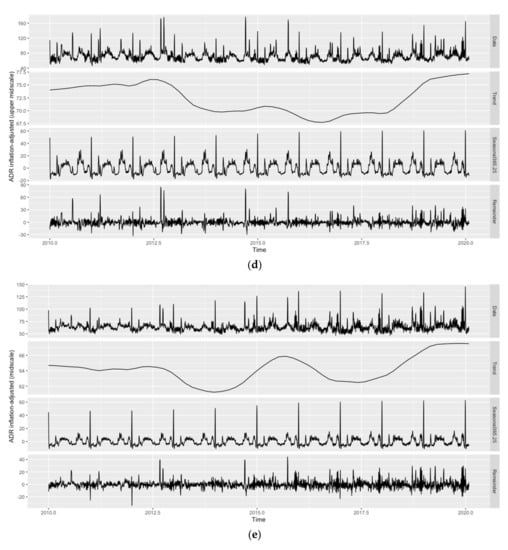

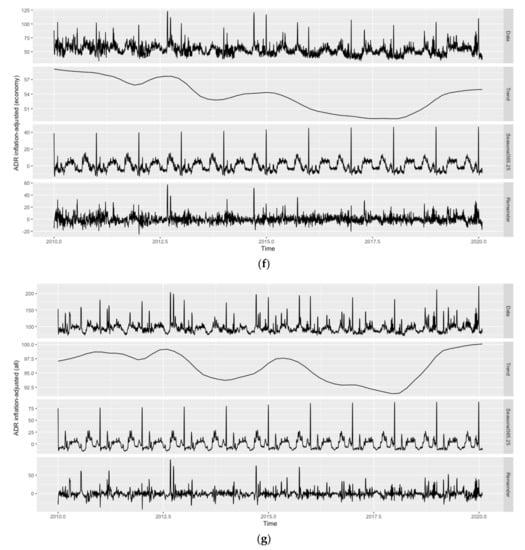

Due to the daily frequency of the data, the presence of seasonal patterns is likely. Figures 1 (hotel room demand) and 2 (inflation-adjusted ADR) show the original time series as well as their trend, weekly (7-day) and annual (365-day) seasonal patterns, and remainder components across all hotel classes as obtained by Seasonal-Trend decomposition using Locally estimated scatterplot smoothing (STL decomposition; [86]), while employing the ‘mstl()’ function of the ‘forecast’ package. Given the weekly and annual seasonality across variables and hotel classes, with distinct troughs in hotel room demand for most of January and February and on Sundays, the applicability of forecast models allowing for seasonal patterns is evident (see Figure 1). As can also be seen from Figure 1, both patterns are comparably less pronounced for the ‘luxury’ hotel class. Since the annual seasonal pattern has a much higher amplitude than the weekly seasonal pattern across hotel classes, the focus of this study is on the former. Moreover, the weekly seasonal pattern also appears to be less regular.

Figure 1.

Evolution and STL decomposition of hotel room demand in Vienna. Hotel classes from top to bottom: (a) ‘luxury’, (b) ‘upper upscale’, (c) ‘upscale’, (d) ‘upper midscale’, (e) ‘midscale’, (f) ‘economy’, and (g) ‘all’. Source: STR SHARE Center, own illustration using R.

In line with Pereira [5], quarterly or monthly seasonal patterns are not visible and would not be reasonable either, as, for instance, the first days of January still belong to the Christmas/New Year high season, while the remainder of the month belongs to the aforementioned low season. Similarly, January and February observations belong to the same quarter as March observations, yet March cannot be characterized as part of the annual trough. It should further be noted that STL decomposition has only been applied to showcase the different trends and seasonal components in the data. How to deal with any of these components in the forecast models, e.g., whether to treat trends as stochastic or deterministic if present, is determined during the model selection stage (see Section 3).

Since the sample period runs from 1 January 2010 to 31 January 2020 (i.e., after the Financial Crisis/Great Recession period from 2008 to 2009 and before the COVID-19 pandemic starting in March 2020), no structural breaks are visible for either of the variables across hotel classes. Vienna is a popular destination for Meetings, Incentives, Conventions and Exhibitions (MICE) tourism, which mostly follows a regular schedule and can, therefore, be considered part of the seasonal component. The only major one-off event during the sample period, the Eurovision Song Contest taking place in Vienna in May 2015, did not seem to have a noticeable impact on hotel room demand across hotel classes (other major one-off events taking place in Vienna but outside the sample period include the 2008 UEFA European Football Championship and the terrorist attack of November 2020). Concerning trending patterns, a continuing upward trend for hotel room demand is visible across hotel classes, with the exception of the ‘upper upscale’ hotel class toward the end of the sample period (see Figure 1).

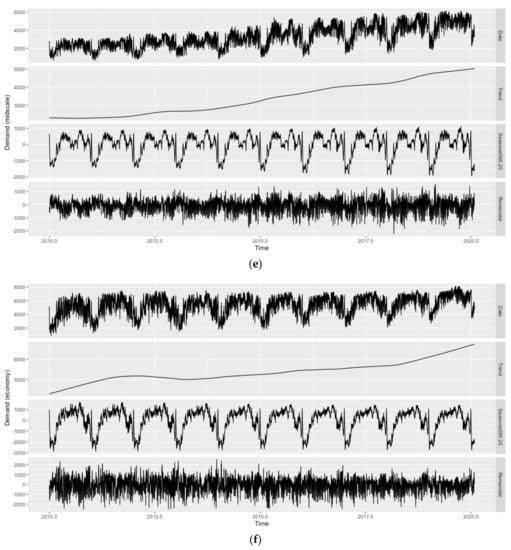

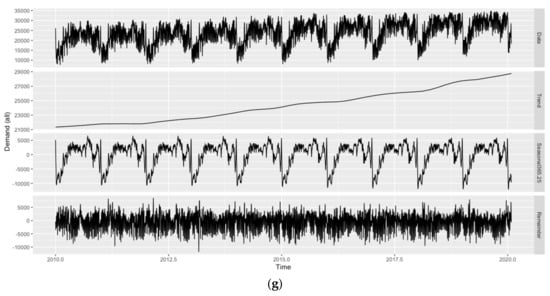

Similar to hotel room demand, inflation-adjusted ADR peaks during the Christmas/New Year high season, but also features two smaller peaks: one in the first half of the year and the other in the second half (see Figure 2). The latter pattern is present in all hotel classes, yet comparably less pronounced for the ‘luxury’ and ‘midscale’ hotel classes. As can also be seen from Figure 2, the amplitude of annual seasonality is comparably high for the ‘luxury’ hotel class. All hotel classes, except for ‘luxury’ and ‘economy’, show a moderate upward trend in terms of the inflation-adjusted ADR over the whole sample period. Similar to hotel room demand, the weekly seasonal pattern is much less pronounced than the annual seasonal pattern across hotel classes and less regular. However, in contrast to hotel room demand, the trend in the inflation-adjusted ADR also shows cyclical behavior, with the years from 2013 to 2014 and 2016 to 2017 representing the cycle’s troughs.

Figure 2.

Evolution and STL decomposition of inflation-adjusted ADR in Vienna. Hotel classes from top to bottom: (a) ‘luxury’, (b) ‘upper upscale’, (c) ‘upscale’, (d) ‘upper midscale’, (e) ‘midscale’, (f) ‘economy’, and (g) ‘all’. Source: STR SHARE Center, own illustration using R.

3. Methodology

This study employs six different forecast models suitable for seasonal data, as well as five different forecast combination techniques. Section 3.1 gives a brief overview of the six applied forecast models, whereas Section 3.2 reviews the five applied forecast combination techniques. All forecasts from the single models are obtained using the ‘forecast’ package while combining those forecasts and the forecast evaluation (see Section 4) are carried out in EViews Version 11. In the following, both hotel room demand and the inflation-adjusted ADR are employed in natural logarithms to ensure variance stabilization. Detailed estimation results and in-sample goodness-of-fit measures across forecast models and hotel classes are available from the author upon request.

3.1. Forecast Models

3.1.1. Seasonal Naïve

The first and simplest model for forecasting hotel room demand, $y_t$, applied in this study is the Seasonal Naïve model, which also serves as a benchmark that should, ideally, be outperformed by more sophisticated forecast models. In the Seasonal Naïve model, the forecast of $y$ in period $t+h$, with $t$ denoting the forecast origin and $h$ the forecast horizon, corresponds to the realization of $y$ on the same day one year previously. Thus:

$$\hat{y}_{t+h|t} = y_{t+h-m}, \tag{1}$$

where $m = 365$ in Equation (1) denotes the length of the annual seasonal pattern.
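The Seasonal Naïve rule can be sketched in a few lines. This is a minimal illustration rather than the routine used in the study: the function name `seasonal_naive` is hypothetical, the horizon is restricted to at most one season, and a fixed 365-day lag ignores leap days. The toy data use a weekly pattern of length 7 only to keep the example short.

```python
def seasonal_naive(history, h, m=365):
    """Return the h-step-ahead seasonal naive forecast.

    history: list of past observations; the last element is the forecast origin t
    h:       forecast horizon in days (1 <= h <= m in this sketch)
    m:       length of the seasonal pattern
    """
    if not 1 <= h <= m:
        raise ValueError("this sketch only covers 1 <= h <= m")
    if len(history) < m:
        raise ValueError("need at least one full season of history")
    # y_{t+h-m}: with t at index len(history) - 1, step back m - h positions
    return history[len(history) - 1 + h - m]


# Toy series with two 'weeks' of made-up values (m = 7 for brevity)
toy = [10, 20, 30, 40, 50, 60, 70, 11, 21, 31, 41, 51, 61, 71]
one_day_ahead = seasonal_naive(toy, h=1, m=7)    # value observed 6 days before t
one_week_ahead = seasonal_naive(toy, h=7, m=7)   # value observed at t itself
```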

3.1.2. Error Trend Seasonal (ETS)

The second forecast model employed is the ETS model developed by Hyndman et al. [18,19]. This is a state-space framework comprising various traditional exponential smoothing methods and consists of one signal equation in the forecast variable and various state equations for the different components of the data. In general, the following ETS specifications are possible:

$$\text{ETS}(\text{Error}, \text{Trend}, \text{Seasonal}), \quad \text{Error} \in \{A, M\}, \quad \text{Trend} \in \{N, A, A_d, M, M_d\}, \quad \text{Seasonal} \in \{N, A, M\}, \tag{2}$$

where, in Equation (2), $A$ denotes additive, $M$ multiplicative, $N$ none, $A_d$ additive damped, and $M_d$ multiplicatively damped [21]. The optimal model specification of this and the remaining forecast models is selected by the minimum Akaike Information Criterion (AIC; [87]) for all hotel classes and their total. As the ‘ets()’ function of the ‘forecast’ package cannot deal with seasonal patterns within daily data, the ‘stlm()’ function is employed. This function first deseasonalizes the data with STL decomposition and then passes the deseasonalized data to the ‘ets()’ function, where the search for optimal model specifications is carried out only for non-seasonal ETS models. Finally, the forecast values are reseasonalized by applying the last year of the seasonal component obtained from STL decomposition [84,85].
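The deseasonalize, forecast, and reseasonalize sequence described above can be illustrated as follows. This is a sketch under strong assumptions, not the internals of ‘stlm()’: the seasonal component is taken as given, the non-seasonal model is simple exponential smoothing with a fixed smoothing parameter (no model search), and all function names and numbers are hypothetical.

```python
def ses_forecast(values, alpha=0.3):
    """Simple exponential smoothing; returns the flat point forecast."""
    level = values[0]
    for v in values[1:]:
        level = alpha * v + (1 - alpha) * level
    return level


def deseasonalize_forecast_reseasonalize(y, seasonal, h, m, alpha=0.3):
    """y and seasonal are equally long lists; seasonal is assumed to come
    from a prior decomposition of y (e.g., STL)."""
    adjusted = [obs - s for obs, s in zip(y, seasonal)]
    base = ses_forecast(adjusted, alpha)
    # Reuse the last m seasonal values for the forecast period
    last_season = seasonal[-m:]
    return [base + last_season[(step - 1) % m] for step in range(1, h + 1)]


# Made-up series: constant level 10 plus a seasonal pattern of length 2
seasonal_part = [1.0, -1.0, 1.0, -1.0]
series = [11.0, 9.0, 11.0, 9.0]
forecasts = deseasonalize_forecast_reseasonalize(series, seasonal_part, h=3, m=2)
```

In this toy case the seasonally adjusted series is constant, so the forecasts simply repeat the seasonal pattern around that level.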

3.1.3. Seasonal Autoregressive Integrated Moving Average (SARIMA)

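The third forecast model used in the present study is the SARIMA model [14]. A SARIMA model reads as follows:

$$\phi_p(L)\,\Phi_P(L^m)\left[(1-L)^d\,(1-L^m)^D\,y_t - \mu\right] = \theta_q(L)\,\Theta_Q(L^m)\,\varepsilon_t, \tag{3}$$

where, in Equation (3), $\phi_p(L)$, $\Phi_P(L^m)$, $\theta_q(L)$, and $\Theta_Q(L^m)$ denote lag polynomials of orders $p$, $P$, $q$, and $Q$, respectively. $D$ represents the degree of seasonal integration of the forecast variable, $y_t$, while $d$ represents the degree of non-seasonal integration. Finally, $\mu$ denotes a potentially non-zero mean and $\varepsilon_t$ the error term.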

As mentioned before, the optimal model specification (i.e., the optimal lag orders $p$, $q$, $P$, and $Q$) is selected by minimizing the AIC. The maximum lag order of the non-seasonal AR and MA components is set to (to indirectly allow for the weekly seasonal pattern), while the maximum lag order of the seasonal AR and MA components is set to . The maximum degree of non-seasonal integration is set to , whereas the maximum degree of seasonal integration is set to . The degree of non-seasonal integration is determined by conducting the Augmented Dickey–Fuller (ADF) unit root test. Given the daily frequency of the data, a measure of seasonal strength computed from an STL decomposition is employed to select the number of seasonal differences. In this study, the ‘auto.arima()’ function of the ‘forecast’ package is used to implement the SARIMA model while enabling parallel computing.
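Selection by minimum AIC amounts to comparing $2k - 2\ln\hat{L}$ across candidate specifications, where $k$ is the number of estimated parameters and $\hat{L}$ the maximized likelihood. The following sketch shows only this comparison step; the candidate labels, log-likelihoods, and parameter counts are invented, and the helper names are hypothetical rather than part of ‘auto.arima()’.

```python
def aic(log_likelihood, n_params):
    """Akaike Information Criterion: 2k - 2 ln(L)."""
    return 2 * n_params - 2 * log_likelihood


def select_by_aic(candidates):
    """candidates: dict mapping a label -> (log_likelihood, n_params).
    Returns the label with the smallest AIC."""
    return min(candidates, key=lambda name: aic(*candidates[name]))


# Invented fit statistics for two hypothetical specifications
fits = {
    "spec_A": (-100.0, 3),   # AIC = 206
    "spec_B": (-98.0, 6),    # AIC = 208
}
best = select_by_aic(fits)
```

Here the richer specification fits slightly better, but its additional parameters are penalized, so the more parsimonious one is selected.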

3.1.4. Trigonometric Seasonality, Box–Cox Transformation, ARMA Errors, Trend and Seasonal Components (TBATS)

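The fourth forecast model under scrutiny is one that can deal with multiple seasonal patterns at a time (in the present case: $m_1 = 7$ and $m_2 = 365$): the TBATS model [20]. A TBATS model for the forecast variable $y_t$ reads as follows [20]:

$$y_t^{(\omega)} = \begin{cases} \dfrac{y_t^{\omega} - 1}{\omega}, & \omega \neq 0, \\ \ln y_t, & \omega = 0, \end{cases} \tag{4}$$

$$y_t^{(\omega)} = \ell_{t-1} + \phi\, b_{t-1} + \sum_{i=1}^{2} s_{t-1}^{(i)} + d_t, \tag{5}$$

$$\ell_t = \ell_{t-1} + \phi\, b_{t-1} + \alpha\, d_t, \tag{6}$$

$$b_t = (1 - \phi)\, b + \phi\, b_{t-1} + \beta\, d_t, \tag{7}$$

$$\varphi_p(L)\, d_t = \theta_q(L)\, \varepsilon_t, \tag{8}$$

$$s_t^{(i)} = \sum_{j=1}^{k_i} s_{j,t}^{(i)}, \tag{9}$$

$$s_{j,t}^{(i)} = s_{j,t-1}^{(i)} \cos \lambda_j^{(i)} + s_{j,t-1}^{*(i)} \sin \lambda_j^{(i)} + \gamma_1^{(i)} d_t, \tag{10}$$

$$s_{j,t}^{*(i)} = -s_{j,t-1}^{(i)} \sin \lambda_j^{(i)} + s_{j,t-1}^{*(i)} \cos \lambda_j^{(i)} + \gamma_2^{(i)} d_t. \tag{11}$$

Equation (4) represents the Box–Cox transformation, where $\omega$ is fixed rather than estimated, as $y_t$ is employed in natural logarithms throughout. Equation (5) is the measurement equation in $y_t^{(\omega)}$. Equation (6) is the equation for the local level in period $t$, $\ell_t$. Equation (7) is the equation for the short-run trend in period $t$, $b_t$, with $b$ denoting the long-term trend. Equation (8) gives the ARMA process $d_t$, with $\varepsilon_t$ assumed to be a Gaussian white-noise process with zero mean and constant variance and with $\varphi_p(L)$ and $\theta_q(L)$ denoting lag polynomials of orders $p$ and $q$, respectively. $\phi$ indicates the damping parameter of the trend, whereas $\alpha$ and $\beta$ are smoothing parameters.

Equations (9)–(11) correspond to the trigonometric representation of the $i$-th seasonal component, $s_t^{(i)}$. Equation (10) is the equation for the stochastic level of the $i$-th seasonal component, $s_{j,t}^{(i)}$. Equation (11) is the equation for the stochastic growth in the level of the $i$-th seasonal component, $s_{j,t}^{*(i)}$. $\lambda_j^{(i)}$ is defined as $2\pi j / m_i$. $k_i$ represents the number of Fourier terms needed for the $i$-th seasonal component. Finally, $\gamma_1^{(i)}$ and $\gamma_2^{(i)}$ denote smoothing parameters [20]. The optimal model specification is again selected by minimizing the AIC. The ‘tbats()’ function of the ‘forecast’ package is used to implement the TBATS model in this study while enabling parallel computing.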
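To make the trigonometric representation of a seasonal component more concrete, the sketch below computes the Fourier frequencies $2\pi j / m_i$ and applies the level and growth recursion for a single harmonic. It follows the standard formulation in [20] as an assumption, uses hypothetical function names, and sets the smoothing parameters and the ARMA term to zero so that the purely deterministic case can be checked: after $m_i$ steps, the first harmonic returns to its starting state.

```python
import math


def fourier_frequencies(m, k):
    """Frequencies lambda_j = 2*pi*j/m for j = 1, ..., k."""
    return [2 * math.pi * j / m for j in range(1, k + 1)]


def update_harmonic(s, s_star, lam, gamma1, gamma2, d):
    """One step of the level (s) and growth (s_star) recursion of a harmonic."""
    s_new = s * math.cos(lam) + s_star * math.sin(lam) + gamma1 * d
    s_star_new = -s * math.sin(lam) + s_star * math.cos(lam) + gamma2 * d
    return s_new, s_star_new


# Weekly pattern (m = 7) with three Fourier terms; values are illustrative
freqs = fourier_frequencies(7, 3)
state = (1.0, 0.0)
for _ in range(7):
    # gamma1 = gamma2 = 0 and d = 0: deterministic seasonality
    state = update_harmonic(state[0], state[1], freqs[0], 0.0, 0.0, 0.0)
```

With non-zero smoothing parameters, the same recursion lets the seasonal pattern evolve over time instead of repeating exactly.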

3.1.5. Seasonal Neural Network Autoregression (Seasonal NNAR)

The fifth employed forecast model is the Seasonal NNAR model [21]. A Seasonal NNAR model for the forecast variable $y_t$ is a multilayer feed-forward neural network comprising (1) one input layer, (2) one hidden layer with several hidden neurons, and (3) one output layer [21]. Each layer consists of several nodes and receives inputs from the previous layer such that the sequence (1) → (2) → (3) holds. Consequently, the outputs of one layer correspond to the inputs of the subsequent layer. The inputs for a single hidden neuron $j$ from the hidden layer are a linear combination consisting of a weighted average of the outputs of the input layer, $x_i$, which are transformed by a nonlinear sigmoid function to become the inputs for the output layer, $s(z_j)$:

$$z_j = b_j + \sum_{i} w_{i,j}\, x_i, \qquad s(z_j) = \frac{1}{1 + e^{-z_j}}, \tag{12}$$

while the parameters $b_j$ and $w_{i,j}$ in Equation (12) are learned from the data [21]. Starting from random starting weights for $b_j$ and $w_{i,j}$ and setting the decay parameter equal to 0.1, the neural network is trained 100 times, while the lag order of the seasonal AR component is set to . The optimal lag order of the non-seasonal AR component is again selected by minimizing the AIC. The number of hidden neurons, $k$, in turn, is determined according to the rule $k = (p + P + 1)/2$, with $p$ and $P$ denoting the non-seasonal and seasonal AR lag orders, and rounded to the nearest integer [21]. In this study, the ‘nnetar()’ function of the ‘forecast’ package is used to implement the Seasonal NNAR model while enabling parallel computing.
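The computation inside one hidden neuron and the rule for the size of the hidden layer can be sketched as follows. The function names are hypothetical and the weights are made up rather than learned; the size rule is assumed to be half the number of lagged inputs plus one, as described in [21], and exact ties are resolved by Python's `round()` (round half to even), which is an implementation detail of this sketch.

```python
import math


def hidden_neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a sigmoid."""
    z = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1.0 / (1.0 + math.exp(-z))


def hidden_layer_size(p, P):
    """Number of hidden neurons for p non-seasonal and P seasonal lags."""
    return round((p + P + 1) / 2)


# Made-up lagged inputs and weights for illustration
neutral = hidden_neuron_output([0.0, 0.0], [1.0, 1.0], bias=0.0)   # sigmoid(0)
activated = hidden_neuron_output([1.0, 2.0], [0.5, 0.25], bias=0.0)
size = hidden_layer_size(p=4, P=1)
```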

3.1.6. Seasonal NNAR with an External Regressor

The sixth and final forecast model is the Seasonal NNAR model with an external regressor [21], a variant of the forecast model presented in Section 3.1.5. In this variant, the seasonal naïve forecast of inflation-adjusted ADR is employed across hotel classes as a candidate predictor (see Section 2). Also here, the ‘nnetar()’ function of the ‘forecast’ package is used to implement the Seasonal NNAR model with an external regressor while enabling parallel computing.

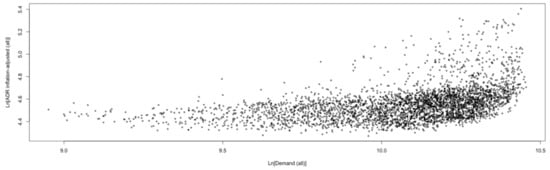

As can be seen from Figure 3, hotel room demand and inflation-adjusted ADR feature a positive correlation for hotel class ‘all’, which ostensibly appears to violate the law of demand. However, it should be noted that the ADR is not the price offered to customers before booking a hotel, but the price realized as a result of successful hotel revenue management [22,23]. Thus, Giffen or Veblen effects can be safely ruled out. Calculating correlation coefficients between the remainder components of the two variables after STL decomposition (in order to preclude any potentially confounding influence of the trend or seasonal components) reveals only positive values for all hotel classes: ‘luxury’ (0.25), ‘upper upscale’ (0.44), ‘upscale’ (0.45), ‘upper midscale’ (0.39), ‘midscale’ (0.38), ‘economy’ (0.27), and ‘all’ (0.48). Graphs for the remaining hotel classes are available from the author upon request.

Figure 3.

Scatterplot of hotel room demand and inflation-adjusted ADR in Vienna for hotel class ‘all’. Source: STR SHARE Center, own illustration using R.

Apart from these non-negligible positive correlations, the null hypothesis of bivariate Granger causality tests [88], namely that inflation-adjusted ADR does not Granger-cause hotel room demand (both in natural logarithms), is rejected at the 0.1% significance level across hotel classes when using the ‘grangertest()’ function. Consequently, it is ex-ante very likely that the information contained in the inflation-adjusted ADR is relevant to the forecaster at the forecast origin in terms of improving forecast accuracy (yet, these results do not claim or imply any exogeneity of the inflation-adjusted ADR). Detailed test results are available from the author upon request.

3.2. Forecast Combination Techniques

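In the following, a combined forecast, $\hat{y}^{c}_{t+h}$, for different forecast horizons, $h$ ($h = 1, 7, 30, 90$), is to be understood as a combination of $M$ ($m = 1, \ldots, M$), not perfectly collinear single forecasts, $\hat{y}_{m,t+h}$, observed at the same time point, $t$ ($t = 1, \ldots, T$).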

3.2.1. Mean Forecast

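The first and simplest forecast combination technique applied in this study is the mean forecast, which also serves as a benchmark that more sophisticated forecast combination techniques should, ideally, outperform [28,33]. It reads as follows:

$$\hat{y}^{c}_{t+h} = \frac{1}{M} \sum_{m=1}^{M} \hat{y}_{m,t+h},$$

where $\hat{y}_{m,t+h}$ denotes the $m$-th of the $M$ single forecasts for the same target date.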
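
As an illustration, the mean forecast for a single target date can be sketched in Python as follows. The six forecast values are hypothetical and merely stand in for the six single forecasts; they are not taken from the data, and the function name is chosen here for illustration only.

```python
from statistics import fmean


def mean_forecast(single_forecasts):
    """Equal-weight combination of the single forecasts for one target date."""
    if not single_forecasts:
        raise ValueError("need at least one single forecast")
    return fmean(single_forecasts)


# Hypothetical single forecasts (e.g., log room demand) from six models
forecasts = [7.40, 7.46, 7.43, 7.51, 7.44, 7.48]
combined = mean_forecast(forecasts)
print(round(combined, 4))
```

Since every model receives the same weight of 1/M, no training sample is needed to calculate this combination.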

3.2.2. Median Forecast

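A time-varying alternative to the mean forecast that is more robust to outliers is the median forecast, whereby the median forecast at each point in time receives a weight of 1 and all other forecasts a weight of 0 [28,33]:

$$\hat{y}^{c}_{t+h} = \operatorname{median}\left(\hat{y}_{1,t+h}, \ldots, \hat{y}_{M,t+h}\right),$$

where $\hat{y}_{1,t+h}, \ldots, \hat{y}_{M,t+h}$ are the $M$ single forecasts for the same target date.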
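
The robustness of the median forecast can be illustrated with a minimal Python sketch. The values below are hypothetical, with one deliberately extreme single forecast. Note that with an even number of single forecasts, `statistics.median` averages the two middle values, so that each of them effectively receives a weight of 0.5.

```python
from statistics import fmean, median

# Hypothetical single forecasts for one target date; the last one is an outlier
forecasts = [7.40, 7.46, 7.43, 7.51, 7.44, 9.00]

median_combined = median(forecasts)  # average of the two middle values here
mean_combined = fmean(forecasts)     # pulled upward by the outlier

print(round(median_combined, 4), round(mean_combined, 4))
```

In this example, the outlier shifts the mean forecast noticeably, whereas the median forecast stays close to the bulk of the single forecasts.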

3.2.3. Regression-Based Weights

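The combined forecast with regression-based weights as obtained from ordinary least squares (OLS) regression [34], where the intercept is included to correct for forecast bias, reads as follows:

$$\hat{y}^{c}_{t+h} = \hat{\beta}_{0} + \sum_{m=1}^{M} \hat{\beta}_{m}\, \hat{y}_{m,t+h},$$

where $\hat{\beta}_{0}$ is the estimated intercept and $\hat{\beta}_{1}, \ldots, \hat{\beta}_{M}$ are the estimated weights from regressing the realized values on the $M$ single forecasts $\hat{y}_{m,t+h}$ in the training set.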
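
A minimal sketch of regression-based weights in plain Python is given below. It solves the normal equations by Gauss–Jordan elimination and raises an error when the cross-product matrix is numerically singular. The function names, the tolerance, and the training data are assumptions made for illustration; in practice, a tested least-squares routine would be preferable.

```python
def ols_weights(actuals, forecasts, tol=1e-10):
    """Return [intercept, w_1, ..., w_M] from regressing actuals on forecasts.

    forecasts: list of T rows, each holding the M single forecasts for one date.
    """
    rows = [[1.0] + list(r) for r in forecasts]  # prepend the intercept column
    k = len(rows[0])
    # Normal equations (X'X) b = X'y, stored as an augmented matrix
    a = [
        [sum(r[i] * r[j] for r in rows) for j in range(k)]
        + [sum(r[i] * y for r, y in zip(rows, actuals))]
        for i in range(k)
    ]
    for col in range(k):  # Gauss-Jordan elimination with partial pivoting
        pivot = max(range(col, k), key=lambda i: abs(a[i][col]))
        if abs(a[pivot][col]) < tol:
            raise ValueError("X'X is numerically singular; weights not identified")
        a[col], a[pivot] = a[pivot], a[col]
        for i in range(k):
            if i != col:
                factor = a[i][col] / a[col][col]
                a[i] = [x - factor * p for x, p in zip(a[i], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


def combine(coefficients, single_forecasts):
    """Intercept plus weighted sum of the single forecasts."""
    return coefficients[0] + sum(
        b * f for b, f in zip(coefficients[1:], single_forecasts)
    )


# Hypothetical training data: two single forecasts over five dates
train_forecasts = [[1.0, 2.0], [2.0, 1.0], [3.0, 5.0], [4.0, 3.0], [5.0, 6.0]]
train_actuals = [0.5 + 0.25 * f1 + 0.75 * f2 for f1, f2 in train_forecasts]
coefficients = ols_weights(train_actuals, train_forecasts)

# Two perfectly collinear single forecasts make X'X singular
try:
    ols_weights(train_actuals, [[f1, f1] for f1, _ in train_forecasts])
    singular = False
except ValueError:
    singular = True
```

The second call illustrates why the single forecasts must not be perfectly collinear: with identical forecast columns, the weights cannot be calculated.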

3.2.4. Bates–Granger Weights

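Bates and Granger [35] recommend assigning higher weights to those single forecasts characterized by a lower mean square error (MSE), thus rewarding those forecast models with a better historical track record:

$$\hat{y}^{c}_{t+h} = \sum_{m=1}^{M} w_{m}\, \hat{y}_{m,t+h}, \qquad w_{m} = \frac{\mathrm{MSE}_{m}^{-1}}{\sum_{j=1}^{M} \mathrm{MSE}_{j}^{-1}},$$

where $\mathrm{MSE}_{m}$ is the MSE of the $m$-th of the $M$ single forecasts $\hat{y}_{m,t+h}$ in the training set.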
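
The inverse-MSE form of Bates–Granger weights can be sketched in Python as follows. This is the commonly used variant that ignores correlations between forecast errors; the MSE values are hypothetical and the function name is chosen here for illustration.

```python
def bates_granger_weights(mse_values):
    """Weights proportional to the inverse MSE of each single forecast."""
    if any(m <= 0 for m in mse_values):
        raise ValueError("MSE values must be strictly positive")
    inverse = [1.0 / m for m in mse_values]
    total = sum(inverse)
    return [v / total for v in inverse]


# Hypothetical training-set MSEs of three single forecasts
mse_values = [0.01, 0.02, 0.04]
weights = bates_granger_weights(mse_values)

# Combined forecast for one target date as the weighted sum
single_forecasts = [7.40, 7.46, 7.52]
combined = sum(w * f for w, f in zip(weights, single_forecasts))
```

Halving the MSE of a forecast doubles its weight relative to the other forecasts, so the model with the best track record dominates the combination without the others being excluded.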

3.2.5. Bates–Granger Ranks

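Finally, Aiolfi and Timmermann [36] suggest using the rank of the MSE of the single forecasts to make Bates–Granger-type weights independent of correlations between forecast errors:

$$\hat{y}^{c}_{t+h} = \sum_{m=1}^{M} w_{m}\, \hat{y}_{m,t+h}, \qquad w_{m} = \frac{R_{m}^{-1}}{\sum_{j=1}^{M} R_{j}^{-1}},$$

where $R_{m}$ is the rank of the MSE of the $m$-th of the $M$ single forecasts $\hat{y}_{m,t+h}$, with rank 1 assigned to the lowest MSE.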
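
A minimal Python sketch of rank-based weights follows, assuming inverse-rank weights with rank 1 for the lowest MSE and no ties. The MSE values are hypothetical. The two calls show that only the ordering of the MSE values matters, not their magnitudes.

```python
def rank_weights(mse_values):
    """Weights proportional to the inverse rank of each MSE (rank 1 = lowest)."""
    order = sorted(range(len(mse_values)), key=lambda i: mse_values[i])
    ranks = [0] * len(mse_values)
    for position, index in enumerate(order, start=1):
        ranks[index] = position
    inverse = [1.0 / r for r in ranks]
    total = sum(inverse)
    return [v / total for v in inverse]


# Two hypothetical sets of MSEs with the same ordering but different gaps
weights_a = rank_weights([0.010, 0.020, 0.040])
weights_b = rank_weights([0.010, 0.011, 0.040])
```

Both sets yield the same weights, which makes this technique less sensitive to a single extreme MSE value than weights based on the MSE itself.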

4. Forecasting Procedure and Forecast Evaluation Results

4.1. Forecasting Procedure

Given the relatively long sample period, pseudo-ex-ante out-of-sample point forecasts from the forecast models for the forecast horizons h = 1, 7, 30, and 90 days ahead are produced based on rolling windows moving one day ahead at a time. Given the absence of structural breaks (see Section 2) and the computational burden involved, all optimal model specifications per hotel class are only selected once. For the same reasons, all forecast models are only estimated once per hotel class for the first rolling window, which ranges from 1 January 2010 to 31 January 2019. The evaluation window for h = 1, thus, ranges from 1 February 2019 to 30 January 2020, resulting in 364 counterfactual observations. For h = 7, it ranges from 7 February 2019 to 30 January 2020, resulting in 358 observations. For h = 30, it ranges from 2 March 2019 to 30 January 2020, resulting in 335 observations. Finally, for h = 90, the evaluation window ranges from 1 May 2019 to 30 January 2020, resulting in 275 counterfactual observations.
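
The start dates and lengths of the evaluation windows follow directly from the end of the first rolling window and the forecast horizon. The following Python sketch retraces this arithmetic; the variable and function names are chosen here for illustration.

```python
from datetime import date, timedelta

first_window_end = date(2019, 1, 31)  # last day of the first rolling window
last_target = date(2020, 1, 30)       # last day of every evaluation window


def evaluation_window(h):
    """Return the first target date and the number of daily observations."""
    start = first_window_end + timedelta(days=h)
    n_obs = (last_target - start).days + 1  # both end points are included
    return start, n_obs


windows = {h: evaluation_window(h) for h in (1, 7, 30, 90)}
for h, (start, n_obs) in windows.items():
    print(h, start.isoformat(), n_obs)
```

Each additional day of forecast horizon shifts the first target date by one day and, therefore, shortens the evaluation window by one observation.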

Forecast accuracy is measured in terms of the root mean square error (RMSE), the mean absolute error (MAE), the mean absolute percentage error (MAPE), the mean absolute scaled error (MASE), as well as the sum of ranks over these four measures. One forecast combination technique (i.e., regression-based weights) and one forecast accuracy measure (i.e., MASE) require the splitting of the evaluation samples into training and test sets [34,89]. In doing so, the first 90 observations per forecast horizon, forecast model, and hotel class are withheld for the training set. Thus, the different forecast accuracy measures can be calculated based on test sets comprising 274 (h = 1), 268 (h = 7), 245 (h = 30), and 185 (h = 90) forecast values, respectively. Before any forecasts are combined, however, a forecast encompassing test with the null hypothesis that a specific forecast model contains all information enclosed in the remaining forecast models [27,28] is carried out as step 1 of the two-step forecast combination procedure suggested by Costantini et al. [26] to investigate whether combining the forecasts obtained from (some of) the forecast models is a viable option in the first place. Only those models surviving these forecast encompassing tests are considered for forecast combination in step 2 of the procedure in terms of the different forecast combination techniques.
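
The four forecast accuracy measures can be sketched in Python as shown below. The MASE is scaled here by the MAE of one-step naïve forecasts on the training set, which is the usual textbook definition and an assumption about the exact implementation; all data values are hypothetical.

```python
from math import sqrt


def rmse(actual, forecast):
    return sqrt(sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual))


def mae(actual, forecast):
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


def mape(actual, forecast):
    """Mean absolute percentage error in percent; actual values must be nonzero."""
    return 100 * sum(abs((a - f) / a) for a, f in zip(actual, forecast)) / len(actual)


def mase(actual, forecast, training):
    """Test-set MAE relative to the MAE of one-step naive forecasts in training."""
    naive_errors = [abs(training[t] - training[t - 1]) for t in range(1, len(training))]
    scale = sum(naive_errors) / len(naive_errors)
    return mae(actual, forecast) / scale


# Hypothetical training and test data
training = [80.0, 90.0, 85.0, 95.0]
test_actual = [100.0, 110.0, 120.0]
test_forecast = [90.0, 115.0, 120.0]

scores = {
    "RMSE": rmse(test_actual, test_forecast),
    "MAE": mae(test_actual, test_forecast),
    "MAPE": mape(test_actual, test_forecast),
    "MASE": mase(test_actual, test_forecast, training),
}
```

Because the RMSE squares the forecast errors before averaging, it penalizes large errors more heavily than the other three measures.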

4.2. Forecast Evaluation Results

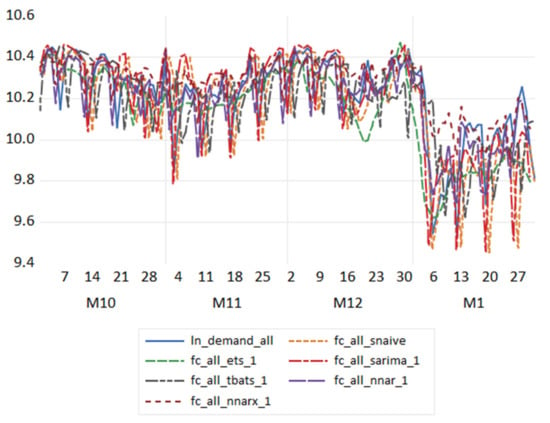

Figure 4 shows an exemplary visual comparison of all forecast models for hotel class ‘all’ and for the period from 1 October 2019 to 31 January 2020 (for better visibility). Prior to consulting the forecast accuracy measures, a mere visual inspection of this graph shows that none of the employed forecast models is wildly off track and that they are able to pick up the seasonal drop in hotel room demand after New Year. Graphs for the remaining hotel classes and forecast horizons are available from the author upon request. In Figure 4 as well as in Table 1 and Tables A1–A6, the Seasonal NNAR model (with an external regressor) is abbreviated as ‘NNAR(X)’.

Figure 4.

Historical data (solid line) and forecast comparison graph of all forecast models for hotel class ‘all’ and for the period from 1 October 2019 to 31 January 2020. Source: STR SHARE Center, own illustration using EViews Version 11.

Table 1.

Forecast evaluation results for the hotel class ‘all’. Source: STR SHARE Center, own calculations using R and EViews.

Table 1 and Tables A1–A6 in Appendix A summarize the forecast evaluation results for all forecast models, forecast combination techniques, forecast horizons, and hotel classes: Table 1 for ‘all’, Table A1 for ‘luxury’, Table A2 for ‘upper upscale’, Table A3 for ‘upscale’, Table A4 for ‘upper midscale’, Table A5 for ‘midscale’, and Table A6 for ‘economy’. The smallest RMSE, MAE, MAPE, MASE, and sum-of-ranks values for each hotel class and forecast horizon are indicated in boldface. The forecast accuracy measures typically agree on the best forecast model or forecast combination technique per hotel class and forecast horizon. The only six exceptions concern the RMSE (‘upscale’ for h = 1 and h = 90, with the remaining four in ‘upper midscale’, ‘economy’, and ‘all’), which is not too surprising as this is the only employed forecast accuracy measure based on squared forecast errors.

Given that six forecast models and five forecast combination techniques are competing, the lowest possible sum of ranks across the four forecast accuracy measures is equal to 4, whereas the highest possible sum of ranks equals 44. Given the six hotel classes plus their total and the four forecast horizons, a total of 28 cases in terms of the sum of ranks can be distinguished, which are analyzed in more detail in the following. Except for regression-based weights (most prominently for ‘economy’), none of the forecast models or forecast combination techniques results in extremely high forecast errors; hence, none of them should be discarded from the outset. In four cases (in ‘upper midscale’, e.g., for h = 7, as well as in ‘economy’ and ‘all’), regression-based weights cannot even be calculated, as the matrix of the OLS regression is singular and, therefore, cannot be inverted. As already noted by Nowotarski et al. [90], the high correlation of the predictions stemming from the forecast models can make unconstrained regression-based weights unstable. Therefore, the use of this particular forecast combination technique is not recommended, neither for hotel revenue managers nor for other users.
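
The sum of ranks can be retraced with a few lines of Python. The sketch below uses the values reported in Table A1 for the hotel class ‘luxury’ and h = 1; the dictionary keys are shortened labels chosen here for readability, and ties are assumed not to occur.

```python
# Values from Table A1 ('luxury', h = 1): RMSE, MAE, MAPE (%), MASE
results = {
    "SNAIVE": (0.209518, 0.161318, 2.15446, 0.79043),
    "ETS": (0.087544, 0.064085, 0.857725, 0.314005),
    "SARIMA": (0.095704, 0.071188, 0.952914, 0.348809),
    "TBATS": (0.191881, 0.158181, 2.124453, 0.775059),
    "NNAR": (0.109821, 0.079734, 1.062345, 0.390682),
    "NNARX": (0.108945, 0.087597, 1.17971, 0.42921),
    "Mean": (0.098279, 0.077238, 1.031541, 0.378453),
    "Median": (0.089816, 0.06866, 0.917676, 0.336422),
    "Regression": (0.107467, 0.079449, 1.060927, 0.389286),
    "BG weights": (0.089893, 0.069103, 0.922908, 0.338592),
    "BG ranks": (0.089622, 0.068704, 0.917177, 0.336637),
}


def sum_of_ranks(table):
    """Rank all competitors per accuracy measure (1 = best) and add the ranks."""
    names = list(table)
    totals = dict.fromkeys(names, 0)
    n_measures = len(next(iter(table.values())))
    for k in range(n_measures):
        ordered = sorted(names, key=lambda name: table[name][k])
        for rank, name in enumerate(ordered, start=1):
            totals[name] += rank
    return totals


totals = sum_of_ranks(results)
best = min(totals, key=totals.get)
```

With eleven competitors and four measures, a competitor that is best on every measure obtains 4 × 1 = 4, and one that is worst on every measure obtains 4 × 11 = 44.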

With respect to the single models, the ETS model is able to achieve the lowest sum of ranks in four cases (‘luxury’ for h = 1, 7, and 30, plus one case for ‘economy’), the Seasonal NNAR model in three cases (‘luxury’ and ‘upscale’ for h = 90, plus one case for ‘midscale’), and the SARIMA model in two cases (‘upper upscale’ for h = 1, plus one case for ‘all’). The Seasonal Naïve model, the TBATS model, and the Seasonal NNAR model with an external regressor never achieve the lowest sum of ranks. The fact that the simple Seasonal Naïve benchmark is outperformed by at least one competing forecast model or forecast combination technique on each occasion underlines the general viability of using more complex forecasting approaches. One reason why the TBATS model does not perform so well could be the fact that one of two seasonal patterns in the data, the weekly seasonal pattern, is not particularly pronounced (see Section 2). Including the seasonal naïve forecast of the inflation-adjusted ADR as an external regressor in the Seasonal NNAR model does not seem to have a positive effect on forecast accuracy either, at least not directly. However, the information included in these forecast models should not be discarded. As all F-test statistics of the forecast encompassing tests are statistically significantly different from zero (at least at the 10% level and in many cases even at the stricter 0.1% level), all forecast models seem to possess some unique information and, therefore, survive step 1 of the two-step forecast combination procedure suggested by Costantini et al. [26]. Thus, all forecast models should be considered for forecast combination.

Apart from the aforementioned regression-based weights, the relevance of forecast combination materializes in terms of superior forecast accuracy of the remaining forecast combination techniques in 19 of 28 cases. The mean forecast is characterized by the lowest sum of ranks in one case (‘upper upscale’ for h = 30). The time-varying and comparably more robust median forecast achieves the lowest sum of ranks in five cases (‘upper upscale’ for h = 7, ‘upscale’ for h = 1, 7, and 30, plus one case for ‘all’), as does the Bates–Granger weights approach (in ‘upper midscale’, ‘midscale’, and ‘all’). Finally, Bates–Granger ranks, which make Bates–Granger-type weights independent of correlations between forecast errors and can, therefore, be interpreted as a refinement of traditional Bates–Granger weights, achieve the lowest sum of ranks in eight cases (‘upper upscale’ for h = 90 and ‘upper midscale’ for h = 1 and h = 7, with the remaining cases in ‘midscale’, ‘economy’, and ‘all’). Together, combined forecasts based on Bates–Granger weights and Bates–Granger ranks provide the highest level of forecast accuracy in the relative majority of cases (i.e., in 13 of 28).

5. Conclusions

The present study employed daily data made available by the STR SHARE Center over the period from 1 January 2010 to 31 January 2020 for six Viennese hotel classes and their total. The forecast variable of interest was hotel room demand. As forecast models, (1) Seasonal Naïve, (2) ETS, (3) SARIMA, (4) TBATS, (5) Seasonal NNAR, and (6) Seasonal NNAR with an external regressor (seasonal naïve forecast of the inflation-adjusted ADR) were employed. Forecast evaluation was carried out for forecast horizons h = 1, 7, 30, and 90 days ahead based on rolling windows. As forecast combination techniques, (a) mean, (b) median, (c) regression-based weights, (d) Bates–Granger weights, and (e) Bates–Granger ranks were calculated.

In the relative majority of cases (i.e., in 13 of 28), combined forecasts based on Bates–Granger weights and Bates–Granger ranks provided the highest level of forecast accuracy in terms of typical forecast accuracy measures (RMSE, MAE, MAPE, and MASE) and their lowest sum of ranks. The mean and the median forecast performed best in another six cases, thus, making forecast combination a worthwhile endeavor in 19 of 28 cases. However, due to its instability, forecast combination with regression-based weights is not recommended. Concerning single models, the ETS model was able to achieve the lowest sum of ranks in four cases, the Seasonal NNAR model in three cases, and the SARIMA model in two cases. Although the Seasonal Naïve model, the TBATS model, and the Seasonal NNAR model with an external regressor never achieved the lowest sum of ranks, considering the information contained in these models proved worthwhile for forecast combination according to forecast encompassing test results.

One limitation of this study is its temporal and geographical focus. Another limitation is that the data used therein are not freely available and need to be purchased. Furthermore, data at the individual hotel level were not available to the author; such data, however, would have suffered from a lack of representativity. Nonetheless, the suggested forecast models and two-step forecast combination procedure can be applied to other time periods, (city) destinations, and datasets, thus making this research fully replicable. Practitioners in particular (e.g., revenue managers working in smaller (boutique) hotels without access to a professional revenue management system; [13,38]) benefit from the results of this study, as it provides a toolkit that they can apply to their own hotel-level dataset to generate reliable hotel room demand forecasts. If a single hotel did not possess a long enough sample of time-series data, expanding windows could easily be implemented instead of rolling windows.
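
The difference between rolling and expanding windows can be illustrated with a short Python sketch. The generator below only produces index positions for the estimation sample and the target observation; the function name and the small sample size are assumptions made for illustration.

```python
def forecast_origins(n_obs, window, h, expanding=False):
    """Yield (train_start, train_end, target) index triples; train_end is exclusive."""
    end = window
    while end + h - 1 < n_obs:
        start = 0 if expanding else end - window
        yield start, end, end + h - 1
        end += 1  # move the forecast origin one period ahead


# Hypothetical sample of 10 observations, initial window of 5, horizon h = 2
rolling = list(forecast_origins(10, 5, 2))
expanding = list(forecast_origins(10, 5, 2, expanding=True))
print(rolling)
print(expanding)
```

With expanding windows, the estimation sample always starts at the first observation and grows by one period per step, which retains more data for estimation when the available sample is short.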

Once the author gets access to data beyond 31 January 2020, an investigation of predictive performance for the forecast models and forecast combination techniques during the COVID-19 pandemic within adequately designed forecasting scenarios along the lines of Zhang et al. [44] would be of particular interest, as would observing which of the employed forecast models would be the fastest to pick up any directional changes. As this would constitute a forecasting exercise not for normal but rather for turbulent times (i.e., during and with a severe structural break), such an exercise would call for a separate investigation. Another idea for future research could be the inclusion of seasonal naïve forecasts of web-based leading indicators as predictors in addition to the seasonal naïve forecast of the inflation-adjusted ADR, for which, of course, daily data would need to be available. Not only would this approach satisfy the need for this type of predictor to be ex ante [77], but it would also take up a recent recommendation by Hu and Song [91] to combine these two types of tourism (and hotel room) demand predictors. Finally, other multi-step forecast combination procedures based on the Model Confidence Set (MCS; [92]) as suggested by Amendola et al. [93] or Aras [94] could be considered.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Restrictions apply to the availability of these data. Data were obtained from the STR SHARE Center and are available from the author with the permission of the STR SHARE Center.

Acknowledgments

The author would like to express his particular gratitude toward Steve Hood, Senior Vice President of Research at STR and Director at STR SHARE Center for generously providing the data. Moreover, he wants to thank the two anonymous reviewers of this journal, Bozana Zekan, and David Leonard, as well as the participants of the 14th International Conference on Computational and Financial Econometrics (12/2020), of Modul University’s Research Seminar (6/2021), and of the 41st International Symposium on Forecasting (6/2021) for their helpful comments and suggestions for improvement.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A

Table A1.

Forecast evaluation results for the hotel class ‘luxury’. Source: STR SHARE Center, own calculations using R and EViews.

h = 1 and h = 7: forecast encompassing tests

| Forecast (h = 1) | F-stat | F-prob | Forecast (h = 7) | F-stat | F-prob |
| --- | --- | --- | --- | --- | --- |
| FC_LUXURY_SNAIVE | 16.32009 | 0.0000 | FC_LUXURY_SNAIVE | 12.52591 | 0.0000 |
| FC_LUXURY_ETS_1 | 2.85836 | 0.0156 | FC_LUXURY_ETS_7 | 10.00088 | 0.0000 |
| FC_LUXURY_SARIMA_1 | 10.92253 | 0.0000 | FC_LUXURY_SARIMA_7 | 16.40607 | 0.0000 |
| FC_LUXURY_TBATS_1 | 18.63967 | 0.0000 | FC_LUXURY_TBATS_7 | 8.035354 | 0.0000 |
| FC_LUXURY_NNAR_1 | 19.29124 | 0.0000 | FC_LUXURY_NNAR_7 | 20.33636 | 0.0000 |
| FC_LUXURY_NNARX_1 | 9.485913 | 0.0000 | FC_LUXURY_NNARX_7 | 20.49514 | 0.0000 |

h = 1 and h = 7: forecast accuracy measures

| Forecast (h = 1) | RMSE | MAE | MAPE (%) | MASE | Sum of ranks | Forecast (h = 7) | RMSE | MAE | MAPE (%) | MASE | Sum of ranks |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| FC_LUXURY_SNAIVE | 0.209518 | 0.161318 | 2.15446 | 0.79043 | 44 | FC_LUXURY_SNAIVE | 0.209037 | 0.160055 | 2.138264 | 0.829619 | 33 |
| FC_LUXURY_ETS_1 | 0.087544 | 0.064085 | 0.857725 | 0.314005 | 4 | FC_LUXURY_ETS_7 | 0.170569 | 0.123925 | 1.661811 | 0.642345 | 4 |
| FC_LUXURY_SARIMA_1 | 0.095704 | 0.071188 | 0.952914 | 0.348809 | 20 | FC_LUXURY_SARIMA_7 | 0.207878 | 0.157969 | 2.113442 | 0.818806 | 29 |
| FC_LUXURY_TBATS_1 | 0.191881 | 0.158181 | 2.124453 | 0.775059 | 40 | FC_LUXURY_TBATS_7 | 0.207064 | 0.16589 | 2.225319 | 0.859863 | 34 |
| FC_LUXURY_NNAR_1 | 0.109821 | 0.079734 | 1.062345 | 0.390682 | 33 | FC_LUXURY_NNAR_7 | 0.191916 | 0.146502 | 1.947688 | 0.759369 | 24 |
| FC_LUXURY_NNARX_1 | 0.108945 | 0.087597 | 1.17971 | 0.42921 | 35 | FC_LUXURY_NNARX_7 | 0.229023 | 0.172272 | 2.29216 | 0.892943 | 43 |
| Mean forecast | 0.098279 | 0.077238 | 1.031541 | 0.378453 | 24 | Mean forecast | 0.173872 | 0.131478 | 1.752108 | 0.681494 | 8 |
| Median forecast | 0.089816 | 0.06866 | 0.917676 | 0.336422 | 10 | Median forecast | 0.179482 | 0.13769 | 1.833744 | 0.713693 | 19 |
| Regression-based weights | 0.107467 | 0.079449 | 1.060927 | 0.389286 | 28 | Regression-based weights | 0.234508 | 0.171612 | 2.284869 | 0.889522 | 41 |
| Bates–Granger weights | 0.089893 | 0.069103 | 0.922908 | 0.338592 | 16 | Bates–Granger weights | 0.176806 | 0.133044 | 1.772162 | 0.689612 | 12 |
| Bates–Granger ranks | 0.089622 | 0.068704 | 0.917177 | 0.336637 | 10 | Bates–Granger ranks | 0.181203 | 0.135121 | 1.798803 | 0.700377 | 17 |

h = 30 and h = 90: forecast encompassing tests

| Forecast (h = 30) | F-stat | F-prob | Forecast (h = 90) | F-stat | F-prob |
| --- | --- | --- | --- | --- | --- |
| FC_LUXURY_SNAIVE | 8.055472 | 0.0000 | FC_LUXURY_SNAIVE | 17.73284 | 0.0000 |
| FC_LUXURY_ETS_30 | 17.69353 | 0.0000 | FC_LUXURY_ETS_90 | 7.065177 | 0.0000 |
| FC_LUXURY_SARIMA_30 | 55.00696 | 0.0000 | FC_LUXURY_SARIMA_90 | 43.44456 | 0.0000 |
| FC_LUXURY_TBATS_30 | 2.085499 | 0.0679 | FC_LUXURY_TBATS_90 | 25.83146 | 0.0000 |
| FC_LUXURY_NNAR_30 | 23.52884 | 0.0000 | FC_LUXURY_NNAR_90 | 8.878909 | 0.0000 |
| FC_LUXURY_NNARX_30 | 16.90916 | 0.0000 | FC_LUXURY_NNARX_90 | 25.27719 | 0.0000 |

h = 30 and h = 90: forecast accuracy measures

| Forecast (h = 30) | RMSE | MAE | MAPE (%) | MASE | Sum of ranks | Forecast (h = 90) | RMSE | MAE | MAPE (%) | MASE | Sum of ranks |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| FC_LUXURY_SNAIVE | 0.213606 | 0.163953 | 2.18938 | 0.95581 | 28 | FC_LUXURY_SNAIVE | 0.231818 | 0.180371 | 2.409105 | 1.450767 | 32 |
| FC_LUXURY_ETS_30 | 0.185534 | 0.141494 | 1.895716 | 0.824879 | 4 | FC_LUXURY_ETS_90 | 0.204164 | 0.155037 | 2.067238 | 1.247 | 21 |
| FC_LUXURY_SARIMA_30 | 0.335021 | 0.302819 | 4.019495 | 1.765369 | 44 | FC_LUXURY_SARIMA_90 | 0.468366 | 0.438595 | 5.809883 | 3.527725 | 44 |
| FC_LUXURY_TBATS_30 | 0.215911 | 0.171995 | 2.302796 | 1.002693 | 32 | FC_LUXURY_TBATS_90 | 0.236342 | 0.191731 | 2.562032 | 1.542139 | 36 |
| FC_LUXURY_NNAR_30 | 0.201226 | 0.153374 | 2.036935 | 0.894137 | 12 | FC_LUXURY_NNAR_90 | 0.17141 | 0.131833 | 1.764189 | 1.060365 | 4 |
| FC_LUXURY_NNARX_30 | 0.232117 | 0.188084 | 2.492954 | 1.096489 | 40 | FC_LUXURY_NNARX_90 | 0.203281 | 0.166045 | 2.214327 | 1.33554 | 23 |
| Mean forecast | 0.196003 | 0.160388 | 2.130115 | 0.935027 | 22 | Mean forecast | 0.213697 | 0.178811 | 2.371894 | 1.43822 | 28 |
| Median forecast | 0.194566 | 0.157631 | 2.095555 | 0.918954 | 18 | Median forecast | 0.190474 | 0.154669 | 2.058517 | 1.24404 | 16 |
| Regression-based weights | 0.229081 | 0.183769 | 2.437347 | 1.071333 | 36 | Regression-based weights | 0.399399 | 0.374997 | 5.017681 | 3.016191 | 40 |
| Bates–Granger weights | 0.196449 | 0.157564 | 2.092582 | 0.918564 | 17 | Bates–Granger weights | 0.180765 | 0.143159 | 1.904875 | 1.151462 | 8 |
| Bates–Granger ranks | 0.192562 | 0.153455 | 2.03924 | 0.894609 | 11 | Bates–Granger ranks | 0.186505 | 0.149609 | 1.988532 | 1.203341 | 12 |

Table A2.

Forecast evaluation results for the hotel class ‘upper upscale’. Source: STR SHARE Center, own calculations using R and EViews.

h = 1 and h = 7: forecast encompassing tests

| Forecast (h = 1) | F-stat | F-prob | Forecast (h = 7) | F-stat | F-prob |
| --- | --- | --- | --- | --- | --- |
| FC_UPPER_UPSCALE_SNAIVE | 12.59821 | 0.0000 | FC_UPPER_UPSCALE_SNAIVE | 3.392179 | 0.0055 |
| FC_UPPER_UPSCALE_ETS_1 | 31.43229 | 0.0000 | FC_UPPER_UPSCALE_ETS_7 | 12.89008 | 0.0000 |
| FC_UPPER_UPSCALE_SARIMA_1 | 14.70312 | 0.0000 | FC_UPPER_UPSCALE_SARIMA_7 | 36.16254 | 0.0000 |
| FC_UPPER_UPSCALE_TBATS_1 | 32.66598 | 0.0000 | FC_UPPER_UPSCALE_TBATS_7 | 11.18837 | 0.0000 |
| FC_UPPER_UPSCALE_NNAR_1 | 42.66397 | 0.0000 | FC_UPPER_UPSCALE_NNAR_7 | 23.78411 | 0.0000 |
| FC_UPPER_UPSCALE_NNARX_1 | 32.5047 | 0.0000 | FC_UPPER_UPSCALE_NNARX_7 | 21.28089 | 0.0000 |

h = 1 and h = 7: forecast accuracy measures

| Forecast (h = 1) | RMSE | MAE | MAPE (%) | MASE | Sum of ranks | Forecast (h = 7) | RMSE | MAE | MAPE (%) | MASE | Sum of ranks |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| FC_UPPER_UPSCALE_SNAIVE | 0.150782 | 0.110127 | 1.355925 | 0.700727 | 40 | FC_UPPER_UPSCALE_SNAIVE | 0.151458 | 0.110214 | 1.357374 | 0.72622 | 35 |
| FC_UPPER_UPSCALE_ETS_1 | 0.127043 | 0.090924 | 1.118678 | 0.57854 | 32 | FC_UPPER_UPSCALE_ETS_7 | 0.129237 | 0.092119 | 1.133693 | 0.606988 | 20 |
| FC_UPPER_UPSCALE_SARIMA_1 | 0.082216 | 0.061995 | 0.761406 | 0.394468 | 4 | FC_UPPER_UPSCALE_SARIMA_7 | 0.147454 | 0.10595 | 1.305618 | 0.698123 | 28 |
| FC_UPPER_UPSCALE_TBATS_1 | 0.169696 | 0.130443 | 1.604181 | 0.829996 | 44 | FC_UPPER_UPSCALE_TBATS_7 | 0.176288 | 0.133954 | 1.647736 | 0.882647 | 41 |
| FC_UPPER_UPSCALE_NNAR_1 | 0.09789 | 0.064829 | 0.798285 | 0.412501 | 13 | FC_UPPER_UPSCALE_NNAR_7 | 0.136968 | 0.094979 | 1.170773 | 0.625834 | 24 |
| FC_UPPER_UPSCALE_NNARX_1 | 0.141889 | 0.100997 | 1.251798 | 0.642634 | 36 | FC_UPPER_UPSCALE_NNARX_7 | 0.167772 | 0.140907 | 1.714852 | 0.928461 | 43 |
| Mean forecast | 0.100858 | 0.069609 | 0.859669 | 0.442915 | 23 | Mean forecast | 0.117539 | 0.08629 | 1.062078 | 0.56858 | 10 |
| Median forecast | 0.103047 | 0.068461 | 0.84682 | 0.435611 | 22 | Median forecast | 0.115785 | 0.083706 | 1.031675 | 0.551554 | 4 |
| Regression-based weights | 0.103007 | 0.069989 | 0.864106 | 0.445333 | 27 | Regression-based weights | 0.15941 | 0.109911 | 1.354395 | 0.724223 | 33 |
| Bates–Granger weights | 0.094567 | 0.064543 | 0.797838 | 0.410681 | 8 | Bates–Granger weights | 0.11867 | 0.086254 | 1.062423 | 0.568343 | 10 |
| Bates–Granger ranks | 0.09646 | 0.06613 | 0.817481 | 0.420779 | 15 | Bates–Granger ranks | 0.119586 | 0.08653 | 1.066684 | 0.570162 | 16 |

h = 30 and h = 90: forecast encompassing tests

| Forecast (h = 30) | F-stat | F-prob | Forecast (h = 90) | F-stat | F-prob |
| --- | --- | --- | --- | --- | --- |
| FC_UPPER_UPSCALE_SNAIVE | 3.355434 | 0.0060 | FC_UPPER_UPSCALE_SNAIVE | 8.339223 | 0.0000 |
| FC_UPPER_UPSCALE_ETS_30 | 13.10286 | 0.0000 | FC_UPPER_UPSCALE_ETS_90 | 12.23476 | 0.0000 |
| FC_UPPER_UPSCALE_SARIMA_30 | 48.48259 | 0.0000 | FC_UPPER_UPSCALE_SARIMA_90 | 40.91501 | 0.0000 |
| FC_UPPER_UPSCALE_TBATS_30 | 4.104489 | 0.0014 | FC_UPPER_UPSCALE_TBATS_90 | 4.07548 | 0.0016 |
| FC_UPPER_UPSCALE_NNAR_30 | 28.218 | 0.0000 | FC_UPPER_UPSCALE_NNAR_90 | 9.439183 | 0.0000 |
| FC_UPPER_UPSCALE_NNARX_30 | 26.92072 | 0.0000 | FC_UPPER_UPSCALE_NNARX_90 | 5.191002 | 0.0002 |

h = 30 and h = 90: forecast accuracy measures

| Forecast (h = 30) | RMSE | MAE | MAPE (%) | MASE | Sum of ranks | Forecast (h = 90) | RMSE | MAE | MAPE (%) | MASE | Sum of ranks |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| FC_UPPER_UPSCALE_SNAIVE | 0.148575 | 0.107054 | 1.319481 | 0.74533 | 31 | FC_UPPER_UPSCALE_SNAIVE | 0.157708 | 0.11205 | 1.385445 | 1.045935 | 33 |
| FC_UPPER_UPSCALE_ETS_30 | 0.136023 | 0.097876 | 1.204554 | 0.681431 | 20 | FC_UPPER_UPSCALE_ETS_90 | 0.156272 | 0.11275 | 1.391787 | 1.052469 | 35 |
| FC_UPPER_UPSCALE_SARIMA_30 | 0.175671 | 0.139149 | 1.709719 | 0.968782 | 43 | FC_UPPER_UPSCALE_SARIMA_90 | 0.193707 | 0.166604 | 2.041383 | 1.555172 | 44 |
| FC_UPPER_UPSCALE_TBATS_30 | 0.180117 | 0.138577 | 1.703138 | 0.964799 | 41 | FC_UPPER_UPSCALE_TBATS_90 | 0.19031 | 0.143063 | 1.764517 | 1.335427 | 40 |
| FC_UPPER_UPSCALE_NNAR_30 | 0.14954 | 0.102743 | 1.272274 | 0.715316 | 26 | FC_UPPER_UPSCALE_NNAR_90 | 0.145923 | 0.097087 | 1.211011 | 0.906263 | 24 |
| FC_UPPER_UPSCALE_NNARX_30 | 0.144508 | 0.103856 | 1.278423 | 0.723065 | 27 | FC_UPPER_UPSCALE_NNARX_90 | 0.129768 | 0.09578 | 1.182614 | 0.894062 | 20 |
| Mean forecast | 0.121522 | 0.086009 | 1.061401 | 0.598811 | 4 | Mean forecast | 0.125623 | 0.086778 | 1.074851 | 0.810033 | 15 |
| Median forecast | 0.123368 | 0.086304 | 1.066479 | 0.600865 | 8 | Median forecast | 0.127363 | 0.085061 | 1.056062 | 0.794005 | 13 |
| Regression-based weights | 0.16142 | 0.116878 | 1.434824 | 0.813727 | 36 | Regression-based weights | 0.148471 | 0.10298 | 1.282483 | 0.961271 | 28 |
| Bates–Granger weights | 0.123404 | 0.086468 | 1.067652 | 0.602007 | 12 | Bates–Granger weights | 0.124862 | 0.082487 | 1.024688 | 0.769978 | 8 |
| Bates–Granger ranks | 0.125265 | 0.087882 | 1.084757 | 0.611851 | 16 | Bates–Granger ranks | 0.124484 | 0.081786 | 1.015742 | 0.763435 | 4 |

Table A3.

Forecast evaluation results for the hotel class ‘upscale’. Source: STR SHARE Center, own calculations using R and EViews.

h = 1 and h = 7: forecast encompassing tests

| Forecast (h = 1) | F-stat | F-prob | Forecast (h = 7) | F-stat | F-prob |
| --- | --- | --- | --- | --- | --- |
| FC_UPSCALE_SNAIVE | 10.68013 | 0.0000 | FC_UPSCALE_SNAIVE | 3.763008 | 0.0026 |
| FC_UPSCALE_ETS_1 | 18.0499 | 0.0000 | FC_UPSCALE_ETS_7 | 16.43747 | 0.0000 |
| FC_UPSCALE_SARIMA_1 | 13.25403 | 0.0000 | FC_UPSCALE_SARIMA_7 | 23.51177 | 0.0000 |
| FC_UPSCALE_TBATS_1 | 20.36749 | 0.0000 | FC_UPSCALE_TBATS_7 | 3.979467 | 0.0017 |
| FC_UPSCALE_NNAR_1 | 31.86091 | 0.0000 | FC_UPSCALE_NNAR_7 | 17.49965 | 0.0000 |
| FC_UPSCALE_NNARX_1 | 19.63853 | 0.0000 | FC_UPSCALE_NNARX_7 | 25.43725 | 0.0000 |

h = 1 and h = 7: forecast accuracy measures

| Forecast (h = 1) | RMSE | MAE | MAPE (%) | MASE | Sum of ranks | Forecast (h = 7) | RMSE | MAE | MAPE (%) | MASE | Sum of ranks |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| FC_UPSCALE_SNAIVE | 0.147264 | 0.105814 | 1.25046 | 0.635558 | 40 | FC_UPSCALE_SNAIVE | 0.146775 | 0.104603 | 1.236859 | 0.648801 | 30 |
| FC_UPSCALE_ETS_1 | 0.12122 | 0.089742 | 1.059891 | 0.539023 | 36 | FC_UPSCALE_ETS_7 | 0.12351 | 0.090806 | 1.072854 | 0.563225 | 14 |
| FC_UPSCALE_SARIMA_1 | 0.086949 | 0.065638 | 0.773686 | 0.394246 | 16 | FC_UPSCALE_SARIMA_7 | 0.140314 | 0.107549 | 1.269103 | 0.667074 | 31 |
| FC_UPSCALE_TBATS_1 | 0.151155 | 0.117417 | 1.38433 | 0.70525 | 44 | FC_UPSCALE_TBATS_7 | 0.163656 | 0.123155 | 1.452541 | 0.76387 | 40 |
| FC_UPSCALE_NNAR_1 | 0.09542 | 0.068672 | 0.810531 | 0.412469 | 28 | FC_UPSCALE_NNAR_7 | 0.131214 | 0.098472 | 1.162606 | 0.610774 | 24 |
| FC_UPSCALE_NNARX_1 | 0.108023 | 0.074073 | 0.881948 | 0.44491 | 32 | FC_UPSCALE_NNARX_7 | 0.173428 | 0.147058 | 1.722723 | 0.912129 | 44 |
| Mean forecast | 0.08931 | 0.068087 | 0.80515 | 0.408955 | 23 | Mean forecast | 0.116477 | 0.09382 | 1.105795 | 0.58192 | 19 |
| Median forecast | 0.085773 | 0.062397 | 0.739612 | 0.374779 | 6 | Median forecast | 0.112698 | 0.089774 | 1.058664 | 0.556824 | 4 |
| Regression-based weights | 0.091553 | 0.066134 | 0.781449 | 0.397225 | 21 | Regression-based weights | 0.146156 | 0.119663 | 1.407305 | 0.742211 | 35 |
| Bates–Granger weights | 0.085494 | 0.063218 | 0.747981 | 0.37971 | 11 | Bates–Granger weights | 0.11554 | 0.092599 | 1.092029 | 0.574346 | 15 |
| Bates–Granger ranks | 0.084703 | 0.063118 | 0.746597 | 0.37911 | 7 | Bates–Granger ranks | 0.113999 | 0.090585 | 1.069051 | 0.561855 | 8 |

h = 30 and h = 90: forecast encompassing tests

| Forecast (h = 30) | F-stat | F-prob | Forecast (h = 90) | F-stat | F-prob |
| --- | --- | --- | --- | --- | --- |
| FC_UPSCALE_SNAIVE | 7.713762 | 0.0000 | FC_UPSCALE_SNAIVE | 8.92849 | 0.0000 |
| FC_UPSCALE_ETS_30 | 11.33217 | 0.0000 | FC_UPSCALE_ETS_90 | 10.60228 | 0.0000 |
| FC_UPSCALE_SARIMA_30 | 45.18074 | 0.0000 | FC_UPSCALE_SARIMA_90 | 38.6814 | 0.0000 |
| FC_UPSCALE_TBATS_30 | 4.37342 | 0.0008 | FC_UPSCALE_TBATS_90 | 5.635154 | 0.0001 |
| FC_UPSCALE_NNAR_30 | 23.04718 | 0.0000 | FC_UPSCALE_NNAR_90 | 7.991132 | 0.0000 |
| FC_UPSCALE_NNARX_30 | 16.66686 | 0.0000 | FC_UPSCALE_NNARX_90 | 4.471509 | 0.0007 |

h = 30 and h = 90: forecast accuracy measures

| Forecast (h = 30) | RMSE | MAE | MAPE (%) | MASE | Sum of ranks | Forecast (h = 90) | RMSE | MAE | MAPE (%) | MASE | Sum of ranks |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| FC_UPSCALE_SNAIVE | 0.143986 | 0.102046 | 1.207862 | 0.697688 | 28 | FC_UPSCALE_SNAIVE | 0.152244 | 0.10743 | 1.275666 | 1.019928 | 26 |
| FC_UPSCALE_ETS_30 | 0.130202 | 0.094587 | 1.117744 | 0.646691 | 18 | FC_UPSCALE_ETS_90 | 0.14967 | 0.109134 | 1.291643 | 1.036105 | 28 |
| FC_UPSCALE_SARIMA_30 | 0.195951 | 0.171029 | 2.005473 | 1.169325 | 44 | FC_UPSCALE_SARIMA_90 | 0.249733 | 0.226964 | 2.657518 | 2.154769 | 40 |
| FC_UPSCALE_TBATS_30 | 0.16709 | 0.125448 | 1.479581 | 0.857688 | 40 | FC_UPSCALE_TBATS_90 | 0.179385 | 0.132474 | 1.564942 | 1.257692 | 36 |
| FC_UPSCALE_NNAR_30 | 0.127569 | 0.095069 | 1.123633 | 0.649987 | 20 | FC_UPSCALE_NNAR_90 | 0.123605 | 0.089921 | 1.070174 | 0.853699 | 7 |
| FC_UPSCALE_NNARX_30 | 0.148088 | 0.123284 | 1.447415 | 0.842893 | 35 | FC_UPSCALE_NNARX_90 | 0.131224 | 0.111262 | 1.311484 | 1.056308 | 30 |
| Mean forecast | 0.118768 | 0.098832 | 1.163905 | 0.675714 | 22 | Mean forecast | 0.127693 | 0.107207 | 1.264759 | 1.017811 | 20 |
| Median forecast | 0.113171 | 0.091585 | 1.079986 | 0.626167 | 4 | Median forecast | 0.118367 | 0.094622 | 1.119729 | 0.89833 | 14 |
| Regression-based weights | 0.148492 | 0.118216 | 1.390091 | 0.808243 | 33 | Regression-based weights | 0.343877 | 0.315221 | 3.688137 | 2.992671 | 44 |
| Bates–Granger weights | 0.115741 | 0.094581 | 1.114778 | 0.64665 | 12 | Bates–Granger weights | 0.118989 | 0.093121 | 1.101989 | 0.88408 | 9 |
| Bates–Granger ranks | 0.114364 | 0.091986 | 1.084922 | 0.628908 | 8 | Bates–Granger ranks | 0.118142 | 0.09315 | 1.102108 | 0.884355 | 10 |

Table A4.

Forecast evaluation results for the hotel class ‘upper midscale’. Source: STR SHARE Center, own calculations using R and EViews.

h = 1 and h = 7: forecast encompassing tests

| Forecast (h = 1) | F-stat | F-prob | Forecast (h = 7) | F-stat | F-prob |
| --- | --- | --- | --- | --- | --- |
| FC_UPPER_MIDSCALE_SNAIVE | 11.61764 | 0.0000 | FC_UPPER_MIDSCALE_SNAIVE | 4.157892 | 0.0012 |
| FC_UPPER_MIDSCALE_ETS_1 | 19.69565 | 0.0000 | FC_UPPER_MIDSCALE_ETS_7 | 16.79542 | 0.0000 |
| FC_UPPER_MIDSCALE_SARIMA_1 | 10.70191 | 0.0000 | FC_UPPER_MIDSCALE_SARIMA_7 | 7.740781 | 0.0000 |
| FC_UPPER_MIDSCALE_TBATS_1 | 23.99187 | 0.0000 | FC_UPPER_MIDSCALE_TBATS_7 | 8.468066 | 0.0000 |
| FC_UPPER_MIDSCALE_NNAR_1 | 36.74004 | 0.0000 | FC_UPPER_MIDSCALE_NNAR_7 | 49.7005 | 0.0000 |
| FC_UPPER_MIDSCALE_NNARX_1 | 30.11163 | 0.0000 | FC_UPPER_MIDSCALE_NNARX_7 | 44.3505 | 0.0000 |

h = 1 and h = 7: forecast accuracy measures

| Forecast (h = 1) | RMSE | MAE | MAPE (%) | MASE | Sum of ranks | Forecast (h = 7) | RMSE | MAE | MAPE (%) | MASE | Sum of ranks |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| FC_UPPER_MIDSCALE_SNAIVE | 0.154413 | 0.111311 | 1.270464 | 0.762263 | 40 | FC_UPPER_MIDSCALE_SNAIVE | 0.153571 | 0.109713 | 1.252948 | 0.732128 | 32 |
| FC_UPPER_MIDSCALE_ETS_1 | 0.130171 | 0.094177 | 1.072478 | 0.644929 | 32 | FC_UPPER_MIDSCALE_ETS_7 | 0.132346 | 0.095101 | 1.083157 | 0.63462 | 24 |
| FC_UPPER_MIDSCALE_SARIMA_1 | 0.120813 | 0.090894 | 1.034914 | 0.622447 | 28 | FC_UPPER_MIDSCALE_SARIMA_7 | 0.122774 | 0.093009 | 1.059256 | 0.62066 | 20 |
| FC_UPPER_MIDSCALE_TBATS_1 | 0.185388 | 0.138964 | 1.582794 | 0.951632 | 44 | FC_UPPER_MIDSCALE_TBATS_7 | 0.197467 | 0.151017 | 1.719871 | 1.007754 | 37 |
| FC_UPPER_MIDSCALE_NNAR_1 | 0.112159 | 0.073619 | 0.839085 | 0.504146 | 15 | FC_UPPER_MIDSCALE_NNAR_7 | 0.147702 | 0.100869 | 1.151594 | 0.673111 | 28 |
| FC_UPPER_MIDSCALE_NNARX_1 | 0.143351 | 0.097083 | 1.116488 | 0.664829 | 36 | FC_UPPER_MIDSCALE_NNARX_7 | 0.196358 | 0.163066 | 1.842401 | 1.088159 | 39 |
| Mean forecast | 0.105621 | 0.076692 | 0.876682 | 0.525191 | 21 | Mean forecast | 0.11977 | 0.091278 | 1.03999 | 0.609109 | 15 |
| Median forecast | 0.107828 | 0.07443 | 0.852308 | 0.5097 | 16 | Median forecast | 0.121131 | 0.090112 | 1.029058 | 0.601328 | 13 |
| Regression-based weights | 0.110452 | 0.074948 | 0.854265 | 0.513248 | 20 | Regression-based weights | NA | NA | NA | NA | NA |
| Bates–Granger weights | 0.104599 | 0.071789 | 0.821863 | 0.491615 | 8 | Bates–Granger weights | 0.118249 | 0.088597 | 1.010089 | 0.591218 | 8 |
| Bates–Granger ranks | 0.10049 | 0.070738 | 0.809422 | 0.484417 | 4 | Bates–Granger ranks | 0.113537 | 0.084368 | 0.962971 | 0.562998 | 4 |

| h = 30 | Forecast encompassing tests | h = 90 | Forecast encompassing tests | ||||||||||

| Forecast | F-stat | F-prob | Forecast | F-stat | F-prob | ||||||||

| FC_UPPER_MIDSCALE_SNAIVE | 5.059947 | 0.0002 | FC_UPPER_MIDSCALE_SNAIVE | 7.203316 | 0.0000 | ||||||||

| FC_UPPER_MIDSCALE_ETS_30 | 31.25303 | 0.0000 | FC_UPPER_MIDSCALE_ETS_90 | 20.62793 | 0.0000 | ||||||||

| FC_UPPER_MIDSCALE_SARIMA_30 | 6.553232 | 0.0000 | FC_UPPER_MIDSCALE_SARIMA_90 | 13.77828 | 0.0000 | ||||||||

| FC_UPPER_MIDSCALE_TBATS_30 | 10.31844 | 0.0000 | FC_UPPER_MIDSCALE_TBATS_90 | 6.328832 | 0.0000 | ||||||||

| FC_UPPER_MIDSCALE_NNAR_30 | 45.47215 | 0.0000 | FC_UPPER_MIDSCALE_NNAR_90 | 20.43492 | 0.0000 | ||||||||

| FC_UPPER_MIDSCALE_NNARX_30 | 50.12204 | 0.0000 | FC_UPPER_MIDSCALE_NNARX_90 | 9.165978 | 0.0000 | ||||||||

| Forecast accuracy measures | Forecast accuracy measures | ||||||||||||

| Forecast | RMSE | MAE | MAPE (%) | MASE | Sum of ranks | Forecast | RMSE | MAE | MAPE (%) | MASE | Sum of ranks | ||

| FC_UPPER_MIDSCALE_SNAIVE | 0.150579 | 0.106045 | 1.212837 | 0.72194 | 33 | FC_UPPER_MIDSCALE_SNAIVE | 0.160822 | 0.11237 | 1.288928 | 1.011158 | 28 | ||

| FC_UPPER_MIDSCALE_ETS_30 | 0.142759 | 0.102213 | 1.16413 | 0.695852 | 27 | FC_UPPER_MIDSCALE_ETS_90 | 0.168164 | 0.121242 | 1.383578 | 1.090993 | 32 | ||

| FC_UPPER_MIDSCALE_SARIMA_30 | 0.120818 | 0.089784 | 1.024021 | 0.611237 | 19 | FC_UPPER_MIDSCALE_SARIMA_90 | 0.145529 | 0.10166 | 1.165304 | 0.914784 | 24 | ||

| FC_UPPER_MIDSCALE_TBATS_30 | 0.201919 | 0.15406 | 1.754807 | 1.048819 | 40 | FC_UPPER_MIDSCALE_TBATS_90 | 0.216381 | 0.163326 | 1.863756 | 1.469684 | 43 | ||

| FC_UPPER_MIDSCALE_NNAR_30 | 0.147478 | 0.098983 | 1.131851 | 0.673863 | 25 | FC_UPPER_MIDSCALE_NNAR_90 | 0.140949 | 0.090053 | 1.038815 | 0.810339 | 14 | ||

| FC_UPPER_MIDSCALE_NNARX_30 | 0.14995 | 0.108434 | 1.233429 | 0.738204 | 35 | FC_UPPER_MIDSCALE_NNARX_90 | 0.176822 | 0.154206 | 1.748699 | 1.387618 | 39 | ||

| Mean forecast | 0.116518 | 0.086368 | 0.986083 | 0.587981 | 7 | Mean forecast | 0.1312 | 0.097158 | 1.111862 | 0.874273 | 18 | ||

| Median forecast | 0.12022 | 0.086695 | 0.992224 | 0.590208 | 12 | Median forecast | 0.133106 | 0.096652 | 1.108297 | 0.86972 | 16 | ||

| Regression-based weights | NA | NA | NA | NA | NA | Regression-based weights | 0.220861 | 0.122861 | 1.4209 | 1.105561 | 38 | ||

| Bates–Granger weights | 0.118086 | 0.08563 | 0.977683 | 0.582957 | 5 | Bates–Granger weights | 0.123792 | 0.08717 | 0.999745 | 0.784397 | 4 | ||

| Bates–Granger ranks | 0.12197 | 0.088546 | 1.010438 | 0.602809 | 17 | Bates–Granger ranks | 0.123879 | 0.088538 | 1.014578 | 0.796707 | 8 |
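The "Sum of ranks" column in these tables appears to be the sum, over the four accuracy measures, of each forecast's rank within its panel, with rank 1 going to the smallest error. This reading is inferred from the reported numbers rather than taken from a stated formula. The sketch below is a minimal Python check of that reading against the h = 1 panel of Table A4. The function name `sum_of_ranks` and the dictionary layout are illustrative assumptions, and ties are not handled because none occur in this panel.

```python
# Accuracy measures (RMSE, MAE, MAPE in %, MASE) copied from the
# h = 1 panel of Table A4 (hotel class 'upper midscale').
measures = {
    "FC_UPPER_MIDSCALE_SNAIVE":   (0.154413, 0.111311, 1.270464, 0.762263),
    "FC_UPPER_MIDSCALE_ETS_1":    (0.130171, 0.094177, 1.072478, 0.644929),
    "FC_UPPER_MIDSCALE_SARIMA_1": (0.120813, 0.090894, 1.034914, 0.622447),
    "FC_UPPER_MIDSCALE_TBATS_1":  (0.185388, 0.138964, 1.582794, 0.951632),
    "FC_UPPER_MIDSCALE_NNAR_1":   (0.112159, 0.073619, 0.839085, 0.504146),
    "FC_UPPER_MIDSCALE_NNARX_1":  (0.143351, 0.097083, 1.116488, 0.664829),
    "Mean forecast":              (0.105621, 0.076692, 0.876682, 0.525191),
    "Median forecast":            (0.107828, 0.074430, 0.852308, 0.509700),
    "Regression-based weights":   (0.110452, 0.074948, 0.854265, 0.513248),
    "Bates–Granger weights":      (0.104599, 0.071789, 0.821863, 0.491615),
    "Bates–Granger ranks":        (0.100490, 0.070738, 0.809422, 0.484417),
}


def sum_of_ranks(table):
    """Rank forecasts per measure (1 = smallest error) and sum the ranks.

    Assumes every forecast has a value for every measure and that no two
    forecasts tie on any measure.
    """
    names = list(table)
    totals = dict.fromkeys(names, 0)
    n_measures = len(next(iter(table.values())))
    for j in range(n_measures):
        # Sort forecast names by the j-th measure, smallest error first.
        ordered = sorted(names, key=lambda name: table[name][j])
        for rank, name in enumerate(ordered, start=1):
            totals[name] += rank
    return totals


ranks = sum_of_ranks(measures)
for name, total in sorted(ranks.items(), key=lambda item: item[1]):
    print(f"{name}: {total}")
```

Under this reading, the computed totals match the reported column for this panel, from 4 for Bates–Granger ranks (best on all four measures) up to 44 for TBATS (worst on all four). In panels where one combination is reported as NA, only ten forecasts would be ranked, so the largest possible sum would be 40.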

Table A5.

Forecast evaluation results for the hotel class ‘midscale’. Source: STR SHARE Center, own calculations using R and EViews.

| h = 1 | Forecast encompassing tests | h = 7 | Forecast encompassing tests | ||||||||||

| Forecast | F-stat | F-prob | Forecast | F-stat | F-prob | ||||||||

| FC_MIDSCALE_SNAIVE | 12.47588 | 0.0000 | FC_MIDSCALE_SNAIVE | 6.065493 | 0.0000 | ||||||||

| FC_MIDSCALE_ETS_1 | 12.97135 | 0.0000 | FC_MIDSCALE_ETS_7 | 16.50515 | 0.0000 | ||||||||

| FC_MIDSCALE_SARIMA_1 | 15.6279 | 0.0000 | FC_MIDSCALE_SARIMA_7 | 17.11077 | 0.0000 | ||||||||

| FC_MIDSCALE_TBATS_1 | 16.66577 | 0.0000 | FC_MIDSCALE_TBATS_7 | 10.11534 | 0.0000 | ||||||||

| FC_MIDSCALE_NNAR_1 | 12.59657 | 0.0000 | FC_MIDSCALE_NNAR_7 | 29.50288 | 0.0000 | ||||||||

| FC_MIDSCALE_NNARX_1 | 27.12821 | 0.0000 | FC_MIDSCALE_NNARX_7 | 22.57572 | 0.0000 | ||||||||

| Forecast accuracy measures | Forecast accuracy measures | ||||||||||||

| Forecast | RMSE | MAE | MAPE (%) | MASE | Sum of ranks | Forecast | RMSE | MAE | MAPE (%) | MASE | Sum of ranks | ||

| FC_MIDSCALE_SNAIVE | 0.198359 | 0.149464 | 1.764835 | 0.645697 | 44 | FC_MIDSCALE_SNAIVE | 0.196909 | 0.147358 | 1.740813 | 0.634191 | 32 | ||

| FC_MIDSCALE_ETS_1 | 0.139426 | 0.10471 | 1.237665 | 0.452356 | 31 | FC_MIDSCALE_ETS_7 | 0.14303 | 0.107842 | 1.274764 | 0.464124 | 17 | ||

| FC_MIDSCALE_SARIMA_1 | 0.147828 | 0.109716 | 1.296446 | 0.473982 | 36 | FC_MIDSCALE_SARIMA_7 | 0.147991 | 0.110906 | 1.310721 | 0.477311 | 28 | ||

| FC_MIDSCALE_TBATS_1 | 0.193753 | 0.146462 | 1.730178 | 0.632728 | 40 | FC_MIDSCALE_TBATS_7 | 0.209606 | 0.160089 | 1.889038 | 0.688982 | 36 | ||

| FC_MIDSCALE_NNAR_1 | 0.111587 | 0.078623 | 0.927261 | 0.339658 | 14 | FC_MIDSCALE_NNAR_7 | 0.144692 | 0.109454 | 1.290204 | 0.471062 | 21 | ||

| FC_MIDSCALE_NNARX_1 | 0.145639 | 0.102291 | 1.22408 | 0.441906 | 29 | FC_MIDSCALE_NNARX_7 | 0.25491 | 0.228384 | 2.670795 | 0.982906 | 43 | ||

| Mean forecast | 0.109145 | 0.083609 | 0.989367 | 0.361198 | 18 | Mean forecast | 0.135208 | 0.110499 | 1.300815 | 0.475559 | 22 | ||

| Median forecast | 0.109676 | 0.080362 | 0.952814 | 0.347171 | 16 | Median forecast | 0.132179 | 0.105 | 1.238691 | 0.451893 | 12 | ||

| Regression-based weights | 0.130433 | 0.097348 | 1.147484 | 0.420552 | 24 | Regression-based weights | 0.25954 | 0.202013 | 2.386749 | 0.869412 | 41 | ||

| Bates–Granger weights | 0.099987 | 0.074098 | 0.878583 | 0.32011 | 4 | Bates–Granger weights | 0.128134 | 0.103116 | 1.215175 | 0.443785 | 8 | ||

| Bates–Granger ranks | 0.10295 | 0.07736 | 0.916934 | 0.334202 | 8 | Bates–Granger ranks | 0.127872 | 0.102305 | 1.206033 | 0.440294 | 4 | ||

| h = 30 | Forecast encompassing tests | h = 90 | Forecast encompassing tests | ||||||||||

| Forecast | F-stat | F-prob | Forecast | F-stat | F-prob | ||||||||

| FC_MIDSCALE_SNAIVE | 8.051956 | 0.0000 | FC_MIDSCALE_SNAIVE | 14.28808 | 0.0000 | ||||||||

| FC_MIDSCALE_ETS_30 | 22.73777 | 0.0000 | FC_MIDSCALE_ETS_90 | 27.2534 | 0.0000 | ||||||||

| FC_MIDSCALE_SARIMA_30 | 28.42832 | 0.0000 | FC_MIDSCALE_SARIMA_90 | 40.94966 | 0.0000 | ||||||||

| FC_MIDSCALE_TBATS_30 | 12.20004 | 0.0000 | FC_MIDSCALE_TBATS_90 | 11.54967 | 0.0000 | ||||||||

| FC_MIDSCALE_NNAR_30 | 31.67838 | 0.0000 | FC_MIDSCALE_NNAR_90 | 10.81172 | 0.0000 | ||||||||

| FC_MIDSCALE_NNARX_30 | 17.79038 | 0.0000 | FC_MIDSCALE_NNARX_90 | 19.46184 | 0.0000 | ||||||||

| Forecast accuracy measures | Forecast accuracy measures | ||||||||||||

| Forecast | RMSE | MAE | MAPE (%) | MASE | Sum of ranks | Forecast | RMSE | MAE | MAPE (%) | MASE | Sum of ranks | ||

| FC_MIDSCALE_SNAIVE | 0.188546 | 0.141499 | 1.674244 | 0.657053 | 33 | FC_MIDSCALE_SNAIVE | 0.18858 | 0.13858 | 1.647037 | 0.792361 | 33 | ||

| FC_MIDSCALE_ETS_30 | 0.157724 | 0.120631 | 1.425813 | 0.560152 | 28 | FC_MIDSCALE_ETS_90 | 0.183316 | 0.131655 | 1.563532 | 0.752766 | 29 | ||

| FC_MIDSCALE_SARIMA_30 | 0.146072 | 0.111624 | 1.320218 | 0.518328 | 24 | FC_MIDSCALE_SARIMA_90 | 0.164475 | 0.125866 | 1.493872 | 0.719666 | 24 | ||

| FC_MIDSCALE_TBATS_30 | 0.212878 | 0.161995 | 1.911795 | 0.752227 | 43 | FC_MIDSCALE_TBATS_90 | 0.227322 | 0.173027 | 2.047555 | 0.989319 | 40 | ||

| FC_MIDSCALE_NNAR_30 | 0.135514 | 0.099713 | 1.17782 | 0.463019 | 14 | FC_MIDSCALE_NNAR_90 | 0.119451 | 0.084798 | 1.009821 | 0.484851 | 4 | ||

| FC_MIDSCALE_NNARX_30 | 0.173619 | 0.147572 | 1.730051 | 0.685253 | 35 | FC_MIDSCALE_NNARX_90 | 0.176501 | 0.158707 | 1.863329 | 0.907442 | 34 | ||

| Mean forecast | 0.125688 | 0.10148 | 1.197074 | 0.471224 | 18 | Mean forecast | 0.131658 | 0.103267 | 1.22353 | 0.590451 | 19 | ||

| Median forecast | 0.127389 | 0.100462 | 1.186192 | 0.466497 | 16 | Median forecast | 0.131807 | 0.10135 | 1.202228 | 0.579491 | 17 | ||

| Regression-based weights | 0.230626 | 0.155407 | 1.850398 | 0.721635 | 41 | Regression-based weights | 0.300342 | 0.244455 | 2.89074 | 1.397724 | 44 | ||

| Bates–Granger weights | 0.12383 | 0.099175 | 1.170179 | 0.460521 | 4 | Bates–Granger weights | 0.120008 | 0.092549 | 1.097306 | 0.529169 | 8 | ||

| Bates–Granger ranks | 0.124534 | 0.099202 | 1.170633 | 0.460646 | 8 | Bates–Granger ranks | 0.123028 | 0.094259 | 1.118035 | 0.538946 | 12 |

Table A6.

Forecast evaluation results for the hotel class ‘economy’. Source: STR SHARE Center, own calculations using R and EViews.

| h = 1 | Forecast encompassing tests | h = 7 | Forecast encompassing tests | ||||||||||

| Forecast | F-stat | F-prob | Forecast | F-stat | F-prob | ||||||||

| FC_ECONOMY_SNAIVE | 6.092352 | 0.0000 | FC_ECONOMY_SNAIVE | 2.352535 | 0.0412 | ||||||||

| FC_ECONOMY_ETS_1 | 12.2109 | 0.0000 | FC_ECONOMY_ETS_7 | 12.50384 | 0.0000 | ||||||||

| FC_ECONOMY_SARIMA_1 | 13.72428 | 0.0000 | FC_ECONOMY_SARIMA_7 | 8.127302 | 0.0000 | ||||||||

| FC_ECONOMY_TBATS_1 | 13.75434 | 0.0000 | FC_ECONOMY_TBATS_7 | 6.718792 | 0.0000 | ||||||||

| FC_ECONOMY_NNAR_1 | 27.23227 | 0.0000 | FC_ECONOMY_NNAR_7 | 50.29536 | 0.0000 | ||||||||

| FC_ECONOMY_NNARX_1 | 37.51873 | 0.0000 | FC_ECONOMY_NNARX_7 | 51.63246 | 0.0000 | ||||||||

| Forecast accuracy measures | Forecast accuracy measures | ||||||||||||

| Forecast | RMSE | MAE | MAPE (%) | MASE | Sum of ranks | Forecast | RMSE | MAE | MAPE (%) | MASE | Sum of ranks | ||

| FC_ECONOMY_SNAIVE | 0.176198 | 0.137092 | 1.563729 | 0.887775 | 44 | FC_ECONOMY_SNAIVE | 0.173817 | 0.135209 | 1.542639 | 0.848455 | 29 | ||

| FC_ECONOMY_ETS_1 | 0.119563 | 0.088066 | 1.006098 | 0.570294 | 32 | FC_ECONOMY_ETS_7 | 0.123077 | 0.08995 | 1.027336 | 0.564449 | 4 | ||

| FC_ECONOMY_SARIMA_1 | 0.12982 | 0.093676 | 1.071824 | 0.606623 | 36 | FC_ECONOMY_SARIMA_7 | 0.136969 | 0.099649 | 1.139359 | 0.625311 | 15 | ||

| FC_ECONOMY_TBATS_1 | 0.169487 | 0.127447 | 1.457169 | 0.825316 | 40 | FC_ECONOMY_TBATS_7 | 0.179777 | 0.138302 | 1.578964 | 0.867864 | 36 | ||

| FC_ECONOMY_NNAR_1 | 0.108318 | 0.078243 | 0.890772 | 0.506683 | 21 | FC_ECONOMY_NNAR_7 | 0.172872 | 0.136709 | 1.556156 | 0.857868 | 31 | ||

| FC_ECONOMY_NNARX_1 | 0.110788 | 0.082157 | 0.944479 | 0.532029 | 28 | FC_ECONOMY_NNARX_7 | 0.186704 | 0.15257 | 1.732784 | 0.957398 | 40 | ||

| Mean forecast | 0.098787 | 0.080377 | 0.916509 | 0.520502 | 22 | Mean forecast | 0.127547 | 0.104008 | 1.183706 | 0.652665 | 15 | ||

| Median forecast | 0.093476 | 0.073889 | 0.844286 | 0.478488 | 8 | Median forecast | 0.123531 | 0.099516 | 1.133467 | 0.624477 | 8 | ||

| Regression-based weights | 0.105929 | 0.074291 | 0.848172 | 0.481091 | 14 | Regression-based weights | 1.367922 | 0.869485 | 10.01118 | 5.45614 | 44 | ||

| Bates–Granger weights | 0.094157 | 0.075669 | 0.862742 | 0.490014 | 15 | Bates–Granger weights | 0.129583 | 0.105358 | 1.198625 | 0.661136 | 19 | ||

| Bates–Granger ranks | 0.09154 | 0.073058 | 0.833371 | 0.473106 | 4 | Bates–Granger ranks | 0.133243 | 0.107317 | 1.220427 | 0.673429 | 23 | ||

| h = 30 | Forecast encompassing tests | h = 90 | Forecast encompassing tests | ||||||||||

| Forecast | F-stat | F-prob | Forecast | F-stat | F-prob | ||||||||

| FC_ECONOMY_SNAIVE | 2.961128 | 0.0130 | FC_ECONOMY_SNAIVE | 13.66408 | 0.0000 | ||||||||

| FC_ECONOMY_ETS_30 | 15.34169 | 0.0000 | FC_ECONOMY_ETS_90 | 17.83844 | 0.0000 | ||||||||

| FC_ECONOMY_SARIMA_30 | 8.226793 | 0.0000 | FC_ECONOMY_SARIMA_90 | 20.18907 | 0.0000 | ||||||||

| FC_ECONOMY_TBATS_30 | 12.1509 | 0.0000 | FC_ECONOMY_TBATS_90 | 16.26509 | 0.0000 | ||||||||

| FC_ECONOMY_NNAR_30 | 44.403 | 0.0000 | FC_ECONOMY_NNAR_90 | 26.51577 | 0.0000 | ||||||||

| FC_ECONOMY_NNARX_30 | 37.03156 | 0.0000 | FC_ECONOMY_NNARX_90 | 12.34442 | 0.0000 | ||||||||

| Forecast accuracy measures | Forecast accuracy measures | ||||||||||||

| Forecast | RMSE | MAE | MAPE (%) | MASE | Sum of ranks | Forecast | RMSE | MAE | MAPE (%) | MASE | Sum of ranks | ||

| FC_ECONOMY_SNAIVE | 0.171852 | 0.133959 | 1.528859 | 0.899712 | 32 | FC_ECONOMY_SNAIVE | 0.183235 | 0.143956 | 1.646537 | 1.140363 | 36 | ||

| FC_ECONOMY_ETS_30 | 0.134652 | 0.099565 | 1.13631 | 0.668711 | 15 | FC_ECONOMY_ETS_90 | 0.164148 | 0.12201 | 1.39557 | 0.966515 | 32 | ||

| FC_ECONOMY_SARIMA_30 | 0.136686 | 0.100909 | 1.153947 | 0.677737 | 22 | FC_ECONOMY_SARIMA_90 | 0.160152 | 0.120599 | 1.382939 | 0.955338 | 28 | ||

| FC_ECONOMY_TBATS_30 | 0.187686 | 0.144575 | 1.649421 | 0.971012 | 37 | FC_ECONOMY_TBATS_90 | 0.21483 | 0.161054 | 1.839831 | 1.275807 | 40 | ||

| FC_ECONOMY_NNAR_30 | 0.186306 | 0.152604 | 1.734526 | 1.024938 | 39 | FC_ECONOMY_NNAR_90 | 0.151895 | 0.119437 | 1.369897 | 0.946133 | 24 | ||