Bootstrap Methods for Correcting Bias in WLS Estimators of the First-Order Bifurcating Autoregressive Model

Abstract

1. Introduction

2. The BAR(1) Model and WLS Estimation

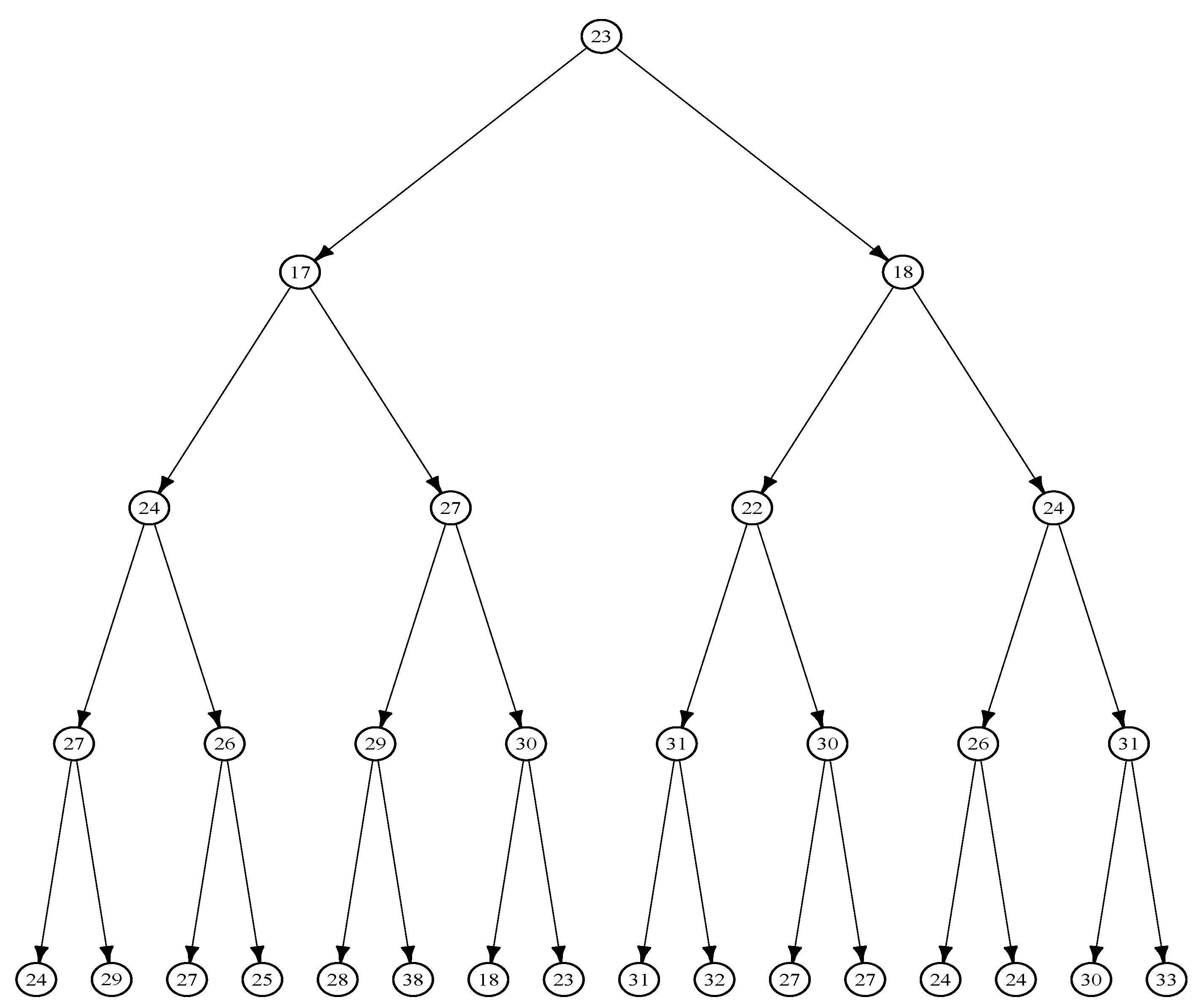

2.1. The BAR(1) Model

2.2. WLS Estimation

3. Bias in the WLS Estimators for the BAR(1) Model

4. Bias-Corrected WLS Estimators for the BAR(1) Model

4.1. Traditional Bootstrap Bias Correction

| Algorithm 1 Single Bootstrap Bias-Corrected WLS Estimation for (SBC) |
|---|
| Input: |
| Observed tree: |
| WLS estimates of the BAR(1) model coefficients: and |
| Centered residuals: for |
| Number of bootstrap resamples: B |
| 1: for each to B do |
| 2: Set , the final observation from an initial binary tree of size , where is the first observation in the original tree |
| 3: for each to do |
| 4: Sample with replacement a pair from the set of pairs |
| 5: Compute |
| 6: end for |
| 7: Construct the bootstrap tree |
| 8: Compute the WLS estimate from |
| 9: end for |
| 10: Estimate the bias of as |
| Output: The single bootstrap bias-corrected WLS estimate of is given by: |
| 11: Determine the optimal by implementing a grid search over a candidate set and evaluate |
| Output: Given optimal , the shrinkage-adjusted estimate is given by: |
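To make the resampling scheme of Algorithm 1 concrete, here is a minimal Python sketch under stated assumptions. Because the WLS weights are not reproduced in this section, `fit_bar1_ols` is a hypothetical stand-in that uses ordinary least squares (all weights equal to 1). The BAR(1) form assumed is the usual X_{2k} = ϕ0 + ϕ1 X_k + e_{2k}, X_{2k+1} = ϕ0 + ϕ1 X_k + e_{2k+1}, with sister errors resampled together as pairs. The burn-in tree size `burn_in` is a hypothetical default, since the size used in step 2 is not shown, and the observed tree is assumed to have an odd number of nodes (e.g., n = 2^k − 1). All function names are illustrative.

```python
import random
from statistics import fmean


def fit_bar1_ols(x):
    """Least-squares fit of X_t = phi0 + phi1 * X_{t//2} + e_t, t = 2..n.

    Stand-in for the WLS estimator: every observation gets weight 1.
    x[0] holds X_1 (the root), so X_t lives at x[t - 1].
    """
    ys = x[1:]                                            # X_2, ..., X_n
    zs = [x[t // 2 - 1] for t in range(2, len(x) + 1)]    # mothers X_{t//2}
    zbar, ybar = fmean(zs), fmean(ys)
    sxy = sum((z - zbar) * (y - ybar) for z, y in zip(zs, ys))
    sxx = sum((z - zbar) ** 2 for z in zs)
    phi1 = sxy / sxx
    return ybar - phi1 * zbar, phi1


def grow_tree(root, n, phi0, phi1, pairs, rng):
    """Grow a BAR(1) tree of odd size n from `root`, resampling sister-error pairs."""
    x = [root]
    for k in range(1, (n - 1) // 2 + 1):
        e_left, e_right = rng.choice(pairs)       # one pair, drawn with replacement
        mother = x[k - 1]                         # X_k
        x.append(phi0 + phi1 * mother + e_left)   # X_{2k}
        x.append(phi0 + phi1 * mother + e_right)  # X_{2k+1}
    return x


def single_bootstrap_bc(x, B=500, burn_in=31, seed=None):
    """Return (estimate, bootstrap bias, bias-corrected estimate) for phi1."""
    rng = random.Random(seed)
    n = len(x)
    phi0, phi1 = fit_bar1_ols(x)
    # Residuals e_t for t = 2..n, centered to mean zero.
    resid = [x[t - 1] - phi0 - phi1 * x[t // 2 - 1] for t in range(2, n + 1)]
    rbar = fmean(resid)
    resid = [e - rbar for e in resid]
    # Sister pairs (e_{2k}, e_{2k+1}) stay together to keep their correlation.
    pairs = [(resid[i], resid[i + 1]) for i in range(0, n - 1, 2)]
    boot = []
    for _ in range(B):
        # Start value: last node of a burn-in tree grown from the observed root.
        start = grow_tree(x[0], burn_in, phi0, phi1, pairs, rng)[-1]
        xb = grow_tree(start, n, phi0, phi1, pairs, rng)
        boot.append(fit_bar1_ols(xb)[1])
    bias = fmean(boot) - phi1
    return phi1, bias, phi1 - bias
```

Swapping in the actual WLS estimator only requires replacing `fit_bar1_ols`. The corrected value returned is ϕ̂1 minus the estimated bias, which equals 2ϕ̂1 minus the mean of the bootstrap estimates.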

| Algorithm 2 Fast Double Bootstrap Bias-Corrected WLS Estimation for (FDBC) |
|---|
| Input: |
| Observed tree: |
| WLS estimates of the BAR(1) model coefficients: and |
| Centered residuals: , for |
| Number of phase 1 bootstrap resamples: |
| Number of phase 2 bootstrap resamples: |
| 1: for each to do |
| 2: Set , where is the last observation in an initial binary tree of size , with as the first observation in |
| 3: for each to do |
| 4: Sample with replacement a pair from the set |
| 5: Compute: |
| 6: end for |
| 7: Construct the bootstrap tree |
| 8: Compute the first-phase bootstrap WLS estimates and from |
| 9: Obtain the first-phase bootstrap residuals: |
| 10: Compute centered bootstrap residuals: |
| 11: for each to do |
| 12: Apply steps 2–6 to using , , and the centered residuals |
| 13: Construct the second-phase bootstrap tree |
| 14: Compute the second-phase bootstrap WLS estimate from |
| 15: end for |
| 16: end for |
| 17: Estimate the single bootstrap bias of : |
| 18: Compute the double bootstrap bias adjustment factor: |
| Output: Fast double bootstrap bias-corrected WLS estimate for : |
| 19: Determine the optimal by implementing a grid search over a candidate set and evaluate |
| Output: Given optimal , the shrinkage-adjusted estimate is given by: |
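Steps 17 and 18 combine the two phases of bootstrap estimates, but the exact adjustment factor is not shown above. As a hedged illustration, the sketch below uses the generic iterated (double) bootstrap bias correction instead: the single-corrected estimate 2ϕ̂ − m1 has its own bias estimated one level down as (2m1 − m2) − ϕ̂, where m1 and m2 are the means of the phase-1 and phase-2 estimates, which yields 3ϕ̂ − 3m1 + m2. This may differ from the paper's adjustment factor. The function name is hypothetical, and it accepts any number of phase-2 estimates per phase-1 tree, including a single one.

```python
from statistics import fmean


def double_bootstrap_bc(est, first, second):
    """Iterated (double) bootstrap bias correction from precomputed estimates.

    est    : estimate from the observed tree
    first  : phase-1 estimates, one per phase-1 bootstrap tree
    second : second[b] lists the phase-2 estimates from trees resampled
             off phase-1 tree b (a single estimate per tree is allowed)
    Returns (single-bootstrap bias, double-bootstrap corrected estimate).
    """
    if len(first) != len(second):
        raise ValueError("need one list of phase-2 estimates per phase-1 tree")
    m1 = fmean(first)                      # mean of phase-1 estimates
    m2 = fmean(fmean(s) for s in second)   # average of per-tree phase-2 means
    bias1 = m1 - est                       # single-bootstrap bias estimate
    # The single correction 2*est - m1 has estimated bias (2*m1 - m2) - est;
    # subtracting it gives 3*est - 3*m1 + m2.
    corrected = 3.0 * est - 3.0 * m1 + m2
    return bias1, corrected
```

As a sanity check, if the bias is a constant c at every level (phase-1 estimates equal ϕ̂ + c and phase-2 estimates equal ϕ̂ + 2c), the formula returns ϕ̂ − c and so removes the bias exactly.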

4.2. Shrinkage-Based Bias Correction

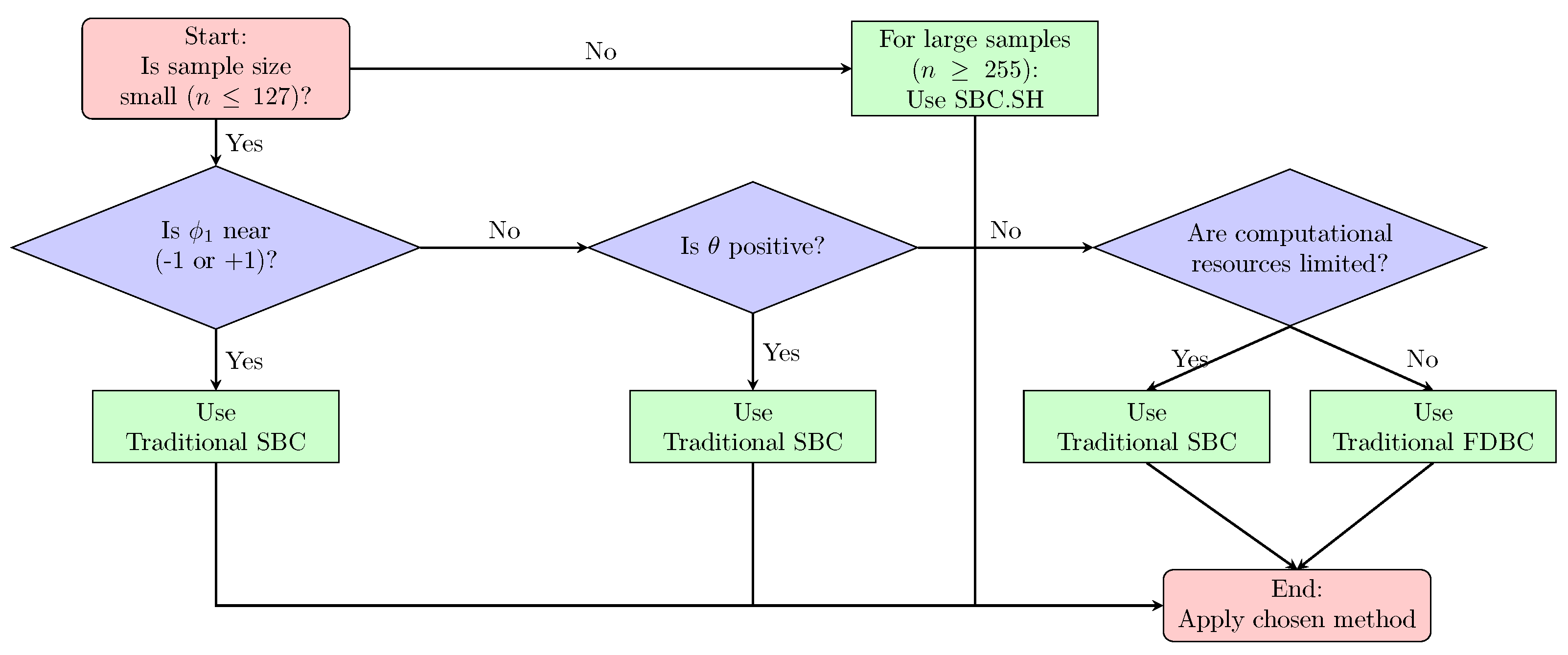
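The shrinkage form and the selection criterion in steps 11 and 19 of the algorithms are not reproduced here. The sketch below assumes the shrinkage-adjusted estimate removes only a fraction λ of the bootstrap bias, ϕ̂1 − λ·bias, with λ in [0, 1]. The argument `criterion` is a hypothetical user-supplied function of λ to be minimized, standing in for whatever criterion the paper evaluates; the names `shrinkage_estimate`, `grid_search_lambda` and `GRID` are illustrative.

```python
def shrinkage_estimate(est, bias, lam):
    """Remove a fraction lam of the estimated bias (assumed shrinkage form)."""
    return est - lam * bias


def grid_search_lambda(est, bias, candidates, criterion):
    """Pick lam from `candidates` minimizing the hypothetical criterion(lam).

    Returns (best_lam, shrinkage-adjusted estimate at best_lam).
    """
    best_lam = min(candidates, key=criterion)
    return best_lam, shrinkage_estimate(est, bias, best_lam)


# Candidate grid 0.0, 0.1, ..., 1.0: the endpoints recover the uncorrected
# estimate (lam = 0) and the full bootstrap correction (lam = 1).
GRID = [i / 10 for i in range(11)]
```

The grid endpoints make the trade-off explicit: λ = 0 keeps the WLS estimate unchanged, while λ = 1 applies the full traditional bootstrap correction, so intermediate values guard against over-correction.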

5. Empirical Results

5.1. Simulation Setup

5.2. Simulation Results

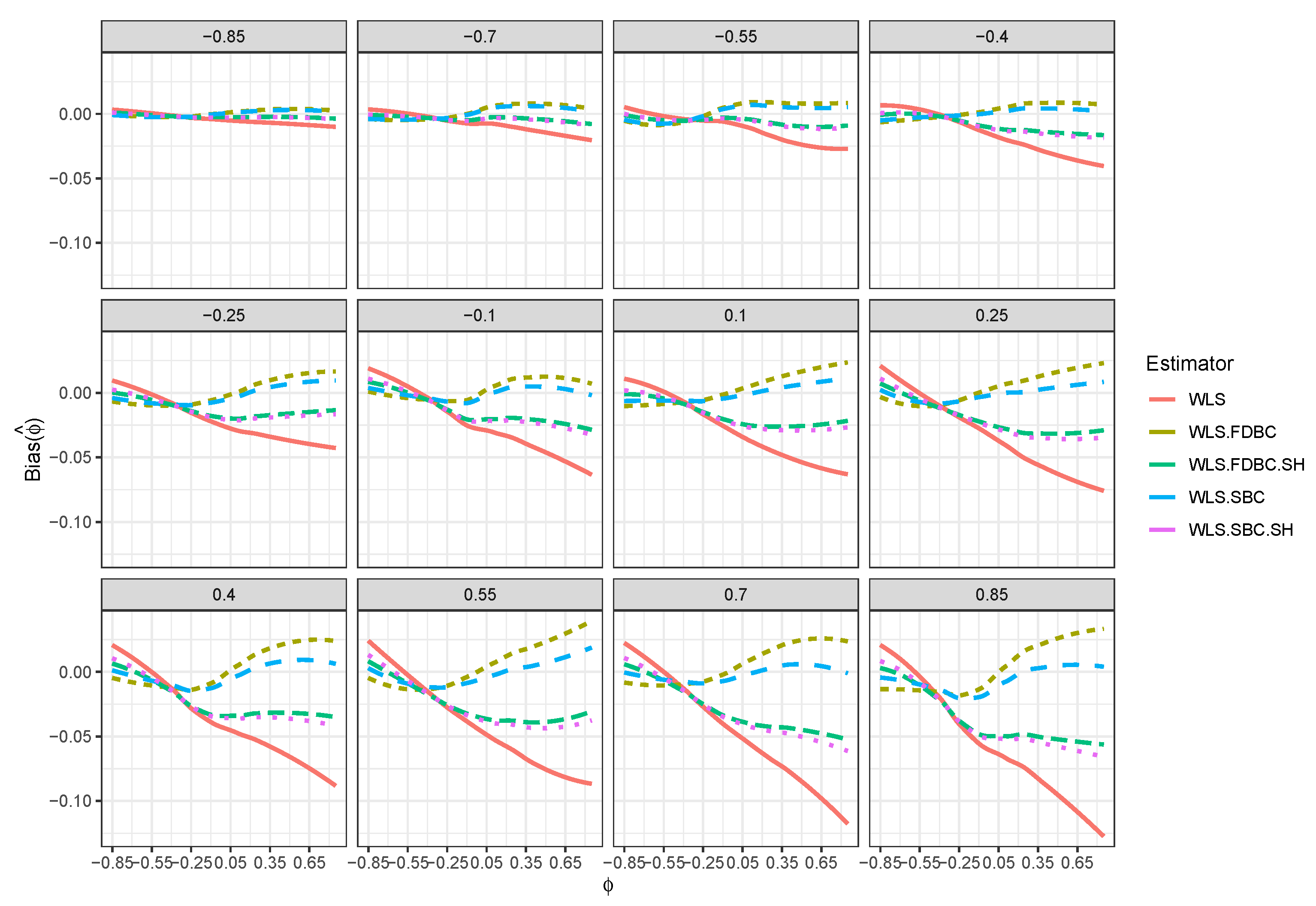

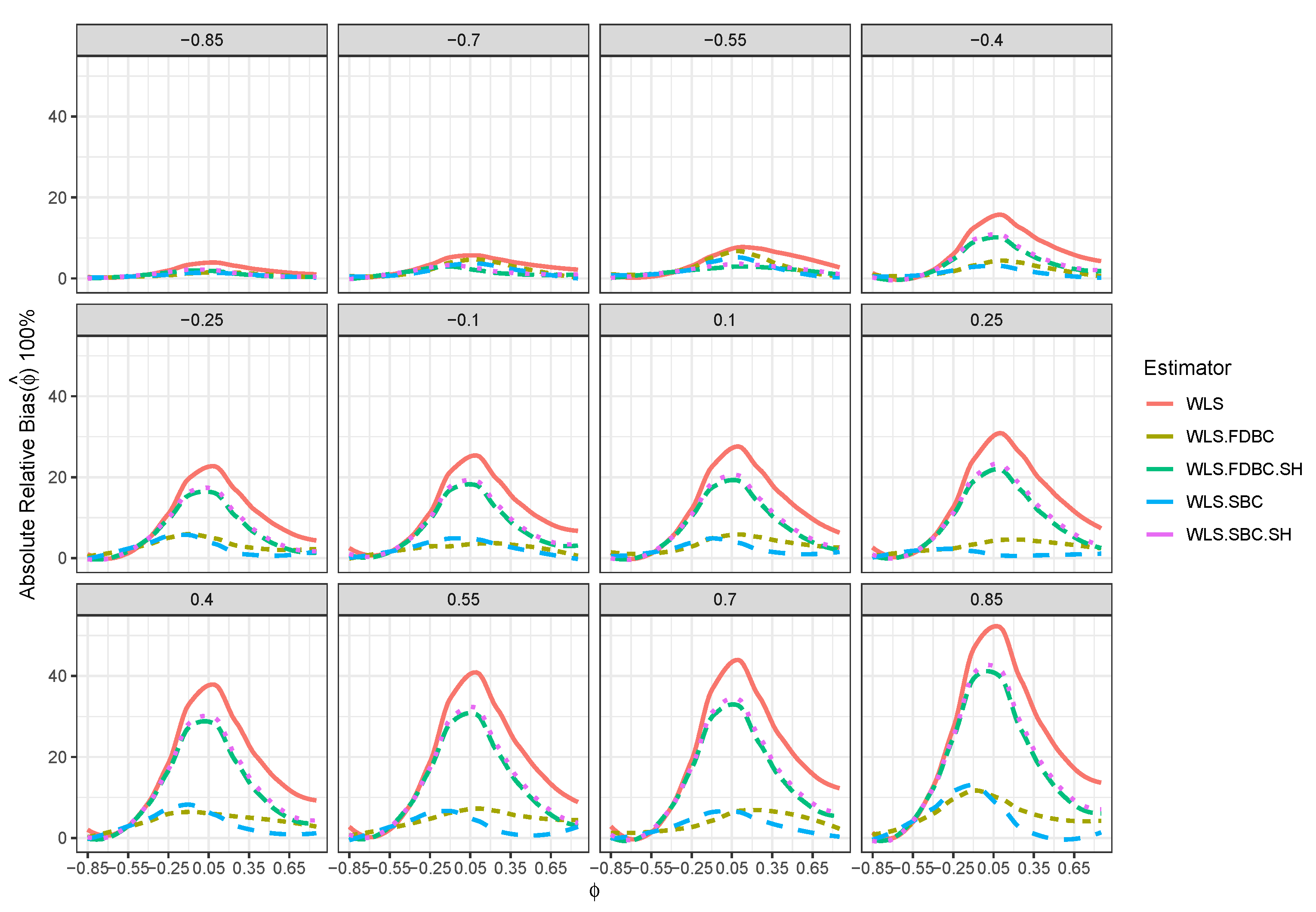

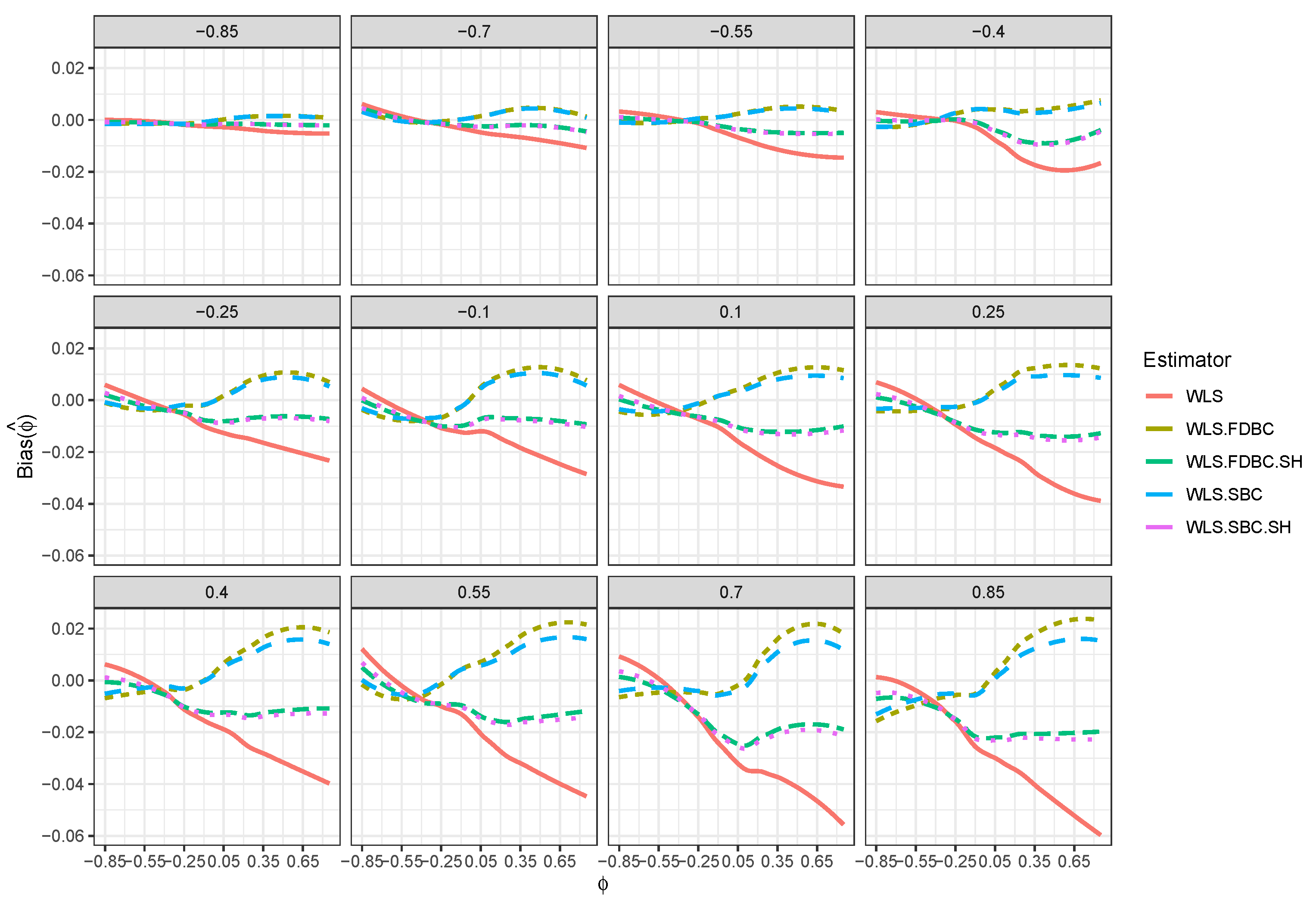

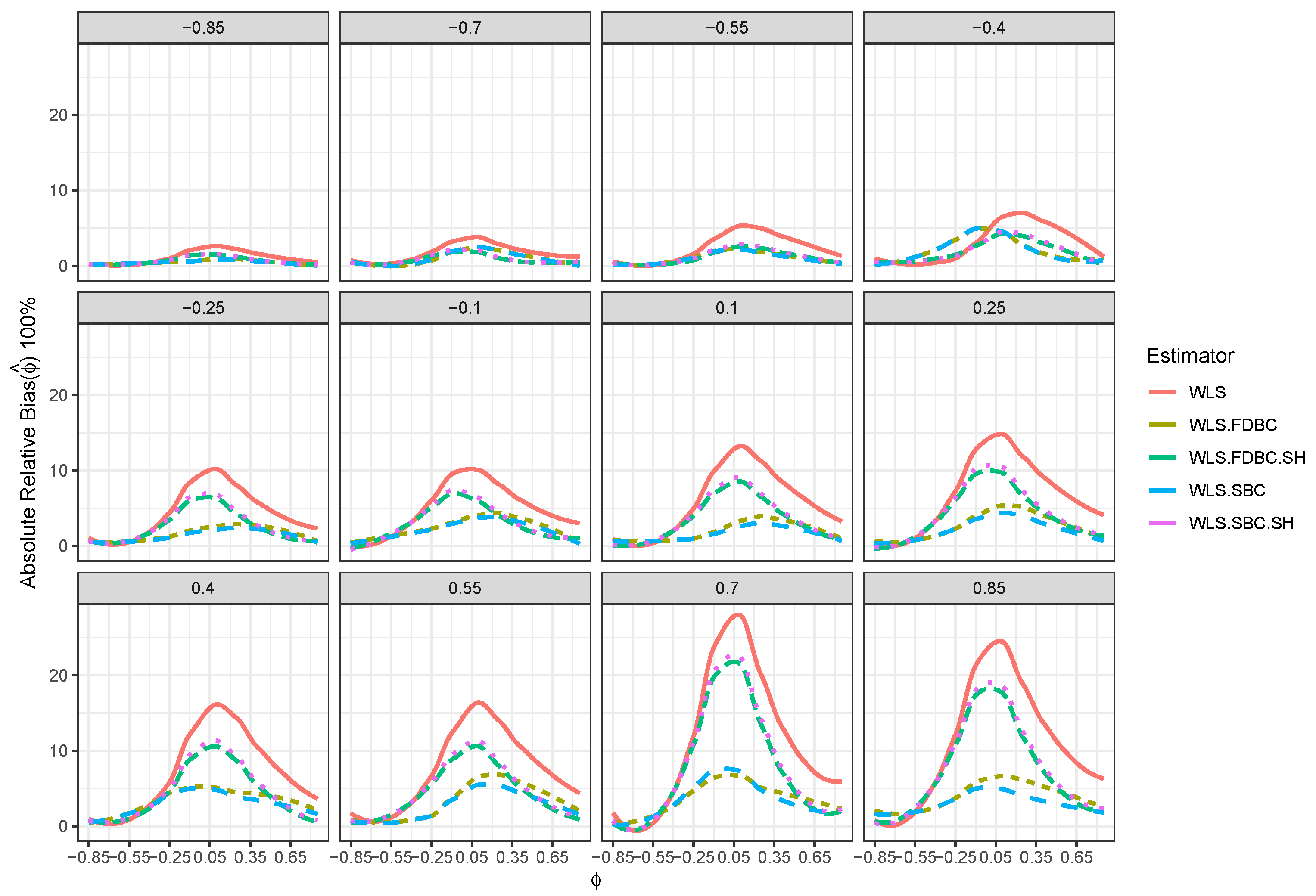

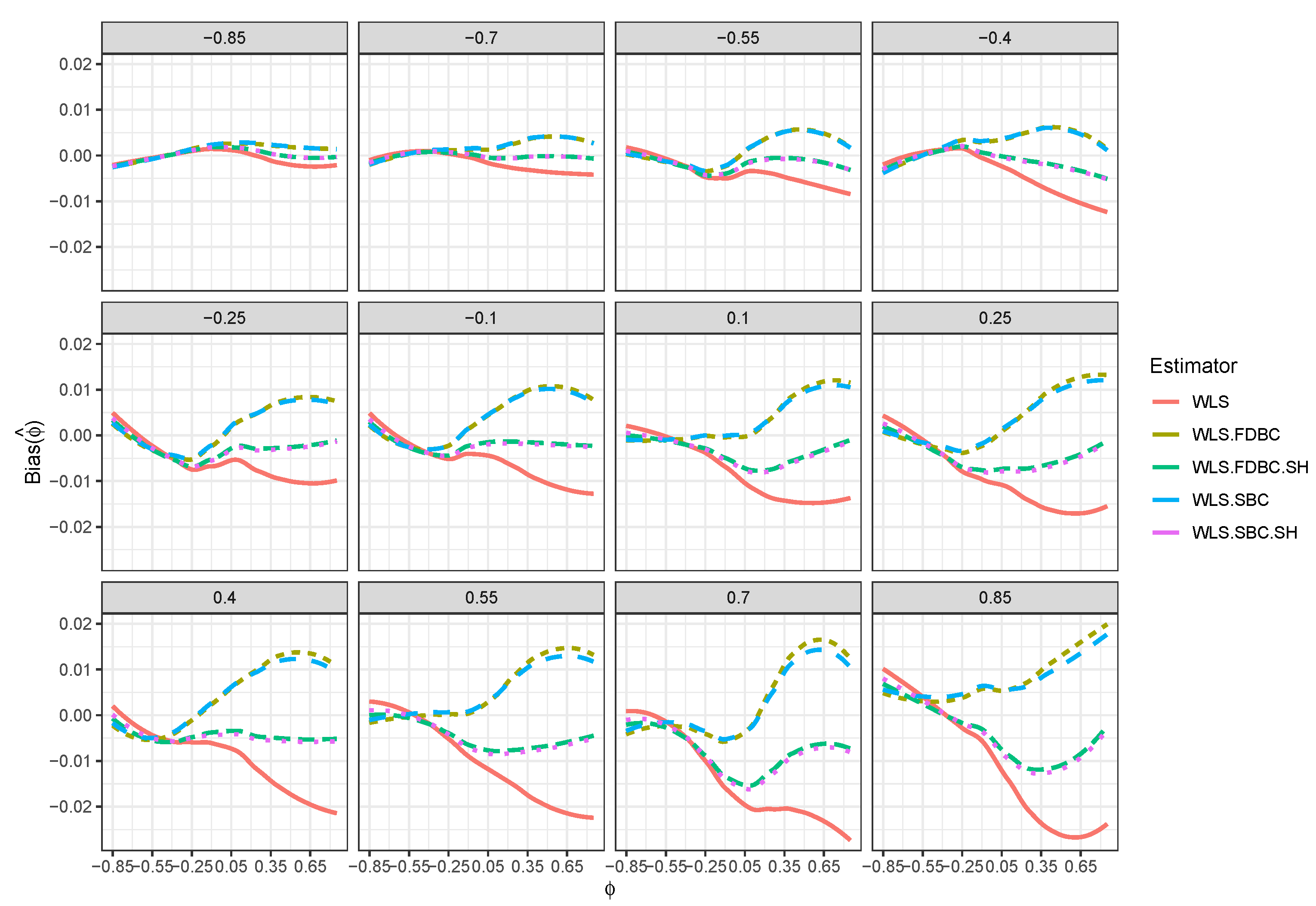

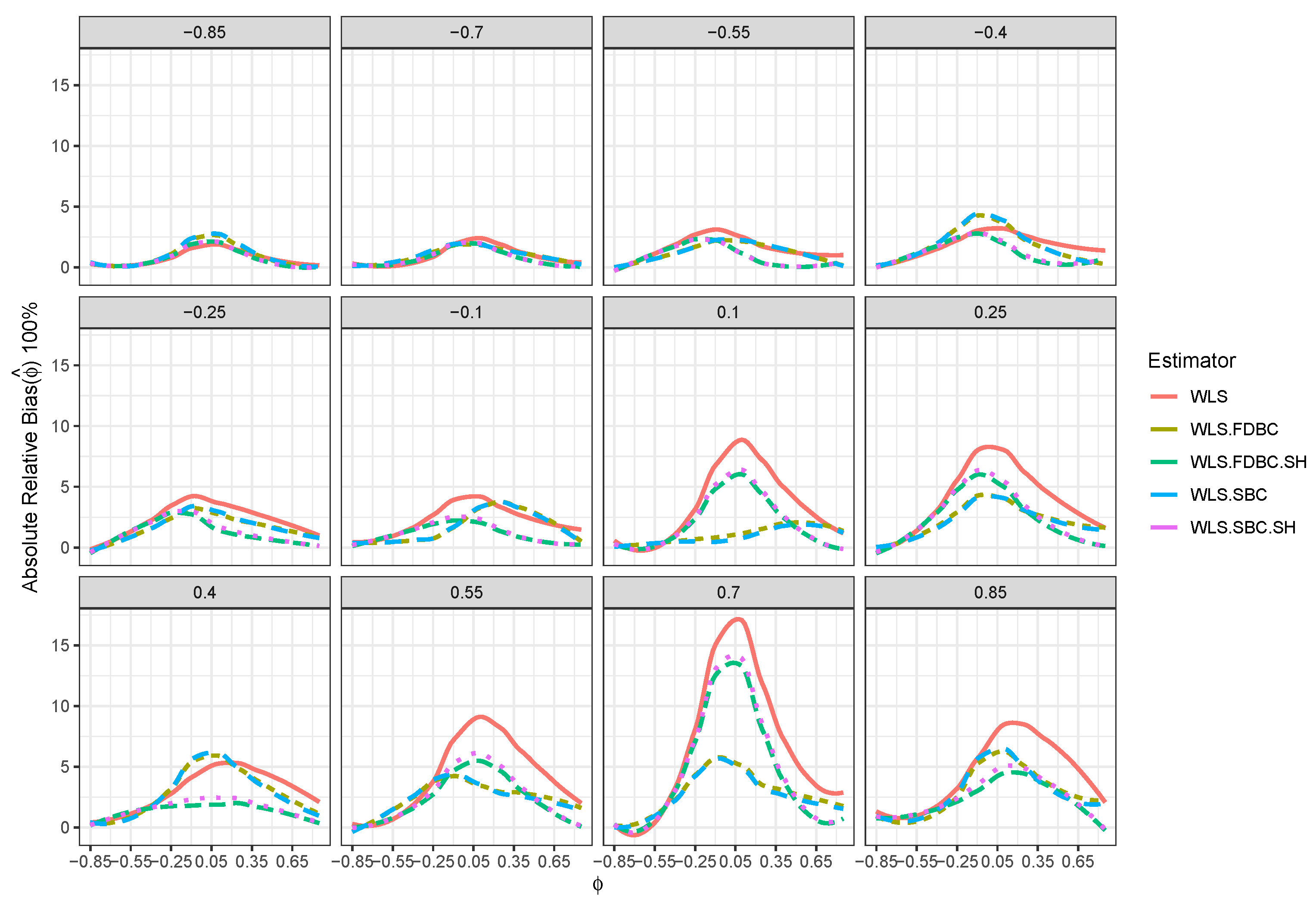

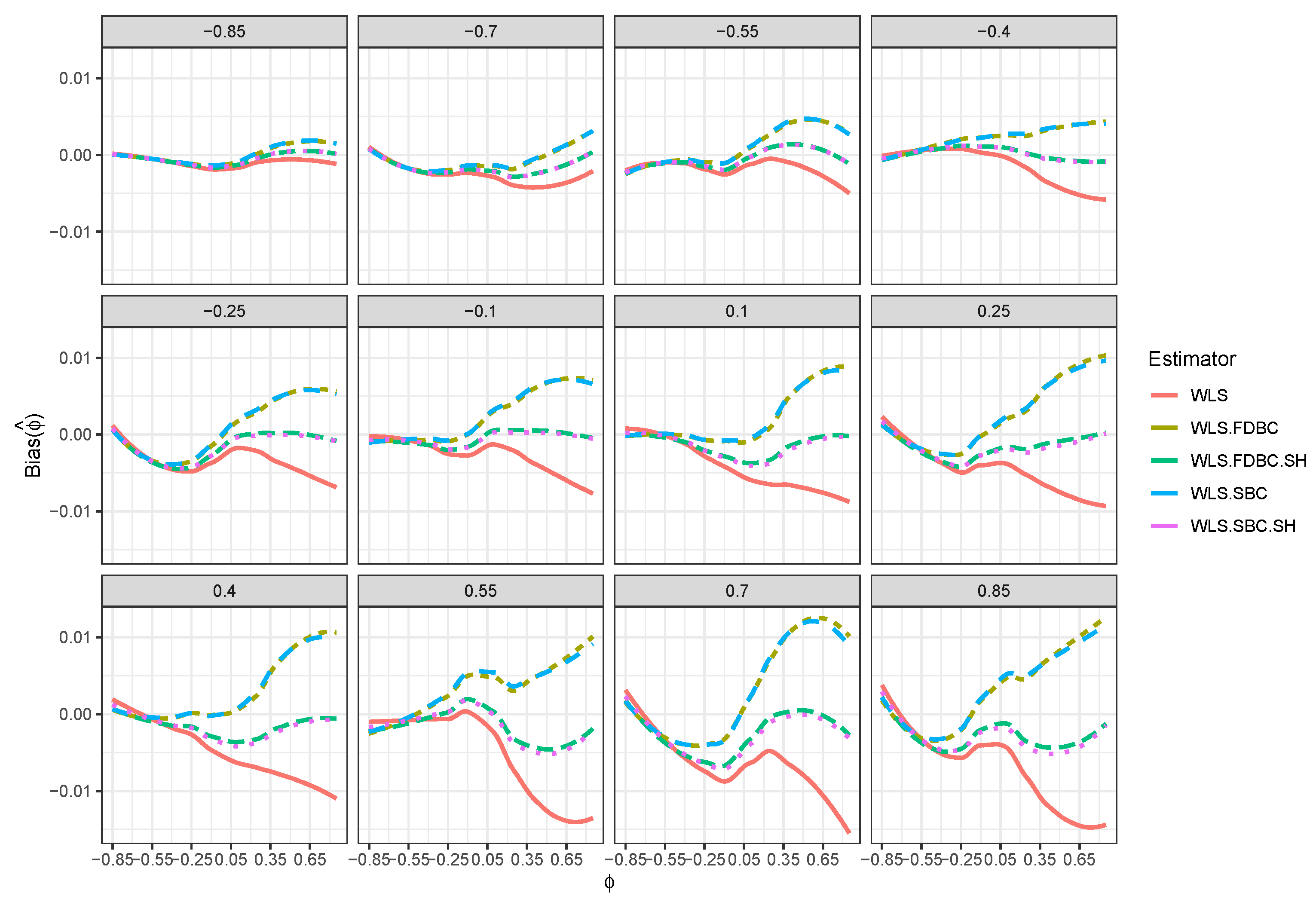

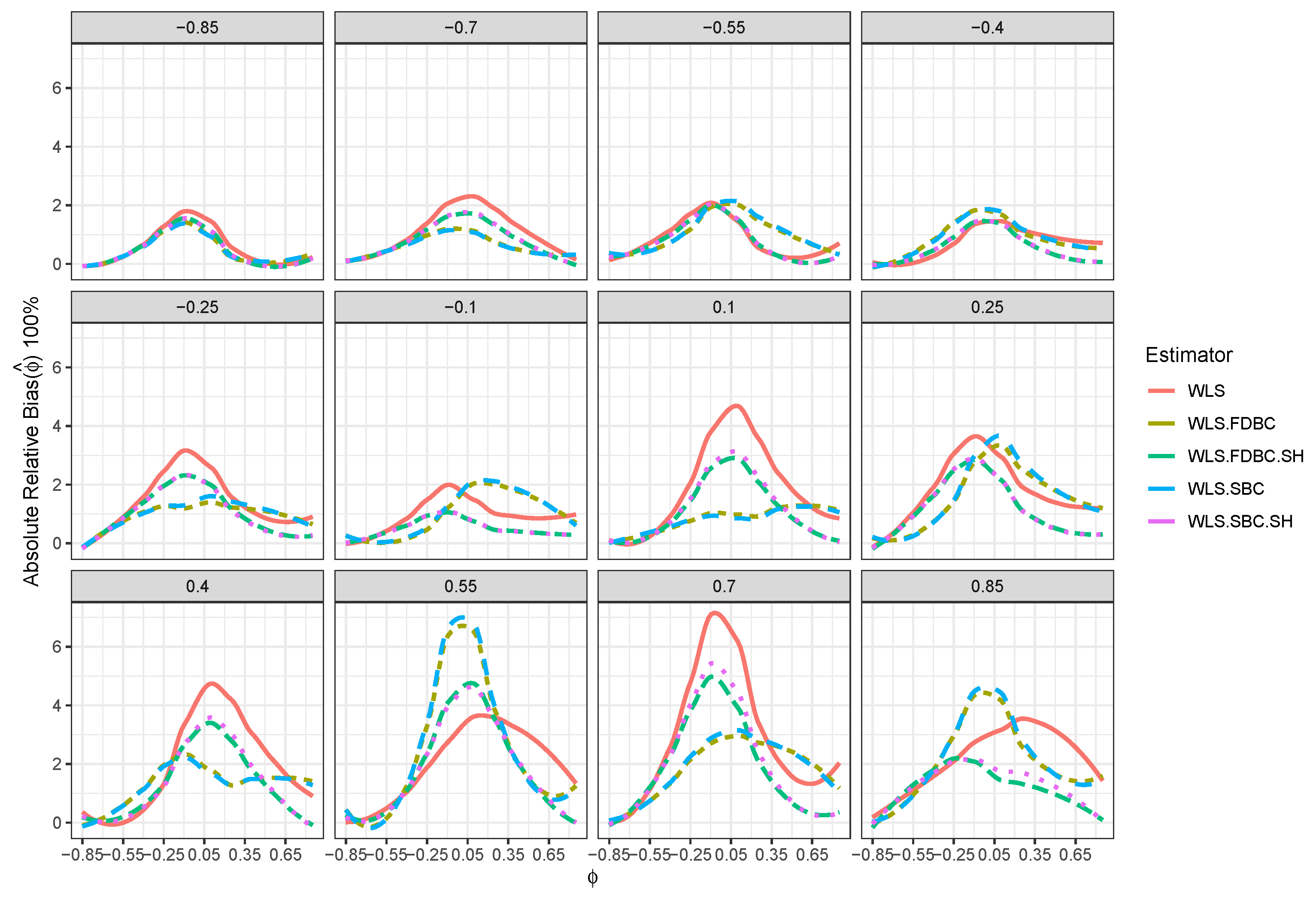

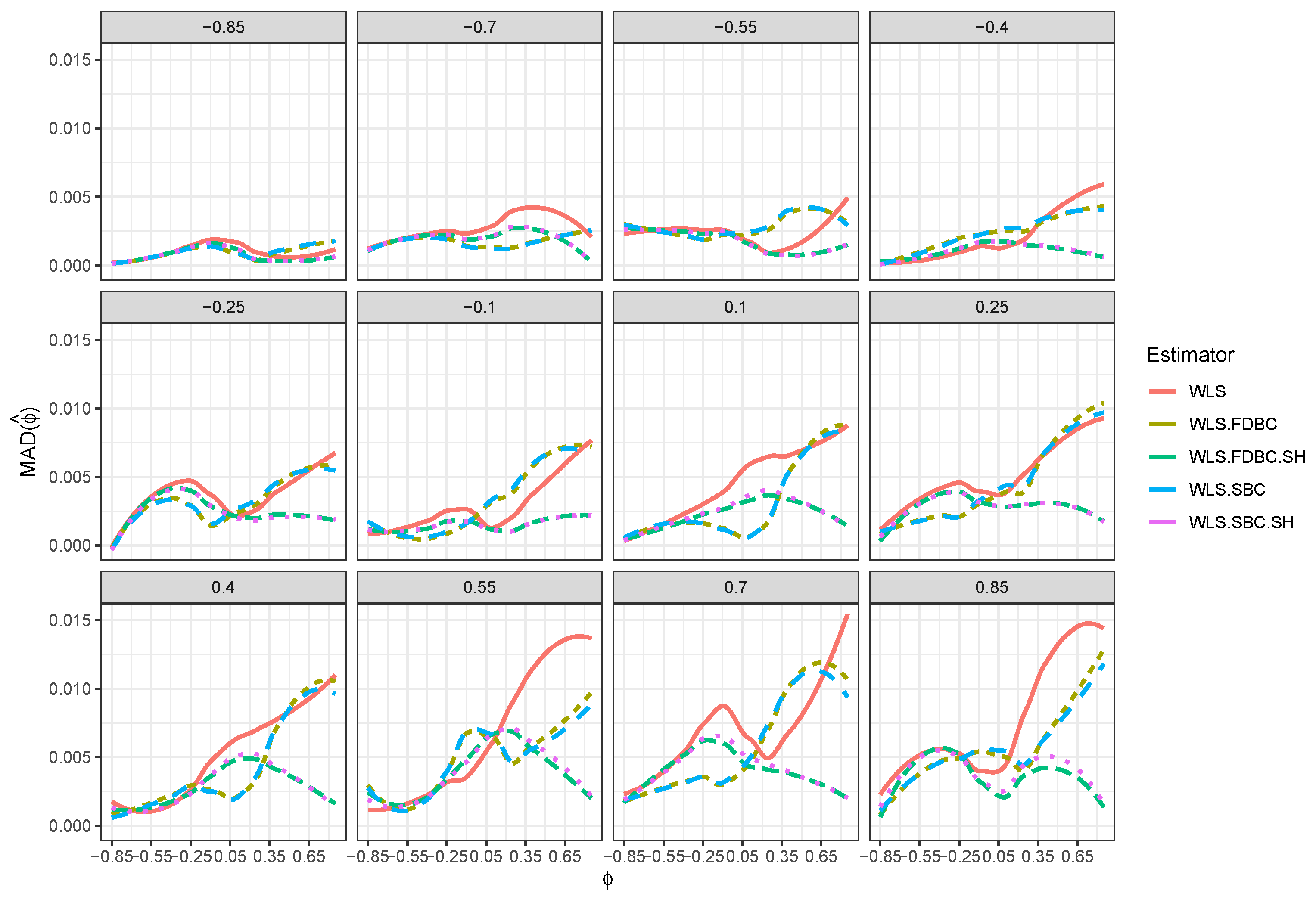

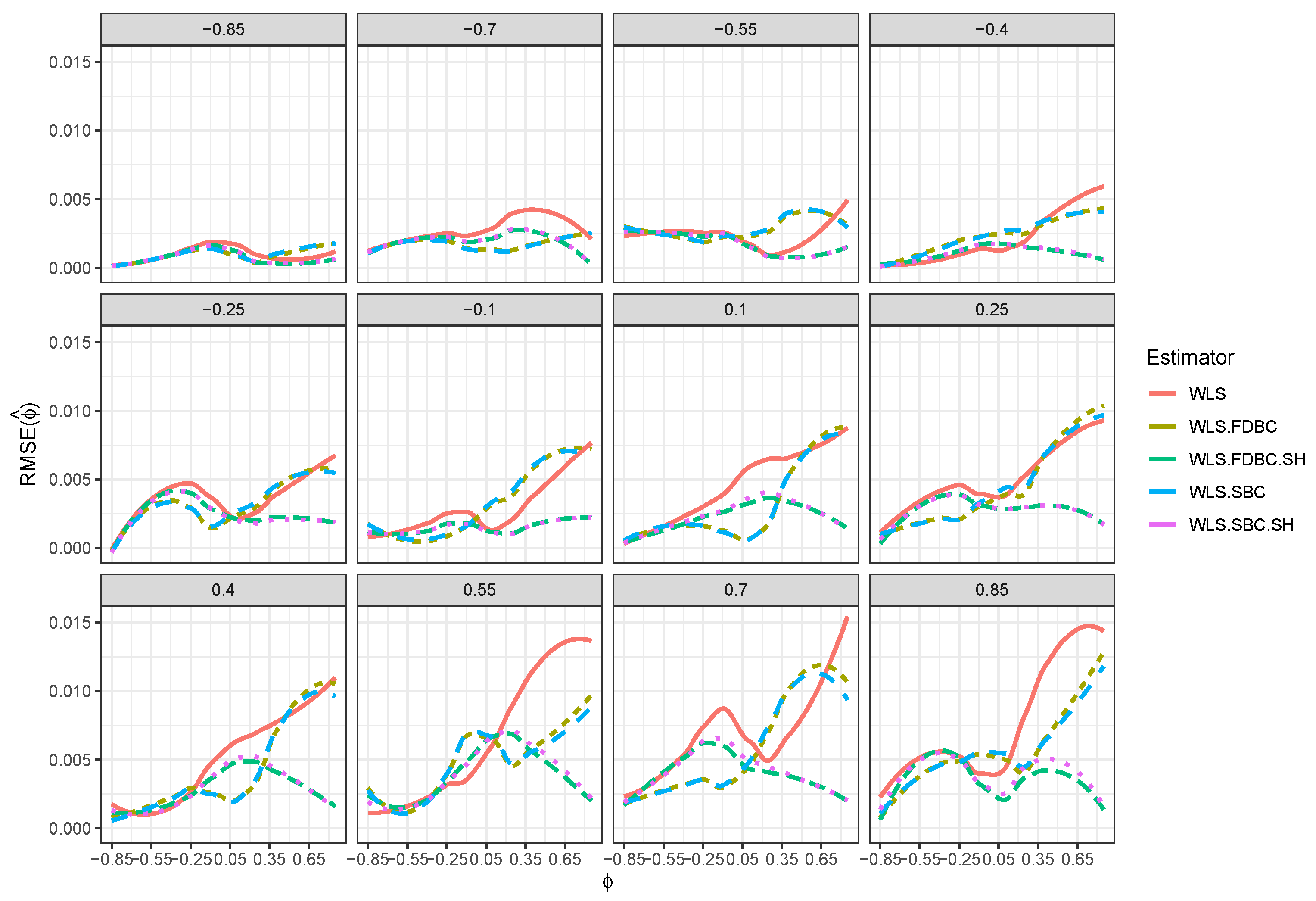

- In general, the proposed bias-corrected estimators substantially reduced the bias of the WLS estimator across almost all combinations of and . The improvement is most evident in small samples, where the WLS estimator exhibits a consistent and pronounced bias, especially as shifts from to depending on the sample size.

- Both the traditional single bootstrap () and fast double bootstrap () bias-corrected estimators reduced bias more effectively than the shrinkage-based single bootstrap () and fast double bootstrap () bias-corrected estimators. Notably, the single bootstrap estimator outperformed the fast double bootstrap estimator, particularly for small sample sizes (e.g., ). For example, Figure 4 shows that the single bootstrap bias-corrected estimator reduced the bias by compared to the WLS estimator for and with . Moreover, Figure 8 shows that the traditional single and fast double bootstrap bias-corrected estimators reduced the bias by about compared to the WLS estimator for and with .

- Despite the bias reduction, some residual bias remains when both and are positive, with the bias increasing as and approach a positive boundary.

- The single bootstrap bias correction often outperforms the fast double bootstrap bias correction in small samples at a substantially lower computational cost, making it a favorable option for practical use.

- For larger sample sizes, , the shrinkage-based single bootstrap () and fast double bootstrap () bias-corrected estimators performed better than the traditional single and fast double bootstrap estimators in most cases, especially when and is positive. Importantly, the traditional bootstrap methods over- or under-corrected the estimates as the sample size increased, whereas the shrinkage approach avoided this problem.

- Although the shrinkage-based bias correction yields noticeable improvements for large sample sizes, some bias remains, particularly when both and are positive, and this bias tends to increase as and approach their upper boundary.
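The bias comparisons above are Monte Carlo summaries over replications with a known true parameter value. The exact definition behind the reported percentage reductions is not given in this section; the sketch below assumes it is the reduction in absolute Monte Carlo bias relative to the WLS estimator. Taking absolute values means an over-corrected estimator (bias of the opposite sign) is penalized just like an under-corrected one. Function names are illustrative.

```python
from statistics import fmean


def mc_bias(estimates, true_value):
    """Monte Carlo bias: average estimate minus the true parameter value."""
    return fmean(estimates) - true_value


def pct_bias_reduction(wls_estimates, bc_estimates, true_value):
    """Percentage reduction in absolute bias of a corrected estimator vs WLS.

    100 means the bias was removed entirely; 0 means no change in size;
    a negative value means the corrected estimator is more biased than WLS.
    """
    b_wls = abs(mc_bias(wls_estimates, true_value))
    b_bc = abs(mc_bias(bc_estimates, true_value))
    return 100.0 * (1.0 - b_bc / b_wls)
```

For instance, an over-correction that flips the bias from −0.09 to +0.09 scores 0%, even though the estimator moved substantially.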

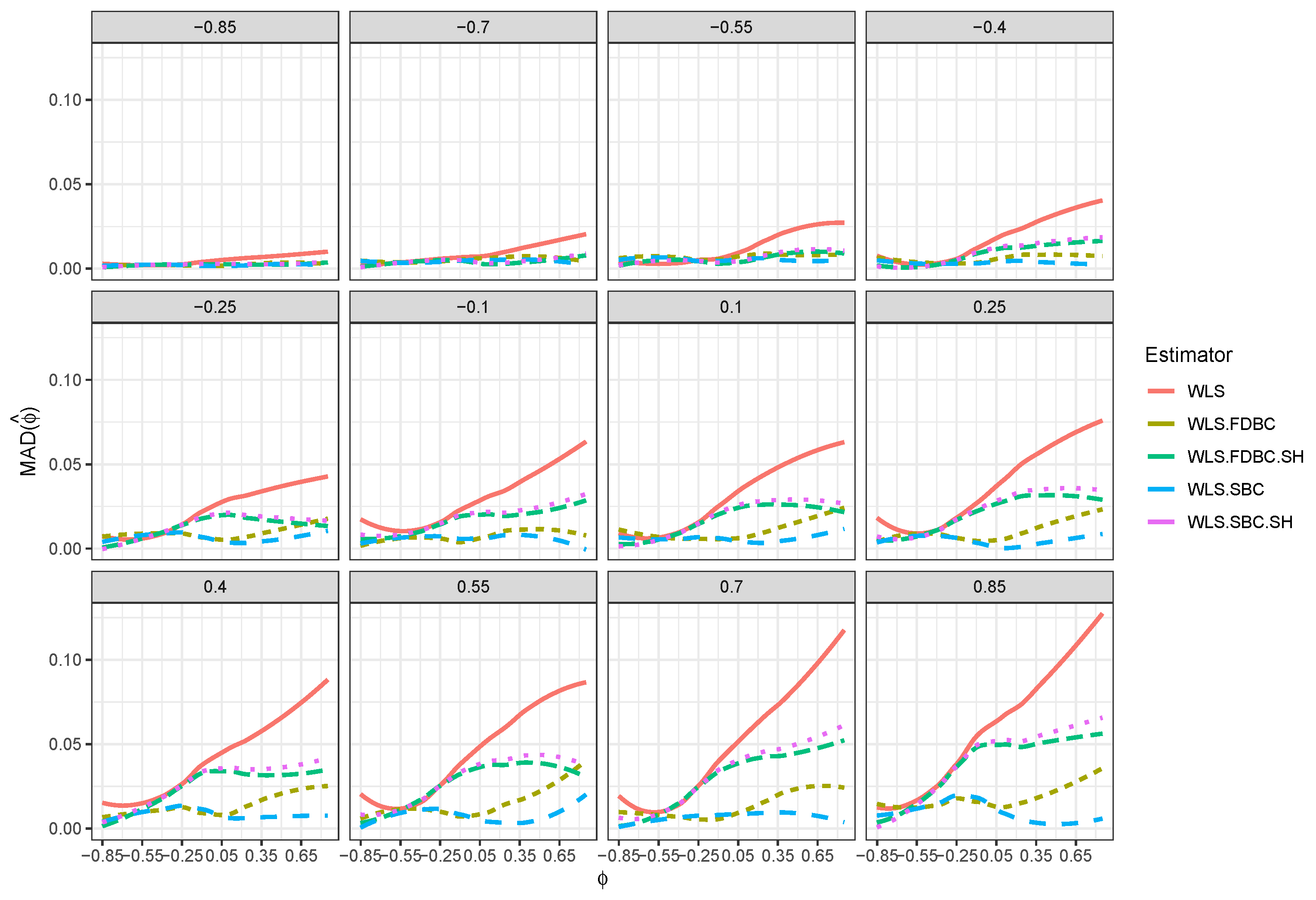

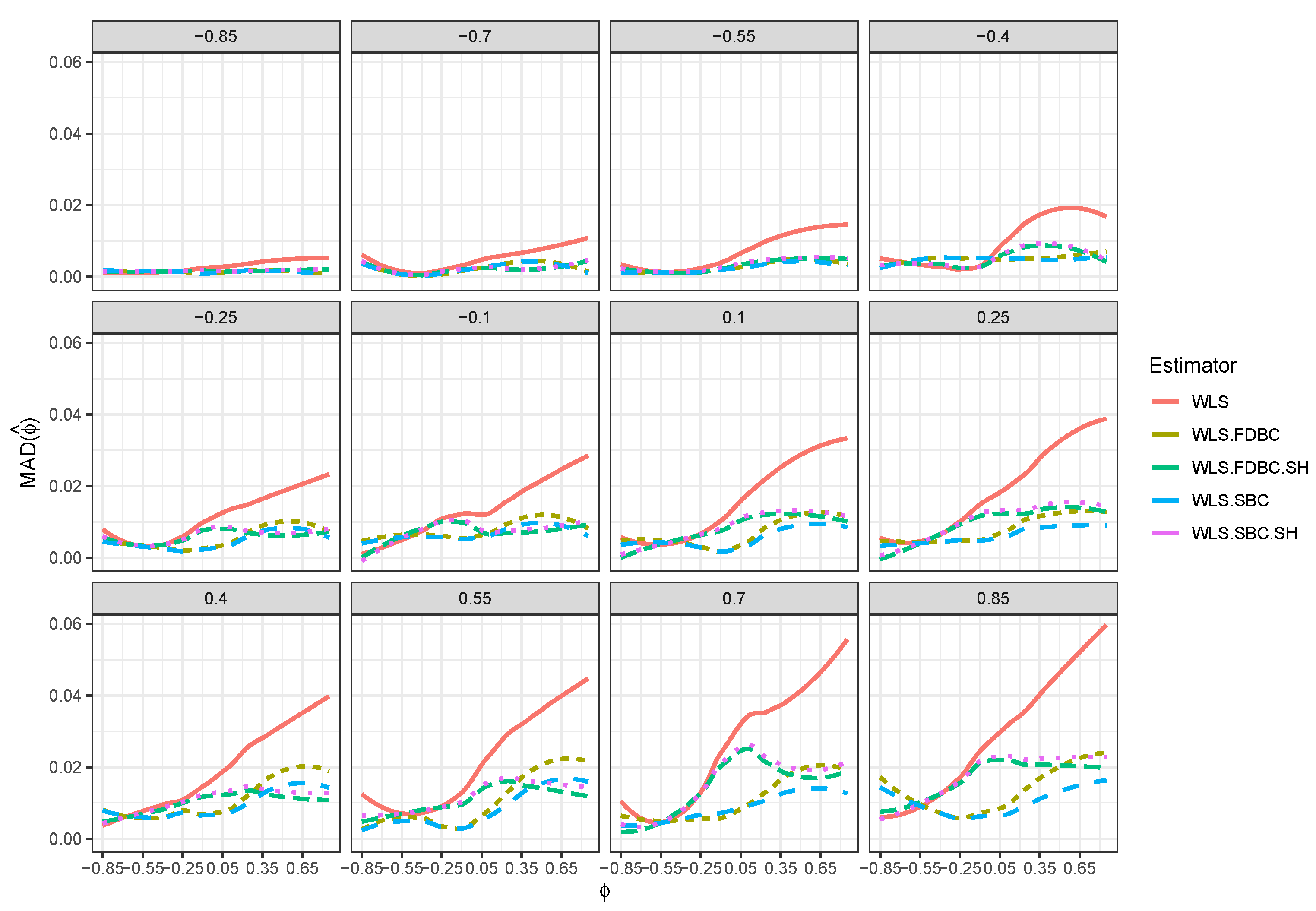

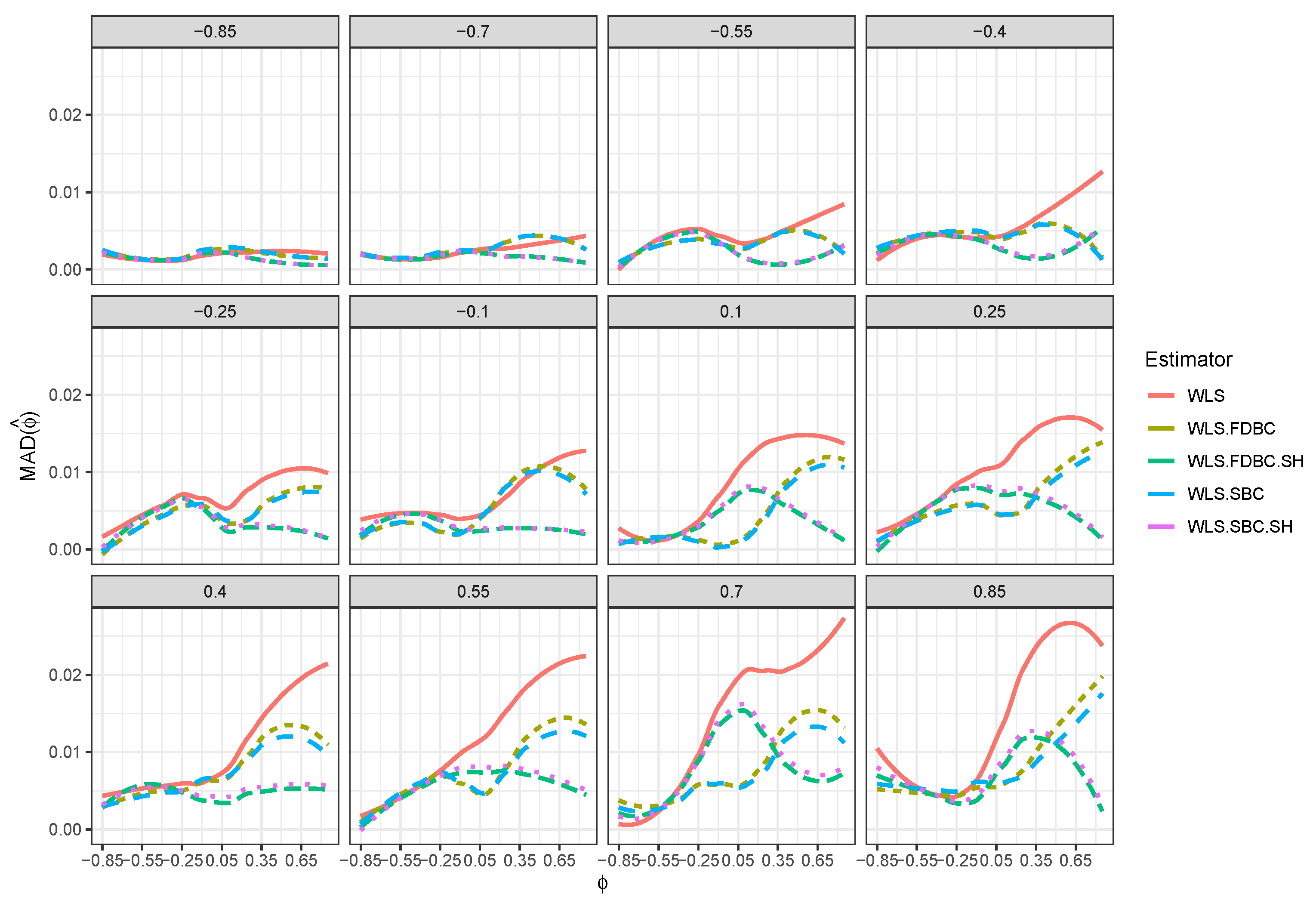

- All bootstrap methods yielded substantial reductions in both root mean squared error (RMSE) and mean absolute deviation (MAD) relative to the WLS estimator across all sample sizes and for all positive values of .

- Relative to the uncorrected WLS estimator, the traditional bootstrap bias-correction methods yielded notable improvements in RMSE and MAD, particularly for small sample sizes (e.g., ) and positive values of . For example, Figure 5 and Figure 6 show that when , RMSE and MAD improved by about 50% for the shrinkage-based single and fast double bootstrap methods compared with the original WLS estimator, and by nearly 100% for the traditional single and fast double bootstrap methods in most cases.

- For larger sample sizes (e.g., ), the shrinkage-based bootstrap bias-correction methods achieved lower RMSE and MAD than both the WLS estimator and the traditional bootstrap methods across most combinations of and . For example, when , the RMSE and MAD of the shrinkage-based methods were lower than, or comparable to, those of WLS. In contrast, the RMSE and MAD of the traditional single and fast double bootstrap methods were sometimes higher than those of WLS, particularly when values are close to zero or greater than .

- The reduction of bias to nearly zero by both bootstrap estimators was most evident when was near the boundaries (i.e., close to or ), particularly for small sample sizes such as . Moreover, the fast double bootstrap method outperformed the single bootstrap approach in reducing bias in the WLS estimators.
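The RMSE and MAD comparisons can be computed from the same Monte Carlo output. The sketch assumes MAD denotes the mean absolute deviation of the estimates from the true parameter value, and that the percentage improvements quoted above are relative reductions with respect to the WLS estimator; neither formula is spelled out in this section, and the function names are illustrative.

```python
import math
from statistics import fmean


def rmse(estimates, true_value):
    """Root mean squared error of Monte Carlo estimates around the true value."""
    return math.sqrt(fmean((e - true_value) ** 2 for e in estimates))


def mad(estimates, true_value):
    """Mean absolute deviation of the estimates from the true value (assumed definition)."""
    return fmean(abs(e - true_value) for e in estimates)


def pct_improvement(metric_bc, metric_wls):
    """Percentage reduction of an error metric relative to the WLS estimator."""
    return 100.0 * (1.0 - metric_bc / metric_wls)
```

Under this definition, halving the RMSE of WLS corresponds to a 50% improvement, and an improvement near 100% means the corrected estimator's error is close to zero.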

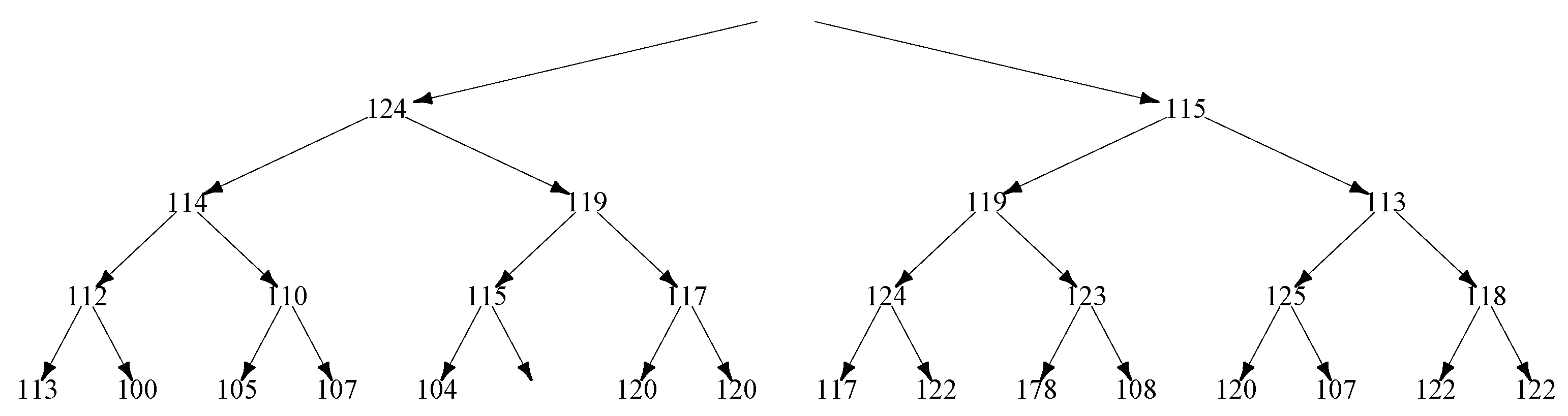

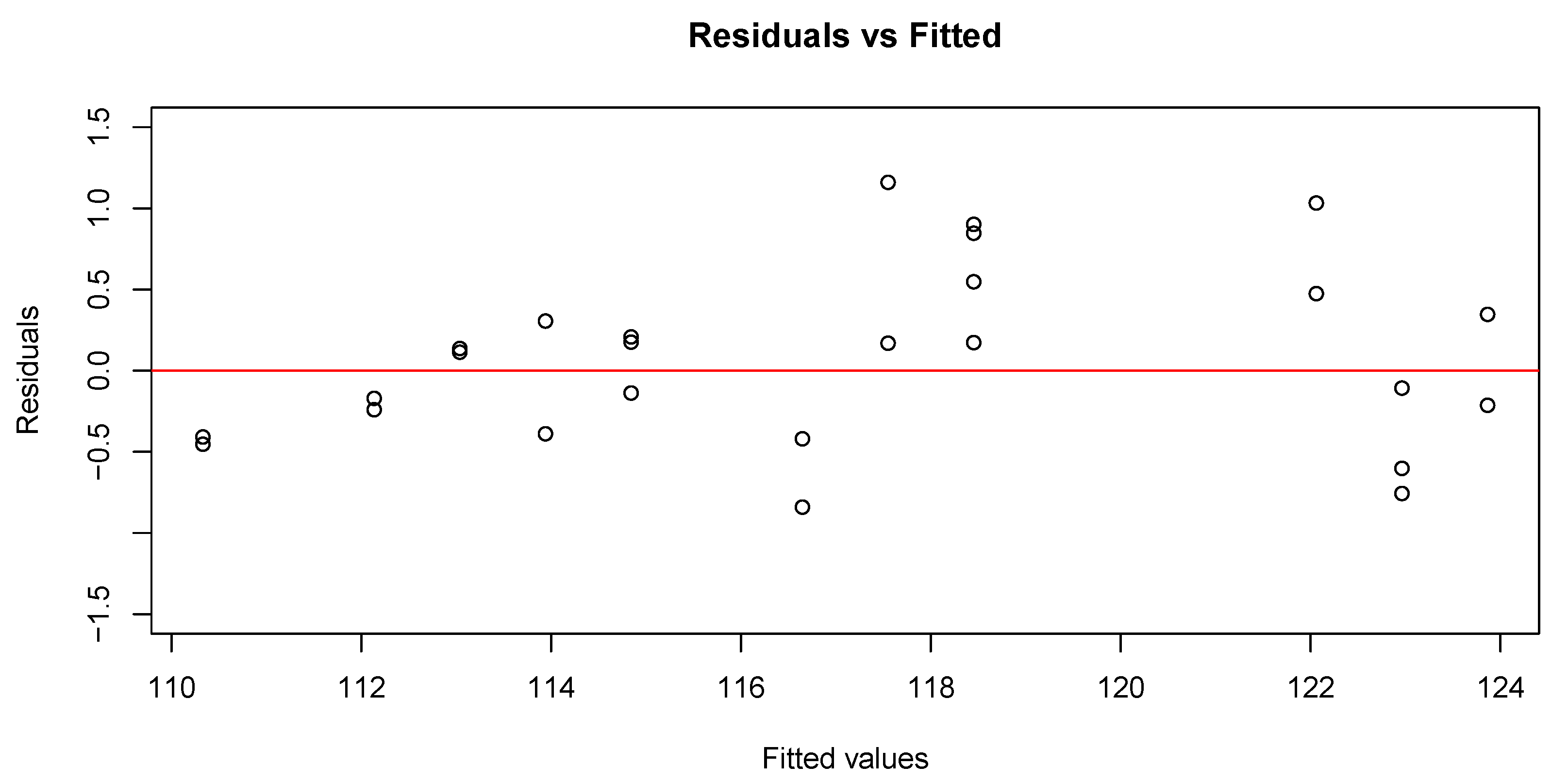

6. Application

7. Conclusions and Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Parameter | Values |
|---|---|
| Sample size (n) | 31, 63, 127, 255 |
| Autoregressive coefficient (ϕ1) | ±0.10, ±0.25, ±0.55, ±0.85 |
| Error correlation (θ) | ±0.10, ±0.25, ±0.55, ±0.85 |
| Intercept (ϕ0) | 10 (fixed) |
| Error distribution | Bivariate normal with mean zero |
| Variance structure | Heteroscedastic: |
| Number of Monte Carlo replications | 10,000 |
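A single replicate of the design in the table can be generated with a simulator like the one below. It is a sketch under assumptions: the heteroscedastic variance formula is not shown, so a constant error standard deviation `sigma` is used instead, and the root X_1 is set to the stationary mean ϕ0/(1 − ϕ1), a hypothetical choice. Sister errors are bivariate normal with mean zero and correlation θ, built from two independent standard normals; `simulate_bar1` is an illustrative name.

```python
import math
import random


def simulate_bar1(n, phi0, phi1, theta, sigma=1.0, seed=None):
    """Simulate a BAR(1) tree X_1..X_n (n odd) with correlated sister errors.

    Assumes constant error variance sigma**2 (the heteroscedastic structure
    is not reproduced) and a root fixed at the stationary mean.
    """
    if n % 2 == 0:
        raise ValueError("n must be odd so that every mother has two daughters")
    rng = random.Random(seed)
    x = [phi0 / (1.0 - phi1)]                  # root X_1 (hypothetical choice)
    for k in range(1, (n - 1) // 2 + 1):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        e_left = sigma * z1
        # corr(e_left, e_right) = theta by construction
        e_right = sigma * (theta * z1 + math.sqrt(1.0 - theta ** 2) * z2)
        mother = x[k - 1]                      # X_k
        x.append(phi0 + phi1 * mother + e_left)   # X_{2k}
        x.append(phi0 + phi1 * mother + e_right)  # X_{2k+1}
    return x
```

A full study would loop this over the grid of n, ϕ1 and θ values in the table, with 10,000 replications per setting, passing each simulated tree to the estimators being compared.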

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Elbayoumi, T.; Usman, M.; Mostafa, S.; Zayed, M.; Aboalkhair, A. Bootstrap Methods for Correcting Bias in WLS Estimators of the First-Order Bifurcating Autoregressive Model. Stats 2025, 8, 79. https://doi.org/10.3390/stats8030079
