1. Introduction

In aging research, determining the onset of cognitive decline is highly relevant, since its accurate and early detection allows for a better understanding of the aging process, its characteristics, and the factors associated with its onset [1]. Early detection is also critical for implementing preventive or therapeutic interventions that can slow or mitigate cognitive decline. Inaccurate estimation of this onset can have significant and potentially harmful consequences. For instance, overestimating the onset of cognitive decline (placing it later than it actually occurs) may result in delayed care provision for older adults. Conversely, underestimating the onset can lead to unnecessary anxiety and worry: caregivers may believe that older persons are in a more advanced state of cognitive deterioration than they actually are, which can negatively affect their quality of life and emotional well-being. Therefore, methods are needed that permit accurate estimation of the onset of cognitive decline in older adults, backed by solid data and appropriate clinical assessments.

Change-point (CP) models are commonly used in aging research for estimating the onset of cognitive decline [2,3,4] and for answering questions concerned with the timing of processes such as terminal decline [5]. Detecting the moment at which a CP occurs in the trajectory of a stochastic process is a problem that has been addressed from multiple perspectives in different disciplines. It is a common problem in time series analysis [6] and of utmost interest in longitudinal studies, where a set of individuals is followed over time. Although both types of studies aim to obtain predictions about the behavior of paths of stochastic processes, longitudinal studies usually pay more attention to the determinants of the phenomenon under investigation. For this reason, statistical analysis is frequently performed in the context of regression models, often including random effects. In longitudinal studies, CP regression models are commonly formulated within a linear mixed model framework [7,8,9], including, more specifically, in aging research [10,11,12]. Commonly used specifications allow the change over time to be abrupt, as in the broken stick model (BSM) [13], or gradual, as in the Bacon & Watts model (BWM) [14] and the bent cable regression model (BCR) [15].

Formulating models for longitudinal data within a differential equation (DE) framework relaxes linearity assumptions such as those imposed by linear mixed-effects models. Regarding the explanatory features of cognitive decline, using mixed models in a non-linear setting retains the advantages of this methodology, such as describing population and individual variation and accounting for dependent data, while also allowing the model to incorporate the onset of the decline phase and the factors associated with its delay or advancement, as well as the speed at which the decline occurs.

We introduce a Bayesian non-linear mixed-effects model in which the longitudinal trajectory is modeled through a DE. DE models are increasingly used in longitudinal studies [16,17,18], as they allow the representation of complex dynamics in which the passage of time plays a fundamental role. This approach focuses on describing the temporal evolution of a specific phenomenon and on predicting future observations. Moreover, the mean of a longitudinal mixed-effects model can be regarded as a particular case of a linear DE.
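As a simple illustration of this last point (the symbols are ours, chosen only for this example), a linear mixed-effects model whose mean is a straight line with intercept beta_0 and slope beta_1 has a mean function that solves a first-order linear DE:

```latex
% Illustrative notation only, not taken from the model specification in Section 2
\mu(t) = \beta_0 + \beta_1 t
\quad\Longleftrightarrow\quad
\frac{d\mu(t)}{dt} = \beta_1, \qquad \mu(0) = \beta_0 .
```

Replacing the constant right-hand side by a state- and time-dependent one is what allows DE models to describe richer trajectories.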

The rest of the paper is organized as follows. In Section 2, we review three CP regression models that are often used in practice and then focus on the new DE model we propose. Statistical inference for these models is presented under a Bayesian framework, and we provide criteria for the model selection stage. In Section 3, we describe a simulation study designed to evaluate the performance of the DE model under different data-generating processes (DGPs), comparing it against the models presented in Section 2. An application to cognitive data from the English Longitudinal Study of Ageing (ELSA) [19] is illustrated in Section 4, where the proposed DE model shows better prediction accuracy than the other three models and, on average, shifts the estimated onset of cognitive decline two years later. Finally, in Section 5, we draw conclusions and propose future lines of research.

2. Materials and Methods

We formulate CP regression models within a non-linear mixed model (NLMM) framework as described in [20]. Assuming that the measurements of the i-th individual are collected in a response vector, we adopt the hierarchical definition of the NLMM, which consists of an individual-level equation (Equation (1)) and a population-level equation (Equation (2)), with normally distributed random effects and errors.

Under this specification, the outcome (the response of subject i at its j-th measurement time) is a non-linear function of time and a set of individual-specific parameters. Equation (1) is often referred to as the “individual-level model”. The parameters of this equation may include random effects, and Equation (2), which expresses them in terms of fixed and random effects, is often referred to as the “population-level model”. Both the random effects and the model errors are assumed to follow normal distributions with zero mean and their respective variance parameters. An advantage of the NLMM lies in its ability to make predictions about the future values of a particular individual or of an average individual.
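For concreteness, one standard way of writing the two levels is shown below. The symbols are our own assumption (the original notation is not reproduced here), and the population-level equation contains only an intercept for each parameter, as stated for all models in this section.

```latex
% Assumed notation (illustrative only):
% y_{ij}: j-th response of individual i at time t_{ij}; \phi_i: individual parameters;
% \beta: fixed effects; b_i: random effects; D: random-effects covariance; \sigma^2: error variance
\begin{aligned}
y_{ij} &= f(t_{ij}, \boldsymbol{\phi}_i) + \varepsilon_{ij}, \quad j = 1, \dots, n_i
  && \text{(individual level)} \\
\boldsymbol{\phi}_i &= \boldsymbol{\beta} + \mathbf{b}_i
  && \text{(population level, intercepts only)} \\
\mathbf{b}_i &\sim N(\mathbf{0}, \mathbf{D}), \qquad \varepsilon_{ij} \sim N(0, \sigma^2)
\end{aligned}
```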

The CP models most commonly used in the literature, as well as our new proposal, are introduced below. All models are formulated within an NLMM framework by considering only an intercept for each parameter in the population-level equation. However, the formulation can easily be generalized to include explanatory variables.

2.1. Change Point Models

Several formulations of CP models have been used in the literature. Here we recall the BSM, a piecewise linear model with one free knot. It is characterized by a continuous piecewise linear function (Equation (3)).

Another characterization is provided by the BWM, which uses a smooth transition function based on the hyperbolic tangent, tanh(·) (Equation (4)).

Finally, we consider the BCR, in which two linear functions are connected by a quadratic polynomial (Equation (5)).

Including random effects and explanatory variables in these models is optional; this choice determines which parameters are allowed to vary among individuals, as presented in Equation (1). Although the interpretation of the intercept and slope parameters differs across models, the parameter associated with the CP has the same meaning in all three models. Equations (4) and (5) provide smoother alternatives to the abrupt change of the BSM, where the smoothness of the change is controlled by the value of a transition parameter and by a smooth function (a hyperbolic tangent and a quadratic polynomial, respectively).
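The three mean functions can be sketched in Python as follows. The parameterization is our own assumption and may differ from Equations (3)–(5): b0 is an intercept, b1 the slope before the CP, b1 + b2 the slope after it, tau the CP, and gamma the transition parameter. For the BWM we use a reparameterized Bacon & Watts form in which the kink (t - tau)_+ of the BSM is replaced by (t - tau)(1 + tanh((t - tau)/gamma))/2, so that the BSM is recovered as gamma approaches zero; for the BCR, gamma is the half-width of the quadratic bend.

```python
import numpy as np


def bsm(t, b0, b1, b2, tau):
    """Broken stick: slope b1 before tau and b1 + b2 after it (abrupt kink at tau)."""
    t = np.asarray(t, dtype=float)
    return b0 + b1 * t + b2 * np.maximum(t - tau, 0.0)


def bwm(t, b0, b1, b2, tau, gamma):
    """Bacon & Watts style: the kink (t - tau)_+ is smoothed with tanh((t - tau) / gamma)."""
    t = np.asarray(t, dtype=float)
    s = t - tau
    return b0 + b1 * t + b2 * s * (1.0 + np.tanh(s / gamma)) / 2.0


def bcr(t, b0, b1, b2, tau, gamma):
    """Bent cable: a quadratic bend on [tau - gamma, tau + gamma] joins the two lines."""
    t = np.asarray(t, dtype=float)
    s = t - tau
    bend = np.where(s < -gamma, 0.0,
                    np.where(s <= gamma, (s + gamma) ** 2 / (4.0 * gamma), s))
    return b0 + b1 * t + b2 * bend


# Horizontal until a CP at t = 10, slope -0.5 afterwards (illustrative values)
grid = np.linspace(0.0, 20.0, 81)
curves = {
    "BSM": bsm(grid, 11.0, 0.0, -0.5, 10.0),
    "BWM": bwm(grid, 11.0, 0.0, -0.5, 10.0, 1.0),
    "BCR": bcr(grid, 11.0, 0.0, -0.5, 10.0, 3.0),
}
```

With a very small gamma (for example 1e-3), bwm and bsm agree to machine precision away from the CP, mirroring the limiting-case relation between the BWM and the BSM; outside [tau - gamma, tau + gamma], bcr coincides exactly with bsm.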

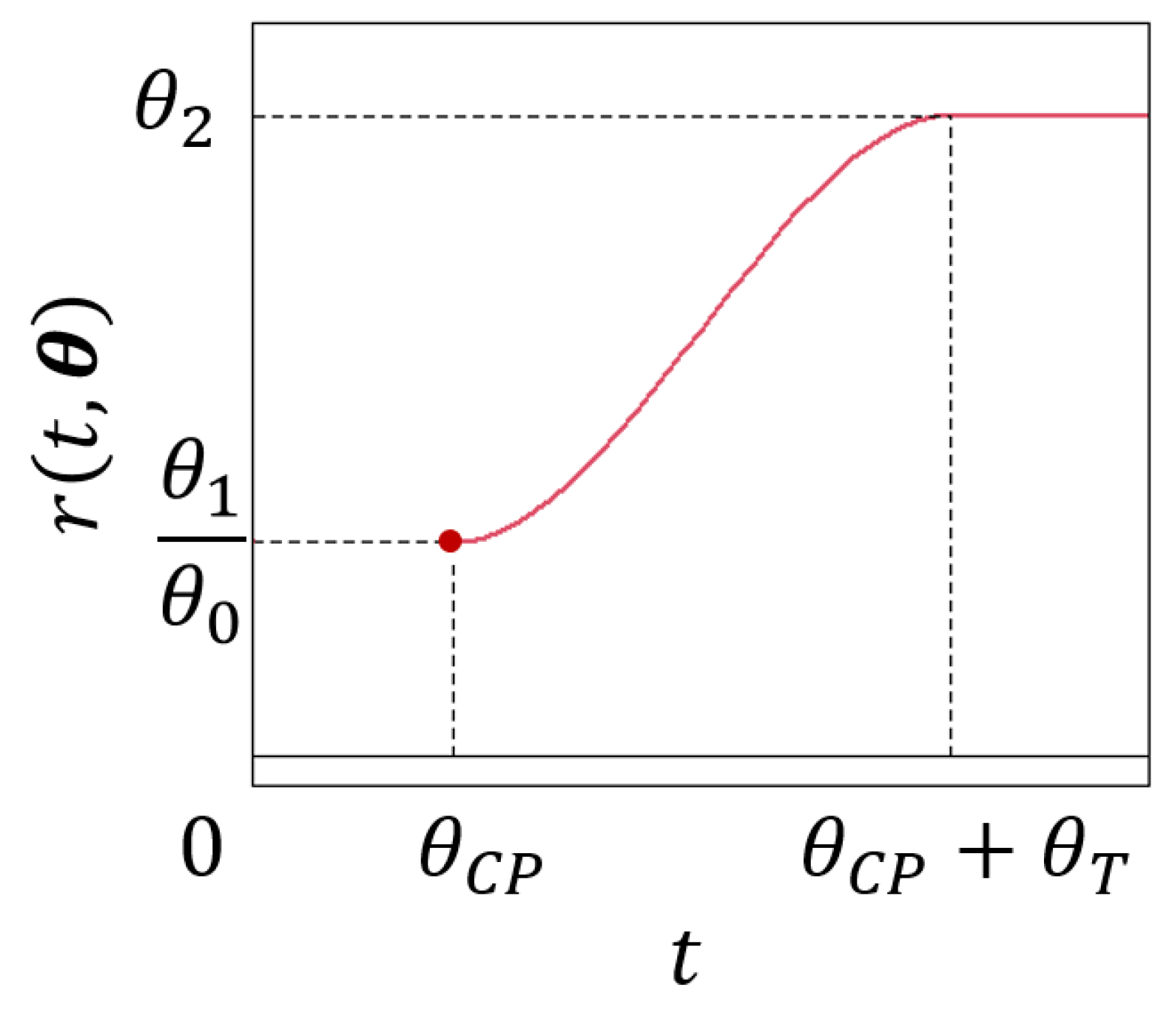

2.2. Differential Equation Model (DEM)

Unlike the three previous alternatives, the model presented in Equation (6) is based on a rate of change that is not constant over the course of aging. The mean trajectory is described by a simple exponential decay DE whose key element is a time-varying rate function.

The family of solutions of this simple DE is determined by the specification of the rate function. In this study, we propose the non-decreasing rate function shown in Figure 1. This rate function resembles the mean function of the BCR model, in which two straight lines are connected by a polynomial; however, the resulting mean function of the DEM is a genuinely different alternative.

Up to the point where the deterioration process begins, the rate of change is zero, which translates into a horizontal trajectory of the function f at its initial level. Then there is a transition period in which the rate increases up to a maximum value, where it stabilizes. From this moment on, the decay is proportional to the cognitive state. Equation (7) presents the components of the rate function.

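As in the previous cases, the CP is modeled through a dedicated parameter. Additionally, a transition period, whose length is given by the transition parameter, is considered after the CP. Lastly, a third-degree polynomial smoothly connects both parts of the rate function. To this end, it satisfies four constraints: at the start of the transition period, its value and its first derivative are zero (matching the zero rate before the CP), and at the end of the transition period, its value equals the maximum rate and its first derivative is zero (matching the constant rate afterwards).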
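Under the description above, the DE, the rate function, and the four boundary constraints can be written as follows. The notation is our assumption: beta_0 is the initial level, tau the CP, gamma the length of the transition period, lambda_max > 0 the maximum rate, and p the cubic polynomial; we also assume the decay is written with a minus sign, so that a positive rate produces decline.

```latex
% Assumed notation (illustrative): \beta_0 initial level, \tau CP, \gamma transition length,
% \lambda_{\max} > 0 maximum rate, p(t) cubic polynomial on the transition period
\begin{aligned}
\frac{df(t)}{dt} &= -\lambda(t)\, f(t), \qquad f(t) = \beta_0 \ \text{for } t < \tau, \\
\lambda(t) &= \begin{cases}
  0, & t < \tau, \\
  p(t), & \tau \le t < \tau + \gamma, \\
  \lambda_{\max}, & t \ge \tau + \gamma,
\end{cases} \\
p(\tau) &= 0, \quad p'(\tau) = 0, \quad p(\tau + \gamma) = \lambda_{\max}, \quad p'(\tau + \gamma) = 0 .
\end{aligned}
```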

The coefficients of this polynomial can be found by solving a linear system (see Appendix A). Importantly, the cubic polynomial used to define the transition in the rate function does not introduce additional free parameters into the model. Its four coefficients are entirely determined by the continuity and differentiability constraints imposed at the boundaries of the transition period. Therefore, the polynomial serves strictly as a smooth interpolant that ensures a gradual change in the rate function and does not add flexibility in terms of parameter estimation. As a consequence, the number of parameters estimated in the DEM remains comparable to that of the other models considered. Under this specification, it is possible to obtain the closed-form analytical expression for the function f presented in Equation (8).
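A minimal sketch of that linear system is shown below, written in local time s = t - tau so that the constraints sit at s = 0 and s = gamma. This local parameterization and the function names are our own (hypothetical) choices and may differ from Appendix A; the constraints are zero value and slope at the CP, and value lam_max with zero slope at the end of the transition.

```python
import numpy as np


def cubic_transition_coefficients(gamma, lam_max):
    """Solve for (a0, a1, a2, a3) in p(s) = a0 + a1*s + a2*s**2 + a3*s**3,
    where s = t - tau is the time elapsed since the CP (hypothetical parameterization)."""
    A = np.array([
        [1.0, 0.0, 0.0, 0.0],                        # p(0) = 0
        [0.0, 1.0, 0.0, 0.0],                        # p'(0) = 0
        [1.0, gamma, gamma**2, gamma**3],            # p(gamma) = lam_max
        [0.0, 1.0, 2.0 * gamma, 3.0 * gamma**2],     # p'(gamma) = 0
    ])
    rhs = np.array([0.0, 0.0, lam_max, 0.0])
    return np.linalg.solve(A, rhs)


def transition_rate(s, coef):
    """Evaluate the cubic p(s) for s in [0, gamma]."""
    s = np.asarray(s, dtype=float)
    return coef[0] + coef[1] * s + coef[2] * s**2 + coef[3] * s**3


coef = cubic_transition_coefficients(gamma=3.0, lam_max=0.2)
```

Solving the system by hand gives a0 = a1 = 0, a2 = 3 lam_max / gamma^2 and a3 = -2 lam_max / gamma^3, i.e. p = lam_max (3u^2 - 2u^3) with u = s / gamma, which confirms that no free coefficient remains once gamma and lam_max are fixed.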

After expanding the expression in the first part of Equation (8), the leading constant can be thought of as an intercept-like parameter for the linear segment before the CP. Applying straightforward algebra, the exponential expression in (8) can be expanded further, and a suitable redefinition of its constants makes the structure of the exponential decay clearer.
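Under an assumed notation (beta_0 the initial level, tau the CP, gamma the transition length, lambda_max the maximum rate, decay written as df/dt = -lambda(t) f(t)) and the cubic transition lambda_max(3u^2 - 2u^3) with u = (t - tau)/gamma, which is the cubic satisfying the four boundary constraints, integrating the rate gives a closed form of the following type. This is our own derivation and need not coincide term by term with Equation (8).

```latex
% Own derivation under assumed notation; s = t - \tau
\begin{aligned}
\int_{\tau}^{t} \lambda(x)\,dx &=
\begin{cases}
  0, & t < \tau, \\
  \lambda_{\max}\left(\dfrac{s^{3}}{\gamma^{2}} - \dfrac{s^{4}}{2\gamma^{3}}\right), & \tau \le t < \tau + \gamma, \\
  \lambda_{\max}\left(t - \tau - \dfrac{\gamma}{2}\right), & t \ge \tau + \gamma,
\end{cases} \\
f(t) &= \beta_0 \exp\left\{-\int_{\tau}^{t} \lambda(x)\,dx\right\}.
\end{aligned}
```

In this sketch, the decline phase is a pure exponential decay that behaves as if it had started at tau + gamma/2, the midpoint of the transition period, because the integral of the cubic over the whole transition equals lambda_max gamma / 2.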

Nonetheless, we prefer to present the model by means of Equations (6) and (7), since they provide a clearer interpretation of the parameters.
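The closed form can also be checked numerically. The sketch below assumes the decay DE df/dt = -lambda(t) f(t) with a constant level beta0 before the CP and a cubic transition lam_max(3u^2 - 2u^3), u = (t - tau)/gamma; all names and parameter values are hypothetical. It compares the analytical solution with a classical fourth-order Runge-Kutta integration and checks that the mean stays positive.

```python
import numpy as np


def rate(t, tau, gamma, lam_max):
    """Assumed DEM rate: 0 before tau, cubic on [tau, tau + gamma), lam_max afterwards."""
    s = t - tau
    if s < 0.0:
        return 0.0
    if s < gamma:
        u = s / gamma
        return lam_max * (3.0 * u**2 - 2.0 * u**3)
    return lam_max


def dem_mean(t, beta0, tau, gamma, lam_max):
    """Closed-form solution of df/dt = -rate(t) * f(t) with f = beta0 before tau."""
    s = np.asarray(t, dtype=float) - tau
    cumulative = np.where(
        s < 0.0, 0.0,
        np.where(s < gamma,
                 lam_max * (s**3 / gamma**2 - s**4 / (2.0 * gamma**3)),
                 lam_max * (s - gamma / 2.0)))
    return beta0 * np.exp(-cumulative)


def dem_mean_rk4(t_end, beta0, tau, gamma, lam_max, h=1e-3):
    """Integrate the same DE with classical RK4 on a uniform grid starting at t = 0."""
    n = int(round(t_end / h))
    grid = np.linspace(0.0, t_end, n + 1)
    values = np.empty(n + 1)
    values[0] = f = beta0
    for i in range(n):
        t = grid[i]
        k1 = -rate(t, tau, gamma, lam_max) * f
        k2 = -rate(t + h / 2, tau, gamma, lam_max) * (f + h / 2 * k1)
        k3 = -rate(t + h / 2, tau, gamma, lam_max) * (f + h / 2 * k2)
        k4 = -rate(t + h, tau, gamma, lam_max) * (f + h * k3)
        f = f + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        values[i + 1] = f
    return grid, values


grid, numeric = dem_mean_rk4(20.0, beta0=11.0, tau=10.0, gamma=3.0, lam_max=0.2)
analytic = dem_mean(grid, beta0=11.0, tau=10.0, gamma=3.0, lam_max=0.2)
max_abs_error = float(np.max(np.abs(analytic - numeric)))
```

Because the mean is the initial level multiplied by an exponential factor, it never reaches zero for a positive initial level, unlike a linear post-CP segment, which eventually crosses the time axis.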

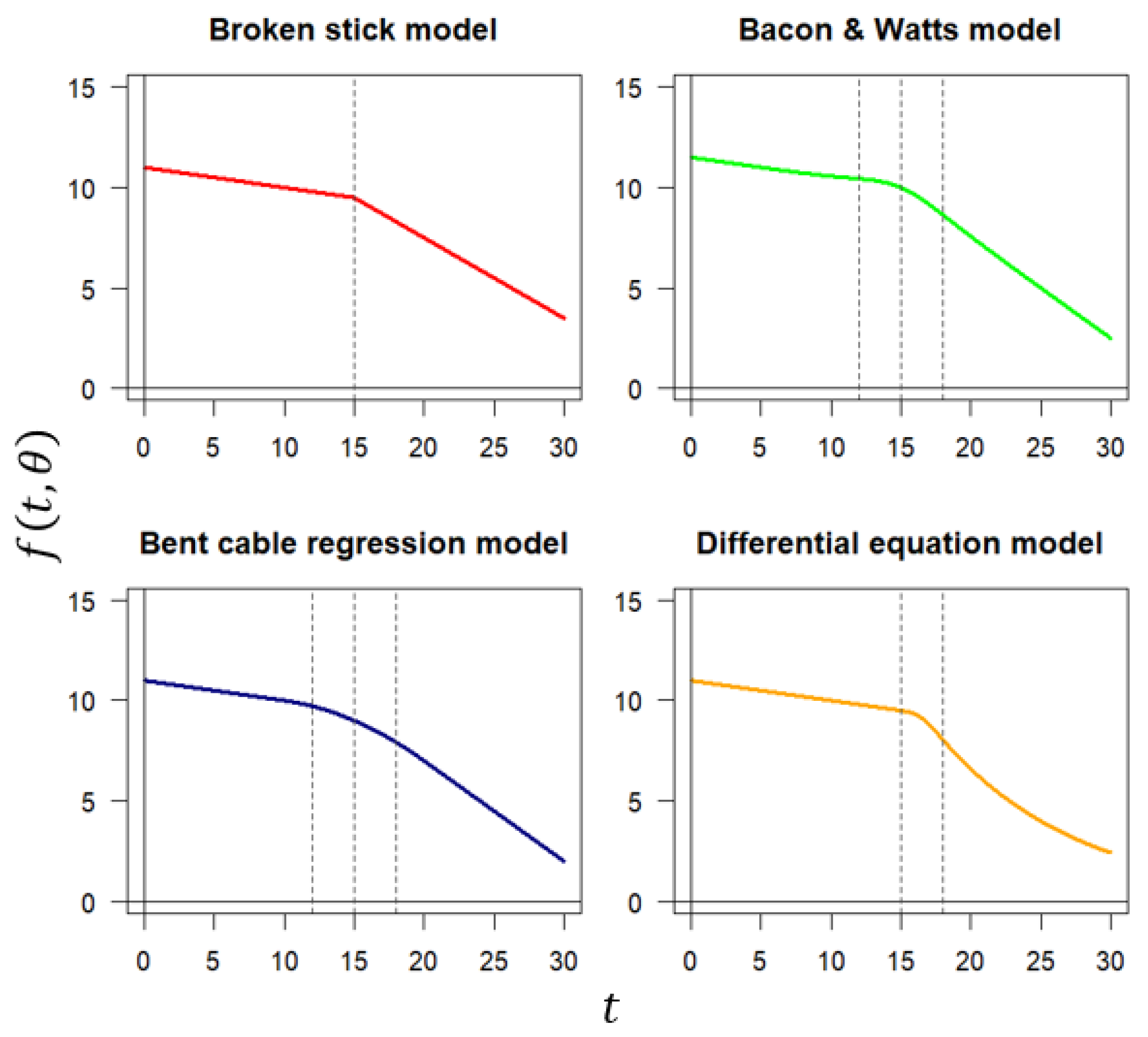

To better understand how these models work, Figure 2 compares the mean trajectories of the four alternatives presented in Equations (3)–(6).

Figure 2 shows how the models divide the mean function into phases. The BSM consists of two linear segments with different slopes abruptly joined at the CP. The other alternatives follow this pattern but add a “transition” phase between the two segments, so that they distinguish three phases.

The transition parameter has a different meaning in each of the models that include it. In the BWM, its meaning is less direct, but a “radius of curvature” (see page 528 of ref. [14]) can be constructed around the CP, allowing for a smoother transition between both regimes. The BSM can be viewed as a limiting case of the BWM when the transition parameter tends to zero, resulting in an abrupt transition. In the BCR, the transition parameter represents the semi-amplitude of the transition period around the CP. Finally, in the DEM, it is the length of the period that connects the initial linear phase and the decay phase. This period starts at the CP and ends at the beginning of the decline phase.

The DEM has the advantage that the value of the function f is never negative: since the solution of an exponential decay DE is the initial level multiplied by an exponential factor, f remains positive whenever the initial level is positive. This property may be advantageous in applications where the response variable is inherently non-negative, such as cognitive scores. Additionally, as seen in Figure 2, it is the only specification capable of capturing non-linear behavior after the CP. This is relevant because, as the Monte Carlo simulation scenarios show, an inappropriate choice of CP model can result in substantial biases in the estimated CP.

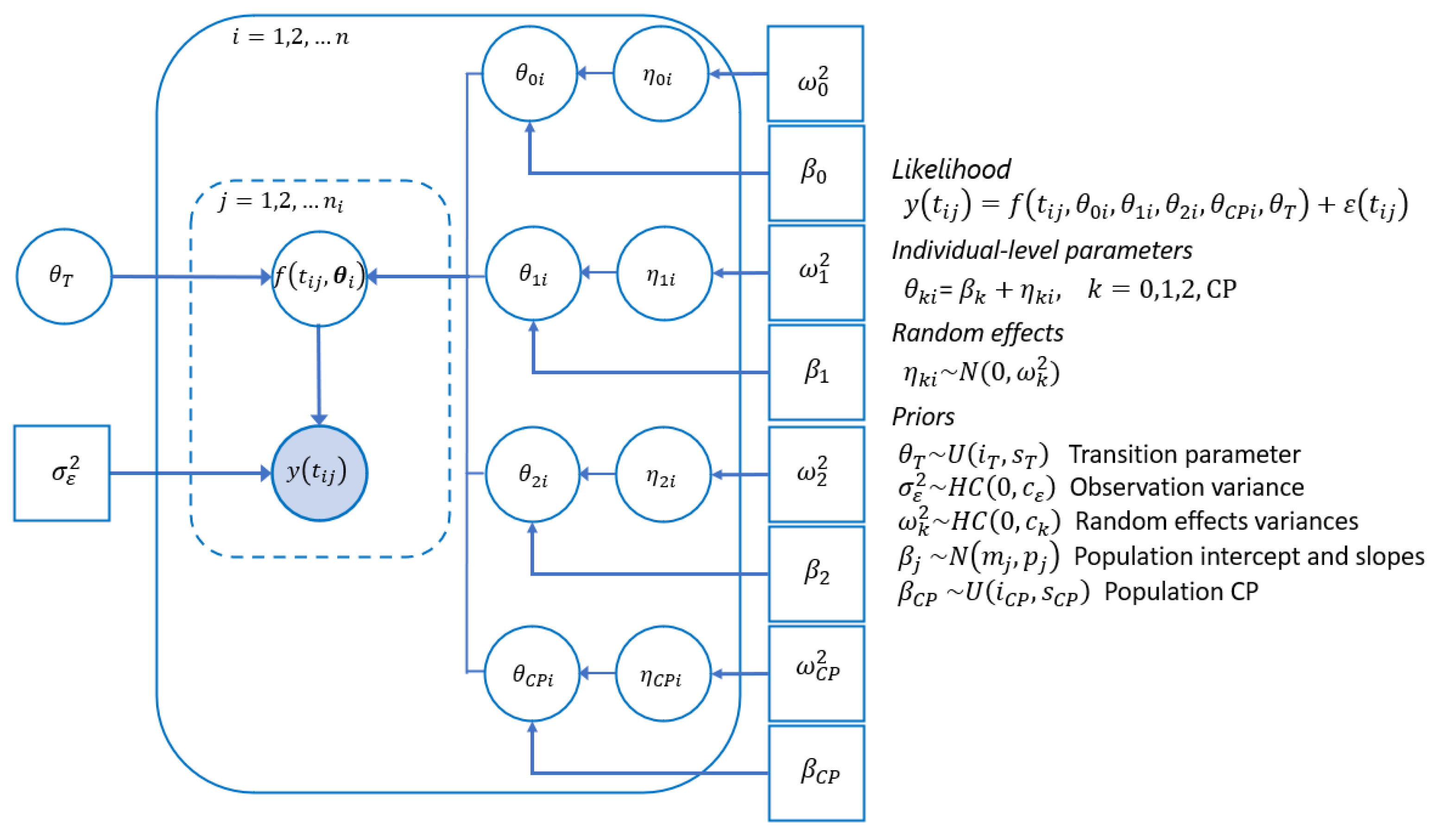

2.3. Bayesian Inference

Because it includes random effects, a CP, and a transition parameter, the proposed DEM belongs to the family of non-linear models and requires iterative and computationally demanding estimation methods. Hence, we opted for a Bayesian estimation approach, which can better handle a (possibly) large number of random effects without resorting to numerical methods to solve high-dimensional integrals [21]. As described below, estimation was based on efficient Markov chain Monte Carlo (MCMC) algorithms.

We selected the following prior distributions. We assigned non-informative Gaussian priors with precision 0.001 (i.e., variance 1000) to the fixed effects. For the CP and the transition parameter, we used uniform priors whose range accounted for the duration of the study. Finally, as suggested in [22,23], we assigned half-Cauchy distributions as weakly informative priors for the parameters associated with the error and random-effects variances. With these choices, the models described above can be written as shown in Figure 3.

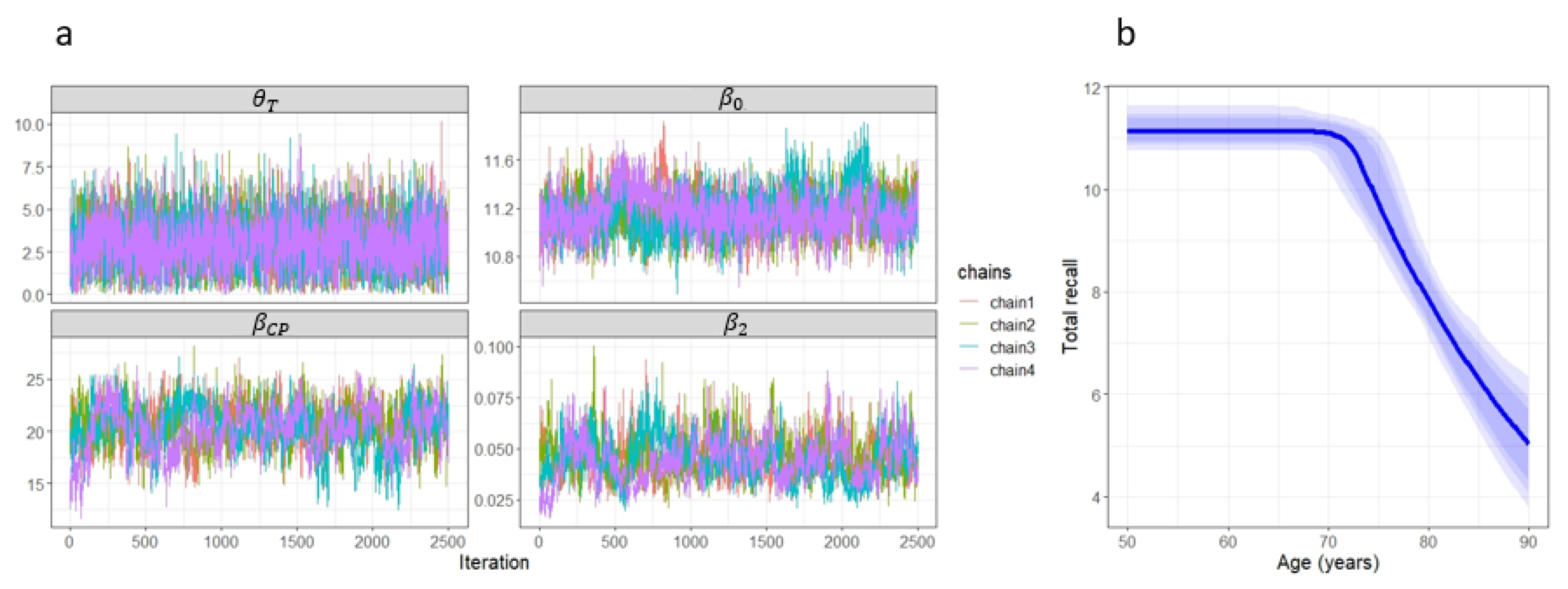
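Gaussian priors specified through a precision (the parameterization used, for example, by the dnorm distribution in JAGS) must be converted to a standard deviation before sampling with tools that expect one. The sketch below draws once from priors of the type described above; the zero prior means, the range (0, 20) of the uniform priors, and the half-Cauchy scale of 2.5 placed on a standard deviation are hypothetical choices for illustration only (the actual settings are listed in Appendix B).

```python
import numpy as np


def precision_to_sd(precision):
    """Convert a Gaussian precision (1 / variance) into a standard deviation."""
    return 1.0 / np.sqrt(precision)


def draw_from_priors(rng, study_duration=20.0, half_cauchy_scale=2.5):
    """One joint draw from illustrative priors (all settings are hypothetical)."""
    sd_fixed = precision_to_sd(0.001)  # precision 0.001 -> variance 1000
    return {
        # non-informative Gaussian priors on fixed effects
        "intercept": rng.normal(0.0, sd_fixed),
        "slope": rng.normal(0.0, sd_fixed),
        # uniform priors spanning the follow-up period
        "change_point": rng.uniform(0.0, study_duration),
        "transition": rng.uniform(0.0, study_duration),
        # half-Cauchy: absolute value of a scaled standard Cauchy draw
        "sigma_error": abs(half_cauchy_scale * rng.standard_cauchy()),
        "sigma_cp": abs(half_cauchy_scale * rng.standard_cauchy()),
    }


rng = np.random.default_rng(2024)
draw = draw_from_priors(rng)
```

A precision of 0.001 corresponds to a standard deviation of about 31.6, which is wide relative to cognitive scores on the scale used in the simulation study.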

In all cases, we ran four parallel Markov chains of the model parameters for 5000 iterations each. After discarding the first 2500 iterations of each chain, we assessed the convergence of the MCMC algorithm using the four remaining samples of size 2500. Convergence was monitored using the R-hat statistic, trace plots, and the effective sample size [24]. These checks were systematically applied in both the simulation study and the application to real-world data from ELSA. All statistical analyses were conducted in R [25], using the MCMC algorithms available in the rstan and rjags libraries [26,27].
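To make the R-hat check concrete, the sketch below computes the classic (non-rank-normalized) split R-hat for one parameter from four post-warm-up chains of 2500 draws, matching the run length above. It is an illustration only: packages such as rstan also provide a rank-normalized variant, so reported values may differ slightly, and the function name is hypothetical.

```python
import numpy as np


def split_rhat(draws):
    """Classic split R-hat for one parameter; draws has shape (n_chains, n_iterations)."""
    draws = np.asarray(draws, dtype=float)
    _, n_iter = draws.shape
    half = n_iter // 2
    # Split every chain into two halves so that within-chain trends also inflate R-hat
    halves = np.concatenate([draws[:, :half], draws[:, half:2 * half]], axis=0)
    n = halves.shape[1]
    between = n * halves.mean(axis=1).var(ddof=1)      # B: between-chain variance
    within = halves.var(axis=1, ddof=1).mean()         # W: mean within-chain variance
    var_plus = (n - 1) / n * within + between / n      # pooled posterior variance estimate
    return float(np.sqrt(var_plus / within))


rng = np.random.default_rng(42)
well_mixed = rng.normal(size=(4, 2500))   # 4 chains x 2500 post-warm-up draws
stuck = well_mixed.copy()
stuck[3] += 3.0                           # one chain exploring a different region
```

Values close to 1 indicate that the chains agree; a common rule of thumb flags values above 1.01 (or 1.1 in older guidance) for further inspection.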

2.4. Model Selection

One way to perform model selection within a Bayesian framework is by using the marginal likelihood [28]. In model selection, K competing models are considered, and researchers are interested in the relative plausibility of each model given the priors and the data. This relative plausibility is contained in the posterior model probability of each model given the data, which, by Bayes' theorem, is proportional to the prior probability of the model multiplied by the marginal likelihood of the data under that model (the likelihood averaged over the prior distribution of its parameters). Thus, starting from the initial representation of model uncertainty contained in the prior model probabilities and the parameter priors, the posterior model probabilities update this uncertainty quantification after observing the data. For the applications considered in this study, the quantities needed to compute these probabilities were obtained using the algorithms of the bridgesampling R library [29].
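Once the log marginal likelihoods of the K models have been estimated (for example, by bridge sampling), turning them into posterior model probabilities is a short computation. The sketch below assumes equal prior model probabilities unless others are supplied and works on the log scale to avoid numerical underflow; the function name and the input values are hypothetical.

```python
import numpy as np


def posterior_model_probs(log_marglik, prior_probs=None):
    """Posterior model probabilities from log marginal likelihoods (one per model)."""
    log_ml = np.asarray(log_marglik, dtype=float)
    if prior_probs is None:
        prior_probs = np.full(log_ml.shape, 1.0 / log_ml.size)  # equal prior odds
    log_post = log_ml + np.log(np.asarray(prior_probs, dtype=float))
    log_post -= log_post.max()   # subtract the maximum before exponentiating
    weights = np.exp(log_post)
    return weights / weights.sum()


# Hypothetical log marginal likelihoods for four models (not results from this study)
probs = posterior_model_probs([-1000.0, -1001.0, -1003.0, -999.5])
```

Subtracting the maximum matters in practice: exp(-1000) underflows to zero in double precision, whereas the differences between log marginal likelihoods are small enough to exponentiate safely.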

Another widely used indicator is the widely applicable information criterion (WAIC) [30], which combines the log pointwise predictive density with a penalty given by the posterior variance of the pointwise log-likelihood, where both the mean and the variance are computed with respect to the posterior distribution of the parameters. Unlike posterior model probabilities, WAIC estimates out-of-sample prediction accuracy. To this end, it uses the log-predictive density (a more general quantity than the mean squared error) and a bias correction that takes into account the effective number of parameters. For the purposes of this study, the WAIC was computed with the algorithms in the loo R library [31].
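Given an S x n matrix of pointwise log-likelihood values (S posterior draws, n observations), the WAIC on the deviance scale can be computed as below, using WAIC = -2(lppd - p_WAIC), where p_WAIC is the sum of posterior variances of the pointwise log-likelihood. This is a sketch of the standard definition rather than the loo implementation, although both are based on the same quantities; the function name is hypothetical.

```python
import numpy as np


def waic(log_lik):
    """WAIC from an (S draws x n observations) matrix of pointwise log-likelihoods."""
    log_lik = np.asarray(log_lik, dtype=float)
    _, n = log_lik.shape
    # lppd_i = log(mean_s exp(log_lik[s, i])), computed stably via log-sum-exp
    col_max = log_lik.max(axis=0)
    lppd_i = col_max + np.log(np.mean(np.exp(log_lik - col_max), axis=0))
    # Effective number of parameters: posterior variance of each pointwise log-likelihood
    p_waic_i = log_lik.var(axis=0, ddof=1)
    waic_i = -2.0 * (lppd_i - p_waic_i)
    return {
        "waic": float(waic_i.sum()),
        "p_waic": float(p_waic_i.sum()),
        "se": float(np.sqrt(n) * waic_i.std(ddof=1)),  # standard error of the total
    }


rng = np.random.default_rng(0)
example = waic(rng.normal(-1.0, 0.3, size=(400, 20)))  # synthetic log-likelihoods
```

Lower WAIC values indicate better expected out-of-sample predictive accuracy, and the standard error helps judge whether differences between models are meaningful.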

The fit indicators described above were introduced to assess the performance of the new DEM against the BSM, BWM, and BCR. In this paper, the emphasis is on accurate estimation of the CP; therefore, the comparison focuses on bias and interval coverage. Lastly, to explore the overall fit of each model, the posterior model probabilities and WAIC values are also reported.

To accomplish this, we designed three simulation-based experiments. Their aim is to compare the fit of the models in different situations and to determine their performance in estimating the CP. Finally, the performance of the DEM against the other competitors is illustrated using real cognitive data from ELSA.

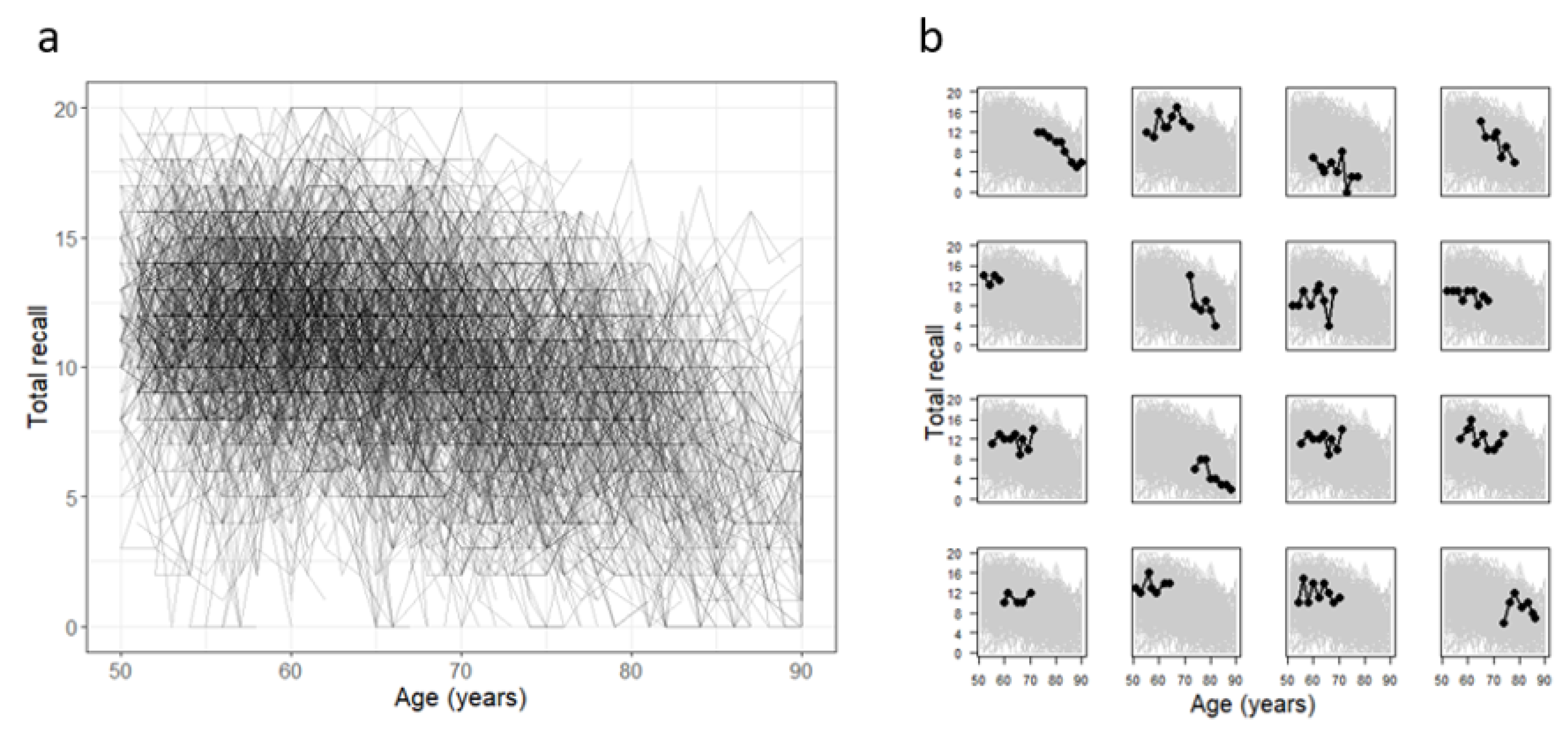

3. Simulation Study

We designed a simulation study based on three experiments. The first uses the DEM as the data-generating process (DGP). In this case, the DEM is expected to provide the best fit, and the other three model specifications are expected to show negative biases when estimating the CP. The second scenario corresponds to a situation in which the DGP is the BSM; we considered it to assess how robust the DEM is when it is not the appropriate model. Finally, the third scenario is similar to the first one, but the follow-up period after the CP is limited, so that the curvature of the trajectory is not pronounced enough to point to the DEM as the best model.

In all scenarios, we simulated 1000 data sets, each composed of 50 individuals observed on 10 occasions at random times between 0 and 20. The pre-CP slope parameter is set to 0, since the model is designed for application in studies of cognitive decline, where it is natural to assume that the cognitive state of the individuals remains constant until cognitive decline begins. This assumption is consistent with previous literature (e.g., Karr et al., 2018 [1]), which suggests that cognitive performance remains relatively stable prior to decline. For this reason, each scenario considered a horizontal trajectory up to the CP, which was fixed in the middle of the follow-up period. Scenario 2 used a fixed slope after the CP, while Scenarios 1 and 3 used a fixed decline rate. In all cases, the intercept was set at 11, the observation noise had a fixed variance, and the random effects for the intercept, the slope (or rate), and the CP had fixed variances, the last one equal to 2. Finally, the transition parameter was set to 3. The data sets were generated following the pseudo-code presented in Algorithm 1.

The parameters used in the simulation study were selected to reflect typical values observed in empirical cognitive aging research. In particular, the intercept value corresponds to the average total recall score observed among cognitively healthy older adults in the English Longitudinal Study of Ageing (ELSA) [32]. The post-decline rate was set to −0.5, representing a moderate deterioration compatible with prior studies, which report average annual declines in total recall scores ranging from 0.3 to 0.5 points [33]. This value was chosen to ensure that the change point is identifiable without the decline being unrealistically steep. The remaining parameters were chosen to reproduce plausible levels of observation noise and inter-individual variability, and to allow for a meaningful transition period across the different model specifications.

Algorithm 1. Data simulation for the s-th scenario.

1: for k = 1 to N do
2:   for i = 1 to n do
3:     Generate 10 observation times from a uniform distribution between 0 and the end of the follow-up period of scenario s.
4:     Generate the individual parameters from the population-level Equation (2), using the scenario inputs and simulated random effects (see Figure 3).
5:     Generate the observations using the individual-level Equation (1).
6:   end for
7: end for
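A minimal Python sketch of Algorithm 1 for a DEM scenario follows. It assumes that the DEM mean solves df/dt = -lambda(t) f(t) with a cubic transition in the rate (restated below so that the block is self-contained). The sample sizes, time range, intercept (11), CP (10, the middle of 0 to 20), CP random-effect variance (2), and transition parameter (3) come from the text. The text reports a post-decline rate of -0.5, but because the sign convention and scale of the DEM rate are not recoverable here, the maximum rate lam_max, the remaining standard deviations, and all function names are hypothetical placeholders.

```python
import numpy as np


def dem_mean(t, beta0, tau, gamma, lam_max):
    """Assumed DEM mean: constant beta0 before tau, cubic rate transition, then exponential decay."""
    s = np.asarray(t, dtype=float) - tau
    cumulative = np.where(
        s < 0.0, 0.0,
        np.where(s < gamma,
                 lam_max * (s**3 / gamma**2 - s**4 / (2.0 * gamma**3)),
                 lam_max * (s - gamma / 2.0)))
    return beta0 * np.exp(-cumulative)


def simulate_dataset(rng, n_ind=50, n_obs=10, t_max=20.0,
                     beta0=11.0, tau=10.0, gamma=3.0, var_tau=2.0,
                     lam_max=0.1, sd_beta0=1.0, sd_lam=0.02, sd_eps=0.5):
    """Inner loop of Algorithm 1; lam_max, sd_beta0, sd_lam, and sd_eps are hypothetical."""
    ids, times, y = [], [], []
    for i in range(n_ind):
        # Observation times: uniform on [0, t_max), sorted for readability
        t = np.sort(rng.uniform(0.0, t_max, size=n_obs))
        # Individual parameters: population value plus a normal random effect
        # (lam_i is not truncated; with these values a negative rate is extremely unlikely)
        b0_i = beta0 + rng.normal(0.0, sd_beta0)
        lam_i = lam_max + rng.normal(0.0, sd_lam)
        tau_i = tau + rng.normal(0.0, np.sqrt(var_tau))
        # Observations: individual mean plus normal measurement error
        y_i = dem_mean(t, b0_i, tau_i, gamma, lam_i) + rng.normal(0.0, sd_eps, size=n_obs)
        ids.append(np.full(n_obs, i))
        times.append(t)
        y.append(y_i)
    return np.concatenate(ids), np.concatenate(times), np.concatenate(y)


def simulate_scenario(n_datasets, seed=1):
    """Outer loop of Algorithm 1: N independent data sets from one random generator."""
    rng = np.random.default_rng(seed)
    return [simulate_dataset(rng) for _ in range(n_datasets)]


datasets = simulate_scenario(3)  # the study used N = 1000
```

Each data set is returned in long format (individual index, time, response), which is the layout most mixed-model software expects.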

In each scenario, the four models were fitted to each of the 1000 data sets using the hierarchical Bayesian non-linear mixed model shown in Figure 3. The prior distributions are listed in Appendix B (see Table A1). Information on the posterior distribution of the parameters was extracted after verifying the convergence of the MCMC algorithm (see the distributions of the convergence diagnostics in Figure A1 in Appendix C). The posterior median (PM) of the CP was used as the point estimator, and credible intervals (CrI) were constructed using the 0.025 and 0.975 sample quantiles (highest posterior density intervals were also constructed but did not differ substantially from those reported in Table 1). The performance of the four models in estimating the CP was evaluated through the bias of the estimate, calculated as the difference between the true value and its corresponding estimate. An estimation was considered successful (unbiased) when the CrI of the bias included zero. Likewise, using the CrI of the CP obtained in each of the 1000 data sets, together with the true value of the CP, the effective coverage of this parameter was approximated for all models in each scenario; ideally, these values should be as close as possible to 95%. Additionally, the posterior samples of each model were used to calculate posterior model probabilities and the WAIC (and its standard error). We followed the pseudo-code presented in Algorithm 2 to obtain estimates, intervals, and fit indicators for every data set.

Algorithm 2. Model fitting and evaluation for the s-th scenario.

Require: N simulated data sets
Ensure: bias, effective coverage, and posterior model probabilities
1: for k = 1 to N do
2:   Fit the BSM, BWM, BCR, and DEM to data set k.
3:   Obtain the PM and the 95% CrI of the CP.
4:   Compute the fit indicators.
5: end for
6: Summarize the values obtained in the loop across the N data sets.
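The CP summary step of Algorithm 2 can be sketched as follows, given posterior draws of the CP from each fitted data set. Following the text, bias is computed as the true value minus the posterior median, and coverage is the share of data sets whose 95% CrI contains the true CP. Summarizing the bias across data sets by its median and its 2.5% and 97.5% quantiles is our assumption, as are the function name and the synthetic posteriors used in the example.

```python
import numpy as np


def summarize_cp(draws_per_dataset, true_cp):
    """Bias and effective coverage of the CP across simulated data sets.

    draws_per_dataset: iterable of 1-D arrays of posterior CP draws, one per data set.
    """
    pm, lower, upper = [], [], []
    for draws in draws_per_dataset:
        q_lo, q_med, q_hi = np.quantile(draws, [0.025, 0.5, 0.975])
        lower.append(q_lo)
        pm.append(q_med)
        upper.append(q_hi)
    pm, lower, upper = np.array(pm), np.array(lower), np.array(upper)
    bias = true_cp - pm                                 # sign convention stated in the text
    covered = (lower <= true_cp) & (true_cp <= upper)   # does the 95% CrI contain the truth?
    bias_lo, bias_hi = np.quantile(bias, [0.025, 0.975])
    return {
        "median_pm": float(np.median(pm)),
        "median_bias": float(np.median(bias)),
        "bias_interval": (float(bias_lo), float(bias_hi)),
        "coverage": float(covered.mean()),
    }


rng = np.random.default_rng(7)
true_cp = 10.0
# Synthetic posteriors: centered on the truth vs. shifted 10 units to the right
centered = [true_cp + rng.normal(0.0, 1.0, size=2000) for _ in range(100)]
shifted = [true_cp + 10.0 + rng.normal(0.0, 1.0, size=2000) for _ in range(100)]
good = summarize_cp(centered, true_cp)
bad = summarize_cp(shifted, true_cp)
```

With this sign convention, a model that systematically places the CP too late yields a negative bias.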

Table 1 presents the PM and the 95% CrI for the CP parameter, its bias, and the effective coverage probability of the CrI. In addition, the posterior model probability and the WAIC are presented for the four models in each scenario.

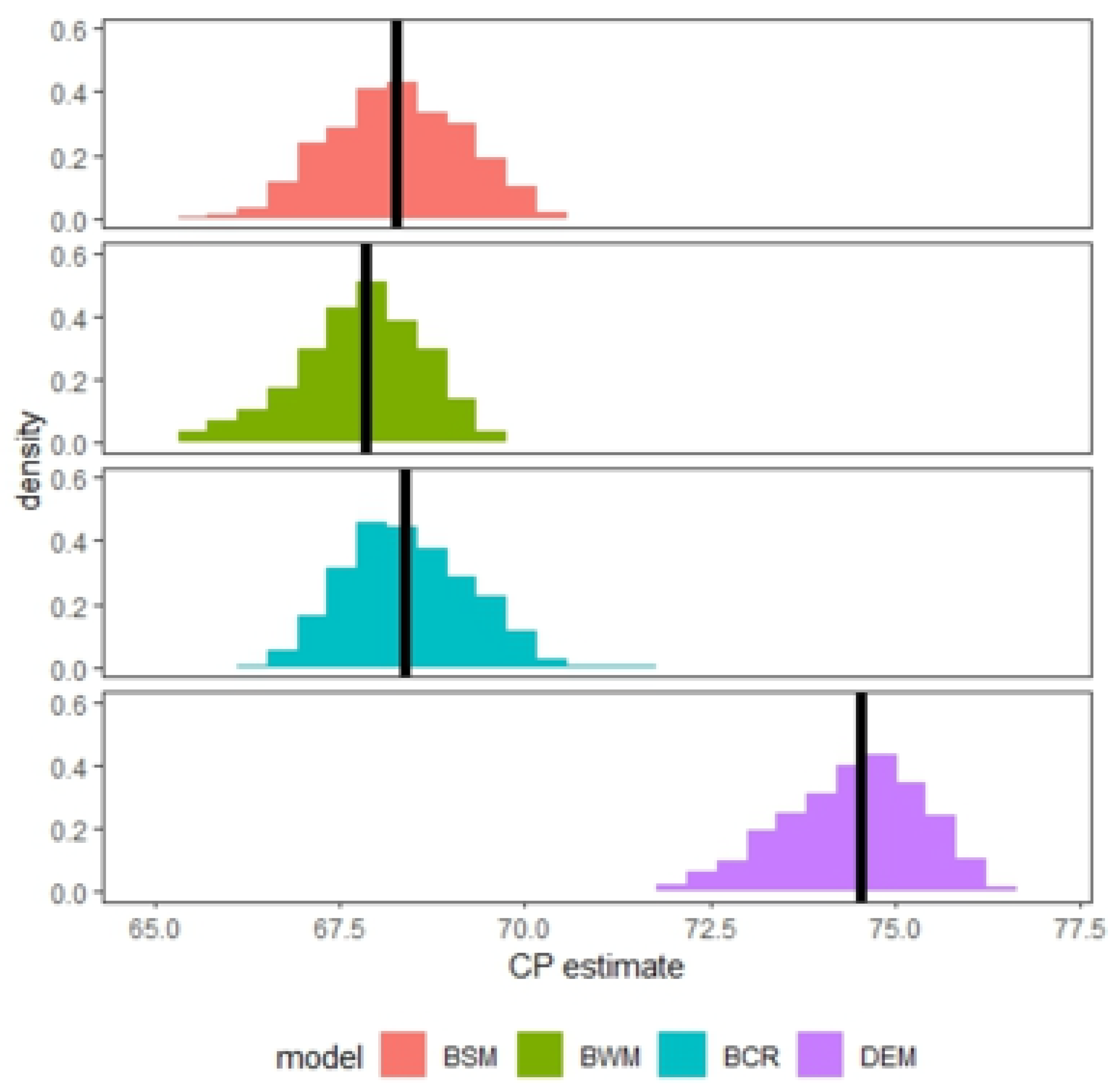

The results presented in Table 1 suggest that the DEM performs best when the decline after the CP shows enough curvature (Scenario 1). This is reflected in its posterior model probability, its lowest WAIC estimate, the unbiased nature of its CP estimator (the credible interval for the bias covers zero without being too wide), and the effective coverage probability of the credible interval for the true CP, which is very close to the nominal value of 95%. Furthermore, the performance of the BSM is suboptimal, as it exhibits a negative bias in the estimation of the CP.

In the other two scenarios, the DEM performs competitively with the other alternatives, even in its least favorable case (Scenario 2). When the true decline is either linear (Scenario 2) or close to linear (Scenario 3), the CP estimated by the DEM has a negligible median bias and high coverage of the true CP value. It is worth noting that, even when the post-CP curvature is not sufficiently pronounced (Scenario 3), the posterior model probability indicates moderate evidence in favor of the DEM.

5. Conclusions

This paper introduced a non-linear alternative to commonly used CP models, based on a new DEM specification, and compared its performance with that of the models most commonly used in the literature. Model fitting was performed in a Bayesian context within the R environment using the rstan or rjags libraries. For the purposes of this study, model estimation with one of these libraries resulted in shorter runtimes than with the other. Nevertheless, parameter estimation could also be undertaken straightforwardly within a frequentist framework. Models were compared using model selection indicators computed from the MCMC samples.

Different simulation scenarios were implemented to explore the performance of the DEM relative to the BSM, BWM, and BCR models. The simulation results showed that, when the cognitive decline phase had a sufficiently pronounced curvature, the DEM provided the best fit and produced a less biased CP estimator, with higher coverage of its credible intervals. However, when there were not enough observations in the period in which the curvature manifests itself, the performance of the DEM decreased. Furthermore, when the DGP did not exhibit curvature in the post-CP phase, the performance of the DEM was on par with that of its competitors.

Finally, we illustrated the models using actual cognitive data from the ELSA study. The results showed a slightly better fit of the DEM, which suggested an onset of the cognitive decline stage an average of 6 years later than the other models. This result is of particular interest in aging research because it provides relevant information for health policy planning. It is worth noting that the model described in this paper is not meant to replace the models proposed in the literature, but rather to serve as a viable alternative that offers good statistical properties, transparent parameter interpretation, and biologically plausible features (such as a mean function that never crosses the x-axis). In practice, it should be preferred when there are reasons to include non-linear behavior in the modeling stage.

Future research will focus on (1) extending the DEM to incorporate explanatory variables (e.g., genetic risk factors) and handle missing data patterns common in longitudinal aging studies, (2) developing open-source software tools to facilitate clinical adoption, (3) applications to other neurodegenerative processes, and (4) personalized intervention timing based on model-derived decline trajectories. These developments will further bridge statistical innovation with clinical practice in aging research.