1. Introduction

Logistic regression stands as a key method in statistical modeling, particularly for binary classification, where the outcome variable is dichotomous. Its widespread use in various fields, including epidemiology, social sciences, and machine learning, is due to its ability to model the probability of an event occurring as a function of one or more predictor variables [1]. Unlike a linear model, which assumes a continuous outcome, logistic regression uses a logistic function to convert the linear combination of predictors into a probability, making it well-suited for categorical outcomes. This ability to estimate probabilities directly makes logistic regression invaluable for risk assessment, predictive modeling, and hypothesis testing in several applied contexts.
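As a small illustration of how the logistic function turns a linear combination of predictors into a probability, the following Python sketch may help. The function names and the example coefficients are hypothetical and are not taken from the study.

```python
import math

def logistic(eta: float) -> float:
    """Map a linear predictor eta to a probability in (0, 1)."""
    # Two branches keep exp() from overflowing for large |eta|.
    if eta >= 0:
        return 1.0 / (1.0 + math.exp(-eta))
    e = math.exp(eta)
    return e / (1.0 + e)

def predict_probability(x, theta):
    """Probability of the event for predictor values x and coefficients theta."""
    eta = sum(xj * tj for xj, tj in zip(x, theta))  # linear combination x'theta
    return logistic(eta)

# Hypothetical coefficients and predictor values, for illustration only.
print(predict_probability([1.0, 2.0], [0.5, -0.25]))
```

Whatever the value of the linear combination, the output stays strictly between 0 and 1, which is what makes the model suitable for a dichotomous outcome.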

However, multicollinearity, characterized by a high correlation among predictor variables, adds complexity to the interpretation and reliability of logistic regression models [2]. Although multicollinearity does not by itself bias the coefficient estimates, it substantially inflates their standard errors, as it does in linear regression [3]. Inflated standard errors lead to smaller Wald statistics for the coefficients, which reduces the likelihood of detecting statistically significant effects. This increases the risk of Type II errors, where genuine relationships between predictors and the outcome may be overlooked [4]. As a result, multicollinearity can lead to misleading conclusions, ultimately compromising the model’s usefulness for both predictive and explanatory purposes. Although the coefficients are not biased, their stability diminishes, so that slight variations in the data produce substantial changes in the estimated coefficients. This instability makes it challenging to determine the true magnitude and direction of the predictors’ effects [5]. Multicollinearity also complicates the assessment of variable importance: when predictors are highly correlated, their individual impacts on the outcome are hard to separate, which risks misleading conclusions about their influence [6]. The interpretation of odds ratios, which are central to logistic regression, can also be problematic. With inflated standard errors, the confidence intervals around the odds ratios broaden, making it difficult to draw precise inferences about the magnitude of the effects. This complicates the practical application of the model findings [7].
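To give a rough sense of scale for this inflation, the sketch below computes the variance inflation factor (VIF) for the simplest case of two predictors with correlation r, where VIF = 1/(1 - r^2). The VIF is not used in the study itself. It is a linear-model quantity and is shown here only as an indicative illustration, since in logistic regression the corresponding quantity depends on the weighted matrix rather than on the raw correlations alone.

```python
def vif_two_predictors(r: float) -> float:
    """Variance inflation factor for either of two predictors with correlation r."""
    return 1.0 / (1.0 - r * r)

for r in (0.80, 0.90, 0.95, 0.99):
    vif = vif_two_predictors(r)
    # The standard error grows by sqrt(VIF) relative to uncorrelated predictors.
    print(f"r = {r:.2f}: VIF = {vif:6.2f}, SE inflation = {vif ** 0.5:5.2f}")
```

Under this simplification the inflation factor rises from about 2.8 at r = 0.80 to about 50 at r = 0.99, which is why confidence intervals widen so sharply as the predictors approach collinearity.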

To mitigate multicollinearity in logistic regression, researchers often use regularization techniques like Ridge Regression, Lasso, and Elastic Net [8]. These methods penalize the size of the coefficients, which counteracts the variance inflation caused by correlated predictors, stabilizes the coefficient estimates, and improves the overall reliability and interpretability of the model.

Hoerl and Kennard (1970) introduced ridge regression to address multicollinearity in engineering data. Their work showed that a nonzero ridge parameter (k) can give the ridge estimator a lower mean squared error (MSE) than the variance of the Ordinary Least Squares (OLS) estimator [9]. Since their pioneering contribution, extensive research has refined ridge regression, and many authors have proposed new estimators for the ridge parameter. Notable contributions include those of McDonald & Schwing, 1973; Hoerl et al., 1975; McDonald & Galarneau, 1975; J. F. & P, 1976; Dempster et al., 1977; Gibbons, 1981; Schaefer et al., 1984; Schaefer, 1986; Walker & Birch, 1988; Kibria, 2003; Khalaf & Shukur, 2005 [10,11,12,13,14,15,16,17,18,19,20] and very recently Muniz & Kibria, 2009; Månsson et al., 2010; Kibria et al., 2012; Hefnawy & Farag, 2014; Aslam, 2014; A. V. Dorugade, 2014; Arashi & Valizadeh, 2015; Ayinde & Lukman, 2016; Lukman & Ayinde, 2017; Melkumova & Shatskikh, 2017; Lukman et al., 2018, 2019; Herawati et al., 2018, 2024; Yüzbaşı et al., 2020; Golam Kibria & Lukman, 2020; Kibria, 2023; Hoque & Kibria, 2023; Mermi et al., 2024; Nayem et al., 2024; Hoque & Kibria, 2024; Yasmin & Kibria, 2025, among others [20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40]. These contributions have played a critical role in the ongoing refinement of ridge regression techniques, ensuring their continued relevance in statistical modeling and analyses.

Despite extensive research on ridge regression, relatively little attention has been devoted to its application in logistic regression. Schaefer et al. (1984) presented a logistic ridge regression (LRR) estimator, and later research investigated different approaches for estimating the ridge parameter (k) and evaluated their effectiveness using Monte Carlo simulations [16,17,23]. Recently, Mermi et al. (2024) carried out a thorough comparative evaluation of 366 ridge parameter estimators introduced over various periods, taking into account elements like the number of independent variables, sample size, correlation among predictors, and variance of errors [38]. In the present study, we incorporated 16 of the most effective estimators from Mermi et al. (2024). Furthermore, we utilized seven estimators that Kibria and Lukman (2020) and Kibria (2023) specifically suggested for non-normal population distributions, allowing a thorough assessment of logistic ridge regression across different modeling scenarios [34,36].

In the presence of multicollinearity, selecting an optimal estimator requires a careful balance between minimizing the MSE and maximizing the classification accuracy. MSE minimization is crucial for obtaining precise parameter estimates and reducing prediction errors, particularly in regression contexts, where deviations from true values carry significant implications [5]. However, an exclusive focus on the MSE may fail to account for the importance of classification accuracy, especially in binary or categorical response settings, where correct classification is critical for decision-making [7]. The selection of an estimator must weigh these competing metrics, emphasizing MSE reduction where precise numerical predictions are paramount and prioritizing classification accuracy when robust categorical predictions are the primary objective. As evidenced by numerous simulation studies, the optimal estimator is often context-dependent, varying with the application and the relative costs of prediction errors versus misclassifications. Unlike much of the existing literature, which focuses predominantly on linear models or limits evaluation to MSE, this study emphasizes both MSE and classification accuracy to provide a more comprehensive assessment of model performance in the presence of multicollinearity. While previous studies have explored various ridge estimators in linear regression contexts, there remains a significant gap in systematically evaluating their effectiveness within logistic regression frameworks, particularly under high multicollinearity and non-normal data conditions. By incorporating recently developed ridge parameter estimators tailored for such scenarios, this study aims to address this gap and offer practical guidance for the selection of estimators in binary classification problems.

This research conducts an in-depth comparison of 23 ridge regression estimators alongside traditional logistic regression (LR), Lasso, Elastic Net (EN), and generalized ridge regression (GRR). The comparison goes beyond the typical emphasis on MSE by also assessing classification accuracy in situations of multicollinearity. The objective of this study is to enhance the existing literature on ridge regression by systematically evaluating the performance of estimators under various levels of multicollinearity. Additionally, real-world datasets are used to corroborate the findings obtained from simulations, thereby demonstrating the practical applicability of the methodologies employed.

2. Methodology

In regression analysis, let $Y$ be the response, $X$ be a matrix of predictors, and $\theta$ be a parameter vector, so that

$$Y = X\theta + \varepsilon,$$

where $\varepsilon$ denotes the random error. The OLS estimator of $\theta$ is the following:

$$\hat{\theta}_{OLS} = (X^{\top}X)^{-1}X^{\top}Y.$$

When the response variable is binary, standard OLS regression is no longer suitable, and logistic regression is employed instead. In this section, we present LRR, initially proposed by Schaefer et al. (1984), and explore its enhancements through more recent advancements [16]. The refinements aim to enhance the performance and stability of logistic ridge regression, making it a more robust alternative in cases of multicollinearity.

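Let the $i$th observation of the response, $y_i$, follow a Bernoulli distribution, denoted as $y_i \sim \mathrm{Be}(\pi_i)$, where $\pi_i$ represents the probability of success associated with the $i$th observation. The parameter $\pi_i$ is modeled as a function of the predictors using the logit link, ensuring that predicted probabilities remain within the unit interval:

$$\pi_i = \frac{\exp(x_i^{\top}\theta)}{1 + \exp(x_i^{\top}\theta)}, \quad i = 1, \dots, n,$$

where $x_i^{\top}$ is the $i$th row of $X$, the $n \times (p+1)$ data matrix with $p$ predictors, and $\theta$ is the $(p+1) \times 1$ coefficient vector. The maximum likelihood approach estimates $\theta$ by maximizing the following log-likelihood:

$$l(\theta) = \sum_{i=1}^{n} y_i \log(\pi_i) + \sum_{i=1}^{n} (1 - y_i)\log(1 - \pi_i).$$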

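This can be achieved by setting the first derivative of the log-likelihood equal to zero. As a result, the maximum likelihood estimates are found by solving the resulting equation:

$$\frac{\partial l(\theta)}{\partial \theta} = \sum_{i=1}^{n} (y_i - \pi_i)\,x_i = 0.$$

Because this equation is nonlinear in $\theta$, it is solved iteratively with the Newton-Raphson method, which leads to the following iteratively reweighted least squares solution:

$$\hat{\theta}_{ML} = (X^{\top}\hat{W}X)^{-1}X^{\top}\hat{W}\hat{z},$$

where $\hat{W} = \mathrm{diag}\big(\hat{\pi}_i(1 - \hat{\pi}_i)\big)$, and $\hat{z}$ is the vector with $i$th element $\hat{z}_i = \log\big(\hat{\pi}_i / (1 - \hat{\pi}_i)\big) + (y_i - \hat{\pi}_i) / \big(\hat{\pi}_i(1 - \hat{\pi}_i)\big)$.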

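The asymptotic covariance matrix of the maximum likelihood estimator is the inverse of the negative expected matrix of second derivatives of the log-likelihood:

$$\mathrm{Cov}(\hat{\theta}_{ML}) = \left[-E\left(\frac{\partial^{2} l(\theta)}{\partial \theta\, \partial \theta^{\top}}\right)\right]^{-1} = (X^{\top}\hat{W}X)^{-1},$$

and the asymptotic MSE equals:

$$\mathrm{MSE}(\hat{\theta}_{ML}) = E\big[(\hat{\theta}_{ML} - \theta)^{\top}(\hat{\theta}_{ML} - \theta)\big] = \mathrm{tr}\big[(X^{\top}\hat{W}X)^{-1}\big] = \sum_{j=1}^{p+1} \frac{1}{\lambda_j},$$

where $\lambda_j$ is the $j$th eigenvalue of the $X^{\top}\hat{W}X$ matrix. A key drawback of the maximum likelihood estimate is that its asymptotic variance can inflate when the independent variables are highly correlated, because some eigenvalues $\lambda_j$ become small and the corresponding terms $1/\lambda_j$ become large. To address this multicollinearity issue, Schaefer et al. (1984) proposed using the following LRR estimator [16]:

$$\hat{\theta}_{LRR} = (X^{\top}\hat{W}X + kI)^{-1}X^{\top}\hat{W}X\,\hat{\theta}_{ML}, \quad k \ge 0.$$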
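The fitting steps described above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions rather than the study's implementation (which was written in R): the toy data are hypothetical, the intercept is penalized together with the slopes for simplicity, and the ridge parameter k is fixed by hand instead of being estimated by one of the 23 estimators.

```python
import math

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for c in range(n):
        pivot = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[pivot] = M[pivot], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= f * M[c][j]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x

def probabilities(X, theta):
    """Logistic probabilities pi_i = 1 / (1 + exp(-x_i' theta))."""
    return [1.0 / (1.0 + math.exp(-sum(a * b for a, b in zip(row, theta)))) for row in X]

def weighted_gram(X, w):
    """Return X' W X for the diagonal weight matrix W = diag(w)."""
    p = len(X[0])
    return [[sum(wi * row[a] * row[b] for row, wi in zip(X, w)) for b in range(p)]
            for a in range(p)]

def fit_ml(X, y, iters=25):
    """Maximum likelihood fit by Newton-Raphson steps (equivalent to IRLS)."""
    p = len(X[0])
    theta = [0.0] * p
    for _ in range(iters):
        pi = probabilities(X, theta)
        w = [q * (1.0 - q) for q in pi]
        score = [sum(row[j] * (yi - q) for row, yi, q in zip(X, y, pi)) for j in range(p)]
        step = solve(weighted_gram(X, w), score)
        theta = [t + s for t, s in zip(theta, step)]
    w = [q * (1.0 - q) for q in probabilities(X, theta)]  # weights at the final estimate
    return theta, w

def logistic_ridge(X, theta_ml, w, k):
    """LRR estimate (X'WX + kI)^{-1} X'WX theta_ML for a ridge parameter k >= 0."""
    p = len(theta_ml)
    G = weighted_gram(X, w)
    rhs = [sum(G[a][b] * theta_ml[b] for b in range(p)) for a in range(p)]
    Gk = [[G[a][b] + (k if a == b else 0.0) for b in range(p)] for a in range(p)]
    return solve(Gk, rhs)

# Hypothetical toy data: intercept plus two highly correlated predictors.
points = [(0.0, 0.1), (1.0, 0.9), (2.0, 2.1), (3.0, 2.9)]
successes = [1, 2, 2, 3]  # successes out of 4 trials at each point
X, y = [], []
for (x1, x2), s in zip(points, successes):
    for t in range(4):
        X.append([1.0, x1, x2])
        y.append(1 if t < s else 0)

theta_ml, w = fit_ml(X, y)
theta_lrr = logistic_ridge(X, theta_ml, w, k=1.0)
print("ML :", theta_ml)
print("LRR:", theta_lrr)
```

Setting k = 0 returns the maximum likelihood estimate, while any k > 0 shrinks the coefficient vector toward zero, trading a small bias for a reduction in variance.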

As mentioned previously, numerous estimators of the ridge parameter k have been suggested in the literature for different kinds of models. For this study, we selected the 16 most effective estimators identified by Mermi et al. (2024) based on their performance in different statistical settings [38]. In addition, we incorporated seven estimators introduced by Kibria & Lukman (2020) and Kibria (2023), which are specifically designed for asymmetric data [34,36]. These 23 estimators were selected to ensure a comprehensive comparison that spanned both classical and modern approaches to ridge parameter selection. Including estimators suited for asymmetric data is particularly important because such data structures frequently arise in applied settings and can adversely affect the performance of conventional ridge estimators [9,19]. The comprehensive set of 23 ridge estimators utilized in this study, encompassing both simulation-based analyses and real-life applications, is systematically presented in Table 1.

2.1. Generalized Ridge Regression (GRR)

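In 2017, Yang and Emura presented a GRR estimator that uses non-uniform shrinkage instead of the conventional uniform shrinkage method, replacing the identity matrix with a diagonal matrix $D(\Delta)$ [44].

For a binary response variable $y_i \in \{0, 1\}$, the GRR estimator can be adapted by incorporating it into the penalized logistic regression framework. The parameter estimates $\hat{\theta}_{GRR}$ are derived by minimizing the penalized negative log-likelihood, as follows:

$$\hat{\theta}_{GRR} = \arg\min_{\theta} \left\{ -\sum_{i=1}^{n} \big[ y_i \log(\hat{\pi}_i) + (1 - y_i)\log(1 - \hat{\pi}_i) \big] + \lambda\, \theta^{\top} D(\Delta)\, \theta \right\},$$

where $\hat{\pi}_i$ is the predicted probability from logistic regression, $\lambda$ is the shrinkage parameter, $\Delta$ is the threshold parameter, and $D(\Delta)$ is a diagonal matrix encoding non-uniform penalties for each coefficient [45]:

$$D(\Delta) = \mathrm{diag}\big(w_1(\Delta), \dots, w_p(\Delta)\big), \qquad w_j(\Delta) = \begin{cases} 1/2, & |z_j| \ge \Delta, \\ 1, & |z_j| < \Delta, \end{cases}$$

where $z_j$ is a standardized initial estimate of $\theta_j$, $z_j = \hat{\theta}_j^{0} / \mathrm{SD}(\hat{\theta}^{0})$, and $\hat{\theta}_j^{0} = x_j^{\top}y / x_j^{\top}x_j$ serves as an initial estimator based on a simple componentwise pseudo-regression.

This formulation allows for adaptive shrinkage, where coefficients associated with stronger initial signals (larger $|z_j|$) are penalized less, while weaker signals are shrunk more aggressively, thus promoting both interpretability and generalization in high-dimensional binary classification problems.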
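The adaptive weighting can be sketched as follows. The rule used here (a weight of 1/2 when the standardized initial estimate reaches the threshold and 1 otherwise, with the initial estimate computed column by column) is an assumption based on the usual presentation of the Yang and Emura estimator, and the function name and toy data are hypothetical.

```python
import math

def grr_weights(X, y, delta):
    """Diagonal penalty weights: 0.5 for strong initial signals, 1.0 otherwise."""
    p = len(X[0])
    # Componentwise initial estimates: theta0_j = x_j'y / x_j'x_j (assumed form).
    theta0 = []
    for j in range(p):
        xj = [row[j] for row in X]
        theta0.append(sum(a * b for a, b in zip(xj, y)) / sum(a * a for a in xj))
    # Standardize by the sample standard deviation of the initial estimates.
    mean0 = sum(theta0) / p
    sd0 = math.sqrt(sum((t - mean0) ** 2 for t in theta0) / (p - 1))
    z = [t / sd0 for t in theta0]
    weights = [0.5 if abs(zj) >= delta else 1.0 for zj in z]
    return weights, z

# Hypothetical toy data: the first column tracks y, the second does not.
X = [[1.0, 1.0], [-1.0, 1.0], [1.0, -1.0], [-1.0, -1.0]]
y = [1, 0, 1, 0]
weights, z = grr_weights(X, y, delta=1.0)
print(weights, z)
```

In this toy case the first predictor exceeds the threshold and receives the lighter penalty, while the second keeps the full penalty. The sketch needs at least two predictors whose initial estimates differ, since otherwise the standard deviation is zero.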

2.2. Least Absolute Shrinkage and Selection Operator (Lasso)

Lasso (Tibshirani, 1996) is an effective regression method that conducts variable selection and regularization at the same time, enhancing both the accuracy and interpretability of statistical models [46]. Unlike ridge regression, which penalizes the squared $L_2$ norm of the coefficients, Lasso penalizes their $L_1$ norm: it minimizes the residual sum of squares (or, for a binary response, the negative log-likelihood) subject to a bound on the sum of the absolute values of the coefficients. This constraint promotes sparsity in the model, effectively mitigating multicollinearity and identifying a subset of relevant predictors [46,47]. For the binary logistic model, the Lasso estimator is

$$\hat{\theta}_{Lasso} = \arg\min_{\theta} \left\{ -\frac{1}{n}\, l(\theta) + \lambda \|\theta\|_1 \right\},$$

where $l(\theta)$ is the log-likelihood of the binary logistic model, $\lambda$ is the tuning (regularization) parameter, $\theta$ is the coefficient vector, $\|\theta\|_1 = \sum_{j=1}^{p} |\theta_j|$ is the $L_1$ norm, which shrinks coefficients toward zero, n is the sample size, and p is the number of predictors.
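A one-coefficient illustration may clarify why the absolute-value penalty produces exact zeros while the squared penalty does not. The sketch assumes the simplified objectives 0.5*(z - theta)^2 + lam*|theta| and 0.5*(z - theta)^2 + 0.5*lam*theta^2, where z is a hypothetical unpenalized estimate. This is a textbook special case, not the logistic fitting procedure used in the study.

```python
def soft_threshold(z: float, lam: float) -> float:
    """Minimizer of 0.5*(z - t)**2 + lam*abs(t): shrink z by lam, or set it to zero."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0

def ridge_shrink(z: float, lam: float) -> float:
    """Minimizer of 0.5*(z - t)**2 + 0.5*lam*t**2: proportional shrinkage."""
    return z / (1.0 + lam)

for z in (2.0, 0.3, -1.2):
    print(z, soft_threshold(z, 0.5), ridge_shrink(z, 0.5))
```

Small estimates are set exactly to zero by the soft-thresholding rule, which is the mechanism behind variable selection, whereas the ridge-type rule only scales them down.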

2.3. Elastic Net (EN)

The EN, which was introduced by Zou and Hastie in 2005, extends Lasso by combining penalties from both the $L_1$ norm (Lasso) and $L_2$ norm (ridge), addressing limitations in variable selection [48]. This dual regularization shrinks coefficients like ridge regression while enforcing sparsity by setting some to zero, as in the case of Lasso. The $L_1$ norm ensures model sparsity, while the $L_2$ norm alleviates restrictions on the number of selected predictors, enabling more flexibility [49]. EN’s ability to select more predictors than Lasso makes it a powerful tool for variable identification, as demonstrated in spectral data analysis [50]. For a binary outcome variable, the EN minimizes the penalized negative log-likelihood of the logistic regression model to obtain the optimal parameter vector.

Then the EN estimator becomes:

$$\hat{\theta}_{EN} = \arg\min_{\theta} \left\{ -\frac{1}{n}\sum_{i=1}^{n} \big[ y_i \log(\hat{\pi}_i) + (1 - y_i)\log(1 - \hat{\pi}_i) \big] + \lambda \left[ \alpha \|\theta\|_1 + \frac{1 - \alpha}{2} \|\theta\|_2^{2} \right] \right\},$$

where $\hat{\pi}_i$ is the predicted probability that $y_i = 1$, $\theta$ is the coefficient vector, $\lambda$ is the regularization strength, and $\alpha$ is the weight between the $L_1$ norm and $L_2$ norm ($\alpha = 0$: Ridge, $\alpha = 1$: Lasso).
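The penalized objective can be evaluated directly, as in the sketch below. It assumes the common parameterization in which the ridge part carries a factor of (1 - alpha)/2 and the log-likelihood is averaged over the sample. Function names are hypothetical, and no optimizer is included.

```python
import math

def elastic_net_penalty(theta, lam, alpha):
    """lam * (alpha * ||theta||_1 + (1 - alpha)/2 * ||theta||_2^2)."""
    l1 = sum(abs(t) for t in theta)
    l2_sq = sum(t * t for t in theta)
    return lam * (alpha * l1 + 0.5 * (1.0 - alpha) * l2_sq)

def penalized_nll(X, y, theta, lam, alpha):
    """Average negative log-likelihood of the logistic model plus the EN penalty."""
    nll = 0.0
    for row, yi in zip(X, y):
        eta = sum(a * b for a, b in zip(row, theta))
        pi = 1.0 / (1.0 + math.exp(-eta))
        nll -= yi * math.log(pi) + (1 - yi) * math.log(1.0 - pi)
    return nll / len(y) + elastic_net_penalty(theta, lam, alpha)

theta = [1.0, -2.0]
print(elastic_net_penalty(theta, lam=2.0, alpha=1.0))  # pure Lasso penalty
print(elastic_net_penalty(theta, lam=2.0, alpha=0.0))  # pure ridge penalty
print(elastic_net_penalty(theta, lam=2.0, alpha=0.5))  # equal mix
```

Moving alpha between 0 and 1 interpolates between the ridge and Lasso penalties, so a single routine covers both special cases.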

In this study, the optimal tuning parameter $\lambda$ for GRR, Lasso, and EN was obtained using 5-fold cross-validation.
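The cross-validation step can be outlined as below. This is a generic sketch: `fit` and `loss` are hypothetical callables standing in for the model-fitting routine and the validation criterion, neither of which is specified in detail here, and the candidate grid is illustrative.

```python
import random

def kfold_indices(n, k=5, seed=0):
    """Shuffle the indices 0..n-1 and deal them into k roughly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def cross_validate(n, lambdas, fit, loss, k=5, seed=0):
    """Return the candidate lambda with the smallest average held-out loss."""
    folds = kfold_indices(n, k, seed)
    best_lam, best_avg = None, float("inf")
    for lam in lambdas:
        total = 0.0
        for held_out in folds:
            held = set(held_out)
            train = [i for i in range(n) if i not in held]
            model = fit(train, lam)          # fit on the other k-1 folds
            total += loss(model, held_out)   # evaluate on the held-out fold
        avg = total / k
        if avg < best_avg:
            best_lam, best_avg = lam, avg
    return best_lam

# Toy usage with placeholder callables: the "model" is just lambda itself.
best = cross_validate(23, [0.1, 0.3, 1.0],
                      fit=lambda train, lam: lam,
                      loss=lambda model, held_out: (model - 0.3) ** 2)
print(best)
```

Each observation is held out exactly once, so every candidate value is scored on data that did not contribute to its fit.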

2.4. Simulation Study

The objective of this research is to evaluate the effectiveness of the leading ridge estimators against LR, Lasso, EN, and GRR, focusing on minimizing MSE and enhancing accuracy. Because a theoretical comparison is infeasible, a Monte Carlo simulation study was performed using the R programming language [51]. The predictors were generated following a well-established procedure [19]:

$$x_{ij} = (1 - \rho^{2})^{1/2} z_{ij} + \rho\, z_{i,P+1}, \quad i = 1, \dots, n, \; j = 1, \dots, P,$$

where $\rho$ governs the correlation between two predictors (under this construction, any two predictors have correlation $\rho^{2}$), $z_{ij}$ denotes the independent pseudo-random variable, and P indicates the number of independent variables. Moreover, the response, $y_i$, was obtained through the Bernoulli($\pi_i$) distribution, where:

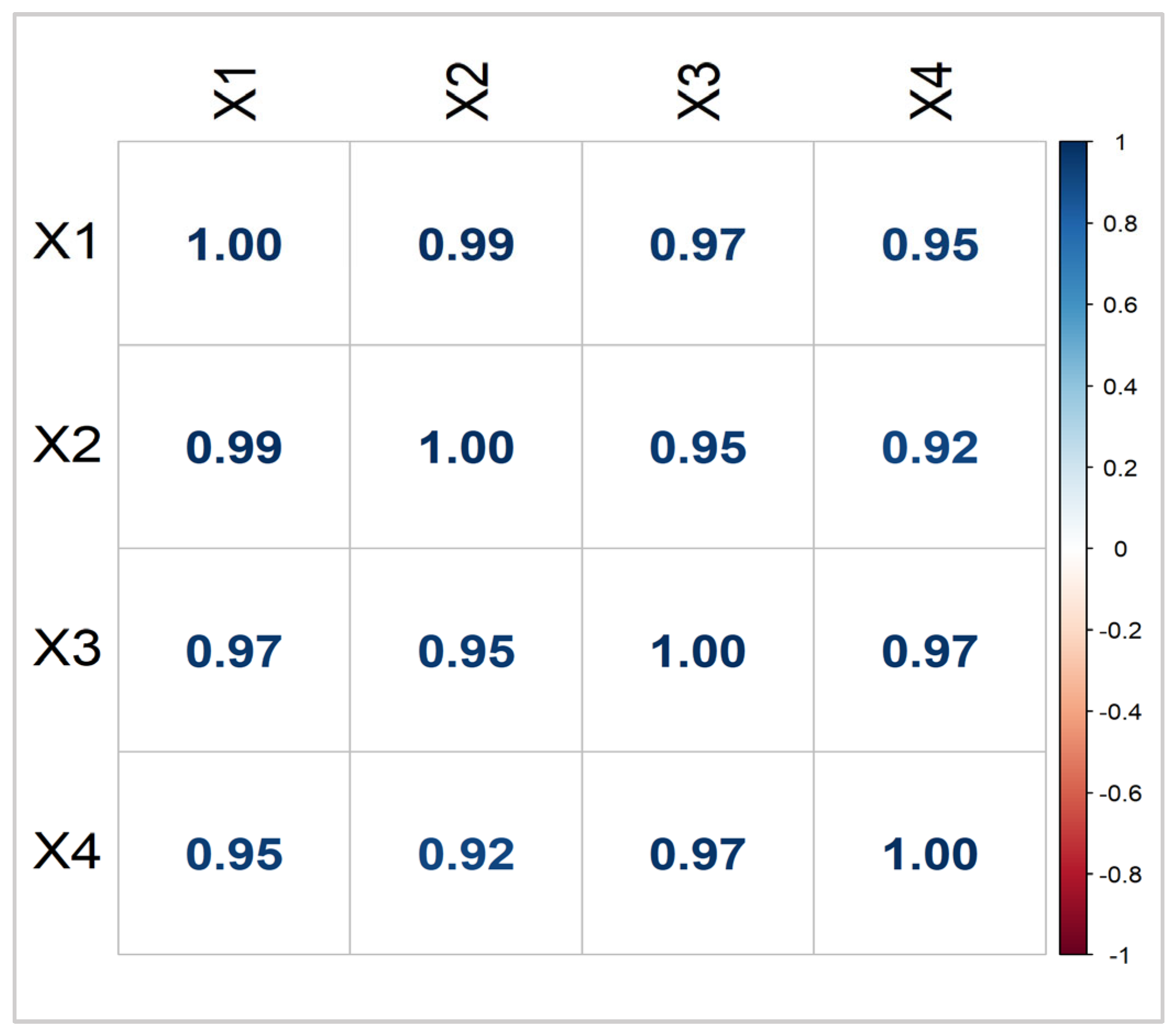

In the modeling process, we considered three values for the number of independent variables (P = 3, 5, and 10), four levels of correlation between the predictors (0.80, 0.90, 0.95, and 0.99), and three sample sizes (n = 100, 200, and 300). The predictors were generated according to the chosen values of P, the correlation level, and n. To confirm the reliability of the findings, each experiment was replicated 5000 times.
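One replication of this data-generating scheme might look like the following sketch. It assumes the commonly used construction in which each predictor mixes its own noise term with one shared term, standard normal pseudo-random variables, a logistic model without intercept, and a coefficient vector of unit length. These choices and all names are assumptions made for illustration.

```python
import math
import random

def simulate(n, P, rho, theta, seed=0):
    """Generate n rows of P correlated predictors and a Bernoulli response."""
    rng = random.Random(seed)
    X, y = [], []
    for _ in range(n):
        z = [rng.gauss(0.0, 1.0) for _ in range(P + 1)]  # z[P] is the shared term
        x = [math.sqrt(1.0 - rho ** 2) * z[j] + rho * z[P] for j in range(P)]
        eta = sum(a * b for a, b in zip(x, theta))
        pi = 1.0 / (1.0 + math.exp(-eta))
        X.append(x)
        y.append(1 if rng.random() < pi else 0)
    return X, y

def pearson(a, b):
    """Sample Pearson correlation between two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    va = sum((u - ma) ** 2 for u in a)
    vb = sum((v - mb) ** 2 for v in b)
    return cov / math.sqrt(va * vb)

theta = [3 ** -0.5] * 3  # unit-length coefficient vector (illustrative)
X, y = simulate(n=200, P=3, rho=0.9, theta=theta, seed=1)
print(pearson([row[0] for row in X], [row[1] for row in X]))
```

Under this construction the correlation between any two predictors equals rho squared in the population, so the printed sample correlation should be close to 0.81 when rho = 0.9.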

Following the theoretical foundation established by Newhouse and Oman (1971), which posits that the MSE is minimized when the coefficient vector $\theta$ aligns with the normalized eigenvector corresponding to the largest eigenvalue of the $X^{\top}X$ matrix, the simulation study computes the MSE by comparing the estimated coefficient vectors, $\hat{\theta}$, derived from the various models, to the true $\theta$ [52]. This true $\theta$ is determined for each simulation by extracting and normalizing the eigenvector associated with the largest eigenvalue of $X^{\top}X$, ensuring $\theta^{\top}\theta = 1$, which provides a theoretically grounded benchmark for evaluating estimator performance through the average squared difference between $\hat{\theta}$ and $\theta$. The average estimated MSE was computed over all simulations using the following formula [39]:

$$\mathrm{MSE}(\hat{\theta}) = \frac{1}{N}\sum_{r=1}^{N} (\hat{\theta}_r - \theta)^{\top}(\hat{\theta}_r - \theta),$$

where $\hat{\theta}_r$ is the estimate obtained in the $r$th replication, $\theta$ is the true parameter vector, and N = 5000.
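The benchmark vector and the averaged squared error can be sketched in a few lines. Power iteration is used here only as a dependency-free way to obtain the leading eigenvector and is not necessarily the routine used in the study. The function names and the small matrices are hypothetical.

```python
import math

def gram(X):
    """Return X'X for a list-of-rows matrix X."""
    p = len(X[0])
    return [[sum(row[a] * row[b] for row in X) for b in range(p)] for a in range(p)]

def leading_eigenvector(A, iters=500):
    """Unit-length eigenvector of the largest eigenvalue, via power iteration."""
    p = len(A)
    v = [1.0] * p  # starting vector; must not be orthogonal to the target
    for _ in range(iters):
        w = [sum(A[a][b] * v[b] for b in range(p)) for a in range(p)]
        norm = math.sqrt(sum(t * t for t in w))
        v = [t / norm for t in w]
    return v

def average_mse(estimates, theta):
    """Mean over replications of the squared distance between estimate and theta."""
    total = sum(sum((e - t) ** 2 for e, t in zip(est, theta)) for est in estimates)
    return total / len(estimates)

theta_true = leading_eigenvector([[2.0, 1.0], [1.0, 2.0]])
print(theta_true)
print(average_mse([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]))
```

An eigenvector is determined only up to sign. With positively correlated predictors and an all-ones starting vector, the iteration settles on the version with positive entries.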

In the evaluation of the classification performance, accuracy was used as a key metric to quantify the proportion of correctly predicted binary outcomes. For each simulation, we applied a 70%/30% training/testing split to the simulated data. Models were trained on the training subset, and predictions were generated for the held-out test set. The predicted values, which were initially probabilities derived from the logistic regression link function, were converted into binary classifications using a threshold of 0.5. Specifically, if the predicted probability exceeded 0.5, the observation was classified as 1; otherwise, it was classified as 0.

The models’ overall accuracy was calculated from the confusion matrix as the proportion of correctly classified observations [53]:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN},$$

where TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively. Accuracy thus serves as a comprehensive metric for the model’s ability to make correct predictions across the entire dataset. For each simulation, accuracy values were calculated, and the average accuracy was then computed across all simulations for each combination of parameters (n, P, and the correlation level) and each model.
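The thresholding rule and the accuracy calculation can be written compactly, as in the sketch below. The function names and the example values are hypothetical.

```python
def classify(probs, threshold=0.5):
    """Label an observation 1 only when its probability exceeds the threshold."""
    return [1 if p > threshold else 0 for p in probs]

def accuracy(y_true, y_pred):
    """(TP + TN) / (TP + TN + FP + FN) from paired true and predicted labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return (tp + tn) / (tp + tn + fp + fn)

predicted = classify([0.2, 0.5, 0.7, 0.9])
print(predicted, accuracy([0, 1, 1, 0], predicted))
```

A probability of exactly 0.5 is assigned to class 0 here, matching the rule that only values exceeding the threshold are classified as 1.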

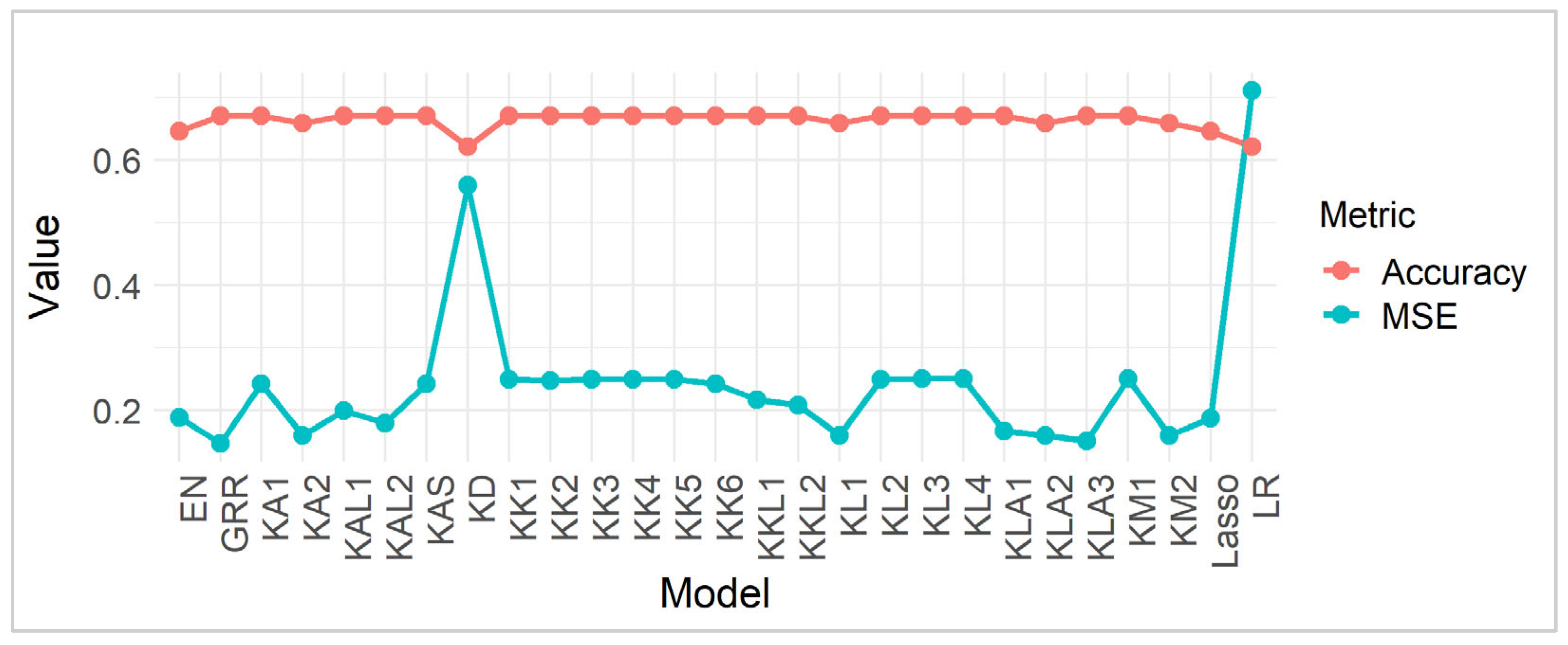

3. Results & Discussion

In this part, we carried out an extensive simulation study to assess the effectiveness of ridge estimators relative to conventional logistic regression, elastic net, Lasso, and generalized ridge regression. The results, summarized in Table 2, Table 3, Table 4 and Table 5, present the MSE and classification accuracy of the estimators under different conditions. Specifically, this study examines the impact of correlation coefficients (0.80, 0.90, 0.95, and 0.99). The findings, systematically detailed in these tables, illustrate the effects of different correlation structures, the number of independent variables, and sample sizes on the estimator performance.

Table 2 compares the MSE and Accuracy across various regression models under moderate multicollinearity (correlation = 0.80) for different predictor counts (

P = 3, 5, 10) and sample sizes (

n = 100, 200, 300). For

P = 3,

consistently achieves the lowest MSE (0.088), demonstrating its effectiveness in low-dimensional settings. As

P increases to 5,

remains optimal, with an MSE of 0.082. However, for

P = 10, GRR performs best, with an MSE of 0.064 at

n = 300, highlighting its capacity for high-dimensional data. LR exhibits the highest MSE, indicating limitations in multicollinear environments. EN and Lasso show competitive performance, especially with larger P and n, benefiting from regularization.

In terms of accuracy, most models show stable performance, with slight improvements as sample size increases. For P = 3, ridge estimators like , , and maintain an accuracy of 0.736 at n = 100, rising to 0.738 at n = 300. With P = 5, models such as GRR, EN, and Lasso achieve accuracies of 0.764 to 0.766 at n = 300, demonstrating effectiveness in moderate complexity. For P = 10, GRR leads with an accuracy of 0.812 at n = 300, showing its robustness in high-dimensional spaces.

Table 3 shows that under high multicollinearity (correlation = 0.90), MSE values increase, indicating that the coefficients become harder to estimate accurately. Considering LR and the penalized alternatives first, LR exhibits the highest MSE, particularly as P increases, indicating its vulnerability to overfitting with more predictors. GRR substantially reduces MSE compared to LR, highlighting the benefits of regularization. As expected, increasing the sample size (n) generally decreases MSE for all methods, as more data provide better estimates of the underlying relationships. For the ridge estimators, we observe generally low and stable MSE across different P and n, suggesting that these methods are less sensitive to the increase in predictor dimension in this context. However, some estimators like

, and

show relatively higher MSE than the others, indicating that these choices of the ridge parameter are less well suited to this setting. Notably,

, and

achieve some of the lowest MSE values, suggesting good performance in this scenario.

Regarding accuracy, the table displays the proportion of correctly classified instances for each estimator under the same conditions. Like the MSE trends, the accuracy tends to improve with increasing sample size (n) for most methods, reflecting the benefit of more data. LR, which suffered from a high MSE, also exhibits the lowest accuracy, reinforcing the detrimental effect of overfitting. In contrast, the penalized methods, Lasso and EN, demonstrate higher accuracy, with GRR achieving the highest accuracy among the estimators. The ridge estimators, except for , and , achieve high and relatively consistent accuracy across different P and n, often surpassing the traditional methods. This suggests that shrinkage stabilizes the coefficient estimates under multicollinearity, leading to better classification performance. The methods , and , which had low MSE, also maintain high accuracy, further indicating their effectiveness. The methods , , and that showed relatively higher MSE also show lower accuracy compared to other ridge estimators, suggesting a link between MSE and classification performance.

Table 4 presents the MSE for various estimators across different parameter settings (

P = 3, 5, 10) and sample sizes (

n = 100, 200, 300) given a correlation of 0.95. Firstly, within the ridge estimators, we observe a general trend of decreasing MSE as the sample size (

n) increases, as expected due to the larger amount of information available for estimation. Notably, the estimators

,

and

consistently demonstrate lower MSE values, particularly as

P increases, suggesting superior performance in capturing the underlying relationship under higher dimensionality. In contrast, the

estimator exhibits significantly higher MSE, indicating poor performance and potential instability. Among the traditional methods, GRR consistently achieves the lowest MSE across all settings, highlighting its effectiveness in handling high correlations and varying dimensions. EN and Lasso also demonstrate reasonable performance, with the MSE decreasing as the sample size increases, albeit not as effectively as the GRR. LR shows the highest MSE, especially with larger

P values, indicating its sensitivity to high correlation and dimensionality.

The accuracy metric presented alongside the MSE provides insights into the classification performance of the estimators. Similar to the MSE trends, we observe that the accuracy generally improves with increasing sample size across all estimators. However, the ridge estimators show remarkably consistent and high accuracy, often reaching 83.7% for P = 10 and n = 300. This suggests that these estimators are robust and effective in capturing underlying patterns, even with increased dimensionality. GRR, EN, and Lasso also exhibit good accuracy, with GRR showing slightly better performance, especially at higher P values. LR, while showing improvement with larger sample sizes, lags the other methods in terms of accuracy, consistent with its higher MSE.

Table 5 reveals the impact of extremely high multicollinearity (correlation of 0.99) on the MSE. The near-perfect correlation increases the MSE values across all models, highlighting the difficulty of accurate prediction under extreme dependencies. The ridge estimators, particularly

, and

consistently exhibit the lowest MSE across all parameter settings (

P = 3, 5, 10) and sample sizes. Importantly, their MSE values decrease as the sample size increases, demonstrating the desired consistency and effectiveness in handling the strong dependencies. In contrast, the

estimator shows significantly higher MSE, indicating poor performance and a lack of robustness to high correlation. Among the classical methods, GRR stands out with the lowest MSE and exhibits a clear trend of decreasing MSE with increasing sample size, suggesting a superior ability to mitigate the impact of high correlation. EN and Lasso also show reasonable performance, with MSE decreasing as the sample size increases, although not as effectively as GRR. LR exhibits the highest MSE, especially with larger

P values, and shows less consistent improvement with increasing sample size, indicating its struggle to handle the strong linear dependency of the data.

From

Table 5, we observe that the accuracy generally improves with increasing sample size across all estimators. The ridge estimators, specifically

,

and

, demonstrate remarkably consistent and high accuracy, often reaching 84.3% for

P = 10 and

n = 300. This suggests that these estimators are robust and effective in capturing the underlying patterns, even with extreme correlations. GRR also exhibits good accuracy, showing slightly better performance, especially at higher

P values. EN and Lasso show comparable accuracy to GRR, while LR lags the other methods in terms of accuracy, consistent with its higher MSE.

5. Concluding Remarks

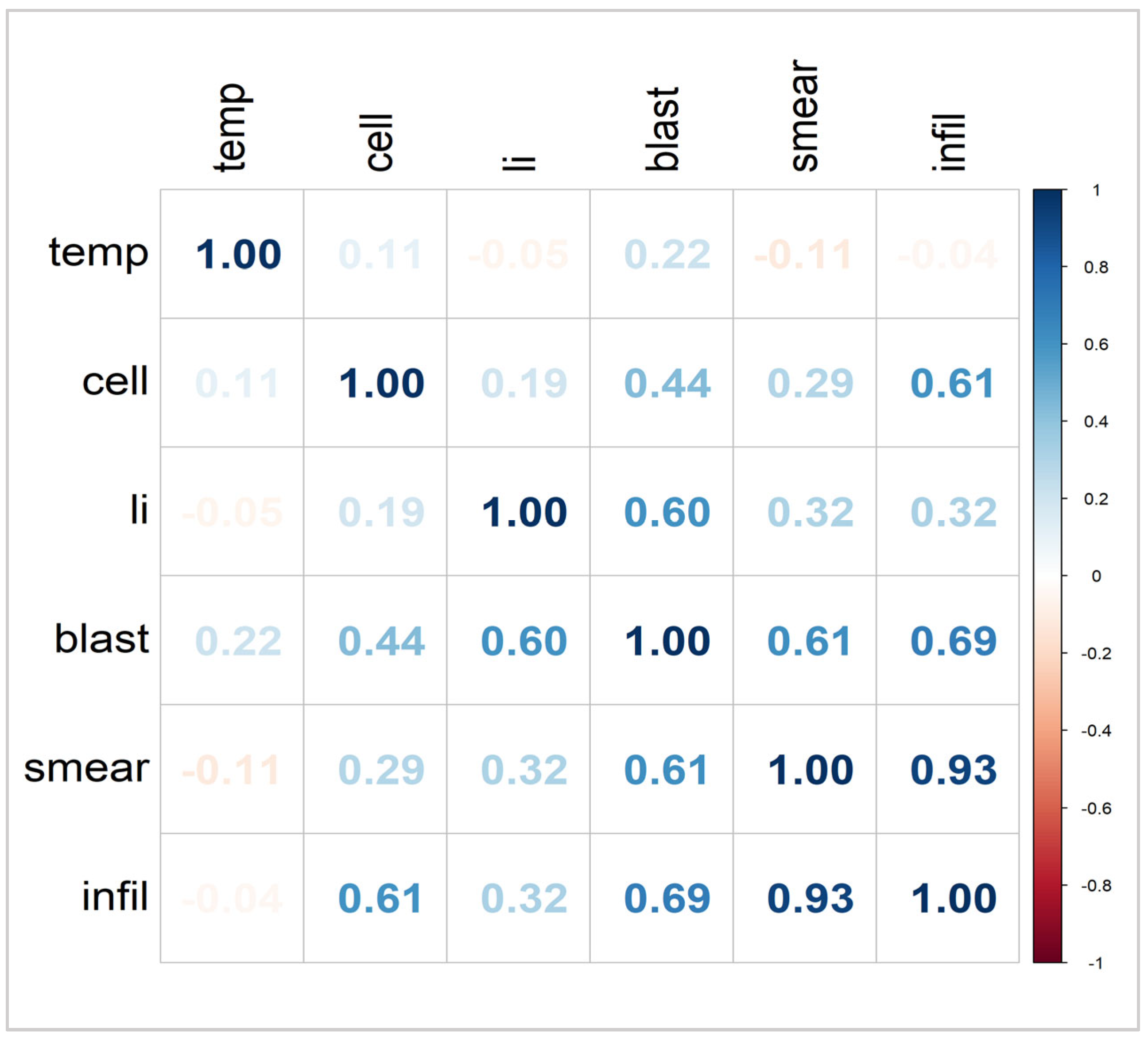

This study aims to evaluate the effectiveness of ridge regression, GRR, Lasso, and Elastic Net within the context of a logistic regression model by balancing the MSE with prediction accuracy. Given that a theoretical assessment of the estimators cannot be performed, a comprehensive simulation study has been carried out to assess their performance across various parametric conditions. The simulation studies, focusing on varying levels of correlation (0.80, 0.90, 0.95, and 0.99), consistently demonstrate the superior performance of ridge estimators with varying penalties, particularly , and , for small samples and GRR for large samples in terms of both MSE and accuracy. These methods exhibit desirable characteristics, such as lower MSE, indicating a better fit and consistent reduction of MSE with increasing sample size, highlighting robustness. Furthermore, they achieve high accuracy, suggesting reliable prediction capabilities even under severe multicollinearity. The simulation findings are corroborated by the real-world applications involving municipal and cancer remission data. Specifically, in the municipal data, characterized by high correlations among predictors, the GRR achieves the lowest MSE, aligning with the simulation results and demonstrating its effectiveness in mitigating overfitting and improving prediction accuracy in large multicollinear datasets. In the cancer remission data, a small-sample scenario with high multicollinearity, the models with lower MSE, including several ridge estimators and GRR, exhibit more realistic accuracy, suggesting robustness and reduced overfitting compared to models with inflated accuracy and high MSE, which are indicative of overfitting.

The alignment of the simulation findings with actual applications highlights the significance of using suitable statistical techniques, especially when dealing with multicollinearity and different sample sizes. The ridge estimators and GRR emerge as robust and reliable choices, demonstrating their ability to balance bias and variance, minimize prediction errors, and maintain high classification accuracy. These findings have significant implications for practical applications, highlighting the potential of these methods to enhance predictive modeling in diverse fields where multicollinearity and limited sample sizes are common challenges.

While ridge estimators and GRR show strong predictive performance, it is important to note the limitations of logistic regression, particularly the assumption of linearity in the logit and the interpretability challenges posed by coefficient shrinkage, which can bias odds ratio estimates [58]. Future research could explore advanced regularization techniques such as Smoothly Clipped Absolute Deviation (SCAD), proposed by Fan and Li (2001) [59], and adaptive Lasso, which offer variable selection capabilities and improved theoretical properties. Bayesian ridge regression also provides a flexible framework for incorporating prior information and quantifying uncertainty. Additionally, machine learning models, such as random forests and gradient boosting, can effectively handle multicollinearity and capture complex nonlinear relationships, offering valuable alternatives for high-dimensional and correlated data [48].