Abstract

In real-life inter-related time series, the counting responses of different entities are commonly influenced by time-dependent covariates, while the individual counting series may exhibit different levels of mutual over- or under-dispersion, or mixed levels of over- and under-dispersion. The current literature still lacks a flexible bivariate time series process that can model such data. This paper introduces a bivariate integer-valued autoregressive process of order 1 (BINAR(1)) with COM-Poisson innovations under time-dependent moments that can accommodate different levels of over- and under-dispersion. Another particularity of the proposed model is that the cross-correlation between the series is induced locally by relating the current observation of one series to the previous-lagged observation of the other series. The model parameters are estimated via a Generalized Quasi-Likelihood (GQL) approach. The proposed model is applied to several real-life series in Mauritius from the transport, finance, and socio-economic sectors.

1. Introduction

In real-life series applications, the current observation in a counting process is commonly influenced by the previous-lagged observation of another related series. A popular example in the literature is the tick-by-tick data from the New York Stock Exchange (NYSE) on the trading intensity of stocks of competing firms. Specifically, Pedeli and Karlis [1] considered the intraday bid and ask quotes of AT&T stock on the NYSE and remarked that the fluctuation in the level of intraday bid quotes of AT&T at a given time point was affected by the level of ask quotes of AT&T at the previous time point. Similar scenarios arise in different socio-economic settings, such as time series of day and night accidents over the same area, or the numbers of thefts or larceny cases and of unemployed people in a populated region. The analysis of such data poses a number of challenges. First, both series are commonly influenced by dynamic time-varying factors that lead to time-changing moments and correlations. Second, the series are often subject to different levels of over-, under-, or mixed dispersion. Among the papers published in this field [1,2,3,4,5,6], there is as yet no bivariate time series process that accommodates a wide range of mixed dispersion under non-stationary conditions with the cross-correlation design specified above.

However, under a simpler cross-correlation design, Jowaheer et al. [7] recently proposed a bivariate integer-valued autoregressive process of order 1 (BINAR(1)M1) with COM-Poisson innovations under time-dependent moments induced through dynamic explanatory variables. The COM-Poisson is a discrete distribution re-introduced by Shmueli et al. [8] that can model a wide range of over- and under-dispersion and has proved its capability in different univariate applications (see Lord et al. [9,10], Sellers and Shmueli [11], Nadarajah [12], Zhu [13], Guikema and Coffelt [14], and Borle [15,16,17]). BINAR(1)M1 is a simple extension of the classical INAR(1) binomial thinning process of McKenzie [18]: Jowaheer et al. [7] considered two INAR(1) series with COM-Poisson innovations in which the cross-correlation stems solely from the correlated COM-Poisson innovations, similar to the bivariate design proposed initially by Pedeli and Karlis [19,20] and Karlis and Pedeli [21], and later by Mamode Khan et al. [22]. For this non-stationary BINAR(1)M1 process, Jowaheer et al. [7] showed through Monte Carlo experiments that the model can effectively simulate and represent real-life situations in which over-, under-, or mixed-dispersed series of different magnitudes are collected. However, the local inter-relationship between current and previous-lagged cross-related observations, as depicted in the stock example above, is ignored.

In the literature, another class of BINAR(1) models (BINAR(1)M2) has been developed to cater for the possible relationship between the current observation of one series and the previous-lagged observation of the other series. Pedeli and Karlis [1] proposed such a BINAR(1)M2 with correlated Poisson innovations, where the inter-relationship was induced both by the cross-related observations and by the bivariate Poisson innovations [23]. In this construction, each counting series marginally follows a Hermite distribution, so the model is appropriate for series exhibiting mild over-dispersion of different magnitudes. However, these two sources of cross-correlation increase the number of parameters to be estimated and complicate the specification of the conditional likelihood. A less complicated version of BINAR(1)M2 (BINAR(1)M3) was proposed by Ristic et al. [2] and Nastic et al. [3], who assumed that the cross-correlation is induced solely by relating the current observation of one series to the previous-lagged observation of the other, with the pair of innovations held independent. Ristic et al. [2] and Nastic et al. [3] developed BINAR(1)M3 with geometric marginals based on the negative binomial (NB) thinning mechanism, which means that BINAR(1)M3 is only suited to series with restricted levels of over-dispersion. Interestingly, Nastic et al. [3] showed that BINAR(1)M3 yields better Akaike information criterion (AIC) and root mean square error (RMSE) values than BINAR(1)M1 with NB innovations by Karlis and Pedeli [21] and BINAR(1)M2 with Poisson innovations by Pedeli and Karlis [1]. Overall, BINAR(1)M2 and BINAR(1)M3 were developed under stationary conditions only and lack the flexibility to model varied levels of dispersion in set-ups comprising time-independent and time-dependent covariates.
This paper develops a versatile non-stationary BINAR(1) model with the same structure as BINAR(1)M3, but with COM-Poisson innovations and binomial thinning, that accommodates different dispersion scenarios as well as the dependence of the current count of one series on the previous-lagged observation of the other series.

As for inference, Conditional Maximum Likelihood Estimation (CMLE) and Least Squares (LS) techniques have mostly been used to estimate the unknown parameters of the existing stationary BINAR(1)M1, BINAR(1)M2, and BINAR(1)M3 models [1,2,3,19,20]. Nastic et al. [3] demonstrated that CMLE and LS asymptotically yield estimates of almost the same efficiency, but LS is less computationally intensive. The computational burden of CMLE was also discussed by Pedeli and Karlis [24], who noticed that in bivariate time series modeling, CMLE is hardly feasible because of the computationally intensive Hessian entries. For the non-stationary BINAR(1)M1 with Poisson innovations developed by Mamode Khan et al. [22], the specification of the conditional likelihood function was rather tedious; instead, they developed a novel Generalized Quasi-Likelihood (GQL) estimator that depends only on the correct specification of the mean scores, the derivatives, and the auto-covariance structure. The auto-covariance was computed in its exact form using the autoregressive structure, with the correlation parameters estimated by the method of moments. Mamode Khan et al. [22] showed that GQL and CMLE yield estimates of comparable asymptotic efficiency (see also Sutradhar et al. [25]), but GQL yields considerably fewer non-convergent simulations. Along similar lines, Sunecher et al. [26] compared LS with GQL for the bivariate integer-valued moving-average process of order 1 (BINMA(1)), where GQL provided more efficient estimates than LS with fewer non-convergent failures. Thus, in this paper, we formulate a new GQL approach, since the bivariate auto-correlation structure of the proposed model differs from that of the BINMA(1) process.

The paper is organized as follows. In Section 2, after outlining the basics of the COM-Poisson model, we construct the new BINAR(1) model with COM-Poisson innovations and derive its properties. In Section 3, the GQL algorithm is implemented to estimate the regression, dispersion, and serial- and cross-dependence parameters. In Section 4, numerical experiments illustrate the different scenarios of over-, under-, and mixed dispersion under various combinations of the serial- and cross-correlation parameters. In Section 5, the model is applied to three real-life time series data sets from Mauritius. First, it is used to analyze the daily numbers of day and night accidents on the motorway from the International Airport to the northern zone of Grand Bay from 1 February to 30 November 2015. Second, it is applied to the intra-day transactions of two competing banks operating on the Stock Exchange of Mauritius (SEM) from 3 August 2015 to 12 October 2015. Third, it is used to analyze a socio-economic problem, the daily numbers of day and night thefts in the capital city of Port Louis from 1 January to 30 November 2015. The paper ends with concluding remarks in Section 6.

2. Model Development

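Suppose that the BINAR(1) model is of the form

$$X_{1t} = p_{11}\circ X_{1,t-1} + p_{12}\circ X_{2,t-1} + R_{1t}, \qquad X_{2t} = p_{21}\circ X_{1,t-1} + p_{22}\circ X_{2,t-1} + R_{2t}, \tag{1}$$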

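where ‘∘’ denotes the binomial thinning operator [27], defined for $p_{ij} \in [0,1]$, $i,j = 1,2$, by

$$p_{ij}\circ X_{j,t-1} = \sum_{l=1}^{X_{j,t-1}} W_{l}^{(ij)},$$

and the so-called counting series $\{W_{l}^{(ij)}\}$ is a sequence of independent and identically distributed Bernoulli random variables with $P(W_{l}^{(ij)} = 1) = p_{ij} = 1 - P(W_{l}^{(ij)} = 0)$, for $l = 1, \ldots, X_{j,t-1}$.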
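The thinning operation and one transition of model (1) can be simulated directly. The sketch below is illustrative only: the function names `binomial_thin` and `binar1_step` are hypothetical, the innovation draw is passed in as a callable that is assumed to return independent $R_{1t}$ and $R_{2t}$, and each of the four thinnings uses its own fresh Bernoulli draws, which is our assumption about how the counting sequences are generated.

```python
import random


def binomial_thin(p, x, rng):
    """Binomial thinning p∘x: the sum of x i.i.d. Bernoulli(p) counting variables."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("thinning probability must lie in [0, 1]")
    if x < 0:
        raise ValueError("x must be a non-negative count")
    # rng.random() lies in [0, 1), so p = 0 never and p = 1 always counts a success
    return sum(1 for _ in range(x) if rng.random() < p)


def binar1_step(x_prev, A, draw_innovations, rng):
    """One transition X_t = A ∘ X_{t-1} + R_t of the bivariate model.

    x_prev           -- (x1, x2), the counts at time t-1
    A                -- [[p11, p12], [p21, p22]], the thinning probabilities
    draw_innovations -- hypothetical callable rng -> (r1, r2), assumed to return
                        independent innovations that do not depend on x_prev
    """
    (p11, p12), (p21, p22) = A
    r1, r2 = draw_innovations(rng)
    # four separate thinnings, each with fresh Bernoulli draws
    x1 = binomial_thin(p11, x_prev[0], rng) + binomial_thin(p12, x_prev[1], rng) + r1
    x2 = binomial_thin(p21, x_prev[0], rng) + binomial_thin(p22, x_prev[1], rng) + r2
    return x1, x2


rng = random.Random(2015)
# Degenerate thinning matrices make the step deterministic, which is handy for checking.
print(binar1_step((5, 7), [[1.0, 0.0], [0.0, 1.0]], lambda g: (0, 0), rng))  # (5, 7)
print(binar1_step((5, 7), [[0.0, 1.0], [1.0, 0.0]], lambda g: (0, 0), rng))  # (7, 5)
```

In a full simulation, `draw_innovations` would return two independent COM-Poisson draws with the current $\lambda_{kt}$ and $\nu_k$.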

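$R_{1t}$ and $R_{2t}$ are the innovation terms for $X_{1t}$ and $X_{2t}$, respectively, and $R_{kt}$, $k = 1,2$, is marginally COM-Poisson with probability function

$$P(R_{kt} = r) = \frac{\lambda_{kt}^{r}}{(r!)^{\nu_k}\, Z(\lambda_{kt},\nu_k)}, \qquad r = 0,1,2,\ldots \tag{3}$$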

2.1. Properties of the COM-Poisson Model

In the above, $Z(\lambda_{kt},\nu_k) = \sum_{j=0}^{\infty} \lambda_{kt}^{j}/(j!)^{\nu_k}$ is the normalizing constant. The COM-Poisson model (3) generalizes several discrete distributions. For instance, the geometric distribution is the special case $\nu = 0$ with $\lambda < 1$; for $\nu = 1$, the model reduces to the Poisson distribution with mean $\lambda$; and as $\nu \to \infty$, it approaches a Bernoulli distribution with success probability $\lambda/(1+\lambda)$. For any $\lambda$ and $\nu$, the COM-Poisson is a member of the exponential family, and the time-dependent covariates enter through a link function for $\lambda_{kt}$, $k = 1,2$, $t = 1,\ldots,T$. Furthermore, $\nu$ is regarded as the dispersion parameter: $\nu > 1$ represents under-dispersion and $\nu < 1$ over-dispersion.
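As a numerical check on the special cases listed above, the probability function (3) can be evaluated with the normalizing constant $Z(\lambda,\nu)$ truncated once further terms are negligible. This is a minimal sketch: the helper names and the truncation tolerance are our own choices, not part of the model.

```python
import math


def com_poisson_log_z(lam, nu, tol=1e-16, max_terms=100_000):
    """log Z(lam, nu) = log sum_{j>=0} lam^j / (j!)^nu via a truncated series on the log scale."""
    if lam <= 0 or nu < 0 or (nu == 0 and lam >= 1):
        raise ValueError("need lam > 0, nu >= 0, and lam < 1 when nu == 0")
    log_lam = math.log(lam)
    log_terms, peak = [], -math.inf
    for j in range(max_terms):
        lt = j * log_lam - nu * math.lgamma(j + 1)
        log_terms.append(lt)
        peak = max(peak, lt)
        # the ratio of successive terms, lam / (j + 1)^nu, never increases, so once a
        # term is negligible relative to the largest one the remaining tail is too
        if lt - peak < math.log(tol):
            break
    return peak + math.log(sum(math.exp(lt - peak) for lt in log_terms))


def com_poisson_pmf(y, lam, nu):
    """P(Y = y) = lam^y / ((y!)^nu Z(lam, nu)), the form of Equation (3)."""
    return math.exp(y * math.log(lam) - nu * math.lgamma(y + 1) - com_poisson_log_z(lam, nu))


# Special cases: Poisson (nu = 1), geometric (nu = 0, lam < 1), near-Bernoulli (large nu)
print(com_poisson_pmf(3, 2.0, 1.0), math.exp(-2.0) * 2.0 ** 3 / 6)
print(com_poisson_pmf(2, 0.4, 0.0), (1 - 0.4) * 0.4 ** 2)
print(com_poisson_pmf(1, 0.5, 50.0), 0.5 / (1 + 0.5))
```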

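For a COM-Poisson variable $Y$ with parameters $(\lambda, \nu)$, the moment generating function (mgf) and probability generating function (pgf) are expressed as

$$M_{Y}(s) = \frac{Z(\lambda e^{s},\nu)}{Z(\lambda,\nu)}, \qquad G_{Y}(s) = \frac{Z(\lambda s,\nu)}{Z(\lambda,\nu)},$$

while the moments of the COM-Poisson model are derived iteratively using

$$E(Y^{r+1}) = \lambda \frac{\partial E(Y^{r})}{\partial \lambda} + E(Y)\,E(Y^{r}), \qquad r > 0.$$

In particular, the mean and variance are given as follows:

$$E(Y) = \lambda\frac{\partial \log Z(\lambda,\nu)}{\partial \lambda}, \qquad \mathrm{Var}(Y) = \lambda\frac{\partial E(Y)}{\partial \lambda}.$$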

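However, these moments can be approximated in closed form using the asymptotic approximation of Minka et al. [28],

$$Z(\lambda,\nu) \approx \frac{\exp(\nu\lambda^{1/\nu})}{\lambda^{(\nu-1)/(2\nu)}\,(2\pi)^{(\nu-1)/2}\sqrt{\nu}},$$

which yields

$$E(Y) \approx \lambda^{1/\nu} - \frac{\nu-1}{2\nu}, \qquad \mathrm{Var}(Y) \approx \frac{\lambda^{1/\nu}}{\nu}, \tag{8}$$

or alternatively, from Equation (8), $\lambda_{kt} = \left(\mu_{kt} + \frac{\nu_k - 1}{2\nu_k}\right)^{\nu_k}$, where $\mu_{kt} = E(R_{kt})$ (see Mamode Khan and Jowaheer [29] and Mamode Khan [30]). Hence, under this parametrization, $(\mu_{kt}, \nu_k)$ is the pair of parameters describing the COM-Poisson variable $R_{kt}$, such that $R_{kt} \sim \mathrm{CMP}(\mu_{kt}, \nu_k)$.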

In the context of the above approximated moments, Minka et al. [28], Shmueli et al. [8], and Zhu [13] presented trial experiments revealing that these approximations are more accurate for large $\lambda$. As also commented by Jowaheer et al. [7], depending on the combination of $\lambda$ and $\nu$, the approximate variance may outweigh the approximate mean (over-dispersion) or the approximate mean may exceed the approximate variance (under-dispersion). For special cases of $\nu$, Nadarajah [12] provides exact expressions for the mean and variance based on the generalized hypergeometric function. However, the approximation by Minka et al. [28] is more suitable here because it covers the full range of $\nu$.
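The accuracy of the approximation in Equation (8) can be examined by comparing it with moments computed from the truncated series. In the sketch below (hypothetical helper names; the truncation point `n_terms` is an assumption and must cover the bulk of the distribution), the approximation is exact in the Poisson case, close for $\lambda = 100$, $\nu = 2$, and far off for small $\lambda$ with $\nu = 0.1$, where it gives a mean of about 4.5 while the series gives a value below 1.

```python
import math


def minka_moments(lam, nu):
    """Approximate mean and variance of Equation (8)."""
    base = lam ** (1.0 / nu)
    return base - (nu - 1.0) / (2.0 * nu), base / nu


def lam_from_mean(mu, nu):
    """Invert the mean approximation: lam = (mu + (nu - 1)/(2 nu))^nu."""
    return (mu + (nu - 1.0) / (2.0 * nu)) ** nu


def exact_moments(lam, nu, n_terms=2000):
    """Mean and variance from the series truncated at n_terms (assumed to hold almost all mass)."""
    log_w = [y * math.log(lam) - nu * math.lgamma(y + 1) for y in range(n_terms)]
    peak = max(log_w)
    w = [math.exp(v - peak) for v in log_w]  # rescaled weights avoid overflow
    z = sum(w)
    mean = sum(y * wy for y, wy in enumerate(w)) / z
    second = sum(y * y * wy for y, wy in enumerate(w)) / z
    return mean, second - mean ** 2


for lam, nu in [(20.0, 1.0), (100.0, 2.0), (0.1, 0.1)]:
    print(f"lam={lam}, nu={nu}: approx {minka_moments(lam, nu)}, series {exact_moments(lam, nu)}")
```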

Remark 1.

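From Equation (8), any increase or decrease in $\lambda_{kt}$ causes a change in the same direction in the innovation mean $\mu_{kt} = E(R_{kt})$. As for the magnitude, when $\lambda_{kt}$ changes to $\lambda_{kt}^{*}$ for fixed $\nu_k$, the percentage change in $\mu_{kt}$ is $100\,\big(\lambda_{kt}^{*\,1/\nu_k} - \lambda_{kt}^{1/\nu_k}\big)/\mu_{kt}$, while the percentage change in $\lambda_{kt}$ is $100\,(\lambda_{kt}^{*} - \lambda_{kt})/\lambda_{kt}$. Thus, when interpreting a unit change in a covariate, it is easier to report the change in $\lambda_{kt}$, but we note that for $\nu_k < 1$ the corresponding change in $\mu_{kt}$ is considerably greater than the change in $\lambda_{kt}$.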
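To see the size of the effect described in Remark 1, the two percentage changes can be computed from the approximate mean in Equation (8). The function name is hypothetical, and the example raises $\lambda$ from 10 to 11, a 10% change.

```python
def pct_changes(lam, lam_new, nu):
    """Percentage changes in the approximate innovation mean and in lambda (Remark 1)."""
    shift = (nu - 1.0) / (2.0 * nu)           # constant term of the mean approximation
    mu_old = lam ** (1.0 / nu) - shift
    mu_new = lam_new ** (1.0 / nu) - shift
    return 100.0 * (mu_new - mu_old) / mu_old, 100.0 * (lam_new - lam) / lam


for nu in (0.5, 1.0, 2.0):
    d_mu, d_lam = pct_changes(10.0, 11.0, nu)
    print(f"nu={nu}: mean changes by {d_mu:.2f}%, lambda by {d_lam:.2f}%")
```

For $\nu = 0.5$ the approximate mean rises by about 20.9%, for $\nu = 1$ by exactly 10%, and for $\nu = 2$ by about 5.3%.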

2.2. Properties of the BINAR(1) Model

Referring to the BINAR(1) model in Equation (1),

- The innovation terms $R_{1t}$ and $R_{2t}$ are independent, such that $\mathrm{Corr}(R_{1t}, R_{2t}) = 0$.

- We assume that the counting series $X_{kt}$ has a mean $E(X_{kt})$, which is yet to be determined.

Some important thinning properties to be used for deriving the marginal moments are given in the following lemma:

Lemma 1.

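For non-negative integer-valued random variables $X$ and $Y$ and $p, q \in [0,1]$, with counting sequences independent of $X$ and $Y$:

- (a) $E(p\circ X) = p\,E(X)$ and $E[(p\circ X)^2] = p^2 E(X^2) + p(1-p)E(X)$.

- (b) $\mathrm{Var}(p\circ X) = p^2\,\mathrm{Var}(X) + p(1-p)\,E(X)$.

- (c) $\mathrm{Cov}(p\circ X, Y) = p\,\mathrm{Cov}(X, Y)$ and $\mathrm{Cov}(p\circ X, q\circ Y) = pq\,\mathrm{Cov}(X, Y)$, where the two thinning operations are performed independently.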

The proof of Lemma 1 is given in Appendix A.
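The thinning identities can also be checked by simulation. The sketch below assumes that Lemma 1 consists of the standard binomial-thinning moment identities, $E(p\circ X) = pE(X)$, $\mathrm{Var}(p\circ X) = p^2\mathrm{Var}(X) + p(1-p)E(X)$ and $\mathrm{Cov}(p\circ X, Y) = p\,\mathrm{Cov}(X, Y)$ when the counting sequence is independent of $X$ and $Y$. The correlated pair $(X, Y)$ is built from a shared uniform component purely for illustration, and all names are hypothetical.

```python
import random


def thin(p, x, rng):
    """Binomial thinning p∘x as a sum of x Bernoulli(p) draws."""
    return sum(1 for _ in range(x) if rng.random() < p)


def mean(v):
    return sum(v) / len(v)


def cov(u, v):
    """Sample covariance with the n - 1 denominator."""
    mu, mv = mean(u), mean(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (len(u) - 1)


def check_thinning_moments(p=0.4, n=50_000, seed=7):
    """Return (simulated, formula) pairs for the three thinning identities."""
    rng = random.Random(seed)
    xs, ys, tx = [], [], []
    for _ in range(n):
        w = rng.randint(0, 4)          # shared component, so Cov(X, Y) > 0
        x = w + rng.randint(0, 3)
        y = w + rng.randint(0, 2)
        xs.append(x)
        ys.append(y)
        tx.append(thin(p, x, rng))     # fresh Bernoulli draws, independent of Y given X
    return {
        "mean": (mean(tx), p * mean(xs)),
        "var": (cov(tx, tx), p ** 2 * cov(xs, xs) + p * (1 - p) * mean(xs)),
        "cov": (cov(tx, ys), p * cov(xs, ys)),
    }


for name, (sim, formula) in check_thinning_moments().items():
    print(f"{name}: simulated {sim:.4f}, formula {formula:.4f}")
```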

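As for the covariance between $X_{1t}$ and $X_{2t}$, it follows from model (1) and Lemma 1 that

$$\mathrm{Cov}(X_{1t}, X_{2t}) = p_{11}p_{21}\,\mathrm{Var}(X_{1,t-1}) + p_{12}p_{22}\,\mathrm{Var}(X_{2,t-1}) + (p_{11}p_{22} + p_{12}p_{21})\,\mathrm{Cov}(X_{1,t-1}, X_{2,t-1}).$$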

Remark 2.

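Under stationary moment conditions, $E(X_{kt}) = E(X_k)$ and $E(R_{kt}) = E(R_k)$ for all $t$, so taking expectations in Equation (1) gives

$$E(X_1) = p_{11}E(X_1) + p_{12}E(X_2) + E(R_1), \qquad E(X_2) = p_{21}E(X_1) + p_{22}E(X_2) + E(R_2),$$

and solving simultaneously, we obtain

$$E(X_1) = \frac{(1-p_{22})E(R_1) + p_{12}E(R_2)}{\Delta}, \qquad E(X_2) = \frac{p_{21}E(R_1) + (1-p_{11})E(R_2)}{\Delta},$$

where $\Delta = (1-p_{11})(1-p_{22}) - p_{12}p_{21} > 0$.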
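The stationary means can be reproduced numerically by solving $\mu = A\mu + E(R)$, that is $\mu = (I - A)^{-1}E(R)$ with $A = (p_{ij})$. The sketch below assumes that the $\lambda_1$, $\lambda_2$ columns of Tables 1–4 are the COM-Poisson rate parameters of the innovations and that the innovation means come from the approximation in Equation (8); under that reading it recovers the reported $E(X_1)$ and $E(X_2)$ for the first parameter set of Case A and the first and third parameter sets of Case B. The function names are hypothetical, and the stationarity check (for a non-negative $2\times 2$ matrix $A$, spectral radius below 1 if and only if $p_{11} < 1$ and $\Delta > 0$) is our addition. Under this reading, the parameter set $(0.5, 0.55, 0.6, 0.65)$ gives $\Delta < 0$, so the sketch raises an error for those rows instead of reproducing them.

```python
def innovation_mean(lam, nu):
    """Approximate COM-Poisson mean lam^(1/nu) - (nu - 1)/(2 nu), as in Equation (8)."""
    return lam ** (1.0 / nu) - (nu - 1.0) / (2.0 * nu)


def stationary_mean(A, m):
    """Solve mu = A mu + m for mu = (E(X_1), E(X_2)), i.e. mu = (I - A)^{-1} m."""
    (p11, p12), (p21, p22) = A
    det = (1.0 - p11) * (1.0 - p22) - p12 * p21
    # for a non-negative 2x2 matrix, spectral radius < 1 iff p11 < 1 and det(I - A) > 0
    if not (p11 < 1.0 and det > 0.0):
        raise ValueError("A does not satisfy the stationarity condition")
    mu1 = ((1.0 - p22) * m[0] + p12 * m[1]) / det
    mu2 = (p21 * m[0] + (1.0 - p11) * m[1]) / det
    return mu1, mu2


A = [[0.1, 0.15], [0.2, 0.25]]
# Case A, first row: nu = (0.1, 0.15), lambda = (0.1, 0.2)
m = (innovation_mean(0.1, 0.1), innovation_mean(0.2, 0.15))
print([round(v, 1) for v in stationary_mean(A, m)])           # [5.9, 5.3]
# Case B, first row: nu = (1, 1), lambda = (0.1, 0.2)
print([round(v, 4) for v in stationary_mean(A, (0.1, 0.2))])  # [0.1628, 0.3101]
```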

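For the derivation of lag-$h$ covariance expressions, we refer to Pedeli and Karlis [1] and Nastic et al. [3]: denoting $A = \begin{pmatrix} p_{11} & p_{12} \\ p_{21} & p_{22} \end{pmatrix}$ and $\Gamma(h) = \mathrm{Cov}(X_{t+h}, X_{t})$ with $X_t = (X_{1t}, X_{2t})'$,

these authors proved that $\Gamma(h) = A^{h}\,\Gamma(0)$ with $h \geq 1$. Using this general lag-$h$ auto-covariance formula, it is easy to derive the different diagonal and off-diagonal lag-$h$ covariances, as shown in (21).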
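The lag-$h$ formula $\Gamma(h) = A^h\Gamma(0)$ translates into repeated matrix multiplication. In this sketch $\Gamma(0)$ is an arbitrary illustrative matrix, not a value from the paper, and the function names are hypothetical; the $(1,2)$ entry of $\Gamma(h)$ is $\mathrm{Cov}(X_{1,t+h}, X_{2t})$.

```python
def matmul2(P, Q):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(P[i][k] * Q[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def lag_cov(A, gamma0, h):
    """Gamma(h) = A^h Gamma(0) for h >= 0 under stationarity."""
    if h < 0:
        raise ValueError("h must be non-negative; use Gamma(-h) = Gamma(h)' for negative lags")
    out = [row[:] for row in gamma0]
    for _ in range(h):
        out = matmul2(A, out)  # one more lag: Gamma(h) = A Gamma(h - 1)
    return out


A = [[0.1, 0.15], [0.2, 0.25]]
gamma0 = [[1.0, 0.2], [0.2, 1.5]]  # illustrative lag-0 covariance matrix
for h in range(3):
    print(h, lag_cov(A, gamma0, h))
```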

3. Estimation Methodology: The GQL Approach

The GQL approach is an extension of the classical quasi-likelihood (QL) equation of Wedderburn [31]. It is more flexible and parsimonious because it relies only on the first two moments and the joint covariance expressions [32]. In fact, the GQL equation depends only on the correct specification of the mean scores, the derivatives, and the auto-covariance structure. Once constructed, the GQL equation is solved iteratively using the Newton–Raphson technique to obtain updated estimates of the model parameters. Sutradhar et al. [25] proved that the resulting estimator $\hat{\theta}$ is consistent and that, under mild regularity conditions, $\hat{\theta} - \theta$ asymptotically follows a normal distribution with mean 0 and covariance matrix $(D'\Sigma^{-1}D)^{-1}$, where $D$ and $\Sigma$ are the derivative and auto-covariance matrices of the GQL equation.

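Hence, this section presents a GQL approach to estimate the parameters $\theta$ of the BINAR(1) model specified in Section 2. Note that $[\cdot]'$ denotes the transpose of $[\cdot]$. The GQL equation is expressed as

$$D'\,\Sigma^{-1}\big(X - E(X)\big) = 0, \tag{22}$$

where $X = (X_1', X_2')'$ stacks the two observed series, $D = \partial E(X)/\partial\theta'$ is the derivative matrix, and $\Sigma = \mathrm{Cov}(X)$ is the auto-covariance matrix.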

Details of the components of Equation (22) are given in the following subsections:

3.1. The Derivative Matrix ($D$)

The derivative matrix is expressed as $D = \partial E(X)/\partial\theta'$, arranged in blocks by series $k = 1,2$ and time point $t = 1,\ldots,T$.

Hence, the derivative entries with respect to the regression parameters $\beta_k$, for each covariate and each time point, are obtained iteratively as follows:

Under similar assumptions, the derivatives with respect to the dispersion parameters $\nu_k$, $k = 1,2$, are obtained iteratively as follows:

Similarly, the derivative expressions with respect to the remaining model parameters are computed iteratively as follows:
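The derivative recursions can be illustrated under explicit assumptions. The following hypothetical sketch assumes a log link $\lambda_{kt} = \exp(x_{kt}'\beta_k)$, innovation means from Equation (8), the mean recursion $E(X_{1t}) = p_{11}E(X_{1,t-1}) + p_{12}E(X_{2,t-1}) + E(R_{1t})$ (and likewise for $X_{2t}$), which follows from model (1) and Lemma 1, and a start at $E(X_{k1}) = E(R_{k1})$. Differentiating the mean recursion term by term gives the same recursion for the derivatives with respect to $(\beta_1, \beta_2)$; the test compares these with finite differences.

```python
import math


def means_and_derivatives(A, nus, betas, covs):
    """Recursive E(X_kt) and dE(X_kt)/d(beta_1, beta_2) under stated assumptions.

    Hypothetical set-up (not taken from the paper):
      lambda_kt = exp(x_kt' beta_k);
      E(R_kt)   = lambda_kt^(1/nu_k) - (nu_k - 1)/(2 nu_k), as in Equation (8);
      E(X_k1)   = E(R_k1), i.e. the process starts from zero counts.
    covs[k][t] is the covariate vector x_kt; parameters are stacked as (beta_1, beta_2).
    """
    (p11, p12), (p21, p22) = A
    n1 = len(betas[0])
    n_par = n1 + len(betas[1])
    means, derivs = [], []
    for t in range(len(covs[0])):
        r_mean, r_grad = [], []
        for k in range(2):
            x = covs[k][t]
            lam = math.exp(sum(xi * b for xi, b in zip(x, betas[k])))
            scaled = lam ** (1.0 / nus[k])
            r_mean.append(scaled - (nus[k] - 1.0) / (2.0 * nus[k]))
            grad = [0.0] * n_par
            offset = 0 if k == 0 else n1
            for j, xi in enumerate(x):
                # d/d beta_kj of exp(x' beta_k / nu_k) = x_j exp(x' beta_k / nu_k) / nu_k
                grad[offset + j] = scaled * xi / nus[k]
            r_grad.append(grad)
        if t == 0:
            m, d = r_mean, r_grad
        else:
            (m1, m2), (d1, d2) = means[-1], derivs[-1]
            # mean recursion and its term-by-term derivative
            m = [p11 * m1 + p12 * m2 + r_mean[0], p21 * m1 + p22 * m2 + r_mean[1]]
            d = [[p11 * a + p12 * b + c for a, b, c in zip(d1, d2, r_grad[0])],
                 [p21 * a + p22 * b + c for a, b, c in zip(d1, d2, r_grad[1])]]
        means.append(m)
        derivs.append(d)
    return means, derivs
```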

3.2. The Auto-Covariance Matrix ($\Sigma$)

3.3. Score Vector

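The score vector is $X - E(X)$, with $X = (X_1', X_2')'$, $X_k = (X_{k1}, \ldots, X_{kT})'$, and correspondingly $E(X) = (E(X_1)', E(X_2)')'$, for $k = 1,2$.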

The GQL equation (22) is solved iteratively using the Newton–Raphson procedure

$$\hat{\theta}_{(r+1)} = \hat{\theta}_{(r)} + \Big[D'\Sigma^{-1}D\Big]^{-1}_{(r)} \Big[D'\Sigma^{-1}\big(X - E(X)\big)\Big]_{(r)}, \tag{32}$$

where $\hat{\theta}_{(r)}$ are the estimates at the $r$-th iteration and $[\cdot]_{(r)}$ denotes the value of the expression at the $r$-th iteration. The algorithm works as follows: for an initial $\hat{\theta}_{(0)}$, we calculate the entries of the auto-covariance structure at $\hat{\theta}_{(0)}$, evaluate the iterative derivative expressions developed above, and solve Equation (32) to obtain an updated $\hat{\theta}_{(1)}$. These updated values are used again to compute the entries of the auto-covariance structure, and Equation (32) is solved to obtain a new set of estimates. This cycle continues until convergence.
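The Newton–Raphson cycle in Equation (32) can be illustrated on a deliberately simplified problem. The sketch below is not the BINAR(1) GQL: it assumes independent counts with log-linear mean $\mu_t = \exp(x_t'\beta)$ and $\Sigma = \mathrm{diag}(\mu_t)$, so that $D'\Sigma^{-1}D = \sum_t \mu_t x_t x_t'$ and $D'\Sigma^{-1}(y - \mu) = \sum_t x_t(y_t - \mu_t)$; in this special case the iteration coincides with Fisher scoring for a Poisson log-linear model. It does follow the same cycle: evaluate $\Sigma$ and $D$ at the current estimate, take one Newton–Raphson step, and repeat until convergence, returning $(D'\Sigma^{-1}D)^{-1}$ as the estimated covariance. All names are hypothetical.

```python
import math


def solve(M, v):
    """Solve the small dense system M x = v by Gaussian elimination with partial pivoting."""
    n = len(v)
    aug = [list(map(float, row)) + [float(v[i])] for i, row in enumerate(M)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x


def gql_fit(y, X, beta0, tol=1e-10, max_iter=100):
    """Iterate beta <- beta + (D' S^-1 D)^-1 D' S^-1 (y - mu) in the toy set-up above."""
    beta = [float(b) for b in beta0]
    p = len(beta)

    def pieces(b):
        mu = [math.exp(sum(xi * bi for xi, bi in zip(x, b))) for x in X]
        # D_t = mu_t x_t and Sigma^-1 = diag(1 / mu_t), so D' S^-1 D = sum_t mu_t x_t x_t'
        info = [[sum(m * x[j] * x[k] for m, x in zip(mu, X)) for k in range(p)] for j in range(p)]
        score = [sum(x[j] * (yt - m) for yt, m, x in zip(y, mu, X)) for j in range(p)]
        return info, score

    for _ in range(max_iter):
        info, score = pieces(beta)       # Sigma and D re-evaluated at the current estimate
        step = solve(info, score)
        beta = [b + s for b, s in zip(beta, step)]
        if max(abs(s) for s in step) < tol:
            break
    else:
        raise RuntimeError("Newton-Raphson did not converge")
    info, _ = pieces(beta)
    # columns of (D' S^-1 D)^-1, obtained by solving against unit vectors
    cov = [solve(info, [1.0 if i == j else 0.0 for i in range(p)]) for j in range(p)]
    return beta, cov
```

For an intercept-only fit, `gql_fit([2, 3, 5, 6], [[1.0]] * 4, [0.0])` converges to $\log 4$, the log of the sample mean, with estimated variance $1/16$.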

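Asymptotic distribution of $\hat{\theta}$:

For the true $\theta$, let

$$f(\theta) = T^{-1/2}\, D'\,\Sigma^{-1}\big(X - E(X)\big),$$

where $T$ is the length of each series and $E\big(X - E(X)\big) = 0$.

Then $E[f(\theta)] = 0$ and $\mathrm{Cov}[f(\theta)] = T^{-1} D'\Sigma^{-1}D$.

From the Lindeberg–Feller CLT [33],

$$f(\theta) \xrightarrow{d} N(0, Q), \qquad Q = \lim_{T\to\infty} T^{-1} D'\Sigma^{-1}D.$$

Further, because $\hat{\theta}$ is obtained from Equation (32), a first-order Taylor series expansion gives $T^{1/2}(\hat{\theta} - \theta) = \big[T^{-1}D'\Sigma^{-1}D\big]^{-1} f(\theta) + o_p(1)$, and it follows that

$$T^{1/2}(\hat{\theta} - \theta) \xrightarrow{d} N\big(0, Q^{-1}\big).$$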

Remark 3.

Note that a change in a covariate from $x_{kt}$ to $x_{kt}^{*}$ at time point $t$, with the regression parameters unchanged, produces a percentage change in the link value $\lambda_{kt}$, which in turn implies a percentage change in the mean (refer to Remark 1). The latter is not easy to compute because $E(X_{kt})$ is of iterative form. Hence, it is more convenient to report the change in $\lambda_{kt}$. Since the corresponding change in the mean has to be computed at each time point, we note that for $\nu_k < 1$ the change in the mean is considerably larger than the change in $\lambda_{kt}$.

Remark 4.

Using the converged estimates, we derive the forecasting equations of the unconstrained BINAR(1) model with independent COM-Poisson innovations. The conditional expectation and variance of the one-step-ahead forecast $\hat{X}_{k,t+1} = E(X_{k,t+1} \mid X_{1t}, X_{2t})$, $k = 1,2$, are expressed as follows:

with the following corresponding variances:
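Under model (1), conditioning on $X_t = x_t$ turns each thinning $p_{ij}\circ x_{jt}$ into a binomial count with mean $p_{ij}x_{jt}$ and variance $p_{ij}(1-p_{ij})x_{jt}$, so the one-step-ahead conditional mean and variance follow by adding the innovation moments. The sketch below encodes this derivation (our own, assuming independent thinnings and innovations independent of each other and of $X_t$; names hypothetical), together with the out-of-sample RMSE used in Section 5.

```python
import math


def one_step_forecast(x_t, A, innov_mean, innov_var):
    """Conditional mean and variance of X_{t+1} given X_t = x_t under model (1).

    innov_mean / innov_var hold (E(R_1,t+1), E(R_2,t+1)) and the matching variances,
    e.g. from the approximation in Equation (8) at the converged estimates.
    """
    (p11, p12), (p21, p22) = A
    x1, x2 = x_t
    mean = (p11 * x1 + p12 * x2 + innov_mean[0],
            p21 * x1 + p22 * x2 + innov_mean[1])
    # binomial variances of the independent thinnings plus the innovation variance
    var = (p11 * (1 - p11) * x1 + p12 * (1 - p12) * x2 + innov_var[0],
           p21 * (1 - p21) * x1 + p22 * (1 - p22) * x2 + innov_var[1])
    return mean, var


def rmse(observed, predicted):
    """Root mean square error between observed counts and their forecasts."""
    return math.sqrt(sum((o - f) ** 2 for o, f in zip(observed, predicted)) / len(observed))


mean, var = one_step_forecast((2, 3), [[0.1, 0.15], [0.2, 0.25]], (0.1, 0.2), (0.1, 0.2))
print(mean, var)
```

Over a validation window, the forecast at each step is conditioned on the observed counts of the previous step, and `rmse` is applied to the resulting forecast series for each component.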

4. Numerical Evaluation

Using Equations (17)–(19), we illustrate in this section, for different values of $p_{ij}$, $\nu_k$, and $\lambda_k$, the possible scenarios in which $\mathrm{Var}(X_k)$ exceeds or is lower than $E(X_k)$.

The results in Table 1, Table 2, Table 3 and Table 4 demonstrate that, for different parameter inputs, the counting series can be mutually or mixedly over- and under-dispersed, with different magnitudes of dispersion.

Case A: $\nu_1 < 1$ and $\nu_2 < 1$:

Table 1.

Experimental results under different correlation and dispersion combinations.


| $p_{11}$ | $p_{12}$ | $p_{21}$ | $p_{22}$ | $\nu_1$ | $\nu_2$ | $\lambda_1$ | $\lambda_2$ | $E(X_1)$ | $\mathrm{Var}(X_1)$ | $E(X_2)$ | $\mathrm{Var}(X_2)$ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.1 | 0.15 | 0.2 | 0.25 | 0.1 | 0.15 | 0.1 | 0.2 | 5.9 | 1.3 | 5.3 | 2 |
| | | | | | | 0.9 | 0.95 | 6.5 | 5.1 | 6.4 | 5.1 |
| | | | | | | 1.1 | 1.2 | 9.7 | 29.2 | 10.9 | 27.8 |
| | | | | | | 2 | 2.1 | 1978 | 17,186 | 719 | 2184 |
| 0.5 | 0.55 | 0.6 | 0.65 | 0.1 | 0.15 | 0.1 | 0.2 | 16.9 | 12.5 | 19.7 | 16 |
| | | | | | | 0.9 | 0.95 | 18.9 | 20.4 | 23 | 27 |
| | | | | | | 1.1 | 1.2 | 29.1 | 65.2 | 37.4 | 74.8 |
| | | | | | | 2 | 2.1 | 3143 | 17,314 | 2716 | 8567 |
| 0.9 | 0.1 | 0.11 | 0.8 | 0.1 | 0.15 | 0.1 | 0.2 | 131.5 | 233 | 86.5 | 281.8 |
| | | | | | | 0.9 | 0.95 | 147 | 304 | 98 | 368 |
| | | | | | | 1.1 | 1.2 | 227 | 692 | 156 | 755 |
| | | | | | | 2 | 2.1 | 24,450 | 137,849 | 14,165 | 81,309 |
| 0.1 | 0.15 | 0.2 | 0.25 | 0.8 | 0.9 | 0.1 | 0.2 | 0.26 | 0.15 | 0.37 | 0.31 |
| | | | | | | 0.9 | 0.95 | 1.4 | 1.5 | 1.7 | 1.691 |
| | | | | | | 1.1 | 1.2 | 1.8 | 1.9 | 2.17 | 2.19 |
| | | | | | | 2 | 2.1 | 3.45 | 3.94 | 4.03 | 4.08 |
| 0.5 | 0.55 | 0.6 | 0.65 | 0.8 | 0.9 | 0.1 | 0.2 | 0.84 | 0.82 | 1.18 | 1.19 |
| | | | | | | 0.9 | 0.95 | 4.29 | 5.2 | 5.7 | 6.4 |
| | | | | | | 1.1 | 1.2 | 5.4 | 6.6 | 7.3 | 8.2 |
| | | | | | | 2 | 2.1 | 10.5 | 13 | 13.7 | 15.7 |
| 0.9 | 0.1 | 0.11 | 0.8 | 0.8 | 0.9 | 0.1 | 0.2 | 6.5 | 13 | 5 | 16.4 |
| | | | | | | 0.9 | 0.95 | 33.4 | 72.9 | 23.4 | 85.6 |
| | | | | | | 1.1 | 1.2 | 42 | 92 | 30 | 108 |
| | | | | | | 2 | 2.1 | 82 | 180 | 57 | 209 |

Case B: $\nu_1 = \nu_2 = 1$:

Table 2.

Experimental results under different correlation and dispersion combinations.


| $p_{11}$ | $p_{12}$ | $p_{21}$ | $p_{22}$ | $\nu_1$ | $\nu_2$ | $\lambda_1$ | $\lambda_2$ | $E(X_1)$ | $\mathrm{Var}(X_1)$ | $E(X_2)$ | $\mathrm{Var}(X_2)$ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.1 | 0.15 | 0.2 | 0.25 | 1 | 1 | 0.1 | 0.2 | 0.1627 | 0.1633 | 0.31 | 0.296 |
| | | | | | | 0.9 | 0.95 | 1.267 | 1.270 | 1.60 | 1.53 |
| | | | | | | 1.1 | 1.2 | 1.558 | 1.562 | 2.016 | 1.59 |
| | | | | | | 2 | 2.1 | 2.814 | 2.821 | 3.55 | 3.40 |
| 0.5 | 0.55 | 0.6 | 0.65 | 1 | 1 | 0.1 | 0.2 | 0.57 | 0.68 | 0.93 | 1 |
| | | | | | | 0.9 | 0.95 | 3.93 | 4.63 | 5.32 | 5.88 |
| | | | | | | 1.1 | 1.2 | 4.86 | 5.73 | 6.64 | 7.33 |
| | | | | | | 2 | 2.1 | 8.7 | 10.3 | 11.8 | 13 |
| 0.9 | 0.1 | 0.11 | 0.8 | 1 | 1 | 0.1 | 0.2 | 4.4 | 9.8 | 3.4 | 12.4 |
| | | | | | | 0.9 | 0.95 | 30.6 | 66 | 21.6 | 78.4 |
| | | | | | | 1.1 | 1.2 | 37.8 | 81.6 | 26.8 | 97.3 |
| | | | | | | 2 | 2.1 | 67.8 | 146 | 48 | 174 |

Case C: $\nu_1 > 1$ and $\nu_2 > 1$:

Table 3.

Experimental results under different correlation and dispersion combinations.


| $p_{11}$ | $p_{12}$ | $p_{21}$ | $p_{22}$ | $\nu_1$ | $\nu_2$ | $\lambda_1$ | $\lambda_2$ | $E(X_1)$ | $\mathrm{Var}(X_1)$ | $E(X_2)$ | $\mathrm{Var}(X_2)$ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.1 | 0.15 | 0.2 | 0.25 | 1.1 | 1.2 | 0.1 | 0.2 | 0.13 | 0.17 | 0.27 | 0.30 |
| | | | | | | 0.9 | 0.95 | 1.21 | 1.17 | 1.49 | 1.34 |
| | | | | | | 1.1 | 1.2 | 1.47 | 1.41 | 1.83 | 1.63 |
| | | | | | | 2 | 2.1 | 2.54 | 2.42 | 3.04 | 2.67 |
| 0.5 | 0.55 | 0.6 | 0.65 | 1.1 | 1.2 | 0.1 | 0.2 | 0.48 | 0.63 | 0.80 | 0.94 |
| | | | | | | 0.9 | 0.95 | 3.72 | 4.31 | 4.97 | 5.37 |
| | | | | | | 1.1 | 1.2 | 4.53 | 5.23 | 6.1 | 6.6 |
| | | | | | | 2 | 2.1 | 7.77 | 8.91 | 10.26 | 10.97 |
| 0.9 | 0.1 | 0.11 | 0.8 | 1.1 | 1.2 | 0.1 | 0.2 | 3.71 | 8.59 | 2.93 | 10.94 |
| | | | | | | 0.9 | 0.95 | 28.9 | 61.8 | 20.3 | 73 |
| | | | | | | 1.1 | 1.2 | 35.2 | 75.3 | 24.8 | 89 |
| | | | | | | 2 | 2.1 | 60 | 128 | 42 | 151 |
| 0.1 | 0.15 | 0.2 | 0.25 | 2 | 2.5 | 0.1 | 0.2 | 0.129 | 0.228 | 0.335 | 0.308 |
| | | | | | | 0.9 | 0.95 | 0.97 | 0.74 | 1.17 | 0.81 |
| | | | | | | 1.1 | 1.2 | 1.11 | 0.83 | 1.33 | 0.91 |
| | | | | | | 2 | 2.1 | 1.6 | 1.12 | 1.82 | 1.20 |
| 0.5 | 0.55 | 0.6 | 0.65 | 2 | 2.5 | 0.1 | 0.2 | 0.511 | 0.752 | 0.947 | 1.03 |
| | | | | | | 0.9 | 0.95 | 2.97 | 3.1 | 3.92 | 3.81 |
| | | | | | | 1.1 | 1.2 | 3.39 | 3.52 | 4.48 | 4.32 |
| | | | | | | 2 | 2.1 | 4.82 | 4.89 | 6.23 | 5.95 |
| 0.9 | 0.1 | 0.11 | 0.8 | 2 | 2.5 | 0.1 | 0.2 | 3.98 | 9.71 | 3.31 | 11.9 |
| | | | | | | 0.9 | 0.95 | 23.1 | 47.1 | 16.1 | 55.5 |
| | | | | | | 1.1 | 1.2 | 26.37 | 53.6 | 18.4 | 63.2 |
| | | | | | | 2 | 2.1 | 37.5 | 75.4 | 25.8 | 88.7 |

Case D: $\nu_1 < 1 < \nu_2$:

Table 4.

Experimental results under different correlation and dispersion combinations.


| $p_{11}$ | $p_{12}$ | $p_{21}$ | $p_{22}$ | $\nu_1$ | $\nu_2$ | $\lambda_1$ | $\lambda_2$ | $E(X_1)$ | $\mathrm{Var}(X_1)$ | $E(X_2)$ | $\mathrm{Var}(X_2)$ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.1 | 0.15 | 0.2 | 0.25 | 0.8 | 1.5 | 0.1 | 0.2 | 0.25 | 0.14 | 0.30 | 0.33 |
| | | | | | | 0.9 | 0.95 | 1.35 | 1.44 | 1.42 | 1.21 |
| | | | | | | 1.1 | 1.2 | 1.68 | 1.84 | 1.73 | 1.45 |
| | | | | | | 2 | 2.1 | 3.25 | 3.7 | 2.83 | 2.34 |
| 0.5 | 0.55 | 0.6 | 0.65 | 0.8 | 1.5 | 0.1 | 0.2 | 0.768 | 0.751 | 1.014 | 1.155 |
| | | | | | | 0.9 | 0.95 | 4 | 4.8 | 5 | 5.4 |
| | | | | | | 1.1 | 1.2 | 4.95 | 5.98 | 6.12 | 6.6 |
| | | | | | | 2 | 2.1 | 9.26 | 11.4 | 10.6 | 11.7 |
| 0.9 | 0.1 | 0.11 | 0.8 | 0.8 | 1.5 | 0.1 | 0.2 | 5.98 | 11.94 | 4.16 | 15.37 |
| | | | | | | 0.9 | 0.95 | 31 | 67 | 21 | 76 |
| | | | | | | 1.1 | 1.2 | 39 | 83 | 26 | 94 |
| | | | | | | 2 | 2.1 | 72 | 157 | 47 | 170 |

Some common remarks are as follows:

- (a) For $\nu_1, \nu_2 < 1$, $\mathrm{Var}(X_k)$ can be less than $E(X_k)$ for small $\lambda_1$ and $\lambda_2$.

- (b) For high serial correlation and a low pair of cross-correlations, as in $p_{11} = 0.9$, $p_{22} = 0.8$, $p_{12} = 0.1$, $p_{21} = 0.11$, the counting series become hugely over-dispersed.

- (c) For relatively low serial/cross-correlation values, the counting series are under- or over-dispersed, or show some combination of over- and under-dispersion, but as the correlation increases, both counting series exhibit huge over-dispersion.

- (d) For mixed dispersion, that is, $\nu_1 < 1 < \nu_2$, low serial/cross-correlation parameters yield mixed-dispersed series, and as the serial correlation increases, both series tend towards over-dispersion.

In general, irrespective of the serial/cross-correlation parameters and the values of $\nu_k$, as $\lambda_k$ increases, $\mathrm{Var}(X_k)$ also increases. Hence, the proposed model is suitable for analyzing series with both high and low levels of dispersion.

5. Application

5.1. Day and Night Road Accidents

Mauritius, an island of 2040 square kilometres located in the Indian Ocean, consists of 11 constituencies. Road accident figures are among the most pertinent statistics in Mauritius and have drawn the attention of policy makers, road transport inspectors, and the public in general.

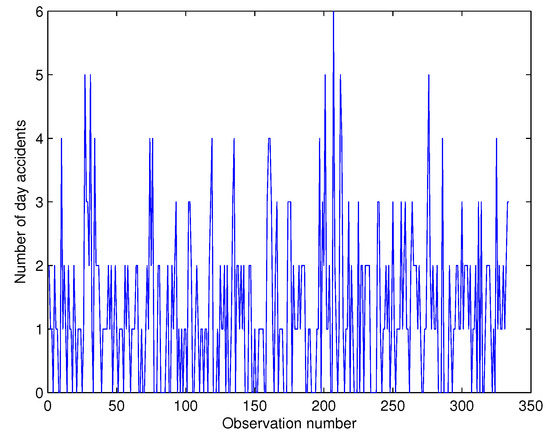

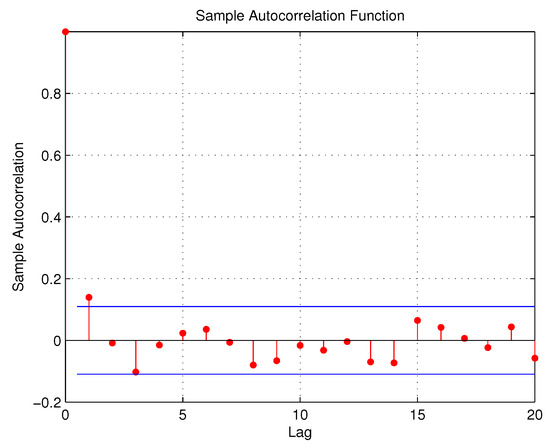

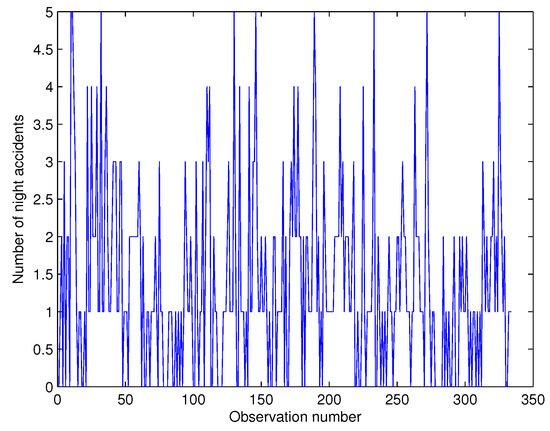

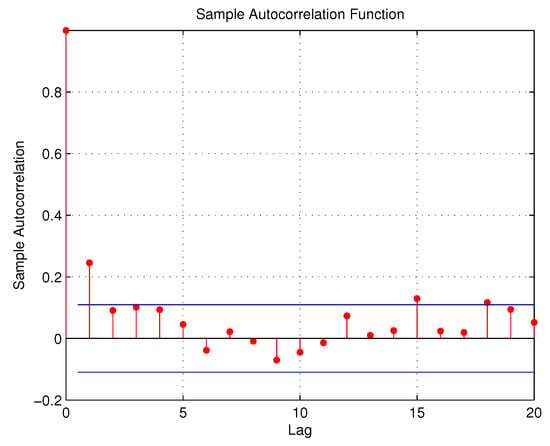

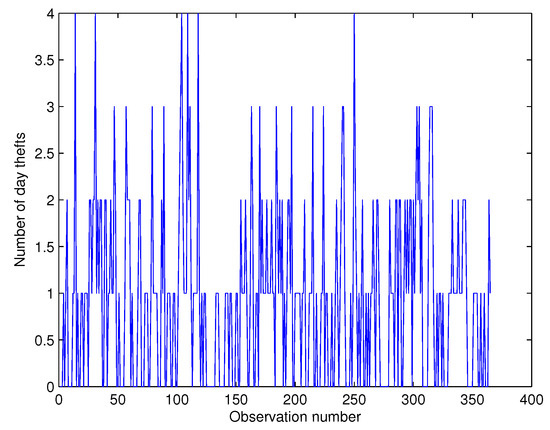

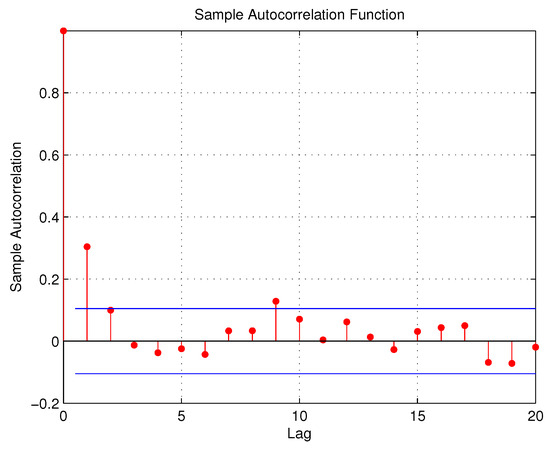

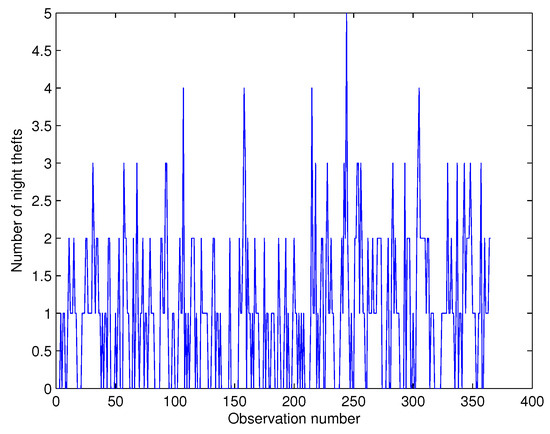

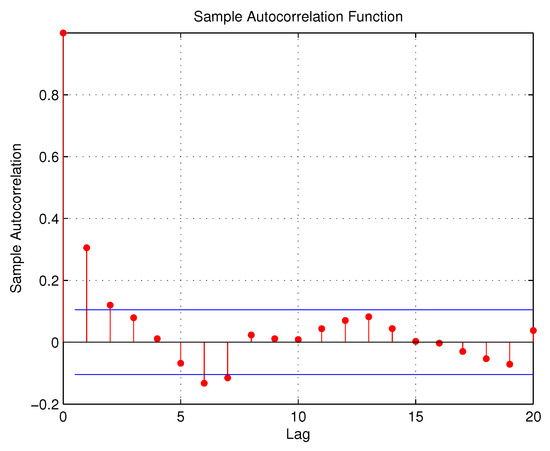

Thus, there is an urgent need to analyze these accident series and investigate the contributing factors. In this section, we focus on the daily numbers of day and night road accidents on the motorway linking the International Airport to the tourist zone of Grand Bay from 1 February to 31 December 2015, totalling 334 paired observations. The time series and ACF plots are shown in Figure 1, Figure 2, Figure 3 and Figure 4:

Figure 1.

Time series plot for day accidents.

Figure 2.

ACF plot for day accidents.

Figure 3.

Time series plot for night accidents.

Figure 4.

ACF plot for night accidents.

Let $X_{1t}$ denote the number of day accidents in this region at the $t$-th time point and $X_{2t}$ the number of night accidents in the same area at the $t$-th time point. Since the data are collected over the same area, $X_{1t}$ and $X_{2t}$ are likely to be inter-related; indeed, the sample cross-correlation estimate based on Kendall's tau is 0.1164. More details about the summary statistics are provided in Table 5:

Table 5.

Summary statistics for the number of day and night accidents with the empirical correlation coefficients.

It is clear from these measures that both $X_{1t}$ and $X_{2t}$ are over-dispersed series with noticeable serial correlations. In the regression equation, the link function for $\lambda_{kt}$ includes five covariates: the intercept term, the number of traffic lights (TL), the number of roundabouts (RA), the number of speed cameras (NSC), and the number of policemen (NP) deployed monthly in this area for patrol. Based on the variance/mean ratios and the ACF plots, the BINAR(1) model introduced in Section 2 appears appropriate for analyzing these series. The model is fitted to the accident series from 1 February 2015 to 30 November 2015, totalling 303 paired observations, while the series from 1 December 2015 to 31 December 2015 is used to validate the model. A comparison with the BINAR(1)M1 model introduced by Jowaheer et al. [7] is also provided. Table 6 displays the GQL estimates:

Table 6.

Day and night accidents: estimates of the regression parameters.

Table 7 provides the serial- and cross-correlation estimates:

Table 7.

Day and night accidents: estimates of the dependence parameters.

From Table 6 and Table 7, the estimates of the BINAR(1)M3 model have slightly smaller standard errors than those of the BINAR(1)M1 model. Hence, we only interpret the estimates of the BINAR(1)M3 model. The standard errors in Table 7 show that the correlation parameters are significant. In Table 6, the coefficients of TL, NSC, and NP are negative, while the coefficient of RA is positive for both series. For each additional TL, the link value for the number of day accidents decreases by 5.5 percent, and that for night accidents decreases by 6.6 percent. Similarly, a one-unit increase in NSC is associated with an expected decrease of 9.6 percent in the link value for day accidents and 11 percent for night accidents. For a one-unit increase in NP, we expect a decrease of 12.2 percent in the link value for day accidents and 12.8 percent for night accidents. On the contrary, a one-unit increase in RA increases the link value for day accidents by 4.5 percent and for night accidents by 5.8 percent. Using the forecasting Equations (37) and (38), we compute one-step-ahead forecasts of the numbers of day and night accidents over the out-of-sample period from 1 December 2015 to 31 December 2015, which give out-of-sample RMSEs of 0.1378 and 0.1169, respectively.

This information is of great importance to the government, in particular to the Ministry of Public Infrastructure, in reinforcing measures, in collaboration with the Defence Ministry, to employ and re-deploy police officers for patrol. In fact, NP appears to produce a larger decrease than even the installation of speed cameras.

5.2. Trading Intensity of SBMH and MCB Transactions

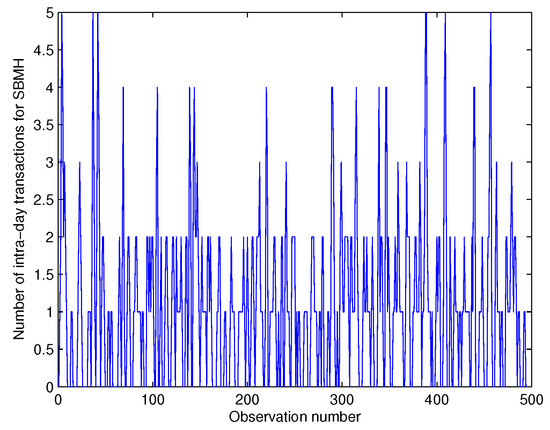

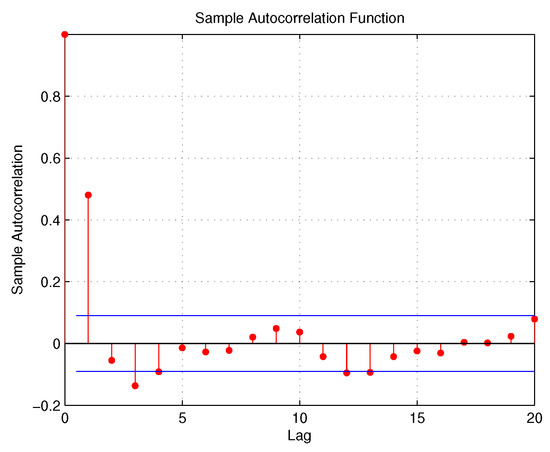

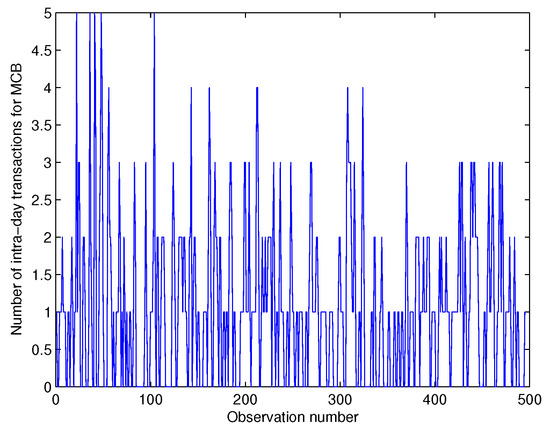

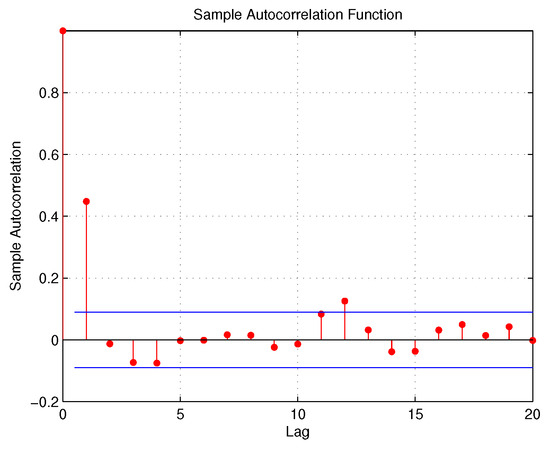

The Stock Exchange of Mauritius (SEM) is an important trading platform comprising 56 companies operating in various sectors of the economy, with a market capitalization of USD 5880.56 million and an annual turnover of USD 371.29 million. This section treats the number of intra-day transactions of the two most popular banks trading on the SEM, namely the State Bank of Mauritius Holdings (SBMH) and the Mauritius Commercial Bank (MCB). The data were collected from the SEM at 30 min intervals from 3 August 2015 to 16 October 2015, totalling 495 paired observations. The time series and ACF plots of the two stock series are provided in Figure 5, Figure 6, Figure 7 and Figure 8:

Figure 5.

Time series plot for SBMH.

Figure 6.

ACF plot for SBMH.

Figure 7.

Time series plot for MCB.

Figure 8.

ACF plot for MCB.

Let $X_{1t}$ represent the number of intra-day transactions of SBMH and $X_{2t}$ the number of intra-day transactions of MCB on the SEM. SBMH and MCB are two competing banks that dominate the SEM, so a cross-correlation between $X_{1t}$ and $X_{2t}$ is likely. Details about the summary statistics of the two stock series are provided in Table 8:

Table 8.

Summary statistics for the intra-day transactions of SBMH and MCB with the empirical correlation coefficients.

From Table 8, we confirm a substantial sample serial correlation in the intra-day transactions of both SBMH and MCB, as well as a sample Kendall's tau cross-correlation. The variance/mean ratios also show that the two stock series are over-dispersed. Along with the intra-day transactions, data on three common covariates that influence the two series were collected and enter the link function for $\lambda_{kt}$: the news effect, coded 1 for any new information filtering into the domestic market and 0 otherwise; the Friday effect, coded 1 for Friday and 0 for the other days of the week; and the time-of-day effect, coded 1 for trading conducted in the afternoon (12:00–13:30) and 0 for the rest of the trading session. The BINAR(1) model is fitted to the two stock series from 3 August 2015 to 12 October 2015, totalling 459 paired observations, while the series from 13 October 2015 to 16 October 2015 is used to validate the model. A comparison with the BINAR(1)M1 model is also conducted. The estimates of the regression and dispersion parameters are shown in Table 9:

Table 9.

Intra-day transactions for SBMH and MCB: GQL estimates of the regression and dispersion parameters.

The serial- and cross-correlation parameter estimates are shown in Table 10:

Table 10.

Intra-day transactions for SBMH and MCB: estimates of the dependence parameters.

From Table 9 and Table 10, the estimates of the BINAR(1)M3 model are slightly better than those of the BINAR(1)M1 model. Hence, we interpret only the estimates of the BINAR(1)M3 model. Table 10 shows that the correlation parameters are significant, and Table 9 shows that the regression effects are all positive. Hence, when new information enters the stock market, the link value for the number of intra-day transactions of SBMH increases by 20.2 percent, while a 15.3 percent increase is noted for MCB. Similarly, on Fridays, we expect an increase in the link value for the number of intra-day transactions of 18.8 percent for SBMH and 15.8 percent for MCB. As for the time effect, in the afternoon we expect an increase in the link value of 13.2 percent for SBMH and 14.5 percent for MCB. Using the one-step-ahead forecasting Equations (37) and (38), we compute forecasts of the intra-day transactions of SBMH and MCB from 13 October 2015 to 16 October 2015, with out-of-sample RMSEs of 0.2166 and 0.1971, respectively. These results suggest that the level of investment at SBMH is more conducive to business purposes.

5.3. Day and Night Thefts

We also apply the model of Section 2 to the daily numbers of day and night thefts in the capital city of Port Louis, Mauritius, from 1 January 2015 to 31 December 2015, amounting to 365 paired observations. Here, the theft series comprise any sort of stolen object or material of any value, such as money, vehicles, or jewels, recorded in the police stations of the capital city. This area is the busiest part of the island, with shopping malls, business and government offices, and one of the most attractive spots on the Indian Ocean, the Caudan Waterfront. Port Louis is also popular for horse racing, usually held on Saturdays and Sundays, and the area also has gambling houses and casinos. The time series and ACF plots are shown in Figure 9, Figure 10, Figure 11 and Figure 12:

Figure 9.

Time series plot for day thefts.

Figure 10.

ACF plot for day thefts.

Figure 11.

Time series plot for night thefts.

Figure 12.

ACF plot for night thefts.

Let the first series denote the number of day thefts and the second series the number of night thefts. The summary statistics of the two series are shown in Table 11:

Table 11.

Summary statistics for the number of day and night thefts with the empirical correlation coefficients.

From Table 11, we note that both series exhibit sample serial correlation and that the two series are cross-correlated, as measured by the sample Kendall's tau. The variance/mean ratios, being below one, indicate that both series are under-dispersed. Hence, based on the variance/mean ratios and the ACF plots, the BINAR(1) model introduced in Section 2 is suitable for analyzing the two under-dispersed series. We shortlist five covariates that could explain the fluctuations in these series and include them in the link function of the regression equation: the number of police patrols (NP), the number of buildings equipped with an alarm system (AS), the number of street cameras (SC), the number of unemployed people in the area (UP), and the number of drug addicts in the city (DA). The information on the number of drug addicts was obtained from the rehabilitation center in Port Louis. The BINAR(1) model is fitted to the two series from 1 January 2015 to 30 November 2015, totalling 334 paired observations, while the series from 1 December 2015 to 31 December 2015 are held out to assess the forecasting performance of the model. A comparison with the BINAR(1)M1 model is also provided. Table 12 provides the GQL estimates as follows:
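The dispersion and dependence checks summarized in Table 11 can be sketched in a few lines. This is a minimal sketch under stated assumptions: the variance/mean ratio uses the sample variance with an n-1 denominator, Kendall's tau is computed as tau-b (which corrects for the many ties typical of small counts), and the lag-1 variant pairs the current value of one series with the previous value of the other, mirroring the cross-dependence the BINAR(1) model builds in. The exact estimators behind Table 11 are not stated in this section, and the function names are illustrative.

```python
import math
from statistics import mean, variance
from typing import Sequence


def dispersion_index(y: Sequence[int]) -> float:
    """Sample variance/mean ratio: below 1 suggests under-dispersion and above 1
    over-dispersion, relative to the Poisson benchmark of exactly 1."""
    return variance(y) / mean(y)


def kendall_tau_b(x: Sequence[int], y: Sequence[int]) -> float:
    """Kendall's tau-b between two equal-length sequences (O(n^2) pair count)."""
    if len(x) != len(y):
        raise ValueError("x and y must have equal length")
    concordant = discordant = ties_x_only = ties_y_only = 0
    n = len(x)
    for i in range(n):
        for j in range(i + 1, n):
            dx = x[i] - x[j]
            dy = y[i] - y[j]
            if dx == 0 and dy == 0:
                continue  # tied in both coordinates: excluded from both factors
            if dx == 0:
                ties_x_only += 1
            elif dy == 0:
                ties_y_only += 1
            elif dx * dy > 0:
                concordant += 1
            else:
                discordant += 1
    denom = math.sqrt(
        (concordant + discordant + ties_x_only) * (concordant + discordant + ties_y_only)
    )
    return (concordant - discordant) / denom if denom > 0 else float("nan")


def lagged_cross_tau(current: Sequence[int], other: Sequence[int], lag: int = 1) -> float:
    """Kendall's tau-b between current[t] and other[t - lag], for lag >= 1."""
    if lag < 1:
        raise ValueError("lag must be >= 1")
    return kendall_tau_b(current[lag:], other[:-lag])
```

For the theft data, `dispersion_index(day[:334])` and `dispersion_index(night[:334])` would restrict the check to the in-sample period (1 January to 30 November 2015 is 334 days), and `lagged_cross_tau(day, night)` measures how strongly today's day thefts track yesterday's night thefts.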

Table 12.

Day and night thefts: estimates of the regression parameters.

The serial- and cross-correlation estimates are provided in Table 13:

Table 13.

Day and night thefts: estimates of the dependence parameters.

From Table 12 and Table 13, the estimates of the BINAR(1)M3 model are slightly better than those of the BINAR(1)M1 model. Hence, we interpret only the estimates of the BINAR(1)M3 model. The correlation values in Table 13 confirm the existence of serial and cross-correlation. As for the covariate effects, the effects of NP, AS, and SC are negative, while those of UP and DA are positive for both series. More specifically, a unit increase in NP is expected to decrease the link value by 9.9 percent for day thefts and by 9.1 percent for night thefts. Similarly, a unit increase in AS induces an expected decrease of 7.4 percent for day thefts and 4.7 percent for night thefts, while a unit increase in SC induces an expected decrease in the link value of 5.5 percent for day thefts and 4.5 percent for night thefts. Conversely, DA and UP are associated with expected increases in both day and night thefts: a unit increase in DA increases the link value by 13.6 percent for day thefts and by 14 percent for night thefts, while a unit increase in UP increases it by 12.2 percent for day thefts and by 8.9 percent for night thefts. Using the forecasting Equations (37) and (38), the one-step-ahead forecasts of the numbers of day and night thefts are computed over the out-of-sample period from 1 December 2015 to 31 December 2015, with corresponding out-of-sample RMSEs of 0.1385 and 0.1241, respectively. These results suggest that installing street cameras, together with increasing police patrols and alarm systems, could help deter and track day and night thefts.
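The percentage effects above are stated on the link scale. The link function is not reproduced in this section, so the following sketch assumes a log link, mu = exp(x'beta), as is common in COM-Poisson regression. Under that assumption, a coefficient beta_k changes the log-mean additively by beta_k, while the mean itself is multiplied by exp(beta_k), i.e. changes by 100(exp(beta_k) - 1) percent; for small coefficients the two readings are close. The helper names are hypothetical.

```python
import math


def mean_scale_percent(beta: float) -> float:
    """Percentage change in the mean for a unit covariate increase, assuming a
    log link mu = exp(x'beta): 100 * (exp(beta) - 1)."""
    return 100.0 * math.expm1(beta)


def beta_from_mean_percent(pct: float) -> float:
    """Inverse of mean_scale_percent: the log-link coefficient implying a given
    percentage change in the mean."""
    return math.log1p(pct / 100.0)
```

For a hypothetical coefficient of -0.099, `mean_scale_percent(-0.099)` gives roughly -9.4 percent, slightly smaller in magnitude than the -9.9 obtained by reading 100 * beta directly.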

6. Concluding Remarks

This paper presents findings with several practical implications. First, in Section 2, a new BINAR(1) model with COM-Poisson innovations (BINAR(1)M3) under time-dependent moments is proposed and is shown numerically to accommodate a wide range of mutual and mixed levels of dispersion. In particular, the proposed model can handle bivariate series with different degrees of over- and under-dispersion, as well as distinct mixed levels of dispersion, which the existing BINAR(1) models with Poisson and NB innovations cannot, since neither of these innovation distributions allows for under-dispersion. In addition, the model accounts for the local interrelationship between cross-related observations at the current and previous lags. This paper also introduces a GQL estimation approach that requires only the correct specification of the mean and auto-covariance structures. Finally, the BINAR(1)M3 and BINAR(1)M1 models are both applied to three different applications to demonstrate their flexibility in modeling counting responses of different entities that are influenced by time-dependent covariates and exhibit different or mixed levels of over- and under-dispersion. In all three applications, the estimates of the BINAR(1)M3 model are slightly better than those of the BINAR(1)M1 model, based on their standard errors. The results of the BINAR(1)M3 model can therefore guide policy makers on where government funding could be invested to promote safety, social well-being, and social justice with respect to accidents, the stock market, and crime. We thus recommend the BINAR(1)M3 model to statisticians for analysis, forecasting, and policy advice in similar settings.

Author Contributions

Conceptualization, N.M.K.; methodology, Y.S.; software, N.M.K.; validation, Y.S.; formal analysis, N.M.K.; investigation, Y.S.; resources, N.M.K.; data curation, N.M.K.; writing—original draft preparation, N.M.K.; writing—review and editing, N.M.K. and Y.S.; visualization, Y.S.; supervision, Y.S.; project administration, Y.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding. The APC was funded by a discount voucher.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data is unavailable due to privacy restrictions of the Mauritius Police Force and the Stock Exchange of Mauritius.

Conflicts of Interest

The authors declare that there was no conflict of interest.

Appendix A

Proof of Lemma 1.

- (a) …

- (b) Var …

- (c) Cov …

□

References

- Pedeli, X.; Karlis, D. Some properties of multivariate INAR(1) processes. Comput. Stat. Data Anal. 2013, 67, 213–225.

- Ristic, M.; Nastic, A.; Jayakumar, K.; Bakouch, H. A bivariate INAR(1) time series model with geometric marginals. Appl. Math. Lett. 2012, 25, 481–485.

- Nastic, A.; Ristic, M.; Popovic, P. Estimation in a bivariate integer-valued autoregressive process. Commun. Stat.-Theory Methods 2016, 45, 5660–5678.

- Sunecher, Y.; Mamode Khan, N.; Koodoruth, J.; Jannoo, Z.; Rampat, S.; Jowaheer, V.; Arashi, M. Larceny Trend in some areas of Mauritius via a Bivariate INAR(1) model with dispersed COM-Poisson innovations. Commun. Stat. Case Stud. Appl. 2019, 4, 69–81.

- Hassan, B.; Sunecher, Y.; Mamode Khan, N.; Jowaheer, V. A Non-stationary BINAR(1) Process with a simple cross dependence: Estimation with some properties. Aust. N. Z. J. Stat. 2020, 62, 25–48.

- Sunecher, Y.; Mamode Khan, N. On comparing and assessing robustness of some popular non-stationary BINAR(1) models. J. Risk Financ. Manag. 2024, 17, 100.

- Jowaheer, V.; Mamode Khan, N.; Sunecher, Y. A BINAR(1) Time series model with Cross-correlated COM-Poisson innovations. Commun. Stat.-Theory Methods 2018, 47, 1133–1154.

- Shmueli, G.; Minka, T.; Kadane, J.; Borle, S.; Boatwright, P. A useful distribution for fitting discrete data. Appl. Stat. R. Stat. Soc. 2005, 54, 127–142.

- Lord, D.; Geedipally, S.; Guikema, S. Extension of the application of Conway-Maxwell Poisson models: Analysing traffic crash data exhibiting underdispersion. Risk Anal. 2010, 30, 1268–1276.

- Lord, D.; Guikema, S.; Geedipally, S. Application of Conway-Maxwell Poisson for analyzing motor vehicle crashes. Accid. Anal. Prev. 2008, 40, 1123–1134.

- Sellers, K.; Borle, S.; Shmueli, G. The COM-Poisson model for count data: A survey of methods and applications. Appl. Stoch. Model. Bus. Ind. 2012, 28, 104–116.

- Nadarajah, S. Useful moment and CDF formulations for the COM-Poisson distribution. Stat. Pap. 2009, 50, 617–622.

- Zhu, F. Modelling Time series of counts with COM-Poisson INGARCH models. Math. Comput. Model. 2012, 56, 191–203.

- Guikema, S.; Coffelt, J. A flexible count data regression model for risk analysis. Risk Anal. 2008, 28, 213–223.

- Borle, S.; Boatwright, P.; Kadane, J.; Nunes, J.; Shmueli, G. Effect of product assortment changes on consumer retention. Mark. Sci. 2005, 24, 616–622.

- Borle, S.; Boatwright, P.; Kadane, J. The timing of bid placement and extent of multiple bidding: An empirical investigation using eBay online auctions. Stat. Sci. 2006, 21, 194–205.

- Borle, S.; Dholakia, U.; Singh, S.; Westbrook, R. The impact of survey participation on subsequent behavior: An empirical investigation. Mark. Sci. 2007, 26, 711–726.

- McKenzie, E. Autoregressive moving-average processes with Negative Binomial and geometric marginal distributions. Adv. Appl. Probab. 1986, 18, 679–705.

- Pedeli, X.; Karlis, D. A bivariate INAR(1) process with application. Stat. Model. Int. J. 2011, 11, 325–349.

- Pedeli, X.; Karlis, D. Bivariate INAR(1) Models; Technical report; Athens University of Economics: Athina, Greece, 2009.

- Karlis, D.; Pedeli, X. Flexible Bivariate INAR(1) processes Using Copulas. Commun. Stat.-Theory Methods 2013, 42, 723–740.

- Mamode Khan, N.; Sunecher, Y.; Jowaheer, V. Modelling a Non-Stationary BINAR(1) Poisson Process. J. Stat. Comput. Simul. 2016, 86, 3106–3126.

- Kocherlakota, S.; Kocherlakota, K. Regression in the Bivariate Poisson Distribution. Commun. Stat.-Theory Methods 2001, 30, 815–825.

- Pedeli, X.; Karlis, D. On composite likelihood estimation of a multivariate INAR(1) model. J. Time Ser. Anal. 2013, 34, 206–220.

- Sutradhar, B.; Jowaheer, V.; Rao, P. Remarks on asymptotic efficient estimation for regression effects in stationary and non-stationary models for panel count data. Braz. J. Probab. Stat. 2014, 28, 241–254.

- Sunecher, Y.; Mamode Khan, N.; Jowaheer, V. Estimating the parameters of a BINMA Poisson model for a non-stationary bivariate time series. Commun. Stat.-Simul. Comput. 2016, 46, 6803–6827.

- Steutel, F.; Van Harn, K. Discrete analogues of self-decomposability and stability. Ann. Probab. 1979, 7, 893–899.

- Minka, T.; Shmueli, G.; Kadane, J.; Borle, S.; Boatwright, P. Computing with the COM-Poisson Distribution; Technical report; Carnegie Mellon University: Pittsburgh, PA, USA, 2003.

- Mamode Khan, N.; Jowaheer, V. Comparing joint GQL estimation and GMM adaptive estimation in COM-Poisson longitudinal regression model. Commun. Stat.-Simul. Comput. 2013, 42, 755–770.

- Mamode Khan, N. A robust algorithm for estimating regression and dispersion parameters in non-stationary longitudinally correlated COM-Poisson. J. Comput. Math. 2016, 19, 25–36.

- Wedderburn, R. Quasi-likelihood functions, generalized linear models, and the Gauss-Newton method. Biometrika 1974, 61, 439–447.

- Sutradhar, B.C.; Das, K. Miscellanea. On the efficiency of regression estimators in generalised linear models for longitudinal data. Biometrika 1999, 86, 459–465.

- Amemiya, T. Advanced Econometrics; Harvard University Press: Cambridge, MA, USA, 1985.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).