Abstract

Using geometric considerations, we provided a clear derivation of the integral representation for the error function, known as the Craig formula. We calculated the corresponding power series expansion and proved the convergence. The same geometric means finally assisted in systematically deriving useful formulas that approximated the inverse error function. Our approach could be used for applications in high-speed Monte Carlo simulations, where this function is used extensively.

MSC: 62E15; 62E17; 60E15; 26D15

1. Introduction

High-speed Monte Carlo simulations are used across a broad spectrum of applications, from mathematics to economics. As input for such simulations, the probability distributions are usually generated by pseudo-random number sampling, a method derived from the work of John von Neumann in 1951 [1]. In the era of “big data”, such methods have to be fast and reliable, and a sign of this necessity was the release of Quside’s inaugural processing unit in 2023 [2]. However, these samplings need to be cross-validated by exact methods. For this purpose, knowledge of the analytical functions that describe the stochastic processes, among them the error function, is of tremendous importance.

By definition, a function is called analytic if it is locally given by a converging Taylor series expansion. Even if a function itself is not found to be analytic, its inverse could be analytic. The error function can be given analytically, and one such analytic expression is the integral representation found by Craig in 1991 [3]. Craig mentioned this representation only briefly and did not provide a derivation of it. Since then, several derivations of this formula have been published [4,5,6]. In Section 2, we describe an additional one that is based on the same geometric considerations as employed in [7]. In Section 3, we provide the series expansion for Craig’s integral representation and show the rapid convergence of this series.

For the inverse error function, handbooks of special functions (e.g., [8]) do not reveal such an analytic property. Instead, this function has to be approximated. Known approximations date back to the late 1960s and early 1970s [9,10] and include semi-analytical approximations by asymptotic expansion (e.g., [11,12,13,14,15,16]). Using the same geometric considerations, as shown in Section 4, we developed several useful approximations that can easily be implemented in different programming languages, and we quantify their deviations from an exact treatment. In Section 5, we discuss our results and evaluate the CPU time. Section 6 contains our conclusions.

2. Derivation of Craig’s Integral Representation

The authors of [] provided an approximation for the integral over the Gaussian standard normal distribution that is obtained by geometric considerations and is related to the cumulative distribution function via , where is the Laplace function. The same considerations apply to the error function that is related to via

Translating the results of [7] into the error function, we obtained the approximation of order p by the following:

where the values () are found in the intervals between and . A method for selecting those values was extensively described in [], where the authors showed the following:

for . With times larger precision, the following was expressed:

for and . For the parameters of the upper limits of those intervals, we calculated the deviation by the following:

Given the values with , with the limit , the sum over n in Equation (2) could be replaced by an integral with measure to obtain the following:
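As a numerical sanity check of this limit, the following minimal Python sketch replaces the integral by a finite average over $N = 2^p$ sample angles and compares the result with the library error function. It assumes the representation $\mathrm{erf}(t)^2 = 1 - \frac{4}{\pi}\int_0^{\pi/4} \exp(-t^2/\cos^2\varphi)\,d\varphi$, which follows from integrating the two-dimensional Gaussian over a square in polar coordinates. Treating this as the form of Equation (6), and using midpoint angles as the sample values, are assumptions made here for illustration. The function name `erf_squared_craig` is hypothetical.

```python
import math


def erf_squared_craig(t: float, p: int = 10) -> float:
    """Midpoint-rule estimate of 1 - (4/pi) * int_0^{pi/4} exp(-t^2/cos^2(phi)) dphi."""
    n_nodes = 2 ** p                       # N = 2^p sample angles (assumed node count)
    width = (math.pi / 4) / n_nodes        # width of each angular sub-interval
    total = 0.0
    for n in range(n_nodes):
        phi = (n + 0.5) * width            # midpoint of the n-th sub-interval (assumption)
        total += math.exp(-t * t / math.cos(phi) ** 2)
    # (4/pi) * width * total simplifies to total / n_nodes
    return 1.0 - total / n_nodes


for t in (0.5, 1.0, 2.0):
    print(t, erf_squared_craig(t), math.erf(t) ** 2)
```

With `p = 0` the sum has a single exponential whose coefficient $1/\cos^2(\pi/8)$ lies between 1 and 2. Larger values of `p` approach the integral.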

3. Power Series Expansion

The integral in Equation (6) could be expanded into a power series in ,

with

where . The coefficients could be expressed by the hyper-geometric function, , also known as Barnes’ extended hyper-geometric function. However, we could derive a constraint for the explicit finite series expression for that rendered the series in Equation (7) convergent for all values of t. In order to be self-contained, the intermediate steps to derive this constraint and to show the convergence were shown by the following, in which the sum over the rows of Pascal’s triangle was required:

Returning to Equation (8), we had . Therefore,

The result in Equation (8) led to the following:

where the existence of a real number is between and 1, such that . We found the following:

Because of , there was again a real number in the corresponding open interval so that the following was true:

As the latter was the power series expansion of , which was convergent for all values of t, the original series was then also convergent and, thus, with the limiting value shown in Equation (7). A more compact form of the power series expansion was expressed by the following:
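The convergence argument can be illustrated numerically. The sketch below assumes the Craig representation $\mathrm{erf}(t)^2 = 1 - \frac{4}{\pi}\int_0^{\pi/4} \exp(-t^2/\cos^2\varphi)\,d\varphi$ and expands the exponential term by term. With the substitution $u = \tan\varphi$, the coefficient integrals become $c_n = \int_0^1 (1+u^2)^{n-1}\,du = \sum_{k=0}^{n-1} \binom{n-1}{k}/(2k+1)$ for $n \ge 1$, which is bounded by the row sum $2^{n-1}$ of Pascal's triangle. This coefficient formula is derived here and may differ in notation from Equations (7) and (8). The function names are hypothetical.

```python
import math


def craig_coefficient(n: int) -> float:
    """c_n = int_0^1 (1 + u^2)^(n-1) du = sum_k C(n-1, k) / (2k + 1), for n >= 1."""
    return sum(math.comb(n - 1, k) / (2 * k + 1) for k in range(n))


def erf_squared_series(t: float, terms: int = 60) -> float:
    """Partial sum of (4/pi) * sum_{n>=1} (-1)^(n-1) * c_n * t^(2n) / n!."""
    total = 0.0
    for n in range(1, terms + 1):
        sign = 1.0 if n % 2 == 1 else -1.0
        total += sign * craig_coefficient(n) * t ** (2 * n) / math.factorial(n)
    return 4.0 / math.pi * total


for t in (0.3, 1.0, 1.5):
    print(t, erf_squared_series(t), math.erf(t) ** 2)
```

Because the terms alternate in sign, floating-point cancellation grows with t. For large arguments the integral form is the safer choice in practice.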

4. Approximations for the Inverse Error Function

Based on the geometric approach described in [], we were able to describe simple, useful formulas that, when guided by consistently higher orders of the approximation (2) for the error function, led to consistently more advanced approximations of the inverse error function. The starting point was the degree , that is, the approximation in Equation (3). Inverting led to , and using the parameter from Equation (3) yielded the following:

For , the relative deviation from the exact value t was less than , and for , the deviation was less than . Therefore, for , a more precise formula has to be used. As such, higher values for E appeared only in of the cases, so this would not significantly influence the CPU demand.
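The structure of this lowest-order inversion can be sketched in a few lines of Python. The sketch assumes the single-exponential form $\mathrm{erf}(t)^2 \approx 1 - e^{-k t^2}$, so that $t \approx \sqrt{-\ln(1 - E^2)/k}$. The value $k = 4/\pi$ used below is an illustrative choice that matches the slope of the error function at small t. It is not the parameter of Equation (3), and the deviations quoted above do not apply to it. The names `K_ILLUSTRATIVE` and `inverse_erf_static` are hypothetical.

```python
import math

K_ILLUSTRATIVE = 4.0 / math.pi   # hypothetical parameter, NOT the fitted value of Equation (3)


def inverse_erf_static(E: float, k: float = K_ILLUSTRATIVE) -> float:
    """Invert erf(t)^2 ~ 1 - exp(-k * t^2) for 0 <= E < 1."""
    # log1p(-E^2) evaluates ln(1 - E^2) accurately for small E
    return math.sqrt(-math.log1p(-E * E) / k)


for E in (0.1, 0.5, 0.9):
    t = inverse_erf_static(E)
    print(E, t, math.erf(t))     # erf(t) should be close to E
```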

Continuing with , we inserted into Equation (2) to obtain the following:

where and are the same as for Equation (4). Using the derivative of Equation (1) and approximating this by the difference quotient, we obtained the following:

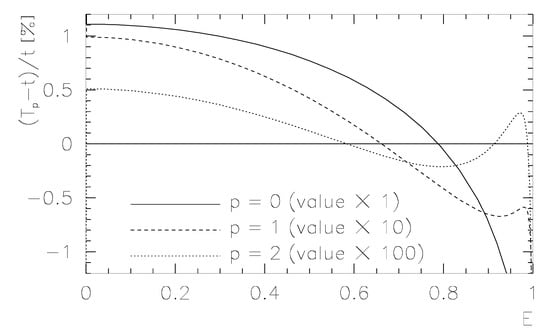

resulting in . In this case, for the larger interval , the relative deviation was less than . Using instead of and inserting instead of , we obtained with a relative deviation of maximally for the same interval. The results are shown in Figure 1.

Figure 1.

Relative deviations for the static approximations.

The method could be optimized by a method similar to the shooting method in boundary problems, which would add dynamics to the calculation. Suppose that following one of the previous methods, for a particular argument E, we found an approximation for the value of the inverse error function of this argument. Using , we could adjust the improved result

by inserting and calculating A for . In general, this procedure provided a vanishing deviation close to . In this case as well as for , in the interval , the maximal deviation was slightly larger than , while up to the deviation was restricted to . A more general ansatz

could be adjusted by inserting for and , and yielded the system of equations:

with . Therefore, could be solved for A and B to obtain the following:

For , we obtained a relative deviation of . For , the maximal deviation was . Finally, an adjustment of

led to the following:

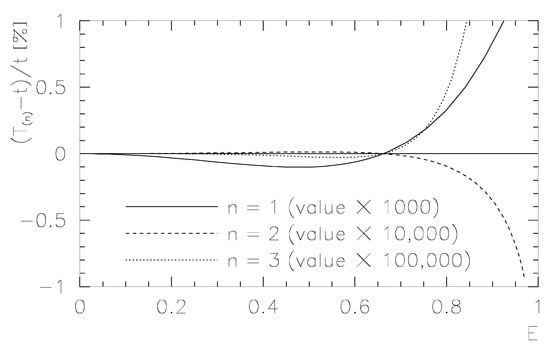

where . For , the relative deviation was restricted to , while up to , the maximal relative deviation was . The results for the deviations of () for linear, quadratic, and cubic dynamical approximation are shown in Figure 2.

Figure 2.

Relative deviations for the dynamical approximations (the degree was set as ).
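The idea of correcting a static starting value by using the derivative of the error function can be illustrated with a plain Newton iteration. This is a standard technique substituted here for illustration. It is not the linear, quadratic, or cubic ansatz with the coefficients derived above. The starting value comes from a single-exponential inversion with the illustrative parameter $k = 4/\pi$, which is also an assumption. All function names are hypothetical.

```python
import math


def static_start(E: float, k: float = 4.0 / math.pi) -> float:
    """Rough starting value from erf(t)^2 ~ 1 - exp(-k * t^2) (illustrative k)."""
    return math.sqrt(-math.log1p(-E * E) / k)


def refine_inverse_erf(E: float, t0: float, steps: int = 1) -> float:
    """Newton steps for erf(t) = E, using erf'(t) = 2/sqrt(pi) * exp(-t^2)."""
    t = t0
    for _ in range(steps):
        residual = E - math.erf(t)                          # mismatch at current t
        slope = 2.0 / math.sqrt(math.pi) * math.exp(-t * t) # derivative of erf at t
        t += residual / slope
    return t


E = 0.9
t0 = static_start(E)
for steps in range(4):
    t = refine_inverse_erf(E, t0, steps)
    print(steps, t, abs(math.erf(t) - E))
```

Each step costs one evaluation of the error function and one exponential, so precision and runtime can be traded off by choosing the number of steps.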

5. Discussion

In order to test the feasibility and speed, we coded our algorithm in the computer language C under Slackware 15.0 (Linux 5.15.19) on an ordinary HP laptop with an Intel® Core™2 Duo CPU P8600 @ 2.4GHz with 3MB memory used. The dependence of the CPU time for the calculation was estimated by calculating the value times in sequence. The speed of the calculation did not depend on the value for E, as the precision was not optimized. This would be required for practical application. Using an arbitrary starting value , we performed this test, and the results are shown in Table 1. An analysis of this table showed that a further step in the degree p doubled the runtime while the dynamics for increasing n added a constant value of approximately seconds to the result. Though the increase in the dynamics required the solution of a linear system of equations and the coding of the results, this endeavor was justified, as by using the dynamics, we could increase the precision of the results without sacrificing the computational speed.
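A timing experiment of this kind can be sketched as follows. The sketch is written in Python rather than C, uses a hypothetical stand-in for the approximation under test, and picks the repetition count and argument arbitrarily. It will therefore not reproduce the figures reported here.

```python
import math
import time


def inverse_erf_static(E: float, k: float = 4.0 / math.pi) -> float:
    """Hypothetical stand-in for the approximation being timed (illustrative k)."""
    return math.sqrt(-math.log1p(-E * E) / k)


def cpu_seconds(func, arg: float, repetitions: int) -> float:
    """CPU time spent evaluating func(arg) `repetitions` times in sequence."""
    start = time.process_time()          # process CPU time, excludes sleep
    for _ in range(repetitions):
        func(arg)
    return time.process_time() - start


elapsed = cpu_seconds(inverse_erf_static, 0.8, 100_000)
print(f"{elapsed:.3f} s for 100000 evaluations")
```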

Table 1.

Runtime experiment for our algorithm under C for and different values of n and p (CPU time in seconds). As indicated, the errors are in the last displayed digit, i.e., s.

The results for the deviations in Figure 1 and Figure 2 were multiplied by increasing the decimal powers in order to ensure the results were comparable. This indicated that the convergence was improved in each of the steps for p and n, at least by the corresponding inverse power, while the static approximations in Figure 1 showed both deviations were close to , and for higher values of E, the dynamical approximations in Figure 2 showed no deviation at and moderate deviations for higher values. However, the costs for an improvement step in either p or n was, at most, a 2-fold increase in CPU time. This indicated that the calculations and coding of expressions such as Equation (9) were justified by the increased precision. Given the goals for the precision, the user could decide to which degrees of p and n the algorithm should be developed. In order to prove the precision, in Table 2, we showed the convergence of our procedure for with fixed and increasing values of n. The last column shows the CPU times for runs of the algorithm proposed in [] with N given in the last column of the table in [], as coded in C.

Table 2.

Results for and increasing values of n for values of E approaching . The last column shows the CPU time for runs according to the algorithm proposed in [] for the values of N, given in the last column of the table displayed in [].

6. Conclusions

In this paper, we developed and described an approximation algorithm for the determination of the inverse error function, which is based on geometric considerations. As demonstrated, the algorithm can be easily implemented and extended. We showed that each improvement step improved the precision by a factor of ten or more, with an increase in CPU time of at most a factor of two. In addition, we provided a geometric derivation of Craig’s integral representation of the error function and a converging power series expansion for this formula.

Author Contributions

Conceptualization, D.M.; methodology, D.M. and S.G.; writing—original draft preparation, D.M.; writing—review and editing, S.G. and D.M.; visualization, S.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the European Regional Development Fund under Grant No. TK133.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Von Neumann, J. Various Techniques Used in Connection with Random Digits. In Monte Carlo Methods; Householder, A.S., Forsythe, G.E., Germond, H.H., Eds.; National Bureau of Standards Applied Mathematics Series; Springer: Berlin, Germany, 1951; Volume 12, pp. 36–38.

2. Quside Unveils the World’s First Randomness Processing Unit. Available online: https://quside.com/quside-unveils-the-worlds-first-randomness-processing-unit (accessed on 27 February 2023).

3. Craig, J.W. A new, simple and exact result for calculating the probability of error for two-dimensional signal constellations. In Proceedings of the 1991 IEEE Military Communication Conference, McLean, VA, USA, 4–7 November 1991; Volume 2, pp. 571–575.

4. Lever, K.V. New derivation of Craig’s formula for the Gaussian probability function. Electron. Lett. 1998, 34, 1821–1822.

5. Tellambura, C.; Annamalai, A. Derivation of Craig’s formula for Gaussian probability function. Electron. Lett. 1999, 35, 1424–1425.

6. Stewart, S.M. Some alternative derivations of Craig’s formula. Math. Gaz. 2017, 101, 268–279.

7. Martila, D.; Groote, S. Evaluation of the Gauss Integral. Stats 2022, 5, 32.

8. Andrews, L.C. Special Functions of Mathematics for Engineers; SPIE Press: Oxford, UK, 1998; p. 110.

9. Strecok, A.J. On the Calculation of the Inverse of the Error Function. Math. Comp. 1968, 22, 144–158.

10. Blair, J.M.; Edwards, C.A.; Johnson, J.H. Rational Chebyshev Approximations for the Inverse of the Error Function. Math. Comp. 1976, 30, 827–830.

11. Bergsma, W.P. A new correlation coefficient, its orthogonal decomposition and associated tests of independence. arXiv 2006, arXiv:math/0604627.

12. Dominici, D. Asymptotic analysis of the derivatives of the inverse error function. arXiv 2007, arXiv:math/0607230.

13. Dominici, D.; Knessl, C. Asymptotic analysis of a family of polynomials associated with the inverse error function. arXiv 2008, arXiv:0811.2243.

14. Winitzki, S. A Handy Approximation for the Error Function and Its Inverse. Available online: https://www.academia.edu/9730974/ (accessed on 27 February 2023).

15. Giles, M. Approximating the erfinv function. In GPU Computing Gems Jade Edition; Applications of GPU Computing Series; Morgan Kaufmann: Burlington, MA, USA, 2012; pp. 109–116.

16. Soranzo, A.; Epure, E. Simply Explicitly Invertible Approximations to 4 Decimals of Error Function and Normal Cumulative Distribution Function. arXiv 2012, arXiv:1201.1320.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).