1. Introduction

Recent literature claims “persistent misconceptions” exist about the interpretation of data obtained from sampling of spatially autocorrelated phenomena. In spatially autocorrelated data, values at each sample location provide information about the values of neighboring locations as well as the sampled location itself. The supposed misconceptions include the calculation of a quantity called the effective sample size, the focus of this paper, which is a measure of the loss of information attributable to the effects of positive spatial autocorrelation (SA; similar neighboring values cluster on a map). Brus [1], for example, contends that effective sample size calculations are inappropriate in a design-based analysis, in part because such an analysis considers attribute values as fixed rather than random quantities. The main objective of this paper is to demonstrate that this specific contention is incorrect, and that effective sample size is a meaningful quantity even with a random sampling selection implementation; the discussion here begins by briefly contextualizing it within a broader set of controversies about spatial statistical analyses.

Correlated data theory and practice has a history dating back to the early 1800s, with Laplace’s initial recognition of its presence in time series (e.g., [2]). The addition of SA to this raw dependent data family did not occur until the early 1900s, with its popularization failing to materialize until roughly 1970. Its transition from a tacit to a mathematically formulated conceptualization was not without controversy. Lebart [3], for example, used first spatial differences, fundamentally describing SA as being perfectly positive for each and every attribute variable in a dataset, to reduce/remove spatial dependence effects from factor analysis applications. Later, Besag et al. [4] formulated the improper conditional autoregressive (ICAR) model partly in this manner, but retained an uncorrelated component coupled with the spatial differencing term in order to preserve the almost always prevailing less-than-perfect positive SA. Because Lebart’s overcorrection was incapable of aptly capturing the exhibited latent degree of SA (moving data from zero, past intermediate, and to perfect positive SA), this transformation created little difference between the factor structures he calculated for these spatially adjusted variates and their corresponding unadjusted sources, discouraging many quantitative researchers at that time from pursuing emerging non-point pattern spatial statistics themes of that era. Since then, a number of other scholars directed criticisms at spatial statistical approaches to georeferenced data analyses. Wall [5], for example, carefully scrutinizes the spatial correlation structures implied by two of the most commonly employed spatial regression models within the context of an irregular lattice geographic structure of areal units, concluding that they produce many counterintuitive or impractical, and hence essentially unintelligible, consequences (e.g., adjacent polygons with a relatively small SA degree, perhaps because of spatial heterogeneity effects). Furthermore, Wakefield [6] argues that respecifying models to properly capture spatial dependence often is unwarranted for ecological regression analysis of geospatial environmental exposure and other epidemiological health data (e.g., because of data quality issues).

The spatial statistics/econometrics literature is replete with SA impact claims, the foremost being variance inflation. The publication of papers evaluating such assertions is one critical reaction to these allegations. Along these lines, Hawkins et al. [7] appraise the contention found in the ecological literature that SA in standard ordinary least squares (OLS) estimated linear regression residuals results in shifts in the equation’s partial coefficients, which bias an interpretation of the role of covariates vis-à-vis response variable map patterns. Their validation study confirms what mathematical statistics already declared, namely that OLS yields unbiased linear regression coefficient estimates for both independent and spatially autocorrelated observations, cautioning that interpretation requires an explicit awareness of both geographic scale and resolution. Meanwhile, acknowledging the contemporary popularity of mixed models in statistics in general, and spatial statistics/econometrics in particular, which involves adding a spatially autocorrelated random effects term to a regression model specification (e.g., re [4]), Hodges and Reich [8] show how to avoid spatial confounding, which they attribute, at least in part, to the presence of collinearity in data arising from the tendency for measures taken at locations nearby each other to be more similar than measures taken at more distant locations. Hodges and Reich [8] (p. 326) contend that they “debunk the common belief that introducing a spatially-correlated random effect adjusts fixed-effect estimates for spatially-structured missing covariates”.

Accompanying debates such as the preceding spatial confounding argument is one about the functional organization connecting locations in a SA conceptualization. Echoing Griffith and Lagona [9], LeSage and Pace [10] counter a nearly universal consensus, distinct from the concern about endogeneity, that estimates and inferences from spatial regression models are sensitive to even moderate changes in the definition governing this articulation. Nevertheless, for model identification issues affiliated with this linkage system and other reasons, Partridge et al. [11] note that econometricians remain skeptical about spatial econometric approaches that utilize SA effects to estimate geographic spillovers in regional outcomes. This type of skepticism also appears in the spatial sampling literature. Lark and Cullis [12] insist that the inferential basis supporting standard OLS estimation for a linear regression model is design-based, requiring that the collection of georeferenced data be in accordance with an appropriate random-grounded sampling design, a protocol rarely used for gathering soil data; hence, they conclude that OLS methods are not applicable for analyzing such data. In the same vein, Brus [1], for example, challenges declarations pertaining to sampling and variance inflation, and hence the use of other designs apart from simple random sampling (RS) for compiling geospatial data, as well as the application of the independent and identically distributed (iid) assumption to parent populations in such data analyses.

The primary objective of this paper is to augment the general literature in defense of spatial statistics/econometrics, and to reinforce earlier rebuttals such as those already reiterated here. Specifically, we address the debatable contentions posited by Brus with a goal of furnishing further clarification about them; at least part of this difference of opinion reflects that between the design- and model-based inference approaches (e.g., see [13,14]). Part of our underlying viewpoint emphasizes that iid random variables (RVs) provide a mathematical framework for RS, which is a special (not the sole) case of this concept’s implementation: knowing the output of one iid RV, whether for a population or a sample (à la [15]), supplies no information about the outputs of the others. After all, joint distributions for independent RVs differ from those for conditionally dependent RVs. Products of probability density/mass functions conceptualize the former situation, whereas Markov chain Monte Carlo (MCMC; [16]) formulations, for example, conceptualize the latter. In addition, not only real-world geographic landscapes, but also time series, matched/correlated/paired/dependent observational units, and social network collections of phenomena, rarely constitute random mixtures (see [17]); rather, they embrace trans-observational structure meriting quantitative recognition similar to calculating means, variances, and skewness and kurtosis measures.

2. The Case Brus Promotes: A Summary and Traditional Numerical Counterexamples

Brus [1] (pp. 689, 694, 696) raises provocative points about geographic probability sampling when he states:

Remarkably, in other publications we can read that the classical formula [for finite population sampling?] for the variance of the estimated population mean with SRS [simple RS] underestimates the true variance for populations showing spatial structure (see for instance Griffith [17] and Plant [18]). The reasoning is that due to the spatial structure there is less information in the sample data about the population mean.

Another persistent misconception is that when estimating the variance of the estimated mean of a spatial population or the correlation of two variables of a population we must account for autocorrelation of the sample data. This misconception occurs, for instance, in [17] and in [18]. The reasoning is that, due to the [SA] in the sample data, there is less information in the data about the parameter of interest, and so the effective sample size is smaller than the actual sample size.

… Griffith [17] confused the population mean and the model mean, Wang et al. [19] confused the population variance with the sill variance (a priori variance) of the random process that has generated the population [20].

A focal point in this set of disputes is variance inflation.

This section encapsulates output from Monte Carlo simulation experiments employing pseudo-random numbers that characterize two conventional numerical illustrations often anecdotally presented in pedagogic circumstances to exemplify variance inflation. The first is the instance of doubling to n an original sample of size n/2 drawn according to a SRS design by repeating each of its selected entries; educators can devise similar demonstrations for other sampling designs. The next subsection extends this pretend prospect to legitimate matched observations schemes. The third is the instance of a sample of size n comprising two distinct and unmistakably separated clusters of n/2 values, a data configuration resembling two repeated measures plagued by measurement error.

2.1. Samples Artificially Enlarged by Repeating Each of Their Selected Observations

One simulation experiment engages correlated data, with n/2 pairs of perfectly correlated (i.e., ρ = 1) observations. For simplicity, the synthetic data consist of pseudo-random numbers drawn from a normal distribution with μ = 0 and σ = 1. This experiment has 10,000 replications, and affords a comparison of output for this contrived sample with a SRS sample of size n; each sample contains the same initial n/2 SRS selections. The only factor manipulated in this experiment was sample composition.
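An experiment of this kind can be sketched in a few lines. The following is a minimal illustration, assuming NumPy and an illustrative n = 30; the sample size, seed, and function name are hypothetical choices made here, not values taken from the paper. It contrasts the sampling variance of the mean for a genuine SRS of size n with that for n/2 selections each repeated once.

```python
import numpy as np

def duplicated_vs_srs(n=30, reps=10_000, seed=0):
    """Sampling variance of the mean: SRS of size n vs. n/2 draws each repeated once."""
    rng = np.random.default_rng(seed)
    first_half = rng.standard_normal((reps, n // 2))   # shared initial n/2 selections
    second_half = rng.standard_normal((reps, n // 2))  # fresh draws completing the SRS
    srs = np.hstack([first_half, second_half])         # independent sample of size n
    contrived = np.hstack([first_half, first_half])    # rho = 1 within each repeated pair
    var_srs = srs.mean(axis=1).var(ddof=1)
    var_contrived = contrived.mean(axis=1).var(ddof=1)
    return var_srs, var_contrived

var_srs, var_contrived = duplicated_vs_srs()
ratio = var_contrived / var_srs  # variance inflation attributable to the repeats
print(f"SRS: {var_srs:.4f}  contrived: {var_contrived:.4f}  ratio: {ratio:.2f}")
```

Under these assumptions the ratio should sit near 2, because the contrived sample carries the information of only n/2 independent observations.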

Table 1 tabulations summarize selected output from this simulation experiment. One finding gleaned from this table is the well-known confirmation that SRS yields results consistent with the central limit theorem (CLT); slight differences appearing between the two sets of calculations are attributable to sampling error. Another finding is variance inflation attributable to correlated observations, the same variety discussed by Griffith [17], Plant [18], and Wang et al. [19], and discounted by Brus [1]; Griffith [17] treats this specific source within the context of the effective sample size notion. Indeed, this particular variance inflation, as indexed by the reported estimated sampling distribution variance ratios, reveals the actual sample size doubling that occurs in the contrived cases.

In summary, evidence exists that assumption violations unintentionally induced by correlated data alter the outcomes anticipated by conventional SRS design statistical theory. Ensuing sections of this paper substantiate that this property extends to geographic sampling settings involving SA.

2.2. Ignoring the Correlation in Correlated/Paired/Matched Samples

The preceding example imitates real-world possibilities, such as the genuine traditional testing of the difference between two population means hypothesis that is an exemplar of the investigation of an average outcome before and after an intervention. An analyst may manage this situation in one of two general ways: (1) draw two independent random samples, each of size n for balance, one before and the other after some controlled or natural disruption; or, (2) draw a single random sample of n matched/correlated/paired/dependent observations, recording each pair of before and after measurements. This second choice helps to control for certain potential sources of unwanted variation. The design-based approach acknowledges the latent temporal autocorrelation here by adopting the orthodox practice of analyzing the differences of before–after value pairings, setting, conceding, and perpetuating a habit of handling autocorrelation when sampling.
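The contrast between these two options can be sketched as follows. This is a minimal illustration assuming NumPy, unit variances, n = 30, and a within-pair correlation of ρ = 0.8; all of these are hypothetical choices made here and are not the settings behind the paper's tabulated results.

```python
import numpy as np

def paired_vs_independent(n=30, rho=0.8, reps=10_000, seed=0):
    """Sampling variance of the difference of two means under each design option."""
    rng = np.random.default_rng(seed)
    cov = np.array([[1.0, rho], [rho, 1.0]])
    # Option (2): one sample of n matched before/after pairs per replication.
    pairs = rng.multivariate_normal(np.zeros(2), cov, size=(reps, n))
    paired_diff = (pairs[..., 1] - pairs[..., 0]).mean(axis=1)
    # Option (1): two independent samples, each of size n.
    before = rng.standard_normal((reps, n))
    after = rng.standard_normal((reps, n))
    indep_diff = after.mean(axis=1) - before.mean(axis=1)
    return paired_diff.var(ddof=1), indep_diff.var(ddof=1)

var_paired, var_indep = paired_vs_independent()
print(f"paired: {var_paired:.4f}  independent: {var_indep:.4f}")
```

With unit variances, the theoretical values are 2(1 − ρ)/n for the paired design and 2/n for the independent one, so an analysis that ignores the pairing misstates the standard error by a factor that depends on ρ.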

Table 2 summarizes output for a basic second simulation experiment exemplifying this latter approach. As in the preceding section, Table 2 discloses marked variance inflation attributable to correlated observations.

In this longstanding statistical problem, dating back to work by Hotelling around 1930, the independence affiliated with RS differs from the pairwise observational correlation attributable to matching/pairing, a standing analogous to that of SA in geospatial data. Nonetheless, the RS design embraces it (e.g., collecting pre- and post-event measurements requires a coordinated effort beyond simple/unconstrained RS) in order to decrease the sampling distribution variance, here by about one third; again, design-based approaches customarily account for this in their variance estimation via differencing. Ignoring this sampling design facet would allow an analyst to pretend that a sample size has twice as many entries as it truly has (i.e., the effective sample size here is nk, not 2nk), and that the difference of two means standard error is nearly three times greater than its true magnitude.

A real-world example of this possibility occurred during the early years of the United States Environmental Protection Agency’s Environmental Monitoring and Assessment Program (US EPA EMAP): underwater soil samples collected from the Chesapeake Bay and other near-coastal water sea beds along the US Atlantic Ocean and Gulf of Mexico shorelines were coded and then distributed to private chemistry laboratories for assaying, with the goal of determining which companies should receive subsequent government contracts through this project. For quality control purposes, both the same and different laboratories received sets of soil sample bags with some containing near-duplicate (i.e., some would say SA, whereas others would say measurement error, at work) content because of their side-by-side borehole locations. One entrepreneur deciphered the adopted code, and merely reported the first replicate bag results again rather than assaying duplicate samples: he treated a sample smaller than n as being of size n. Because he reported identical results for replicate bags, project scientists detected his deception, preventing him from receiving a subsequent project contract. Recognizing and accounting for correlation in observations matters!

2.3. Samples Grouping into Two Equal-Sized Disparate-Valued Subsets of Observations

A third simulation experiment was bivariate in nature, and involved clustered data grouped into two clearly distinct subsets, each with n/2 pairs of uncorrelated (i.e., ρ = 0) observations. Once more, for simplicity, the synthetic data consist of pseudo-random numbers drawn from a normal distribution with (μx,1, μy,1) = (5, 5) and (μx,2, μy,2) = (10, 10), and σx = σy = 0.5; this chosen variance ensures that the two subset clusters are distinct and concentrated. The experiment had 10,000 replications, and supported comparing output for a SRS sample of size n with a stratified sample of size four. The only factor manipulated in this experiment was the design sample size.
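A simplified version of this design comparison can be sketched as follows. The sketch assumes NumPy, an effectively infinite population in which the two clusters are equally likely, and it tracks only the x-coordinate mean rather than the full bivariate output; the function and constant names are hypothetical.

```python
import numpy as np

CLUSTER_MEANS = np.array([5.0, 10.0])  # x-coordinate centers of the two clusters
SIGMA = 0.5                            # common within-cluster standard deviation

def mean_x_variance(n=4, reps=10_000, seed=0):
    """Sampling variance of the x mean: SRS of size n vs. stratified RS of size four."""
    rng = np.random.default_rng(seed)
    # SRS: each selection falls in either cluster with probability 1/2.
    labels = rng.integers(0, 2, size=(reps, n))
    srs_x = CLUSTER_MEANS[labels] + SIGMA * rng.standard_normal((reps, n))
    # Stratified RS: exactly two selections from each cluster.
    strata = np.tile([0, 0, 1, 1], (reps, 1))
    strat_x = CLUSTER_MEANS[strata] + SIGMA * rng.standard_normal((reps, 4))
    return srs_x.mean(axis=1).var(ddof=1), strat_x.mean(axis=1).var(ddof=1)

var_srs, var_strat = mean_x_variance()
print(f"SRS (n = 4): {var_srs:.4f}  stratified (n = 4): {var_strat:.4f}")
```

Calling `mean_x_variance(n=...)` with larger n shows how slowly the SRS variance approaches the value that the stratified design of size four achieves, since SRS must also absorb the between-cluster variation.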

Geometrically speaking, the pedagogic feature of interest here is that determining a straight line linear regression on a two-dimensional scatterplot requires only two different points, which always are collinear; by reducing one of the two point clouds to a single point, this specimen also more commonly offers an excellent illustration about the influence of outliers on linear correlation and regression estimation. Maintaining a minimum number of degrees of freedom as well as a balanced design in an affiliated simulation experiment requires increasing each minimum stratified RS stratum sample size nk, where k denotes the number of strata, here from one to two (i.e., two draws from each cluster).

Table 3 tabulations report selected summary output from the simulation experiment. One finding gleaned from this table is the well-known confirmation that SRS yields results that improve in accuracy and precision by increasing sample size n, with trajectories converging upon their corresponding population parameter values. Another corroborated well-known finding is that stratified RS is capable of furnishing more representative samples that can better estimate population parameters with smaller sampling variances; this trait, although not guaranteed to arise, holds for the relatively larger sample sizes sometimes utilized in soil science research, for example.

In closing this section, evidence exists endorsing the common practice of using stratified RS designs for more efficient data collection. However, the illustration in this section is for emphasis only; no inconsistencies exist between what design- and model-based approaches tell the scientific community (e.g., see [21], ([22], §13.2)). Ensuing sections of this paper substantiate that this property also extends to geographic sampling venues involving SA. As with the preceding exemplar, variance inflation occurs in SRS vis-à-vis stratified RS, a similar kind to that discussed by Griffith [17], Plant [18], and Wang et al. [19], and denigrated by Brus [1].

4. Alternative Sampling Designs and Model-Based Inference

For a variety of reasons, not all data collection profits from, nor do collected data automatically relate to, SRS or its alternative data randomization procedures. Correlated data in general, and the presence of SA in particular, furnish(es) one justification for departure from an all-encompassing exploitation of randomness.

When planning data collection, a principal reason to sample is to engage in an efficient and effective use of finite resources while surveying a population. A sample size n should be large enough to make a statement about given parameters describing its parent population with a pre-specified precision and a stipulated resources cost. The arithmetic mean CLT, for example, enables such an outcome. However, researchers end up with only a single, but not necessarily a typical, sample, whose data also may need to display bonus salient features to bolster its value. Seeking such desirable add-ons motivated the invention of more sophisticated designs, such as stratified RS, whose important supplemental quality is improved representativeness (see Table 3 and Table 4), chiefly by reducing the selection probability of certain anomalous sample composition possibilities, sometimes to zero, while (perhaps uniformly) increasing the probability of all achievable samples.

After collecting data, whose gathering was potentially in some non- or constrained random fashion (e.g., its sampling design deviates from SRS), a researcher has the option to shift to model-based inference. Relocating the all-important random aspect for scientific research from probability sampling to the obtained attribute values themselves, a sample becomes a realization of a joint distribution of RVs, the superpopulation, a notion apparently first put forth by Cochran [26], and the fundamental basis of most time series analyses as well as MCMC methods especially popular in Bayesian map analysis and frequentist spatial statistics. In its traditional version of this context, variance estimation derives from OLS theory, and thus, variance heteroscedasticity replaces known, and appealingly equally likely, probabilities as the primary worry. The resulting inferential basis has a model validity foundation, emphatically accenting the adequacy of its description via goodness-of-fit and other diagnostics. Randomness here stems from the assumption of the existence of a superpopulation, and hence some stochastic process producing an observed realization, not from a sampling design. SRS requires independent probabilities in its selection of observations (e.g., all possible samples of size n have an equal probability of being selected), whereas this model-based approach requires independence among the observations themselves; accordingly, the presence of nonzero SA necessitates the adoption of appropriate techniques to account for it. Although they are functional in their individual forms, coupling design- and model-based strategies has at least one synergistic advantage, namely that their combination allows inferences to be robust with respect to possible model misspecification.

4.1. Some Reasons Why Designs Utilize Other Than Simple/Unrestricted RS

In response to representativeness needs, statistically informed experimental designs embracing more than RS strive to plan data collection efficiently and effectively, within a sampling framework, in such a way that subsequent statistical analysis of any sampled data yields valid and objective statistical inferences. In doing so, each strategy essentially pre-determines the appropriate statistical techniques for subsequently analyzing data collected with its implemented strategy. Balance frequently is one of the important elements: an equal number of observations drawn from various segments of a population to enhance statistical power, to improve efficiency of sampling estimates, and to create certain data analytic orthogonality conditions. Unbalanced data have a skewed distribution of observations across targeted population subgroups, with methodologists habitually describing such unbalanced datasets as being messy [45]. Areal unit dependencies (e.g., SA) and/or nonlinear relationships (e.g., the specification and estimation of auto- models [46]) tend to permeate such untidy sample data, a common hallmark of observational studies, increasing the risk of promoting inexplicable model specifications (e.g., re the spatial autoregressive critique by Wall [5]). A balanced geospatial sample data assemblage scheme relates directly to the Horvitz–Thompson estimators, for which balance ensures that picked auxiliary variable sample totals are the same or almost the same as their true population counterparts. Balance introduces the importance of a design establishing a suitable sample size for achieving not only satisfactory population coverage (e.g., a need for the full range of interpoint distances to estimate a semivariogram model; see [47]), but also a particular degree of statistical power.

Real-world sample data also tend to be what methodologists label dirty (i.e., are incomplete and/or include outliers/anomalies; e.g., [48]). Such data may contain corruptions from inaccuracies and/or inconsistencies, or may mandate variance-stabilizing transformations, both of which necessitate remedial action by an analyst. This context certainly impacts any determination of the appropriate subsequent statistical techniques for analyzing data collected with an implemented plan. SA plays a role here, as espoused by, for example, Griffith and Liau [49]. Geostatistical kriging, which exploits SA, symbolizes the importance of missing data imputation.

An adequate sample size, vis-à-vis statistical power [50], combines with methodologists’ impressions of noisy data: numerical facts and figures characterized by unsystematic uncertainty/variability/stochastic error, containing obscured/masked trends of various degrees that conceal a true alternate hypothesis. This issue partners with the contemporary debate about substantive significance (e.g., [51]), namely the size of a(n) effect/relationship, and statistical significance, which entails detailed description of the nature of the uncertainty involved. Both an extensive literature and practice exist devoted to computing sufficient sample sizes for the uncovering of a precise size effect. Effective geographic sample size explicitly addresses this magnitude of difference between a posited null and its affiliated alternative hypothesis, as well.

Although these few dimensions of experimental design are not comprehensive, they demonstrate the relevance and importance of acknowledging, and even accounting for, the presence of non-zero SA when engaging in RS. Each is outside the purview of the CLT. However, each is capable of having a profound influence upon the execution of RS, highlighting the importance of this paper’s focus.

4.2. Some Reasons Why SA Encourages Model-Based Inference

In model-based inference, each real-world $y_i$ is a random, rather than a fixed (as in design-based inference), quantity (see Appendix A). Now the random component comes from stochastic processes affiliated with RV Y in a superpopulation, in lieu of SRS. This model-based conceptualization presents the following two different inference problems pertaining to: (1) the realization mean, $\bar{y}$, which varies from realization to realization in a way analogous to how this calculation varies across all possible SRS samples of size n; and, (2) the superpopulation mean counterpart to $\bar{y}$, namely μ. The simplest descriptive model specification (i.e., no covariates) posits $\mathbf{Y} = \mu\mathbf{1} + \boldsymbol{\varepsilon}$, where $\mathbf{Y}$, $\mathbf{1}$, and $\boldsymbol{\varepsilon}$ are n-by-1 vectors, with all of the elements of $\mathbf{1}$ being one, and the elements of $\boldsymbol{\varepsilon}$ being drawings from a designated probability model (e.g., normal) with a zero mean (because scalar μ already is in the postulated specification) and finite nonzero variance; this is the case treated by Griffith [17]. It spawns an estimate with an attendant superpopulation variance estimator that simplifies to the one for SRS applied to an infinite population (i.e., no correction factor; hence the approximation variety for finite populations having n ≤ 0.05N) [52] (p. 43).

The presence of non-zero SA for the covariate-free specification alters OLS to GLS estimation for model-based calculations. The preceding linear model specification remains the same, whereas the variance estimation changes from a form based on $\mathbf{I}$ to one based on $\mathbf{V}$, where, respectively, n-by-n matrix $\mathbf{I}$ denotes the identity matrix and $\mathbf{V}$ denotes the SA structure operator instilling geographic covariation. This conversion signals the importance of whether or not matrix $\mathbf{V} \neq \mathbf{I}$ matters when implementing SRS. Both expert opinion and content in Table 3 and Table 4 imply that it does.
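For orientation, the standard textbook variance results for this intercept-only model can be written out as a sketch. It assumes $\operatorname{Var}(\boldsymbol{\varepsilon}) = \sigma^{2}\mathbf{V}$ with $\mathbf{V}$ a positive definite correlation-type matrix; the symbols $\hat{\mu}_{\mathrm{OLS}}$, $\hat{\mu}_{\mathrm{GLS}}$, and $n^{*}$ are notation introduced here for illustration and need not match the paper's own displayed equations.

```latex
\begin{align*}
\hat{\mu}_{\mathrm{OLS}} &= \frac{\mathbf{1}^{\mathsf T}\mathbf{Y}}{n}, &
\operatorname{Var}(\hat{\mu}_{\mathrm{OLS}}) &= \frac{\sigma^{2}}{n}
  \quad \text{when } \mathbf{V} = \mathbf{I}, \\
\hat{\mu}_{\mathrm{GLS}} &=
  \frac{\mathbf{1}^{\mathsf T}\mathbf{V}^{-1}\mathbf{Y}}{\mathbf{1}^{\mathsf T}\mathbf{V}^{-1}\mathbf{1}}, &
\operatorname{Var}(\hat{\mu}_{\mathrm{GLS}}) &=
  \frac{\sigma^{2}}{\mathbf{1}^{\mathsf T}\mathbf{V}^{-1}\mathbf{1}}, \\
 & &
\operatorname{Var}(\hat{\mu}_{\mathrm{OLS}}) &=
  \frac{\sigma^{2}\,\mathbf{1}^{\mathsf T}\mathbf{V}\mathbf{1}}{n^{2}}
  \quad \text{when } \mathbf{V} \neq \mathbf{I}.
\end{align*}
```

Equating $\sigma^{2}/n^{*}$ with the last expression gives $n^{*} = n^{2}/(\mathbf{1}^{\mathsf T}\mathbf{V}\mathbf{1})$, one common way of expressing an effective sample size, though not necessarily the definition adopted in [17]. For the duplicated sample of §2.1, every row of $\mathbf{V}$ sums to 2, so $\mathbf{1}^{\mathsf T}\mathbf{V}\mathbf{1} = 2n$ and $n^{*} = n/2$.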

4.3. A Monte Carlo Simulation Experiment Investigating Design- vs. Model-Based Inference

Brus [1] (p. 689) claims that Griffith [17] and Plant, per [18], perhaps among others, brand the classical SRS variance formula for $\bar{y}$ as underestimating the true $\sigma^{2}$ in the presence of SA (see §2). His contention compels an exploration of the meaning of underestimation in terms of the way he uses the word, which, in part, becomes conditional upon the extent or scale of the geographic landscape in question.

Table 4 already illustrates that variance estimation is insensitive to a change in sampling design from SRS to stratified RS when an attribute variable exhibits a purely random mixture geographic distribution. Moreover, if strata are ineffective (e.g., they capture the presence of zero SA) in a stratified design, the estimated variance is expected to be the same as that for SRS. However, if these strata are effective (i.e., they capture prevailing non-zero SA, grouping within each stratum units that had roughly similar y-values), then the stratified variance estimator would capture the improved precision of the estimated mean achieved by this stratified design. Although design-based inference does not assume that all populations are random with no structure, the design-based variance estimators properly take into account features of strata and/or clusters. This is not the outcome when positive SA prevails because similar values cluster on its map, recurrently materializing as a conspicuous two-dimensional pattern, catapulting the notion of variance inflation (the only SRS variance estimation declaration made in Griffith [17]) to the forefront of SA distortion concerns (see Table 4).

For generalizability purposes, the fifth simulation experiment employed a moderate, rather than pronounced, degree of positive SA, and only the fairly universally agreed upon minimum sample size of 30. Its output is sufficient to exemplify that applying SRS to geospatial data containing SA for only a subregion of a larger landscape can cause underestimation of the variance, even though this same estimator furnishes an accurate variance estimate when applied to the entire parent geographic landscape under study (see Table 5), whose boundaries almost always are unknown. In this context, both sets of variances conform to regular mathematical statistical theory, such as correct Type I error probabilities (see Table 5). A clustering of similar values when SA prevails reduces geographic variation within a subregion (paralleling the preceding stratified RS, with a region in this circumstance mirroring an individual stratum in that instance) while increasing contrasts among the given subregions (i.e., SA reduces within region, while increasing between regions, geographic variance); these discrepancies account for the missing components empowering SA to decrease the regional variation reported in Table 5. Trying to align a study area with its true but indeterminate larger scale landscape is all but impossible, explaining why variance underestimation, such as that critiqued in Brus’s [1] comments, may well occur. In effect, an analysis unknowingly is of a subdomain: estimated variances tend to vary over the different subdomains, with these subdomain variances not matching the variance estimated for samples from the full study region. A strategy for correcting this situation is becoming aware of the existence of such subdomains, after which design-based methodology certainly exists for properly handling them.

In summary, latent nonzero SA means that geotagged attribute values exhibit a two-dimensional organization deviating from the unpredictability depicting a fully random mixture of values. This data trait, in turn, can result in an incorrect identification of a target population, resulting in SRS producing an underestimation of the attribute’s variance. The elusiveness of almost always invisible geographic landscape boundaries argues for taking SA into account when sampling and analyzing geospatial data, and consulting model-based methodology for a reminder about helpful tools, such as effective geographic sample size, to which stratified SA also alludes.

5. The Role of iid in Sampling Designs

Preceding discussion tackles observational independence issues, the first letter in iid, with this initial i signifying that multiplication with either individual sample or population marginal probabilities is well-founded; this section mostly concentrates on the remaining id part of iid. Brus [1] (p. 289) states that “… in statistics [iid] is not a characteristic of populations, so the concept of iid populations does not make sense.” At best, this announcement seems intransigent, mainly given that mainstream mathematical statistical theory deriving the CLT that supports SRS statistical inference considers iid to be a pillar of SRS theory, whereas techniques such as Monte Carlo simulation and MCMC can couch superpopulations within the same framework category. Invoking this property allows the statistical/sampling distribution of the arithmetic mean, $\bar{y}$, to have asymptotic convergence to a normal distribution with increasing sample size, n. In addition, iid enables an analytical computation of the rate of convergence across different RVs, a valuable input for deciding upon a SRS design value for n. Hoeffding [53] posits a moment matching theorem establishing the convergence in probability of density functions, which pertains here: if skewness asymptotically goes to zero, and kurtosis asymptotically goes to three (i.e., excess kurtosis asymptotically goes to zero), then the sampling distribution under study closely mimics a normal RV probability density function (PDF), one described by the CLT.

SRS from an infinite normal RV population is the most straightforward illustrative case. This RV’s moment generating function (MGF) is $M_Y(t) = e^{\mu t + \sigma^2 t^2/2}$. Its accompanying arithmetic mean MGF is $M_{\bar{y}}(t) = [M_Y(t/n)]^n = e^{\mu t + \sigma^2 t^2/(2n)}$, with the population iid property authorizing the product of the n individual observation MGFs as a power function. The resulting general sample MGF is that for a normal RV with a mean of μ and a variance of σ²/n, which are exact CLT outcomes. In other words, an n = 1 iid normal RV population sampling distribution achieves instant convergence, as the law of large numbers also confirms for samples of size n = 1. The question applied researchers find themselves asking, then, is how to detect the underlying RV nature of their data. Answering this question diverts them to an inspection of a histogram portrayal of their sample of attribute values, and hence often to a goodness-of-fit test for the theoretical RV they hypothesize. Determining an appropriate sample size for a meaningful evaluation of normality is a difficult task: all goodness-of-fit diagnostics suffer from dual weaknesses, in that small sample sizes allow identification of only the most aberrant departures, whereas very large sample sizes invariably magnify trivial departures from normality, often delivering either statistically nonsignificant but substantively important, or statistically significant but substantively unimportant, inferential conclusions. Accordingly, normality diagnostic statistics require a minimum sample size before they become informative, and tests of normality have notoriously low power to detect non-normality in small samples. Graphs appearing in Razali and Yap [54] corroborate this contention; only normal approximations, the recipients of such assessments, exist in the real world, as conventional statistical wisdom purports that n needs to be between 30 and 100 for a sound statistical inference about a RV name. Razali and Yap [54] also furnish some contemporary wisdom based upon evidence from Shapiro–Wilk normality diagnostic statistical power simulation experiments: the minimum sample size for deciding upon an underlying RV appears to be about 250 (e.g., the chi-squared, gamma, and uniform RVs), and maybe more (e.g., the t-distribution RV) in order to maximize statistical power.
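The power-function argument for the normal case can be checked numerically. The following minimal sketch, which uses illustrative function names that are not part of the original analysis, evaluates the product of n identical normal MGFs at t/n and compares it with the MGF of a normal RV having mean μ and variance σ²/n; the two should agree up to floating-point rounding for any n, including n = 1.

```python
import math


def normal_mgf(t, mu, sigma):
    """MGF of a normal RV: exp(mu*t + sigma^2 * t^2 / 2)."""
    return math.exp(mu * t + 0.5 * (sigma * t) ** 2)


def mean_mgf_iid(t, n, mu, sigma):
    """MGF of the arithmetic mean of n iid normal draws: [M_Y(t/n)]^n."""
    return normal_mgf(t / n, mu, sigma) ** n


def mgf_gap(t, n, mu=1.0, sigma=1.0):
    """Relative gap between the power-function MGF and the N(mu, sigma^2/n) MGF."""
    target = normal_mgf(t, mu, sigma / math.sqrt(n))
    return abs(mean_mgf_iid(t, n, mu, sigma) - target) / target


if __name__ == "__main__":
    # The gap should be at rounding-error level for every n, including n = 1.
    for n in (1, 5, 30, 100):
        print(n, mgf_gap(0.7, n))
```

The default values μ = 1 and σ = 1 simply mirror the specimen RVs discussed in this section; any finite values would serve equally well.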

Table 6 tabulates specimen results for a small array of RVs that span the complete spectrum of PDF forms, all with μ = 1 and σ = 1 by construction: bell-shaped, right-skewed, uniform, symmetric sinusoidal (i.e., U-shaped beta), and mixed (a blend of these previous four RVs). The first four of these table entries involve iid RV populations, with their analytics being expressions similar to the preceding normal RV power function. In other words, the sampling distribution MGF is $[M_Y(t/n)]^n$ rather than $\prod_{i=1}^{n} M_{Y_i}(t/n)$ (the more traditional mathematical expression that may be rewritten as a convex combination of separate orthodox PDFs; e.g., see Table 6), which represents sampled independent but non-id observations.

The nature of an iid population RV impacts the CLT convergence to normality with increasing n, in turn possibly establishing different appropriate minimum sample sizes for the SRS design-based sampling distribution of $\bar{y}$ to adequately conform to a bell-shaped curve. This data analysis aspect is important for making proper design-based statistical inferences (e.g., the construction of arithmetic mean confidence intervals). Because population RV symmetry impacts the sampling distribution skewness rate of convergence, the focus here is on the kurtosis rate of convergence, which Table 6 documents as potentially fluctuating across RVs, justifying the need for RV-specific SRS minimum sample sizes. Some of these RVs have the increasing-n trajectory converging from above (e.g., exponential), whereas others have it converging from below (e.g., uniform and sinusoidal).

Research about non-iid RVs also has a long history, stretching back to its most cited early mixture publication by Pearson [10]. Zheng and Frey [55] ascertain that sample size, convex combination mixing weights, and degree of separation between component RVs impact RS outcomes for a mixture RV. Sampling variance decreases with increasing sample size (that tendency from the CLT appears to apply; see Table 6), whereas increasing separation between component RVs improves the stability of RS results and increases parameter independence.
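The tendency for sampling variance to shrink with n under a mixture can be illustrated with a small Monte Carlo experiment. The sketch below is an illustration only, not the simulation design behind Table 6: it assumes an equal-weight mixture of a normal, an exponential, a uniform, and a U-shaped Beta(½, ½) RV, each rescaled to have mean 1 and variance 1, and the function names, replication count, and seed are arbitrary choices.

```python
import math
import random
import statistics


def draw_mixture(rng):
    """One draw from an equal-weight mixture of four RVs, each with mean 1 and variance 1."""
    k = rng.randrange(4)
    if k == 0:  # normal with mean 1 and standard deviation 1
        return rng.gauss(1.0, 1.0)
    if k == 1:  # exponential with rate 1
        return rng.expovariate(1.0)
    if k == 2:  # uniform on 1 +/- sqrt(3)
        h = math.sqrt(3.0)
        return rng.uniform(1.0 - h, 1.0 + h)
    # U-shaped Beta(1/2, 1/2), rescaled to the interval 1 +/- sqrt(2)
    h = math.sqrt(2.0)
    return 1.0 - h + 2.0 * h * rng.betavariate(0.5, 0.5)


def variance_of_sample_mean(n, reps=4000, seed=12345):
    """Monte Carlo estimate of the variance of the mean of n mixture draws."""
    rng = random.Random(seed)
    means = [
        statistics.fmean([draw_mixture(rng) for _ in range(n)])
        for _ in range(reps)
    ]
    return statistics.variance(means)


if __name__ == "__main__":
    for n in (10, 40):
        # With unit mixture variance, the estimate should be close to 1/n.
        print(n, variance_of_sample_mean(n), 1.0 / n)
```

Because every component shares the same mean and variance, the mixture variance is also one, so the simulated variance of the sample mean should sit near 1/n.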

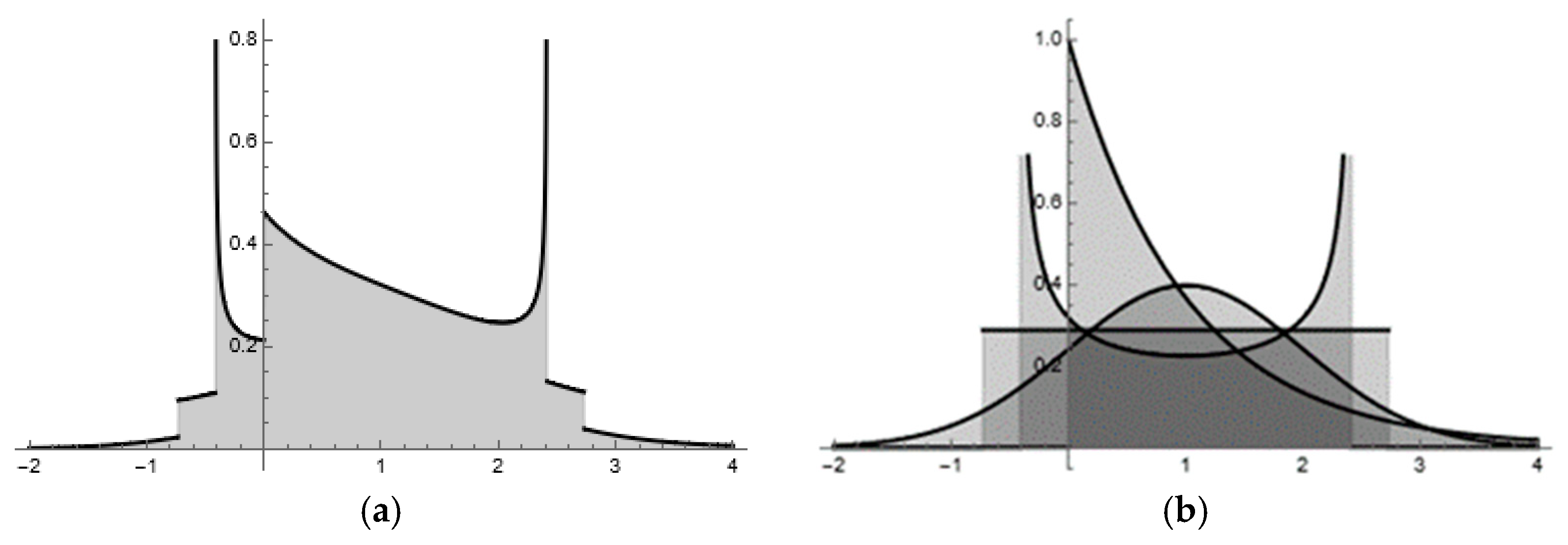

Table 6 reports results for a mixture [56,57,58,59] of different RVs [here the respective population weights attached to RVs are the same, namely ¼, in the mixture PDF convex combination (Figure 1a), from which SRS drawings are made]. These results partly illustrate that a principal consequence of non-iid population RVs (i.e., population heterogeneity), which here vary only by their respective natures, not their means or variances (Figure 1b), is an induced correlation between pairs of sample means and variances [60], together with a conceivable breakdown of part of the SRS-based CLT theory attributable to ‘a “bulge” in the confidence interval in the region of cumulative probability representing the inflection point between [RV] components’ [55] (p. 568) that is capable of shrinking or inflating a confidence interval. Thus, maintaining inferential integrity requires remedial action that aims to stabilize variability and uncertainty estimates for larger sample sizes, such as resorting to bootstrapping techniques [55]. For example, given the four familiar specimen RVs in Table 6 (see Figure 1b for a portrayal of their overlaid supports), their respective asymptotic sample size trajectories enter the considerably narrow kurtosis interval of 3 ± 0.049 with concomitant sample sizes of 1, 123, 25, and 31, which essentially is in keeping with conventional wisdom.
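The four sample sizes quoted above can be reproduced from a standard cumulant result: the excess kurtosis of the mean of n iid draws equals the population excess kurtosis divided by n. The sketch below assumes that the specimen RVs are the normal, exponential, uniform, and arcsine [i.e., U-shaped Beta(½, ½)] RVs, whose population excess kurtosis values are 0, 6, −1.2, and −1.5; the dictionary and function names are illustrative only.

```python
import math

# Population excess kurtosis of each specimen RV (standard textbook values).
# Assumption: the "sinusoidal" U-shaped beta is the arcsine Beta(1/2, 1/2) RV.
EXCESS_KURTOSIS = {
    "normal": 0.0,
    "exponential": 6.0,
    "uniform": -1.2,
    "sinusoidal": -1.5,
}


def mean_excess_kurtosis(gamma2, n):
    """Excess kurtosis of the mean of n iid draws: gamma2 / n."""
    return gamma2 / n


def min_n_within(gamma2, tol=0.049):
    """Smallest n for which the kurtosis of the mean lies within 3 +/- tol."""
    return max(1, math.ceil(abs(gamma2) / tol))


if __name__ == "__main__":
    print({name: min_n_within(g2) for name, g2 in EXCESS_KURTOSIS.items()})
    # {'normal': 1, 'exponential': 123, 'uniform': 25, 'sinusoidal': 31}
```

The sign of the population excess kurtosis also shows the direction of approach: the exponential case converges to three from above, whereas the uniform and sinusoidal cases converge from below.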

Meanwhile, the designated specimen mixture RV has a theoretical mean and variance of one, skewness of 0.5, and excess kurtosis of 0.825, naively designating it as a leptokurtic RV; however, Figure 1a pictures otherwise. This concocted mixture has virtually no separation of components (Figure 1b). It encounters a serious drawback vis-à-vis minimum sample size because mixture RVs: (1) have convergence rates that are a linear combination of those for their separate component RVs (after appropriate mixing weight substitutions, Table 6 entries become simplifications of the expression $-3(5p_N + 25p_E + p_U - 5)/(10n^2)$, where $p_N$, $p_E$, and $p_U$, respectively, denote the mixing weights attached to the normal, exponential, and uniform RV components [i.e., $p_S = 1 - p_N - p_E - p_U$ for the sinusoidal RV]); (2) almost always encompass unidentified component RVs; and, (3) usually entail unknown mixing weights. These first two points jointly mean that the largest component RV minimum sample size defines n for ensuring that the CLT begins to regulate a sampling distribution; hence, n often tends to be near the end, rather than the beginning, of the routine n = 30–100 range. Meanwhile, the set of mixing weights, $p_j$, follows a multinomial distribution, impacting the minimum sample size when one goal of a sampling design is to draw a sample composition closely proportional to the prevailing set of mixing weights (i.e., representativeness, which means avoiding the 14 medleys other than at least one observation from each RV, and which is unattainable with stratified RS because of ignorance about mixture properties). Accordingly, the smallest $p_j$ harmonizes with one of the escorting RV convergence rates to delineate a suitable minimum sample size; e.g., invoking the 6σ principle, if the minimum $p_j$ is 0.05, then, for example, 0.05 − √[(0.05)(0.95)/n] = 0.04 ⇒ n = 2850 ⇒ N ≥ 57,000 (magnitudes more akin to those for the law of large numbers). In other words, suppressing the id part of the iid assumption tends to increase the necessary minimum sample size, exemplifying the notorious inclination for mixture RVs to present statisticians with serious technical challenges.
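The theoretical skewness and excess kurtosis quoted for the specimen mixture follow from a simple weighted sum. When every component RV shares the same mean and variance (here, both equal to one), the mixture's standardized third and fourth central moments are the mixing-weight averages of the component values. The sketch below assumes standard textbook shape values for the normal, exponential, uniform, and arcsine (U-shaped beta) components; the names are illustrative, and the shortcut does not hold for components with differing means or variances.

```python
# (skewness, excess kurtosis) of each component RV; standard textbook values.
# Assumption: the sinusoidal component is the arcsine Beta(1/2, 1/2) RV.
COMPONENTS = {
    "normal": (0.0, 0.0),
    "exponential": (2.0, 6.0),
    "uniform": (0.0, -1.2),
    "sinusoidal": (0.0, -1.5),
}


def mixture_shape(weights):
    """Skewness and excess kurtosis of a mixture whose components share one mean and one variance."""
    if abs(sum(weights.values()) - 1.0) > 1e-12:
        raise ValueError("mixing weights must sum to one")
    skew = sum(w * COMPONENTS[name][0] for name, w in weights.items())
    excess_kurtosis = sum(w * COMPONENTS[name][1] for name, w in weights.items())
    return skew, excess_kurtosis


if __name__ == "__main__":
    equal_weights = {name: 0.25 for name in COMPONENTS}
    # Approximately (0.5, 0.825), up to floating-point rounding.
    print(mixture_shape(equal_weights))
```

Changing the weights in `equal_weights` shows how strongly the exponential component, the only skewed one, drives both shape measures.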

In general, CLT convergence still occurs if either a single dominant RV subsample size, $n_j$, or the cumulative component $n_j$s (i.e., these subset sizes sum to n) across RVs, goes to infinity. Unfortunately, when mixture distribution inflection points occur at component RV means, CLT-based confidence intervals can become distorted, which is one of the prominent examples of technical challenges arising when dealing with this category of RV [55]. Furthermore, without iid, the critical RV assumptions revert to a finite mean and a finite variance; without id, distinctiveness of component RVs may become critical, and SRS sampling variance can deteriorate. Nonetheless, among its inventory of well-known properties, the classical CLT still fails to preside over a mixture containing a Cauchy RV.

6. Discussion, Implications, and Concluding Comments

Contrary to assertions by Brus [1], SA in sample data does inflate the sample variance, whether inference is design- or model-based. Table 1 demonstrates this feature by displaying some simple simulation experiment results. Brus defines the sample variance to be that given by the classical formula for SRS RVs no matter what the degree of autocorrelation, and according to this definition, the sample variance is cast as being independent of autocorrelation; but this is not a particularly useful definition. Because, as the spatial statistics literature repeatedly emphasizes, each sampled value in a spatially autocorrelated dataset provides some information about the attribute values of its neighbors, a sample from such a dataset provides less information than would an equal-sized sample of independent observations. Consequently, numerous distinguished statisticians offer methods for estimating an effective sample size, which provides a measure of the effect of SA on the precision of a chosen sample. This paper furnishes additional evidence corroborating this expert opinion. Additionally, knowledge of this quantity can provide useful information in the devising of future georeferenced sampling ventures. In the meantime, merging the design- and model-based perspectives, we proposed the following more comprehensive definition of effective geographic sample size: the number of equivalent iid or SRS geotagged observations that account for variance inflation attributable to SA uncovered by, respectively, the redundant information made explicit in spatial model specifications, or the tessellation stratified RS design effect made explicit in this geographic sampling plan [61].

A classical statistics problem paralleling this SA complication occurs when a sample collection takes place at the same location but at different dates following either a before and after controlled intervention, or a repeated measurements, protocol. A sample collected at n locations according to one of these two protocols has a size n, even though it contains 2n observations, the very essence of effective sample size.
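The repeated measurements parallel can be made concrete with a generic textbook identity rather than the spatial estimators discussed in this paper. For m equal-variance observations with correlation matrix R, the variance of their arithmetic mean is σ² times the sum of all entries of R divided by m², so the equivalent number of independent observations is m² divided by that sum. The sketch below assumes this identity and a hypothetical within-location correlation ρ; the function names are illustrative.

```python
def effective_sample_size(corr):
    """n_eff = m^2 / sum(R) for the mean of m equal-variance observations with correlation matrix R."""
    m = len(corr)
    total = sum(sum(row) for row in corr)
    return m * m / total


def paired_correlation(n_locations, rho):
    """Correlation matrix for two observations per location, correlated at rho within a location only."""
    size = 2 * n_locations
    corr = [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]
    for k in range(n_locations):
        corr[2 * k][2 * k + 1] = rho
        corr[2 * k + 1][2 * k] = rho
    return corr


if __name__ == "__main__":
    for rho in (0.0, 0.5, 1.0):
        # 10 locations observed twice each: 20 observations in total.
        print(rho, effective_sample_size(paired_correlation(10, rho)))
```

With ρ = 1 the 2n = 20 observations are worth exactly n = 10 independent ones, and with ρ = 0 they are worth all 20; intermediate correlations fall between these two limits.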

According to Brus [1], the concept of iid RVs applies only to samples, and not to populations. Again, this is a case of developing a definition to suit one’s own purposes. By definition, any sample is a subset of a population, and if every sample drawn from a given population is iid, then one can consider iid to be a property of that population. A cursory inspection of the mathematical statistics literature reveals that this characterization is a pillar of the CLT. In addition, the spatial structure of a population is important when deciding about which sampling scheme to adopt. SRS is only one of many different forms of spatial sampling, examples of which abound in the literature, and some of which a foregoing section of this paper mentions. SRS frequently performs rather poorly in terms of individually selected samples drawn in realistic situations when compared with competing designs, a comparison well known and consistently true about both the design- and model-based approaches, because of certain SA effects; simulation results reported in this paper corroborate this claim.

The presence of SA in a dataset often motivates a model-based approach to analysis, for both practical and theoretical reasons. Webster and Oliver [20] show that the most efficient sampling pattern is that conducted using a regular hexagonal grid (i.e., tessellation stratified RS following the US EPA EMAP design), which spreads neighboring sample points as far apart geographically as possible. A square grid is almost as efficient. The analysis of such a grid sample, if its locations in the sampled domain are random, may use a design-based procedure, but the organization of spatially autocorrelated data favors a model-based analysis. In particular, SRS from even mildly autocorrelated data can lead to both an inefficient estimate of a regional arithmetic mean and an underestimation of the accompanying regional geographic variance. Simulation experiments summarized in this paper also verify these contentions.

Further simulation experiments demonstrate that the presence of SA does not alter a SRS sampling distribution. Nevertheless, ignoring SA risks failing to recognize the latent variance inflation that it induces. Accordingly, both theoretical and practical considerations frequently weigh against the use of SRS in the collection of data known to possess, or suspected of possessing, a high degree of SA, and in the context of the frequently asserted dichotomy between model- and design-based analysis, this preference implies the use of a model-based analysis. Moreover, as noted in a preceding paragraph, numerical experiments demonstrate that applying SRS to a subregion of a larger geographic landscape can, if the data are spatially autocorrelated, result in an underestimation of the variance, even though this same estimator furnishes an accurate variance estimate when applied to the entire parent geographic landscape under study. Clearly, a need exists for a better appreciation and demystification of differences between design- and model-based inference. With regard to the controversy addressed in this paper, Brus [1] appears to complain more about fundamental design- rather than model-based approaches to spatial sampling, a crucial distinction he himself also previously recognized [14].

A final theme warranting commentary here is the phrase “model assisted estimators” that Brus [1] (especially pp. 37–38) uses, which seems very much akin to, for example, Griffith’s [17] (especially pp. 753–755) “model-informed, design-based” conception. Griffith’s contention [17] maintains that SA plays an important role if a researcher wants to pre-determine sample size with statistical power in mind. This viewpoint reflects Brus’s [1] model-assisted assertion that combines probability sampling and estimators built upon superpopulation models in order to realize a potential increase in the accuracy of design-based estimates. He discloses that the model-assisted approach relates to variance estimators exploiting auxiliary information correlated with the target variable of estimation (e.g., Y), such as SA, which Stehman and Overton [62] link to geographic tessellation stratified RS to secure more representative spatial sample outcomes (i.e., an estimator of a mean with a smaller standard error) in the presence of SA (e.g., how to incorporate unequal inclusion probabilities when hexagonal strata vary in size). Considerable consistency seems to exist here between what were labeled model-informed and model-assisted constructions.

In summary, the planning of a sampling campaign must, if it is to make optimal use of expended resources, employ all available information in designing its sampling scheme. The widespread undertaking of pre-sampling sample size determination exercises confirms that failure to incorporate informative calculations, whose practical usefulness was demonstrated in innumerable previous studies, simply because these calculations fail to satisfy an artificially constructed theoretical standard, can only detract from the effectiveness of these plans. More generally, acknowledging the broader contextualization of this problem, this paper bolsters the defense of spatial statistics/econometrics. More specifically, it rebuts debatable contentions posited by Brus [1], among others, furnishing further clarification about them.