2.1. Approach

Within the NLP research flow specializing in the legal domain, this work positions itself among the downstream tasks (Table 1). Like [10,13], it uses NER to address a real-life issue, but with a greater number of labels, of different data types and frequencies: where [10] dealt with labels of roughly balanced annotation numbers, we deal with some labels expected to annotate a few word sequences and others expected to annotate many such sequences. Another difference is that this study has involved a significant annotation effort: 1706 cases have been subject to 27,703 manual annotations.

Proceedings data are common to different areas of law (or types of litigation). There is basically one set of proceedings data for all civil matters and another one for criminal matters. Criminal court cases are subject to more publicity restrictions than civil ones, are available to the public in smaller numbers and will come last in the French government’s open data program schedule. That is why this research is limited to civil cases.

We built a dataset that, though not claiming to be representative of all areas of law, focuses on three of them: road accidents, commercial leases and banking or finance-related litigation. The underlying assumption is that if the quality of extraction is about the same across these three areas of law, then other areas are likely to yield similar quality.

The research does not intend to devise a new learning algorithm that would deliver a quality higher than that produced by existing ones. Though it does examine which algorithm, among several, applies in which context and delivers the best result for court data, its purpose is more practical: identifying the off-the-shelf algorithm, or set of algorithms, that provides the highest quality for court data. We will not try to optimize further and will keep the default hyperparameter values proposed by our tool.

Court case files are dirtier than most classical NER datasets [17]. Some legal editors have conducted preliminary data cleansing on cases issued by jurisdictions. They then applied their machine-learning experience to court data by pseudonymizing and structuring them, before trying to carry out some more value-added work. Such tasks are off-putting and time-consuming while bringing few scientific lessons. This research therefore seeks to deliver results in one go, directly from raw texts, despite the rather poor quality of their rendition.

2.2. Dataset

For any natural-language processing, a dataset should have sufficient examples to ensure the best balance in the variety of data and language [18]. In our case, the learning of proceedings data should be based on a stock of cases, not only recent cases, and therefore, the learning dataset should be representative of the historically different writing conventions and templates. Our policy was to collect well-distributed cases from the past 15 to 20 years, depending on their level of availability, which varies by jurisdiction. Our dataset is composed of cases from the three jurisdictional degrees and focuses on three law areas (Table 2).

A first batch of cases, all on civil liability matters, comes from three judicial tribunals: 46 from Chambéry, 119 from Saint-Etienne and 149 from Grenoble, for a total of 314. They had been scanned by each tribunal as PDF images, then OCR’d to PDF text by Université Savoie Mont Blanc (USMB) and then converted to TXT files. As these cases have not been pseudonymized, they will not be published in the context of this research.

A second batch was supplied by Doctrine, a French legal technology company. It includes 250 cases on banking law and another 250 on commercial leases. They were randomly selected from Doctrine’s database upon our request and pseudonymized with its proprietary ML-based algorithm. In many cases, parties were not only pseudonymized but actually erased, which damages the intelligibility of the text. USMB and Doctrine are warmly thanked for these corpora.

Three datasets of appellate cases have been downloaded from Légifrance, the portal of Direction de l’Information Légale et Administrative (DILA), the French national gazette editor. These cases have been pseudonymized by DILA and have been subject to no subsequent data cleaning. They are published for having been “selected according to the methods specific to each order of jurisdiction”, so they are not necessarily statistically representative of the procedural paths. The datasets are fairly well distributed geographically among appellate courts. The Court of Cassation (the supreme court) and Légifrance each use their own template for the same case, and each template varies over time. We selected supreme court cases with the help of a page of the Court of Cassation’s website that has been clustering cases since 2014 by area of law, such as road accidents, commercial leases and banking. We downloaded the body of their text from Légifrance, in HTML format, and pasted each into its own TXT file.

Because of legal publicity restrictions and policy variations in the collection and centralization of court cases by supreme courts, the uncertain comprehensiveness of the available corpora makes up the first bias of analysis. French cases raise other issues. Though syntax is generally correct, erratic punctuation, missing spaces and words pseudonymized by error are likely to hinder entity recognition. Many cases are verbose, with redundant arguments or citations, sometimes scattered in different sections. Moreover, court cases are generally not structured. Of appellate cases, 45% do not have an explicit title for all sections [19]. The last chapter, “the outcome” (le dispositif), which describes the operative part, is the only section whose starting point is systematically shown, generally by the words “par ces motifs”.
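Since “par ces motifs” is the one reliable section marker, locating the operative part can be sketched in a few lines. This is a minimal illustration and not part of the study's pipeline: the function name `split_operative_part`, the case-insensitive matching, the whitespace tolerance and the choice of the last occurrence of the marker are all assumptions.

```python
import re
from typing import Optional, Tuple

# Assumption: the marker may appear in any letter case and with irregular
# whitespace (e.g. after OCR), so we match it loosely.
DISPOSITIF_MARKER = re.compile(r"par\s+ces\s+motifs", re.IGNORECASE)


def split_operative_part(text: str) -> Tuple[str, Optional[str]]:
    """Split a case into (body, operative part).

    The operative part is assumed to start at the LAST occurrence of the
    marker; it is None when the marker is absent.
    """
    matches = list(DISPOSITIF_MARKER.finditer(text))
    if not matches:
        return text, None
    start = matches[-1].start()
    return text[:start], text[start:]


# Hypothetical snippet, written for this example only.
sample = "Attendu que la demande est fondée. PAR CES MOTIFS, la cour confirme le jugement."
body, dispositif = split_operative_part(sample)
```

Cases in which the marker is missing or damaged by OCR would return `None` and need a fallback.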

2.3. Labels

Selected labels describe information related to civil proceedings. About half of them are common to the three jurisdictional orders (see Table 3).

Plaintiff, defendant and condemned (“losing”) party are three labels that each represent one party to a litigation. The plaintiff is the party who initiates a procedure, through a so-called assignation or saisine, in a first-instance judgment, or the party who, unsatisfied with the solution of the first-instance judge, lodged an appeal. The defendant is the party seeking confirmation of the first-instance judgment, in an appellate judgment, and we also use that label name in the context of a first-instance judgment to describe the party at whom the introductory act is aimed. One might wonder why we should bother to extract the parties to a trial given that names of natural persons are, or are supposed to be, “occulted” (Art. L111-13 of the code of judicial organization). A first answer is that in the absence, most of the time, of a unique identifier of the case preceding an appellate case, every indirect attribute of a case may help the matching. One name of a legal person (e.g., “BNP Paribas”), one first name (e.g., “Véronique”) or one title (‘Madame’, ‘Maître’) may be enough to identify, among the many first-instance cases rendered on a given day by a given chamber of a given jurisdiction, the case at the origin of a given appellate case. A second answer is to preserve the very intelligibility of the case, as long as cases refer to the parties to a trial by their name, not by their role (essentially plaintiff versus defendant).

The label “date of birth” is proposed as a complementary identifier of a party who is a natural person. It may help disambiguate between different judgments made the same day in the same jurisdiction, when matched with appellate cases to build judicial pathways.

The other date labels define as many judicial milestones. One of these is the date of bankruptcy act. Parties that are legal persons may be subject to acts such as redressement judiciaire or liquidation judiciaire, which may happen during the litigation timespan. Giving this date its own label prevents it from being mistaken for a judicial milestone of the current case and avoids many false positives, especially under the label “date of judgment”. The other dates do refer to the current case. The label “date of introductory act” (date d’assignation) refers to the initial referral to a tribunal by a party. The label “date of judgment” excludes the definition of “date of injunction” (date de référé), a type of ordinance that may be ordered by the same first-instance jurisdiction, which we preferred to annotate with a dedicated label. It is also distinct from the “date of appellate case”, which is issued following an appeal. The “date of expertise” refers to the date when any expert designated by a judgment hands over their report to the judge. The “date of pre-trial” (date de mise en état) marks an update of the litigation case, such as when the case is “joined” with one or several other related cases or when the instruction phase is closed. We would expect that if more than one identifier of a first-instance judgment were to appear in the text of a judgment, a pre-trial date would also appear. A long procedure may involve an assignment, an injunction, the handover of a report by a judicial expert, a first-instance judgment, an appeal, an appellate case, a pre-trial, another appellate case, a final appeal and a final appellate case.

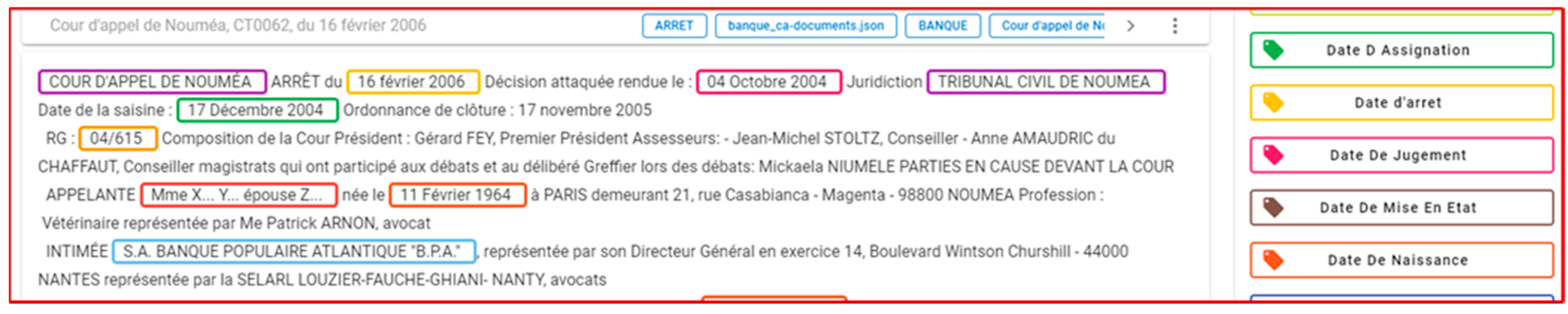

Figure 1 shows an example.

The “date of appeal” had been included before realizing that it had little relevance, insofar as French law states any appeal can be lodged only within the fortnight following the first-instance judgment.

The label “jurisdiction” is defined as the combination of either a tribunal (of first-instance) or a court (of appeal) with a city (in French, a ressort), when it has competence in a given territory. Court of Cassation and Tribunal des Conflits, which have national competence, are also defined as jurisdictions. We added the “chamber” label to cater to large jurisdictions where cases can be rendered by different courtrooms.

We decided to dedicate a label to the “referring jurisdiction” (juridiction de renvoi). The appellate court may hand over the case to a lower jurisdiction or to other magistrates of the same jurisdiction that has been subject to the appeal. As opposed to other annotations of jurisdictions, it may announce an upcoming case and therefore have no date and no chamber associated with it. We believe that this distinction will help reusers separate the past proceedings from the next one in their analysis (see Figure 1).

The label “legal citation” is used to annotate the articles of law invoked by the parties or by the judge, whereas “case-law citation” refers to some case not directly related to the litigation in progress. Far fewer annotations are expected for the latter, since France has a civil-law tradition rather than a common-law system, in which previous court cases play a decisive role in every case.

We chose to use two separate labels for the Id of the appellate case and the Id of the first-instance case. An analysis of their proximity to the dates of appeal and trial judgment may be sufficient to assign a unified label to either type of proceeding; this option will be considered later.

Though ECLI, the European Case Law identifier, could be generated with even greater quality through some regular expression (regexp) algorithm, “ECLI” has been added as a label because it is a case identifier like three other labels (see Table 1).
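The regular-expression alternative mentioned for ECLI can be sketched as follows. This is a hedged illustration, not the authors' method: the pattern assumes the usual five-part ECLI shape (the literal `ECLI`, a two-letter country code, a court code of up to seven characters, a four-digit year and an ordinal of up to 25 alphanumeric characters or dots), and the sample identifier is made up for the example.

```python
import re
from typing import List

# Assumed ECLI shape: ECLI:<country>:<court>:<year>:<ordinal>
ECLI_PATTERN = re.compile(
    r"\bECLI:[A-Z]{2}:[A-Z][A-Z0-9]{0,6}:\d{4}:[A-Z0-9.]{1,25}\b",
    re.IGNORECASE,
)


def find_ecli(text: str) -> List[str]:
    """Return every ECLI-like identifier found in the text, upper-cased."""
    return [match.group(0).upper() for match in ECLI_PATTERN.finditer(text)]


# Illustrative sentence with a hypothetical identifier.
sample = "Arrêt publié sous ECLI:FR:CCASS:2015:C100123, rendu en chambre civile."
identifiers = find_ecli(sample)
```

The trailing word boundary keeps sentence punctuation, such as a final period or comma, out of the match.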

For a tribunal, the outcome consists in setting a type of case or naming the losing party (or parties). For an appellate court, it is often a confirmation or a reversal, but the judge may decide on alternative or supplemental provisions. The outcome of a case is binary only at the level of the supreme court. Three labels are therefore needed to describe the operative part of the case. We introduced “type of case” and “losing party” specifically for tribunals, and “outcome”, applicable to the other two degrees, is a Boolean that basically classifies the case as a confirmation or a reversal.

Our set of labels includes two labels with an amount type: “costs of instance”, annotated only for first-instance cases, and “non-recoverable costs”. The latter represents the amount dedicated to refunding legal fees, mostly paid to lawyers, as distinct from the costs incurred by the proceedings and paid to the jurisdiction, which are fully recoverable by law. Such amounts can be found in cases of every jurisdictional order.

We also adopted “for further account”, a Boolean that, if true, invites the reuser to collect and read previous related cases for a comprehensive understanding of the context. When it is true, the case could be unfit for some reuses, such as predictive justice. Finally, we created the label “law area”, some metadata available only for Court of Cassation cases, to help us conduct analyses on that axis.

Case categories (nature d’affaire civile, or NAC) have not been annotated. They are too seldom mentioned by the judge or too difficult to recognize, so learning them would prove ineffective. Nor have the names of attorneys been extracted, although [20] already studied the extraction of the appellant’s and appellee’s lawyers.

Table 4 shows how manual annotations break down per label.

2.4. Tool

Kairntech is a French startup specializing in AI applied to text. The company is developing Sherpa [21], a natural-language processing (NLP) platform for software developers. It manages both classification and NER. It includes a graphical interface to annotate and a workbench to test different training algorithms. It caters to French, among several other languages. We use the segment (sentence) as the basic learning unit. Sherpa defines as a “dataset” the set of documents or segments that have been subject to at least one manual annotation. It is divided into a training dataset and a test dataset. The application of an experiment to a dataset produces a model, characterized by quality indicators: precision rate, recall rate and f-measure. The precision rate divides the number of correct annotations by that of all returned annotations. The recall rate is the number of correct annotations divided by the number of annotations that should have been returned. The F1 measure is the harmonic mean of precision and recall.
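The three quality indicators defined above can be written out as a short function. This is a minimal sketch: representing an annotation as a `(segment_id, start, end, label)` tuple and counting only exact matches as correct are assumptions, since the matching rule applied by the tool is not described here.

```python
from typing import Iterable, Tuple

Annotation = Tuple[str, int, int, str]  # (segment_id, start, end, label), assumed layout


def precision_recall_f1(
    gold: Iterable[Annotation], predicted: Iterable[Annotation]
) -> Tuple[float, float, float]:
    """Compute precision, recall and F1 from exact-match annotations."""
    gold_set, predicted_set = set(gold), set(predicted)
    correct = len(gold_set & predicted_set)
    # Precision: correct annotations over all returned annotations.
    precision = correct / len(predicted_set) if predicted_set else 0.0
    # Recall: correct annotations over all annotations that should be returned.
    recall = correct / len(gold_set) if gold_set else 0.0
    # F1: harmonic mean of precision and recall.
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Toy annotations, invented for the example.
gold = [("s1", 0, 10, "plaintiff"), ("s1", 20, 30, "defendant"),
        ("s2", 5, 15, "date of judgment"), ("s3", 0, 8, "jurisdiction")]
predicted = [("s1", 0, 10, "plaintiff"), ("s2", 5, 15, "date of judgment"),
             ("s3", 0, 8, "chamber")]
precision, recall, f1 = precision_recall_f1(gold, predicted)
```

In this toy case two of the three returned annotations are correct and two of the four expected ones are found, so precision is 2/3, recall is 1/2 and F1 is 4/7.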

For NER, the workbench proposes to run CRF (conditional random fields), spaCy, Flair or DeLFT. CRF [22] is proposed with five training methods: L-BFGS, L2SGD, PA, AROW and AP. Flair [23] may be used with embeddings, which can be combined: Flair embeddings (contextualized string embeddings), bytecode and transformer embeddings.

DeLFT (deep learning framework for text) is a Keras and TensorFlow framework for text processing, focusing on NER and classification [24]. Flair and DeLFT are both based on recurrent neural networks (RNNs). The DeLFT RNN layer may be followed by a CRF layer. This allows the model to use past and future annotations to set the current annotation [25]. DeLFT can be combined with either ELMo embeddings [26] or BERT embeddings, for English texts, but CamemBERT, its French variant, is not available for DeLFT. We used DeLFT configured with its ELMo embeddings.

The basic experiment uses the CRF algorithm with a training dataset representing 80% of the randomly selected segments in the dataset. Training on the basis of the 80% allows annotations to be projected onto the remaining 20% of segments. The comparison of the manual annotations in this 20% with the projected annotations after training allows for quality indicators to be calculated. We leave this distribution constant in our experimentations.
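The 80/20 random split of segments can be illustrated as follows. This is a sketch under assumptions: the function name `split_segments`, the seed parameter and the rounding of the cut point are illustrative and do not describe how the tool implements its split.

```python
import random
from typing import List, Optional, Sequence, Tuple


def split_segments(
    segments: Sequence[str], train_ratio: float = 0.8, seed: Optional[int] = None
) -> Tuple[List[str], List[str]]:
    """Shuffle the segments and split them into training and test subsets."""
    shuffled = list(segments)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(len(shuffled) * train_ratio))
    return shuffled[:cut], shuffled[cut:]


# Placeholder segment identifiers standing in for annotated sentences.
segments = [f"segment-{i}" for i in range(1000)]
train, test = split_segments(segments, seed=0)
```

Quality indicators are then computed by comparing the manual annotations of `test` with the annotations predicted by the model trained on `train`.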

The tool does not automate cross validation: it is up to the user to multiply runs and to average the results.
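Multiplying runs and averaging by hand amounts to repeated random sub-sampling rather than k-fold cross validation. The sketch below shows one way to do it; `average_runs` and the `run_experiment` callback are hypothetical names, and the scores in the usage example are made-up placeholders, not results from this study.

```python
from statistics import mean, stdev
from typing import Callable, Tuple


def average_runs(
    run_experiment: Callable[[int], float], n_runs: int = 5, base_seed: int = 0
) -> Tuple[float, float]:
    """Run an experiment several times and return (mean, standard deviation).

    `run_experiment` is assumed to take a seed, reshuffle and split the
    dataset, train a model and return its F1 score on the test split.
    """
    scores = [run_experiment(base_seed + i) for i in range(n_runs)]
    spread = stdev(scores) if len(scores) > 1 else 0.0
    return mean(scores), spread


# Placeholder scores keyed by seed, for illustration only.
placeholder_scores = {0: 0.70, 1: 0.80, 2: 0.75}
avg_f1, spread_f1 = average_runs(placeholder_scores.__getitem__, n_runs=3)
```

Reporting the spread alongside the mean shows how much a score depends on the outcome of the train/test split.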

2.5. Conduct of Training

To save ourselves the rental costs of a GPU, we sampled one out of 10 cases from our global dataset representing all three jurisdictional degrees. The sampled dataset resulted in 171 cases, 1136 segments and 2857 manual annotations. Each experiment was run on a reshuffled dataset.
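A one-in-ten sampling of cases can be sketched as below. The exact sampling procedure is not specified in the text, so shuffling and then keeping every tenth case is an assumption, as is the function name `sample_cases`.

```python
import random
from typing import List, Optional, Sequence


def sample_cases(
    case_ids: Sequence[int], step: int = 10, seed: Optional[int] = None
) -> List[int]:
    """Shuffle the case identifiers and keep one out of every `step`."""
    shuffled = list(case_ids)
    random.Random(seed).shuffle(shuffled)
    return shuffled[::step]


# 1706 is the number of annotated cases reported for the full dataset.
case_ids = list(range(1706))
sampled = sample_cases(case_ids, seed=0)
```

With 1706 cases, keeping one in ten yields 171 cases, which matches the size of the sampled dataset reported above.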

We used each algorithm with its default set of hyperparameters. CRF was applied to segments with a maximal size of 1500 characters. Flair was used with the SGD tokenizer. Its learning rate was 0.1, its anneal factor 0.5, batch size 32, patience 3, 100 epochs max, top four layers for embeddings, no storage of embeddings, hidden size 256, 1 LSTM layer, word dropout probability 0.5 and locked dropout probability 0.05. DeLFT used ELMo embeddings. The size of the character embeddings to compute was 25, dropout 0.5, recurrent dropout 0.25, max epochs 50, the optimizer was Adam, learning rate 0.001, clip gradients 0.9, patience 5 and the model type was a bidirectional LSTM followed by a CRF layer. The maximum length of character sequence to compute embeddings was 30, the dimensionality of the character LSTM embeddings output space was 25, and the dimensionality of the word LSTM embeddings output space was 100. The batch size was 20, and the maximum number of checkpoints to keep was 5.

Among the different flavors of CRF, we chose CRF-pa, which seemed to deliver the best quality for a comparable model generation time. We looked at relative performances between labels.

Table 5 shows labels whose number of annotations (support) in the test dataset is below 100. The F1 scores are those of the first run and would prove volatile after other runs. A label such as “case-law citation”, with only nine annotations to reproduce, understandably provides a fragile result, dependent on the outcome of the split between train and test data.

To fine-tune the analyses per jurisdictional degree, law area or label while ensuring statistical representativity, we needed to work on the consolidated dataset. This in turn required us to rent a GPU instance (one NVIDIA T4 Tensor Core GPU, eight vCPUs, 32 GB RAM) to get acceptable training times.