Bayesian Bootstrap in Multiple Frames

Abstract

1. Introduction

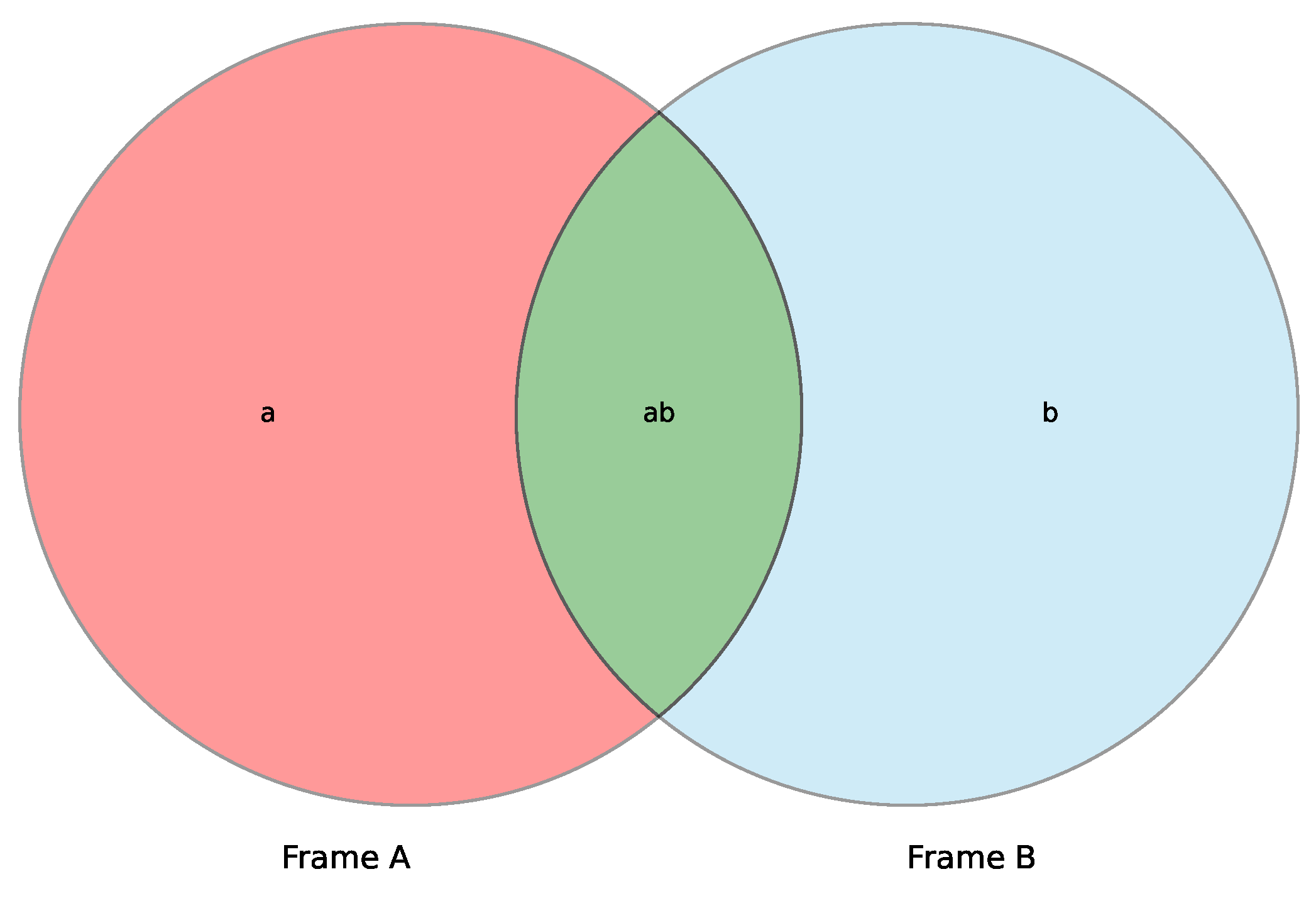

2. Multiple Frames and Variance Estimation

2.1. Variance Estimation for the Multiplicity Estimator

2.2. Frequentist Bootstrap for Variance Estimation

Algorithm 1 Frequentist bootstrap

- for each frame q do
  - for each bootstrap iteration b do
    - for each stratum do
      - (a) generate a synthetic sample of size using SRSWR
      - (b) adjust unit-specific sampling weights using Equation (6)
    - end for
    - estimate population total using the q-th row of Equation (7)
  - end for
  - estimate bootstrap variance of the frame using Equation (8)
- end for
- aggregate frame-specific variances using Equation (9)
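The loop structure of Algorithm 1 can be sketched in Python as follows. This is only an illustration under stated assumptions, not the authors' implementation: Equations (6) to (9) are not reproduced here, so the sketch assumes a rescaling bootstrap in which each stratum of size n_h is resampled with replacement n_h − 1 times and every drawn copy carries the weight w · n_h / (n_h − 1). It also assumes that the weights already include the multiplicity adjustment and that frame-specific variances are aggregated by summation. The function names and the `(y, w)` data layout are hypothetical.

```python
import random
from statistics import variance


def frame_bootstrap_variance(strata, n_boot=500, seed=0):
    """Bootstrap variance of the estimated total for one frame.

    strata: list of strata; each stratum is a list of (y, w) pairs, where w is
    the sampling weight (assumed to be already multiplicity-adjusted).
    """
    rng = random.Random(seed)
    totals = []
    for _ in range(n_boot):                  # bootstrap iterations
        total = 0.0
        for units in strata:                 # strata of this frame
            n_h = len(units)                 # requires n_h >= 2
            m_h = n_h - 1                    # ASSUMED resample size
            # (a) SRSWR of m_h units from the stratum sample
            draws = [rng.randrange(n_h) for _ in range(m_h)]
            # (b) ASSUMED weight adjustment: w * n_h / m_h per drawn copy
            scale = n_h / m_h
            total += sum(scale * units[i][1] * units[i][0] for i in draws)
        totals.append(total)                 # one replicate of the total
    return variance(totals)                  # variance across replicates


def multi_frame_variance(frames, n_boot=500, seed=0):
    """Sum frame-specific variances (ASSUMES independent frame samples)."""
    return sum(
        frame_bootstrap_variance(strata, n_boot, seed + q)
        for q, strata in enumerate(frames)
    )


# Toy data, made up for illustration only.
frame_a = [[(10.0, 4.0), (12.0, 4.0), (9.0, 4.0)], [(30.0, 2.0), (25.0, 2.0)]]
frame_b = [[(11.0, 3.0), (14.0, 3.0), (8.0, 3.0), (10.0, 3.0)]]
print(multi_frame_variance([frame_a, frame_b]))
```

Each frame gets its own seed offset so that the replicates of different frames are not generated from the same random stream.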

3. Bayesian Bootstrap in Multiple Frames

The Proposed Algorithm

Algorithm 2 Bayesian bootstrap

- for each frame q do
  - for each bootstrap iteration do
    - for each stratum do
      - (a) generate a synthetic sample of size using the Pólya Urn model on the original sample
      - (b) construct by concatenating the original sample with (12)
      - (c) a -sized sample is drawn from
      - (d) adjust unit-specific sampling weights using Equation (13)
    - end for
    - estimate population total using the q-th row of Equation (14)
  - end for
  - estimate bootstrap variance of the frame using Equation (15)
- end for
- aggregate frame-specific variances using Equation (16)
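Steps (a) to (d) of Algorithm 2 can be sketched for a single frame as follows. The sample sizes and Equations (12) to (16) are not reproduced here, so several choices below are assumptions rather than the authors' specification: the Pólya urn generates N_h − n_h synthetic units, the resample in step (c) has the original stratum size n_h and is drawn without replacement, and every resampled unit receives the weight N_h / n_h. The function names and the `(y_values, N_h)` layout are hypothetical.

```python
import random
from statistics import variance


def polya_urn_draws(sample, n_extra, rng):
    """Draw n_extra values from a Polya urn initialised with the sample."""
    urn = list(sample)
    drawn = []
    for _ in range(n_extra):
        value = rng.choice(urn)   # pick one ball uniformly at random
        urn.append(value)         # put it back together with one copy
        drawn.append(value)
    return drawn


def bayesian_bootstrap_variance(strata, n_boot=500, seed=0):
    """Bootstrap variance of the estimated total for one frame.

    strata: list of (y_values, N_h) pairs, where N_h is the stratum
    population size (ASSUMED known, with N_h >= len(y_values)).
    """
    rng = random.Random(seed)
    totals = []
    for _ in range(n_boot):
        total = 0.0
        for y_values, pop_size in strata:
            n_h = len(y_values)
            # (a) synthetic sample from the Polya urn (ASSUMED size N_h - n_h)
            synthetic = polya_urn_draws(y_values, pop_size - n_h, rng)
            # (b) synthetic population = original sample + synthetic sample
            population = list(y_values) + synthetic
            # (c) ASSUMED: n_h units drawn without replacement
            resample = rng.sample(population, n_h)
            # (d) ASSUMED weight N_h / n_h for every resampled unit
            total += pop_size / n_h * sum(resample)
        totals.append(total)
    return variance(totals)


# Toy stratum, made up for illustration only.
print(bayesian_bootstrap_variance([([1.0, 4.0, 2.0, 8.0], 40)]))
```

In a multiple-frame setting the same function would be called once per frame, with the frame-specific variances then aggregated as in the last line of the algorithm.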

4. Simulation Study

4.1. Set-Up

- A Gamma distribution with parameters (1.5, 2);

- A Gamma distribution with parameters (2, 4).
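As a rough illustration of how populations from the two Gamma distributions listed above could be generated, the sketch below uses Python's standard library. It assumes that the parameter pairs are (shape, scale); if they are (shape, rate) instead, the second argument should be inverted. The population size of 20,000 and the seeds are arbitrary placeholders, not values taken from the study.

```python
import random
from statistics import mean


def draw_gamma_population(shape, scale, size, seed=0):
    """Generate `size` values from a Gamma(shape, scale) distribution."""
    rng = random.Random(seed)
    # random.gammavariate takes the shape first and the scale second
    return [rng.gammavariate(shape, scale) for _ in range(size)]


# ASSUMED (shape, scale) reading of the parameters (1.5, 2) and (2, 4)
pop_1 = draw_gamma_population(1.5, 2.0, size=20_000, seed=1)
pop_2 = draw_gamma_population(2.0, 4.0, size=20_000, seed=2)

# Under this reading the theoretical means are shape * scale, i.e. 3 and 8
print(mean(pop_1), mean(pop_2))
```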

4.2. Main Results

5. Case Study

- by simple random sampling in each stratum:

- by simple random sampling.

6. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Frame A | Frame B | Frame C |
|---|---|---|
| a(A) | b(B) | c(C) |
| ab(A) | ab(B) | ac(C) |
| ac(A) | bc(B) | bc(C) |
| abc(A) | abc(B) | abc(C) |

| | RB (BB) | RB (FB) | CV (BB) | CV (FB) |
|---|---|---|---|---|
| 0.05 | −8.328 | −6.198 | 0.288 | 0.279 |
| 0.15 | −7.575 | −6.459 | 0.173 | 0.168 |
| 0.40 | −13.828 | −11.546 | 0.166 | 0.150 |

| | RB (BB) | RB (FB) | CV (BB) | CV (FB) |
|---|---|---|---|---|
| 0.05 | −1.216 | −3.541 | 0.316 | 0.312 |
| 0.15 | −0.217 | −0.095 | 0.196 | 0.181 |
| 0.40 | −7.141 | −5.935 | 0.137 | 0.130 |

| | RB (BB) | RB (FB) | CV (BB) | CV (FB) |
|---|---|---|---|---|
| 0.05 | −21.996 | 62.258 | 0.375 | 1.088 |
| 0.15 | −12.827 | 56.689 | 0.236 | 0.755 |
| 0.40 | −12.849 | 49.886 | 0.174 | 0.605 |

| | RB (BB) | RB (FB) | CV (BB) | CV (FB) |
|---|---|---|---|---|
| 0.05 | −14.944 | 74.108 | 0.432 | 1.211 |
| 0.15 | −2.479 | 68.636 | 0.266 | 0.848 |
| 0.40 | −2.010 | 58.881 | 0.159 | 0.688 |

| Variable | FB | BB | (FB − BB)/FB |
|---|---|---|---|
| Feeding | 348.24 | 280.12 | 19.56% |
| Clothing | 6.33 | 5.40 | 14.74% |
| Leisure | 2.50 | 1.88 | 24.52% |
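The last column of the table above is the relative reduction (FB − BB)/FB. A minimal sketch of that calculation, using the rounded FB and BB entries from the table, is shown below; the function name is hypothetical.

```python
def relative_reduction(fb, bb):
    """Relative reduction of BB with respect to FB: (FB - BB) / FB."""
    return (fb - bb) / fb


# Rounded FB and BB entries copied from the table
table = {
    "Feeding": (348.24, 280.12),
    "Clothing": (6.33, 5.40),
    "Leisure": (2.50, 1.88),
}

for name, (fb, bb) in table.items():
    print(f"{name}: {100 * relative_reduction(fb, bb):.2f}%")
```

With the rounded entries, the Feeding row reproduces the published 19.56%, while the Clothing and Leisure rows come out at roughly 14.7% and 24.8%. The small differences from the published column are presumably because it was computed from unrounded values.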

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cocchi, D.; Marchi, L.; Ievoli, R. Bayesian Bootstrap in Multiple Frames. Stats 2022, 5, 561-571. https://doi.org/10.3390/stats5020034
