1. Introduction

Normality plays an important role in statistical analysis, and numerous methods for normality testing have been presented in the literature. Koziol [1] and Slate [2] used the properties of the normal distribution function to assess multivariate normality. Romeu and Ozturk [3] checked normality using a class of goodness-of-fit tests, and this kind of method was also discussed in [4,5]. Various statistics have also been used in recent years, such as the Cramér-von Mises (CM) statistic [5], skewness and kurtosis [6], sample entropy [7], Shapiro–Wilk’s W statistic [8] and the Kolmogorov-Smirnov (KS) statistic (see also [9,10,11]).

Many studies of the aforementioned statistics are based on univariate normality, whereas the practical research we concentrate on concerns multivariate normality. The conclusions therefore need to be generalized from the univariate to the multivariate case, which is common practice in multivariate normality testing when useful univariate statistics are adopted. Projection methods such as principal component analysis (PCA) can be exploited to achieve this, as described in [8,12]. PCA projects a high-dimensional dataset onto several lower-dimensional, mutually orthogonal directions. Under normality, the uncorrelated principal components are independent, so the statistical tests in each direction can be combined into an overall test for multivariate normality, using the fact that the joint probability distribution of independent variables is the product of their marginal distributions. With the help of these orthogonal projections, the dimension can be reduced and the computation made more efficient.

In this paper, the Jarque–Bera statistic, a combination of skewness and kurtosis, is investigated to test normality in each principal direction, rather than the two statistics separately, as in [8]. A new kind of statistic is then constructed to test high-dimensional normality. The performance of the proposed method and its empirical power are illustrated using high-dimensional simulated data.

This paper is organized as follows. Section 2 provides the theory of principal component analysis and gives the methodology of statistical inference for multivariate normality. In Section 3, simulated examples of normal and non-normal data are used to illustrate the efficiency of our proposed method. Two real examples are then investigated in Section 4 to verify the method’s effectiveness.

2. High-Dimensional Normality Test Based on PC-Type JB Statistic

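For observed data $X = (x_1, \dots, x_n)^\top \in \mathbb{R}^{n \times p}$ with sample size $n$ and dimension $p$, principal component analysis reduces the dimension of the $p$-variate random vector $x$ through linear combinations, searching for the linear combinations with the largest spread among the observed values of $x$, i.e., the largest variances. Specifically, it searches for the orthogonal directions $w_1, \dots, w_p$, which satisfy

$$w_1 = \arg\max_{\|w\| = 1} \operatorname{Var}(w^\top x), \qquad w_k = \arg\max_{\|w\| = 1,\ w \perp w_1, \dots, w_{k-1}} \operatorname{Var}(w^\top x), \quad k = 2, \dots, p.$$

Denote by $\Sigma$ the covariance matrix of $x$. The eigenvalues $\lambda_1 \ge \dots \ge \lambda_p$ and the principal directions $w_1, \dots, w_p$ can be obtained from the spectral decomposition $\Sigma = W \Lambda W^\top$, where $W = (w_1, \dots, w_p)$ and $\Lambda = \operatorname{diag}(\lambda_1, \dots, \lambda_p)$. The observed data can therefore be projected onto the resulting lower-dimensional space by $Y = XW$, which gives the projected data matrix $Y = (y_1, \dots, y_p)$.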
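The projection step described above can be sketched in a few lines of standard-library Python. This is only an illustration under stated assumptions: the function names (`covariance_matrix`, `jacobi_eigh`, `pca_project`) and the default cumulative-proportion threshold of 0.9 are hypothetical, and the Jacobi rotation method is used here simply to keep the example dependency-free; a linear-algebra library routine would normally perform the spectral decomposition.

```python
import math


def covariance_matrix(rows):
    """Sample covariance (divisor n - 1) of an n x p data matrix given as a list of rows."""
    n, p = len(rows), len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(p)]
    centered = [[row[j] - means[j] for j in range(p)] for row in rows]
    cov = [[sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(p)]
           for a in range(p)]
    return cov, centered


def jacobi_eigh(matrix, tol=1e-12, max_sweeps=100):
    """Eigenvalues and eigenvectors (columns of v) of a symmetric matrix via Jacobi rotations."""
    n = len(matrix)
    a = [row[:] for row in matrix]
    v = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.hypot(theta, 1.0))
                c = 1.0 / math.hypot(t, 1.0)
                s = t * c
                for m in (a, v):  # rotate columns p and q of A and of V
                    for k in range(n):
                        mp, mq = m[k][p], m[k][q]
                        m[k][p], m[k][q] = c * mp - s * mq, s * mp + c * mq
                for k in range(n):  # rotate rows p and q of A
                    ap, aq = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * ap - s * aq, s * ap + c * aq
    return [a[i][i] for i in range(n)], v


def pca_project(rows, proportion=0.9):
    """Project centered data onto the leading directions whose eigenvalues
    reach the given cumulative proportion of total variance (assumed rule)."""
    cov, centered = covariance_matrix(rows)
    eigvals, eigvecs = jacobi_eigh(cov)
    order = sorted(range(len(eigvals)), key=lambda j: eigvals[j], reverse=True)
    total = sum(eigvals)
    cumulative, r = 0.0, 0
    for j in order:
        cumulative += eigvals[j]
        r += 1
        if cumulative / total >= proportion:
            break
    kept = order[:r]
    scores = [[sum(row[k] * eigvecs[k][j] for k in range(len(row))) for j in kept]
              for row in centered]
    return [eigvals[j] for j in kept], scores
```

Calling `pca_project(rows)` on a list of observation rows returns the retained eigenvalues and the matrix of projected scores, one column per retained direction.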

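For each projected column $y_j = (y_{1j}, \dots, y_{nj})^\top$, the skewness and kurtosis can be calculated by

$$S_j = \frac{\frac{1}{n}\sum_{i=1}^{n} (y_{ij} - \bar{y}_j)^3}{\left[\frac{1}{n}\sum_{i=1}^{n} (y_{ij} - \bar{y}_j)^2\right]^{3/2}}, \qquad K_j = \frac{\frac{1}{n}\sum_{i=1}^{n} (y_{ij} - \bar{y}_j)^4}{\left[\frac{1}{n}\sum_{i=1}^{n} (y_{ij} - \bar{y}_j)^2\right]^{2}},$$

where $\bar{y}_j = \frac{1}{n}\sum_{i=1}^{n} y_{ij}$ stands for the sample mean. Then, the univariate JB statistic can be given by

$$\mathrm{JB}_j = n\left(\frac{S_j^2}{6} + \frac{(K_j - 3)^2}{24}\right).$$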
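As a small worked example of the univariate step, the sketch below computes the moment-based skewness, kurtosis, and the classical Jarque–Bera statistic for one column of values. The function name `jarque_bera` is hypothetical, and the formulas are the standard textbook definitions with divisor n, assumed here to match the ones intended in the text.

```python
def jarque_bera(values):
    """Return (skewness, kurtosis, JB) using central moments with divisor n."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n  # second central moment
    m3 = sum((v - mean) ** 3 for v in values) / n  # third central moment
    m4 = sum((v - mean) ** 4 for v in values) / n  # fourth central moment
    skewness = m3 / m2 ** 1.5
    kurtosis = m4 / m2 ** 2
    jb = n * (skewness ** 2 / 6.0 + (kurtosis - 3.0) ** 2 / 24.0)
    return skewness, kurtosis, jb


# A symmetric toy sample: skewness is zero, so JB is driven by kurtosis alone.
skew, kurt, jb = jarque_bera([1.0, 2.0, 3.0, 4.0, 5.0])
```

For this toy sample the kurtosis is 1.7, well below the normal value of 3, which is what makes the statistic non-zero despite the perfect symmetry.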

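To test the normality of the high-dimensional data $X$, define

$$\mathrm{JB}_{\mathrm{PC}} = \sum_{j=1}^{r} \mathrm{JB}_j,$$

where $\mathrm{JB}_j$ is the univariate JB statistic in the $j$-th principal direction and $r$ stands for the number of principal components ultimately selected, which satisfies

$$r = \min\left\{k : \frac{\sum_{j=1}^{k} \lambda_j}{\sum_{j=1}^{p} \lambda_j} \ge s\right\},$$

with $\lambda_1 \ge \dots \ge \lambda_p$ the eigenvalues of the covariance matrix and $s$ the required cumulative proportion.

Consider the hypotheses

$$H_0: X \text{ follows a multivariate normal distribution} \quad \text{versus} \quad H_1: X \text{ does not}.$$

Under the null hypothesis $H_0$, each JB statistic is asymptotically $\chi^2(2)$ distributed [13], and since the principal components are independent under normality, $\mathrm{JB}_{\mathrm{PC}}$ is asymptotically $\chi^2(2r)$ distributed. For a given significance level $\alpha$, the critical region is

$$\left\{\mathrm{JB}_{\mathrm{PC}} > \chi^2_{1-\alpha}(2r)\right\},$$

where $\chi^2_{1-\alpha}(2r)$ denotes the $(1-\alpha)$ quantile of the $\chi^2(2r)$ distribution.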

Upon , an exact critical region can be deduced, and therefore the testing can be implemented based on these critical regions.
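The decision rule can be illustrated with a short sketch, assuming the combined statistic is the sum of the univariate JB values over the r selected directions and is compared against a chi-square distribution with 2r degrees of freedom. The function names are hypothetical. Because the degrees of freedom are even, the chi-square survival function has a closed form, so no statistics library is needed.

```python
import math


def chi2_sf_even(x, df):
    """P(X > x) for a chi-square variable with an even number of degrees of freedom."""
    if df <= 0 or df % 2:
        raise ValueError("df must be a positive even integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    term, total = 1.0, 1.0
    for k in range(1, df // 2):  # sum_{k=0}^{df/2 - 1} (x/2)^k / k!
        term *= half / k
        total += term
    return math.exp(-half) * total


def pc_jb_test(jb_values, alpha=0.05):
    """Sum the per-direction JB statistics and test against chi-square(2r)."""
    statistic = sum(jb_values)
    p_value = chi2_sf_even(statistic, 2 * len(jb_values))
    return statistic, p_value, p_value < alpha


# Three hypothetical per-direction JB values (illustrative numbers only).
statistic, p_value, rejected = pc_jb_test([0.5, 1.0, 0.7])
```

Rejecting when the p-value falls below the significance level is equivalent to the statistic exceeding the corresponding upper quantile of the chi-square distribution.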

The performance of the proposed PC-type Jarque–Bera test depends on (1) whether the orthogonal axes are chosen according to the cumulative proportion; and (2) whether the hypothesis is rejected or accepted. In terms of the well-known power function, the error will be:

where $\alpha$ is the probability of a Type-I error and $\beta$ is the probability of a Type-II error. Therefore, we can see that the power is a non-decreasing function of the parameter $s$.

3. Numerical Simulations

To evaluate the performance of the aforementioned testing, some simulation experiments are carried out in this section.

3.1. Normally Distributed Data

A series of normally distributed datasets was investigated with different data dimensions p and different sample sizes n. Let simulated data matrix , where . Consider two kinds of covariance matrices:

- (I)

;

- (II)

.

Define the empirical power as the ratio of the number of rejected samples to the number of samples that obey the normal distribution.
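The rejection ratio just defined can be estimated by simulation. The sketch below does this for the univariate JB test on standard normal samples, which is an assumed simplification of the multivariate experiments; the function names, sample size, and replication count are illustrative choices, not the settings used for the tables.

```python
import math
import random


def jb_statistic(values):
    """Classical Jarque-Bera statistic from central moments with divisor n."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    return n * ((m3 / m2 ** 1.5) ** 2 / 6.0 + (m4 / m2 ** 2 - 3.0) ** 2 / 24.0)


def empirical_rejection_rate(n, replications, alpha, rng):
    """Fraction of normal samples rejected at level alpha."""
    critical = -2.0 * math.log(alpha)  # upper-alpha quantile of chi-square with 2 df
    rejected = 0
    for _ in range(replications):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if jb_statistic(sample) > critical:
            rejected += 1
    return rejected / replications


rate = empirical_rejection_rate(n=100, replications=500, alpha=0.05,
                                rng=random.Random(0))
```

For normal data a well-calibrated test should give a rate near the chosen significance level, which is the criterion used to compare the methods in this subsection.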

Tables 1–6 report the empirical power of the PC-type JB test compared with the -type statistics , [14], the -type statistics , [14], Mardia’s method [15], Srivastava’s method [16], Kazuyuki’s method , [16] and Kazuyuki’s method [17] in these two cases with significance levels $\alpha$ = 0.01, 0.05 and 0.10, respectively.

From the tables above we can conclude that, in the case of normal data, the empirical power of is small and stable whenever is large or small. Although the empirical power of each statistic converges to the given significance level as n increases, the performance of (especially when = 0.1), , , and is not as good as p increases. Besides, it is noticed that , and are inapplicable to whereas still works well. For all six tables, the numbers in bold represent the empirical power that is closest to the significance level among the eleven statistics in each situation.

3.2. Non-Normally Distributed Data

In this part, non-normal datasets are simulated to evaluate the performance of the proposed method according to its empirical power. The empirical power is defined from the number of accepted samples and the number of samples that do not obey the normal distribution, i.e., as the proportion of non-normal samples that are rejected. The performance is evaluated on three datasets as follows:

- (III) every variable in is centered and independently and identically distributed as .

- (IV) every variable in is centered and independently and identically distributed as .

- (V) the first variables in are from the distribution, while the last variables are independently and identically distributed as , where stands for the integer part of .
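To make the mixed design of the last case concrete, the sketch below builds a dataset whose first columns are standard normal and whose remaining columns are centered non-normal variables, then checks each column with the univariate JB test. The centered exponential distribution, the split point, and all function names are assumptions chosen for illustration.

```python
import math
import random


def jb_p_value(values):
    """Asymptotic p-value of the Jarque-Bera test (chi-square with 2 df)."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    jb = n * ((m3 / m2 ** 1.5) ** 2 / 6.0 + (m4 / m2 ** 2 - 3.0) ** 2 / 24.0)
    return math.exp(-jb / 2.0)  # survival function of chi-square(2)


def mixed_dataset(n, p, n_normal, rng):
    """n rows; first n_normal columns N(0, 1), the rest centered Exp(1) (assumed)."""
    rows = []
    for _ in range(n):
        normal_part = [rng.gauss(0.0, 1.0) for _ in range(n_normal)]
        skewed_part = [rng.expovariate(1.0) - 1.0 for _ in range(p - n_normal)]
        rows.append(normal_part + skewed_part)
    return rows


rng = random.Random(1)
data = mixed_dataset(n=500, p=4, n_normal=2, rng=rng)
p_values = [jb_p_value([row[j] for row in data]) for j in range(4)]
```

With a few hundred observations, the skewed columns typically produce p-values that are numerically indistinguishable from zero, so even a partially non-normal dataset should be rejected by a test that aggregates directional JB statistics.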

The performance of

compared with the

-type statistics

,

[

14],

-type statistics

,

[

14], Mardia’s statistics

,

[

15], Srivastava’s statistics

,

[

16], Kazuyuki’s statistic

[

17] and Rie’s statistic

[

18] are illustrated in

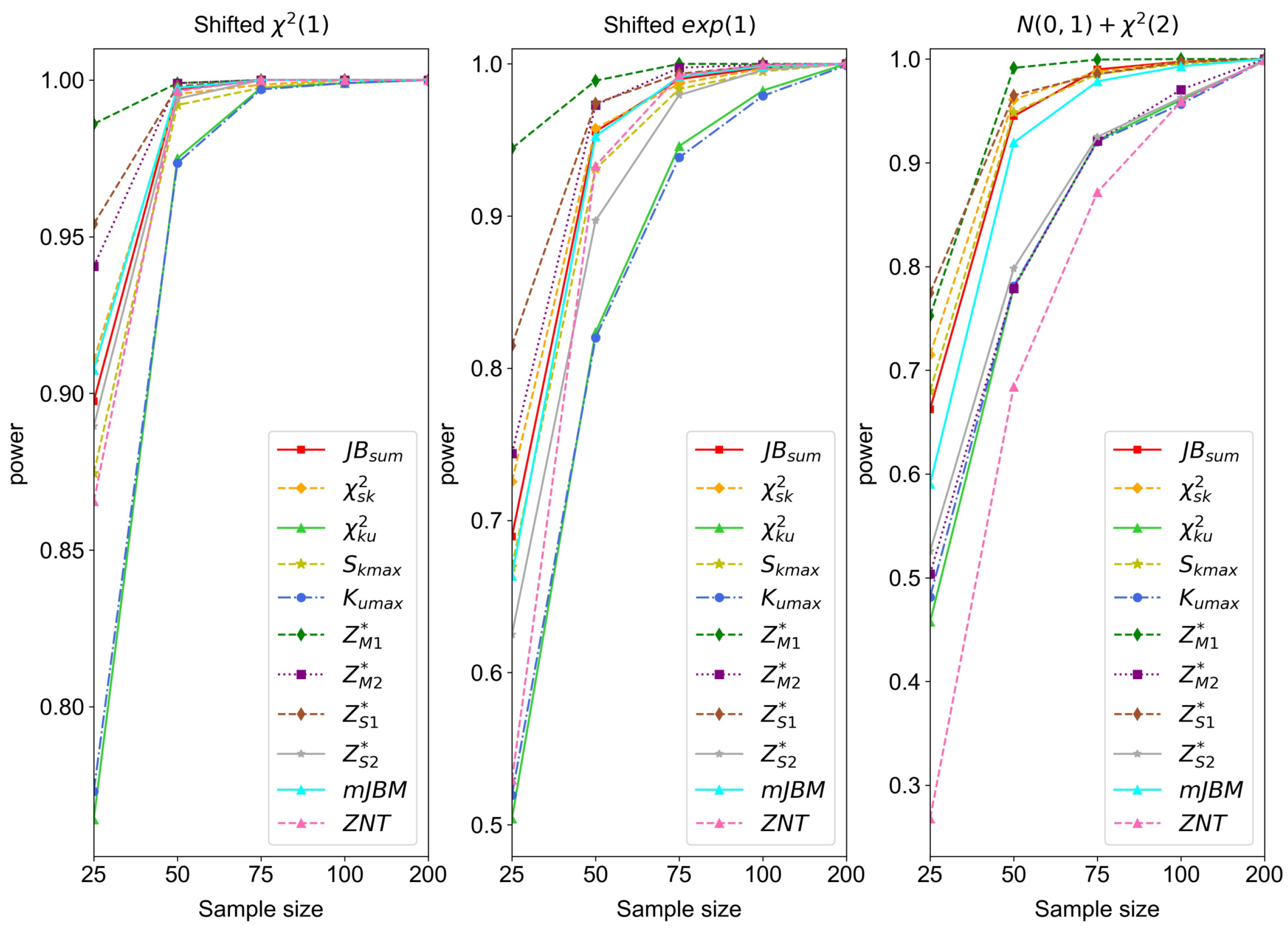

Figure 1,

Figure 2,

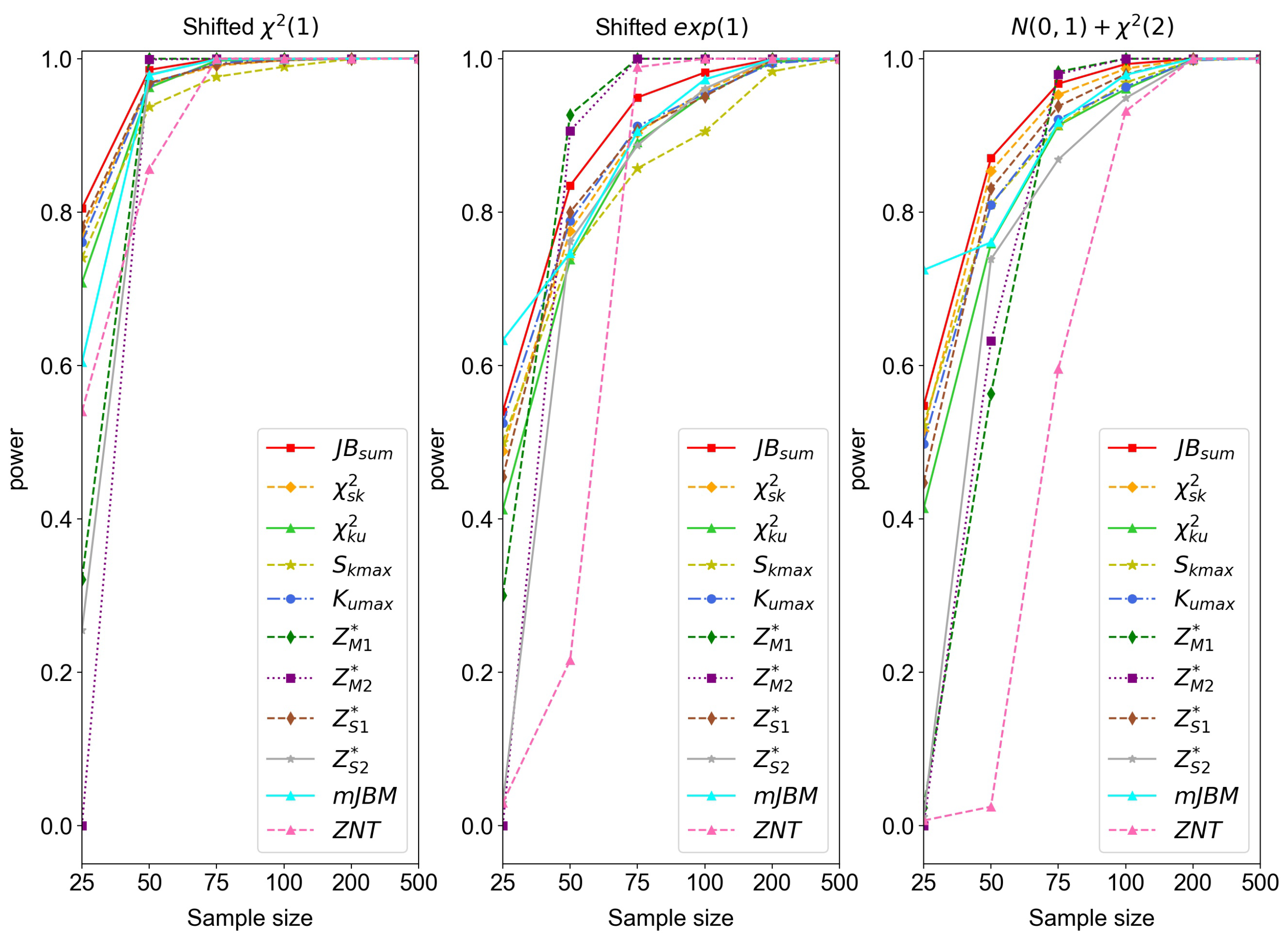

Figure 3,

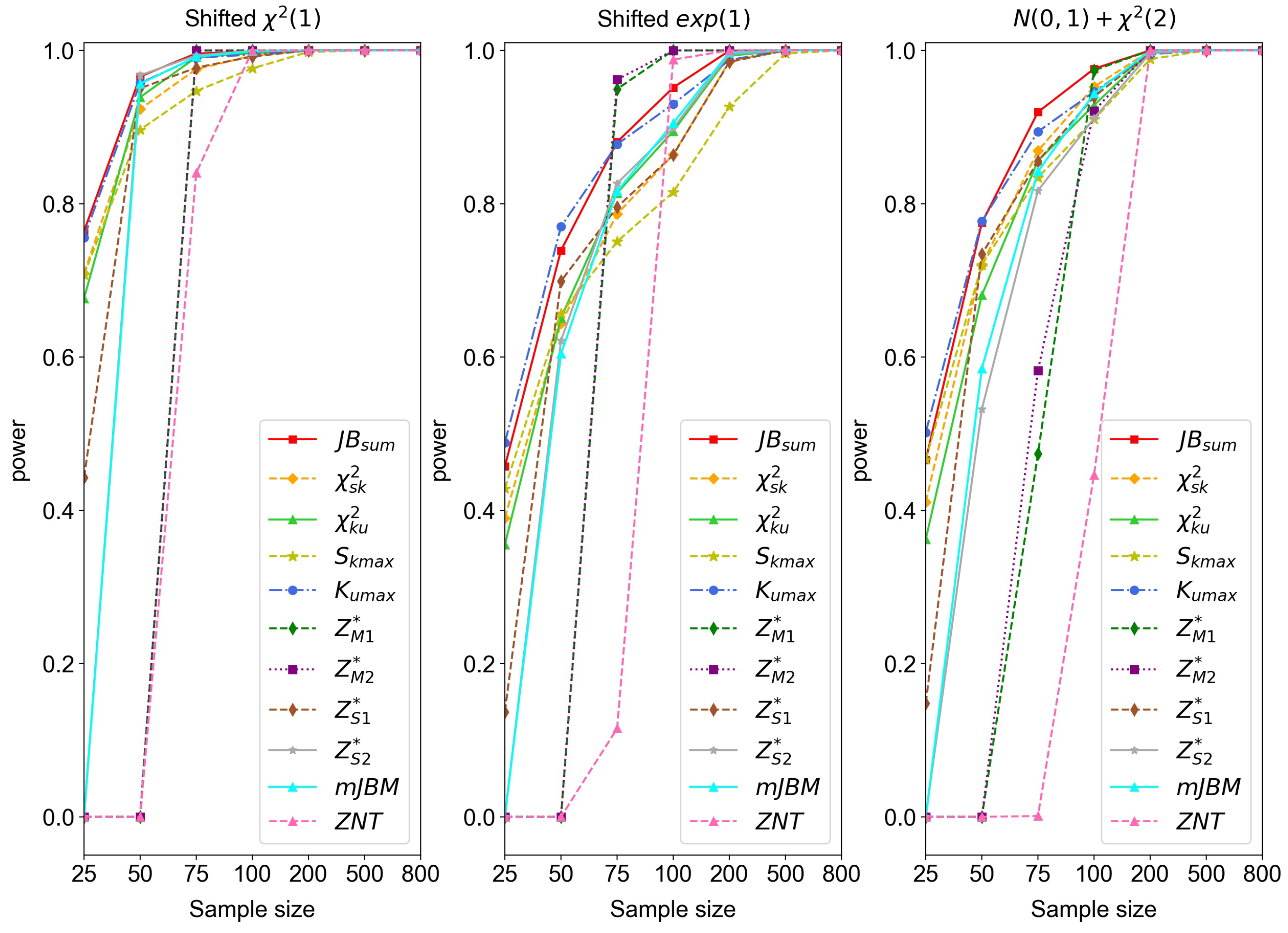

Figure 4 and

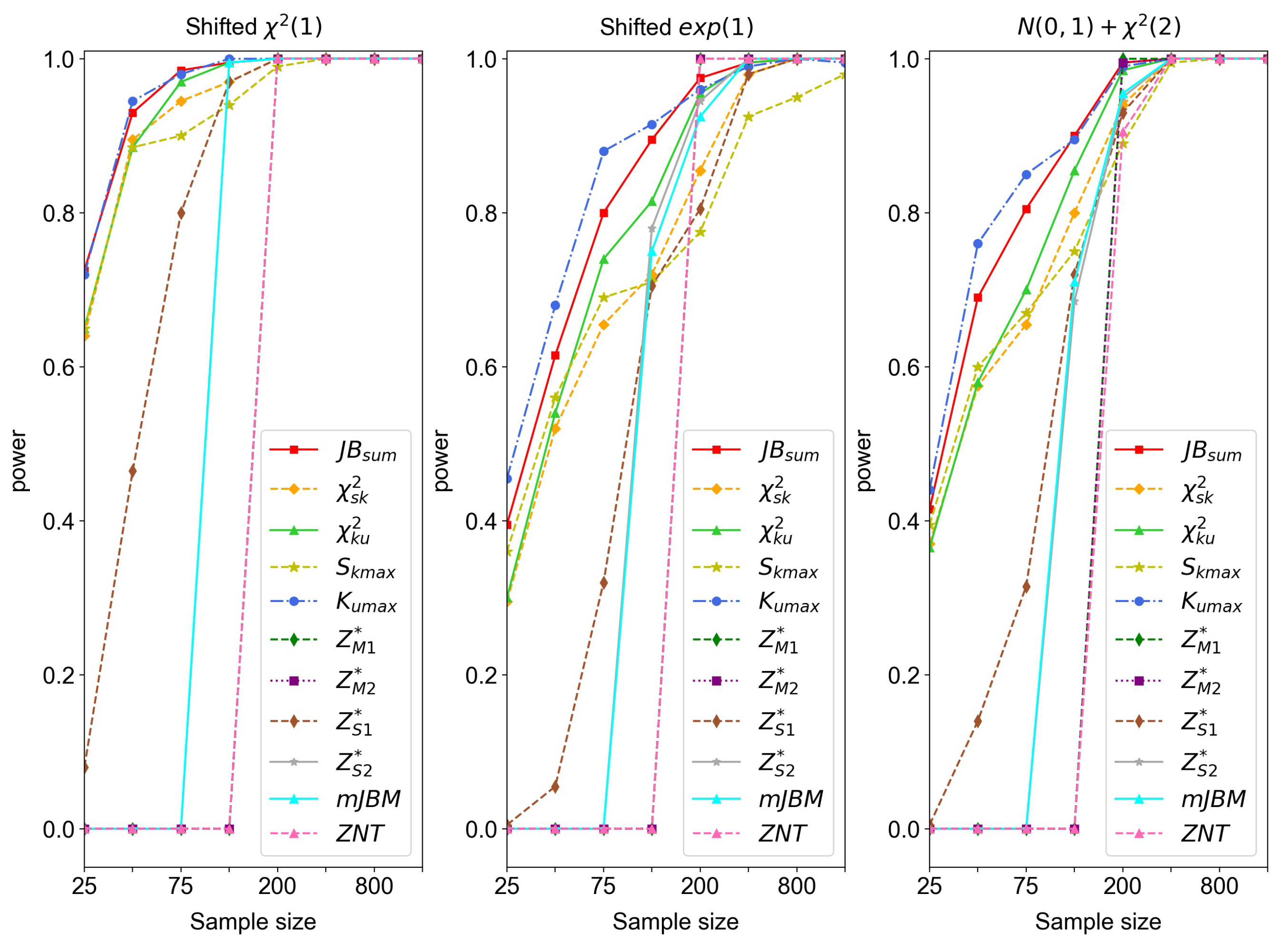

Figure 5. Since

,

, and

are based on the sum of

, we call them

-type.

and

come from the maximum of

, and thus we call them

-type.

All of these methods are studied in 2000 simulated data.

Figures 1–5 compare the empirical power for different dimensions p and various sample sizes n.

- (1) Figure 1 indicates that in the case of , ’s performance is best in all three cases. Though performs well in Case I and Case II, it is not as good in Case III. Comparatively, , and perform similarly well and better than and .

- (2) In the case of , as in Figure 2, although and perform better than in Case II, they do not maintain stable results like in Case III. In fact, ’s performance is generally better than the other methods mentioned here among all three cases.

- (3) In Figure 3, where , ’s performance is best among others except and . As in Figure 2, and are unstable in Case III when p is close to n. This phenomenon can also be seen in . Combining the information shown in Figure 2, we can see that , , and are not as stable as .

- (4) With the increase in dimension, as seen in Figure 4, and no longer perform as well as before, and is still not stable enough when n is close to p. Although ’s performance is better than ’s at first, it is surpassed by the latter when .

- (5) In Figure 5, as in , the power of is initially higher than , and is eventually surpassed by . Except for , ’s performance is the best.

From the results above, we may conclude that the proposed statistic performs well compared to the other statistics, in that its empirical power is relatively higher and the corresponding simulation results are more stable. Thus, it can be used to test the non-normality of low- or high-dimensional data effectively.

4. Two Real Examples

In this section, we investigated two real examples to illustrate the performance of our proposed method compared with the nine aforementioned existing methods.

4.1. Spectf Heart Data Example

The SPECTF heart dataset [19] describes the diagnosis of cardiac single-photon emission computed tomography (SPECT) images, with each patient classified into one of two categories: normal and abnormal. The data contain 267 instances, each belonging to a patient, along with 44 continuous feature patterns summarized from the original SPECT images. The remaining attribute is a binary variable that indicates the diagnosis of each patient, with 0 for normal and 1 for abnormal.

In this dataset, we simultaneously evaluate the normality of the whole dataset and of each class within it. The p-value of each method mentioned above is shown in Table 7. The highest p-value of each relatively normal dataset and the lowest p-value of each relatively non-normal dataset are in bold.

Let describe the whole dataset, and let and denote the normal class dataset and the abnormal class dataset, respectively. We calculate the p-values of our PC-type statistic, as well as of the -type and -type statistics and the other methods mentioned in [16,17], for these three datasets. Since the p-values of all ten statistics for data and are very close to 0, which indicates a non-normal distribution of the whole dataset and of the abnormal dataset, we do not report them here.

We may see from Table 7 that the p-values corresponding to differ somewhat from those of the former two sets, in that the p-values of , and depart from 0. These relatively high p-values motivate us to investigate the normality of the normal class of the SPECTF heart data in more detail, by selecting variables with various degrees of normality.

In this normal category, we extract some variables and construct a new dataset from several experiments. The selected variables included in are ∼ ∼ , ∼ ∼ , and ∼ . We then compute the p-values of this dataset, and the results are shown in Table 7. It can be seen that all normality testing methods have a relatively high p-value, which demonstrates the multivariate normality of set . For comparison, we constructed another two datasets, and , which consist of several verified normal variables and non-normal variables, respectively. Specifically, contains the variables ∼ ∼ , ∼ and , while contains variables ∼ and . Table 7 shows the results for these two sets. This time, the p-values of the ten methods are no longer as high as before, meaning that our method performs well in assessing the normality of normal and non-normal data.

4.2. Body Data Example

In this part, we analyze the normality of the body data investigated in [14] to show the consistency of our method with other existing methods and earlier conclusions. This dataset contains 100 human individuals, and each individual has 12 measurements of the human body (see [14] for details). As before, the p-values of the PC-type statistic and of the -type, -type, and Kazuyuki’s statistics are computed.

Let describe the whole dataset; its multivariate normality can be investigated through the resulting p-values of each method shown in Table 7. Since all the p-values approach 0, we may conclude that this dataset contains non-normal data. Following the discussion in [14], we also investigate six other datasets to show the validity of our proposed method and to compare it with other methods. For convenience, we denote , , , , , and . From Table 8, we can conclude that the normality testing results of our proposed PC-type statistic are nearly the same as those of the -type statistics, the -type statistics, and Kazuyuki’s methods. Since , , , and have a multivariate normal distribution, whereas and have a non-normal distribution [14], our method is closer to the truth in the sense of relatively higher p-values in multivariate normal situations and lower p-values in non-normal situations. As in Table 7, the highest p-value of each normal dataset and the lowest p-value of each non-normal dataset are in bold.

This phenomenon indicates that our proposed PC-type statistic constitutes an effective way of testing normality both in normal data and non-normal data, with more stable testing results.

5. Conclusions

The purpose of this paper is to use a JB-type testing method to test high-dimensional normality. The proposed statistic generalizes the JB statistic to test normality based on the dimension reduction performed by PCA.

Through simulated experiments, we find that, in both low and high dimensions, the proposed statistic performs well in testing normal and non-normal data and is more stable than many of the compared methods. Therefore, it can be used to test normality effectively.

From the two real examples, we can also see that our proposed method gives more stable results when testing the normality of real datasets and tends to detect the true normality from the perspective of p-values.

Author Contributions

Data curation, Y.S.; Methodology, X.Z.; Project administration, X.Z.; Software, Y.S.; Supervision, X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by National Natural Science Foundation of China (No. 11971214, 81960309), sponsored by the Scientific Research Foundation for the Returned Overseas Chinese Scholars, Ministry of Education of China, and supported by Cooperation Project of Chunhui Plan of the Ministry of Education of China 2018.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Acknowledgments

The authors would also like to thank the Editor-in-Chief and the referees for their suggestions to improve the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Koziol, J.A. On assessing multivariate normality. J. R. Stat. Soc. 1983, 45, 358–361.

- Slate, E.H. Assessing multivariate nonnormality using univariate distributions. Biometrika 1999, 86, 191–202.

- Romeu, J.L.; Ozturk, A. A comparative study of goodness-of-fit tests for multivariate normality. J. Multivar. Anal. 1993, 46, 309–334.

- Székely, G.J.; Rizzo, M.L. A new test for multivariate normality. J. Multivar. Anal. 2005, 93, 58–80.

- Chiu, S.N.; Liu, K.I. Generalized Cramér-von Mises goodness-of-fit tests for multivariate distributions. Comput. Stat. Data Anal. 2009, 53, 3817–3834.

- Small, N.J.H. Marginal skewness and kurtosis in testing multivariate normality. J. R. Stat. Soc. Ser. C (Appl. Stat.) 1980, 29, 85–87.

- Zhu, L.-X.; Wong, H.L.; Fang, K.-T. A test for multivariate normality based on sample entropy and projection pursuit. J. Stat. Plan. Inference 1995, 45, 373–385.

- Liang, J.; Tang, M.-L.; Chan, P.S. A generalized Shapiro-Wilk W statistic for testing high-dimensional normality. Comput. Stat. Data Anal. 2009, 53, 3883–3891.

- Doornik, J.A.; Hansen, H. An omnibus test for univariate and multivariate normality. Oxf. Bull. Econ. Stat. 2008, 70, 927–939.

- Horswell, R.L.; Looney, S.W. A comparison of tests for multivariate normality that are based on measures of multivariate skewness and kurtosis. J. Stat. Comput. Simul. 1992, 42, 21–38.

- Tenreiro, C. An affine invariant multiple test procedure for assessing multivariate normality. Comput. Stat. Data Anal. 2011, 55, 1980–1992.

- Liang, J.; Li, R.; Fang, H.; Fang, K.-T. Testing multinormality based on low-dimensional projection. J. Stat. Plan. Inference 2000, 86, 129–141.

- Jönsson, K. A robust test for multivariate normality. Econ. Lett. 2011, 113, 199–201.

- Liang, J.; Tang, M.-L.; Zhao, X. Testing high-dimensional normality based on classical skewness and kurtosis with a possible small sample size. Commun. Stat. Theory Methods 2019, 48, 5719–5732.

- Mardia, K.V. Applications of some measures of multivariate skewness and kurtosis in testing normality and robustness studies. Sankhyā Indian J. Stat. Ser. B 1974, 36, 115–128.

- Kazuyuki, K.; Naoya, O.; Takashi, S. On Jarque-Bera tests for assessing multivariate normality. J. Stat. Adv. Theory Appl. 2008, 1, 207–220.

- Kazuyuki, K.; Masashi, H.; Tatjana, P. Modified Jarque-Bera Type Tests for Multivariate Normality in a High-Dimensional Framework. J. Stat. Theory Pract. 2014, 8, 382–399.

- Rie, E.; Zofia, H.; Ayako, H.; Takashi, S. Multivariate normality test using normalizing transformation for Mardia’s multivariate kurtosis. Commun. Stat. Simul. Comput. 2020, 49, 684–698.

- Dua, D.; Graff, C. UCI Machine Learning Repository; School of Information and Computer Science, University of California: Irvine, CA, USA, 2017.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).