Abstract

Multivariate nonnegative orthant data are real vectors bounded to the left by the null vector, and they can be continuous, discrete or mixed. We first review the recent relative variability indexes for multivariate nonnegative continuous and count distributions. As a prelude, two comparable distributions having the same mean vector are classified through under-, equi- and over-variability with respect to the reference distribution. Multivariate associated kernel estimators are then reviewed, with new proposals that can accommodate any nonnegative orthant dataset. We focus on bandwidth matrix selection by adaptive and local Bayesian methods for semicontinuous and counting supports, respectively. We finally introduce a flexible semiparametric approach for estimating all these distributions on nonnegative supports. The corresponding estimator is directed by a given parametric part and a nonparametric part, which is a weight function to be estimated through multivariate associated kernels. A model diagnostic is also discussed to make an appropriate choice between the parametric, semiparametric and nonparametric approaches. Retaining the purely nonparametric approach indicates that the parametric part used in the modeling is inadequate. Multivariate real data examples in a semicontinuous setup, such as reliability, are progressively considered to illustrate the proposed approach. Concluding remarks are made on extensions to other multiple functions.

Keywords:

associated kernel; Bayesian selector; dispersion index; model diagnostics; multivariate distribution; variation index; weighted distribution

MSC:

62G07; 62G20; 62G99; 62H10; 62H12

1. Introduction

The d-variate nonnegative orthant data on $\mathbb{T}_d^+\subseteq[0,\infty)^d$ are real d-vectors bounded to the left by the null vector $\mathbf{0}_d$, and they can be continuous, discrete (e.g., count, categorical) or mixed. For simplicity, we here assume either $\mathbb{T}_d^+=[0,\infty)^d$ for semicontinuous data or $\mathbb{T}_d^+=\mathbb{N}^d:=\{0,1,2,\ldots\}^d$ for count data. We therefore omit both the categorical and the mixed setups, the latter being a mix of discrete and continuous data (e.g., [1]) or of other time scales (see, e.g., [2]). Modeling such datasets on $\mathbb{T}_d^+$ requires nonnegative orthant distributions, which are generally not easy to handle in practical data analysis. The baseline parametric distribution (e.g., [3,4,5]) for the analysis of nonnegative continuous data is the exponential distribution (e.g., in reliability), and that for count data is the Poisson one. However, their intrinsic assumptions on the first two moments are often unrealistic in many applications. The nonparametric topic of associated kernels, which is adaptable to any support of a probability density or mass function (pdmf), has been widely studied in recent years. We can refer to [6,7,8,9,10,11,12,13,14,15] for general results and more specific developments on associated kernel orthant distributions using classical cross-validation and Bayesian methods to select bandwidth matrices. Thus, a natural question of flexible semiparametric modeling now arises for all these multivariate orthant datasets.

Indeed, we first need a review of the recent relative variability indexes for multivariate semicontinuous ([16]) and count ([17]) distributions. The infinite number and complexity of multivariate parametric distributions require the study of different indexes for comparing and discriminating between them. Simple classifications of two comparable distributions are done through under-, equi- and over-variability with respect to the reference distribution. We refer to [18] and references therein for univariate categorical data, which do not yet have a multivariate version. We then survey multivariate associated kernels that can accommodate any nonnegative orthant dataset. The most useful families will be pointed out, mainly as products of univariate associated kernels, together with their properties and constructions. We shall focus on bandwidth matrix selection by Bayesian methods. Finally, we introduce a flexible semiparametric approach for estimating multivariate nonnegative orthant distributions. Following Hjort and Glad [19] for classical kernels, the corresponding estimator is directed by a given parametric part and a nonparametric part, which is a weight function to be estimated through multivariate associated kernels. What does it mean for a model diagnostic to make an appropriate choice between the parametric, semiparametric and nonparametric approaches in this multivariate framework? Such a discussion highlights practical improvements over standard nonparametric methods for multivariate semicontinuous datasets, through the use of a reasonable parametric-start description. See, for instance, [20,21,22] for univariate count datasets.

In this paper, the main goal is to introduce a family of semiparametric estimators with multivariate associated kernels for both semicontinuous and count data. They are meant to be flexible compromises between grueling parametric and fuzzy nonparametric approaches. The rest of the paper is organized as follows. Section 2 presents a brief review of the relative variability indexes for multivariate nonnegative orthant distributions, distinguishing dispersion for count data from variation for semicontinuous data. Section 3 displays a short panoply of multivariate associated kernels that are useful for semicontinuous and count datasets. Properties are reviewed with new proposals, including appropriate Bayesian methods of bandwidth selection for both settings. In Section 4, we introduce the semiparametric kernel estimators with a d-variate parametric start. We also investigate the corresponding model diagnostics. Section 5 is devoted to numerical illustrations, especially for uni- and multivariate semicontinuous datasets. In Section 6, we make some final remarks on extensions to other multiple functions, such as regression. Finally, appendices provide technical proofs and illustrations.

2. Relative Variability Indexes for Orthant Distributions

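Let $\mathbf{X}=(X_1,\ldots,X_d)^\top$ be a nonnegative orthant d-variate random vector on $\mathbb{T}_d^+\subseteq[0,\infty)^d$, $d\geq1$. We use the following notations: $\sqrt{\mathrm{var}\,\mathbf{X}}=(\sqrt{\mathrm{var}\,X_1},\ldots,\sqrt{\mathrm{var}\,X_d})^\top$ is the elementwise square root of the variance vector of $\mathbf{X}$; $\mathrm{diag}\sqrt{\mathrm{var}\,\mathbf{X}}$ is the $d\times d$ diagonal matrix with diagonal entries $\sqrt{\mathrm{var}\,X_j}$ and 0 elsewhere; and $\mathrm{cov}\,\mathbf{X}=(\mathrm{cov}(X_i,X_j))_{i,j\in\{1,\ldots,d\}}$ denotes the covariance matrix of $\mathbf{X}$, which is a $d\times d$ symmetric matrix such that $\mathrm{cov}(X_i,X_i)=\mathrm{var}\,X_i$ is the variance of $X_i$. Then, one has

$$\mathrm{cov}\,\mathbf{X}=\left(\mathrm{diag}\sqrt{\mathrm{var}\,\mathbf{X}}\right)(\boldsymbol{\rho}_{\mathbf{X}})\left(\mathrm{diag}\sqrt{\mathrm{var}\,\mathbf{X}}\right), \qquad (1)$$

where $\boldsymbol{\rho}_{\mathbf{X}}$ is the $d\times d$ correlation matrix of $\mathbf{X}$; see, e.g., Equations (2)–(36) [23]. It is noteworthy that there are many multivariate distributions with exponential (resp., Poisson) margins. Therefore, we denote a generic d-variate exponential distribution by $\mathcal{E}_d(\boldsymbol{\mu},\boldsymbol{\rho})$, given a specific positive mean vector $\boldsymbol{\mu}$ and correlation matrix $\boldsymbol{\rho}$. Similarly, a generic d-variate Poisson distribution is denoted by $\mathcal{P}_d(\boldsymbol{\mu},\boldsymbol{\rho})$, with positive mean vector $\boldsymbol{\mu}$ and correlation matrix $\boldsymbol{\rho}$. See, e.g., Appendix A for more extensive exponential and Poisson models with possible behaviours in the negative correlation setup. The uncorrelated or independent d-variate exponential and Poisson distributions will be written as $\mathcal{E}_d(\boldsymbol{\mu},\mathbf{I}_d)$ and $\mathcal{P}_d(\boldsymbol{\mu},\mathbf{I}_d)$, respectively, where $\mathbf{I}_d$ is the $d\times d$ unit matrix. Their respective d-variate probability density function (pdf) and probability mass function (pmf) are the products of d univariate ones.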

According to [16], and following the recent univariate unification of the well-known (Fisher) dispersion and (Jørgensen) variation indexes by Touré et al. [24], the relative variability index of d-variate nonnegative orthant distributions can be written as follows. Let $\mathbf{X}$ and $\mathbf{Y}$ be two random vectors on the same support $\mathbb{T}_d^+$ with the same mean vector $\mathbf{m}:=\mathbb{E}\mathbf{X}=\mathbb{E}\mathbf{Y}$ and fixed covariance matrices. Then the relative variability index of $\mathbf{X}$ with respect to $\mathbf{Y}$ is defined as the positive quantity

where “tr” stands for the trace operator and $(\cdot)^{+}$ denotes the unique Moore-Penrose inverse of the matrix associated with $\mathbf{Y}$. From (2), the over- (equi- and under-) variability of $\mathbf{X}$ compared to $\mathbf{Y}$ is realized if $\mathrm{RWI}_{\mathbf{Y}}(\mathbf{X})>1$ ($=1$ and $<1$, respectively).

In this general formulation on $\mathbb{T}_d^+$, expression (2) of RWI is not easy to handle, and neither are its empirical version and its interpretations. We now detail both multivariate cases, for count data ([17]) and for semicontinuous data ([16]). An R package has recently been provided in [25].

2.1. Relative Dispersion Indexes for Count Distributions

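For count data on $\mathbb{T}_d^+=\mathbb{N}^d$, consider the $d\times d$ matrix of rank 1 associated with $\mathbf{X}$ in (2). Then, the corresponding matrix in (2) is also of rank 1 and has only one positive eigenvalue, denoted by

$$\mathrm{GDI}(\mathbf{X}):=\frac{\sqrt{\mathbb{E}\mathbf{X}}^{\top}(\mathrm{cov}\,\mathbf{X})\sqrt{\mathbb{E}\mathbf{X}}}{\mathbb{E}\mathbf{X}^{\top}\,\mathbb{E}\mathbf{X}} \qquad (3)$$

and called the generalized dispersion index of $\mathbf{X}$ compared to $\mathbf{Y}\sim\mathcal{P}_d(\mathbb{E}\mathbf{X},\mathbf{I}_d)$ by [17]. For $d=1$, $\mathrm{GDI}(X)=\mathrm{var}\,X/\mathbb{E}X$ is the (Fisher) dispersion index with respect to the Poisson distribution. To derive this interpretation of GDI, we successively decompose the denominator of (3) as

$$\mathbb{E}\mathbf{X}^{\top}\,\mathbb{E}\mathbf{X}=\sqrt{\mathbb{E}\mathbf{X}}^{\top}(\mathrm{diag}\,\mathbb{E}\mathbf{X})\sqrt{\mathbb{E}\mathbf{X}}=\sqrt{\mathbb{E}\mathbf{X}}^{\top}(\mathrm{cov}\,\mathbf{Y})\sqrt{\mathbb{E}\mathbf{X}} \qquad (4)$$

and the numerator of (3), also using (1), as

$$\sqrt{\mathbb{E}\mathbf{X}}^{\top}(\mathrm{cov}\,\mathbf{X})\sqrt{\mathbb{E}\mathbf{X}}=\left(\sqrt{\mathbb{E}\mathbf{X}}^{\top}\,\mathrm{diag}\sqrt{\mathrm{var}\,\mathbf{X}}\right)(\boldsymbol{\rho}_{\mathbf{X}})\left(\mathrm{diag}\sqrt{\mathrm{var}\,\mathbf{X}}\,\sqrt{\mathbb{E}\mathbf{X}}\right).$$

Thus, $\mathrm{GDI}(\mathbf{X})$ makes it possible to compare the full variability of $\mathbf{X}$ (in the numerator) with its expected uncorrelated Poissonian variability (in the denominator), which depends only on $\mathbb{E}\mathbf{X}$. In other words, the count random vector $\mathbf{X}$ is over- (equi- and under-) dispersed with respect to $\mathcal{P}_d(\mathbb{E}\mathbf{X},\mathbf{I}_d)$ if $\mathrm{GDI}(\mathbf{X})>1$ ($=1$ and $<1$, respectively). This generalization of the well-known (univariate) dispersion index to the multivariate framework is due to [17]. See, e.g., [17,26] for illustrative examples. We can modify $\mathrm{GDI}(\mathbf{X})$ into $\mathrm{MDI}(\mathbf{X})$, the marginal dispersion index, by replacing $\mathrm{cov}\,\mathbf{X}$ in (3) with $\mathrm{diag}(\mathrm{var}\,\mathbf{X})$, to obtain dispersion information coming only from the margins of $\mathbf{X}$.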

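More generally, for two count random vectors $\mathbf{X}$ and $\mathbf{Y}$ on the same support $\mathbb{T}_d^+=\mathbb{N}^d$ with the same mean vector $\mathbf{m}:=\mathbb{E}\mathbf{X}=\mathbb{E}\mathbf{Y}$, the relative dispersion index is defined by

$$\mathrm{RDI}_{\mathbf{Y}}(\mathbf{X}):=\frac{\sqrt{\mathbf{m}}^{\top}(\mathrm{cov}\,\mathbf{X})\sqrt{\mathbf{m}}}{\sqrt{\mathbf{m}}^{\top}(\mathrm{cov}\,\mathbf{Y})\sqrt{\mathbf{m}}}, \qquad (5)$$

i.e., the over- (equi- and under-) dispersion of $\mathbf{X}$ compared to $\mathbf{Y}$ is realized if $\mathrm{RDI}_{\mathbf{Y}}(\mathbf{X})>1$ ($=1$ and $<1$, respectively). Obviously, GDI is the particular case of RDI with $\mathbf{Y}\sim\mathcal{P}_d(\mathbf{m},\mathbf{I}_d)$, while RDI allows any other reference $\mathbf{Y}$. Consequently, many properties of GDI are easily extended to RDI.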
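As an illustration of (3) and of its marginal version, the following minimal Python sketch computes empirical GDI and MDI from a sample of count vectors. It plugs in the sample mean vector and the unbiased (n − 1) sample covariance matrix; this plug-in choice is an assumption, and the R package of [25] may use other conventions. The function names are hypothetical.

```python
import math
from typing import List, Sequence

def mean_vector(data: Sequence[Sequence[float]]) -> List[float]:
    """Empirical mean vector of an n x d sample (rows are observations)."""
    n, d = len(data), len(data[0])
    return [sum(row[j] for row in data) / n for j in range(d)]

def cov_matrix(data: Sequence[Sequence[float]]) -> List[List[float]]:
    """Empirical covariance matrix with the unbiased (n - 1) denominator."""
    n, d = len(data), len(data[0])
    m = mean_vector(data)
    return [[sum((row[i] - m[i]) * (row[j] - m[j]) for row in data) / (n - 1)
             for j in range(d)] for i in range(d)]

def _dispersion_index(data: Sequence[Sequence[float]], marginal: bool) -> float:
    m = mean_vector(data)
    s = cov_matrix(data)
    d = len(m)
    if marginal:
        # MDI: replace cov X by the diagonal matrix of the variances.
        s = [[s[i][j] if i == j else 0.0 for j in range(d)] for i in range(d)]
    r = [math.sqrt(mj) for mj in m]  # elementwise square root of E X
    numerator = sum(r[i] * s[i][j] * r[j] for i in range(d) for j in range(d))
    denominator = sum(mj * mj for mj in m)  # (E X)^T (E X)
    return numerator / denominator

def gdi(data: Sequence[Sequence[float]]) -> float:
    return _dispersion_index(data, marginal=False)

def mdi(data: Sequence[Sequence[float]]) -> float:
    return _dispersion_index(data, marginal=True)

# For d = 1, GDI reduces to the Fisher index var/mean, here 2.5 / 2.
print(gdi([[0], [1], [2], [3], [4]]))  # approximately 1.25
```

When the components are positively correlated, GDI exceeds MDI because the off-diagonal covariances enter only the former.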

2.2. Relative Variation Indexes for Semicontinuous Distributions

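Assume here that $\mathbb{T}_d^+=[0,\infty)^d$ and consider another $d\times d$ matrix of rank 1. Then, the corresponding matrix in (2) is again of rank 1. Similar to (3), the generalized variation index of $\mathbf{X}$ compared to $\mathcal{E}_d(\mathbb{E}\mathbf{X},\mathbf{I}_d)$ is defined by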

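i.e., $\mathbf{X}$ is over- (equi- and under-) varied with respect to $\mathcal{E}_d(\mathbb{E}\mathbf{X},\mathbf{I}_d)$ if $\mathrm{GVI}(\mathbf{X})>1$ ($=1$ and $<1$, respectively); see [16]. Note that when $d=1$, $\mathrm{GVI}(X)=\mathrm{var}\,X/(\mathbb{E}X)^2$ is the univariate (Jørgensen) variation index recently introduced by Abid et al. [27]. From (4), and using (1) again to rewrite the numerator of (6) as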

$\mathrm{GVI}(\mathbf{X})$ of (6) can be interpreted as the ratio of the full variability of $\mathbf{X}$ to its expected uncorrelated exponential variability, which depends only on $\mathbb{E}\mathbf{X}$. Similar to $\mathrm{MDI}(\mathbf{X})$, we can define $\mathrm{MVI}(\mathbf{X})$ from $\mathrm{GVI}(\mathbf{X})$. See [16] for properties, numerous examples and numerical illustrations.

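The relative variation index is defined, for two semicontinuous random vectors $\mathbf{X}$ and $\mathbf{Y}$ on the same support $\mathbb{T}_d^+=[0,\infty)^d$ with the same mean vector $\mathbf{m}:=\mathbb{E}\mathbf{X}=\mathbb{E}\mathbf{Y}$, by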

i.e., the over- (equi- and under-) variation of $\mathbf{X}$ compared to $\mathbf{Y}$ is realized if $\mathrm{RVI}_{\mathbf{Y}}(\mathbf{X})>1$ ($=1$ and $<1$, respectively). Of course, RVI generalizes GVI for multivariate semicontinuous distributions. One can refer to [16] for more details on its discriminating power in multivariate parametric models from the first two moments.

3. Multivariate Orthant Associated Kernels

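Nonparametric techniques through associated kernels represent an alternative approach for multivariate orthant data. Let $\mathbf{X}_1,\ldots,\mathbf{X}_n$ be independent and identically distributed (iid) nonnegative orthant d-variate random vectors with an unknown joint pdmf f on $\mathbb{T}_d^+$, for $d\geq1$. Then the multivariate associated kernel estimator $f_n$ of f is expressed as

$$f_n(\mathbf{x})=\frac{1}{n}\sum_{i=1}^{n}K_{\mathbf{x},\mathbf{H}}(\mathbf{X}_i),\qquad \mathbf{x}\in\mathbb{T}_d^+, \qquad (8)$$

where $\mathbf{H}\equiv\mathbf{H}_n$ is a given $d\times d$ bandwidth matrix (i.e., symmetric and positive definite) such that $\mathbf{H}_n\to\mathbf{0}_d$ (the $d\times d$ null matrix) as $n\to\infty$, and $K_{\mathbf{x},\mathbf{H}}(\cdot)$ is a multivariate (orthant) associated kernel, parameterized by $\mathbf{x}$ and $\mathbf{H}$; see, e.g., [10]. More precisely, we have the following refined definition.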

Definition 1.

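Let $\mathbb{T}_d^+$ be the support of the pdmf to be estimated, $\mathbf{x}\in\mathbb{T}_d^+$ a target vector and $\mathbf{H}$ a bandwidth matrix. A parameterized pdmf $K_{\mathbf{x},\mathbf{H}}(\cdot)$ on support $\mathbb{S}_{\mathbf{x},\mathbf{H}}\subseteq\mathbb{T}_d^+$ is called a “multivariate orthant associated kernel” if the following conditions are satisfied: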

where denotes the corresponding orthant random vector with pdmf such that vector (the d-dimensional null vector) and positive definite matrix as (the null matrix), and stands for a symmetric matrix with entries for such that .

This definition already exists in the univariate count case [21,28] and encompasses the multivariate one of [10]. Choosing an orthant associated kernel that satisfies the corresponding limit condition of Definition 1 ensures the convergence of its estimator, which is then said to be of second order. Otherwise, the convergence of the corresponding estimator is not guaranteed for $\mathbf{x}$ in a right neighborhood of $\mathbf{0}$ in Definition 1, and it is called a consistent first-order smoother; see, e.g., [28] for discrete kernels. In general, d-under-dispersed count associated kernels are appropriate for both small and moderate sample sizes; see, e.g., [28] for univariate cases. The selection of the bandwidth $\mathbf{H}$ is crucial because it controls the degree of smoothing and the orientation of the kernel. A simplification can be obtained by considering a diagonal matrix $\mathbf{H}=\mathbf{Diag}_d(h_{jj})$ with $h_{jj}>0$. Since it is challenging to obtain a full multivariate orthant distribution for building a smoother, several authors suggest the product of univariate orthant associated kernels,

$$K_{\mathbf{x},\mathbf{H}}(\mathbf{u})=\prod_{j=1}^{d}K^{[j]}_{x_j,h_{jj}}(u_j),\qquad \mathbf{u}=(u_1,\ldots,u_d)^\top, \qquad (9)$$

where $K^{[j]}_{x_j,h_{jj}}$, $j=1,\ldots,d$, belong either to the same family or to different families of univariate orthant associated kernels. The following two subsections summarize discrete and semicontinuous univariate associated kernels.
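To make (8) and (9) concrete, here is a minimal Python sketch of the product associated kernel estimator with a diagonal bandwidth matrix. As an assumption for illustration, each margin uses the standard Poisson kernel mentioned in Section 3.1 (the pmf of a Poisson distribution with mean x + h). Any other univariate associated kernel with the same call signature could be passed for each coordinate, as (9) allows. The helper names and the sample are hypothetical.

```python
import math
from typing import Callable, Sequence

# A univariate associated kernel: (target x, bandwidth h, observation u) -> K_{x,h}(u).
Kernel1D = Callable[[float, float, float], float]

def poisson_kernel(x: float, h: float, u: float) -> float:
    """Standard Poisson kernel: pmf of Poisson(x + h) at the count u."""
    lam = x + h
    k = int(u)
    return math.exp(-lam + k * math.log(lam) - math.lgamma(k + 1))

def product_kernel_estimate(x: Sequence[float],
                            data: Sequence[Sequence[float]],
                            bandwidths: Sequence[float],
                            kernels: Sequence[Kernel1D]) -> float:
    """f_n(x) = (1/n) sum_i prod_j K^[j]_{x_j, h_jj}(X_ij), with diagonal H."""
    total = 0.0
    for row in data:
        weight = 1.0
        for xj, hj, kernel, uj in zip(x, bandwidths, kernels, row):
            weight *= kernel(xj, hj, uj)  # one univariate factor per coordinate
        total += weight
    return total / len(data)

counts = [[0, 1], [2, 1], [1, 3], [0, 0]]
print(product_kernel_estimate([0, 1], counts, [0.1, 0.1],
                              [poisson_kernel, poisson_kernel]))
```

Passing the kernels as a list keeps the sketch open to mixing families across coordinates. Only the diagonal of $\mathbf{H}$ is needed.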

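Before showing some main properties of the associated kernel estimator (8), let us recall that the family of d-variate classical (symmetric) kernels K on $\mathbb{R}^d$ (e.g., [29,30,31]) can also be presented as (classical) associated kernels. Indeed, from (8), writing for instance

$$K_{\mathbf{x},\mathbf{H}}(\cdot)=\frac{1}{\det\mathbf{H}}\,K\!\left(\mathbf{H}^{-1}(\mathbf{x}-\cdot)\right),$$

where “det” is the determinant operator, one has $\mathbb{S}_{\mathbf{x},\mathbf{H}}=\mathbb{R}^d$, $\mathbb{E}\mathbf{Z}_{\mathbf{x},\mathbf{H}}=\mathbf{x}$ and $\mathrm{cov}\,\mathbf{Z}_{\mathbf{x},\mathbf{H}}=\mathbf{H}\boldsymbol{\Sigma}_K\mathbf{H}$, where $\boldsymbol{\Sigma}_K$ denotes the covariance matrix of the centered kernel K. In general, classical (associated) kernels are used for smoothing continuous data or pdfs with support $\mathbb{R}^d$.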

The purely nonparametric estimator (8) of f with a multivariate associated kernel is generally defined up to a normalizing constant, namely its total mass $\int_{\mathbb{T}_d^+}f_n(\mathbf{x})\,\nu(d\mathbf{x})$. Here $\nu$ denotes the reference measure (Lebesgue or counting) on the nonnegative orthant set $\mathbb{T}_d^+$, and also on any other set considered. Several simulation studies (e.g., Table 3.1 in [10]) have shown that this constant is approximately 1, although it depends on the sample, the associated kernel and the bandwidth. Without loss of generality, we here assume that it equals 1, as for all classical (associated) kernel estimators of pdfs. The following proposition establishes the mean behavior and variability of this constant through the integrated bias and integrated variance of $f_n$, respectively.
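To illustrate that the total mass of (8) is only approximately 1, the following minimal Python sketch integrates a univariate gamma-kernel estimator numerically. It assumes the gamma kernel of [43] recalled in Section 5.1, which is the pdf of a gamma distribution with shape 1 + x/h and scale h. It also assumes a trapezoidal rule on a truncated range and an artificial sample. The function names are hypothetical.

```python
import math
from typing import Sequence

def gamma_kernel(x: float, h: float, t: float) -> float:
    """Gamma kernel: pdf of the gamma law with shape 1 + x/h and scale h, at t >= 0."""
    shape = 1.0 + x / h
    if t == 0.0:
        # t**(x/h) equals 1 only when x == 0; otherwise the density vanishes at 0.
        return 1.0 / h if x == 0.0 else 0.0
    log_k = (shape - 1.0) * math.log(t) - t / h - math.lgamma(shape) - shape * math.log(h)
    return math.exp(log_k)

def gamma_kde(x: float, data: Sequence[float], h: float) -> float:
    """Univariate associated kernel estimator (8) with the gamma kernel."""
    return sum(gamma_kernel(x, h, xi) for xi in data) / len(data)

def total_mass(data: Sequence[float], h: float, upper: float, step: float = 0.01) -> float:
    """Trapezoidal approximation of the integral of f_n over the target range [0, upper]."""
    m = int(round(upper / step))
    values = [gamma_kde(k * step, data, h) for k in range(m + 1)]
    return step * (sum(values) - 0.5 * (values[0] + values[-1]))

sample = [0.5, 1.0, 2.0, 3.0]
print(total_mass(sample, h=0.1, upper=10.0))  # close to 1
```

The integration runs over the target x, not over the data. This is why the mass is not exactly 1: the gamma kernel is a pdf in its argument t, not in x.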

Proposition 1.

Let . Then, for all :

Proof.

Let . One successively has

which leads to the first result because f is a pdmf on . The second result on is trivial. □

The following general result is easily deduced from Proposition 1. To the best of our knowledge, it is new and of interest in the framework of pdmf (associated) kernel estimators.

Corollary 1.

If , for all , then: and .

In particular, Corollary 1 holds for all classical (associated) kernel estimators. The following two properties of the corresponding orthant multivariate associated kernels will be needed subsequently.

- (K1)

- The second moment of $\mathbf{Z}_{\mathbf{x},\mathbf{H}}$ exists:

- (K2)

- There exists a real largest number and such that

In fact, (K1) is a necessary condition for smoothers to have a finite variance and (K2) can be deduced from the continuous univariate cases (e.g., [32]) and also from the discrete ones (e.g., [28]).

We now establish the general asymptotic behaviours of the pointwise bias and variance of the nonparametric estimator (8) on the nonnegative orthant set $\mathbb{T}_d^+$; the proof is given in Appendix B. For that, we need the following assumptions, endowing $\mathbb{T}_d^+\subseteq\mathbb{R}^d$ with the Euclidean norm $\|\cdot\|$ and the associated inner product $\langle\cdot,\cdot\rangle$ such that $\langle\mathbf{a},\mathbf{b}\rangle=\mathbf{a}^\top\mathbf{b}$.

- (a1)

- The unknown pdmf f is a bounded function, twice differentiable (or with finite differences) in $\mathbb{T}_d^+$, and $\nabla f(\mathbf{x})$ and $\mathcal{H}f(\mathbf{x})$ denote, respectively, the gradient vector (in the continuous or discrete sense, respectively) and the corresponding Hessian matrix of the function f at $\mathbf{x}$.

- (a2)

- There exists a positive real number such that as .

Note that (a2) is obviously a consequence of (K2).

Proposition 2.

Under the assumption (a1) on f, the estimator $f_n$ in (8) of f verifies

for any $\mathbf{x}\in\mathbb{T}_d^+$. Moreover, if (a2) holds, then

For and according to the proof of Proposition 2, one can easily write as follows:

where is the kth derivative or finite difference of the pdmf f under the existence of the centered moment of order of .

Concerning bandwidth matrix selection in a multivariate associated kernel estimator (8), one generally uses the cross-validation technique (e.g., [10,20,28,33,34]). However, it is tedious and less precise. Many papers have recently proposed Bayesian approaches (e.g., [6,7,13,14,35,36] and references therein). In particular, they recommend local Bayesian selection for discrete smoothing of pmfs (e.g., [6,7,37]) and adaptive Bayesian selection for continuous smoothing of pdfs (e.g., [13,35,36]).

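Denote by $\mathcal{M}$ the set of $d\times d$ positive definite [diagonal] matrices [from (9), resp.], and let $\pi(\cdot)$ be a given suitable prior distribution on $\mathcal{M}$. Write $f_{n,\mathbf{H}}$ for the estimator (8) computed with the bandwidth matrix $\mathbf{H}$. Under the squared error loss function, the Bayes estimator of $\mathbf{H}$ is the mean of the posterior distribution. Then, the local Bayesian bandwidth at the target $\mathbf{x}\in\mathbb{T}_d^+$ takes the form

$$\widehat{\mathbf{H}}(\mathbf{x})=\frac{\int_{\mathcal{M}}\mathbf{H}\,\pi(\mathbf{H})\,f_{n,\mathbf{H}}(\mathbf{x})\,d\mathbf{H}}{\int_{\mathcal{M}}\pi(\mathbf{H})\,f_{n,\mathbf{H}}(\mathbf{x})\,d\mathbf{H}}, \qquad (12)$$

and the adaptive Bayesian bandwidth for each observation $\mathbf{X}_i$, $i=1,\ldots,n$, is given by

$$\widehat{\mathbf{H}}_i=\frac{\int_{\mathcal{M}}\mathbf{H}\,\pi(\mathbf{H})\,f_{n,\mathbf{H},-i}(\mathbf{X}_i)\,d\mathbf{H}}{\int_{\mathcal{M}}\pi(\mathbf{H})\,f_{n,\mathbf{H},-i}(\mathbf{X}_i)\,d\mathbf{H}}, \qquad (13)$$

where $f_{n,\mathbf{H},-i}(\mathbf{X}_i)$ is the leave-one-out associated kernel estimator of $f(\mathbf{X}_i)$ deduced from (8) as

$$f_{n,\mathbf{H},-i}(\mathbf{X}_i)=\frac{1}{n-1}\sum_{j=1,\,j\neq i}^{n}K_{\mathbf{X}_i,\mathbf{H}}(\mathbf{X}_j). \qquad (14)$$

Note that the well-known classical (global) cross-validation bandwidth matrix and the global Bayesian one are obtained from (14), respectively, as

$$\widehat{\mathbf{H}}_{CV}=\arg\min_{\mathbf{H}\in\mathcal{M}}\left[\int_{\mathbb{T}_d^+}f_{n,\mathbf{H}}^{2}(\mathbf{x})\,\nu(d\mathbf{x})-\frac{2}{n}\sum_{i=1}^{n}f_{n,\mathbf{H},-i}(\mathbf{X}_i)\right]$$

and

$$\widehat{\mathbf{H}}_{gB}=\frac{\int_{\mathcal{M}}\mathbf{H}\,\pi(\mathbf{H})\prod_{i=1}^{n}f_{n,\mathbf{H},-i}(\mathbf{X}_i)\,d\mathbf{H}}{\int_{\mathcal{M}}\pi(\mathbf{H})\prod_{i=1}^{n}f_{n,\mathbf{H},-i}(\mathbf{X}_i)\,d\mathbf{H}}.$$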
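The global cross-validation criterion can be sketched for univariate count data ($d=1$, with $\nu$ the counting measure) using the binomial kernel of Example 3 in Section 3.1. This minimal Python sketch rests on several assumptions. The infinite sum of squared estimates over $\mathbb{N}$ is truncated at max(data) + extra. The bandwidth is chosen by a grid search over (0, 1]. The sample and helper names are hypothetical.

```python
import math
from typing import Sequence

def binomial_kernel(x: int, h: float, y: int) -> float:
    """Binomial(x + 1, (x + h)/(x + 1)) pmf at y, with h in (0, 1]."""
    if y < 0 or y > x + 1:
        return 0.0
    p = (x + h) / (x + 1)
    return math.comb(x + 1, y) * p ** y * (1.0 - p) ** (x + 1 - y)

def estimate(x: int, data: Sequence[int], h: float) -> float:
    """Estimator (8) with the binomial kernel at the count x."""
    return sum(binomial_kernel(x, h, xi) for xi in data) / len(data)

def leave_one_out(i: int, data: Sequence[int], h: float) -> float:
    """f_{n,h,-i}(X_i): estimate at X_i built without the i-th observation, cf. (14)."""
    others = [xj for j, xj in enumerate(data) if j != i]
    return sum(binomial_kernel(data[i], h, xj) for xj in others) / len(others)

def lscv(data: Sequence[int], h: float, extra: int = 50) -> float:
    """Least-squares CV criterion; the sum over N is truncated at max(data) + extra."""
    squares = sum(estimate(x, data, h) ** 2 for x in range(max(data) + extra + 1))
    loo = sum(leave_one_out(i, data, h) for i in range(len(data)))
    return squares - 2.0 * loo / len(data)

def cv_bandwidth(data: Sequence[int], grid: Sequence[float]) -> float:
    """Grid point minimizing the CV criterion."""
    return min(grid, key=lambda h: lscv(data, h))

counts = [0, 1, 1, 2, 2, 2, 3, 4, 6, 9]
grid = [k / 20 for k in range(1, 21)]
print(cv_bandwidth(counts, grid))
```

A grid search is used only for transparency. It also shows why the text calls cross-validation tedious: every candidate bandwidth requires a full pass over the data and the support.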

3.1. Discrete Associated Kernels

We present only three main and useful families of univariate discrete associated kernels for (9), all satisfying (K1) and (K2).

Example 1

(categorical). For a fixed number of categories $c\in\{2,3,\ldots\}$ and $h\in(0,1)$, one defines the Dirac discrete uniform (DirDU) kernel by

for , , with , and .

It was introduced in the multivariate setup by Aitchison and Aitken [38] and investigated by [28] in the univariate case as a discrete associated kernel that is symmetric about the target x; see [7] for a Bayesian approach in the multivariate setup. Note here that its normalizing constant is always equal to 1.

Example 2

(symmetric count). For a fixed arm $a\in\mathbb{N}$ and $h>0$, the symmetric count triangular kernel is expressed as

for , , with , , and

where ≃ holds for h sufficiently small.

It was first proposed by Kokonendji et al. [33] and then completed in [39] with an asymmetric version for solving the problem of boundary bias in count kernel estimation.

Example 3

(standard count). Let $x\in\mathbb{N}$ and $h\in(0,1]$; the standard binomial kernel is defined by

for , , with , and as .

Here, the variance of $Z_{x,h}$, namely $(x+h)(1-h)/(x+1)$, tends to $x/(x+1)\in[0,1)$ when $h\to0$, and the new Definition 1 holds. This first-order and under-dispersed binomial kernel was introduced in [28] and has become very useful for smoothing count distributions with small or moderate sample sizes; see, e.g., [6,7,37] for Bayesian approaches and some references therein. In addition, we have the standard Poisson kernel, where $Z_{x,h}$ follows the equi-dispersed Poisson distribution with mean $x+h$, $x\in\mathbb{N}$, $h>0$, and variance $x+h\to x$ as $h\to0$. Recently, Huang et al. [40] introduced the Conway-Maxwell-Poisson kernel by exploiting its under-dispersed part and its second-order consistency, which can be improved via the mode-dispersion approach of [41]; see also Section 2.4 in [42].
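The first-order behaviour of the binomial kernel can be checked numerically with a minimal Python sketch. It assumes the standard form of [28], in which $Z_{x,h}$ is binomial with $x+1$ trials and success probability $(x+h)/(x+1)$. Its mean is $x+h$. Its variance $(x+h)(1-h)/(x+1)$ stays below the mean (under-dispersion) and tends to $x/(x+1)$ rather than 0 as $h\to0$. The helper names are hypothetical.

```python
import math

def binomial_kernel(x: int, h: float, y: int) -> float:
    """Binomial kernel: pmf of Binomial(x + 1, (x + h)/(x + 1)) at y, h in (0, 1]."""
    if y < 0 or y > x + 1:
        return 0.0
    p = (x + h) / (x + 1)
    return math.comb(x + 1, y) * p ** y * (1.0 - p) ** (x + 1 - y)

def kernel_moments(x: int, h: float):
    """Mean and variance of Z_{x,h}, computed by summing over its support."""
    support = range(x + 2)
    mean = sum(y * binomial_kernel(x, h, y) for y in support)
    var = sum((y - mean) ** 2 * binomial_kernel(x, h, y) for y in support)
    return mean, var

mean, var = kernel_moments(3, 0.3)
print(mean, var)  # approximately 3.3 and 0.5775
```

Because the variance does not vanish as $h\to0$, the kernel does not collapse to a Dirac mass at x. This is the first-order situation covered by the refined Definition 1.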

3.2. Semicontinuous Associated Kernels

Now, we point out eight main and useful families of univariate semicontinuous associated kernels for (9), all satisfying (K1) and (K2). These are the gamma (G) of [43] (see also [44]), the inverse gamma (Ig) (see also [45]) and log-normal 2 (LN2) by [41], the inverse Gaussian (IG) and reciprocal inverse Gaussian by [46] (see also [47]), the log-normal 1 (LN1) and Birnbaum–Saunders by [48] (see also [49,50]), and the Weibull (W) of [51] (see also [50]). It is noteworthy that LN2 of [41] and LN1 of [48] are linked through a simple change of parameter. Several other semicontinuous kernels could be constructed by using the mode-dispersion technique of [41] from any semicontinuous distribution that is unimodal and has a dispersion parameter. Recently, the scaled inverse chi-squared kernel was proposed in [52].

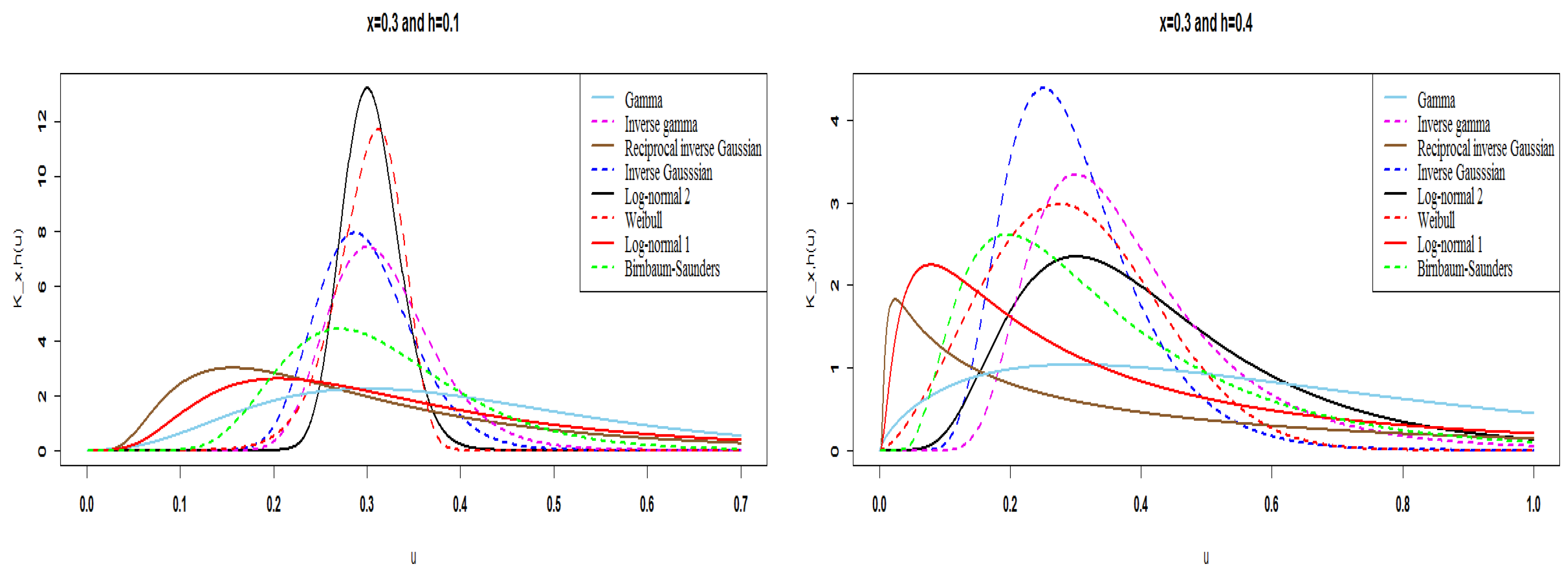

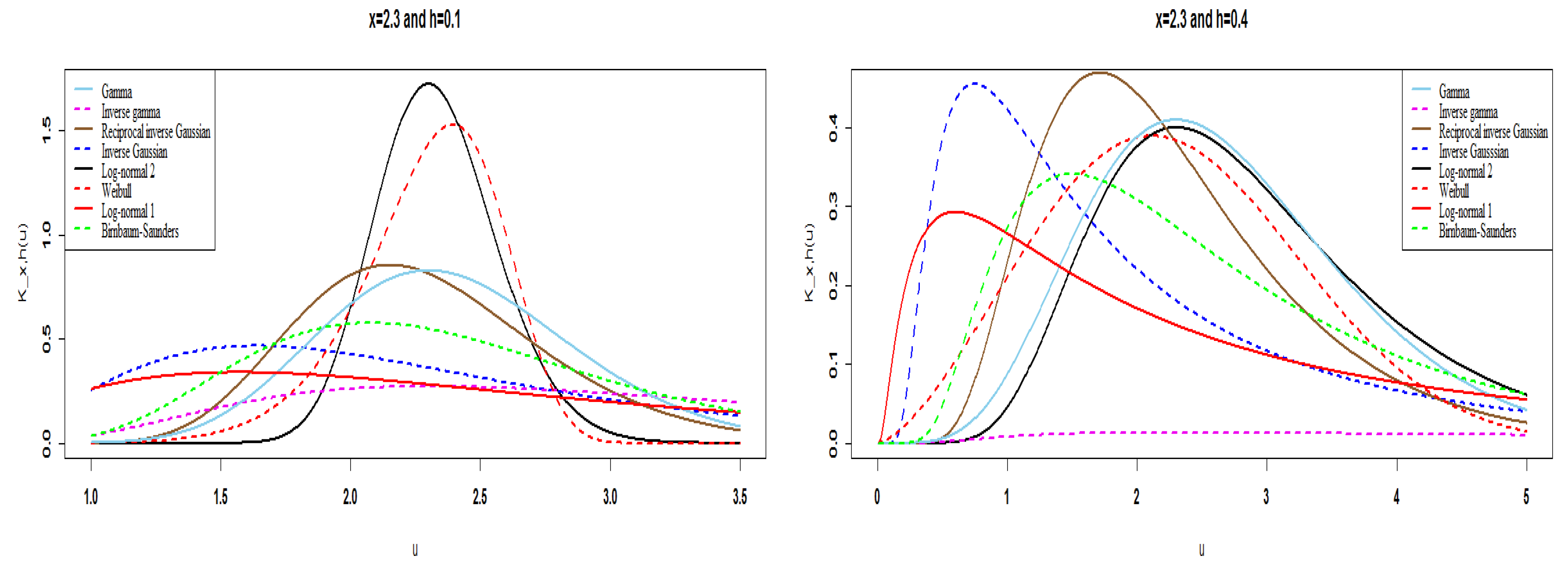

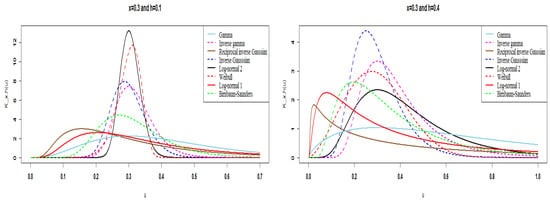

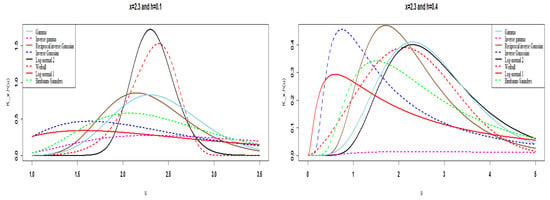

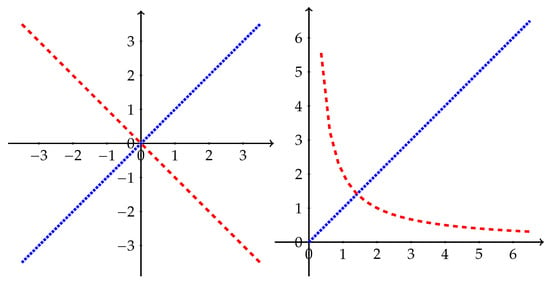

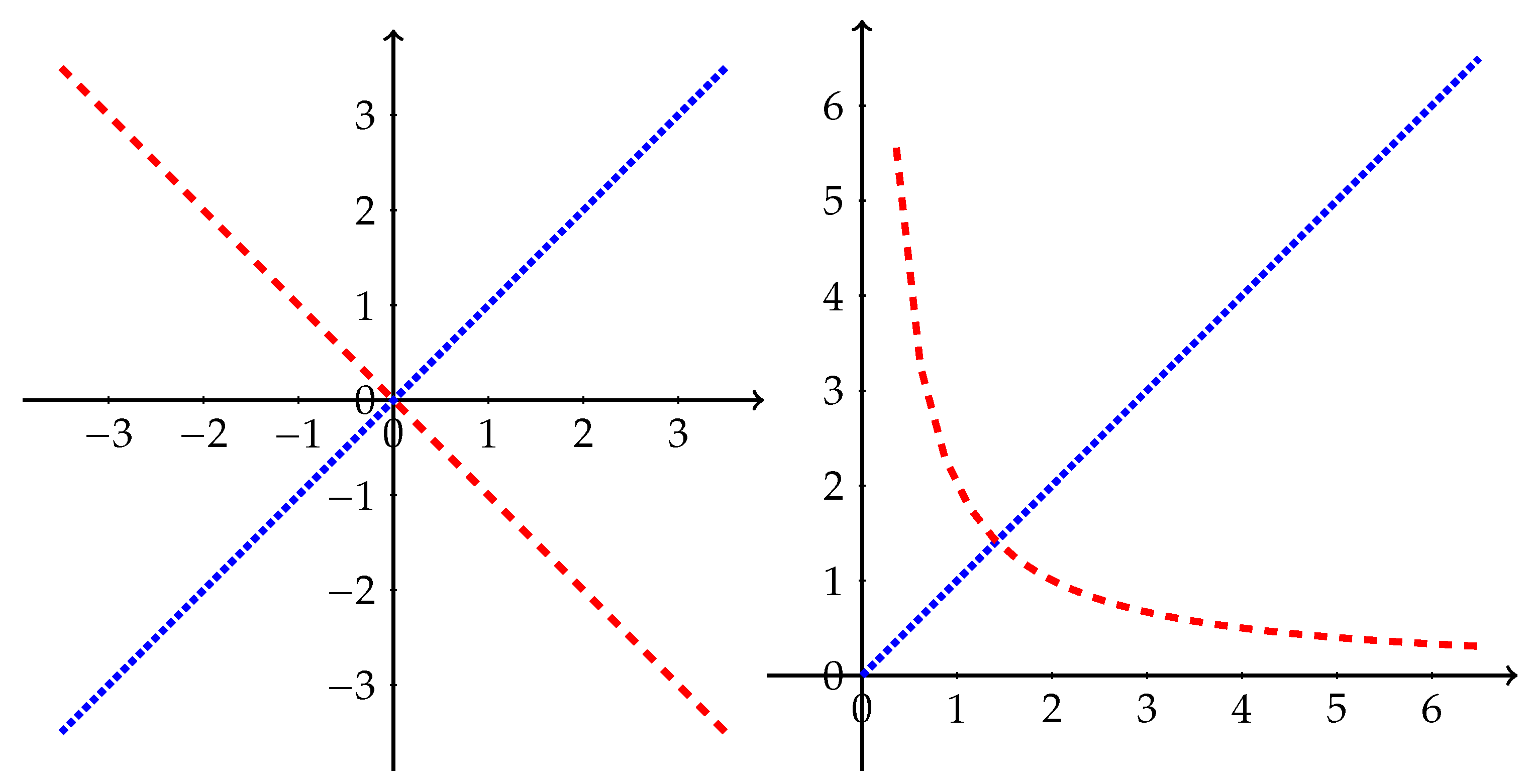

Table 1 summarizes these eight semicontinuous univariate associated kernels, with their ingredients from Definition 1 and an order of preference (O.) obtained graphically. In fact, the heuristic classification (O.) is based on the behavior of the shape and scale of the associated kernel around the target x, at the edge as well as inside; see Figure 1 for the edge and Figure 2 for the inside. Among these eight kernels, we thus recommend the first five univariate associated kernels of Table 1 for smoothing semicontinuous data. This ranking could be refined for a given dataset; see, e.g., [53] for cumulative functions.

Table 1.

Eight semicontinuous univariate associated kernels on $[0,\infty)$, classified by “O.”

Figure 1.

Comparative graphics of the eight univariate semicontinuous associated kernels of Table 1 on the edge () with and .

Figure 2.

Comparative graphics of the eight univariate semicontinuous associated kernels of Table 1 inside () with and .

4. Semiparametric Kernel Estimation with d-Variate Parametric Start

We investigate the semiparametric orthant kernel approach which is a compromise between the pure parametric and the nonparametric methods. This concept was proposed by Hjort and Glad [19] for continuous data, treated by Kokonendji et al. [20] for discrete univariate data and, recently, studied by Kokonendji et al. [21] with an application to radiation biodosimetry.

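Without loss of generality, we here assume that any d-variate pdmf f can be formulated (see, e.g., [54]) as

$$f(\mathbf{x})=p_d(\mathbf{x};\boldsymbol{\theta})\,w(\mathbf{x};\boldsymbol{\theta}),\qquad \mathbf{x}\in\mathbb{T}_d^+, \qquad (15)$$

where $p_d(\cdot;\boldsymbol{\theta})$ is the non-singular parametric part according to a reference d-variate distribution with corresponding unknown parameters $\boldsymbol{\theta}$. The unknown orthant weight function part $w(\cdot;\boldsymbol{\theta}):=f(\cdot)/p_d(\cdot;\boldsymbol{\theta})$ is to be estimated with a multivariate orthant associated kernel. The weight function at each point can be considered as the local multiplicative correction factor aimed at accommodating any pointwise departure from the reference d-variate distribution. However, one cannot take the best-fitting parametric model as the start distribution in this semiparametric approach. The departure of the corresponding weight function from one is then close to zero and behaves like noise, which is inappropriate to smooth with an associated kernel, especially in the continuous cases.

Let $\mathbf{X}_1,\ldots,\mathbf{X}_n$ be iid nonnegative orthant d-variate random vectors with unknown pdmf f on $\mathbb{T}_d^+$. The semiparametric estimator of (15) with (9) is expressed as follows:

$$\widehat{f}_n(\mathbf{x})=p_d(\mathbf{x};\widehat{\boldsymbol{\theta}}_n)\,\frac{1}{n}\sum_{i=1}^{n}\frac{1}{p_d(\mathbf{X}_i;\widehat{\boldsymbol{\theta}}_n)}\,K_{\mathbf{x},\mathbf{H}}(\mathbf{X}_i),\qquad \mathbf{x}\in\mathbb{T}_d^+, \qquad (16)$$

where $\widehat{\boldsymbol{\theta}}_n$ is the estimated parameter of $\boldsymbol{\theta}$. From (16), we then deduce the nonparametric orthant associated kernel estimate

$$\widehat{w}_n(\mathbf{x};\widehat{\boldsymbol{\theta}}_n)=\frac{1}{n}\sum_{i=1}^{n}\frac{K_{\mathbf{x},\mathbf{H}}(\mathbf{X}_i)}{p_d(\mathbf{X}_i;\widehat{\boldsymbol{\theta}}_n)} \qquad (17)$$

of the weight function $\mathbf{x}\mapsto w(\mathbf{x};\widehat{\boldsymbol{\theta}}_n)$, which depends on $\widehat{\boldsymbol{\theta}}_n$. One can observe that Proposition 1 also holds in this semiparametric setting. However, we have to prove below the analogue of Proposition 2.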
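A minimal univariate ($d=1$) Python sketch of the semiparametric estimator (16) and of the weight estimate (17) follows. The following illustrative assumptions apply:

- The start model is the exponential distribution in its mean parametrization, fitted by maximum likelihood (the sample mean).
- The kernel is the gamma kernel recalled in Section 5.1.
- A single fixed bandwidth is used instead of the adaptive Bayesian ones.
- The data and helper names are hypothetical.

```python
import math
from typing import Sequence

def gamma_kernel(x: float, h: float, t: float) -> float:
    """Pdf of the gamma law with shape 1 + x/h and scale h, at t >= 0."""
    shape = 1.0 + x / h
    if t == 0.0:
        return 1.0 / h if x == 0.0 else 0.0
    return math.exp((shape - 1.0) * math.log(t) - t / h
                    - math.lgamma(shape) - shape * math.log(h))

def exp_pdf(t: float, mean: float) -> float:
    """Exponential start model p(t; mean) on [0, inf)."""
    return math.exp(-t / mean) / mean if t >= 0 else 0.0

def weight_estimate(x: float, data: Sequence[float], h: float, mean: float) -> float:
    """w_n(x) = (1/n) sum_i K_{x,h}(X_i) / p(X_i; mean), cf. (17)."""
    return sum(gamma_kernel(x, h, xi) / exp_pdf(xi, mean) for xi in data) / len(data)

def semiparametric_estimate(x: float, data: Sequence[float], h: float) -> float:
    """f_hat(x) = p(x; mean_hat) * w_n(x), cf. (16)."""
    mean_hat = sum(data) / len(data)  # maximum likelihood estimate of the exponential mean
    return exp_pdf(x, mean_hat) * weight_estimate(x, data, h, mean_hat)

times = [0.4, 1.1, 1.3, 2.0, 2.7, 3.5, 5.2]
print([round(semiparametric_estimate(x, times, 0.3), 4) for x in (0.5, 1.0, 2.0)])
```

Dividing each kernel term by the fitted start density is what lets the kernel smooth only the correction factor. A weight estimate close to 1 everywhere would point towards the parametric model.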

4.1. Known d-Variate Parametric Model

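Let $p_d(\cdot;\boldsymbol{\theta}_0)$ be a fixed orthant distribution in (15) with $\boldsymbol{\theta}_0$ known. Writing $f(\mathbf{x})=p_d(\mathbf{x};\boldsymbol{\theta}_0)\,w(\mathbf{x})$, we estimate the nonparametric weight function w with an orthant associated kernel method. We use $\widetilde{w}_n(\mathbf{x})=n^{-1}\sum_{i=1}^{n}K_{\mathbf{x},\mathbf{H}}(\mathbf{X}_i)/p_d(\mathbf{X}_i;\boldsymbol{\theta}_0)$, resulting in the estimator

$$\widetilde{f}_n(\mathbf{x})=p_d(\mathbf{x};\boldsymbol{\theta}_0)\,\widetilde{w}_n(\mathbf{x})=\frac{1}{n}\sum_{i=1}^{n}\frac{p_d(\mathbf{x};\boldsymbol{\theta}_0)}{p_d(\mathbf{X}_i;\boldsymbol{\theta}_0)}\,K_{\mathbf{x},\mathbf{H}}(\mathbf{X}_i). \qquad (18)$$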
The following proposition is proven in Appendix B.

Proposition 3.

It is expected that the bias here is quite different from that of (10).

4.2. Unknown d-Variate Parametric Model

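Let us now consider the more realistic and practical semiparametric estimator $\widehat{f}_n$ presented in (16) of f in (15), where the parametric estimator $\widehat{\boldsymbol{\theta}}_n$ of $\boldsymbol{\theta}$ can be obtained by the maximum likelihood method; see [19] for a quite general estimator of $\boldsymbol{\theta}$. In fact, if the d-variate parametric model $p_d(\cdot;\boldsymbol{\theta})$ is misspecified, then $\widehat{\boldsymbol{\theta}}_n$ converges in probability to the pseudotrue value $\boldsymbol{\theta}_0$ satisfying

$$\boldsymbol{\theta}_0:=\arg\min_{\boldsymbol{\theta}}\int_{\mathbb{T}_d^+}f(\mathbf{x})\log\frac{f(\mathbf{x})}{p_d(\mathbf{x};\boldsymbol{\theta})}\,\nu(d\mathbf{x})$$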
from the Kullback–Leibler divergence (see, e.g., [55]).
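As a worked example of this pseudotrue value (not taken from the original text), consider the uncorrelated exponential start $\mathcal{E}_d(\boldsymbol{\mu},\mathbf{I}_d)$ used in Section 5. It is written here in its mean parametrization, assuming $\mathbb{E}_f X_j<\infty$ for $j=1,\ldots,d$. Minimizing the Kullback–Leibler divergence coordinate by coordinate gives

$$
\begin{aligned}
p_d(\mathbf{x};\boldsymbol{\mu})&=\prod_{j=1}^{d}\frac{1}{\mu_j}\exp\!\left(-\frac{x_j}{\mu_j}\right),\qquad
\int_{\mathbb{T}_d^+}f\log\frac{f}{p_d(\cdot;\boldsymbol{\mu})}\,d\nu=\int_{\mathbb{T}_d^+}f\log f\,d\nu+\sum_{j=1}^{d}\left(\log\mu_j+\frac{\mathbb{E}_f X_j}{\mu_j}\right),\\
\frac{\partial}{\partial\mu_j}\left(\log\mu_j+\frac{\mathbb{E}_f X_j}{\mu_j}\right)&=\frac{1}{\mu_j}-\frac{\mathbb{E}_f X_j}{\mu_j^{2}}=0
\;\Longleftrightarrow\;\mu_{0,j}=\mathbb{E}_f X_j,\qquad j=1,\ldots,d.
\end{aligned}
$$

Hence the pseudotrue mean vector is the true mean vector $\mathbb{E}_f\mathbf{X}$. The maximum likelihood estimator $\widehat{\boldsymbol{\mu}}_n=\overline{\mathbf{X}}_n$ converges to it by the law of large numbers, even when f is not exponential.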

Let us write $p_0(\cdot):=p_d(\cdot;\boldsymbol{\theta}_0)$ for this best d-variate parametric approximant; unlike in (18), however, $\boldsymbol{\theta}_0$ is not explicitly available. According to [19] (see also [20]), we can represent the proposed estimator in (16) as

Thus, the following result provides the approximate bias and variance. We omit its proof since it is analogous to that of Proposition 3.

Proposition 4.

Let $p_0(\cdot):=p_d(\cdot;\boldsymbol{\theta}_0)$ be the best d-variate approximant of the unknown pdmf f as in (15) under the Kullback–Leibler criterion, and let $w_0:=f/p_0$ be the corresponding d-variate weight function. As $n\to\infty$ and under the assumption (a1) on f, the estimator $\widehat{f}_n$ in (16) of f, reformulated in (19), satisfies

for any . Furthermore, if (a2) holds then we have of (11).

4.3. Model Diagnostics

The estimated weight function $\widehat{w}_n(\cdot;\widehat{\boldsymbol{\theta}}_n)$ given in (17) provides useful information for model diagnostics. The d-variate weight function $w(\cdot)$ is equal to one if the d-variate parametric start model $p_d(\cdot;\boldsymbol{\theta}_0)$ is indeed the true pdmf. Hjort and Glad [19] proposed to check this adequacy by examining a plot of the weight function for various potential models, with pointwise confidence bands, to see whether or not $w(\cdot)=1$ is reasonable. See also [20,21] for univariate count setups.

In fact, without technical details here we use the model diagnostics for verifying the adequacy of the model by examining a plot of or

for all , , with a pointwise confidence band of for large n; that is to see how far away it is from zero. More precisely, for instance, is for pure nonparametric, it belongs to for semiparametric, and it is for full parametric models. It is noteworthy that the retention of pure nonparametric means the inconvenience of parametric part considered in this approach; hence, the orthant dataset is left free.

5. Semicontinuous Examples of Application with Discussions

For a practical implementation of our approach, we propose to use the popular multiple gamma kernels as (9), with the adaptive Bayesian bandwidth procedure of [13], to smooth $\widehat{w}_n$. Hence, we shall progressively consider d-variate semicontinuous cases with $d\in\{1,2,3\}$ for real datasets. All computations and graphics have been done with the R software [56].

5.1. Adaptive Bayesian Bandwidth Selection for Multiple Gamma Kernels

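From Table 1, the function $GA_{x,h}(\cdot)$ is the gamma kernel [43], given on the support $\mathbb{S}_{x,h}=[0,\infty)$ with $x\geq0$ and $h>0$:

$$GA_{x,h}(u)=\frac{u^{x/h}}{\Gamma(1+x/h)\,h^{1+x/h}}\exp\!\left(-\frac{u}{h}\right)\mathbb{1}_{[0,\infty)}(u),$$

where $\mathbb{1}_E$ denotes the indicator function of any given event E. This gamma kernel is the pdf of the gamma distribution, denoted by $\mathcal{G}(1+x/h,h)$, with shape parameter $1+x/h$ and scale parameter h. The multiple gamma kernel from (9) is written as $K_{\mathbf{x},\mathbf{H}}(\mathbf{u})=\prod_{j=1}^{d}GA_{x_j,h_j}(u_j)$ with $\mathbf{H}=\mathbf{Diag}_d(h_j)$.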

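To apply (13) and (14) in the framework of the semiparametric estimator in (16), we assume that each component $h_{i\ell}$, $\ell=1,\ldots,d$, of $\mathbf{h}_i=(h_{i1},\ldots,h_{id})^\top$ has the univariate inverse gamma prior distribution $\mathcal{IG}(\alpha,\beta_\ell)$. All components share the same shape parameter $\alpha$ and may have different scale parameters $\beta_\ell$, with $\boldsymbol{\beta}=(\beta_1,\ldots,\beta_d)^\top$. We recall here that the pdf of $\mathcal{IG}(\alpha,\beta_\ell)$ with $\alpha,\beta_\ell>0$ is defined by

$$\mathcal{IG}_{\alpha,\beta_\ell}(u)=\frac{\beta_\ell^{\alpha}}{\Gamma(\alpha)}\,u^{-\alpha-1}\exp\!\left(-\frac{\beta_\ell}{u}\right)\mathbb{1}_{(0,\infty)}(u). \qquad (21)$$

The mean and the variance of the prior distribution (21) for each component $h_{i\ell}$ of the vector $\mathbf{h}_i$ are given by $\beta_\ell/(\alpha-1)$ for $\alpha>1$ and $\beta_\ell^{2}/\{(\alpha-1)^2(\alpha-2)\}$ for $\alpha>2$, respectively. Note that, for fixed $\beta_\ell>0$, $\ell=1,\ldots,d$, the distribution of the bandwidth vector $\mathbf{h}_i$ concentrates around the null vector $\mathbf{0}$ as $\alpha\to\infty$.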

From those considerations, the closed form of the posterior density and the Bayesian estimator of are given in the following proposition which is proven in Appendix B.

Proposition 5.

For fixed , consider each observation with its corresponding of univariate bandwidths and defining the subset and its complementary set . Using the inverse gamma prior of (21) for each component of in the multiple gamma estimator with and , then:

(i) the posterior density is the following weighted sum of inverse gamma densities

with , , , and ;

(ii) under the quadratic loss function, the Bayesian estimator of in (16) is

for with the previous notations of , , et .

Following Somé and Kokonendji [13] for nonparametric approach, we have to select the prior parameters and of the multiple inverse gamma of in (21) as follows: and , , to obtain the convergence of the variable bandwidths to zero with a rate close to that of an optimal bandwidth. For practical use, we here consider each .
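The following minimal Python sketch approximates the adaptive Bayesian bandwidth (13) numerically in the univariate case $d=1$, instead of using the closed-form posterior of Proposition 5. It uses the gamma kernel and the inverse gamma prior (21). It is an illustration under assumptions, not the authors' implementation:

- The posterior is truncated to a midpoint grid on (0, h_max].
- The values alpha = 3 and beta = 1 are purely illustrative; they are not the prior parameters selected above.
- The optional `weights` argument (a hypothetical name) turns the leave-one-out likelihood into its semiparametric counterpart. It does so by dividing each kernel term by the fitted start density $p(X_j;\widehat{\theta}_n)$; the factor $p(X_i;\widehat{\theta}_n)$ does not depend on h and cancels in the posterior.

```python
import math
from typing import List, Optional, Sequence

def gamma_kernel(x: float, h: float, t: float) -> float:
    """Pdf of the gamma law with shape 1 + x/h and scale h, at t >= 0."""
    shape = 1.0 + x / h
    if t == 0.0:
        return 1.0 / h if x == 0.0 else 0.0
    return math.exp((shape - 1.0) * math.log(t) - t / h
                    - math.lgamma(shape) - shape * math.log(h))

def log_inverse_gamma_pdf(h: float, alpha: float, beta: float) -> float:
    """Log-density of the inverse gamma prior IG(alpha, beta) at h > 0, cf. (21)."""
    return (alpha * math.log(beta) - math.lgamma(alpha)
            - (alpha + 1.0) * math.log(h) - beta / h)

def adaptive_bayes_bandwidths(data: Sequence[float], alpha: float, beta: float,
                              weights: Optional[Sequence[float]] = None,
                              h_max: float = 2.0, m: int = 400) -> List[float]:
    """Posterior mean of h_i given X_i, as in (13), by a midpoint rule on (0, h_max].

    The likelihood of h is the leave-one-out estimate (14) at X_i, up to a constant.
    With weights[j] = 1 / p(X_j; theta_hat) it becomes the semiparametric version.
    """
    n = len(data)
    w = [1.0] * n if weights is None else list(weights)
    grid = [(k + 0.5) * h_max / m for k in range(m)]
    bandwidths = []
    for i, xi in enumerate(data):
        log_post = []
        for h in grid:
            lik = sum(w[j] * gamma_kernel(xi, h, data[j]) for j in range(n) if j != i)
            log_post.append(log_inverse_gamma_pdf(h, alpha, beta) + math.log(lik)
                            if lik > 0.0 else -math.inf)
        top = max(log_post)  # rescale before exponentiating to avoid underflow
        post = [math.exp(v - top) for v in log_post]
        bandwidths.append(sum(h * p for h, p in zip(grid, post)) / sum(post))
    return bandwidths

times = [0.4, 1.1, 1.3, 2.0, 2.7, 3.5, 5.2]
print([round(h, 3) for h in adaptive_bayes_bandwidths(times, alpha=3.0, beta=1.0)])
```

Numerical quadrature is slower than the closed form of Proposition 5. It is nonetheless a convenient way to check such a formula on small samples, since both target the same posterior mean, up to the truncation at h_max.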

5.2. Semicontinuous Datasets

The numerical illustrations are carried out on the dataset of Table 2, which was recently used in [13] for a nonparametric approach, and only in the trivariate setup, as semicontinuous data. It concerns three measurements of drinking water pumps installed in the Sahel. The first variable $X_1$ represents the failure times (in months) and was also recently used by Touré et al. [24]. The second variable $X_2$ refers to the distance (in kilometers) between each water pump and the repair center in the Sahel, while the third one, $X_3$, stands for the average volume (in m³) of water per day.

Table 2.

Drinking water pumps trivariate data measured in the Sahel with .

Table 3 displays all empirical univariate, bivariate and trivariate variation (6) and dispersion (3) indexes from Table 2. Hence, each , and is over-dispersed compared to the corresponding uncorrelated Poisson distribution. However, only (resp. ) can be considered as a bivariate equi-variation (resp. univariate over-variation) with respect to the corresponding uncorrelated exponential distribution; and, other , and are under-varied. In fact, we just compute dispersion indexes for curiosity since all values in Table 2 are positive integers; and, we here now omit the counting point of view in the remainder of the analysis.

Thus, we progressively investigate semiparametric approaches for the three univariate datasets, the three bivariate ones and the single trivariate one from Table 2.

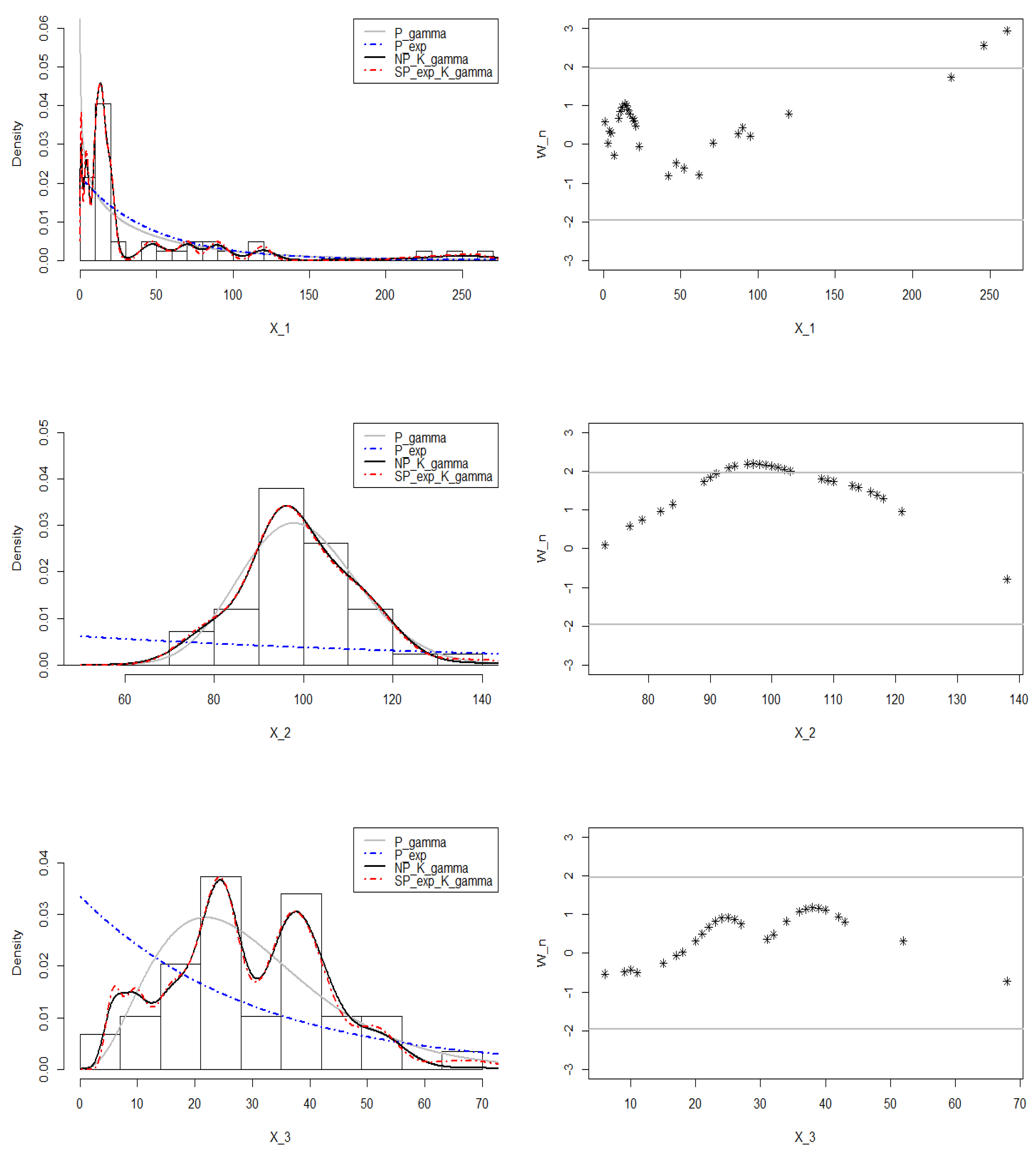

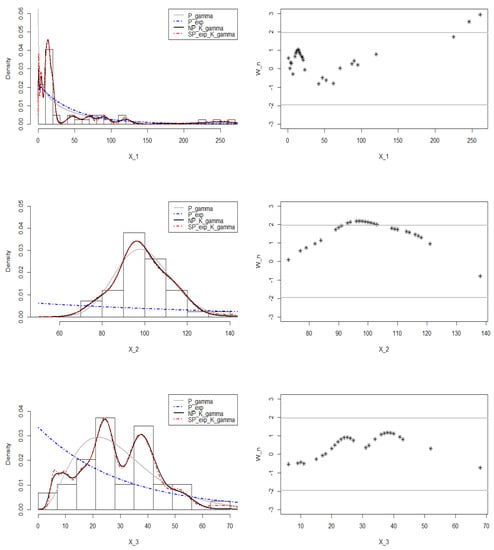

5.3. Univariate Examples

For each univariate semicontinuous dataset $X_j$, $j=1,2,3$, we have already computed the GVI in Table 3. This allows us to consider our flexible semiparametric estimation with an exponential start in (16), using the adaptive Bayesian bandwidth in the gamma kernel of Proposition 5. Hence, we deduce the corresponding diagnostic percent from (20) to decide on an appropriate approach. In addition, we first present the univariate nonparametric estimation with adaptive Bayesian bandwidth in the gamma kernel of [35]. We then propose another parametric estimation of $X_j$ by the standard gamma model with shape and scale parameters.

Table 4 transcribes parameter maximum likelihood estimates of exponential and gamma models with diagnostic percent from Table 2. Figure 3 exhibits histogram, , , exponential, gamma and diagnostic graphs for each univariate data . One can observe differences with the naked eye between and although they are very near and with the same pace. The diagnostic graphics lead to conclude to semiparametric approach for and to full parametric models for and slightly also for . Thus, we have suggested the gamma model with two parameters for improving the starting exponential model; see, e.g., Table 2 in [54], for alternative parametric models.

Table 4.

Parameter estimates of models and diagnostic percents of univariate datasets.

Figure 3.

Comparative graphs of estimates of , and with their corresponding diagnostics.

5.4. Bivariate and Trivariate Examples

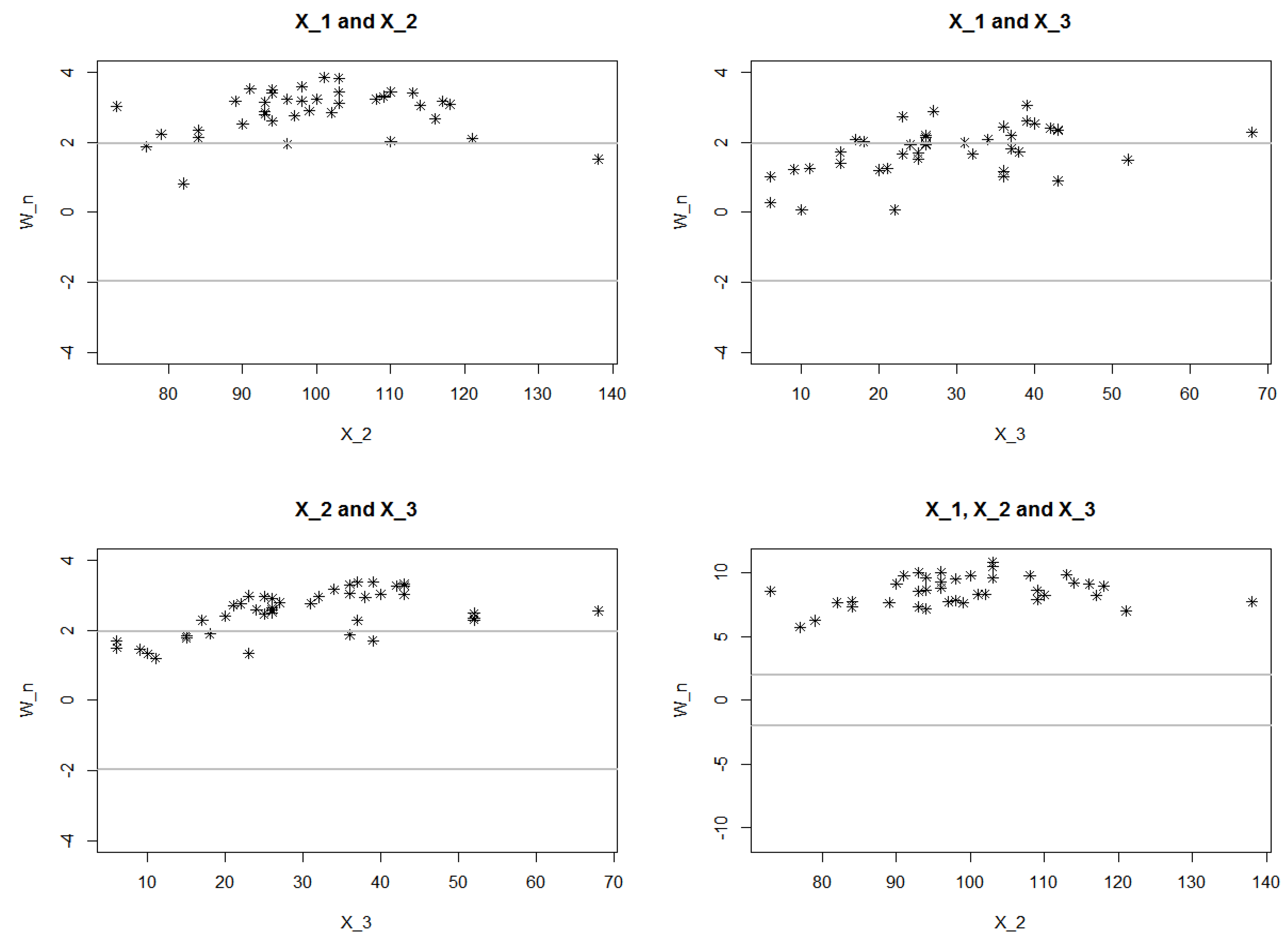

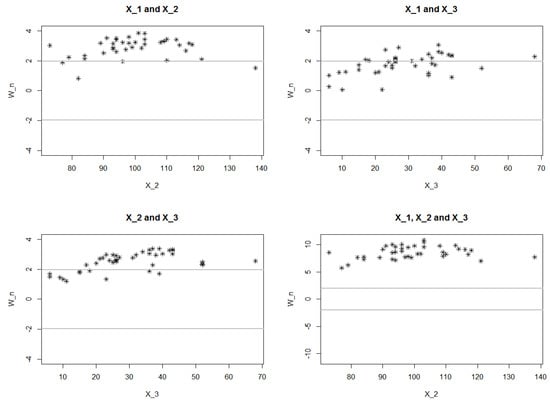

For the sake of flexibility and efficiency, we here analyze our proposed semiparametric estimation with an uncorrelated exponential start in (16), using the adaptive Bayesian bandwidth in the gamma kernel of Proposition 5. This concerns all bivariate and trivariate datasets from Table 2, whose GVI values are given in Table 3. All computation times are almost instantaneous.

Table 5 reports the main numerical results: correlations, MVI, parameter estimates and diagnostic percents from (20). We intentionally omit graphics in three or four dimensions; however, Figure 4 displays some representative projections of the diagnostic graphs. From Table 5, the cross empirical correlations are close to 0 and all MVI are smaller than 1, which allows us to consider uncorrelated exponential start-models. The maximum likelihood method is also used to estimate the parameters, giving the same results as in Table 4. Thus, the obtained diagnostic percents indicate semiparametric approaches for all bivariate datasets and the purely nonparametric method for the trivariate one; see [13] for more details on this nonparametric analysis. This progressive semiparametric analysis of the trivariate dataset of Table 2 shows the necessity of a suitable choice of the parametric start-models, which may take the correlation structure into account. Hence, retaining the purely nonparametric approach indicates that the parametric part used in the modeling is inadequate. Note that we could consider the Marshall–Olkin exponential distributions with nonnegative correlations as start-models, but they are singular. See Appendix A for a brief review.

Table 5.

Correlations, MVI, parameter estimates and diagnostic percents of bi- and trivariate cases.

Figure 4.

Univariate projections of diagnostic graphs for bivariate and trivariate models.

6. Concluding Remarks

In this paper, we have presented a flexible semiparametric approach for multivariate nonnegative orthant distributions. We first recalled the multivariate variability indexes GVI, MVI, RVI, GDI, MDI and RDI derived from RWI, as a prelude to the second-order discrimination of these parametric distributions. We then reviewed the nonparametric estimators based on multivariate associated kernels and made new proposals; e.g., Proposition 1 and Corollary 1. Effective adaptive and local Bayesian selectors of bandwidth matrices have been suggested for semicontinuous and counting data, respectively.

All these ingredients were finally used to develop the semiparametric modeling of multivariate nonnegative orthant distributions. Numerical illustrations have been carried out for univariate and multivariate semicontinuous datasets with uncorrelated exponential start-models, after examining GVI and MVI. The adaptive Bayesian bandwidth selection (13) in the multiple gamma kernel (Proposition 5) was used in these applications. Finally, the model diagnostics have played a very useful role in guiding the choice of the appropriate approach, even if that approach may need to be improved later.

In the meantime, Kokonendji et al. [37] proposed an in-depth practical analysis of multivariate count datasets, starting with multivariate (un)correlated Poisson models after reviewing GDI and RDI. They also established an analogue of our Proposition 5 for the local Bayesian bandwidth selection (12), using the multiple binomial kernels from Example 3. Among the many perspectives, one could consider the categorical setup with a local Bayesian version of the multivariate associated kernel of Aitchison and Aitken [38], from Example 1 of the univariate case.

At this stage, all the main foundations for working in a multivariate setup (variability indexes, associated kernels, Bayesian selectors and model diagnostics) are available. We just have to adapt them to each situation encountered. For instance, semiparametric regression modeling can be handled along these lines; see, e.g., Abdous et al. [57], devoted to counting explanatory variables, and [22]. Opportunities also open up for hazard rate functions (e.g., [51]). Other data types, such as categorical and mixed ones, can now realistically be considered in the near future.

Author Contributions

Conceptualization (C.C.K., S.M.S.); Formal analysis (C.C.K., S.M.S.); Investigation (C.C.K., S.M.S.); Methodology (C.C.K., S.M.S.); Software (C.C.K., S.M.S.); Supervision (C.C.K.); Writing—original draft preparation (C.C.K., S.M.S.); Writing—review and editing (C.C.K., S.M.S.). All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Acknowledgments

The authors are grateful to the Associate Editor and two anonymous referees for their constructive comments. We also sincerely thank Mohamed Elmi Assoweh for some interesting discussions.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| GDI | Generalized dispersion index |
| GVI | Generalized variation index |
| iid | Independent and identically distributed |
| MDI | Marginal dispersion index |
| MVI | Marginal variation index |
| pdf | Probability density function |
| pdmf | Probability density or mass function |
| pmf | Probability mass function |
| RDI | Relative dispersion index |
| RVI | Relative variation index |
| RWI | Relative variability index |

Appendix A. On Broader d-Variate Parametric Models and the Marshall–Olkin Exponential

According to Cuenin et al. [58], within their multivariate Tweedie models with flexible dependence structure, another way to define the d-variate Poisson and exponential distributions is given by and , respectively. The symmetric variation matrix is such that , the mean of the corresponding marginal distribution is , and the non-negative correlation terms satisfy

with . Their constructions are well defined, with parameters as in and . Moreover, the exact bounds of the correlation terms in (A1) are attained. Cuenin et al. [58] have described how to construct and simulate the negative correlation structure from the positive one of (A1) using the inversion method.

A negative correlation component is crucial for the under-variability phenomenon in a bivariate/multivariate positive orthant model. Figure A1 (right) plots a limit shape of any bivariate positive orthant distribution with very strong negative correlation (in red); unlike the upper bound () of positive correlation (in blue), it is not a diagonal line; see, e.g., [58] for details on both bivariate orthant (i.e., continuous and count) models. By contrast, Figure A1 (left) represents the classical lower () and upper () bounds of correlations on or on a finite support.
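The two limit shapes of Figure A1 (right) can be reproduced with the simplest, generic form of the inversion method. Feeding the same uniform U to both exponential quantile functions gives the maximal positive dependence. Feeding U and 1 − U gives the maximal negative dependence. The Python sketch below uses our own function names. It shows the textbook Fréchet–Hoeffding construction and is not necessarily the exact algorithm of Cuenin et al. [58]. It illustrates that, for exponential margins, the negative bound is a curve with correlation 1 − π²/6 ≈ −0.645 rather than −1.

```python
import math
import random
from typing import List, Sequence, Tuple


def exp_quantile(u: float, mean: float) -> float:
    """Inverse cdf of the exponential distribution with the given mean, u in [0, 1)."""
    return -mean * math.log1p(-u)


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Sample Pearson correlation."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    sxx = sum((a - mx) ** 2 for a in xs)
    syy = sum((b - my) ** 2 for b in ys)
    return sxy / math.sqrt(sxx * syy)


def extreme_exponential_pairs(n: int, mean1: float, mean2: float, seed: int = 0
                              ) -> Tuple[List[float], List[float], List[float]]:
    """Return (Y1, comonotone Y2, countermonotone Y2) built from common uniforms."""
    rng = random.Random(seed)
    us: List[float] = []
    while len(us) < n:
        u = rng.random()
        if u > 0.0:  # keeps 1 - u strictly below 1, so the quantile stays finite
            us.append(u)
    y1 = [exp_quantile(u, mean1) for u in us]
    y2_upper = [exp_quantile(u, mean2) for u in us]        # upper bound (blue)
    y2_lower = [exp_quantile(1.0 - u, mean2) for u in us]  # lower bound (red)
    return y1, y2_upper, y2_lower


if __name__ == "__main__":
    y1, up, low = extreme_exponential_pairs(100_000, 2.0, 5.0, seed=7)
    print("maximal positive correlation:", pearson(y1, up))
    print("maximal negative correlation:", pearson(y1, low),
          "vs 1 - pi^2/6 =", 1 - math.pi ** 2 / 6)
```

With equal means, the comonotone pairs fall exactly on the diagonal. The countermonotone pairs trace the convex curve (1 − e^{−y₁})… that is, they lie on y₂ = −m₂ log(1 − e^{−y₁/m₁}), which never reaches a correlation of −1.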

Figure A1.

Support of bivariate distributions with maximum correlations (positive in blue and negative in red): model on (left) and also finite support; model on (right), without finite support.

The d-variate exponential of Marshall and Olkin [59] is built as follows. Let and Z be univariate exponential random variables with parameters and , respectively. Then, by setting for , one has and for all . Each correlation lies in if and only if . From its survival (or reliability) function

its pdf can be written as

This distribution is not absolutely continuous with respect to the Lebesgue measure on and has singularities corresponding to the cases where two or more of the ’s are equal. Karlis [60] has proposed maximum likelihood estimation of the parameters via an EM algorithm. Finally, Kokonendji et al. [16] have calculated

and

Hence, the Marshall–Olkin exponential model is always under-varied with respect to MVI and over- or equi-varied with respect to GVI. If , then reduces to the uncorrelated with . However, the assumption of non-negative correlations between components is unrealistic in some analyses.
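The common-shock construction is easy to simulate, and the simulation makes the singular part of the model visible: Y_i and Y_j coincide exactly whenever the shock Z occurs first. The Python sketch below parameterizes each exponential by its rate. This is an assumption, because the parameter symbols are not displayed here. With X_j of rate λ_j and Z of rate λ_0, each margin Y_j is exponential with rate λ_j + λ_0. For the pair (i, j), both P(Y_i = Y_j) and the correlation equal λ_0/(λ_0 + λ_i + λ_j), which is the classical bivariate Marshall–Olkin value. The function names are ours.

```python
import math
import random
from typing import List, Sequence, Tuple


def marshall_olkin_sample(rates: Sequence[float], shock_rate: float, n: int,
                          seed: int = 0) -> List[Tuple[float, ...]]:
    """Draw n vectors Y = (min(X_1, Z), ..., min(X_d, Z)), where the X_j ~ Exp(rates[j])
    and the common shock Z ~ Exp(shock_rate) are mutually independent."""
    rng = random.Random(seed)
    sample = []
    for _ in range(n):
        z = rng.expovariate(shock_rate)
        sample.append(tuple(min(rng.expovariate(r), z) for r in rates))
    return sample


def tie_frequency(sample: List[Tuple[float, ...]], i: int, j: int) -> float:
    """Proportion of draws with Y_i == Y_j exactly (the singular component)."""
    return sum(1 for y in sample if y[i] == y[j]) / len(sample)


def correlation(sample: List[Tuple[float, ...]], i: int, j: int) -> float:
    """Sample Pearson correlation between components i and j."""
    xs = [y[i] for y in sample]
    ys = [y[j] for y in sample]
    n = len(sample)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    sxx = sum((a - mx) ** 2 for a in xs)
    syy = sum((b - my) ** 2 for b in ys)
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    rates, shock = [1.0, 2.0, 0.5], 1.5
    ys = marshall_olkin_sample(rates, shock, 50_000, seed=3)
    theory = shock / (shock + rates[0] + rates[1])
    print("P(Y1 = Y2):", tie_frequency(ys, 0, 1), "theory:", theory)
    print("corr(Y1, Y2):", correlation(ys, 0, 1), "theory:", theory)
```

The positive tie frequency is exactly the mass that the joint distribution puts on the hyperplanes {y_i = y_j}. This is why the model has no density with respect to the Lebesgue measure, and why it is awkward as a start-model.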

Appendix B. Proofs of Proposition 2, Proposition 3 and Proposition 5

Proof of Proposition 2.

From Definition 1, we get (see also [10] for more details)

Next, using (A2), by a Taylor expansion of the function over the points and , we get

where is uniform in a neighborhood of . Therefore, taking the expectation in both sides of (A3) and then substituting the result in (A2), we get

where is uniform in a neighborhood of . A second Taylor expansion of the function over the points and then yields the bias (10).

As for the variance term, since f is bounded, we have . It follows that:

□

Proof of Proposition 3.

Since one has and , it is enough to calculate and following Proposition 2 applied to for all .

Indeed, one successively has

which leads to the announced result of . As for , one also writes

and the desired result of is therefore deduced. □

Proof of Proposition 5.

We have to adapt Theorem 2.1 of Somé and Kokonendji [13] to this semiparametric estimator in (16). First, the leave-one-out associated kernel estimator (14) becomes

Then, the posterior distribution deduced from (13) is expressed as

which leads to the result of Part (i) via Theorem 2.1 (i) in [13] (see there for details). Consequently, we similarly deduce the adaptive Bayesian estimator of Part (ii). □
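Proposition 5 gives the adaptive Bayesian bandwidths in closed form. The posterior mean of each bandwidth h_i can also be approximated numerically, which gives a cross-check that does not depend on that closed form. The univariate Python sketch below makes several assumptions: an inverse-gamma prior IG(a, b) on h_i, a gamma kernel and an exponential start. It evaluates ∫ h π(h | X_i) dh on a logarithmic grid, using the leave-one-out semiparametric estimate at X_i as the likelihood. The hyperparameters a and b, the grid limits and all function names are hypothetical choices. Because the factors p(X_i; θ_hat) and 1/(n − 1) of the leave-one-out estimate do not depend on h_i, they cancel in the posterior and are omitted.

```python
import math
import random
from typing import List, Sequence


def gamma_kernel(x: float, h: float, t: float) -> float:
    """Chen's gamma kernel K_{x,h}(t): gamma density, shape 1 + x/h, scale h."""
    shape = 1.0 + x / h
    if t == 0.0:
        return 1.0 / h if x == 0.0 else 0.0
    return math.exp((shape - 1.0) * math.log(t) - t / h
                    - math.lgamma(shape) - shape * math.log(h))


def loo_weight_sum(i: int, h: float, data: Sequence[float], rate: float) -> float:
    """Sum over j != i of K_{X_i,h}(X_j) / p(X_j): the leave-one-out
    semiparametric estimate at X_i, up to factors that do not depend on h."""
    xi = data[i]
    return sum(gamma_kernel(xi, h, xj) / (rate * math.exp(-rate * xj))
               for j, xj in enumerate(data) if j != i)


def log_inverse_gamma(h: float, a: float, b: float) -> float:
    """Log density of the inverse-gamma prior IG(a, b) at h > 0."""
    return a * math.log(b) - math.lgamma(a) - (a + 1.0) * math.log(h) - b / h


def adaptive_bayes_bandwidths(data: Sequence[float], a: float = 2.0, b: float = 0.1,
                              h_min: float = 1e-3, h_max: float = 2.0,
                              grid_size: int = 120) -> List[float]:
    """Posterior-mean bandwidth for every observation, by trapezoidal quadrature
    in u = log h on the truncated range [h_min, h_max] (so dh = h du)."""
    rate = len(data) / sum(data)  # MLE of the exponential start
    step = (math.log(h_max) - math.log(h_min)) / (grid_size - 1)
    grid = [h_min * math.exp(k * step) for k in range(grid_size)]
    weights = [0.5 if k in (0, grid_size - 1) else 1.0 for k in range(grid_size)]
    prior = [math.exp(log_inverse_gamma(h, a, b)) for h in grid]
    bandwidths = []
    for i in range(len(data)):
        post = [w * loo_weight_sum(i, h, data, rate) * p * h
                for w, h, p in zip(weights, grid, prior)]
        total = sum(post)
        if total > 0.0:
            bandwidths.append(sum(q * h for q, h in zip(post, grid)) / total)
        else:  # all grid values underflowed: fall back to the prior mean (a > 1)
            bandwidths.append(b / (a - 1.0))
    return bandwidths


if __name__ == "__main__":
    random.seed(5)
    xs = [random.expovariate(1.0) for _ in range(30)]
    print(adaptive_bayes_bandwidths(xs)[:5])
```

The cost grows as n² times the grid size, so this check is only practical for small samples. A closed form such as the one in Proposition 5 avoids that cost entirely.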

References

1. Somé, S.M.; Kokonendji, C.C.; Ibrahim, M. Associated kernel discriminant analysis for multivariate mixed data. Electr. J. Appl. Statist. Anal. 2016, 9, 385–399.

2. Libengué Dobélé-Kpoka, F.G.B. Méthode Non-Paramétrique par Noyaux Associés Mixtes et Applications. Ph.D. Thesis, Université de Franche-Comté, Besançon, France, 2013.

3. Johnson, N.L.; Kotz, S.; Balakrishnan, N. Discrete Multivariate Distributions; Wiley: New York, NY, USA, 1997.

4. Kotz, S.; Balakrishnan, N.; Johnson, N.L. Continuous Multivariate Distributions: Models and Applications, 2nd ed.; Wiley: New York, NY, USA, 2000.

5. Balakrishnan, N.; Lai, C.-D. Continuous Bivariate Distributions, 2nd ed.; Springer: New York, NY, USA, 2009.

6. Belaid, N.; Adjabi, S.; Kokonendji, C.C.; Zougab, N. Bayesian local bandwidth selector in multivariate associated kernel estimator for joint probability mass functions. J. Statist. Comput. Simul. 2016, 86, 3667–3681.

7. Belaid, N.; Adjabi, S.; Kokonendji, C.C.; Zougab, N. Bayesian adaptive bandwidth selector for multivariate discrete kernel estimator. Commun. Statist. Theory Meth. 2018, 47, 2988–3001.

8. Funke, B.; Kawka, R. Nonparametric density estimation for multivariate bounded data using two non-negative multiplicative bias correction methods. Comput. Statist. Data Anal. 2015, 92, 148–162.

9. Hirukawa, M. Asymmetric Kernel Smoothing: Theory and Applications in Economics and Finance; Springer: Singapore, 2018.

10. Kokonendji, C.C.; Somé, S.M. On multivariate associated kernels to estimate general density functions. J. Korean Statist. Soc. 2018, 47, 112–126.

11. Ouimet, F. Density estimation using Dirichlet kernels. arXiv 2020, arXiv:2002.06956v2.

12. Somé, S.M.; Kokonendji, C.C. Effects of associated kernels in nonparametric multiple regressions. J. Statist. Theory Pract. 2016, 10, 456–471.

13. Somé, S.M.; Kokonendji, C.C. Bayesian selector of adaptive bandwidth for multivariate gamma kernel estimator on [0,∞)^d. J. Appl. Statist. 2021, forthcoming.

14. Zougab, N.; Adjabi, S.; Kokonendji, C.C. Comparison study to bandwidth selection in binomial kernel estimation using Bayesian approaches. J. Statist. Theory Pract. 2016, 10, 133–153.

15. Zougab, N.; Harfouche, L.; Ziane, Y.; Adjabi, S. Multivariate generalized Birnbaum–Saunders kernel density estimators. Commun. Statist. Theory Meth. 2018, 47, 4534–4555.

16. Kokonendji, C.C.; Touré, A.Y.; Sawadogo, A. Relative variation indexes for multivariate continuous distributions on [0,∞)^k and extensions. AStA Adv. Statist. Anal. 2020, 104, 285–307.

17. Kokonendji, C.C.; Puig, P. Fisher dispersion index for multivariate count distributions: A review and a new proposal. J. Multiv. Anal. 2018, 165, 180–193.

18. Weiß, C.H. On some measures of ordinal variation. J. Appl. Statist. 2019, 46, 2905–2926.

19. Hjort, N.L.; Glad, I.K. Nonparametric density estimation with a parametric start. Ann. Statist. 1995, 23, 882–904.

20. Kokonendji, C.C.; Senga Kiessé, T.; Balakrishnan, N. Semiparametric estimation for count data through weighted distributions. J. Statist. Plann. Infer. 2009, 139, 3625–3638.

21. Kokonendji, C.C.; Zougab, N.; Senga Kiessé, T. Poisson-weighted estimation by discrete kernel with application to radiation biodosimetry. In Biomedical Big Data & Statistics for Low Dose Radiation Research: Extended Abstracts Fall 2015; Ainsbury, E.A., Calle, M.L., Cardis, E., Einbeck, J., Gómez, G., Puig, P., Eds.; Springer Birkhäuser: Basel, Switzerland, 2017; pp. 115–120.

22. Senga Kiessé, T.; Zougab, N.; Kokonendji, C.C. Bayesian estimation of bandwidth in semiparametric kernel estimation of unknown probability mass and regression functions of count data. Comput. Statist. 2016, 31, 189–206.

23. Johnson, R.A.; Wichern, D.W. Applied Multivariate Statistical Analysis, 6th ed.; Pearson Prentice Hall: New Jersey, NJ, USA, 2007.

24. Touré, A.Y.; Dossou-Gbété, S.; Kokonendji, C.C. Asymptotic normality of the test statistics for the unified relative dispersion and relative variation indexes. J. Appl. Statist. 2020, 47, 2479–2491.

25. Touré, A.Y.; Kokonendji, C.C. Count and Continuous Generalized Variability Indexes; The R Package GWI, R Foundation for Statistical Computing: Vienna, Austria, 2021.

26. Arnold, B.C.; Manjunath, B.G. Statistical inference for distributions with one Poisson conditional. arXiv 2020, arXiv:2009.01296.

27. Abid, R.; Kokonendji, C.C.; Masmoudi, A. Geometric Tweedie regression models for continuous and semicontinuous data with variation phenomenon. AStA Adv. Statist. Anal. 2020, 104, 33–58.

28. Kokonendji, C.C.; Senga Kiessé, T. Discrete associated kernels method and extensions. Statist. Methodol. 2011, 8, 497–516.

29. Scott, D.W. Multivariate Density Estimation: Theory, Practice, and Visualization; Wiley: New York, NY, USA, 1992.

30. Silverman, B.W. Density Estimation for Statistics and Data Analysis; Chapman and Hall: London, UK, 1986.

31. Zougab, N.; Adjabi, S.; Kokonendji, C.C. Bayesian estimation of adaptive bandwidth matrices in multivariate kernel density estimation. Comput. Statist. Data Anal. 2014, 75, 28–38.

32. Kokonendji, C.C.; Libengué Dobélé-Kpoka, F.G.B. Asymptotic results for continuous associated kernel estimators of density functions. Afr. Diaspora J. Math. 2018, 21, 87–97.

33. Kokonendji, C.C.; Senga Kiessé, T.; Zocchi, S.S. Discrete triangular distributions and non-parametric estimation for probability mass function. J. Nonparam. Statist. 2007, 19, 241–254.

34. Wansouwé, W.E.; Somé, S.M.; Kokonendji, C.C. Ake: An R package for discrete and continuous associated kernel estimations. R J. 2016, 8, 259–276.

35. Somé, S.M. Bayesian selector of adaptive bandwidth for gamma kernel density estimator on [0,∞). Commun. Statist. Simul. Comput. 2021, forthcoming.

36. Ziane, Y.; Zougab, N.; Adjabi, S. Adaptive Bayesian bandwidth selection in asymmetric kernel density estimation for nonnegative heavy-tailed data. J. Appl. Statist. 2015, 42, 1645–1658.

37. Kokonendji, C.C.; Belaid, N.; Abid, R.; Adjabi, S. Flexible semiparametric kernel estimation of multivariate count distribution with Bayesian bandwidth selection. Statist. Meth. Appl. 2021, forthcoming.

38. Aitchison, J.; Aitken, C.G.G. Multivariate binary discrimination by the kernel method. Biometrika 1976, 63, 413–420.

39. Kokonendji, C.C.; Zocchi, S.S. Extensions of discrete triangular distribution and boundary bias in kernel estimation for discrete functions. Statist. Probab. Lett. 2010, 80, 1655–1662.

40. Huang, A.; Sippel, L.; Fung, T. A consistent second-order discrete kernel smoother. arXiv 2020, arXiv:2010.03302.

41. Libengué Dobélé-Kpoka, F.G.B.; Kokonendji, C.C. The mode-dispersion approach for constructing continuous associated kernels. Afr. Statist. 2017, 12, 1417–1446.

42. Huang, A. On arbitrarily underdispersed Conway-Maxwell-Poisson distributions. arXiv 2020, arXiv:2011.07503.

43. Chen, S.X. Probability density function estimation using gamma kernels. Ann. Inst. Statist. Math. 2000, 52, 471–480.

44. Hirukawa, M.; Sakudo, M. Family of the generalised gamma kernels: A generator of asymmetric kernels for nonnegative data. J. Nonparam. Statist. 2015, 27, 41–63.

45. Mousa, A.M.; Hassan, M.K.; Fathi, A. A new non parametric estimator for Pdf based on inverse gamma distribution. Commun. Statist. Theory Meth. 2016, 45, 7002–7010.

46. Scaillet, O. Density estimation using inverse and reciprocal inverse Gaussian kernels. J. Nonparam. Statist. 2004, 16, 217–226.

47. Igarashi, G.; Kakizawa, Y. Re-formulation of inverse Gaussian, reciprocal inverse Gaussian, and Birnbaum-Saunders kernel estimators. Statist. Probab. Lett. 2014, 84, 235–246.

48. Jin, X.; Kawczak, J. Birnbaum-Saunders and lognormal kernel estimators for modelling durations in high frequency financial data. Ann. Econ. Financ. 2003, 4, 103–124.

49. Marchant, C.; Bertin, K.; Leiva, V.; Saulo, H. Generalized Birnbaum–Saunders kernel density estimators and an analysis of financial data. Comput. Statist. Data Anal. 2013, 63, 1–15.

50. Mombeni, H.A.; Masouri, B.; Akhoond, M.R. Asymmetric kernels for boundary modification in distribution function estimation. REVSTAT 2019, forthcoming.

51. Salha, R.B.; Ahmed, H.I.E.S.; Alhoubi, I.M. Hazard rate function estimation using Weibull kernel. Open J. Statist. 2014, 4, 650–661.

52. Erçelik, E.; Nadar, M. A new kernel estimator based on scaled inverse chi-squared density function. Am. J. Math. Manag. Sci. 2020, forthcoming.

53. Lafaye de Micheaux, P.; Ouimet, F. A study of seven asymmetric kernels for the estimation of cumulative distribution functions. arXiv 2020, arXiv:2011.14893.

54. Kokonendji, C.C.; Touré, A.Y.; Abid, R. On general exponential weight functions and variation phenomenon. Sankhyā A 2020, forthcoming.

55. White, H. Maximum likelihood estimation of misspecified models. Econometrica 1982, 50, 1–26.

56. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2020; Available online: http://cran.r-project.org/ (accessed on 28 January 2021).

57. Abdous, B.; Kokonendji, C.C.; Senga Kiessé, T. Semiparametric regression for count explanatory variables. J. Statist. Plann. Inference 2012, 142, 1537–1548.

58. Cuenin, J.; Jørgensen, B.; Kokonendji, C.C. Simulations of full multivariate Tweedie with flexible dependence structure. Comput. Statist. 2016, 31, 1477–1492.

59. Marshall, A.W.; Olkin, I. A multivariate exponential distribution. J. Amer. Statist. Assoc. 1967, 62, 30–44.

60. Karlis, D. ML estimation for multivariate shock models via an EM algorithm. Ann. Inst. Statist. Math. 2003, 55, 817–830.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).