1. Introduction

Functional data analysis (FDA) continues to be an active and growing area of research as measurements from continuous processes become increasingly prevalent in many fields. Such data are called functional data because they can be viewed as samples from curves. Recent key references in FDA include those of Hsing and Eubank [1] and Kokoszka and Reimherr [2]. Consider the following linear model, in which the response is a scalar but the explanatory variable is a function:

$$y_i = \alpha + \int_0^1 x_i(t)\,\beta(t)\,dt + \epsilon_i, \tag{1}$$

for $i = 1, \dots, n$. The response $y_i$ is a scalar value, $x_i(t)$ is a function, $\alpha$ is the intercept, $\beta(t)$ is a parameter curve, and $\epsilon_i$ is uncorrelated random noise with zero mean and constant variance $\sigma^2$. Model (1) is also referred to as a scalar-on-function regression model. Here, we assume $t \in [0,1]$, but note that any closed interval $\mathcal{T}$ in $\mathbb{R}$ can be transformed to $[0,1]$. Further, we assume that the covariate functions are known.

The objective for model (1) is to estimate the smooth parameter curve, $\beta(t)$. The parameter curve quantifies the relationship between the scalar response and a functional explanatory variable in the presence of uncertainty. A review of some common approaches for estimating $\beta(t)$ is provided in [3], and these approaches depend on some variant of a tuning parameter (smoothing parameter, number of knots, bandwidth, number of retained principal components, etc.), where the size of the tuning parameter controls the trade-off between the goodness-of-fit and the smoothness of the parameter curve estimate. Regarding inference for model (1), some recently proposed methodologies include a goodness-of-fit test [4] and a test of linearity in a functional linear model (FLM) with a scalar response [5]. Tekbudak et al. [6] provided a comparison of these and other recent testing procedures for linearity in a FLM with a scalar response. In influence diagnostics, Cook's distance [7] and Peña's distance [8] have been extended to a FLM with a scalar response [9].

In this paper, our aim is to quantify the amount of information present in the covariate functions for estimating the parameter curve when $\beta(t)$ is identifiable, and to assess the influence of the amount of this information on the numerical stability of the parameter curve estimator. In addition, we review the performance of different smoothing parameter selection methods based on the amount of information present in the covariate functions for estimating $\beta(t)$. To our knowledge, no study has explicitly focused on this aspect of model (1). Here, the concept of numerical stability (referred to simply as stability hereafter) is tied to the idea that the parameter curve estimate will not substantially change when the set of covariate functions is slightly altered. In Section 2, we define a measure, denoted $\mathcal{N}$, to quantify the amount of information present in the covariate functions for estimating $\beta(t)$ when the parameter curve is identifiable. There are various computational methods to estimate the smooth parameter curve, $\beta(t)$, in model (1). Our approach is to estimate $\beta(t)$ using penalized regression spline estimation. Penalized regression spline estimation of $\beta(t)$ and common smoothing parameter selection methods are discussed in Section 3. Section 4 proposes measures to assess the performance of smoothing parameter selection methods and the stability of a parameter curve estimator. A simulation study that assesses the relationship between $\mathcal{N}$ and the stability of the parameter curve estimator, and that examines the performance of different smoothing parameter selection methods under varying values of $\mathcal{N}$, is given in Section 5. Section 6 provides a real data application of $\mathcal{N}$. We conclude with a discussion in Section 7.

2. Number of Independent Pieces of Information in a FLM

Model (1) can be ill-posed to varying degrees. An ill-posed problem refers to one for which no solution exists, the solution is not unique, or the solution is unstable [10]. Cardot et al. [11] provided theoretical conditions for the existence and uniqueness of a solution to (1), where the solution falls in the space spanned by the eigenfunctions of the covariance operator of the functional covariate and the model space is a separable Hilbert space of square integrable functions defined on $[0,1]$. In practice, it is generally assumed that the theoretical conditions for identifiability are satisfied when estimating $\beta(t)$ in model (1). In scalar-on-image regression models, Happ et al. [12] studied the impact of structural assumptions on the parameter image, such as smoothness and sparsity, on the model estimates, as well as measures to assess to what degree the assumptions are satisfied.

Our focus is on assessing how much information is present in the covariate functions to estimate the parameter curve in model (1) when the parameter curve is identifiable. To our knowledge, no prior studies have considered this aspect of model (1) and its influence on the stability of the parameter curve estimator. Given the different areas of application of model (1), assessing this relationship is essential, as the stability of the parameter curve estimate influences the reliability of model uncertainty estimates. We aim to study this aspect of model (1) by quantifying the information present in the covariate functions to estimate the parameter curve. The idea underlying this work is motivated by the work of Wahba [13].

Wahba [13] proposed the idea of the number of independent pieces of information to gauge whether one can obtain a reasonable solution in a type of general smoothing spline model (GSSM). In this context, one takes the eigendecomposition of the inner products of the representers of the bounded linear functionals with one another, scaled by the reciprocal of the error variance in the model; the number of resulting eigenvalues that are greater than one is considered to be the number of independent pieces of information. If the number of independent pieces of information in a GSSM is large, then a solution to the GSSM is recoverable. However, no explicit criterion was given for how many pieces are required. Motivated by this, we define a measure of the number of independent pieces of information in the covariate functions for estimating $\beta(t)$ in model (1). Let $\gamma_1 \ge \gamma_2 \ge \cdots \ge \gamma_n$ be the eigenvalues from the eigendecomposition of the $n \times n$ matrix $\mathbf{K}$ with elements

$$K_{ij} = \int_0^1 x_i(t)\,x_j(t)\,dt, \qquad i, j = 1, \dots, n,$$

that is, the inner products of the representers $x_i$ of the functionals $\beta \mapsto \int_0^1 x_i(t)\beta(t)\,dt$. We define the number of independent pieces of information in the covariate functions for estimating $\beta(t)$ as

$$\mathcal{N} = \sum_{k=1}^{n} I\left(\frac{\gamma_k}{\sigma^2} > 1\right), \tag{2}$$

where $I(\cdot)$ denotes the indicator function and $\sigma^2$ is the variance of the error component in model (1). Measure (2) may be estimated by plugging in an estimate of $\sigma^2$. An estimate of $\sigma^2$ is provided in Section 3.
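As an illustration only, the following Python/NumPy sketch computes (2) when each covariate function is available on a common grid over $[0,1]$. It assumes that the inner products $K_{ij}$ are approximated by the trapezoidal rule and that $\sigma^2$ is known; the function names are hypothetical, and this is not the authors' R implementation.

```python
import numpy as np


def trapezoid_weights(t):
    """Quadrature weights w such that sum(w * f) approximates the integral of f over t."""
    w = np.zeros(len(t))
    dt = np.diff(t)
    w[:-1] += dt / 2.0
    w[1:] += dt / 2.0
    return w


def inner_product_matrix(curves, t):
    """Approximate K[i, j] = integral of x_i(t) * x_j(t) dt.

    curves: array of shape (n, m); row i holds x_i evaluated on the grid t.
    """
    w = trapezoid_weights(t)
    return (curves * w) @ curves.T


def n_independent_pieces(curves, t, sigma2):
    """Measure (2): number of eigenvalues gamma_k of K with gamma_k / sigma2 > 1."""
    gamma = np.linalg.eigvalsh(inner_product_matrix(curves, t))
    return int(np.sum(gamma / sigma2 > 1.0))


# Example: two orthogonal curves with squared L2 norms 9 and 0.25.
t = np.linspace(0.0, 1.0, 1001)
X = np.vstack([
    3.0 * np.sqrt(2.0) * np.sin(np.pi * t),
    0.5 * np.sqrt(2.0) * np.sin(2.0 * np.pi * t),
])
print(n_independent_pieces(X, t, sigma2=1.0))  # prints 1
```

Because $\mathcal{N}$ only counts scaled eigenvalues above one, halving $\sigma^2$ can increase it even when the covariate functions are unchanged.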

As an illustration of (2), Figure 1 contains four different sets of covariate functions that vary in their number of independent pieces of information. These covariate functions are used in a simulation study in Section 5. Figures in this study were produced using the R packages ggplot2 [14] and cowplot [15]. If there is less information present in the covariate functions to estimate $\beta(t)$, as quantified by (2), then one would anticipate that a reasonable or stable parameter curve estimator would be less feasible. To assess whether the size of $\mathcal{N}$ indicates the degree of stability of a solution to model (1), we propose a stability measure of a parameter curve estimator in Section 4. We address the relationship between $\mathcal{N}$ and the stability of the estimator in Section 5.

3. Penalized Regression Spline Estimate of $\beta(t)$

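Here, we review penalized regression spline estimation of the parameter curve $\beta(t)$. Assuming that the parameter curve is smooth in the sense that it lies in a Sobolev space of order 4, we may seek an estimate of $\beta(t)$ by minimizing

$$\sum_{i=1}^{n}\left\{y_i - \alpha - \int_0^1 x_i(t)\,\beta(t)\,dt\right\}^2 + \lambda\int_0^1\left\{\beta''(t)\right\}^2 dt, \tag{3}$$

where $\lambda > 0$ is the smoothing parameter and the term $\lambda\int_0^1\{\beta''(t)\}^2dt$ penalizes curvature in the estimate. To ease notation, from here forward, we assume the intercept $\alpha = 0$, but a non-zero $\alpha$ value is easily incorporated into the computational approaches discussed.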

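We use the method of regularized basis functions [16], with a B-spline basis of order 4, to minimize fitting criterion (3) with respect to the parameter curve $\beta(t)$. With this approach, each covariate function $x_i(t)$ and the parameter curve $\beta(t)$ are represented using a linear combination of B-spline basis functions. Let $x_i(t)$ be represented as $x_i(t) = \sum_{j=1}^{K_x} c_{ij}\,\phi_j(t)$ for $i = 1, \dots, n$, where $\phi_j(t)$ denotes the $j$th B-spline function of order 4. Similarly, we represent $\beta(t)$ as $\beta(t) = \sum_{k=1}^{K_\beta} b_k\,\psi_k(t)$, where the $\psi_k(t)$ are also B-spline functions of order 4. Now, let $\int_0^1 x_i(t)\beta(t)\,dt = \mathbf{c}_i^\top\mathbf{J}\mathbf{b}$, where $\mathbf{J} = \int_0^1\boldsymbol{\phi}(t)\boldsymbol{\psi}(t)^\top dt$ with $\boldsymbol{\phi}(t) = (\phi_1(t), \dots, \phi_{K_x}(t))^\top$ and $\boldsymbol{\psi}(t) = (\psi_1(t), \dots, \psi_{K_\beta}(t))^\top$, and $\mathbf{c}_i^\top$ denotes the $i$th row of the matrix $\mathbf{C}$ that has elements $c_{ij}$. Similarly, let $\int_0^1\{\beta''(t)\}^2dt = \mathbf{b}^\top\mathbf{R}\mathbf{b}$, where $\mathbf{R} = \int_0^1\boldsymbol{\psi}''(t)\boldsymbol{\psi}''(t)^\top dt$. In this basis representation framework, penalized least squares criterion (3) is re-expressed as finding $\mathbf{b}$ to minimize

$$(\mathbf{y} - \mathbf{Z}\mathbf{b})^\top(\mathbf{y} - \mathbf{Z}\mathbf{b}) + \lambda\,\mathbf{b}^\top\mathbf{R}\mathbf{b}, \tag{4}$$

where $\mathbf{y} = (y_1, \dots, y_n)^\top$, $\mathbf{Z} = \mathbf{C}\mathbf{J}$, and $\mathbf{b} = (b_1, \dots, b_{K_\beta})^\top$. For a given $\lambda$, an estimate of $\mathbf{b}$ is obtained by minimizing (4) with respect to $\mathbf{b}$ via

$$\hat{\mathbf{b}}_\lambda = (\mathbf{Z}^\top\mathbf{Z} + \lambda\mathbf{R})^{-1}\mathbf{Z}^\top\mathbf{y},$$

where the subscript $\lambda$ signifies the dependence of the solution on the value of the smoothing parameter.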
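A minimal Python/NumPy sketch of this estimate follows. It assumes that $\mathbf{Z} = \mathbf{C}\mathbf{J}$ and $\mathbf{R}$ have already been computed (for example, with the B-spline tools in the R package fda); the random matrix and the second-difference penalty in the example are stand-ins, and `penalized_fit` is a hypothetical name. Besides $\hat{\mathbf{b}}_\lambda$, it returns the matrix $\mathbf{Z}(\mathbf{Z}^\top\mathbf{Z} + \lambda\mathbf{R})^{-1}\mathbf{Z}^\top$ that maps $\mathbf{y}$ to the fitted values.

```python
import numpy as np


def penalized_fit(Z, R, y, lam):
    """Minimize (y - Z b)'(y - Z b) + lam * b' R b, i.e. criterion (4).

    Returns b_hat = (Z'Z + lam R)^{-1} Z'y and the smoother matrix
    S = Z (Z'Z + lam R)^{-1} Z', so that the fitted values are S @ y.
    """
    A = Z.T @ Z + lam * R
    # Solve linear systems instead of forming an explicit inverse.
    b_hat = np.linalg.solve(A, Z.T @ y)
    S = Z @ np.linalg.solve(A, Z.T)
    return b_hat, S


# Example with a random stand-in for Z and a second-difference penalty for R.
rng = np.random.default_rng(0)
Z = rng.normal(size=(20, 5))
D = np.diff(np.eye(5), n=2, axis=0)  # (3, 5) second-difference operator
R = D.T @ D
y = Z @ np.arange(1.0, 6.0) + rng.normal(scale=0.1, size=20)
b_hat, S = penalized_fit(Z, R, y, lam=1.0)
```

Solving with `np.linalg.solve` rather than inverting $\mathbf{Z}^\top\mathbf{Z} + \lambda\mathbf{R}$ is a common numerical choice; the result is the same up to rounding.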

Various data-driven approaches have been proposed to select the smoothing parameter $\lambda$ in (3). These methods include Akaike's information criterion, Akaike's information criterion corrected, cross-validation, the generalized cross-validation criterion, the L-curve criterion, restricted maximum likelihood, the Schwarz information criterion, etc. Since the size of $\lambda$ controls the size of the penalty in (3), most of these data-driven methods consist of two parts: one that measures the goodness of fit of the model and another that quantifies the complexity of the parameter curve estimate. Thus, these methods attempt to achieve an optimal balance between how well the model fits the data and the smoothness of the parameter curve estimate (see [4,9,17,18,19], and others cited therein, for examples of recent studies that have used one or more of these criteria to select the smoothing parameter in models of type (1)).

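In our study, we restrict our discussion to the following commonly used data-driven criteria: Akaike's information criterion corrected (AICc), cross-validation (CV), the Schwarz information criterion (SIC), and the generalized cross-validation (GCV) criterion. The smoothing parameter selection methods used in our study are by no means exhaustive, nor are they meant to be. Rather, our intent is to explore whether the amount of information present in the covariate functions for estimating $\beta(t)$ may affect the performance of a given smoothing parameter selection method. For each criterion, the value of the smoothing parameter $\lambda$ that minimizes the criterion is assumed to be a reasonable value for $\lambda$. Each criterion discussed here depends on the residual sum of squares, defined by

$$\mathrm{RSS}(\lambda) = (\mathbf{y} - \mathbf{S}_\lambda\mathbf{y})^\top(\mathbf{y} - \mathbf{S}_\lambda\mathbf{y}),$$

and on the smoother matrix, $\mathbf{S}_\lambda = \mathbf{Z}(\mathbf{Z}^\top\mathbf{Z} + \lambda\mathbf{R})^{-1}\mathbf{Z}^\top$. With CV, for each $i = 1, \dots, n$, we obtain an estimate of $\mathbf{b}$ based on minimizing

$$\sum_{j \ne i}\left(y_j - \mathbf{z}_j^\top\mathbf{b}\right)^2 + \lambda\,\mathbf{b}^\top\mathbf{R}\mathbf{b},$$

where $\mathbf{z}_j^\top$ denotes the $j$th row of $\mathbf{Z}$. Denote the minimizing solution by $\hat{\mathbf{b}}_\lambda^{(-i)}$, where the superscript $(-i)$ symbolizes an estimate based on all observations except for the $i$th case. Since there are $n$ cases that one can delete for a given $\lambda$, a cross-validation score is defined as

$$\mathrm{CV}(\lambda) = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \mathbf{z}_i^\top\hat{\mathbf{b}}_\lambda^{(-i)}\right)^2.$$

A computationally friendly form of CV [16] is

$$\mathrm{CV}(\lambda) = \frac{1}{n}\sum_{i=1}^{n}\left(\frac{y_i - \hat{y}_i}{1 - s_{ii}}\right)^2,$$

where $\hat{y}_i$ is the $i$th element of $\hat{\mathbf{y}} = \mathbf{S}_\lambda\mathbf{y}$ and $s_{ii}$ denotes the $i$th diagonal element of $\mathbf{S}_\lambda$.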
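The two forms of CV can be compared numerically. The Python/NumPy sketch below (hypothetical function names; a random matrix stands in for $\mathbf{Z}$ and a second-difference penalty for $\mathbf{R}$) computes the score by refitting with each case deleted and by the shortcut based on the diagonal of $\mathbf{S}_\lambda$. For a fixed $\lambda$ and a penalized least squares fit of the form (4), the two agree up to rounding error.

```python
import numpy as np


def smoother_matrix(Z, R, lam):
    """S_lambda = Z (Z'Z + lam R)^{-1} Z'."""
    return Z @ np.linalg.solve(Z.T @ Z + lam * R, Z.T)


def cv_shortcut(Z, R, y, lam):
    """Computationally friendly CV: mean of ((y_i - yhat_i) / (1 - s_ii))^2."""
    S = smoother_matrix(Z, R, lam)
    resid = y - S @ y
    return float(np.mean((resid / (1.0 - np.diag(S))) ** 2))


def cv_leave_one_out(Z, R, y, lam):
    """CV by refitting with each case deleted in turn."""
    n = len(y)
    errors = np.empty(n)
    for i in range(n):
        keep = np.arange(n) != i
        Zi, yi = Z[keep], y[keep]
        b_minus_i = np.linalg.solve(Zi.T @ Zi + lam * R, Zi.T @ yi)
        errors[i] = y[i] - Z[i] @ b_minus_i
    return float(np.mean(errors ** 2))


rng = np.random.default_rng(1)
Z = rng.normal(size=(15, 4))
D = np.diff(np.eye(4), n=2, axis=0)
R = D.T @ D
y = rng.normal(size=15)
print(np.isclose(cv_shortcut(Z, R, y, 2.0), cv_leave_one_out(Z, R, y, 2.0)))  # True
```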

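The GCV criterion [20] replaces the diagonal elements $s_{ii}$ of the smoother matrix in the CV formula by $\omega\,\mathrm{tr}(\mathbf{S}_\lambda)/n$ to obtain

$$\mathrm{GCV}(\lambda) = \frac{n\,\mathrm{RSS}(\lambda)}{\left\{n - \omega\,\mathrm{df}(\lambda)\right\}^2}, \tag{5}$$

where $\mathrm{df}(\lambda) = \mathrm{tr}(\mathbf{S}_\lambda)$; with $\omega = 1$, (5) is the usual GCV criterion. $\mathrm{df}(\lambda)$ is referred to as the effective degrees of freedom [21]. $\mathrm{df}(\lambda)$ and $\mathrm{RSS}(\lambda)$ are commonly used to estimate $\sigma^2$ via $\hat{\sigma}^2 = \mathrm{RSS}(\lambda)/\{n - \mathrm{df}(\lambda)\}$. An estimate of $\sigma^2$ may then be used to estimate $\mathcal{N}$ via

$$\hat{\mathcal{N}} = \sum_{k=1}^{n} I\left(\frac{\gamma_k}{\hat{\sigma}^2} > 1\right). \tag{6}$$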
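A short Python/NumPy sketch of the plug-in estimate (6), assuming a smoother matrix $\mathbf{S}_\lambda$ and the inner-product matrix $\mathbf{K}$ from Section 2 are already available as arrays (the function names are hypothetical):

```python
import numpy as np


def sigma2_hat(S, y):
    """sigma^2 estimate RSS(lambda) / (n - df(lambda)), with df(lambda) = tr(S_lambda)."""
    resid = y - S @ y
    rss = float(resid @ resid)
    return rss / (len(y) - float(np.trace(S)))


def n_hat(K, s2):
    """Plug-in estimate (6): number of eigenvalues gamma_k of K with gamma_k / s2 > 1."""
    gamma = np.linalg.eigvalsh(K)
    return int(np.sum(gamma / s2 > 1.0))


# With lambda = 0 and a full-rank Z, S is the least squares projection,
# df = tr(S) = 3, and sigma2_hat reduces to the usual RSS / (n - 3).
rng = np.random.default_rng(2)
Z = rng.normal(size=(30, 3))
y = rng.normal(size=30)
S = Z @ np.linalg.solve(Z.T @ Z, Z.T)
print(n_hat(np.diag([5.0, 2.0, 0.5]), 1.0))  # prints 2
```

Because $\hat{\sigma}^2$ depends on the chosen $\lambda$, so does $\hat{\mathcal{N}}$.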

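The term $\omega$ in (5) represents an inflation of the effective degrees of freedom (EDF) for GCV. Inflation of the EDF is used to guard against GCV selecting a smoothing parameter that over-fits the data in non-parametric models [22]. Some simulation studies suggest a moderate inflation ($\omega > 1$) to be reasonable in non-parametric models [22,23]. The SIC criterion [24] may be expressed as

$$\mathrm{SIC}(\lambda) = \log\left\{\frac{\mathrm{RSS}(\lambda)}{n}\right\} + \frac{\log(n)\,\mathrm{df}(\lambda)}{n}.$$

The AICc criterion proposed by Hurvich et al. [25] penalizes more complex estimates of $\beta(t)$ than does AIC for smaller sample sizes, and it may be defined as

$$\mathrm{AICc}(\lambda) = \log\left\{\frac{\mathrm{RSS}(\lambda)}{n}\right\} + 1 + \frac{2\left\{\mathrm{df}(\lambda) + 1\right\}}{n - \mathrm{df}(\lambda) - 2}.$$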
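In practice, each criterion is evaluated over a grid of candidate $\lambda$ values and the minimizer is retained. The Python/NumPy sketch below uses the forms of (5), SIC, and AICc given above; the grid search, the default `omega=1.0` (which reduces (5) to ordinary GCV), the stand-in data, and the function names are assumptions of this sketch rather than details of the original study.

```python
import numpy as np


def selection_criteria(Z, R, y, lam, omega=1.0):
    """Evaluate GCV (5), SIC and AICc at one value of lam."""
    n = len(y)
    S = Z @ np.linalg.solve(Z.T @ Z + lam * R, Z.T)
    resid = y - S @ y
    rss = float(resid @ resid)
    df = float(np.trace(S))  # effective degrees of freedom
    return {
        "GCV": n * rss / (n - omega * df) ** 2,
        "SIC": np.log(rss / n) + np.log(n) * df / n,
        "AICc": np.log(rss / n) + 1.0 + 2.0 * (df + 1.0) / (n - df - 2.0),
    }


def select_lambda(Z, R, y, lam_grid, criterion, omega=1.0):
    """Return the grid value of lam that minimizes the chosen criterion."""
    scores = [selection_criteria(Z, R, y, lam, omega)[criterion] for lam in lam_grid]
    return float(lam_grid[int(np.argmin(scores))])


rng = np.random.default_rng(3)
Z = rng.normal(size=(25, 6))
D = np.diff(np.eye(6), n=2, axis=0)
R = D.T @ D
y = Z @ rng.normal(size=6) + rng.normal(size=25)
grid = np.logspace(-3, 3, 13)
for name in ("GCV", "SIC", "AICc"):
    print(name, select_lambda(Z, R, y, grid, name))
```

A logarithmically spaced grid is a common choice because the criteria typically change on a multiplicative scale in $\lambda$.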

4. Quantifying Stability of $\hat{\beta}(t)$ and the Performance of Smoothing Parameter Selection Methods

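An ideal value for the smoothing parameter, call it $\lambda_{\mathrm{opt}}$, may be considered one that minimizes the integrated squared error,

$$\mathrm{ISE}(\lambda) = \int_0^1\left\{\hat{\beta}_\lambda(t) - \beta(t)\right\}^2 dt. \tag{7}$$

To assess the performance of the smoothing parameter selection methods discussed in Section 3, we use the median of the measure

$$\frac{\int_0^1\left\{\hat{\beta}(t) - \beta(t)\right\}^2 dt}{\int_0^1\left\{\hat{\beta}_{\mathrm{opt}}(t) - \beta(t)\right\}^2 dt}, \tag{8}$$

where $\hat{\beta}(t)$ is the estimate obtained with the smoothing parameter chosen by a given selection method and $\hat{\beta}_{\mathrm{opt}}(t)$ is the estimate obtained with $\lambda_{\mathrm{opt}}$. Note that any penalized regression spline estimate of the parameter curve will be dependent on the chosen value of the smoothing parameter, but the notation of this dependence is suppressed for better readability. Because $\lambda_{\mathrm{opt}}$ minimizes (7), measure (8) is never smaller than 1. Relative to $\hat{\beta}_{\mathrm{opt}}(t)$, the estimator $\hat{\beta}(t)$ is considered better the closer the median of (8) is to 1. The further the median of (8) is from 1, the poorer the performance of the smoothing parameter selection method. Measure (8) was motivated by a measure proposed by Lee [26] to compare smoothing parameter selection methods in smoothing splines.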
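In a simulation, where $\beta(t)$ is known, (7) and (8) can be approximated on a grid. The following Python/NumPy sketch assumes that the true and estimated curves are evaluated on the same grid and that $\lambda_{\mathrm{opt}}$ is approximated by the best value in a finite grid of candidate estimates; the shifted curves in the example are stand-ins for estimates over a $\lambda$ grid, and the function names are hypothetical.

```python
import numpy as np


def integrate(f, t):
    """Trapezoidal rule on the grid t."""
    return float(np.sum((f[1:] + f[:-1]) * np.diff(t)) / 2.0)


def ise(beta_hat_vals, beta_vals, t):
    """Integrated squared error (7) of one estimate evaluated on t."""
    return integrate((beta_hat_vals - beta_vals) ** 2, t)


def ise_ratio(selected_vals, candidate_vals, beta_vals, t):
    """Measure (8): ISE of the selected estimate over the smallest ISE among the
    candidate estimates (one per lambda in a grid), approximating ISE(lambda_opt)."""
    ise_opt = min(ise(c, beta_vals, t) for c in candidate_vals)
    return ise(selected_vals, beta_vals, t) / ise_opt


t = np.linspace(0.0, 1.0, 201)
beta = np.sin(2.0 * np.pi * t)
candidates = [beta + c for c in (0.1, 0.3, 0.5)]  # stand-ins for estimates over a lambda grid
print(ise_ratio(candidates[1], candidates, beta, t))  # approximately 9
```

Taking `np.median` of such ratios across simulated datasets gives a simulation estimate of the median of (8).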

To assess the stability of a parameter curve estimator, we propose a leave-one-out measure motivated by the DFFITS [27] statistic. Specifically, we use the median of a leave-one-out integrated squared error measure,

where

and

represent estimators of

and

, respectively, based on all observations except for the

ith case, and where

minimizes criterion (7). A large value of the median of (9) would reflect a less stable estimator. If $\mathcal{N}$ is to be considered an appropriate measure of the information in the covariate functions for estimating $\beta(t)$, then large values of $\mathcal{N}$ should be associated with small values of the median of (9), in the sense that slightly altering the set of covariate functions does not lead to large changes in the parameter curve estimate. Similarly, smaller values of $\mathcal{N}$ should be associated with larger values of the median of (9). This is evaluated with a simulation study in the next section.
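The exact form of (9) is not reproduced here. As a rough sketch of the leave-one-out idea only, the Python/NumPy code below refits the estimator with each case deleted and takes the median of the integrated squared difference between the full-data and leave-one-out curves. Two simplifications are assumptions of this sketch: $\lambda$ is held fixed rather than re-chosen by minimizing (7) for every fit, and the scaling used in (9) is replaced by a user-supplied constant `scale` (hypothetical). The polynomial matrix `Psi` is a stand-in for B-spline basis evaluations.

```python
import numpy as np


def fit_coefficients(Z, R, y, lam):
    """b_hat = (Z'Z + lam R)^{-1} Z'y."""
    return np.linalg.solve(Z.T @ Z + lam * R, Z.T @ y)


def loo_median_sq_diff(Z, R, y, Psi, t, lam, scale=1.0):
    """Median over i of integral (beta_hat(t) - beta_hat_(i)(t))^2 dt / scale.

    Psi: (m, K_beta) matrix whose row j holds psi_1(t_j), ..., psi_K(t_j).
    lam is held fixed for all fits, and `scale` is a hypothetical stand-in
    for the scaling used in (9); both are simplifications of this sketch.
    """
    beta_full = Psi @ fit_coefficients(Z, R, y, lam)
    n = len(y)
    values = []
    for i in range(n):
        keep = np.arange(n) != i
        beta_minus_i = Psi @ fit_coefficients(Z[keep], R, y[keep], lam)
        d2 = (beta_full - beta_minus_i) ** 2
        # Trapezoidal rule for the integral over t.
        values.append(np.sum((d2[1:] + d2[:-1]) * np.diff(t)) / 2.0 / scale)
    return float(np.median(values))


rng = np.random.default_rng(4)
t = np.linspace(0.0, 1.0, 50)
Psi = np.vander(t, 4, increasing=True)  # polynomial stand-in for a B-spline basis
Z = rng.normal(size=(20, 4))
b = np.array([1.0, -2.0, 0.5, 3.0])
noisy = Z @ b + rng.normal(size=20)
print(loo_median_sq_diff(Z, np.eye(4), noisy, Psi, t, lam=0.1))
```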

5. A Simulation Study

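Using a simulation study, we examine the stability of a parameter curve estimator and the performance of the smoothing parameter selection methods at varying levels of $\mathcal{N}$. The sampling distributions required to derive analytical formulas for the medians of (8) and (9) are unattainable due to the dependence of the parameter curve estimator on the smoothing parameter. Therefore, a simulation estimate of the median of (8) is obtained by

$$\mathrm{SMK} = \mathrm{median}_{g = 1, \dots, G}\left[\frac{\int_0^1\left\{\hat{\beta}_g(t) - \beta(t)\right\}^2 dt}{\int_0^1\left\{\hat{\beta}_{\mathrm{opt},g}(t) - \beta(t)\right\}^2 dt}\right], \tag{10}$$

where $G$ is the number of simulated datasets. The subscript $g$ denotes the $g$th simulated dataset. Similarly, we estimate the median of (9) via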

To obtain (10) and (11), simulated datasets are generated under various settings by the model

$$y_i = \int_0^1 x_i(t)\,\beta(t)\,dt + \epsilon_i,$$

for $i = 1, \dots, n$, where the $\epsilon_i$s are assumed to be independent and identically distributed normal random variables with mean 0 and standard deviation $\sigma$. Overall, under four different sets of covariate functions,

simulated datasets were generated for each combination of

,

,

, and

is chosen for each setting to ensure that

, where

refers to the signal-to-noise ratio. As defined by Febrero-Bande et al. [9],

, where

denotes the standard deviation of

for

. The different sets of covariate functions used in this study were first produced on a discretized scale of 50 equally spaced values. To ensure sufficient flexibility in their functional representations, the parameter curve and the set of covariate functions are represented as functions using the approach described in Section 3, with

and

, respectively.

The simulations are performed using R [

28], along with the extension and usage of code from the R package fda [

29]. In addition, the R packages dplyr [

30] and tidyr [

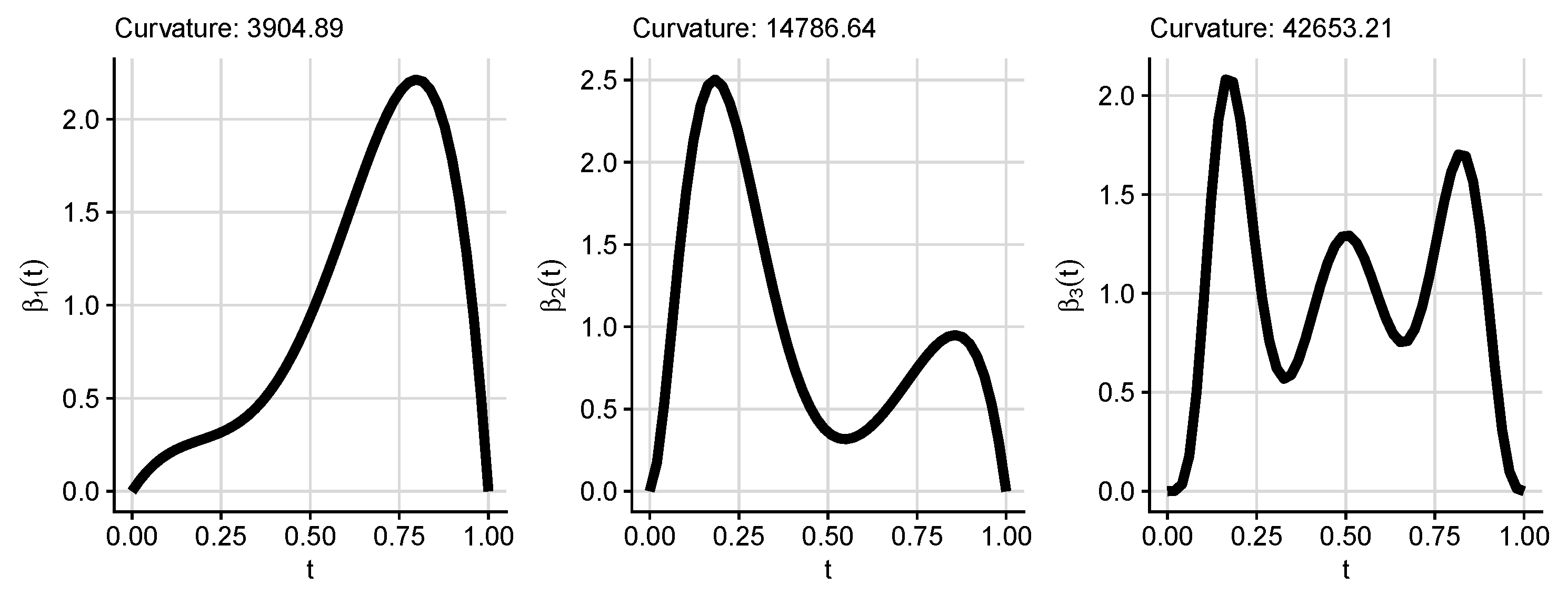

31] were used for data management. The parameter curves in this study (shown in

Figure 2) are defined as

where

and they were chosen to assess the performance of (10) and (11) at varying levels of complexity of the parameter curve in terms of their approximate curvature.

The different sets of covariate functions were generated under different conditions to ensure varying levels of $\mathcal{N}$. The first set of covariate curves (

s) are produced by generating realizations from a normal random variable with mean 0 and standard deviation

that are randomly shifted about the y-axis by

, where

. We denote this first set of covariate curves as Covariate Set 1. Covariate Set 2 (

s) is created by generating realizations from a Gaussian process having an exponential covariogram with variance parameter 10 and scale parameter

. Covariate Set 3 (

s) is produced by generating realizations from a mixture of beta random variables randomly shifted about the y-axis. Covariate Set 4 (

s) is obtained by generating realizations from a Brownian motion process with variance parameter

. As an illustration, the four different sets of covariate functions used in this study are shown in Figure 1 for a sample of size

at a specified signal-to-noise ratio.
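To illustrate how covariate curves resembling Covariate Sets 2 and 4 might be simulated on 50 equally spaced points, the following Python/NumPy sketch draws Gaussian-process curves with an exponential covariogram and Brownian motion paths. The covariogram parameterization $C(h) = v\exp(-|h|/s)$, the scale and Brownian variance values in the example, and the function names are assumptions; the study's own parameter settings are not reproduced here.

```python
import numpy as np


def brownian_curves(n, t, variance, rng):
    """n Brownian motion paths on grid t, starting at 0 at t[0]:
    independent Gaussian increments with variance `variance * dt`."""
    dt = np.diff(t)
    steps = rng.normal(scale=np.sqrt(variance * dt), size=(n, len(dt)))
    return np.hstack([np.zeros((n, 1)), np.cumsum(steps, axis=1)])


def exponential_gp_curves(n, t, variance, scale, rng):
    """n zero-mean Gaussian process curves with covariogram
    C(h) = variance * exp(-|h| / scale) (an assumed parameterization)."""
    h = np.abs(t[:, None] - t[None, :])
    C = variance * np.exp(-h / scale)
    L = np.linalg.cholesky(C + 1e-10 * np.eye(len(t)))  # small jitter for stability
    return rng.standard_normal((n, len(t))) @ L.T


rng = np.random.default_rng(5)
t = np.linspace(0.0, 1.0, 50)  # 50 equally spaced points
x_gp = exponential_gp_curves(100, t, variance=10.0, scale=0.2, rng=rng)  # scale is hypothetical
x_bm = brownian_curves(100, t, variance=1.0, rng=rng)  # variance is hypothetical
```

Multiplying standard normal draws by the transposed Cholesky factor gives rows whose covariance is $C$, which is the usual way to sample a Gaussian process on a finite grid.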

Table 1, Table 2 and Table 3 provide the SMK when the parameter curves are

,

, and

, respectively, under each simulation setting and smoothing parameter selection method. The results are presented on the log scale to better depict the differences. Note that a small value of the SMK does not reflect whether a reasonable estimator of $\beta(t)$ was obtained, but rather reflects the performance of the given smoothing parameter selection method relative to criterion (7). Some of the differences between the smallest and the next smallest values of the SMK across the smoothing parameter selection methods for a given simulation scenario may not appear large. To better distinguish between the smallest and the next smallest values of the SMK, pairwise comparisons of the SMKs in a given simulation setting were performed using Mood's median test with the Benjamini and Hochberg [32] correction, as implemented in the R package RVAideMemoire [33], at significance level

. Here, we consider the performance of a smoothing parameter selection method to be favorable or best if it obtains the lowest SMK or an SMK that is not significantly different from the lowest SMK. We now summarize the favorable smoothing parameter selection method(s) that were most common across different simulation settings.
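For readers working outside R, the following pure-Python sketch shows the two ingredients of these pairwise comparisons: a two-sample Mood's median test (here the chi-square version with one degree of freedom and no continuity correction) and the Benjamini and Hochberg adjustment of the resulting p-values. It is not the RVAideMemoire implementation and may differ in details such as tie handling or the use of exact tests; the function names are hypothetical.

```python
import math
from itertools import combinations
from statistics import median


def mood_median_test(a, b):
    """Two-sample Mood's median test; returns (chi-square statistic, p-value).

    Chi-square approximation with 1 degree of freedom, no continuity correction.
    """
    grand = median(list(a) + list(b))
    # 2 x 2 table: rows are samples, columns are (above median, at or below median).
    table = [[sum(x > grand for x in s), sum(x <= grand for x in s)] for s in (a, b)]
    total = len(a) + len(b)
    row = [sum(r) for r in table]
    col = [table[0][j] + table[1][j] for j in range(2)]
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row[i] * col[j] / total
            if expected > 0:
                stat += (table[i][j] - expected) ** 2 / expected
    p_value = math.erfc(math.sqrt(stat / 2.0))  # P(chi-square_1 > stat)
    return stat, p_value


def benjamini_hochberg(p_values):
    """Benjamini and Hochberg adjusted p-values, in the same order as the input."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


def pairwise_median_tests(groups):
    """groups: dict mapping method name -> list of values; returns adjusted p-values per pair."""
    pairs = list(combinations(groups, 2))
    raw = [mood_median_test(groups[a], groups[b])[1] for a, b in pairs]
    return dict(zip(pairs, benjamini_hochberg(raw)))
```

A method would then be called favorable if its median is the lowest or if its adjusted p-value against the lowest exceeds the chosen significance level.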

Under Covariate Set 1, consistently obtained the lowest SMK across all simulation settings. Under Covariate Set 2 at , , , and consistently performed best under the different simulation settings. However, tended to perform best across all simulation settings at . At and the lower , generally performed best across the three parameter curves. At the higher , was the better smoothing parameter selection method. When the covariate functions assume the form of Covariate Set 3, and tended to perform just as well as or better than the other smoothing parameter selection methods across all settings. Under Covariate Set 4 with , was consistently among the better methods for all settings. For , and were favorable under parameter curves and , whereas was favorable under . When , performed best or just as well as the other methods.

Covariate Sets 1 and 2 tended to have a lower $\mathcal{N}$ in our study for a given sample size and , whereas Covariate Sets 3 and 4 had a higher $\mathcal{N}$. While a perfect one-to-one relationship does not appear evident between $\mathcal{N}$ and the performance of a smoothing parameter selection method, tended to perform more favorably when $\mathcal{N}$ ranged between two and nine across the three different parameter curves. For the higher values of $\mathcal{N}$, tended to perform just as well as or better than the other methods more often than not. In addition, note that obtained a lower SMK than for almost all simulation settings.

Table 4 shows the simulation estimate (11) of the median of (9) under each simulation setting. The results are presented on the log scale to better depict the differences. The results show similar patterns or trends under each

. For a given parameter curve and sample size, the estimate (11) decreases as $\mathcal{N}$ increases. Thus, the more information present in the covariate functions for estimating the parameter curve, the more stable the parameter curve estimator. We also note that, for a given covariate set, an increase in the sample size corresponds to a decrease in (11) and to a non-decreasing $\mathcal{N}$. This supports viewing $\mathcal{N}$ as a measure of the amount of information present in the covariate functions, in the sense that, under a given covariate set, more observed data tend to increase $\mathcal{N}$ to varying degrees and to provide a smaller value of (11). However, a large sample size does not imply a large $\mathcal{N}$, as illustrated by Covariate Set 1. Similarly, a small sample size does not imply a low $\mathcal{N}$, as under Covariate Set 4. The differences in results under each

are in part due to the scaling involved in (9) by

, where

would tend to be higher under

.

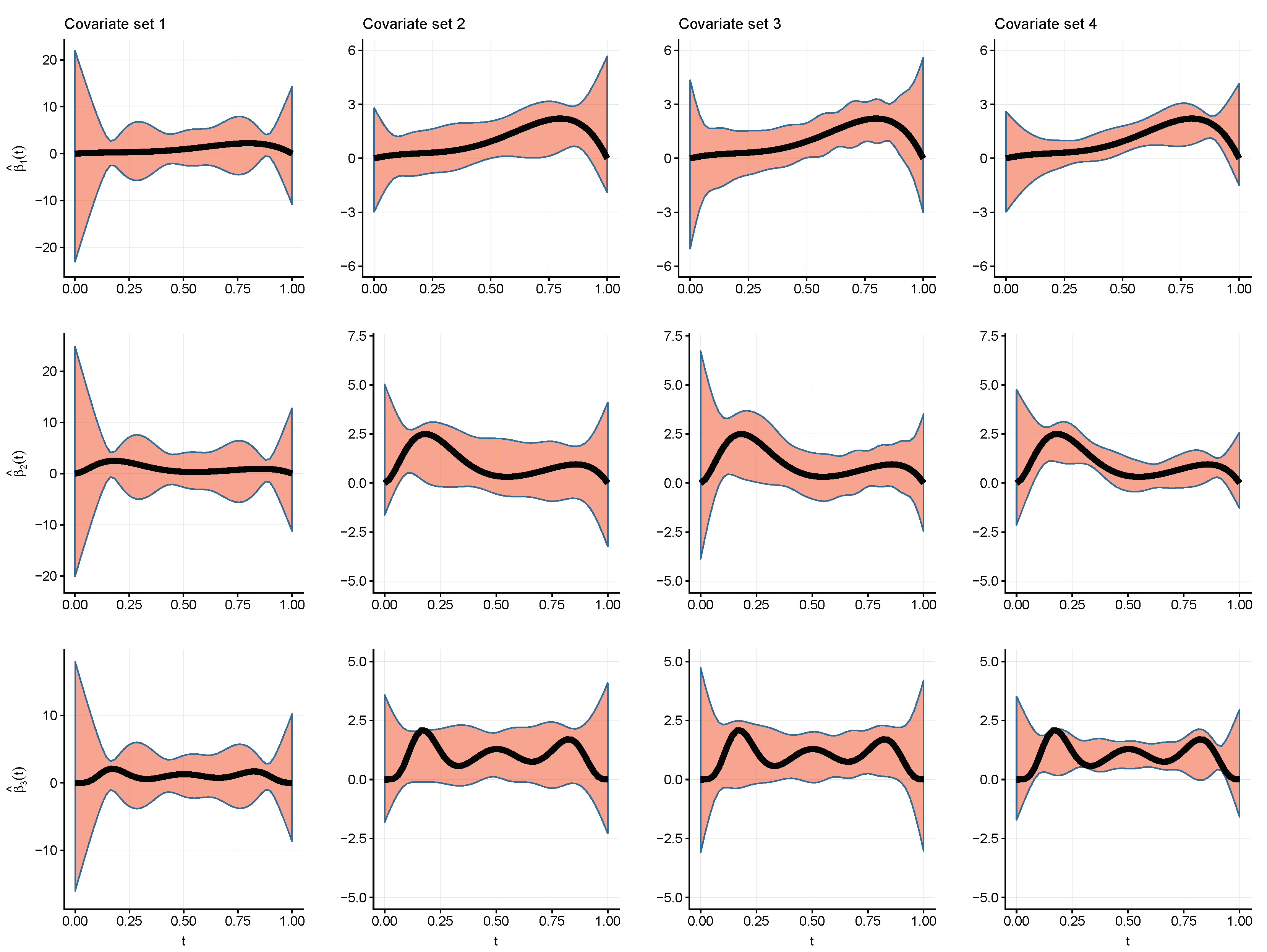

Recall that for smoothing parameter selection under a covariate set with a low $\mathcal{N}$,

performed best or just as well as the other smoothing parameter selection methods. For covariate sets with a higher $\mathcal{N}$,

was generally among the better smoothing parameter selection methods. To visualize the impact of $\mathcal{N}$ on the stability of the parameter curve estimate, Figure 3 shows the resulting approximate expected value of the parameter curve estimator (computed across all parameter curve estimates in the simulation study) plus or minus two times the approximate standard deviation of the parameter curve estimator when using the preferred smoothing parameter selection method suggested by Table 1, Table 2 and Table 3. For brevity, we only present the results for

. The parameter curve estimator, under Covariate Set 1, showed much higher variability than under the other covariate sets. Further, the covariate set with a higher $\mathcal{N}$ (Covariate Set 4) showed the lowest variability. Similar behavior was exhibited at

and

. This behavior is consistent with the behavior of the estimate (11) for a given covariate set and sample size. This indicates that a low $\mathcal{N}$ is associated with a less stable solution, which in turn may substantially increase the variability of a parameter curve estimator, where such variability would not be reflected in an observed confidence interval for the parameter curve.

In practice, $\mathcal{N}$ will need to be estimated due to its dependence on $\sigma^2$. An estimate of this measure, $\hat{\mathcal{N}}$, is provided in (6) using the estimate of $\sigma^2$ provided in Section 3. The estimate of $\sigma^2$ depends on the chosen value of the smoothing parameter. To better understand the impact of the chosen value of the smoothing parameter on (6), Table 5, Table 6 and Table 7 provide the average value of (6), computed over all simulated datasets, when the parameter curves are

,

, and

, respectively, under each simulation setting and smoothing parameter selection method. On average, (6) provides a reasonable estimate of $\mathcal{N}$ in the simulation settings considered.

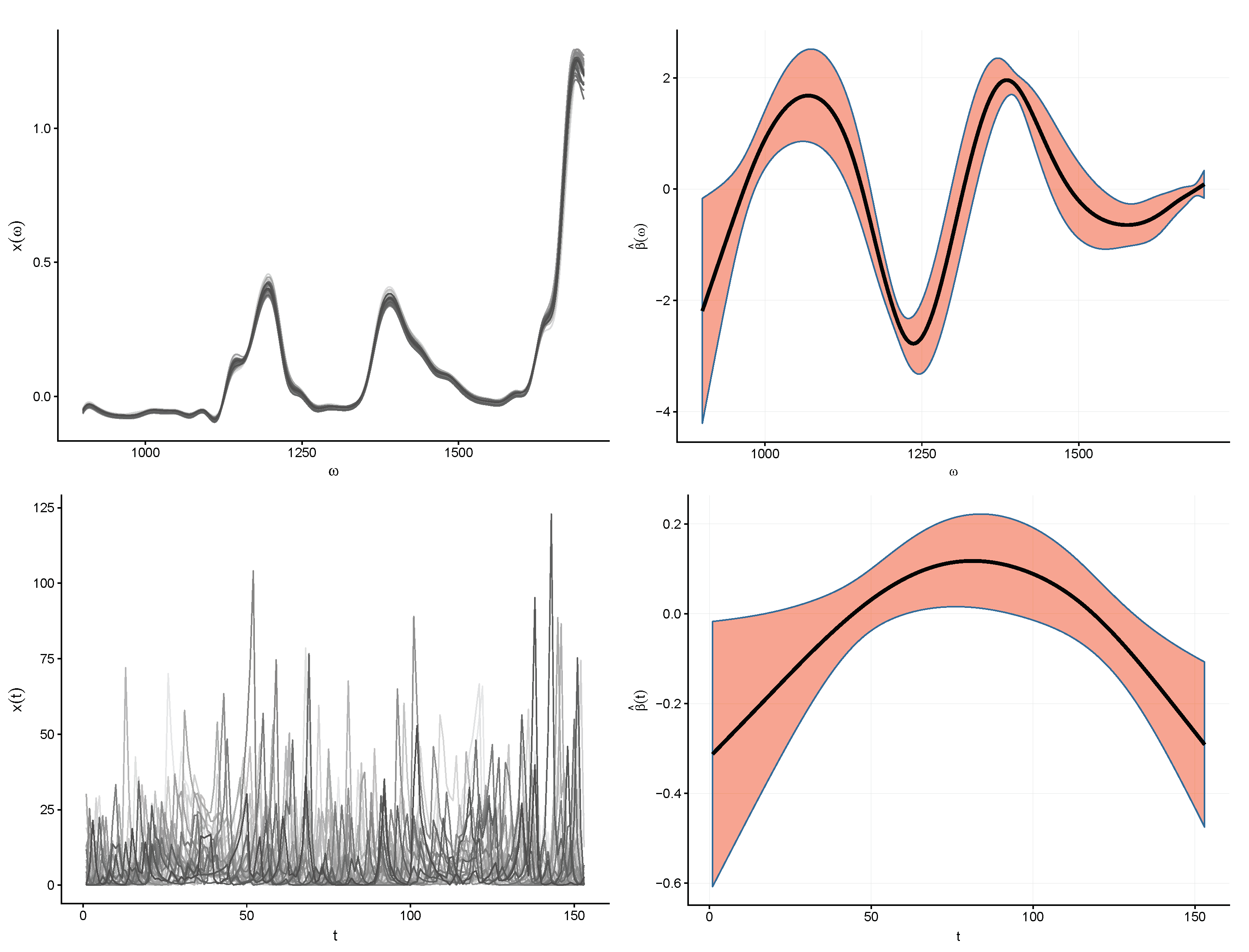

6. A Real Data Illustration

In this section, we illustrate the use of $\hat{\mathcal{N}}$ with two real datasets: the gasoline dataset described by Kokoszka and Reimherr [2] and used by Reiss and Ogden [18], and the streamflow and precipitation data described by Masselot et al. [34]. Model (1) was applied to both datasets to model the relationship between a functional covariate and a scalar response. The gasoline data consist of near-infrared reflectance spectra of 60 gasoline samples (measured in 2-nm intervals from 900 to 1700 nm), as well as the octane numbers for each gasoline sample. These data are available in the R package refund [35]. The aim of modeling these data using model (1) is to determine the association between the octane rating (response variable) and the near-infrared reflectance spectra curves (covariate curves). We represent the near-infrared reflectance spectra discretized measurements and the parameter curve as functions using the methods described in Section 3, with

and

. The top left panel of Figure 4 contains the near-infrared reflectance spectra curves, $x_i(t)$ for $i = 1, \dots, 60$. The estimated number of independent pieces of information in these covariate functions for estimating the parameter curve is 5. Since

, we use the

for smoothing parameter selection because it performed just as well as or better than the other methods in our simulation study when $\mathcal{N}$ was small. The upper right panel shows the parameter curve estimate along with 95% point-wise confidence intervals for $\beta(t)$ when using

for smoothing parameter selection. Note that the estimated parameter curve has a positive effect in the intervals (

) and (

), implying that higher values of near-infrared reflectance spectra are associated with higher octane levels in these intervals. Lower values of near-infrared reflectance spectra are associated with lower octane levels in the intervals (

) and (

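). For a given $\lambda$, approximate point-wise confidence intervals may be constructed using the variance of the parameter curve estimator, $\mathrm{Var}\{\hat{\beta}_\lambda(t)\} = \boldsymbol{\psi}(t)^\top\mathrm{Var}(\hat{\mathbf{b}}_\lambda)\,\boldsymbol{\psi}(t)$, where $\mathrm{Var}(\hat{\mathbf{b}}_\lambda) = \sigma^2(\mathbf{Z}^\top\mathbf{Z} + \lambda\mathbf{R})^{-1}\mathbf{Z}^\top\mathbf{Z}(\mathbf{Z}^\top\mathbf{Z} + \lambda\mathbf{R})^{-1}$ [16]. Since $\hat{\mathcal{N}}$ is low, our simulation study suggests that the variability of the parameter curve estimator is greater than what is reflected by the confidence interval.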
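A minimal Python/NumPy sketch of these approximate point-wise intervals, assuming $\sigma^2$ (or its estimate) is given and the basis functions $\psi_k$ are evaluated on a grid in the rows of a matrix `Psi`. The function name, the stand-in data, and the polynomial stand-in for the B-spline basis are hypothetical; the multiplier 1.96 corresponds to nominal 95% normal intervals, and smoothing bias is not accounted for.

```python
import numpy as np


def pointwise_intervals(Z, R, y, Psi, lam, sigma2, z=1.96):
    """Approximate point-wise intervals beta_hat(t) +/- z * se(t) on a grid.

    Var(b_hat) = sigma2 * A^{-1} Z'Z A^{-1} with A = Z'Z + lam R, and
    Var(beta_hat(t)) = psi(t)' Var(b_hat) psi(t), where row j of Psi is psi(t_j)'.
    """
    A_inv = np.linalg.inv(Z.T @ Z + lam * R)
    b_hat = A_inv @ (Z.T @ y)
    var_b = sigma2 * A_inv @ (Z.T @ Z) @ A_inv
    est = Psi @ b_hat
    # Diagonal of Psi @ var_b @ Psi.T without forming the full matrix.
    se = np.sqrt(np.einsum("ij,jk,ik->i", Psi, var_b, Psi))
    return est - z * se, est, est + z * se


rng = np.random.default_rng(8)
Z = rng.normal(size=(40, 4))
y = rng.normal(size=40)
t = np.linspace(0.0, 1.0, 11)
Psi = np.vander(t, 4, increasing=True)  # polynomial stand-in for a B-spline basis
lower, est, upper = pointwise_intervals(Z, np.eye(4), y, Psi, lam=0.5, sigma2=1.0)
```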

We briefly summarize the streamflow and precipitation data, referring to Masselot et al. [34] for further information on the study and the corresponding data. The data consist of yearly observations of the sum of daily streamflow values from 1 July to 31 October, and yearly precipitation time series from 1 June to 31 October, for the years 1981–2012. These data were measured in areas of the Dartmouth River located in a region of the province of Quebec, Canada. In this study, investigators were interested in estimating and forecasting yearly total streamflow (scalar response) using the corresponding yearly precipitation profile (functional covariate). The precipitation time series and the parameter curve are represented as functions using the methods described in Section 3, with

and

. However, since precipitation measurements are non-negative, we constrain the functional representation of the precipitation measurements to be non-negative by imposing non-negativity constraints on the B-spline coefficients. The bottom left panel of Figure 4 contains the precipitation curves,

for

, covering the daily time domain from June to October in a given year. For these data,

. We use the

since it performed just as well as or better than the other methods in our simulation study when $\mathcal{N}$ was large. The lower right panel shows the parameter curve estimate along with 95% point-wise confidence intervals for $\beta(t)$ when using

for smoothing parameter selection. The estimated parameter curve shows that the effect of precipitation on total streamflow is negative in June, as well as in October. Given the size of $\hat{\mathcal{N}}$, our simulation study suggests that this parameter curve estimate is more stable than the one estimated for the gasoline data.

7. Discussion

We present a measure, $\mathcal{N}$, for a FLM with a scalar response to determine how much information is present in the covariate curves for estimating the parameter curve $\beta(t)$ when the parameter curve is identifiable. To estimate the parameter curve in model (1), penalized regression spline estimation is used, and we summarize several commonly used methods for selecting the smoothing parameter. To assess the stability of the parameter curve estimator under varying levels of $\mathcal{N}$, we examine the median of the leave-one-out measure (9) for a parameter curve estimator. The results show that the greater $\mathcal{N}$ is, the more stable the parameter curve estimator, in that it produces a smaller median of (9) than when $\mathcal{N}$ is smaller. Further, we assess the impact of $\mathcal{N}$ on smoothing parameter selection, and, while a one-to-one relationship between $\mathcal{N}$ and the performance of a smoothing parameter selection method is not clear,

tends to perform just as well as or better than other methods when $\mathcal{N}$ is small, whereas

tends to perform just as well as or better than other methods when $\mathcal{N}$ is large.

Overall, our simulation study showed that the size of $\mathcal{N}$ affects both the stability of a parameter curve estimator and the performance of the smoothing parameter selection methods. Future work will study whether these results are consistent under alternative parameter curve estimation procedures. An interesting direction for future work is to investigate whether shape constraints on the parameter curve could serve as a remedial measure for improving the stability of the parameter curve estimator, particularly when $\mathcal{N}$ is low. Scenarios in which shape constraints are imposed on the parameter curve do arise in practice in a FLM with a scalar response (see [36,37] for some recent examples). Identifying problematic data in functional regression models remains critical and an ongoing challenge. We hope this study encourages others to consider approximating $\mathcal{N}$ when applying model (1), so that the amount of information present in the covariate curves for estimating the parameter curve can be gauged. This, in turn, may provide guidance in choosing a smoothing parameter selection method, as well as in considering the stability of the parameter curve estimate.