Abstract

The notion of median in one dimension is a foundational element in nonparametric statistics. It has been extended to multi-dimensional cases both in location and in regression via notions of data depth. Regression depth (RD) and projection regression depth (PRD) represent the two most promising notions in regression. Carrizosa depth is another depth notion in regression. Depth-induced regression medians (maximum depth estimators) serve as robust alternatives to the classical least squares estimator. The uniqueness of regression medians is indispensable in the discussion of their properties and the asymptotics (consistency and limiting distribution) of sample regression medians. Are the regression medians induced from RD, PRD, and Carrizosa depth unique? Answering this question is the main goal of this article. It is found that only the regression median induced from PRD possesses the desired uniqueness property. The conventional remedy for non-uniqueness, taking the average of all medians, might yield an estimator that no longer possesses the maximum depth in both the RD and Carrizosa depth cases. These and other findings indicate that the PRD and its induced median are highly favorable among their leading competitors.

1. Introduction

The regular univariate sample median, defined as the innermost (deepest) point of a data set, is unique. (If the sample median is defined to be the point that minimizes the sum of its distances to the sample points, i.e., $\arg\min_{\mu \in \mathbb{R}^1} \sum_{i=1}^{n} |x_i - \mu|$, where $x_1, \ldots, x_n$ are the given n sample points in $\mathbb{R}^1$, then it is not unique. To overcome this drawback, it is conventionally defined as $(x_{(\lfloor (n+1)/2 \rfloor)} + x_{(\lfloor (n+2)/2 \rfloor)})/2$, where $x_{(1)} \le \cdots \le x_{(n)}$ are the ordered values of the $x_i$'s and $\lfloor \cdot \rfloor$ is the floor function. Namely, it is the innermost point (from both the left and right directions) or the average of the two deepest sample points. Hence, it is unique.) The population median, defined as the $1/2$-th quantile of the underlying distribution (there are other versions of the definition), is also unique. (Recall that, for any univariate distribution function F and for $0 < p < 1$, the quantity $F^{-1}(p) = \inf\{x : F(x) \ge p\}$ is called the pth quantile or fractile of F; see page 3 of Serfling (1980) [1].) The most outstanding feature of the univariate median is its robustness. In fact, among all translation equivariant location estimators, it has the best possible breakdown point (Donoho (1982) [2]) and the minimum maximum bias if the underlying distribution has a unimodal symmetric density (Huber (1964) [3]). Besides serving as a promising robust location estimator, the univariate median also provides a base for a center-outward ordering (in terms of the deviations from the median), an alternative to the traditional left-to-right ordering.
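The two definitions of the sample median discussed above can be compared numerically. The following is a minimal sketch, assuming the conventional definition is the average of the order statistics with indices floor((n+1)/2) and floor((n+2)/2); the function names `sample_median` and `abs_deviation_sum` are hypothetical and introduced only for illustration.

```python
def sample_median(xs):
    """Average of the order statistics x_(floor((n+1)/2)) and x_(floor((n+2)/2))."""
    if not xs:
        raise ValueError("need at least one observation")
    ordered = sorted(xs)
    n = len(ordered)
    lo = (n + 1) // 2  # 1-based index of the lower middle order statistic
    hi = (n + 2) // 2  # 1-based index of the upper middle order statistic
    return (ordered[lo - 1] + ordered[hi - 1]) / 2


def abs_deviation_sum(m, xs):
    """Sum of distances from a candidate point m to the sample points."""
    return sum(abs(x - m) for x in xs)


data = [4, 1, 3, 2]
print(sample_median([3, 1, 2]))  # odd n: the middle observation
print(sample_median(data))       # even n: average of the two middle observations

# For even n, every point between the two middle observations minimizes the
# sum of distances, so that definition alone does not single out one median.
print([abs_deviation_sum(m, data) for m in (2, 2.5, 3)])
```

For the even-sized sample, the three candidate points give the same sum of distances, which is the non-uniqueness that the order-statistic convention removes.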

Extending the univariate median to multidimensional settings, so that multidimensional data can share its outstanding robustness and an alternative ordering scheme, is desirable. One approach, among others, is via notions of data depth. General notions of data depth have been increasingly pursued and studied (Liu et al. (1999) [4], Zuo and Serfling (2000) (ZS00) [5]) since the pioneering proposal of Tukey (1975) [6] (see Donoho and Gasko (1992) [7]). Besides Tukey depth, another prevailing depth, among others, is the projection depth (PD) [5] (Liu (1992) [8] and Zuo (2003) [9]).

Depth notions in location have also been extended to regression. Regression depth (RD) of Rousseeuw and Hubert (1999) (RH99) [10], the most famous, exemplifies a direct extension of Tukey location depth to regression. Projection regression depth (PRD) of Zuo (2018a) (Z18a) [11] is another example, extending the prominent PD in location to regression. The RD and PRD represent the two leading notions of depth in regression ([11]) that satisfy desirable axiomatic properties. Carrizosa depth (Carrizosa (1996) (C96) [12]; defined in Section 2.2) is one of the other notions of depth in regression ([11]). One of the outstanding advantages of depth notions is that they can be directly employed to introduce median-type deepest estimating functionals (or estimators in the empirical case) for the location or regression parameters in a multi-dimensional setting, based on a general min-max scheme. The maximum (deepest) regression depth estimator (also called the regression median) serves as a robust alternative to the classical least squares or least absolute deviations estimator for the unknown parameters in a general linear regression model:

where ⊤ denotes the transpose of a vector, and vector and parameter vector are in (), and is a random variable in . One can regard the observations as a sample from random vector .

The robustness of the medians induced from RD and PRD has been investigated in Van Aelst and Rousseeuw (2000) (VAR00) [13] and Zuo (2018b) [14], respectively. These medians, just like their location or univariate counterparts, indeed possess high breakdown point robustness.

The regression median, as the deepest regression hyperplane, just like its location or univariate counterpart, is expected to be unique, because non-uniqueness would result in vagueness in the inference (prediction and estimation) via the regression median. Uniqueness is an indispensable feature and axiomatic property when one (i) investigates the population median, or (ii) deals with the convergence in probability or in distribution of the sample regression median to its population version, which must be unique. (iii) It is also an essential property in the computation of sample regression medians, for the convergence of approximate algorithms. The uniqueness issue of multidimensional location medians has been addressed in Zuo (2013) [15].

Are the medians induced from regression depth notions via the min-max scheme generally unique? Answering this question is the goal of this article. It turns out that the regression depth-induced medians are not necessarily unique. The conventional remedy for this issue is to take the average of all medians. This, however, might not work (in the sense that the resulting estimator might no longer possess the maximum depth) for both the RD of RH99 and the Carrizosa depth of C96. On the other hand, PRD-induced regression medians are unique.

The rest of the article is organized as follows. Section 2 introduces leading regression depth notions and their induced medians and shows that these medians indeed recover the regular univariate sample median in the special univariate case. Empirical examples of regression depths and medians and their behavior are illustrated in Section 3. Section 4 establishes general results on the uniqueness of regression medians. Brief concluding remarks in Section 5 end the article.

2. Maximum Depth Functionals (Regression Medians)

Let be a generic non-negative functional on , where and is a collection of distributions of ( and P are used interchangeably).

If satisfies four axiomatic properties: (P1) (regression, scale and affine) invariance; (P2) maximality at center; (P3) monotonicity relative to any deepest point and (P4) vanishing at infinity, then it is called a regression depth functional (see [11] for details). The maximum regression depth functional, or the regression median, can be defined as

Note that might not be unique, and a conventional remedy measure is to take the average of all maximum depth points. Unfortunately, this could lead to a scenario where the resulting functional (or estimator) might not have the maximum depth any more. For detailed discussions on and , see [11]. In the following we elaborate three examples.

2.1. Median Induced from Regression Depth of RH99

Definition 1.

For any and the joint distribution P of in (1), [10] defined the regression depth of β, denoted hence by , to be the minimum probability mass that needs to be passed when tilting (the hyperplane induced from) β in any way until it is vertical. The maximum regression depth functional (regression median) is defined as

The definition above is rather abstract and not easy to comprehend. Many characterizations of it, or equivalent definitions, have nevertheless been given in the literature; see, e.g., [11] and the references cited therein.
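For simple regression (one explanatory variable), the tilting definition can be made concrete by counting. The following is a minimal sketch, assuming the counting characterization for simple regression attributed to [10]: for every split point v taken among the observed x values, count the observations with non-negative residuals on one side plus those with non-positive residuals on the other side, and take the smallest such count over both orientations and all split points. The function name `regression_depth`, the tolerance `tol`, and the toy data are hypothetical.

```python
def regression_depth(intercept, slope, xs, ys, tol=1e-12):
    """Empirical regression depth, as a fraction of n, of y = intercept + slope * x."""
    n = len(xs)
    residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
    nonneg = [r >= -tol for r in residuals]  # observations on or above the line
    nonpos = [r <= tol for r in residuals]   # observations on or below the line
    best = n
    for v in sorted(set(xs)):                # candidate tilting positions
        left = [i for i in range(n) if xs[i] <= v]
        right = [i for i in range(n) if xs[i] > v]
        l_pos = sum(nonneg[i] for i in left)
        l_neg = sum(nonpos[i] for i in left)
        r_pos = sum(nonneg[i] for i in right)
        r_neg = sum(nonpos[i] for i in right)
        # Observations passed when tilting the line to vertical, in either direction.
        best = min(best, l_pos + r_neg, r_pos + l_neg)
    return best / n


# Toy data: four points on the line y = x and one outlier.
xs = [0, 1, 2, 3, 4]
ys = [0, 1, 2, 3, 10]
print(regression_depth(0, 1, xs, ys))   # the line through the four collinear points
print(regression_depth(-5, 0, xs, ys))  # a line lying entirely below the data
```

A line that lies entirely on one side of the data can be tilted to vertical without passing any observation, so its depth is zero, whereas the line through the four collinear points keeps a high depth despite the outlier.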

2.2. Median Induced from Carrizosa Depth of C96

Among regression depth notions investigated in [11], Carrizosa depth

for any and underlying probability measure P associated with , was a pioneer regression depth notion introduced in [12] and thoroughly investigated in [11], where . As characterized in [11] (see Proposition 2.2 there), it turns out that ()

The maximum regression depth functional (or regression median) was then defined as

As shown in [11], always exists if the assumption: (A) , for any vertical hyperplane , holds. Unfortunately, as violates (P3) generally (see [11]), we will not focus on it in the sequel. On the other hand, under (A) RD above satisfies (P1)–(P4).

2.3. Median Induced from Projection Regression Depth of Z18a

Hereafter, assume that R is a univariate regression estimating functional which satisfies

(A1) regression, scale and affine equivariant. That is, respectively,

- , , and

- , , and

- , .

where are random variables. Throughout, the lower case x stands for a variable in while the bold for a vector in (). is the distribution of vector .

(A2) , where .

(A3) is continuous in and , and quasi-convex in , for , .

Let S be a positive scale estimating functional that is scale equivariant and location invariant.

R will be restricted to the form and T will be a univariate location functional that is location, scale and affine equivariant (see pages 158–159 of Rousseeuw and Leroy (1987) (RL87) [16] for definitions). Hereafter we assume that (A0) for any (see (I) of Remark 1 for the explanations).

Examples of T include, among others, the mean, weighted mean, and quantile functionals. A particular example of is , where Med stands for the median functional. Typical examples of S include the standard deviation and weighted deviation functionals (Wu and Zuo (2008) [17]) and the median of absolute deviations (MAD) functional.

Equipped with a pair of T and S, we can introduce a corresponding projection based regression estimating functional. By modifying a functional in Maronna and Yohai (1993) [18] to achieve scale equivariance, [11] defined

which represents unfitness of at w.r.t. T along the . If R is a Fisher consistent regression estimating functional, then for some (the true parameter of the model) and . Thus overall, one expects to be small and close to zero for a candidate , independent of the choice of and . The magnitude of measures the unfitness of along the . Taking the supremum over all yields

the unfitness of at w.r.t. T. Now applying the min-max scheme, [11] obtained the projection regression estimating functional (also denoted by ) w.r.t. the pair

where the projection regression depth (PRD) functional was defined in [11] as

Just like S (which is for achieving scale invariance and is nominal), T sometimes is also suppressed in above functionals for simplicity. The authors of [11] showed that PRD satisfies (P1)–(P4).
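The construction above can be approximated numerically in simple regression. The following is a minimal sketch under several assumptions that should be checked against [11]: T is the median, S is the MAD of y, the unfitness along a unit direction v is taken to be |Med((y - w'β)/(w'v))| / MAD(y) with w = (1, x)', observations with w'v = 0 are skipped, and the depth is 1/(1 + unfitness). The supremum over directions is replaced by a finite grid of angles, so the result is only an approximation. All function names are hypothetical.

```python
import numpy as np


def mad(values):
    """Median of absolute deviations from the median (no consistency factor)."""
    values = np.asarray(values, dtype=float)
    return float(np.median(np.abs(values - np.median(values))))


def unfitness(beta, x, y, n_dirs=720):
    """Approximate sup over unit directions v of |Med(residual / w'v)| / MAD(y)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    w = np.column_stack([np.ones_like(x), x])  # rows w_i = (1, x_i)
    resid = y - w @ np.asarray(beta, dtype=float)
    scale = mad(y)
    if scale == 0:
        raise ValueError("MAD of y is zero; the scaled unfitness is undefined")
    worst = 0.0
    # v and -v give the same absolute median, so half of the circle suffices.
    for t in np.linspace(0.0, np.pi, n_dirs, endpoint=False):
        v = np.array([np.cos(t), np.sin(t)])
        proj = w @ v
        keep = np.abs(proj) > 1e-12            # skip observations with w'v = 0
        if keep.any():
            worst = max(worst, abs(float(np.median(resid[keep] / proj[keep]))))
    return worst / scale


def prd(beta, x, y, n_dirs=720):
    """Approximate projection regression depth, assumed to be 1 / (1 + unfitness)."""
    return 1.0 / (1.0 + unfitness(beta, x, y, n_dirs))


x = np.arange(5.0)
y = 1.0 + 2.0 * x
print(prd((1.0, 2.0), x, y))  # exact fit: zero unfitness, depth 1.0
print(prd((0.0, 0.0), x, y))  # a poorly fitting candidate has smaller depth
```

A grid of angles is used only because the example has a two-dimensional parameter; it is a swapped-in approximation, not the computational method of [22].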

For robustness consideration, in the sequel, is the fixed pair , unless otherwise stated. Hereafter, we write rather than . For this special choice of T and S, we have that

To end this section, we show that the three maximum depth estimators above indeed deserve to be called regression medians, since they recover the regular univariate sample median in the special univariate case. (The result below also holds true for the population case.)

Proposition 1.

For univariate data, the medians induced from RD, Carrizosa depth, and PRD all recover the univariate sample median.

Proof.

(i) For , this has already been discussed and claimed in [10] (page 390). So we only need to focus on the other two.

(ii) For , we no longer can use (5) and have to invoke (4). Note that in this case (no slope term any more).

That is, . The latter immediately leads to (the average of all solutions).

(iii) For , first we note that (without loss of generality, assume that )

When , it reduces to the following

It is readily seen that

where the first equality follows from (12) and the oddness of the median operator, and the second one follows from the translation equivariance (see page 249 of [16] for the definition) of the median as a location estimator. The last display means that the PRD-induced median recovers the sample median. □
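Part (iii) of the proof can be checked numerically. The following is a minimal sketch, assuming that in the univariate case (no slope term) the unfitness of a candidate β reduces to |Med(y_i - β)| / MAD(y); this reduced form is a reading of the argument above, not a quotation of it. The function name, the data, and the candidate grid are hypothetical.

```python
import statistics


def unfitness_univariate(beta, ys):
    """Assumed univariate unfitness: |Med(y_i - beta)| / MAD(y)."""
    med = statistics.median(ys)
    mad = statistics.median([abs(y - med) for y in ys])
    return abs(statistics.median([y - beta for y in ys])) / mad


ys = [2.0, 7.0, 3.0, 10.0, 4.0]
candidates = [k / 10 for k in range(0, 121)]  # grid 0.0, 0.1, ..., 12.0

# Minimizing the unfitness is the same as maximizing the depth.
deepest = min(candidates, key=lambda b: unfitness_univariate(b, ys))
print(deepest, statistics.median(ys))
```

By translation equivariance, Med(y_i - β) = Med(y_i) - β, so the unfitness vanishes only at the sample median, and the grid search returns that point whenever it lies on the grid.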

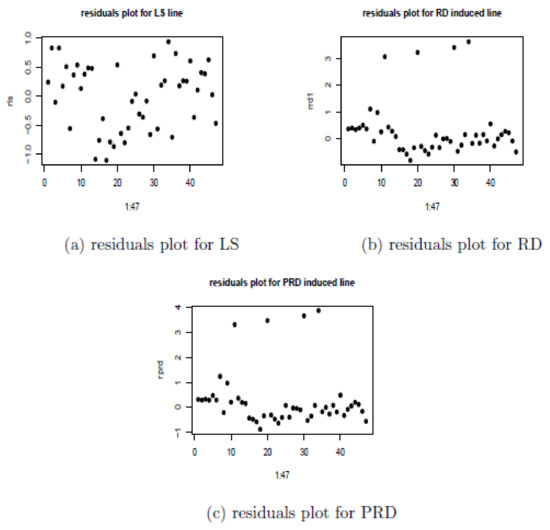

3. Examples of Regression Depths and Regression Medians

For a better comprehension of depth notions and depth-induced medians in the last section, we present empirical examples below. We will confine attention to RD and PRD only since is just the probability mass carried by the hyperplane determined by .

Example 1.

(Empirical RD and PRD). What do empirical RD and PRD look like? To answer the question, 30 random bivariate standard normal points are generated (plotted in Figure 1), and RD and PRD are computed w.r.t. these points.

Figure 1.

Thirty bivariate standard normal points.

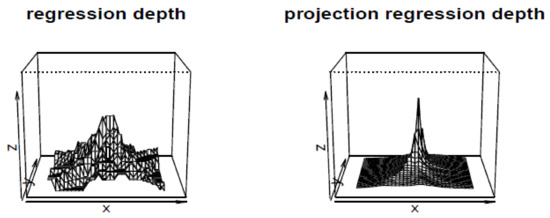

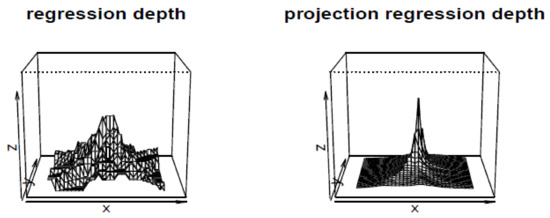

We select 961 equally spaced grid points from the square of with range of and , then treat each point as a and compute its regression depth (RD and PRD) w.r.t. the 30 bivariate normal points. The depths of these 961 points are plotted in Figure 2.

Figure 2.

Regression depth (RD) (left) and projection regression depth (PRD) (right) of 961 candidate parameter ’s w.r.t. 30 bivariate standard normal points.

Inspecting the figure reveals that (i) the sample RD function is a step-wise increasing function (each step in this case is $1/30$); for this roughly symmetric data set, it attains its maximum depth around the center of symmetry (the origin), whereas (ii) PRD is a strictly monotonically increasing function and attains its maximum value at the center of symmetry. This sharply contrasts with the behavior of RD around the center: PRD has a unique maximum depth point, whereas RD has multiple maximum depth points.

Example 2.

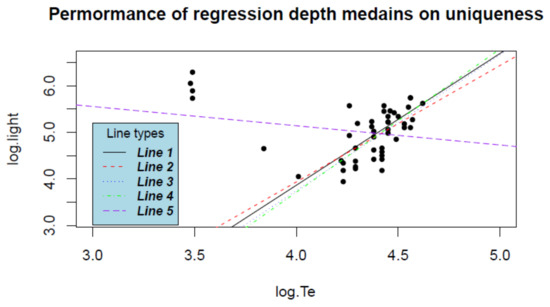

(Uniqueness of medians induced from empirical RD and PRD). This example illustrates the uniqueness behavior of the regression depth (RD and PRD)-induced medians in the empirical distribution case via a concrete example on the real data from the Hertzsprung–Russell diagram of the star cluster CYG OB1 (see Table 3 in chapter 2 of [16]), which contains 47 stars in the direction of Cygnus. Here, x is the logarithm of the effective temperature at the surface of the star ($T_e$), and y is the logarithm of its light intensity ($L/L_0$); see Figure 3 for the plot of the data set.

Figure 3.

Five regression lines based on the data from the Hertzsprung–Russell diagram of the star cluster CYG OB1 (solid black for the PRD-induced median; dashed red, dotted blue, and dotdash green all for RD-induced medians; longdash purple for LS).

Five regression lines are plotted in Figure 3. Among them, three (dashed red, dotted blue, and dotdash green) are regression medians from RD, one (solid black) from PRD, and the other (longdash purple) is the least squares line. Note that the classical least squares regression estimator (as well as many traditional regression estimators) could be regarded as a depth-induced median under the general “objective depth” framework (see [11]). Thus, for the benchmark purpose, the least squares line is also plotted in Figure 3 alongside the four other median lines.

The LS line also justifies the legitimacy of the existence of RD- and PRD-induced medians (as robust alternatives) since the LS line fails to capture the main-sequence/pattern of the data cloud (stars) and is heavily affected by four giant stars whereas the other four depth medians resist the four leverage points (outliers) and catch the main trend/cluster.

It turns out that there exist three maximum depth lines (medians) induced from RD. Each of the three lines goes through exactly two data points. In terms of (intercept, slope) form, they are (−6.065000, 2.500000), (−8.586500, 3.075000), and (−7.903043, 2.913043). These lines are plotted by dash red, dotted blue, and dotdash green in Figure 3. All three possess regression depth . Note that the average of the three deepest lines is (−7.518181, 2.829348), which possesses RD . That is, it no longer possesses the maximum regression depth.
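The averaging step can be reproduced directly from the three (intercept, slope) pairs reported above. This is only an arithmetic check of the averaged coefficients; it does not recompute any depth value, since the data set itself is not reproduced here. The variable names are hypothetical.

```python
# (intercept, slope) pairs of the three deepest RD lines reported in the text.
rd_medians = [
    (-6.065000, 2.500000),
    (-8.586500, 3.075000),
    (-7.903043, 2.913043),
]

# Coordinate-wise average of the three lines.
avg_intercept = sum(b0 for b0, _ in rd_medians) / len(rd_medians)
avg_slope = sum(b1 for _, b1 in rd_medians) / len(rd_medians)

print(round(avg_intercept, 6), round(avg_slope, 6))
```

The rounded values agree with the averaged line (−7.518181, 2.829348) quoted in the text.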

On the other hand, there exists only one maximum regression line (median), (−7.453665, 2.829416), induced from PRD, plotted in solid black in Figure 3, with PRD value . Incidentally, the LS line is (6.7934673, −0.4133039), plotted in longdash purple.

The computation issues of RD have been discussed in RH99, Rousseeuw and Struyf (1998) [20], and Liu and Zuo (2014) [21]. For the discussion on the computation of the PRD and induced regression medians, see Zuo (2019b) (Z19b) [22].

After obtaining an estimate of the regression parameter, one can immediately get the fitted line (which has already been plotted in Figure 3), the predicted values, and hence the residuals. All of these involve the uniqueness issue: we first need a unique fitted line for each method. Here, due to the non-uniqueness of the deepest RD lines, we select the first deepest line among the three as a representative. We then construct a table with nine columns: the 1st gives the ids of the observations, the 2nd the explanatory variable values, the 3rd the dependent variable values, the 4th–6th the predicted values for the LS, RD, and PRD methods, respectively, and the 7th–9th the residuals for the LS, RD, and PRD methods, respectively (Table 1).

Table 1.

Residuals analysis for three regression methods.

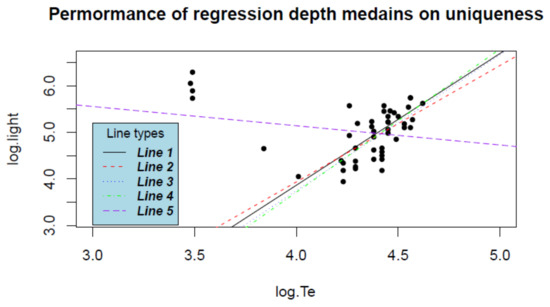

Next, the residuals of the three methods are plotted in Figure 4.

Figure 4.

Residual plots for three types of regression methods for the star cluster data. (a) Residual plot for the LS method. (b) Residual plot for one of the deepest lines induced from RD. (c) Residual plot for the unique deepest line induced from PRD.

Inspecting the residuals plot immediately reveals that in this case, the residuals of LS method are rather deceptively homogeneous, its plot fails to identify any outliers whereas the robust regression median lines all can easily spot the four obvious outliers and two groups of stars. Based on the residual plot, one can make some conclusions. For example, the four outliers are not necessarily errors but might be exceptional observations (they come from a different group of stars), and LS line does not provide a good fit (only explained of total variation in observations of y).

In the empirical distribution case, one can always take the average over all regression medians to take care of the non-uniqueness issue. Nevertheless, challenges arise computationally if there exist infinitely many medians in higher dimensions. Furthermore, the average sometimes will no longer be a deepest line/hyperplane (as seen in this example and more in Section 4).

The non-uniqueness issue is more vital with the population case since without the uniqueness, there will be no uniquely defined median and it is impossible to discuss the convergence (or consistency) and the limiting distribution of the unique empirical regression median.

4. Uniqueness of Regression Medians

From the empirical example in the previous section, we see that there can exist multiple empirical regression medians induced from RD while in the case of PRD there exists a unique one. These results are just empirical special examples and not for general cases. In the following, we address general cases and draw general conclusions.

4.1. Non-Uniqueness of the Medians Induced from RD and Carrizosa Depth

Under certain symmetry assumption (e.g., regression symmetry of Rousseeuw and Struyf (2004) (RS04)) [19] and other conditions, the regression median induced from RD can be unique (see Theorem 3 and Corollary 3 of [19]). However, generally speaking, we have

Proposition 2.

The regression median induced from RD is not unique in general. The average of all such medians might no longer possess the maximum depth.

Proof.

A counterexample suffices.

In fact, the real data Example 2 could serve as one counterexample, where one has three maximum depth lines and the average line no longer possesses the maximum RD value.

An even simpler counterexample could be constructed. Assume that there are three sample points , and . Then it is readily seen that three lines each of which formed by two sample points are and in terms of (intercept, slope) form and each line has the maximum RD whereas the average of all maximum depth lines is which has RD only . □
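A three-point configuration of this kind can be verified by direct counting. The following is a minimal sketch using the hypothetical points (0, 0), (1, 1), and (2, 0), which are chosen for illustration and are not necessarily the points used in the proof. It assumes the counting characterization of RD for simple regression attributed to [10]; the function name `rd_count` is hypothetical and returns the depth as a count out of n.

```python
import numpy as np


def rd_count(beta, x, y, tol=1e-12):
    """Regression depth of y = beta[0] + beta[1] * x, as a count out of n."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    r = y - (beta[0] + beta[1] * x)
    counts = []
    for v in np.unique(x):  # candidate tilting positions
        left, right = x <= v, x > v
        counts.append(np.sum(left & (r >= -tol)) + np.sum(right & (r <= tol)))
        counts.append(np.sum(right & (r >= -tol)) + np.sum(left & (r <= tol)))
    return int(min(counts))


x = [0.0, 1.0, 2.0]
y = [0.0, 1.0, 0.0]

# The three lines through pairs of points, in (intercept, slope) form.
pair_lines = [(0.0, 1.0), (2.0, -1.0), (0.0, 0.0)]
average_line = tuple(np.mean(pair_lines, axis=0))  # (2/3, 0)

print([rd_count(b, x, y) for b in pair_lines])  # depth counts of the three lines
print(rd_count(average_line, x, y))             # depth count of their average
```

Because the three points are not collinear, no line can have depth 3/3, so depth 2/3 is the maximum here; the coordinate-wise average of the three pair lines passes through no data point and drops to depth 1/3.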

For special distributions, the median induced from Carrizosa depth can also be unique. But generally speaking, it is not.

Proposition 3.

The regression median induced from Carrizosa depth is not unique in general. The average of all such medians might no longer possess the maximum depth value.

Proof.

A counterexample suffices.

Denote by the hyperplane determined by for any and by the acute angle formed between the hyperplane and the horizontal hyperplane (y=0).

Assume that , (), , and each contains probability mass; any hyperline in contains no probability mass (); , and intersects with at a hyperline in the horizontal hyperplane .

Now in light of characterization (5) of , it is readily seen that at each , D attains the maximum depth value .

Let , then it is readily seen that the D, and is no longer a hyperplane with the maximum depth value. □

4.2. Uniqueness of the Median Induced from PRD

For two univariate random variables defined on the sample space , stands for , . We say that is strictly monotonic at point iff whenever , for any .

Proposition 4.

If (A0) holds and (i) is strictly monotonic at and (ii) satisfies (A1), (A2), and (A3), then exists uniquely.

Proof.

To prove the proposition, we first invoke the following result.

Lemma 1

([11]). The PRD and its induced median satisfy the following properties.

(i) The is regression, scale and affine equivariant in the sense that respectively.

(ii) The maximum of PRD exists and is attained at a with .

(iii) The PRD monotonically decreases along any ray stemming from a deepest point in the sense that for any and , where is a maximum depth point of PRD for any .

Now we are in a position to prove the proposition.

Assume, w.l.o.g., that (since it does not involve and , it has nothing to do with the maximum depth point ). The existence of the maximum depth point (the regression median) is guaranteed in light of Lemma 1 above. We thus focus on the uniqueness. Assume that there are two maximum depth points . We seek a contradiction.

Let . By virtue of Lemma 1 above, is also a maximum depth point. By the invariance of the projection regression depth functional (see [11]) and Lemma 1 above, assume (w.l.o.g.) that .

For a given , write . In light of the continuity of T in , the generalized extreme value theorem on a compact set, and (A1), there exists a such that

For simplicity, denote by for . Then we have

Denote by the hyperline that connects and in the parameter space of . Consider two cases.

Case I does not concentrate on any single hyperplane. In light of this assumption, there exists at least one on in the parameter space such that . Assume (w.l.o.g.) that . By (15) and the strict monotonicity of T, one has that for the defined in (14)

which is a contradiction. This completes the proof of Case I.

Case II concentrates on a single hyperplane. This implies that there is a such that for any . This contradicts (A0), however. This completes the proof of Case II and thus the proposition.

Remark 1.

(I) (A0) automatically holds if has density or if is not degenerated to concentrate on a single dimensional hyperplane. The latter means all x lie on the same point for , and they lie on a single line for , and lie on a plane for , and so on.

(II) (A1), (A2) and (A3) hold for a large class of T, such as the mean, weighted mean (Wu and Zuo (2009) [23]), and quantile functionals.

(III) There also exists a large class of T that is strictly monotonic. For example (i) If = , then T is strictly monotonic at any β as long as the related expectations exist and whenever for any and . (ii) When , , where is the qth quantile associated with the random variable Z (i.e., ), then T is strictly monotonic at any β as long as the CDF of is not flat at β for a given .

(IV) The proposition covers the sample case. That is, when is replaced by its sample version in the proposition, we have the uniqueness of the sample regression median induced from PRD, which is very helpful in the practical computation of the median and consistent with the finding in Figure 2.

5. Concluding Remarks

5.1. Why Do We Care About the Non-Uniqueness of Regression Medians?

Uniqueness is actually implicitly assumed when we discuss the property (such as the Fisher consistency, regression, scale and affine equivariance, or asymptotic breakdown point) of regression medians. Without the uniqueness, (i) the sample regression median can never converge in probability or in distribution to its population version, (ii) deepest regression will yield more than one response and residual for a given , (iii) algorithms for the approximate computation of sample medians can never converge.

Uniqueness is so essential in our discussion of medians that there is a conventional remedy for non-uniqueness: taking the average of all medians. This works in many scenarios, but not for the medians induced from RD and Carrizosa depth. This phenomenon for the RD-induced median was first noticed by Mizera and Volauf (2002) [24] and Van Aelst et al. (2002) [25]. Concrete examples, such as the real data Example 2 and the artificially constructed one in the proof of Proposition 2, are nevertheless presented here.

5.2. Why Do We Just Treat Three Regression Medians?

Carrizosa depth ([12]) and RD ([10]) are two pioneering notions of regression depth. PRD was recently introduced in [11]. The latter systematically studied the three regression depth notions w.r.t. four axiomatic properties, that is, (P1), (P2), (P3) and (P4) (see Section 2). It is found that both regression depth RD and projection regression depth PRD are real depth notions in regression since both satisfy (P1)–(P4). While the former needs an extra assumption (A) (see Section 2.2), the latter does not need any extra assumptions. On the other hand, Carrizosa depth violates (P3) in general, hence is not a real regression depth notion w.r.t. the definition in [11]. That motivates us to just focus on RD and PRD throughout.

5.3. Summary and Conclusions

In terms of robustness, both depth-induced medians are indeed robust. In fact, the RD-induced median can asymptotically resist up to 33% [13] contamination, whereas the PRD-induced median can resist up to 50% [14] contamination without breakdown, sharply contrasting with the 0% of the classical LS estimator.

In terms of efficiency, the sample PRD-induced median could possess a higher relative efficiency when compared with the sample RD-induced median (see [22]).

Now, in terms of uniqueness, the PRD-induced median again distinguishes itself from the leading depth median (the RD-induced one) by generally possessing the desirable uniqueness property.

From the computational point of view, RD (and its induced median) has an edge over PRD (and its induced median). The former is relatively easier to compute than the latter (see [22]).

By virtue of the performance criteria above, we conclude that PRD and its induced median are promising options among the leading regression depths and their induced medians.

Funding

This research received no external funding.

Acknowledgments

The author thanks Hanshi Zuo for his careful proofreading of the manuscript and two anonymous referees for their helpful and constructive comments and suggestions, all of which have led to improvements in the manuscript. Special thanks go to the Managing Editor, Yuanyuan Yang, for her enthusiastic invitation, encouragement, and full support.

Conflicts of Interest

The author declares no conflict of interest.

References

1. Serfling, R.J. Approximation Theorems for Mathematical Statistics; John Wiley & Sons Inc.: New York, NY, USA, 1980.

2. Donoho, D.L. Breakdown Properties of Multivariate Location Estimators. Ph.D. Thesis, Harvard University, Cambridge, MA, USA, 1982.

3. Huber, P.J. Robust estimation of a location parameter. Ann. Math. Stat. 1964, 35, 73–101.

4. Liu, R.Y.; Parelius, J.M.; Singh, K. Multivariate analysis by data depth: Descriptive statistics, graphics and inference (with discussion). Ann. Stat. 1999, 27, 783–858.

5. Zuo, Y.; Serfling, R. General notions of statistical depth function. Ann. Stat. 2000, 28, 461–482.

6. Tukey, J.W. Mathematics and the picturing of data. In Proceedings of the International Congress of Mathematicians, Vancouver; James, R.D., Ed.; Canadian Mathematical Congress: Montreal, QC, Canada, 1975; Volume 2, pp. 523–531.

7. Donoho, D.L.; Gasko, M. Breakdown properties of location estimates based on halfspace depth and projected outlyingness. Ann. Stat. 1992, 20, 1803–1827.

8. Liu, R.Y. Data depth and multivariate rank tests. In L1-Statistical Analysis and Related Methods; Dodge, Y., Ed.; North-Holland: Amsterdam, The Netherlands, 1992; pp. 279–294.

9. Zuo, Y. Projection-based depth functions and associated medians. Ann. Stat. 2003, 31, 1460–1490.

10. Rousseeuw, P.J.; Hubert, M. Regression depth (with discussion). J. Am. Stat. Assoc. 1999, 94, 388–433.

11. Zuo, Y. On general notions of depth in regression. arXiv 2018, arXiv:1805.02046.

12. Carrizosa, E. A characterization of halfspace depth. J. Multivar. Anal. 1996, 58, 21–26.

13. Van Aelst, S.; Rousseeuw, P.J. Robustness of deepest regression. J. Multivar. Anal. 2000, 73, 82–106.

14. Zuo, Y. Robustness of deepest projection regression depth functional. Stat. Pap. 2019. Available online: https://doi.org/10.1007/s00362-019-01129-4 (accessed on 13 February 2020).

15. Zuo, Y. Multidimensional medians and uniqueness. Comput. Stat. Data Anal. 2013, 66, 82–88.

16. Rousseeuw, P.J.; Leroy, A. Robust Regression and Outlier Detection; Wiley: New York, NY, USA, 1987.

17. Wu, M.; Zuo, Y. Trimmed and Winsorized standard deviations based on a scaled deviation. J. Nonparametr. Stat. 2008, 20, 319–335.

18. Maronna, R.A.; Yohai, V.J. Bias-robust estimates of regression based on projections. Ann. Stat. 1993, 21, 965–990.

19. Rousseeuw, P.J.; Struyf, A. Characterizing angular symmetry and regression symmetry. J. Stat. Plan. Inference 2004, 122, 161–173.

20. Rousseeuw, P.J.; Struyf, A. Computing location depth and regression depth in higher dimensions. Stat. Comput. 1998, 8, 193–203.

21. Liu, X.; Zuo, Y. Computing halfspace depth and regression depth. Commun. Stat. Simul. Comput. 2014, 43, 969–985.

22. Zuo, Y. Computation of projection regression depth and its induced median. arXiv 2019, arXiv:1905.11846.

23. Wu, M.; Zuo, Y. Trimmed and Winsorized means based on a scaled deviation. J. Stat. Plan. Inference 2009, 139, 350–365.

24. Mizera, I.; Volauf, M. Continuity of halfspace depth contours and maximum depth estimators: Diagnostics of depth-related methods. J. Multivar. Anal. 2002, 83, 365–388.

25. Van Aelst, S.; Rousseeuw, P.J.; Hubert, M.; Struyf, A. The deepest regression method. J. Multivar. Anal. 2002, 81, 138–166.

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).