As a sudden disaster, fire poses a serious threat to people’s lives and property. The latest figures from China’s National Fire and Rescue Administration show that fire and rescue teams nationwide reported a total of 908,000 fires in 2024, which caused 2,001 deaths, 2,665 injuries, and direct economic losses of 7.74 billion yuan [1]. These figures underline the urgency of fire prevention and control and, with it, the importance of researching efficient and accurate fire and smoke detection technology. In the early stage of a fire, efficient flame and smoke detection can identify the hazard and issue an accurate, timely warning, buying time for emergency response. Such technology can substantially speed up the response of fire prevention and control, reduce casualties and property losses, and play a key role in maintaining public safety. In-depth research on fire and smoke detection technology and continued improvement of detection accuracy are therefore of great significance for safeguarding people’s lives and property.

The detection of fire and smoke has long been a key area of research, and detection techniques have improved steadily with advances in technology, from early sensor-based detection to intelligent image recognition. In recent years, the emergence of machine learning and deep learning has further advanced fire and smoke detection. Sensor-based detection relies primarily on physical characteristics such as flame light intensity, smoke concentration, and infrared thermal imaging, and it is easily affected by environmental factors. PARK et al. [2,3,4,5] performed fire detection based on the physical characteristics of flames and fire scenes by analyzing the flame and the on-site environment. These studies used traditional image processing methods, which generalize poorly and may not cope with different types of fire or smoke or with complex environments. With the emergence of machine learning, computer vision-based methods became increasingly popular for fire detection. Qi X et al. [6,7,8,9,10,11] (see Table 1) improved fire detection classification and reduced false alarm rates by combining machine learning, deep learning, and computer vision methods.
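To make the limitation of hand-crafted rules concrete, the following is a minimal sketch of a color-rule flame-pixel classifier of the general kind used in traditional image processing pipelines. It is not the method of PARK et al. [2,3,4,5]: the rule (bright, red-dominant pixels with R ≥ G > B) and the red threshold of 115 are illustrative assumptions, and images are plain nested lists of (R, G, B) tuples to keep the example dependency-free.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each in 0..255

# Hypothetical red-channel threshold; real systems tune this per camera and scene.
RED_THRESHOLD = 115


def is_flame_pixel(pixel: Pixel, red_threshold: int = RED_THRESHOLD) -> bool:
    """Fixed color rule: a flame pixel is assumed to be bright and red-dominant."""
    r, g, b = pixel
    return r > red_threshold and r >= g > b


def flame_mask(image: List[List[Pixel]]) -> List[List[bool]]:
    """Apply the per-pixel rule to every pixel of an RGB image given as nested lists."""
    return [[is_flame_pixel(p) for p in row] for row in image]


def flame_ratio(image: List[List[Pixel]]) -> float:
    """Fraction of pixels flagged as flame; an alarm could fire above some ratio."""
    mask = flame_mask(image)
    total = sum(len(row) for row in mask)
    flagged = sum(sum(row) for row in mask)
    return flagged / total if total else 0.0


if __name__ == "__main__":
    frame = [
        [(230, 120, 40), (20, 30, 40)],   # flame pixel, dark background
        [(210, 140, 60), (90, 90, 90)],   # orange sign (not fire), gray smoke
    ]
    print(flame_mask(frame))   # [[True, False], [True, False]]
    print(flame_ratio(frame))  # 0.5
```

In this toy frame the orange sign passes the rule (a false positive) while the gray smoke pixel fails it (a missed detection), which is exactly the kind of brittleness that motivated the move to learned features.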

With the advancement of deep learning, the YOLO series, the mainstream family of one-stage object detectors, has become the most widely used choice for flame and smoke detection owing to its real-time performance and accuracy. V.-H. Hoang [12] proposed a hyperparameter tuning method based on Bayesian optimization to improve the performance of YOLO models in fire detection. By selecting hyperparameters automatically, the method improves detection accuracy and efficiency, and the optimized model performs well in fire identification, offering an effective solution for real-time fire monitoring systems. S. Dalal [13] proposed a hybrid feature extraction method that combines local binary patterns (LBP) with a convolutional neural network (CNN) and integrated it into the YOLO-v5 model for fire and smoke detection under different environmental conditions in smart cities. Saydirasulovich, S.N. [14] improved the lightweight YOLOv5s model with depthwise separable convolution and an attention mechanism to strengthen the early detection of agricultural fires; the results show that the model effectively detects early agricultural fires and reduces losses. Chen, C. [15] introduced a stronger feature extraction module and an improved loss function into the PP-YOLO network, which improved the model’s ability to recognize small targets and flames in complex scenes, reduced false and missed detections, and provided a feasible solution for early fire warning. Deng et al. [16,17,18,19,20,21] (see Table 2) optimized YOLO-based network architectures to enhance fire and smoke detection. By introducing new modules and mechanisms, such as a dual-channel bottleneck structure, a small-target detection strategy, and an efficient feature extraction and aggregation mechanism, these models improve flame and smoke recognition while reducing both false positive and false negative rates. The improved algorithms also adapt better to diverse environments and emphasize real-time performance and practicality, enabling rapid and accurate detection of fire and smoke in real monitoring systems and providing solid technical support for early disaster warning. Talaat [22] proposed an improved fire detection method for smart city environments that enhances the baseline YOLO-v8 model with an attention mechanism and optimized feature fusion, aiming to improve the accuracy, real-time performance, and robustness of fire detection in complex urban scenes and achieving high-precision real-time fire warning. Deng, L. et al. [23] proposed a lightweight AH-YOLO model based on adaptive receptive field modules and hierarchical feature fusion for the real-time remote monitoring of hangars. To overcome the limitations of existing forest fire detection algorithms in complex environments, such as insufficient feature extraction, high computational cost, and difficult deployment, Zhou, N. et al. [24] introduced spatial multi-scale feature fusion and hybrid pooling modules in the proposed YOLOv8n-SMMP forest fire detection model. The method of Didis H M [25] analyzes video in real time to detect early fire risks and suits large-scale rapid inspections, but it may miss detections because of signal blind spots; by contrast, the YOLOv11-CHBG model in this study is better suited to long-term monitoring tasks. Chaikarnjanakit T [26] proposed a two-stage YOLO smoke detection model and combined it with a dynamic risk warning mechanism to build a dynamic smoke warning system, whose two-level false alarm filtering reduces the false alarm rate.
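Dalal [13] pairs local binary pattern (LBP) texture features with CNN features. As a hedged illustration of the LBP half only, the sketch below computes the basic 3×3 LBP code and a 256-bin histogram for a grayscale image given as nested lists of intensities. The clockwise neighbor ordering and the plain (non-uniform, non-rotation-invariant) variant are assumptions, and how [13] fuses these features into YOLO-v5 is not reproduced here.

```python
from typing import List

# Offsets of the 8 neighbors, clockwise from the top-left pixel (ordering is a convention).
NEIGHBOR_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
                    (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img: List[List[int]], y: int, x: int) -> int:
    """Basic 3x3 LBP: each neighbor >= the center sets one bit of an 8-bit code."""
    center = img[y][x]
    code = 0
    for bit, (dy, dx) in enumerate(NEIGHBOR_OFFSETS):
        if img[y + dy][x + dx] >= center:
            code |= 1 << (7 - bit)  # the first neighbor becomes the most significant bit
    return code


def lbp_image(img: List[List[int]]) -> List[List[int]]:
    """LBP codes for all interior pixels (the one-pixel border is skipped)."""
    h, w = len(img), len(img[0])
    return [[lbp_code(img, y, x) for x in range(1, w - 1)] for y in range(1, h - 1)]


def lbp_histogram(img: List[List[int]]) -> List[int]:
    """256-bin histogram of LBP codes: the texture descriptor handed to a classifier."""
    hist = [0] * 256
    for row in lbp_image(img):
        for code in row:
            hist[code] += 1
    return hist


if __name__ == "__main__":
    patch = [[10, 20, 30],
             [40, 25, 10],
             [50, 25, 5]]
    print(lbp_image(patch))  # [[39]]  (bits 00100111)
```

Because each neighbor is compared only with the center pixel, the code is unchanged under monotonic brightness changes, which is one reason LBP is a useful complement to learned CNN features in scenes with varying illumination.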

Although existing fire detection methods are relatively mature, high-precision real-time detection remains challenging because flames and smoke change rapidly and have blurred boundaries. A model’s real-time performance depends largely on its parameter count and computational complexity: in general, fewer parameters and less computation yield faster inference and stronger real-time capability. When designing flame and smoke detection models, two optimizations should therefore be prioritized while maintaining detection accuracy: first, model compression to improve real-time detection performance; second, stronger feature representation to capture fuzzy boundaries accurately and ensure robust detection. This dual strategy, balancing lightweight design with feature enhancement, is critical for improving the performance of fire detection models.
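The link between parameter count, computation, and speed can be made concrete with a back-of-the-envelope count. The sketch below compares a standard k×k convolution with a depthwise separable one (the substitution used in [14]). The layer sizes are hypothetical, bias terms are ignored, and computation is counted as multiply-accumulate operations (MACs) for a stride-1 layer; actual inference speed also depends on memory access and hardware.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConvCost:
    params: int  # learnable weights (bias terms ignored)
    macs: int    # multiply-accumulate operations for one input image


def standard_conv_cost(k: int, c_in: int, c_out: int, h_out: int, w_out: int) -> ConvCost:
    """Standard k x k convolution: every output channel sees every input channel."""
    params = k * k * c_in * c_out
    return ConvCost(params, params * h_out * w_out)


def depthwise_separable_cost(k: int, c_in: int, c_out: int, h_out: int, w_out: int) -> ConvCost:
    """Depthwise k x k conv (one filter per input channel), then a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    params = depthwise + pointwise
    # Both stages run at every output position, so MACs scale with the same map size.
    return ConvCost(params, params * h_out * w_out)


if __name__ == "__main__":
    # Hypothetical layer: 3x3 kernel, 64 -> 128 channels, 80x80 output feature map.
    std = standard_conv_cost(3, 64, 128, 80, 80)
    dsc = depthwise_separable_cost(3, 64, 128, 80, 80)
    print(std)  # ConvCost(params=73728, macs=471859200)
    print(dsc)  # ConvCost(params=8768, macs=56115200)
    print(round(dsc.params / std.params, 3))  # 0.119, i.e. 1/c_out + 1/k**2
```

The reduction ratio 1/c_out + 1/k² holds for any layer size, so for 3×3 kernels the depthwise separable form needs roughly one eighth to one ninth of the weights and MACs once c_out is large.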

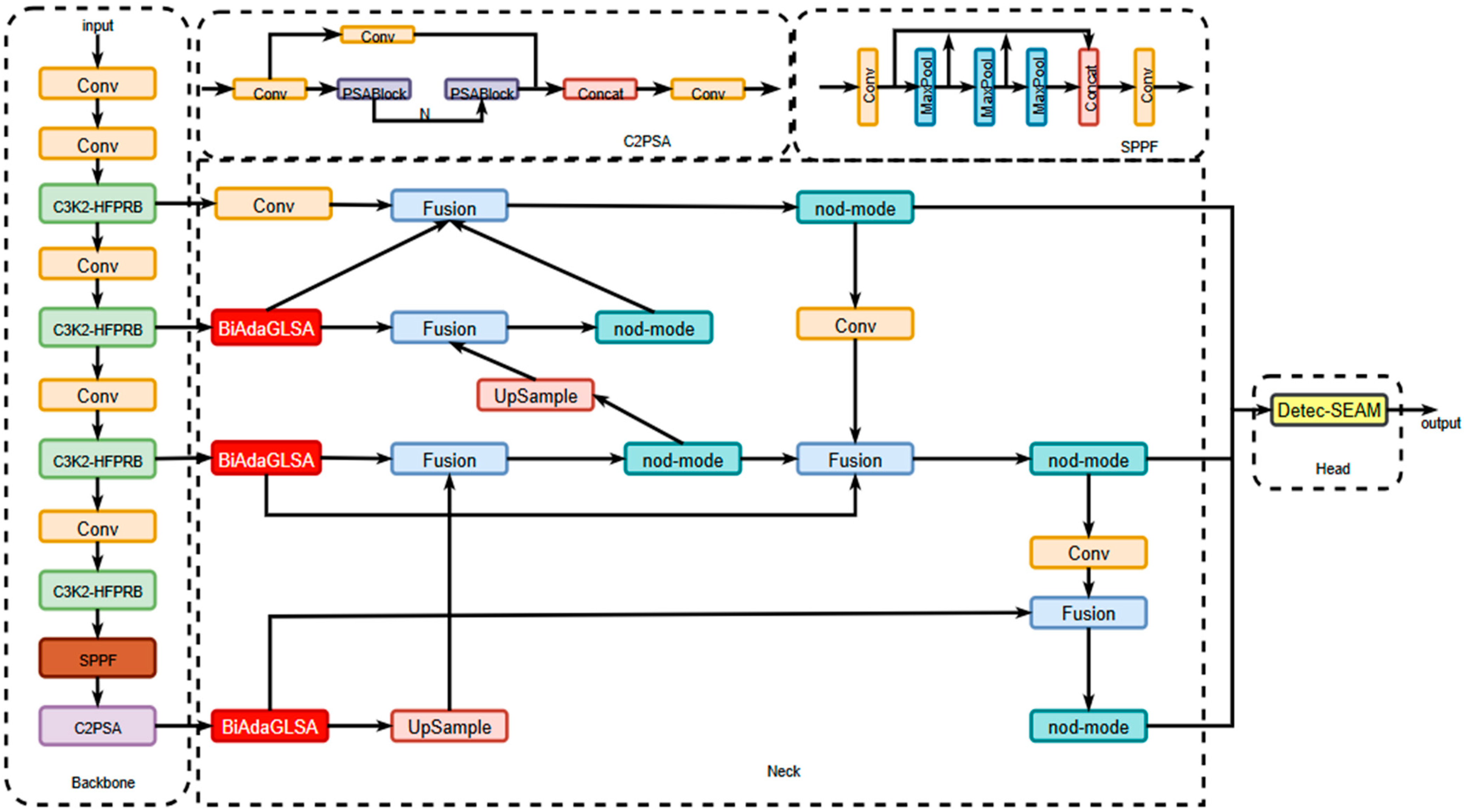

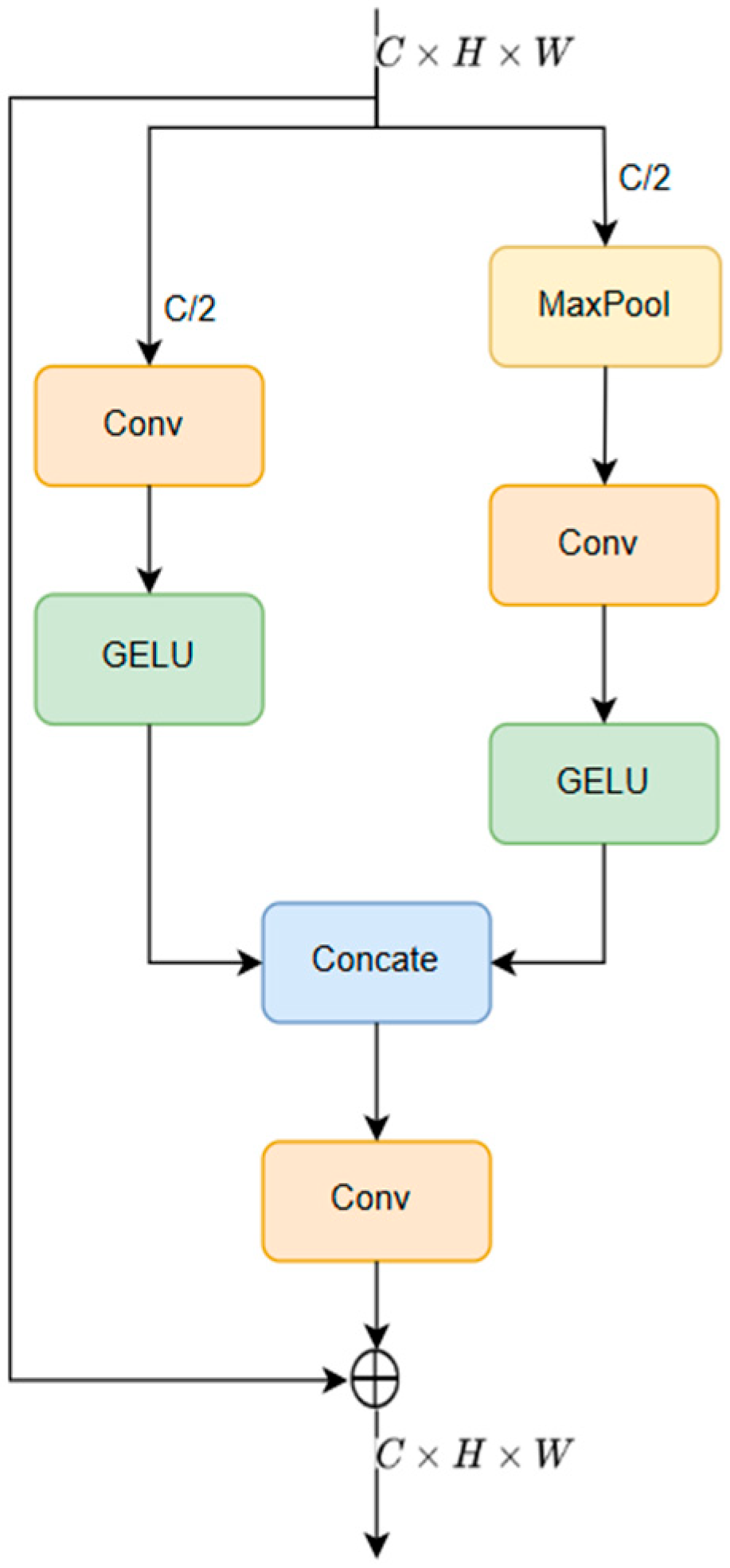

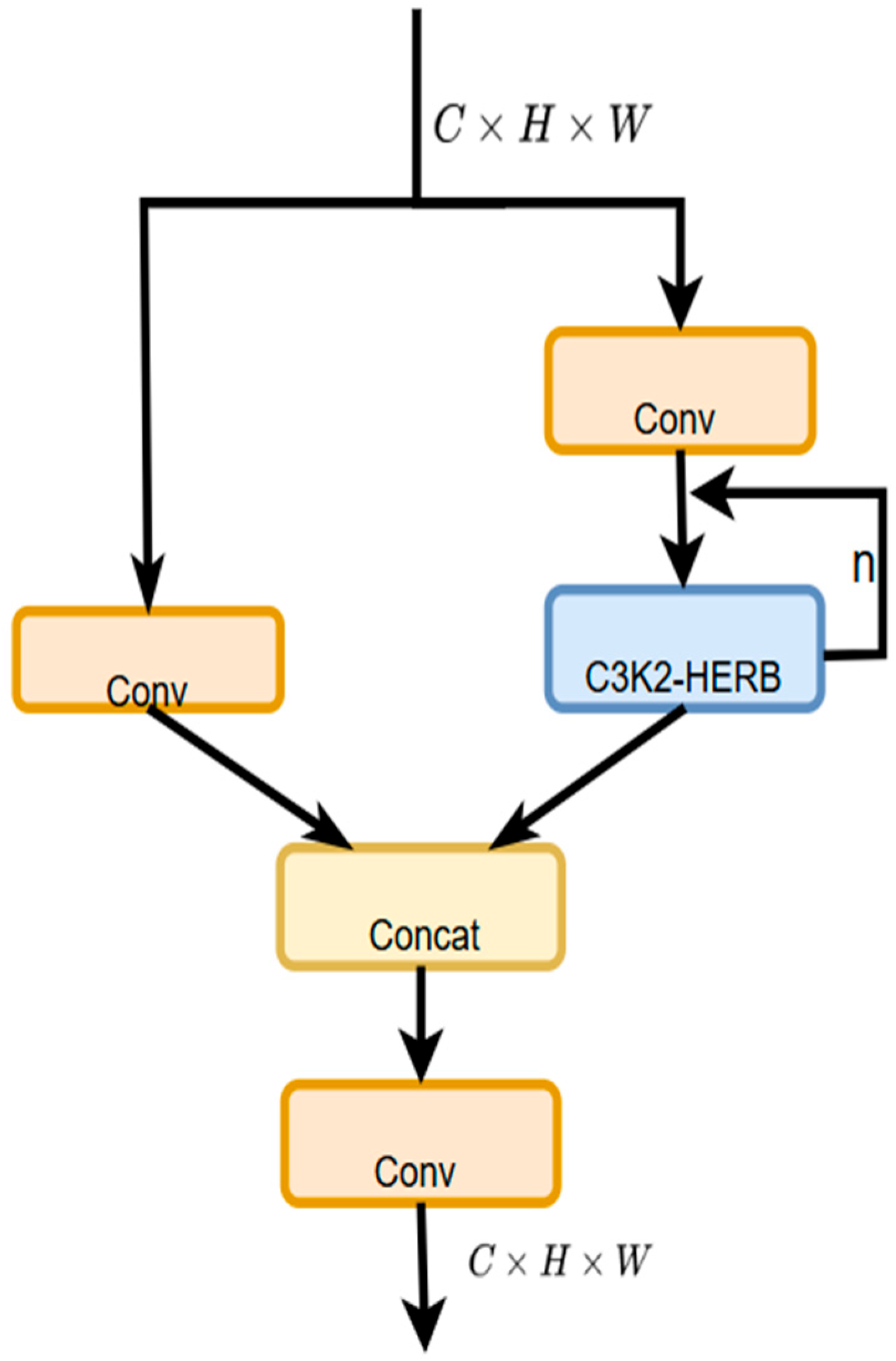

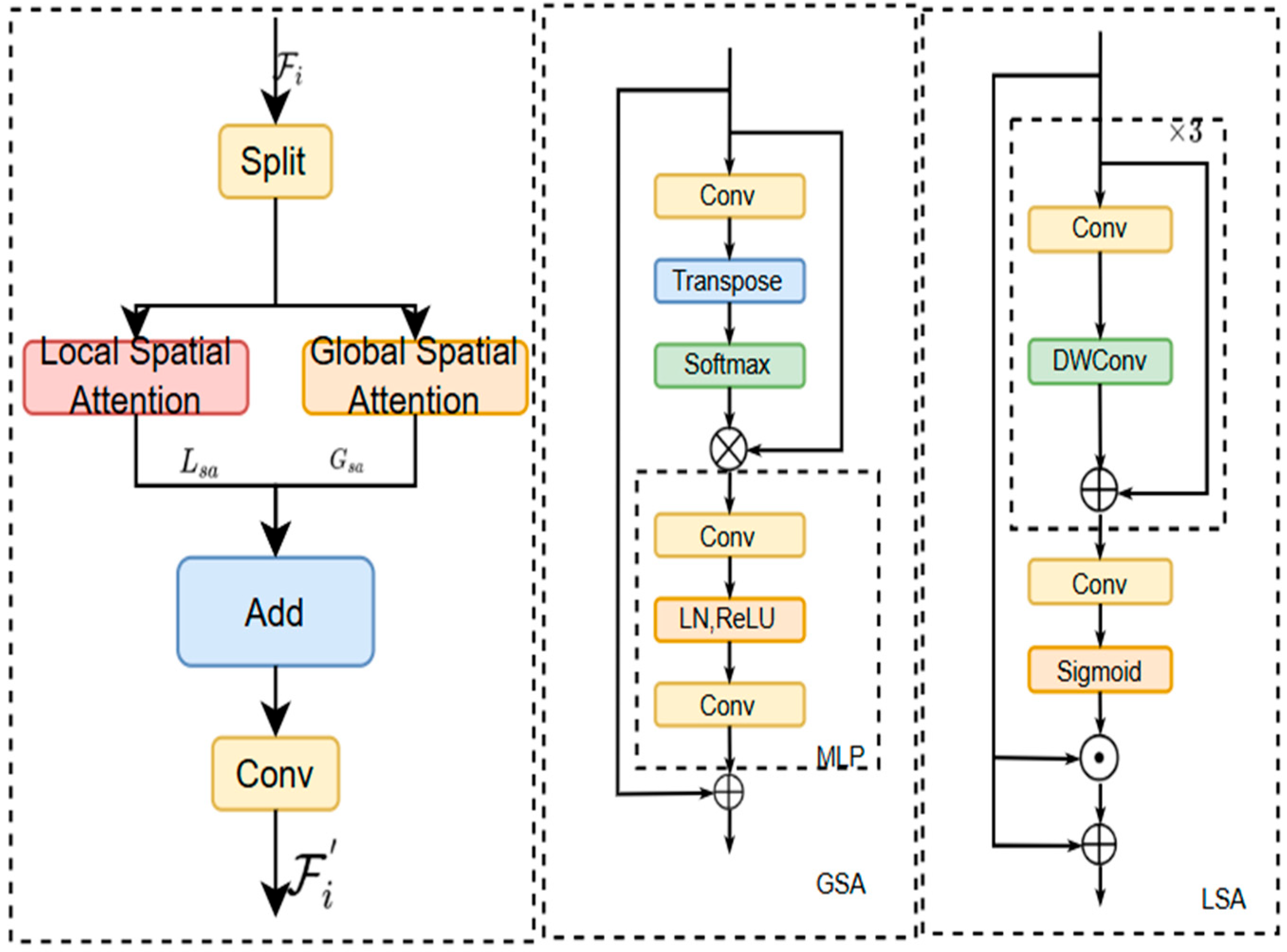

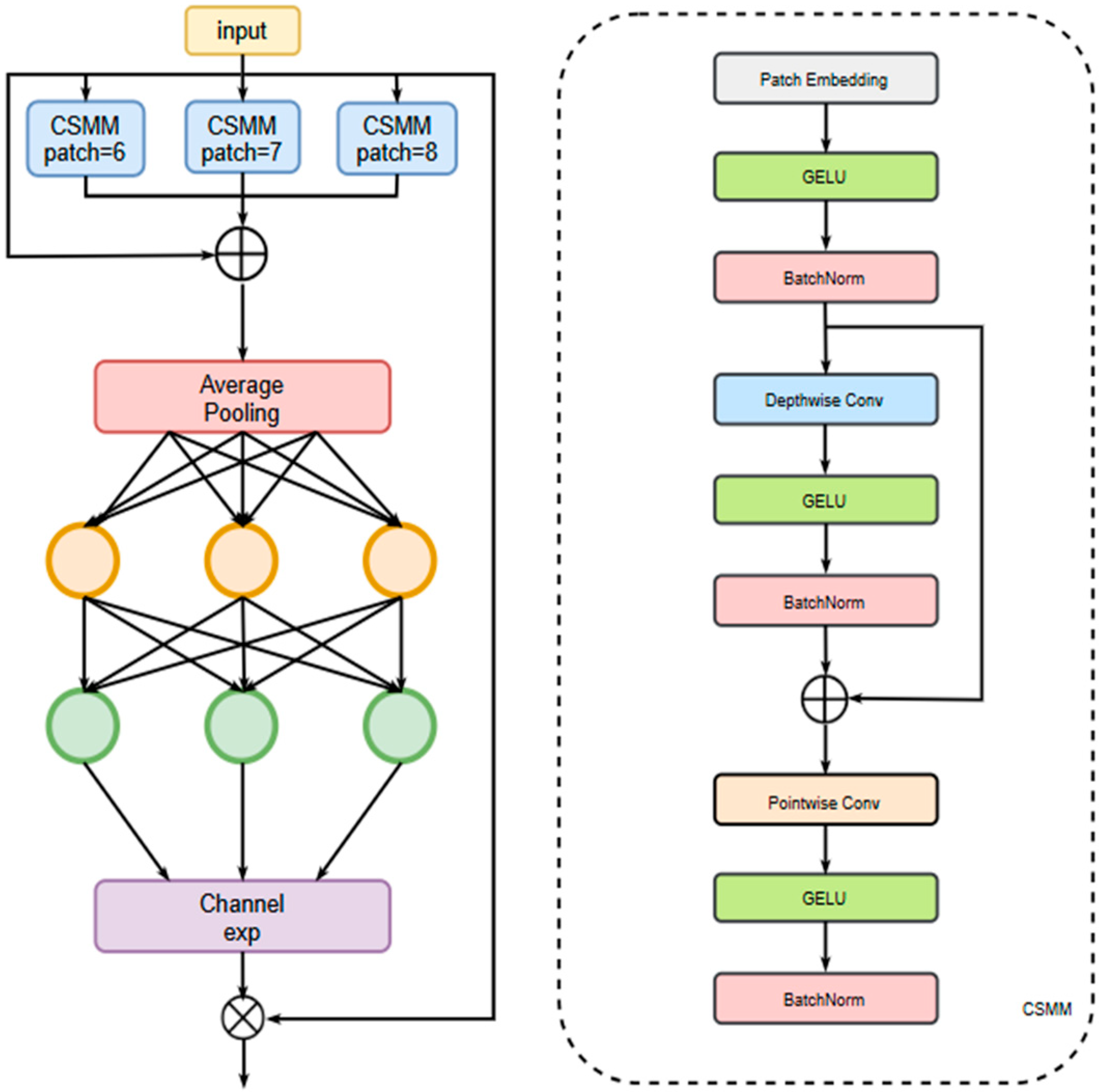

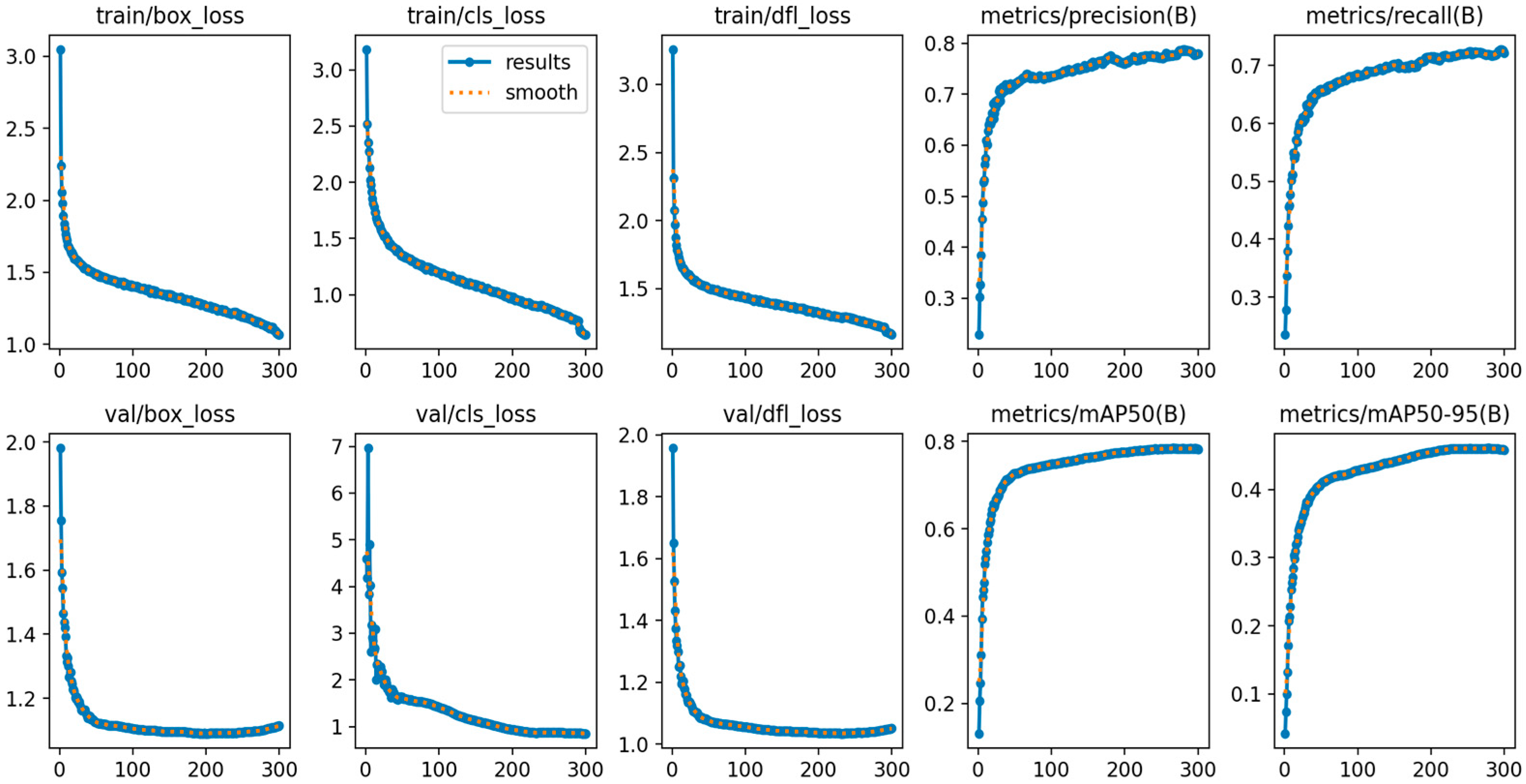

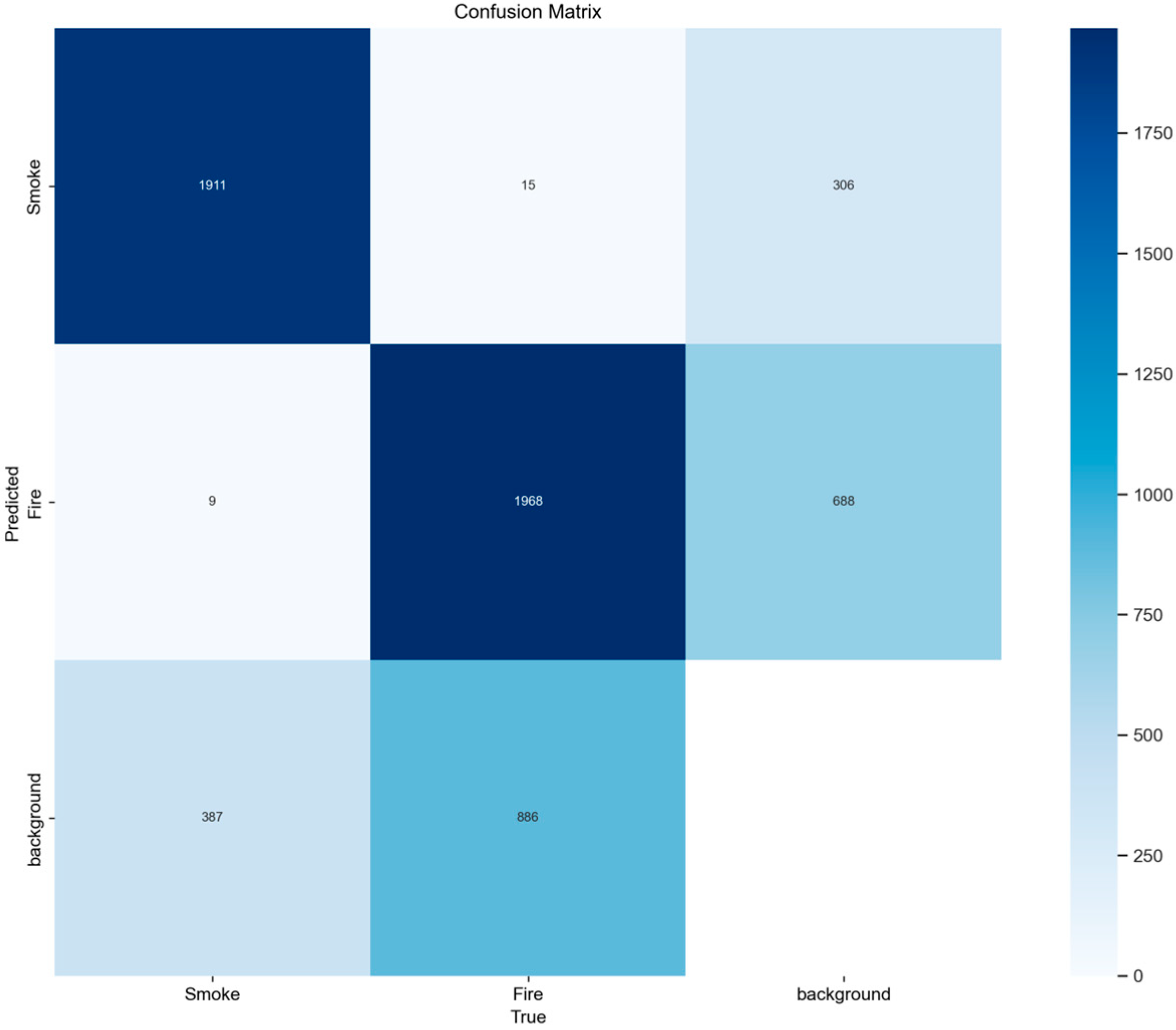

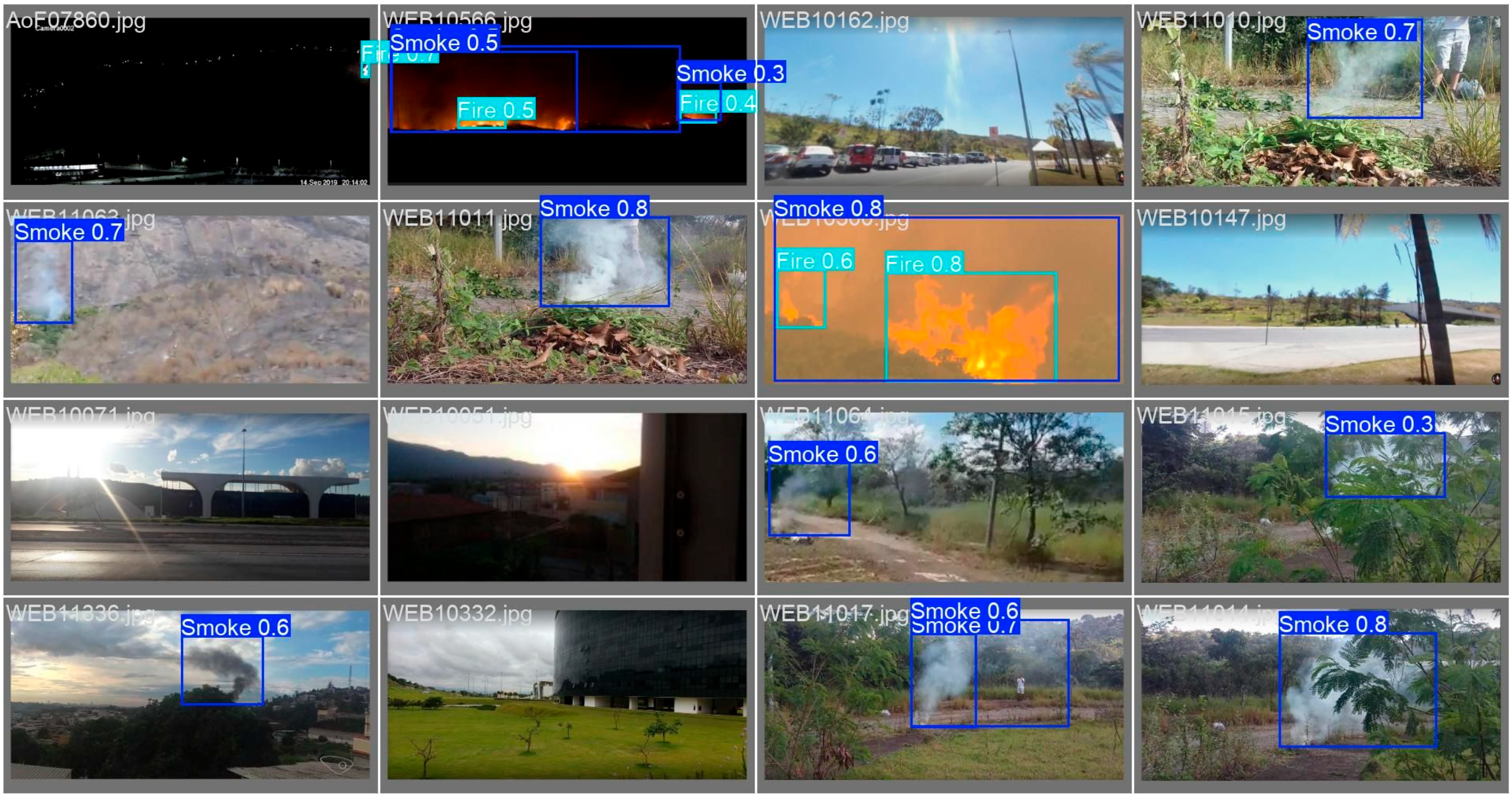

In this study, a lightweight model, YOLOv11-CHBG, is proposed based on YOLOv11.