Lightweight UAV-Based System for Early Fire-Risk Identification in Wild Forests

Abstract

1. Introduction

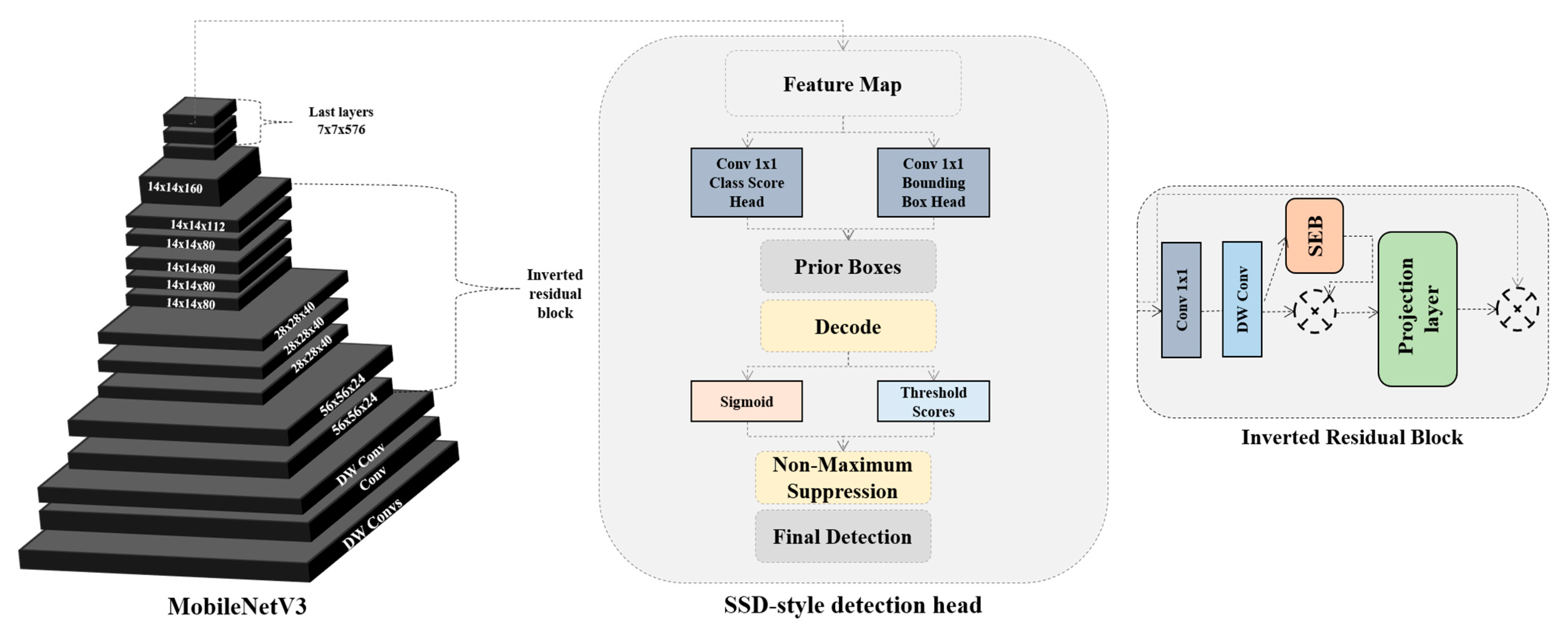

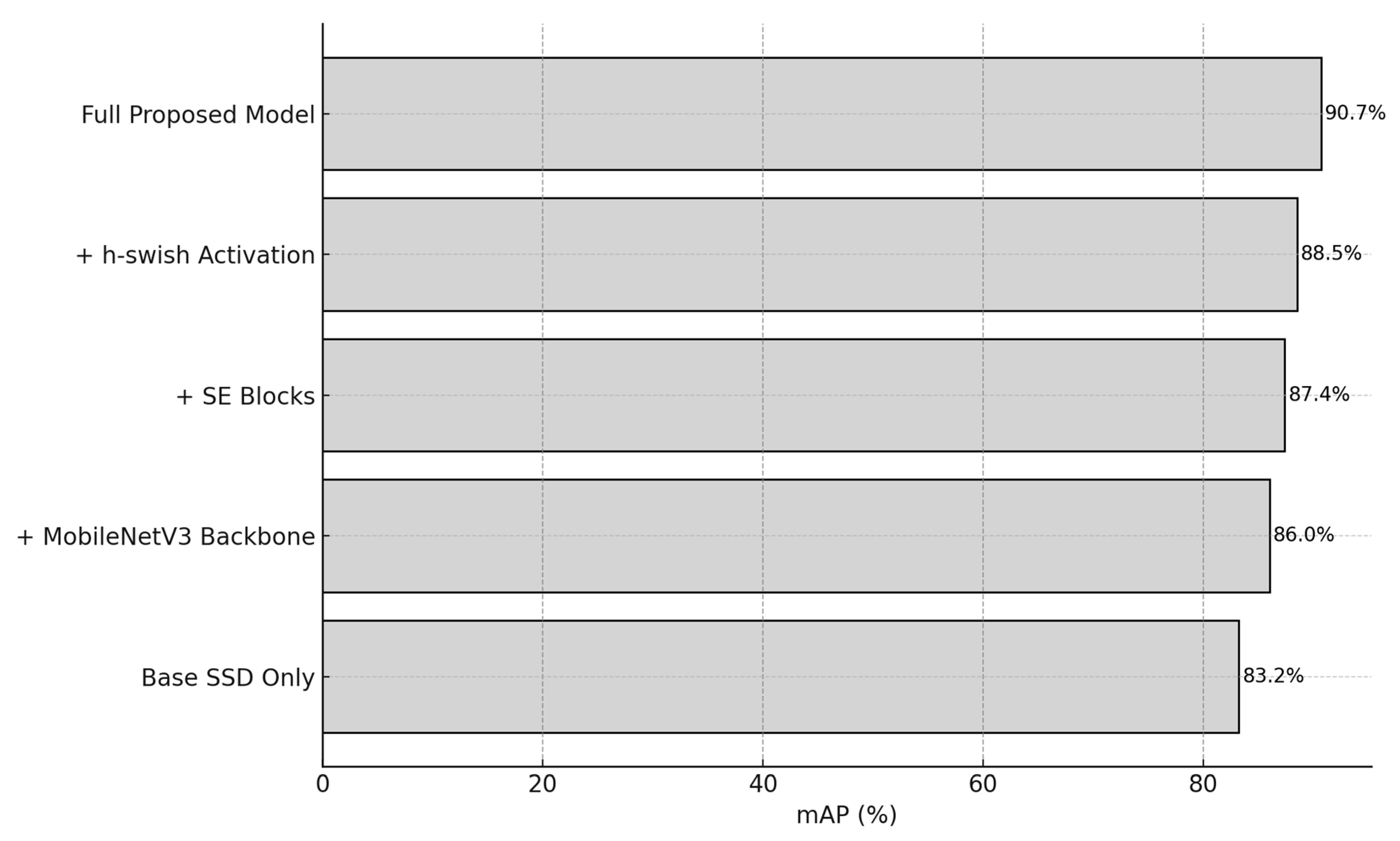

- We introduce a customized lightweight object detection architecture for UAV-based forest monitoring. Unlike conventional MobileNetV3 + SSD pairings, it integrates squeeze-and-excitation (SE) blocks in the mid-level feature layers, fine-tunes the feature-map resolution scales, and is optimized for small-object detection in cluttered, foliage-dense aerial scenes.

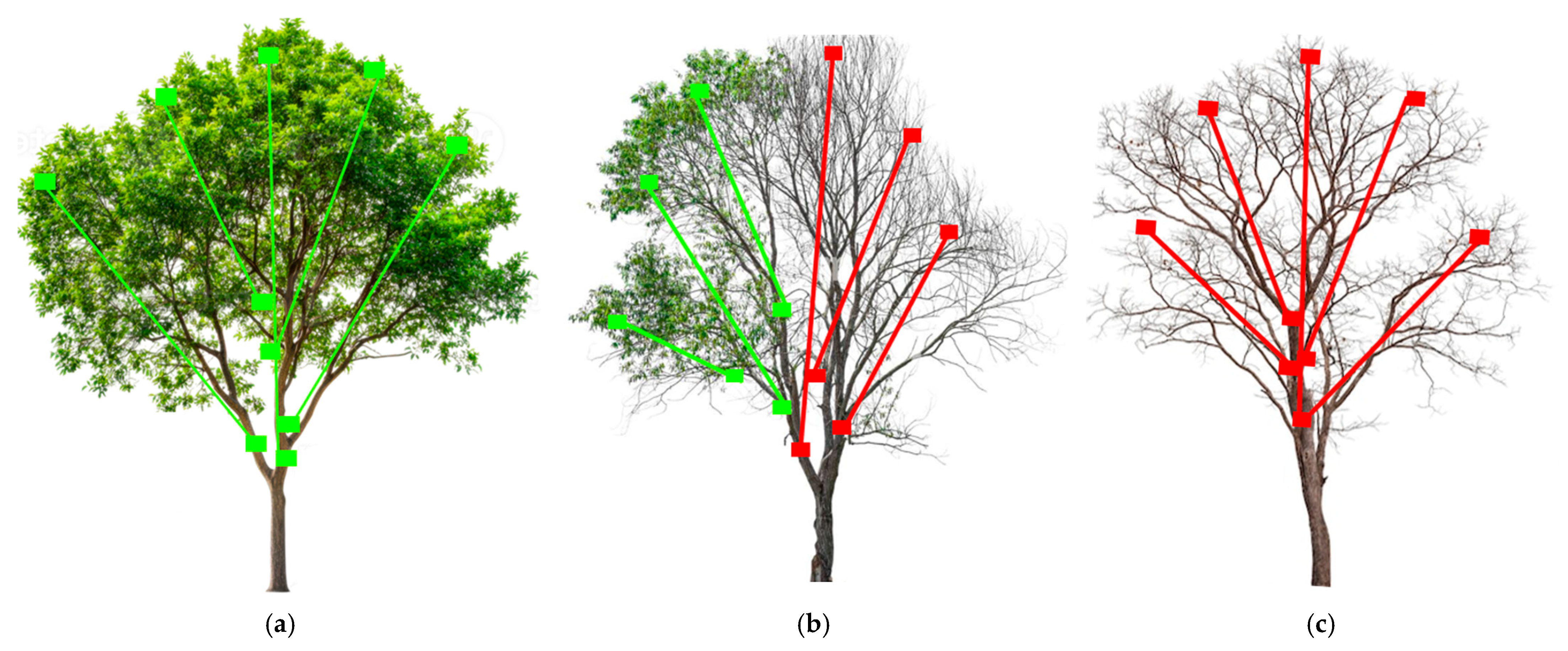

- We construct a domain-specific dataset of more than 3000 annotated UAV images covering healthy, partially dead, and fully dead trees, collected across different seasons and environmental contexts to improve generalization under natural variability.

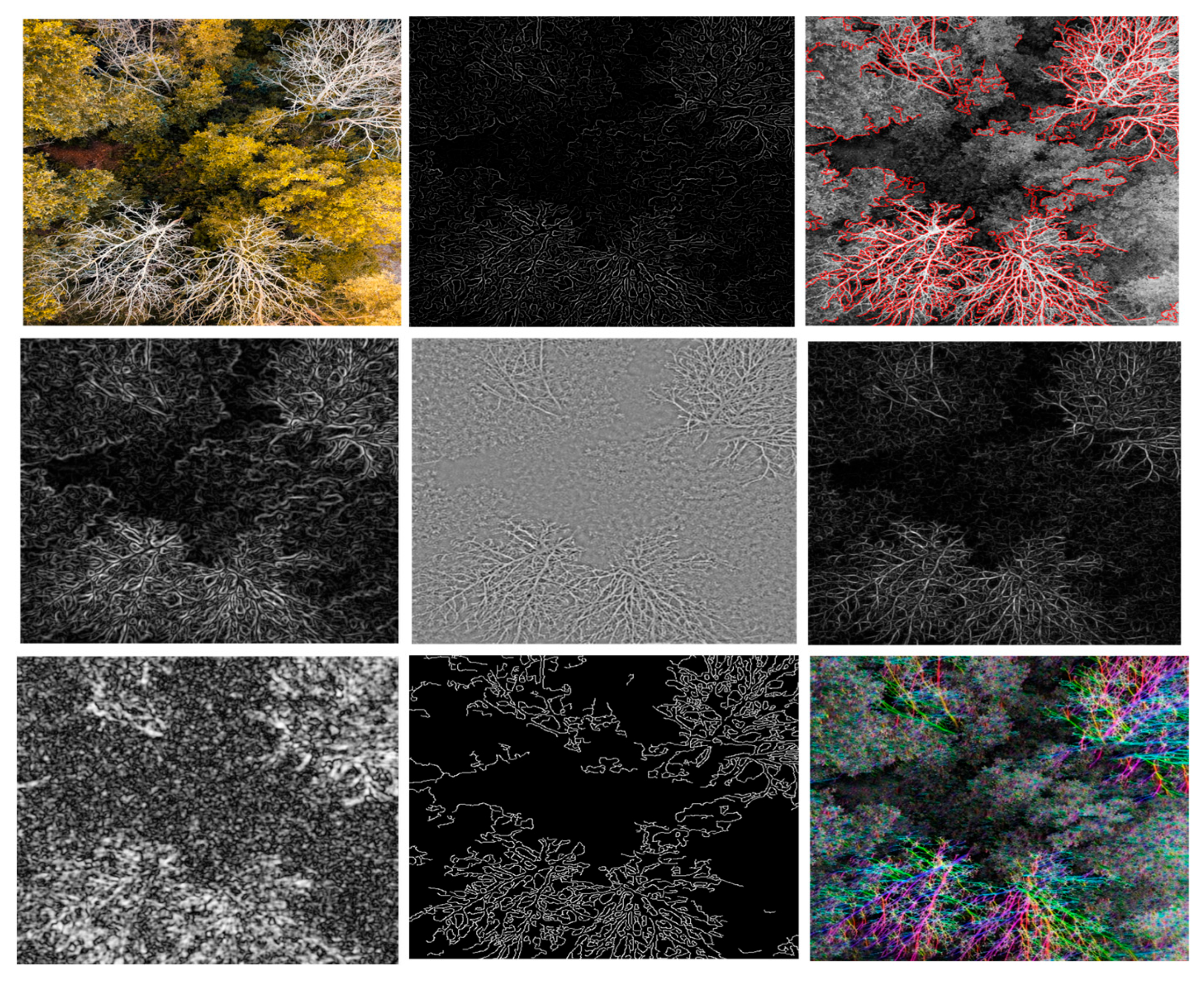

- We design a UAV-compatible pipeline that includes resolution-standardized image preprocessing, tree-health-aware augmentation, and lightweight model tuning for Jetson Xavier NX deployment, balancing high detection performance against real-time inference.

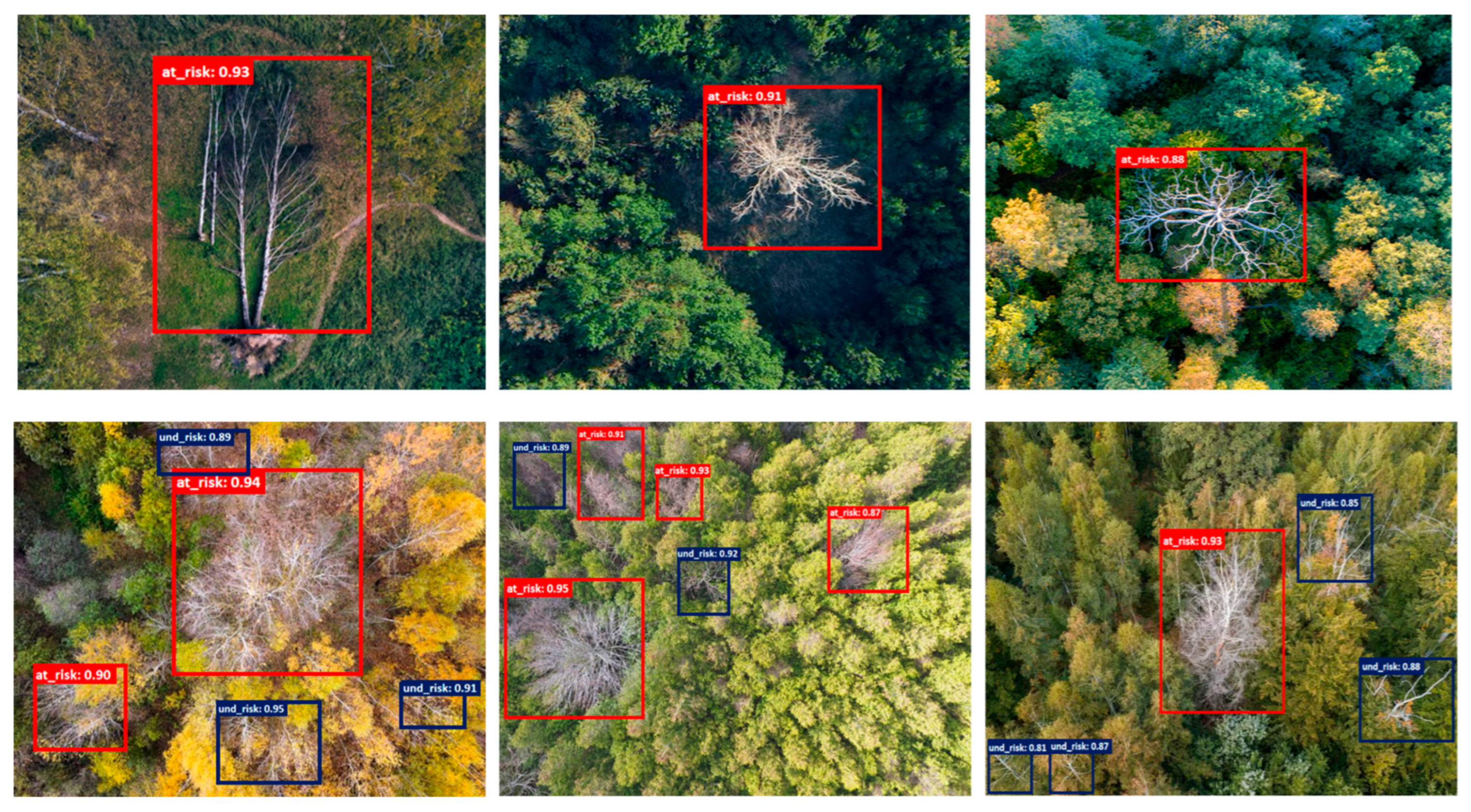

- We conduct a comprehensive comparison with 20 state-of-the-art detection models, demonstrating that our framework achieves the highest precision (94.1%) and recall (93.7%) among them while maintaining a small model size (8.7 MB) and low latency (16 ms per image on Jetson Xavier NX) suitable for edge deployment.
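
The squeeze-and-excitation gating named in the first contribution can be illustrated with a minimal, framework-free sketch. It is not the authors' implementation: the function name `squeeze_excite`, the weight matrices `w_reduce` and `w_expand`, the omission of biases, and the hard-sigmoid gate (common in MobileNetV3-style SE modules) are all assumptions made for illustration, since the layer configuration is not given here.

```python
def relu(x):
    return max(0.0, x)


def hard_sigmoid(x):
    # Piecewise-linear approximation of the sigmoid (assumed gate function).
    return min(1.0, max(0.0, (x + 3.0) / 6.0))


def squeeze_excite(feature_map, w_reduce, w_expand):
    """Apply channel-wise SE gating.

    feature_map: list of C channels, each an H x W list of lists.
    w_reduce:    (C // r) x C weights of the bottleneck layer (hypothetical).
    w_expand:    C x (C // r) weights of the expansion layer (hypothetical).
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [
        sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
        for ch in feature_map
    ]
    # Excitation: bottleneck with ReLU, then expansion with a gate in [0, 1].
    hidden = [relu(sum(w * s for w, s in zip(row, squeezed))) for row in w_reduce]
    gates = [hard_sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w_expand]
    # Scale: reweight every value in a channel by that channel's gate.
    return [
        [[v * g for v in row] for row in ch]
        for ch, g in zip(feature_map, gates)
    ]


# Toy input: two 2x2 channels, reduction to a single hidden unit.
toy_map = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]
toy_out = squeeze_excite(toy_map, w_reduce=[[1.0, 1.0]], w_expand=[[0.0], [10.0]])
```

In this toy case the first channel is halved (gate 0.5) and the second passes through unchanged (gate 1.0), which is the behavior SE relies on to emphasize informative channels.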

2. Related Works

3. Materials and Methods

3.1. Baseline Models

3.2. The Proposed Model

4. The Experiment and Results

4.1. Dataset

4.2. The Experimental Results

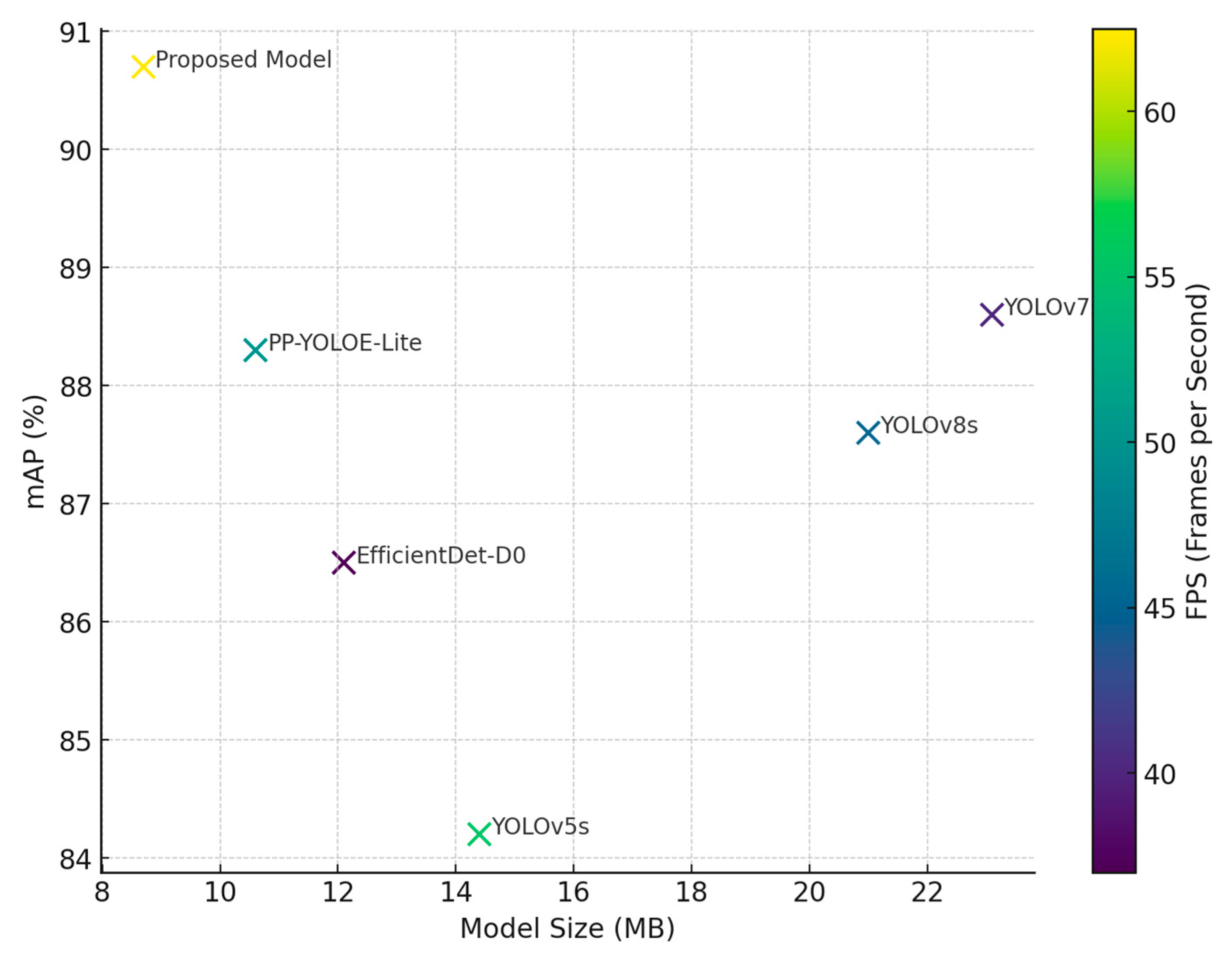

4.3. Comparing with SOTA Models

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Model | Precision (%) | Recall (%) | mAP (%) | F1-Score (%) |
|---|---|---|---|---|
| SSD | 88.5 | 85.2 | 84.6 | 86.3 |
| Proposed model | 93.9 | 88.7 | 86.3 | 89.3 |

| Model | Precision (%) | Recall (%) | mAP (%) | F1-Score (%) |
|---|---|---|---|---|
| SSD | 88.87 | 85.2 | 83.16 | 81.13 |
| YOLOv5s | 86.04 | 84.19 | 84.19 | 86.95 |
| YOLOv6s | 89.69 | 87.78 | 85.17 | 85.22 |
| YOLOv7s | 91.09 | 87.98 | 88.65 | 88.11 |
| YOLOv8s | 90.78 | 90.9 | 87.62 | 90.18 |
| YOLOv9s | 92.96 | 91.12 | 90.01 | 91.00 |
| Proposed model | 94.07 | 93.74 | 90.73 | 91.03 |

| Model | Backbone | Precision (%) | Recall (%) | mAP@0.5 (%) | F1-Score (%) |
|---|---|---|---|---|---|
| SSD | VGG16 | 88.5 | 85.2 | 84.6 | 86.3 |
| SSD-Lite | MobileNetV2 | 89.0 | 85.6 | 85.1 | 87.2 |
| YOLOv5s | CSPDarknet | 86.0 | 84.2 | 84.2 | 86.9 |
| YOLOv5m | CSPDarknet | 87.9 | 86.5 | 86.0 | 87.2 |
| YOLOv6s | EfficientRepV1 | 89.7 | 87.8 | 85.2 | 85.2 |
| YOLOv6n | EfficientRepLite | 88.3 | 85.9 | 83.9 | 86.1 |
| YOLOv7s | E-ELAN | 91.1 | 88.0 | 88.6 | 88.1 |
| YOLOv7-tiny | E-ELAN-lite | 89.8 | 87.0 | 86.4 | 87.3 |
| YOLOv8s | Efficient YOLO | 90.8 | 90.9 | 87.6 | 90.2 |
| YOLOv8n | Efficient YOLO | 89.3 | 87.1 | 85.3 | 87.9 |
| YOLOv9s | RT-DETR Backbone | 92.9 | 91.1 | 90.0 | 91.0 |
| Faster R-CNN | ResNet50 | 86.2 | 84.7 | 82.8 | 85.4 |
| RetinaNet | ResNet50 | 87.5 | 85.9 | 84.7 | 86.7 |
| EfficientDet-D0 | EfficientNetB0 | 90.1 | 88.6 | 86.5 | 89.3 |
| EfficientDet-D1 | EfficientNetB1 | 90.8 | 89.4 | 88.2 | 90.1 |
| NanoDet | ShuffleNetV2 | 87.2 | 83.9 | 82.5 | 85.5 |
| PP-YOLOE-Lite | MobileNetV3 | 91.5 | 89.8 | 88.3 | 90.6 |
| PicoDet | MobileNetV2 | 89.9 | 87.2 | 85.4 | 88.5 |
| CenterNet-Tiny | Hourglass-lite | 88.7 | 86.4 | 84.1 | 87.5 |
| Tiny-YOLOv4 | CSPDarknet-Tiny | 88.4 | 85.7 | 83.7 | 86.9 |
| Proposed Model | MobileNetV3-Small | 94.1 | 93.7 | 90.7 | 91.0 |
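
For readers who want to relate the precision, recall, and F1 columns, the standard definition of F1 is the harmonic mean of precision and recall. The helper below is a minimal sketch of that definition only; the name `f1_score` is illustrative. How the tabulated F1 values were aggregated is not stated, and they do not always equal this harmonic mean (for YOLOv8s the table lists 90.2), so a different aggregation such as per-class averaging may have been used.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall, in the same units as the inputs."""
    if precision + recall == 0:
        return 0.0  # Convention: F1 is zero when both inputs are zero.
    return 2 * precision * recall / (precision + recall)


# Example using the YOLOv8s precision and recall from the comparison table.
yolov8s_f1 = f1_score(90.8, 90.9)
print(f"Harmonic-mean F1 for YOLOv8s: {yolov8s_f1:.2f}")
```

By construction the harmonic mean always lies between the two inputs, which makes it a quick sanity check on any reported precision, recall, and F1 triple.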

| Model | Model Size (MB) | Inference Time (ms/image) | FPS (on Jetson Xavier NX) |
|---|---|---|---|
| YOLOv5s | 14.4 | 18 | 55.6 |
| YOLOv7s | 23.1 | 25 | 40.0 |
| YOLOv8s | 21.0 | 22 | 45.4 |
| EfficientDet-D0 | 12.1 | 27 | 37.0 |
| PP-YOLOE-Lite | 10.6 | 20 | 50.0 |
| Proposed Model | 8.7 | 16 | 62.5 |
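
The FPS column is consistent with the usual conversion from per-image latency, FPS = 1000 / latency in milliseconds, assuming single-image, non-pipelined inference. The sketch below applies that conversion to the latencies in the table; the function name `fps_from_latency` is illustrative, and the results agree with the table to within rounding.

```python
def fps_from_latency(latency_ms):
    """Convert per-image latency in milliseconds to frames per second."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


# Per-image latencies (ms) as listed in the efficiency table.
latencies_ms = {
    "YOLOv5s": 18,
    "YOLOv7s": 25,
    "YOLOv8s": 22,
    "EfficientDet-D0": 27,
    "PP-YOLOE-Lite": 20,
    "Proposed Model": 16,
}

for model, ms in latencies_ms.items():
    print(f"{model}: {fps_from_latency(ms):.1f} FPS")
```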

| Condition | mAP@0.5 (%) |
|---|---|
| Overcast/Neutral | 90.7 |
| Bright/Direct Light | 89.3 |
| Hazy/Low-Light | 88.1 |
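
One simple way to read the lighting results is as a drop in mAP@0.5 relative to the overcast/neutral condition. The snippet below is a small sketch of that arithmetic; treating overcast/neutral as the reference condition and the helper name `drop_vs_reference` are assumptions made for illustration.

```python
# mAP@0.5 (%) per lighting condition, taken from the table above.
map_by_condition = {
    "Overcast/Neutral": 90.7,
    "Bright/Direct Light": 89.3,
    "Hazy/Low-Light": 88.1,
}


def drop_vs_reference(scores, reference_key):
    """Return the absolute mAP drop (percentage points) against a reference."""
    reference = scores[reference_key]
    return {
        name: round(reference - value, 1)
        for name, value in scores.items()
        if name != reference_key
    }


drops = drop_vs_reference(map_by_condition, "Overcast/Neutral")
for condition, drop in drops.items():
    print(f"{condition}: -{drop} percentage points")
```

Under this reading, the largest degradation is 2.6 percentage points, in hazy or low-light conditions.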

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Abdusalomov, A.; Umirzakova, S.; Kutlimuratov, A.; Mirzaev, D.; Dauletov, A.; Botirov, T.; Zakirova, M.; Mukhiddinov, M.; Cho, Y.I. Lightweight UAV-Based System for Early Fire-Risk Identification in Wild Forests. Fire 2025, 8, 288. https://doi.org/10.3390/fire8080288