Performance Evaluation of Real-Time Image-Based Heat Release Rate Prediction Model Using Deep Learning and Image Processing Methods

Abstract

1. Introduction

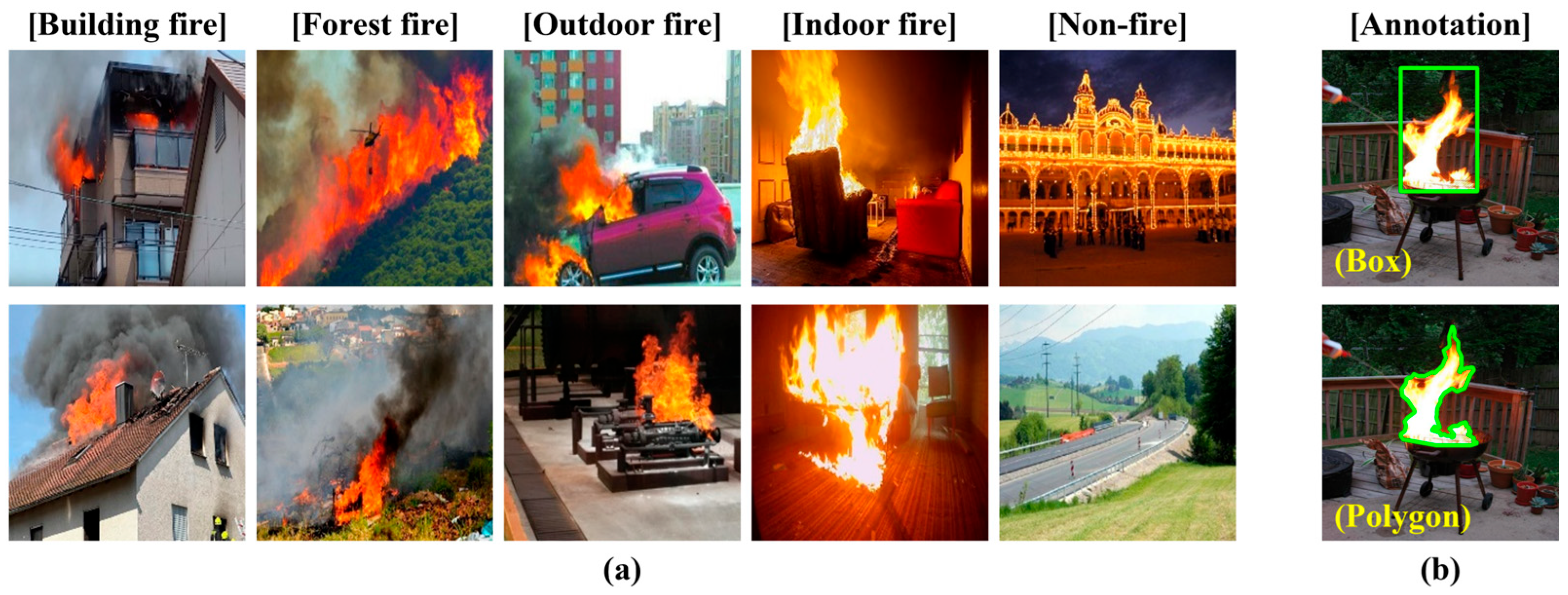

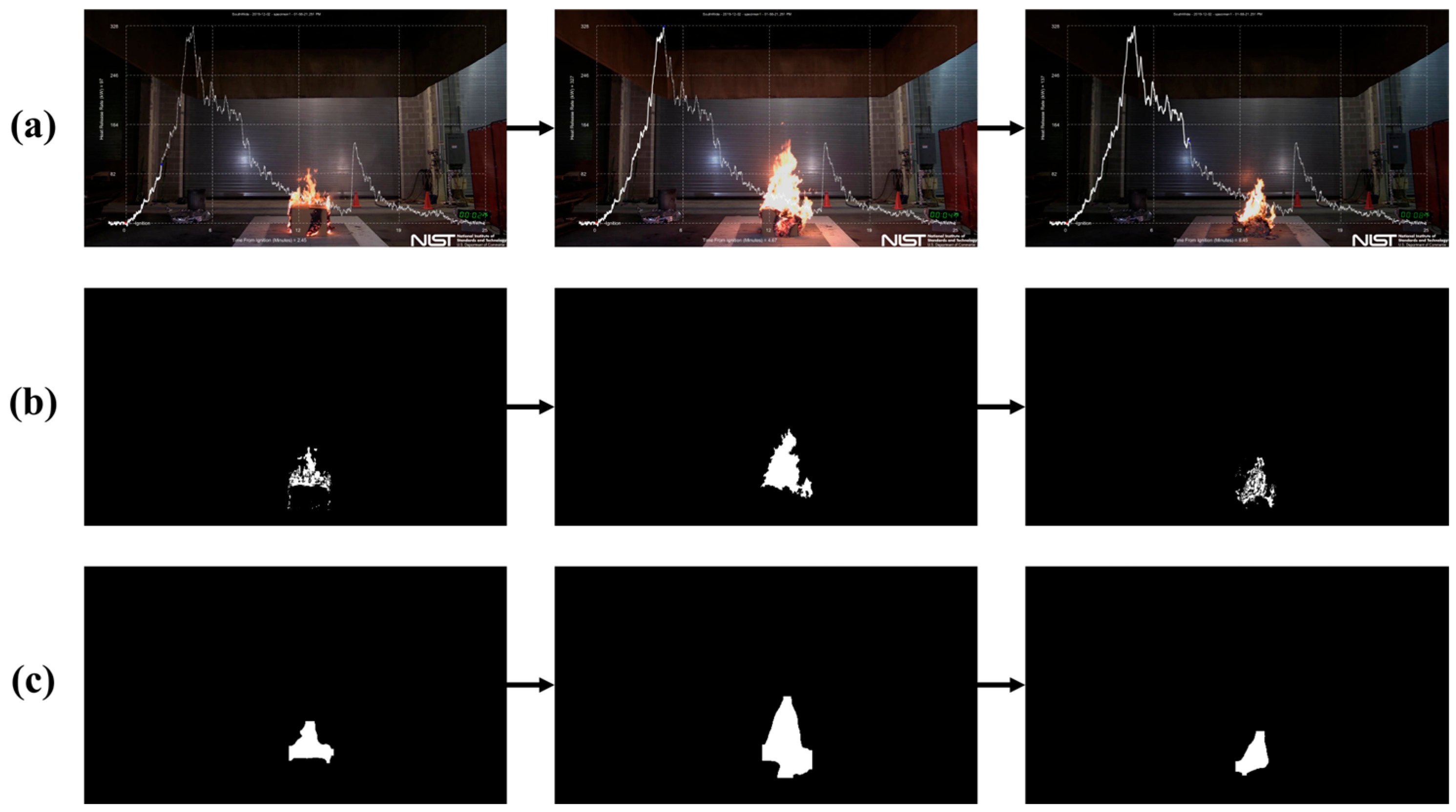

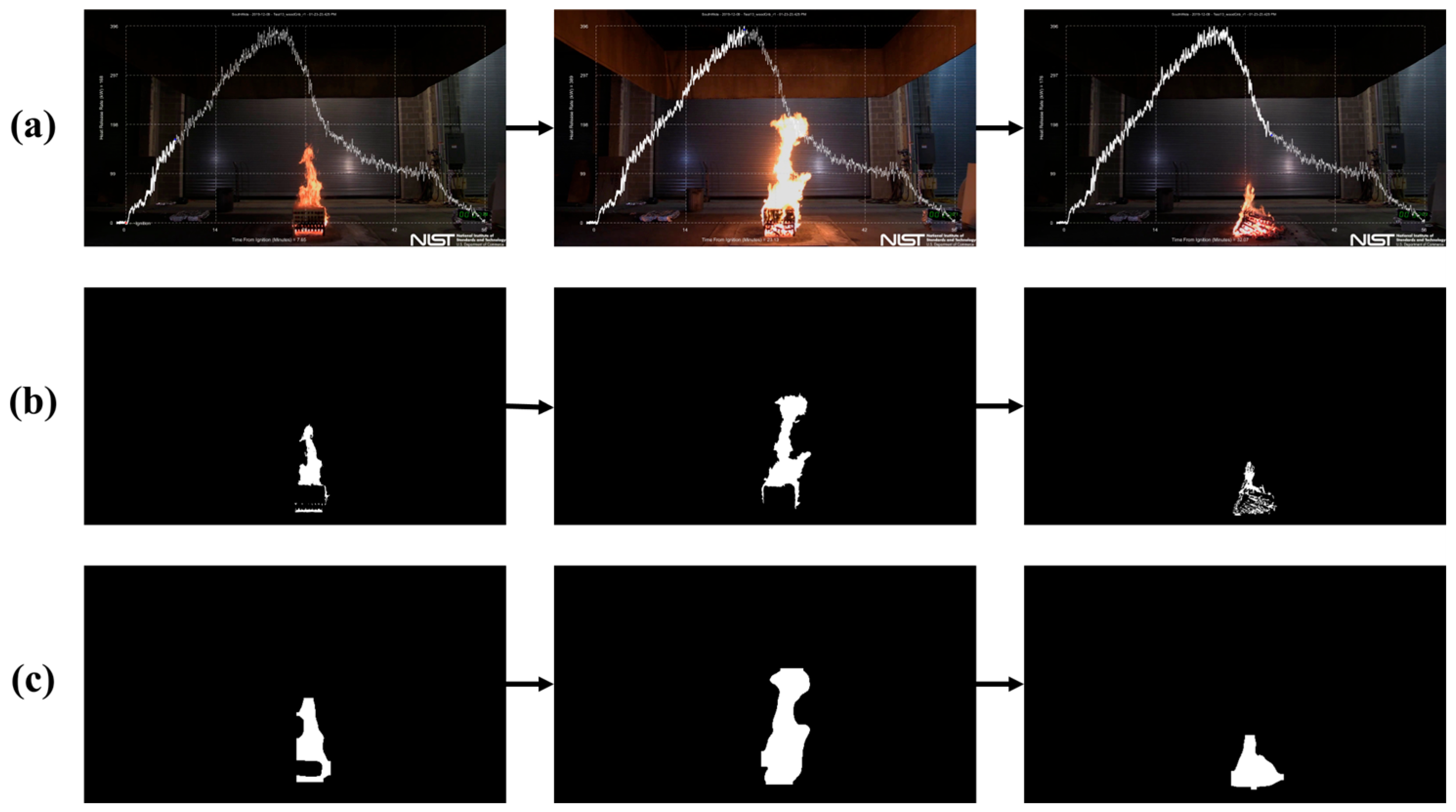

2. Materials and Methods

2.1. Image-Based Fire Heat Release Rate Prediction Methods

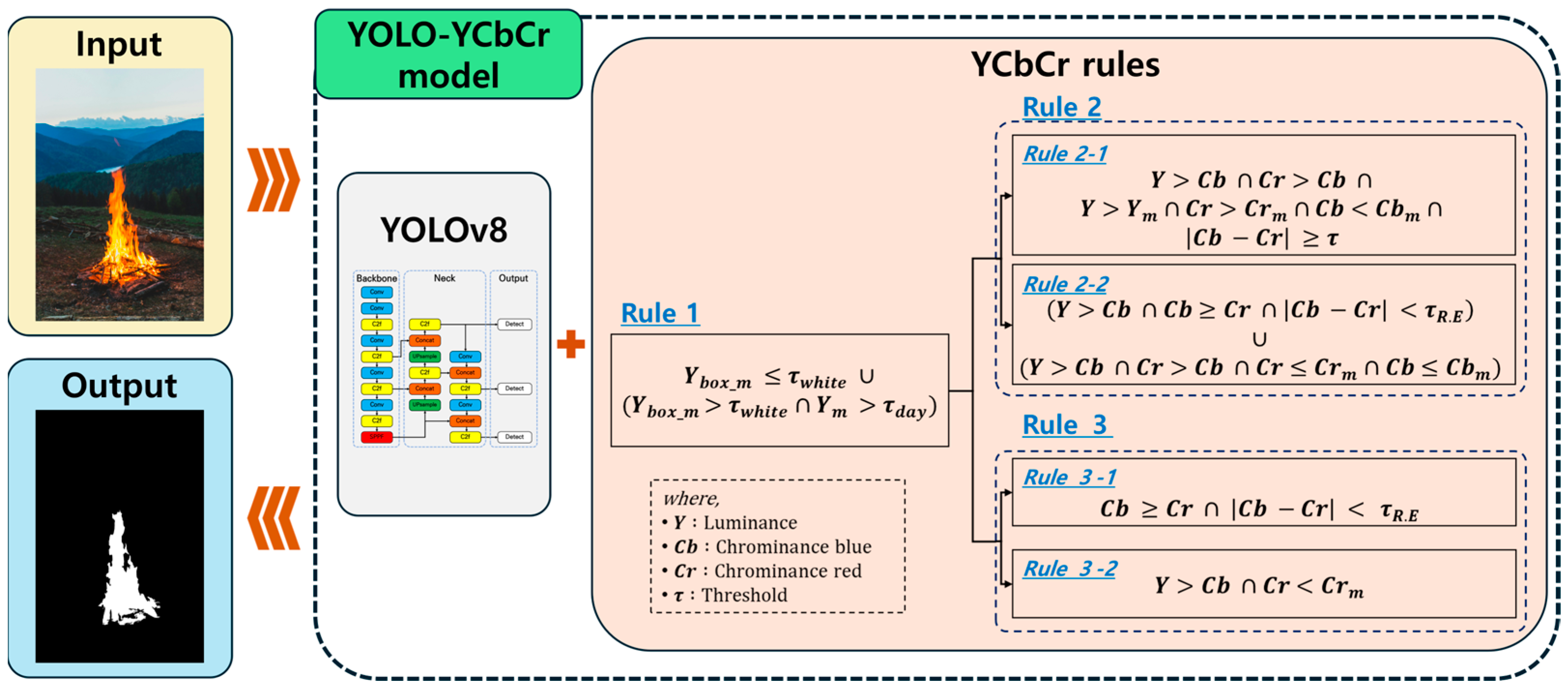

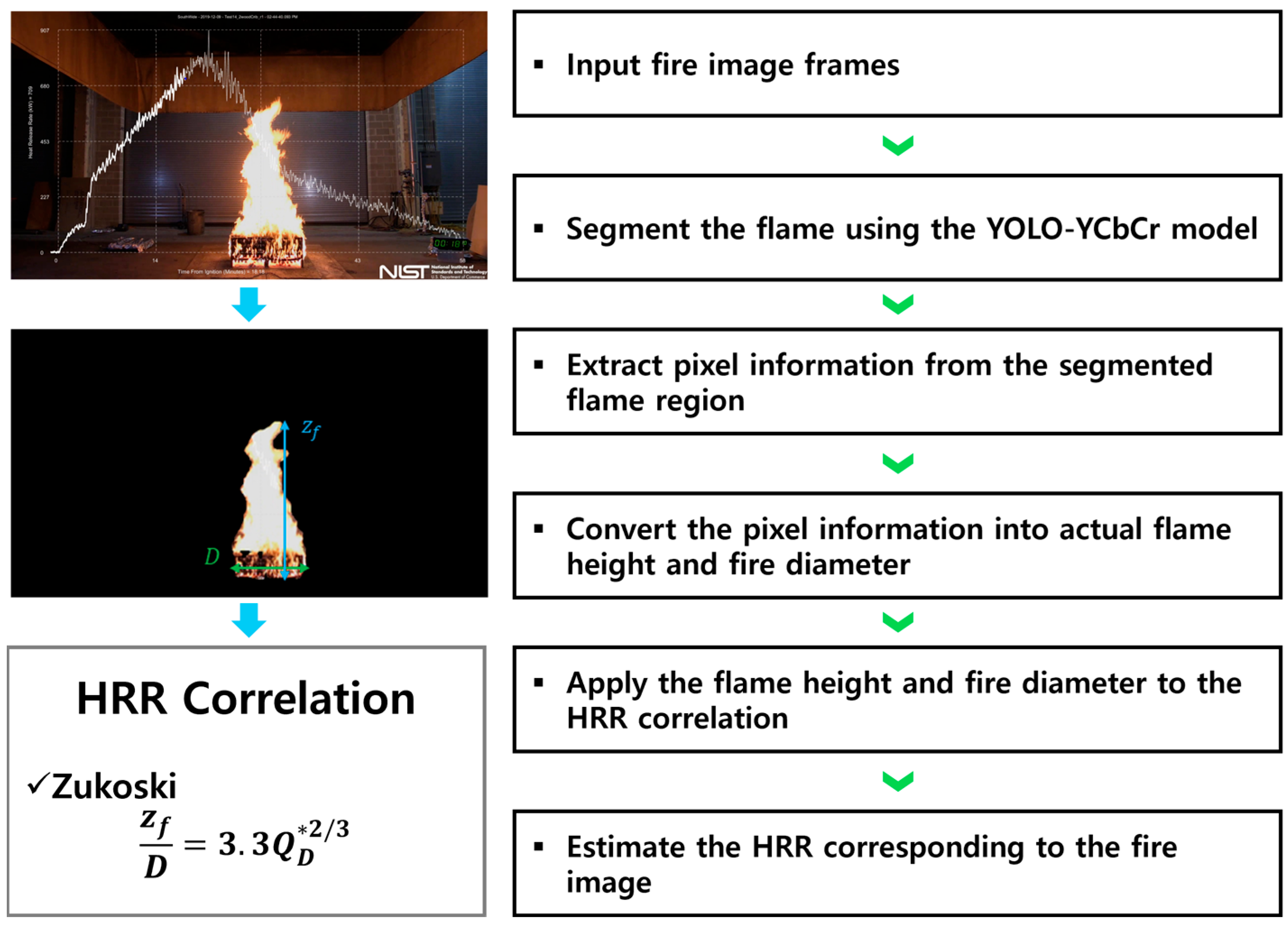

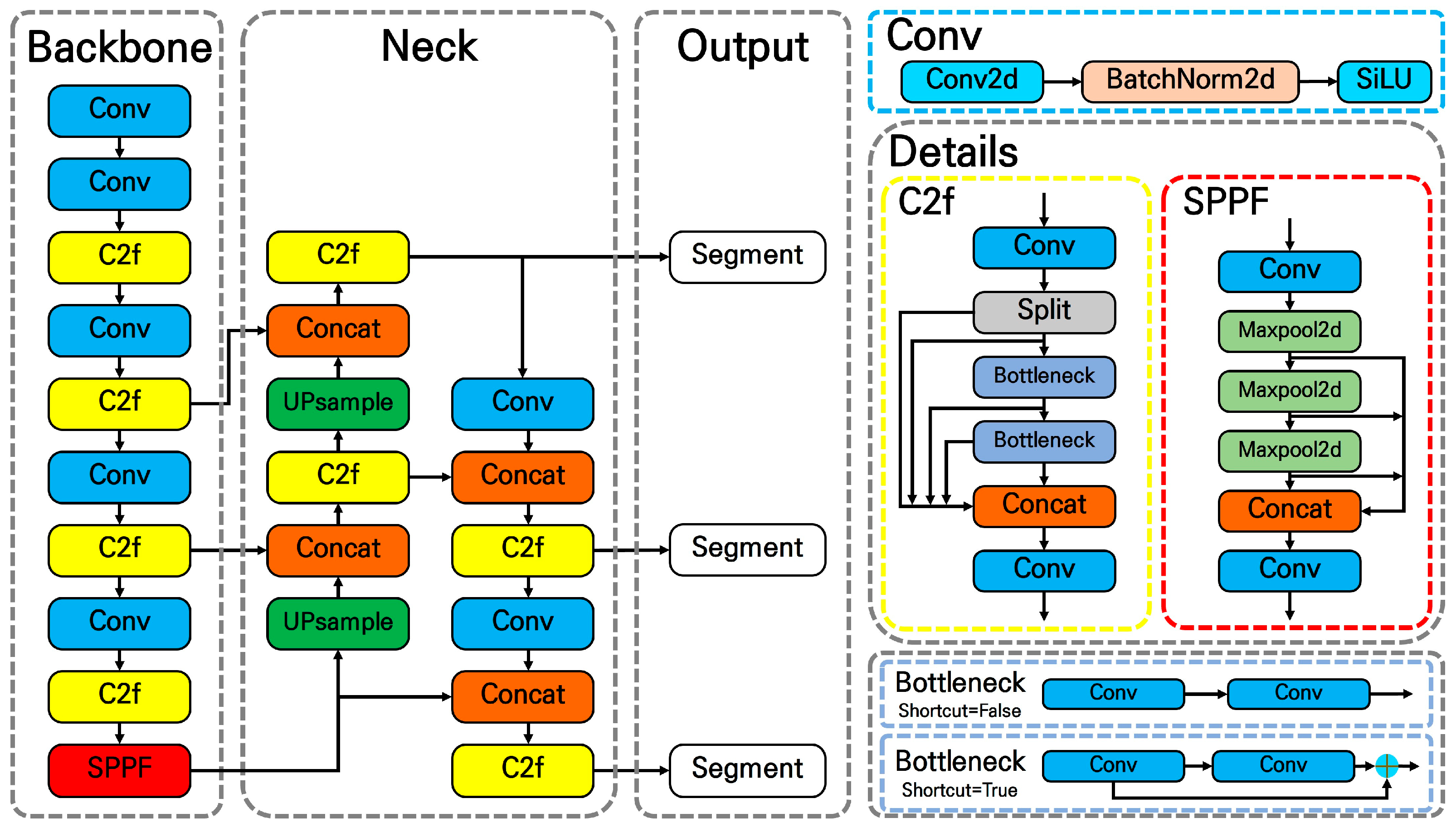

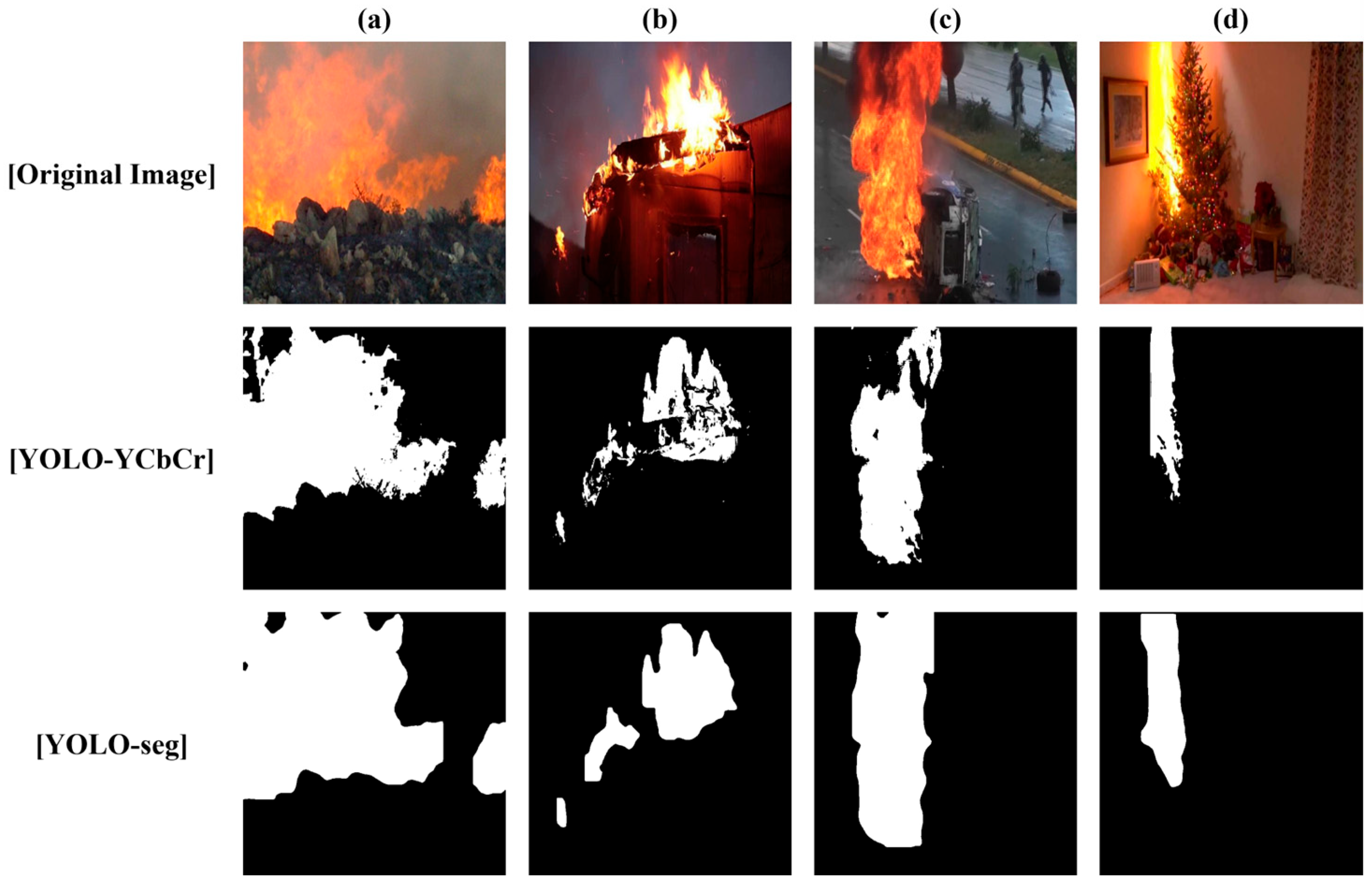

2.1.1. YOLO-YCbCr Segmentation-Model-Based HRR Prediction

2.1.2. YOLO Segmentation-Model-Based HRR Prediction

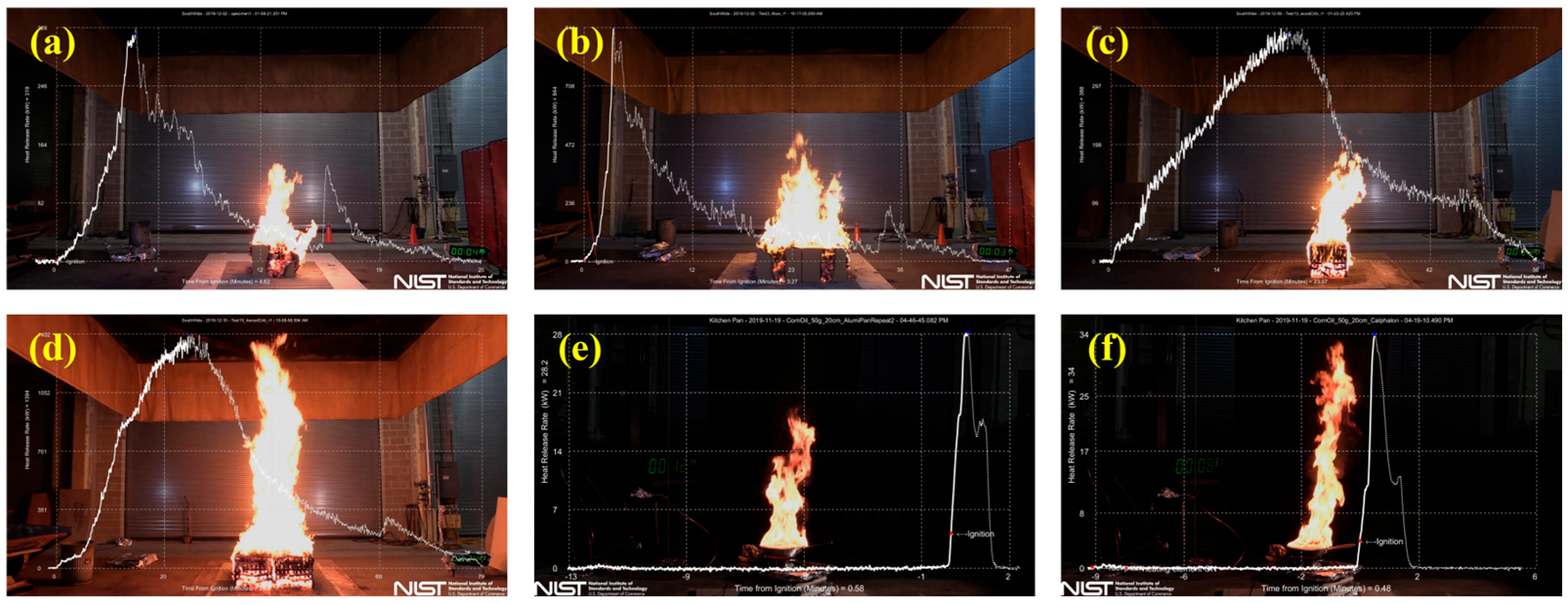

2.2. Performance Evaluation Method

3. Results and Discussion

3.1. Training and Test Results for Deep Learning Models

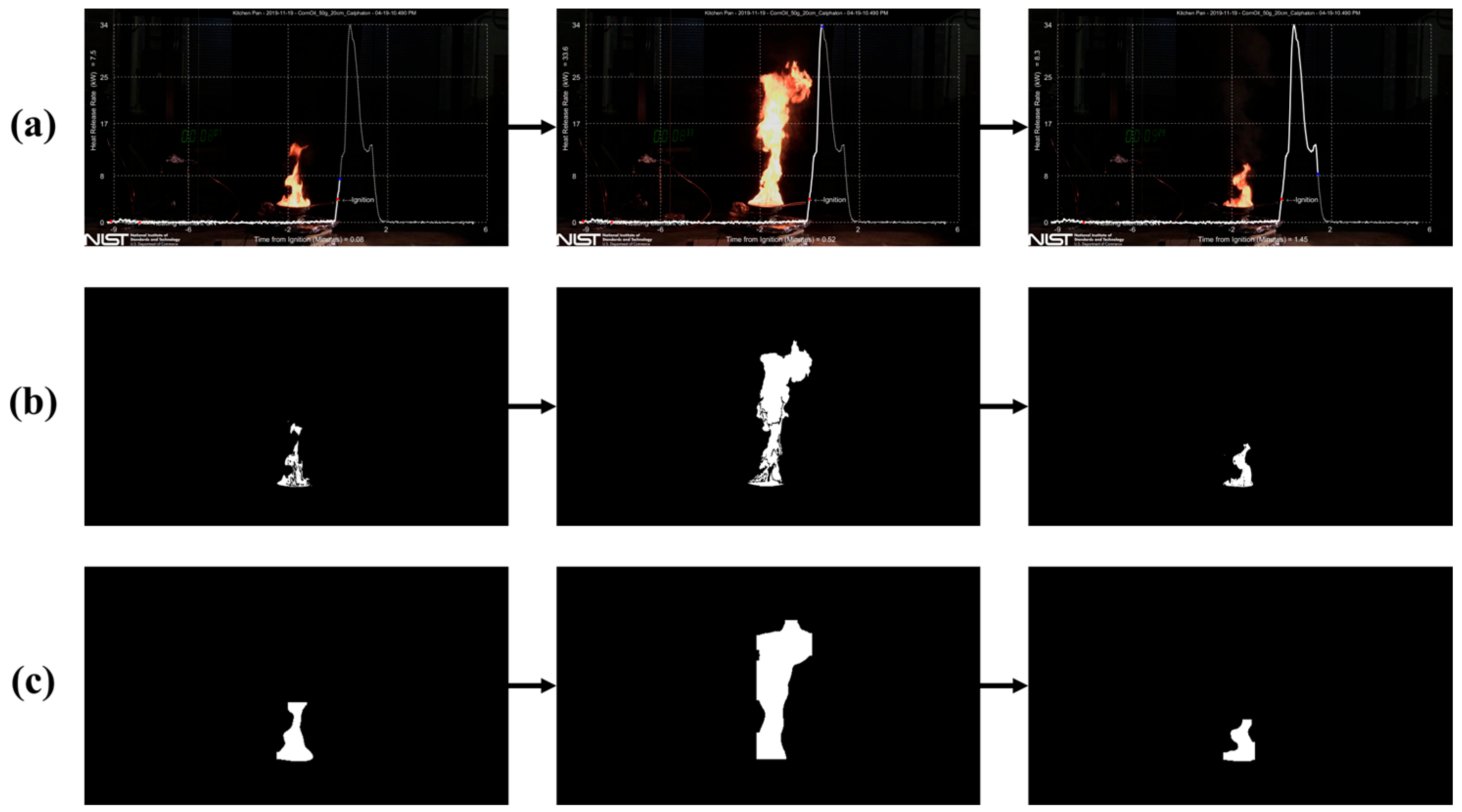

3.2. Evaluation of Flame Segmentation Performance

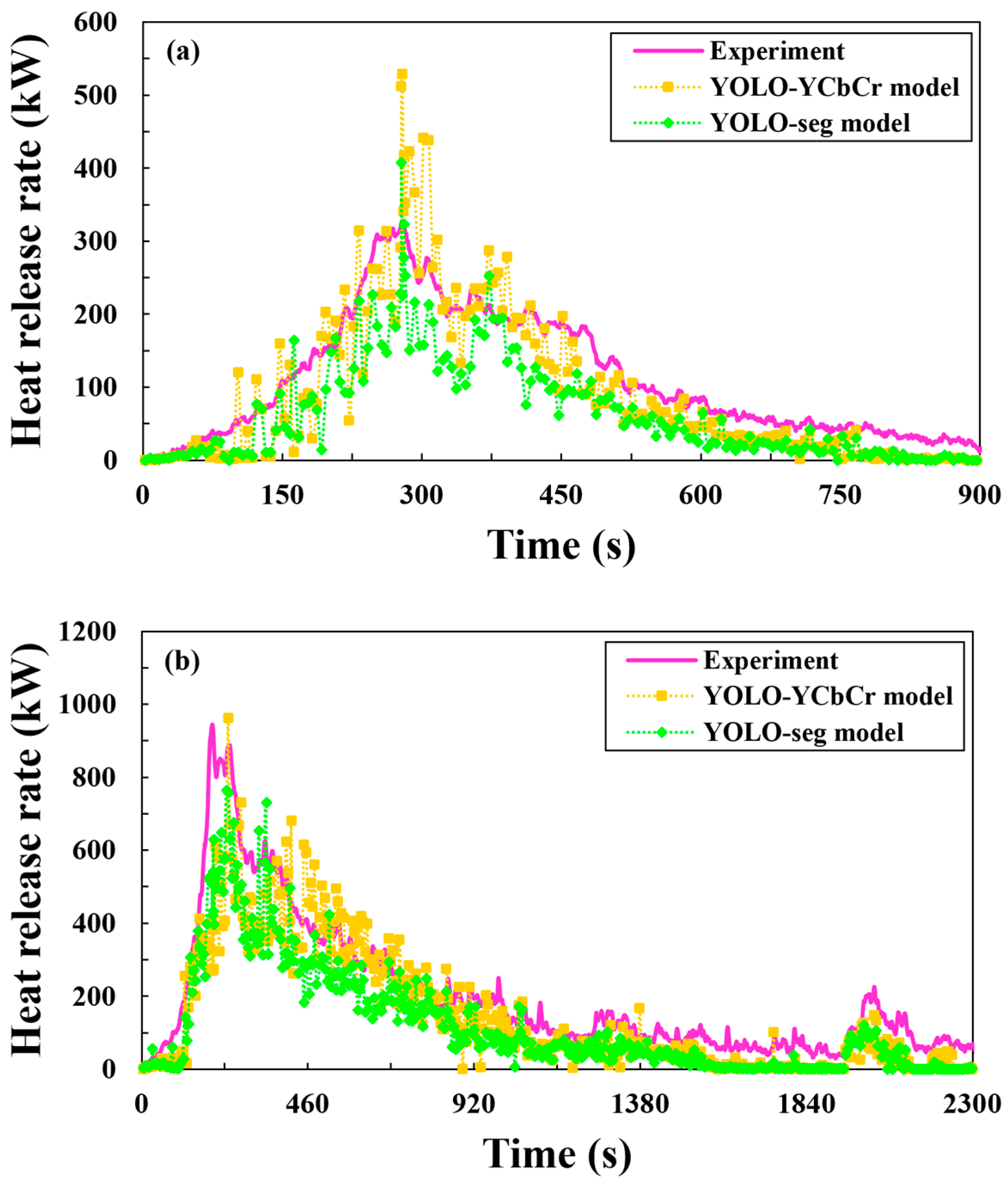

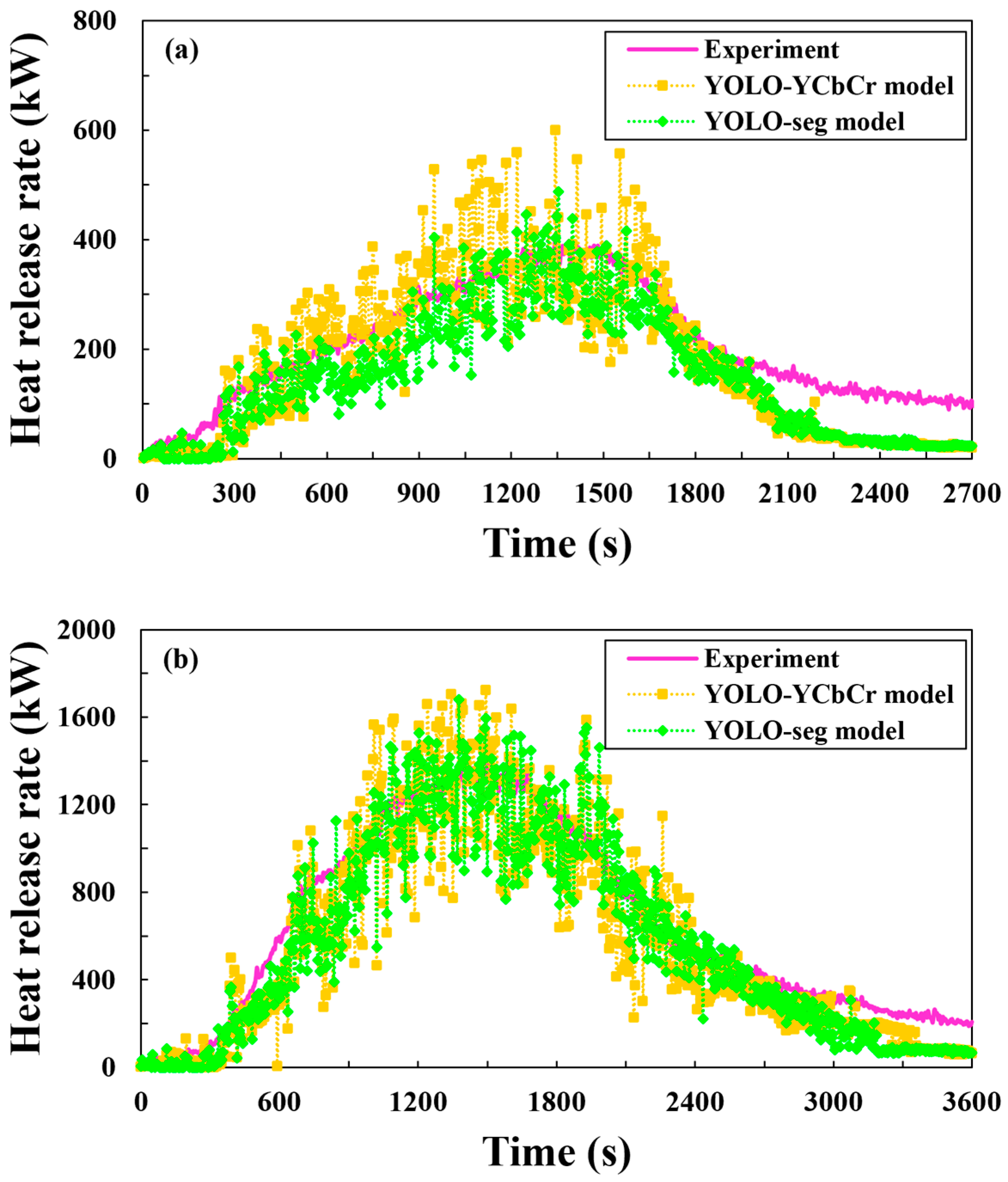

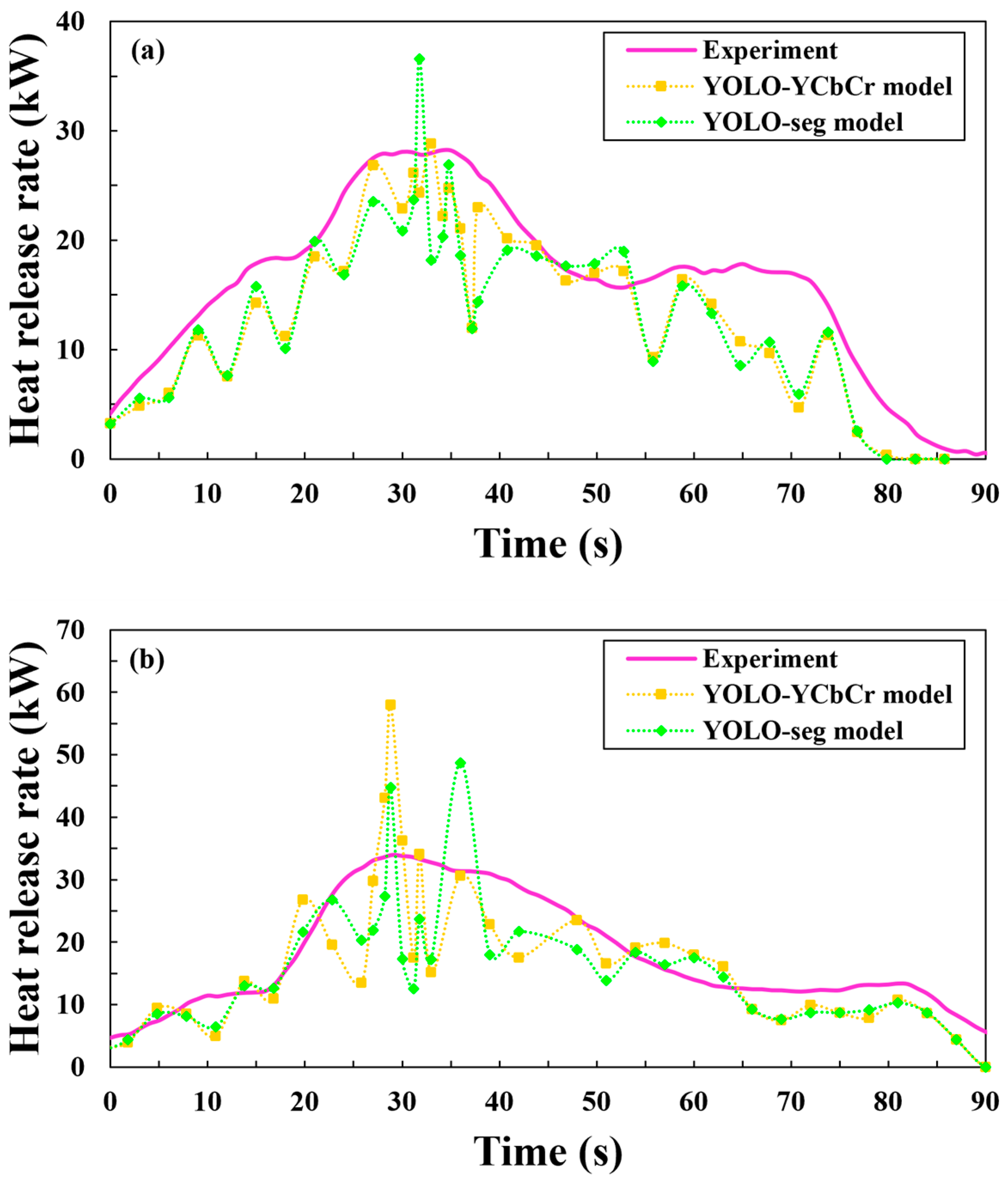

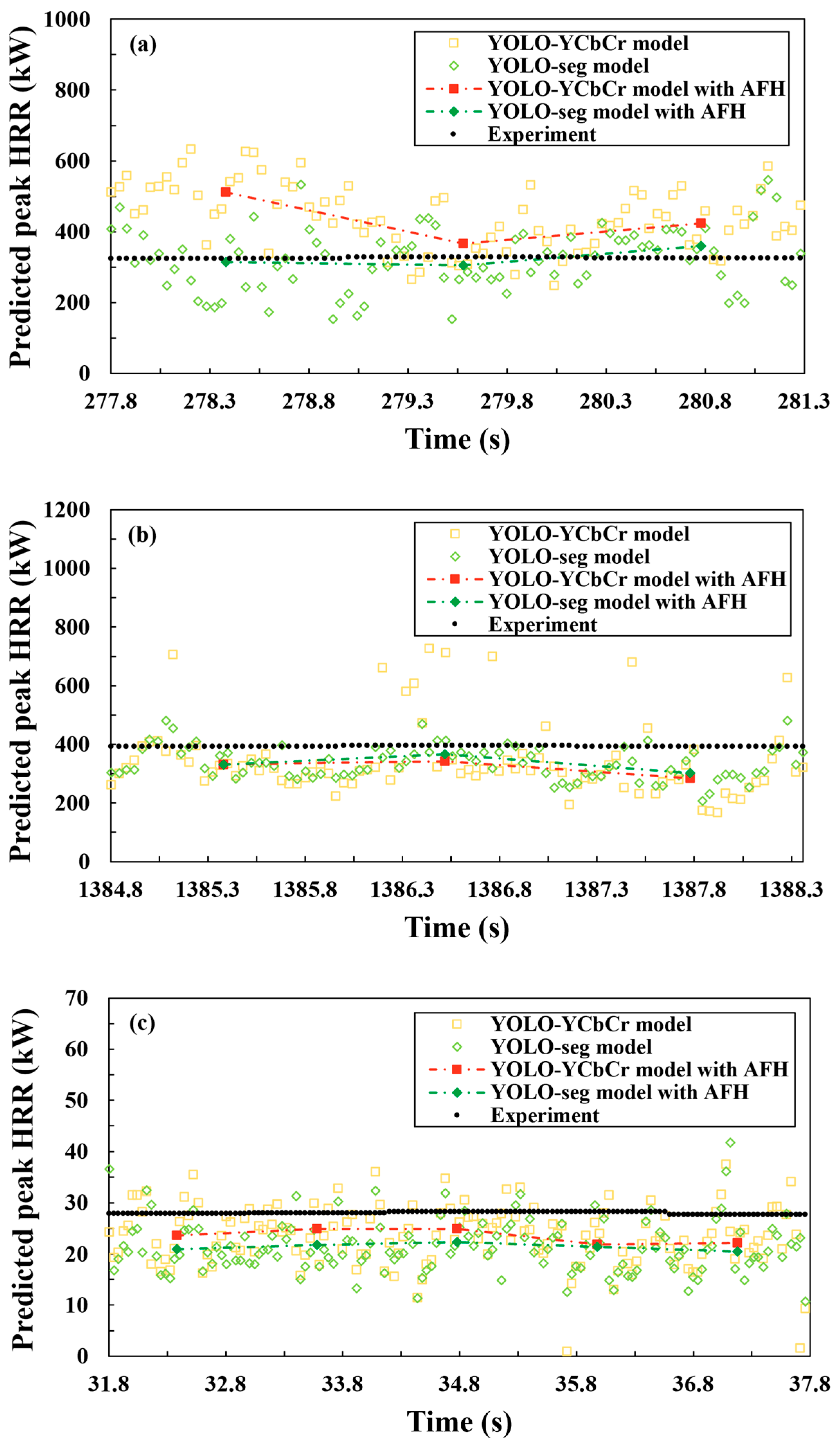

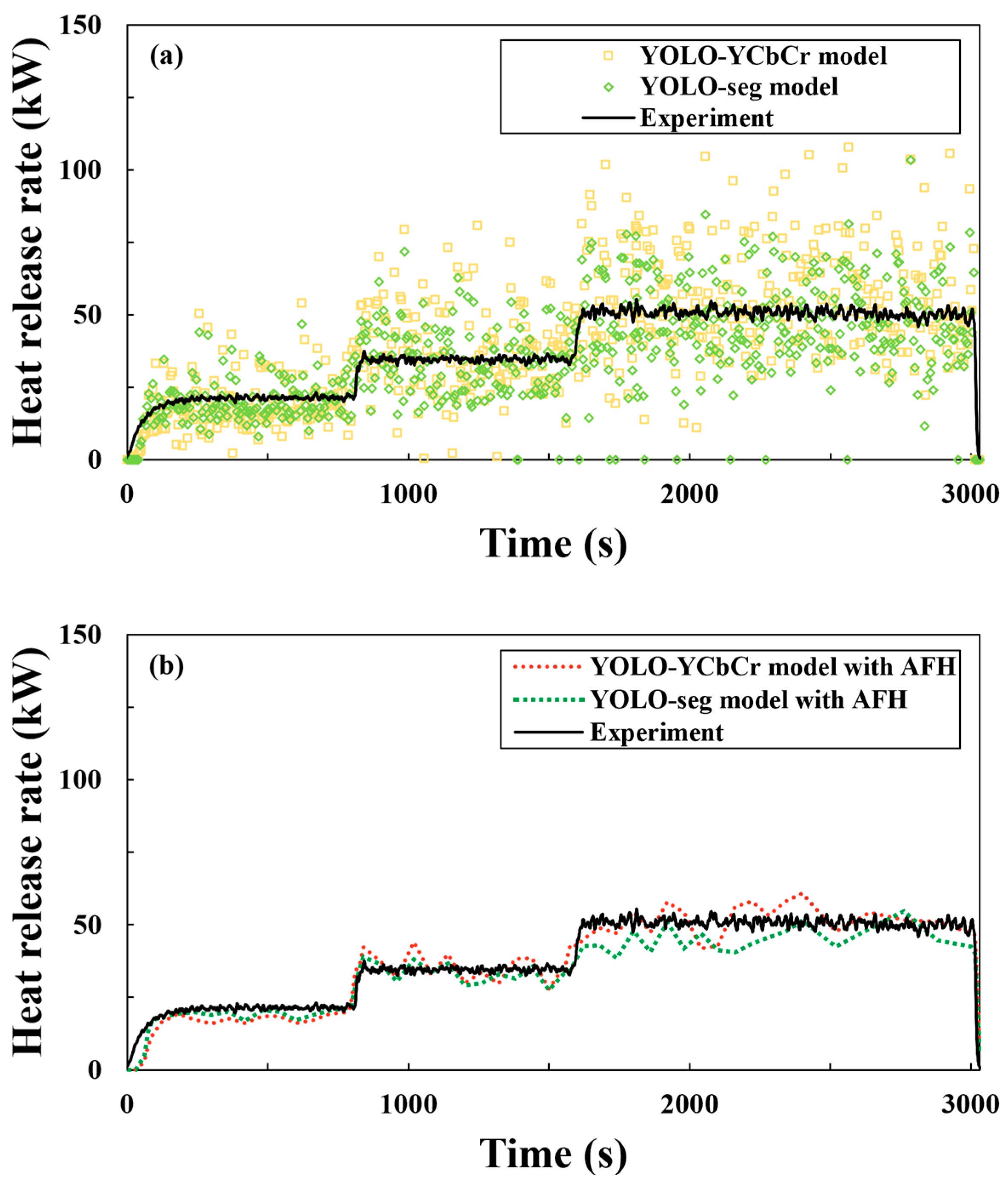

3.3. Evaluation of Fire Heat Release Rate Prediction Performance

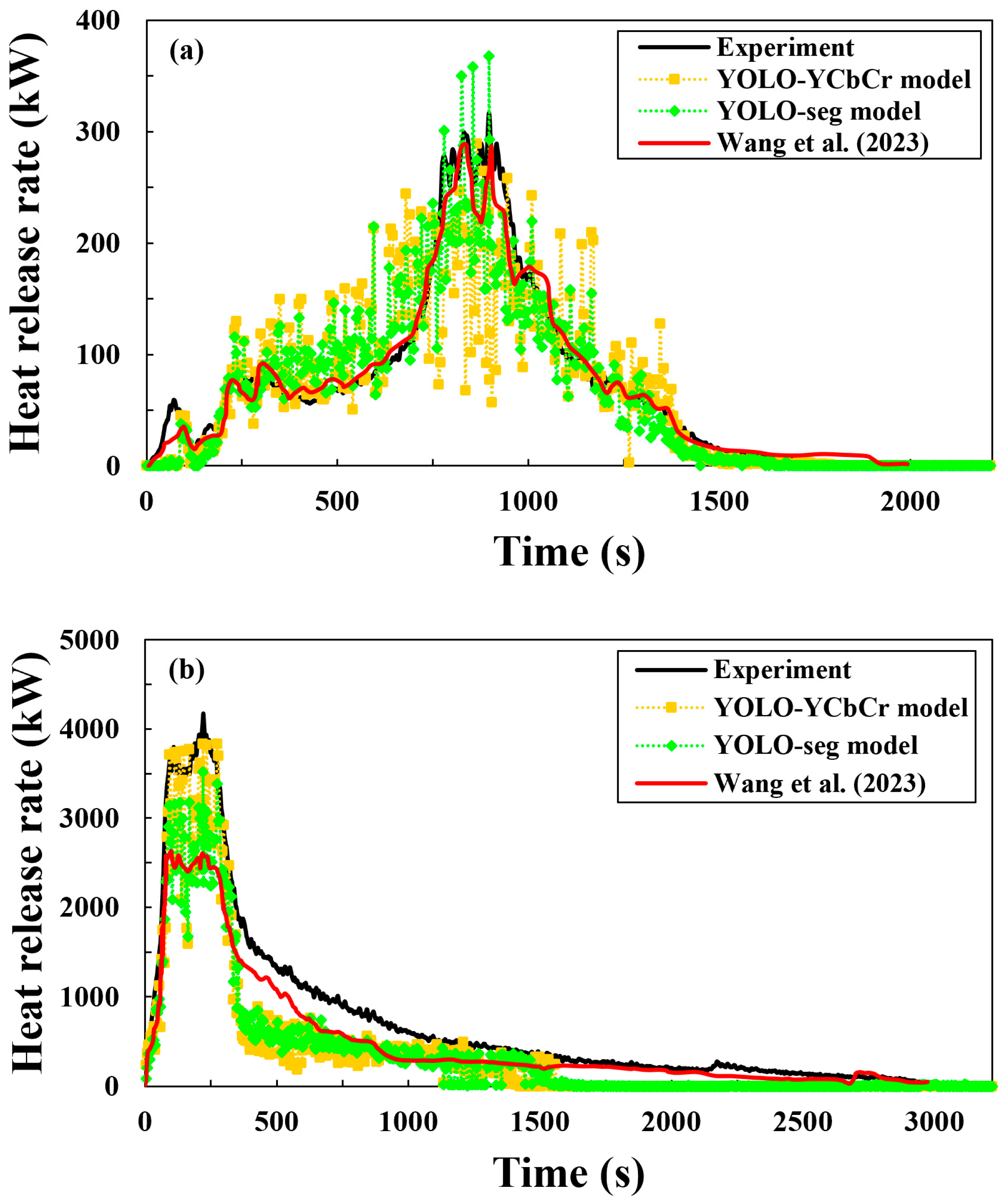

3.4. Performance Comparison with Sequence Modeling-Based HRR Prediction Model

4. Conclusions

- A novel, lightweight, image-based heat release rate (HRR) prediction model was proposed that combines deep learning and image processing to extract physically meaningful flame features.

- The proposed fire-image-based HRR prediction models achieved R² values ranging from 0.61 to 0.90, effectively capturing transient HRR trends. Frame-wise predictions fluctuated because of the limited number of video frames, but applying the AFH significantly reduced these variations and improved prediction performance.

- The YOLO-YCbCr-based model demonstrated high efficiency and applicability for transient HRR prediction. Further improvements are needed to incorporate temporal dynamics and refine the AFH method to enhance HRR prediction performance under diverse fire conditions.
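The R² comparison summarized above can be illustrated with a minimal sketch. It assumes the standard coefficient-of-determination definition, R² = 1 − SS_res/SS_tot, and uses a plain centered moving average as a generic stand-in for smoothing frame-wise predictions; it is not the AFH method evaluated in this study, and the HRR values, the function names `r_squared` and `moving_average`, and the window size are all hypothetical.

```python
from statistics import fmean


def r_squared(measured, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(measured) != len(predicted) or not measured:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_measured = fmean(measured)
    ss_res = sum((m - p) ** 2 for m, p in zip(measured, predicted))
    ss_tot = sum((m - mean_measured) ** 2 for m in measured)
    if ss_tot == 0:
        raise ValueError("measured values have zero variance")
    return 1.0 - ss_res / ss_tot


def moving_average(values, window):
    """Centered moving average; the window shrinks at both ends."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        start = max(0, i - half)
        stop = min(len(values), i + half + 1)
        smoothed.append(fmean(values[start:stop]))
    return smoothed


# Hypothetical data (kW): a linear HRR ramp and frame-wise predictions
# that alternate above and below the measured value.
measured = [10.0 * t for t in range(1, 11)]
frame_wise = [m + (8.0 if i % 2 == 0 else -8.0) for i, m in enumerate(measured)]

# Assumption: a 3-frame window, chosen only for illustration.
smoothed = moving_average(frame_wise, window=3)

r2_frame = r_squared(measured, frame_wise)
r2_smooth = r_squared(measured, smoothed)
print(f"frame-wise R2: {r2_frame:.3f}, smoothed R2: {r2_smooth:.3f}")
```

On this synthetic series the smoothed predictions score a higher R² than the frame-wise ones, which mirrors the qualitative trend reported above. The numbers themselves carry no meaning for real fire data.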

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Roh, J.; Min, S.; Kong, M. Performance Evaluation of Real-Time Image-Based Heat Release Rate Prediction Model Using Deep Learning and Image Processing Methods. Fire 2025, 8, 283. https://doi.org/10.3390/fire8070283
