AI-Driven Boost in Detection Accuracy for Agricultural Fire Monitoring

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

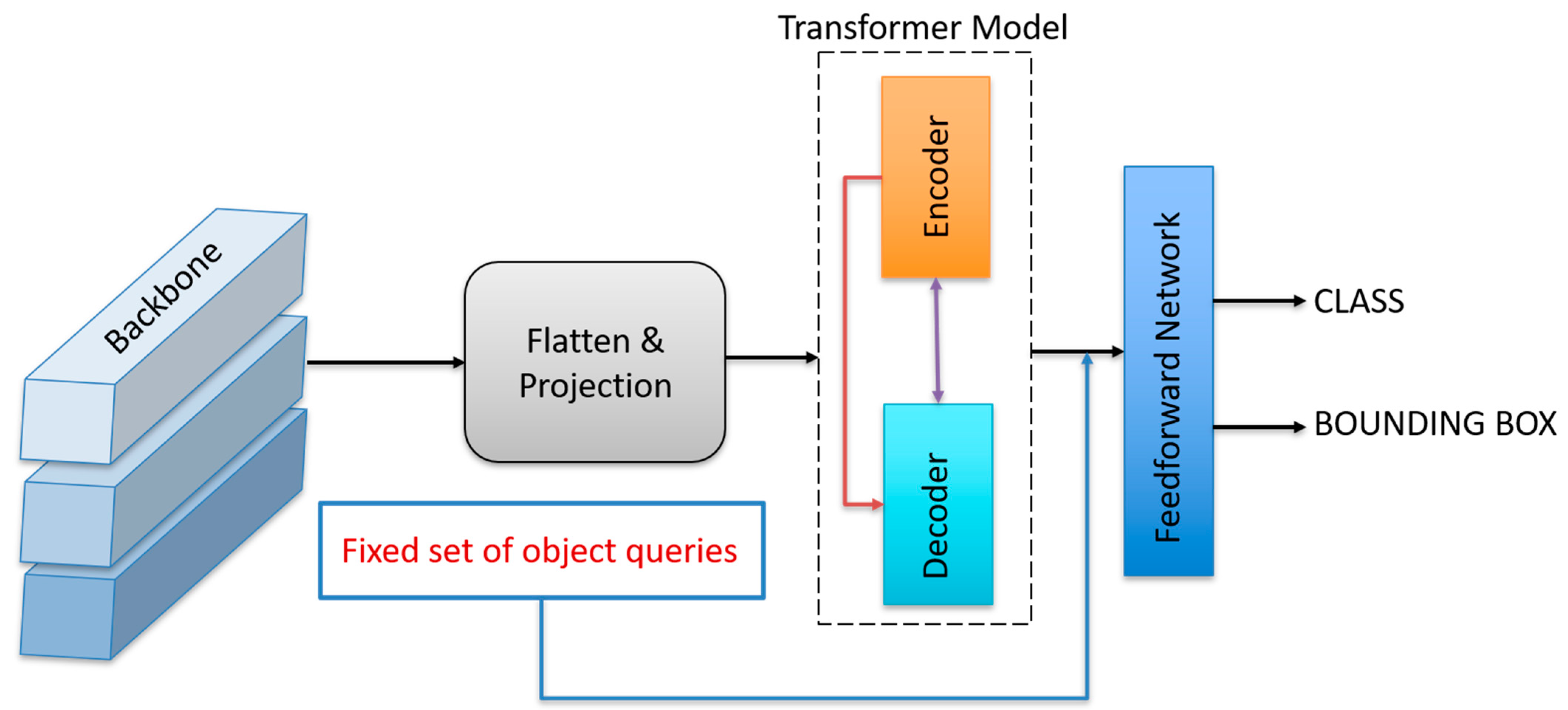

3.1. DETR

- Encoder: The encoder processes the spatially flattened image features, enriching them through multi-head self-attention and feedforward layers. Positional encodings are added to retain spatial information, compensating for the permutation-invariant nature of the transformer architecture.

- Decoder: The decoder takes a fixed set of learnable object queries as input; these interact with the encoder outputs through cross-attention. Each query learns to attend to different spatial regions of the image and predicts at most one object instance.
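The role of the positional encodings described above can be illustrated with a small, dependency-free sketch. It is not the DETR implementation: it assumes a single attention head with identity projections, a 1D sinusoidal encoding added once to the inputs (DETR uses 2D encodings inside every attention layer), and toy values for `features` and `object_queries`. All function and variable names are illustrative.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Single-head scaled dot-product attention with identity projections."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / scale for k in keys])
        outputs.append([sum(w * v[c] for w, v in zip(weights, values))
                        for c in range(len(values[0]))])
    return outputs

def sinusoidal_encoding(position, dim):
    """Fixed sine/cosine encoding of one position index (1D, for illustration)."""
    encoding = []
    for i in range(dim):
        angle = position / 10000 ** (2 * (i // 2) / dim)
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding

def with_positions(tokens):
    """Add the encoding of each token's index to that token."""
    return [[x + p for x, p in zip(tok, sinusoidal_encoding(pos, len(tok)))]
            for pos, tok in enumerate(tokens)]

def close(a, b, tol=1e-9):
    """Element-wise comparison of two lists of vectors."""
    return all(abs(x - y) <= tol for ra, rb in zip(a, b) for x, y in zip(ra, rb))

# Toy "flattened image features" and a shuffled copy of the same tokens.
features = [[1.0, 0.0, 0.5, 0.2], [0.0, 1.0, 0.3, 0.7], [0.5, 0.5, 0.9, 0.1]]
order = [2, 0, 1]
shuffled = [features[i] for i in order]
object_queries = [[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]  # hypothetical values

# Encoder-style self-attention without positions: outputs are only reordered.
plain = attention(features, features, features)
plain_shuffled = attention(shuffled, shuffled, shuffled)
encoder_is_order_blind = close(plain_shuffled, [plain[i] for i in order])

# Decoder-style cross-attention without positions: shuffling changes nothing.
decoded = attention(object_queries, features, features)
decoder_is_order_blind = close(decoded, attention(object_queries, shuffled, shuffled))

# With positions added, the same shuffle now changes what the queries read.
memory, memory_shuffled = with_positions(features), with_positions(shuffled)
positions_matter = not close(attention(object_queries, memory, memory),
                             attention(object_queries, memory_shuffled, memory_shuffled))
```

In this sketch each object query produces one output vector regardless of how many feature tokens there are, which mirrors the fixed-size prediction set of the decoder.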

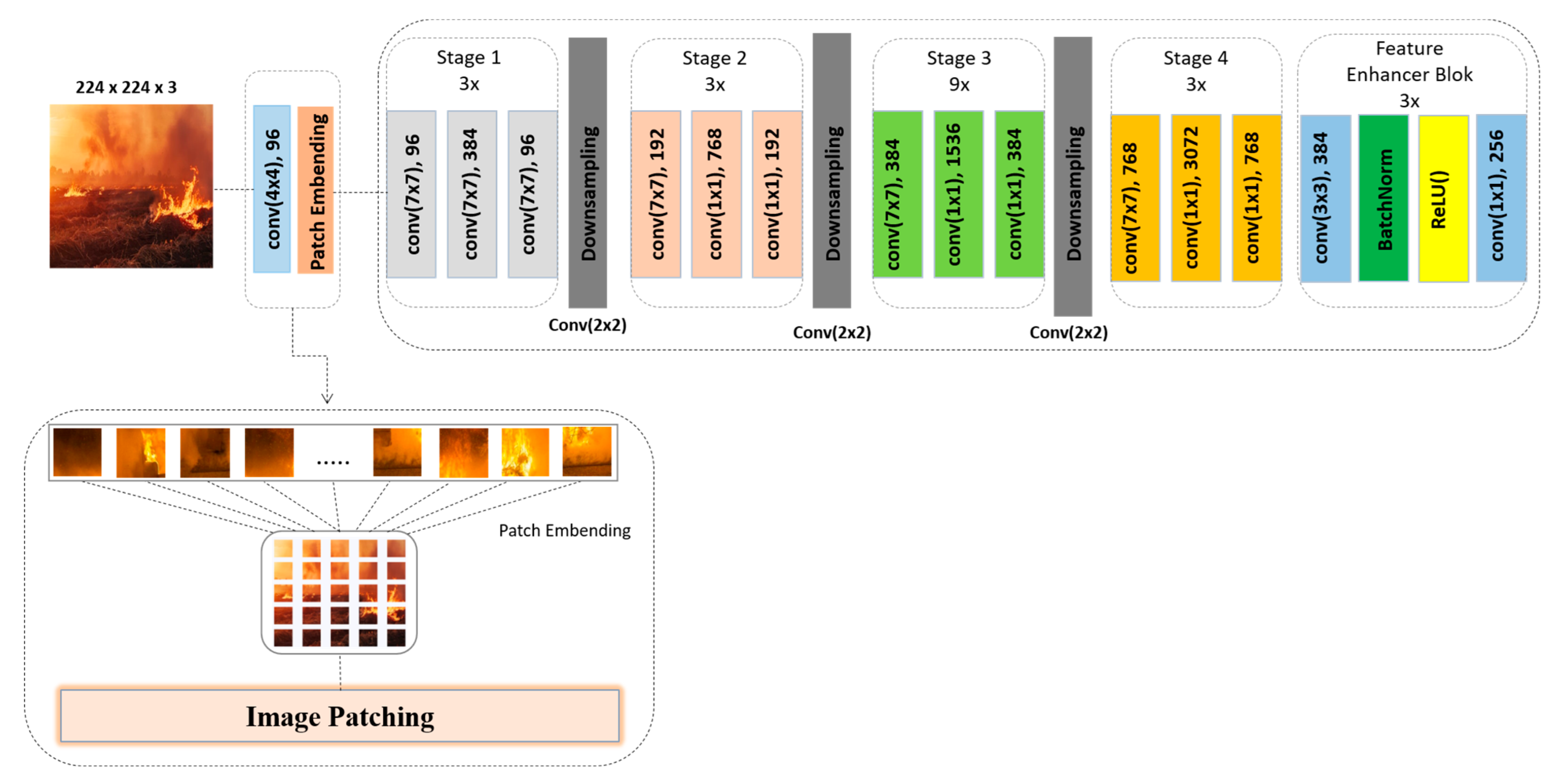

3.2. ConvNeXt

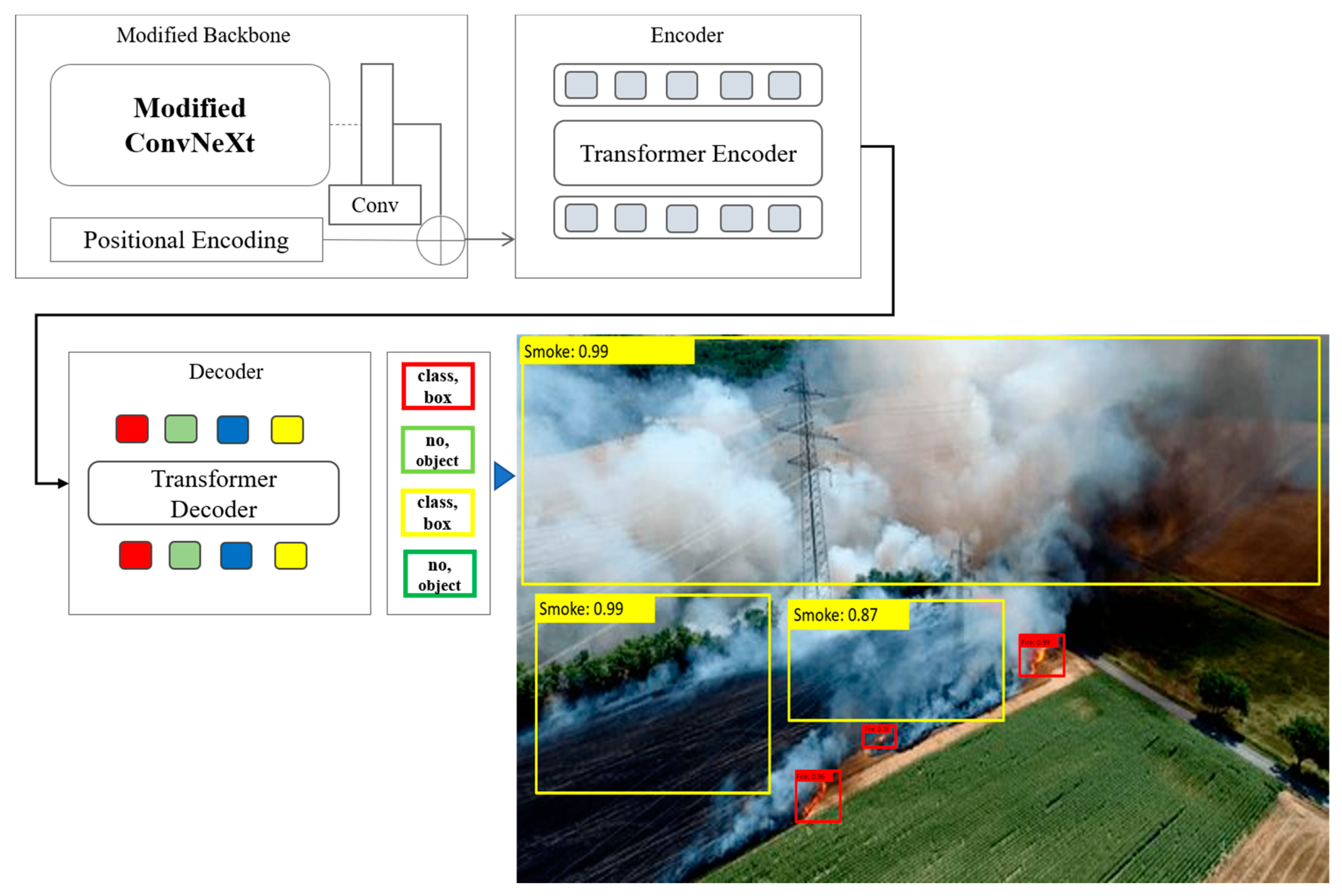

3.3. The Proposed Model

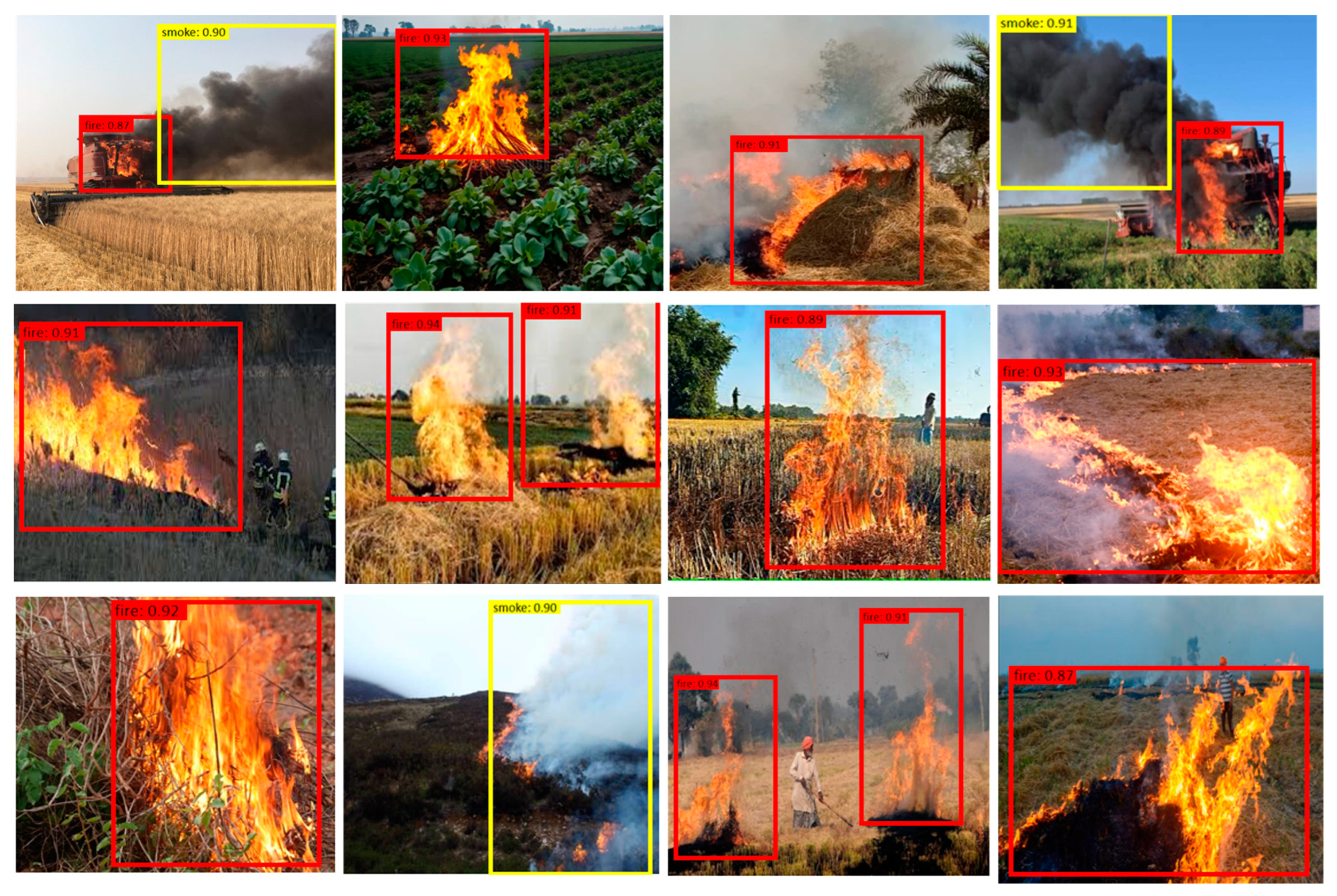

4. Experiment and Results

4.1. Dataset

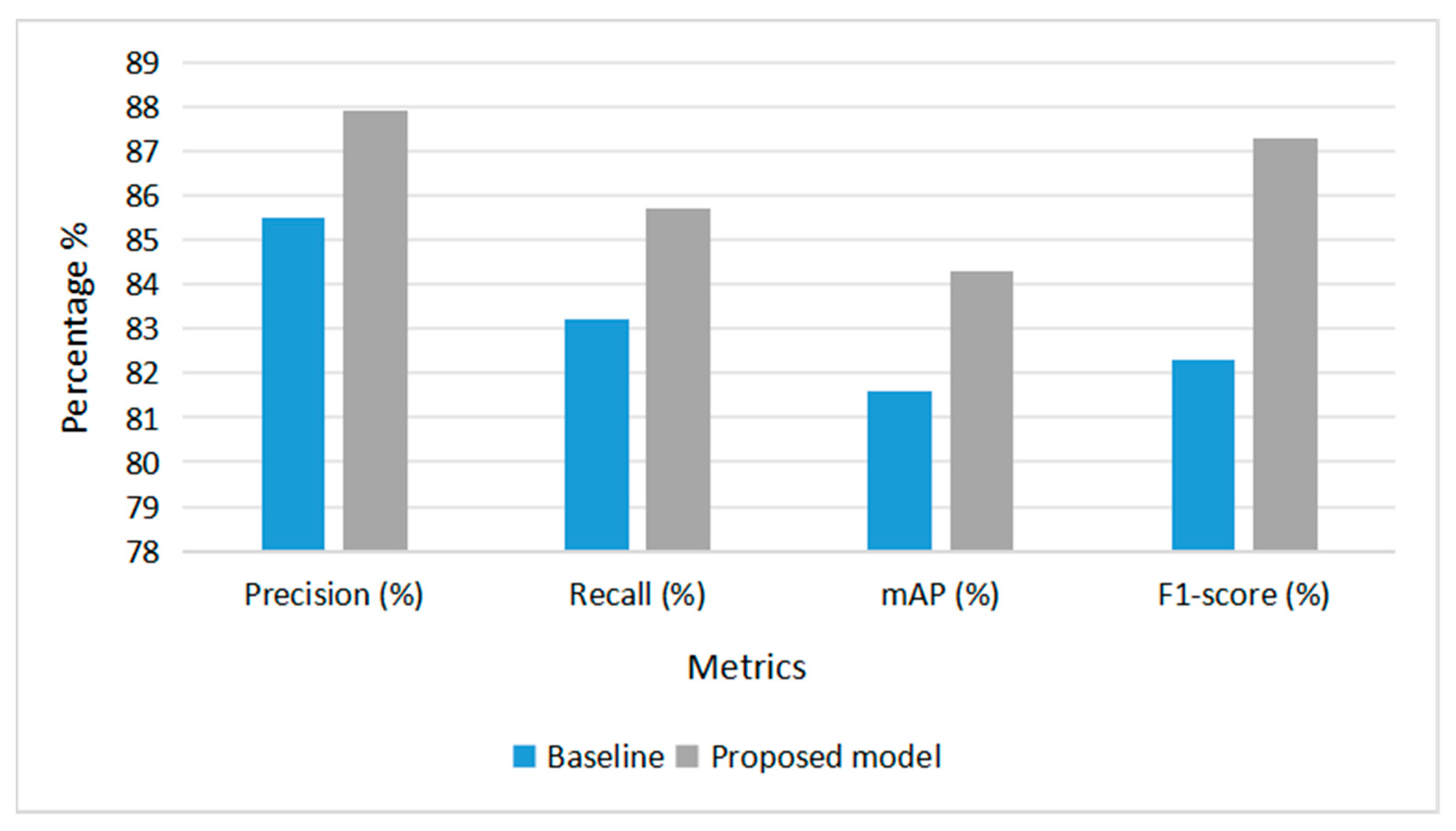

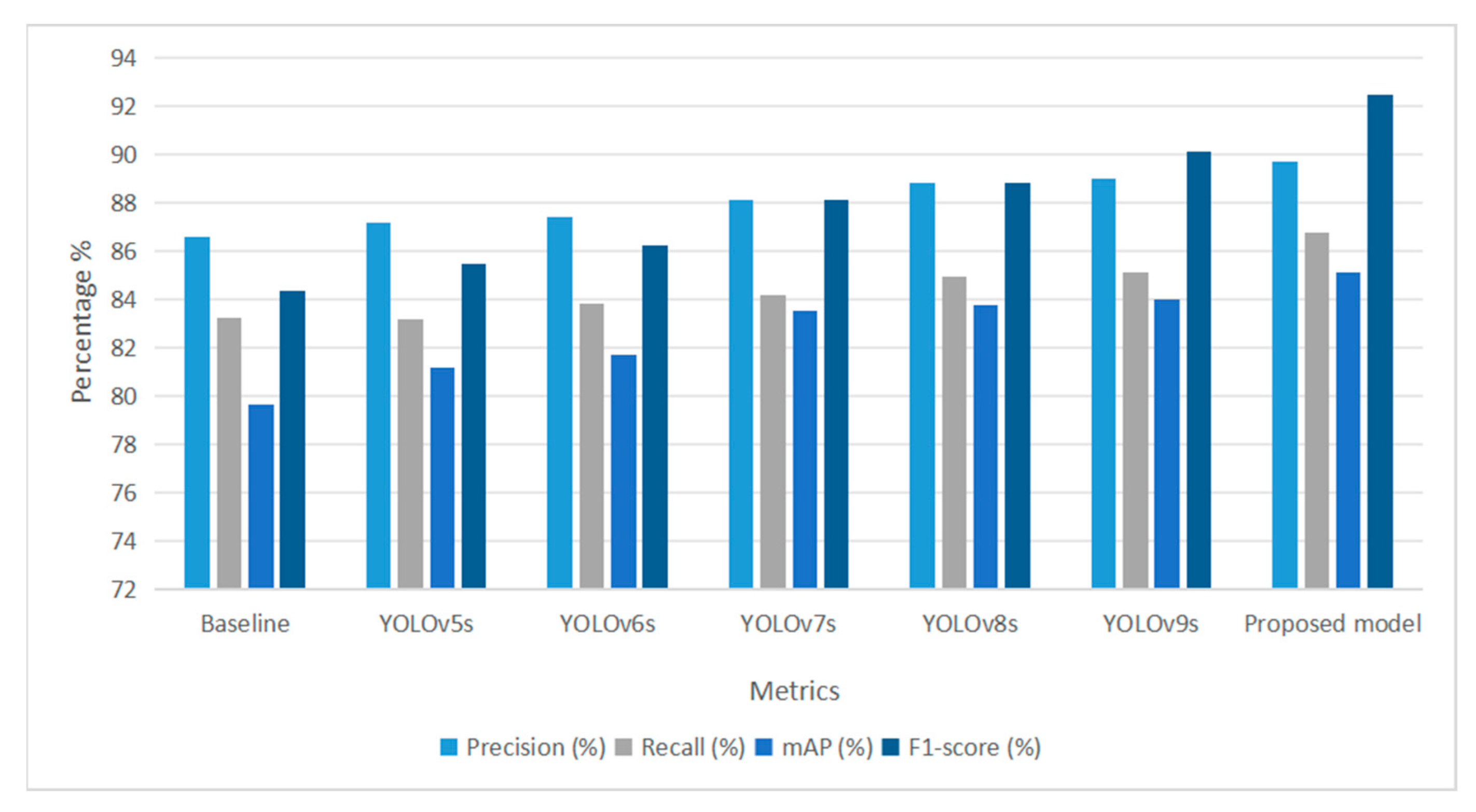

4.2. Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Correction Statement

| Category | Description |
|---|---|
| Total video sources | 38 (UAV footage and publicly accessible videos) |
| Total frames extracted | 8410 |
| Total annotated images | 5763 (with visible fire, smoke, or both) |
| Annotation format | COCO (bounding boxes with class labels) |
| Image resolution | Resized to 224 × 224 pixels |
| Training images | 4034 (70%) |
| Validation images | 865 (15%) |
| Test images | 864 (15%) |
| Scene types | Crop fields, orchards, forest-edge agriculture |
| Fire visibility levels | Full flame, partial flame with smoke, smoke-only scenes |
| Environmental conditions | Daylight, dusk, overcast |
| Camera distances & angles | Close-range, mid-range, and long-range; overhead and oblique perspectives |
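The split sizes in the table can be reproduced with simple integer arithmetic. The sketch below assumes one particular rounding rule (training count rounded down, validation count rounded up, test set taking the remainder); the source does not state how the split was computed, and `split_sizes` is an illustrative name.

```python
def split_sizes(total, train_pct=70, val_pct=15):
    """Return (train, val, test) counts for a percentage split.

    Assumed rounding rule: floor for training, ceiling for validation,
    and the test set receives whatever is left over.
    """
    train = total * train_pct // 100
    val = (total * val_pct + 99) // 100  # integer ceiling of total * val_pct / 100
    test = total - train - val
    return train, val, test

train_count, val_count, test_count = split_sizes(5763)
```

Under this assumed rule the 5763 annotated images divide into 4034, 865, and 864 images, matching the table.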

| Model | Precision (%) | Recall (%) | mAP (%) | F1-Score (%) |
|---|---|---|---|---|
| DETR (Baseline) | 85.5 | 83.2 | 81.6 | 82.3 |
| Proposed model | 87.9 | 85.7 | 84.3 | 87.3 |

| Model | Precision (%) | Recall (%) | mAP (%) | F1-Score (%) |
|---|---|---|---|---|
| DETR (Baseline) | 86.6 | 83.2 | 79.66 | 84.33 |
| YOLOv5s | 87.14 | 83.19 | 81.19 | 85.45 |
| YOLOv6s | 87.39 | 83.78 | 81.7 | 86.2 |
| YOLOv7s | 88.09 | 84.18 | 83.5 | 88.1 |
| YOLOv8s | 88.78 | 84.9 | 83.76 | 88.8 |
| YOLOv9s | 88.96 | 85.12 | 84.01 | 90.1 |
| Proposed model | 89.67 | 86.74 | 85.13 | 92.43 |

| Model Variant | Precision (%) | Recall (%) | mAP (%) | F1-Score (%) |
|---|---|---|---|---|
| DETR (Baseline, ResNet-50) | 85.5 | 83.2 | 81.6 | 82.3 |
| DETR + ConvNeXt (no FEB) | 86.7 | 84.3 | 83.0 | 85.1 |
| Proposed Model (ConvNeXt + FEB) | 87.9 | 85.7 | 84.3 | 87.3 |
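The tables report an F1-Score column without giving its formula. As a minimal sketch, assuming the standard definition of F1 as the harmonic mean of precision and recall applied to percentage values, the relationship can be written as below; `f1_score` is an illustrative name. A harmonic mean always lies between its two inputs, so values recomputed this way from the aggregate precision and recall columns need not match the reported F1 figures if those were averaged per class or taken at a different confidence threshold, which the source does not specify.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units in and out)."""
    if precision + recall == 0:
        return 0.0  # convention: F1 is 0 when both inputs are 0
    return 2 * precision * recall / (precision + recall)

# Example with the precision and recall of the first baseline row (in percent).
baseline_f1 = f1_score(85.5, 83.2)
```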

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Abdusalomov, A.; Umirzakova, S.; Tashev, K.; Sevinov, J.; Temirov, Z.; Muminov, B.; Buriboev, A.; Safarova Ulmasovna, L.; Lee, C. AI-Driven Boost in Detection Accuracy for Agricultural Fire Monitoring. Fire 2025, 8, 205. https://doi.org/10.3390/fire8050205
