Abstract

Camera-based fire detection systems have been found to yield significantly better results than sensor-based systems and are therefore widely deployed around the world. This study presents an effective, lightweight fire detection technique based on deep learning: a convolutional neural network, built on the YOLOv5 algorithm, designed specifically to identify fire areas. Even after training and testing on fire detection data, the default versions of the You Only Look Once (YOLO) technique achieve only limited accuracy. We therefore chose YOLOv5 as the base network and enhanced it to maximize its effectiveness in identifying fire disasters. A self-designed improved convolution module replaces the regular convolution module in YOLOv5; by dividing the input features into two main portions, it makes the network lightweight. In addition, the network incorporates an attention mechanism. Test results show that the proposed method is more effective than previous fire detection methods, with a precision of 91.22% and a recall of 93.78%.

1. Introduction

Fire is a natural calamity that affects the whole world and is one of the most common disasters in daily life. Its outbreak and spread not only severely harm the environment but also threaten people's safety and property. In recent years, fires caused by both natural and human factors have resulted in significant casualties and ecological devastation [1,2]. Traditional fire detection methods, which collect data with temperature and smoke sensors to predict fires, guarantee neither accuracy nor real-time performance. As computing power and artificial intelligence (AI) continue to advance, deep learning has shown remarkable ability in image detection and recognition, leading to its extensive use in early warning and fire detection systems [3,4,5,6].

Deep learning object detection algorithms fall into two main categories, built on different ideas: two-stage algorithms and one-stage algorithms [7,8,9,10,11,12,13]. According to [14], R-CNN is a well-known two-stage algorithm that uses the Selective Search algorithm to generate candidate boxes for regions of interest. Although it substantially outperforms traditional object detection methods, R-CNN is slow, because candidate regions must be generated in a separate step and features are extracted repeatedly for every candidate [15]. To address these issues, Microsoft Research developed SPP-Net, which introduces a Spatial Pyramid Pooling (SPP) structure that removes the need to crop and rescale candidate regions, reducing computational cost and avoiding repeated operations [16]. This speeds up the processing of candidate boxes and prevents image distortion. Fast R-CNN, proposed in 2015, built on the SPP-Net structure and further improved R-CNN by introducing an RoI pooling layer [15]. Other algorithms have also been proposed and applied to object detection, such as those based on Transformer [17,18,19] and U-Net [20]. With its global self-attention mechanism, the Transformer can effectively capture long-range dependencies in images and has been used to develop efficient detection models such as DETR (Detection Transformer), which performs end-to-end prediction without traditional region proposal modules. U-Net, with its classical encoder–decoder architecture and skip connections, excels at preserving high-resolution feature maps and fusing multi-scale information, which makes it effective for small object detection and semantic segmentation.
With the introduction of these new architectures, object detection algorithms continue to move beyond the limitations of traditional convolutional neural networks toward higher accuracy and more efficient computation.

The early one-stage algorithm OverFeat generated candidate boxes using sliding windows and regular blocks, and later used multi-scale sliding windows to improve detection of targets with complex shapes and varying sizes. However, it was supplanted by R-CNN because of its limited accuracy. Soon after its introduction, the You Only Look Once (YOLO) algorithm became the fastest one-stage object detector [21]. It has a straightforward design and makes predictions from global image information: input images are resized to 448 × 448 pixels and divided into a 7 × 7 grid of cells. However, its recall is lower than that of region-based methods, and its localization is less accurate [22,23]. As a result, YOLO has gone through several iterations, the most popular of which is YOLOv5 [24]. In real fire detection applications, downsampling and convolution shrink the feature maps as the network gets deeper, so features are lost during propagation and are used less effectively. Like other deep-neural-network-based detectors, YOLOv5 also has limited ability to detect objects at long distances. Later iterations of YOLO led to YOLOv8, which addresses issues in its predecessors and further improves detection accuracy. However, YOLOv5 is highly optimized for real-time applications and achieves higher frames per second (FPS) than newer models such as YOLOv8, which runs at lower FPS on CPU [25]. This makes YOLOv5 more suitable for time-sensitive tasks [26,27]. Fire detection often requires acquiring and processing images with cameras or drones. 
Because these devices typically rely on CPU computation, YOLOv5 responds faster in practical applications. This study therefore focuses on optimizing YOLOv5.

To address these problems, we enhance YOLOv5 for fire detection. The key contributions are as follows:

We replace the standard convolution modules in the backbone and neck of YOLOv5 with a self-designed improved convolution module. This module simplifies the network without sacrificing classification accuracy, making it well suited to online real-time devices. Because this lightweight design reduces the number of convolution kernels, it improves both the network's detection accuracy and its detection rate.

We introduce the efficient channel attention (ECA) mechanism after the last layer of the YOLOv5 backbone. It improves the backbone's feature extraction ability with only a small increase in parameters and suppresses interference from complex backgrounds. Its goal is to increase the expressive weight of the feature map and compensate for the loss of specific feature information.

2. Materials and Methods

The core principle of YOLOv5 is similar to that of YOLOv4. YOLOv5 surpasses Faster R-CNN, YOLOv3, and YOLOv4 in recall, average precision, and precision. Compared with YOLOv4 in particular, YOLOv5 offers improvements in architecture optimization, loss function refinement, data augmentation, training efficiency, and model flexibility. Its more efficient network architecture, with enhanced feature extraction modules, yields faster inference and higher accuracy [28]. It uses the CIoU loss function, which improves bounding box regression accuracy. For data augmentation, YOLOv5 uses techniques such as mosaic augmentation, which improve the model's robustness and generalization, and it supports mixed-precision training. Compared with YOLOv4, YOLOv5 also trains more efficiently and makes better use of hardware, enabling faster training and better performance in real-time applications [29]. Overall, YOLOv5 improves performance, efficiency, and adaptability while maintaining high accuracy. YOLOv5 comes in four variants: YOLOv5s, YOLOv5m, YOLOv5l, and YOLOv5x. They share the same design and differ in network depth and width, and therefore in model size and storage requirements: YOLOv5x is the largest and YOLOv5s the smallest. In this work, we build on the smallest variant, YOLOv5s, whose architecture is shown in Figure 1.
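For reference, the CIoU loss mentioned above can be sketched in plain Python. This is a minimal, non-vectorized illustration of the standard CIoU formulation (IoU minus a normalized centre-distance penalty and a weighted aspect-ratio penalty), assuming boxes in (x1, y1, x2, y2) corner format; it is not YOLOv5's own implementation, and the function name `ciou` is hypothetical.

```python
import math


def ciou(box1, box2, eps=1e-9):
    """Complete IoU of two (x1, y1, x2, y2) boxes; the regression loss is 1 - ciou."""
    ax1, ay1, ax2, ay2 = box1
    bx1, by1, bx2, by2 = box2
    w1, h1 = ax2 - ax1, ay2 - ay1
    w2, h2 = bx2 - bx1, by2 - by1

    # Plain IoU from the overlap and union areas.
    inter = max(0.0, min(ax2, bx2) - max(ax1, bx1)) * max(0.0, min(ay2, by2) - max(ay1, by1))
    union = w1 * h1 + w2 * h2 - inter + eps
    iou = inter / union

    # Squared diagonal of the smallest box enclosing both boxes.
    cw = max(ax2, bx2) - min(ax1, bx1)
    ch = max(ay2, by2) - min(ay1, by1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Squared distance between the two box centres.
    rho2 = ((bx1 + bx2 - ax1 - ax2) ** 2 + (by1 + by2 - ay1 - ay2) ** 2) / 4

    # Aspect-ratio consistency term v and its trade-off weight alpha.
    v = (4 / math.pi ** 2) * (math.atan(w2 / h2) - math.atan(w1 / h1)) ** 2
    alpha = v / (v - iou + 1 + eps)

    return iou - (rho2 / c2 + v * alpha)


print(ciou((0, 0, 2, 2), (0, 0, 2, 2)))  # identical boxes: close to 1
print(ciou((0, 0, 2, 2), (3, 0, 5, 2)))  # disjoint boxes: negative
```

For identical boxes the loss 1 − CIoU is essentially 0; for disjoint boxes CIoU drops below zero because of the centre-distance penalty, which still gives a useful gradient when the boxes do not overlap.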

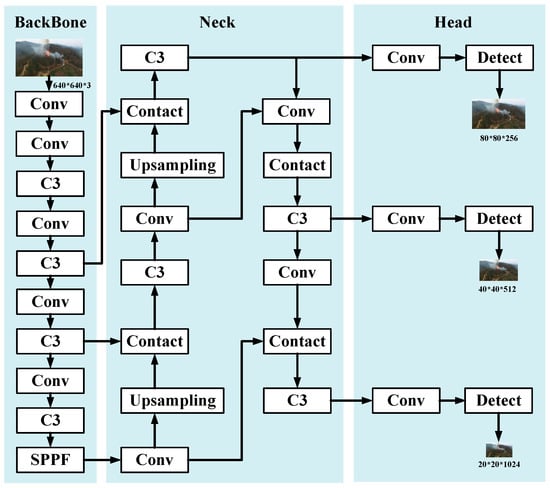

Figure 1.

Schematic of the network architecture of YOLOv5, including the backbone network, neck network and head network.

YOLOv5 can adjust its model size through depth and width multiples, balancing detection accuracy and speed. This design achieves high detection precision while maintaining fast inference, making it an efficient choice for real-time applications. As shown in Figure 1, the algorithm consists of three main components: the backbone, neck, and head (detection) networks. The backbone network extracts features and comprises modules such as convolution (Conv), C3 (a CSP bottleneck with three convolutions), and SPPF (fast Spatial Pyramid Pooling). The neck network is a feature fusion network that integrates features through convolution (Conv), C3 modules, upsampling, and concatenation (Concat). Finally, the detection head produces predictions at three different scales, allowing it to detect objects of varying sizes. A key feature of YOLOv5 is the use of Focus (in early releases) and CSP (Cross-Stage Partial) layers, which improve feature extraction efficiency and reduce computational redundancy, further enhancing the model's performance [30].
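The effect of the depth and width multiples can be illustrated with a short sketch. It mirrors, in simplified form, how YOLOv5 scales a block's repeat count and output channels; the multiplier values and the base C3 stage configuration below are assumptions taken from the public Ultralytics model configuration files (v6.x), and the helper names are hypothetical.

```python
import math

# Assumed (depth_multiple, width_multiple) per variant, as in the public
# Ultralytics YAML files; not stated in this paper.
VARIANTS = {
    "yolov5s": (0.33, 0.50),
    "yolov5m": (0.67, 0.75),
    "yolov5l": (1.00, 1.00),
    "yolov5x": (1.33, 1.25),
}

# Assumed base backbone C3 stages as (repeats, output channels) at multiples of 1.0.
BASE_C3_STAGES = [(3, 128), (6, 256), (9, 512), (3, 1024)]


def make_divisible(x, divisor=8):
    """Round x up to the nearest multiple of divisor."""
    return math.ceil(x / divisor) * divisor


def scale_block(repeats, channels, depth_multiple, width_multiple):
    """Scale one block's repeat count (depth) and output channels (width)."""
    n = max(round(repeats * depth_multiple), 1) if repeats > 1 else repeats
    c = make_divisible(channels * width_multiple, 8)
    return n, c


for name, (gd, gw) in VARIANTS.items():
    stages = [scale_block(n, c, gd, gw) for n, c in BASE_C3_STAGES]
    print(name, stages)
```

Under these assumptions, YOLOv5s gets C3 repeat counts of 1, 2, 3, 1 with 64, 128, 256, and 512 output channels, which is why it is the smallest variant.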

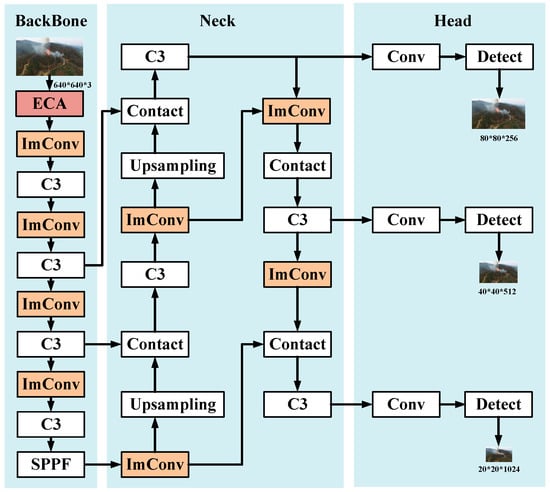

Because YOLOv5s relies on C3 modules for feature extraction, detailed feature information in the image is gradually lost as the number of these modules increases. We therefore introduce the ECA attention mechanism after the last layer of the backbone; it strengthens the network's feature extraction with a minimal increase in parameters and reduces interference from complex backgrounds. This increases the expressive weight of the features and helps compensate for the loss of specific feature information in the feature map. At the same time, we replace the regular convolution modules in YOLOv5's backbone and neck with our custom improved version, which simplifies the network without sacrificing classification accuracy. Figure 2 shows the complete improved YOLOv5s architecture.

Figure 2.

Schematic of the network architecture of improved YOLOv5. The pink box represents the newly incorporated ECA module in the model, while the yellow boxes indicate the substitution of the standard convolution module with the improved version.

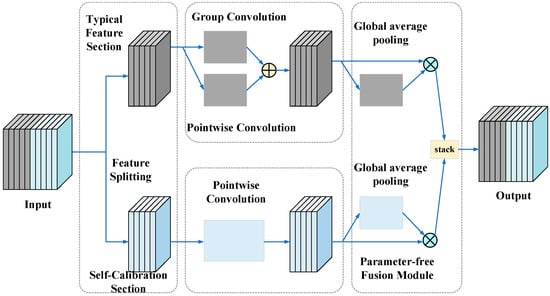

Improved convolution module (ImConv). As shown in Figure 3, ImConv divides all input feature-map channels into two main portions according to a split ratio. This reduces feature redundancy and feature loss and helps extract more effective features [31]. One part applies the more expressive convolution to refine the typical features, a second part uses a simpler convolution to extract feature information, and a third part applies lightweight self-calibration to enhance small hidden details. This improves the utilization of feature information while reducing the number of model parameters. The operation can be written as follows:

where α is the ratio of channel division, set to 0.5. The left half of the weight matrix acts on the fraction α of the input channels (the typical part) with 3 × 3 convolution kernels, and the right half acts on the remaining fraction (1 − α) of the channels with 1 × 1 convolution kernels.

Figure 3.

Improved convolution module in the improved YOLOv5.
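To see why splitting the channels reduces parameters, the sketch below counts convolution weights for a standard 3 × 3 convolution and for a simplified split in which a fraction α of the input channels uses 3 × 3 kernels and the rest use 1 × 1 kernels, following the description of Equation (1). It deliberately ignores the group convolution, the self-calibration branch, and biases, so its ratios are only illustrative and are not expected to match the measured cost of ImConv; the function names are hypothetical.

```python
def standard_conv_params(c_in, c_out, k=3):
    """Weights of an ordinary k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def split_conv_params(c_in, c_out, alpha):
    """Weights when a fraction alpha of the input channels uses 3x3 kernels
    and the remaining channels use 1x1 kernels (bias ignored)."""
    typical = int(round(alpha * c_in))
    rest = c_in - typical
    return 9 * typical * c_out + 1 * rest * c_out


for alpha in (1.0, 0.5, 0.25):
    ratio = split_conv_params(64, 64, alpha) / standard_conv_params(64, 64)
    print(f"alpha={alpha}: {ratio:.3f} of a standard 3x3 conv")
```

With 64 input and 64 output channels, α = 0.5 needs 20,480 weights versus 36,864 for a standard 3 × 3 convolution (about 56%), and α = 0.25 needs 12,288 (about 33%).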

The typical channels are processed by two parallel convolutions so that a single main feature class (e.g., color or texture) is not represented redundantly in the typical part. To cut redundant computation, one branch applies group convolution to the typical channels; to compensate for the information this loses, the other branch runs in parallel and applies 1 × 1 pointwise convolution. Because both convolutions act on the same channels, their outputs are added. Since group convolution can be expressed as a block-diagonal matrix, the typical part of Equation (1) can be written as follows:

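where each group contains an equal share of the typical channels, and each diagonal block is the kernel of one group convolution, acting only on the channels of its own group.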
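The block-diagonal view of group convolution can be checked numerically. The sketch below works at a single pixel with 1 × 1 kernels, so each convolution reduces to a matrix-vector product over channels; with 3 × 3 kernels a spatial sum is added, but the channel structure is the same. The pure-Python helpers and the example weights are hypothetical, and the groups are assumed to have equal input and output widths.

```python
def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def block_diag(blocks):
    """Assemble square blocks into one block-diagonal matrix (zeros elsewhere)."""
    size = sum(len(b) for b in blocks)
    out = [[0.0] * size for _ in range(size)]
    offset = 0
    for b in blocks:
        n = len(b)
        for i in range(n):
            for j in range(n):
                out[offset + i][offset + j] = b[i][j]
        offset += n
    return out


def group_conv_pixel(x, blocks):
    """Group convolution at one pixel: each group's kernel sees only its own channels."""
    y, offset = [], 0
    for b in blocks:
        n = len(b)
        y.extend(matvec(b, x[offset:offset + n]))
        offset += n
    return y


def typical_part(x, blocks, pointwise):
    """Sum of the group-convolution branch and the parallel pointwise branch."""
    gwc = group_conv_pixel(x, blocks)
    pwc = matvec(pointwise, x)
    return [a + b for a, b in zip(gwc, pwc)]


x = [1, 2, 3, 4]                                   # four typical channels
blocks = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]      # two groups of two channels
identity = [[1 if i == j else 0 for j in range(4)] for i in range(4)]
print(group_conv_pixel(x, blocks), matvec(block_diag(blocks), x))
print(typical_part(x, blocks, identity))
```

Running the groups separately gives the same result as multiplying by the block-diagonal matrix, and adding the pointwise branch restores interaction between channels in different groups.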

The whole operation can be understood as introducing two coefficient vectors that guide the typical part and the self-calibration part, respectively; this process is expressed in Equation (3).

Because the two parts come from separate input channels, they must eventually be fused so that the information is complete. Instead of the traditional approach of fusing by direct concatenation or summation, we use a parameter-free feature fusion scheme that improves performance without adding parameters. First, global average pooling is applied to each part separately, compressing its spatial dimensions. A softmax over these pooled values gives the weights by which each part's input is multiplied. Finally, the weighted features of the two parts are combined.
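The parameter-free fusion step can be sketched as follows, under stated assumptions: each part is a list of channels (each an H × W list), both parts have the same shape, the softmax is taken per channel across the two parts to produce the two coefficient vectors, and the weighted parts are combined by summation. The names, including `parameter_free_fuse`, are hypothetical; this is an illustration, not the authors' code.

```python
import math


def global_avg_pool(feature):
    """feature: list of channels, each an H x W list of lists -> per-channel means."""
    return [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature]


def parameter_free_fuse(part1, part2):
    """Fuse two same-shaped feature maps with per-channel softmax weights
    computed from their pooled statistics (assumed summation fusion)."""
    s1, s2 = global_avg_pool(part1), global_avg_pool(part2)
    fused = []
    for c, (a, b) in enumerate(zip(s1, s2)):
        m = max(a, b)  # subtract the max for numerical stability
        ea, eb = math.exp(a - m), math.exp(b - m)
        beta, gamma = ea / (ea + eb), eb / (ea + eb)  # coefficients sum to 1
        fused.append([[beta * u + gamma * v for u, v in zip(r1, r2)]
                      for r1, r2 in zip(part1[c], part2[c])])
    return fused


typical = [[[0.0, 0.0], [0.0, 0.0]]]                 # one channel, 2 x 2
calibrated = [[[math.log(3)] * 2, [math.log(3)] * 2]]
print(parameter_free_fuse(typical, calibrated))
```

Because the coefficients come from a softmax, they sum to 1 for every channel, so this fusion adds no learnable parameters; in the example, the part with the larger pooled response receives weight 0.75.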

The number of ImConv parameters can then be expressed as:

ECA attention mechanism. The attention mechanism helps localize relevant information by learning weights along the channel dimension of the feature layers extracted by the backbone network [32]. This allows the network to focus on fire-related features in the input images and to suppress irrelevant information in complex environments. As a result, it mitigates the impact of complex environments on fire detection and improves the performance of deep convolutional networks. Because the backbone network plays a pivotal role in this process, this work adds the ECA attention mechanism to the backbone part of the network.

In our work, the novelty does not lie in the introduction of the ECA mechanism itself but in its specific integration into the YOLOv5 framework to address the challenges of feature extraction in complex environments. The key contributions of our approach include:

Customized Integration: We integrated the ECA mechanism into the backbone of YOLOv5 after the last layer to enhance feature extraction with minimal computational overhead. This placement was chosen to balance computational efficiency and feature enhancement for our use case.

Performance Enhancement in Specific Scenarios: By incorporating ECA, we aimed to address the interference caused by complex backgrounds in our dataset. The mechanism enhances the expression weight of key feature maps while suppressing irrelevant background information, which significantly improves detection precision, particularly for small and occluded objects.

Analysis of Impact: Unlike existing works that apply ECA in a general sense, we provide a detailed analysis of its impact on the detection accuracy, model efficiency, and robustness within the YOLOv5 framework. This context-specific adaptation demonstrates its effectiveness for object detection tasks in challenging environments.

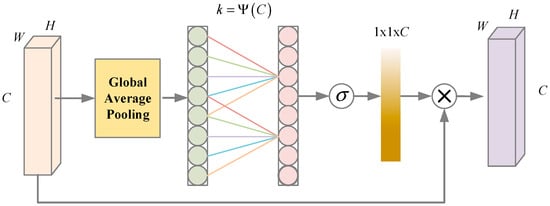

Channel attention is one family of attention mechanisms, and SENet is its most representative example. SENet extracts features mainly through feature aggregation (squeeze) and recalibration (excitation). Although this approach achieves high accuracy, its fully connected layers bring high complexity and computational cost, and the dimensionality reduction SENet uses to control model complexity reduces the efficiency and speed of channel attention prediction. ECA effectively addresses this issue; its structure is illustrated in Figure 4.

Figure 4.

Diagram of the ECA attention mechanism.

Computational Complexity: ECA significantly reduces the computational burden compared with SENet. SENet uses fully connected (FC) layers to learn channel-wise dependencies, so its number of parameters grows quadratically with the number of channels; ECA instead uses a 1D convolution kernel for local cross-channel interaction. This results in a more lightweight structure with fewer parameters and lower computational cost.

Efficiency: ECA achieves similar or better performance compared to SENet but with lower computational overhead. This is due to its efficient use of channel-wise attention without the need for a large FC layer. ECA’s mechanism reduces the number of operations required, which not only enhances computational efficiency but also makes it more suitable for real-time applications, where efficiency is crucial.
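The difference in parameter growth can be made concrete by counting weights. This sketch assumes SENet's usual reduction ratio r = 16, ignores biases, and fixes the ECA kernel size at k = 3 for illustration; the helper names are hypothetical.

```python
def se_params(channels, reduction=16):
    """Weights of SENet's two FC layers, C -> C/r -> C (biases ignored)."""
    hidden = channels // reduction
    return channels * hidden + hidden * channels


def eca_params(kernel_size):
    """ECA shares one 1D kernel across all channels: k weights, no bias."""
    return kernel_size


for c in (64, 256, 1024):
    print(f"C={c}: SE={se_params(c)} weights, ECA={eca_params(3)} weights")
```

At 1024 channels the SE block needs 131,072 weights, whereas the ECA block needs only k of them, independent of the channel count.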

The aggregated features are obtained by global average pooling of the input data, followed by local cross-channel information fusion through a one-dimensional convolution with kernel size $k$. The kernel size $k$ determines how far local cross-channel information fusion extends, and it has a nonlinear relationship with the channel dimension $C$. Through this nonlinear mapping, high-dimensional channels interact over longer ranges, while low-dimensional channels interact over shorter ranges:

$$C = \phi(k) = 2^{(\gamma \cdot k - b)}$$

Given the channel dimension $C$, the convolution kernel size $k$ can be determined adaptively:

$$k = \psi(C) = \left| \frac{\log_2 C}{\gamma} + \frac{b}{\gamma} \right|_{odd}$$

where $|t|_{odd}$ denotes the odd number nearest to $t$, and $\gamma$ and $b$ are tuning parameters.
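A minimal pure-Python sketch of the ECA computation described above: global average pooling, a 1D convolution across channels with zero padding and no bias, a sigmoid, and channel-wise rescaling. The defaults γ = 2 and b = 1 are the values used in the original ECA-Net work and are an assumption here, since the tuning values used in this study are not stated; the function names are hypothetical.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive kernel size k = |log2(C)/gamma + b/gamma|_odd (gamma, b assumed)."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca(feature, weights):
    """Apply ECA to a C x H x W feature map (nested lists) with an odd-length 1D kernel."""
    c, k = len(feature), len(weights)
    pad = (k - 1) // 2
    # Squeeze: global average pooling gives one value per channel.
    pooled = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature]
    scales = []
    for i in range(c):
        acc = 0.0
        for j in range(k):
            idx = i + j - pad
            if 0 <= idx < c:  # zero padding at the channel boundaries
                acc += weights[j] * pooled[idx]
        scales.append(1.0 / (1.0 + math.exp(-acc)))  # sigmoid gate
    # Reweight every spatial position of each channel by its gate.
    return [[[v * scales[i] for v in row] for row in feature[i]] for i in range(c)]


print([eca_kernel_size(c) for c in (64, 128, 256, 512)])
print(eca([[[2.0]], [[4.0]], [[6.0]]], [0.0, 0.0, 0.0]))
```

With these defaults, the kernel size is 3 for 64 channels and 5 for 128 to 512 channels, so wider layers mix information across more neighbouring channels. In a trained network the kernel weights are learned; the zero kernel above only shows that each channel is scaled by a gate between 0 and 1.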

3. Results

3.1. Experimental Equipment

This section describes the experimental setup and procedures for the fire detection experiments. The experimental hardware consisted of an Intel(R) Core(TM) i9-12900K CPU @ 3.2 GHz and an NVIDIA GeForce RTX 4090 GPU with 24 GB of memory. Software environment: CUDA 11.1, PyTorch 1.8.1, Python 3.8; operating system: Ubuntu 22.04.2. The experimental parameters were a batch size of 32, an initial learning rate of 0.01, a momentum of 0.937, a weight decay coefficient of 0.0005, and a cosine annealing schedule to decay the learning rate.
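The reported training hyperparameters and the cosine annealing schedule can be written down as follows. The cosine formula is the standard one; the final learning-rate fraction and the number of epochs are hypothetical placeholders, since the text does not state them.

```python
import math

# Hyperparameters reported in the text.
LR0 = 0.01
MOMENTUM = 0.937
WEIGHT_DECAY = 0.0005
BATCH_SIZE = 32

# Hypothetical placeholders: not stated in the paper.
LR_FINAL_FRACTION = 0.01
EPOCHS = 300


def cosine_lr(epoch, lr0=LR0, final_fraction=LR_FINAL_FRACTION, epochs=EPOCHS):
    """Cosine annealing from lr0 at epoch 0 down to lr0 * final_fraction at the last epoch."""
    lr_min = lr0 * final_fraction
    return lr_min + 0.5 * (lr0 - lr_min) * (1 + math.cos(math.pi * epoch / epochs))


for epoch in (0, EPOCHS // 4, EPOCHS // 2, EPOCHS):
    print(epoch, round(cosine_lr(epoch), 6))
```

The schedule starts at the reported initial rate of 0.01, falls slowly at first, fastest around the midpoint, and flattens out as it approaches the final rate.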

3.2. Fire Dataset

A key shortcoming of fire detection research is the lack of adequate databases for implementing and evaluating proposed methods. The fire images used for training in this study were collected from publicly available datasets and Google Photos and labeled with LabelImg. The labeled dataset consists of 1553 good-resolution images: 904 urban fire images and 649 wildfire images.

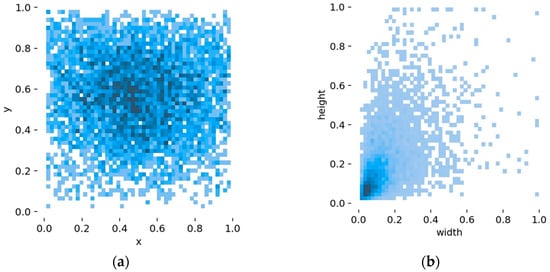

Figure 5 presents an analysis of the spatial locations and size distribution of fire areas across the images in the dataset. This analysis helps to visualize and understand the dataset's diversity in terms of fire locations and sizes, which is essential for evaluating the model's generalization capability. Figure 5a illustrates the spatial distribution of the fire, revealing a relatively non-uniform target distribution with fire concentrated in the central region. The size distribution in Figure 5b indicates that most fire instances occupy less than 20% of the width and height of the original image. This suggests that most fires captured in the images are at an early stage, occupying only a small portion of the image area.

Figure 5.

Statistics of fire distribution in the images: (a) The spatial distribution of the fire, (b) The analysis of the size distribution.
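The size statistics in Figure 5b can be computed directly from annotation files. This sketch assumes the labels were exported in the YOLO text format (class, then normalized x-centre, y-centre, width, and height) and counts how many boxes are below 20% of the image in both width and height; the label lines and function names are hypothetical examples, not taken from this dataset.

```python
def parse_yolo_labels(text):
    """Parse YOLO-format lines: class x_center y_center width height (normalized to 0-1)."""
    boxes = []
    for line in text.strip().splitlines():
        cls, xc, yc, w, h = line.split()
        boxes.append((int(cls), float(xc), float(yc), float(w), float(h)))
    return boxes


def fraction_small(boxes, threshold=0.2):
    """Share of boxes whose normalized width and height are both below threshold."""
    small = sum(1 for _, _, _, w, h in boxes if w < threshold and h < threshold)
    return small / len(boxes) if boxes else 0.0


# Hypothetical label file for illustration only.
labels = """
0 0.50 0.48 0.12 0.15
0 0.31 0.62 0.08 0.10
0 0.55 0.40 0.35 0.42
0 0.47 0.52 0.18 0.19
"""

boxes = parse_yolo_labels(labels)
print(f"{fraction_small(boxes):.0%} of boxes are smaller than 20% of the image")
```

The box centres (the second and third fields) can be collected in the same way to plot a spatial distribution like the one in Figure 5a.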

3.3. Training and Analysis

To quantitatively evaluate the detection performance of the model, precision, recall, F1 score, and mAP are used to measure the performance of the algorithm [33,34]:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times P \times R}{P + R}, \qquad AP = \int_0^1 P(R)\, dR, \qquad mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

Here, TP denotes targets correctly classified as positive, FN targets incorrectly classified as negative, FP targets incorrectly classified as positive, and AP the area under the precision-recall curve; mAP averages AP over the N classes.
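The metrics above can be computed from a ranked list of detections. This sketch assumes detections are already sorted by descending confidence and matched to ground truth (1 = TP, 0 = FP), and it uses all-point interpolation for AP, one common way to evaluate the integral; detection frameworks differ in the interpolation they use. The function names and the example flags are hypothetical. With a single "fire" class, mAP reduces to that class's AP.

```python
def precision_recall_curve(tp_flags, n_ground_truth):
    """Cumulative precision and recall for detections sorted by descending confidence."""
    precisions, recalls = [], []
    tp = fp = 0
    for flag in tp_flags:
        if flag:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / n_ground_truth)
    return precisions, recalls


def average_precision(precisions, recalls):
    """All-point interpolated AP: area under the monotone precision envelope."""
    mrec = [0.0] + list(recalls) + [1.0]
    mpre = [0.0] + list(precisions) + [0.0]
    # Make precision non-increasing from right to left (the envelope).
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall changes.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1]
               for i in range(len(mrec) - 1) if mrec[i + 1] != mrec[i])


def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if p + r else 0.0


# Five ranked detections against four ground-truth fires (hypothetical).
p, r = precision_recall_curve([1, 1, 0, 1, 0], n_ground_truth=4)
print(f"P={p[-1]:.3f} R={r[-1]:.3f} F1={f1_score(p[-1], r[-1]):.3f} AP={average_precision(p, r):.4f}")
```

Here the final precision is 0.6, the final recall 0.75, and the AP 0.6875; the envelope step ensures that a later, higher-precision point is credited to the lower recall levels before it.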

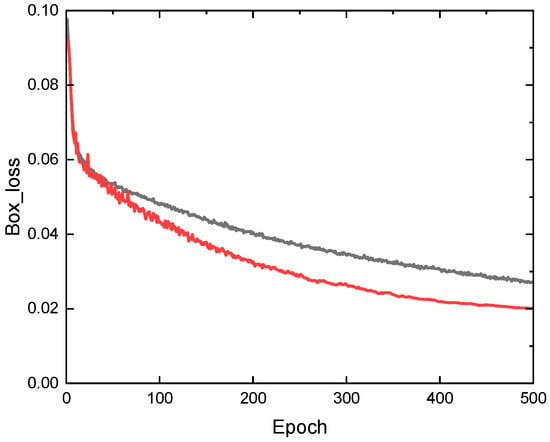

To validate the effectiveness and performance of the proposed method, we compare the modified YOLOv5s algorithm with YOLOv3, YOLOv4, YOLOv4-Tiny, SSD, EfficientDet, and the original YOLOv5s. First, Figure 6 plots the bounding box loss of the original and improved YOLOv5s models over the training epochs; the black curve represents the original YOLO model and the red curve the improved model. Both loss curves decrease as training progresses, indicating that the models are learning and improving their localization accuracy. However, the red curve declines faster and stays consistently below the black curve, showing that the improved model converges more quickly and localizes objects better. This suggests that the proposed improvements, the lightweight architecture and the attention mechanism, enhance the model's ability to predict accurate bounding boxes, resulting in a lower box loss. Second, the detection performance of the various algorithms is compared in Table 1. Finally, to show the effect of the improvements more intuitively, detection results on some test-set images are visualized in Figure 7.

Figure 6.

Comparison of the curve of bounding box loss value with the number of iterations before (black line) and after improvement (red line).

Table 1.

Comparison results of different fire detection models.

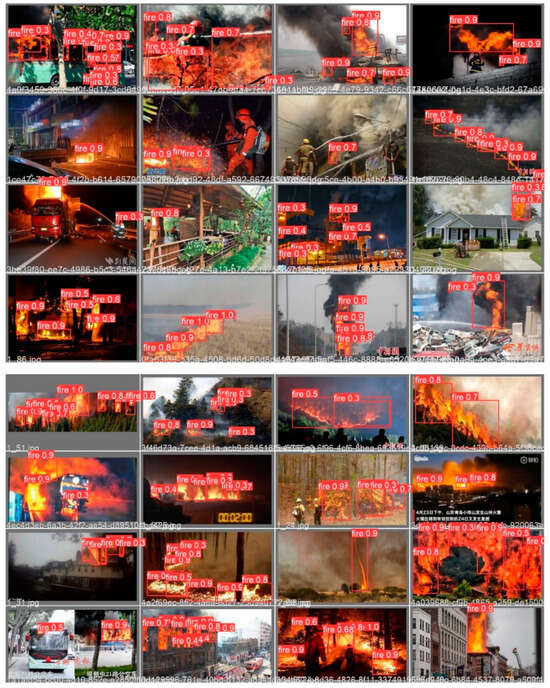

Figure 7.

Display of fire detection results using improved YOLOv5.

The comparison of bounding box loss in Figure 6 shows that the modified YOLOv5s algorithm converges faster than the original YOLOv5s. After stabilizing, the improved algorithm also reaches lower loss values, decreasing from 0.1 to about 0.02, which reflects its better performance. Throughout training, we also evaluated the model on a separate validation set. Training and validation accuracy improved consistently, indicating that the model did not overfit despite the large number of epochs.

Table 1 shows that the improved YOLOv5s model attains a precision of 91.22% and a recall of 93.78%. Its F1 score and mAP reach 0.93 and 95.57%, respectively; all of these improve on the original YOLOv5s model and place it in a leading position among the compared algorithms. The detection results visualized in Figure 7 show that the improved YOLOv5s model accurately detects occluded and overlapping fire targets.

Table 2 compares the models on through-hole (TH) detection. Every method performs well, and the improved YOLOv5s performs best on all metrics. However, because through holes have relatively regular shapes, the task is easy, so the advantage of the improved YOLOv5s is less pronounced.

Table 2.

Comparison results of different TH detection models.

3.4. Ablation Experiment

To evaluate the contribution of each improvement to the YOLOv5s model more intuitively and rigorously, we number the models with each improvement and with their combination: improvement a embeds the ECA attention mechanism, and improvement b replaces the backbone convolution module with the improved convolution module. Ablation experiments were carried out in the same environment, with the same dataset split and parameters, and the results are shown in Table 3, where Y indicates that an improvement is used and N that it is not.

Table 3.

Ablation experiment.

Table 3 shows that the two improvements enhance the detection performance of the YOLOv5s model. Across all models, Model 4 achieves the best detection accuracy, with the highest mAP and F1 scores, a clear improvement over the original Model 1, and it also shows good generalization.

The effectiveness of the proposed lightweight module was verified through a comparative experiment, analyzed as follows:

As described earlier, the hyperparameter α is the proportion of typical features among all input features. As shown in Table 4, both the FLOPs and the number of parameters decrease as α is reduced from 1 to 0.25. With α = 1, the ImConv configuration requires 56M FLOPs and 0.4M parameters, and the improved YOLOv5s model reaches a precision of 90.12%. With α = 0.5, the FLOPs and parameters fall to 26M and 0.2M, respectively (about 46% of the original FLOPs and 50% of the original parameters), while precision rises to 91.22%, surpassing the result at α = 1. This indicates that reducing α to 0.5 lets the model focus on the more significant features and discard redundant computation, giving a better trade-off between computational efficiency and detection accuracy.

Table 4.

Comparison results of different ImConv blocks.

When α = 0.25, the FLOPs and parameters fall further to 20M and 0.1M, but precision drops slightly to 89.08%. Thus, although a smaller α greatly reduces computational cost, it may also discard important feature information and slightly degrade detection performance. Overall, α = 0.5 achieves the best balance between accuracy and resource efficiency, demonstrating the effectiveness of the proposed improvements in building a lighter and more precise detection model.

4. Conclusions

This study presents a novel approach for identifying fire areas using the YOLOv5 algorithm. We made two modifications to improve the accuracy of the default YOLOv5 for fire detection. First, a self-designed convolution module replaces the conventional convolution module of YOLOv5 in the backbone and neck, reducing complexity while maintaining classification accuracy. Second, the ECA attention mechanism is added after the last layer of the backbone. By giving certain features more weight, it compensates for lost granularity in the feature map, improves the feature extraction performance of the backbone with little increase in parameter count, and effectively reduces the impact of complex backgrounds. Comparative and ablation experiments show that the improved YOLOv5 model achieves superior fire detection accuracy, with a precision of 91.22% and a recall of 93.78%. However, its performance on simpler, underrepresented features (e.g., through holes) indicates room for improvement in generalization across diverse feature types.

Future work will deploy the improved YOLOv5s model on embedded systems for real-time industrial applications, which will require further optimization to balance accuracy and computational efficiency in resource-constrained environments.

Author Contributions

Methodology, D.Y.; Software, S.L.; Investigation, Z.Z. and X.Z.; Resources, W.D.; Data curation, X.L.; Funding acquisition, X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by Key Laboratory of Fire Protection Technology for Industry and Public Building, the Ministry of Emergency Management (2023KLIB01); Tianjin Natural Science Foundation Project (22JCYBJC01710); National Natural Science Foundation of China (62103417).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

Author Zhongze Zhang is employed by the company China Siwei Surveying and Mapping Technology Co. Ltd., and Wei Ding is employed by the company Anhui Zhongkezhonghuan Intelligent Equipment Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Verstockt, S.; Beji, T.; De Potter, P.; Van Hoecke, S.; Sette, B.; Merci, B.; Van de Walle, R. Video driven fire spread forecasting (f) using multi-modal LWIR and visual flame and smoke data. Pattern Recognit. Lett. 2013, 34, 62–69. [Google Scholar] [CrossRef]

- Ren, W.K.; Jin, Z.J. Phase space visibility graph. Chaos Solitons Fract. 2023, 176, 114170. [Google Scholar] [CrossRef]

- Battistoni, P.; Cantone, A.A.; Martino, G.; Passamano, V.; Romano, M.; Sebillo, M.; Vitiello, G. A cyber-physical system for wildfire detection and firefighting. Future Internet 2023, 15, 237. [Google Scholar] [CrossRef]

- Muhammad, K.; Ahmad, J.; Mehmood, I.; Rho, S.; Baik, S.W. Convolutional neural networks based fire detection in surveillance videos. IEEE Access 2018, 6, 18174–18183. [Google Scholar] [CrossRef]

- Mao, W.; Wang, W.; Dou, Z.; Li, Y. Fire recognition based on multi-channel convolutional neural network. Fire Technol. 2018, 54, 531–554. [Google Scholar] [CrossRef]

- Bu, F.; Gharajeh, M.S. Intelligent and vision-based fire detection systems: A survey. Image Vis. Comput. 2019, 91, 103803. [Google Scholar] [CrossRef]

- Yin, H.; Chen, M.; Lin, Y.; Luo, S.; Chen, Y.; Yang, S.; Gao, L. A real-time detection model for smoke in grain bins with edge devices. Heliyon 2023, 9, e18606. [Google Scholar] [CrossRef] [PubMed]

- Zhao, Z.Q.; Zheng, P.; Xu, S.T.; Wu, X. Object detection with deep learning: A Review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232. [Google Scholar] [CrossRef]

- Li, P.; Zhao, W. Image fire detection algorithms based on convolutional neural networks. Case Stud. Therm. Eng. 2020, 19, 100625. [Google Scholar] [CrossRef]

- Zhang, J.; Zhu, H.; Wang, P.; Ling, X. ATT squeeze U-Net: A lightweight network for forest fire detection and recognition. IEEE Access 2021, 9, 10858–10870. [Google Scholar] [CrossRef]

- Xu, R.; Lin, H.; Lu, K.; Cao, L.; Liu, Y. A forest fire detection system based on ensemble learning. Forests 2021, 12, 217. [Google Scholar] [CrossRef]

- Abdusalomov, A.; Whangbo, T.K. Detection and removal of moving object shadows using geometry and color information for indoor video streams. Appl. Sci. 2019, 9, 5165. [Google Scholar] [CrossRef]

- Abdusalomov, A.; Mukhiddinov, M.; Djuraev, O.; Khamdamov, U.; Whangbo, T.K. Automatic salient object extraction based on locally adaptive thresholding to generate tactile graphics. Appl. Sci. 2020, 10, 3350.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; pp. 630–645.

- Su, H.; Sun, H.; Zhao, Y. Efficient Pruning of Detection Transformer in Remote Sensing Using Ant Colony Evolutionary Pruning. Appl. Sci. 2025, 15, 200.

- Shiao, Y.; Gadde, P.; Liu, C.-Y. Wavelet-Based Analysis of Motor Current Signals for Detecting Obstacles in Train Doors. Appl. Sci. 2025, 15, 25.

- Wang, Y.; Deng, Y.; Zheng, Y.; Chattopadhyay, P.; Wang, L. Vision Transformers for Image Classification: A Comparative Survey. Technologies 2025, 13, 32.

- Bochkov, V.S.; Kataeva, L.Y. wUUNet: Advanced Fully Convolutional Neural Network for Multiclass Fire Segmentation. Symmetry 2021, 13, 98.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Chen, C.; Lu, J.; Zhou, M.; Yi, J.; Liao, M.; Gao, Z. A YOLOv3-based computer vision system for identification of tea buds and the picking point. Comput. Electron. Agric. 2022, 198, 107116.

- Ren, W.K.; Jin, N.D.; OuYang, L. Phase Space Graph Convolutional Network for Chaotic Time Series Learning. IEEE Trans. Ind. Inform. 2024, 20, 7576–7584.

- Ren, W.K.; Jin, N.D.; Wang, T.Y. An Interdigital Conductance Sensor for Measuring Liquid Film Thickness in Inclined Gas-Liquid Two-Phase Flow. IEEE Trans. Instrum. Meas. 2024, 73, 9505809.

- Liu, Z.; Wang, C.; Zhong, X.; Shi, G.; Zhang, H.; Yang, D.; Wang, J. A Lightweight Method for Peanut Kernel Quality Detection Based on SEA-YOLOv5. Agriculture 2024, 14, 2273.

- Yan, W.; Wang, X.; Tan, S. YOLO-DFAN: Effective High-Altitude Safety Belt Detection Network. Future Internet 2022, 14, 349.

- Chen, Y.; Ye, J.; Wan, X. TF-YOLO: A Transformer–Fusion-Based YOLO Detector for Multimodal Pedestrian Detection in Autonomous Driving Scenes. World Electr. Veh. J. 2023, 14, 352.

- Orgeira-Crespo, P.; Gabín-Sánchez, C.; Aguado-Agelet, F.; Rey-González, G. Novel Algorithm to Detect, Classify, and Count Mussel Larvae in Seawater Samples Using Computer Vision. Appl. Sci. 2024, 14, 5113.

- Matadamas, I.; Zamora, E.; Aquino-Bolaños, T. Detection and Classification of Agave angustifolia Haw Using Deep Learning Models. Agriculture 2024, 14, 2199.

- Yi, S.; Li, J.; Liu, X.; Yuan, X. CCAFFMNet: Dual-spectral semantic segmentation network with channel-coordinate attention feature fusion module. Neurocomputing 2022, 482, 236–251.

- Pandey, A.; Jain, K. A robust deep attention dense convolutional neural network for plant leaf disease identification and classification from smart phone captured real world images. Ecol. Inf. 2022, 70, 101725.

- Lu, R.; Yang, X.; Jing, X.; Chen, L.; Fan, J.; Li, W.; Li, D. Infrared small target detection based on local hypergraph dissimilarity measure. IEEE Geosci. Remote Sens. Lett. 2020, 19, 7000405.

- Lu, R.; Yang, X.; Li, W.; Fan, J.; Li, D.; Jing, X. Robust infrared small target detection via multidirectional derivative-based weighted contrast measure. IEEE Geosci. Remote Sens. Lett. 2020, 19, 7000105.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).