Early-Stage Wildfire Detection: A Weakly Supervised Transformer-Based Approach

Abstract

1. Introduction

1.1. Annotation Bottleneck

1.2. Contributions

- A Flexible Weakly Supervised Framework: Omni-Nemo can train on several annotation types, including noisy bounding boxes, point labels, and unlabeled images. This flexibility addresses two constraints that limit model performance and training quality: the high cost of annotation and the scarcity of accurately labeled data. As a result, the framework is well suited to large-scale deployment.

- A Stable Training Paradigm Using a Static Expert Teacher: In contrast to co-learning models that rely on evolving supervision, where the teacher is trained alongside the student, Omni-Nemo uses a fixed, pre-trained expert teacher to guide the student. Because the teacher does not change, its supervisory signal stays consistent, which reduces drift and error accumulation and allows efficient knowledge transfer without retraining a model to act as the teacher. It also allows other pre-trained models to be brought in as teachers when training on new or smaller datasets.

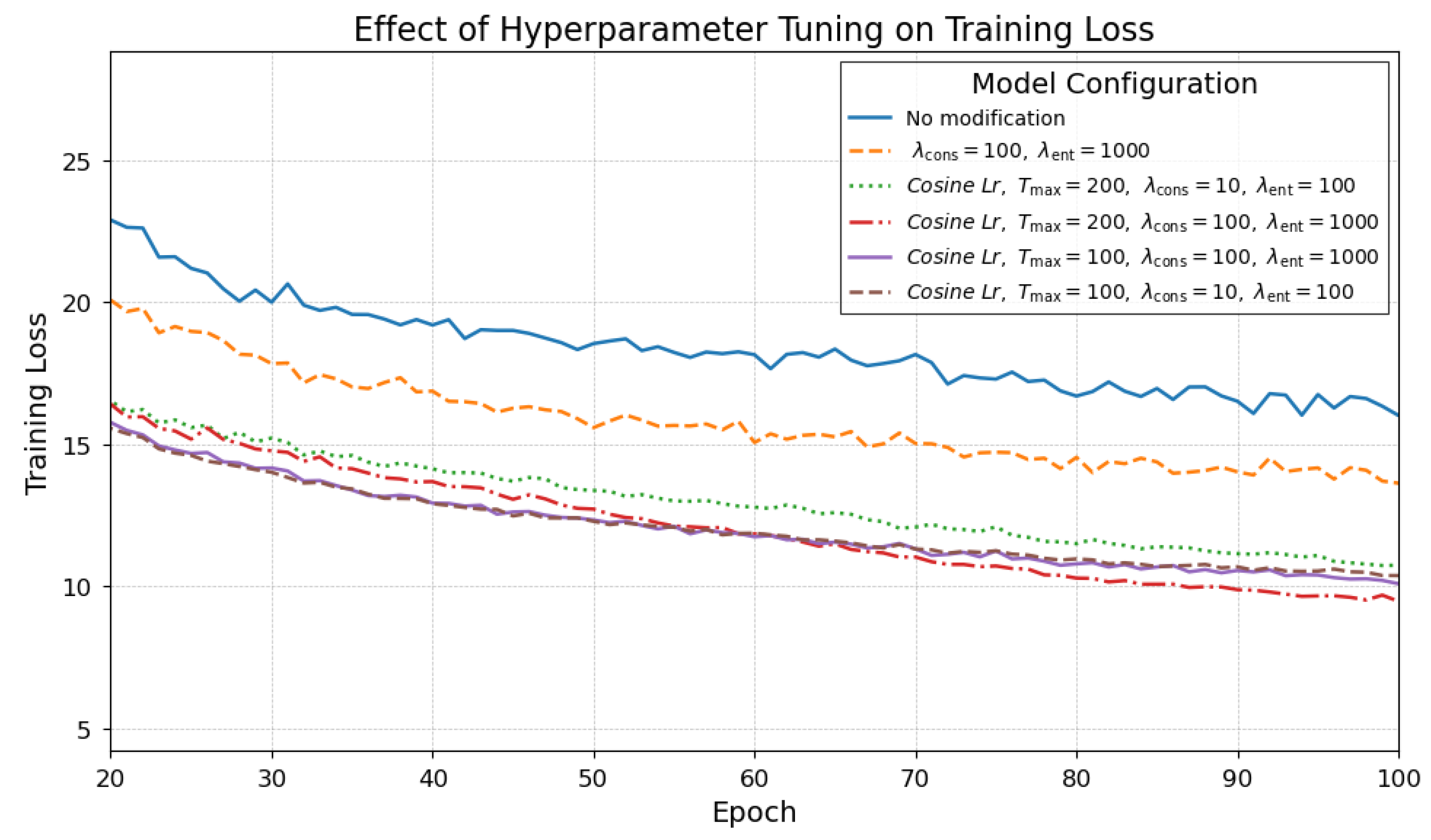

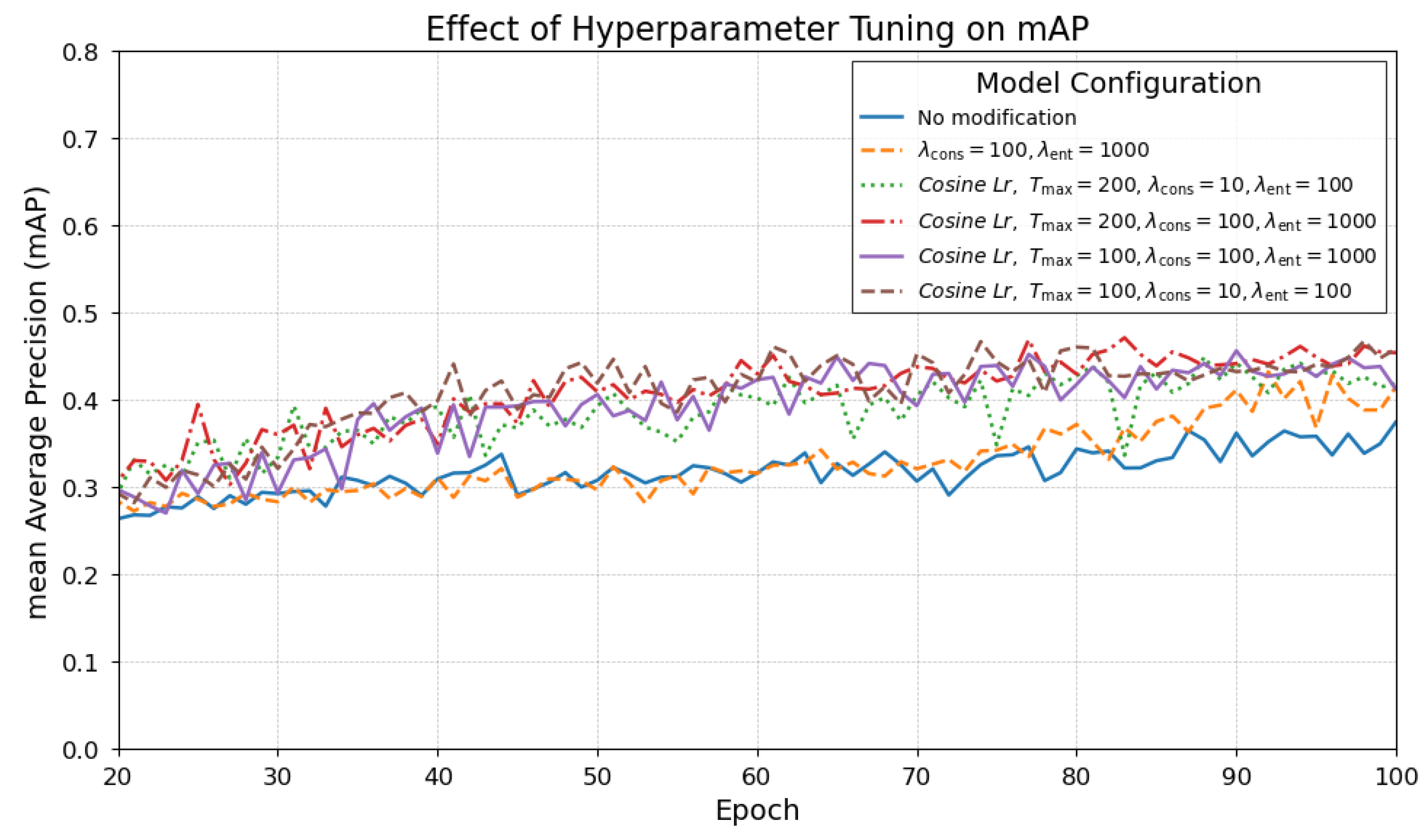

- An Optimized Loss Function and Learning Schedule for Accelerated Distillation: The framework features a three-component loss function that integrates supervised learning with consistency regularization and entropy minimization. These components encourage confident predictions and alignment with the teacher’s outputs, while the cosine learning rate scheduler facilitates smooth convergence.
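As a rough illustration of how the three loss terms and the cosine schedule could fit together, the sketch below combines a supervised term, a consistency term, and an entropy term into one scalar and computes a cosine-annealed learning rate. The weights `lambda_cons` and `lambda_ent`, the squared-error form of the consistency term, the binary-entropy form of the entropy term, and all function names are assumptions made for illustration; they are not taken from the paper.

```python
import math

def binary_entropy(p, eps=1e-12):
    """Shannon entropy (in nats) of a Bernoulli prediction with probability p."""
    p = min(max(p, eps), 1.0 - eps)
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))

def consistency(student_probs, teacher_probs):
    """Mean squared difference between student and teacher probabilities."""
    pairs = list(zip(student_probs, teacher_probs))
    return sum((s - t) ** 2 for s, t in pairs) / len(pairs)

def total_loss(sup_loss, student_probs, teacher_probs,
               lambda_cons=1.0, lambda_ent=0.1):
    """Weighted sum of the three components (weights are illustrative only)."""
    ent = sum(binary_entropy(p) for p in student_probs) / len(student_probs)
    cons = consistency(student_probs, teacher_probs)
    return sup_loss + lambda_cons * cons + lambda_ent * ent

def cosine_lr(step, total_steps, lr_max=1e-4, lr_min=0.0):
    """Cosine annealing from lr_max at step 0 down to lr_min at total_steps."""
    progress = min(step, total_steps) / total_steps
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))
```

In this toy form, the entropy term is smallest when the student's probabilities are close to 0 or 1, and the consistency term is smallest when they match the teacher's, which mirrors the two behaviors described above.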

2. Materials and Methods

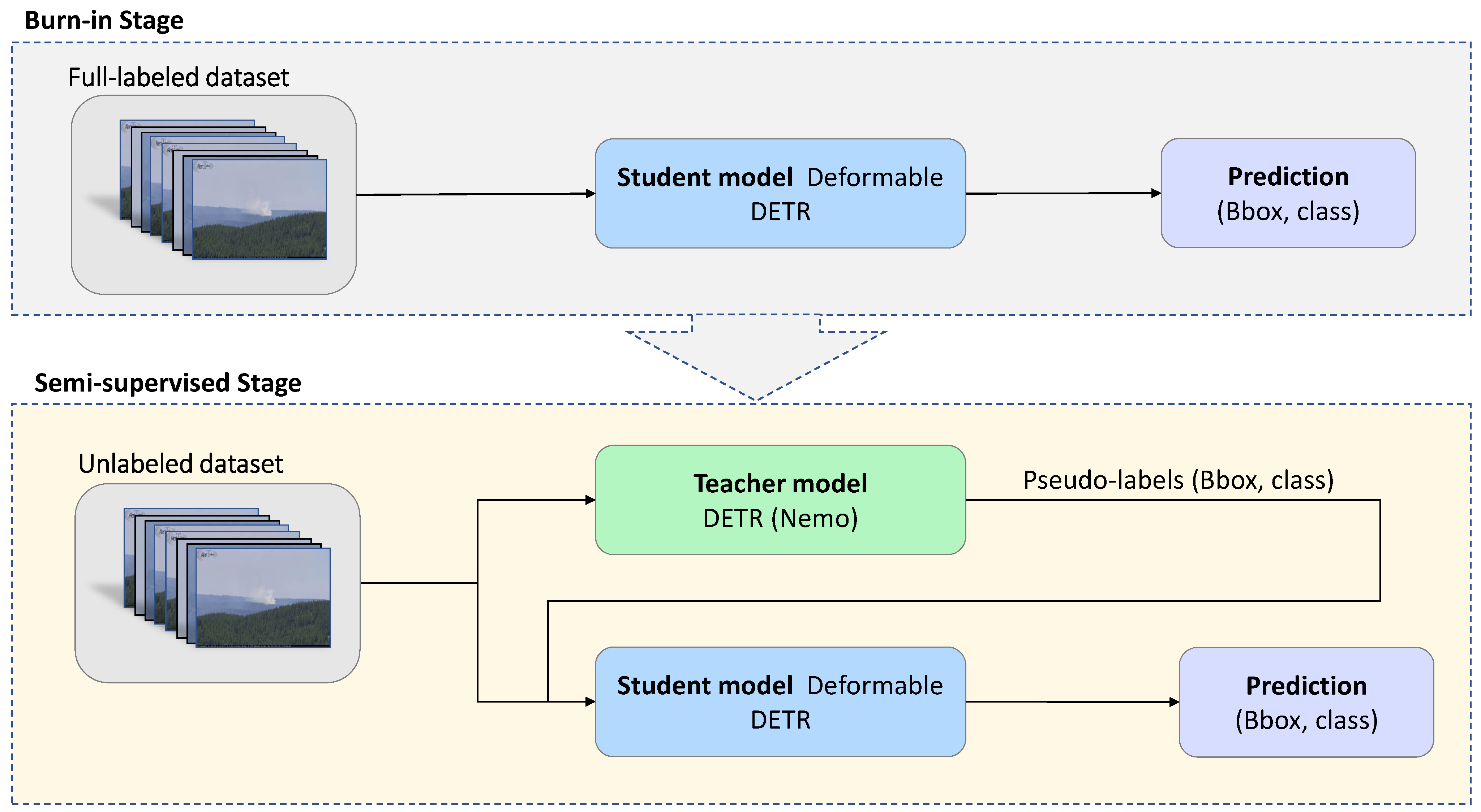

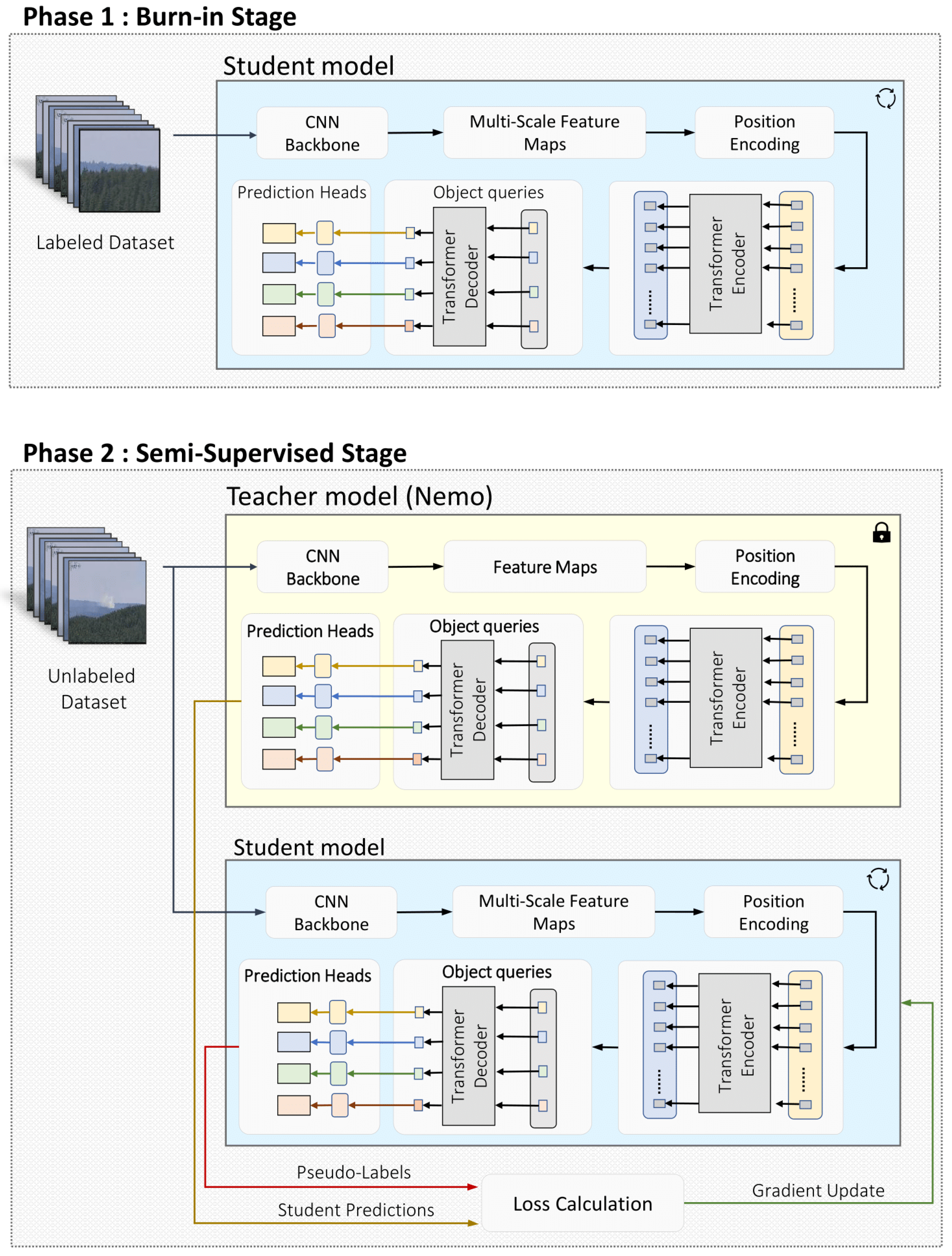

2.1. Teacher–Student Architecture

2.1.1. Teacher Model

2.1.2. Student Model

2.2. Loss Function Design

2.2.1. Supervised Loss

2.2.2. Unsupervised Consistency Loss

2.2.3. Entropy Minimization

2.3. Two-Stage Training Strategy

- The frozen teacher network produces predictions that serve as pseudo-labels.

- The student network processes the same input and generates its own predictions.

- The consistency loss and the entropy loss are computed by comparing the two outputs.

- Gradients are applied only to update the student network parameters.
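The four steps above can be sketched with a deliberately tiny stand-in for the detectors. In this sketch each "model" is a one-feature logistic unit, the consistency term is a squared difference, and the entropy term is the binary entropy of the student's output; the parameter values, learning rate, and `lambda_ent` are hypothetical and chosen only so the example runs. It is meant to show the data flow (frozen teacher, trainable student), not the paper's actual architecture.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(params, x):
    """Toy one-feature 'detector': returns a smoke probability for input x."""
    w, b = params
    return sigmoid(w * x + b)

def distillation_step(student, teacher, batch, lr=0.5, lambda_ent=0.01):
    """One student update on unlabeled inputs; the teacher is only read."""
    w, b = student
    grad_w = grad_b = 0.0
    for x in batch:
        p_t = predict(teacher, x)      # pseudo-label from the frozen teacher
        z = w * x + b
        p_s = sigmoid(z)               # student prediction on the same input
        dp_dz = p_s * (1.0 - p_s)
        # Gradient w.r.t. z of (p_s - p_t)^2 + lambda_ent * H(p_s);
        # for a sigmoid output, dH/dz = -p_s * (1 - p_s) * z.
        dloss_dz = 2.0 * (p_s - p_t) * dp_dz - lambda_ent * dp_dz * z
        grad_w += dloss_dz * x / len(batch)
        grad_b += dloss_dz / len(batch)
    # Only the student's parameters change; the teacher tuple is untouched.
    return (w - lr * grad_w, b - lr * grad_b)

def mean_gap(student, teacher, batch):
    """Average absolute difference between student and teacher outputs."""
    return sum(abs(predict(student, x) - predict(teacher, x))
               for x in batch) / len(batch)

teacher = (2.0, -1.0)   # stands in for the pre-trained expert (hypothetical)
student = (0.0, 0.0)    # stands in for the burn-in student (hypothetical)
batch = [-2.0, -1.0, 0.0, 1.0, 2.0]

gap_before = mean_gap(student, teacher, batch)
for _ in range(200):
    student = distillation_step(student, teacher, batch)
gap_after = mean_gap(student, teacher, batch)
```

After the loop, `gap_after` is smaller than `gap_before`, while `teacher` still holds its original values, which is the property the static-teacher design relies on.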

3. Implementation Details

3.1. Dataset

- Stage 1 (Burn-In Training): In the first phase, the student model is trained on the Nemo training set, which consists of 2400 fully labeled wildfire images collected before 2020 from the ALERTWildfire network. This dataset is identical to the one used to train the original Nemo teacher model [24]; it provides full supervision and initializes the student.

- Stage 2 (Semi-Supervised Training): The student is further trained on a distinct dataset of approximately 1300 images collected from HPWREN video archives [57], spanning wildfire events between 2020 and 2023. These images were not used to train the teacher model and are temporally and contextually disjoint from the original Nemo splits. Because the teacher never generates pseudo-labels for data it has already seen, this separation guards against circular supervision and domain overfitting. The dataset includes full ground-truth annotations created with the manual labeling methodology of the Nemo study [24], in which experts review terrestrial camera footage to identify and annotate early-stage smoke under operational conditions. This process accounts for visual ambiguity, environmental variability, and the diffuse appearance of smoke plumes. To enable controlled experiments across levels of supervision, we simulate weak annotation regimes by programmatically generating alternative label formats: noisy bounding boxes, point-level labels, and unlabeled subsets. This procedure follows the dataset variation protocol of Omni-DETR [51], which provides a systematic framework for evaluating model robustness under imperfect labeling. The four regimes are:

- No Annotation: 80% of the dataset lacks any form of annotation.

- Noisy BBox: 80% of the samples are annotated with algorithmically generated bounding boxes that may contain noise.

- Point: 80% of the data is weakly annotated using a single point indicating the presence of smoke.

- Point and Noisy BBox: 40% of the data is annotated with point-level labels and 40% with noisy bounding boxes.
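One way such regimes could be generated from fully annotated images is sketched below. The jitter scheme (`scale=0.1`), the uniform point sampling, the `(x, y, w, h)` box format, the sample dictionary layout, and the assumption that the remaining 20% of images keep their full ground-truth boxes are all illustrative choices, not details confirmed by the paper or by the Omni-DETR protocol.

```python
import random

# Share of images per weak label type in each regime; the remainder is
# assumed to keep its full ground-truth boxes.
REGIMES = {
    "No Annotation": {"none": 0.8},
    "Noisy BBox": {"noisy_box": 0.8},
    "Point": {"point": 0.8},
    "Point and Noisy BBox": {"point": 0.4, "noisy_box": 0.4},
}

def jitter_box(box, rng, scale=0.1):
    """Shift and rescale an (x, y, w, h) box by up to `scale` of its size."""
    x, y, w, h = box
    dx = rng.uniform(-scale, scale) * w
    dy = rng.uniform(-scale, scale) * h
    new_w = w * (1.0 + rng.uniform(-scale, scale))
    new_h = h * (1.0 + rng.uniform(-scale, scale))
    return (x + dx, y + dy, new_w, new_h)

def point_in_box(box, rng):
    """Sample one (x, y) point uniformly inside the box."""
    x, y, w, h = box
    return (x + rng.uniform(0.0, w), y + rng.uniform(0.0, h))

def simulate_regime(samples, regime, seed=0):
    """Return new records whose labels are degraded according to `regime`."""
    rng = random.Random(seed)
    order = list(range(len(samples)))
    rng.shuffle(order)
    kinds = ["full"] * len(samples)
    start = 0
    for kind, share in REGIMES[regime].items():
        count = round(share * len(samples))
        for idx in order[start:start + count]:
            kinds[idx] = kind
        start += count
    result = []
    for sample, kind in zip(samples, kinds):
        if kind == "none":
            labels = []                                   # unlabeled image
        elif kind == "noisy_box":
            labels = [jitter_box(b, rng) for b in sample["boxes"]]
        elif kind == "point":
            labels = [point_in_box(b, rng) for b in sample["boxes"]]
        else:
            labels = list(sample["boxes"])                # full annotation
        result.append({"id": sample["id"], "label_type": kind, "labels": labels})
    return result
```

For example, calling `simulate_regime(samples, "Point and Noisy BBox")` on 100 annotated samples yields 40 point-labeled, 40 noisy-box, and 20 fully labeled records, and leaves the input list unchanged.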

3.2. Experimental Settings

3.3. Model Configuration

3.4. Hardware

3.5. Learning Rate

3.6. Hyperparameter Tuning

4. Results

4.1. Wildfire Detection Performance Evaluation

4.2. Early Incipient Time-Series

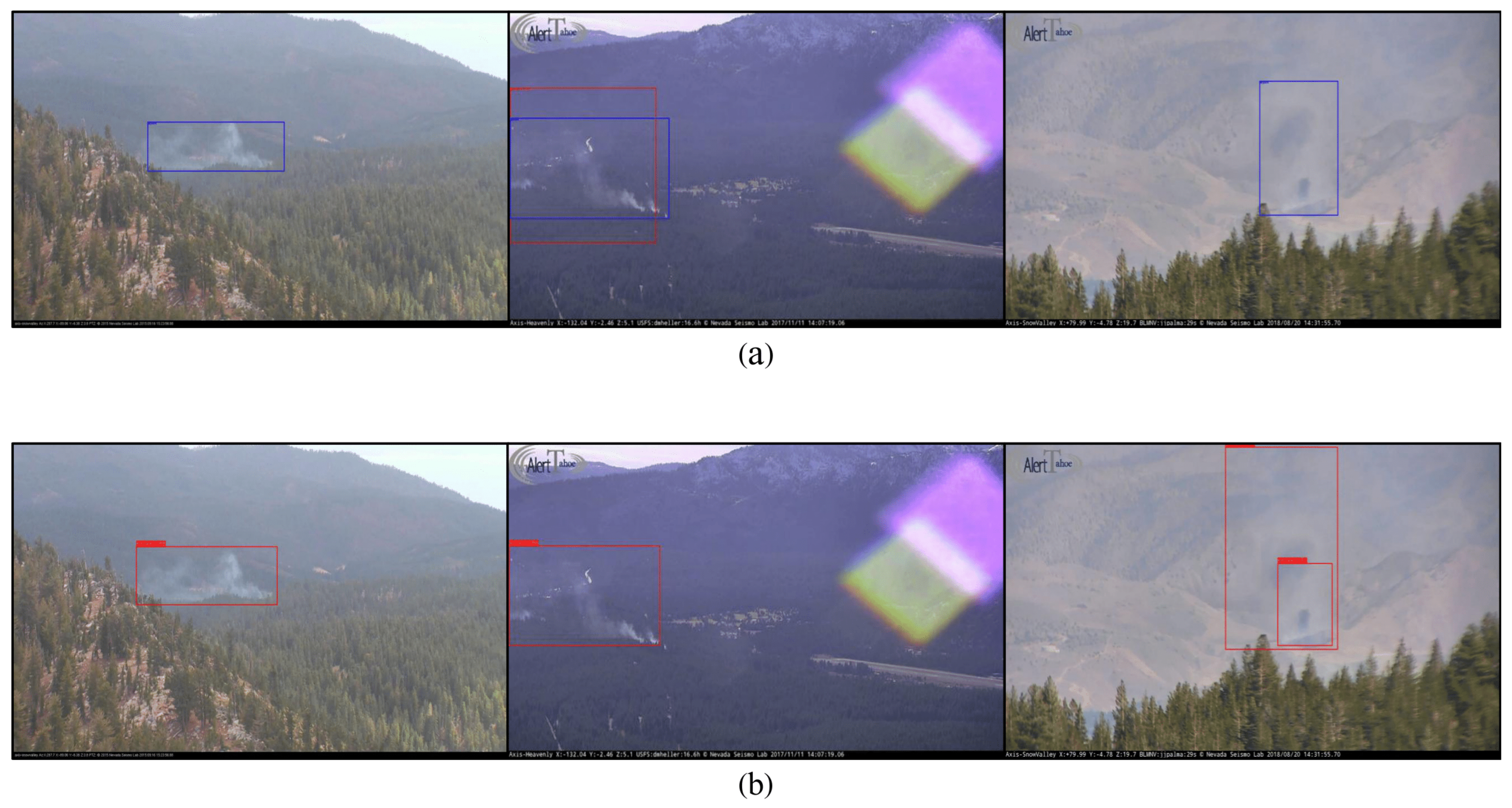

4.3. Detection Visualization

5. Discussion

Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- National Interagency Fire Center. Wildland Fire Summary and Statistics Annual Report 2024. 2025. Available online: https://www.nifc.gov/sites/default/files/NICC/2-Predictive%20Services/Intelligence/Annual%20Reports/2024/annual_report_2024.pdf (accessed on 15 May 2025).

- Li, Y.; Zhang, T.; Ding, Y.; Wadhwani, R.; Huang, X. Review and perspectives of digital twin systems for wildland fire management. J. For. Res. 2025, 36, 1–24.

- Negash, N.M.; Sun, L.; Fan, C.; Shi, D.; Wang, F. Review of Wildfire Detection, Fighting, and Technologies: Future Prospects and Insights. In Proceedings of the AIAA Aviation Forum and ASCEND 2025, Las Vegas, NV, USA, 21–25 July 2025; p. 3469.

- Özel, B.; Alam, M.S.; Khan, M.U. Review of modern forest fire detection techniques: Innovations in image processing and deep learning. Information 2024, 15, 538.

- Balsak, A.; San, B.T. Evaluation of the effect of spatial and temporal resolutions for digital change detection: Case of forest fire. Nat. Hazards 2023, 119, 1799–1818.

- Singh, H.; Ang, L.M.; Srivastava, S.K. Active wildfire detection via satellite imagery and machine learning: An empirical investigation of Australian wildfires. Nat. Hazards 2025, 121, 9777–9800.

- Zhao, Y.; Ban, Y. GOES-R time series for early detection of wildfires with deep GRU-network. Remote Sens. 2022, 14, 4347.

- Boroujeni, S.P.H.; Razi, A.; Khoshdel, S.; Afghah, F.; Coen, J.L.; O’Neill, L.; Fule, P.; Watts, A.; Kokolakis, N.M.T.; Vamvoudakis, K.G. A comprehensive survey of research towards AI-enabled unmanned aerial systems in pre-, active-, and post-wildfire management. Inf. Fusion 2024, 108, 102369.

- Abdusalomov, A.; Umirzakova, S.; Bakhtiyor Shukhratovich, M.; Mukhiddinov, M.; Kakhorov, A.; Buriboev, A.; Jeon, H.S. Drone-Based Wildfire Detection with Multi-Sensor Integration. Remote Sens. 2024, 16, 4651.

- Saltiel, T.M.; Larson, K.B.; Rahman, A.; Coleman, A. Airborne LiDAR to Improve Canopy Fuels Mapping for Wildfire Modeling; Technical Report; Pacific Northwest National Laboratory (PNNL): Richland, WA, USA, 2024.

- Allison, R.S.; Johnston, J.M.; Craig, G.; Jennings, S. Airborne optical and thermal remote sensing for wildfire detection and monitoring. Sensors 2016, 16, 1310.

- Shah, S. Preliminary Wildfire Detection Using State-of-the-art PTZ (Pan, Tilt, Zoom) Camera Technology and Convolutional Neural Networks. arXiv 2021, arXiv:2109.05083.

- Tzoumas, G.; Pitonakova, L.; Salinas, L.; Scales, C.; Richardson, T.; Hauert, S. Wildfire detection in large-scale environments using force-based control for swarms of UAVs. Swarm Intell. 2023, 17, 89–115.

- Govil, K.; Welch, M.L.; Ball, J.T.; Pennypacker, C.R. Preliminary results from a wildfire detection system using deep learning on remote camera images. Remote Sens. 2020, 12, 166.

- Honary, R.; Shelton, J.; Kavehpour, P. A Review of Technologies for the Early Detection of Wildfires. ASME Open J. Eng. 2025, 4, 040803.

- Pimpalkar, S. Wild-Fire and Smoke Detection for Environmental Disaster Management using Deep Learning: Comparative Analysis using YOLO variants. In Proceedings of the 2025 International Conference on Emerging Smart Computing and Informatics (ESCI), Pune, India, 5–7 March 2025; pp. 1–6.

- Wang, Z.; Wu, L.; Li, T.; Shi, P. A smoke detection model based on improved YOLOv5. Mathematics 2022, 10, 1190.

- Li, J.; Xu, R.; Liu, Y. An improved forest fire and smoke detection model based on yolov5. Forests 2023, 14, 833.

- Saydirasulovich, S.N.; Mukhiddinov, M.; Djuraev, O.; Abdusalomov, A.; Cho, Y.I. An improved wildfire smoke detection based on YOLOv8 and UAV images. Sensors 2023, 23, 8374.

- Ide, R.; Yang, L. Adversarial Robustness for Deep Learning-Based Wildfire Prediction Models. Fire 2025, 8, 50.

- Sun, B.; Cheng, X. Smoke Detection Transformer: An Improved Real-Time Detection Transformer Smoke Detection Model for Early Fire Warning. Fire 2024, 7, 488.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229.

- Yazdi, A.; Qin, H.; Jordan, C.B.; Yang, L.; Yan, F. Nemo: An open-source transformer-supercharged benchmark for fine-grained wildfire smoke detection. Remote Sens. 2022, 14, 3979.

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable detr: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159.

- Chen, Y.; Liu, B.; Yuan, L. PR-Deformable DETR: DETR for remote sensing object detection. IEEE Geosci. Remote Sens. Lett. 2024, 21, 1–5.

- Krawi, O.; Rada, L. Towards Automatic Streetside Building Identification with an Integrated YOLO Model for Building Detection and a Vision Transformer for Identification. IEEE Access 2025, 13, 52901–52911.

- Choi, S.; Song, Y.; Jung, H. Study on Improving Detection Performance of Wildfire and Non-Fire Events Early Using Swin Transformer. IEEE Access 2025, 13, 46824–46837.

- Li, R.; Hu, Y.; Li, L.; Guan, R.; Yang, R.; Zhan, J.; Cai, W.; Wang, Y.; Xu, H.; Li, L. SMWE-GFPNNet: A high-precision and robust method for forest fire smoke detection. Knowl.-Based Syst. 2024, 289, 111528.

- Chaturvedi, S.; Shubham Arun, C.; Singh Thakur, P.; Khanna, P.; Ojha, A. Ultra-lightweight convolution-transformer network for early fire smoke detection. Fire Ecol. 2024, 20, 83.

- Tang, T.; Jayaputera, G.T.; Sinnott, R.O. A Performance Comparison of Convolutional Neural Networks and Transformer-Based Models for Classification of the Spread of Bushfires. In Proceedings of the 2024 IEEE 20th International Conference on e-Science (e-Science), Osaka, Japan, 16–20 September 2024; pp. 1–9.

- Dietterich, T.G.; Lathrop, R.H.; Lozano-Pérez, T. Solving the multiple instance problem with axis-parallel rectangles. Artif. Intell. 1997, 89, 31–71.

- Bilen, H.; Vedaldi, A. Weakly supervised deep detection networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2846–2854.

- Tang, P.; Wang, X.; Bai, X.; Liu, W. Multiple instance detection network with online instance classifier refinement. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2843–2851.

- Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 618–626.

- Xie, J.; Yu, F.; Wang, H.; Zheng, H. Class activation map-based data augmentation for satellite smoke scene detection. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Zhao, L.; Liu, J.; Peters, S.; Li, J.; Mueller, N.; Oliver, S. Learning class-specific spectral patterns to improve deep learning-based scene-level fire smoke detection from multi-spectral satellite imagery. Remote Sens. Appl. Soc. Environ. 2024, 34, 101152.

- Pan, J.; Ou, X.; Xu, L. A collaborative region detection and grading framework for forest fire smoke using weakly supervised fine segmentation and lightweight faster-RCNN. Forests 2021, 12, 768.

- Liu, L.; Yu, D.; Zhang, X.; Xu, H.; Li, J.; Zhou, L.; Wang, B. A Semi-Supervised Attention-Temporal Ensembling Method for Ground Penetrating Radar Target Recognition. Sensors 2025, 25, 3138.

- Tarvainen, A.; Valpola, H. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. In Advances in Neural Information Processing Systems (NeurIPS 30); Curran Associates, Inc.: Red Hook, NY, USA, 2017; pp. 1195–1204.

- Berthelot, D.; Carlini, N.; Goodfellow, I.; Papernot, N.; Oliver, A.; Raffel, C.A. Mixmatch: A holistic approach to semi-supervised learning. In Advances in Neural Information Processing Systems (NeurIPS 32); Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2019; pp. 5050–5060.

- Sohn, K.; Berthelot, D.; Carlini, N.; Zhang, Z.; Zhang, H.; Raffel, C.A.; Cubuk, E.D.; Kurakin, A.; Li, C.L. Fixmatch: Simplifying semi-supervised learning with consistency and confidence. Adv. Neural Inf. Process. Syst. 2020, 33, 596–608.

- Wang, C.; Grau, A.; Guerra, E.; Shen, Z.; Hu, J.; Fan, H. Semi-supervised wildfire smoke detection based on smoke-aware consistency. Front. Plant Sci. 2022, 13, 980425.

- Xie, Q.; Luong, M.T.; Hovy, E.; Le, Q.V. Self-training with noisy student improves imagenet classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10687–10698.

- Sohn, K.; Zhang, Z.; Li, C.L.; Zhang, H.; Lee, C.Y.; Pfister, T. A simple semi-supervised learning framework for object detection. arXiv 2020, arXiv:2005.04757.

- Xu, M.; Zhang, Z.; Hu, H.; Wang, J.; Wang, L.; Wei, F.; Bai, X.; Liu, Z. End-to-end semi-supervised object detection with soft teacher. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3060–3069.

- Li, H.; Qi, J.; Li, Y.; Zhang, W. A dual-branch selection method with pseudo-label based self-training for semi-supervised smoke image segmentation. Digit. Signal Process. 2024, 145, 104320.

- Amini, M.R.; Feofanov, V.; Pauletto, L.; Hadjadj, L.; Devijver, E.; Maximov, Y. Self-training: A survey. Neurocomputing 2025, 616, 128904.

- Kage, P.; Rothenberger, J.C.; Andreadis, P.; Diochnos, D.I. A review of pseudo-labeling for computer vision. arXiv 2024, arXiv:2408.07221.

- Wang, P.; Cai, Z.; Yang, H.; Swaminathan, G.; Vasconcelos, N.; Schiele, B.; Soatto, S. Omni-detr: Omni-supervised object detection with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 9367–9376.

- Liu, Y.C.; Ma, C.Y.; He, Z.; Kuo, C.W.; Chen, K.; Zhang, P.; Wu, B.; Kira, Z.; Vajda, P. Unbiased teacher for semi-supervised object detection. arXiv 2021, arXiv:2102.09480.

- Zhou, H.; Ge, Z.; Liu, S.; Mao, W.; Li, Z.; Yu, H.; Sun, J. Dense teacher: Dense pseudo-labels for semi-supervised object detection. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 35–50.

- Kurakin, A.; Raffel, C.; Berthelot, D.; Cubuk, E.D.; Zhang, H.; Sohn, K.; Carlini, N. ReMixMatch: Semi-Supervised Learning with Distribution Alignment and Augmentation Anchoring. arXiv 2020, arXiv:1911.09785.

- ALERTWildfire Camera Network. Available online: https://www.alertwildfire.org/ (accessed on 6 September 2025).

- HPWREN. The HPWREN Fire Ignition Images Library for Neural Network Training. Available online: https://www.hpwren.ucsd.edu/FIgLib/ (accessed on 4 August 2025).

- Omni-Nemo Dataset. Available online: https://nevada.box.com/s/9mvjpononhmq1g8hnc8tj3hlqiasa7pg (accessed on 6 September 2025).

- SayBender/Nemo. Available online: https://github.com/SayBender/Nemo (accessed on 6 September 2025).

- Loshchilov, I.; Hutter, F. Sgdr: Stochastic gradient descent with warm restarts. arXiv 2016, arXiv:1608.03983.

- Zhang, S.; Gao, H.; Zhang, T.; Zhou, Y.; Wu, Z. Alleviating robust overfitting of adversarial training with consistency regularization. arXiv 2022, arXiv:2205.11744.

- Ramos, L.T.; Casas, E.; Romero, C.; Rivas-Echeverría, F.; Bendek, E. A study of yolo architectures for wildfire and smoke detection in ground and aerial imagery. Results Eng. 2025, 26, 104869.

- Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLOv8. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 4 August 2025).

- LaBonte, T.; Song, Y.; Wang, X.; Vineet, V.; Joshi, N. Scaling novel object detection with weakly supervised detection transformers. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 85–96.

- Liu, C.; Zhang, W.; Lin, X.; Zhang, W.; Tan, X.; Han, J.; Li, X.; Ding, E.; Wang, J. Ambiguity-resistant semi-supervised learning for dense object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 15579–15588.

| Category | Training Process | Number of Images | Origin |
|---|---|---|---|
| Labeled dataset | Burn-in | 2400 | Nemo (Train) [24] |
| Unlabeled dataset | Semi-supervised | 1300 | Generated in this study [57] |
| Validation dataset | Evaluation | 260 | Nemo (Test) [24] |

| Metric | Nemo-DETR | FRCNN | YOLO-v11 | YOLO-v8 | WS-DETR | Omni-DETR | Omni-Nemo (No Annotation) | Omni-Nemo (Noisy BBox) | Omni-Nemo (Point) | Omni-Nemo (Point and Noisy BBox) |
|---|---|---|---|---|---|---|---|---|---|---|
| mAP | 40.6 | 29.3 | 26.8 | 39.6 | 12.3 | 48.0 | 64.1 | 66.4 | 66.5 | 65.9 |
| AP50 | 77.2 | 68.4 | 43.7 | 73.5 | 20.1 | 82.9 | 80.2 | 81.0 | 82.6 | 81.8 |
| APS | 54.4 | 27.2 | 8.2 | 35.6 | 0.0 | 40.1 | 55.5 | 51.6 | 58.3 | 57.2 |
| APM | 69.4 | 64.4 | 17.3 | 31.1 | 3.7 | 47.2 | 71.8 | 73.9 | 72.1 | 72.5 |
| APL | 80.7 | 72.1 | 25.1 | 42.0 | 6.9 | 60.8 | 68.5 | 69.4 | 67.2 | 67.9 |
| AR | 88.6 | 77.2 | 35.2 | 57.7 | 7.1 | 59.1 | 68.4 | 69.7 | 73.9 | 70.6 |
| ARS | 66.7 | 44.4 | 7.8 | 28.9 | 0.0 | 42.3 | 67.6 | 66.8 | 69.1 | 68.8 |
| ARM | 83.7 | 75.5 | 30.1 | 50.2 | 5.6 | 64.7 | 75.4 | 77.8 | 76.1 | 77.3 |
| ARL | 91.0 | 79.3 | 32.3 | 61.5 | 9.8 | 70.4 | 73.4 | 76.7 | 75.0 | 76.5 |

| Fire Event (HPWREN Archive [1]) | FRCNN (min) | Nemo-DETR (min) | YOLO-v8 (min) | Omni-DETR (min) | Omni-Nemo, No Annotation (min) | Omni-Nemo, Point (min) | Omni-Nemo, Noisy BBox (min) |
|---|---|---|---|---|---|---|---|
| bravo-e-mobo-c__2019-08-13 | 5 | 2 | 3 | 2 | 2 | 2 | 2 |
| bh-w-mobo-c__2019-06-10 | 9 | 4 | 5 | 4 | 3 | 3 | 3 |
| bl-n-mobo-c__2019-08-29 | 4 | 3 | 5 | 4 | 3 | 3 | 4 |
| bl-s-mobo-c__2019-07-16 | 8 | 3 | 5 | 4 | 3 | 3 | 3 |
| lp-n-mobo-c__2019-07-17 | 2 | 1 | 3 | 2 | 2 | 1 | 2 |
| ml-w-mobo-c__2019-09-24 | 4 | 3 | 4 | 3 | 3 | 2 | 3 |
| ml-w-mobo-c__2019-10-06 | 7 | 4 | 5 | 3 | 3 | 3 | 3 |
| om-e-mobo-c__2019-07-12 | 2 | 5 | 7 | 6 | 6 | 6 | 7 |
| pi-s-mobo-c__2019-08-14 | 5 | 3 | 5 | 3 | 4 | 3 | 4 |
| rm-w-mobo-c__2019-10-03 | 2 | 2 | 3 | 2 | 2 | 2 | 2 |
| rm-w-mobo-c__2019-10-03 | 2 | 1 | 2 | 1 | 1 | 1 | 2 |
| smer-tcs8-mobo-c__2019-08-29 | 3 | 2 | 3 | 2 | 2 | 2 | 3 |
| wc-e-mobo-c__2019-09-25 | 7 | 5 | 7 | 5 | 4 | 4 | 5 |
| wc-s-mobo-c__2019-09-24 | 10 | 8 | 10 | 8 | 7 | 7 | 7 |
| Mean ± sd (Events 1–14) | 5.0 ± 2.8 | 3.3 ± 1.9 | 4.8 ± 2.0 | 3.5 ± 1.8 | 3.2 ± 1.6 | 3.0 ± 1.7 | 3.6 ± 1.7 |
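The summary row of the table above can be recomputed from the per-event delays. The short check below does this for three of the columns, under the assumption that "sd" means the sample standard deviation (n − 1 denominator); with that assumption the rounded values match the reported row for these columns. The dictionary keys are labels chosen for this sketch.

```python
from statistics import mean, stdev

# Detection delays in minutes, copied column-wise from the table above.
delays = {
    "FRCNN": [5, 9, 4, 8, 2, 4, 7, 2, 5, 2, 2, 3, 7, 10],
    "Nemo-DETR": [2, 4, 3, 3, 1, 3, 4, 5, 3, 2, 1, 2, 5, 8],
    "Omni-Nemo (Point)": [2, 3, 3, 3, 1, 2, 3, 6, 3, 2, 1, 2, 4, 7],
}

# stdev() uses the n - 1 (sample) denominator.
summary = {
    name: f"{mean(values):.1f} ± {stdev(values):.1f}"
    for name, values in delays.items()
}

for name, text in summary.items():
    print(f"{name}: {text} min")
```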

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Samavat, T.; Yazdi, A.; Yan, F.; Yang, L. Early-Stage Wildfire Detection: A Weakly Supervised Transformer-Based Approach. Fire 2025, 8, 413. https://doi.org/10.3390/fire8110413
