Abstract

Due to its wide monitoring range and low cost, visual-based fire detection technology is commonly used for fire detection in open spaces. However, traditional fire detection algorithms are limited in accuracy and speed, which makes real-time fire detection challenging. They also have poor anti-interference ability against fire-like objects, such as emissions from factory chimneys and clouds. In this study, we developed a fire detection approach based on an improved YOLOv5 algorithm, together with a fire detection dataset that includes fire-like objects. We added three Convolutional Block Attention Modules (CBAMs) to the head network of YOLOv5 to improve its feature extraction ability. Meanwhile, we replaced the original C3 module with the C2f module to capture rich gradient flow information. Our experimental results show that the proposed algorithm achieved a mAP@50 of 82.36% for fire detection. In addition, we conducted a comparison test between datasets with and without labeling information for fire-like objects. The results show that labeling information significantly reduced the proportion of fire-like objects incorrectly detected as fire objects. They also show that the CBAM and C2f modules enhanced the network’s ability to extract features that differentiate fire objects from fire-like objects. Hence, our approach has the potential to improve fire detection accuracy, reduce false alarms, and be more cost-effective than traditional fire detection methods. This method can be applied to camera monitoring systems for automatic fire detection with resistance to fire-like objects.

1. Introduction

Fire detection technology is essential to protecting people and property from fire disasters. Current detection technologies focus primarily on building fires, whereas fires in open spaces, such as urban roads, parks, and grasslands, remain challenging to detect automatically. These fires are sudden and destructive and make rescue operations difficult [1]. Therefore, an effective and reliable system for detecting fires at an early stage is crucial. However, such a system must cope with the unpredictable nature of fire, the need for continuous observation, and diverse man-made or environmental interference [2].

Various fire sensors have been developed based on sound, flame, smoke, gas, temperature, etc. These sensors are suitable for indoor fire detection but have significant drawbacks in open spaces, such as high setup and maintenance costs [3]. Additionally, false alarms frequently occur in sensor-based detection systems due to various interfering objects in complex situations [4,5]. Satellite imagery provides an expansive and accurate means of analyzing terrain, and researchers have developed effective algorithms for identifying burned areas from satellite imagery, such as MODIS, Landsat-9, and Sentinel-2 data [6,7,8]. This suggests that satellite imagery also has great potential for near-real-time wildfire detection.

Visual-based technologies provide an economical solution for extensive, all-weather fire surveillance in open-space scenarios by detecting the features of flame and smoke [9,10,11,12]. Researchers have transformed images into alternative color spaces to extract color characteristics and identified flames according to preset thresholds [13,14]. Edges, textures, and other features have also been used for fire detection [15,16,17,18,19]. Detection accuracy and computation speed have been further improved using machine learning algorithms, such as Decision Trees, Bayesian networks, and Support Vector Machines [20,21]. Relying on manually selected features, these methods have achieved good detection results but struggle with nuisance alarms caused by interference factors in practical applications, such as lighting, clouds, and chimney emissions.

Compared with traditional image-based fire detection algorithms, deep learning technologies offer a novel approach to visual-based fire detection. They have demonstrated outstanding performance in automatic feature extraction, together with high accuracy, speed, reliable operation, and cost-effectiveness [22,23,24,25,26]. Frizzi et al. classified images as fire, smoke, and no fire using a six-layer convolutional neural network (CNN) [27]. Other research suggests that increasing CNN depth can enhance fire detection precision. Kinaeva et al. utilized the Faster Region-based Convolutional Neural Network (Faster R-CNN) algorithm to identify flames and smoke in images captured by unmanned aerial vehicles. To improve alarm accuracy, the sequential characteristics of fire propagation in videos have been extracted by combining region-based CNNs (R-CNNs) with time-series networks such as Long Short-Term Memory (LSTM) [28,29]. Nonetheless, these algorithms often demand significant computational resources.

CNNs have demonstrated remarkable object detection performance. Various CNN-based algorithms, such as the Single Shot Multibox Detector (SSD) and MobileNetV2, are used to monitor fire in images [30,31]. As a state-of-the-art family of object detection algorithms, the You Only Look Once (YOLO) series is widely used for fire detection. According to experiments conducted by Alexandrov et al., YOLOv2 achieved impressive results for forest fire detection, with an F1 score of 99.14% and an accuracy of 98.3% [32]. Compared to SSD, Faster R-CNN, and Region-based Fully Convolutional Networks (R-FCNs), the subsequently introduced YOLOv3 exhibits higher accuracy and speed [33,34]. YOLOv4, proposed by Bochkovskiy et al., has been employed to construct real-time flame detection systems with good detection results. YOLOv5 offers several advantages, including fast convergence, high accuracy, strong customization, and the ability to detect small objects [32]. It has been further enhanced for fire detection across diverse scenarios using strategies such as the SPP module, new activation functions, and predictive bounding box suppression [35,36,37].

Most studies focus on true-positive detection accuracy during fires, and their datasets rarely include fire-like images. Consequently, nuisance alarms arise when algorithms misidentify typical fire-like objects, such as chimney emissions and clouds, as fire. Zhang et al. improved the network’s detection accuracy by enriching the dataset with simulated smoke images [38]. Huang et al. developed a lightweight forest fire detection model enhanced by hard negative samples, such as clouds and fog [39]. Therefore, a dataset with diverse interference objects and complex scenes can help improve the detection accuracy of deep-learning-based algorithms.

In this paper, we developed an improved YOLOv5 algorithm for fire detection in open spaces and a new dataset containing both fire objects and typical fire-like objects. The main contributions of this paper are as follows:

- A dataset comprising 5274 fire (smoke and flame) images and 726 typical fire-like object (chimney emissions and clouds) images;

- An improved, interference-resistant YOLOv5 fire detection algorithm. We added the Convolutional Block Attention Module (CBAM) to the end of the Neck module to improve the network’s feature extraction ability. Meanwhile, the C3 modules were replaced with C2f modules, which provide better feature gradient flow;

- Enhanced accuracy: The proposed approach may improve the accuracy of fire detection in open spaces compared to traditional methods. This accuracy may be achieved by leveraging the strengths of deep learning algorithms such as YOLOv5 to perceive and identify fire-specific features, which are challenging to identify using traditional image processing methods;

- Real-time detection: The YOLOv5 algorithm provides high-speed, real-time object detection. Thus, the proposed approach is suitable for open-space scenarios where quick and timely fire detection is crucial;

- Reduced false alarms: Deep learning technology provides a powerful ability to extract the features of both fire and typical fire-like objects. The proposed approach reduces the frequent false alarms that are prevalent with traditional fire detection methods. This can prevent unnecessary emergency responses and reduce the costs related to false alarms;

- Cost-effective: The proposed approach is more economically feasible than traditional fire detection methods because of its compatibility with low-cost cameras and hardware, reducing the need for expensive fire detection systems.

The remainder of this paper is organized as follows: Section 2 discusses the framework and improvement of YOLOv5; Section 3 shows the performance of our improved YOLOv5 algorithm based on the experimental results; Section 4 discusses the adaptability of the YOLOv5 model and our future research ideas for fire detection; and Section 5 concludes with a summary.

2. Materials and Methods

2.1. Anti-Interference Fire Detection Method for Open Spaces

The primary goal of image-based fire detection systems is to enhance a fire alarm’s intelligence and sensitivity. Accurate fire detection involves rapid fire detection and resistance to fire-like objects. Most research focuses on enhancing fire detection accuracy, whereas fire-like objects are typically treated as background, and suppression strategies for nuisance alarms are seldom discussed.

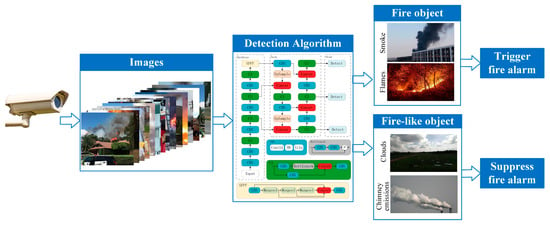

As shown in Figure 1, a camera captures images in various open-space scenarios. These images are then processed by an intelligent detection algorithm that automatically identifies fire objects (flame and smoke) and, at the same time, is trained to detect typical fire-like objects (clouds and chimney emissions). CBAM and C2f modules are used to further enhance the network’s feature extraction capability. Thus, the network can differentiate fire objects from fire-like objects more accurately, reducing nuisance alarms and enhancing the accuracy of fire alarms.

Figure 1.

Proposed scheme of anti-interference fire detection method for open spaces.

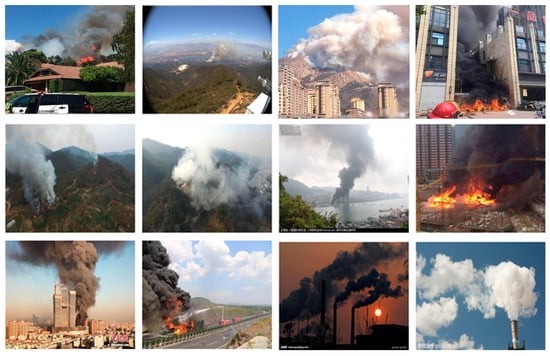

2.2. Dataset Preparation and Pre-Processing

Because of the great risks associated with fire, gathering comprehensive fire images from different scenes through experiments is challenging. Most existing fire detection datasets consist of images of real fires collected from the Internet. However, few public fire datasets include annotations, and fire-like objects are rarely considered in existing datasets. For this paper, over 8000 fire and fire-like images were collected from public datasets [40,41,42,43] and the web. These images were manually inspected to remove highly repetitive or low-quality data. The final dataset contains 6000 images, including 5274 fire images and 726 fire-like images. The fire images cover single and multiple flames and smoke plumes in scenes including buildings, forests, grassland, and complex backgrounds. Clouds and chimney emissions were included as typical fire-like objects. Correspondingly, there are 5293 labels for flames, 5118 labels for smoke, 733 labels for clouds, and 189 labels for chimney emissions. Figure 2 presents part of the dataset.
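The label counts above are strongly imbalanced between fire and fire-like classes. The short Python snippet below uses only the four counts reported in this section (the dictionary keys are our own names) to compute each class's share of all labels.

```python
# Label counts reported for the dataset in Section 2.2.
label_counts = {
    "flame": 5293,
    "smoke": 5118,
    "cloud": 733,
    "chimney_emission": 189,
}

def class_shares(counts):
    """Return each class's share of all labels, in percent."""
    total = sum(counts.values())
    return {name: 100.0 * n / total for name, n in counts.items()}

total_labels = sum(label_counts.values())
shares = class_shares(label_counts)
for name, pct in shares.items():
    print(f"{name:>17}: {label_counts[name]:5d} labels ({pct:.2f}%)")
print("total labels:", total_labels)
```

Chimney emissions account for fewer than 2% of all labels, which is worth keeping in mind when reading the per-class results in Section 3.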

Figure 2.

Representative images of the dataset.

2.3. Network Design

Due to their high accuracy and small model size, the YOLO series algorithms are widely used in various computer vision applications. Within this series, YOLOv5 offers robust accuracy, speed, and small-object detection. YOLOv5n was developed as a lightweight variant to reduce the computational burden; it is nearly 95% smaller than YOLOv4 and is thus suitable for embedded devices. In our experiment, it achieved 79.64% mAP@50 at 153 frames per second (FPS), 16.8% faster than YOLOv5s, at the cost of a slight accuracy reduction of about 0.05 percentage points in mAP@50.

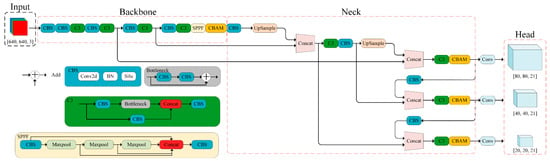

To further enhance network performance, YOLOv5n was improved by introducing the attention mechanism and the C2f module. A CBAM was added at the end of the Neck module to improve the network’s representation power by emphasizing informative features [44], and the C3 modules were replaced with C2f modules to enhance gradient flow information. As shown in Figure 3, the network consists of three main parts: the backbone, which extracts features at different resolutions; the Neck, which fuses these features; and the head, which detects objects. The backbone consists of multiple CBS (Conv + BN + SiLU) modules and C2f modules, followed by one SPPF module. The CBS modules can enhance the feature extraction ability of the C2f module, while the SPPF module improves the feature expression ability of the backbone [45]. In the Neck, features from different resolutions are fused by the path aggregation network (PAN), which consists of CBS modules, C2f modules, and a series of concatenation operations.
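In standard YOLOv5, the head predicts at three scales, on feature maps downsampled by strides 8, 16, and 32, with three anchor boxes per grid cell. The sketch below computes the resulting grid sizes and head output widths, assuming a 640 × 640 input (the value actually used is listed in Table 1 and may differ) and four classes (flame, smoke, cloud, chimney emission). It is illustrative arithmetic, not the authors' code.

```python
# Sketch: output geometry of a YOLOv5-style head with three detection scales.
# ASSUMPTIONS: 640x640 input, strides (8, 16, 32), 3 anchors per cell, 4 classes.

def detection_grids(img_size=640, strides=(8, 16, 32)):
    """Return the (height, width) of the feature map fed to each detection head."""
    grids = []
    for s in strides:
        if img_size % s:
            raise ValueError(f"image size {img_size} is not divisible by stride {s}")
        grids.append((img_size // s, img_size // s))
    return grids

def num_predictions(img_size=640, strides=(8, 16, 32), anchors_per_cell=3):
    """Total number of candidate boxes predicted per image before NMS."""
    return sum(h * w * anchors_per_cell for h, w in detection_grids(img_size, strides))

def head_channels(num_classes, anchors_per_cell=3):
    """Output channels per head: per anchor, 4 box values + 1 objectness + class scores."""
    return anchors_per_cell * (5 + num_classes)

print(detection_grids())   # [(80, 80), (40, 40), (20, 20)]
print(num_predictions())   # 25200
print(head_channels(4))    # 27
```

The high-resolution 80 × 80 map is the one most relevant to small, distant flames, while the coarse 20 × 20 map covers large smoke plumes.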

Figure 3.

Improved network architecture based on YOLOv5n, CBAM, and C2f module. Modules are marked in different colors.

2.3.1. Attention Mechanism Using Convolutional Block Attention Module (CBAM)

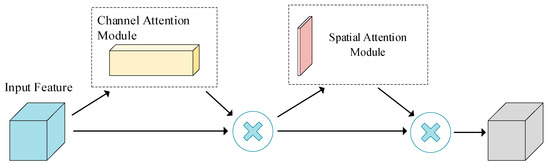

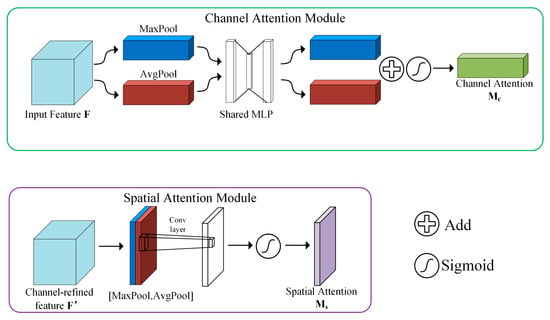

Attention mechanisms are widely used to improve the representation power of networks in various machine learning tasks. They help the network capture correlations within the input data and highlight important features; common forms include channel attention, pixel attention, and multi-order attention [46]. A CBAM is a lightweight module that emphasizes meaningful features along the two principal dimensions, channel and spatial. As shown in Figure 4, it consists of a channel attention module (CAM) and a spatial attention module (SAM).

Figure 4.

Structure of the CBAM.

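A CAM focuses the network on the most informative feature channels, which helps it detect objects more efficiently. As illustrated in Figure 5, the channel attention map $M_c(F) \in \mathbb{R}^{C\times 1\times 1}$ is calculated from the input feature map $F \in \mathbb{R}^{C\times H\times W}$ according to Equation (1):

$$M_c(F) = \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big) = \sigma\big(W_1(W_0(F^{c}_{avg})) + W_1(W_0(F^{c}_{max}))\big) \tag{1}$$

where σ(·) is the sigmoid function; MLP is a shared multilayer perceptron with weights $W_0 \in \mathbb{R}^{C/r\times C}$ and $W_1 \in \mathbb{R}^{C\times C/r}$, where r is the reduction ratio and $W_0$ is followed by a ReLU activation; AvgPool(·) indicates the average pooling operation and outputs $F^{c}_{avg} \in \mathbb{R}^{C\times 1\times 1}$; and MaxPool(·) represents the maximum pooling operation and outputs $F^{c}_{max} \in \mathbb{R}^{C\times 1\times 1}$. Thus, we obtain the channel-refined feature map F′ using Equation (2):

$$F' = M_c(F) \otimes F \tag{2}$$

where ⊗ denotes element-wise multiplication, with the channel attention values broadcast along the spatial dimensions.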
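To make Equations (1) and (2) concrete, here is a dependency-free Python sketch of channel attention on a tiny feature map stored as nested lists. A real implementation would use a tensor library such as PyTorch; the function names and the toy weights below are illustrative assumptions, not the authors' code.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(w, v):
    """Multiply a matrix w (list of rows) by a vector v."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]

def shared_mlp(v, w0, w1):
    """MLP(v) = W1 ReLU(W0 v), with W0 of shape (C/r, C) and W1 of shape (C, C/r)."""
    hidden = [max(0.0, h) for h in matvec(w0, v)]
    return matvec(w1, hidden)

def channel_attention(feat, w0, w1):
    """Channel attention map M_c(F): one weight in (0, 1) per channel.

    feat is a C x H x W feature map stored as nested lists.
    """
    # Squeeze the spatial dimensions with average and max pooling.
    avg = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feat]
    mx = [max(max(row) for row in ch) for ch in feat]
    # The same MLP weights are shared by both pooled descriptors.
    a = shared_mlp(avg, w0, w1)
    m = shared_mlp(mx, w0, w1)
    return [sigmoid(ai + mi) for ai, mi in zip(a, m)]

def apply_channel_attention(feat, w0, w1):
    """F' = M_c(F) (x) F: scale every value of channel c by the c-th weight."""
    mc = channel_attention(feat, w0, w1)
    return [[[mc[c] * x for x in row] for row in ch] for c, ch in enumerate(feat)]

# Toy example: C = 2 channels, reduction ratio r = 2 (hidden size 1), 2 x 2 maps.
feat = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 0.0]]]
w0 = [[1.0, -1.0]]    # shape (C/r, C) = (1, 2); illustrative values
w1 = [[1.0], [0.5]]   # shape (C, C/r) = (2, 1); illustrative values
print(channel_attention(feat, w0, w1))
print(apply_channel_attention(feat, w0, w1))
```

Because both pooled descriptors pass through the same MLP, the parameter count of the CAM depends only on C and r, not on the spatial size of the feature map.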

Figure 5.

Channel and spatial attention modules in CBAM.

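The output of the channel attention operation, F′, is then processed by the SAM according to Equation (3), which enriches the contextual information of the whole picture:

$$M_s(F') = \sigma\big(f^{7\times 7}([\mathrm{AvgPool}(F'); \mathrm{MaxPool}(F')])\big) = \sigma\big(f^{7\times 7}([F'^{s}_{avg}; F'^{s}_{max}])\big) \tag{3}$$

where AvgPool(·) and MaxPool(·) are applied along the channel axis, producing $F'^{s}_{avg}, F'^{s}_{max} \in \mathbb{R}^{1\times H\times W}$; [·;·] denotes concatenation; and $f^{7\times 7}$ represents a convolution operation with a filter size of 7 × 7. Finally, the CBAM outputs the feature map F″:

$$F'' = M_s(F') \otimes F' \tag{4}$$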
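The spatial half of the CBAM can be sketched the same way. This minimal, framework-free version assumes a C × H × W map of nested lists and a 2 × k × k kernel (k = 7 in CBAM); as in deep learning libraries, the "convolution" is computed as a cross-correlation with zero padding. The kernel values and function names are illustrative assumptions.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def spatial_attention(feat, kernel, bias=0.0):
    """Spatial attention map M_s(F'): one weight in (0, 1) per pixel.

    feat   : C x H x W channel-refined feature map F' (nested lists).
    kernel : 2 x k x k weights (k odd); plane 0 acts on the channel-wise
             average map, plane 1 on the channel-wise max map.
    """
    c, h, w = len(feat), len(feat[0]), len(feat[0][0])
    # Pool along the channel axis -> two H x W maps.
    avg = [[sum(feat[k][i][j] for k in range(c)) / c for j in range(w)] for i in range(h)]
    mx = [[max(feat[k][i][j] for k in range(c)) for j in range(w)] for i in range(h)]
    pooled = (avg, mx)
    k = len(kernel[0])
    pad = k // 2  # zero padding keeps the output at H x W
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = bias
            for p in range(2):
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < h and 0 <= x < w:
                            acc += kernel[p][di][dj] * pooled[p][y][x]
            out[i][j] = sigmoid(acc)
    return out

def apply_spatial_attention(feat, kernel, bias=0.0):
    """F'' = M_s(F') (x) F': the same H x W map scales every channel."""
    ms = spatial_attention(feat, kernel, bias)
    return [[[ms[i][j] * x for j, x in enumerate(row)] for i, row in enumerate(ch)]
            for ch in feat]

# Toy example: 2 channels of 3 x 3; only the centre tap of the average plane is set.
feat = [[[1.0] * 3 for _ in range(3)], [[3.0] * 3 for _ in range(3)]]
kernel = [[[0.0] * 7 for _ in range(7)] for _ in range(2)]
kernel[0][3][3] = 1.0
ms = spatial_attention(feat, kernel)
out = apply_spatial_attention(feat, kernel)
print(ms[1][1])
```

Applying the CAM first and the SAM second follows the sequential order described above; the two sketches can be chained by passing the output of the channel step into `apply_spatial_attention`.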

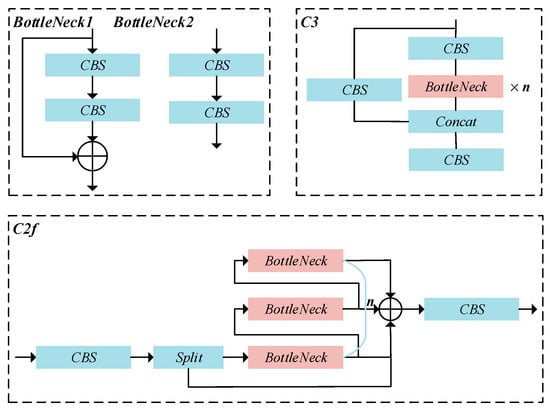

2.3.2. Replacing the C3 Module with the C2f Module

Inspired by the advanced modules introduced in YOLOv8, we adopted the C2f module to replace the original C3 module, capturing richer gradient flow information and enhancing the detection accuracy for small targets. As shown in Figure 6, the C2f module uses multiple parallel branches to enrich gradient flow information and improve network performance.
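The structural difference can be illustrated without a deep learning framework by tracing which feature maps reach each module's final 1 × 1 convolution. This sketch assumes the C3 and C2f layouts of the public Ultralytics YOLOv5/YOLOv8 code as we understand them (expansion ratio e = 0.5); branch names such as `split_a` are our own labels.

```python
def c3_branches(c2, n, e=0.5):
    """Feature maps concatenated before C3's final 1x1 conv (cv3).

    Only two branches reach the concat: the end of the bottleneck chain and a
    parallel 1x1-conv shortcut, each with c_ = int(c2 * e) channels.
    """
    c_ = int(c2 * e)
    chain = "cv1(x)"
    for i in range(1, n + 1):
        chain = f"m{i}({chain})"
    return [chain, "cv2(x)"], [c_, c_]

def c2f_branches(c2, n, e=0.5):
    """Feature maps concatenated before C2f's final 1x1 conv (cv2).

    cv1's output is split into two halves of c = int(c2 * e) channels; each
    bottleneck processes the previous output, and every intermediate output is
    kept for the concat, giving (2 + n) branches.
    """
    c = int(c2 * e)
    names = ["split_a(cv1(x))", "split_b(cv1(x))"]
    for i in range(1, n + 1):
        names.append(f"m{i}({names[-1]})")
    return names, [c] * (2 + n)

for label, fn in (("C3", c3_branches), ("C2f", c2f_branches)):
    names, chans = fn(c2=64, n=3)
    print(f"{label}: {len(names)} branches, {sum(chans)} channels into the final conv")
    for name, ch in zip(names, chans):
        print(f"  {name}  [{ch} ch]")
```

Because C2f keeps every intermediate bottleneck output, gradients can flow back through several paths of different depths, which is the "rich gradient flow" referred to above.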

Figure 6.

Structures of C2f module and C3 module.

2.4. Network Training

We conducted the experiments on a Windows 10 system with an Intel(R) Core(TM) i9-13900KF CPU @ 3.0 GHz and an NVIDIA GeForce RTX 4090 GPU, using PyTorch 1.8.1, Python 3.8.8, and CUDA 11.2. The dataset was randomly divided into training, validation, and test sets at an 8:1:1 ratio. Table 1 shows the training parameters used in the experiment.
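A random 8:1:1 split of the 6000-image dataset can be sketched as follows. The file names and the fixed seed are hypothetical; fixing the seed simply makes the split reproducible.

```python
import random

def split_dataset(items, ratios=(8, 1, 1), seed=0):
    """Randomly split items into train/val/test subsets in the given ratio."""
    items = list(items)
    random.Random(seed).shuffle(items)  # seeded shuffle: reproducible split
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # any rounding remainder goes to test
    return train, val, test

images = [f"img_{i:04d}.jpg" for i in range(6000)]  # hypothetical file names
train, val, test = split_dataset(images)
print(len(train), len(val), len(test))  # 4800 600 600
```

With only 726 fire-like images, a plain random split can leave slightly different fire-like fractions in each subset; a stratified split per image type is a possible alternative.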

Table 1.

Training parameters in the experiment.

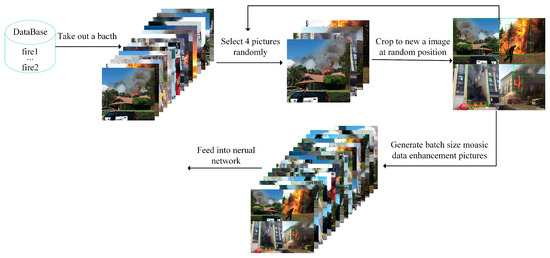

Mosaic data augmentation was adopted to increase the network’s robustness and to reduce GPU memory requirements by lessening the need for a large mini-batch size [47]. In mosaic augmentation, four images are randomly cropped and combined into one newly generated training image, which greatly enriches the detection dataset. Figure 7 illustrates the workflow of mosaic data augmentation.
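The label bookkeeping behind mosaic augmentation can be sketched as below. This is a simplified version under stated assumptions: each input image is already s × s, only bounding boxes are remapped (no pixel data), and the random rescaling and final crop used in the YOLOv5 implementation are omitted. Box format and function names are illustrative.

```python
import random

def mosaic_boxes(boxes_per_image, s=640, rng=None, min_size=2.0):
    """Remap the boxes of four s x s images tiled around a random centre.

    boxes_per_image: four lists of (x1, y1, x2, y2, cls) in pixel coordinates,
    ordered top-left, top-right, bottom-left, bottom-right.
    Returns the mosaic centre and the remapped boxes on a 2s x 2s canvas.
    """
    rng = rng or random.Random()
    # Random mosaic centre, kept away from the canvas border.
    xc = rng.uniform(0.5 * s, 1.5 * s)
    yc = rng.uniform(0.5 * s, 1.5 * s)
    # Where each image's top-left corner lands on the canvas.
    offsets = [(xc - s, yc - s), (xc, yc - s), (xc - s, yc), (xc, yc)]
    # Part of the canvas each image occupies (anything outside is cropped away).
    regions = [(0.0, 0.0, xc, yc), (xc, 0.0, 2.0 * s, yc),
               (0.0, yc, xc, 2.0 * s), (xc, yc, 2.0 * s, 2.0 * s)]
    remapped = []
    for boxes, (dx, dy), (rx1, ry1, rx2, ry2) in zip(boxes_per_image, offsets, regions):
        for x1, y1, x2, y2, cls in boxes:
            # Shift into canvas coordinates, then clip to the visible region.
            nx1 = min(max(x1 + dx, rx1), rx2)
            ny1 = min(max(y1 + dy, ry1), ry2)
            nx2 = min(max(x2 + dx, rx1), rx2)
            ny2 = min(max(y2 + dy, ry1), ry2)
            # Drop boxes that were (almost) entirely cropped away.
            if nx2 - nx1 >= min_size and ny2 - ny1 >= min_size:
                remapped.append((nx1, ny1, nx2, ny2, cls))
    return (xc, yc), remapped

boxes = [
    [(100, 200, 300, 400, 0)],  # e.g. a flame in the top-left image
    [(50, 50, 600, 300, 1)],    # e.g. smoke in the top-right image
    [],                         # an image without labels
    [(10, 10, 120, 90, 2)],     # e.g. a cloud in the bottom-right image
]
centre, mosaic = mosaic_boxes(boxes, s=640, rng=random.Random(0))
print(centre, mosaic)
```

Because the centre is random, objects near image borders are often partially cropped, which exposes the network to truncated flames and smoke it would rarely see otherwise.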

Figure 7.

Mosaic data augmentation. Four pictures were randomly selected from the training set and inserted into a synthetic picture for direct training.

2.5. Evaluation Metrics

To evaluate the algorithm’s performance, we adopted multiple evaluation indices, including precision (P), recall (R), F1 score, mean average precision (mAP), and frames per second (FPS) [44]. Precision is the proportion of correct detections among all positive predictions:

$$P = \frac{TP}{TP + FP} \tag{5}$$

where TP denotes true-positive samples and FP represents false-positive samples. Recall is the proportion of actual positive samples that are correctly detected:

$$R = \frac{TP}{TP + FN} \tag{6}$$

where FN indicates false-negative samples. To evaluate performance comprehensively, the F1 score is used, which is the harmonic mean of precision and recall:

$$F1 = \frac{2 \times P \times R}{P + R} \tag{7}$$
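The precision, recall, and F1 definitions above translate directly into code. The counts in this sketch are hypothetical and serve only to illustrate the arithmetic.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1 score from true-positive, false-positive and
    false-negative counts (returns 0.0 where a denominator would be zero)."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1

# Hypothetical counts, for illustration only.
p, r, f1 = precision_recall_f1(tp=80, fp=20, fn=40)
print(f"P = {p:.3f}, R = {r:.3f}, F1 = {f1:.3f}")  # P = 0.800, R = 0.667, F1 = 0.727
```

As a harmonic mean, F1 is pulled towards the smaller of P and R, so a detector cannot score well by trading many false alarms for high recall.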

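The mean average precision (mAP) is calculated as the mean value of the average precisions of all categories:

$$mAP = \frac{1}{S}\sum_{i=1}^{S} AP_i \tag{8}$$

where S is the number of categories and $AP_i$ is the average precision of category i, i.e., the average over recall levels of the highest precision attained at that recall or above (the area under the interpolated precision-recall curve). mAP@50 denotes the mAP computed with an intersection-over-union (IoU) threshold of 0.5 for a detection to count as a true positive.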
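A minimal sketch of AP with all-point interpolation and of mAP as the mean over categories follows. It assumes the precision and recall values of one category are already available, sorted by ascending recall. Evaluators differ in detail; the YOLOv5 repository, for instance, samples the precision envelope at 101 recall points, so numbers from this sketch may differ slightly.

```python
def average_precision(recalls, precisions):
    """All-point interpolated AP for one category.

    recalls must be in ascending order, paired with their precisions.
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Precision envelope: highest precision at this recall or any higher recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum the rectangle areas under the envelope.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))

def mean_average_precision(ap_per_class):
    """mAP: mean of the per-category AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)

ap = average_precision([0.5, 1.0], [1.0, 0.5])
print(ap)  # 0.75
```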

3. Results

3.1. Object Detection Network Comparison Experiment Results

To select the optimal deep learning model for fire detection, several prevalent object detection models were trained and tested on our dataset. The performance of these algorithms was then evaluated comprehensively in terms of precision (P), recall (R), mAP@50, and detection speed (FPS). As shown in Table 2, the YOLOv5 series achieved good results. Compared to YOLOv5s, the mAP@50 of YOLOv5n was 0.05 percentage points lower, while its detection speed was 16.8% faster at 153 FPS. Considering the balance of accuracy and speed, YOLOv5n was chosen as the baseline detection algorithm in this study.

Table 2.

Comparison of object detection algorithms.

3.2. Contrast Experiment Results after Introducing Attention Mechanism and C2f Module

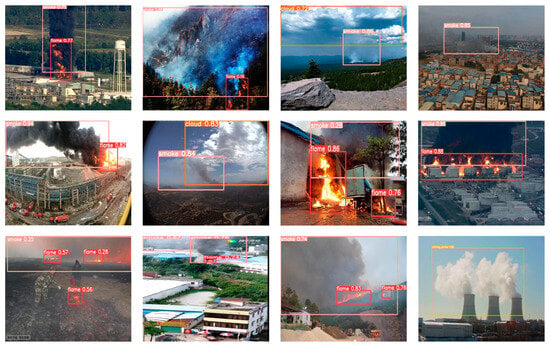

As discussed in Section 2, the constructed model consists of the YOLOv5n baseline, a CBAM, and C2f modules. Ablation experiments were conducted to analyze the contribution of each component. In Table 3, ‘√’ indicates that the corresponding module was used in the network, while ‘×’ indicates that it was not. Introducing the attention mechanism through the CBAM increased mAP@50 by 2.09 percentage points, while replacing the C3 modules with C2f modules increased mAP@50 by 1.35 percentage points. Combining both modules gave the best result, increasing mAP@50 by 2.72 percentage points. Figure 8 shows some detection results of the CBAM-C2f-YOLOv5 algorithm on our dataset.
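The ablation gains can be turned into absolute mAP@50 values. This sketch assumes the gains are in percentage points over the 79.64% YOLOv5n baseline, which is consistent with the 82.36% reported in the Abstract for the combined model.

```python
baseline_map50 = 79.64  # YOLOv5n, mAP@50 in %
gains_pp = {"CBAM": 2.09, "C2f": 1.35, "CBAM + C2f": 2.72}  # percentage points

for variant, gain in gains_pp.items():
    print(f"YOLOv5n + {variant}: {baseline_map50 + gain:.2f}% mAP@50")

# The individual gains are not additive.
print(f"sum of individual gains: {gains_pp['CBAM'] + gains_pp['C2f']:.2f} pp")
```

The two individual gains sum to 3.44 points, more than the 2.72 points of the combined model, so the modules partly overlap in what they contribute.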

Table 3.

Comparative experiments.

Figure 8.

Visualization results of some detection samples.

3.3. Fire-like Data Labeling Experiment

To evaluate the contribution of labeling information for fire-like objects, we retrained the CBAM-C2f-YOLOv5 network on the same dataset with the fire-like labels removed. As shown in Table 4, without this labeling information, 21.17% of chimney emissions and 14.33% of clouds were incorrectly recognized as fire objects, which would cause nuisance fire alarms. This was due to the similarity between fire objects and fire-like objects in the background. With labeling information for fire-like objects, nuisance alarms for chimney emissions and clouds were significantly reduced by 3.70% and 2.45%, respectively. This finding implies that more information about fire-like objects helps improve the network’s detection accuracy. However, many other fire-like objects exist besides chimney emissions and clouds, and no dataset can contain sufficient images of all of them. Nevertheless, our experiments illustrate that labeling information for fire-like objects helps reduce nuisance alarms.
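The text does not state how a fire-like object is counted as "incorrectly detected as fire". One plausible criterion, assumed here and not taken from the paper, is that a flame or smoke prediction overlaps the ground-truth fire-like box with IoU ≥ 0.5. The class names and data layout below are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

FIRE_CLASSES = {"flame", "smoke"}  # hypothetical class names

def false_fire_proportion(images, iou_thr=0.5):
    """Share of ground-truth fire-like boxes (e.g. clouds) hit by a fire prediction.

    images: list of (gt_boxes, predictions) pairs, one per test image, where
    gt_boxes are boxes of ONE fire-like class and predictions are (box, class_name).
    """
    flagged = total = 0
    for gt_boxes, predictions in images:
        for gt in gt_boxes:
            total += 1
            if any(cls in FIRE_CLASSES and iou(gt, box) >= iou_thr
                   for box, cls in predictions):
                flagged += 1
    return flagged / total if total else 0.0

# Hypothetical cloud annotations and detector outputs for two images.
cloud_images = [
    ([(0, 0, 100, 100)], [((5, 5, 100, 100), "smoke")]),  # cloud mistaken for smoke
    ([(0, 0, 100, 100)], [((0, 0, 100, 100), "cloud")]),  # correctly labelled as cloud
]
print(false_fire_proportion(cloud_images))  # 0.5
```

For the model trained without fire-like labels, every prediction is flame or smoke, so any overlapping detection on a cloud or chimney plume counts against it.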

Table 4.

Proportions of fire-like objects incorrectly detected as fire objects.

4. Discussion

With the development of deep learning technology, intelligent object detection algorithms provide an effective solution for image-based fire detection in open-space scenarios. Compared to traditional methods, YOLOv5 offers high accuracy and speed for fire detection. To develop a lightweight algorithm, we compared the performances of YOLOv5n and YOLOv5s. Our experimental results show that the mAP@50 values of YOLOv5n and YOLOv5s were 79.64% and 79.69%, respectively, while their detection speeds were 153 FPS and 135 FPS, respectively. For real-time, fast, and accurate fire detection, YOLOv5n is more suitable than YOLOv5s because it reduces the computational burden.

A fire alarm is triggered by flame and smoke in an image, which vary in size, shape, texture, and color [28]. Meanwhile, complex scenarios contain various fire-like objects. Because fire causes significant damage, every alarm must be verified, including nuisance alarms. Verifying frequent nuisance alarms wastes manpower and reduces the system’s credibility. Therefore, fire detection in open spaces requires more accurate detection algorithms and more training data than other object detection applications. We enriched our dataset with typical fire-like images and corresponding labeling information. Building on the YOLOv5n algorithm, the CBAM and C2f modules enhance the network’s feature extraction ability to distinguish between fire objects and fire-like objects. Thus, fire detection accuracy can be improved through both the dataset and the improved network.

In a follow-up study, we intend to further improve the fire detection system’s accuracy. Firstly, more fire-like objects with labeling information will be added to the dataset. Secondly, infrared thermal images could provide effective information for fire detection, since the temperatures of flames and fire smoke are usually much higher than those of their surroundings. Finally, we will attempt to deploy the algorithm on an embedded system while further compressing the model without reducing its accuracy. With such edge computing, the burden of transmitting image data can be significantly reduced.

5. Conclusions

Visual-based object detection technology using deep learning provides a low-cost and rapid solution for fire detection in open spaces. Since fire objects and fire-like objects share similar features, nuisance alarms are a great challenge for accurate fire detection in complex scenarios. In this paper, we investigated methods of reducing nuisance alarms by adding fire-like objects with corresponding label information to the dataset. We then developed a fire detection algorithm based on YOLOv5n and improved the network using a CBAM module and a C2f module, which effectively enhanced the network’s ability to extract features that distinguish fire from fire-like objects. Our experimental results show that our detection algorithm achieved competitive performance, with mAP@50 increasing by 2.72 percentage points compared to the baseline YOLOv5n model. We also demonstrated that label information for fire-like objects plays an important role in reducing nuisance alarms and can thus be used to improve the network’s detection accuracy. In the future, we will add more fire-like objects to our dataset using visible and infrared images and optimize the network structure of our proposed algorithm for field monitoring to further improve this detection system.

Author Contributions

Conceptualization, F.X.; methodology, F.X.; validation, X.Z.; formal analysis, X.Z.; investigation, W.X.; resources, T.D.; data curation, W.X.; writing—original draft preparation, W.X.; writing—review and editing, T.D.; visualization, W.X.; supervision, T.D.; project administration, F.X.; funding acquisition, F.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China (grant number 2021YFC3001605) and the Fundamental Research Funds for the Central Universities of HUST (grant number 2020kfyXJJS102).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data supporting these findings are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ghorbanzadeh, O.; Blaschke, T.; Gholamnia, K.; Aryal, J. Forest Fire Susceptibility and Risk Mapping Using Social/Infrastructural Vulnerability and Environmental Variables. Fire 2019, 2, 50.

- Talaat, F.M.; ZainEldin, H. An improved fire detection approach based on YOLO-v8 for smart cities. Neural Comput. Appl. 2023, 35, 20939–20954.

- Avazov, K.; Mukhiddinov, M.; Makhmudov, F.; Cho, Y.I. Fire Detection Method in Smart City Environments Using a Deep-Learning-Based Approach. Electronics 2021, 11, 73.

- Zhang, L.; Li, J.M.; Zhang, F.Q. An Efficient Forest Fire Target Detection Model Based on Improved YOLOv5. Fire 2023, 6, 291.

- Kim, S.Y.; Muminov, A. Forest Fire Smoke Detection Based on Deep Learning Approaches and Unmanned Aerial Vehicle Images. Sensors 2023, 23, 5702.

- Pulvirenti, L.; Squicciarino, G.; Fiori, E.; Negro, D.; Gollini, A.; Puca, S. Near real-time generation of a country-level burned area database for Italy from Sentinel-2 data and active fire detections. Remote Sens. Appl. Soc. Environ. 2023, 29, 100925.

- Farhadi, H.; Ebadi, H.; Kiani, A. Badi: A Novel Burned Area Detection Index for SENTINEL-2 Imagery Using Google Earth Engine Platform. ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci. 2023, 10, 179–186.

- Rostami, A.; Shah-Hosseini, R.; Asgari, S.; Zarei, A.; Aghdami-Nia, M.; Homayouni, S. Active Fire Detection from Landsat-8 Imagery Using Deep Multiple Kernel Learning. Remote Sens. 2022, 14, 992.

- Chen, T.H.; Yin, Y.H.; Huang, S.F.; Ye, Y.T. The smoke detection for early fire-alarming system base on video processing. In Proceedings of the IIH-MSP: 2006 International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Pasadena, CA, USA, 18–20 December 2006; pp. 427–430.

- Toreyin, B.U.; Dedeoglu, Y.; Gudukbay, U.; Cetin, A.E. Computer vision based method for real-time fire and flame detection. Pattern Recognit. Lett. 2006, 27, 49–58.

- Yuan, F. A fast accumulative motion orientation model based on integral image for video smoke detection. Pattern Recognit. Lett. 2008, 29, 925–932.

- Dimitropoulos, K.; Barmpoutis, P.; Grammalidis, N. Spatio-Temporal Flame Modeling and Dynamic Texture Analysis for Automatic Video-Based Fire Detection. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 339–351.

- Hu, G.L.; Jiang, X. Early Fire Detection of Large Space Combining Thresholding with Edge Detection Techniques. Appl. Mech. Mater. 2010, 44–47, 2060–2064.

- Singh, Y.K.; Deb, D. Detection of Fire Regions from a Video Image Frames in YCbCr Color Model. Int. J. Recent Technol. Eng. 2019, 8, 6082–6087.

- Wu, Z.S.; Xue, R.; Li, H. Real-Time Video Fire Detection via Modified YOLOv5 Network Model. Fire Technol. 2022, 58, 2377–2403.

- Xu, G.; Zhang, Y.M.; Zhang, Q.X.; Lin, G.H.; Wang, Z.; Jia, Y.; Wang, J.J. Video smoke detection based on deep saliency network. Fire Saf. J. 2019, 105, 277–285.

- Kim, Y.H.; Kim, A.; Jeong, H.Y. RGB Color Model Based the Fire Detection Algorithm in Video Sequences on Wireless Sensor Network. Int. J. Distrib. Sens. Netw. 2014, 10, 923609.

- Gunay, O.; Toreyin, B.U.; Kose, K.; Cetin, A.E. Entropy-Functional-Based Online Adaptive Decision Fusion Framework With Application to Wildfire Detection in Video. IEEE Trans. Image Process. 2012, 21, 2853–2865.

- Celik, T.; Demirel, H. Fire detection in video sequences using a generic color model. Fire Saf. J. 2009, 44, 147–158.

- Qiu, J.; Wang, H.; Shen, W.J.; Zhang, Y.L.; Su, H.Y.; Li, M.S. Quantifying Forest Fire and Post-Fire Vegetation Recovery in the Daxin’anling Area of Northeastern China Using Landsat Time-Series Data and Machine Learning. Remote Sens. 2021, 13, 792.

- Chanthiya, P.; Kalaivani, V. Forest fire detection on LANDSAT images using support vector machine. Concurr. Comput. Pract. Exp. 2021, 33, e6280.

- Zheng, H.T.; Duan, J.C.; Dong, Y.; Liu, Y. Real-time fire detection algorithms running on small embedded devices based on MobileNetV3 and YOLOv4. Fire Ecol. 2023, 19, 31.

- Zhao, Y.C.; Wu, S.L.; Wang, Y.R.; Chen, H.D.; Zhang, X.Y.; Zhao, H.W. Fire Detection Algorithm Based on an Improved Strategy of YOLOv5 and Flame Threshold Segmentation. Comput. Mater. Contin. 2023, 75, 5639–5657.

- Yar, H.; Khan, Z.A.; Ullah, F.U.M.; Ullah, W.; Baik, S.W. A modified YOLOv5 architecture for efficient fire detection in smart cities. Expert Syst. Appl. 2023, 231, 120465.

- Xie, J.; Pang, Y.W.; Pan, J.; Nie, J.; Cao, J.L.; Han, J.G. Complementary Feature Pyramid Network for Object Detection. ACM Trans. Multimed. Comput. Commun. Appl. 2023, 19, 178.

- Sathishkumar, V.E.; Cho, J.H.Y.; Subramanian, M.; Naren, O.S. Forest fire and smoke detection using deep learning-based learning without forgetting. Fire Ecol. 2023, 19, 9.

- Frizzi, S.; Kaabi, R.; Bouchouicha, M.; Ginoux, J.M.; Moreau, E.; Fnaiech, F. Convolutional Neural Network for Video Fire and Smoke Detection. In Proceedings of the IEEE Industrial Electronics Society Conference, Florence, Italy, 23–26 October 2016; pp. 877–882.

- Xu, R.J.; Lin, H.F.; Lu, K.J.; Cao, L.; Liu, Y.F. A Forest Fire Detection System Based on Ensemble Learning. Forests 2021, 12, 217.

- Kim, B.; Lee, J. A Video-Based Fire Detection Using Deep Learning Models. Appl. Sci. 2019, 9, 2862.

- Khan, S.; Khan, A. FFireNet: Deep Learning Based Forest Fire Classification and Detection in Smart Cities. Symmetry 2022, 14, 2155.

- Barmpoutis, P.; Stathaki, T.; Dimitropoulos, K.; Grammalidis, N. Early Fire Detection Based on Aerial 360-Degree Sensors, Deep Convolution Neural Networks and Exploitation of Fire Dynamic Textures. Remote Sens. 2020, 12, 3177.

- Liu, H.Q.; Hu, H.P.; Zhou, F.; Yuan, H.P. Forest Flame Detection in Unmanned Aerial Vehicle Imagery Based on YOLOv5. Fire 2023, 6, 279.

- Li, P.; Zhao, W.D. Image fire detection algorithms based on convolutional neural networks. Case Stud. Therm. Eng. 2020, 19, 100625.

- Abdusalomov, A.; Baratov, N.; Kutlimuratov, A.; Whangbo, T.K. An Improvement of the Fire Detection and Classification Method Using YOLOv3 for Surveillance Systems. Sensors 2021, 21, 6519.

- Wang, Z.; Wu, L.; Li, T.; Shi, P.B. A Smoke Detection Model Based on Improved YOLOv5. Mathematics 2022, 10, 1190.

- Mseddi, W.S.; Ghali, R.; Jmal, M.; Attia, R. Fire Detection and Segmentation using YOLOv5 and U-NET. In Proceedings of the 2021 29th European Signal Processing Conference (EUSIPCO), Dublin, Ireland, 23–27 August 2021; pp. 741–745.

- Huang, Z.C.; Wang, J.L.; Fu, X.S.; Yu, T.; Guo, Y.Q.; Wang, R.T. DC-SPP-YOLO: Dense connection and spatial pyramid pooling based YOLO for object detection. Inf. Sci. 2020, 522, 241–258.

- Zhang, Q.-X.; Lin, G.-H.; Zhang, Y.-M.; Xu, G.; Wang, J.-J. Wildland Forest Fire Smoke Detection Based on Faster R-CNN using Synthetic Smoke Images. Procedia Eng. 2018, 211, 441–446.

- Huang, J.R.; He, Z.L.; Guan, Y.W.; Zhang, H.G. Real-Time Forest Fire Detection by Ensemble Lightweight YOLOX-L and Defogging Method. Sensors 2023, 23, 1894.

- Chino, D.Y.T.; Avalhais, L.P.S.; Rodrigues, J.F.; Traina, A.J.M. BoWFire: Detection of Fire in Still Images by Integrating Pixel Color and Texture Analysis. In Proceedings of the 2015 28th SIBGRAPI Conference on Graphics, Patterns and Images, Salvador, Brazil, 26–29 August 2015; pp. 95–102.

- Wu, S.Y.; Zhang, X.R.; Liu, R.Q.; Li, B.H. A dataset for fire and smoke object detection. Multimed. Tools Appl. 2023, 82, 6707–6726.

- Jin, C.T.; Wang, T.; Alhusaini, N.; Zhao, S.H.; Liu, H.L.; Xu, K.; Zhang, J.; Chen, T. Video Fire Detection Methods Based on Deep Learning: Datasets, Methods, and Future Directions. Fire 2023, 6, 315.

- Khan, A.; Hassan, B.; Khan, S.; Ahmed, R.; Abuassba, A. DeepFire: A Novel Dataset and Deep Transfer Learning Benchmark for Forest Fire Detection. Mob. Inf. Syst. 2022, 2022, 5358359.

- Jiang, K.L.; Xie, T.Y.; Yan, R.; Wen, X.; Li, D.Y.; Jiang, H.B.; Jiang, N.; Feng, L.; Duan, X.L.; Wang, J.J. An Attention Mechanism-Improved YOLOv7 Object Detection Algorithm for Hemp Duck Count Estimation. Agriculture 2022, 12, 1659.

- Xie, X.A.; Chen, K.; Guo, Y.R.; Tan, B.T.; Chen, L.M.; Huang, M. A Flame-Detection Algorithm Using the Improved YOLOv5. Fire 2023, 6, 313.

- Niu, Z.Y.; Zhong, G.Q.; Yu, H. A review on the attention mechanism of deep learning. Neurocomputing 2021, 452, 48–62.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).