A Method of Detecting Candidate Regions and Flames Based on Deep Learning Using Color-Based Pre-Processing

Abstract

1. Introduction

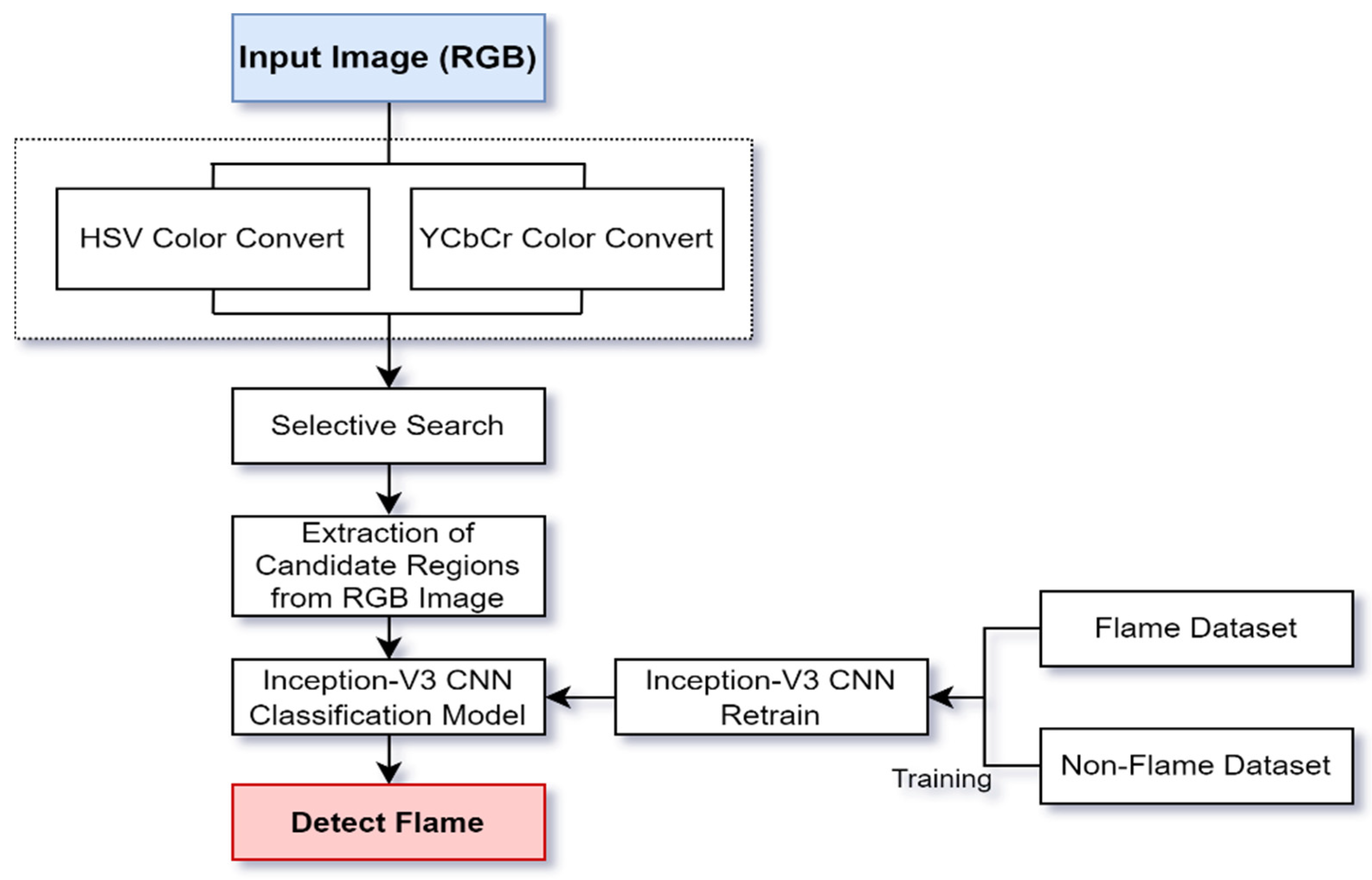

2. Proposed Method

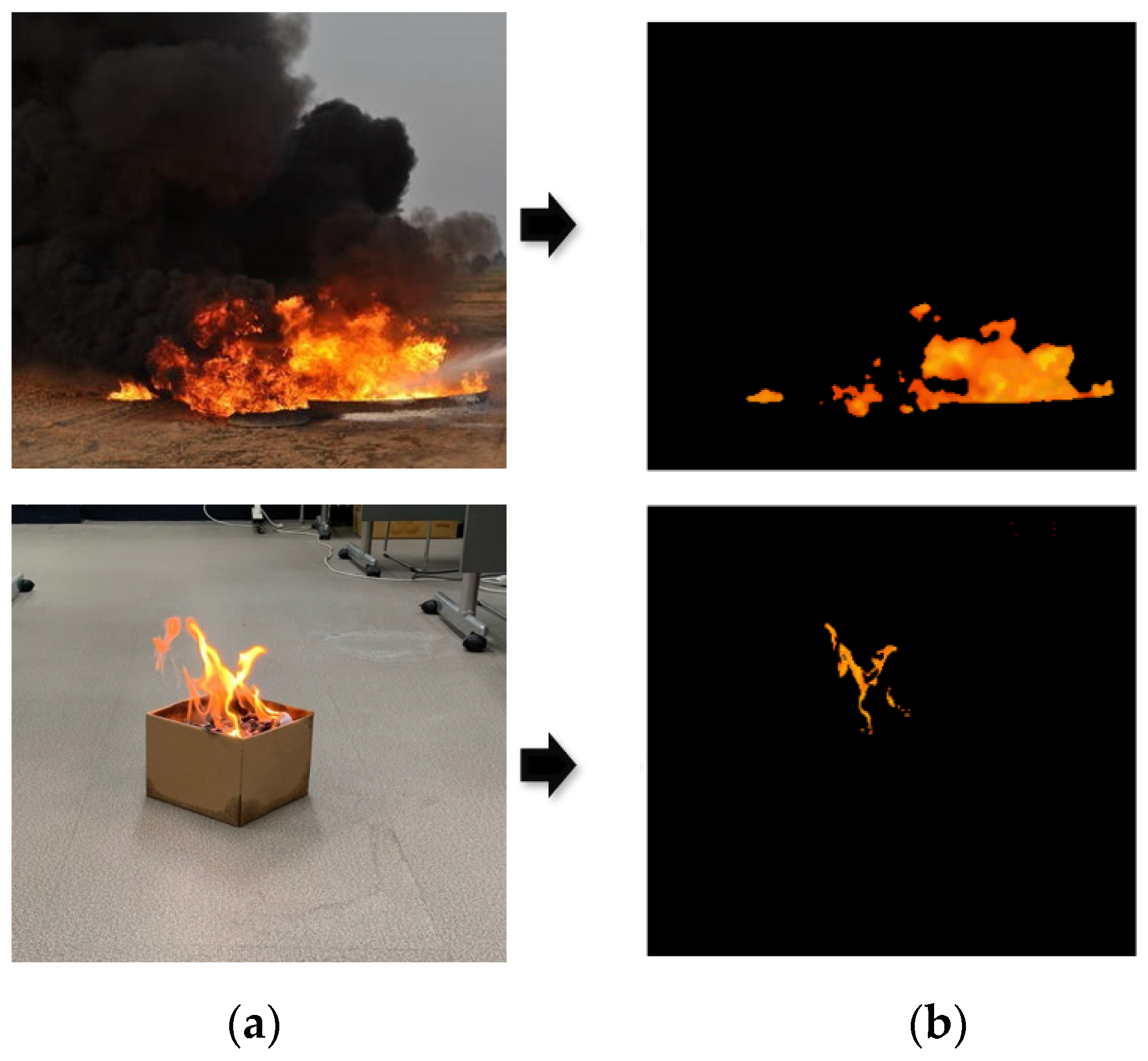

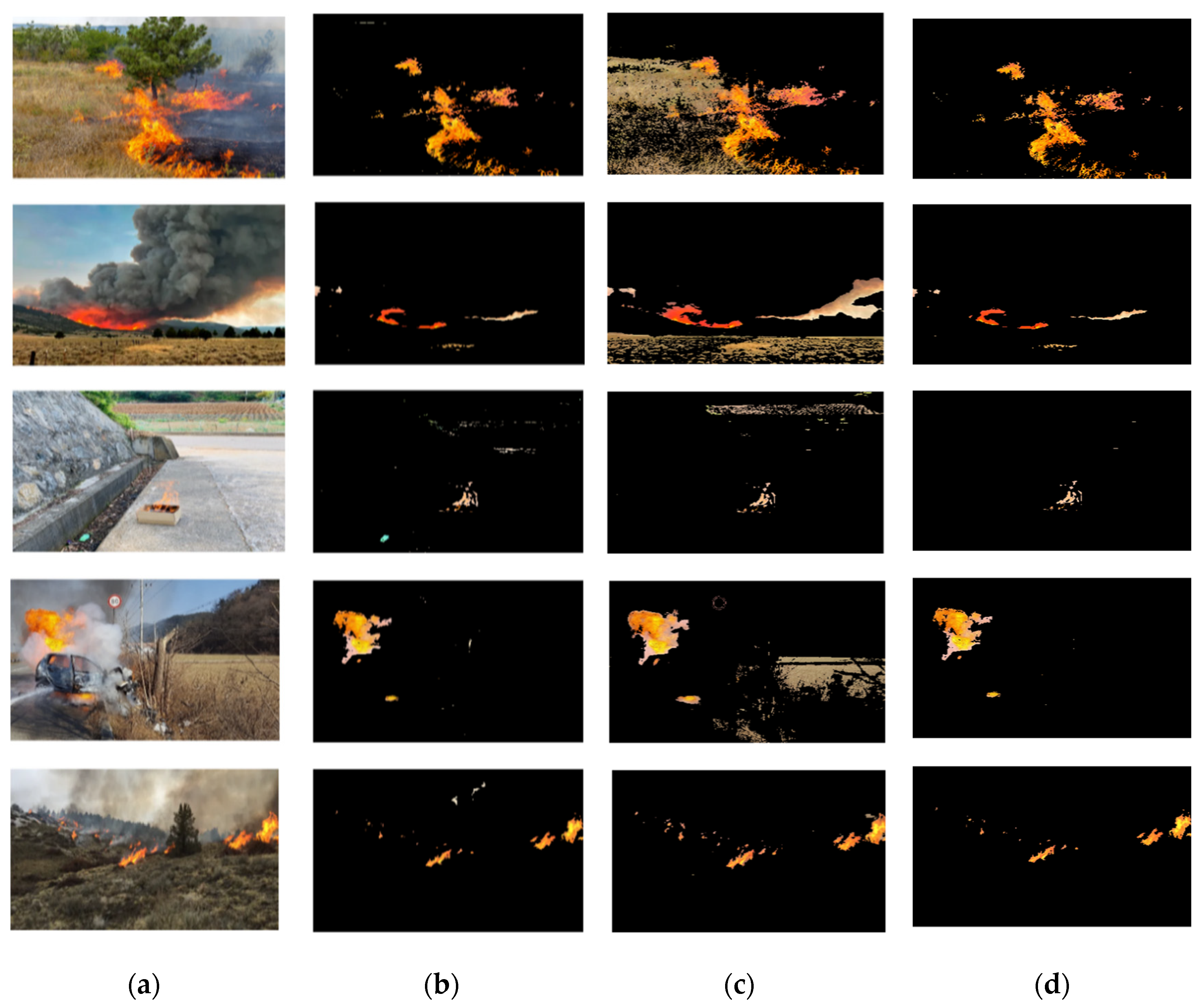

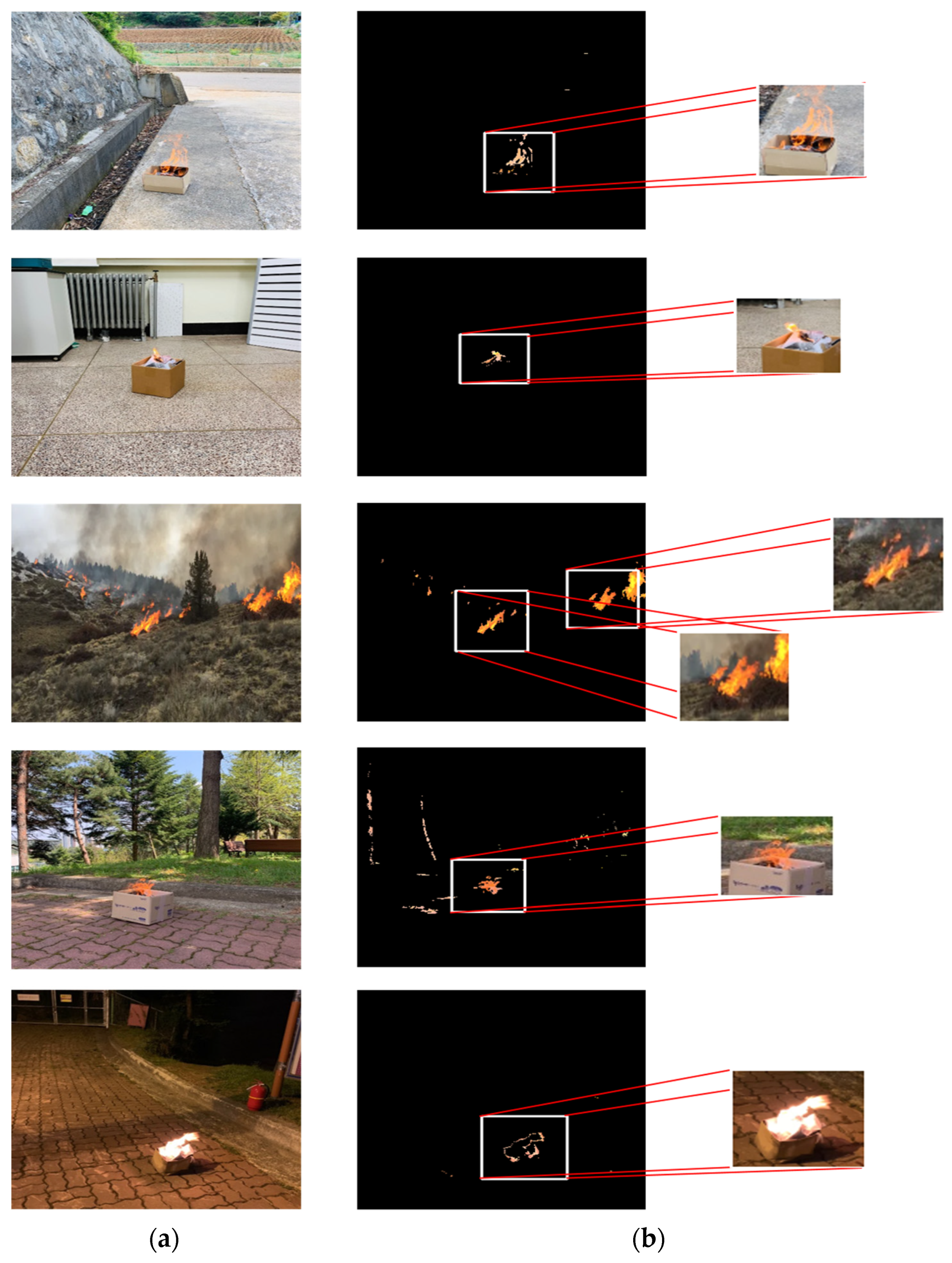

2.1. Pre-Processing Using HSV Color Conversion

2.2. Pre-Processing Using YCbCr Color Conversion

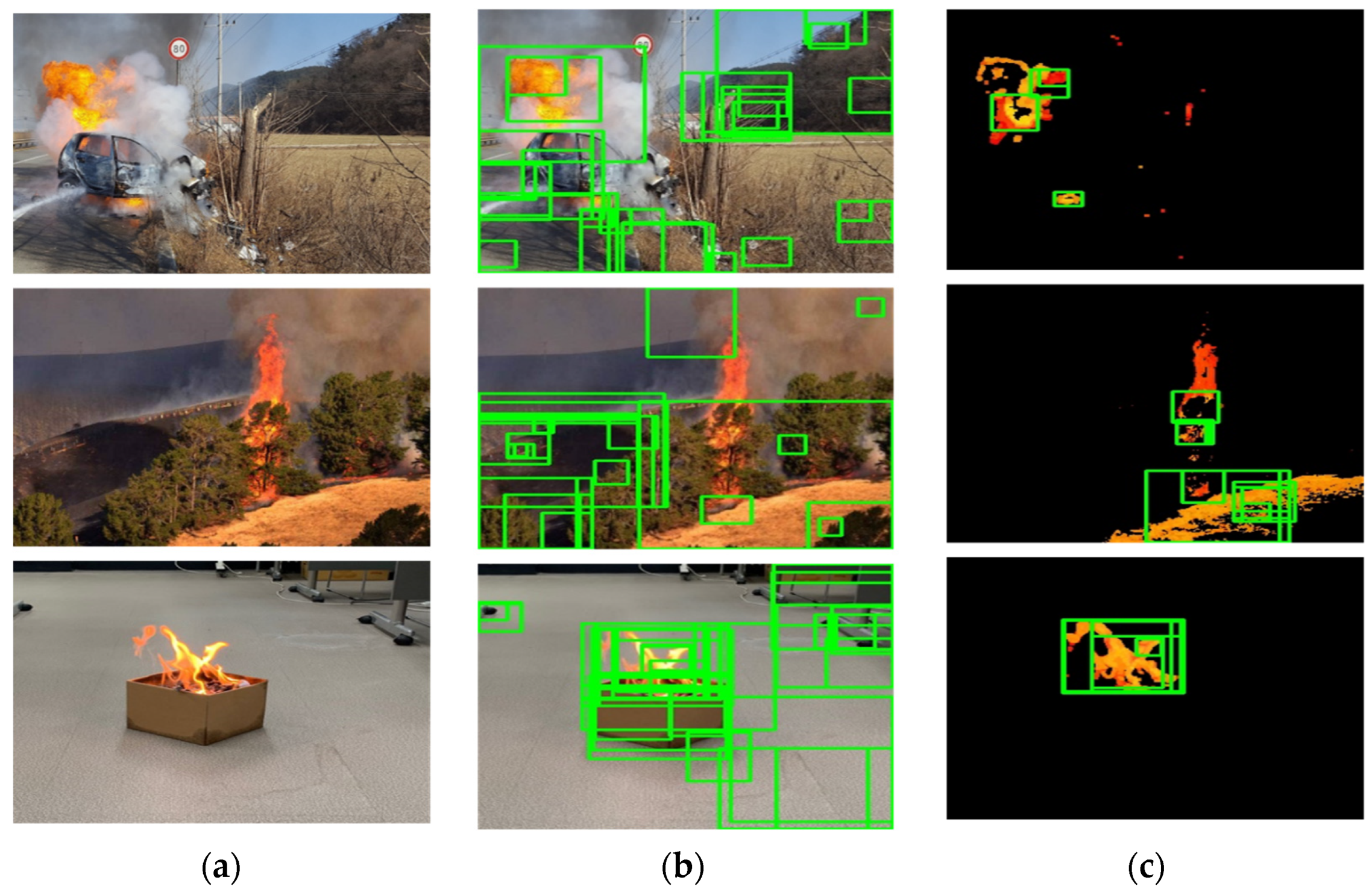

2.3. Detecting Candidate Regions Using Selective Search

2.4. Constructing CNN for Inference

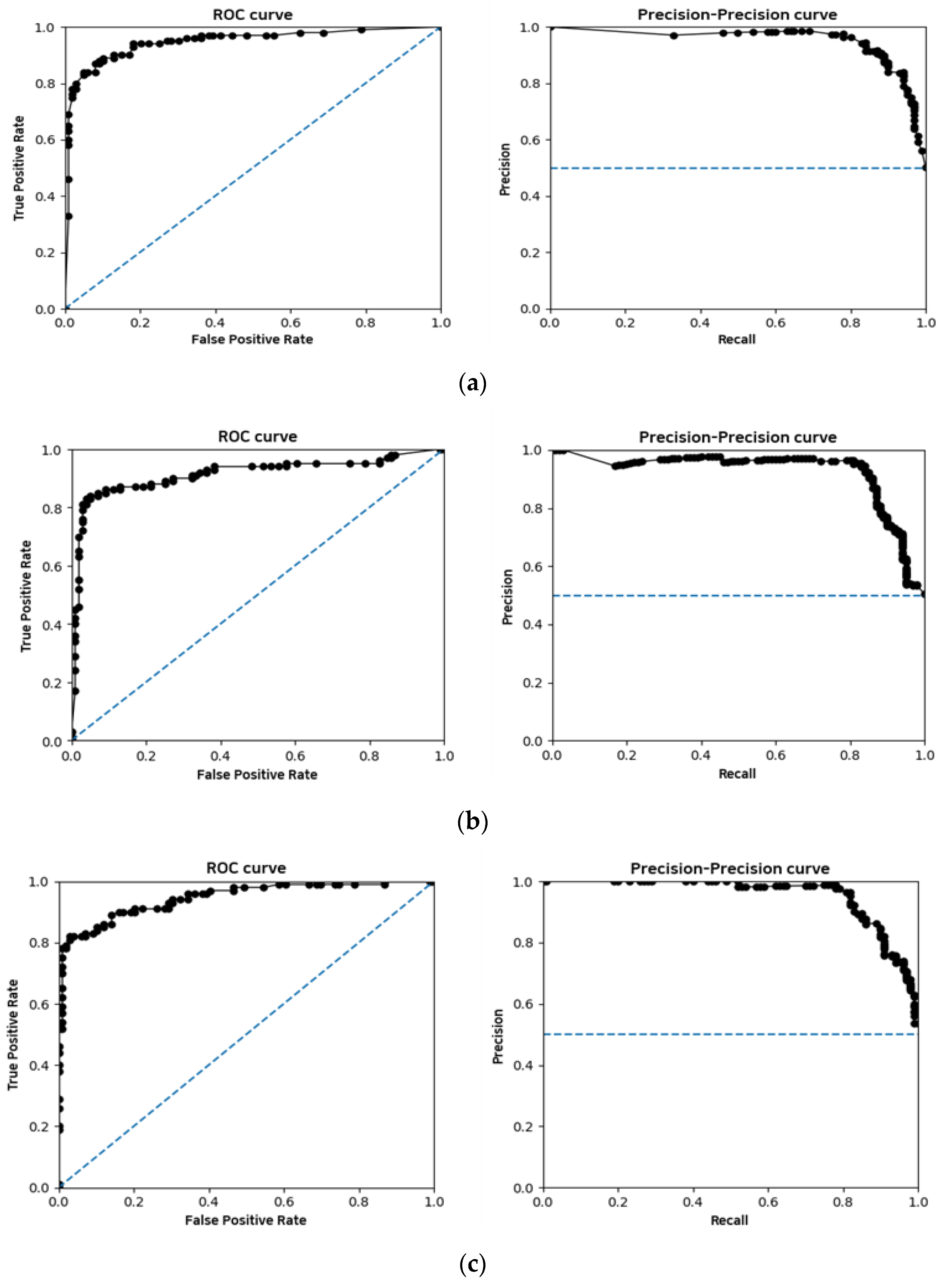

3. Experimental Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Ryu, J.; Kwak, D. Flame detection using appearance-based pre-processing and Convolutional Neural Network. Appl. Sci. 2021, 11, 5138.
2. Shen, D.; Chen, X.; Nguyen, M.; Yan, W. Flame detection using deep learning. In Proceedings of the 2018 4th International Conference on Control, Automation and Robotics (ICCAR), Auckland, New Zealand, 20–23 April 2018; pp. 416–420.
3. Muhammad, K.; Khan, S.; Elhoseny, M.; Ahmed, S.; Baik, S. Efficient Fire Detection for Uncertain Surveillance Environment. IEEE Trans. Ind. Inform. 2019, 15, 3113–3122.
4. Sarkar, S.; Menon, A.S.; T, G.; Kakelli, A.K. Convolutional Neural Network (CNN-SA) based selective amplification model to enhance image quality for efficient fire detection. Int. J. Image Graph. Signal Process. 2021, 13, 51–59.
5. Muhammad, K.; Ahmad, J.; Baik, S.W. Early fire detection using convolutional neural networks during surveillance for effective disaster management. Neurocomputing 2018, 288, 30–42.
6. Abdusalomov, A.; Baratov, N.; Kutlimuratov, A.; Whangbo, T. An Improvement of the Fire Detection and Classification Method Using YOLOv3 for Surveillance Systems. Sensors 2021, 21, 6519.
7. Kim, B.; Lee, J. A Video-Based Fire Detection Using Deep Learning Models. Appl. Sci. 2019, 9, 2862.
8. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.
9. He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.
10. Kurilová, V.; Goga, J.; Oravec, M.; Pavlovičová, J.; Kajan, S. Support Vector Machine and deep-learning object detection for localisation of hard exudates. Sci. Rep. 2021, 11, 16045.
11. Chmelar, P.; Benkrid, A. Efficiency of HSV over RGB gaussian mixture model for fire detection. In Proceedings of the 2014 24th International Conference Radioelektronika, Bratislava, Slovakia, 15–16 April 2014.
12. Chen, X.J.; Dong, F. Recognition and segmentation for fire based HSV. In Computing, Control, Information and Education Engineering; CRC Press: Boca Raton, FL, USA, 2015; pp. 369–374.
13. Ibrahim, A.S.; Sartep, H.J. Grayscale image coloring by using YCbCr and HSV color spaces. Int. J. Mod. Trends Eng. Res. 2017, 4, 130–136.
14. Munshi, A. Fire detection methods based on various color spaces and gaussian mixture models. Adv. Sci. Technol. Res. J. 2021, 15, 197–214.
15. Celik, T.; Demirel, H. Fire detection in video sequences using a generic color model. Fire Saf. J. 2009, 44, 147–158.
16. Zhu, L.; Zhang, J.; Sun, Y. Remote Sensing Image Change Detection using superpixel cosegmentation. Information 2021, 12, 94.
17. Qiu, W.; Gao, X.; Han, B. A superpixel-based CRF Saliency Detection Approach. Neurocomputing 2017, 244, 19–32.
18. Felzenszwalb, P.F.; Huttenlocher, D.P. Efficient graph-based image segmentation. Int. J. Comput. Vis. 2004, 59, 167–181.
19. Uijlings, J.R.; van de Sande, K.E.; Gevers, T.; Smeulders, A.W. Selective search for object recognition. Int. J. Comput. Vis. 2013, 104, 154–171.
20. Nan, H.; Tong, M.; Fan, L.; Li, M. 3D RES-inception network transfer learning for multiple label crowd behavior recognition. KSII Trans. Internet Inf. Syst. 2019, 13, 1450–1463.
21. Kim, H.; Park, J.; Lee, H.; Im, G.; Lee, J.; Lee, K.-B.; Lee, H.J. Classification for breast ultrasound using convolutional neural network with multiple time-domain feature maps. Appl. Sci. 2021, 11, 10216.
22. Pu, Y.; Apel, D.B.; Szmigiel, A.; Chen, J. Image recognition of coal and coal gangue using a convolutional neural network and transfer learning. Energies 2019, 12, 1735.
23. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.
24. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.
25. Al Husaini, M.A.; Habaebi, M.H.; Gunawan, T.S.; Islam, M.R.; Elsheikh, E.A.; Suliman, F.M. Thermal-based Early Breast Cancer Detection Using Inception V3, inception V4 and modified inception MV4. Neural Comput. Appl. 2021, 34, 333–348.
26. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Computer Vision – ECCV 2016; Springer: Berlin/Heidelberg, Germany; pp. 21–37.
27. Yan, C.; Zhang, H.; Li, X.; Yuan, D. R-SSD: Refined single shot multibox detector for pedestrian detection. Appl. Intell. 2022, 52, 10430–10447.
28. Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.
29. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

| Channel | Greater than | Less than |
|---|---|---|
| Hue | 5 | 90 |
| Saturation | 40 | 255 |
| Value | 220 | 255 |
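The thresholds in the table above can be applied per pixel to produce a binary flame-color mask. The following is a minimal sketch rather than the authors' implementation. It rests on two assumptions that the table does not state: hue is expressed on an OpenCV-style 0 to 179 scale (saturation and value on 0 to 255), and both bounds are treated as inclusive. The names `HSV_BOUNDS`, `rgb_to_hsv_8bit`, `is_flame_colored`, and `flame_mask` are hypothetical.

```python
import colorsys

# Thresholds taken from the table, stored as (lower, upper) bounds.
# Assumption: hue uses an OpenCV-style 0-179 scale; S and V use 0-255.
# Assumption: both bounds are inclusive.
HSV_BOUNDS = {"h": (5, 90), "s": (40, 255), "v": (220, 255)}


def rgb_to_hsv_8bit(r, g, b):
    """Convert 8-bit RGB to (H in [0, 180), S in [0, 255], V in [0, 255])."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return h * 180.0, s * 255.0, v * 255.0


def is_flame_colored(r, g, b, bounds=HSV_BOUNDS):
    """Return True if the pixel falls inside all three HSV ranges."""
    h, s, v = rgb_to_hsv_8bit(r, g, b)
    channels = ((h, bounds["h"]), (s, bounds["s"]), (v, bounds["v"]))
    return all(lo <= x <= hi for x, (lo, hi) in channels)


def flame_mask(image):
    """Map an image (rows of (r, g, b) tuples) to rows of booleans."""
    return [[is_flame_colored(*px) for px in row] for row in image]


# Tiny hypothetical 1x4 image: bright orange, blue, dark red, white.
sample = [[(255, 140, 0), (0, 0, 255), (120, 20, 10), (255, 255, 255)]]
mask = flame_mask(sample)
```

In this sketch only the bright orange pixel passes: the blue pixel fails the hue range, the dark red pixel fails the value range, and the white pixel fails the saturation range. For full-size images a vectorized routine would be preferable to per-pixel Python loops.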

| Layer | Kernel Size | Input Size |
|---|---|---|
| Conv | | |
| Conv | | |
| Convolution (Padded) | | |
| MaxPool | | |
| Conv | | |
| Conv | | |
| MaxPool | | |
| - | | |
| Reduction | - | |
| - | | |
| Reduction | - | |
| - | | |
| AveragePool | - | |
| FC | - | |
| Sigmoid | - | - |

| Dataset | Flame | Non-Flame |
|---|---|---|
| Train | 8152 | 8024 |
| Test | 2001 | 2000 |

| Method | Accuracy | Precision | Recall | F1-Score | MCC |
|---|---|---|---|---|---|
| SSD | 90.0% | 88.5% | 92.0% | 90.2% | 0.80 |
| Faster R-CNN | 91.0% | 90.2% | 92.0% | 91.1% | 0.82 |
| Sarkar et al. [4] (SA-CNN) | 95.6% | 96.0% | 97.1% | 96.6% | 0.90 |
| Muhammad et al. [5] (AlexNet) | 94.4% | 97.7% | 90.9% | 89.0% | 0.89 |
| Our proposal | 97.0% | 96.1% | 98.0% | 97.0% | 0.94 |
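The five evaluation indicators in the table above are standard functions of a binary confusion matrix. As a hedged illustration of how they relate, the sketch below computes them from true/false positive and negative counts. The function name `binary_metrics` is hypothetical, and the counts in the example are made up for illustration; they are not the confusion matrices behind the reported results.

```python
import math


def binary_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall, F1 and MCC as fractions.

    tp/fp/fn/tn are counts with "flame" as the positive class.
    Undefined ratios (zero denominators) are returned as 0.0.
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    pr_sum = precision + recall
    f1 = 2 * precision * recall / pr_sum if pr_sum else 0.0
    # Matthews correlation coefficient, in the range [-1, 1].
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "mcc": mcc,
    }


# Hypothetical counts, chosen only to exercise the formulas.
example = binary_metrics(tp=90, fp=5, fn=10, tn=95)
```

The function returns fractions, so multiplying the first four values by 100 gives percentages in the same form as the table. MCC is left as a plain coefficient; it uses all four cells of the confusion matrix.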

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ryu, J.; Kwak, D. A Method of Detecting Candidate Regions and Flames Based on Deep Learning Using Color-Based Pre-Processing. Fire 2022, 5, 194. https://doi.org/10.3390/fire5060194


Chicago/Turabian Style: Ryu, Jinkyu, and Dongkurl Kwak. 2022. "A Method of Detecting Candidate Regions and Flames Based on Deep Learning Using Color-Based Pre-Processing" Fire 5, no. 6: 194. https://doi.org/10.3390/fire5060194
