Real-Time Forest Fire Detection Framework Based on Artificial Intelligence Using Color Probability Model and Motion Feature Analysis

Abstract

1. Introduction

- Introducing a color probability model based on the Gaussian mixture model (GMM) for segmenting the fire region, designed to handle varying illumination conditions.

- Proposing a simple motion feature analysis for fire detection that reduces the false-positive rate.

- Integrating classification-based fire verification to make the decision step more effective and efficient in localizing the fire region.

- Introducing a new evaluation protocol and annotation for fire detection, based on the intersection over union (IoU) rate.
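As a rough illustration of the first contribution, a color probability model based on a Gaussian mixture can score each pixel by its mixture density and keep the pixels whose score exceeds a threshold. The sketch below is only a minimal example under stated assumptions: it uses diagonal covariances for brevity, and the component weights, means, variances, threshold, and the function names `gaussian_pdf_diag`, `gmm_probability`, and `segment` are all hypothetical and not taken from the paper.

```python
import math


def gaussian_pdf_diag(x, mean, var):
    """Density of a multivariate Gaussian with diagonal covariance at point x."""
    log_p = 0.0
    for xi, mi, vi in zip(x, mean, var):
        log_p += -0.5 * math.log(2.0 * math.pi * vi) - (xi - mi) ** 2 / (2.0 * vi)
    return math.exp(log_p)


def gmm_probability(x, weights, means, variances):
    """Mixture density: sum over components of weight * Gaussian density."""
    return sum(
        w * gaussian_pdf_diag(x, m, v)
        for w, m, v in zip(weights, means, variances)
    )


# Hypothetical 2-component model over (R, G, B) values in [0, 255].
# These numbers are invented for illustration, not fitted to any data.
WEIGHTS = [0.6, 0.4]
MEANS = [(230.0, 120.0, 40.0), (250.0, 200.0, 90.0)]
VARIANCES = [(400.0, 900.0, 400.0), (100.0, 400.0, 900.0)]
THRESHOLD = 1e-8  # hypothetical decision threshold on the mixture density


def segment(pixels):
    """Return one boolean per pixel: True if the pixel is a fire-color candidate."""
    return [
        gmm_probability(p, WEIGHTS, MEANS, VARIANCES) > THRESHOLD
        for p in pixels
    ]


# An orange pixel close to the first component, and a blue pixel far from both.
mask = segment([(235, 125, 45), (30, 60, 200)])
print(mask)  # [True, False]
```

In practice the mixture parameters would be estimated from labeled fire pixels (for example with expectation maximization), and the number of components trades segmentation quality against speed.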

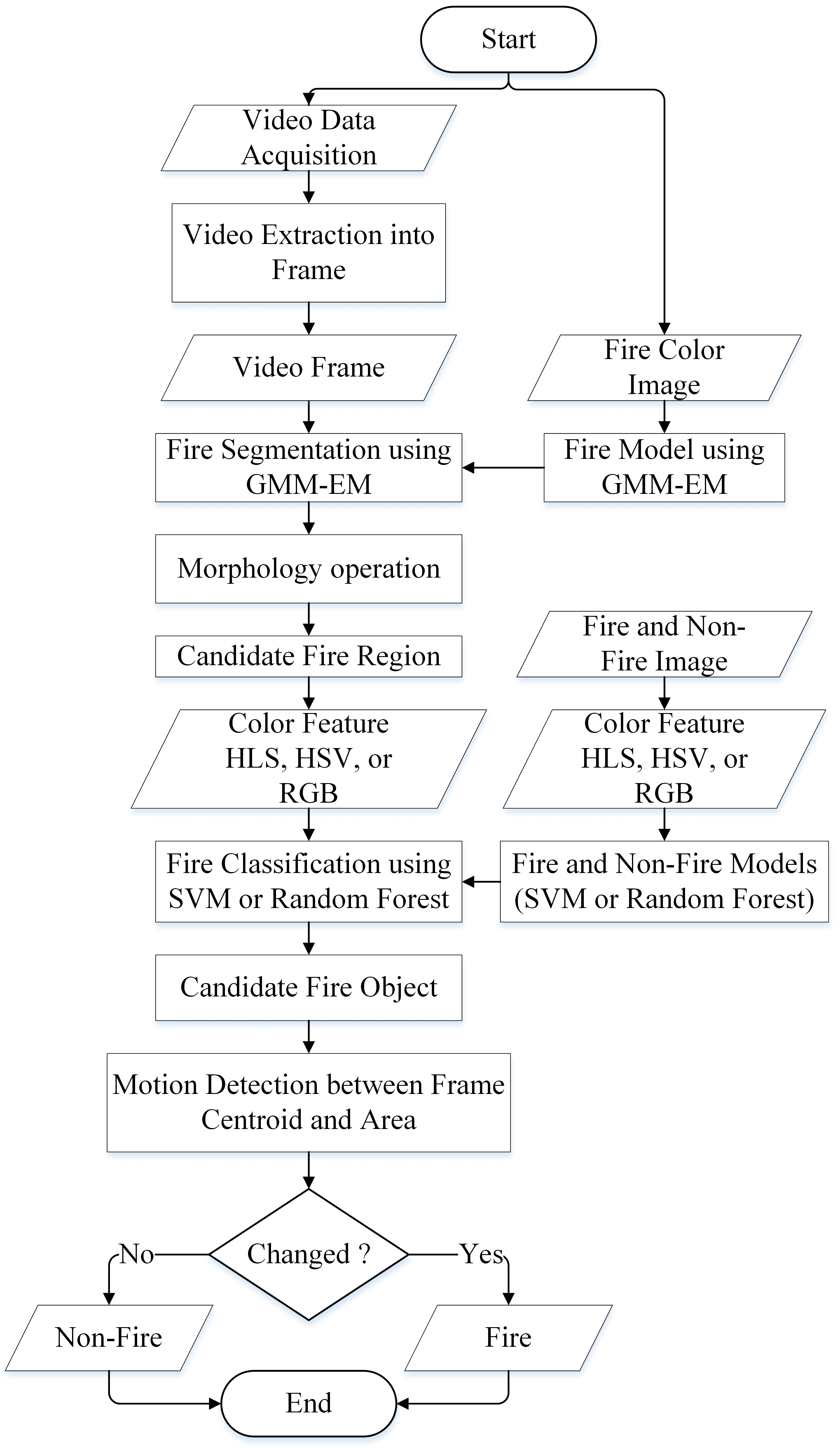

2. The Proposed Framework

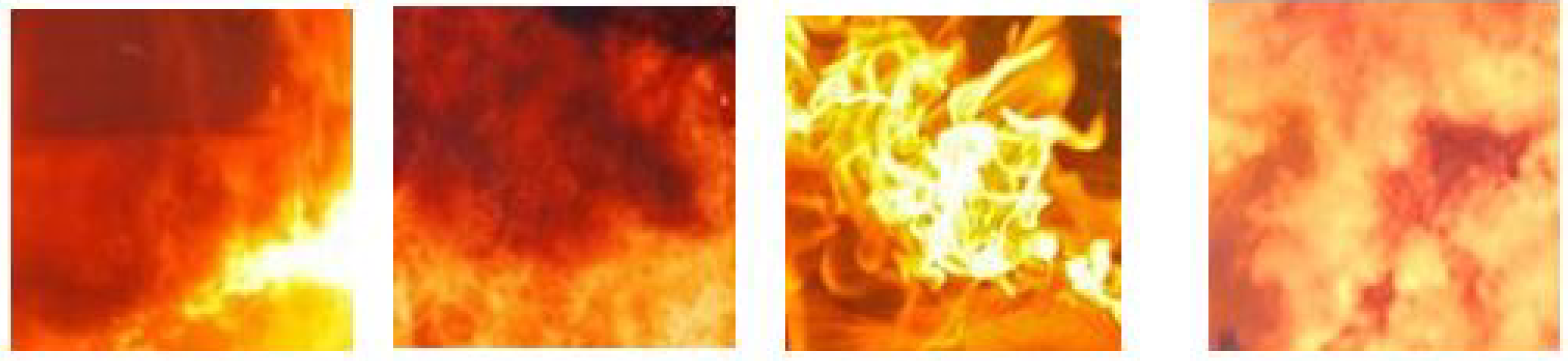

2.1. Training Data Acquisition

2.2. Color Probability Modeling

2.3. Color-Based Feature Extraction

2.4. Fire Object Verification

2.5. Motion Feature Analysis

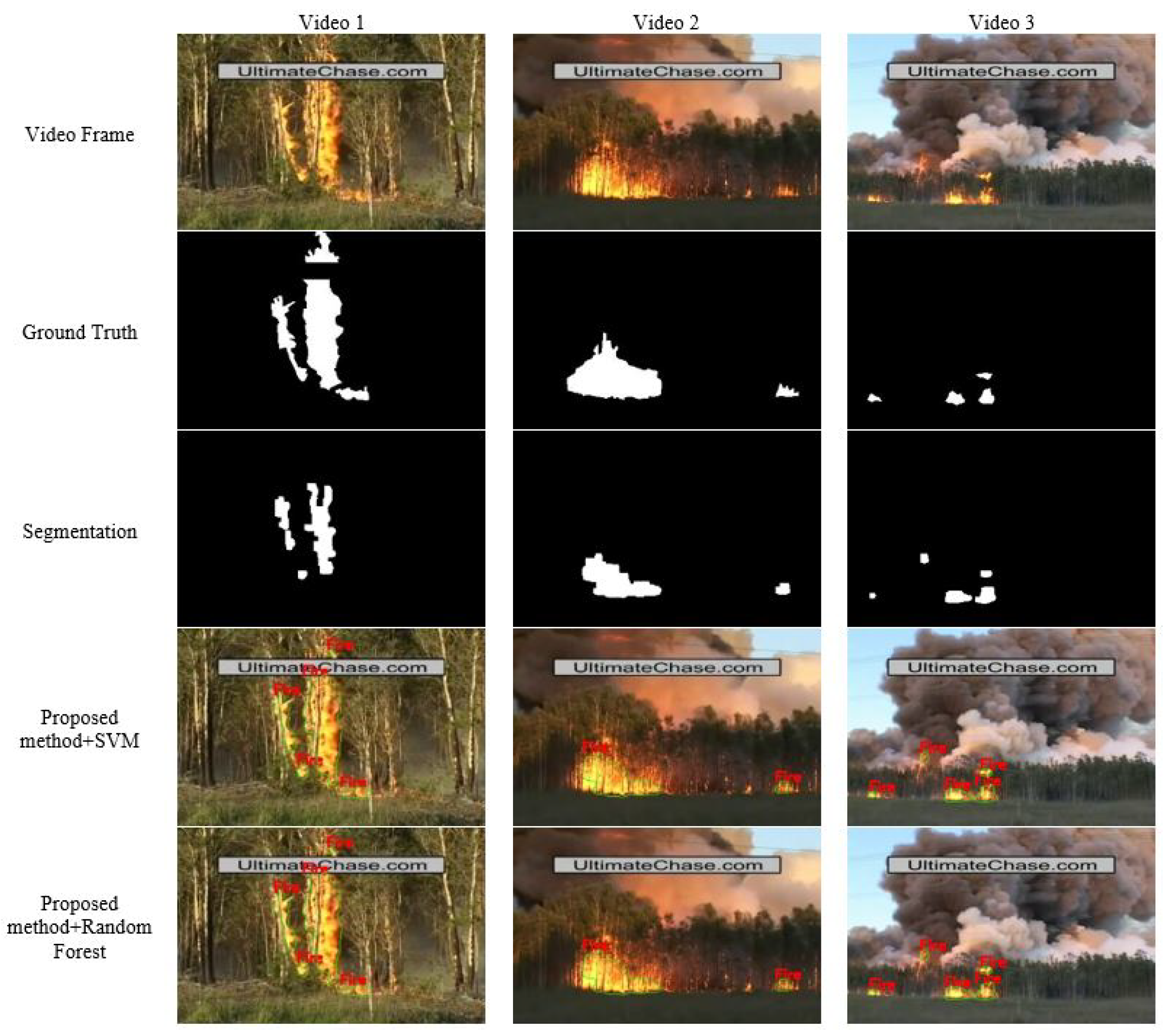

3. Results and Discussion

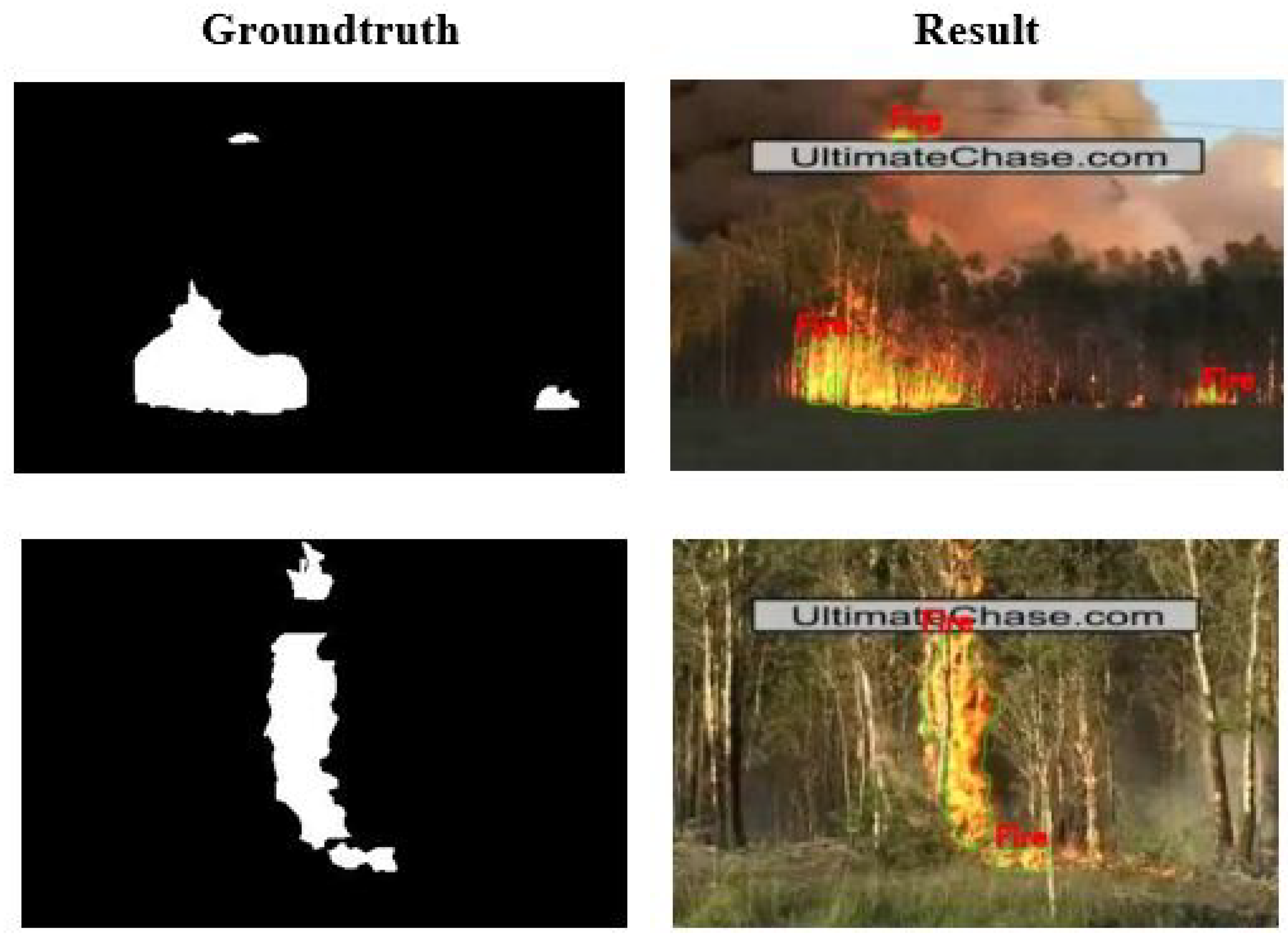

3.1. Experimental Setting and Protocol Evaluation

3.2. Optimal Parameter Settings

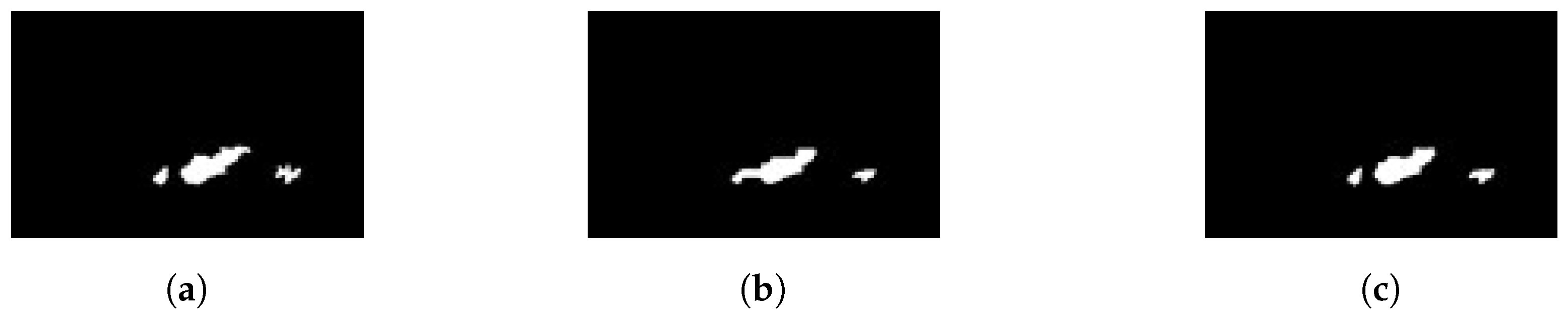

3.2.1. Parameters for Fire Object Segmentation

3.2.2. Parameters for Fire Object Classification

3.3. Frame-Based Evaluation

3.4. Location-Based Evaluation

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- The World Bank. Forest Area—Indonesia|Data. data.worldbank.org. 2020. Available online: https://data.worldbank.org/indicator/AG.LND.FRST.ZS?locations=ID (accessed on 3 February 2022).

- Fitriany, A.A.; Flatau, P.J.; Khoirunurrofik, K.; Riama, N.F. Assessment on the use of meteorological and social media information for forest fire detection and prediction in Riau, Indonesia. Sustainability 2021, 13, 11188.

- Chen, T.H.; Wu, P.H.; Chiou, Y.C. An early fire-detection method based on image processing. In Proceedings of the International Conference on Image Processing (ICIP), Singapore, 24–27 October 2004; Volume 3, pp. 1707–1710.

- Dimitropoulos, K.; Gunay, O.; Kose, K.; Erden, F.; Chaabene, F.; Tsalakanidou, F.; Grammalidis, N.; Çetin, E. Flame Detection for Video-Based Early Fire Warning for the Protection of Cultural Heritage. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2012; Volume 7616, pp. 378–387.

- Çelik, T.; Demirel, H. Fire detection in video sequences using a generic color model. Fire Saf. J. 2009, 44, 147–158.

- Celik, T. Fast and efficient method for fire detection using image processing. ETRI J. 2010, 32, 881–890.

- Shidik, G.F.; Adnan, F.N.; Supriyanto, C.; Pramunendar, R.A.; Andono, P.N. Multi color feature background subtraction and time frame selection for fire detection. In Proceedings of the International Conference on Robotics, Biomimetics and Intelligent Computing System, Shenzhen, China, 12–14 December 2013; pp. 115–120.

- Mueller, M.; Karasev, P.; Kolesov, I.; Tannenbaum, A. Optical Flow Estimation for Flame Detection in Videos. IEEE Trans. Image Process. 2013, 22, 2786–2797.

- Teng, Z.; Kim, J.; Kang, D. Fire Detection Based on Hidden Markov Models. Int. J. Control. Autom. Syst. 2010, 8, 822–830.

- Töreyin, B.U.; Dedeoǧlu, Y.; Güdükbay, U.; Çetin, A.E. Computer vision based method for real-time fire and flame detection. Pattern Recognit. Lett. 2006, 27, 49–58.

- Barmpoutis, P.; Dimitropoulos, K.; Grammalidis, N. Real time video fire detection using spatio-temporal consistency energy. In Proceedings of the 10th IEEE International Conference on Advanced Video Signal Based Surveillance, Krakow, Poland, 27–30 August 2013; pp. 365–370.

- Foggia, P.; Saggese, A.; Vento, M. Real-Time Fire Detection for Video-Surveillance Applications Using a Combination of Experts Based on Color, Shape, and Motion. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 1545–1556.

- Muhammad, K.; Ahmad, J.; Mehmood, I.; Rho, S.; Baik, S.W. Convolutional Neural Networks Based Fire Detection in Surveillance Videos. IEEE Access 2018, 6, 18174–18183.

- Muhammad, K.; Ahmad, J.; Lv, Z.; Bellavista, P.; Yang, P.; Baik, S.W. Efficient Deep CNN-Based Fire Detection and Localization in Video Surveillance Applications. IEEE Trans. Syst. Man Cybern. Syst. 2019, 49, 1419–1434.

- Li, P.; Zhao, W. Image fire detection algorithms based on convolutional neural networks. Case Stud. Therm. Eng. 2020, 19.

- Xu, Z.; Guo, Y.; Saleh, J.H. Tackling Small Data Challenges in Visual Fire Detection: A Deep Convolutional Generative Adversarial Network Approach. IEEE Access 2020, 9, 3936–3946.

- Wu, X.; Lu, X.; Leung, H. An adaptive threshold deep learning method for fire and smoke detection. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 1954–1959.

- Chaoxia, C.; Shang, W.; Zhang, F. Information-Guided Flame Detection Based on Faster R-CNN. IEEE Access 2020, 8, 58923–58932.

- Xie, Y.; Zhu, J.; Cao, Y.; Zhang, Y.; Feng, D.; Zhang, Y.; Chen, M. Efficient video fire detection exploiting motion-flicker-based dynamic features and deep static features. IEEE Access 2020, 8, 81904–81917.

- Jadon, A.; Omama, M.; Varshney, A.; Ansari, M.S.; Sharma, R. FireNet: A Specialized Lightweight Fire and Smoke Detection Model for Real-Time IoT Applications. arXiv 2019, arXiv:1905.11922.

- Iglesias, F.; Zseby, T.; Ferreira, D.; Zimek, A. MDCGen: Multidimensional Dataset Generator for Clustering. J. Classif. 2019, 36, 599–618.

- Xiao, J.; Xu, Q.; Wu, C.; Gao, Y.; Hua, T.; Xu, C. Performance evaluation of missing-value imputation clustering based on a multivariate Gaussian mixture model. PLoS ONE 2016, 11, e0161112.

- Wei, W.; Balabdaoui, F.; Held, L. Calibration tests for multivariate Gaussian forecasts. J. Multivar. Anal. 2016, 154, 216–233.

- Mohd Yusoff, M.I.; Mohamed, I.; Abu Bakar, M.R. Improved expectation maximization algorithm for Gaussian mixed model using the kernel method. Math. Probl. Eng. 2013, 2013, 757240.

- Boccuto, A.; Gerace, I.; Giorgetti, V.; Rinaldi, M. A Fast Algorithm for the Demosaicing Problem Concerning the Bayer Pattern. Open Signal Process. J. 2019, 6, 1–14.

- Zarkasi, A.; Nurmaini, S.; Setiawan, D.; Abdurahman, F.; Deri Amanda, C. Implementation of fire image processing for land fire detection using color filtering method. J. Phys. Conf. Ser. 2019, 1196, 012003.

- Chen, Y.; Sano, H.; Wakaiki, M.; Yaguchi, T. Secret Communication Systems Using Chaotic Wave Equations with Neural Network Boundary Conditions. Entropy 2021, 23, 904.

- Roberto, N.; Baldini, L.; Adirosi, E.; Facheris, L.; Cuccoli, F.; Lupidi, A.; Garzelli, A. A Support Vector Machine Hydrometeor Classification Algorithm for Dual-Polarization Radar. Atmosphere 2017, 8, 134.

- Tyralis, H.; Papacharalampous, G.; Langousis, A. A Brief Review of Random Forests for Water Scientists and Practitioners and Their Recent History in Water Resources. Water 2019, 10, 910.

- Zhai, Y.; Zheng, X. Random Forest based Traffic Classification Method In SDN. In Proceedings of the 2018 International Conference on Cloud Computing, Big Data and Blockchain (ICCBB), Fuzhou, China, 15–17 November 2018; pp. 1–5.

- Shu, X.; Zhang, Q.; Shi, J.; Qi, Y. A Comparative Study on Weighted Central Moment and Its Application in 2D Shape Retrieval. Information 2016, 7, 10.

- Çetin, A.E. Computer Vision Based Fire Detection Dataset. 2014. Available online: http://signal.ee.bilkent.edu.tr/VisiFire/ (accessed on 15 July 2021).

- Grammalidis, N.; Dimitropoulos, K.; Cetin, E. FIRESENSE database of videos for flame and smoke detection. IEEE Trans. Circuits Syst. Video Technol. 2017, 25, 339–351.

- Ko, B.C.; Cheong, K.H.; Nam, J.Y. Fire detection based on vision sensor and support vector machines. Fire Saf. J. 2009, 44, 322–329.

- Xuan Truong, T.; Kim, J.M. Fire flame detection in video sequences using multi-stage pattern recognition techniques. Eng. Appl. Artif. Intell. 2012, 25, 1365–1372.

- Torabian, M.; Pourghassem, H.; Mahdavi-Nasab, H. Fire Detection Based on Fractal Analysis and Spatio-Temporal Features. Fire Technol. 2021, 57, 2583–2614.

- Wahyono; Dharmawan, A.; Harjoko, A.; Chrystian; Adhinata, F.D. Region-based annotation data of fire images for intelligent surveillance system. Data Br. 2022, 107925.

| Video | Resolution (pixels) | Frame Rate (fps) | Frames | Fire | Notes |
|---|---|---|---|---|---|
| Video 1 | 400 × 256 | 15 | 260 | yes | Controlled1 [32] |
| Video 2 | 400 × 256 | 15 | 246 | yes | Controlled2 [32] |
| Video 3 | 400 × 256 | 15 | 208 | yes | Controlled3 [32] |
| Video 4 | 400 × 256 | 15 | 200 | yes | Forest1 [32] |
| Video 5 | 400 × 256 | 15 | 245 | yes | Forest2 [32] |
| Video 6 | 400 × 256 | 15 | 255 | yes | Forest3 [32] |
| Video 7 | 400 × 256 | 15 | 219 | yes | Forest4 [32] |
| Video 8 | 400 × 256 | 15 | 216 | yes | Forest5 [32] |
| Video 9 | 400 × 256 | 15 | 218 | yes | ForestFire1 [32] |
| Video 10 | 320 × 240 | 2 | 241 | yes | fBackYardFire [32] |
| Video 11 | 320 × 240 | 5 | 236 | yes | Fire1 [32] |
| Video 12 | 320 × 240 | 29.97 | 140 | yes | 40m PanFire 20060824 [32] |
| Video 13 | 320 × 240 | 10 | 155 | no | negsVideo2.859 [33] |
| Video 14 | 320 × 240 | 10 | 160 | no | negsVideo3.860 [33] |
| Video 15 | 480 × 368 | 30 | 439 | no | negsVideo5.862 [33] |
| Video 16 | 640 × 368 | 30 | 541 | no | negsVideo7.864 [33] |
| Video 17 | 640 × 480 | 25 | 645 | no | negsVideo9.866 [33] |
| Video 18 | 360 × 288 | 25 | 246 | no | negsVideo10.1072 [33] |
| Video 19 | 320 × 240 | 25 | 180 | no | negsVideo11.1073 [33] |
| Video 20 | 352 × 288 | 24.46 | 272 | no | negsVideo13.1075 [33] |
| Video 21 | 1600 × 1200 | 18.51 | 196 | no | negsVideo16.1077 [33] |

| K | Ground Truth | Fire Segmentation | FPS |
|---|---|---|---|
| 2 |  |  | 27.71 |
| 3 |  |  | 18.87 |
| 4 |  |  | 17.07 |
| 5 |  |  | 13.64 |

| Color Space | Kernel | Accuracy (%) |
|---|---|---|
| RGB | RBF | 56.08 |
| RGB | Polynomial | 52.98 |
| RGB | Linear | 83.50 |
| HSV | RBF | 65.56 |
| HSV | Polynomial | 52.37 |
| HSV | Linear | 81.64 |
| YCbCr | RBF | 75.05 |
| YCbCr | Polynomial | 52.98 |
| YCbCr | Linear | 81.93 |

| Color Space | Number of Trees | Accuracy (%) |
|---|---|---|
| RGB | 10 | 85.77 |
| RGB | 20 | 86.18 |
| RGB | 30 | 87.21 |
| RGB | 40 | 87.83 |
| RGB | 50 | 88.24 |
| HSV | 10 | 91.75 |
| HSV | 20 | 92.16 |
| HSV | 30 | 91.95 |
| HSV | 40 | 91.13 |
| HSV | 50 | 91.13 |
| YCbCr | 10 | 90.11 |
| YCbCr | 20 | 90.72 |
| YCbCr | 30 | 90.92 |
| YCbCr | 40 | 92.15 |
| YCbCr | 50 | 91.95 |

| Video | Proposed Method TPR (%) | Proposed Method FNR (%) | R-CNN TPR (%) | R-CNN FNR (%) | Torabian [36] TPR (%) | Torabian [36] FNR (%) | Töreyin [10] TPR (%) | Töreyin [10] FNR (%) | Ko [34] TPR (%) | Ko [34] FNR (%) | Truong [35] TPR (%) | Truong [35] FNR (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Video 1 | 100 | 0 | 100 | 0 | 100 | 0 | 34 | 0 | 55.2 | 2 | 94.98 | 5.02 |
| Video 2 | 100 | 0 | 100 | 0 | 100 | 0 | 87.5 | 4.9 | 77.7 | 0 | - | - |
| Video 3 | 100 | 0 | 95.12 | 4.88 | 100 | 0 | 73.7 | 10 | 97.9 | 0 | 95 | 5 |
| Video 4 | 100 | 0 | 95.19 | 4.81 | - | - | - | - | - | - | - | - |
| Video 5 | 100 | 0 | 97 | 3 | - | - | - | - | - | - | - | - |
| Video 6 | 92.17 | 7.83 | 99.18 | 0.82 | - | - | - | - | - | - | - | - |
| Video 7 | 100 | 0 | 63.25 | 36.75 | - | - | - | - | - | - | - | - |
| Video 8 | 98.61 | 1.39 | 76.26 | 23.74 | - | - | - | - | - | - | - | - |
| Video 9 | 26.67 | 73.33 | 84.58 | 15.42 | 100 | 0 | 51.2 | 44.1 | 56.3 | 0 | 93 | 7 |
| Video 10 | 98.62 | 1.38 | 100 | 0 | - | - | - | - | - | - | - | - |
| Video 11 | 74.75 | 25.25 | 60.4 | 39.6 | - | - | - | - | - | - | - | - |
| Video 12 | 88.76 | 11.24 | 95.51 | 4.49 | - | - | - | - | - | - | - | - |
| Average | 89.97 | 10.03 | 88.87 | 11.13 | 100 | 0 | 61.6 | 14.75 | 71.78 | 0.5 | 94.33 | 5.67 |

| Video | Proposed Method TNR (%) | Proposed Method FPR (%) | R-CNN TNR (%) | R-CNN FPR (%) |
|---|---|---|---|---|
| Video 13 | 27.74 | 72.26 | 12.26 | 87.74 |
| Video 14 | 20.62 | 79.38 | 21.88 | 78.12 |
| Video 15 | 38.72 | 61.28 | 3.42 | 96.58 |
| Video 16 | 33.46 | 66.54 | 4.99 | 95.01 |
| Video 17 | 21.71 | 78.29 | 23.1 | 76.9 |
| Video 18 | 34.55 | 65.45 | 0 | 100 |
| Video 19 | 59.44 | 40.56 | 97.22 | 2.78 |
| Video 20 | 66.42 | 33.58 | 2.95 | 97.05 |
| Video 21 | 45.92 | 54.08 | 2.04 | 97.96 |
| Average | 38.73 | 61.27 | 18.65 | 81.35 |
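The frame-based rates in the tables above can be read as per-video percentages of frames flagged as fire. The following is a minimal sketch of that bookkeeping, assuming each video is labeled as a whole (fire in every frame, or in none, as in the Fire column of the dataset table). The function name `frame_based_rates` and the sample predictions are hypothetical and do not reproduce the paper's exact protocol.

```python
def frame_based_rates(frame_predictions, video_has_fire):
    """Per-video frame-based rates in percent.

    frame_predictions: one boolean per frame, True if the detector reported fire.
    video_has_fire: True for a fire video, False for a non-fire video
        (assumption: the label applies to every frame of the video).
    """
    total = len(frame_predictions)
    if total == 0:
        raise ValueError("video has no frames")
    positive_pct = 100.0 * sum(1 for p in frame_predictions if p) / total
    negative_pct = 100.0 - positive_pct
    if video_has_fire:
        # Fire video: a detection is a true positive, a miss is a false negative.
        return {"TPR": positive_pct, "FNR": negative_pct}
    # Non-fire video: a detection is a false positive, silence is a true negative.
    return {"TNR": negative_pct, "FPR": positive_pct}


# Made-up predictions for a 10-frame fire video and a 4-frame non-fire video.
fire_video = frame_based_rates([True] * 9 + [False], video_has_fire=True)
non_fire_video = frame_based_rates([False, False, True, False], video_has_fire=False)
print(fire_video)      # {'TPR': 90.0, 'FNR': 10.0}
print(non_fire_video)  # {'TNR': 75.0, 'FPR': 25.0}
```

Under this reading, the two rates for a given video always sum to 100, which matches the paired columns in both tables.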

| Video | Proposed Method Avg. IoU | Proposed Method FPS | R-CNN Avg. IoU | R-CNN FPS |
|---|---|---|---|---|
| Video 1 | 0.5 | 23.63 | 0.3 | 1.47 |
| Video 2 | 0.26 | 21.75 | 0.07 | 2.64 |
| Video 3 | 0.09 | 18.42 | 0.02 | 2.69 |
| Video 4 | 0.31 | 21.84 | 0.53 | 2.68 |
| Video 5 | 0.38 | 19.60 | 0.14 | 2.67 |
| Video 6 | 0.63 | 23.36 | 0.38 | 2.74 |
| Video 7 | 0.29 | 19.60 | 0.16 | 2.64 |
| Video 8 | 0.34 | 22.25 | 0.31 | 2.63 |
| Video 9 | 0.23 | 26.99 | 0.31 | 2.44 |
| Video 10 | 0.19 | 19.38 | 0.16 | 2.61 |
| Video 11 | 0.24 | 22.77 | 0.26 | 2.57 |
| Video 12 | 0.43 | 20.87 | 0.41 | 2.60 |
| Average | 0.32 | 21.7 | 0.26 | 2.53 |
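The location-based figures above are average IoU values per video. As a minimal sketch of how such a score can be computed, the code below assumes one axis-aligned bounding box per frame in `(x1, y1, x2, y2)` form and assigns a score of 0 to frames with no detection. Both the box format and that scoring choice are assumptions for illustration, as are the function names `iou` and `average_iou`.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero if the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def average_iou(detections, ground_truths):
    """Mean IoU over frames; a frame whose detection is None scores 0."""
    scores = [
        iou(det, gt) if det is not None else 0.0
        for det, gt in zip(detections, ground_truths)
    ]
    return sum(scores) / len(scores) if scores else 0.0


# Made-up example: a perfect match in frame 1, a missed detection in frame 2.
video_score = average_iou(
    [(0, 0, 10, 10), None],
    [(0, 0, 10, 10), (0, 0, 5, 5)],
)
print(video_score)  # 0.5
```

Averaging per frame in this way penalizes both poorly placed boxes and missed frames, so a video can have a high frame-based TPR and still a low average IoU.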

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wahyono; Harjoko, A.; Dharmawan, A.; Adhinata, F.D.; Kosala, G.; Jo, K.-H. Real-Time Forest Fire Detection Framework Based on Artificial Intelligence Using Color Probability Model and Motion Feature Analysis. Fire 2022, 5, 23. https://doi.org/10.3390/fire5010023
