Development of a System for Recognising and Classifying Motor Activity to Control an Upper-Limb Exoskeleton

Abstract

1. Introduction

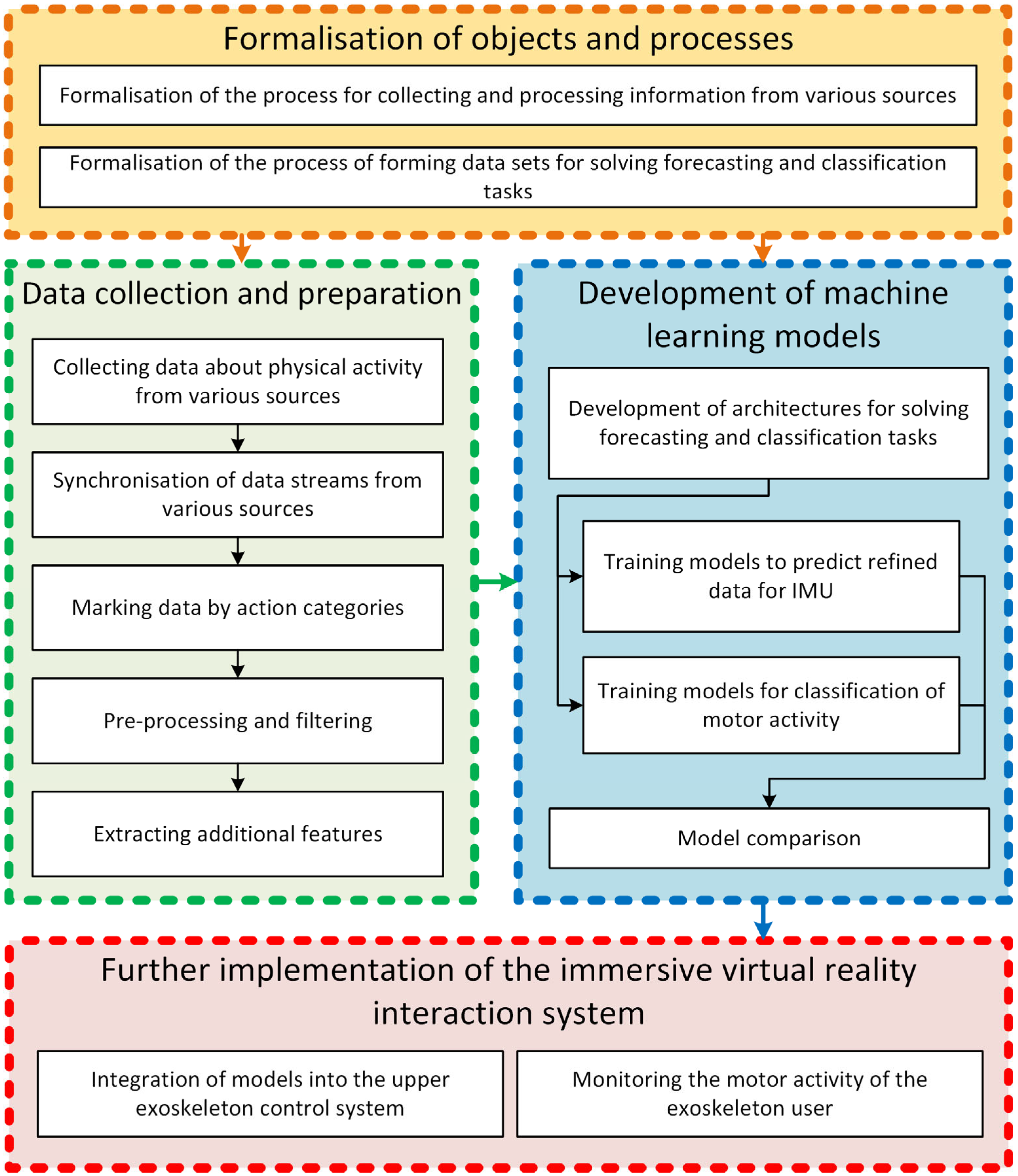

- Development of theoretical foundations and practical tools aimed at combining data from different tracking systems to form a comprehensive dataset of the user’s hand motor activity;

- Development and comparison of machine learning models to optimally solve the problem of predicting the main parameters of motor activity: hand position, rotation angle, motion vector, and motion type classification;

- Development of an algorithm to integrate the trained optimal machine learning model to form a digital shadow of the hand motor activity process for further use in virtual simulators and exoskeleton systems.

- Development and formalisation of the theoretical foundations for processing multi-sensor data on a user’s motor activity within a virtual training environment, including procedures for synchronising data from heterogeneous sources, thereby standardising data collection and processing and enabling reproducible comparison of different sensor-source combinations;

- Investigation of how individual components of the multi-sensor dataset, used to train various machine learning models, affect the final accuracy of regression and classification tasks, which makes it possible to justify replacing some data sources with others that are simpler or more user-friendly within exoskeleton systems;

- Formalisation of an algorithm for integrating trained models into the control loop of an upper-limb exoskeleton embedded in virtual training and rehabilitation systems, which constitutes an important theoretical step toward the subsequent implementation of control systems operating on multi-sensor data.

2. Related Works

3. Materials and Methods

- Synchronisation of data streams from various sources into a single set, taking into account time stamps;

- Division of a large amount of information into time segments (ranges) through automatic or manual tagging. In the latter case, manual tagging tools need to be implemented, and a video stream can serve as the main source of information for the analyst doing the tagging. By analysing the actions in separate fragments of the video, the analyst indicates the time range of the current fragment and links it to the corresponding data from other sources;

- Preprocessing of data using appropriate filters. This stage is most relevant for IMU data, where additional transformations are performed to remove high-frequency noise and baseline drift;

- Extraction of additional attributes from the raw data to obtain additional metrics. This could be the power spectral density (PSD) of the signal for EMG or the calculated speed and position of the sensor for IMU.
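The attribute-extraction step can be illustrated with a short sketch. It estimates the PSD of one EMG channel with a plain periodogram and derives speed and position from IMU acceleration by cumulative summation. The function names, sampling rates, and synthetic signals are assumptions made for illustration and are not taken from the study.

```python
import numpy as np

def periodogram_psd(signal, fs):
    """One-sided periodogram PSD estimate of a 1-D signal sampled at fs Hz."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                        # remove the DC offset
    n = x.size
    psd = np.abs(np.fft.rfft(x)) ** 2 / (fs * n)
    # Fold the negative frequencies into the positive ones
    # (the DC bin and, for even n, the Nyquist bin are not doubled).
    if n % 2 == 0:
        psd[1:-1] *= 2.0
    else:
        psd[1:] *= 2.0
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    return freqs, psd

def integrate_acceleration(acc, fs):
    """Rectangular integration: acceleration -> velocity -> position."""
    dt = 1.0 / fs
    velocity = np.cumsum(acc, axis=0) * dt
    position = np.cumsum(velocity, axis=0) * dt
    return velocity, position

# Hypothetical EMG channel: a 60 Hz tone sampled at 1000 Hz for 2 s.
fs_emg = 1000.0
t = np.arange(2000) / fs_emg
emg = np.sin(2 * np.pi * 60.0 * t)
freqs, psd = periodogram_psd(emg, fs_emg)
peak_hz = freqs[np.argmax(psd)]

# Hypothetical IMU: constant 1 m/s^2 on three axes, 100 Hz, 2 s.
fs_imu = 100.0
acc = np.ones((200, 3))
vel, pos = integrate_acceleration(acc, fs_imu)
```

Double integration of this kind accumulates error over time, which is the IMU positioning error that the regression task described below is intended to reduce.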

- Prediction of the refined positions of human arm segments based on the initial input data using reference data from VR trackers as output data. This will reduce IMU positioning errors through the use of machine learning models. This approach to improving accuracy has been successfully tested in previous studies [32];

- Classification of the user’s hand movements according to several categories based on input data analysis. Within the scope of this task, it is also of great interest to study the influence of the number of sources on movement classification accuracy.

- Development of several alternative machine learning model architectures, which will allow for the objective comparison of different approaches and their effectiveness in the context of the tasks being solved;

- Preparation of an experimental setup that reproduces as closely as possible the conditions of an immersive system based on an upper-limb exoskeleton, and organisation of data collection from the necessary sources;

- Creation of software tools for synchronising and tagging data from selected data sources.

- Use of processed and refined data on motor activity as a component of the control action to regulate the position of the upper-limb exoskeleton;

- Monitoring and classification of the motor activity of the exoskeleton user in order to log the operations they perform;

- Tracking of the position of the hands to check the safety of the user’s current state and to avoid emergencies.

3.1. Research Methodology

3.2. Formalisation of Data Handling Processes

- Data synchronisation is used to eliminate time offsets between sensors by aligning the initial time stamps of the samples from different sensors within each recording session. Before a session begins, the positions of the sensors are calibrated and a single reference time is set, which further reduces noise.

- Use of filters to reduce noise. For IMU data, a combination of the Kalman filter and the Madgwick orientation filter is used, which has proven highly effective. For other sources, filters or conversion procedures may be applied as needed.

- Selection of the length of the scanning window as an input dimension, which varies for each data source and is significantly smaller than the duration of each measurement, allowing the action to be classified based on a data fragment. The disadvantage of this approach is that the time sequence corresponding to the movement will not be fully analysed; only a small part of it will, which may negatively affect accuracy. Additionally, the window dimension for each source will differ, as it is directly proportional to the recording frequency.
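A minimal sketch of the scanning-window approach, assuming each source is stored as a NumPy array of shape (samples, features). The window duration, step, sampling rates, and feature counts are hypothetical; only the proportionality between window size and recording frequency comes from the text.

```python
import numpy as np

def window_length(fs_hz, window_s):
    """Number of samples in a window of window_s seconds at fs_hz."""
    return int(round(fs_hz * window_s))

def sliding_windows(data, fs_hz, window_s, step_s):
    """Cut a (samples, features) array into overlapping windows."""
    data = np.asarray(data)
    size = window_length(fs_hz, window_s)
    step = max(1, int(round(fs_hz * step_s)))
    if data.shape[0] < size:
        raise ValueError("recording is shorter than one window")
    starts = range(0, data.shape[0] - size + 1, step)
    return np.stack([data[s:s + size] for s in starts])

# Hypothetical sources: (sampling rate in Hz, number of features).
sources = {"emg": (240.0, 6), "imu": (100.0, 9), "cv": (30.0, 4)}
duration_s = 5.0

windows = {}
for name, (fs, n_features) in sources.items():
    recording = np.zeros((int(fs * duration_s), n_features))
    # The same 0.5 s window covers a different number of rows per source.
    windows[name] = sliding_windows(recording, fs, window_s=0.5, step_s=0.2)
```

Each source yields the same number of windows, but the window dimension differs (120, 50, and 15 rows here), so a model fed with raw windows needs a separate input branch per source.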

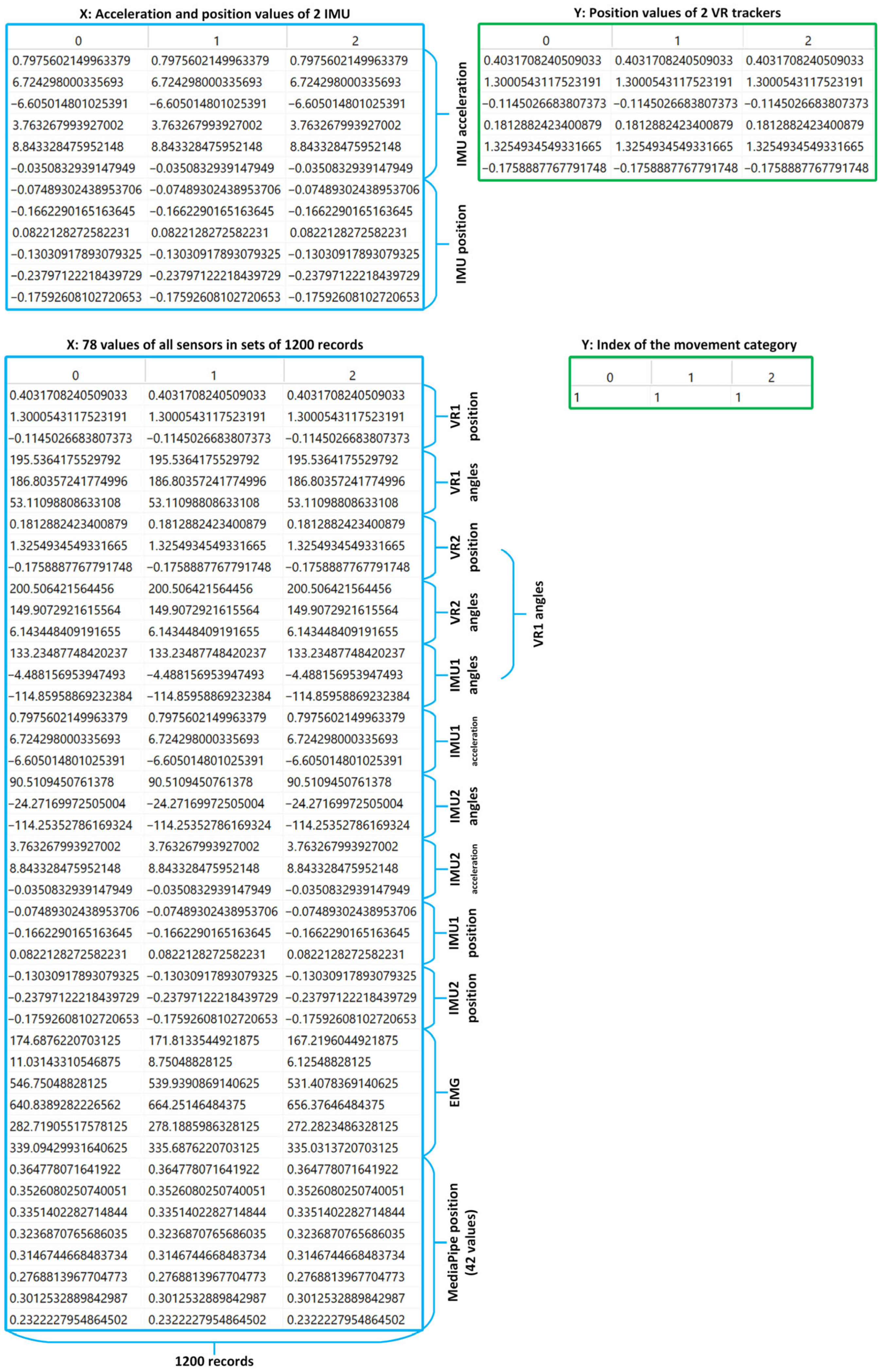

- Alignment of the input data sizes to a single length by approximation, upsampling every source to the highest frequency among all sources. As a result, all data are represented as matrices with the same frequency, namely the highest one (the unified rate allν = ν_EMG in Algorithm 1). The downside is that the amount of data increases significantly (for example, up to 8.3 times for computer vision). The advantages are as follows: all data are synchronised, so measurements from different sources can be compared index by index (this can be used in problem 1 when predicting refined values); and all data from the current measurement can be combined into a single matrix in which the number of rows equals the largest number of records from any source in the current measurement and the number of columns equals the total number of features from all sources.
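The alignment can be sketched as follows, assuming linear interpolation as the approximation method and a shared time origin after synchronisation. The sampling rates, feature counts, and function names are hypothetical.

```python
import numpy as np

def resample_to_rate(data, fs_src, fs_target, duration_s):
    """Linearly interpolate a (samples, features) array onto an fs_target grid."""
    data = np.asarray(data, dtype=float)
    t_src = np.arange(data.shape[0]) / fs_src
    n_target = int(round(duration_s * fs_target))
    t_target = np.arange(n_target) / fs_target
    # np.interp holds the last value for target times beyond the last source sample.
    columns = [np.interp(t_target, t_src, data[:, j]) for j in range(data.shape[1])]
    return np.column_stack(columns)

def align_sources(sources, rates, duration_s):
    """Upsample every source to the highest rate and join the features column-wise."""
    fs_max = max(rates.values())
    aligned = [resample_to_rate(sources[name], rates[name], fs_max, duration_s)
               for name in sources]
    return np.hstack(aligned)

# Hypothetical 5 s measurement from three sources.
rates = {"emg": 240.0, "imu": 100.0, "cv": 30.0}
rng = np.random.default_rng(0)
sources = {
    "emg": rng.normal(size=(1200, 6)),
    "imu": rng.normal(size=(500, 9)),
    "cv": rng.normal(size=(150, 4)),
}
matrix = align_sources(sources, rates, duration_s=5.0)
```

The resulting matrix has one row per sample of the fastest source and one column per feature, so row i refers to the same instant in every source.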

3.3. Development of Machine Learning Models

3.4. Evaluating the Quality of Trained Models

- Cross-entropy loss used as a loss function in neural network training:

- Classification accuracy (proportion of correctly predicted classes):

- Average Precision:

- Average Recall:

- F1-score (harmonic average Precision and Recall):
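For reference, the standard definitions of these metrics are given below, assuming macro-averaging over the classes. The notation is introduced here for illustration: N is the number of samples, K the number of classes, y_{ik} the one-hot ground-truth indicator, p_{ik} the predicted probability of class k for sample i, c_i and \hat{c}_i the true and predicted class labels, and TP_k, FP_k, FN_k the per-class counts of true positives, false positives, and false negatives.

```latex
\begin{align}
L_{CE} &= -\frac{1}{N}\sum_{i=1}^{N}\sum_{k=1}^{K} y_{ik}\,\log p_{ik}, \\
\mathrm{Accuracy} &= \frac{1}{N}\sum_{i=1}^{N} \mathbb{1}\left[\hat{c}_i = c_i\right], \\
\mathrm{Precision} &= \frac{1}{K}\sum_{k=1}^{K} \frac{TP_k}{TP_k + FP_k}, \\
\mathrm{Recall} &= \frac{1}{K}\sum_{k=1}^{K} \frac{TP_k}{TP_k + FN_k}, \\
F_1 &= \frac{2\cdot\mathrm{Precision}\cdot\mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}.
\end{align}
```

An alternative convention computes F1 per class and then averages; the two variants generally give slightly different values.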

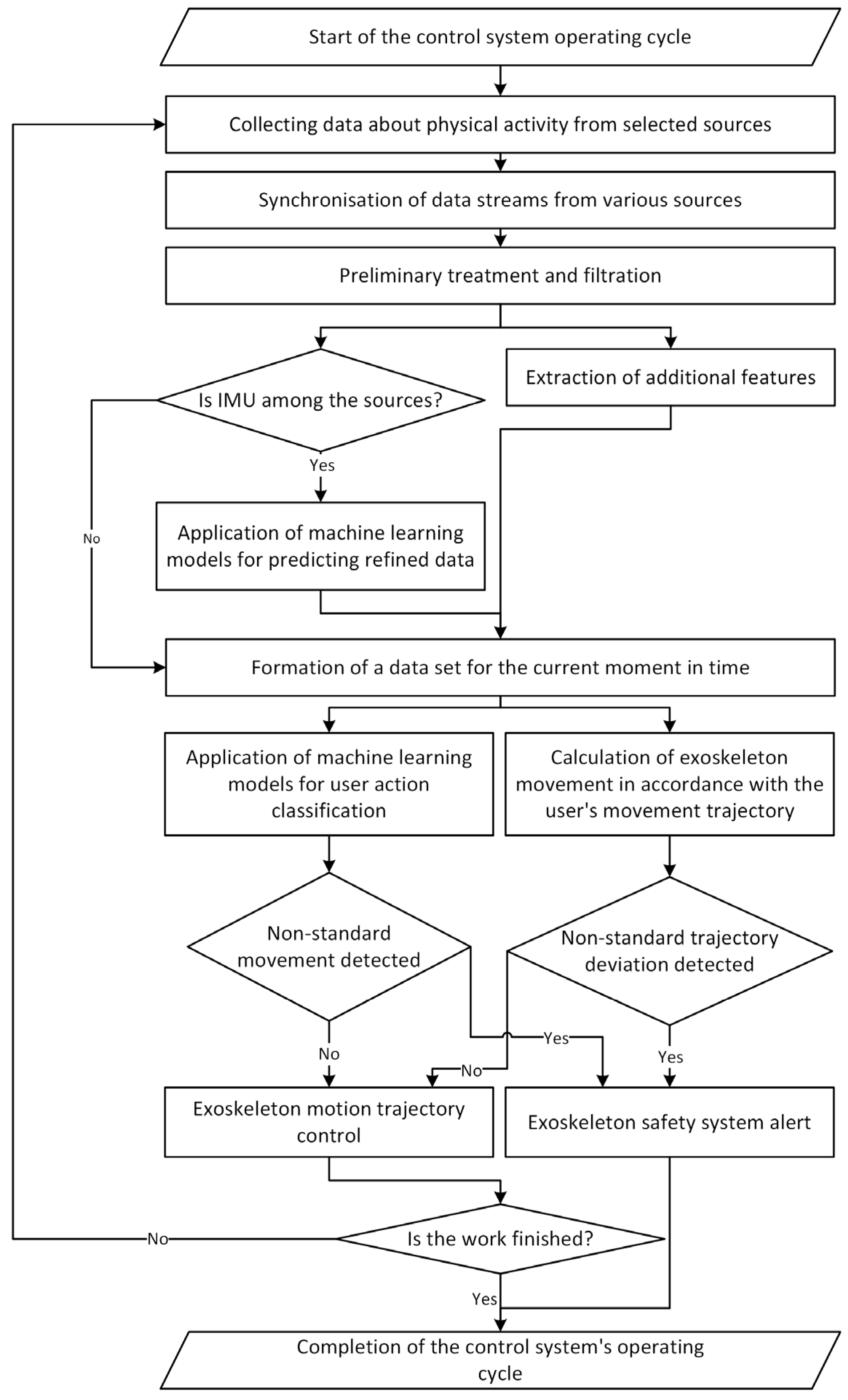

3.5. Algorithm for Integrating Trained Models for Upper Exoskeleton Control

Algorithm 1. UpperExoControlCycle

    INPUT:
      S = {s_EMG, s_IMU, s_CV, s_VR}    // set of sensors
      AllowedClasses = {C1, …, Ck}      // permissible action categories
      Δt                                // control-loop period
      allT = 5 s                        // window length
      allν = ν_EMG                      // unified sampling rate after resampling
      ML1_abs, ML1_inc                  // regressors: absolute coordinates and increments
      ML2_cls_abs, ML2_cls_rel          // classifiers: using absolute or normalised data
      Thresholds_traj                   // tolerances for trajectory/velocity/angles
    STATE:
      Buffer_raw ← ∅                    // circular buffer of packets r ∈ R
      Mode ← NORMAL
    LOOP every Δt while SystemActive:
      // 1. Packet acquisition and transfer (φ, ϕ)
      NewPackets ← AcquireFromSensors(S)
      Buffer_raw ← Buffer_raw ∪ Transfer(NewPackets)
      // 2. Preprocessing (γ): synchronisation, filtering, alignment
      E ← Preprocess(Buffer_raw)
      // 3. Window/rate balancing → forming U-arrays
      U1 ← Build_U1(E, allT, allν)              // unified per-measurement data
      U2 ← Extract_IMU_and_Kinematics(U1)       // IMU + computed poses
      U3 ← GlobalAlign_AllSources(U1)           // second alignment across the entire dataset
      U4 ← Diff_IMU_Positions(U2)               // IMU displacements
      U5 ← Normalise_Relative(U3)               // normalising the first row of the record
      // 4. Choosing the regression scheme
      if UseAbsoluteRegression:
        Pose_hat ← ML1_abs.predict(U2)          // predict Y1
      else:
        ΔPose_hat ← ML1_inc.predict(U4)         // predict Y3
        Pose_hat ← IntegratePose(ΔPose_hat)
      // 5. Feature construction for classification
      if UseRelativeClassification:
        X_cls ← U5
        ML2_cls ← ML2_cls_rel
      else:
        X_cls ← U3
        ML2_cls ← ML2_cls_abs
      // 6. Action classification
      Prob ← ML2_cls.predict_proba(X_cls)
      Action_hat ← argmax(Prob)
      // 7. Safety check
      if Action_hat ∉ AllowedClasses OR TrajectoryDeviation(Pose_hat, Thresholds_traj):
        EmergencyStop()
        Mode ← EMERGENCY
        Log(Action_hat, Pose_hat, "STOP")
        continue LOOP
      // 8. Control command computation
      Cmd ← ComputeCommands(Pose_hat, Action_hat)
      SendToActuators(Cmd)
      Log(Action_hat, Pose_hat, "OK")
      // 9. Latency monitoring and resource degradation
      if LatencyExceeded() OR MissingCriticalSensors():
        ApplyFallback(FallbackModels)
        EmergencyStop()
        Mode ← EMERGENCY
    END LOOP
    END
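The safety check in step 7 of Algorithm 1 can be sketched in Python as below. The function name, the Euclidean deviation measure, the reference pose, and the numeric tolerance are assumptions for illustration; Algorithm 1 only specifies that a disallowed class or a trajectory deviation triggers an emergency stop.

```python
import numpy as np

def safety_check(prob, allowed_classes, pose_hat, pose_ref, max_deviation):
    """Return (action, is_safe) for one control cycle.

    prob            -- class probabilities from the classifier
    allowed_classes -- set of permitted class indices
    pose_hat        -- predicted hand position
    pose_ref        -- expected position on the planned trajectory (hypothetical)
    max_deviation   -- tolerance on the Euclidean distance (hypothetical)
    """
    action = int(np.argmax(prob))
    deviation = float(np.linalg.norm(np.asarray(pose_hat) - np.asarray(pose_ref)))
    is_safe = action in allowed_classes and deviation <= max_deviation
    return action, is_safe

allowed = {0, 1, 2}
reference = [0.31, 0.10, 0.50]

# Allowed class, pose within tolerance: control commands may be sent.
ok = safety_check([0.1, 0.8, 0.1, 0.0], allowed, [0.30, 0.10, 0.50], reference, 0.05)
# Class 3 is not permitted: the cycle should end in an emergency stop.
bad_class = safety_check([0.0, 0.1, 0.1, 0.8], allowed, [0.30, 0.10, 0.50], reference, 0.05)
# Allowed class, but the pose is far from the reference trajectory.
bad_pose = safety_check([0.1, 0.8, 0.1, 0.0], allowed, [0.60, 0.10, 0.50], reference, 0.05)
```

Keeping the check as a pure function of the predictions makes it straightforward to test independently of the actuators.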

4. Results

4.1. Experimental Setup and Data Preparation

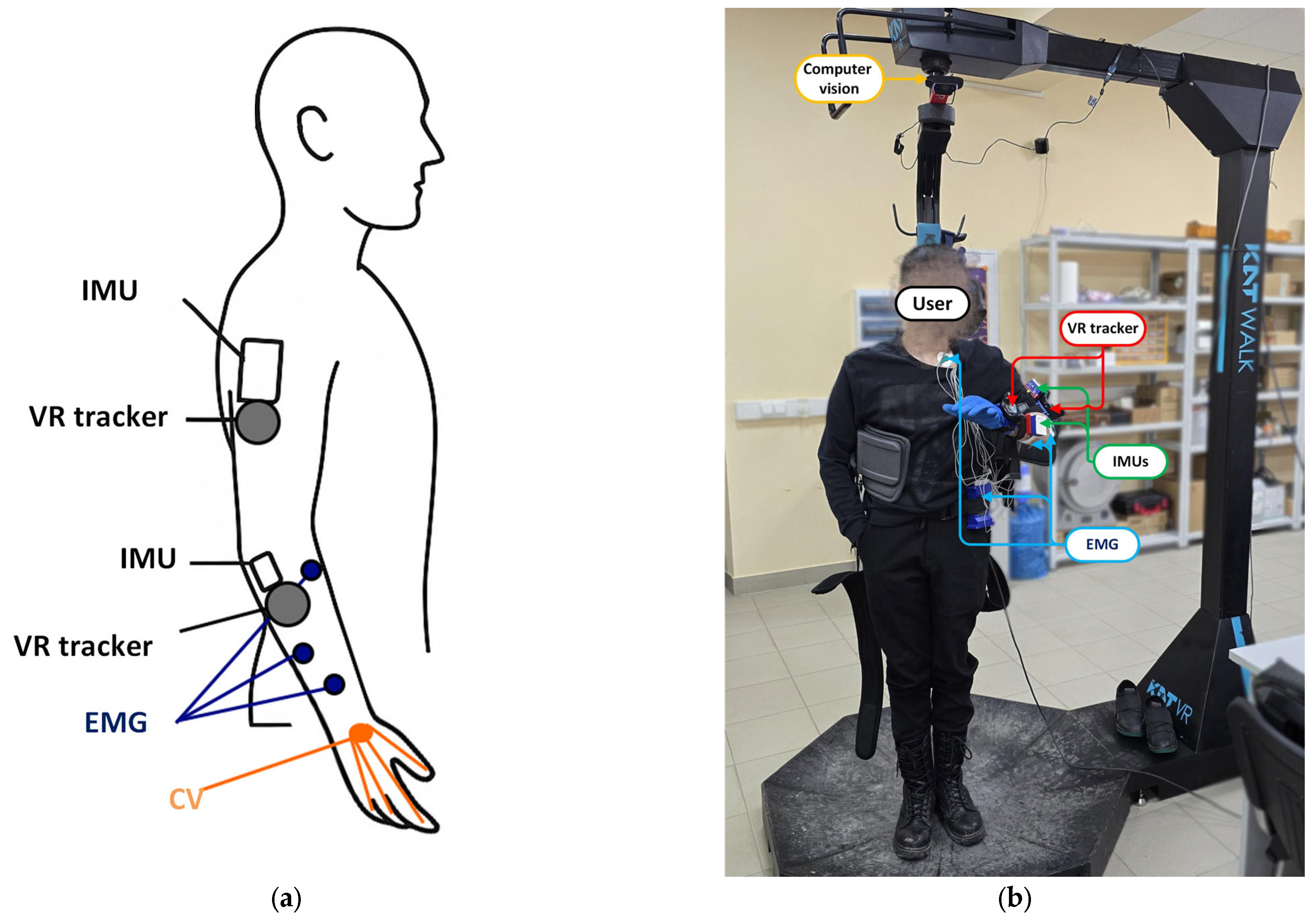

- (1) Preparation of software for synchronous data recording. Python and the libraries supplied for the equipment in use were employed. Each source logged the measurement time, which later made it possible to synchronise measurements from sources with different sampling frequencies.

- (2) Equipment preparation and sensor calibration. As a mandatory procedure, EMG sensors, trackers, and IMUs were attached to each participant at predetermined positions and then calibrated to minimise error accumulation. The sensor placement diagram and an illustrative excerpt from the experimental study are presented in Figure 5. The following tracking hardware was used for data acquisition: an IMU module based on the MPU-9250 with an ESP32 controller (TDK InvenSense, San Jose, CA, USA), HTC Vive trackers (HTC Corporation, Taoyuan, Taiwan), a Logitech HD Pro C920 camera (Logitech International S.A., Lausanne, Switzerland), and a six-channel EMG sensor (Wuxi Sizhirui Technology Co., Wuxi, China) built on an STM32 microcontroller (STMicroelectronics, Shanghai, China).

- (3)

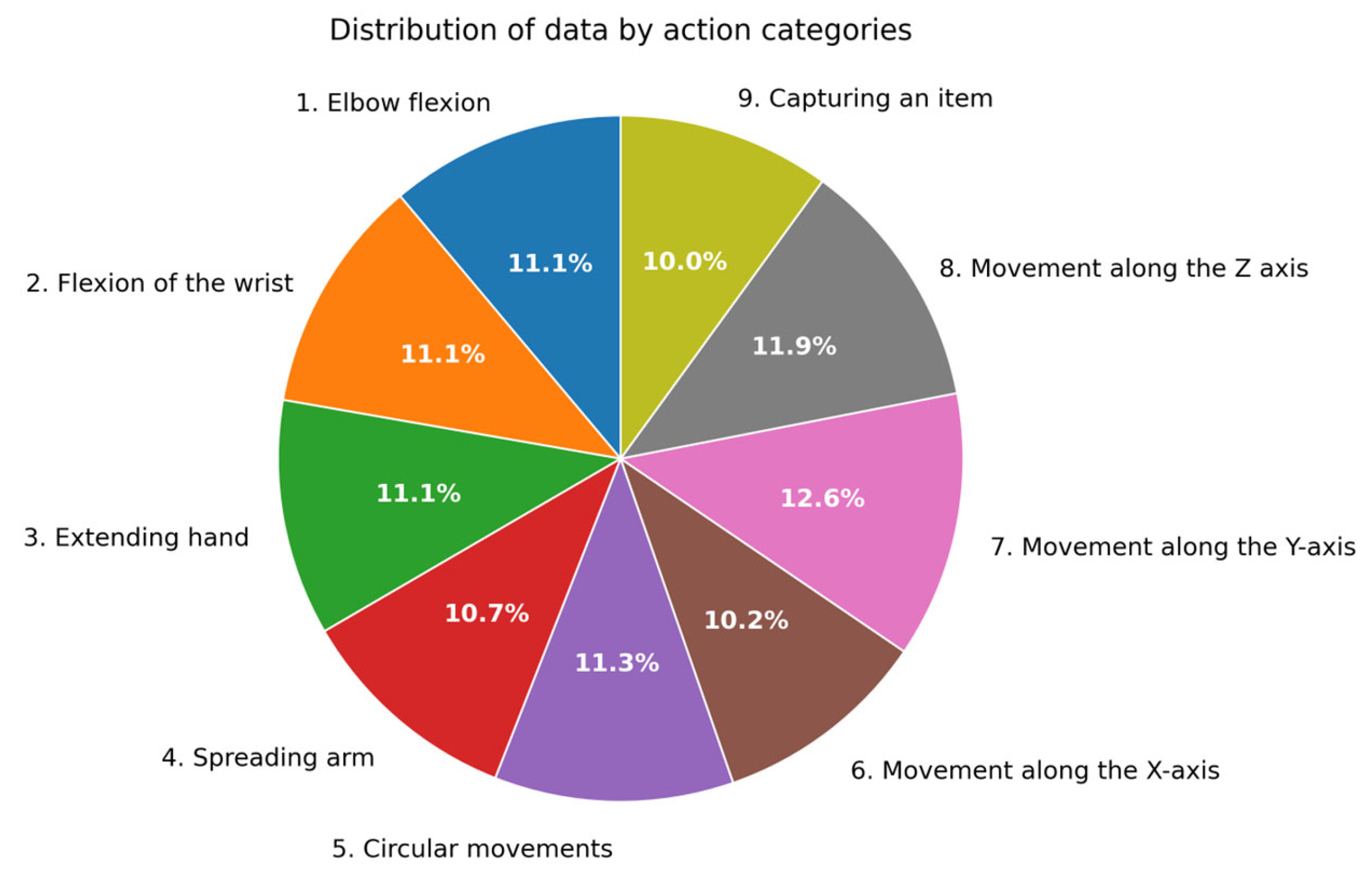

- Data collection. Participants performed a set of specified movements, presented in Table 2, including elbow flexion/extension, circular hand movements, and movements along various axes. Each movement was repeated several times to obtain a sufficient amount of data.

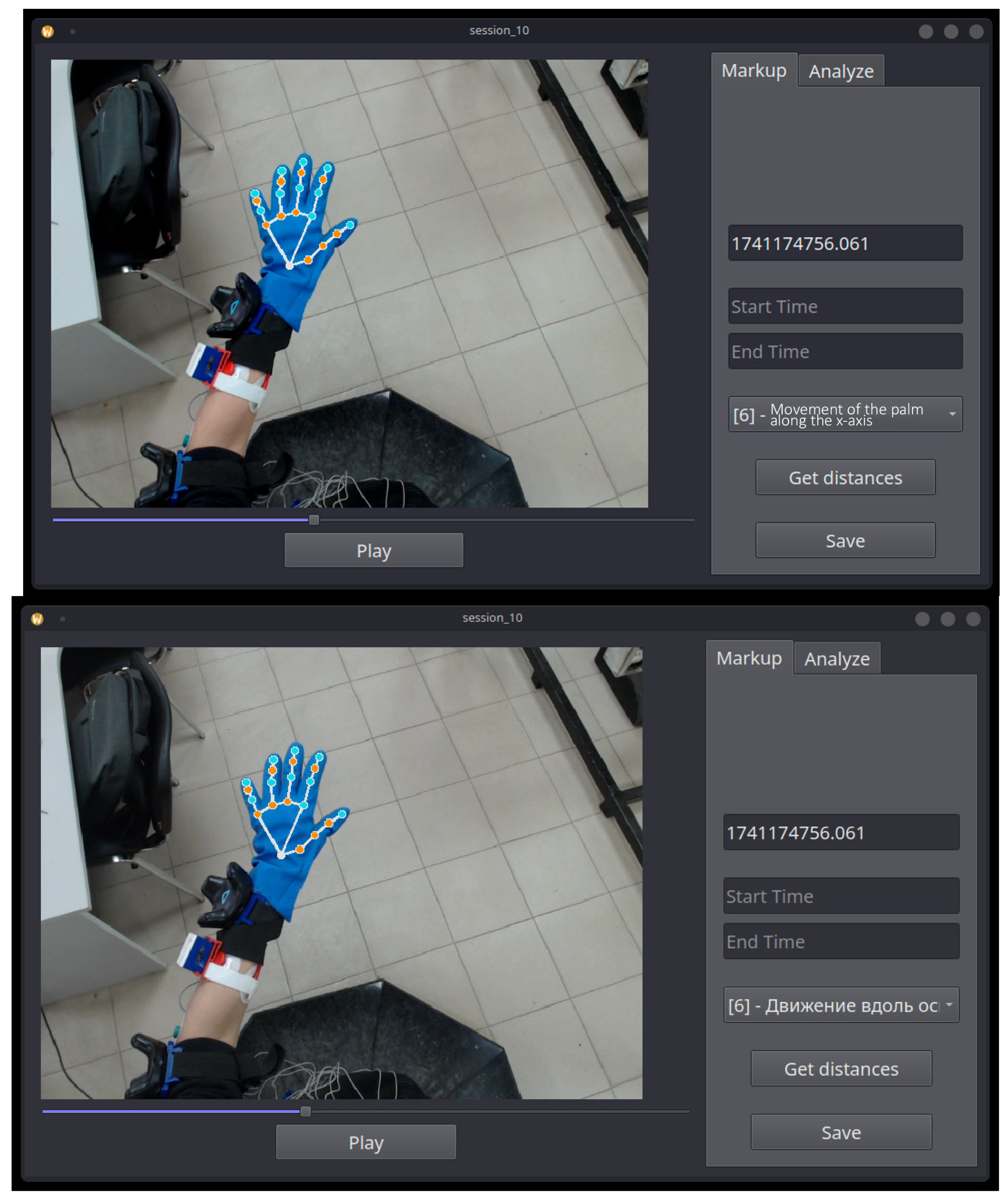

- (4) Data annotation. The data were annotated with software developed by the team, shown in Figure 6. The software allows the user to create sessions for recording individual exercises, visually track recorded movements on video, and record the start and end times of movements for subsequent data extraction. During annotation, data are extracted synchronously from all sources according to the start and end times selected by the annotator. Each fragment is saved as a separate file in CSV format.

- (5) Signal processing. Noise is filtered with band-pass filters, after which a single synchronised sample is formed from sources arriving at different frequencies and in different formats. At this stage, all data are combined on a single time scale, which is then stretched to a fixed duration of 5 s (the most common movement length), so that every record has a uniform length of 1200 rows before being fed into the models. Thus, the presented software not only provides visual data annotation but also implements an algorithm for temporal alignment of streams with different sampling rates. All annotated signals are brought to a fixed window length, which makes it possible to form a feature matrix of a unified size for any combination of sensors.

- (6) Model training. Based on the selected features and synchronised data, the model architectures presented above were trained to solve the regression problem (IMU position to VR tracker position) and the classification problem. This stage includes an ablation study of the classification models to identify the influence of individual data sources on accuracy. Model training was performed on the following hardware: AMD Ryzen 9 7950X, NVIDIA RTX 4070 Ti Super (16 GB), 128 GB RAM, and an SSD drive.
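The signal-processing step (5) can be sketched as follows, assuming a zero-phase Butterworth band-pass filter from SciPy and linear interpolation for the stretch to 1200 rows. The cut-off frequencies, filter order, sampling rate, and synthetic signal are hypothetical; the 1200-row record length is taken from the text.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

def bandpass(data, fs_hz, low_hz, high_hz, order=4):
    """Zero-phase Butterworth band-pass filter applied along the time axis."""
    sos = butter(order, [low_hz, high_hz], btype="bandpass", fs=fs_hz, output="sos")
    return sosfiltfilt(sos, data, axis=0)

def stretch_to_rows(data, n_rows=1200):
    """Stretch a (samples, features) fragment to n_rows by linear interpolation."""
    data = np.asarray(data, dtype=float)
    old = np.linspace(0.0, 1.0, data.shape[0])
    new = np.linspace(0.0, 1.0, n_rows)
    return np.column_stack([np.interp(new, old, data[:, j])
                            for j in range(data.shape[1])])

# Hypothetical 3.2 s fragment at 240 Hz: a 50 Hz component on a constant offset.
fs = 240.0
t = np.arange(768) / fs
raw = (1.0 + np.sin(2 * np.pi * 50.0 * t))[:, None]

filtered = bandpass(raw, fs, low_hz=20.0, high_hz=100.0)  # removes the offset
record = stretch_to_rows(filtered)                        # fixed-size model input
```

Stretching changes the apparent speed of shorter or longer movements, so the original fragment duration may be worth keeping as a separate feature.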

4.2. Structure of the Collected Data

4.3. Comparing Models When Solving Regression Problems

4.4. Ablation Model Research

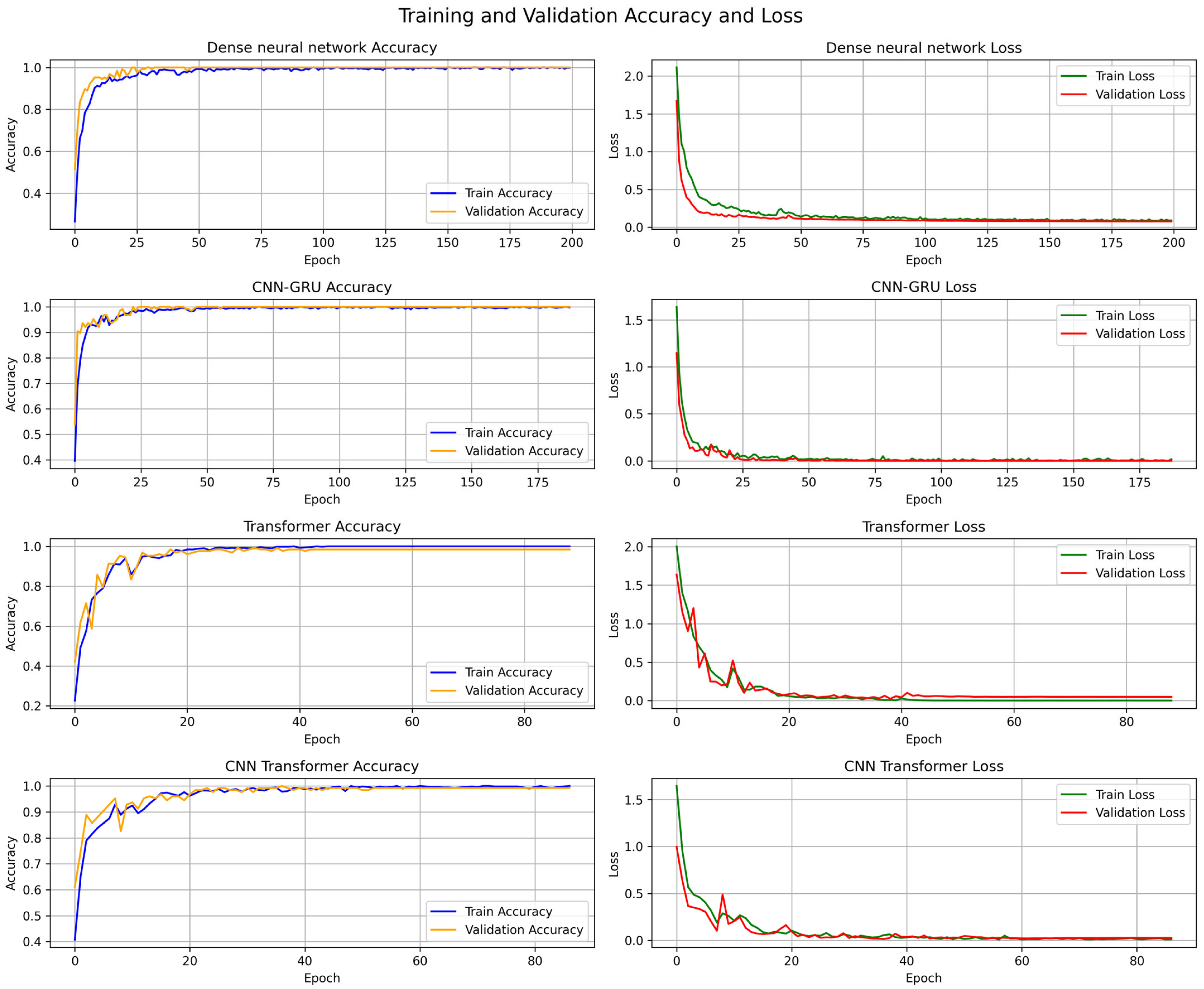

4.5. Comparison of Classification Algorithms

4.6. Comparison with Previous Studies

5. Discussion

5.1. Analysis of the Obtained Results

- A formalised protocol for collecting and synchronising data for upper-limb exoskeletons, enabling unified analysis window lengths, sampling rates, and feature–matrix structures, thereby facilitating the comparison of models and sensor sets in current and future studies;

- A universal algorithm for integrating machine learning models into the exoskeleton control loop, accounting for user safety requirements and enabling improved positioning accuracy when using IMUs through a regression model;

- An investigation of the minimally sufficient set of sensors for classifying the user’s motor activity, which will simplify and reduce the cost of the upper-exoskeleton control system without sacrificing tracking quality and monitoring accuracy.

5.2. Limitations of the Study and Directions for Further Work

- Expansion of the set of exercises for classification. The set of movements used in the classification task should be significantly expanded. In addition, a class of "other" actions that do not belong to any of the analysed ones needs to be introduced. It would also be productive to take the probability of an action into account, since actions are not always unambiguous during movement and should be identified by degree of probability rather than by strict category.

- Expansion of the number of tracking sources. Increasing the amount of source information about motor activity will have a positive effect on the accuracy of classification and the effectiveness of the monitoring system. For example, integrating an external camera with a depth sensor that covers the entire height of a person will allow key points of the entire body model to be recognised in three dimensions, while the use of EEG or ECG sensors will provide additional information about the physiological state of a person during activities, and strain or pressure sensors will provide an additional assessment of hand movements in space.

- Identification of features in the analysed signals. In addition to directly expanding the number of information sources, it is necessary to deepen the analysis and processing of raw data in order to obtain additional knowledge from the information already available. For EMG, it is advisable to calculate time–frequency features, such as the mean and median frequencies of the spectrum, power spectral density, signal entropy, fractal dimension, and so on. Similarly, for IMU data, it is possible to calculate linear and angular velocities, and, for computer vision systems, the velocity of individual body segments, together with recognition of additional body segments and their positions. An expanded set of features can increase the sensitivity of the system and its versatility in situations where processing the raw data with a model is not effective.

- Accounting for signal variability and model adaptability to human parameters. This limitation applies particularly to tracking systems such as EMG (and likewise ECG and EEG sensors), since the amplitude, shape, and spectrum of EMG signals vary significantly due to differences in anatomy, fitness level, skin temperature, and electrode placement. Without taking this variability into account, the model tends to overfit and lose accuracy after a long session or when the user changes. It is necessary to implement continuous calibration mechanisms: adaptive scaling of signals over a sliding window, normalisation taking into account the current level, and retraining strategies that allow the network weights to be adjusted for a new person. This study considered normalisation to the initial values of each measurement, but it did not show the desired effectiveness in practice. Adapting models to a specific user is an extremely promising direction for future research.

- Accounting for external factors. In real-world conditions, sensors are affected by various kinds of interference, including electromagnetic noise from the mains and equipment (affects EMG), metal structures and collisions with them (may affect IMU and VR sensors), and variable lighting and non-uniform backgrounds (affect computer vision systems). To increase robustness, additional multi-stage filtering and processing of raw data are required. For computer vision systems, control of exposure, contrast, and viewing angle is also necessary. In this regard, ensemble decision-making methods trained under various environmental conditions may be applied in the future, which can increase resistance to unpredictable interference.

- Expansion of the participant sample. The study analysed data from healthy users, and the sample size is rather limited. Although the collected data made it possible to confirm the proposed hypothesis and to train the models effectively, expanding the sample—including participants from different age groups and patients with musculoskeletal disorders—is highly promising. This will allow us to verify the transferability of the models to movement analysis in cases of limited mobility, as well as ensuring the applicability of the hardware–software complex based on a controlled exoskeleton and the trained models to rehabilitation tasks.
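Two of the calibration options named above, normalisation to the initial values of a measurement and adaptive scaling over a sliding window, can be sketched as follows. The window length and the synthetic signals are hypothetical; subtracting the first row and using a trailing z-score are one possible reading of these options, not the procedure used in the study.

```python
import numpy as np

def normalise_to_initial(data):
    """Subtract the first row so that every measurement starts at zero."""
    data = np.asarray(data, dtype=float)
    return data - data[0]

def sliding_zscore(data, window, eps=1e-8):
    """Scale each sample by the mean and std of the trailing `window` samples."""
    data = np.asarray(data, dtype=float)
    out = np.empty_like(data)
    for i in range(data.shape[0]):
        chunk = data[max(0, i - window + 1): i + 1]
        out[i] = (data[i] - chunk.mean(axis=0)) / (chunk.std(axis=0) + eps)
    return out

# Two hypothetical users producing the same waveform with different amplitude.
t = np.arange(500) / 100.0
user_a = np.sin(2 * np.pi * t)[:, None]
user_b = 5.0 * user_a

scaled_a = sliding_zscore(user_a, window=50)
scaled_b = sliding_zscore(user_b, window=50)   # close to scaled_a despite the 5x gain
shifted = normalise_to_initial(user_b + 3.0)   # the constant offset is removed
```

The sliding z-score removes the amplitude difference between the two synthetic users, whereas normalisation to the initial values only removes a constant offset.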

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Damaševičius, R.; Sidekerskienė, T. Virtual Worlds for Learning in Metaverse: A Narrative Review. Sustainability 2024, 16, 2032.

- [2] Kim, Y.M.; Rhiu, I.; Yun, M.H. A systematic review of a virtual reality system from the perspective of user experience. Int. J. Hum.–Comput. Interact. 2020, 36, 893–910.

- [3] Dritsas, E.; Trigka, M.; Troussas, C.; Mylonas, P. Multimodal Interaction, Interfaces, and Communication: A Survey. Multimodal Technol. Interact. 2025, 9, 6.

- [4] Vélez-Guerrero, M.A.; Callejas-Cuervo, M.; Mazzoleni, S. Artificial Intelligence-Based Wearable Robotic Exoskeletons for Upper Limb Rehabilitation: A Review. Sensors 2021, 21, 2146.

- [5] Tiboni, M.; Borboni, A.; Vérité, F.; Bregoli, C.; Amici, C. Sensors and Actuation Technologies in Exoskeletons: A Review. Sensors 2022, 22, 884.

- [6] Kyrarini, M.; Lygerakis, F.; Rajavenkatanarayanan, A.; Sevastopoulos, C.; Nambiappan, H.R.; Chaitanya, K.K.; Babu, A.R.; Mathew, J.; Makedon, F. A Survey of Robots in Healthcare. Technologies 2021, 9, 8.

- [7] Vélez-Guerrero, M.A.; Callejas-Cuervo, M.; Mazzoleni, S. Design, Development, and Testing of an Intelligent Wearable Robotic Exoskeleton Prototype for Upper Limb Rehabilitation. Sensors 2021, 21, 5411.

- [8] Islam, M.M.; Nooruddin, S.; Karray, F.; Muhammad, G. Human activity recognition using tools of convolutional neural networks: A state of the art review, data sets, challenges, and future prospects. Comput. Biol. Med. 2022, 149, 106060.

- [9] Obukhov, A.; Volkov, A.; Pchelintsev, A.; Nazarova, A.; Teselkin, D.; Surkova, E.; Fedorchuk, I. Examination of the Accuracy of Movement Tracking Systems for Monitoring Exercise for Musculoskeletal Rehabilitation. Sensors 2023, 23, 8058.

- [10] Udeozor, C.; Toyoda, R.; Russo Abegão, F.; Glassey, J. Perceptions of the Use of Virtual Reality Games for Chemical Engineering Education and Professional Training. High. Educ. Pedagog. 2021, 6, 175–194.

- [11] Wanying, L.; Shuwei, L.; Qi, S. Robot-Assisted Upper Limb Rehabilitation Training Pose Capture Based on Optical Motion Capture. Int. J. Adv. Manuf. Technol. 2024, 121, 1–12.

- [12] Bhujel, S.; Hasan, S.K. A Comparative Study of End-Effector and Exoskeleton Type Rehabilitation Robots in Human Upper Extremity Rehabilitation. Hum.–Intell. Syst. Integr. 2023, 5, 11–42.

- [13] Mani Bharathi, V.; Manimegalai, P.; George, T.; Pamela, D. A Systematic Review of Techniques and Clinical Evidence to Adopt Virtual Reality in Post-Stroke Upper Limb Rehabilitation. Virtual Real. 2024, 28, 172.

- [14] Ödemiş, E.; Baysal, C.V.; İnci, M. Patient Performance Assessment Methods for Upper Extremity Rehabilitation in Assist-as-Needed Therapy Strategies: A Comprehensive Review. Med. Biol. Eng. Comput. 2025, 63, 1895–1914.

- [15] Cha, K.; Wang, J.; Li, Y.; Shen, L.; Chen, Z.; Long, J. A Novel Upper-Limb Tracking System in a Virtual Environment for Stroke Rehabilitation. J. NeuroEng. Rehabil. 2021, 18, 166.

- [16] Li, R.T.; Kling, S.R.; Salata, M.J.; Cupp, S.A.; Sheehan, J.; Voos, J.E. Wearable Performance Devices in Sports Medicine. Sports Health 2016, 8, 74–78.

- [17] Li, Y.; Zheng, L.; Wang, X. Flexible and Wearable Healthcare Sensors for Visual Reality Health-Monitoring. Virtual Real. Intell. Hardw. 2019, 1, 411–427.

- [18] Tsilomitrou, O.; Gkountas, K.; Evangeliou, N.; Dermatas, E. Wireless Motion Capture System for Upper Limb Rehabilitation. Appl. Syst. Innov. 2021, 4, 14.

- [19] Yang, Y.; Weng, D.; Li, D.; Xun, H. An improved method of pose estimation for lighthouse base station extension. Sensors 2017, 17, 2411.

- [20] Ergun, B.G.; Şahiner, R. Embodiment in Virtual Reality and Augmented Reality Games: An Investigation on User Interface Haptic Controllers. J. Soft Comput. Artif. Intell. 2023, 4, 80–92.

- [21] Franček, P.; Jambrošić, K.; Horvat, M.; Planinec, V. The Performance of Inertial Measurement Unit Sensors on Various Hardware Platforms for Binaural Head-Tracking Applications. Sensors 2023, 23, 872.

- [22] Ghorbani, F.; Ahmadi, A.; Kia, M.; Rahman, Q.; Delrobaei, M. A Decision-Aware Ambient Assisted Living System with IoT Embedded Device for In-Home Monitoring of Older Adults. Sensors 2023, 23, 2673.

- [23] Eliseichev, E.A.; Mikhailov, V.V.; Borovitskiy, I.V.; Zhilin, R.M.; Senatorova, E.O. A Review of Devices for Detection of Muscle Activity by Surface Electromyography. Biomed. Eng. 2022, 56, 69–74.

- [24] Nguyen, V.T.; Lu, T.-F.; Grimshaw, P.; Robertson, W. A Novel Approach for Human Intention Recognition Based on Hall Effect Sensors and Permanent Magnets. Prog. Electromagn. Res. M 2020, 92, 55–65.

- [25] Chung, J.-L.; Ong, L.-Y.; Leow, M.-C. Comparative Analysis of Skeleton-Based Human Pose Estimation. Future Internet 2022, 14, 380.

- [26] Obukhov, A.; Dedov, D.; Volkov, A.; Teselkin, D. Modeling of Nonlinear Dynamic Processes of Human Movement in Virtual Reality Based on Digital Shadows. Computation 2023, 11, 85.

- [27] Zhang, S.; Li, Y.; Zhang, S.; Shahabi, F.; Xia, S.; Deng, Y.; Alshurafa, N. Deep Learning in Human Activity Recognition with Wearable Sensors: A Review on Advances. Sensors 2022, 22, 1476.

- [28] Lin, J.-J.; Hsu, C.-K.; Hsu, W.-L.; Tsao, T.-C.; Wang, F.-C.; Yen, J.-Y. Machine Learning for Human Motion Intention Detection. Sensors 2023, 23, 7203.

- [29] Gonzales-Huisa, O.A.; Oshiro, G.; Abarca, V.E.; Chavez-Echajaya, J.G.; Elias, D.A. EMG and IMU Data Fusion for Locomotion Mode Classification in Transtibial Amputees. Prosthesis 2023, 5, 1232–1256.

- [30] Marcos Mazon, D.; Groefsema, M.; Schomaker, L.R.B.; Carloni, R. IMU-Based Classification of Locomotion Modes, Transitions, and Gait Phases with Convolutional Recurrent Neural Networks. Sensors 2022, 22, 8871.

- [31] Vásconez, J.P.; Barona López, L.I.; Valdivieso Caraguay, Á.L.; Benalcázar, M.E. Hand Gesture Recognition Using EMG-IMU Signals and Deep Q-Networks. Sensors 2022, 22, 9613.

- [32] Obukhov, A.; Dedov, D.; Volkov, A.; Rybachok, M. Technology for Improving the Accuracy of Predicting the Position and Speed of Human Movement Based on Machine Learning Models. Technologies 2025, 13, 101.

- [33] Mishra, R.; Mishra, A.K.; Choudhary, B.S. High-Speed Motion Analysis-Based Machine Learning Models for Prediction and Simulation of Flyrock in Surface Mines. Appl. Sci. 2023, 13, 9906.

- [34] Kwon, B.; Son, H. Accurate Path Loss Prediction Using a Neural Network Ensemble Method. Sensors 2024, 24, 304.

- [35] Lee, J.; Suh, J. A Study on Systematic Improvement of Transformer Models for Object Pose Estimation. Sensors 2025, 25, 1227.

- [36] Sulla-Torres, J.; Calla Gamboa, A.; Avendaño Llanque, C.; Angulo Osorio, J.; Zúñiga Carnero, M. Classification of Motor Competence in Schoolchildren Using Wearable Technology and Machine Learning with Hyperparameter Optimization. Appl. Sci. 2024, 14, 707.

- [37] Stančić, I.; Musić, J.; Grujić, T.; Vasić, M.K.; Bonković, M. Comparison and Evaluation of Machine Learning-Based Classification of Hand Gestures Captured by Inertial Sensors. Computation 2022, 10, 159.

- [38] Samkari, E.; Arif, M.; Alghamdi, M.; Al Ghamdi, M.A. Human Pose Estimation Using Deep Learning: A Systematic Literature Review. Mach. Learn. Knowl. Extr. 2023, 5, 1612–1659.

- [39] Ogundokun, R.O.; Maskeliūnas, R.; Misra, S.; Damasevicius, R. Hybrid InceptionV3-SVM-Based Approach for Human Posture Detection in Health Monitoring Systems. Algorithms 2022, 15, 410.

- [40] Farhadpour, S.; Warner, T.A.; Maxwell, A.E. Selecting and Interpreting Multiclass Loss and Accuracy Assessment Metrics for Classifications with Class Imbalance: Guidance and Best Practices. Remote Sens. 2024, 16, 533.

- [41] Jiang, Y.; Song, L.; Zhang, J.; Song, Y.; Yan, M. Multi-Category Gesture Recognition Modeling Based on sEMG and IMU Signals. Sensors 2022, 22, 5855.

- [42] Bangaru, S.S.; Wang, C.; Aghazadeh, F. Data Quality and Reliability Assessment of Wearable EMG and IMU Sensor for Construction Activity Recognition. Sensors 2020, 20, 5264.

- [43] Valdivieso Caraguay, Á.L.; Vásconez, J.P.; Barona López, L.I.; Benalcázar, M.E. Recognition of Hand Gestures Based on EMG Signals with Deep and Double-Deep Q-Networks. Sensors 2023, 23, 3905.

- [44] Bai, A.; Song, H.; Wu, Y.; Dong, S.; Feng, G.; Jin, H. Sliding-Window CNN + Channel-Time Attention Transformer Network Trained with Inertial Measurement Units and Surface Electromyography Data for the Prediction of Muscle Activation and Motion Dynamics Leveraging IMU-Only Wearables for Home-Based Shoulder Rehabilitation. Sensors 2025, 25, 1275.

- [45] Jeon, H.; Choi, H.; Noh, D.; Kim, T.; Lee, D. Wearable Inertial Sensor-Based Hand-Guiding Gestures Recognition Method Robust to Significant Changes in the Body-Alignment of Subject. Mathematics 2022, 10, 4753.

- [46] Toro-Ossaba, A.; Jaramillo-Tigreros, J.; Tejada, J.C.; Peña, A.; López-González, A.; Castanho, R.A. LSTM Recurrent Neural Network for Hand Gesture Recognition Using EMG Signals. Appl. Sci. 2022, 12, 9700.

- [47] Hassan, N.; Miah, A.S.M.; Shin, J. A Deep Bidirectional LSTM Model Enhanced by Transfer-Learning-Based Feature Extraction for Dynamic Human Activity Recognition. Appl. Sci. 2024, 14, 603.

- [48] Lin, W.-C.; Tu, Y.-C.; Lin, H.-Y.; Tseng, M.-H. A Comparison of Deep Learning Techniques for Pose Recognition in Up-and-Go Pole Walking Exercises Using Skeleton Images and Feature Data. Electronics 2025, 14, 1075.

- [49] Kamali Mohammadzadeh, A.; Alinezhad, E.; Masoud, S. Neural-Network-Driven Intention Recognition for Enhanced Human–Robot Interaction: A Virtual-Reality-Driven Approach. Machines 2025, 13, 414.

- [50] Zhao, Z.; Wang, J.; Wang, S.; Wang, R.; Lu, Y.; Yuan, Y.; Chen, J.; Dai, Y.; Liu, Y.; Wang, X.; et al. Multimodal Sensing in Stroke Motor Rehabilitation. Adv. Sens. Res. 2023, 2, 2200055.

- [51] Lauer-Schmaltz, M.W.; Cash, P.; Hansen, J.P.; Das, N. Human Digital Twins in Rehabilitation: A Case Study on Exoskeleton and Serious-Game-Based Stroke Rehabilitation Using the ETHICA Methodology. IEEE Access 2024, 12, 180968–180991.

- [52] Cellupica, A.; Cirelli, M.; Saggio, G.; Gruppioni, E.; Valentini, P.P. An Interactive Digital-Twin Model for Virtual Reality Environments to Train in the Use of a Sensorized Upper-Limb Prosthesis. Algorithms 2024, 17, 35.

- [53] Fu, J.; Choudhury, R.; Hosseini, S.M.; Simpson, R.; Park, J.-H. Myoelectric Control Systems for Upper Limb Wearable Robotic Exoskeletons and Exosuits—A Systematic Review. Sensors 2022, 22, 8134.

| Source | Brief Description of the Study | Main Findings |
|---|---|---|
| Wanying et al. (2024) [11] | Method for capturing human posture for robotic rehabilitation of upper limbs using optical motion capture. | An optical method based on markers placed on the human body was used to capture movements. The collected data on the user’s hand movements were used to control the manipulator motors, with the trajectories of the human and manipulator movements corresponding to each other with a specified level of accuracy. |
| Bhujel et al. (2023) [12] | Comparison of manipulators and exoskeletons of various designs for upper-limb rehabilitation. | Exoskeletons only track the body segments to which they are attached, which makes it difficult to track, for example, fingers. Depth cameras and inertial sensors need to be integrated for more accurate tracking of the entire body. |
| Mani Bharathi et al. (2024) [13] | Systematic review of the use of VR in upper-limb rehabilitation after stroke. | The review includes an analysis of the tracking systems used, including specialised gloves, motion sensors, mobile devices, inertial sensors, cameras, and computer vision. |
| Ödemiş et al. (2025) [14] | Methods for assessing the effectiveness of upper-limb rehabilitation. | Approaches to assessing the effectiveness of rehabilitation using various methods are considered: positional error analysis, assessment of the strength of interaction with a robot, electromyography, electroencephalography (EEG), exercise performance effectiveness, physiological signals (pulse, galvanic skin response). |
| Cha et al. (2021) [15] | Development of a virtual reality rehabilitation system for restoring upper-limb function. | A computer vision-based tracking system is considered, which allows for analysing precise finger movements and the amplitude of the entire arm’s movement, and for matching the user’s upper-limb movements with those of the avatar. |
| Li et al. (2016) [16] | The use of wearable devices to measure training effectiveness in sports medicine. | Wearable devices in sports medicine (accelerometers, heart rate monitors, GPS devices, and other sensors) are analysed to track exercise performance, analyse movements, and prevent sports injuries. |
| Li et al. (2019) [17] | The use of wearable sensors for health monitoring in VR. | EEG, heart rate, temperature, ECG, EMG, pressure, and other wearable sensors are used for health monitoring in VR, which highlights the need to generate comprehensive information about the user’s physiological indicators. |
| Tsilomitrou et al. (2021) [18] | Wireless capture system for upper-limb rehabilitation. | Combining multiple IMUs to monitor patient progress during rehabilitation exercises. Merging data from sensors to construct a kinematic chain with 7 degrees of freedom. |

| Exercise Number | Description |
|---|---|
| 1 | Flexion and extension of the arm at the elbow |
| 2 | Flexion and extension of the wrist |
| 3 | Reaching forward with one’s hand |
| 4 | Spreading the arm out to the side and bringing it back to the chest |
| 5 | Circular movements of the arm along the body |
| 6 | Movement of the palm in front of the body along the x-axis |
| 7 | Movement of the palm in front of the body along the y-axis |
| 8 | Movement of the palm in front of the body along the z-axis |
| 9 | Imitation of grasping an object with an outstretched arm and moving it towards the chest |

| Model | MAE | MSE | Time |
|---|---|---|---|
| 1.1 Linear Regression | 0.071169 | 0.009196 | 1.45 × 10⁻⁴ |
| 1.2 ElasticNet Regression | 0.092258 | 0.013951 | 8.46 × 10⁻⁵ |
| 1.3 RANSAC Regressor | 0.126859 | 0.032174 | 9.36 × 10⁻⁵ |
| 1.4 Theil–Sen Regressor | 0.072374 | 0.009491 | 0.633186 |
| 1.5 Decision Tree Regressor | 0.029349 | 0.002572 | 2.00 × 10⁻⁴ |
| 1.6 Random Forest Regressor | 0.002181 | 4.43 × 10⁻⁵ | 0.013973 |
| 1.7 AdaBoost | 0.067673 | 0.008036 | 0.345653 |
| 1.8 Gradient Boosting Regressor | 0.041770 | 0.003961 | 0.036809 |
| 1.9 XGBRegressor | 0.027777 | 0.001940 | 5.00 × 10⁻⁴ |
| 1.10 K-Nearest Neighbors Regressor | 0.006458 | 4.00 × 10⁻⁴ | 0.009008 |
| 1.11 Dense NN | 0.028895 | 0.002276 | 0.920696 |
| 1.12 Transformer | 0.092324 | 0.013956 | 0.433021 |
| 1.13 CNN–Transformer | 0.022941 | 0.001387 | 2.043763 |

| Model | MAE | MSE | Time |
|---|---|---|---|
| 1.1 Linear Regression | 4.75 × 10⁻⁴ | 6.96 × 10⁻⁶ | 1.56 × 10⁻⁴ |
| 1.2 ElasticNet Regression | 4.16 × 10⁻⁴ | 7.10 × 10⁻⁶ | 8.99 × 10⁻⁵ |
| 1.3 RANSAC Regressor | 4.13 × 10⁻⁴ | 7.10 × 10⁻⁶ | 9.21 × 10⁻⁵ |
| 1.4 Theil–Sen Regressor | 4.14 × 10⁻⁴ | 7.10 × 10⁻⁶ | 0.003424 |
| 1.5 Decision Tree Regressor | 4.19 × 10⁻⁴ | 7.12 × 10⁻⁶ | 1.57 × 10⁻⁴ |
| 1.6 Random Forest Regressor | 5.29 × 10⁻⁴ | 8.81 × 10⁻⁶ | 0.017663 |
| 1.7 AdaBoost | 0.003944 | 8.34 × 10⁻⁵ | 0.023176 |
| 1.8 Gradient Boosting Regressor | 4.40 × 10⁻⁴ | 7.37 × 10⁻⁶ | 0.015588 |
| 1.9 XGBRegressor | 4.85 × 10⁻⁴ | 7.98 × 10⁻⁶ | 4.18 × 10⁻⁴ |
| 1.10 K-Nearest Neighbors Regressor | 6.53 × 10⁻⁴ | 8.25 × 10⁻⁶ | 0.009248 |
| 1.11 Dense NN | 4.22 × 10⁻⁴ | 7.10 × 10⁻⁶ | 0.073274 |
| 1.12 Transformer | 4.34 × 10⁻⁴ | 7.10 × 10⁻⁶ | 0.207461 |
| 1.13 CNN–Transformer | 4.19 × 10⁻⁴ | 7.10 × 10⁻⁶ | 0.396306 |
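
As an illustration of how the MAE, MSE, and Time columns of the regression tables above could be obtained, the following minimal sketch computes the two error metrics and an average prediction time in plain Python. It is not the evaluation code used in this work: the `evaluate` helper, the `toy_model` stand-in, the scalar targets, and the choice to report time per sample are all assumptions made for the example.

```python
import time


def mean_absolute_error(y_true, y_pred):
    """Average of |y - y_hat| over all samples."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_squared_error(y_true, y_pred):
    """Average of (y - y_hat)^2 over all samples."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def evaluate(predict, x_test, y_test):
    """Return (MAE, MSE, seconds per sample) for a prediction callable.

    `predict` is a hypothetical stand-in for any trained regressor.
    """
    start = time.perf_counter()
    y_pred = [predict(x) for x in x_test]
    elapsed = time.perf_counter() - start
    return (
        mean_absolute_error(y_test, y_pred),
        mean_squared_error(y_test, y_pred),
        elapsed / len(x_test),
    )


def toy_model(x):
    """Illustrative model only: predicts y = 2x."""
    return 2.0 * x


# Made-up test data, not taken from the dataset described in this paper.
x_test = [0.0, 1.0, 2.0, 3.0]
y_test = [0.0, 2.5, 4.0, 5.0]

mae, mse, seconds = evaluate(toy_model, x_test, y_test)
print(f"MAE={mae:.4f}  MSE={mse:.4f}  time per sample={seconds:.2e} s")
```

Because MSE squares each residual, a model with a few large errors is penalised more heavily in the MSE column than in the MAE column, which is one reason the two columns can rank models differently.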

| EMG | IMUp | IMUa | CV | VRp | VRa | LoR | NNC | DT | RF | Ada | GNB | XGB | Stack | Vot | DNN | Tr | CTr | CGRU |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ✓ | 0.460 | 0.786 | 0.365 | 0.278 | 0.500 | 0.357 | 0.460 | 0.738 | 0.492 | 0.897 | 0.500 | 0.810 | 0.897 | |||||

| ✓ | 0.881 | 0.960 | 0.762 | 0.730 | 0.921 | 0.508 | 0.913 | 0.968 | 0.802 | 0.976 | 0.952 | 1.000 | 1.000 | |||||

| ✓ | 0.659 | 0.897 | 0.786 | 0.722 | 0.897 | 0.540 | 0.881 | 0.952 | 0.921 | 1.000 | 0.952 | 0.984 | 1.000 | |||||

| ✓ | 0.937 | 0.937 | 0.825 | 0.841 | 0.937 | 0.722 | 0.857 | 0.905 | 0.913 | 0.968 | 0.968 | 0.992 | 0.968 | |||||

| ✓ | 0.889 | 0.992 | 0.841 | 0.738 | 0.952 | 0.437 | 0.937 | 0.992 | 0.905 | 0.992 | 0.754 | 0.984 | 0.992 | |||||

| ✓ | 0.921 | 0.968 | 0.929 | 0.889 | 0.968 | 0.619 | 0.921 | 0.960 | 0.929 | 0.992 | 0.706 | 1.000 | 1.000 | |||||

| ✓ | ✓ | 0.460 | 0.786 | 0.722 | 0.675 | 0.913 | 0.571 | 0.873 | 0.841 | 0.786 | 0.913 | 0.714 | 0.889 | 0.937 | ||||

| ✓ | ✓ | 0.786 | 0.897 | 0.746 | 0.746 | 0.929 | 0.532 | 0.889 | 0.905 | 0.865 | 0.992 | 0.976 | 1.000 | 1.000 | ||||

| ✓ | ✓ | 0.921 | 0.968 | 0.873 | 0.865 | 0.976 | 0.643 | 0.905 | 0.984 | 0.929 | 0.984 | 0.817 | 0.992 | 0.992 | ||||

| ✓ | ✓ | ✓ | 0.698 | 0.825 | 0.746 | 0.762 | 0.921 | 0.595 | 0.873 | 0.841 | 0.802 | 0.976 | 0.921 | 0.984 | 0.976 | |||

| ✓ | ✓ | ✓ | 0.952 | 0.976 | 0.825 | 0.881 | 0.968 | 0.698 | 0.929 | 0.968 | 0.913 | 0.992 | 0.794 | 1.000 | 1.000 | |||

| ✓ | ✓ | ✓ | ✓ | 0.698 | 0.825 | 0.841 | 0.849 | 0.952 | 0.722 | 0.889 | 0.905 | 0.865 | 0.968 | 0.944 | 0.976 | 0.992 | ||

| ✓ | ✓ | ✓ | ✓ | 0.952 | 0.968 | 0.865 | 0.889 | 0.952 | 0.754 | 0.929 | 0.944 | 0.897 | 0.992 | 0.952 | 1.000 | 1.000 | ||

| ✓ | ✓ | ✓ | ✓ | ✓ | 0.698 | 0.825 | 0.794 | 0.865 | 0.968 | 0.722 | 0.881 | 0.873 | 0.817 | 0.937 | 0.921 | 0.984 | 0.992 | |

| ✓ | ✓ | ✓ | ✓ | ✓ | 0.802 | 0.857 | 0.857 | 0.810 | 0.952 | 0.770 | 0.897 | 0.889 | 0.857 | 0.992 | 0.937 | 0.984 | 1.000 | |

| ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 0.802 | 0.857 | 0.865 | 0.881 | 0.976 | 0.810 | 0.913 | 0.905 | 0.857 | 1.000 | 0.937 | 0.984 | 0.984 |

| EMG | IMUp | IMUa | CV | VRp | VRa | LoR | NNC | DT | RF | Ada | GNB | XGB | Stack | Vot | DNN | Tr | CTr | CGRU |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ✓ | 0.222 | 0.452 | 0.317 | 0.246 | 0.325 | 0.317 | 0.302 | 0.317 | 0.310 | 0.706 | 0.349 | 0.476 | 0.571 | |||||

| ✓ | 0.698 | 0.905 | 0.738 | 0.619 | 0.833 | 0.492 | 0.825 | 0.897 | 0.825 | 0.984 | 0.944 | 0.992 | 0.960 | |||||

| ✓ | 0.468 | 0.873 | 0.849 | 0.794 | 0.889 | 0.460 | 0.873 | 0.881 | 0.881 | 0.937 | 0.952 | 0.968 | 0.976 | |||||

| ✓ | 0.889 | 0.889 | 0.786 | 0.802 | 0.889 | 0.476 | 0.865 | 0.897 | 0.825 | 0.929 | 0.913 | 0.976 | 0.968 | |||||

| ✓ | 0.746 | 0.968 | 0.714 | 0.738 | 0.881 | 0.571 | 0.873 | 0.929 | 0.802 | 0.984 | 0.810 | 0.976 | 0.992 | |||||

| ✓ | 0.817 | 0.960 | 0.833 | 0.810 | 0.929 | 0.595 | 0.913 | 0.960 | 0.913 | 0.984 | 0.897 | 0.968 | 0.992 | |||||

| ✓ | ✓ | 0.587 | 0.873 | 0.802 | 0.770 | 0.913 | 0.587 | 0.873 | 0.921 | 0.849 | 0.968 | 0.984 | 0.984 | 0.984 | ||||

| ✓ | ✓ | 0.794 | 0.960 | 0.873 | 0.873 | 0.944 | 0.635 | 0.937 | 0.960 | 0.889 | 0.984 | 0.952 | 0.984 | 0.984 | ||||

| ✓ | ✓ | 0.881 | 0.960 | 0.865 | 0.921 | 0.968 | 0.698 | 0.952 | 0.952 | 0.937 | 0.984 | 0.960 | 0.992 | 0.992 | ||||

| ✓ | ✓ | ✓ | 0.841 | 0.913 | 0.833 | 0.881 | 0.968 | 0.706 | 0.937 | 0.921 | 0.929 | 0.968 | 0.960 | 0.984 | 0.984 | |||

| ✓ | ✓ | ✓ | 0.389 | 0.516 | 0.833 | 0.857 | 0.984 | 0.706 | 0.929 | 0.833 | 0.865 | 0.921 | 0.873 | 0.968 | 0.976 | |||

| ✓ | ✓ | ✓ | ✓ | 0.389 | 0.516 | 0.810 | 0.889 | 0.968 | 0.786 | 0.929 | 0.857 | 0.873 | 0.913 | 0.841 | 0.976 | 0.968 | ||

| ✓ | ✓ | ✓ | ✓ | 0.222 | 0.452 | 0.317 | 0.246 | 0.325 | 0.317 | 0.302 | 0.317 | 0.310 | 0.706 | 0.349 | 0.476 | 0.571 | ||

| ✓ | ✓ | ✓ | ✓ | ✓ | 0.698 | 0.905 | 0.738 | 0.619 | 0.833 | 0.492 | 0.825 | 0.897 | 0.825 | 0.984 | 0.944 | 0.992 | 0.960 | |

| ✓ | ✓ | ✓ | ✓ | ✓ | 0.468 | 0.873 | 0.849 | 0.794 | 0.889 | 0.460 | 0.873 | 0.881 | 0.881 | 0.937 | 0.952 | 0.968 | 0.976 | |

| ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 0.889 | 0.889 | 0.786 | 0.802 | 0.889 | 0.476 | 0.865 | 0.897 | 0.825 | 0.929 | 0.913 | 0.976 | 0.968 |
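
The two tables above report accuracy for different combinations of the six data sources. A minimal sketch of how such an ablation could be organised is given below: it enumerates combinations of source names and concatenates the per-source features of one sample. The source names are the column headers of the tables; the helper functions, the sample layout, and the feature values are hypothetical. The sketch enumerates every non-empty combination, whereas the tables report only a selection of them.

```python
from itertools import combinations

# Column names of the data sources as they appear in the tables.
SOURCES = ["EMG", "IMUp", "IMUa", "CV", "VRp", "VRa"]


def source_subsets(sources, min_size=1):
    """Yield every combination of sources with at least `min_size` members."""
    for k in range(min_size, len(sources) + 1):
        for combo in combinations(sources, k):
            yield combo


def build_features(sample, subset):
    """Concatenate the per-source feature lists of one sample for a subset."""
    features = []
    for name in subset:
        features.extend(sample[name])
    return features


# Hypothetical sample: each source contributes a short feature list.
sample = {
    "EMG": [0.1, 0.2],
    "IMUp": [1.0, 2.0, 3.0],
    "IMUa": [0.0, 0.5, 1.0],
    "CV": [0.3],
    "VRp": [4.0, 5.0, 6.0],
    "VRa": [0.7, 0.8, 0.9],
}

all_subsets = list(source_subsets(SOURCES))
vec = build_features(sample, ("EMG", "CV"))
print(len(all_subsets), "candidate combinations; EMG + CV vector:", vec)
```

In such a setup, each model would be retrained and evaluated once per subset, so that the accuracy differences between rows can be attributed to the data sources rather than to a change in the model.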

| Model | Accuracy | Precision | Recall | F1-Score | Time, s |
|---|---|---|---|---|---|
| 2.1 Logistic Regression | 0.8016 | 0.8767 | 0.8073 | 0.8148 | 0.7699 |
| 2.2 Nearest Neighbors Classification | 0.8571 | 0.8940 | 0.8614 | 0.8595 | 1.5360 |
| 2.3 Decision Tree Classifier | 0.8651 | 0.9108 | 0.8671 | 0.8746 | 0.0225 |
| 2.4 Random Forest Classifier | 0.8810 | 0.9294 | 0.8816 | 0.8867 | 0.0284 |
| 2.5 AdaBoost Classifier | 0.9762 | 0.9804 | 0.9766 | 0.9771 | 0.0487 |
| 2.6 Gaussian Naive Bayes | 0.8095 | 0.8573 | 0.8069 | 0.7936 | 0.1545 |
| 2.7 XGBClassifier | 0.9127 | 0.9511 | 0.9134 | 0.9218 | 0.4667 |
| 2.8 Stacking Classifier | 0.9048 | 0.9263 | 0.9053 | 0.9085 | 1.4746 |
| 2.9 Voting Classifier | 0.8571 | 0.9311 | 0.8584 | 0.8747 | 1.8218 |
| 2.10 Dense NN | 0.9841 | 0.9840 | 0.9835 | 0.9835 | 0.0928 |
| 2.11 Transformer | 0.9921 | 0.9926 | 0.9915 | 0.9917 | 0.2688 |
| 2.12 CNN–Transformer | 0.9444 | 0.9456 | 0.9438 | 0.9436 | 0.3683 |
| 2.13 CNN–GRU | 0.9762 | 0.9755 | 0.9756 | 0.9753 | 0.5511 |

| Model | Accuracy | Precision | Recall | F1-Score | Time, s |
|---|---|---|---|---|---|
| 2.1 Logistic Regression | 0.7857 | 0.8322 | 0.7884 | 0.7902 | 0.6984 |
| 2.2 Nearest Neighbors Classification | 0.8968 | 0.9107 | 0.8993 | 0.8986 | 0.6466 |
| 2.3 Decision Tree Classifier | 0.7460 | 0.8469 | 0.7500 | 0.7569 | 0.0193 |
| 2.4 Random Forest Classifier | 0.7460 | 0.9089 | 0.7495 | 0.7726 | 0.0248 |
| 2.5 AdaBoost Classifier | 0.9286 | 0.9517 | 0.9295 | 0.9311 | 0.0457 |
| 2.6 Gaussian Naive Bayes | 0.5317 | 0.6113 | 0.5230 | 0.5197 | 0.0103 |
| 2.7 XGBClassifier | 0.8889 | 0.9315 | 0.8886 | 0.8958 | 0.3956 |
| 2.8 Stacking Classifier | 0.9048 | 0.9432 | 0.9090 | 0.9137 | 0.4264 |
| 2.9 Voting Classifier | 0.8651 | 0.9198 | 0.8674 | 0.8775 | 0.4592 |
| 2.10 Dense NN | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 0.9878 |
| 2.11 Transformer | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.5917 |
| 2.12 CNN–Transformer | 0.9841 | 0.9847 | 0.9851 | 0.9844 | 0.3468 |
| 2.13 CNN–GRU | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 0.5062 |
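
To make the Accuracy, Precision, Recall, and F1-Score columns of the classification tables above concrete, the sketch below computes them for a multi-class problem in plain Python. It assumes macro-averaging, that is, an unweighted mean of per-class values. The averaging mode is not stated alongside these tables; the assumption is motivated only by the observation that Recall differs from Accuracy in most rows, whereas micro-averaged or support-weighted recall always equals accuracy. The function name and the toy labels are illustrative.

```python
def macro_metrics(y_true, y_pred):
    """Return (accuracy, macro precision, macro recall, macro F1)."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))

    accuracy = sum(t == p for t, p in pairs) / len(pairs)

    precisions, recalls, f1_scores = [], [], []
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        # Guard against empty denominators for classes never predicted or never present.
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        precisions.append(precision)
        recalls.append(recall)
        f1_scores.append(f1)

    n = len(labels)
    return accuracy, sum(precisions) / n, sum(recalls) / n, sum(f1_scores) / n


# Toy labels for three exercise classes (made up for the example).
y_true = [1, 1, 2, 2, 3, 3]
y_pred = [1, 2, 2, 2, 3, 1]

acc, prec, rec, f1 = macro_metrics(y_true, y_pred)
print(f"accuracy={acc:.4f} precision={prec:.4f} recall={rec:.4f} f1={f1:.4f}")
```

Here the macro F1-score is the mean of the per-class F1 values rather than the harmonic mean of the macro precision and macro recall; the two definitions generally give different numbers.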

| Study | Data Source | Model Type | Classification Accuracy |
|---|---|---|---|
| Jiang et al. (2022) [41] | EMG + IMU | LSTM–Res | 99.67% |
| Jiang et al. (2022) [41] | EMG + IMU | GRU–Res | 99.49% |
| Jiang et al. (2022) [41] | EMG + IMU | Transformer–CNN | 98.96% |
| Vásconez et al. (2022) [31] | EMG + IMU | DQN (deep Q-network) | 97.50% ± 1.13% |
| Bangaru et al. (2020) [42] | EMG + IMU | Random Forest | 98.13% |
| Valdivieso Caraguay et al. (2023) [43] | EMG | DQN | 90.37% |
| Bai et al. (2025) [44] | EMG + IMU | SWCTNet (CNN + Transformer) | 98% |
| Jeon et al. (2022) [45] | IMU | Bi-directional LSTM | 91.7% |
| Toro-Ossaba et al. (2022) [46] | EMG | LSTM | 87.29% ± 6.94% |
| Hassan et al. (2024) [47] | CV (UCF11 dataset) | Deep BiLSTM | 99.2% |
| Lin et al. (2025) [48] | CV (MediaPipe) | Swin Transformer | 99.7% |
| Kamali Mohammadzadeh et al. (2025) [49] | VR trackers | CNN–Transformer | 100% |
| Our work | IMU | Dense neural network/CNN–Transformer/CNN–GRU | 100% |
| Our work | EMG + IMU + CV + VR trackers | Transformer | 99.2% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Institute of Knowledge Innovation and Invention. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Obukhov, A.; Krasnyansky, M.; Merkuryev, Y.; Rybachok, M. Development of a System for Recognising and Classifying Motor Activity to Control an Upper-Limb Exoskeleton. Appl. Syst. Innov. 2025, 8, 114. https://doi.org/10.3390/asi8040114
