On the Use of Quality Models to Address Distinct Quality Views

Abstract

1. Introduction

- Internal quality view: The set of quality properties of the source code and documentation that are deemed to influence the behaviour and use of the software.

- External quality view: The set of quality properties that determine how the software product behaves while it operates. These properties are usually measured while the assessed software product is operational and under test.

- Quality-in-use view: The set of quality properties that determine how the user perceives software product quality, i.e., to what extent the objectives that the software is used for can be achieved.

1.1. Research Question

- RQ1: Which quality views can the identified 23 software product quality model families address?

1.2. Structure of the Study

2. Related Works

- (1) Search queries were developed and recorded for automatic document search;

- (2) Searches were carried out in six computer science digital libraries, including IEEE and ACM;

- (3) The automatic document search was complemented with manual and reference searches;

- (4) All documents returned by the searches were pre-analysed: duplicates and non-primary sources were removed; complete software product quality models were included in the investigation, whereas process quality models and partial quality models were excluded, with the reason for exclusion recorded;

- (5) Documents were assigned to the software product quality models they define, tailor or reference, which led to the creation of related document clusters, i.e., software product quality model families. Publications and model definitions stem from the academic and industrial domains, including EMISQ [19], SQUALE [20], FURPS [10,11], the SQAE and ISO9126 combination [21], and the Quality Model of Kim and Lee [15], from companies such as Air France, Siemens, Qualixo, IBM, MITRE, and Samsung;

- (6) Each document was analysed in depth to extract the terminology and concepts of each software product quality model;

- (7) Each document was assigned a score for the clarity of the publication and a score for the actuality of the publication, based on defined scoring criteria; the clarity score distinguishes whether a quality model was published in a detailed, mature state in a single publication or in smaller increments across several publications;

- (8) The quality score of a document is defined as the product of its clarity score and actuality score;

- (9) For each cluster of documents, the following indicators are defined:

  - (i) The relevance score as the sum of the quality scores of its documents;

  - (ii) The quality score average;

  - (iii) The publication time range; and

  - (iv) The 12-month average of Google Trends, called the Google Relative Search Index [22].
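The score definitions in steps (8) and (9) can be sketched in a few lines of Python. This is only an illustration: the `Document` class, the `cluster_indicators` helper, the family names and all score values are hypothetical, since the scoring criteria and score ranges are not given here.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Document:
    family: str       # model family (document cluster) the document belongs to
    clarity: float    # clarity score (hypothetical value)
    actuality: float  # actuality score (hypothetical value)

    @property
    def quality(self) -> float:
        # Step (8): quality score = clarity score * actuality score
        return self.clarity * self.actuality


def cluster_indicators(docs):
    """Return {family: (relevance score, quality score average)}."""
    by_family = {}
    for doc in docs:
        by_family.setdefault(doc.family, []).append(doc.quality)
    # Step (9)(i): relevance score = sum of the document quality scores
    # Step (9)(ii): quality score average = mean of the document quality scores
    return {
        family: (sum(scores), mean(scores))
        for family, scores in by_family.items()
    }


# Hypothetical scores, chosen only for illustration.
docs = [
    Document("Family A", clarity=5, actuality=4),
    Document("Family A", clarity=3, actuality=2),
    Document("Family B", clarity=2, actuality=5),
]
indicators = cluster_indicators(docs)
print(indicators)
```

Under these definitions the quality score average equals the relevance score divided by the number of documents in the cluster.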

| Ranking | Model Family | Relevance Score | Quality Score Average | Publication Range from 2000 | Google Relative Search Index |
|---|---|---|---|---|---|
| 1 | ISO25010 [7,23,24,25,26,27,28,29] | 130 | 16.25 | [2011; 2018] | 30.02 |
| 2 | ISO9126 [6,30,31,32,33,34,35,36,37] | 120 | 13.33 | [2000; 2017] | 53.06 |
| 3 | SQALE [17,38,39,40,41,42,43,44] | 107 | 13.38 | [2009; 2016] | 18.33 |
| 4 | Quamoco [45,46,47,48] | 90 | 22.5 | [2012; 2015] | 0 |
| 5 | EMISQ [19,49,50] | 38 | 12.67 | [2008; 2011] | 0 |
| 6 | SQUALE [20,51,52,53] | 36 | 9 | [2012; 2015] | n.a. |
| 7 | ADEQUATE [8,9] | 18 | 9 | [2005; 2009] | n.a. |
| 8 | COQUALMO [54,55] | 15 | 7.5 | [2008; 2008] | 0.21 |
| =9 | FURPS [10,11,12] | 10 | 3.33 | [2005; 2005] | 20.56 |
| =9 | SQAE and ISO9126 combination [21] | 10 | 10 | [2004; 2004] | 0 |
| =9 | Ulan et al. [56] | 10 | 10 | [2018; 2018] | n.a. |
| 10 | Kim and Lee [15] | 9 | 9 | [2009; 2009] | n.a. |
| 11 | GEQUAMO [13] | 5 | 5 | [2003; 2003] | 0 |
| 12 | McCall et al. [2,57] | 1 | 0.5 | [2002; 2002] | n.a. |
| =13 | 2D Model [58] | 0 | 0 | n.a. | n.a. |
| =13 | Boehm et al. [1] | 0 | 0 | n.a. | n.a. |
| =13 | Dromey [59] | 0 | 0 | n.a. | n.a. |
| =13 | GQM [60] | 0 | 0 | n.a. | 40.73 |
| =13 | IEEE Metrics Framework Reaffirmed in 2009 [61] | 0 | 0 | n.a. | 0 |
| =13 | Metrics Framework for Mobile Apps [62] | 0 | 0 | n.a. | 0 |
| =13 | SATC [63] | 0 | 0 | n.a. | n.a. |
| =13 | SQAE [64] | 0 | 0 | n.a. | n.a. |
| =13 | SQUID [14] | 0 | 0 | n.a. | n.a. |
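The ranking column of the table above is consistent with a dense ranking on the relevance score: families with equal scores share a rank, and the next distinct score takes the next integer. The sketch below reproduces that ordering for a subset of rows; the `dense_rank` helper is a hypothetical name, and treating the ranking as a dense ranking is an assumption inferred from the table, not a stated procedure.

```python
def dense_rank(relevance):
    """Map each family to its rank; equal scores share a rank, with no gaps."""
    distinct = sorted(set(relevance.values()), reverse=True)
    rank_of = {score: position + 1 for position, score in enumerate(distinct)}
    return {family: rank_of[score] for family, score in relevance.items()}


# Relevance scores copied from the table above (subset of rows).
relevance = {
    "ISO25010": 130,
    "ISO9126": 120,
    "SQALE": 107,
    "Quamoco": 90,
    "EMISQ": 38,
    "SQUALE": 36,
    "ADEQUATE": 18,
    "COQUALMO": 15,
    "FURPS": 10,
    "SQAE and ISO9126 combination": 10,
    "Ulan et al.": 10,
    "Kim and Lee": 9,
    "GEQUAMO": 5,
    "McCall et al.": 1,
    "Dromey": 0,
    "GQM": 0,
}
ranks = dense_rank(relevance)
for family, rank in sorted(ranks.items(), key=lambda item: item[1]):
    print(rank, family)
```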

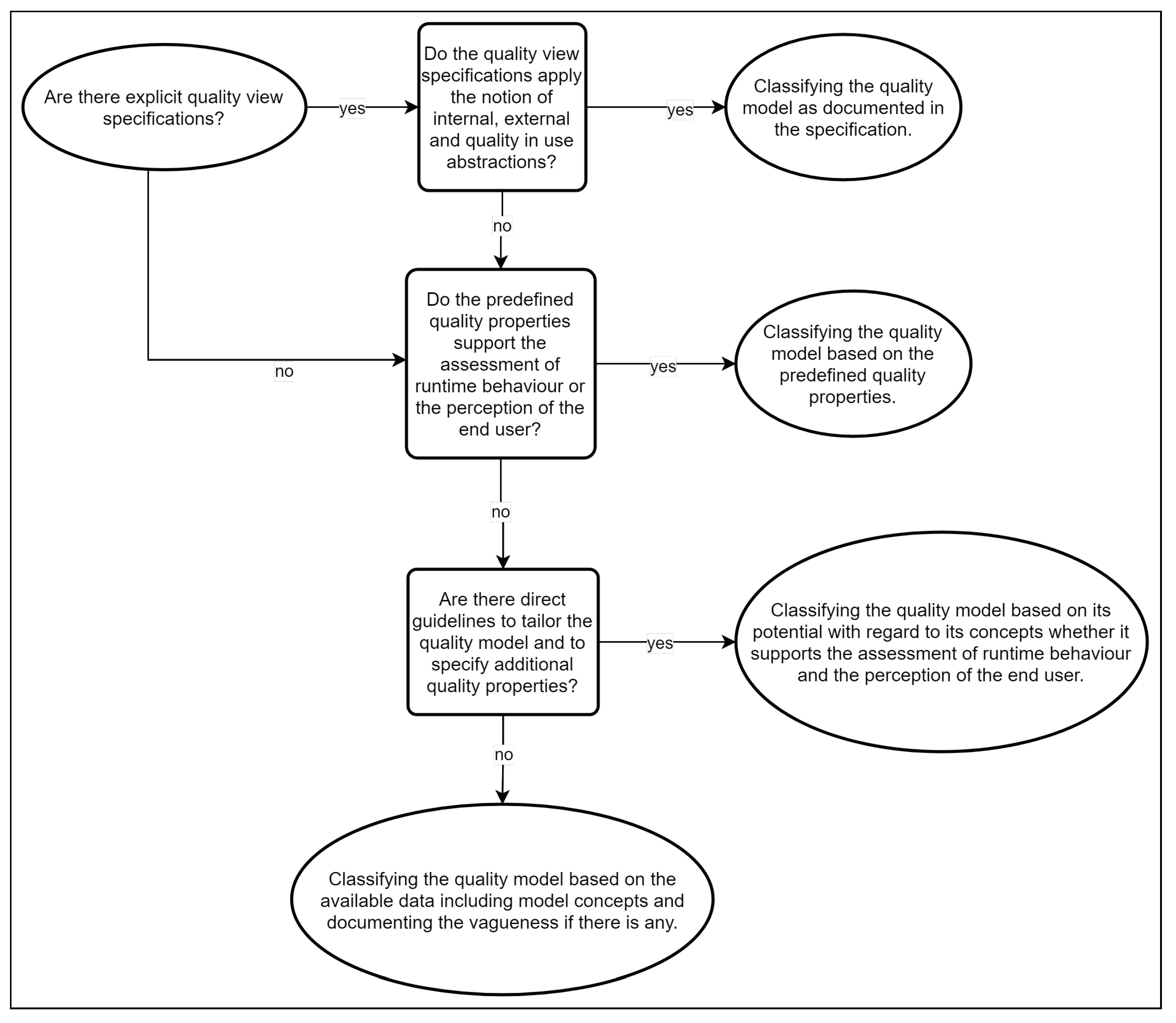

3. Methods

4. Results

5. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Boehm, B.W.; Brown, J.R.; Lipow, M. Quantitative Evaluation of Software Quality. In Proceedings of the 2nd International Conference on Software Engineering, San Francisco, CA, USA, 13–15 October 1976.

2. McCall, J.A.; Richards, P.K.; Walters, G.F. Factors in Software Quality, Concept and Definitions of Software Quality. 1977. Available online: http://www.dtic.mil/dtic/tr/fulltext/u2/a049014.pdf (accessed on 6 March 2018).

3. Galli, T. Fuzzy Logic Based Software Product Quality Model for Execution Tracing. Master’s Thesis, Centre for Computational Intelligence, De Montfort University, Leicester, UK, 2013.

4. Galli, T.; Chiclana, F.; Carter, J.; Janicke, H. Towards Introducing Execution Tracing to Software Product Quality Frameworks. Acta Polytech. Hung. 2014, 11, 5–24.

5. Galli, T.; Chiclana, F.; Siewe, F. Software Product Quality Models, Developments, Trends and Evaluation. SN Comput. Sci. 2020.

6. ISO/IEC 9126-1:2001 Software Engineering—Product Quality—Part 1: Quality Model; International Organization for Standardization: Geneva, Switzerland, 2001.

7. ISO/IEC 25010:2011 Systems and Software Engineering—Systems and Software Quality Requirements and Evaluation (SQuaRE)—System and Software Quality Models; International Organization for Standardization: Geneva, Switzerland, 2011.

8. Khaddaj, S.; Horgan, G. A Proposed Adaptable Quality Model for Software Quality Assurance. J. Comput. Sci. 2005, 1, 482–487.

9. Horgan, G.; Khaddaj, S. Use of an adaptable quality model approach in a production support environment. J. Syst. Softw. 2009, 82, 730–738.

10. Grady, R.B.; Caswell, D.L. Software Metrics: Establishing a Company-Wide Program; Prentice-Hall, Inc.: Upper Saddle River, NJ, USA, 1987.

11. Grady, R.B. Practical Software Metrics for Project Management and Process Improvement; Prentice Hall: Upper Saddle River, NJ, USA, 1992.

12. Eeles, P. Capturing Architectural Requirements. 2005. Available online: https://www.ibm.com/developerworks/rational/library/4706-pdf.pdf (accessed on 19 April 2018).

13. Georgiadou, E. GEQUAMO—A Generic, Multilayered, Customisable, Software Quality Model. Softw. Qual. J. 2003, 11, 313–323.

14. Kitchenham, B.; Linkman, S.; Pasquini, A.; Nanni, V. The SQUID approach to defining a quality model. Softw. Qual. J. 1997, 6, 211–233.

15. Kim, C.; Lee, K. Software Quality Model for Consumer Electronics Product. In Proceedings of the 9th International Conference on Quality Software, Monte Porzio Catone, Italy, 23–25 June 2008; pp. 390–395.

16. Kitchenham, B.; Charters, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering; Technical Report; EBSE-2007-01; Software Engineering Group, School of Computer Science and Mathematics, Keele University: Keele, UK; Department of Computer Science, University of Durham: Durham, UK, 2007; Available online: https://www.elsevier.com/__data/promis_misc/525444systematicreviewsguide.pdf (accessed on 1 June 2017).

17. Hegeman, J.H. On the Quality of Quality Models. Master’s Thesis, University of Twente, Enschede, The Netherlands, 2011.

18. Ferenc, R.; Hegedüs, P.; Gyimóthy, T. Software Product Quality Models. In Evolving Software Systems; Springer: Berlin/Heidelberg, Germany, 2014; pp. 65–100.

19. Kothapalli, C.; Ganesh, S.G.; Singh, H.K.; Radhika, D.V.; Rajaram, T.; Ravikanth, K.; Gupta, S.; Rao, K. Continual monitoring of Code Quality. In Proceedings of the 4th India Software Engineering Conference 2011, ISEC’11, Thiruvananthapuram Kerala, India, 24–27 February 2011; pp. 175–184.

20. Mordal-Manet, K.; Balmas, F.; Denier, S.; Ducasse, S.; Wertz, H.; Laval, J.; Bellingard, F.; Vaillergues, P. The Squale Model—A Practice-based Industrial Quality Model. 2009. Available online: https://hal.inria.fr/inria-00637364 (accessed on 6 March 2018).

21. Côté, M.A.; Suryn, W.; Martin, R.A.; Laporte, C.Y. Evolving a Corporate Software Quality Assessment Exercise: A Migration Path to ISO/IEC 9126. Softw. Qual. Prof. 2004, 6, 4–17.

22. Google. Google Search Trends for the Past 12 Months, Worldwide. 2020. Available online: https://trends.google.com/trends/explore (accessed on 30 January 2021).

23. Ouhbi, S.; Idri, A.; Fernández-Alemán, J.L.; Toval, A.; Benjelloun, H. Applying ISO/IEC 25010 on mobile personal health records. In Proceedings of the HEALTHINF 2015—8th International Conference on Health Informatics, Part of 8th International Joint Conference on Biomedical Engineering Systems and Technologies, BIOSTEC, Lisbon, Portugal, 12–15 January 2015; SciTePress: Setúbal, Portugal, 2015; pp. 405–412, ISBN 978-989-758-068-0.

24. Idri, A.; Bachiri, M.; Fernández-Alemán, J.L. A Framework for Evaluating the Software Product Quality of Pregnancy Monitoring Mobile Personal Health Records. J. Med. Syst. 2016, 40, 50.

25. Forouzani, S.; Chiam, Y.K.; Forouzani, S. Method for assessing software quality using source code analysis. In ACM International Conference Proceeding Series; Association for Computing Machinery: New York, NY, USA, 2016; pp. 166–170.

26. Domínguez-Mayo, F.J.; Escalona, M.J.; Mejías, M.; Ross, M.; Staples, G. Quality evaluation for Model-Driven Web Engineering methodologies. Inf. Softw. Technol. 2012, 54, 1265–1282.

27. Idri, A.; Bachiri, M.; Fernandez-Aleman, J.L.; Toval, A. Experiment design of free pregnancy monitoring mobile personal health records quality evaluation. In Proceedings of the 2016 IEEE 18th International Conference on e-Health Networking, Applications and Services (Healthcom), Munich, Germany, 14–16 September 2016; pp. 1–6.

28. Shen, P.; Ding, X.; Ren, W.; Yang, C. Research on Software Quality Assurance Based on Software Quality Standards and Technology Management. In Proceedings of the 2018 19th IEEE/ACIS International Conference on Software Engineering, Artificial Intelligence, Networking and Parallel/Distributed Computing (SNPD), Busan, Korea, 27–29 June 2018; pp. 385–390.

29. Liu, X.; Zhang, Y.; Yu, X.; Liu, Z. A Software Quality Quantifying Method Based on Preference and Benchmark Data. In Proceedings of the 2018 19th IEEE/ACIS International Conference on Software Engineering, Artificial Intelligence, Networking and Parallel/Distributed Computing (SNPD), Busan, Korea, 27–29 June 2018; pp. 375–379.

30. Kanellopoulos, Y.; Tjortjis, C.; Heitlager, I.; Visser, J. Interpretation of source code clusters in terms of the ISO/IEC-9126 maintainability characteristics. In Proceedings of the European Conference on Software Maintenance and Reengineering, CSMR, Athens, Greece, 1–4 April 2008; pp. 63–72.

31. Vetro, A.; Zazworka, N.; Seaman, C.; Shull, F. Using the ISO/IEC 9126 product quality model to classify defects: A controlled experiment. In Proceedings of the 16th International Conference on Evaluation Assessment in Software Engineering (EASE 2012), Ciudad Real, Spain, 14–15 May 2012; pp. 187–196.

32. Parthasarathy, S.; Sharma, S. Impact of customization over software quality in ERP projects: An empirical study. Softw. Qual. J. 2017, 25, 581–598.

33. Li, Y.; Man, Z. A Fuzzy Comprehensive Quality Evaluation for the Digitizing Software of Ethnic Antiquarian Resources. In Proceedings of the 2008 International Conference on Computer Science and Software Engineering, Wuhan, China, 12–14 December 2008; Volume 5, pp. 1271–1274.

34. Hu, W.; Loeffler, T.; Wegener, J. Quality model based on ISO/IEC 9126 for internal quality of MATLAB/Simulink/Stateflow models. In Proceedings of the 2012 IEEE International Conference on Industrial Technology, Athens, Greece, 19–21 March 2012; pp. 325–330.

35. Liang, S.K.; Lien, C.T. Selecting the Optimal ERP Software by Combining the ISO 9126 Standard and Fuzzy AHP Approach. Contemp. Manag. Res. 2006, 3, 23.

36. Correia, J.; Visser, J. Certification of Technical Quality of Software Products. In Proceedings of the International Workshop on Foundations and Techniques for Open Source Software Certification; 2008; pp. 35–51. Available online: http://wiki.di.uminho.pt/twiki/pub/Personal/Joost/PublicationList/CorreiaVisserOpenCert2008.pdf (accessed on 1 June 2018).

37. Andreou, A.S.; Tziakouris, M. A quality framework for developing and evaluating original software components. Inf. Softw. Technol. 2007, 49, 122–141.

38. Letouzey, J.L.; Coq, T. The SQALE Analysis Model: An Analysis Model Compliant with the Representation Condition for Assessing the Quality of Software Source Code. In Proceedings of the 2010 Second International Conference on Advances in System Testing and Validation Lifecycle, Nice, France, 22–27 August 2010; pp. 43–48.

39. Letouzey, J.L. Managing Large Application Portfolio with Technical Debt Related Measures. In Proceedings of the Joint Conference of the International Workshop on Software Measurement and the International Conference on Software Process and Product Measurement (IWSM-MENSURA), Berlin, Germany, 5–7 October 2016; p. 181.

40. Letouzey, J.L. The SQALE method for evaluating Technical Debt. In Proceedings of the Third International Workshop on Managing Technical Debt (MTD), Zurich, Switzerland, 5 June 2012; pp. 31–36.

41. Letouzey, J.; Coq, T. The SQALE Models for Assessing the Quality of Real Time Source Code. 2010. Available online: https://pdfs.semanticscholar.org/4dd3/a72d79eb2f62fe04410106dc9fcc27835ce5.pdf?ga=2.24224186.1861301954.1500303973-1157276278.1497961025 (accessed on 17 July 2017).

42. Letouzey, J.L.; Ilkiewicz, M. Managing Technical Debt with the SQALE Method. IEEE Softw. 2012, 29, 44–51.

43. Letouzey, J.L.; Coq, T. The SQALE Quality and Analysis Models for Assessing the Quality of Ada Source Code. 2009. Available online: http://www.adalog.fr/publicat/sqale.pdf (accessed on 17 July 2017).

44. Letouzey, J.L. The SQALE Method for Managing Technical Debt, Definition Document V1.1. 2016. Available online: http://www.sqale.org/wp-content/uploads//08/SQALE-Method-EN-V1-1.pdf (accessed on 2 August 2017).

45. Gleirscher, M.; Golubitskiy, D.; Irlbeck, M.; Wagner, S. Introduction of static quality analysis in small- and medium-sized software enterprises: Experiences from technology transfer. Softw. Qual. J. 2014, 22, 499–542.

46. Wagner, S.; Lochmann, K.; Heinemann, L.; Kläs, M.; Trendowicz, A.; Plösch, R.; Seidl, A.; Goeb, A.; Streit, J. The Quamoco Product Quality Modelling and Assessment Approach. In Proceedings of the 34th International Conference on Software Engineering, Zurich, Switzerland, 2–9 June 2012; IEEE Press: Piscataway, NJ, USA, 2012. ICSE ’12. pp. 1133–1142.

47. Wagner, S.; Lochmann, K.; Winter, S.; Deissenboeck, F.; Juergens, E.; Herrmannsdoerfer, M.; Heinemann, L.; Kläs, M.; Trendowicz, A.; Heidrich, J.; et al. The Quamoco Quality Meta-Model. October 2012. Available online: https://mediatum.ub.tum.de/attfile/1110600/hd2/incoming/2012-Jul/517198.pdf (accessed on 18 November 2017).

48. Wagner, S.; Goeb, A.; Heinemann, L.; Kläs, M.; Lampasona, C.; Lochmann, K.; Mayr, A.; Plösch, R.; Seidl, A.; Streit, J.; et al. Operationalised product quality models and assessment: The Quamoco approach. Inf. Softw. Technol. 2015, 62, 101–123.

49. Plösch, R.; Gruber, H.; Hentschel, A.; Körner, C.; Pomberger, G.; Schiffer, S.; Saft, M.; Storck, S. The EMISQ method and its tool support-expert-based evaluation of internal software quality. Innov. Syst. Softw. Eng. 2008, 4, 3–15.

50. Plösch, R.; Gruber, H.; Körner, C.; Saft, M. A Method for Continuous Code Quality Management Using Static Analysis. In Proceedings of the 2010 Seventh International Conference on the Quality of Information and Communications Technology, Porto, Portugal, 29 September–2 October 2010; pp. 370–375.

51. Laval, J.; Bergel, A.; Ducasse, S. Assessing the Quality of Your Software with MoQam. 2008. Available online: https://hal.inria.fr/inria-00498482 (accessed on 6 March 2018).

52. Balmas, F.; Bellingard, F.; Denier, S.; Ducasse, S.; Franchet, B.; Laval, J.; Mordal-Manet, K.; Vaillergues, P. Practices in the Squale Quality Model (Squale Deliverable 1.3). October 2010. Available online: http://www.squale.org/quality-models-site/research-deliverables/WP1.3Practices-in-the-Squale-Quality-Modelv2.pdf (accessed on 16 November 2017).

53. INRIA RMoD, Paris 8, Qualixo. Technical Model for Remediation (Workpackage 2.2). 2010. Available online: http://www.squale.org/quality-models-site/research-deliverables/WP2.2Technical-Model-for-Remediationv1.pdf (accessed on 16 November 2017).

54. Boehm, B.; Chulani, S. Modeling Software Defect Introduction and Removal—COQUALMO (Constructive QUALity Model); USC—Center for Software Engineering: Los Angeles, CA, USA, 1999.

55. Madachy, R.; Boehm, B. Assessing Quality Processes with ODC COQUALMO. In Making Globally Distributed Software Development a Success Story; Springer: Berlin/Heidelberg, Germany, 2008; Volume 5007, pp. 198–209.

56. Ulan, M.; Hönel, S.; Martins, R.M.; Ericsson, M.; Löwe, W.; Wingkvist, A.; Kerren, A. Quality Models Inside Out: Interactive Visualization of Software Metrics by Means of Joint Probabilities. In Proceedings of the 2018 IEEE Working Conference on Software Visualization (VISSOFT), Madrid, Spain, 18–19 September 2018; pp. 65–75.

57. Benedicenti, L.; Wang, V.W.; Paranjape, R. A quality assessment model for Java code. In Proceedings of the Canadian Conference on Electrical and Computer Engineering, Winnipeg, MB, Canada, 12–15 May 2002; pp. 687–690.

58. Zhang, L.; Li, L.; Gao, H. 2-D Software Quality Model and Case Study in Software Flexibility Research. In Proceedings of the 2008 International Conference on Computational Intelligence for Modelling Control and Automation, Vienna, Austria, 10–12 December 2008; IEEE Computer Society: Washington, DC, USA, 2008. CIMCA ’08. pp. 1147–1152.

59. Dromey, R. A Model for Software Product Quality. IEEE Trans. Softw. Eng. 1995, 21, 146–162.

60. Van Solingen, R.; Berghout, E. The Goal/Question/Metric Method a Practical Guide for Quality Improvement of Software Development; McGraw Hill Publishing: London, UK, 1999.

61. IEEE Computer Society. IEEE Standard 1061-1998: IEEE Standard for a Software Quality Metrics Methodology; IEEE: Piscataway, NJ, USA, 1998.

62. Franke, D.; Weise, C. Providing a software quality framework for testing of mobile applications. In Proceedings of the 4th IEEE International Conference on Software Testing, Verification, and Validation, ICST 2011, Berlin, Germany, 21–25 March 2011; pp. 431–434.

63. Hyatt, L.E.; Rosenberg, L.H. A Software Quality Model and Metrics for Identifying Project Risks and Assessing Software Quality. In Proceedings of the Product Assurance Symposium and Software Product Assurance Workshop, ESA SP-377, Noordwijk, The Netherlands, 19–21 March 1996; European Space Agency: Paris, France, 1996.

64. Martin, R.A.; Shafer, L.H. Providing a Framework for effective software quality assessment—A first step in automating assessments. In Proceedings of the First Annual Software Engineering and Economics Conference, McLean, VA, USA, 2–3 April 1996.

65. SonarSource. SonarQube. 2017. Available online: https://www.sonarqube.org (accessed on 16 February 2017).

| ID | Relevance Rank | Name | Quality Views Considered | Predefined Quality Properties or Metrics Available | Research Interest | Widespread Use Cases | Also Process Related Properties |
|---|---|---|---|---|---|---|---|
| 1 | 1 | ISO25010 [7,23,24,25,26,27,28,29] | I, E, U | Yes | Yes | Yes | No |
| 2 | 2 | ISO9126 [6,30,31,32,33,34,35,36,37] | I, E, U | Yes | Yes | Yes | No |
| 3 | 3 | SQALE [17,38,39,40,41,42,43,44] | I | Yes | Yes | Yes | No |
| 4 | 4 | Quamoco [45,46,47,48] | I, E, U | Yes | No | No | No |
| 5 | 5 | EMISQ [19,49,50] | I | Yes | No | No | No |
| 6 | 6 | SQUALE [20,51,52,53] | I, E | Yes | No | No | Yes |
| 7 | 7 | ADEQUATE [8,9] | I, E, U | Yes | No | No | Yes |
| 8 | 8 | COQUALMO [54,55] | I, E | Yes | No | No | Yes |
| 9 | 9 | FURPS [10,11,12] | I, E, (U) | Yes | No | Yes | Yes |
| 10 | 9 | SQAE and ISO9126 combination [21] | I, E | Yes | No | No | No |
| 11 | 9 | Ulan et al. [56] | I | Yes | No | No | No |
| 12 | 10 | Kim and Lee [15] | I | Yes | No | No | No |
| 13 | 11 | GEQUAMO [13] | I, E, U | Yes | No | No | Yes |
| 14 | 12 | McCall et al. [2,57] | I, E, (U) | Yes | No | No | Yes |
| 15 | 13 | 2D Model [58] | Undefined | No | No | No | Undefined |
| 16 | 13 | Boehm et al. [1] | I, E, (U) | Yes | No | No | No |
| 17 | 13 | Dromey [59] | I | Yes | No | No | No |
| 18 | 13 | GQM [60] | D | No | No | Yes | D |
| 19 | 13 | IEEE Metrics Framework Reaffirmed in 2009 [61] | Undefined | No | No | No | Undefined |
| 20 | 13 | Metrics Framework for Mobile Apps [62] | Undefined | Yes | No | No | Undefined |
| 21 | 13 | SATC [63] | I, E | Yes | No | No | Yes |
| 22 | 13 | SQAE [64] | I | Yes | No | No | No |
| 23 | 13 | SQUID [14] | D | D | No | No | D |
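The research question RQ1 can be explored directly on the table above by treating each family's quality views as a set. The sketch below is illustrative only: it assumes that I, E and U abbreviate the internal, external and quality-in-use views defined in the Introduction, it uses a hypothetical helper name `families_covering`, and it leaves out rows marked "(U)", "D" or "Undefined" because their legend is not given here.

```python
# Assumed meaning of the abbreviations used in the table.
VIEW_NAMES = {"I": "internal", "E": "external", "U": "quality-in-use"}

# Quality views per model family, copied from the table above.
# Rows marked "(U)", "D" or "Undefined" are omitted on purpose.
views = {
    "ISO25010": {"I", "E", "U"},
    "ISO9126": {"I", "E", "U"},
    "SQALE": {"I"},
    "Quamoco": {"I", "E", "U"},
    "EMISQ": {"I"},
    "SQUALE": {"I", "E"},
    "ADEQUATE": {"I", "E", "U"},
    "COQUALMO": {"I", "E"},
    "GEQUAMO": {"I", "E", "U"},
    "Dromey": {"I"},
}


def families_covering(required, views):
    """Return the sorted families whose views include every required view."""
    return sorted(family for family, v in views.items() if required <= v)


all_three = families_covering({"I", "E", "U"}, views)
with_external = families_covering({"E"}, views)
print(all_three)
print(with_external)
```

Representing the views as sets keeps the query a plain subset test, so the same helper answers questions about any combination of views.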

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Galli, T.; Chiclana, F.; Siewe, F. On the Use of Quality Models to Address Distinct Quality Views. Appl. Syst. Innov. 2021, 4, 41. https://doi.org/10.3390/asi4030041
