Methodological Quality of Systematic Reviews for Questions of Therapy and Prevention Published in the Urological Literature (2016–2021) Fails to Improve

Abstract

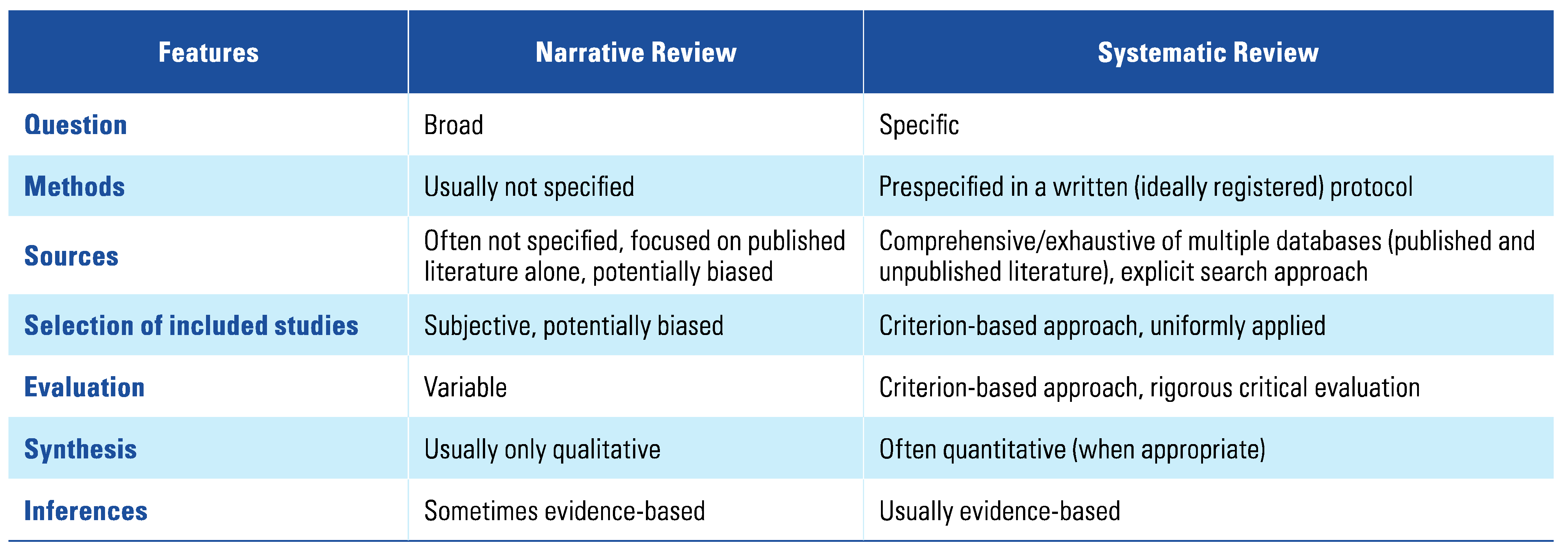

1. Introduction

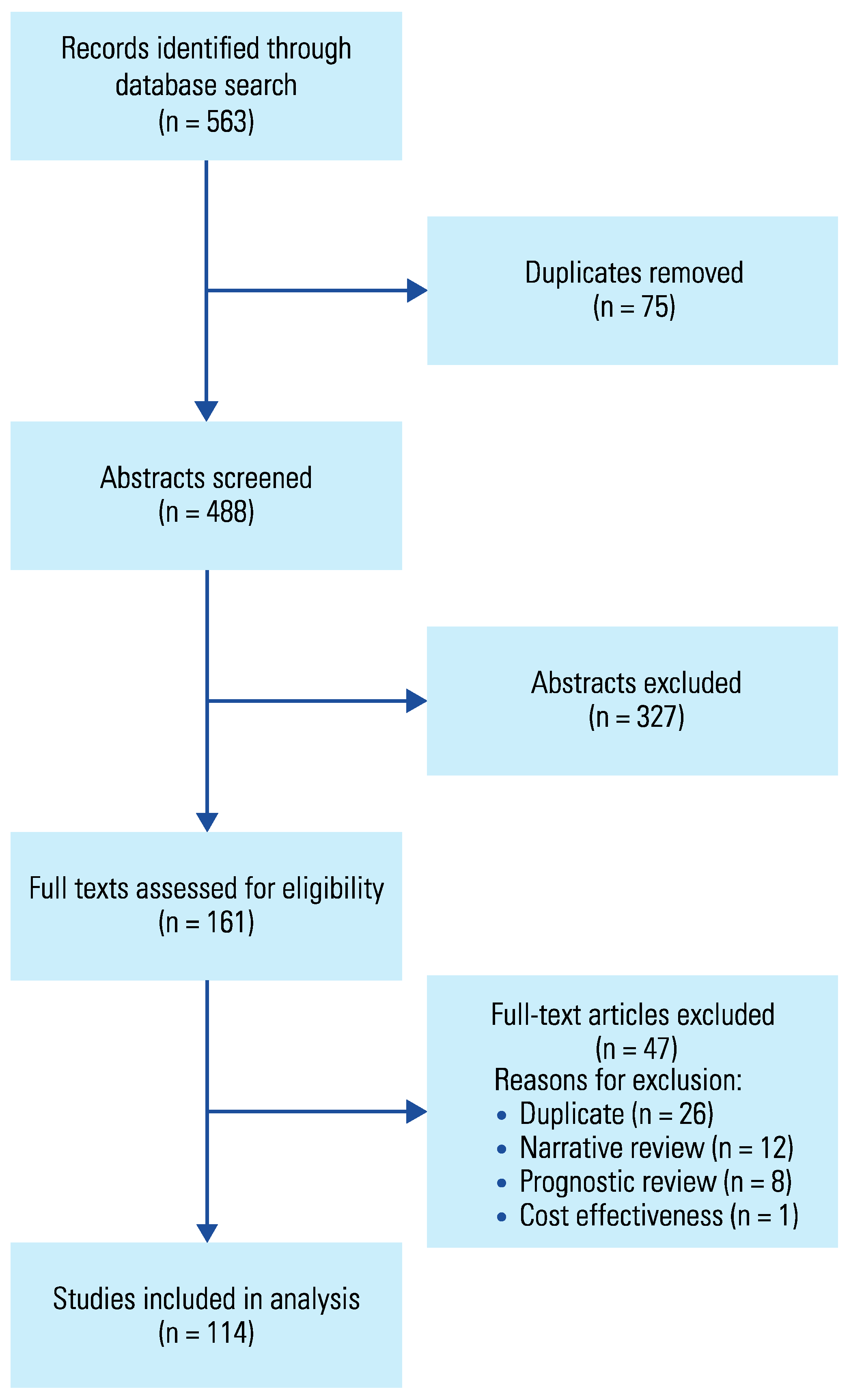

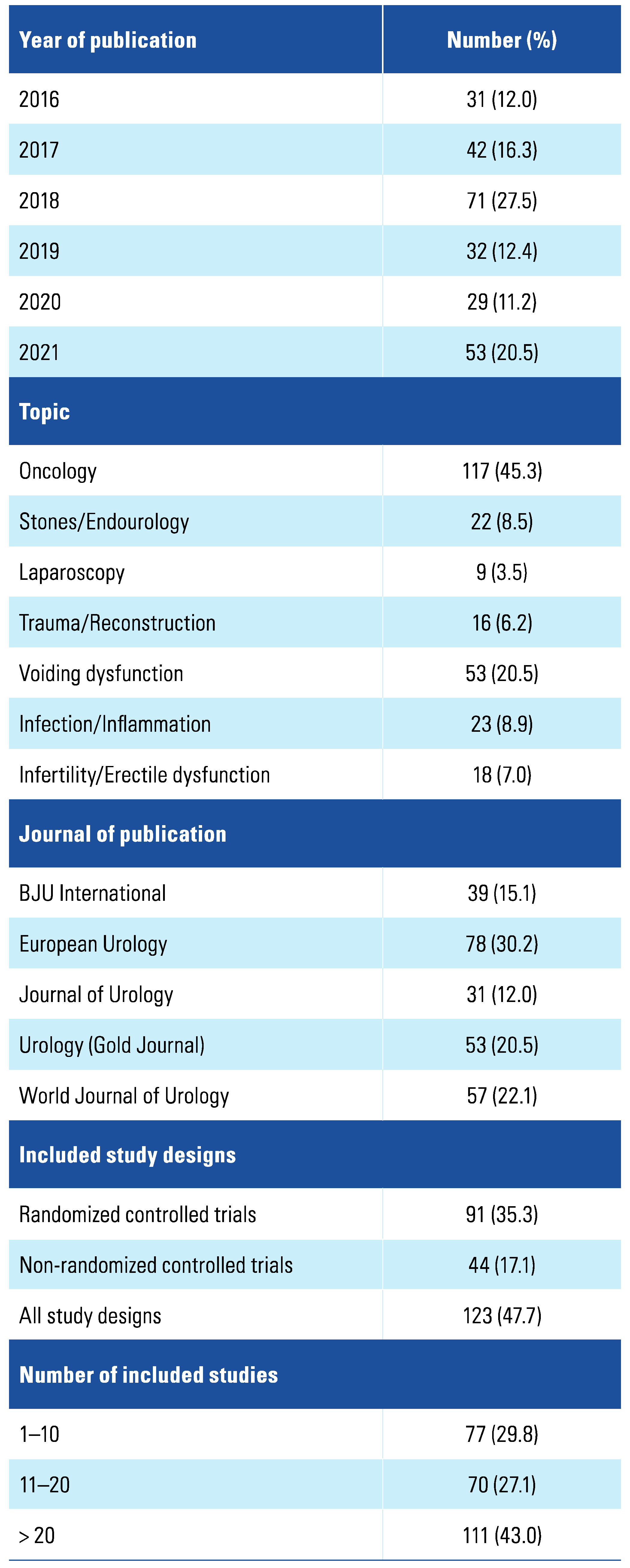

2. Methods

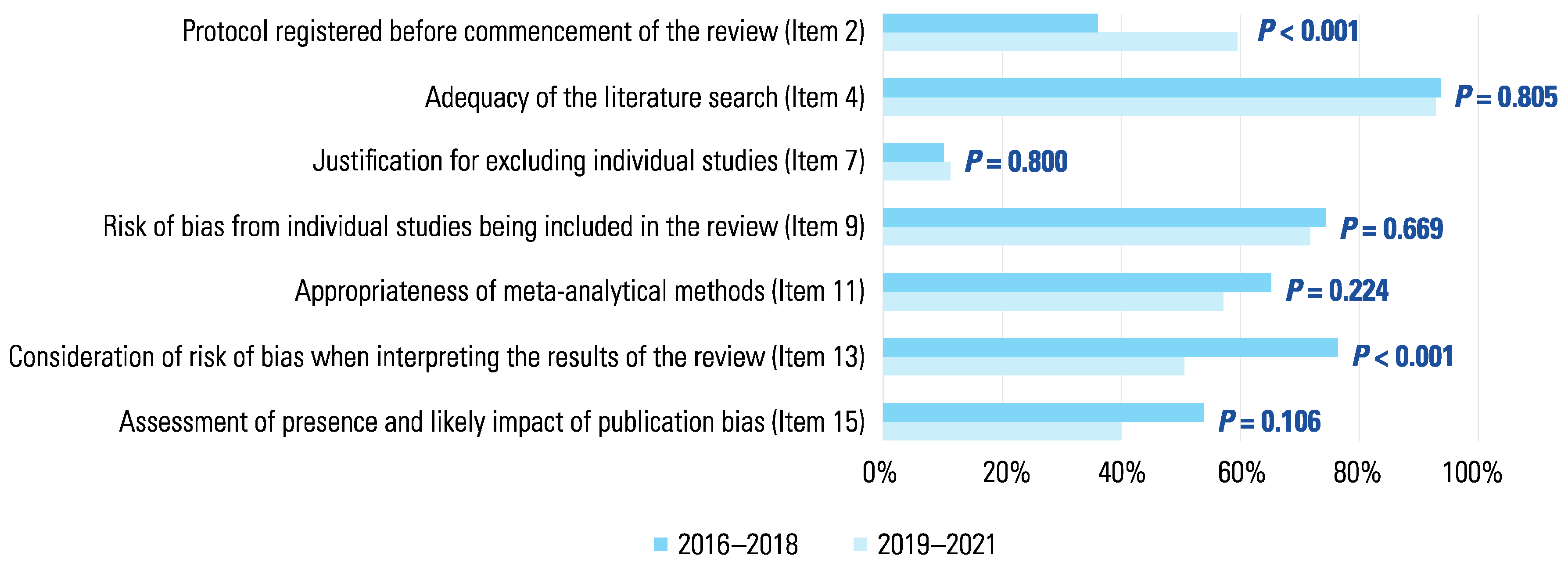

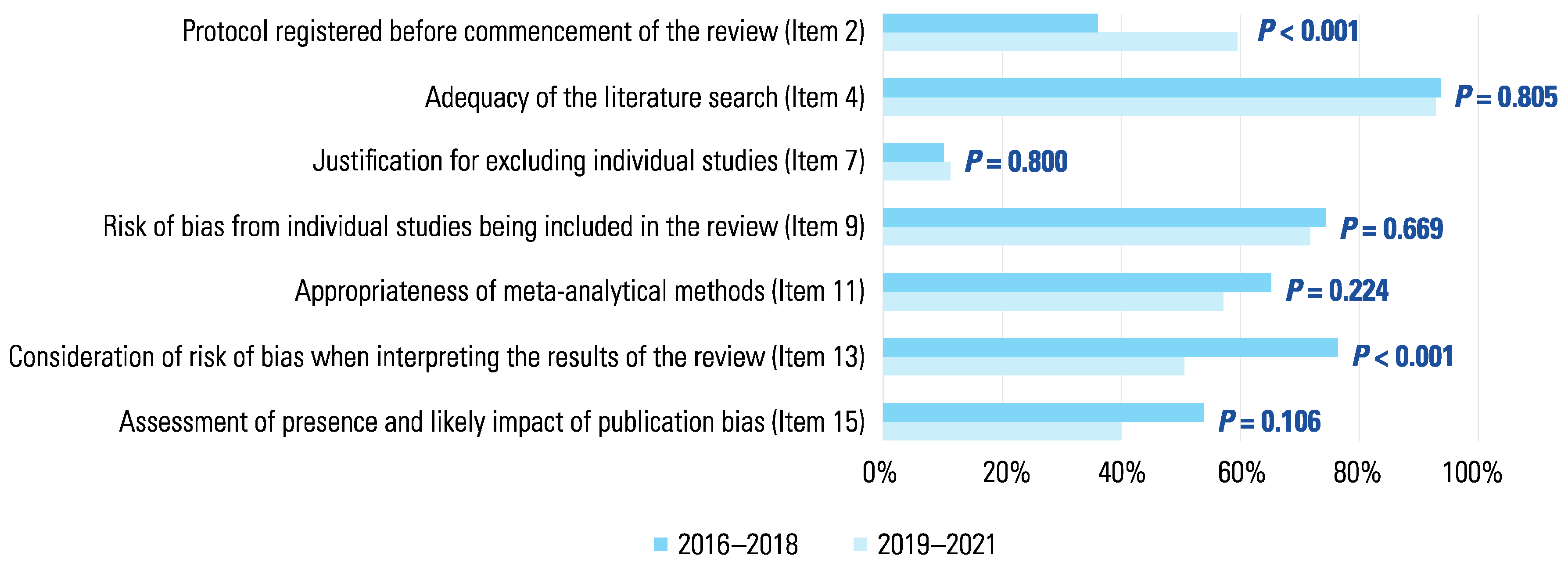

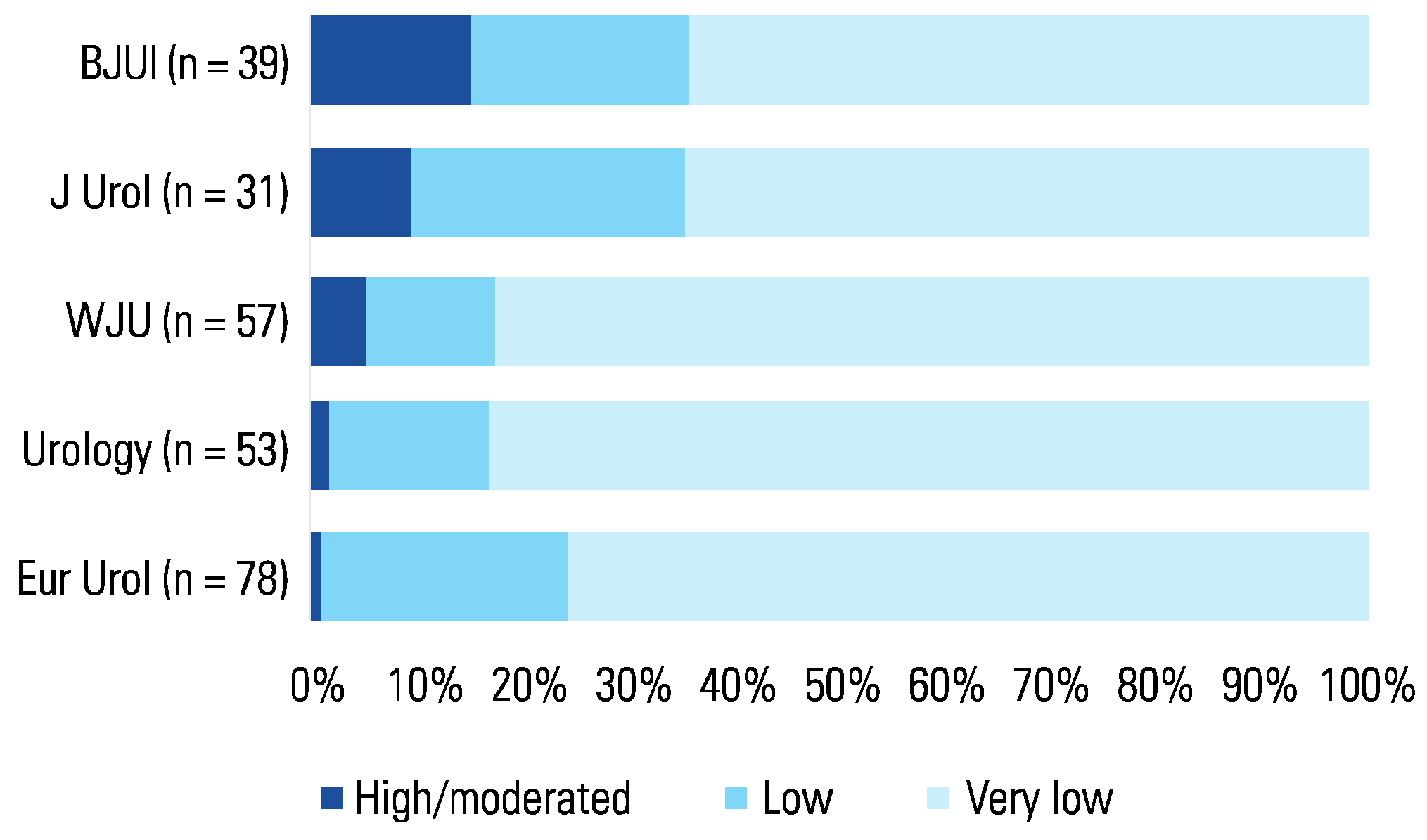

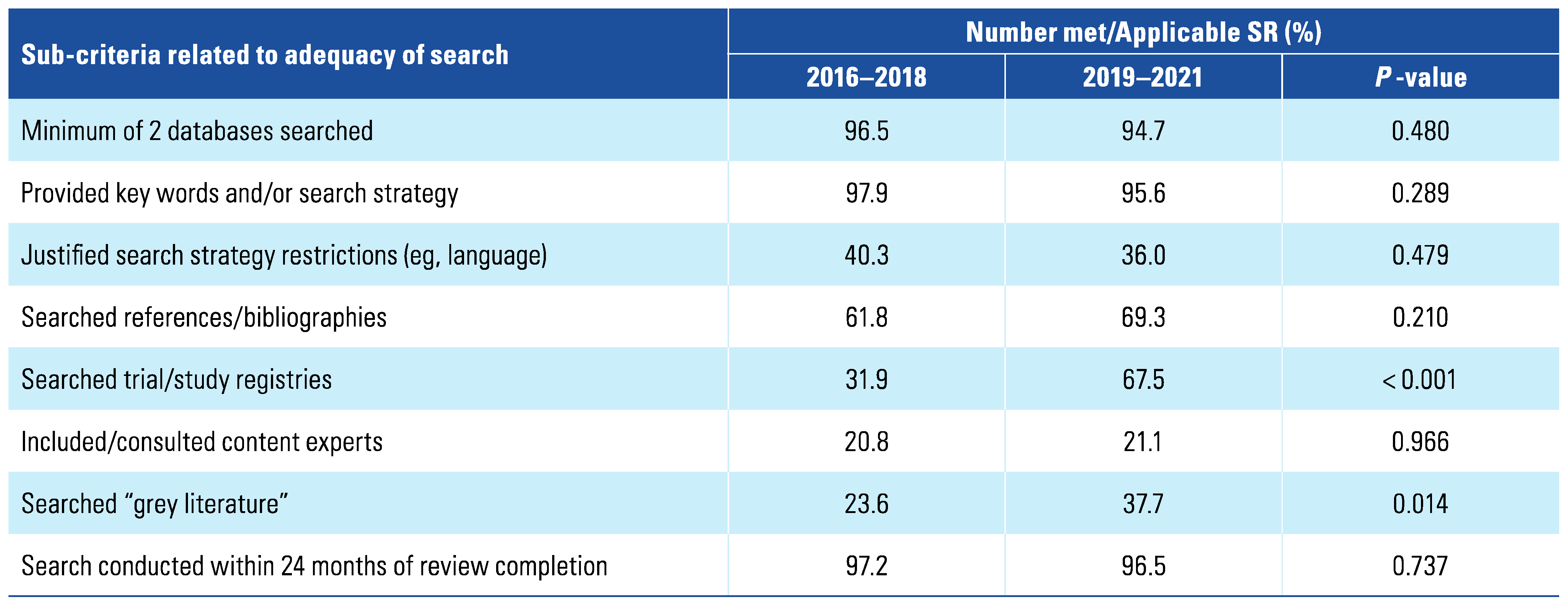

3. Results

4. Discussion

4.1. Statement of principal findings

4.2. Strengths and weaknesses of the study

4.3. Strengths and weaknesses in relation to other studies

4.4. Implications for clinicians and policymakers

4.5. Unanswered questions and future research

5. Conclusions

Supplementary Materials

Acknowledgements

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AMSTAR-2 | Assessment of Multiple Systematic Reviews Version 2 |
| AUA | American Urological Association |
| EAU | European Association of Urology |
| GRADE | Grading of Recommendations Assessment, Development, and Evaluation |
| SRs | systematic reviews |

References

- Dickersin, K. Health-care policy. To reform U.S. health care, start with systematic reviews. Science 2010, 329, 516–517.

- Ioannidis, J.P. The mass production of redundant, misleading, and conflicted systematic reviews and meta-analyses. Milbank Q. 2016, 94, 485–514.

- Shea, B.J.; Reeves, B.C.; Wells, G.; Thuku, M.; Hamel, C.; Moran, J.; et al. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ 2017, 358, j4008.

- Ding, M.; Soderberg, L.; Jung, J.H.; Dahm, P. Low methodological quality of systematic reviews published in the urological literature (2016–2018). Urology 2020, 138, 5–10.

- Gonzalez-Padilla, D.A.; Dahm, P. Evidence-based urology: understanding GRADE methodology. Eur Urol Focus 2021, 7, 1230–1233.

- Canfield, S.E.; Dahm, P. Rating the quality of evidence and the strength of recommendations using GRADE. World J Urol. 2011, 29, 311–317.

- Corbyons, K.; Han, J.; Neuberger, M.M.; Dahm, P. Methodological quality of systematic reviews published in the urological literature from 1998 to 2012. J Urol. 2015, 194, 1374–1379.

- Han, J.L.; Gandhi, S.; Bockoven, C.G.; Narayan, V.M.; Dahm, P. The landscape of systematic reviews in urology (1998 to 2015): an assessment of methodological quality. BJU Int. 2017, 119, 638–649.

- O’Kelly, F.; DeCotiis, K.; Aditya, I.; Braga, L.H.; Koyle, M.A. Assessing the methodological and reporting quality of clinical systematic reviews and meta-analyses in paediatric urology: can practices on contemporary highest levels of evidence be built? J Pediatr Urol. 2020, 16, 207–217.

- Bole, R.; Gottlich, H.C.; Ziegelmann, M.J.; Corrigan, D.; Levine, L.A.; Mulhall, J.P.; et al. A critical analysis of reporting in systematic reviews and meta-analyses in the Peyronie’s disease literature. J Sex Med. 2022, 19, 629–640.

- Bojcic, R.; Todoric, M.; Puljak, L. Adopting AMSTAR 2 critical appraisal tool for systematic reviews: speed of the tool uptake and barriers for its adoption. BMC Med Res Methodol. 2022, 22, 104.

- Dettori, J.R.; Skelly, A.C.; Brodt, E.D. Critically low confidence in the results produced by spine surgery systematic reviews: an AMSTAR-2 evaluation from 4 spine journals. Global Spine J. 2020, 10, 667–673.

- Martinez-Monedero, R.; Danielian, A.; Angajala, V.; Dinalo, J.E.; Kezerian, E.J. Methodological quality of systematic reviews and meta-analyses published in high-impact otolaryngology journals. Otolaryngol Head Neck Surg. 2020, 163, 892–905.

- Yu, J.; Yang, Z.; Zhang, Y.; Cui, Y.; Tang, J.; Hirst, A.; et al. The methodological quality on systematic reviews of surgical randomised controlled trials: a cross-sectional survey. Asian J Surg. 2022, 45, 1817–1822.

- Siemens, W.; Schwarzer, G.; Rohe, M.S.; Buroh, S.; Meerpohl, J.J.; Becker, G. Methodological quality was critically low in 9/10 systematic reviews in advanced cancer patients-a methodological study. J Clin Epidemiol. 2021, 136, 84–95.

- Gao, Y.; Cai, Y.; Yang, K.; Liu, M.; Shi, S.; Chen, J.; et al. Methodological and reporting quality in non-Cochrane systematic review updates could be improved: a comparative study. J Clin Epidemiol. 2020, 119, 36–46.

- Tseng, T.Y.; Dahm, P.; Poolman, R.W.; Preminger, G.M.; Canales, B.J.; Montori, V.M.; et al. How to use a systematic literature review and meta-analysis. J Urol. 2008, 180, 1249–1256.

- Faraday, M.; Hubbard, H.; Kosiak, B.; Dmochowski, R.; et al. Staying at the cutting edge: a review and analysis of evidence reporting and grading; the recommendations of the American Urological Association. BJU Int. 2009, 104, 294–297.

- Knoll, T.; Omar, M.I.; Maclennan, S.; Hernández, V.; Canfield, S.; Yuan, Y.; et al. Key steps in conducting systematic reviews for underpinning clinical practice guidelines: methodology of the European Association of Urology. Eur Urol. 2018, 73, 290–300.

- Glasziou, P.; Altman, D.G.; Bossuyt, P.; Boutron, I.; Clarke, M.; Julious, S.; et al. Reducing waste from incomplete or unusable reports of biomedical research. Lancet 2014, 383, 267–276.

- Dahm, P. Raising the bar for systematic reviews with Assessment of Multiple Systematic Reviews (AMSTAR). BJU Int. 2017, 119, 193.

- Jung, J.H.; Dahm, P. Reaching for the stars - rating the quality of systematic reviews with the Assessment of Multiple Systematic Reviews (AMSTAR) 2. BJU Int. 2018, 122, 717–718.

- Koffel, J.B. Use of recommended search strategies in systematic reviews and the impact of librarian involvement: a cross-sectional survey of recent authors. PLoS One 2015, 10, e0125931.

This is an open access article under the terms of a license that permits non-commercial use, provided the original work is properly cited. © 2023 The Authors. Société Internationale d'Urologie Journal, published by the Société Internationale d'Urologie, Canada.

How to Cite

Ding, M.; Johnson, J.; Ergun, O.; Alvez, G.A.; Dahm, P. Methodological Quality of Systematic Reviews for Questions of Therapy and Prevention Published in the Urological Literature (2016–2021) Fails to Improve. Soc. Int. Urol. J. 2023, 4, 415–422. https://doi.org/10.48083/WURA1857

Ding M, Johnson J, Ergun O, Alvez GA, Dahm P. Methodological Quality of Systematic Reviews for Questions of Therapy and Prevention Published in the Urological Literature (2016–2021) Fails to Improve. Société Internationale d’Urologie Journal. 2023; 4(5):415–422. https://doi.org/10.48083/WURA1857

Chicago/Turabian Style: Ding, Maylynn, Jared Johnson, Onuralp Ergun, Gustavo Ariel Alvez, and Philipp Dahm. 2023. "Methodological Quality of Systematic Reviews for Questions of Therapy and Prevention Published in the Urological Literature (2016–2021) Fails to Improve." Société Internationale d’Urologie Journal 4, no. 5: 415–422. https://doi.org/10.48083/WURA1857

APA Style: Ding, M., Johnson, J., Ergun, O., Alvez, G. A., & Dahm, P. (2023). Methodological Quality of Systematic Reviews for Questions of Therapy and Prevention Published in the Urological Literature (2016–2021) Fails to Improve. Société Internationale d’Urologie Journal, 4(5), 415–422. https://doi.org/10.48083/WURA1857