Introduction

In the United States, 8 in 10 internet users search for healthcare information online, most of them using social media [1]. A recent study of misinformation in online cancer resources found that 32.5% of articles contained misinformation, and the majority of these were deemed harmful [2]. While misinformation about malignancies can pose significant health risks, it remains unclear whether misinformation about prevalent benign conditions such as pelvic organ prolapse (POP) leads to harm.

As the number of social media platforms grows, instruments that categorize posts by type of misinformation and potential harm may be difficult to apply, because content format and length differ widely across platforms. A streamlined classification of the type and nature of health-related misinformation that works across multiple networks is therefore needed.

POP is a benign condition that affects quality of life, with a prevalence as high as 50% among women, 12% of whom elect surgical correction [3]. We previously found "low to moderate quality" information in 74.1% of posts about POP on Pinterest, YouTube, and Instagram [4].

We searched 3 prominent social media platforms (YouTube, Pinterest, and Instagram) for posts about POP to quantify and categorize the misinformation present, and to assess the degree and type of potential harm resulting from exposure to misinformation about this benign condition.

Materials and Methods

We conducted a descriptive study in October 2021 that was deemed exempt by the University of Maryland Institutional Review Board (IRB). Relevant YouTube videos and Pinterest and Instagram posts were identified in September 2019 by searching for "pelvic organ prolapse" on YouTube and Pinterest and "#PelvicOrganProlapse" on Instagram. These platforms were chosen because they are among the 15 most used social media platforms and each has a proprietary search engine for identifying relevant, publicly available posts about POP. The first 100 posts on each platform were analyzed for quality, understandability, actionability, and misinformation as detailed in our previous study [4]. Misinformation was assessed using a Likert scale from 1 (no misinformation) to 5 (high misinformation).

Posts with misinformation scores ≥ 2 were reviewed for the type and amount of misinformation and the type of resultant harm [2]. To measure harmfulness, reviewers used a Likert scale from 1 (certainly not harmful) to 5 (certainly harmful). Types of harm were defined as harmful inaction (encouragement to forgo standard of care), economic harm (money spent on ineffective treatments), harmful action (toxic effects of the suggested test or treatment), and harmful interaction (medical interactions with curative therapies). Types of misinformation were defined based on prior work: fabricated content (a completely false statement), misleading content (misleading use of content to frame an issue), imposter content (genuine sources impersonated with false sources), manipulative content (genuine information or imagery manipulated to deceive), false connection (headlines, visuals, or captions that do not support the content), and false context (genuine content shared with false contextual information) [5].
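The coding scheme above can be written down as a small data structure, which makes the screening rule (misinformation score ≥ 2) and the two category lists explicit. This is a minimal sketch for illustration only: the class and function names (`CodedPost`, `needs_harm_review`, `SCREENING_THRESHOLD`) are hypothetical and do not describe any tooling the reviewers actually used.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class HarmType(Enum):
    """Harm categories as defined in Materials and Methods."""
    HARMFUL_INACTION = "encouragement to forgo standard of care"
    ECONOMIC_HARM = "money spent on ineffective treatments"
    HARMFUL_ACTION = "toxic effects of the suggested test or treatment"
    HARMFUL_INTERACTION = "medical interactions with curative therapies"


class MisinfoType(Enum):
    """Misinformation categories taken from prior work [5]."""
    FABRICATED = "a completely false statement"
    MISLEADING = "misleading use of content to frame an issue"
    IMPOSTER = "genuine sources impersonated with false sources"
    MANIPULATIVE = "genuine information or imagery manipulated to deceive"
    FALSE_CONNECTION = "headlines, visuals, or captions that do not support the content"
    FALSE_CONTEXT = "genuine content shared with false contextual information"


LIKERT_RANGE = range(1, 6)   # both Likert scales run from 1 to 5
SCREENING_THRESHOLD = 2      # hypothetical name for the ">= 2" screening cutoff


@dataclass
class CodedPost:
    """One reviewed post (hypothetical structure, not the study's data format)."""
    post_id: str
    platform: str
    misinformation_score: int            # 1 = no misinformation ... 5 = high
    harm_score: int | None = None        # 1 = certainly not harmful ... 5 = certainly harmful
    misinfo_types: set[MisinfoType] = field(default_factory=set)
    harm_types: set[HarmType] = field(default_factory=set)

    def __post_init__(self) -> None:
        # Reject scores outside the 1-5 Likert range.
        if self.misinformation_score not in LIKERT_RANGE:
            raise ValueError("misinformation_score must be between 1 and 5")
        if self.harm_score is not None and self.harm_score not in LIKERT_RANGE:
            raise ValueError("harm_score must be between 1 and 5")


def needs_harm_review(post: CodedPost) -> bool:
    """Only posts scoring >= 2 on misinformation go on to type and harm coding."""
    return post.misinformation_score >= SCREENING_THRESHOLD
```

Using sets for `misinfo_types` and `harm_types` lets a single post carry several categories at once.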

Each social media post was reviewed by at least 2 reviewers. Disagreements were reviewed until consensus was achieved.
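The double-review step can be sketched as a simple check that flags every post on which reviewers gave different scores; resolving those posts to consensus remained a discussion among reviewers. The function name `posts_needing_consensus` and the input layout (post ID mapped to each reviewer's score) are hypothetical assumptions for illustration.

```python
from __future__ import annotations

from collections.abc import Mapping


def posts_needing_consensus(ratings: Mapping[str, Mapping[str, int]]) -> list[str]:
    """Return IDs of posts whose reviewers did not all give the same score.

    `ratings` maps post ID -> {reviewer -> Likert score} (hypothetical layout).
    """
    flagged = []
    for post_id, by_reviewer in ratings.items():
        # The protocol requires at least 2 reviewers per post.
        if len(by_reviewer) < 2:
            raise ValueError(f"post {post_id} has fewer than 2 reviewers")
        # Any difference in scores sends the post back for consensus discussion.
        if len(set(by_reviewer.values())) > 1:
            flagged.append(post_id)
    return flagged
```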

Descriptive statistics were reported as counts and percentages. Associations between the categorical variables of interest (social media platform, quantity of misinformation, and harmfulness of medical claims) were examined using the Fisher exact test. P values < 0.05 were considered significant.
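The Fisher exact test computes, for a contingency table with fixed row and column totals, the probability of the observed table and of every table at least as extreme. The minimal sketch below covers only the 2×2 case, using the hypergeometric distribution and the common two-sided convention of summing all tables no more probable than the observed one. The text does not specify the table dimensions used (a 3-platform comparison would need an R×C extension), and the function name is hypothetical; in practice a library routine such as SciPy's `scipy.stats.fisher_exact` would normally be used for 2×2 tables.

```python
from math import comb


def fisher_exact_2x2(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact test for the 2x2 table [[a, b], [c, d]].

    Rows are the two groups compared (e.g., one platform vs. the others);
    columns are the two outcomes (e.g., probably harmful vs. not).
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    total = row1 + row2
    denom = comb(total, col1)

    def table_prob(x: int) -> float:
        # Hypergeometric probability that the top-left cell equals x
        # when all row and column totals are held fixed.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_observed = table_prob(a)
    # Every feasible value of the top-left cell given the fixed margins.
    feasible = range(max(0, col1 - row2), min(row1, col1) + 1)
    # Two-sided p-value: sum the probabilities of all tables no more likely
    # than the observed one (relative tolerance guards against float ties).
    p_value = sum(
        p for p in (table_prob(x) for x in feasible) if p <= p_observed * (1 + 1e-7)
    )
    return min(1.0, p_value)
```

For instance, `fisher_exact_2x2(5, 19, 2, 56)` would compare the share of "probably harmful" posts on Pinterest (5 of 24) with YouTube and Instagram combined (2 of 58), using the counts given in Results; this particular comparison is illustrative and is not one the study reports.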

Results

Of 300 total posts (100 per platform), 82 were identified as misinformative: 18 YouTube videos, 24 Pinterest posts, and 40 Instagram posts. Instagram and Pinterest had a greater proportion of posts containing "mostly false" claims, whereas YouTube had a greater proportion of videos with "mostly true" claims. "Mostly false" and "false" medical claims regarding POP were identified in 10 of 82 posts (12.2%): 0% of YouTube videos, 15% of Instagram posts, and 17% of Pinterest posts.

Social media misinformation about POP most frequently arose from misleading content (6 of 10), false connection (5 of 10), or fabricated content (4 of 10); because these counts sum to more than 10, some posts contained more than one type. For example, one video presented chemotherapy and radiation as a nonsurgical treatment option for POP (fabricated content), and another post misrepresented an article published in the journal Nature to claim that starvation and dysbiosis were prominent risk factors for POP (false connection).

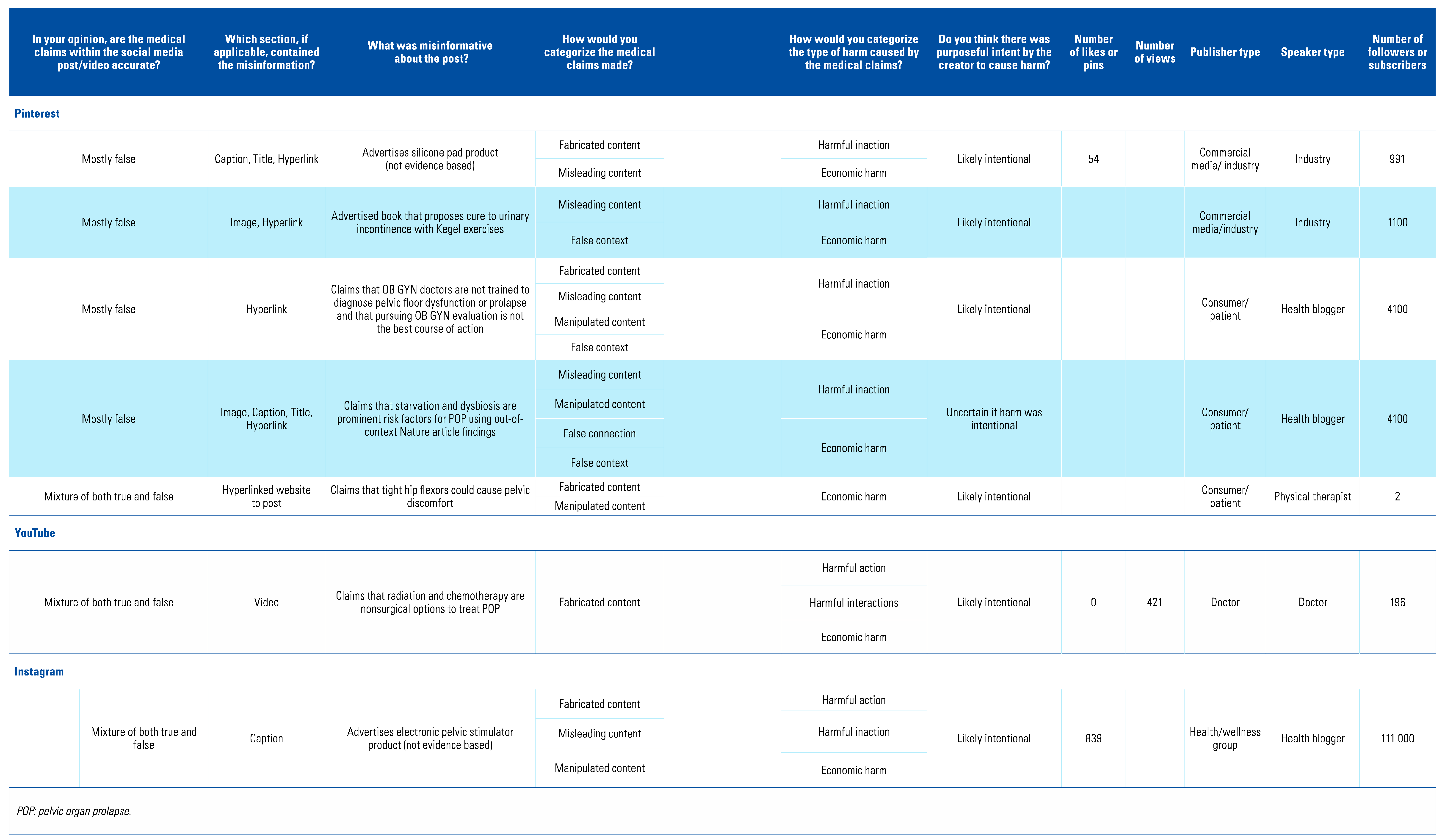

Of the 82 misinformative posts, 7 (8.5%) were rated "probably harmful" (6% of YouTube videos, 3% of Instagram posts, and 21% of Pinterest posts). Among these 7 posts, harmful inaction (5 of 7) and economic harm (5 of 7) were the most common types of harm, suggesting that harmful misinformation may result in delays in care and/or more expensive alternative treatment (Table 1). For example, one post advertised silicone pads and guaranteed symptom improvement without scientific evidence (economic harm). Others encouraged their audience to forgo evaluation by a physician and see a pelvic floor physiotherapist instead (harmful inaction).

Of these 7 "probably harmful" posts, 5 came from Pinterest accounts with a modest reach of approximately 1000 to 4000 followers. The single Instagram post, by a health blogger, had the greatest reach, with more than 100 000 followers, and the single YouTube video, by a physician in Bangalore, had the lowest, with fewer than 200 subscribers and 400 views (Table 1).
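The percentages in Results can be reproduced from the reported counts, as a check on the arithmetic. Two points are assumptions: that the per-platform denominators are the misinformative posts on each platform (18, 24, and 40), and that percentages were rounded half up. Python's built-in `round()` rounds halves to even, which would turn 1 of 40 (2.5%) into 2% rather than the reported 3%, so this sketch uses the standard `decimal` module; the helper name `pct` is hypothetical.

```python
from decimal import ROUND_HALF_UP, Decimal

# Counts from Results: misinformative posts per platform and, among them,
# the posts rated "probably harmful".
misinformative = {"YouTube": 18, "Pinterest": 24, "Instagram": 40}
probably_harmful = {"YouTube": 1, "Pinterest": 5, "Instagram": 1}


def pct(numerator: int, denominator: int, digits: int = 0) -> Decimal:
    """Percentage rounded half up to `digits` decimal places (assumed convention)."""
    value = Decimal(100 * numerator) / Decimal(denominator)
    quantum = Decimal(1).scaleb(-digits)  # 1, 0.1, 0.01, ...
    return value.quantize(quantum, rounding=ROUND_HALF_UP)


# Per-platform share of misinformative posts rated "probably harmful".
per_platform = {p: pct(probably_harmful[p], misinformative[p]) for p in misinformative}
# Overall share: 7 of 82.
overall = pct(sum(probably_harmful.values()), sum(misinformative.values()), digits=1)
```

The same helper gives 12.2% for the 10 of 82 posts with "mostly false" or "false" claims.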

Discussion

In this novel investigation of the category and quantity of misinformation, and the resultant harm, regarding POP on YouTube, Pinterest, and Instagram, our data show a significant difference in the distribution of misinformation across platforms. Misinformative posts about a benign condition can also result in harm, most frequently harmful inaction and economic harm, and such harm was most frequent in Pinterest posts and least frequent in YouTube posts. These findings are consistent with a previous study by Morra et al., who found YouTube to be a reliable source of information on bladder pain syndrome, with more than 70% of videos receiving good or excellent global quality scores and more than 80% containing no misinformation [6].

This study has several limitations. The data were gathered from a small subset of posts and may not generalize to all medical content across all social media platforms. Scoring was subjective, and only 3 of dozens of social media platforms were selected. Although we attempted to standardize review by using multiple reviewers, rating harm on a nonbinary scale is inherently subjective, particularly because it is not possible to track which viewers pursued specific treatments or whether harm ensued. Furthermore, although YouTube, Instagram, and Pinterest are among the 15 most used social media platforms globally, Facebook, the platform with the greatest number of daily and monthly users, was not included because many of its pages and accounts are private, which precluded an analysis of this magnitude.

Conclusion

The rise of social media as a prominent source of healthcare information has outpaced research on its reliability and safety. Our research demonstrates the potential harms of various types of misinformation, even for a benign condition that affects quality of life. Uniquely, our data further categorize and quantify the specific types of misinformation across multiple social media platforms and set the groundwork for analyzing the reach and impact of these posts. These data can help guide larger-scale policy changes, such as mandatory text warnings beneath misinformative health posts, and help providers tailor medical education to best communicate diagnoses and treatment options to patients.