Context-Aware Emotion Gating and Modulation for Fine-Grained Sentiment Classification

Abstract

1. Introduction

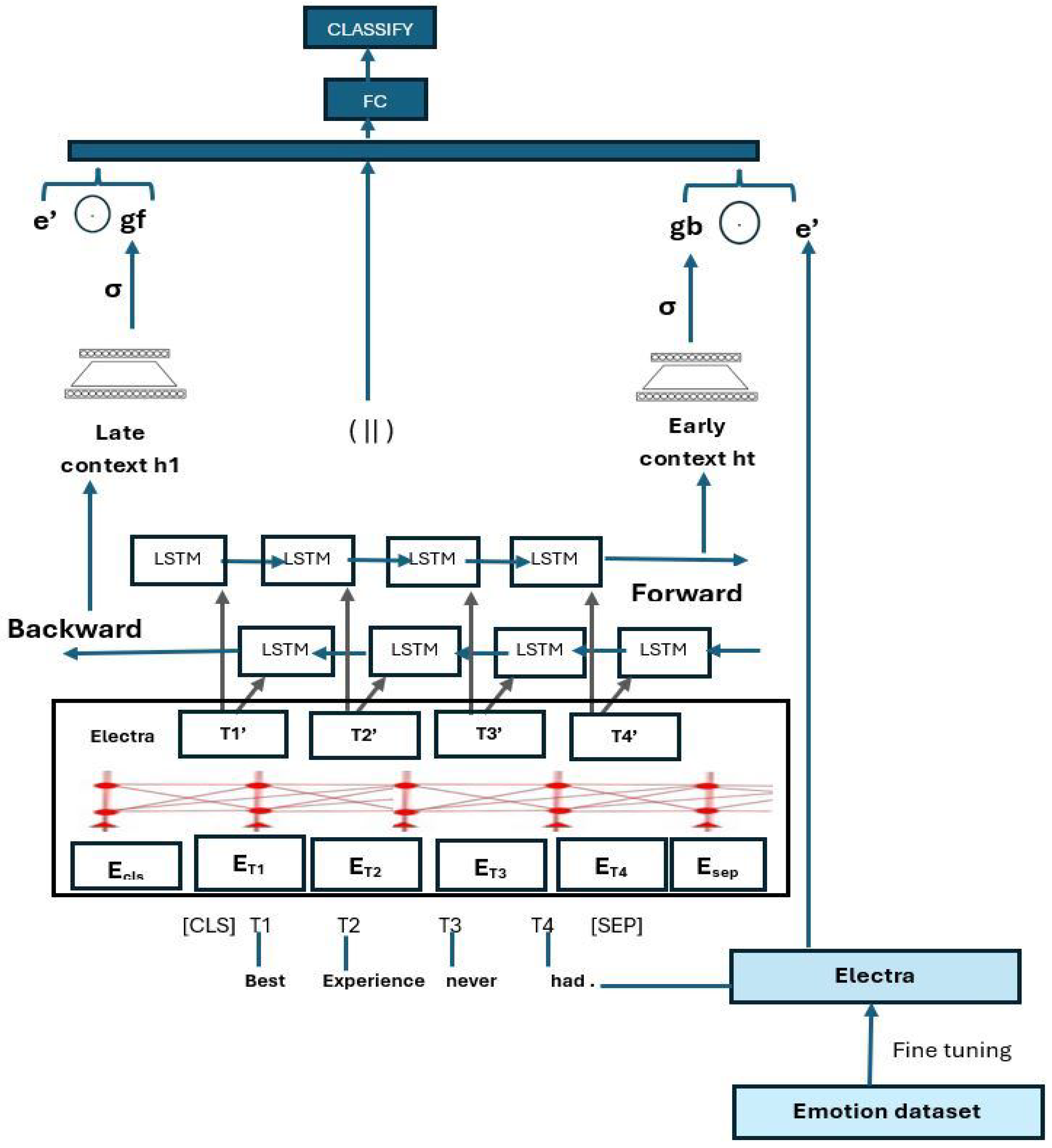

- Emotion-Aware Bidirectional Gating Mechanism: We introduce a novel gating architecture leveraging both early (forward) and late (backward) BiLSTM contexts to generate emotionally sensitive representations, enabling adaptive modulation of contextual semantics for fine-grained sentiment classification.

- Cross-Domain Emotion Modulation via Auxiliary Signals: Our method incorporates auxiliary emotion signals learned from an external emotion-labeled dataset, enabling effective emotional knowledge transfer that enhances discrimination between closely related sentiment classes.

- Model Interpretability: We evaluate the asymmetric gating strategy, which dynamically adjusts emotional influence according to linguistic context and modulates it through auxiliary emotions. Comprehensive ablation studies and emotion-weight analyses provide interpretable insights into how emotional cues are amplified or suppressed across sentiment classes.

2. Related Work

3. Methodology

3.1. Data Description

Dataset Pre-Processing

3.2. Extracting Emotional Features

3.3. Textual Encoding

3.4. Extracting Contextual Features

Extracting BiLSTM Textual Contexts

3.5. Dynamic Gating and Modulation of Emotional Features

3.6. Feature Fusion and Classification

Algorithm 1: Asymmetric Context-Aware Emotion Gating and Modulation

Input: ● Input tokens ● Attention mask M ● Emotion probabilities
Output: ● Prediction ● Gate values

// Forward/backward BiLSTM states
// Last forward state
// First backward state
// Emotion projection
// Forward gate
// Backward gate
// Forward-gated emotion features
// Backward-gated emotion features
// Fusion and return
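The steps of Algorithm 1 can be traced with a small pure-Python sketch. Every concrete formula in it is an assumption rather than the authors' definition: the emotion projection is a linear map into the hidden size; each gate is a sigmoid over the concatenation of its direction's context state and the projected emotions, producing one value per emotion (matching the per-emotion gate tables in Section 5.6); gating is element-wise multiplication of the emotion probabilities; and fusion concatenates both context states with both gated emotion vectors before a linear softmax classifier. Biases are omitted, weights are random and untrained, and the names `AsymmetricEmotionGate` and `select_context` are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Sequence[float]) -> Vector:
    """Multiply a (rows x cols) matrix by a vector of length cols."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def sigmoid(v: Vector) -> Vector:
    return [1.0 / (1.0 + math.exp(-z)) for z in v]


def softmax(v: Vector) -> Vector:
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in v]
    total = sum(exps)
    return [e / total for e in exps]


def select_context(fwd_states: List[Vector], bwd_states: List[Vector],
                   mask: Sequence[int]) -> Tuple[Vector, Vector]:
    """Last forward state (last unmasked token) and first backward state (token 0)."""
    last = max(i for i, m in enumerate(mask) if m == 1)
    return fwd_states[last], bwd_states[0]


class AsymmetricEmotionGate:
    """Hypothetical sketch: one gate value per emotion for each BiLSTM direction."""

    def __init__(self, hidden: int, n_emotions: int, n_classes: int, seed: int = 0):
        rng = random.Random(seed)

        def rand(rows: int, cols: int) -> Matrix:
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.w_emo = rand(hidden, n_emotions)                      # emotion projection
        self.w_fwd = rand(n_emotions, 2 * hidden)                  # forward gate on [h_f; e]
        self.w_bwd = rand(n_emotions, 2 * hidden)                  # backward gate on [h_b; e]
        self.w_out = rand(n_classes, 2 * hidden + 2 * n_emotions)  # classifier (biases omitted)

    def forward(self, h_f: Vector, h_b: Vector, emo_probs: Vector):
        e = matvec(self.w_emo, emo_probs)                # emotion projection
        g_f = sigmoid(matvec(self.w_fwd, h_f + e))       # forward gate (list concat = [h_f; e])
        g_b = sigmoid(matvec(self.w_bwd, h_b + e))       # backward gate
        m_f = [g * p for g, p in zip(g_f, emo_probs)]    # forward-gated emotions
        m_b = [g * p for g, p in zip(g_b, emo_probs)]    # backward-gated emotions
        probs = softmax(matvec(self.w_out, h_f + h_b + m_f + m_b))  # fusion + classifier
        pred = max(range(len(probs)), key=probs.__getitem__)
        return pred, probs, (g_f, g_b)


# Toy usage: random stand-ins for BiLSTM outputs over six tokens, two of them padding.
fwd = [[0.1 * t, -0.1 * t, 0.05, 0.0] for t in range(6)]
bwd = [[0.2, 0.1 * t, -0.1, 0.3] for t in range(6)]
mask = [1, 1, 1, 1, 0, 0]
h_f, h_b = select_context(fwd, bwd, mask)
model = AsymmetricEmotionGate(hidden=4, n_emotions=3, n_classes=5)
pred, probs, (g_f, g_b) = model.forward(h_f, h_b, [0.7, 0.2, 0.1])  # anger, neutral, joy
print(pred, [round(x, 3) for x in probs], [round(g, 3) for g in g_f])
```

Because the weights are untrained, the printed class is meaningless; the point is the data flow, with the attention mask deciding which forward state counts as "last" and the returned gate values being the quantities that the gate tables average per sentiment class.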

4. Experimental Design

4.1. Evaluation Metrics

4.2. Implementation Environment

4.3. Hyperparameter Search

4.4. Experiments

5. Results and Analysis

5.1. Ablation Study

- Base Model Isolation: To evaluate the individual contributions of ELECTRA, the BiLSTM, and the emotion features by testing simplified variants, confirming whether the added components and the emotion influence are necessary.

- Gating Mechanism Ablation: To verify whether emotion–text fusion via gating improves performance.

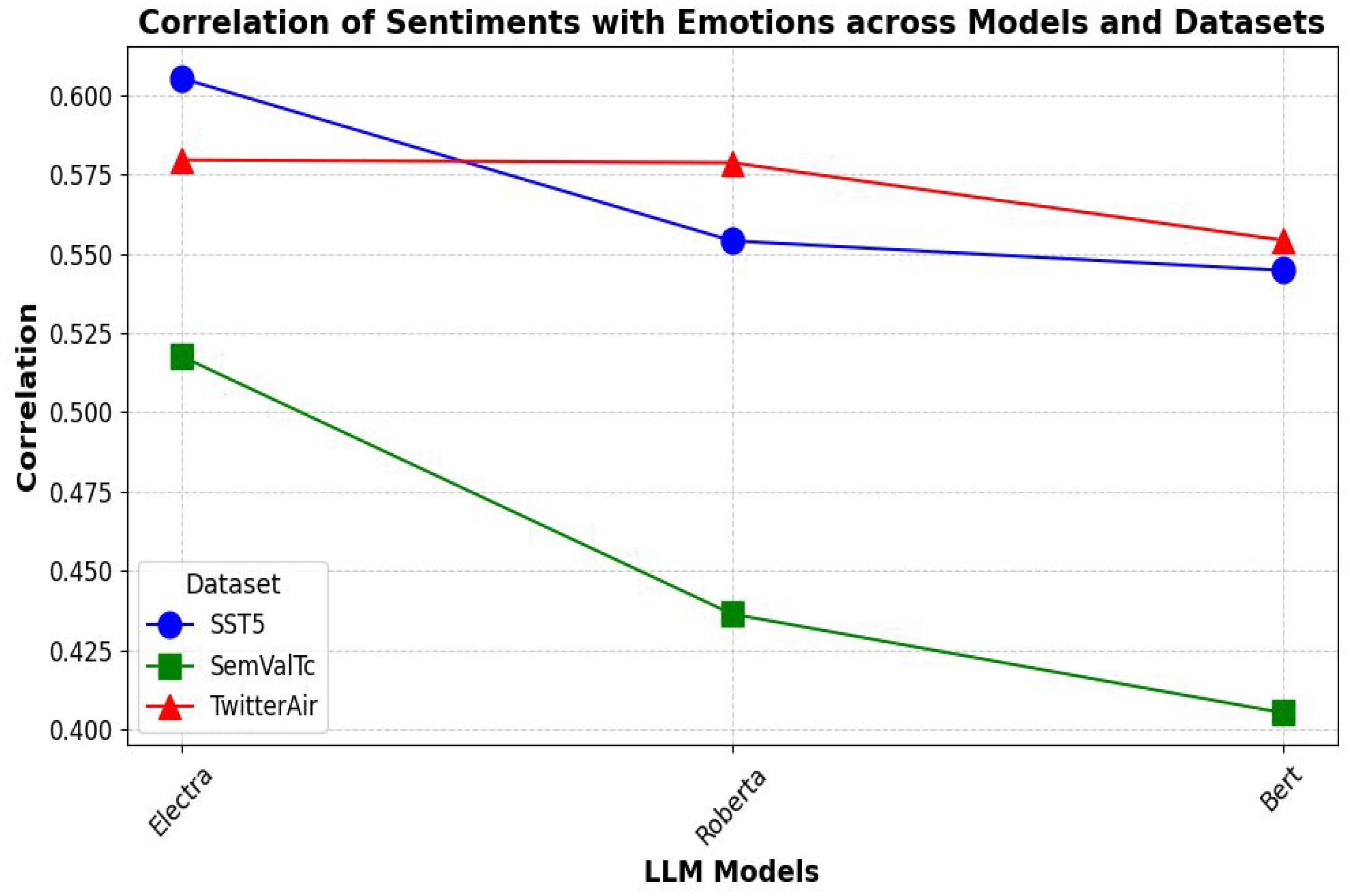

5.2. Impact of Pretrained Language Model Embeddings on the Proposed Emotion Fusion Approach

5.3. Comparison with Fine-Tuned LLMs

5.4. Comparison with State-of-the-Art Methods

- Single_Domain_Tweets [22]—A RoBERTa-large model fine-tuned on tweet data, using the [CLS] token embedding for classification.

- Fast_Textcnn [8]—A baseline model that combines FastText word embeddings with a CNN to capture local semantic features for text classification.

- DeepFusionSent [12]—A method that uses handcrafted sentiment shift patterns to model subtle transitions and enhance fine-grained sentiment detection.

- RoBERTa_HYBRID_Emoji—A method enhancing sentiment classification by fine-tuning RoBERTa embeddings and integrating BiGRU-BiLSTM layers, with a focus on handling emoticons in text.

- Senti_Twitter_BERT [22]—A BERT-based transformer architecture for SemEval-2017 Task 4A.

- BERT-Large [6]—Applies BERT-large to SST-5 classification.

- DLAWG [13]—A context-adaptive model that dynamically learns optimal window sizes and applies variable-size self-attention to enhance token-level representation and capture fine-grained contextual cues.

- LM-CPPF [24]—A method that uses LLM-generated, class-preserving paraphrases to enrich training data and improve semantic understanding within classes for classification.

- MP-TFWA [28]—A model that uses a sentence-level representation learning mechanism to capture both token-level (words/phrases) and feature-level (latent semantic) dependencies for enhanced sentiment understanding.

- LACL [1]—A model that introduces Label-aware Contrastive Loss (LCL) to explicitly model inter-class relationships, thereby enhancing fine-grained sentiment discrimination.

- Mode LSTM [15]—A model that disentangles LSTM states using orthogonal constraints and employs multi-scale temporal windows to efficiently extract non-redundant semantic features for fine-grained sentiment classification.

- GPT-4 [36]—Zero-shot prompting results on SST-5, as reported in [36], which we compare with our approach.

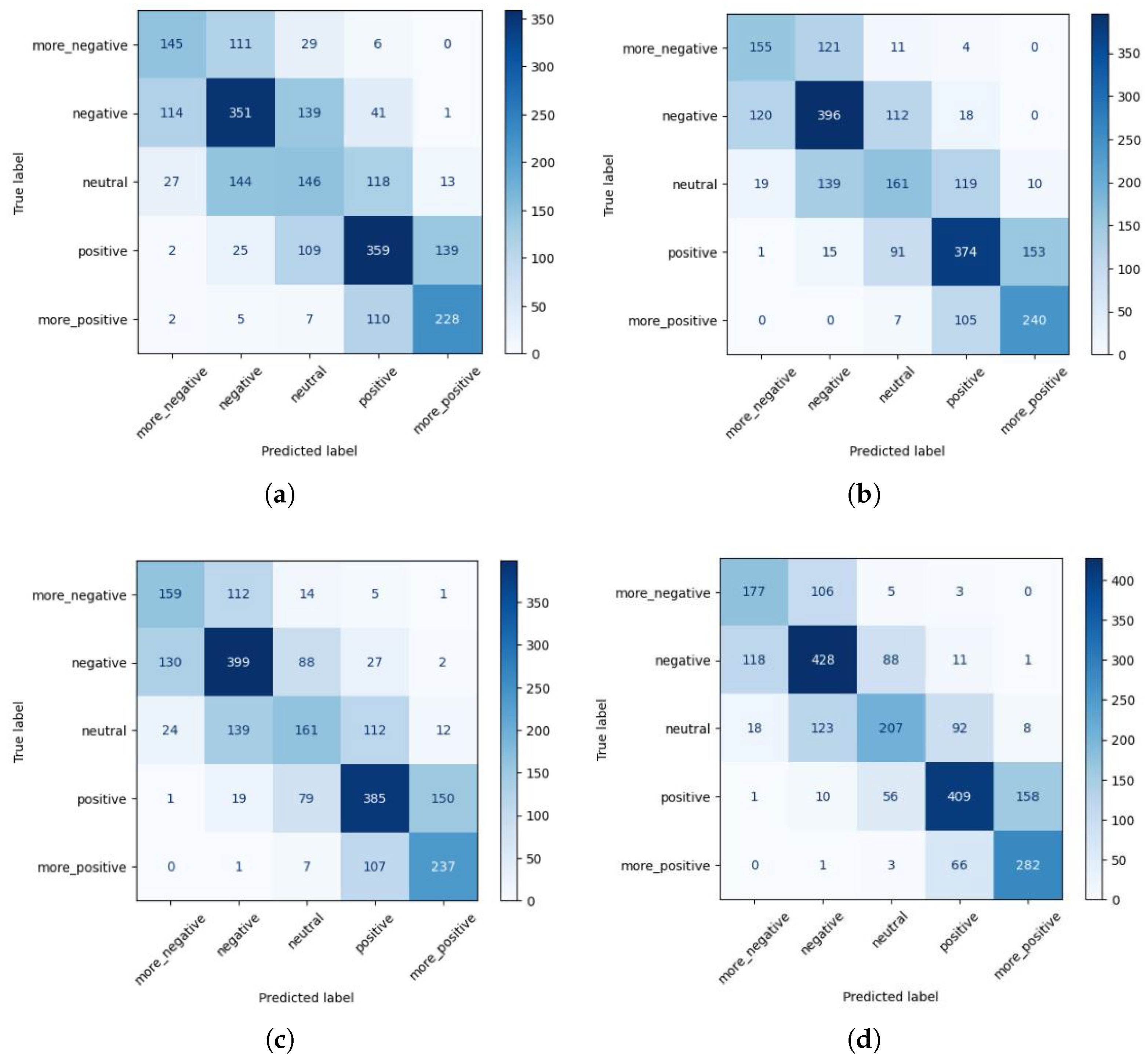

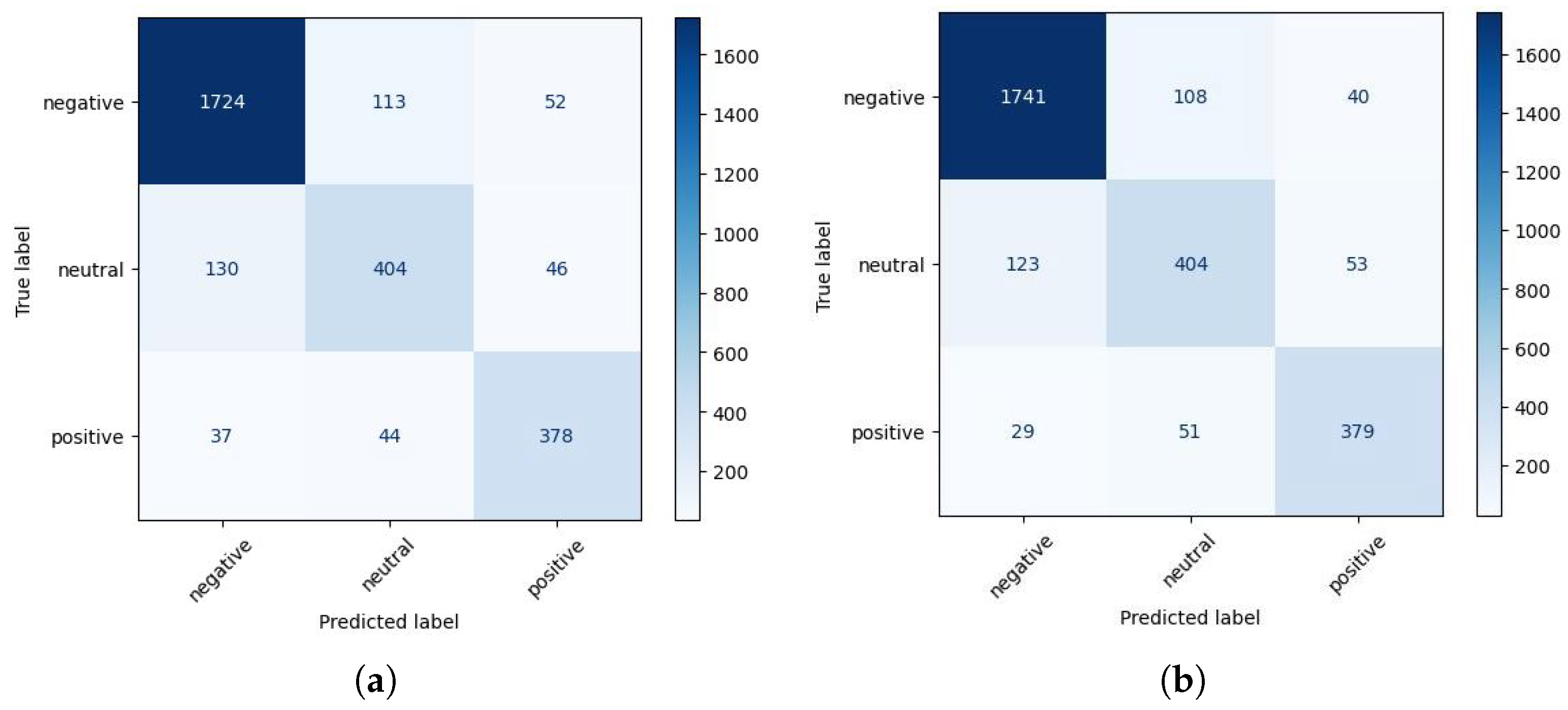

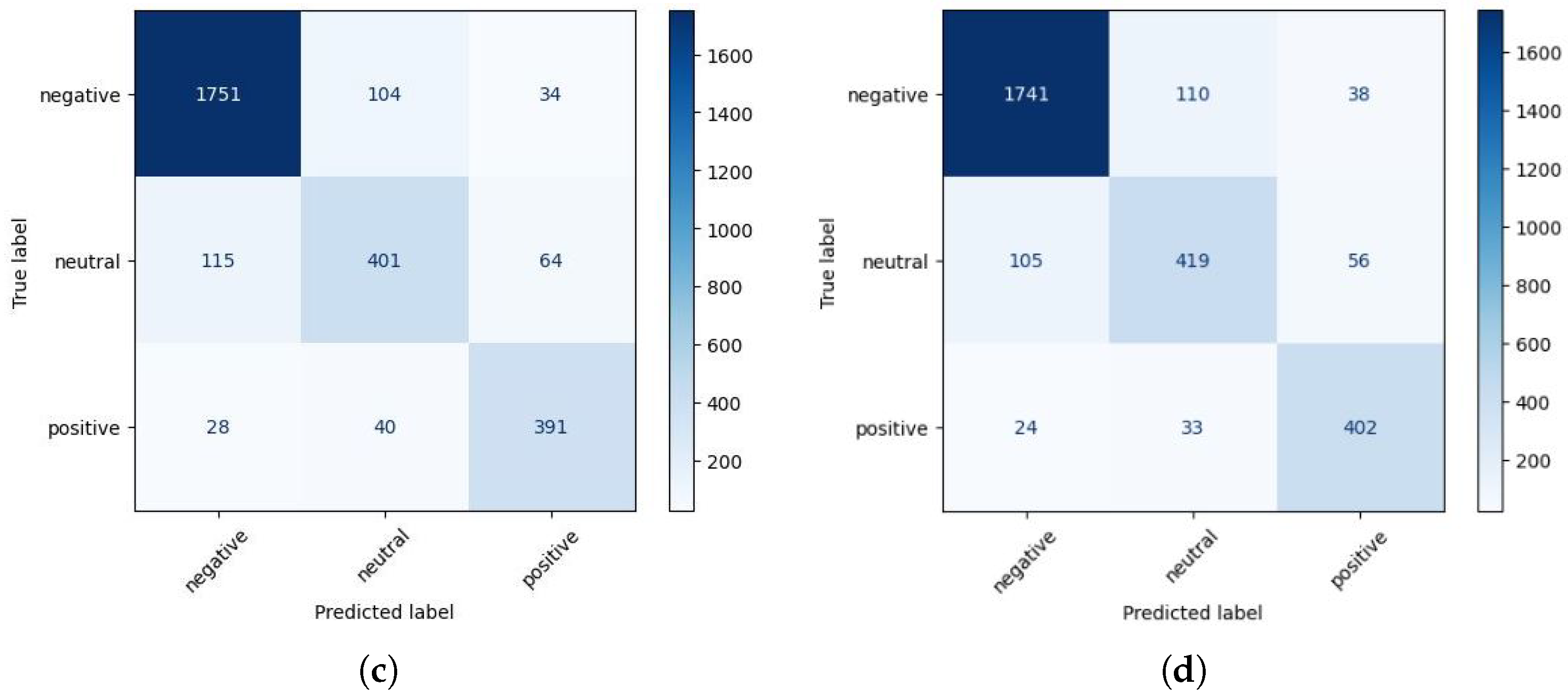

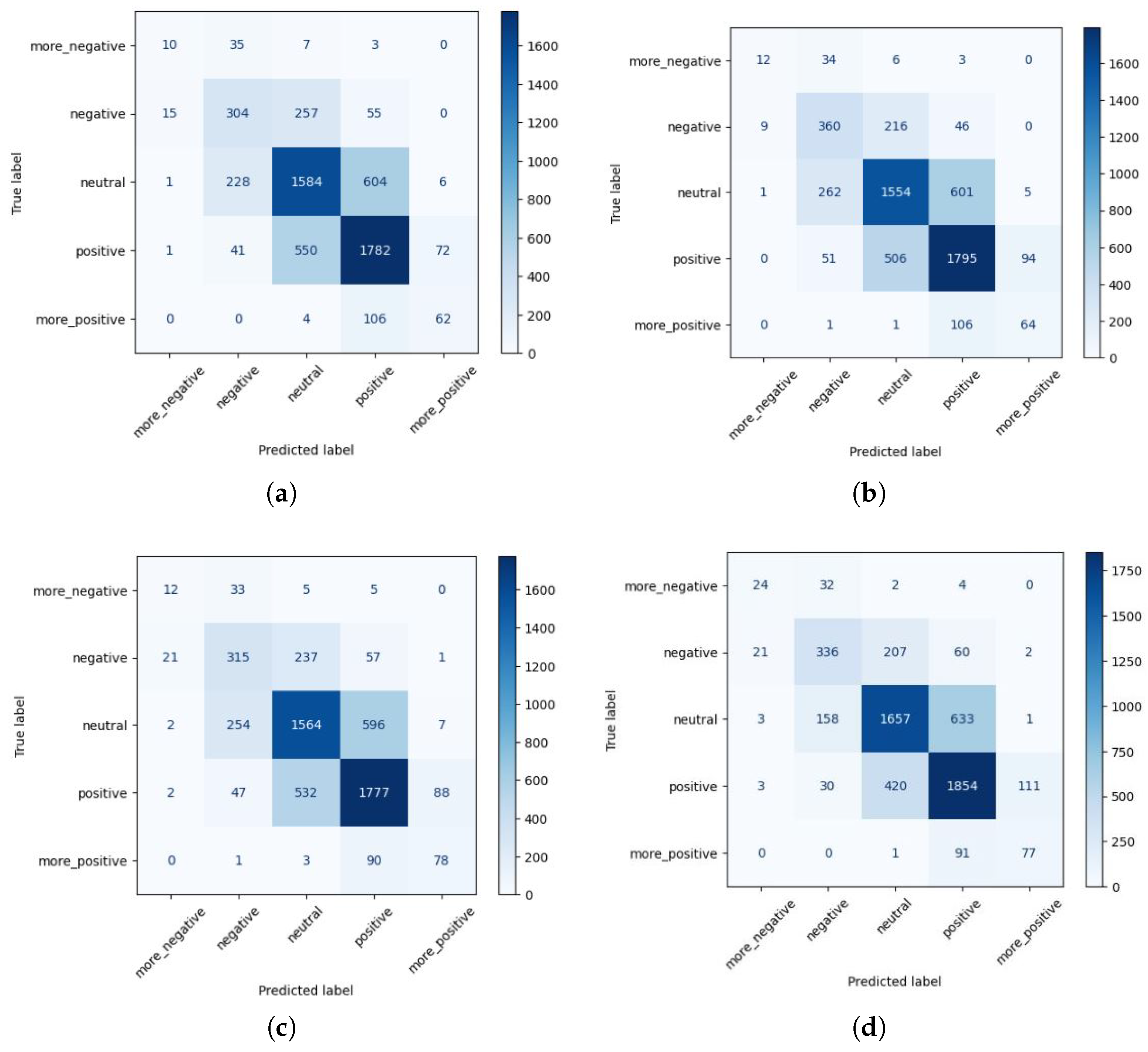

5.5. Evaluating Emotional Contributions via Confusion Matrix Analysis for Fine-Grained Sentiment Classification

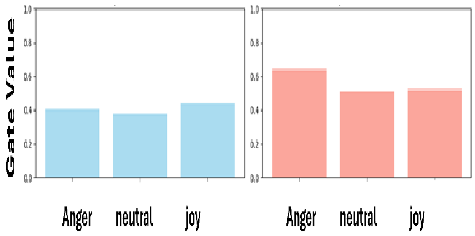

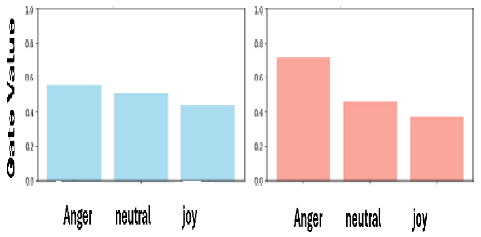

5.6. Interpretation of Fine-Grained Sentiment Analysis Based on Emotions in Different Text Contexts

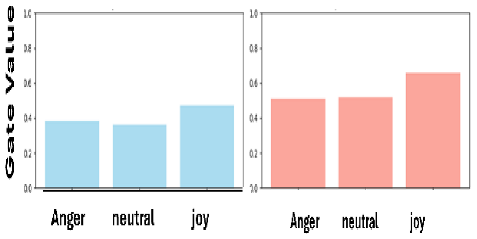

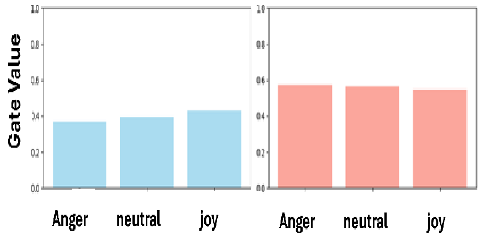

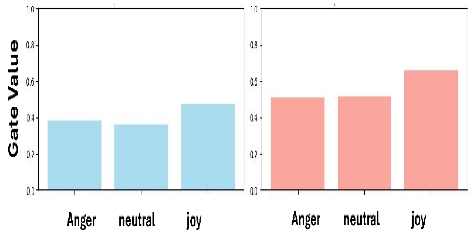

5.6.1. Emotional Bias with Text Context

5.6.2. Feature Importance

5.6.3. Interpretation of Emotion Influence of Text Context in Overall Prediction with Example Posts

5.6.4. Emotion vs. Sentiment

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Suresh, V.; Ong, D.C. Not all negatives are equal: Label-aware contrastive loss for fine-grained text classification. arXiv 2021, arXiv:2109.05427.

- Chakraborty, K.; Bhattacharyya, S.; Bag, R. A survey of sentiment analysis from social media data. IEEE Trans. Comput. Soc. Syst. 2020, 7, 450–464.

- Gandhi, A.; Adhvaryu, K.; Poria, S.; Cambria, E.; Hussain, A. Multimodal sentiment analysis: A systematic review of history, datasets, multimodal fusion methods, applications, challenges and future directions. Inf. Fusion 2023, 91, 424–444.

- Zhao, S.; Hong, X.; Yang, J.; Zhao, Y.; Ding, G. Toward label-efficient emotion and sentiment analysis. Proc. IEEE 2023, 111, 1159–1197.

- dos Santos, C.; Gatti, M. Deep convolutional neural networks for sentiment analysis of short texts. In Proceedings of the 25th International Conference on Computational Linguistics (COLING), Dublin, Ireland, 23–29 August 2014; pp. 69–78.

- Munikar, M.; Shakya, S.; Shrestha, A. Fine-grained sentiment classification using BERT. In Proceedings of the 2019 Artificial Intelligence for Transforming Business and Society (AITB), Kathmandu, Nepal, 5 November 2019; Volume 1, pp. 1–5.

- Talaat, A.S. Sentiment analysis classification system using hybrid BERT models. J. Big Data 2023, 10, 110.

- Umer, M.; Imtiaz, Z.; Ahmad, M.; Nappi, M.; Medaglia, C.; Choi, G.S.; Mehmood, A. Impact of convolutional neural network and FastText embedding on text classification. Multimed. Tools Appl. 2023, 82, 5569–5585.

- Nguyen, D.Q.; Vu, T.; Nguyen, A.T. BERTweet: A pre-trained language model for English Tweets. arXiv 2020, arXiv:2005.10200.

- Eang, C.; Lee, S. Improving the accuracy and effectiveness of text classification based on the integration of the BERT model and a recurrent neural network (RNN_BERT_Based). Appl. Sci. 2024, 14, 8388.

- Ghanee, A.; Jahanbin, K.; Yamchi, A.R. ElHBiAt: Electra pre-training network hybrid of BiLSTM and an attention layer for aspect-based sentiment analysis. IEEE Access 2025, 13, 88342–88370.

- Thakkar, A.; Pandya, D. DeepFusionSent: A novel feature fusion approach for deep learning-enhanced sentiment classification. Inf. Fusion 2025, 118, 103000.

- Tan, K.L.; Lee, C.P.; Anbananthen, K.S.M.; Lim, K.M. RoBERTa-LSTM: A hybrid model for sentiment analysis with transformer and recurrent neural network. IEEE Access 2022, 10, 21517–21525.

- Gunathilaka, T.M.A.U.; Zhang, J.; Li, Y. Fine-grained feature extraction in key sentence selection for explainable sentiment classification using BERT and CNN. IEEE Access 2025, 13, 68462–68480.

- Ma, Q.; Lin, Z.; Yan, J.; Chen, Z.; Yu, L. Mode-LSTM: A parameter-efficient recurrent network with multi-scale for sentence classification. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 6705–6715.

- Tang, D.; Qin, B.; Liu, T. Document modeling with gated recurrent neural network for sentiment classification. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), Lisbon, Portugal, 17–21 September 2015; pp. 1422–1432.

- Mena, F.; Pathak, D.; Najjar, H.; Sanchez, C.; Helber, P.; Bischke, B.; Habelitz, P.; Miranda, M.; Siddamsetty, J.; Nuske, M.; et al. Adaptive fusion of multi-modal remote sensing data for optimal sub-field crop yield prediction. Remote Sens. Environ. 2025, 318, 114547.

- Liu, C.; Chen, C. Text mining and sentiment analysis: A new lens to explore the emotion dynamics of mother-child interactions. Soc. Dev. 2024, 33, e12733.

- Choi, G.; Oh, S.; Kim, H. Improving document-level sentiment classification using importance of sentences. Entropy 2020, 22, 1336.

- Ding, K.; Fan, C.; Ding, Y.; Wang, Q.; Wen, Z.; Li, J.; Xu, R. LCSEP: A large-scale Chinese dataset for social emotion prediction to online trending topics. IEEE Trans. Comput. Soc. Syst. 2024, 11, 3362–3375.

- Yu, Z.; Li, H.; Feng, J. Enhancing text classification with attention matrices based on BERT. Expert Syst. 2024, 41, e13512.

- Fiok, K.; Karwowski, W.; Gutierrez, E.; Wilamowski, M. Analysis of sentiment in tweets addressed to a single domain-specific Twitter account: Comparison of model performance and explainability of predictions. Expert Syst. Appl. 2021, 186, 115771.

- Galal, O.; Abdel-Gawad, A.H.; Farouk, M. Rethinking of BERT sentence embedding for text classification. Neural Comput. Appl. 2024, 36, 20245–20258.

- Abaskohi, A.; Rothe, S.; Yaghoobzadeh, Y. LM-CPPF: Paraphrasing-guided data augmentation for contrastive prompt-based few-shot fine-tuning. arXiv 2023, arXiv:2305.18169.

- Yu, J.; Jiang, J.; Xia, R. Entity-sensitive attention and fusion network for entity-level multimodal sentiment classification. IEEE/ACM Trans. Audio, Speech, Lang. Process. 2019, 28, 429–439.

- Du, Y.; Li, T.; Pathan, M.S.; Teklehaimanot, H.K.; Yang, Z. An effective sarcasm detection approach based on sentimental context and individual expression habits. Cogn. Comput. 2022, 14, 78–90.

- Hossain, M.S.; Hossain, M.M.; Hossain, M.S.; Mridha, M.F.; Safran, M.; Alfarhood, S. EmoNet: Deep Attentional Recurrent CNN for X (formerly Twitter) Emotion Classification. IEEE Access 2025, 13, 37591–37610.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531.

- Demszky, D.; Movshovitz-Attias, D.; Ko, J.; Cowen, A.; Nemade, G.; Ravi, S. GoEmotions: A dataset of fine-grained emotions. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics (ACL), Online, 5–10 July 2020.

- Wang, K.; Jing, Z.; Su, Y.; Han, Y. Large language models on fine-grained emotion detection dataset with data augmentation and transfer learning. arXiv 2024, arXiv:2403.06108.

- Baziotis, C.; Pelekis, N.; Doulkeridis, C. DataStories at SemEval-2017 Task 4: Deep LSTM with Attention for Message-level and Topic-based Sentiment Analysis. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 747–754.

- Ameer, I.; Bölücü, N.; Siddiqui, M.H.F.; Can, B.; Sidorov, G.; Gelbukh, A. Multi-label emotion classification in texts using transfer learning. Expert Syst. Appl. 2023, 213, 118534.

- Kumar, A.; Singh, J.P.; Singh, A.K. Explainable BERT-LSTM stacking for sentiment analysis of COVID-19 vaccination. IEEE Trans. Comput. Soc. Syst. 2023, 12, 1296–1306.

- Arevalo, J.; Solorio, T.; Montes-y-Gómez, M.; González, F.A. Gated multimodal networks. Neural Comput. Appl. 2020, 32, 10209–10228.

- Jang, E.; Gu, S.; Poole, B. Categorical reparameterization with Gumbel-Softmax. arXiv 2016, arXiv:1611.01144.

- Liu, Z.; Yang, K.; Xie, Q.; Zhang, T.; Ananiadou, S. Emollms: A series of emotional large language models and annotation tools for comprehensive affective analysis. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD), Barcelona, Spain, 25–29 August 2024; pp. 5487–5496.

| Parameter | SST-5 | SemEval 2017 | Twitter Airline |
|---|---|---|---|
| LSTM Hidden Dim | 256 | 128 | 128 |
| Learning Rate | 2 × 10⁻⁵ | 2 × 10⁻⁵ | 2 × 10⁻⁵ |
| Batch Size | 32 | 16 | 32 |
| Dropout Rate | 0.2 | 0.2 | 0.25 |
|  | 1.5 | 1.2 | 1.5 |
|  | 0.8 | 0.7 | 0.7 |
| Epochs | 15 | 12 | 10 |
| Early Stopping Patience | 5 | 5 | 3 |
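The named rows of the hyperparameter table can be held as a per-dataset configuration, and the epoch budget and early-stopping patience together determine when training halts. The sketch below copies those named values (the two rows without a parameter name are left out) and replays a sequence of validation scores. It assumes a higher-is-better validation score such as validation F1; the monitored quantity and the names `HPARAMS` and `early_stopping_schedule` are hypothetical.

```python
from typing import Dict, List, Tuple

# Named rows from the hyperparameter table (unnamed rows omitted).
HPARAMS: Dict[str, Dict[str, float]] = {
    "SST-5":           {"lstm_hidden": 256, "lr": 2e-5, "batch_size": 32,
                        "dropout": 0.20, "epochs": 15, "patience": 5},
    "SemEval 2017":    {"lstm_hidden": 128, "lr": 2e-5, "batch_size": 16,
                        "dropout": 0.20, "epochs": 12, "patience": 5},
    "Twitter Airline": {"lstm_hidden": 128, "lr": 2e-5, "batch_size": 32,
                        "dropout": 0.25, "epochs": 10, "patience": 3},
}


def early_stopping_schedule(val_scores: List[float], max_epochs: int,
                            patience: int) -> Tuple[int, float, int]:
    """Return (best_epoch, best_score, stop_epoch); epochs are 1-indexed.

    Training stops once `patience` consecutive epochs fail to beat the best
    validation score, or when the epoch budget (or score list) runs out.
    """
    best_score, best_epoch, stale = float("-inf"), 0, 0
    n = min(len(val_scores), max_epochs)
    for epoch in range(1, n + 1):
        score = val_scores[epoch - 1]
        if score > best_score:
            best_score, best_epoch, stale = score, epoch, 0
        else:
            stale += 1
            if stale >= patience:
                return best_epoch, best_score, epoch
    return best_epoch, best_score, n


# Same hypothetical score curve under two datasets' settings.
scores = [0.50, 0.55, 0.54, 0.53, 0.52, 0.51, 0.60]
for name in ("Twitter Airline", "SST-5"):
    cfg = HPARAMS[name]
    print(name, early_stopping_schedule(scores, int(cfg["epochs"]), int(cfg["patience"])))
```

With a patience of 3, the late improvement at epoch 7 is never reached; with a patience of 5 it is, which illustrates why the smaller Twitter Airline patience trades some risk of stopping early for shorter training.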

| Ablation Study | Compared Models/Variants | Description and Justification |
|---|---|---|
| Base Models | ELECTRA-only | Remove the BiLSTM and emotion gates; fine-tune ELECTRA only to test whether the BiLSTM/gating is needed. |
|  | ELECTRA + BiLSTM | Remove emotion_probs; ELECTRA → BiLSTM → classifier. Tests the impact of emotion fusion. |
|  | ELECTRA + Emotion | Remove the BiLSTM; concatenate ELECTRA [CLS] + emotion_probs → FC. Tests whether the BiLSTM adds value. |
| Gating Mechanism | ELECTRA + BiLSTM + EMO | Replace gates with concatenation: [BiLSTM_out; emotion_probs] → FC. Tests whether gating outperforms naive fusion. |
|  | ELECTRA + BiLSTM + FG | Use only the first gate (forward LSTM) to check the necessity of bidirectional gating. |
|  | ELECTRA + BiLSTM + LG | Use only the last gate (backward LSTM) to check the necessity of bidirectional gating. |
|  | ELECTRA-BiG-Emo (Proposed) | Incorporates bidirectional gated emotion fusion and modulation for optimal emotion–text interaction. |
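To make the gated ablation variants concrete, the sketch below shows the feature vector each variant might pass to the fully connected layer. It assumes that BiLSTM_out is the concatenation of the last forward and first backward states, that each gate yields one value per emotion (anger, neutral, joy), and that a single-gate variant simply drops the other gated copy. These choices, and the helper names `gate_emotions` and `classifier_input`, are hypothetical.

```python
from typing import List, Sequence

Vector = List[float]


def gate_emotions(gate: Sequence[float], emo_probs: Sequence[float]) -> Vector:
    """Element-wise modulation: one gate value per emotion probability."""
    return [g * p for g, p in zip(gate, emo_probs)]


def classifier_input(variant: str, h_f: Vector, h_b: Vector, emo_probs: Vector,
                     g_f: Vector, g_b: Vector) -> Vector:
    """Hypothetical FC-layer input for each variant in the gating ablation."""
    context = h_f + h_b  # assumed BiLSTM_out = [last forward; first backward]
    if variant == "ELECTRA + BiLSTM + Emo":   # naive concatenation, no gates
        return context + list(emo_probs)
    if variant == "ELECTRA + BiLSTM + FG":    # forward (first) gate only
        return context + gate_emotions(g_f, emo_probs)
    if variant == "ELECTRA + BiLSTM + LG":    # backward (last) gate only
        return context + gate_emotions(g_b, emo_probs)
    if variant == "ELECTRA-BiG-Emo":          # both gates
        return context + gate_emotions(g_f, emo_probs) + gate_emotions(g_b, emo_probs)
    raise ValueError(f"unknown variant: {variant}")


h_f, h_b = [1.0, 2.0], [3.0, 4.0]              # toy context states
p = [0.5, 0.25, 0.25]                          # anger, neutral, joy
g_f, g_b = [1.0, 0.0, 0.5], [0.0, 1.0, 0.5]    # toy gate values
for name in ("ELECTRA + BiLSTM + Emo", "ELECTRA + BiLSTM + FG",
             "ELECTRA + BiLSTM + LG", "ELECTRA-BiG-Emo"):
    print(name, classifier_input(name, h_f, h_b, p, g_f, g_b))
```

Under these assumptions the concatenation baseline passes the raw emotion probabilities unchanged, so any context-dependent amplification or suppression of an emotion can only come from the gated variants.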

| Dataset | Base Model | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|---|
| SemEval | ELECTRA-only | 0.65 | 0.66 | 0.65 | 0.65 |
|  | ELECTRA + BiLSTM | 0.48 | 0.55 | 0.49 | 0.61 |
|  | ELECTRA + Emotion | 0.54 | 0.52 | 0.53 | 0.66 |
| SST-5 | ELECTRA-only | 0.56 | 0.57 | 0.56 | 0.57 |
|  | ELECTRA + BiLSTM | 0.54 | 0.53 | 0.53 | 0.55 |
|  | ELECTRA + Emotion | 0.54 | 0.55 | 0.54 | 0.55 |
| Twitter Airline | ELECTRA-only | 0.85 | 0.85 | 0.85 | 0.85 |
|  | ELECTRA + BiLSTM | 0.79 | 0.82 | 0.80 | 0.85 |
|  | ELECTRA + Emotion | 0.81 | 0.82 | 0.81 | 0.86 |

| Dataset | Gated Model | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|---|
| SemEval | ELECT. + BiLSTM + Emo | 0.52 | 0.54 | 0.53 | 0.64 |
|  | ELECT. + BiLSTM + FG | 0.57 | 0.49 | 0.51 | 0.66 |
|  | ELECT. + BiLSTM + LG | 0.53 | 0.52 | 0.52 | 0.64 |
|  | ELECTRA-BiG-Emo | 0.67 | 0.67 | 0.67 | 0.67 |
| SST-5 | ELECT. + BiLSTM + Emo | 0.53 | 0.55 | 0.53 | 0.54 |
|  | ELECT. + BiLSTM + FG | 0.53 | 0.55 | 0.54 | 0.54 |
|  | ELECT. + BiLSTM + LG | 0.52 | 0.55 | 0.53 | 0.54 |
|  | ELECTRA-BiG-Emo | 0.60 | 0.60 | 0.59 | 0.60 |
| Twitter Airline | ELECT. + BiLSTM + Emo | 0.78 | 0.82 | 0.79 | 0.84 |
|  | ELECT. + BiLSTM + FG | 0.82 | 0.79 | 0.80 | 0.86 |
|  | ELECT. + BiLSTM + LG | 0.80 | 0.81 | 0.81 | 0.85 |
|  | ELECTRA-BiG-Emo | 0.88 | 0.88 | 0.88 | 0.88 |

| Dataset | Emotion Source | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| SST-5 | GoEmotions | 0.597 ± 0.018 | 0.598 ± 0.018 | 0.597 ± 0.018 | 0.593 ± 0.018 |
| SST-5 | SemEval | 0.581 ± 0.015 | 0.585 ± 0.013 | 0.581 ± 0.015 | 0.576 ± 0.017 |
| SST-5 | ISEAR | 0.579 ± 0.014 | 0.581 ± 0.017 | 0.579 ± 0.018 | 0.574 ± 0.019 |
| Twitter Airline | GoEmotions | 0.870 ± 0.010 | 0.870 ± 0.010 | 0.870 ± 0.010 | 0.868 ± 0.013 |
| Twitter Airline | SemEval | 0.869 ± 0.009 | 0.870 ± 0.010 | 0.869 ± 0.009 | 0.868 ± 0.012 |
| Twitter Airline | ISEAR | 0.866 ± 0.011 | 0.867 ± 0.012 | 0.866 ± 0.011 | 0.864 ± 0.014 |
| SemEval | GoEmotions | 0.668 ± 0.007 | 0.668 ± 0.007 | 0.668 ± 0.007 | 0.666 ± 0.007 |
| SemEval | SemEval | 0.660 ± 0.017 | 0.665 ± 0.014 | 0.660 ± 0.017 | 0.659 ± 0.015 |
| SemEval | ISEAR | 0.664 ± 0.008 | 0.669 ± 0.006 | 0.664 ± 0.008 | 0.665 ± 0.008 |

| Group | Emotion Categories |
|---|---|
| Group 1 | Joy (admiration, joy, love), anger (sadness, anger, annoyance), neutral |
| Group 2 | Joy (joy, excitement, desire), anger (disgust, annoyance, anger), neutral |
| Group 3 | Joy (joy, surprise, pride), anger (embarrassment, disappointment, anger), neutral |
| Group 4 | Joy (joy, surprise, excitement), anger (disappointment, disgust, anger), neutral |
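Each group collapses fine-grained GoEmotions labels into three coarse emotions (joy, anger, neutral). One way to turn a fine-grained emotion distribution into the three-way auxiliary signal is to sum the probabilities of each group's member labels and renormalize. That aggregation rule, and the names `GROUP_4` and `group_emotion_probs`, are assumptions for illustration; the grouping itself is copied from Group 4 in the table above.

```python
from typing import Dict, List

# Group 4 from the table above: fine-grained labels per coarse emotion.
GROUP_4: Dict[str, List[str]] = {
    "joy": ["joy", "surprise", "excitement"],
    "anger": ["disappointment", "disgust", "anger"],
    "neutral": ["neutral"],
}


def group_emotion_probs(fine_probs: Dict[str, float],
                        groups: Dict[str, List[str]] = GROUP_4) -> Dict[str, float]:
    """Sum member-label probabilities per group, then renormalize (assumed rule).

    Labels outside every group (e.g. "love" under Group 4) are ignored.
    """
    raw = {g: sum(fine_probs.get(lbl, 0.0) for lbl in labels)
           for g, labels in groups.items()}
    total = sum(raw.values())
    if total == 0.0:
        # No probability mass on any grouped label: fall back to uniform.
        return {g: 1.0 / len(groups) for g in groups}
    return {g: v / total for g, v in raw.items()}


fine = {"joy": 0.30, "surprise": 0.10, "anger": 0.05,
        "disgust": 0.05, "neutral": 0.20, "love": 0.30}
coarse = group_emotion_probs(fine)
print({k: round(v, 3) for k, v in coarse.items()})
```

Because the groups differ in which fine labels they keep, the same fine-grained prediction can yield noticeably different coarse signals, which is one plausible reason the four groups produce different downstream scores.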

| Group | Dataset | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|---|
| Group 1 | SemEval | 0.65 | 0.65 | 0.65 | 0.65 |
|  | SST-5 | 0.56 | 0.55 | 0.54 | 0.55 |
|  | Twitter Airline | 0.85 | 0.85 | 0.85 | 0.85 |
| Group 2 | SemEval | 0.66 | 0.65 | 0.65 | 0.65 |
|  | SST-5 | 0.47 | 0.50 | 0.47 | 0.50 |
|  | Twitter Airline | 0.86 | 0.85 | 0.85 | 0.85 |
| Group 3 | SemEval | 0.66 | 0.65 | 0.65 | 0.65 |
|  | SST-5 | 0.56 | 0.57 | 0.56 | 0.57 |
|  | Twitter Airline | 0.85 | 0.85 | 0.85 | 0.85 |
| Group 4 | SemEval | 0.67 | 0.67 | 0.67 | 0.67 |
|  | SST-5 | 0.60 | 0.60 | 0.59 | 0.60 |
|  | Twitter Airline | 0.88 | 0.88 | 0.88 | 0.88 |

| Dataset | Pretrained Language Model | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|---|
| SST-5 | ELECTRA | 0.60 | 0.60 | 0.59 | 0.60 |
| SST-5 | BERT | 0.55 | 0.56 | 0.55 | 0.56 |
| SST-5 | RoBERTa | 0.56 | 0.56 | 0.55 | 0.56 |
| SemEval | ELECTRA | 0.67 | 0.67 | 0.67 | 0.67 |
| SemEval | BERT | 0.60 | 0.59 | 0.58 | 0.60 |
| SemEval | RoBERTa | 0.67 | 0.66 | 0.66 | 0.67 |
| Twitter Airline | ELECTRA | 0.88 | 0.88 | 0.88 | 0.88 |
| Twitter Airline | BERT | 0.86 | 0.86 | 0.86 | 0.86 |
| Twitter Airline | RoBERTa | 0.87 | 0.86 | 0.86 | 0.87 |

| Dataset | Fine-Tuned LLM | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|---|
| SST-5 | ELECTRA | 0.64 | 0.62 | 0.62 | 0.64 |
| SST-5 | BERT | 0.62 | 0.61 | 0.61 | 0.62 |
| SST-5 | RoBERTa | 0.61 | 0.64 | 0.61 | 0.62 |
| SemEval | ELECTRA | 0.70 | 0.71 | 0.70 | 0.71 |
| SemEval | BERT | 0.62 | 0.63 | 0.62 | 0.63 |
| SemEval | RoBERTa | 0.69 | 0.69 | 0.69 | 0.69 |
| Twitter Airline | ELECTRA | 0.90 | 0.93 | 0.91 | 0.91 |
| Twitter Airline | BERT | 0.87 | 0.89 | 0.87 | 0.89 |
| Twitter Airline | RoBERTa | 0.90 | 0.88 | 0.88 | 0.91 |

| Metric | Baseline | Score (Mean ± SD) | p-Value |
|---|---|---|---|
| Accuracy | BERT | vs. | <0.001 *** |
| Accuracy | RoBERTa | vs. | <0.001 *** |
| Accuracy | ELECTRA | vs. | 0.0012 ** |
| Precision | BERT | vs. | <0.001 *** |
| Precision | RoBERTa | vs. | <0.001 *** |
| Precision | ELECTRA | vs. | 0.00095 *** |
| Recall | BERT | vs. | <0.001 *** |
| Recall | RoBERTa | vs. | <0.001 *** |
| Recall | ELECTRA | vs. | 0.0012 ** |
| F1-score | BERT | vs. | <0.001 *** |
| F1-score | RoBERTa | vs. | <0.001 *** |
| F1-score | ELECTRA | vs. | 0.00087 *** |

| Metric | Baseline | Score (Mean ± SD) | p-Value |
|---|---|---|---|
| Accuracy | BERT | vs. | <0.001 *** |
| Accuracy | RoBERTa | vs. | |
| Accuracy | ELECTRA | vs. | 0.016 * |
| Precision | BERT | vs. | <0.001 *** |
| Precision | RoBERTa | vs. | |
| Precision | ELECTRA | vs. | |
| Recall | BERT | vs. | <0.001 *** |
| Recall | RoBERTa | vs. | |
| Recall | ELECTRA | vs. | 0.014 * |
| F1-score | BERT | vs. | <0.001 *** |
| F1-score | RoBERTa | vs. | |
| F1-score | ELECTRA | vs. | 0.013 * |

| Metric | Baseline | Score (Mean ± SD) | p-Value |
|---|---|---|---|
| Accuracy | BERT | vs. | 0.00027 *** |
| Accuracy | RoBERTa | vs. | 0.018 * |
| Accuracy | ELECTRA | vs. | 0.0008 *** |
| Precision | BERT | vs. | 0.00011 *** |
| Precision | RoBERTa | vs. | 0.009 ** |
| Precision | ELECTRA | vs. | 0.0003 *** |
| Recall | BERT | vs. | 0.00027 *** |
| Recall | RoBERTa | vs. | 0.018 * |
| Recall | ELECTRA | vs. | 0.0008 *** |
| F1-score | BERT | vs. | 0.00010 *** |
| F1-score | RoBERTa | vs. | 0.007 ** |
| F1-score | ELECTRA | vs. | 0.0003 *** |

| Dataset | Model | Recall | F1 | Accuracy | MAE |
|---|---|---|---|---|---|
| Twitter Airline | Proposed ELECTRA-BiG-Emo | 0.88 | 0.88 | 0.88 | – |
| Twitter Airline | RoBERTa_HYBRID_Emoji | – | – | 0.86 | – |
| Twitter Airline | DeepFusionSent | 0.85 | – | 0.86 | – |
| Twitter Airline | FAST_TEXTcnn | 0.86 | – | 0.86 | – |
| SemEval Task 4 | Proposed ELECTRA-BiG-Emo | 0.67 | 0.67 | 0.67 | 0.26 |
| SemEval Task 4 | Single_Domain_Tweets | – | 0.43 | – | 0.45 |
| SemEval Task 4 | Senti_Twitter_BERT | 0.54 | – | 0.54 | 0.45 |
| SST-5 | Proposed ELECTRA-BiG-Emo | – | – | 0.597 | – |
| SST-5 | LM-CPPF | – | – | 0.55 | – |
| SST-5 | Mode LSTM | – | – | 0.55 | – |
| SST-5 | DLAWG | – | – | 0.54 | – |
| SST-5 | MP-TFWA | – | – | 0.56 | – |
| SST-5 | BERT Large | – | – | 0.56 | – |
| SST-5 | LACL | – | – | 0.59 | – |
| SST-5 | GPT-4 | – | 0.50 | 0.54 | – |
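The comparison table mixes classification scores with mean absolute error (MAE), which treats the sentiment classes as ordered. In the emotion-source table, mean accuracy and mean recall coincide in every row. That pattern is consistent with support-weighted averaging, because weighted recall is Σ_c (n_c/n)(TP_c/n_c) = Σ_c TP_c / n, which is exactly accuracy. The sketch below therefore assumes weighted averaging and an ordinal encoding from 0 (More_negative) to 4 (More_positive). It also computes the macro-averaged MAE variant, since this section does not state which MAE is reported. All function names are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence

# Assumed ordinal encoding: 0 = More_negative, 1 = Negative, 2 = Neutral,
# 3 = Positive, 4 = More_positive.


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def weighted_prf(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Per-class precision/recall/F1 averaged with weights = gold-class support."""
    support = Counter(y_true)
    n = len(y_true)
    totals = {"precision": 0.0, "recall": 0.0, "f1": 0.0}
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        n_pred = sum(1 for p in y_pred if p == c)
        prec = tp / n_pred if n_pred else 0.0
        rec = tp / support[c] if support[c] else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        w = support[c] / n  # zero weight for classes that are only predicted
        totals["precision"] += w * prec
        totals["recall"] += w * rec
        totals["f1"] += w * f1
    return totals


def mae(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Mean absolute distance between ordinal labels."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_mae(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """MAE computed per gold class, then averaged over classes."""
    per_class: Dict[int, List[int]] = {}
    for t, p in zip(y_true, y_pred):
        per_class.setdefault(t, []).append(abs(t - p))
    return sum(sum(errs) / len(errs) for errs in per_class.values()) / len(per_class)


y_true = [0, 1, 2, 3, 4, 4]
y_pred = [0, 2, 2, 3, 3, 4]
scores = weighted_prf(y_true, y_pred)
print(accuracy(y_true, y_pred), scores["recall"], mae(y_true, y_pred), macro_mae(y_true, y_pred))
```

MAE rewards predictions that are off by one class over those that are off by several, which is why a model can improve MAE noticeably (0.45 to 0.26 in the SemEval rows) even when accuracy differences are smaller.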

| Sentiment | LSTM | First Emo Gate | Last Emo Gate |
|---|---|---|---|
| More_negative | 3.8339 | 2.7648 | 4.2156 |
| Negative | 3.8489 | 3.4404 | 3.8397 |
| Neutral | 3.8787 | 0.0000 | 3.6235 |
| Positive | 3.9543 | 4.5038 | 3.5901 |
| More_positive | 3.9583 | 4.6151 | 4.3517 |

| Sentiment | LSTM | First Emo Gate | Last Emo Gate |
|---|---|---|---|
| More_negative | 3.9006 | 0.9816 | 4.0604 |
| Negative | 3.8200 | 4.2171 | 0.0000 |
| Neutral | 3.8624 | 4.2111 | 3.6614 |
| Positive | 3.7977 | 2.6933 | 4.6151 |
| More_positive | 3.8124 | 4.4493 | 4.3204 |

| Sample Post | Early vs. Late Modulation | Actual | Pred. | Interpretation |
|---|---|---|---|---|
| All in all, there’s only one thing to root for: expulsion for everyone. |  | Neg. | Neg. | Positive opener (“all in all”) overridden by late anger cue (“expulsion”); late context dominates. |
| Amazon Prime Day will be like Black Friday, I guess, because I’m just as disappointed. |  | More Neg. | More Neg. | Mixed early cues; “disappointed” in late context suppresses mixed signals → strong negative. |
| Can you bear the laughter? |  | Pos. | Pos. | Early positivity; late context tempers intensity but remains Positive overall. |
| Creepy, authentic, and dark |  | Neu. | Neu. | Early gate over-weights “authentic”; late balanced cues cancel it → Neutral. |
| You are my early frontrunner for best airline! Oscars 2016. |  | More Pos. | More Pos. | Early/late cues both reinforce joy → strong positive. |

| Gate Type | Sentiment | Anger | Neutral | Joy |
|---|---|---|---|---|
| Forward | More_negative | 0.612 | 0.629 | 0.485 |
|  | More_positive | 0.398 | 0.385 | 0.438 |
|  | Negative | 0.614 | 0.604 | 0.488 |
|  | Neutral | 0.519 | 0.496 | 0.485 |
|  | Positive | 0.394 | 0.393 | 0.450 |
| Backward | More_negative | 0.520 | 0.453 | 0.507 |
|  | More_positive | 0.624 | 0.531 | 0.531 |
|  | Negative | 0.522 | 0.467 | 0.569 |
|  | Neutral | 0.489 | 0.508 | 0.626 |
|  | Positive | 0.572 | 0.569 | 0.572 |

| Gate Type | Sentiment | Anger | Neutral | Joy |
|---|---|---|---|---|
| Forward | More_negative | 0.537 | 0.490 | 0.447 |
|  | More_positive | 0.454 | 0.502 | 0.557 |
|  | Negative | 0.534 | 0.522 | 0.499 |
|  | Neutral | 0.489 | 0.519 | 0.566 |
|  | Positive | 0.456 | 0.502 | 0.574 |
| Backward | More_negative | 0.578 | 0.464 | 0.393 |
|  | More_positive | 0.616 | 0.508 | 0.450 |
|  | Negative | 0.650 | 0.558 | 0.372 |
|  | Neutral | 0.760 | 0.621 | 0.355 |
|  | Positive | 0.748 | 0.574 | 0.343 |
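The gate tables report, for each direction, one value per sentiment class and emotion. A natural way to produce such a table is to average the per-emotion gate activations of all evaluated samples that share a sentiment label; the sketch below assumes that procedure. Whether samples are grouped by gold or predicted label is not stated here, so that choice is left to the caller, and the name `mean_gate_by_sentiment` is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Sequence, Tuple

EMOTIONS = ("anger", "neutral", "joy")  # column order of the gate tables


def mean_gate_by_sentiment(
        records: Iterable[Tuple[str, Sequence[float]]]) -> Dict[str, Dict[str, float]]:
    """Average per-emotion gate values over all samples sharing a sentiment label.

    Each record is (sentiment_label, gate_vector) with one gate value per emotion.
    Call once with forward-gate records and once with backward-gate records.
    """
    sums: Dict[str, List[float]] = defaultdict(lambda: [0.0] * len(EMOTIONS))
    counts: Dict[str, int] = defaultdict(int)
    for label, gates in records:
        for i, g in enumerate(gates):
            sums[label][i] += g
        counts[label] += 1
    return {label: {emo: sums[label][i] / counts[label] for i, emo in enumerate(EMOTIONS)}
            for label in sums}


forward_records = [("Neutral", [0.4, 0.5, 0.6]),
                   ("Neutral", [0.6, 0.5, 0.4]),
                   ("Positive", [0.2, 0.3, 0.9])]
table = mean_gate_by_sentiment(forward_records)
print(table)
```

Averaging hides per-sample variation, so a class mean near 0.5 can come either from consistently neutral gating or from strong amplification and suppression that cancel out; per-sample distributions would be needed to tell these apart.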

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.

Thennakoon Mudiyanselage, A.U.G.; Zhang, J.; Li, Y. Context-Aware Emotion Gating and Modulation for Fine-Grained Sentiment Classification. Mach. Learn. Knowl. Extr. 2026, 8, 9. https://doi.org/10.3390/make8010009