3.1. Large Language Models

Large Language Models (LLMs), primarily those built on transformer architectures, have made significant strides in producing coherent, contextually relevant text [33]. They excel at pattern recognition and can generate fluent natural language by leveraging billions of parameters trained on massive corpora [34]. However, their computational principle, self-attention over sequential data, imposes fundamental limitations that hinder their ability to perform rich, open-ended problem-solving tasks.

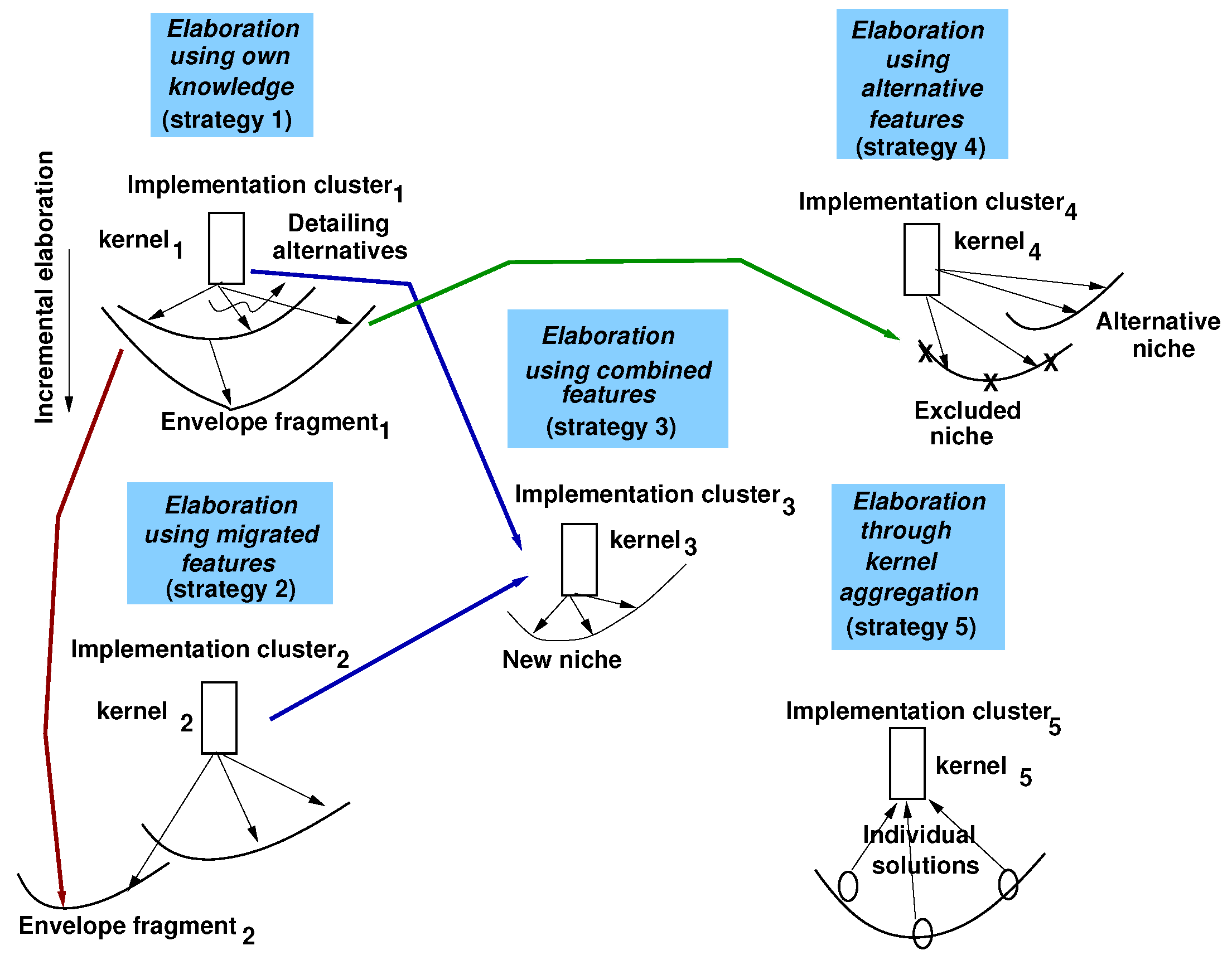

At the core of these limitations is the reliance on statistical correlations rather than genuine logical understanding. While self-attention excels at identifying relevant tokens in a sequence, it does not inherently encode hierarchical structures, domain-specific causal rules, or strict logical constraints. This stands in contrast to open-ended problem solving, where the concept space encompasses hierarchical, alternative, and fundamental concepts, and the action space includes complex operations such as feature combination, dynamic adjustment, abstraction, insight generation, and summarization [35]. LLMs struggle to engage these conceptual spaces because they lack mechanisms for hierarchical reasoning, strategic problem decomposition, or flexible reuse of insights [36].

LLMs tend to produce generalized answers aligned with statistical patterns in their training data [37]. Unlike open-ended problem solving, which demands iterative refinement (exploring solution spaces, backtracking, and learning from failures), LLMs typically generate an answer in a single forward pass [38]. Without internal models of logical inference, memory structures for knowledge accumulation, or explicit strategy formulations, LLMs cannot easily correct their reasoning or adapt based on previous mistakes [39]. This leads to hallucinations, distractions, and an inability to build complex, causally grounded explanations.

Some researchers have explored techniques like constraint-based decoding [40], sparse attention mechanisms, adapter layers [41], and memory-augmented transformers. While these approaches enhance performance on certain tasks, they remain add-ons that do not overcome the inherent limitations of attention-based architectures or enable robust open-ended problem solving. The models remain biased toward training data patterns and lack the ability to intentionally search concept space, systematically test hypotheses, or derive new conceptual abstractions [42].

In response to these challenges, methods have emerged to push LLMs toward more sophisticated reasoning and problem-solving behaviors, broadly categorized as prompt engineering, retrieval-augmented generation, and reinforcement learning.

3.2. Prompt Engineering

Prompting techniques utilize carefully constructed input prompts to guide the model’s response generation process. Techniques can be grouped into five categories discussed next.

Table 1 provides a comprehensive summary of these prompt engineering approaches.

(a) Single-stage prompting (SSP): SSP methods directly instruct the model without iterative refinement. Basic/standard/vanilla prompting simply provides a query or instruction to the model, as seen in [43]. Basic with Term Definitions augments queries with brief term definitions to offer extra context, but its impact remains limited since localized definitions may conflict with the model’s broader knowledge. Meanwhile, Basic + Annotation Guideline-Based prompting + Error Analysis-Based prompting [44] uses formally defined entity annotation guidelines to specify how clinical terms should be identified and categorized, ensuring clarity in entity recognition. In addition, it incorporates instructions derived from analyzing common model errors, such as addressing ambiguous entity boundaries or redefining prompts for overlapping terms. This strategy significantly improves clinical Named Entity Recognition, with relaxed F1 scores reported as 0.794 for GPT-3.5 and 0.861 for GPT-4 on the MTSamples dataset [45] and 0.676 for GPT-3.5 and 0.736 for GPT-4 on the VAERS dataset [46], demonstrating its effectiveness.

(b) Reasoning strategies: These methods are of three types: linear, branching, and iterative reasoning.

Linear reasoning methods such as Chain-of-Thought (CoT), Complex CoT, Thread-of-Thought (ThoT), Chain-of-Knowledge (CoK), Chain-of-Code (CoC), Logical Thoughts (LoT), Chain-of-Event (CoE), and Chain-of-Table generate a single, step-by-step sequence (chain) of responses toward the final answer. Methods differ in the type of task they target, e.g., code generation, summarization, and logical inference, and in how they refine or represent intermediate steps. CoT shows that using intermediate prompting steps can enhance accuracy, e.g., up to 39% gains in mathematical problem solving [47]. An example of an in-context prompt for CoT might be: “If the problem is ‘Calculate 123 × 456,’ break it down as (100 + 20 + 3) × 456 and compute step-by-step.” Complex CoT uses more involved in-context examples, improving performance by as much as 18% on harder tasks [48]. ThoT tackles long or chaotic contexts by breaking them into manageable parts (e.g., dividing long passages into sections for sequential summarization) [49], while CoK strategically adapts and consolidates knowledge from multiple sources to ensure coherence and reduce hallucination [50]. CoC specializes in code-oriented reasoning by simulating key code outputs (e.g., predicting intermediate variable states for debugging) [51], whereas LoT integrates logical equivalences and reductio ad absurdum checks to refine reasoning chains (e.g., validating statements by identifying contradictions in their negations) [52]. CoE handles summarization by extracting, generalizing, filtering, and integrating key events (e.g., pinpointing main events from news articles) [53], and Chain-of-Table extends CoT techniques to tabular data, dynamically applying transformations like filtering or aggregation to generate coherent answers [54].
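The place-value decomposition in the CoT prompt example above can be checked mechanically. The following is a minimal Python sketch, not taken from the cited work; `place_value_steps` is a hypothetical helper name, and it assumes a non-negative integer first factor. It lists the intermediate products that a step-by-step response would be expected to state.

```python
def place_value_steps(a: int, b: int) -> list[tuple[int, int]]:
    """Split `a` by decimal place value and return (part, part * b) pairs."""
    digits = str(a)
    steps = []
    for i, ch in enumerate(digits):
        part = int(ch) * 10 ** (len(digits) - i - 1)
        if part:  # skip zero digits, they contribute nothing
            steps.append((part, part * b))
    return steps


steps = place_value_steps(123, 456)
for part, product in steps:
    print(f"{part} x 456 = {product}")

total = sum(product for _, product in steps)
print(f"sum of partial products = {total}")
```

Each printed line corresponds to one intermediate reasoning step; the final sum is the answer the chain should arrive at.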

Branching reasoning methods, like Self-Consistency, Contrastive CoT (or Contrastive Self-Consistency), Federated Same/Different Parameter Self-Consistency/CoT (Fed-SP/DP-SC/CoT), Tree-of-Thoughts (ToT), and Maieutic prompting, explore multiple possible reasoning paths in parallel. They vary in how they sample or fuse paths, some relying on consensus votes and others on dynamic adaptation or tree-based elimination. Self-Consistency, for instance, samples diverse solution paths and selects the most consistent final answer, achieving gains of over 11% on math tasks [55]. Contrastive CoT incorporates both correct and incorrect in-context examples to broaden the model’s understanding, improving performance by over 10% compared to standard CoT [56]. Fed-SP-SC leverages paraphrased queries to crowdsource additional hints [57], while ToT maintains a tree of partial solutions and systematically explores them with breadth-first or depth-first strategies, offering up to 65% higher success rates than CoT on challenging math tasks [58]. Maieutic prompting likewise generates a tree of propositions to reconcile contradictory statements, surpassing linear methods by 20% on common-sense benchmarks [59].
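The consensus vote used by Self-Consistency can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the sampled answers are hard-coded stand-ins for final answers extracted from several reasoning paths, and `self_consistent_answer` is a hypothetical name rather than an API from the cited work.

```python
from collections import Counter


def self_consistent_answer(sampled_answers: list[str]) -> tuple[str, float]:
    """Return the most frequent final answer and its share of the votes."""
    if not sampled_answers:
        raise ValueError("need at least one sampled answer")
    counts = Counter(a.strip() for a in sampled_answers)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(sampled_answers)


# Hypothetical final answers extracted from five sampled reasoning paths.
samples = ["56088", "56088", "55088", "56088", "56098"]
answer, agreement = self_consistent_answer(samples)
print(answer, agreement)
```

The agreement ratio is not part of the basic vote, but it is a convenient by-product: a low value signals that the sampled paths disagree.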

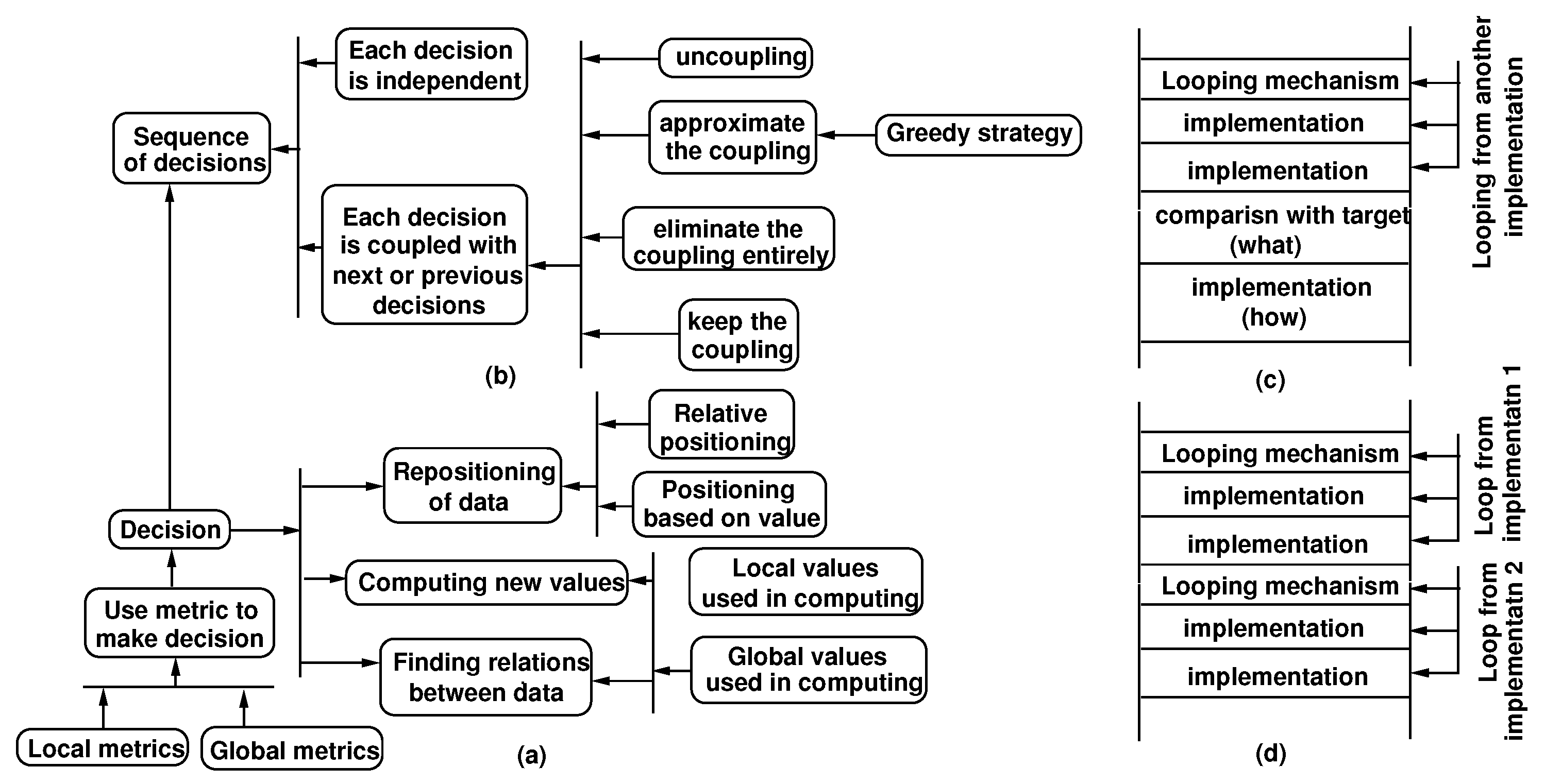

Iterative reasoning approaches, such as Plan-and-Solve (PS), Program-of-Thoughts (PoT), Chain-of-Symbol (CoS), Structured Chain-of-Thought (SCoT), and Three-Hop Reasoning (THOR), refine solutions step by step, often passing intermediate outputs back into the model to enhance accuracy. PS explicitly decomposes tasks into planning and execution phases, where the planning phase structures the problem into smaller sub-tasks, and the execution phase solves them sequentially. This reduces semantic and calculation errors, outperforming Chain-of-Thought (CoT) prompting by up to 5% [60]. PoT enhances performance by separating reasoning from computation: the model generates programmatic solutions executed by a Python interpreter, achieving up to 12% accuracy gains in numerical and QA tasks [61]. CoS encodes spatial and symbolic relationships using concise symbolic representations, which improves reasoning in spatial tasks by up to 60.8% [62]. SCoT introduces structured reasoning through program-like branching and looping, improving code generation accuracy by up to 13.79% [63]. Finally, THOR tackles emotion and sentiment analysis through a three-stage approach: aspect identification, opinion analysis, and polarity inference. This structured method achieves superior performance compared to previous supervised and zero-shot models [64]. These approaches exemplify the power of iterative methods in breaking complex problems into manageable components, thereby reducing errors and improving overall performance.
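The separation of reasoning from computation in PoT can be illustrated as follows. This is a minimal sketch, not the cited implementation: the `generated` string is a hand-written stand-in for model output, the convention of reading the result from a variable named `ans` is an assumption of this example, and emptying the builtins is a convenience for the demo rather than a real sandbox.

```python
def run_generated_program(source: str) -> object:
    """Execute model-written code and return the value bound to `ans`."""
    namespace: dict = {"__builtins__": {}}  # arithmetic only; NOT a secure sandbox
    exec(source, namespace)
    return namespace["ans"]


# Hypothetical model output for the question: "A deposit of 2000 grows 5% per
# year, compounded annually. What is it worth after 3 years?"
generated = """
principal = 2000
rate = 0.05
years = 3
ans = principal * (1 + rate) ** years
"""

result = run_generated_program(generated)
print(round(result, 2))
```

The model only has to express the reasoning as code; the interpreter performs the arithmetic, which is where calculation errors would otherwise creep in.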

(c) Multi-Stage Prompting (MSP): MSP techniques rely on iterative feedback loops or ensemble strategies. MSP methods systematically refine outputs and incorporate multiple response paths, e.g., through voting or iterative analysis, to yield more robust and accurate solutions, particularly in domains requiring deeper reasoning or tailored task adaptation. Ensemble Refinement (ER) [65] builds on Chain-of-Thought (CoT) and Self-Consistency by generating multiple CoT-based responses at high temperature (introducing diversity) and then iteratively conditioning on generated responses to produce a more coherent and accurate output, leveraging insights from the strengths and weaknesses of initial explanations and majority voting. Auto-CoT [66] constructs demonstrations automatically by clustering queries from a dataset and generating reasoning chains for representative queries using zero-shot CoT. Clustering is achieved by partitioning questions into groups based on semantic similarity, ensuring that representative queries capture the diversity of the dataset. ReAct [67] interleaves reasoning traces (thought processes that explain intermediate steps) with action steps that execute operations, enabling superior performance in complex tasks by seamlessly combining reasoning and action. Moreover, Active-Prompt [68] adaptively selects the most uncertain training queries, identified via confidence metrics like entropy or variance, for human annotation, boosting few-shot learning performance by focusing on areas with the highest uncertainty.
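The entropy-based selection step described for Active-Prompt can be sketched as below. The example assumes that each query has already been answered several times by the model; the sampled answers, the query identifiers, and the function names are hypothetical and only serve to show how disagreement is turned into a ranking.

```python
import math
from collections import Counter


def answer_entropy(answers: list[str]) -> float:
    """Shannon entropy (in nats) of the empirical answer distribution."""
    n = len(answers)
    return -sum((c / n) * math.log(c / n) for c in Counter(answers).values())


def most_uncertain(samples: dict[str, list[str]], k: int) -> list[str]:
    """Return the k queries whose sampled answers disagree the most."""
    ranked = sorted(samples, key=lambda q: answer_entropy(samples[q]), reverse=True)
    return ranked[:k]


# Hypothetical answers from four samples per query.
sampled = {
    "q1": ["4", "4", "4", "4"],   # full agreement, entropy 0
    "q2": ["7", "9", "7", "8"],   # three distinct answers
    "q3": ["2", "3", "2", "2"],   # one dissenting sample
}
to_annotate = most_uncertain(sampled, k=2)
print(to_annotate)
```

Queries at the top of the ranking are the ones that would be sent for human annotation.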

(d) Knowledge Enhancement: These approaches use high-quality examples and strategic self-monitoring to improve LLM performance. They fall into two types: example-based methods and meta-level guidance methods.

Example-based methods leverage auxiliary examples or synthesized instances to guide the response creation process of LLMs. MathPrompter [69] focuses on creating a symbolic template of the given mathematical query, solving it analytically or via Python, and then validating the derived solution with random variable substitutions before finalizing the answer. The approach boosts accuracy from 78.7% to 92.5%. Analogical reasoning [70] prompts LLMs to generate and solve similar examples before addressing the main problem, resulting in a 4% average accuracy gain across various tasks. Synthetic Prompting [71] involves a backward step, where a new query is generated from a self-constructed reasoning chain, and a forward step, where this query is re-solved; this strategy selects the most complex examples for few-shot prompts, leading to up to 15.6% absolute improvements in mathematical problem solving, common-sense reasoning, and logical reasoning.
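The validation step described for MathPrompter, checking candidate solutions against each other on random variable substitutions, can be sketched as follows. The word-problem template, the three candidate solutions, and the helper name `agree_on_random_inputs` are all assumptions made for illustration; in the cited method the candidates would be produced by the model.

```python
import random


def agree_on_random_inputs(f, g, n_vars: int, trials: int = 5, seed: int = 0) -> bool:
    """Check that two candidate solutions match on random variable assignments."""
    rng = random.Random(seed)
    for _ in range(trials):
        values = [rng.randint(1, 100) for _ in range(n_vars)]
        if abs(f(*values) - g(*values)) > 1e-9:
            return False
    return True


# Hypothetical template: "A meal costs A per person for B people, plus a flat fee C."
def algebraic(A, B, C):
    return A * B + C              # closed-form expression


def pythonic(A, B, C):
    total = 0
    for _ in range(B):            # same quantity, computed procedurally
        total += A
    return total + C


def faulty(A, B, C):
    return A * (B + C)            # deliberately wrong candidate


consistent = agree_on_random_inputs(algebraic, pythonic, n_vars=3)
rejected = not agree_on_random_inputs(algebraic, faulty, n_vars=3)
print(consistent, rejected)
```

Agreement on random inputs does not prove correctness, but disagreement reliably flags at least one candidate as wrong before the original numbers are substituted back.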

Meta-Level Guidance (MLG) methods enhance LLMs by promoting self-reflection and focusing on pertinent information, thereby reducing errors. Self-reflection involves the model evaluating its own outputs to identify and correct mistakes, leading to improved performance. For example, in translation tasks, self-reflection enables LLMs to retrieve bilingual knowledge, facilitating the generation of higher-quality translations. Focusing is achieved through techniques like System 2 Attention (S2A) [72], which filters out irrelevant content by prompting the model to regenerate the context to include only essential information before producing a final response. This two-step approach enhances reasoning by concentrating on relevant details, thereby improving accuracy. S2A has been shown to outperform basic prompting methods, including Chain-of-Thought (CoT) and instructed prompting, particularly on truthfulness-oriented datasets. Metacognitive Prompting (MP) [73] introduces a five-stage process to further enhance LLM performance: (1) Comprehension: The model attempts to understand the input, ensuring clarity before proceeding. (2) Preliminary Judgment: An initial assessment is made based on the understood information. (3) Critical Evaluation: The initial judgment is scrutinized, considering alternative perspectives and potential errors. (4) Final Decision with Explanation: A conclusive decision is reached, accompanied by a rationale to support it. (5) Self-Assessment of Confidence: The model evaluates its confidence in the final decision, reflecting on the reasoning process. This structured approach enables LLMs to perform consistently better than methods like CoT and Plan-and-Solve (PS) across various natural language processing tasks, including paraphrasing, natural language inference, and named entity recognition.

(e) Task Decomposition: These approaches break down complex tasks into smaller steps but vary in how they orchestrate and execute the sub-problems. They include problem breakdown and sequential solving methods.

Problem breakdown approaches include the Least-to-Most method [74], which addresses the challenge of Chain-of-Thought (CoT) failing on problems more difficult than its exemplars by first prompting the LLM to decompose a query into sub-problems and then solving them sequentially, demonstrating notable improvements over CoT and basic prompting on tasks like commonsense reasoning and mathematical problem solving. The decompositions are characterized by their hierarchical structure, breaking down complex problems into simpler, manageable sub-tasks that build upon each other to facilitate step-by-step reasoning. Decomposed Prompting (DecomP) breaks complex tasks into simpler sub-tasks, each handled with tailored prompts or external tools, ensuring efficient and accurate execution. For instance, the task “Concatenate the first letters of words in ‘Jack Ryan’” is decomposed into extracting words, finding their first letters, and concatenating them [75]. DecomP leverages modular decomposers to partition problems hierarchically or recursively, assigning sub-tasks to specialized LLMs or APIs. This approach achieves a 25% improvement over CoT and Least-to-Most methods in commonsense reasoning. Program-Aided Language models (PAL) [76] further leverage interleaved natural language and programmatic steps to enable Python-based execution of the reasoning process, surpassing CoT and basic methods for mathematical and commonsense tasks.
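The “Jack Ryan” decomposition mentioned above maps naturally onto three small handlers. In the sketch below each sub-task is an ordinary Python function; this is an assumption made so the example runs on its own, whereas in DecomP each handler could be a separately prompted LLM or an external tool. All function names are hypothetical.

```python
def split_words(text: str) -> list[str]:
    """Sub-task 1: extract the words."""
    return text.split()


def first_letter(word: str) -> str:
    """Sub-task 2: find the first letter of one word."""
    return word[0]


def concatenate(parts: list[str]) -> str:
    """Sub-task 3: join the letters."""
    return "".join(parts)


def solve(text: str) -> str:
    """Decomposer: route the task through the three sub-task handlers in order."""
    words = split_words(text)
    letters = [first_letter(w) for w in words]
    return concatenate(letters)


result = solve("Jack Ryan")
print(result)
```

Because each handler has a narrow contract, any one of them can be swapped for a tool call or a differently prompted model without touching the others.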

Sequential solving includes methods like Binder and Dater algorithms. Binder [77] integrates neural and symbolic parts by using an LLM both as a parser and executor for natural language queries, leveraging programming languages like Python or SQL for structured execution. Binding is achieved through a unified API that enables the LLM to generate, interpret, and execute code using a few in-context examples, leading to higher accuracy on table-based tasks compared to fine-tuned approaches. Dater [78] focuses on few-shot table reasoning by splitting a large table into relevant sub-tables, translating complex queries into SQL sub-queries, and combining partial outcomes into a final solution. These three steps aim to systematically extract meaningful data, execute precise operations, and integrate results to address complex queries, outperforming fine-tuned methods by at least 2% on Table-Based Truthfulness and 1% on Table-Based QA, and surpassing Binder on these tasks.
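The three steps attributed to Dater (sub-table selection, SQL sub-queries, and combination of partial results) can be illustrated with Python's built-in `sqlite3` module. The table, its contents, and the question are invented for this sketch, and the SQL is written by hand here, whereas in the cited method the LLM would produce the sub-table choice and the sub-queries.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE medals (country TEXT, year INTEGER, gold INTEGER, silver INTEGER)"
)
conn.executemany(
    "INSERT INTO medals VALUES (?, ?, ?, ?)",
    [("A", 2016, 10, 4), ("A", 2020, 7, 9), ("B", 2016, 3, 8), ("B", 2020, 12, 1)],
)

# Step 1: keep only the columns relevant to the question
# "Did country A win more gold medals in total than country B?"
conn.execute("CREATE VIEW sub AS SELECT country, gold FROM medals")

# Step 2: answer each sub-question with its own SQL query.
gold_a = conn.execute("SELECT SUM(gold) FROM sub WHERE country = 'A'").fetchone()[0]
gold_b = conn.execute("SELECT SUM(gold) FROM sub WHERE country = 'B'").fetchone()[0]

# Step 3: combine the partial results into the final answer.
answer = gold_a > gold_b
print(gold_a, gold_b, answer)
```

Delegating the aggregation to SQL keeps the numeric work exact and leaves the model responsible only for choosing what to select and how to combine it.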

3.3. Retrieval-Augmented Generation

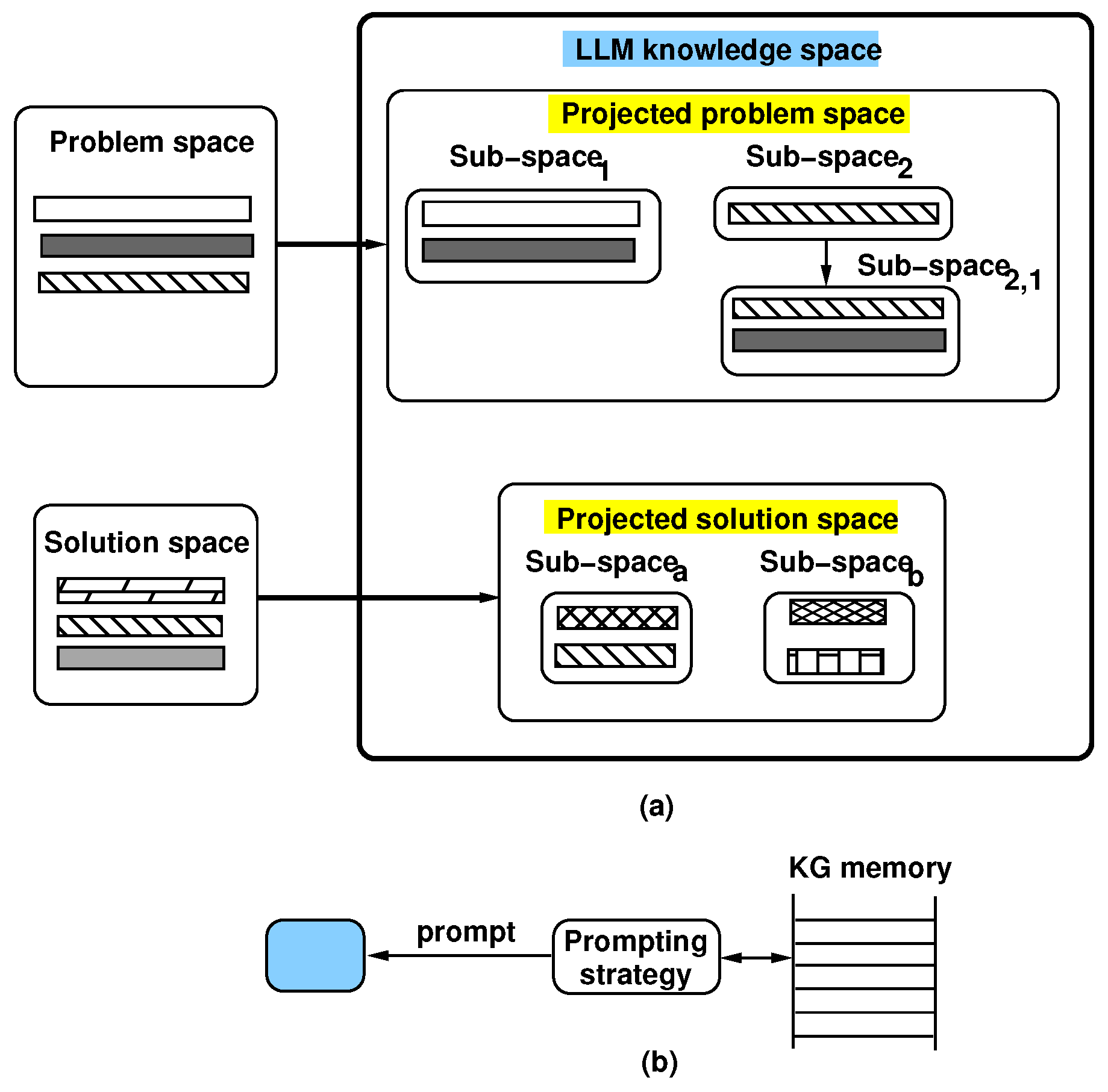

Retrieval-augmented generation (RAG) addresses LLMs’ lack of persistent memory and factual grounding by integrating external knowledge sources into the generation process [79]. Instead of relying solely on learned parameters, RAG systems retrieve relevant documents, facts, or structured data at inference time, reducing hallucinations and ensuring reasoning steps reference accurate, up-to-date information [80]. RAG advancements span healthcare, finance, education, and scientific research, addressing challenges in reasoning, problem-solving, and knowledge integration. This review categorizes these advancements into four areas: task-specific and schema-based techniques, self-aware and adaptive mechanisms, long-term memory integration, and multi-hop and multi-modal reasoning.

Table 2 provides a comprehensive summary of these RAG approaches.

(a) Task-Specific and Schema-Based Retrieval (TSR): TSR approaches employ structured methods for domain-specific challenges in mathematics and knowledge-intensive tasks, categorized into schema-based and data-driven techniques.

Schema-based techniques use predefined structures to guide reasoning. Schema-Based Instruction RAG (SBI-RAG) [81] formalizes schemas to represent problem structures through knowledge graphs that map domain-specific concepts. The Knowledge Graph-Enhanced RAG Framework (KRAGEN) [82] employs graph-of-thoughts reasoning to decompose tasks into sub-problems, retrieve relevant knowledge, and synthesize solutions. KRAGEN integrates visualization tools for explainability, allowing users to trace sub-problem decomposition and validate reasoning processes. However, reliance on predefined templates limits performance on novel problems.

Data-driven retrieval methods extract insights without predefined schemas. Generative Retrieval-Augmented Matching (GRAM) [83] uses hierarchical classification for dynamic schema alignment, performing coarse-grained matching followed by fine-grained context-based refinement. GRAM adapts to evolving query patterns with minimal labeled data but struggles in noisy environments. TableRAG [84] reasons over tabular data through query expansion, schema retrieval, and cell-level reasoning, though query expansion introduces latency unsuitable for real-time tasks.

(b) Self-Aware and Adaptive Retrieval: Self-aware RAG frameworks address ambiguous queries, conflicting information, and knowledge gaps through adaptive mechanisms.

SeaKR [85] detects uncertainty through internal state inconsistencies, triggering retrieval when confidence is low and re-ranking snippets to reduce uncertainty. While effective for uncertainty detection, it struggles with domain-specific tasks and may trigger unnecessary retrievals for complex but unambiguous queries.

Self-Reflective RAG (Self-RAG) [86] uses token-based strategies for iterative refinement. Retrieve tokens assess query complexity to determine retrieval needs, while Critique tokens evaluate responses across relevance, support, and usefulness dimensions. Through iterative retrieval-critique cycles, Self-RAG adapts responses to retrieved knowledge, though this increases latency and may overfit to evaluation criteria.

Speculative RAG [87] employs a two-stage process: a smaller model generates diverse draft responses from clustered document subsets, then a larger model evaluates the drafts via conditional generation probabilities. This achieves a 12.97% accuracy improvement and a 51% latency reduction on PubHealth, though it lacks uncertainty detection mechanisms and may miss nuances in dynamic queries.

SimRAG [88] fine-tunes RAG for specialized domains through self-training with pseudo-labeled data generated from unlabeled corpora. While addressing domain-specific knowledge gaps, it risks overfitting and lacks explicit uncertainty detection.

CR-Planner [89] uses critic models trained via Monte Carlo Tree Search (MCTS) to evaluate sub-goals and execution paths, decomposing tasks for clarity. However, MCTS incurs high computational costs, and domain-specific critics limit generalizability.

Self-Rewarding Tree Search (SeRTS) [90] frames retrieval as tree search, using MCTS with upper confidence bounds to select nodes and Proximal Policy Optimization to refine strategies. While effective for biomedical retrieval, tree search becomes computationally expensive for complex queries.
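The node-selection rule that MCTS-based methods such as the one above rely on can be sketched with the standard UCB1 formula. This illustrates only the selection step; the reward definition and the policy refinement of the cited system are not reproduced, and the candidate query rewrites, their statistics, and the exploration constant `c` are assumptions of the example.

```python
import math


def ucb_score(total_reward: float, visits: int, parent_visits: int, c: float = 1.4) -> float:
    """UCB1: mean reward plus an exploration bonus; unvisited nodes go first."""
    if visits == 0:
        return math.inf
    return total_reward / visits + c * math.sqrt(math.log(parent_visits) / visits)


def select_child(children: dict[str, tuple[float, int]]) -> str:
    """Pick the child with the highest UCB score from (total_reward, visits) stats."""
    parent_visits = sum(visits for _, visits in children.values())
    return max(children, key=lambda name: ucb_score(*children[name], parent_visits))


# Hypothetical statistics for three candidate query rewrites.
before = {"refine query": (3.0, 5), "broaden query": (1.0, 1), "add synonym": (0.0, 0)}
after = {"refine query": (3.0, 5), "broaden query": (1.0, 1), "add synonym": (0.2, 1)}

first_pick = select_child(before)   # the unvisited node is tried first
second_pick = select_child(after)   # then a rarely visited, high-mean node wins
print(first_pick, second_pick)
```

The bonus term shrinks as a node accumulates visits, which is how the search shifts from exploration toward exploitation.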

(c) Long-Term Memory for Knowledge Retrieval: Long-term memory integration addresses limitations of query-specific retrieval: poor context retention, limited reusability, and inability to accommodate human feedback [91].

HippoRAG [92] organizes information using knowledge graphs (KGs) where entities are nodes and relationships are edges, constructed via Open Information Extraction. Personalized PageRank retrieves relevant subgraphs for queries, supporting multi-hop reasoning by combining relationships across nodes. HippoRAG achieves a 20% improvement on multi-hop benchmarks like 2WikiMultiHopQA [93] and MuSiQue [94].
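Personalized PageRank over a small knowledge graph can be sketched with plain power iteration. This is a generic textbook formulation, not the cited system's code: the toy graph, the seed entity, the damping factor, and the iteration count are all assumptions chosen for illustration.

```python
def personalized_pagerank(graph, seeds, damping=0.85, iterations=50):
    """Power iteration where the random walk restarts at the seed nodes."""
    nodes = list(graph)
    restart = {n: (1.0 / len(seeds) if n in seeds else 0.0) for n in nodes}
    rank = dict(restart)
    for _ in range(iterations):
        nxt = {n: (1.0 - damping) * restart[n] for n in nodes}
        for n in nodes:
            neighbours = graph[n]
            if neighbours:
                share = damping * rank[n] / len(neighbours)
                for m in neighbours:
                    nxt[m] += share
            else:
                # Dangling node: hand its mass back to the seeds.
                for m in nodes:
                    nxt[m] += damping * rank[n] * restart[m]
        rank = nxt
    return rank


# Toy undirected KG stored as adjacency lists; entity names are illustrative.
kg = {
    "Marie Curie": ["polonium", "Sorbonne"],
    "polonium": ["Marie Curie", "Poland"],
    "Sorbonne": ["Marie Curie", "Paris"],
    "Poland": ["polonium"],
    "Paris": ["Sorbonne"],
    "Tokyo": ["Japan"],
    "Japan": ["Tokyo"],
}
scores = personalized_pagerank(kg, seeds={"Marie Curie"})
for node, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{node}: {score:.3f}")
```

Two-hop neighbours of the seed (here "Poland" and "Paris") receive non-zero scores, while the disconnected component stays at zero, which is the property that supports multi-hop retrieval.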

Long-term memory systems can be characterized across five dimensions: (i) KG types and management, (ii) relevant information identification, (iii) knowledge utilization mechanisms, (iv) memory organization, and (v) response integration into KGs.

For dimension (i), HybridRAG combines structured KGs with vector-based retrieval for unstructured data, enhancing retrieval in domains like finance requiring both precise definitions and broad context [95]. For dimension (ii), GRAG retrieves k-hop ego-graphs with soft pruning [96], while SimGRAG identifies similar subgraphs using recurring patterns for consistency [97].

For dimension (iv), MemLong [98] maintains a compressed memory buffer with dynamic caching, handling up to 65,000 tokens while prioritizing frequently accessed information. HAT [99] organizes dialogue history into hierarchical aggregate trees for recursive aggregation. MemoRAG [100] decouples memory updates from retrieval, using draft answers as clues for precise retrieval and processing up to 600K tokens through token compression.

For dimension (v), Pistis-RAG [101] adapts to human feedback through multistage processing (matching, pre-ranking, ranking, reasoning, aggregating), achieving a 9.3% improvement on MMLU, though continuous feedback may introduce variability.

(d) Multi-Hop and Multi-Modal Reasoning Retrieval: Multi-hop reasoning connects information across multiple steps for coherent answers, while multi-modal retrieval synthesizes data from images, text, audio, and video.

Multi-layered Thoughts Enhanced RAG (METRAG) [102] employs four components: similarity-oriented retrieval identifies relevant documents, utility assessment evaluates usefulness via task relevance and completeness metrics, task-adaptive summarization reduces redundancy, and contextual reasoning integrates information for nuanced outputs.

RAG-Star [103] integrates Monte Carlo Tree Search with retrieval-augmented verification, balancing exploration and exploitation through random simulations. Query- and answer-aware reward modeling refines reasoning trajectories for multi-step logical inference.

KRAGEN [82] uses Graph-of-Thoughts to decompose queries into subproblems represented as dynamically constructed graphs. Vectorized embeddings retrieve relevant data from domain-specific knowledge graphs, synthesizing results while preserving dependencies. However, multi-hop techniques rely heavily on underlying knowledge source quality.

Multi-modal extensions handle diverse data formats. M3DocRAG [104] encodes document images into visual embeddings, retrieves pages via multi-modal models, and generates answers for both single-hop and multi-hop queries. VisRAG [105] embeds documents directly as images using vision–language models, avoiding text parsing loss while retaining structure and context. OmniSearch [106] employs self-adaptive planning to decompose multi-modal queries into sub-question chains, adapting retrieval strategies based on query characteristics and temporal factors.

(e) Self-Critique Methods: Self-critique methodologies systematically verify and refine outputs to enhance reliability and factual accuracy in RAG systems.

Chain-of-Verification (CoVe) [107] follows four steps: generating an initial response, formulating verification questions to fact-check the response, independently answering these questions to reduce bias, and revising the answer using validated information. The model dynamically generates verification questions by conditioning on the query and baseline response, cross-referencing with existing knowledge sources. CoVe achieves over 10% performance gains compared to standard prompting and Chain-of-Thought methods.
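The four CoVe steps can be laid out as a short pipeline. The sketch below is an assumption-laden illustration: `llm` is any callable that maps a prompt string to a completion, the prompt wording is invented, and `fake_llm` is a stub that stands in for a real model so the control flow can be run and inspected.

```python
from typing import Callable


def chain_of_verification(query: str, llm: Callable[[str], str]) -> str:
    # Step 1: draft an initial response.
    draft = llm(f"Answer the question.\nQ: {query}")
    # Step 2: plan verification questions conditioned on query and draft.
    plan = llm(f"List fact-check questions, one per line.\nQ: {query}\nA: {draft}")
    questions = [q.strip() for q in plan.splitlines() if q.strip()]
    # Step 3: answer each check WITHOUT showing the draft, so its errors are not copied.
    checks = [(q, llm(f"Answer concisely.\nQ: {q}")) for q in questions]
    # Step 4: revise the draft using the verified facts.
    evidence = "\n".join(f"{q} -> {a}" for q, a in checks)
    return llm(f"Revise the draft.\nQ: {query}\nDraft: {draft}\nFacts:\n{evidence}")


calls: list[str] = []


def fake_llm(prompt: str) -> str:
    """Stub model that records prompts and returns canned text."""
    calls.append(prompt)
    if prompt.startswith("List fact-check"):
        return "Where was X born?\nWhen was X born?"
    if prompt.startswith("Revise"):
        return "revised answer"
    return "stub answer"


final = chain_of_verification("Who was X?", fake_llm)
print(len(calls), final)
```

The design choice worth noting is in step 3: the verification prompts contain only the question, which is what "independently answering" amounts to in practice.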

Verify-and-Edit (VE) [108] identifies weak or contradictory reasoning through self-consistency by generating multiple solutions and using majority voting to pinpoint uncertainty. VE integrates validated evidence from trusted sources, demonstrating 9.5% gains in multi-hop reasoning and a 25.6% improvement in truthfulness evaluations.

3.4. Reinforcement Learning

Reinforcement learning (RL) refines LLM behavior through iterative feedback and reward signals from human feedback, automated metrics, or pre-trained reward models [109]. RL comprises six components: agent, environment, state, action, reward, and policy [110]. For LLM fine-tuning, the LLM represents the policy, the textual sequence is the state, and token generation is the action. Once a complete sequence has been generated, a reward scores its quality; this signal is used either to train a reward model or to guide alignment directly. RL methods divide into model-based and model-free approaches.

Table 3 provides a comprehensive summary of these RL techniques.

(a) Model-based RL Approaches: These methods fall into three groups, RLHF, RLAIF, and exploration, which are discussed next.

Reinforcement Learning from Human Feedback (RLHF) incorporates reward signals from human evaluations through three stages: supervised fine-tuning (SFT) with labeled datasets, training a reward model (RM) from human-evaluated outputs, and policy fine-tuning using Proximal Policy Optimization (PPO) [111]. InstructGPT [112] exemplifies RLHF’s effectiveness in instruction adherence. These methods address challenges like length bias [113,114], while frameworks like trlX [115] and datasets like UltraFeedback [116] scale RLHF to tasks including summarization, translation, and dialogue generation.

PPO iteratively adjusts model weights to maximize expected rewards. Reward model training relies on human feedback through curated datasets of ranked examples, as in Skywork-Reward [117] and TÜLU-V2-mix [118]. Tool-augmented reward modeling [119] integrates external resources like calculators, while generative reward models use synthetic preferences to reduce human feedback dependence. Pairwise feedback pipelines [120] improve preference learning by comparing response pairs. However, RLHF remains resource-intensive and risks over-optimization, where models exploit reward function weaknesses rather than achieving genuine alignment [121,122].

RL from AI Feedback (RLAIF) replaces human evaluators with AI systems for better scalability and consistency [123]. Reward models are trained using LLM-generated preference labels transformed via softmax and optimized through cross-entropy loss [124]. Approaches include large-scale datasets like UltraFeedback with GPT-4 annotations [116], self-synthesis methods like Magpie [125], and permissively licensed datasets like HelpSteer2 [126]. LLMs can also function directly as reward functions [127,128]. Self-supervised mechanisms like Eureka [129] and self-rewarding systems [130,131] enable iterative refinement through self-generated feedback. However, RLAIF risks propagating AI biases, creating feedback loops that constrain diversity and limit generalization [116,129,131].
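The softmax-then-cross-entropy recipe mentioned above for training a reward model on AI-generated preferences can be written out for a single response pair. This is a minimal sketch under assumptions: the labeller scores are taken to be raw scores (for example log-probabilities) assigned to the two candidate responses, the reward model's predicted preference is modelled as a softmax over its two scalar rewards, and all numbers and names are illustrative.

```python
import math


def soft_preference(score_a: float, score_b: float) -> tuple[float, float]:
    """Softmax over two scores, giving a preference distribution over (A, B)."""
    m = max(score_a, score_b)                      # subtract max for stability
    ea, eb = math.exp(score_a - m), math.exp(score_b - m)
    return ea / (ea + eb), eb / (ea + eb)


def reward_model_loss(reward_a: float, reward_b: float, label: tuple[float, float]) -> float:
    """Cross-entropy between the soft label and the reward model's preference."""
    pred_a, pred_b = soft_preference(reward_a, reward_b)
    return -(label[0] * math.log(pred_a) + label[1] * math.log(pred_b))


label = soft_preference(2.0, 0.0)            # AI labeller favours response A
good = reward_model_loss(1.5, -0.5, label)   # reward model agrees with the label
bad = reward_model_loss(-0.5, 1.5, label)    # reward model prefers B instead
print(label, good, bad)
```

Using the soft label rather than a hard 0/1 choice lets the labeller's confidence carry through to the reward model's training signal.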

Exploration techniques balance seeking new information with exploiting current knowledge [132]. Traditional approaches like epsilon-greedy [133,134] and Boltzmann exploration [135] introduce randomness but slow convergence. Recent methods leverage LLMs for strategic exploration. ExploRLLM [136] combines LLM-generated high-level plans with affordance-based policies for action execution, improving efficiency in structured environments but struggling with dynamic domains [137]. Soft RLLF [138] integrates natural language as logical feedback for reasoning tasks, though it is optimized for structured rather than creative problems. LLM + Exp [139] employs dual LLMs to analyze action–reward trajectories and adjust action probabilities, excelling in structured environments but facing scalability issues in unpredictable tasks. Guided Pretraining RL [127] uses LLM-generated structured trajectories to pretrain agents, improving sample efficiency but limiting generalization to variable environments.
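The two traditional exploration rules named above, epsilon-greedy and Boltzmann exploration, can be sketched as follows. The value estimates, the epsilon, and the temperatures are arbitrary illustrative choices; the functions follow the textbook definitions rather than any specific cited implementation.

```python
import math
import random


def epsilon_greedy(q_values: list[float], epsilon: float, rng: random.Random) -> int:
    """With probability epsilon pick a random action, else the best-known one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)


def boltzmann_probs(q_values: list[float], temperature: float) -> list[float]:
    """Softmax over value estimates; a higher temperature means more exploration."""
    m = max(q_values)
    weights = [math.exp((q - m) / temperature) for q in q_values]
    total = sum(weights)
    return [w / total for w in weights]


rng = random.Random(0)
q = [0.2, 0.9, 0.5]
greedy_choice = epsilon_greedy(q, epsilon=0.0, rng=rng)   # pure exploitation
cold = boltzmann_probs(q, temperature=0.1)                # nearly greedy
hot = boltzmann_probs(q, temperature=10.0)                # nearly uniform
print(greedy_choice, cold, hot)
```

Both rules inject undirected randomness, which is the source of the slow convergence noted above and the motivation for the LLM-guided alternatives.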

(b) Model-Free Approaches: These methods can be grouped into three categories, DPO, IPO, and actor–critic, which are discussed next.

Direct Preference Optimization (DPO) simplifies RLHF by directly optimizing LLM parameters using preference data, bypassing reward model training [140]. DPO uses preference loss functions on paired human preferences. Extensions include DPOP [141], which introduces margin-based terms to prevent rewarding both preferred and disfavored outputs; Iterative DPO [131], which mitigates distribution shifts through continuous policy updates; β-DPO [142], which adaptively tunes regularization based on data quality; and Stepwise DPO [143], which performs incremental updates with stronger intermediate reference models. DPO excels in structured problem-solving by incorporating human preferences without complex RL training [140]. However, it is sensitive to distribution shifts and can introduce label noise in creative tasks [144,145].
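For a single preference pair, the DPO objective reduces to a logistic loss on a scaled difference of log-probability ratios. The sketch below assumes the standard form of that loss; the sequence log-probabilities are made-up numbers, and `dpo_loss` and its parameter names are hypothetical.

```python
import math


def dpo_loss(logp_w: float, logp_l: float,
             ref_logp_w: float, ref_logp_l: float, beta: float = 0.1) -> float:
    """-log(sigmoid(beta * margin)) for one (preferred, dispreferred) pair.

    logp_* are sequence log-probabilities under the policy being trained,
    ref_logp_* the same quantities under the frozen reference model.
    """
    margin = beta * ((logp_w - ref_logp_w) - (logp_l - ref_logp_l))
    return math.log1p(math.exp(-margin))


# Policy identical to the reference: margin is 0, loss is log(2).
neutral = dpo_loss(-5.0, -7.0, -5.0, -7.0)
# Policy raises the preferred response and lowers the dispreferred one.
better = dpo_loss(-4.0, -8.0, -5.0, -7.0)
print(neutral, better)
```

No reward model appears anywhere in the computation, which is the sense in which DPO bypasses reward model training; `beta` controls how far the policy may drift from the reference.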

Identity Preference Optimization (IPO) [146] addresses overfitting in RLHF and DPO by directly optimizing preferences without nonlinear transformations. Unlike the Bradley–Terry model [147], IPO uses a linear objective function with KL divergence regularization, formulating a squared loss over preferred and less preferred output pairs. This approach is robust for deterministic feedback scenarios and outperforms DPO [148,149]. However, IPO’s reliance on static preference distributions limits adaptability, and its sensitivity to noise reduces robustness in complex environments [150].
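The squared loss mentioned above can be written for a single preference pair. The sketch assumes the commonly given form in which the log-ratio margin is regressed toward a fixed target of 1/(2τ); the log-probabilities, the value of `tau`, and the function name are illustrative assumptions.

```python
def ipo_loss(logp_w: float, logp_l: float,
             ref_logp_w: float, ref_logp_l: float, tau: float = 0.1) -> float:
    """Squared distance between the log-ratio margin and the target 1 / (2 * tau)."""
    h = (logp_w - ref_logp_w) - (logp_l - ref_logp_l)
    return (h - 1.0 / (2.0 * tau)) ** 2


# With tau = 0.5 the target margin is 1.0.
on_target = ipo_loss(-4.5, -7.5, -5.0, -7.0, tau=0.5)   # margin 1.0
overshoot = ipo_loss(-3.5, -8.5, -5.0, -7.0, tau=0.5)   # margin 3.0
print(on_target, overshoot)
```

Unlike a logistic loss, which keeps rewarding ever larger margins, the squared loss penalizes overshooting the target, which is one way to read the claim that IPO counters overfitting to deterministic preferences.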

Actor–critic methods like Advantage Actor–Critic (A2C) and Deep Deterministic Policy Gradient (DDPG) optimize LLM prompts through iterative actor–critic loops. Prompt Actor–Critic Editing (PACE) [151] uses an actor to generate responses from prompts and inputs, while a critic evaluates relevance and accuracy against objectives. KL-regularization [152] balances prompt fidelity with task-specific improvements. However, actor–critic methods assume well-structured feedback, which is a challenge when signals are sparse or noisy. Recent work addresses this: HDFlow [153] combines fast and slow thinking modes for complex reasoning, while Direct Q-function Optimization (DQO) [154] formulates response generation as a Markov Decision Process, parameterizing the Q-function within the LLM to learn from offline data including unbalanced samples, improving multi-step reasoning.