1. Introduction

The notion of trust in Modeling and Simulation (M&S) often revolves around technical constructs such as verification and validation [1,2,3]. A model that is demonstrated to be sufficiently accurate in an applicable domain can be considered adequate or ‘fit-for-purpose’. While the credibility of a model does contribute to trust and eventual adoption, practitioners have observed that trust is a multi-faceted construct that requires more than validation. Even if a model is comprehensive, validated, and built from appropriate datasets under the supervision of renowned subject-matter experts, end-users do not necessarily trust it or use it to inform practices. As pointed out by Harper and colleagues, transparency, communication, and documentation are important enablers of trust [4]. There are two broad (and sometimes complementary) approaches to promoting transparency: either we are transparent when building a model (which is familiar to M&S practitioners working with participatory methods) or we are transparent by explaining a model after it has been built (e.g., post-hoc explainable AI to convey black-box models). In this paper, we focus on the transparency of conceptual models in the form of causal maps (also known as causal loop diagrams or systems maps), which consist of labeled nodes with a clear directionality (e.g., we can have more or less ‘rain’ but not more or less ‘weather’), connected by directed typed edges (e.g., more rain causes more floods).
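To make this structure concrete, the sketch below encodes a small causal map as a Python dictionary from directed edges to polarities. The encoding, the `path_polarity` helper, and the ‘crop yield’ concept are illustrative assumptions rather than the representation used in this work; the sketch relies on the common causal-loop-diagram convention that the polarity of a path is the product of its link polarities.

```python
from typing import Dict, List, Tuple

# Hypothetical encoding of a signed causal map:
# (cause, effect) -> polarity, where +1 means "more cause, more effect"
# and -1 means "more cause, less effect".
CausalMap = Dict[Tuple[str, str], int]


def path_polarity(cmap: CausalMap, path: List[str]) -> int:
    """Return the polarity of a causal path as the product of its link polarities."""
    sign = 1
    for cause, effect in zip(path, path[1:]):
        sign *= cmap[(cause, effect)]  # raises KeyError if the link is absent
    return sign


cmap: CausalMap = {("rain", "floods"): +1, ("floods", "crop yield"): -1}
print(path_polarity(cmap, ["rain", "floods", "crop yield"]))  # -1: more rain, less crop yield
```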

Participatory approaches provide transparent methods to build a model by eliciting core assumptions and model dynamics from participants. However, there is no guarantee that the resulting model is transparent to other end-users, or even to the participants who were involved in building it. For example, we can build a small model with an individual within an hour, with all constructs and relationships easy to follow in a diagram, but once we scale this process to a group, the conceptual model can become complex and hard to visualize. The goal of transparency in assumptions and limitations [5] is challenging to achieve in this situation, as participants would struggle to know what is (assumptions) or is not (limitations) in a model that consists of hundreds of constructs and many more relationships. This situation is particularly problematic in the participatory modeling context, where participants are both model providers (the model synthesizes their views) and consumers (the model supports their decision-making activities). If the model-building process is transparent but the result is hard to interpret, then this imbalance can leave participants with the impression of being ‘used’ to create a model that they still do not really trust. Without transparency in the end-product, we lose the benefits of conceptual modeling activities, such as learning among participants, fostering engagement, or promoting buy-in from key actors [6].

Approaches focused on explainability examine how to convey a model (potentially with hundreds of constructs and relationships) to participants. While techniques such as LIME and SHAP are familiar AI approaches for explaining models such as classifiers or regressors, they are not applicable to models such as causal maps. Instead, we turn to reporting guidelines for simulation studies such as TRACE (TRAnsparent and Comprehensive model Evaluation), which includes a model description [7], or to recommendations from the ISPOR-SMDM task force to achieve transparency by providing nontechnical documentation that covers the model’s variables and relationships [8]. These recommendations are supported by empirical studies on decision-support systems, showing that “revealing the system’s internal decision structure (global explanation) and explaining how it decides in individual cases (local explanation)” positively affects a range of constructs such as trust [9]. However, as exemplified by a systematic survey of models for obesity, about half of peer-reviewed studies do not provide documentation on the overall model diagram, the algorithms used, or how data is processed [10]. The realization that documentation is important but either missing or inconsistent has prompted a line of research in automatically generating explanations for a model, and the development and application of computational methods supporting documentation remains an open problem. In 2021, Wang et al. approached the problem using a small set of predefined natural-language templates that provide contrastive model-based explanations (how does the inclusion of another parameter affect the measured outcomes?), scenario-based explanations (how does one scenario affect the outcomes?), and goal-oriented explanations (to achieve a target, which options should be included and excluded?). For example, the model-based explanation starts with “Under scenario s, compared with model B, model A includes ⟪options⟫, but excludes ⟪options⟫. Such configuration differences improve ⟪performance measures⟫...” [11].
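Template-based explanation of this kind amounts to slot filling. The hypothetical Python sketch below fills only the quoted prefix of the model-based template (the remainder is elided in the quotation); the slot names, the comma-joining of options, and the example values are our assumptions, not Wang et al.’s implementation.

```python
from typing import Sequence

# Prefix of the model-based template quoted above; the rest of the original
# template is elided in the quotation and is not reproduced here.
MODEL_BASED_PREFIX = (
    "Under scenario {s}, compared with model {b}, model {a} includes {included}, "
    "but excludes {excluded}. Such configuration differences improve {measures}"
)


def model_based_explanation(s: str, a: str, b: str, included: Sequence[str],
                            excluded: Sequence[str], measures: Sequence[str]) -> str:
    """Fill the template slots; list-valued slots are joined with commas."""
    return MODEL_BASED_PREFIX.format(
        s=s, a=a, b=b,
        included=", ".join(included),
        excluded=", ".join(excluded),
        measures=", ".join(measures),
    )


print(model_based_explanation("S1", "A", "B", ["solar"], ["diesel"], ["reliability"]))
```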

With the emergence of Generative AI (GenAI) models such as GPT-3, we showed in 2022 that a causal map could be turned into text without templates, producing varied outputs instead [12]. While early works lost parts of the model in the translation process and required extensive manual examples for fine-tuning, we later showed that models could be reliably turned into text without loss [13] and by using only a handful of examples [14] (i.e., few-shot prompting). The use of GenAI to explain models is now increasingly common, as large language models (LLMs) synthesize design documents and simulation logs to provide explanations to various end-users [15,16,17]. Despite these advances, current solutions can produce a large amount of text when explaining complex models. For instance, a causal map with 361 concept nodes and 946 edges could turn into a report of almost 10,000 words, spanning 92 paragraphs [13]. While such long reports echo standard practices in M&S, they are problematic, as pointed out by Uhrmacher and colleagues: “textual documentation of simulation studies is notoriously lengthy [as one model] results easily in producing 30 pages [thus] means for succinct documentation are needed” [18]. The need for shorter outputs was emphasized in an earlier panel by Nigel Gilbert: noting that the UK Prime Minister at the time limited memos to two pages, Gilbert observed that “it is likely to be a tough job for a policy analyst to boil down the results of a policy simulation to two pages” [19]. Necessarily, if a report is shorter, then we cannot cover every aspect of the model. Transparency thus involves a trade-off, as the textual explanation must be simple and short yet sufficiently rigorous to cover what matters in a model [20]. Being selective by creating shorter reports can be beneficial. In particular, Mitchell argues that withholding certain aspects, rather than disclosing everything for the sake of transparency, is better for building trust in a system [21]. That is, the indiscriminate disclosure of every internal detail (e.g., listing every parameter and rule) would lead to cognitive overload and paradoxically undermine trust. However, shorter reports can affect key measures in different ways: shortening a report inevitably reduces how much of the model’s content is represented (lower coverage), but it should not affect how faithfully the retained content reflects the underlying model (faithfulness), particularly in high-risk domains such as policy or healthcare [22].

Reports can be shortened either by simplifying the model through explicit criteria and then using established text generation methods, or by passing the complete model to a text generator with an additional summarization task. Although text summarization [23] and model simplification [24] have been extensively studied, they have often appeared in separate strands of literature and follow different objective functions. For example, text summarization maximizes coverage and relevance while minimizing redundancy [25], whereas model simplification may involve structural (e.g., prune weak links, eliminate transitive edges that can be inferred) or semantic objectives. Our main contribution is to experimentally compare the effects of model simplification and text summarization to generate shorter reports of conceptual models, motivated by the need to build trust through succinct documentation. We focus on measurable textual qualities of generated summaries (e.g., coverage, fluency, faithfulness, readability) rather than direct end-user comprehension. Our objective is accomplished through two specific aims:

We formalize and implement algorithms to simplify causal models by structural compression (i.e., skip intermediates between concepts until reaching a branching or looping structure) and semantic pruning (i.e., remove the least-central concept nodes).

We compare our model simplification algorithms with seven text summarization algorithms, including GPT-4, accounting for both extractive (select existing sentences) and abstractive (craft new sentences) strategies.

The remainder of this paper is organized as follows. In Section 2, we provide a brief background on how large conceptual models arise and how they can be transformed into text, along with text summarization methods. In Section 3, we describe the algorithms used to apply our summarization models and reduce our graph to a desired size. Our results are provided in Section 4, where we compare all generated summaries with handwritten (ground truth) summaries of the conceptual model. Lastly, we discuss our findings in Section 5. To support replicability, we provide our source code on an open third-party repository at https://osf.io/whqkd (accessed on 26 September 2025).

3. Methods

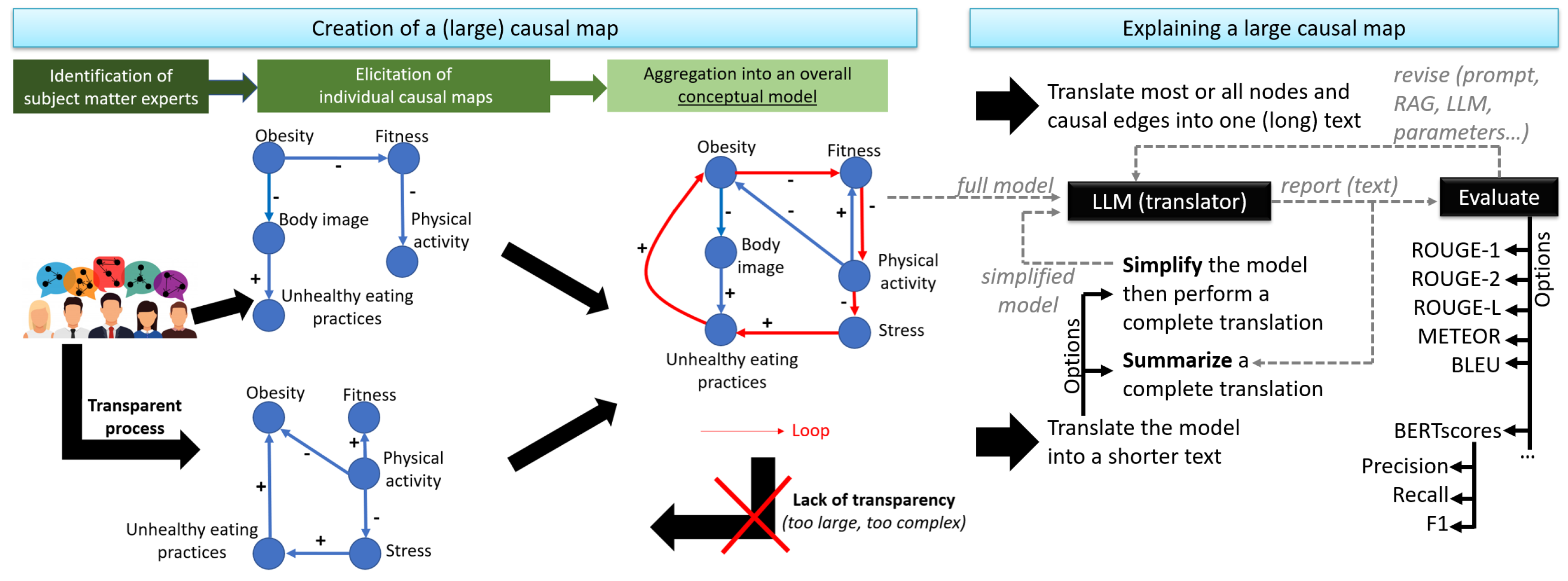

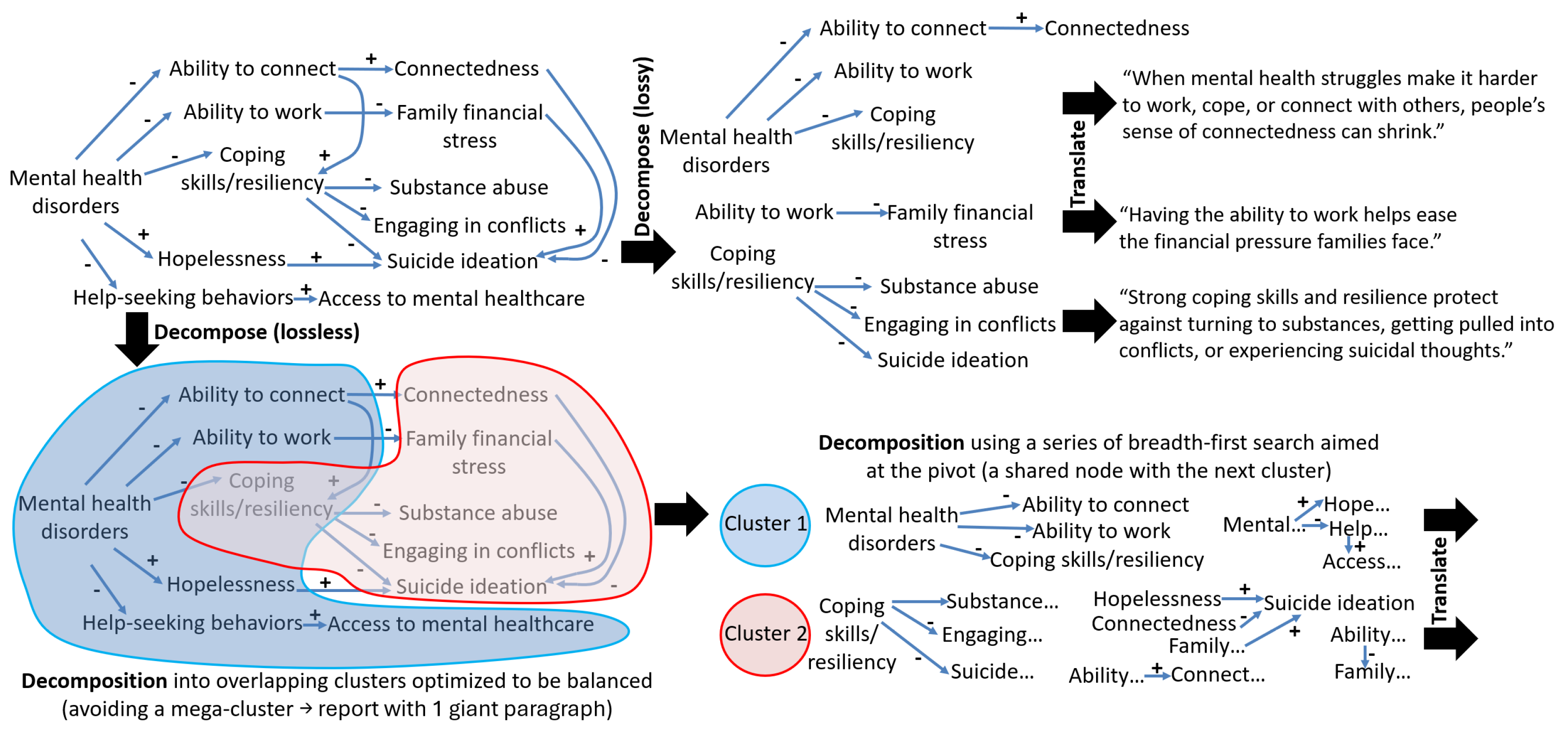

Our work compares two methods (Figure 4). In the modeler-led simplification, we use graph algorithms that can be controlled to reflect different priorities. In our case, the algorithms combine structural (e.g., compress chains of links to avoid intermediates) and semantic objectives (e.g., prioritize the preservation of the most central nodes). Then, the simplified model is turned into text using the open-source model-to-paragraphs method previously released at ER’25 [13]. In the LLM-led simplification, we take the entire map and immediately generate the paragraphs, then we perform summarization. Our process evaluates all seven text summarization algorithms listed in Section 2.3, covering abstractive methods (Distilbart, T5, LED, GPT-4) and extractive methods (Textrank, BertEXT, longformerEXT). The outputs from the two methods are then quantitatively evaluated. The two methods are formalized at a high level in Algorithm 1; Section 3.1 then covers the custom algorithms for the modeler-led approach, and Section 3.2 explains how we used text summarization algorithms.

Figure 4. Overview of our methods.


| Algorithm 1 Two Pipelines for Graph-to-Text Simplification |

| Require: Causal graph G |

| Ensure: Simplified textual explanation T |

| 1: | Choose simplification strategy: Modeler-Led or LLM-Led |

| 2: | if Modeler-Led then |

| 3: | Apply graph simplification algorithm to G producing G′ |

| 4: | Generate text T from G′ using graph-to-text LLM |

| 5: | else if LLM-Led then |

| 6: | Generate initial text T₀ from full graph G using graph-to-text LLM |

| 7: | Apply text summarization (abstractive or extractive) to T₀ producing T |

| 8: | return T |
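Algorithm 1 can be read as a dispatch between two compositions of the same building blocks. In this minimal Python sketch, `simplify`, `graph_to_text`, and `summarize` are hypothetical placeholders for the graph simplification (Section 3.1), the model-to-paragraphs method [13], and a text summarizer (Section 3.2); only the ordering of the steps is taken from Algorithm 1.

```python
from typing import Callable, TypeVar

G = TypeVar("G")  # whatever graph representation the caller uses


def explain(graph: G, strategy: str,
            simplify: Callable[[G], G],
            graph_to_text: Callable[[G], str],
            summarize: Callable[[str], str]) -> str:
    """Dispatch between the two pipelines of Algorithm 1."""
    if strategy == "modeler-led":
        return graph_to_text(simplify(graph))   # simplify the graph, then verbalize it
    if strategy == "llm-led":
        return summarize(graph_to_text(graph))  # verbalize the full graph, then summarize
    raise ValueError(f"unknown strategy: {strategy!r}")
```

The design choice is that both pipelines share the same graph-to-text step; they differ only in whether reduction happens before it (on the graph) or after it (on the text).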

3.1. Modeler-Led: Algorithms for Conceptual Model Simplification

By definition, a model is a simplification of a phenomenon. As the same phenomenon can be modeled at several levels of granularity, different schools of practice exist in the modeling community. For example, the KISS approach aims at the simplest model and only adds content when strictly necessary, whereas the KIDS approach starts with the most comprehensive model possible and then considers simplifying it in light of the available evidence [72]. Intuitively, the two schools of thought distinguish the prevention of complexity (keeping a model simple) from post-hoc simplification (allowing a model to get complex before simplifying it) [73,74]. As shown algorithmically, these two approaches can produce different causal maps given the same evidence base [75]. For example, two concepts could be linked by more intermediary nodes, or connected by more alternate pathways.

Automating a simplification process based solely on the model can be challenging, as we lack information about the intended use of the model (e.g., an apparently unnecessary intermediate between two concepts may have been highly meaningful for the model commissioner) and we cannot measure the impact on decision-making activities (e.g., does removing an edge simplify the model or remove an error?). While the literature on graph reduction offers many options (e.g., sparsification, coarsening, condensation [76]), not all of them apply to causal maps, and they do not give modelers a sufficient level of control. In this section, we develop a series of simplification algorithms for causal maps that modelers can adapt to their own needs. That is, the algorithms below are not intended to be universally applied to simplify models; rather, we provide them as tools that can be tailored by users, and we recommend that their application consider the rationale for simplification, the expected effect, and the risks for validity [73].

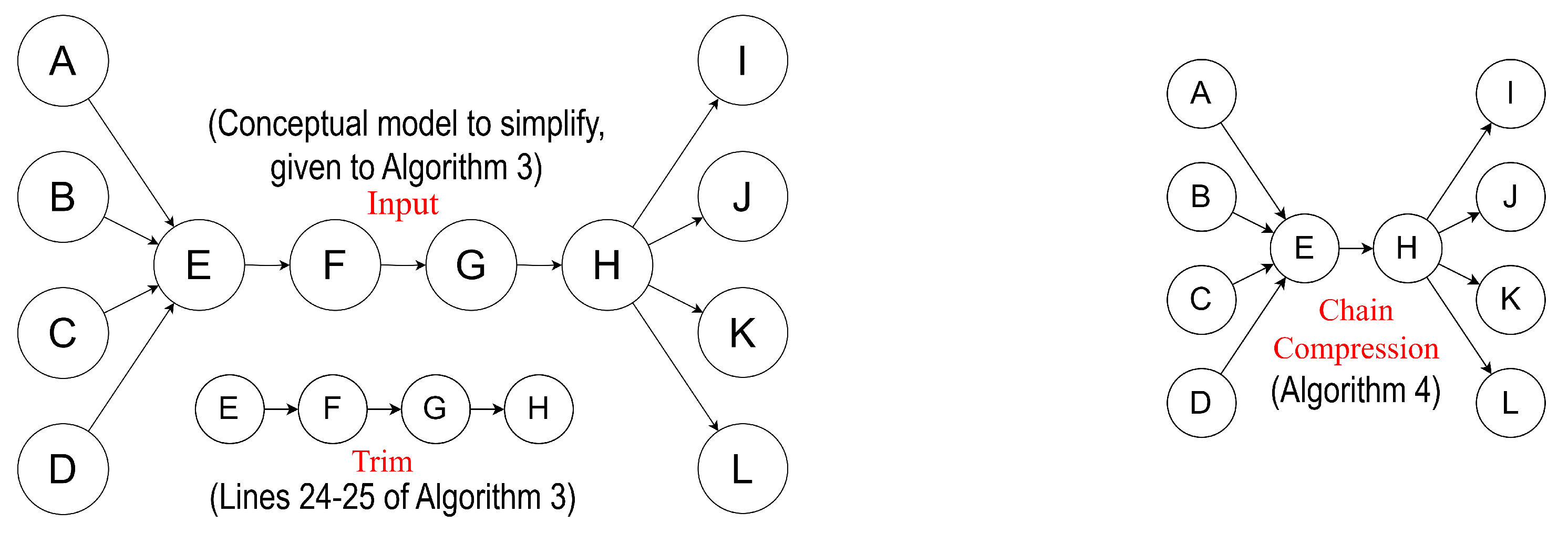

The overall simplification process is orchestrated by Algorithm 2, which simplifies the conceptual model by progressively eliminating structural elements that do not contribute meaningfully to its overall connectivity or flow. It begins with a typical reduction step that removes edges pointing from a node to itself (i.e., self-loops). It then relies on Algorithm 3 to prune excess connections from highly connected nodes by removing their least central neighbors, helping to reduce noise in dense regions of the model, which may have been over-detailed. The core of the algorithm is an iterative process that alternates between identifying and removing linear chains of nodes that serve only as pass-through points (via chain compression in Algorithm 4; see Figure 5, bottom) and trimming peripheral nodes that function purely as sources or sinks (i.e., endpoints with only outgoing or only incoming edges; see Figure 5, top). By repeating these steps until no further changes occur, our algorithm gradually distills the model down to its essential structure, simplifying over-detailed areas and intermediate concepts while preserving the key relational backbone.

In dense parts of the conceptual model, pruning (Algorithm 3) preserves the most important nodes while removing their less important neighbors to reduce density. The user controls the removal process by setting the maximum number of neighbors that an important node can keep (the parameter max in Algorithm 3), which we experimentally set to 2.

Chain Compression (Algorithm 4) identifies and collapses linear chains in the conceptual model. Starting from a given node, the algorithm traverses backward to find the chain’s head and forward to find its tail, so that intermediate nodes (i.e., nodes not involved in a branch or cycle) can be skipped. To avoid modifying the graph while iterating over it, the algorithm does not remove the skipped nodes and edges directly; rather, it identifies and returns them for removal. As a result, the conceptual model maintains connectivity and cumulative causal weights without intermediate concepts.

| Algorithm 2 Function SimplifyGraph: input graph G ↦ simplified graph |

| 1: | | ▹ Initialize node and edge counts to detect convergence |

| 2: | |

| 3: | |

| 4: | |

| 5: | |

| 6: | Remove all self-loops from G |

| 7: | for each node u in G do | ▹ Step 1: Prune neighbors via betweenness centrality |

| 8: | R ← Prune(u, 2, betweenness_centrality) | ▹ See Alg. 3 |

| 9: | for each v ∈ R do |

| 10: | Remove edge (u, v) from G |

| 11: | Remove all nodes with degree 0 from G | ▹ Step 2: Remove isolated nodes |

| 12: | while the node count or the edge count has changed do | ▹ Step 3: Iteratively compress/trim model |

| 13: | if not … then |

| 14: | |

| 15: | end if |

| 16: | P ← ∅ | ▹ Nodes identified as non-removable |

| 17: | D ← ∅ | ▹ Nodes marked for deletion |

| 18: | for each node u in G do | ▹ Step 3.1: Try to compress chains through u |

| 19: | (S, compressed) ← ChainCompression(G, u, P) | ▹ See Alg. 4 |

| 20: | if compressed then |

| 21: | D ← D ∪ S |

| 22: | else if … then |

| 23: | |

| 24: | if in-degree(u) = 0 or out-degree(u) = 0 then |

| 25: | D ← D ∪ {u} | ▹ Step 3.2: Trim sources and sinks |

| 26: | Remove all nodes in D from G |

| 27: | | ▹ Update node and edge counts for convergence check |

| 28: | |

| 29: | |

| 30: | Remove all nodes with degree 0 from G | ▹ Remove new isolated nodes |

| 31: | return G |
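The fixed-point structure of Step 3 can be illustrated in isolation. The Python sketch below covers only self-loop removal, source/sink trimming, and the convergence check on node and edge counts; it deliberately omits pruning (Algorithm 3), chain compression (Algorithm 4), and the set of preserved nodes, so it is not a substitute for Algorithm 2. The adjacency-set representation is an assumption.

```python
from typing import Dict, Iterable, Set, Tuple

Succ = Dict[str, Set[str]]  # node -> set of successor nodes


def trim_until_stable(edges: Iterable[Tuple[str, str]]) -> Succ:
    """Drop self-loops, then repeatedly remove sources, sinks, and isolated
    nodes until the node and edge counts stop changing."""
    succ: Succ = {}
    for u, v in edges:
        succ.setdefault(u, set())
        succ.setdefault(v, set())
        if u != v:                       # self-loops are discarded up front
            succ[u].add(v)

    previous = (-1, -1)                  # counts from the previous pass
    while True:
        counts = (len(succ), sum(len(s) for s in succ.values()))
        if counts == previous:           # convergence: nothing changed
            return succ
        previous = counts

        indegree = {n: 0 for n in succ}
        for targets in succ.values():
            for v in targets:
                indegree[v] += 1
        # Sources (no incoming), sinks (no outgoing), and isolated nodes.
        doomed = {n for n in succ if indegree[n] == 0 or not succ[n]}
        for n in doomed:
            del succ[n]
        for targets in succ.values():
            targets.difference_update(doomed)  # drop edges into removed nodes


print(trim_until_stable([("a", "b"), ("b", "c"), ("c", "b"), ("c", "d"), ("b", "b")]))
```

Applied alone, repeated trimming removes every acyclic part of the map and keeps only nodes on or between feedback loops: in the example above, only the loop between b and c survives, and a purely linear map x → y → z disappears entirely. This is one reason why, in the full algorithm, trimming is interleaved with chain compression and a set of preserved nodes.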

| Algorithm 3 Prune: Remove Least-Central Neighbors |

| 1: | Input: node, sub (neighbors of node), max (maximum edges to keep), centrality function f, graph G |

| 2: | Output: Set of nodes to remove from node’s outgoing edges |

| 3: | function Prune(node, sub, max, f, G) |

| 4: | c(v) ← f(G, v) for each v ∈ sub | ▹ Get centrality for each neighbor |

| 5: | sorted ← nodes in sub, ordered by centrality (lowest first) | ▹ Sort neighbors by increasing centrality value |

| 6: | if max = 0 then | ▹ If no edges should be retained, remove all neighbors |

| 7: | return sub |

| 8: | else | ▹ Keep the top ‘max’ most central neighbors; remove the rest |

| 9: | return all but the last max elements of sorted |
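A minimal Python sketch of Algorithm 3 is shown below, assuming that centrality scores have already been computed for the whole graph and are passed in as a dictionary (for instance, the dictionary returned by `networkx.betweenness_centrality(G)` could be used). Unlike the pseudocode, the sketch takes no `node` or graph argument, since neither is needed once the scores are known.

```python
from typing import Dict, Hashable, List, Sequence


def prune(neighbors: Sequence[Hashable], max_keep: int,
          centrality: Dict[Hashable, float]) -> List[Hashable]:
    """Return the neighbors whose edges should be removed: all of them if
    max_keep is 0, otherwise all but the max_keep most central ones."""
    ranked = sorted(neighbors, key=lambda n: centrality[n])  # lowest centrality first
    if max_keep <= 0:
        return ranked
    # Slicing with max(0, ...) returns nothing when there are few enough neighbors.
    return ranked[:max(0, len(ranked) - max_keep)]


scores = {"a": 0.5, "b": 0.1, "c": 0.9, "d": 0.3}
print(prune(["a", "b", "c", "d"], 2, scores))  # ['b', 'd']
```

Ties in centrality keep the input order because Python’s sort is stable; a different tie-breaking rule would change which neighbor survives.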

| Algorithm 4 Chain Compression in a Graph |

| 1: | Input: Directed graph G, node v, set of preserved nodes P |

| 2: | Output: Set of nodes to remove S, and Boolean indicating whether compression occurred |

| 3: | function ChainCompression(G, v, P) |

| 4: | if or then |

| 5: | S ← {v} | ▹ Set of candidate nodes to remove (initially just v) |

| 6: | w ← v | ▹ Pointer used to traverse the chain |

| 7: | | ▹ Aggregated weight of the compressed chain |

| ▹— Backward traversal: follow unique incoming edges— |

| 8: | while w has exactly one incoming edge and u has exactly one outgoing edge do |

| 9: | if then |

| 10: | return S, False | ▹ Cycle detected; abort compression |

| 11: | S ← S ∪ {u} | ▹ Add u to the set of removable nodes |

| 12: | | ▹ Multiply cumulative weight |

| 13: | w ← u | ▹ Move pointer one step backward |

| 14: | | ▹ is now the start of the chain |

| 15: | w ← v | ▹ Reset pointer for forward traversal |

| ▹— Forward traversal: follow unique outgoing edges— |

| 16: | while w has exactly one outgoing edge and u has exactly one incoming edge do |

| 17: | if then |

| 18: | return S, False | ▹ Cycle detected; abort compression |

| 19: | S ← S ∪ {u} | ▹ Add u to the set of removable nodes |

| 20: | | ▹ Multiply cumulative weight |

| 21: | w ← u | ▹ Move pointer one step forward |

| 22: | | ▹ is now the end of the chain |

| 23: | if then |

| 24: | Add edge to G with weight | ▹ Insert compressed edge |

| 25: | return S, True | ▹ Return removable nodes and success flag |

| 26: | return ∅, False | ▹ No compression performed |
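The Python sketch below follows the structure described for Algorithm 4: walk backward and forward through pass-through nodes (exactly one incoming and one outgoing edge), multiply edge weights along the way, abort on a pure cycle, and return the nodes to remove instead of mutating the graph. The dictionary-of-dictionaries representation, the helper names, and the choice to stop at the first non-pass-through or preserved node on each side are our assumptions; the incomplete conditions of the pseudocode are not reproduced exactly.

```python
from typing import Dict, List, Optional, Set, Tuple

# Weighted digraph: g[u][v] is the causal weight of the edge u -> v.
WGraph = Dict[str, Dict[str, float]]


def predecessors(g: WGraph, node: str) -> List[str]:
    return [u for u, targets in g.items() if node in targets]


def is_pass_through(g: WGraph, node: str) -> bool:
    """True if the node has exactly one incoming and one outgoing edge."""
    return len(g.get(node, {})) == 1 and len(predecessors(g, node)) == 1


def chain_compression(
    g: WGraph, v: str, preserved: Set[str]
) -> Tuple[Set[str], Optional[Tuple[str, str, float]]]:
    """Return (nodes to remove, replacement edge (head, tail, weight)).
    The graph is not modified; (set(), None) means no compression."""
    if v in preserved or not is_pass_through(g, v):
        return set(), None
    removable = {v}

    # Backward: walk to the first predecessor that is not a pass-through node.
    head = predecessors(g, v)[0]
    weight = g[head][v]
    while head not in preserved and is_pass_through(g, head):
        if head in removable:            # back at a removed node: pure cycle
            return set(), None
        removable.add(head)
        prev = predecessors(g, head)[0]
        weight *= g[prev][head]
        head = prev

    # Forward: walk to the first successor that is not a pass-through node.
    tail = next(iter(g[v]))
    weight *= g[v][tail]
    while tail not in preserved and is_pass_through(g, tail):
        if tail in removable:
            return set(), None
        removable.add(tail)
        nxt = next(iter(g[tail]))
        weight *= g[tail][nxt]
        tail = nxt

    if head == tail:                     # compressing would create a self-loop
        return set(), None
    return removable, (head, tail, weight)


g = {"x": {"a": 0.5}, "a": {"b": 2.0}, "b": {"y": -1.0}, "y": {}}
print(chain_compression(g, "a", set()))  # x -> y with weight 0.5 * 2.0 * -1.0 = -1.0
```

As in the pseudocode, applying the result is left to the caller: delete the returned nodes and add the edge from head to tail with the aggregated weight. If a direct edge between head and tail already exists, a caller would need a rule for merging the two weights rather than overwriting one.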

3.2. LLM-Led: Applying Abstractive and Extractive Summarization

For a fair comparison of modeler- and LLM-led summarization, the summaries should have a similar length. Otherwise, results may not reflect a difference in methods but rather a difference in length. For example, metrics that rely on overlap between generated words and a ground-truth summary would be affected by length: longer summaries are more likely to contain the target words, thus boosting recall-oriented scores. Conversely, precision-oriented scores may be lower with longer summaries, as there are more chances for hallucinations. To guarantee a fair comparison, we set the length of LLM-led summaries to match the length of the modeler-led summaries. This is accomplished by setting a ratio parameter (length of the summarized text relative to the original text) of 0.114 or by chunking, as explained at the end of this section.
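The length effect can be seen with a simplified ROUGE-1-style overlap score. The sketch below uses only lowercasing and whitespace tokenization (an assumption; the metrics used in our evaluation may tokenize differently) and a toy reference sentence: appending words to a summary can only keep or raise recall, while precision falls when the extra words are not in the reference.

```python
from collections import Counter
from typing import Tuple


def unigram_overlap(candidate: str, reference: str) -> Tuple[float, float]:
    """Return (recall, precision) of clipped unigram overlap, in the style of ROUGE-1."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # each word counts at most min(cand, ref) times
    recall = overlap / max(1, sum(ref.values()))
    precision = overlap / max(1, sum(cand.values()))
    return recall, precision


reference = "rain increases floods"
print(unigram_overlap("rain increases", reference))  # recall 2/3, precision 1.0
print(unigram_overlap("rain increases floods and floods reduce crop yields", reference))  # (1.0, 0.375)
```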

To create summaries with extractive methods, sentences are tokenized and then clustered by K-means, after which the summary is assembled from the clustered sentences. Clustering reduces redundancy by grouping similar sentences and preserves coverage by ensuring that each semantic group is represented, thus balancing content diversity and concision. In contrast, abstractive summarization tokenizes words to generate new sentences; thus, we cannot pre-process the data into clusters. The resulting number of tokens can exceed a model’s token limit, which often ranges from 512 to 4096 tokens. A straightforward solution would be to fill the token limit, e.g., by processing the first 512 tokens followed by the next batch of 512 tokens. However, such fragmented inputs can yield confused outputs for abstractive summarization models, since they risk breaking the text mid-sentence or mid-thought. Preserving semantic coherence thus requires meaningful ‘chunks’, which may fall below the token limit: for example, if coherent units have 200, 300, and 150 tokens, then the first batch would have 200 + 300 = 500 tokens (< 512), since adding the third unit would exceed the limit. The well-established technique of ‘chunking’ or ‘multi-stage summarization’ divides the input into coherent units that fit within each model’s limit. Given that the recent open-source model-to-paragraphs method for conceptual models produces paragraphs that have a coherent theme [13], we perform chunking at the level of paragraphs (Algorithm 5). Then, each batch is summarized and the summaries are concatenated (Algorithm 6).
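The 200/300/150 example corresponds to greedy packing of whole units. The Python sketch below reproduces it; it is a simpler greedy variant rather than Algorithm 5 (which additionally aims for balanced batch sizes), and it assumes that token counts are additive across paragraphs, with whitespace splitting as a stand-in tokenizer.

```python
from typing import Callable, List


def greedy_chunks(paragraphs: List[str], count_tokens: Callable[[str], int],
                  max_tokens: int) -> List[List[str]]:
    """Pack whole paragraphs, in order, into batches under max_tokens."""
    batches: List[List[str]] = []
    current: List[str] = []
    current_tokens = 0
    for paragraph in paragraphs:
        n = count_tokens(paragraph)
        if current and current_tokens + n > max_tokens:
            batches.append(current)          # close the batch before it overflows
            current, current_tokens = [], 0
        current.append(paragraph)            # an oversized paragraph still gets its own batch
        current_tokens += n
    if current:
        batches.append(current)
    return batches


units = [" ".join(["w"] * n) for n in (200, 300, 150)]
batches = greedy_chunks(units, lambda t: len(t.split()), 512)
print([[len(p.split()) for p in b] for b in batches])  # [[200, 300], [150]]
```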

| Algorithm 5 Chunking for Abstractive Summarization: (text, tokenizer, max_tokens) ↦ list of batches of paragraphs |

| 1: | paragraphs ← split text by newline | ▹ Split input text into individual paragraphs |

| 2: | batches ← empty list | ▹ Initialize empty list to hold paragraph batches |

| 3: | total_tokens ← tokenizer(text) | ▹ Count total tokens in the input text |

| 4: | batch_length | ▹ Estimate number of paragraphs per batch |

| 5: | div ← total_tokens | ▹ Expected number of tokens per batch |

| 6: | i ← 0 | ▹ Initialize batch index |

| 7: | previous_difference ← total_tokens | ▹ Track previous token difference |

| 8: | difference | ▹ Initialize current token difference |

| 9: | for each paragraph in paragraphs do |

| 10: | if paragraph is not empty then | ▹ Ignore empty lines |

| 11: | temp ← batches[i] concatenated with paragraph | ▹ Try adding paragraph to current batch |

| 12: | tokens ← tokenizer(temp) | ▹ Token count of temporary batch |

| 13: | diff ← abs(tokens − div) | ▹ Difference from expected token count |

| 14: | if previous_difference < diff or tokens > max_tokens then | ▹ Too far from target or over limit |

| 15: | i ← i + 1 | ▹ Start a new batch |

| 16: | batches[i] ← paragraph | ▹ Initialize new batch with this paragraph |

| 17: | previous_difference ← tokens | ▹ Reset token difference tracker |

| 18: | else |

| 19: | batches[i] ← temp | ▹ Append paragraph to current batch |

| 20: | previous_difference ← diff | ▹ Update token difference |

| 21: | return batches | ▹ Return the list of completed batches |
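A Python sketch of Algorithm 5 follows. Because lines 4 and 5 of the pseudocode are incomplete, the sketch assumes that the target batch size is the total token count divided by the minimum number of batches allowed by `max_tokens`; it also resets the difference tracker to the new batch’s own distance from the target, which is our interpretation of line 17. `count_tokens` stands for any function that returns a token count.

```python
import math
from typing import Callable, List


def balanced_chunks(text: str, count_tokens: Callable[[str], int],
                    max_tokens: int) -> List[str]:
    """Group whole paragraphs into batches close to an even share of tokens."""
    paragraphs = [p for p in text.split("\n") if p.strip()]  # ignore empty lines
    total = count_tokens(text)
    n_batches = max(1, math.ceil(total / max_tokens))  # assumption: minimum batch count
    target = total / n_batches                          # expected tokens per batch
    batches: List[str] = []
    current = ""
    previous_difference = math.inf
    for paragraph in paragraphs:
        candidate = f"{current}\n{paragraph}" if current else paragraph
        tokens = count_tokens(candidate)
        difference = abs(tokens - target)
        # Start a new batch if adding the paragraph moves the batch away from
        # the target size or would exceed the model's token limit.
        if current and (difference > previous_difference or tokens > max_tokens):
            batches.append(current)
            current = paragraph
            previous_difference = abs(count_tokens(paragraph) - target)
        else:
            current = candidate
            previous_difference = difference
    if current:
        batches.append(current)
    return batches
```

On paragraphs of 200, 300, and 150 tokens with a 512-token limit, the target is 325 tokens per batch, so this sketch yields three batches rather than the two produced by purely greedy packing: joining the first two paragraphs (500 tokens) would move the batch further from the target than the first paragraph alone. Which behavior is preferable is a design choice.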

While chunking (Algorithm 5) preserves paragraph integrity (we never split within a paragraph), it may nonetheless introduce discontinuities across chunks. For example, thematic links between consecutive paragraphs may be weakened if they are processed separately, as summarization (Algorithm 6) may alter the order in which ideas connect across boundaries: the last paragraph of chunk i may originally flow into the first paragraph of chunk i + 1, but there is no guarantee that summaries preserve such connections. We mitigated this risk by ensuring that chunks followed the paragraph order produced by the graph-to-text process, which was already designed for thematic coherence. However, we acknowledge that residual discontinuities may remain, and addressing them through overlap strategies or discourse-aware summarization is an area for future work.

| Algorithm 6 Generate Abstract |

| Input: Text, ModelName, Tokenizer, MaxTokens, Optional GroundTruthSummary |

| Output: Summary paragraphs generated from Text |

| 1: | function GenerateAbstract(Text, ModelName, Tokenizer, MaxTokens, GroundTruthSummary) |

| 2: | Batches ←Chunking(Text, Tokenizer, MaxTokens) | ▹ See Alg. 5 |

| 3: | Summarizer ←Pipeline(ModelName) | ▹ Initialize summarization model |

| 4: | if GroundTruthSummary then | ▹ If there is a ground-truth summary, use its length |

| 5: | ratio ← WordCount(GroundTruthSummary) ÷ WordCount(Text) |

| 6: | groundTruthParagraphs ← ParagraphCount(GroundTruthSummary) |

| 7: | else |

| 8: | | ▹ Default length ratio |

| 9: | groundTruthParagraphs | ▹ Default number of output paragraphs |

| 10: | results ← empty list | ▹ We will track the list of batches and associated summaries |

| 11: | for each batch index i do |

| 12: | if Batches[i] is not empty then |

| 13: | MaxSummaryLength ← WordCount(Batches[i]) | ▹ Buffer |

| 14: | Sentences ← Summarizer(Batches[i], MaxSummaryLength) |

| 15: | Clean-up sentences | ▹ Depends on summarization; see paragraph below |

| | | ▹ Append sentences to new or existing paragraph based on expected summary |

| 16: | if groundTruthParagraphs then |

| 17: | Sentences ← Sentences | ▹ Add paragraph break |

| 18: | else |

| 19: | Sentences ← Sentences | ▹ Continue sentence inline |

| 20: | Append (Batches[i], Sentences) to results | ▹ Input text and its summary |

| 21: | return results |
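Algorithm 6 can be sketched in Python with the summarizer injected as a function, which keeps the example runnable without downloading a model; in practice, `summarize` could wrap a Hugging Face `transformers` summarization pipeline, whose `max_length` argument counts tokens rather than words. The fallback ratio of 0.114 is the value reported earlier in this section, the fallback of one paragraph is an assumption, and spreading batches evenly across the expected number of paragraphs is our interpretation of lines 16 to 19.

```python
import math
from typing import Callable, List, Optional, Tuple

# Hypothetical summarizer interface: (text, max_words) -> summary text.
Summarizer = Callable[[str, int], str]


def word_count(text: str) -> int:
    return len(text.split())


def generate_abstract(batches: List[str], summarize: Summarizer,
                      ground_truth: Optional[str] = None,
                      fallback_ratio: float = 0.114,
                      fallback_paragraphs: int = 1) -> Tuple[str, List[Tuple[str, str]]]:
    """Summarize each batch under a length budget and assemble paragraphs."""
    total_words = sum(word_count(b) for b in batches)
    if ground_truth:
        # Match the ground truth's length ratio and paragraph count.
        ratio = word_count(ground_truth) / max(1, total_words)
        n_paragraphs = max(1, len([p for p in ground_truth.split("\n") if p.strip()]))
    else:
        ratio, n_paragraphs = fallback_ratio, fallback_paragraphs
    per_paragraph = max(1, math.ceil(len(batches) / n_paragraphs))
    results: List[Tuple[str, str]] = []
    pieces: List[str] = []
    for i, batch in enumerate(batches):
        if not batch.strip():
            continue
        budget = max(1, round(ratio * word_count(batch)))
        summary = summarize(batch, budget).strip()
        results.append((batch, summary))
        # Close the paragraph after every `per_paragraph` batches.
        pieces.append(summary + ("\n\n" if (i + 1) % per_paragraph == 0 else " "))
    return "".join(pieces).strip(), results
```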

Different models apply different pre-processing and post-processing techniques when encoding and decoding, which can result in poorly formatted text. For example, T5-base does not capitalize the first letter of a sentence. Algorithm 6 thus includes a model-specific ‘clean-up’ step (line 15) in which we fix capitalization (for BART), spaces around punctuation (for BART, T5, LED), spaces around parentheses (for BART and T5), and line breaks (for BART and T5). These fixes were not needed when using GPT.
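The clean-up step can be approximated with a few regular expressions. The Python sketch below is an assumption about what such fixes can look like (the model-specific rules in our implementation may differ): it removes spaces before punctuation and inside parentheses, trims spaces around line breaks, and capitalizes sentence starts.

```python
import re


def clean_summary(text: str) -> str:
    """Normalize spacing and sentence capitalization in generated text."""
    text = re.sub(r"[ \t]+([.,;:!?])", r"\1", text)   # "floods ." -> "floods."
    text = re.sub(r"\([ \t]+", "(", text)             # "( in" -> "(in"
    text = re.sub(r"[ \t]+\)", ")", text)             # "cities )" -> "cities)"
    text = re.sub(r"[ \t]*\n[ \t]*", "\n", text)      # trim spaces around line breaks
    # Capitalize the first letter of each line and after ".", "!" or "?".
    text = re.sub(r"(^|[.!?][ \t]+)([a-z])",
                  lambda m: m.group(1) + m.group(2).upper(),
                  text, flags=re.MULTILINE)
    return text.strip()


print(clean_summary("more rain causes floods . floods ( in cities ) reduce mobility ."))
# More rain causes floods. Floods (in cities) reduce mobility.
```

The sentence-start rule is naive: it would also capitalize the word following an abbreviation such as ‘e.g.’, so a production version would need a proper sentence splitter.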

Our open-source implementation is available online on a permanent repository at https://doi.org/10.5281/zenodo.15660803 (accessed on 26 September 2025). For extractive algorithms, our Python implementation used gensim 3.8.2 for TextRank (no token limit) and bert-extractive-summarizer 0.10.1 for both Longformer-Ext (token limit 4096) and BERT (token limit 512). For abstractive algorithms, we used transformers 4.35.2 for BART (1024 tokens), T5 (512 tokens), and LED (16,384 tokens). We used the OpenAI Python library 1.3.3 for GPT-4-Turbo (128,000 tokens). Given the token limitations, we used 11 batches to fit within BART’s limit and 24 batches to work with T5. The high token limit of LED was sufficient given our text size; thus, this specific model did not require chunking.

Theoretically, GPT has a high token limit that does not require chunking either. However, we need to control the length of the generated summaries for a fair comparison with the modeler-led solution. When using LLMs such as GPT, setting a maximum number of output tokens to achieve a desired length did not work (the output was always well below the limit); thus, in practice, we used chunking to better control the summary’s length.

5. Discussion

In this paper, we proposed a new solution that improves the accessibility of large conceptual models by reducing their size both structurally and textually. In particular, we have empirically shown that our proposed modeler-led algorithms for reducing the size of causal maps lead to summaries that are comparable to those of methods based on language models, while providing high faithfulness and fluency scores that only a few language models (e.g., BART) achieved.

While our study includes multiple categories of scores (e.g., overlap between summaries, readability, faithfulness, and fluency), the usefulness of summaries is ultimately the most important criterion. Future work should thus consider user studies to examine how summaries support key activities for participatory modeling, such as identifying key causal mechanisms or facilitating group learning. Such investigations may also reveal how the parameters involved in the generation of summaries (e.g., tone, length, selection of conceptual model components) depend on the intended activities, the characteristics of the audience, and the application domain.

While deriving conceptual models from text has been the subject of numerous recent works, the reciprocal task of generating (explanatory) text from large models has received relatively less attention. There is also extensive work on graph reduction and on text summarization, but the two have not been studied in tandem as we have done in this work. Consequently, there is currently a paucity of benchmarks that contain a causal map, a textual representation of the map, and summarized versions of the text and graph. The creation of such datasets is currently very labor-intensive, as subject-matter experts need to summarize large conceptual models, a challenging task that prompted the design of our methods.

The modeler-led approach discards information early in the process, which puts it at a disadvantage with respect to summaries generated by language models. Instead of permanently deleting nodes and/or edges during simplification, we could create metadata to track which entities were simplified and why (e.g., the rule that triggered removal). A hybrid approach could leverage the metadata by examining whether the summary misses key topics and, if so, trigger a rollback by using the metadata to reintegrate parts that should not have been pruned. Such reversibility and feedback-guided simplification could make conceptual model simplification more intelligent and adaptive.
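As an illustration of this idea, the hypothetical Python sketch below logs each removed edge together with the rule that triggered its removal, and restores the edges touching a concept that a summary fails to mention. The class, its methods, the edge-to-weight representation, and the substring test for ‘missing’ concepts are all assumptions for illustration; a real check would need something more robust than label matching.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Tuple

Edge = Tuple[str, str]


@dataclass
class SimplificationLog:
    """Hypothetical record of removed edges and the rule that removed them."""
    entries: List[Tuple[str, Edge, float]] = field(default_factory=list)

    def remove_edge(self, graph: Dict[Edge, float], edge: Edge, rule: str) -> None:
        weight = graph.pop(edge)              # KeyError if the edge is absent
        self.entries.append((rule, edge, weight))

    def restore_concept(self, graph: Dict[Edge, float], concept: str) -> List[Edge]:
        """Reinsert every logged edge that touches `concept`; return those edges."""
        restored: List[Edge] = []
        remaining: List[Tuple[str, Edge, float]] = []
        for rule, edge, weight in self.entries:
            if concept in edge:
                graph[edge] = weight
                restored.append(edge)
            else:
                remaining.append((rule, edge, weight))
        self.entries = remaining
        return restored


def missing_concepts(summary: str, concepts: Iterable[str]) -> List[str]:
    """Naive check: key concepts whose label never appears in the summary."""
    lowered = summary.lower()
    return [c for c in concepts if c.lower() not in lowered]


graph = {("rain", "floods"): 1.0, ("floods", "mobility"): -1.0}
log = SimplificationLog()
log.remove_edge(graph, ("floods", "mobility"), "pruned: low betweenness")
for concept in missing_concepts("Rain increases floods.", ["rain", "floods", "mobility"]):
    log.restore_concept(graph, concept)   # roll back what the summary lost
print(graph)
```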

Simplifications have been studied in other applications, including graph simplifications (e.g., removing self-loops) and neural network pruning techniques such as compressing chains [79] and node-centric pruning [80], which resembles our removal of sinks and sources. While our algorithms are novel for conceptual modeling, we emphasize that our primary contribution is to compare simplifications by language models vs. algorithms controlled by modelers. This controlled simplification through explicit, documented rules (e.g., chain compression, pruning by centrality) can give modelers an interpretability advantage over LLM-led summarization, which relies on opaque transformations that make it harder to trace why certain information is included or omitted. However, this potential for higher interpretability depends on using rules that are appropriate for the application domain (e.g., self-loops are valid self-monitoring mechanisms that should not be discarded in process diagrams) and that modelers can explain to the target audience. Our algorithms offer direct access to the simplification controls, for instance by changing chain compression to preserve short chains, or by using a different centrality measure to determine what is ‘important’. In particular, these centrality measures let modelers control how much consideration is given to the different structural roles of nodes in the model, such as by giving higher values to nodes that are involved in multiple pathways (e.g., betweenness or flow centrality). Our open-source code and commented processes support researchers in adapting our work to their simplification needs. While we emphasize structural simplifications, semantics (e.g., concepts labeled as ‘decision’ or ‘risk’) could play a role in exempting some nodes from simplification, which can be explored in future work through interactive or ontology-informed model simplifications.