1. Introduction

With the popularity and wide range of applications of machine learning techniques, specialized techniques for attacks on models trained using machine learning methods, both in theoretical considerations and in the real world, have emerged. The attacks can have various goals, including disrupting the correct operation of the model (e.g., by increasing the number of incorrect decisions generated by the model), reducing the reliability of decisions made by the model, reproducing the training set, reproducing the model, etc. Their common feature is the use of specific properties of models and machine learning techniques. Such attacks are referred to as Adversarial Machine Learning attacks (AML) [1, 2].

It is necessary to ensure security in systems that automate business processes and use machine learning techniques in decision making. In practice, there is a widespread gap in designing security for these systems. In particular, the vulnerability of machine learning models to adversarial attacks is not taken into account. This is especially true for attacks in which the attacking party intentionally disrupts the data passed to the ML model in such a way that the disruption is as small as possible but causes as large an effect as possible. The least possible disruption means disruption of data conducted in a way that makes it difficult to detect, often below the threshold of detection by a human not supported by additional tools. The greatest possible effect means the achievement of a specific attack goal, such as the misclassification of data. In particular, misclassification involves the indication of a specific class preferred by the attack method.

Although adversarial attacks on machine learning models have been extensively studied in the context of images and text [3, 4], relatively few works address robust, model-agnostic detection of such attacks in tabular data [5]. Existing detection methods often assume partial knowledge of the model internals (e.g., gradients or layer outputs), rely on specialized network architectures, or focus on a single class of attacks. In contrast, our work proposes a universal, black-box detection approach for tabular classifiers, irrespective of their underlying training mechanism, by employing a surrogate model and a suite of diagnostic attributes derived from rough-set-based approximation reducts [6].

This approach aligns with the broader goals of interpretable machine learning, yet it introduces two key innovations:

Unified Detection via Local Model Diagnostics. Rather than relying on gradient-based or distribution-shift heuristics, we construct a surrogate model that emulates the black-box classifier’s behavior and produces explanatory features—such as local consistency measures and uncertainty scores—that naturally expose adversarial manipulations. By treating these features as inputs to a secondary detection classifier, our framework seamlessly couples interpretability with robust attack monitoring.

Comprehensive Evaluation Across Diverse Attacks and Datasets. We systematically test the proposed detection mechanism on 22 real-world tabular datasets and seven distinct attack methods (both gradient- and sampling-based). Our experiments demonstrate consistently high detection accuracy, often exceeding 90% balanced accuracy, even in challenging scenarios where the type of attack or dataset is unseen during training (one-attack-out and one-dataset-out cross-validations).

Compared to our earlier studies [7, 8], which examined a limited subset of models and adversarial attacks, the present work advances the methodology in several ways. First, we extend the evaluation to a wider range of attacks, including those designed for neural networks. Second, we incorporate feature selection into the detection framework, improving the interpretability of diagnostic attributes. Third, we perform sensitivity analyses, showing that the method maintains strong detection performance even at low perturbation levels, where adversaries typically operate. Finally, we tackle the more complex task of attack-type classification, thereby testing the discriminative capacity of diagnostic attributes in an eight-class setting.

The paper is organized as follows:

Section 2 provides a broad context for adversarial attack methods in the literature;

Section 3 focuses on the general definition of adversarial attack and describes different approaches for tabular data;

Section 4 provides the background of the proposed method for detecting adversarial attacks;

Section 5 presents the results of experiments, including a sensitivity analysis; and the paper ends with conclusions and limitations.

2. Related Works

Research on adversarial attacks on models obtained by machine learning (ML) methods began with discovering adversarial examples (interfering, misleading examples) in image classification models, in which small, carefully designed perturbations in the input data can lead to misclassification. An adversarial example contains intentionally modified input data. The changes made are usually imperceptible to a human but are enough to exploit vulnerabilities in the decision boundaries of the ML model, leading to incorrect results. Szegedy et al. [

3] were among the first to document this phenomenon, revealing that neural networks are highly susceptible to subtle modifications. This observation has generated great interest, and the widespread use of neural networks has prompted the need to understand and counteract these vulnerabilities [

9]. Vulnerability to attacks has been of particular concern in the context of practical applications of machine learning models, such as autonomous vehicle control or facial recognition, where perturbed data can lead to decision errors with serious consequences—for example, making an autonomous car misread traffic signs or making a security system fail to recognize authorized individuals [

10].

An example of an attack method is the Fast Gradient Sign Method (FGSM) proposed by Goodfellow et al. [4]. The method uses gradient optimization to efficiently generate adversarial examples. Attacks conducted using gradient optimization have proven effective even with minimal modifications to the input data [11]. Attacks on ML models are carried out in two main scenarios: white box (the attacker has full knowledge of the architecture and parameters of the model) and black box (the attacker’s access to the attacked model is limited; usually only queries to the model are possible). An important step in the development of research on adversarial attacks was the study of the transferability of attacks (a notion analogous to transfer learning). Papernot et al. [12] showed that adversarial examples created for one model can fool other models trained on similar data. This property enables attacks even in restricted environments, such as cloud-based machine learning services [13].

The aforementioned studies and methods have focused on investigating attacks on models for classifying image data, ignoring the specifics of machine learning models operating on tabular data, commonly used in applications such as finance, healthcare, or security. Tabular data, unlike images or text, are characterized by specific structures and limitations, such as the variety of data types (symbolic and numeric, among others) and the frequent relationship between variables describing the data. These features make it more challenging to create hard-to-detect adversarial examples for tabular data, as the interference must remain consistent with the actual constraints and dependencies present in the data. In this type of attack, it is necessary to use strategies that include the specific characteristics of the data and its context so that the modifications made are reliable and difficult to detect [

14]. Realistic attacks on tabular data require an approach that takes into account the specific characteristics of individual variables, both numeric and categorical, and maintains consistency with expected value constraints. Attacks that take into consideration constraints on attribute values, or changes in the values of selected groups of attributes, are key to hiding the fact of an attack—in tabular data, even small changes must be within realistic limits [

15]. For example, in the context of finance, modifications can focus on critical variables, such as transaction amounts or frequency of operations, with the statistical characteristics of these variables being preserved to fool models that detect financial fraud.

The examples cited above highlight the risks that adversarial attacks pose to computer systems using ML models. This is especially true for systems used in vital areas of life, such as road safety (attacks on traffic sign recognition models [16]), cybersecurity (deception of intrusion detection systems [17], malware camouflage [18]), healthcare (modification of medical data [2]), and finance (fraud concealment [14, 19]).

Recent advances in adversarial machine learning have increasingly focused on black-box detection methods for tabular data, addressing the unique challenges posed by structured datasets. He et al. [20] conducted a comprehensive empirical analysis of imperceptibility characteristics in adversarial attacks on tabular data, proposing seven key properties to characterize such attacks, including proximity, sparsity, and feature interdependencies. This work highlighted the trade-off between attack imperceptibility and effectiveness, providing valuable insights for developing more robust detection mechanisms.

Vadillo et al. [21] presented a comprehensive survey of adversarial attacks in explainable machine learning contexts, examining threats against both models and human interpretation. Their analysis extended beyond traditional attack scenarios to consider how adversarial examples can manipulate explanation systems themselves, which has direct implications for surrogate-based detection approaches like ours.

The field has also seen significant developments in generative approaches to adversarial attacks on tabular data. Dyrmishi et al. [22] adapted deep generative models as adversarial attack strategies specifically for tabular machine learning, addressing the challenge of preserving domain constraints in adversarial examples. This work demonstrated that generative models can create more realistic adversarial examples that maintain structural validity in tabular domains.

It is worth noting the uneven distribution of research on the creation and analysis of the properties of attack methods between models that process images and models that process tabular data. For example, a search of the arXiv database yields an eleven-fold difference in the number of publications in favor of image data. For the phrase “adversarial machine learning image data”, 1898 items were found, while for the phrase “adversarial machine learning tabular data”, only 163 were found (accessed on: 9 September 2025).

3. Adversarial Attacks

Adversarial attacks on ML classifiers carried out at the inference stage (i.e., decision making by the trained model) can be presented as an optimization problem. Their goal is to find perturbations that lead to misclassification of input data. For typical adversarial attacks, we consider classifiers that, based on a vector of input data $x \in X$ (where $X$ is the Cartesian product of conditional attributes), predict a class $y$ using the model $f: X \to \{1, \ldots, k\}$, where $k$ is the number of classes, and where $L(f(x), y)$ is a loss function that measures the classification error between the predicted labels and the true labels $y$. In this context, $x'$ is a vector of adversarial data.

The mathematical problem of generating an adversarial example can be formulated as an optimization problem:

$$\max_{x'} \; L(f(x'), y) \quad \text{subject to} \quad d(x, x') \le \epsilon$$

This assumes that $x'$ remains in a certain neighborhood of $x$, determined by the distance function $d$ used and the maximum distance $\epsilon$ [11]. The optimization itself can be carried out by various methods. The optimization method used is, in addition to the distance function used, the main factor distinguishing the different types of adversarial attacks.

This study focuses on the detection of attacks on the integrity of the model, made at the inference stage using a previously trained model, belonging to the type of evasion attacks. In addition, it is assumed that the attack detection method is based on the assumptions of black box. It is assumed that the method only has access to model inference, i.e., to the original set of examples on which the version of the properly functioning (i.e., unattacked) ML model was trained and validated.

Attack Strategies for Tabular Data

Adversarial attacks on models that process tabular data differ from those used in image or text analysis [5]. Tabular data is characterized by a variety of feature types, domain constraints, and complex relationships between attributes describing examples. These peculiarities require the adaptation of both attack strategies and detection methods.

When attacking models that classify tabular data, two directions are distinguished—the use of heuristic attacks and the adaptation of general methods to the specifics of tabular data.

An example of a heuristic method is PermuteAttack [23], which is dedicated to tabular data, including data containing categorical variables. Known domain constraints (e.g., non-negative age, marriage date later than birth date, etc.) can be included in the attack. During the attack, feature values or their order are permuted to disrupt their interpretation by the model, while maintaining semantics and type compatibility.

Another category of attacks easily applied to tabular data is based on sampling the decision space. These attacks do not require access to gradients or decision space continuity and are a natural choice when attacking decision-tree-based models. An example of such an attack is HopSkipJump [24], for which, when implemented for tabular data, it is only necessary to specify the available categories for categorical data and limit the range of perturbations to acceptable values. The number of features that can be changed at the same time can also be limited to reduce detectability.
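The constraint handling described above can be sketched independently of any particular attack. The snippet below is a minimal illustration, not the HopSkipJump algorithm itself: the function name `project_to_admissible`, the record layout, and the rule for choosing which changes to keep are all assumptions made for this example.

```python
def project_to_admissible(x_orig, x_pert, numeric_ranges, categories, max_changed):
    """Turn a perturbed record into an admissible one.

    numeric_ranges: {feature index: (low, high)} for numeric features
    categories:     {feature index: set of allowed values} for categorical features
    max_changed:    maximum number of features allowed to differ from x_orig
    """
    out = list(x_pert)
    # Clip numeric features to their acceptable ranges.
    for i, (low, high) in numeric_ranges.items():
        out[i] = min(max(out[i], low), high)
    # Revert categorical features that left the set of available categories.
    for i, allowed in categories.items():
        if out[i] not in allowed:
            out[i] = x_orig[i]
    # Limit how many features change at once. Keeping the first ones is an
    # arbitrary choice for this sketch; an attack would rank them by effect.
    changed = [i for i in range(len(out)) if out[i] != x_orig[i]]
    for i in changed[max_changed:]:
        out[i] = x_orig[i]
    return out


# Hypothetical record: (age, income, marital status).
x_orig = [35, 52000.0, "married"]
x_pert = [-3, 61000.0, "unknown"]
admissible = project_to_admissible(
    x_orig,
    x_pert,
    numeric_ranges={0: (18, 100), 1: (0.0, 1_000_000.0)},
    categories={2: {"single", "married", "divorced"}},
    max_changed=1,
)
```

In this example the age is clipped to the lower bound, the invalid category is reverted, and the income change is dropped because only one feature may be modified.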

Another strategy is to use a method that reproduces the local gradients of the loss function. The best known of such methods is the Zeroth-Order Optimization (ZOO) attack [25], which works directly on the value of the objective function. Given an approximation of the gradient values, it selects the variables that have the greatest impact on model prediction. The method requires, for each model under attack, limiting the change in feature values to their acceptable ranges (e.g., numeric values within a range or only available categorical values).
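The idea of estimating gradients from objective values alone can be illustrated with symmetric finite differences. This is a simplified sketch that omits the refinements of the published ZOO attack; the function names and the toy objective are hypothetical.

```python
def estimate_gradient(f, x, h=1e-4):
    """Approximate the gradient of a black-box scalar function f at x
    using symmetric finite differences, one coordinate at a time."""
    grad = []
    for i in range(len(x)):
        x_plus = list(x)
        x_minus = list(x)
        x_plus[i] += h
        x_minus[i] -= h
        grad.append((f(x_plus) - f(x_minus)) / (2 * h))
    return grad


def most_influential(grad, k=1):
    """Indices of the k coordinates with the largest absolute gradient."""
    order = sorted(range(len(grad)), key=lambda i: abs(grad[i]), reverse=True)
    return order[:k]


# Hypothetical objective: it is only queried, never differentiated.
def objective(x):
    return 3.0 * x[0] - 0.5 * x[1] ** 2


g = estimate_gradient(objective, [1.0, 2.0])
top = most_influential(g, k=1)
```

Each gradient estimate costs two model queries per feature, which is why query budgets matter for this family of attacks.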

Gradient attacks, such as FGSM (Fast Gradient Sign Method, also known as the FGM method) [4], PGD (Projected Gradient Descent) [26], and BIM (Basic Iterative Method) [27], are adapted to tabular data by modifying the loss function to account for the specifics of the data. For tabular categorical features, gradients can be used to identify the most susceptible features, which are then changed to other values consistent with possible categories. Gradient methods can also be used when acquiring a surrogate model for the attacked model [5].
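As an illustration of a single gradient-sign step on numeric tabular features, the sketch below attacks a logistic-regression model, for which the loss gradient with respect to the input has a closed form. The weights, the example record, and the per-feature ranges are hypothetical, and clipping stands in for the domain constraints discussed above.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def fgsm_logistic(x, y, w, b, eps, lower, upper):
    """One FGSM step against the model p(y=1|x) = sigmoid(w.x + b).

    The gradient of the cross-entropy loss with respect to x is (p - y) * w,
    so each feature moves by eps in the direction of the gradient sign and
    is then clipped to its admissible range.
    """
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    grad = [(p - y) * wi for wi in w]
    sign = [(g > 0) - (g < 0) for g in grad]
    return [
        min(max(xi + eps * s, lo), hi)
        for xi, s, lo, hi in zip(x, sign, lower, upper)
    ]


# Hypothetical two-feature model and a correctly classified positive example.
w, b = [2.0, -1.0], 0.0
x, y = [0.3, 0.2], 1
x_adv = fgsm_logistic(x, y, w, b, eps=0.25, lower=[0.0, 0.0], upper=[1.0, 1.0])

p_before = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
p_after = sigmoid(sum(wi * xi for wi, xi in zip(w, x_adv)) + b)
```

Here the predicted probability of the true class drops below 0.5 after the step, so the example is misclassified. PGD and BIM repeat such steps with a smaller step size and a projection after each one.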

The last category of attacks on tabular models is generative attacks, which take advantage of the generalization ability of large language models to create adversarial examples capable of disrupting the model [28].

4. Adversarial Attacks Detection Method

Explainable Artificial Intelligence (XAI) methods seek to provide transparency in machine learning models by offering insights into how and why particular decisions are made. Commonly, such methods employ surrogate models (simpler or more interpretable classifiers) to approximate the behavior of complex black box systems [29, 30]. By examining how a surrogate responds to inputs, one can infer patterns indicative of the original model’s decision processes. The present work integrates seamlessly into this landscape by constructing a surrogate model that captures local behavior around each instance and computing diagnostic attributes (e.g., neighborhood consistency and uncertainty measures), which summarize the local decision context.

Unlike more widely known surrogate-based approaches, such as LIME or SHAP, designed primarily for explanatory purposes, our method leverages these local interpretability elements for security. Specifically, the surrogate model (built using rough-set-based approximation reducts) not only demystifies decision making but also serves as the foundation for detecting anomalous behavior induced by adversarial perturbations. Such dual usage of local interpretability metrics (for both explanation and defense) extends the BrightBox paradigm [6] toward the emerging challenge of adversarial robustness in tabular data.

The basis of the proposed attack detection method is a combination of three analytical components:

A method for creating surrogate models based on observations of the monitored model.

Analysis of diagnostic attributes extracted from the surrogate model.

Creation of classifiers that recognize the possibility of an attack for a specific data sample, operating based on diagnostic attributes.

The proposed method is based on the assumption that during the preparation of the surrogate model, the observed model is not subject to attacks, so the surrogate learns the behavior that the model exhibits under normal operation. Based on the prepared surrogate model and observation of its operation (processing the same data as the observed model), diagnostic attributes are extracted. Then, based on these attributes, classifiers are prepared that process the diagnostic attributes on the fly to detect deliberate changes of data values, i.e., adversarial attacks. The attacks are distinguished not based on the characteristics of the data themselves but based on what happens to the data during classification; for example, changes in the characteristics of the neighborhood of the classified examples are observed.

In the present study, it was decided to use a surrogate model building method based on an ensemble of approximation reducts [31], as this approach is stable and resistant to minor changes in the input data. Ensemble methods, such as the one used here, are characterized by high stability in the sense of approximating the decisions of the underlying model, which is crucial in the context of the detection of adversarial attacks, where even small perturbations in the data can significantly affect the results.

Before the formal definition of approximation reduct, we provide an intuitive example. Consider a simplified tabular dataset used to assess loan applications (Table 1). Each row represents an applicant, described by attributes such as income, age, and credit score. A black-box model predicts whether the loan is approved. To understand the decision for a specific applicant, we identify similar applicants, i.e., those with matching values for a subset of attributes. This subset is an approximation reduct, capturing the minimal information needed to explain the decision.

In this example, the reduct {Income, Credit Score} is sufficient to explain the decision for Applicant A, as all similar applicants with the same values received the same decision. If an adversarial attack perturbs the input slightly (e.g., changes Credit Score to 680), the neighborhood consistency may break, signaling a potential attack.

One of the key features of the surrogate model is the ability to calculate and analyze the neighborhoods of data instances. For a given object, its neighborhood is defined as the set of similar objects in the training set, determined by the indistinguishability class of the object under each approximation reduct. The neighborhood consists of observations from the reference dataset that are similar to the diagnosed instance, according to the set of reducts. Similarity is defined by the number of reducts for which two instances have the same combinations of attribute values. The neighborhood plays a key role in diagnosing the performance of a black box model, as it provides local context for evaluating the model’s decisions. By analyzing the consistency of predictions in the neighborhood (for example, whether similar instances were classified correctly or incorrectly), the surrogate model can provide insight into the causes of model errors.

To illustrate this concept more intuitively, Table 2 shows an example neighborhood construction for an instance with three attributes. Here, reducts define similarity relations, and instances with matching reduct-based values are grouped into the neighborhood of the diagnosed object.

In this example, the diagnosed instance x has the attribute values (A, B, C). Objects belong to the neighborhood because they share reduct-defined similarities with x. Objects do not, as none of the reducts consider them indistinguishable from x. This neighborhood then becomes the basis for calculating diagnostic attributes.
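The neighborhood construction can be sketched in a few lines. The snippet assumes one reading of the description above: an object belongs to the neighborhood if it matches the diagnosed instance on every attribute of at least one reduct, and its similarity is the number of such reducts. The data, the reducts, and the function names are hypothetical and are not taken from Table 2.

```python
def reduct_matches(x, z, reducts):
    """Number of reducts on which x and z agree on every attribute."""
    return sum(all(x[a] == z[a] for a in reduct) for reduct in reducts)


def neighborhood(x, reference, reducts):
    """Map the index of each reference row that is indistinguishable from x
    under at least one reduct to its similarity (count of matching reducts)."""
    similar = {}
    for idx, z in enumerate(reference):
        matches = reduct_matches(x, z, reducts)
        if matches > 0:
            similar[idx] = matches
    return similar


# Hypothetical discretized records: (income, age band, credit score).
reference = [
    ("high", "30-40", "good"),
    ("high", "50-60", "good"),
    ("low", "30-40", "good"),
    ("low", "50-60", "poor"),
]
# Two hypothetical reducts, given as tuples of attribute indices.
reducts = [(0, 2), (1, 2)]

x = ("high", "30-40", "good")
nbrs = neighborhood(x, reference, reducts)
```

With these inputs the first three rows form the neighborhood, the first with similarity 2 and the next two with similarity 1, while the last row matches no reduct and is excluded.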

After calculating the neighborhoods, the surrogate model completes the diagnosis by calculating a set of diagnostic attributes. Diagnostic attributes provide a standardized set of measures to analyze the model’s behavior through neighborhood characteristics. For each object whose neighborhood was determined from the indistinguishability relations induced by approximation reducts, the following diagnostic attributes are calculated:

Neighborhood size—the number of observations that are in the neighborhood of the observation being diagnosed, normalized as . Measures how large the neighborhood is for each instance, reflecting the number of similar instances found in the reference set.

Uncertainty—a measure of prediction uncertainty, calculated based on normalized entropy:

$$U(x) = -\frac{1}{\log l} \sum_{i=1}^{l} p_i(x) \log p_i(x)$$

where $l$ is the number of decision classes and $p_i(x)$ is the probability of class $i$ for observation $x$. This is a critical diagnostic attribute, measuring the surrogate model’s confidence in its predictions. Low uncertainty can indicate overconfidence about incorrect predictions, while high uncertainty suggests the model’s difficulty in making correct predictions.

Target consistency with approximations in the neighborhood—a measure of the correspondence between the target value of the diagnosed observation

and the approximate prediction values

obtained for observations in its neighborhood

, calculated as:

where

is the probability (according to the

model) of assigning a class

c for a point

z and

is an indicator function, taking the value 1 if the condition in parentheses is met and 0 otherwise.

Prediction consistency with targets in neighborhood—a measure that determines the consistency of the prediction

for the diagnosed observation

x with the target values

of the observations belonging to its neighborhood

, calculated as:

where

is the actual value of the target (label) of the observation

z and

is an indicator function, taking the value of 1 when the condition is met and 0 otherwise.

Target consistency with targets in neighborhood—a measure describing the correspondence between the target values of a diagnosed observation

and the target values

of observations in its neighborhood

, calculated as:

Targets and approximations inconsistency in neighborhood—a measure of the degree of inconsistency between the values of the target

and the approximations

obtained for observations

. It is calculated as:

where

is the approximate probability of the decision class

for the observation

.

Targets diversity in neighborhood—a measure describing the diversity of target values in the neighborhood of the diagnosed observation compared to the diversity of target values calculated for the entire set of diagnosed data. It is calculated as:

where

p is the a priori distribution and

is the distribution of decision classes in the neighborhood of

.

Approximations diversity in neighborhood—a measure describing the variation of prediction approximations in the neighborhood of the diagnosed observation concerning the variation of approximations calculated for the entire set of diagnosed data. It is defined as:

where

is the distribution of approximate predictions in the neighborhood of

.

For objects with empty neighborhoods, attribute values are implicitly determined from metrics based on the a priori distribution $p$. The normalized entropy used in uncertainty calculations is defined as:

$$H_{\mathrm{norm}}(p) = -\frac{1}{\log l} \sum_{i=1}^{l} p_i \log p_i$$

where $l$ is the number of decision classes.
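A small numerical illustration of normalized entropy may help. The sketch assumes the standard definition, Shannon entropy divided by the logarithm of the number of classes, with the convention that zero-probability classes contribute nothing; the function name is hypothetical.

```python
import math


def normalized_entropy(probs):
    """Shannon entropy of a class distribution, scaled to the range [0, 1]
    by dividing by log(l), where l is the number of decision classes."""
    l = len(probs)
    if l < 2:
        return 0.0
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return entropy / math.log(l)


uniform = normalized_entropy([0.25, 0.25, 0.25, 0.25])   # maximal uncertainty
certain = normalized_entropy([1.0, 0.0, 0.0])            # no uncertainty
skewed = normalized_entropy([0.9, 0.1])                  # in between
```

A uniform distribution gives the maximum value of 1 and a one-hot distribution gives 0, which makes the attribute comparable across datasets with different numbers of classes.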

Diagnostic attributes provide a comprehensive view of the model’s performance, helping to identify potential problems such as over-fitting, under-fitting, or errors due to heterogeneity in the training data. These attributes are then used to assess the model’s predictive consistency. For example, if neighbors with the same true labels surround an instance, and the model incorrectly predicts its label with high confidence, this could indicate an overfitting problem. On the other hand, if the model shows high uncertainty in predicting the label for an instance with a large, consistent neighborhood, this may suggest a mismatch in the model (underfitting).

A key advantage of diagnostic attributes is that they provide a standardized representation, always yielding the same number of attributes regardless of dataset size or model complexity. This property makes them directly comparable across heterogeneous settings, which is particularly valuable in large-scale experimental studies. Building on prior work, where they were applied for model-level diagnostics and instance-level data assessment, we extend their use in this study to the detection of adversarial attacks by verifying the hypothesis that the analysis of diagnostic attributes can indicate a significant probability that an input has been modified intentionally. In this way, diagnostic attributes emerge as a universal diagnostic framework spanning different stages of the machine learning pipeline.

5. Results

The purpose of this work was to create a method, realized as a classifier, that makes it possible to detect attacks on ML models that solve classification problems and are trained on tabular data.

The experiments were conducted on a set of 22 tabular datasets (presented in Table 3), provided on the OpenML service, and prepared for classification tasks. Datasets were selected for which it was known that it was possible to build correctly working classifiers, for example, in connection with their use in data science competitions on the Kaggle platform and similar.

The datasets used in this study include collections comparable in size to those employed in industrial applications. In our earlier work [6], we also validated the method on a dataset containing 59,427 instances and 455 attributes originating from a real enterprise environment, confirming its feasibility at production scale. Moreover, the framework can be extended to streaming data scenarios by adopting a batch-based evaluation strategy, where incoming data are processed incrementally in manageable segments. This ensures that the approach remains computationally practical for large-scale and continuously evolving industrial use cases.

The machine learning methods to be attacked were selected to diversify the types of models, so that the effectiveness of the proposed methods for detecting and isolating attacks could be evaluated under different conditions. Several classifiers representing different machine learning paradigms, including linear methods and ensemble methods, were selected. Each of these models introduces different decision boundaries, learning strategies, and vulnerabilities to attacks. They are also examples of models often found in practical implementations of decision automation systems based on machine learning.

Logistic regression (LR) acts as a simple base model, particularly vulnerable to attacks. Support Vector Machine (SVM) adds non-linear decision boundaries, increasing resistance to some attacks. XGBoost (XGB), as an ensemble model, is widely used in practice and is also the model that, on average, gives the best solutions. Moreover, a simple neural network (NN) was also considered, as it can capture complex patterns in data through its layers and non-linear activations, potentially enhancing robustness against adversarial attacks.

The attacks on machine learning models were implemented using the ART (Adversarial Robustness Toolbox) software package, freely available on GitHub (https://github.com/Trusted-AI/adversarial-robustness-toolbox, accessed on 2 June 2025). For attacks not supported by ART, the implementations provided by the authors of each method were used.

Overall, experiments were conducted on four models (LR, NN, SVM, and XGB) and seven attacks (BIM, FGM, HopSkipJump, PermuteAttack, PGD, Random Noise, and ZOO).

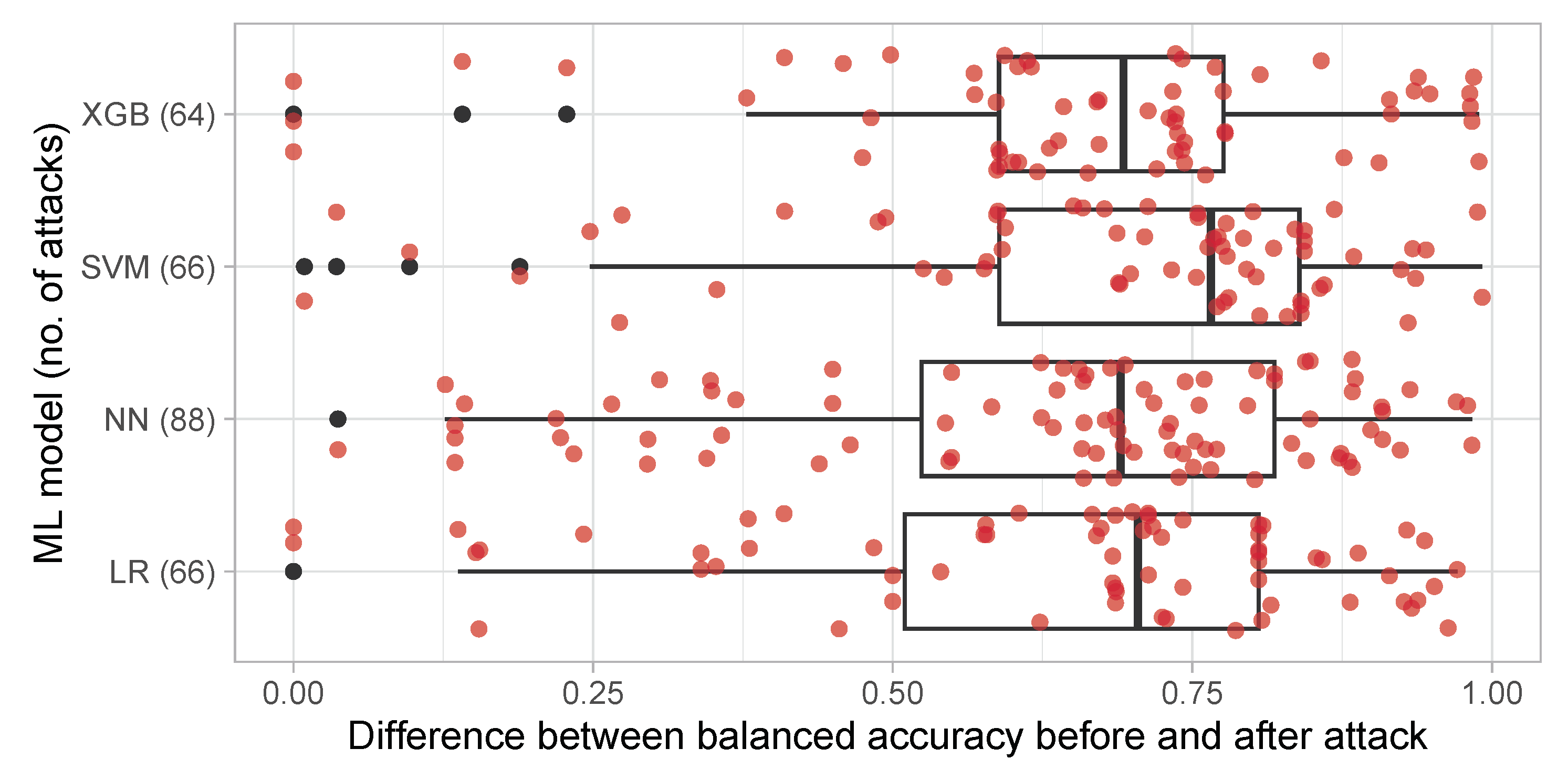

Figure 1 presents the difference in balanced accuracy between the original datasets and the same datasets after an adversarial attack. The median deterioration is about 0.7 in absolute value. For a few individual datasets, the attack has no effect (the difference is equal to 0).

5.1. Adversarial Attacks Detection

With the models to be diagnosed and the adversarial examples prepared, it was possible to calculate the values of the diagnostic attributes. For this purpose, a surrogate model was prepared for each of the models to be diagnosed, based on 2500 approximation reducts, for which . The similarity of the surrogate model to the diagnosed model was verified using Cohen’s kappa, which measures the agreement between the decisions made by the two models. A surrogate was accepted when Cohen’s kappa was greater than 0.9. Diagnostic attribute values were calculated for each dataset analyzed and each attack method considered, as well as for the case where the data was unaffected by the attack.
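The agreement check can be illustrated with a direct computation of Cohen’s kappa from two lists of decisions. This is a plain-Python sketch of the standard formula, not the implementation used in the experiments; the decision vectors are hypothetical.

```python
from collections import Counter


def cohen_kappa(a, b):
    """Cohen's kappa for two equally long sequences of class decisions."""
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    count_a, count_b = Counter(a), Counter(b)
    # Agreement expected by chance, from the marginal class frequencies.
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both sequences use a single, identical class
    return (observed - expected) / (1.0 - expected)


# Hypothetical decisions of the diagnosed model and of its surrogate.
model_decisions = [0, 0, 0, 0, 1, 1, 1, 1, 1, 1]
surrogate_decisions = [0, 0, 0, 1, 1, 1, 1, 1, 1, 1]

kappa = cohen_kappa(model_decisions, surrogate_decisions)
accepted = kappa > 0.9
```

In this toy case the two models agree on nine of ten decisions, yet kappa is only about 0.78, so the surrogate would not pass the 0.9 threshold. Kappa is stricter than raw agreement because it discounts agreement expected by chance.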

The issue of computational cost and scalability of approximation reduct ensembles was previously analyzed in detail in our earlier study [

6]. Those experiments demonstrated that runtime depends primarily on the number of instances and attributes: for small- and medium-sized datasets, the surrogate model can be trained within a few seconds, whereas for high-dimensional or very large datasets, the process may extend to several minutes. Across all benchmarks, the average runtime was approximately 29 s, with a relatively large standard deviation (56 s), reflecting the variability in dataset size and dimensionality. The current experiments, performed on a machine with 8 CPUs and 32 GB RAM, confirm these observations and show consistent behavior with the previously reported results. The selection of 2500 reducts with

was guided by stability tests carried out in the same paper, where this configuration consistently produced stable neighborhoods and robust diagnostic attributes.

We performed a comparative experiment using Random Forest proximities to construct neighborhoods. While this approach is widely applied in machine learning diagnostics, in our experiments, the resulting neighborhoods were very large, often encompassing a majority of the dataset. Such neighborhoods do not highlight only the most similar instances, which limits their utility for detecting subtle adversarial perturbations. In contrast, reduct-based surrogates explicitly restrict neighborhoods to the most relevant comparisons, leading to more discriminative diagnostic attributes (Table 4).

Importantly, the feasibility of the reduct-based approach is supported by our custom implementation of reduct generation in C++, which significantly accelerates computations. This ensures that ensembles of thousands of reducts can be generated and applied efficiently, making the method practical even for larger tabular datasets.

On this basis, we proceeded to construct a classifier that takes as input the aggregate values of all diagnostic attributes within the dataset, and as output returns a binary decision (attack/no attack detected) for a given data sample. In addition, the possibility of creating a classifier that can distinguish types of attacks among known attacks was tested. At this stage, aggregated data are enriched with several dataset characteristics, such as the number of classes and instances as well as the balanced accuracy of the model. A total of 59 features were analyzed, representing a vector of inputs to the classifiers.
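One way to picture the input vector of such a classifier is sketched below. The attribute names follow the diagnostic attributes mentioned in this section, but the choice of aggregation functions (mean, standard deviation, minimum, maximum) and all values are assumptions made for illustration; the text does not list the exact 59 features.

```python
from statistics import mean, pstdev


def aggregate_features(instances, dataset_info):
    """Flatten per-instance diagnostic attributes into one feature dictionary."""
    features = dict(dataset_info)  # dataset-level characteristics come first
    for name in sorted(instances[0]):
        values = [row[name] for row in instances]
        # Hypothetical aggregates; the paper's actual set may differ.
        features[f"{name}_mean"] = mean(values)
        features[f"{name}_std"] = pstdev(values)
        features[f"{name}_min"] = min(values)
        features[f"{name}_max"] = max(values)
    return features


# Toy per-instance diagnostic attributes for one data sample (hypothetical values).
instances = [
    {"neighborhood_size": 10, "target_consistency": 1.0},
    {"neighborhood_size": 14, "target_consistency": 0.5},
    {"neighborhood_size": 12, "target_consistency": 0.9},
]
dataset_info = {"n_classes": 2, "n_instances": 3, "balanced_accuracy": 0.91}
features = aggregate_features(instances, dataset_info)
```

The resulting dictionary holds one row of the detector's training table; its label is whether the data sample was attacked.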

Random Forest (RF) and XGBoost (XGB) were selected as final detectors because they represent two of the most widely adopted classification algorithms for tabular data, balancing interpretability, robustness, and computational efficiency. The obtained results were verified using cross-validation in the following variants: 10-fold cross-validation, one-attack-out, one-dataset-out, and one-model-out.

Table 5 and Table 6 present the classification metrics for these scenarios.
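The one-attack-out, one-dataset-out, and one-model-out variants share the same mechanism: all records belonging to one group are held out for testing and the rest are used for training. A minimal sketch of that split is given below. The record layout and dataset names are hypothetical, and how clean (unattacked) records are assigned in the one-attack-out variant is not specified in the text.

```python
def leave_one_group_out(groups):
    """Yield (held_out_group, train_indices, test_indices) for each group value."""
    for held_out in sorted(set(groups)):
        train = [i for i, g in enumerate(groups) if g != held_out]
        test = [i for i, g in enumerate(groups) if g == held_out]
        yield held_out, train, test


# Toy records (hypothetical): (dataset, attack) for each aggregated data sample.
records = [
    ("d1", "FGM"),
    ("d1", "PGD"),
    ("d2", "FGM"),
    ("d2", "ZOO"),
    ("d3", "PGD"),
]

# One-attack-out: group by the attack that produced the sample.
attack_splits = list(leave_one_group_out([attack for _, attack in records]))
# One-dataset-out: group by the source dataset.
dataset_splits = list(leave_one_group_out([dataset for dataset, _ in records]))
```

Compared with plain 10-fold cross-validation, these splits test whether the detector generalizes to an attack, dataset, or model it has never seen during training.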

The results summarized in Table 5 and Table 6 confirm strong performance in the binary detection task. Both XGBoost and Random Forest classifiers consistently achieved balanced accuracy above 0.94, with precision, recall, and F1 close to 0.97–0.99, and very low false negative rates (0.02–0.10). These values demonstrate the robustness of the framework in distinguishing adversarially perturbed data from clean samples.
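For reference, the reported metrics can be computed from binary labels as sketched below. The convention that label 1 denotes "attack" is an assumption, and the toy labels are illustrative rather than taken from the experiments.

```python
def _ratio(num, den):
    """Safe division that returns 0.0 for an empty denominator."""
    return num / den if den else 0.0


def binary_detection_metrics(y_true, y_pred):
    """Balanced accuracy, precision, recall, F1, and false negative rate."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # attacks detected
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # attacks missed
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # clean data passed
    recall = _ratio(tp, tp + fn)
    specificity = _ratio(tn, tn + fp)
    precision = _ratio(tp, tp + fp)
    return {
        "balanced_accuracy": (recall + specificity) / 2,
        "precision": precision,
        "recall": recall,
        "f1": _ratio(2 * precision * recall, precision + recall),
        "false_negative_rate": _ratio(fn, tp + fn),
    }


# Toy labels (hypothetical): 8 attacked samples, 2 clean samples.
y_true = [1, 1, 1, 1, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 1, 1, 1, 1, 0, 0, 1]
metrics = binary_detection_metrics(y_true, y_pred)
```

In this toy case precision, recall, and F1 are all 0.875, while balanced accuracy drops to 0.6875 because one of the two clean samples raised a false alarm. This is why balanced accuracy is reported alongside the other metrics when classes are imbalanced.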

In contrast, the attack-type classification task remains substantially more challenging. Balanced accuracy across the validation scenarios lies in the 0.50–0.65 range, with lower precision, recall, and F1 scores. This modest performance stems from the task involving eight imbalanced attack classes, which increases complexity relative to binary detection. Detailed confusion matrix analyses revealed that the most frequent misclassifications occur between the attack pairs BIM–PGD, HSJ–ZOO, and PER–ZOO.

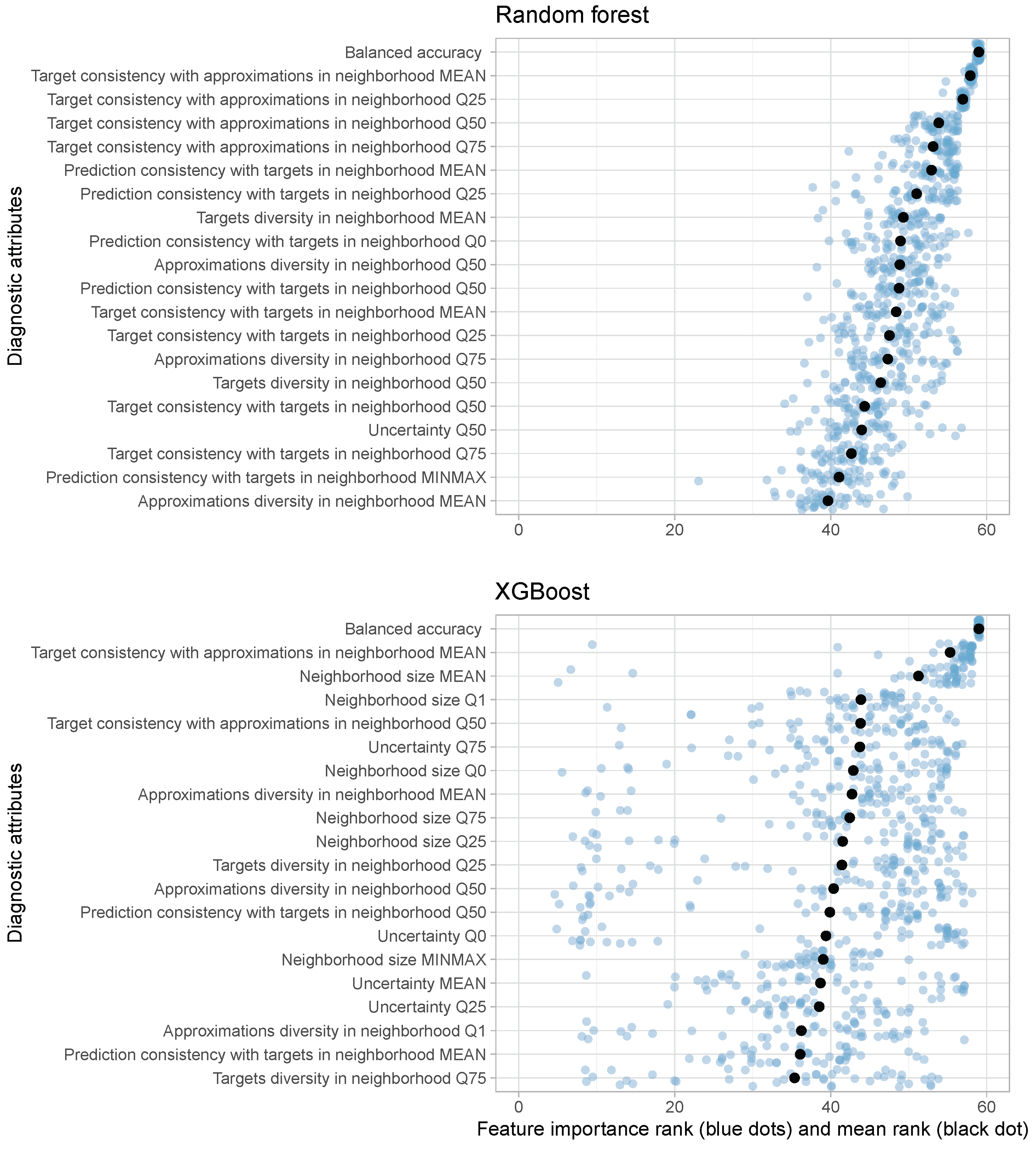

For each scenario and classifier, feature importance was determined and a ranking was created, in which a higher rank means higher importance.

Figure 2 shows the 20 most significant variables for each binary classifier.

In the analysis of diagnostic attribute importance, balanced accuracy plays a key role for both types of classifiers. For the Random Forest classifier, the next positions are occupied by features related to the diagnostic attribute target consistency with approximations, while for XGBoost, they are occupied by features related to target consistency with approximations and neighborhood size.

For each of the trained classifiers detecting attacks and their type, the most significant diagnostic attribute was the balanced accuracy obtained by the model undergoing diagnostics. In real-world applications, however, the ratio of adversarial examples to normal data is usually very low. Because balanced accuracy weights sensitivity and specificity equally, it can give an overly optimistic picture of classifier performance in scenarios where the attacker selectively influences the results without significantly decreasing balanced accuracy. In addition, balanced accuracy treats all classification errors equally, while in a security context error costs are not necessarily symmetric: an undetected attack is usually more costly than a false alarm. Taking the above into account, the classifiers were also trained to detect attacks without balanced accuracy as a diagnostic attribute. This procedure also makes it possible to assess to what extent the loss of balanced accuracy information affects the ability to detect an attack and to distinguish its type. The results of training the classifiers are presented in Table 7 and Table 8.

The attack detection classifiers obtained at this stage were considered good enough for practical use. Under the most favorable scenario of classifier training, removing one attack did not significantly affect the prediction quality of the classifiers. However, for attack-type classifiers, there is a noticeable deterioration in the results obtained.

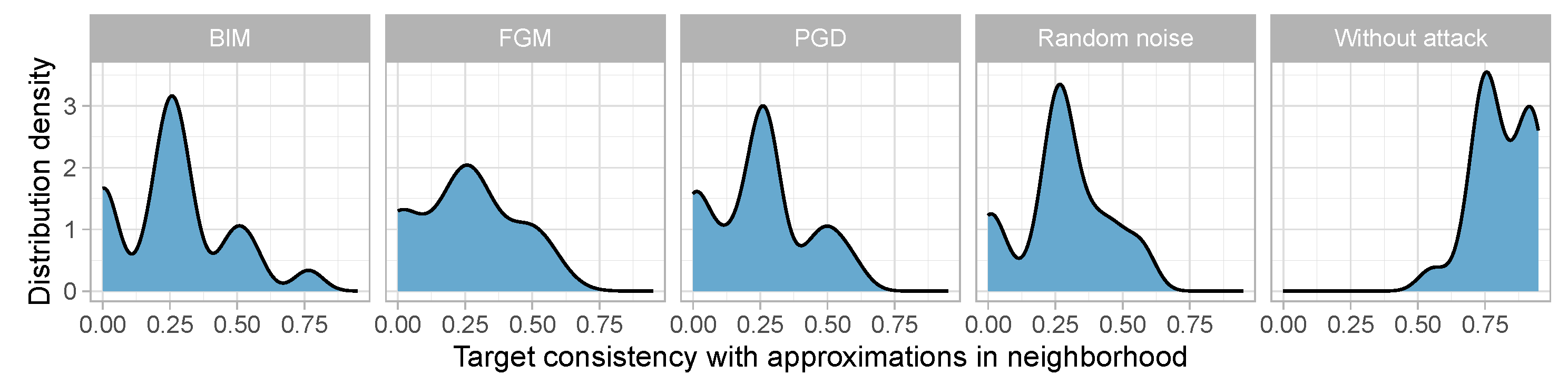

Based on the most important features selected by the model, it is possible to show what the distributions of diagnostic attributes look like in the event of an adversarial attack (Figure 3).

The values of the selected attribute indicate that for normal model behavior (without attack), decision consistency in the neighborhood is very high, in contrast to a situation where an adversarial attack has occurred. This shows that diagnostic attribute values are human-readable measures, indicating the presence of a problem with the dataset.

Beyond aggregated plots, diagnostic attributes can also be used to derive explicit decision rules that directly expose adversarial triggers. For instance, from the experimental results, we obtained the following rule:

This type of rule demonstrates that adversarial perturbations lead to distinctive local patterns: the attacked instance becomes inconsistent with its neighborhood, while the model’s uncertainty simultaneously decreases. Such joint conditions are rarely observed in natural errors and can therefore be interpreted as a strong signal of adversarial manipulation. The ability to extract simple and human-readable rules makes the framework not only effective in detection but also transparent and actionable for practitioners.

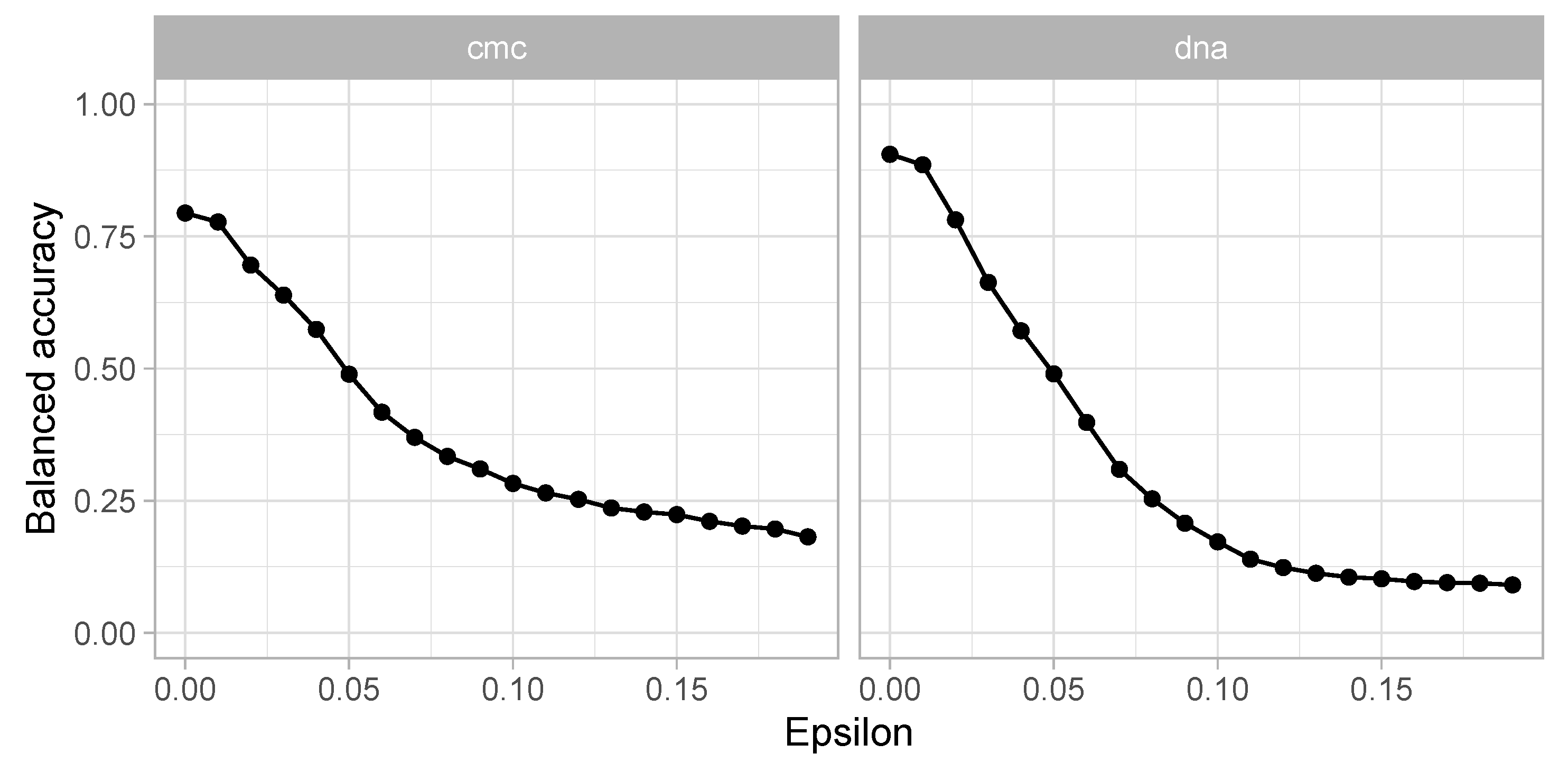

5.2. Sensitivity Analysis

The attack cases tested so far have assumed a standard

perturbation scale factor. The practical significance of this coefficient is important from the point of view of both the attacking party and the party trying to detect the attack. Lowering it will result in smaller perturbations that are more difficult to detect while having less impact on the correctness of the attacked system. This is a special case of the general problem, in which each attack assumes some level of conscious balance between its effectiveness and difficulty of detection [20].

To test the dependence of the attack’s effectiveness on its magnitude, a sensitivity analysis was conducted to check how the balanced accuracy measure changes depending on the

value of the FGM attack. Twenty

values ranging from 0 to 0.2 with a step equal to 0.01 were verified. The results, showing how strongly the attack reduces the balanced accuracy of the attacked model for two sample datasets, are shown in Figure 4.
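The sweep described above can be illustrated on a toy model. The sketch below is not the experimental setup: it assumes a fixed logistic-regression model with hypothetical weights, six hypothetical data points, and the sign-of-gradient (L-infinity) variant of FGM. The grid includes both endpoints and therefore has 21 values; the text does not state which values were actually used.

```python
import math

# Hypothetical fixed logistic-regression model standing in for the attacked model.
W = [2.0, -1.0]
B = 0.0


def predict_proba(x):
    z = sum(w * xi for w, xi in zip(W, x)) + B
    return 1.0 / (1.0 + math.exp(-z))


def predict(x):
    return 1 if predict_proba(x) >= 0.5 else 0


def sign(v):
    return (v > 0) - (v < 0)


def fgm(x, y, eps):
    """Untargeted FGM step: move x by eps along the sign of the loss gradient."""
    p = predict_proba(x)
    grad = [(p - y) * w for w in W]  # gradient of the log-loss w.r.t. the input
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


def balanced_accuracy(points, labels):
    recalls = []
    for cls in (0, 1):
        idx = [i for i, y in enumerate(labels) if y == cls]
        hits = sum(predict(points[i]) == cls for i in idx)
        recalls.append(hits / len(idx))
    return sum(recalls) / len(recalls)


def attacked_balanced_accuracy(points, labels, eps):
    adversarial = [fgm(x, y, eps) for x, y in zip(points, labels)]
    return balanced_accuracy(adversarial, labels)


X = [[0.10, 0.00], [0.30, 0.10], [0.06, -0.04],
     [-0.10, 0.00], [-0.30, -0.10], [-0.05, 0.10]]
y = [1, 1, 1, 0, 0, 0]

eps_grid = [k * 0.01 for k in range(21)]  # 0.00, 0.01, ..., 0.20
sweep = [attacked_balanced_accuracy(X, y, eps) for eps in eps_grid]
```

On this toy model, balanced accuracy falls from 1.0 on clean data to 0.0 at the largest perturbation, mirroring the trade-off between attack strength and detectability discussed above.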

In the next step, the attack detection performance of the previously prepared classifiers was checked, both with and without balanced accuracy. The results are presented in Table 9.

The high quality of the classification results indicates that even at low attack intensity, when the effect of the attack is not yet visible as a decrease in the balanced accuracy of the attacked model, the other diagnostic attributes already allow effective detection of the attack.

6. Conclusions and Discussion

The main objective of the paper was to develop, verify, and implement a method for detecting adversarial attacks on machine learning models trained on tabular data. The method was to operate in a black-box regime, without access to the architecture and parameters of the model being attacked.

The proposed method is dedicated to classification models that process tabular data. It makes it possible to detect adversarial attacks intended to induce misclassification of data—at the inference stage (evasion type attacks). It requires access to input data and the corresponding decisions made by the diagnosed model (the ML model can be treated as a black box) and the ability to run a data processing stream parallel to the diagnosed model—used to calculate diagnostic attributes and detect attacks.

The method is based on two key elements: a surrogate model approximating the behavior of the diagnosed model, and a set of diagnostic attributes extracted from the surrogate model that reflect the model’s behavior in the local neighborhoods of the analyzed examples. Based on these attributes, a trained classifier detects attacks for a given data sample. The proposed approach, with high efficiency measured by balanced accuracy, enables the detection of intentional data changes used to disrupt machine learning models.

The present study focused on validating the application of approximation reduct ensembles as surrogates for adversarial attack detection. An interesting future research direction is the exploration of alternative surrogates, in particular random-forest-based approaches, which may offer a complementary way of constructing neighborhoods and diagnostic attributes.

6.1. Dual-Use Considerations and Safe Deployment

While the proposed detection method demonstrates significant promise for enhancing ML security, adversarial robustness research has an inherent dual-use nature. The detailed exposition of diagnostic attributes and their thresholds could potentially enable adversaries to develop adaptive attacks specifically designed to evade detection mechanisms. For instance, attackers aware of our reliance on neighborhood consistency measures might craft perturbations that maintain local coherence while still achieving misclassification objectives. The same applies to the sensitivity analysis, where revealing detection capabilities at low perturbation scales could guide adversaries toward identifying optimal attack magnitudes that balance effectiveness with detectability.

To mitigate these risks, we recommend several safe deployment strategies:

Implementing ensemble-based detection systems that combine multiple orthogonal detection approaches to increase adaptation costs for adversaries;

Employing dynamic threshold adjustment and periodic retraining of detection classifiers to maintain robustness against evolving attack strategies;

Restricting detailed technical specifications in production deployments while maintaining transparency about general detection capabilities;

Establishing continuous monitoring frameworks that can identify when detection performance degrades due to adaptive adversarial behavior.

These considerations highlight that adversarial detection represents an ongoing evolutionary process rather than a static solution, requiring continuous adaptation to maintain effectiveness against sophisticated adversaries who may leverage publicly available detection methodologies to refine their attack strategies.

6.2. Limitations

The experimental setup presents several constraints that must be acknowledged. Experiments were conducted on 22 tabular datasets from OpenML, using 7 attack methods and 4 machine learning models. While these datasets cover diverse application domains, the controlled laboratory setting does not reflect the complexity encountered in real-world deployments where data characteristics, model architectures, and attack patterns exhibit greater variability.

The method relies on a fundamental assumption that surrogate models are constructed using clean, unattacked data during the initial training phase. This assumption differs significantly from actual deployment scenarios where business data distributions drift continuously, necessitating regular model updates and maintenance. The current approach does not address surrogate model update strategies or the incremental computational costs required to maintain detection capabilities as underlying ML models evolve through retraining cycles. It also does not tackle the problem of poisoning attacks.

The evaluation framework did not incorporate adaptive adversaries who specifically target the detection mechanism. Real-world attackers may develop strategies to circumvent detection by exploiting knowledge of diagnostic attribute calculations or engineering perturbations that preserve local neighborhood consistency while achieving misclassification goals. The absence of such adaptive attack scenarios in the experimental design represents a gap that could impact the practical robustness of the detection approach.

In its current form, the method is designed to detect evasion attacks at the inference stage and does not address poisoning attacks that compromise model training. The approach is also limited to tabular data, with effectiveness on other data modalities remaining unexplored.

In their current form, the classifiers are not suitable for distinguishing between types of attacks. The method detects attacks on models at the inference stage; it is not dedicated to detecting poisoning attacks aimed at modifying the model’s decision boundaries at a later stage. Moreover, the proposed method was verified only on static tabular data without temporal dependencies.

6.3. Future Works

In conducting this research, we followed the pattern of first verifying the core principle of the method in a laboratory environment, with a plan for future experiments that test the boundaries of its usefulness in progressively more realistic scenarios. Future research will split into three distinct streams of work. The most important from a practical point of view is to address adaptive detection mechanisms for evolving data distributions, evaluate robustness against adversaries targeting the detection system itself, and extend the approach to non-tabular data modalities. Another stream will investigate poisoning attack detection and improved attack-type classification accuracy. Finally, large-scale validation in real-world deployment scenarios will be essential for assessing the practical effectiveness of the presented method.

While the proposed framework focuses on detecting evasion attacks at inference, future research may address poisoning attacks that target the training process. Investigating how the surrogate model and diagnostic attributes behave under incremental retraining or adaptive scenarios, where adversaries refine their strategies based on detection feedback, would provide broader coverage of adversarial threats. Introducing adversarial training elements for the detection classifier itself could further improve its resilience. Another direction of future research will be the investigation of attack detection in time-series data, which would increase the usability of the proposed framework in other sectors such as healthcare or industry.

Future work will include extending the evaluation to other black-box detection baselines such as Kernel Density Estimation, nearest neighbor distance, and autoencoder-based reconstruction error, as well as comparisons with transfer learning and meta-learning schemes. These additions will require substantial methodological adaptation and large-scale experiments, but they are essential for a more comprehensive assessment of the proposed framework.