Abstract

Human activity recognition (HAR) remains an essential field of research with increasing real-world applications ranging from healthcare to industrial environments. As the volume of publications in this domain continues to grow, staying abreast of the most pertinent and innovative methodologies can be challenging. This survey provides a comprehensive overview of the state-of-the-art methods employed in HAR, covering both classical machine learning techniques and their recent advancements. We investigate a plethora of approaches that leverage diverse input modalities including, but not limited to, accelerometer data, video sequences, and audio signals. Recognizing the challenge of navigating the vast and ever-growing HAR literature, we introduce a novel methodology that employs large language models to efficiently filter and pinpoint relevant academic papers. This not only reduces manual effort but also helps ensure the inclusion of the most influential works. We also provide a taxonomy of the examined literature to give scholars rapid and organized access to HAR approaches. Through this survey, we aim to provide researchers and practitioners with a holistic understanding of the current HAR landscape, its evolution, and the promising avenues for future exploration.

1. Introduction

Human activity recognition (HAR) pertains to the systematic identification and classification of activities undertaken by individuals based on diverse sensor-derived data [1]. This interdisciplinary research domain intersects computer science, engineering, and data science to decipher patterns in sensor readings and correlate them with specific human motions or actions. The importance of HAR is underscored by the rapid proliferation of wearable devices, mobile sensors, and the burgeoning Internet of Things (IoT) environment. Recognizing human activities accurately can not only improve user experience and automation but also assist in a myriad of applications where understanding human behavior is essential.

The applications of HAR are multifaceted, encompassing health monitoring, smart homes, security surveillance, sports analytics, and human–robot interaction, to name just a few. For instance, in the healthcare sector, HAR can facilitate the remote monitoring of elderly people or patients with chronic diseases, enabling timely interventions and reducing hospital readmissions. Similarly, in smart homes, recognizing daily activities can lead to energy savings, enhanced comfort, and improved safety. In sports, HAR can help athletes refine their techniques and postures by providing real-time feedback. Moreover, in security and surveillance, anomalous activities can be promptly detected, enabling timely responses. In summary, HAR holds transformative potential across numerous sectors, shaping a more responsive and intuitive environment [2].

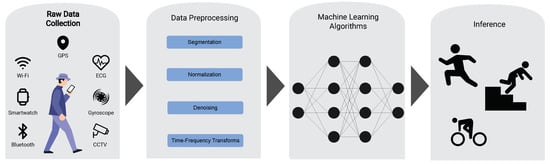

An example of HAR involves the use of wearable devices, such as smartwatches or fitness trackers, to monitor and classify user activities, as shown in Figure 1. These devices are often equipped with a range of sensors, the most prevalent being accelerometers and gyroscopes, which measure linear acceleration and angular velocity, respectively. Raw sensor data are sampled at fixed intervals, resulting in a time-series dataset [3,4,5,6]. For instance, an accelerometer produces a triaxial time series of acceleration values along the x, y, and z axes.

Prior to feature extraction, several preprocessing steps are typically undertaken; in typical HAR applications, proper predictor selection and data normalization have been shown to be critical for the performance of machine learning algorithms [7]. The raw time-series data are often noisy and may contain irrelevant fluctuations. Hence, they are filtered, commonly with a low-pass filter, to eliminate high-frequency noise [8]. Subsequently, the continuous data stream is segmented into overlapping windows, each representing a specific timeframe (e.g., 2.56 s with a 50% overlap). Windowing facilitates the extraction of local features that can capture distinct activity patterns. For each window, multiple features are computed; time-domain features such as the mean, variance, standard deviation, and correlation between axes are commonly extracted.

The resulting feature vectors are then used to train a classifier. Traditional algorithms such as support vector machines (SVMs), decision trees, random forests, and k-nearest neighbors (k-NN) have been extensively applied. With the advent of deep learning, however, convolutional neural networks (CNNs) and recurrent neural networks (RNNs) have also gained prominence, owing to their ability to learn features automatically and to model complex temporal relationships. Once trained, the model can classify incoming data into predefined activity classes such as walking, running, sitting, or standing. The granularity and accuracy of the classification depend on the quality of the data, the extracted features, and the efficacy of the chosen algorithm. In summary, HAR with wearable devices follows a systematic pipeline from raw sensor data acquisition to refined activity classification, leveraging advanced computational methods and algorithms.
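The wearable-sensor pipeline described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a reference implementation: it assumes a 50 Hz sampling rate (so a 2.56 s window is 128 samples), a third-order Butterworth low-pass filter with a 20 Hz cutoff, the time-domain features listed above, and a random forest as the classifier. The two activities are generated synthetically by the hypothetical helper `make_stream`, standing in for real accelerometer recordings.

```python
import numpy as np
from scipy.signal import butter, filtfilt
from sklearn.ensemble import RandomForestClassifier

FS = 50.0                    # assumed sampling rate (Hz)
WIN = int(round(2.56 * FS))  # 2.56 s window -> 128 samples
STEP = WIN // 2              # 50% overlap between consecutive windows


def low_pass(signal, cutoff_hz=20.0, fs=FS, order=3):
    """Zero-phase Butterworth low-pass filter along the time axis."""
    b, a = butter(order, cutoff_hz, btype="low", fs=fs)
    return filtfilt(b, a, signal, axis=0)


def sliding_windows(signal, win=WIN, step=STEP):
    """Split an (n_samples, 3) triaxial stream into overlapping windows."""
    starts = range(0, len(signal) - win + 1, step)
    return np.stack([signal[s:s + win] for s in starts])


def window_features(window):
    """Per-axis mean, variance, std, plus pairwise correlations between axes."""
    corr = np.corrcoef(window.T)            # 3x3 matrix over x, y, z
    pairs = corr[np.triu_indices(3, k=1)]   # (x,y), (x,z), (y,z)
    return np.concatenate([window.mean(axis=0), window.var(axis=0),
                           window.std(axis=0), pairs])


def make_stream(activity, seconds, rng):
    """Hypothetical synthetic accelerometer stream (in g), for illustration only."""
    t = np.arange(int(seconds * FS)) / FS
    noise = rng.normal(scale=0.05, size=(len(t), 3))
    if activity == "walking":
        # ~2 Hz periodic motion on all three axes
        base = np.column_stack([np.sin(4 * np.pi * t),
                                0.5 * np.sin(4 * np.pi * t + 1.0),
                                1.0 + 0.3 * np.cos(4 * np.pi * t)])
    else:
        # mostly static: gravity on the z axis only
        base = np.tile([0.0, 0.0, 1.0], (len(t), 1))
    return base + noise


rng = np.random.default_rng(0)
X, y = [], []
for label in ("sitting", "walking"):
    for w in sliding_windows(low_pass(make_stream(label, 60, rng))):
        X.append(window_features(w))
        y.append(label)
X, y = np.array(X), np.array(y)

clf = RandomForestClassifier(n_estimators=50, random_state=0).fit(X, y)
new_window = sliding_windows(low_pass(make_stream("walking", 5.12, rng)))[0]
print(clf.predict([window_features(new_window)]))
```

Swapping the random forest for an SVM or k-NN only changes the `clf` line; deep models such as CNNs or RNNs would instead consume the raw windows returned by `sliding_windows` rather than the hand-crafted features.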

Figure 1.

Generic flow of processes in human activity recognition tasks.

In a survey of vision-based HAR, the authors of [9] also review related surveys on the topic. Specifically, their statistics on surveys published from 2010 to 2019 show that the majority focus on specific aspects of HAR [9,10,11,12] rather than giving a broader picture of the field. We argue that, to inform the reader about recent trends, more work is needed that describes the wider spectrum of methodologies and settings adopted in every aspect of HAR. This article fills this gap in the literature by giving an overview of various HAR approaches that utilize a large array of sensors and modalities, enabling the reader to identify open gaps in the literature.

Given the broad range of HAR applications built on machine learning techniques, we structured our survey to address sensor-based and vision-based methods separately. An additional salient contribution of this article is the use of large language models (LLMs) to extract pertinent keywords and answer questions about each paper, which facilitates the ranking and filtering of our comprehensive database of papers. This paper is organized as follows:

- Introduction: This section delineates the problem of HAR, setting the context for the remainder of the paper.

- Related Work: Here, we reference the seminal and recent literature on HAR, underscoring the importance of comprehensive literature reviews in the domain.

- Methodology: In this section, we present our methodology, highlighting our data sources and the processes we employed to distill key information.

- Taxonomy of Methods: This section presents a deeper categorization of HAR methods and distinctly partitions them into sensor-based and vision-based techniques.

- Datasets: In this section, we catalog the most prevalent datasets employed in HAR research.

- Conclusion and Future Directions: Finally, in this section we wrap up the paper by discussing potential avenues for future research in the realm of HAR.

2. Related Work

A plethora of surveys have emerged to document the advancements, methodologies, and applications shaping the dynamic domain of HAR. These comprehensive reviews offer critical insights into the evolution of HAR enabled by machine learning and deep learning techniques. Notably, the surveys by Ray et al. [12] and Singh et al. [13] underscore, respectively, the pivotal role of transfer learning and the paradigm shift towards automatic feature extraction through deep neural networks in enhancing HAR systems. Additionally, the study by Gu et al. [14] fills a crucial gap in the literature by providing an in-depth analysis of contemporary deep learning methods applied to HAR. Collectively, these works not only delineate the current state of HAR but also highlight its challenges and future directions, thereby offering a comprehensive backdrop for understanding the progress and potential within this field.

The field of human activity recognition (HAR) has seen significant research, particularly in its applications to eldercare and healthcare within assistive technologies, as highlighted by the comprehensive surveys conducted by various researchers. The survey by Hussain et al. [15] spans research from 2010 to 2018, focusing on device-free HAR solutions, which eliminate the need for subjects to carry devices by utilizing environmental sensors instead. This innovative approach is categorized into action-based, motion-based, and interaction-based research, offering a unique perspective across all sub-areas of HAR. The authors present a detailed analysis using ten critical metrics and discuss future research directions, underlining the importance of device-free solutions in the evolution of HAR.

Jobanputra et al. [16] explore a variety of state-of-the-art HAR methods that collect data from images and from sensors, such as accelerometers and gyroscopes, placed in different settings. The paper examines the effectiveness of various machine learning and deep neural network techniques, such as decision trees, k-nearest neighbors, support vector machines, and more advanced models like convolutional and recurrent neural networks, in interpreting the data collected for HAR. By comparing these techniques and reviewing their performance across different datasets, the paper complements Hussain et al.’s [15] taxonomy and analysis with a deeper understanding of the methodologies employed in HAR and their practical implications, especially in healthcare and eldercare.

Further extending this line of work, Lara et al. [10] survey HAR using wearable sensors, emphasizing the role of pervasive computing in sectors such as the medical, security, entertainment, and tactical fields. This paper introduces a general HAR system architecture and proposes a two-level taxonomy based on the learning approach and response time. By qualitatively evaluating twenty-eight HAR systems on parameters such as recognition performance and energy consumption, this survey highlights the critical challenges and suggests future research areas. The focus on wearable sensors offers a different yet complementary perspective to the device-free approach discussed by Hussain et al. [15], showcasing the diversity of HAR research and its potential to revolutionize interactions between individuals and mobile devices.

The article by Ramamurthy et al. [17] investigates the effectiveness of machine learning in extracting and learning from activity datasets, tracing the transition from traditional algorithms that rely on hand-crafted features to deep learning algorithms that learn features hierarchically. The complexity of activity recognition (AR) in uncontrolled environments, exacerbated by the volatile nature of activity data, underscores the ongoing challenges in the field. The paper provides a broad overview of the machine learning and data mining techniques currently used in AR, identifies the challenges of existing systems, and points towards future research directions.

Building on the discussion of machine learning’s role in HAR, the survey by Dang et al. [18] offers a thorough review of HAR technologies that are crucial for the development of context-aware applications, especially in the Internet of Things (IoT) and healthcare sectors. The paper stands out by aiming for comprehensive coverage of HAR, categorizing methodologies into sensor-based and vision-based HAR and further dividing these groups into subcategories related to data collection, preprocessing, feature engineering, and training. The conclusion highlights the existing challenges and suggests directions for future research, adding depth to the ongoing conversation about HAR technologies.

Furthermore, the work by Vrigkas et al. [19] focuses on the classification of human activities from videos and images, acknowledging the complications introduced by background clutter, occlusions, and variations in appearance. This review is particularly pertinent to applications in surveillance, human–computer interaction, and robotics. The authors categorize activity classification methodologies and analyze their pros and cons, dividing them by the data modalities used and further into subcategories based on activity modeling and focus. The paper also assesses existing datasets for activity classification and discusses the criteria for an ideal dataset, concluding with future research directions in HAR. This review complements the broader discussions by Ramamurthy et al. [17] and Dang et al. [18] by providing specific insights into video- and image-based HAR, thereby enriching the understanding of the challenges and potential advancements in the field.

Ke et al. [11] offer an in-depth survey focusing on three pivotal aspects: the core technologies behind HAR, the various systems developed for recognizing human activities, and the wide array of applications these systems serve. It thoroughly explores the processing stages essential for HAR, such as human object segmentation, feature extraction, and the algorithms for activity detection and classification. The review then categorizes HAR systems by functionality, from recognizing the activities of individual persons to understanding interactions among multiple people and identifying abnormal behaviors in crowds. Special emphasis is placed on application domains, particularly the roles of HAR in surveillance, entertainment, and healthcare, thereby illustrating the breadth of HAR’s impact.

Saleem et al. [20] broaden the scope by presenting a comprehensive overview that encapsulates the multifaceted nature of HAR. This research introduces a detailed taxonomy that classifies HAR methods along several dimensions, including their operation mode (online/offline), data modality (multimodal/unimodal), feature type (handcrafted/learning-based), and more. By covering a wide range of application areas and methodological approaches, this study underscores the interdisciplinary nature of HAR, providing a comparative analysis of contemporary methods against various benchmarks such as activity complexity and recognition accuracy. This comparative lens not only deepens the understanding of state-of-the-art HAR techniques but also highlights the ongoing challenges and future avenues for research within the field.

Focusing on the computer vision aspect of HAR, Ref. [21] examines the specific challenges and advancements in human action recognition within the broader spectrum of vision-based methods. This research highlights the paradigm shift towards feature learning-based representations driven by the adoption of deep learning methodologies. It offers a thorough examination of the latest developments in HAR, which are relevant across diverse applications from augmented reality to surveillance. The paper delineates a taxonomy of techniques for feature acquisition, including those leveraging RGB and depth data, and discusses the interplay between cutting-edge deep learning approaches and traditional hand-crafted methods. Also within computer vision, Singh et al. [13] address human action identification from video, which is a cornerstone of applications ranging from healthcare to security. Their study provides a meticulous evaluation and comparison of action identification methods, offering insights into their effectiveness in terms of accuracy, classifier types, and datasets, thereby presenting a holistic view of the current advancements in activity detection.

Complementing these discussions, the survey by Gu et al. [14] focuses on the advancements and challenges in HAR, particularly through the lens of deep learning. This is especially pertinent given the rapid evolution of deep learning techniques and their significant potential for enhancing HAR systems. By offering a detailed review of contemporary deep learning methods applied in HAR, this study addresses a critical need for an in-depth exploration of the field, highlighting ongoing developments and future directions. The study by Ray et al. [12] emphasizes the role of transfer learning, which can enhance accuracy, reduce data collection and labeling efforts, and help models adapt to evolving data distributions.

The summarized overview provided in Table 1 encapsulates the breadth and depth of research in the domain of human activity recognition (HAR), revealing a diverse array of focuses, from device-free solutions to the nuanced application of deep learning techniques. Notably, several surveys, such as those concentrating on device-free, wearable device, and sensor-based HAR, not only dissect the current methodologies and systems but also rigorously compare various techniques, underscoring the field’s multifaceted nature. These comparisons offer valuable insights into the strengths and weaknesses of different approaches, guiding future research directions. Interestingly, while the majority of the surveys delve into comparative analyses, a select few, particularly those focusing on machine learning, video-based HAR, and deep learning applications, choose to emphasize the discussion of challenges, recent advances, and future directions without directly comparing techniques. This distinction might reflect the rapidly evolving landscape of HAR, where the emphasis is increasingly shifting towards understanding the underlying complexities and potential advancements rather than solely focusing on methodological comparisons. The recurring mention of future directions across all categories highlights a collective acknowledgment of the untapped potential and unresolved challenges within HAR. The emphasis on vision-based and video-based HAR underscores the growing importance of visual data in understanding human activities, which is a trend further amplified by the advent of deep learning and transfer learning. Specifically, the impact of transfer learning, as discussed in the surveys, signifies a transformative shift towards leveraging pre-existing knowledge, thereby enhancing efficiency and accuracy in HAR applications. This comprehensive table of surveys not only provides a snapshot of the current state of HAR research but also sets the stage for future explorations.

Table 1.

Summary of survey papers on human activity recognition.

The surveys highlight several key future challenges, including integrating data from various sensors to recognize complex behaviors beyond simple actions like walking [14,17,18]. They note the high cost and effort of gathering labeled data and the limitations of generative models such as autoencoders (AEs) and generative adversarial networks (GANs) in HAR [14,17]. Additionally, they emphasize the need to account for simultaneous and overlapping activities, which are more common in daily life than singular tasks [10,18,21].

3. Methodology

The field of HAR has witnessed rapid growth in published research, resulting in an immense corpus of literature. The recent emergence of large language models (LLMs) offers a promising avenue for processing such extensive corpora efficiently. In this section, we elucidate our methodology. We begin by outlining the sources of our papers, noting that our analysis, while primarily based on scraped data, is not limited to it. Subsequently, we describe the techniques employed to extract keywords from textual content and to answer specific queries. The section concludes with our approach to filtering and refining the selection of papers, ensuring that our review is both comprehensive and discerning. In this work, we exclude works published before 2020 and cover works up to 2023, the year in which this paper was written.

3.1. Data Sources

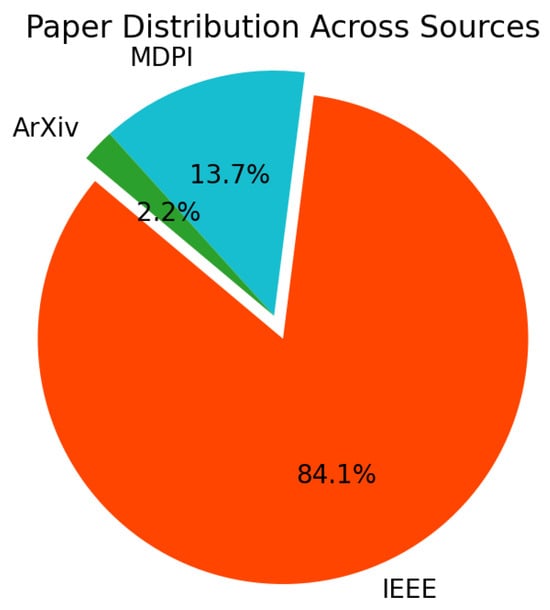

To amass a comprehensive dataset for our survey, we mined data from prominent academic research repositories: IEEE Xplore, from which we extracted 8005 papers; arXiv, contributing 271 papers; and MDPI, accounting for 1300 papers. All the data procured are publicly accessible. The attributes scraped for each paper, where available, are the paper title, its publication year, the number of citations, and, most notably, the abstract. The distribution of papers across sources is shown in the pie chart in Figure 2. Because an abstract summarizes a paper’s content before a full reading, our extraction efforts prioritized it. A succinct overview of the information available from each repository is given in Table 2, and the data collection procedure is presented in Figure 3.

Figure 2.

Pie chart representation of the number of papers scraped from our paper sources.

Table 2.

Available extracted information from the pool of academic research paper repositories.

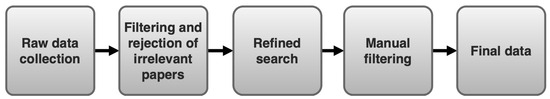

Figure 3.

Overview of the data collection, filtering, and compilation pipeline.

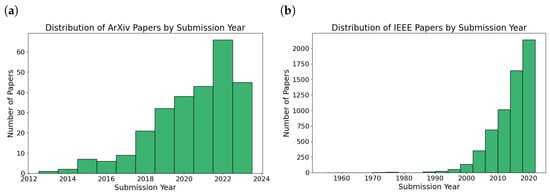

The analysis encompassed three main sources: IEEE Xplore, MDPI, and arXiv. The IEEE repository is the most extensive, comprising 8005 papers. The yearly distribution of IEEE publications (Figure 4b) shows consistent growth over the years, with citation counts ranging from zero to 2659, reflecting the varied impact of these works. MDPI contributes 1300 articles, though detailed yearly trends could not be derived due to data constraints. arXiv, the smallest source with 270 articles, offers additional insight through its submission-year trends (Figure 4a). Collectively, these three sources offer a comprehensive view of the academic landscape of the topics in question. The citation distribution is markedly right-skewed (Figure 4b), indicating that a large portion of the IEEE papers receive relatively few citations. This pattern is characteristic of academic publishing, where only a fraction of works achieve broad recognition and accrue high citation counts. The few papers with exceptionally high citation counts suggest the existence of seminal works that have profoundly influenced the field, underscoring the far-reaching implications of breakthrough research. The majority of papers, however, fall within a modest citation range, emphasizing the collective contribution of incremental research to the broader knowledge base.
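Whether a per-year citation distribution is right-skewed can be checked quickly by comparing its mean and median, the two statistics plotted in Figure 4b: a mean well above the median indicates a long right tail. The sketch below uses only the Python standard library; `citation_stats_by_year` is a hypothetical helper, the records are placeholders rather than our scraped IEEE data, and the mean-above-median check is a heuristic rather than a formal skewness measure.

```python
from collections import defaultdict
from statistics import mean, median


def citation_stats_by_year(papers):
    """Return {year: (mean, median)} of citation counts, skipping incomplete records."""
    by_year = defaultdict(list)
    for p in papers:
        if p.get("year") is not None and p.get("citations") is not None:
            by_year[p["year"]].append(p["citations"])
    return {year: (mean(c), median(c)) for year, c in sorted(by_year.items())}


# Hypothetical records; real values would come from the scraped IEEE Xplore metadata.
papers = [
    {"year": 2021, "citations": 0},
    {"year": 2021, "citations": 2},
    {"year": 2021, "citations": 3},
    {"year": 2021, "citations": 5},
    {"year": 2021, "citations": 90},    # one highly cited outlier
    {"year": 2022, "citations": 1},
    {"year": 2022, "citations": 1},
    {"year": 2022, "citations": 4},
    {"year": 2022, "citations": None},  # citation count unavailable
]

stats = citation_stats_by_year(papers)
for year, (avg, med) in stats.items():
    note = "right-skewed" if avg > med else "not right-skewed"
    print(year, avg, med, note)
```

A single outlier (90 citations) pulls the 2021 mean to 20 while the median stays at 3, which is the same effect that a few seminal papers have on the IEEE averages.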

Figure 4.

Statistics for the IEEE and arXiv paper sources. (a) Distribution of arXiv papers by submission year. (b) Average and median number of citations per year for IEEE papers.

3.2. Use of Natural Language Processing

In our methodology, we harnessed natural language processing (NLP) to make scientific abstracts easier to interpret and assess. Specifically, we employed the Voicelab/vlt5-base-keywords (https://huggingface.co/Voicelab/vlt5-base-keywords (accessed on 12 December 2023)) text-to-text transformer to extract salient keywords. Furthermore, to facilitate a deeper understanding of the content, we integrated a question answering transformer (https://huggingface.co/consciousAI/question-answering-roberta-base-s-v2 (accessed on 12 December 2023)). We constructed an input context by concatenating each paper’s title and abstract, and then posed a series of pertinent questions against this composite text to assess the abstract’s writing quality and information richness. Through this approach, we aimed to create an efficient tool for academic researchers to quickly ascertain the relevance and quality of a given scientific paper.
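The question answering step can be sketched as follows, assuming the Hugging Face `transformers` library is installed. `build_context`, `ask_all`, and `load_qa_model` are hypothetical helper names, and the entries of `QUESTIONS` are placeholders; the questions actually used are given in Appendix A. The QA model is passed in as a callable, so the helpers can be exercised with a stub, and the model is only downloaded when `load_qa_model` is called.

```python
from typing import Callable, Dict, List

QA_MODEL_ID = "consciousAI/question-answering-roberta-base-s-v2"

# Placeholder questions; see Appendix A for the ones actually used.
QUESTIONS: List[str] = [
    "What problem does the paper address?",
    "Does the paper propose a new method or a survey?",
    "Which sensors or modalities are used?",
]


def build_context(title: str, abstract: str) -> str:
    """Concatenate title and abstract into a single QA context string."""
    return f"{title.strip().rstrip('.')}. {abstract.strip()}"


def ask_all(qa: Callable[..., Dict], title: str, abstract: str,
            questions: List[str] = QUESTIONS) -> Dict[str, Dict]:
    """Pose every question against the title+abstract context."""
    context = build_context(title, abstract)
    return {q: qa(question=q, context=context) for q in questions}


def load_qa_model():
    """Load the extractive QA model (needs `transformers` and network access)."""
    from transformers import pipeline  # imported lazily on purpose
    return pipeline("question-answering", model=QA_MODEL_ID)
```

Typical usage would be `answers = ask_all(load_qa_model(), title, abstract)`; each value is the pipeline’s output dictionary, which contains the extracted `answer` span and a confidence `score` that can be thresholded when judging information richness.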

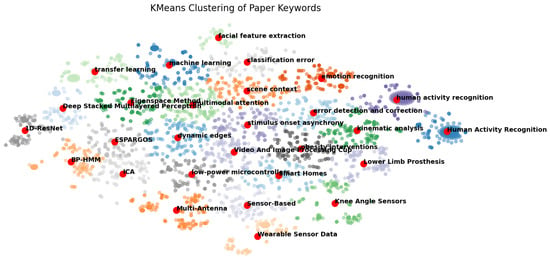

To further analyze our collected data, we investigate the keywords of our papers. Concretely, we extract keywords from the concatenation of each title and abstract and compute a vector embedding for each keyword. We then project the embeddings onto the 2D plane using the t-SNE method [22] and cluster them using K-Means clustering. A clear trend emerges in Figure 5, with the most dominant neighborhoods occupied by the keyword “Human Action Recognition”. We can also see that, during the years 2020–2023, deep learning and transfer learning are the predominant approaches to solving the problem of HAR. Other regions, such as those on the far left side of the plane, contain the names of proposed models. The most frequent keywords close to the centroid corresponding to “Sensor-Based” methods refer to accelerometers, IMUs, WiFi, and radars; hence, we choose to focus on those sensors. Our decision to also cover vision-based methods was influenced by the centroid corresponding to “Video and Image Processing”, as well as by the multiple occurrences of computer vision methods based on convolutional neural networks. Finally, it should be noted that K-Means clustering was applied after projecting the embeddings onto the 2D plane, not vice versa. We did this intentionally, so that the clusters form clearly distinct regions in the plot.
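The project-then-cluster procedure, including the choice of one representative keyword per cluster (the red dots in Figure 5), can be sketched with scikit-learn as follows. This is an illustration rather than our exact code: the embeddings are synthetic 256-dimensional vectors standing in for real keyword embeddings, the helper names are hypothetical, and the demo uses 3 clusters and a small perplexity instead of the 28 clusters used in our analysis.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.manifold import TSNE


def project_then_cluster(embeddings, n_clusters, perplexity=30.0, seed=0):
    """Reduce embeddings to 2D with t-SNE, then run K-Means on the 2D points."""
    coords = TSNE(n_components=2, perplexity=perplexity, init="pca",
                  random_state=seed).fit_transform(embeddings)
    km = KMeans(n_clusters=n_clusters, n_init=10, random_state=seed).fit(coords)
    return coords, km


def representative_keywords(keywords, coords, km):
    """For each cluster, return the keyword whose 2D point is closest to the centroid."""
    reps = {}
    for c, centre in enumerate(km.cluster_centers_):
        members = np.flatnonzero(km.labels_ == c)
        dists = np.linalg.norm(coords[members] - centre, axis=1)
        reps[c] = keywords[members[np.argmin(dists)]]
    return reps


# Synthetic demo: three well-separated groups of 20 "keywords" each (M = 256).
rng = np.random.default_rng(0)
groups = ["sensor", "video", "dl"]
centres = rng.normal(scale=5.0, size=(len(groups), 256))
embeddings = np.vstack([c + rng.normal(size=(20, 256)) for c in centres])
keywords = [f"{g}_{i}" for g in groups for i in range(20)]

coords, km = project_then_cluster(embeddings, n_clusters=3, perplexity=10.0)
print(representative_keywords(keywords, coords, km))
```

Because K-Means partitions the 2D plane into Voronoi cells, clustering after the projection makes every cluster a contiguous region of the plot; the trade-off is that t-SNE’s distortions of global distances also shape the clusters.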

Figure 5.

Projection onto the 2D plane of the keyword embeddings using the t-SNE method [23]. Each point corresponds to a keyword embedding reduced to 2D for visualization, and the different colors distinguish the clusters. The red dots mark, for each cluster, the keyword closest to its centroid.

We filter our papers using the aforementioned models by asking a set of template questions; we refer the reader to Appendix A for a detailed demonstration of the question answering module. The first filtering criterion is the publication date: we discard papers published before 2020. Afterwards, we prompt the LLM to extract keywords and compare them with the scraped keywords, where available. Once the keywords are extracted, we ask a series of questions about the paper itself, providing the LLM with the concatenation of the title and abstract as context. We deliberately use these two components as input, as we assume that the title and abstract contain enough information to infer whether the paper proposes a survey or a new methodology. These two steps proved useful for discarding the majority of irrelevant papers.
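The staged filter can be sketched as follows. `Paper`, `keyword_overlap`, `filter_papers`, and the label set `ACCEPTED` are hypothetical names introduced for illustration. The keyword extractor and the survey/method classifier are passed in as callables (in practice, the models described above), and Jaccard similarity is our assumption for how extracted and scraped keywords might be compared; the overlap is only recorded here, since no decision threshold is specified.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

ACCEPTED = {"survey", "method"}  # hypothetical labels returned by the classifier


@dataclass
class Paper:
    title: str
    abstract: str
    year: Optional[int]
    scraped_keywords: List[str] = field(default_factory=list)


def keyword_overlap(a: List[str], b: List[str]) -> float:
    """Case-insensitive Jaccard similarity between two keyword lists."""
    sa, sb = {k.lower() for k in a}, {k.lower() for k in b}
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def filter_papers(papers: List[Paper],
                  extract_keywords: Callable[[str], List[str]],
                  classify: Callable[[str], str],
                  min_year: int = 2020):
    """Return (paper, extracted keywords, overlap) for every paper that is kept."""
    kept = []
    for p in papers:
        # Stage 1: publication-date cutoff (papers without a year are dropped).
        if p.year is None or p.year < min_year:
            continue
        context = f"{p.title}. {p.abstract}"
        # Stage 2: model-extracted keywords, compared with scraped ones if present.
        kws = extract_keywords(context)
        overlap = keyword_overlap(kws, p.scraped_keywords) if p.scraped_keywords else None
        # Stage 3: ask whether the paper proposes a survey or a new method.
        if classify(context) in ACCEPTED:
            kept.append((p, kws, overlap))
    return kept
```

Ordering the stages from cheapest to most expensive means the model calls in stages 2 and 3 only run on papers that already pass the date cutoff.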

3.3. Data Compilation

In this subsection, we describe how we obtained the data for our research. An overview of the pipeline is presented in Figure 3. First, we queried the sources with the keywords “human activity recognition” and “human-robot interaction”, restricting the results to works published from 2020 to the present. This search yields a large volume of papers from the public sources listed in Table 2, most of which may be irrelevant for our purposes. As a first filtering stage, we apply the natural language processing method described in Section 3.2, which reduces the pool to about 500 articles. We then manually screened the abstracts of the remaining articles and discarded the irrelevant ones, keeping every article that introduces a methodology, a dataset, or a survey on HAR. After this step, we end up with 159 papers.

3.4. Taxonomy Method

In this subsection, we outline the various comparison metrics that have been employed to taxonomize the literature we have collected. Our focus is on providing a comprehensive classification of the various HAR methods, ensuring that they encapsulate the breadth and depth of our research. This approach is crucial for establishing a clear understanding of the criteria and standards we have used to categorize and analyze the collected works. The inclusion of these metrics serves not only to enhance the clarity and rigor of our taxonomy but also aids in offering a structured and methodical overview of the literature for our readers.

- Cost: Under this metric, we investigate the computational cost associated with training and deploying each method discussed in the literature. This metric is assessed based on the deep learning approach utilized in each method. Our evaluation takes into account several key factors: the memory required for training and deployment, the complexity of the inference process, and whether the authors use ensemble methods. By scrutinizing these elements, we aim to provide a nuanced understanding of the computational costs, offering insights into the practicality and efficiency of each method in real-world scenarios. This metric is of vital importance, because HAR methods are often deployed on memory- and compute-constrained embedded systems and must therefore be cost-effective.

- Approach: We focus on identifying the machine learning models adopted by the authors to address the problem at hand. Identifying the types of deep learning models used enables readers to discern the benefits and drawbacks inherent to each approach. Such an understanding is pivotal for researchers and practitioners alike, as it not only provides a clear picture of the current state of the field but also aids in identifying potential areas for future exploration and development. We aim to offer an overview that not only informs but also inspires readers to bridge gaps and contribute to the future evolution of the literature.

- Performance: In this segment of our analysis, we turn our attention to the performance of the various methods on the datasets they were validated on. We categorize performance into three distinct tiers: ‘low’ for scores below 75%, ‘medium’ for scores between 75% and 95%, and ‘high’ for scores exceeding 95%. However, these tiers are indicative rather than absolute, because different methods are often evaluated using different metrics, making direct comparisons challenging. Therefore, while these categories provide a helpful framework for initial assessment, they should be interpreted with an understanding of the varied and specific contexts in which each method is tested. Our intention is to offer a guide for gauging performance while acknowledging the complexities and nuances inherent in methodological evaluations.

- Datasets: This component of our taxonomy is essential, as it provides clear insight into the environments and conditions under which each method was tested and refined. By presenting this information, we aim to give readers a comprehensive understanding of the types of data each method is best suited for, as well as the potential limitations or biases inherent in these datasets.

- Supervision: Here, we report the type of supervision employed in training the methods we have examined. This aspect is pivotal, as the type of supervision significantly affects several facets of the development process, most notably the cost and effort associated with data labeling. Methods that utilize supervised learning often require large labeled datasets, which in turn necessitate extensive input from human annotators, thereby increasing costs. Conversely, methods based on unsupervised learning, while alleviating the need for labeled data, often struggle to maintain a consistent quality metric and can be more prone to collapse during training. By outlining the supervision techniques used, we aim to provide insights into the trade-offs inherent in each approach, offering a comprehensive perspective on how the choice of supervision influences not only a method’s development but also its potential applications and efficacy in real-world scenarios.
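The performance tiers defined above reduce to a simple thresholding rule. The sketch below assumes scores are reported in percent and, reading “between 75% and 95%” inclusively, assigns exactly 75% and exactly 95% to the ‘medium’ tier; `performance_tier` is a hypothetical helper name.

```python
def performance_tier(score_percent: float) -> str:
    """Map a reported score (in percent) to the survey's indicative tier."""
    if not 0.0 <= score_percent <= 100.0:
        raise ValueError("score must be a percentage in [0, 100]")
    if score_percent < 75.0:
        return "low"
    if score_percent <= 95.0:
        return "medium"
    return "high"


for s in (68.0, 75.0, 91.3, 95.0, 98.7):
    print(s, performance_tier(s))
```

Because the tier is computed from whatever metric a paper reports (accuracy, F1 score, and so on), two methods in the same tier are not necessarily directly comparable.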

3.5. Budget

In this subsection, we analyze the costs of conducting our experiments. Since most of the queries were carried out through API calls, the overall financial cost is worth reporting. Based on the pricing of OpenAI’s chat completion API (https://openai.com/pricing (accessed on 28 March 2024)), the experiments cost us approximately USD 8.5. The cost was dominated by chat completion (USD 30 per 10⁶ tokens), whereas the cost of embedding generation was nearly negligible (USD 0.02 per 10⁶ tokens). As far as the computational cost is concerned, the most critical aspect of our implementation is the clustering, whose space complexity depends on N, the number of samples in our available dataset; M, the embedding dimensionality, which for this use case was set to 256; and K, the number of clusters, which was set to 28.
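Since clustering dominates memory use, a minimal NumPy sketch of Lloyd’s k-means helps make the dependence on N, M, and K concrete. The clustering algorithm is not named in this subsection, so k-means is an assumption, as are the farthest-first initialization and the helper names `kmeans` and `farthest_first_init`. This sketch holds the N×M embedding matrix, the K×M centroids, and an N×K distance matrix, so its memory grows as O(NM + KM + NK).

```python
import numpy as np


def farthest_first_init(X, k, rng):
    """Hypothetical initializer: one random sample, then repeatedly the sample farthest from all chosen ones."""
    idx = [int(rng.integers(len(X)))]
    d2 = ((X - X[idx[0]]) ** 2).sum(axis=1)
    for _ in range(1, k):
        nxt = int(np.argmax(d2))
        idx.append(nxt)
        d2 = np.minimum(d2, ((X - X[nxt]) ** 2).sum(axis=1))
    return X[idx].astype(float)  # (K, M) copy of the chosen samples


def kmeans(X, k, n_iter=100, seed=0):
    """Lloyd's k-means. Holds X (N, M), centroids (K, M) and a distance matrix (N, K)."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    centroids = farthest_first_init(X, k, rng)
    x_sq = (X ** 2).sum(axis=1)[:, None]  # (N, 1) squared norms, computed once
    labels = None
    for _ in range(n_iter):
        # ||x - c||^2 = ||x||^2 - 2 x.c + ||c||^2 avoids materializing an (N, K, M) array.
        d2 = x_sq - 2.0 * X @ centroids.T + (centroids ** 2).sum(axis=1)[None, :]
        new_labels = d2.argmin(axis=1)
        if labels is not None and np.array_equal(new_labels, labels):
            break  # assignments are stable: converged
        labels = new_labels
        for j in range(k):
            members = X[labels == j]
            if len(members) > 0:  # an empty cluster keeps its previous centroid
                centroids[j] = members.mean(axis=0)
    return labels, centroids
```

With embeddings stored as an (N, 256) array, calling `kmeans(embeddings, 28)` would match the configuration described above (M = 256, K = 28).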

4. HAR Devices and Processing Algorithms

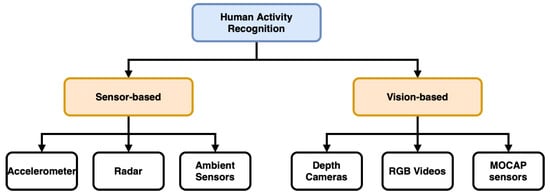

In human activity recognition (HAR), accurate and reliable recognition depends on the interplay between sophisticated algorithms and high-fidelity sensors. This section describes the main components of HAR in two subsections. The first covers the diverse sensors employed in HAR, explaining their operating principles and the types of data they capture, which are indispensable for discerning human actions and postures. The second covers the algorithms used to process the signals derived from these sensors, describing the computational techniques and algorithmic frameworks that decipher the patterns embedded in the sensor data and thereby enable the robust identification and classification of human activities. By examining both the sensor technologies and the algorithmic methodologies, this section aims to provide a comprehensive understanding of the technology that drives the field of human activity recognition forward. Figure 6 illustrates the two main categories of HAR methods that will be discussed in the rest of the paper.

Figure 6.

A general hierarchy of HAR methods. Typically, HAR is separated into sensor-based and vision-based approaches. Each of these two major categories is further divided according to the sensors it leverages.

4.1. Devices

While some HAR approaches can apply to all sensor modalities, many are specific to certain types. The modalities can be categorized into three groups: body-worn sensors, object sensors, and ambient sensors. Body-worn sensors, such as accelerometers, magnetometers, and gyroscopes, are commonly used in HAR. They capture human body movements and are found in devices like smartphones, watches, and helmets. Object sensors are placed on objects to detect their movement and infer human activities. For example, an accelerometer attached to a cup can detect the activity of drinking water. Radio frequency identification (RFID) tags are often used as object sensors in smart home and medical applications. Ambient sensors capture the interaction between humans and the environment and are embedded in smart environments. They include radar, sound sensors, pressure sensors, and temperature sensors. Ambient sensors are challenging to deploy and are influenced by the environment. They are used to recognize activities and hand gestures in settings like smart homes. Some HAR approaches combine different sensor types, such as combining acceleration with acoustic information or using a combination of body-worn, object, and ambient sensors in a smart home environment. These hybrid sensor approaches enable the capture of rich information about human activities.

4.1.1. Body-Worn Sensors

Body-worn sensors, such as accelerometers, magnetometers, and gyroscopes, are commonly used in HAR. These sensors are typically embedded in devices worn or carried by users, such as smartphones, watches, and helmets. They capture changes in acceleration and angular velocity caused by human body movements, enabling the inference of human activities. Body-worn sensors, particularly accelerometers, have been extensively employed in deep learning-based HAR research. Gyroscopes and magnetometers are also commonly used in combination with accelerometers [24,25]. These sensors are primarily utilized to recognize activities of daily living (ADL) and sports [26]. In these deep learning approaches, rather than hand-crafting statistical or frequency-based features from the movement data, the raw sensor signals are fed directly to the network.
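Feeding raw signals to a network usually means cutting the continuous stream into fixed-length windows first. A minimal sketch, assuming a (time, channels) array such as tri-axial accelerometer readings; the window length of 128 samples and the 50% overlap are illustrative choices, not values taken from the cited works, and `sliding_windows` is a hypothetical helper.

```python
import numpy as np


def sliding_windows(signal: np.ndarray, window: int = 128, step: int = 64) -> np.ndarray:
    """Segment a (T, C) raw sensor stream into (num_windows, C, window) network inputs.

    window=128 and step=64 (50% overlap) are illustrative defaults.
    """
    if signal.ndim != 2:
        raise ValueError("expected a (time, channels) array")
    T, C = signal.shape
    if T < window:
        return np.empty((0, C, window), dtype=signal.dtype)
    starts = range(0, T - window + 1, step)
    # Channels-first layout, as commonly expected by 1D convolution layers.
    return np.stack([signal[s:s + window].T for s in starts])
```

Each window is then labeled (for example, with the majority activity inside it) and passed to the network unchanged, with no hand-crafted features.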

4.1.2. Object Sensors

Object sensors are employed to detect the movement of specific objects, unlike body-worn sensors that capture human movements. These sensors enable the inference of human activities based on object movement [27,28]. For example, an accelerometer attached to a cup can detect the activity of drinking water [29]. Object sensors, typically radio frequency identification (RFID) tags, are commonly utilized in smart home environments and medical applications. RFID tags provide detailed information that facilitates complex activity recognition [30,31,32,33].

It is important to note that object sensors are less commonly used compared to body-worn sensors due to deployment challenges. However, there is a growing trend of combining object sensors with other sensor types to recognize higher-level activities.

4.1.3. Ambient Sensors

Ambient sensors are designed to capture the interactions between humans and their surrounding environment. These sensors are typically integrated into smart environments. Various types of ambient sensors are available, including radar, sound sensors, pressure sensors, and temperature sensors. Unlike object sensors that focus on measuring object movements, ambient sensors are used to monitor changes in the environment.

Several studies have utilized ambient sensors to recognize daily activities and hand gestures. Most of these studies were conducted in the context of smart home environments. Similar to object sensors, deploying ambient sensors can be challenging. Additionally, ambient sensors are susceptible to environmental influences, and only specific types of activities can be reliably inferred [34].

4.1.4. Hybrid Sensors

Some studies have explored the utilization of hybrid sensors in HAR by combining different types of sensors. For instance, research has shown that incorporating both acceleration and acoustic information can enhance the accuracy of HAR. Additionally, there are cases where ambient sensors are employed alongside object sensors, enabling the capture of both object movements and environmental conditions. An example is the development of a smart home environment called A-Wristocracy, where body-worn, object, and ambient sensors are utilized to recognize a wide range of intricate activities performed by multiple occupants. The combination of sensors demonstrates the potential to gather comprehensive information about human activities, which holds promise for future real-world smart home systems.

4.1.5. Vision Sensors

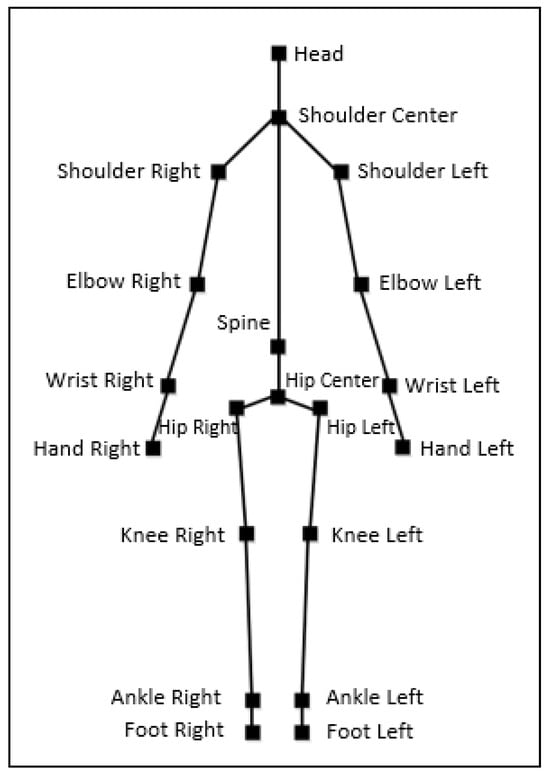

RGB data are widely used because of their high availability, their affordability, and the rich texture detail they provide of subjects [18]. Despite these advantages, RGB sensors have limitations, such as a restricted range and sensitivity to calibration issues, and their performance is heavily influenced by environmental conditions such as lighting and cluttered backgrounds. Vision-based HAR has attracted significant interest owing to its real-world applications, and research in this area can be categorized by the type of data used, such as RGB [35,36,37] and RGB-D data [38,39]. RGB-D data, which include depth information alongside traditional RGB data, provide additional information for more accurate activity recognition. Furthermore, skeleton data, a simplified yet effective representation of the human body, can be extracted from depth data. Because skeleton data occupy a lower-dimensional space, they enable HAR models to operate more efficiently and swiftly, which is essential for real-time applications such as surveillance. The most common sensor that can provide RGB, RGB-D, and skeleton information is the Kinect. An example of a dataset that provides both RGB and depth data is the Human4D dataset [40] (Figure 7). A common skeleton representation is illustrated in Figure 8; the pose is typically encoded as joint rotations in the form of either exponential maps or quaternions [41,42].
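The exponential-map and quaternion encodings mentioned above describe the same joint rotation, and converting between them is a routine preprocessing step. A sketch, assuming the (w, x, y, z) quaternion convention; the function names are hypothetical.

```python
import numpy as np


def expmap_to_quaternion(r, eps: float = 1e-8) -> np.ndarray:
    """Convert an exponential-map (axis-angle) rotation r to a unit quaternion (w, x, y, z).

    The rotation angle is |r| and the rotation axis is r / |r|.
    """
    r = np.asarray(r, dtype=float)
    theta = np.linalg.norm(r)
    if theta < eps:
        # Small-angle limit: sin(theta/2)/theta -> 1/2; renormalize to stay on the unit sphere.
        q = np.concatenate(([1.0], 0.5 * r))
        return q / np.linalg.norm(q)
    half = 0.5 * theta
    return np.concatenate(([np.cos(half)], np.sin(half) * r / theta))


def quaternion_to_expmap(q, eps: float = 1e-8) -> np.ndarray:
    """Inverse mapping, returning the representation with rotation angle in [0, pi]."""
    q = np.asarray(q, dtype=float)
    q = q / np.linalg.norm(q)
    if q[0] < 0:  # q and -q encode the same rotation
        q = -q
    w, v = q[0], q[1:]
    s = np.linalg.norm(v)
    if s < eps:
        return 2.0 * v  # small-angle limit
    theta = 2.0 * np.arctan2(s, w)
    return theta * v / s
```

A full skeleton pose is then simply one such rotation per joint, for example a (J, 3) array of exponential maps or a (J, 4) array of quaternions.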

Figure 7.

An example of an RGB-D image. The source RGB image appears to the left and its corresponding depth map to the right. Objects that appear closer have a darker red color while the furthest objects appear blue. A notable dataset that is available for HAR problems and provides both RGB and LiDAR scanned depth maps is the Human4D dataset [40].

Figure 8.

Example of a human body skeleton representation.

4.2. Algorithms

We outline the deep neural network (DNN)-based approaches employed in the literature for sensor-based human activity recognition. The most common methodologies include convolutional neural networks (CNNs), autoencoders, and recurrent neural networks (RNNs).

4.2.1. Convolutional Neural Networks

This subsection discusses the application of convolutional neural networks (CNNs) in human activity recognition (HAR) and highlights their advantages and the considerations involved. CNNs leverage sparse interactions, parameter sharing, and representations that are equivariant to translation. Convolutional layers are typically followed by pooling and fully connected layers for classification or regression tasks.

CNNs have proven effective in extracting features from signals and have achieved promising results in various domains such as image classification, speech recognition, and text analysis. When applied to time-series classification tasks like HAR, CNNs offer two advantages over other models: local dependency and scale invariance. Local dependency refers to the correlation between nearby signal samples in HAR, while scale invariance means the model can handle different paces or frequencies. Due to the effectiveness of CNNs, most of the surveyed work in HAR has focused on this area.

When applying CNNs to HAR, several aspects need to be considered: input adaptation, pooling, and weight-sharing.

4.2.2. Input Adaptation

HAR sensors typically produce time-series readings, such as acceleration signals, which are temporal multi-dimensional 1D readings. Input adaptation is necessary to transform these inputs into a format suitable for CNNs. There are two main types of adaptation approaches:

4.2.3. Data-Driven Approach

Each dimension is treated as a channel, and 1D convolution is performed on each channel. The outputs of all channels are then flattened and fed into unified deep neural network (DNN) layers. This approach treats the 1D sensor readings as a 1D image; examples include treating the accelerometer dimensions as separate RGB channels [43] and using shared weights in multi-sensor CNNs [44]. While simple, this approach ignores dependencies between dimensions and between sensors, which can degrade performance.
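The data-driven adaptation can be sketched with NumPy as follows. Assumptions: the input is a single (C, T) window, each channel has its own bank of F filters of width K, and, as in most deep learning frameworks, ‘convolution’ is implemented as cross-correlation. The helper names are hypothetical. Because no term mixes channels, the sketch also makes the stated weakness visible: dependencies between axes are never modeled before flattening.

```python
import numpy as np


def per_channel_conv1d(x: np.ndarray, kernels: np.ndarray) -> np.ndarray:
    """Convolve each sensor axis independently ('valid' cross-correlation).

    x: (C, T) window, e.g. C = 3 accelerometer axes.
    kernels: (C, F, K), i.e. F filters of width K for every channel.
    Returns (C, F, T - K + 1) feature maps.
    """
    C, T = x.shape
    Ck, F, K = kernels.shape
    if C != Ck:
        raise ValueError("need one filter bank per channel")
    windows = np.lib.stride_tricks.sliding_window_view(x, K, axis=1)  # (C, T-K+1, K)
    # Sum over the kernel width only; channels are never mixed.
    return np.einsum("ctk,cfk->cft", windows, kernels)


def data_driven_features(x: np.ndarray, kernels: np.ndarray) -> np.ndarray:
    """Apply ReLU, then flatten all channels into one vector for the shared dense layers."""
    maps = np.maximum(per_channel_conv1d(x, kernels), 0.0)
    return maps.reshape(-1)
```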

4.2.4. Model-Driven Approach

The inputs are reshaped into a virtual 2D image, allowing for 2D convolution. This approach requires non-trivial input tuning techniques and domain knowledge. Examples include combining all dimensions into a single image [45] or using complex algorithms to transform time series into images [46]. This approach considers the temporal correlation of sensors but requires additional effort to map time series to images.
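For contrast, a sketch of the model-driven variant under the simplest mapping: stacking the C axes as the rows of a C×T ‘virtual image’. This mapping is an assumption; [45] and [46] may construct their images differently. A kernel that spans several rows mixes neighboring axes, which is why the row ordering is part of the input tuning this approach requires. The helper names are hypothetical.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Single-filter 'valid' 2D cross-correlation."""
    windows = np.lib.stride_tricks.sliding_window_view(image, kernel.shape)  # (H-kh+1, W-kw+1, kh, kw)
    return np.einsum("ijkl,kl->ij", windows, kernel)


def model_driven_map(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Treat a (C, T) multi-axis window as a C x T image and apply 2D convolution plus ReLU.

    A kernel taller than one row combines adjacent sensor axes in a single response.
    """
    return np.maximum(conv2d_valid(x, kernel), 0.0)
```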

4.2.5. Weight-Sharing

Weight-sharing is an efficient method to speed up the training process on new tasks. Different weight-sharing techniques have been explored, including relaxed partial weight-sharing [43] and CNN-pf and CNN-pff structures [47]. Partial weight-sharing has shown improvements in CNN performance.

In summary, this subsection provides an overview of the key concepts and considerations when applying CNNs to HAR, including input adaptation, pooling, and weight-sharing techniques.

4.2.6. Recurrent Neural Networks

The RNN is a popular neural network architecture that leverages temporal correlations between neurons and is commonly used in speech recognition and natural language processing. In HAR, RNNs are often combined with long short-term memory (LSTM) cells, which act as memory units whose gating mitigates vanishing gradients and thereby allows long-term dependencies to be learned with gradient descent.
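To make the memory-cell mechanism concrete, the sketch below implements one step of a standard LSTM cell in NumPy and runs it over a sequence of sensor samples. The stacked gate order [input, forget, candidate, output] and the function names are assumptions; frameworks differ in layout. The additive update of the cell state `c` is what lets gradients propagate across many time steps.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    x: (D,) input; h_prev, c_prev: (H,) previous hidden and cell states.
    W: (4H, D), U: (4H, H), b: (4H,), gates stacked as [input, forget, candidate, output].
    """
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:H])          # input gate
    f = sigmoid(z[H:2 * H])      # forget gate
    g = np.tanh(z[2 * H:3 * H])  # candidate cell update
    o = sigmoid(z[3 * H:4 * H])  # output gate
    c = f * c_prev + i * g       # additive memory update
    h = o * np.tanh(c)
    return h, c


def run_lstm(xs, W, U, b):
    """Run over a (T, D) sequence, e.g. a window of 3-axis accelerometer samples; return the final h."""
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    for x in xs:
        h, c = lstm_step(x, h, c, W, U, b)
    return h
```

In a HAR classifier, the final hidden state would typically be passed to a dense softmax layer over activity classes.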

There have been relatively few works that utilize RNNs for HAR tasks. In these works [48,49,50,51], the main concerns are the learning speed and resource consumption in HAR. One study [51] focused on investigating various model parameters and proposed a model that achieves high throughput for HAR. Another study [48] introduced a binarized-BLSTM-RNN model, where the weight parameters, inputs, and outputs of all hidden layers are binary values. The main focus of RNN-based HAR models is to address resource-constrained environments while still achieving good performance.
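Binarized models such as the one in [48] replace multiply-accumulate operations with bitwise logic. The sketch below illustrates the general XNOR/popcount idea for a dot product of {−1, +1} vectors, assuming deterministic sign binarization; it is not the binarized-BLSTM-RNN itself, and the helper names are hypothetical.

```python
import numpy as np


def binarize(x: np.ndarray) -> np.ndarray:
    """Deterministic sign binarization to {-1, +1} (zero maps to +1)."""
    return np.where(x >= 0, 1, -1).astype(np.int8)


def pack_bits(b: np.ndarray) -> int:
    """Encode a {-1, +1} vector as an integer bitmask (+1 -> bit set)."""
    return sum(1 << i for i, v in enumerate(b) if v > 0)


def xnor_popcount_dot(a_bits: int, w_bits: int, n: int) -> int:
    """Dot product of two length-n {-1, +1} vectors computed from their bitmasks.

    Matching bits contribute +1 and mismatching bits -1, so
    dot = n - 2 * popcount(a XOR w), i.e. 2 * popcount(XNOR) - n.
    """
    mismatches = bin((a_bits ^ w_bits) & ((1 << n) - 1)).count("1")
    return n - 2 * mismatches
```

On hardware, the packed words are processed with native XOR and popcount instructions, which is where the speed and memory savings for resource-constrained devices come from.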

In summary, this subsection highlights the limited use of RNNs in HAR and emphasizes the importance of addressing learning speed and resource consumption in HAR applications. It mentions specific studies that have explored different approaches, including optimizing model parameters and introducing resource-efficient models like the binarized-BLSTM-RNN model.

5. Sensor-Based HAR

Human activity recognition (HAR) has advanced substantially with the incorporation of body-worn sensors, enabling ubiquitous and continuous monitoring. Accelerometer and inertial measurement unit (IMU) sensors serve as foundational elements, capturing motion data from which activities ranging from simple gestures to complex movements can be deduced. In parallel, WiFi sensors have made HAR possible even without direct contact with the human body by leveraging ambient wireless signals that reflect off the body. Radar sensors further expand the spectrum, providing richer data with the capability to penetrate obstacles and discern minute motions, such as breathing patterns. However, HAR reaches its full potential through sensor fusion, where the complementary strengths of these sensors are combined, offering enhanced accuracy, resilience to environmental noise, and the ability to operate in diverse scenarios. This section examines the applications of these sensors, both in isolation and in combination, and their impact on the HAR landscape. In the following subsections, we present HAR applications that leverage accelerometer and IMU signals, WiFi, and radar imaging, as well as methods that combine these modalities. The findings of this section are summarized in Table 3, Table 4, Table 5, and Table 6 for accelerometer-based, WiFi-based, radar-based, and multi-modal methods, respectively.

Table 3.

Accelerometer and IMU sensor-based methods.

Table 4.

WiFi sensor-based methods.

Table 5.

Classification of radar-based methods.

Table 6.

Classification of methods that leverage a fusion of various modalities.

5.1. Accelerometer and IMU Modalities

A contribution to accelerometer-based HAR is presented in [3], where the authors introduce an adaptive HAR model that employs a two-stage learning process. This model utilizes data recorded from a waist-mounted accelerometer and gyroscope sensor to first distinguish between static and moving activities using a random forest classifier. Subsequent classification of static activities is performed by a Support Vector Machine (SVM), while moving activities are identified with the aid of a 1D convolutional neural network (CNN). Building on the idea of utilizing deep learning for HAR, the work in [4] proposes an ensemble deep learning approach that integrates data from sensor nodes located at various body sites, including the waist, chest, leg, and arm. The implementation involves training three distinct deep learning networks on a publicly available dataset, encompassing data from eight human actions, demonstrating the potential of ensemble methods in improving HAR accuracy. Furthermore, the research in [5] introduces a multi-modal deep convolutional neural network designed to leverage accelerometer data from multiple body positions for activity recognition. The study in [52] explores the application of a one-dimensional (1D) CNN model for HAR, utilizing tri-axis accelerometer data collected from a smartwatch. This research focuses on distinguishing between complex activities such as studying, playing games, and mobile scrolling.
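The two-stage scheme described for [3] amounts to routing each window through a coarse gate before a stage-specific classifier. The sketch below shows only that routing logic, under stated assumptions: the class and function names are hypothetical, and the variance-threshold gate is a simple stand-in for the random forest in [3], not the authors’ model. The trained SVM and 1D CNN would be plugged in as the two stage-2 callables.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

import numpy as np

Window = np.ndarray  # (T, C) raw sensor window


@dataclass
class TwoStageHAR:
    """Route a window through a coarse static/moving gate, then a stage-specific classifier."""
    is_moving: Callable[[Window], bool]   # stage 1 (a random forest in [3])
    static_clf: Callable[[Window], str]   # stage 2a for static activities (an SVM in [3])
    moving_clf: Callable[[Window], str]   # stage 2b for moving activities (a 1D CNN in [3])

    def predict(self, window: Window) -> str:
        if self.is_moving(window):
            return self.moving_clf(window)
        return self.static_clf(window)

    def predict_batch(self, windows: Sequence[Window]) -> list:
        return [self.predict(w) for w in windows]


def variance_gate(window: Window, threshold: float = 0.05) -> bool:
    """Hypothetical stand-in gate: 'moving' if the acceleration magnitude varies noticeably."""
    magnitude = np.linalg.norm(window, axis=1)
    return float(magnitude.var()) > threshold
```

The design choice this illustrates is specialization: each second-stage model only has to separate activities within one coarse group, which is typically an easier problem than separating all classes at once.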

Another development in this area is presented in [6], where the authors introduce a convolved self-attention neural network model optimized for gait detection and HAR tasks. Further addressing the challenge of capturing spatial and temporal relationships in time-series data from wearable devices, the work in [53] explores a transformer-based deep learning architecture. Recognizing the limitations of traditional AI algorithms, where convolutional models focus on local features and recurrent networks overlook spatial aspects, the authors propose leveraging the transformer model’s self-attention mechanism. In another approach, Ref. [54] employs bidirectional long short-term memory (LSTM) networks for data generation using the WISDM dataset, a publicly available tri-axial accelerometer dataset. The study assesses the similarity between generated and original data and explores the impact of synthetic data on classifier performance. Addressing the variability in human subjects’ physical attributes, which often leads to inconsistent model performance, the authors in [55] propose a physique-based HAR approach that leverages raw data from smartphone accelerometers and gyroscopes. Additionally, the study in [56] introduces an architecture called “ConvAE-LSTM”, which combines the strengths of convolutional neural networks (CNNs), autoencoders (AEs), and long short-term memory (LSTM) networks. In parallel, the challenge of capturing salient activity features across varying sensor modalities and time intervals, which stems from the fixed nature of traditional convolutional filters, is addressed in [8]. This research presents a deformable convolutional network designed to enhance human activity recognition from complex sensory data. Ref. [57] contributes to the HAR field by proposing a hierarchical framework named HierHAR, which focuses on distinguishing between similar activities. The resulting hierarchical structure serves as the basis for a tree-based activity recognition model, complemented by a graph-based model to address potential compounding errors during the prediction process.
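The self-attention mechanism referred to above can be sketched in a few lines. This is single-head scaled dot-product attention over a (T, D) window of sensor time steps, assuming randomly chosen projection matrices in place of learned ones; it is not the architecture of [53]. Unlike a convolution kernel, every time step can attend to every other one.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X: np.ndarray, Wq: np.ndarray, Wk: np.ndarray, Wv: np.ndarray):
    """Single-head scaled dot-product self-attention over a (T, D) sequence.

    Returns the (T, d) attended features and the (T, T) attention weights.
    """
    Q, K, V = X @ Wq, X @ Wk, X @ Wv          # (T, d) each
    scores = Q @ K.T / np.sqrt(K.shape[1])    # (T, T) pairwise similarities
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ V, weights
```

Without positional encodings, the output is permutation-equivariant over time steps, so practical transformer models add them to preserve temporal order.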

In [58], Teng et al. introduce a novel approach by implementing a CNN-based architecture trained with a local loss. The adoption of a local loss-based CNN offers more refined feature-extraction capabilities and enhanced learning efficacy for activity recognition. Additionally, Ref. [59] explores the use of Generative Adversarial Networks (GANs) combined with temporal convolutions in a semi-supervised learning framework for action recognition. This approach is particularly designed to tackle common challenges in HAR, such as the scarcity of annotated samples. Ref. [60] presents the BiGRUResNet model, which combines LSTM-CNN architectures with deep residual learning for improved HAR accuracy and a reduced parameter count.

In [61], the authors propose XAI-BayesHAR, an integrated Bayesian framework that leverages a Kalman filter to recursively track the feature embedding vector and its associated uncertainty. This uncertainty estimate is particularly valuable in practical deployment scenarios where predictive uncertainty must be understood and quantified. Furthermore, the framework can function as an out-of-distribution (OOD) detector, adding practical utility by identifying inputs that deviate significantly from the training data distribution. In contrast to traditional wrist-worn devices, Yen, Liao, and Huang [86] explore a waist-worn wearable device designed to accurately monitor six fundamental daily activities, catering in particular to patients with medical constraints such as artificial blood vessels in their arms. The hardware and software components of this wearable, which include an ensemble of inertial sensors and an activity recognition algorithm based on a CNN model, highlight the importance of tailored hardware and algorithmic approaches for enhancing HAR accuracy in specialized healthcare applications. Furthermore, Ref. [87] examines the use of smartphones and IoT devices for HAR. The work in [62] addresses the challenge of balancing HAR efficiency against the inference-time costs of deep convolutional networks, which is especially pertinent in resource-constrained environments.
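As an illustration of recursive tracking with uncertainty, the sketch below runs an independent scalar Kalman filter per embedding dimension. It assumes random-walk dynamics, diagonal covariances, and hypothetical noise levels `q` and `r`; it is a generic filter, not the XAI-BayesHAR model of [61]. The `innovation_score` method is likewise a generic heuristic showing how a tracked mean and variance can flag unusual inputs.

```python
import numpy as np


class DiagonalKalmanTracker:
    """Track a D-dimensional feature embedding under a random-walk model.

    State model:       z_t = z_{t-1} + w_t,  w_t ~ N(0, q I)
    Observation model: e_t = z_t + v_t,      v_t ~ N(0, r I)
    With diagonal covariances, each dimension is an independent scalar filter.
    """

    def __init__(self, dim: int, q: float = 1e-3, r: float = 1e-1, p0: float = 1.0):
        self.mean = np.zeros(dim)
        self.var = np.full(dim, p0)  # per-dimension posterior variance (the uncertainty)
        self.q, self.r = q, r

    def update(self, embedding: np.ndarray):
        # Predict: the random walk keeps the mean and inflates the variance.
        prior_var = self.var + self.q
        # Correct: blend prediction and observation using the Kalman gain.
        gain = prior_var / (prior_var + self.r)
        self.mean = self.mean + gain * (embedding - self.mean)
        self.var = (1.0 - gain) * prior_var
        return self.mean.copy(), self.var.copy()

    def innovation_score(self, embedding: np.ndarray) -> float:
        """Squared normalized innovation; large values flag inputs far from the tracked state."""
        prior_var = self.var + self.q
        return float((((embedding - self.mean) ** 2) / (prior_var + self.r)).sum())
```

Thresholding the innovation score is one simple way to turn such a tracker into an outlier flag; the threshold itself would have to be calibrated on in-distribution data.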

The authors of [63] introduce a wearable prototype that combines an accelerometer with a launchpad device. This device is specifically designed to recognize and classify activities such as walking, running, and stationary states. The classification algorithm analyzes statistical features of the accelerometer data, applies Linear Discriminant Analysis (LDA) for dimensionality reduction, and employs a support vector machine (SVM) for the final activity classification. Building on the integration of advanced neural network architectures for HAR, Ref. [64] presents a system that combines convolutional neural networks (CNNs) with Gated Recurrent Units (GRUs). In this hybrid approach, the CNN layers extract features and the GRU layers then capture temporal dependencies. In response to the constraints of resource-limited hardware, Ref. [65] introduces a HAR system based on a partly binarized Hybrid Neural Network (HNN). This system is optimized for real-time activity recognition using data from a single tri-axial accelerometer, distinguishing among five key human activities. In addition, Tang et al. [66] propose a CNN architecture that incorporates a hierarchical-split (HS) module, designed to enhance multiscale feature representation by capturing a broad range of receptive fields within a single feature layer.

Finally, in a similar direction, Ref. [67] explores cost-effective hardware, leveraging a gyroscope and an accelerometer to feed data into a deep neural network architecture. Their model, DS-MLP, combines affordability with sophistication, aiming to make HAR more accessible and reliable.

5.2. Methods Leveraging WiFi Signals

The work presented in [68] introduces WiHARAN, a robust WiFi-based activity recognition system designed to perform effectively in various settings. The key to WiHARAN’s success lies in its ability to learn environment-independent features from Channel State Information (CSI) traces, using a base network that extracts temporal information from spectrograms. This is complemented by adversarial learning techniques that align the feature and label distributions across different environments, ensuring consistent performance despite changes in operating conditions. Complementing this, the approach detailed in [69] explores a device-free methodology that uses WiFi Received Signal Strength Indication (RSSI) to recognize human activities in indoor spaces. By employing machine learning algorithms and collecting RSSI data from multiple access points and mobile phones, the system achieves high accuracy, particularly in the 5 GHz frequency band. Further expanding the scope of WiFi-based activity recognition, Ref. [70] presents a comprehensive framework that integrates data collection and machine learning models for the simultaneous recognition of human orientation and activity. In a different vein, Ref. [71] focuses on limb movement and its impact on WiFi signal propagation. The research identifies the challenges posed by variability in how activities are performed and by individual-specific signal reflections, both of which complicate recognition. To overcome these hurdles, the authors propose a system that leverages diverse CSI transformation methods and deep learning models tailored to small datasets. The work by Ding et al. [72] applies a straightforward convolutional neural network (CNN) architecture, augmented with transfer learning techniques, that requires minimal or even no prior training. This approach not only demonstrates the effectiveness of transfer learning in overcoming the limitations of small training sets but also sets new benchmarks in location-independent, device-free human activity recognition.

Amidst these technological advancements, user privacy emerges as a pivotal consideration, particularly in HAR applications and, by extension, Assisted Daily Living (ADL). As the quality of service of IoT devices improves, users increasingly face a trade-off between benefiting from enhanced services and risking the exposure of sensitive personal information, such as banking details, workout routines, and medical records. The participants of the study conducted in [88] take a nuanced stance on this trade-off: they appear willing to share sensitive information about their health and daily habits as long as the IoT services are tailored to their needs and deliver better performance. Nonetheless, their willingness to share personal data is conditional on specific circumstances that safeguard their privacy while enabling the benefits of technology-enhanced living.

Wireless sensing technologies have advanced considerably, particularly in indoor localization and human activity recognition (HAR), driven by the ability of wireless signals to reflect human motion. This is illustrated by the works in [73,74], each of which presents a distinct approach to leveraging Channel State Information (CSI) for enhanced HAR and localization. In [73], the focus is on the dynamic nature of wireless signals and their interactions with human activities. The authors observe that the scattering of wireless signals, as captured in the CSI, varies with different human motions, such as walking or standing, and they exploit these variations using the U-Net architecture, a specialized convolutional neural network (CNN). Ref. [74] integrates wireless sensing within the Internet of Things (IoT) ecosystem, emphasizing its importance for both HAR and precise location estimation. The authors in [74] introduce a hardware design that simplifies CSI acquisition and enables the simultaneous execution of HAR and localization tasks using Siamese networks. This integrated approach is particularly advantageous in smart home environments, where it can facilitate gesture-based device control from specific locations without compromising user privacy, as it removes the need for wearable sensors or cameras.

5.3. Radar Signal HAR

To address challenges related to the high dimensionality of Frequency Modulated Continuous Wave (FMCW) radar images, slow feature extraction, and complex recognition algorithms, Ref. [75] presents a two-dimensional feature extraction method for FMCW radar. The approach first employs two-dimensional principal component analysis (2DPCA) to reduce the dimensionality of the radar Doppler–Time Map (DTM) and then performs recognition with a k-nearest neighbor (KNN) classifier. Ref. [76] explores the application of three self-attention models, specifically Point Transformer models, to the classification of Activities of Daily Living (ADL). The experimental dataset, collected at TU Delft, serves as the foundation for investigating the optimal combination of input features, assessing the impact of the proposed Adaptive Clutter Cancellation (ACC) method, and evaluating the model’s robustness in a leave-one-subject-out scenario. In [77], a Bayesian Split Bidirectional recurrent neural network for human activity recognition is introduced to offset the computational cost of deep neural networks. The proposed technique harnesses the computational capabilities of an off-premise device to quantify uncertainty, distinguishing between epistemic uncertainty (due to a lack of training data) and aleatoric uncertainty (inherent noise in the observations). The method uses radar signals to predict human activities.
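To illustrate the 2DPCA + KNN pipeline, the sketch below treats each Doppler–Time Map as an h×w matrix, computes the image scatter matrix directly from the 2D maps (the defining feature of 2DPCA, as opposed to vectorizing them), and classifies the projected features by nearest-neighbor vote. The number of components `d`, the value of `k`, and the helper names are assumptions rather than settings from [75].

```python
import numpy as np


def fit_2dpca(images: np.ndarray, d: int):
    """2DPCA on a stack of (N, h, w) maps; returns the mean map and a (w, d) projection matrix."""
    mean = images.mean(axis=0)
    centered = images - mean
    # Image scatter matrix G = (1/N) sum_i (A_i - mean)^T (A_i - mean), shape (w, w).
    G = np.einsum("nhi,nhj->ij", centered, centered) / len(images)
    _, eigvecs = np.linalg.eigh(G)    # eigenvalues come back in ascending order
    return mean, eigvecs[:, ::-1][:, :d]  # keep the top-d eigenvectors


def project(images: np.ndarray, mean: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Y_i = (A_i - mean) X, giving (N, h, d) feature matrices.

    Subtracting the mean shifts all features equally, so distances are unaffected.
    """
    return (images - mean) @ X


def knn_predict(train_feats, train_labels, query_feats, k: int = 3) -> np.ndarray:
    """k-nearest-neighbor majority vote using the Frobenius distance between feature matrices."""
    diffs = query_feats[:, None] - train_feats[None]   # (Q, N, h, d)
    dists = np.sqrt((diffs ** 2).sum(axis=(2, 3)))     # (Q, N)
    nearest = np.argsort(dists, axis=1)[:, :k]
    preds = []
    for row in nearest:
        labels, counts = np.unique(train_labels[row], return_counts=True)
        preds.append(labels[np.argmax(counts)])
    return np.array(preds)
```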

5.4. Various Modalities and Modality Fusion

One approach that leverages multiple modalities for HAR is the Data Efficient Separable Transformer (DeSepTr) framework introduced in [78]. This framework leverages transformer neural networks [89,90], specifically a Vision Transformer (ViT), trained on spectrograms generated from data collected by wearable sensors. In response to the limitations of model-based hidden Markov models, the switching Gaussian mixture model-based hidden Markov model (S-GMMHMM) is introduced in [82]. The S-GMMHMM uses a supervised algorithm for accurate parameter estimation and a real-time recognition algorithm that computes activity posteriors recursively. Ref. [83] uses the Enveloped Power Spectrum (EPS) to isolate impulse components from signals and then applies Linear Discriminant Analysis (LDA) to reduce the feature dimensionality and extract discriminative features. The extracted features are then used to train a multiclass support vector machine (MCSVM). Building upon the need for efficient data handling and feature extraction in HAR, Ref. [84] introduces the HAP-DNN model, which addresses the challenges posed by the large volumes of sensor data and the multichannel signals typical of HAR tasks. Finally, in response to the complexities of recognizing human activities in varied real-world settings, Ref. [85] presents a method that combines Frequency Modulated Continuous Wave (FMCW) radar data with image data, taking advantage of the complementary strengths of these modalities to boost recognition accuracy. This approach is further enhanced by domain adaptation techniques that address inconsistencies in the data caused by environmental variations and differing user behaviors.
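The recursive posterior computation mentioned for [82] follows the general pattern of HMM forward filtering. The sketch below is a plain discrete-state HMM filter, assuming the per-activity emission likelihoods are already available (for example, from Gaussian mixture models); it omits the switching structure of the S-GMMHMM, and the function name is hypothetical.

```python
import numpy as np


def forward_filter(likelihoods: np.ndarray, transition: np.ndarray, prior: np.ndarray) -> np.ndarray:
    """Recursive (online) activity posteriors p(s_t | o_1..o_t) for a discrete-state HMM.

    likelihoods: (T, S) emission likelihoods p(o_t | s_t = j).
    transition:  (S, S) with transition[i, j] = p(s_t = j | s_{t-1} = i).
    prior:       (S,) initial state distribution.
    """
    T, S = likelihoods.shape
    posteriors = np.empty((T, S))
    belief = np.asarray(prior, dtype=float)
    for t in range(T):
        predicted = belief if t == 0 else belief @ transition  # time update
        unnorm = predicted * likelihoods[t]                     # measurement update
        belief = unnorm / unnorm.sum()                          # normalize to avoid underflow
        posteriors[t] = belief
    return posteriors
```

Because each step uses only the previous belief and the current likelihoods, the posterior can be updated as each new sensor frame arrives, which is what makes this kind of recursion suitable for real-time recognition.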

6. Vision-Based HAR

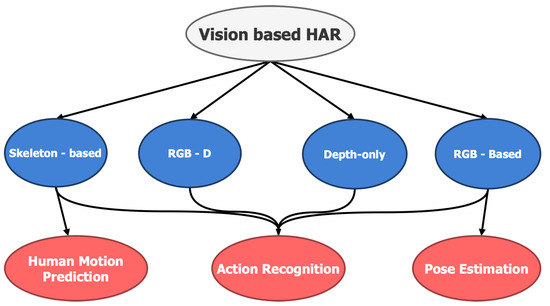

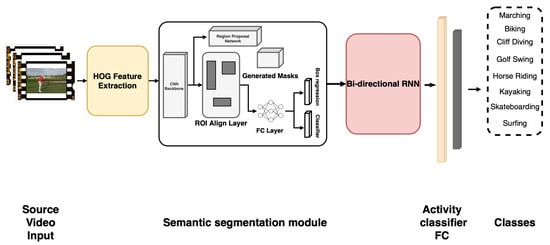

Human activity recognition (HAR) is a pivotal area of research in computer vision, aiming to identify and categorize human actions from a series of observations. The data feeding these models come primarily from visual sources such as videos or image sequences captured by devices such as surveillance cameras, smartphones, or dedicated recording equipment. Traditional machine learning algorithms, which provided the foundation for HAR, have in recent years been complemented and even superseded by more advanced architectures. In particular, convolutional neural networks (CNNs) have proven adept at extracting spatial hierarchies and patterns from visual data [91,92,93], while Vision Transformers [90,94] divide the input image into fixed-size patches and linearly embed them for attention-based processing. Furthermore, to capture the temporal dependencies inherent in video sequences, researchers often combine CNNs with recurrent neural networks (RNNs) [95]. This combination exploits the spatial recognition capabilities of CNNs and the sequence modeling of RNNs, covering both the spatial and temporal aspects of human activities. As technology advances, the fusion of classical and modern algorithms holds promise for more accurate, real-time HAR applications. The typical vision-based approaches are illustrated in Figure 9. Many approaches build on more primitive tasks, such as image segmentation [96], to extract semantic information about the scene and infer the ongoing activity [97], as shown in Figure 10. We summarize our findings in Table 7.
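The patch-embedding step of Vision Transformers can be sketched as follows, assuming an (H, W, C) image whose sides are divisible by the patch size. The projection matrix and position embeddings are placeholders for learned parameters, the class token used by many ViT variants is omitted, and the helper names are hypothetical.

```python
import numpy as np


def patchify(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) image into non-overlapping patch x patch tiles, each flattened.

    Returns (num_patches, patch * patch * C), with patches in row-major order.
    """
    H, W, C = image.shape
    if H % patch or W % patch:
        raise ValueError("image sides must be divisible by the patch size")
    tiles = image.reshape(H // patch, patch, W // patch, patch, C)
    tiles = tiles.transpose(0, 2, 1, 3, 4)  # (rows, cols, patch, patch, C)
    return tiles.reshape(-1, patch * patch * C)


def embed_patches(patches: np.ndarray, W_embed: np.ndarray, pos: np.ndarray) -> np.ndarray:
    """Linear projection of each flattened patch plus a (learned) position embedding."""
    return patches @ W_embed + pos
```

The resulting (num_patches, embed_dim) sequence is what the transformer’s self-attention layers then operate on.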

Figure 9.

Modalities typically used in vision-based HAR methods. Owing to the wide range of HAR applications, vision-based methods can be further categorized into sub-tasks, most notably human motion prediction, action recognition, and pose estimation.

Figure 10.

The framework proposed in [97]. First, the video is split into a sequence of RGB frames, which are subsequently fed into an instance segmentation module, typically Mask R-CNN [96]. The per-frame semantic representations extracted by the segmentation module are then processed by an RNN, whose output is passed to a fully connected layer that infers the activity class.
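The recurrent stage of this pipeline can be illustrated with a minimal forward pass. The sketch assumes a vanilla tanh (Elman) RNN, since the caption does not specify the recurrent cell, and uses hypothetical sizes and random, untrained weights; the per-frame vectors stand in for whatever semantic representation the segmentation stage of [97] produces. The final hidden state passes through a fully connected layer and a softmax to yield class probabilities.

```python
import math
import random

def rnn_classify(frames, W_xh, W_hh, b_h, W_out, b_out):
    """Run a vanilla tanh RNN over per-frame feature vectors and classify the last state.
    frames: list of T feature vectors; returns a probability per activity class."""
    hidden = [0.0] * len(b_h)                      # h_0 = 0
    for x in frames:
        # h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h); `hidden` on the right is still h_{t-1}
        hidden = [math.tanh(sum(w * xi for w, xi in zip(W_xh[k], x))
                            + sum(w * hj for w, hj in zip(W_hh[k], hidden))
                            + b_h[k])
                  for k in range(len(b_h))]
    # Fully connected layer on the final hidden state, then a numerically stable softmax.
    logits = [sum(w * h for w, h in zip(row, hidden)) + b for row, b in zip(W_out, b_out)]
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def random_matrix(rows, cols, scale=0.1):
    """Hypothetical untrained weights drawn from N(0, scale^2)."""
    return [[random.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]

# Hypothetical sizes: 10 frames, 32-dim per-frame descriptors, 16 hidden units, 4 classes.
random.seed(0)
T, F, H, C = 10, 32, 16, 4
frames = [[random.random() for _ in range(F)] for _ in range(T)]
probs = rnn_classify(frames, random_matrix(H, F), random_matrix(H, H), [0.0] * H,
                     random_matrix(C, H), [0.0] * C)
predicted_class = max(range(C), key=lambda c: probs[c])
```

In practice the weights would be learned end to end, and a gated cell such as an LSTM is a common substitute for the plain tanh recurrence.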

Table 7.

Classification of vision-based methods.

HAMLET [98] uses a hierarchical architecture that encodes spatio-temporal features from unimodal data, relying on a multi-head self-attention mechanism to capture detailed temporal and spatial dynamics. In a similar vein, the SDL-Net model introduced in [99] combines convolutional neural networks (CNNs) and recurrent neural networks (RNNs) to address HAR challenges. The model employs Part Affinity Fields (PAFs) to construct skeletal representations and skeletal gait energy images, which help capture the subtle sequential patterns of human movement. Popescu et al. [100] combine information from multiple channels within a 3D video framework, including RGB, depth data, skeletal information, and contextual objects. Each data stream is processed by an independent 2D CNN, and the outputs are integrated through fusion mechanisms, yielding a comprehensive representation of the video content. Ref. [101] proposes an approach that combines Deep Belief Networks (DBNs) with a DBN-based Recursive Genetic Micro-Aggregation Approach (DBN-RGMAA) to preserve anonymity in HAR. The Hybrid Deep Fuzzy Hashing Algorithm (HDFHA) further extends this approach by capturing complex dependencies between actions. The CNN-LSTM framework introduced in [103] integrates CNNs with long short-term memory networks (LSTMs) to capture the spatio-temporal dependencies of human activities. Ref. [104] introduces a hybrid approach that couples the temporal learning capabilities of LSTM networks with Particle Swarm Optimization (PSO), allowing both the temporal and spatial dimensions of video data to be analyzed.
The Maximum Entropy Markov Model (MEMM) employed in [105] treats sensor observations as inputs to the model and uses a customized Viterbi algorithm to infer the most likely sequence of activities.
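The customized Viterbi algorithm of [105] is not detailed here, but the standard MEMM decoding it builds on can be sketched as below. An MEMM models P(s_t | s_{t-1}, o_t) directly with a maximum-entropy (softmax) classifier for each previous state, and Viterbi search then finds the highest-scoring activity sequence. The feature map [1, o], the two activities, the extra start state, the function names, and all weights are hypothetical choices for illustration; decoding is done in log space to avoid underflow.

```python
import math

def memm_log_probs(weights, prev_state, obs):
    """Log of P(s_t | s_{t-1} = prev_state, o_t = obs) from a softmax (maximum-entropy) model.
    weights[prev_state][s] is the weight vector for target state s; obs is a feature vector."""
    scores = [sum(w * f for w, f in zip(w_s, obs)) for w_s in weights[prev_state]]
    top = max(scores)
    log_z = top + math.log(sum(math.exp(v - top) for v in scores))  # log partition function
    return [v - log_z for v in scores]

def memm_viterbi(weights, start, observations):
    """Most likely state sequence under an MEMM; `start` indexes an extra initial-state row."""
    n = len(weights[start])                            # number of real activity states
    delta = memm_log_probs(weights, start, observations[0])
    backpointers = []
    for obs in observations[1:]:
        # Conditional log-probabilities for every previous state given this observation.
        logp = [memm_log_probs(weights, prev, obs) for prev in range(n)]
        new_delta, pointers = [], []
        for s in range(n):
            best_prev = max(range(n), key=lambda p: delta[p] + logp[p][s])
            pointers.append(best_prev)
            new_delta.append(delta[best_prev] + logp[best_prev][s])
        delta = new_delta
        backpointers.append(pointers)
    # Trace back from the best final state.
    state = max(range(n), key=lambda s: delta[s])
    path = [state]
    for pointers in reversed(backpointers):
        state = pointers[state]
        path.append(state)
    return path[::-1]

# Hypothetical model: activity 0 favors low readings, activity 1 favors high readings,
# with a +1 bias for staying in the same activity. Row 2 is the start state.
weights = [
    [[1.0, -2.0], [0.0, 2.0]],   # previous activity 0
    [[0.0, -2.0], [1.0, 2.0]],   # previous activity 1
    [[0.0, -2.0], [0.0, 2.0]],   # start state
]
readings = [-1.0, -1.0, 1.0, 1.0]
observations = [[1.0, r] for r in readings]          # feature map f(o) = [1, o]
print(memm_viterbi(weights, start=2, observations=observations))  # → [0, 0, 1, 1]
```

Unlike an HMM, the per-step distributions here are conditioned on the observation, so arbitrary, overlapping sensor features can be added to f(o) without changing the decoder.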

7. Datasets

7.1. Sensor-Based Datasets

Ref. [106] offers an exhaustive survey of datasets for human activity recognition (HAR) collected with installed sensors. These datasets are pivotal for researchers developing efficient machine learning models for activity recognition; the survey excludes datasets based on RGB or RGB-D video. The datasets span a broad spectrum of sensor-based activities. First, the UCI Machine Learning Repository hosts datasets covering a variety of domains, including activities of daily living, fall classification, and smartphone-based activity recognition. Another significant contributor is the Pervasive System Research Group at the University of Twente, which offers datasets on smoking activity, complex human activities, and physical activity recognition. The Human Activity Sensing Consortium (HASC) provides large-scale databases, such as HASC2010corpus and HASC2012corpus, for monitoring human behavior with wearable sensors. Medical activities are also represented, for example by the Daphnet Freezing of Gait (FoG) dataset, which monitors Parkinson’s disease patients, and the Nursing Activity dataset, which records nursing activities in hospitals. For physical and sports activities, the Body Attack Fitness and Swimmaster datasets provide insights into workout routines and swimming techniques, respectively. The rise of smart homes has led to datasets of household activities, such as MIT PlaceLab and CMU-MMAC, which use IoT to track daily household activities. Lastly, given the ubiquity of smart devices, the survey also covers datasets on device usage, capturing user behaviors and patterns while interacting with these devices.

The UCI Machine Learning Repository offers a diverse collection of datasets for HAR research. The HHAR dataset [107], for instance, contains data from nine subjects performing six activities, with 16 attributes and about 44 million instances. The UCIBWS dataset [108], collected from 14 subjects, covers four activities with nine attributes across 75,000 instances. The AReM dataset [109] comes from a single subject and covers six activities with six attributes; its instance count is not specified. The HAR [110] and HAPT [111] datasets, both collected from 30 subjects, cover 6 and 12 activities, respectively, and each contains 10,000 instances. The Single Chest [112] and OPPORTUNITY [113] datasets offer a broader range of activities: the former captures 7 activities from 15 subjects and the latter 35 activities from 4 subjects. The ADLs [114] and REALDISP [115] datasets focus on daily activities, with the latter offering an extensive 33 activities and attributes from 17 subjects. The UIFWA [112] and PAMAP2 [81] datasets, from 22 and 9 subjects, respectively, offer varied attributes; the activity count of the former is not specified. The DSA and Wrist ADL datasets [116], both focusing on daily activities, provide data from 8 and 16 subjects, respectively, and were proposed in the same publication. The RSS dataset [117] does not specify its subject count and covers two activities with four attributes. Lastly, the MHEALTH [118], WISDM [119], and WESAD [120] datasets, collected from 10, 51, and 15 subjects, respectively, cover various activities, with WISDM detailing 18 activities across 15 million instances. Collectively, these UCI datasets form a rich resource for researchers aiming to advance HAR.
Table 8 summarizes the attributes of all these datasets along with the measurement sensors used to collect the samples.

Table 8.

Summary of datasets from the UCI Machine Learning Repository for human activity recognition (HAR) research. The table provides details on the number of subjects, activities, instances, measurement sensors and the respective sources for each dataset.

7.2. Vision-Based Datasets

For vision-based human activity recognition (HAR), several benchmark datasets have been established to evaluate and validate algorithmic approaches and learning methods. At the action level, the KTH Human Action Dataset, developed by the Royal Institute of Technology of Sweden in 2004, offers 2391 sequences spanning six human actions [121]. At the behavior level, the VISOR Dataset from the University of Modena and Reggio Emilia includes 130 video sequences for human action and activity recognition [122]. Additionally, the Caviar Dataset (2004) provides videos capturing nine activities in varied settings, while the Multi-Camera Action Dataset (MCAD) [123] from the National University of Singapore covers 18 daily actions recorded from five camera viewpoints. At the interaction level, the MSR Daily Activity 3D Dataset by Microsoft Research Redmond [124] comprises 320 sequences across channels such as depth maps, skeleton joint positions, and RGB video, capturing 10 subjects performing 16 activities. Other notable datasets include UCF50 [125], with 50 action categories collected from YouTube; the HMDB-51 Dataset from Brown University [126], with 6849 clips across 51 action categories; and the Hollywood Dataset by INRIA [127], featuring actions from 32 movies. We group the benchmark datasets into four major categories: (i) action-level, (ii) behavioral-level, (iii) interaction-level, and (iv) group activity-level datasets. Table 9 summarizes the fundamental properties of all the datasets described below.

Table 9.

Taxonomy of the vision-based datasets based on their interaction properties.

7.2.1. Action-Level Datasets

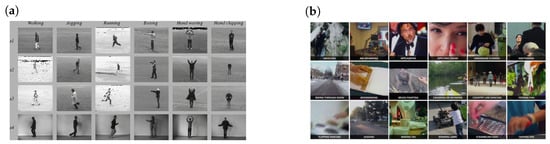

Among action-level datasets, the KTH Human Action Dataset, developed by the Royal Institute of Technology of Sweden in 2004, consists of 2391 sequences across six action classes, such as walking, jogging, and boxing, recorded against consistent backgrounds with a stationary camera. In contrast, the Weizmann Human Action Dataset, introduced by the Weizmann Institute of Science in 2005, comprises 90 sequences of nine individuals performing 10 distinct actions, including jumping, skipping, and hand waving. The Stanford 40 Actions dataset, curated by the Stanford Vision Lab, contains 9532 images from 40 action classes. The IXMAS dataset, established in 2006, offers a multi-view perspective on action recognition, capturing 11 actors performing 13 everyday actions with five cameras placed at different angles. Lastly, the MSR Action 3D dataset, created by Wanqing Li from Microsoft Research Redmond, includes 567 depth map sequences in which 10 subjects perform 20 types of actions, captured with a Kinect device. Sample frames from some characteristic datasets are shown in Figure 11.

Figure 11.

Comparison of activity snapshots from two renowned datasets, KTH [121] and Kinetics [137]. While both datasets provide a visual array of human actions, they vary in complexity and scene context. (a) Snapshots from the KTH dataset, showcasing various human activities such as walking, jogging, running, boxing, hand waving, and hand clapping. (b) A collection of snapshots from the Kinetics dataset, depicting diverse activities ranging from air drumming and applauding to making tea and mowing the lawn.

7.2.2. Behavioral-Level Datasets

Among the behavioral-level datasets, the VISOR dataset, introduced by the Imagelab Laboratory of the University of Modena and Reggio Emilia in 2005, includes a diverse range of videos organized by type. One significant category, dedicated to human action recognition in video surveillance, contains 130 video sequences. The Caviar dataset, created in 2004, consists of two sets: one captured with a wide-angle lens in the lobby of the INRIA Labs in Grenoble, France, and the other filmed in a shopping center in Lisbon. It captures individuals performing nine activities across these two contrasting settings. Lastly, the Multi-Camera Action Dataset (MCAD), developed by the National University of Singapore, addresses the open-view classification challenge in surveillance contexts. It comprises recordings of 18 daily actions, with action classes drawn from other datasets such as KTH and IXMAS, captured by five cameras and performed by 20 subjects. Each subject performs each action eight times, split evenly between daytime and evening sessions.

7.2.3. Interaction-Level Datasets

The interaction-level category of vision-based HAR includes a large number of datasets. Among these, the MSR Daily Activity 3D Dataset from Microsoft Research Redmond offers 320 sequences across channels including depth maps, skeleton joint positions, and RGB video, capturing 10 subjects performing 16 activities in both standing and sitting positions. The 50 Salads dataset from the University of Dundee records 25 individuals preparing salads, in videos totaling 4 h. The MuHAVI dataset by Kingston University focuses on silhouette-based human action recognition, with videos captured from eight different angles. The University of Central Florida has contributed two significant datasets: UCF50, which extends the UCF11 dataset to 50 action categories, and the UCF Sports Action Dataset, which offers 150 sequences from televised sports. The ETISEO dataset by INRIA targets the improvement of video surveillance techniques across varied settings. The Olympic Sports Dataset by the Stanford Vision Lab comprises 50 videos of athletes from 16 sports disciplines. The UT-Interaction dataset from the University of Texas, which originated in a research competition, contains 20 video sequences of continuous human–human interactions performed by participants in diverse attire. Lastly, the UT-Tower dataset, also from the University of Texas, contains 108 video sequences recorded in two settings: a concrete square and a lawn.

7.2.4. Group Activity-Level Datasets