Towards CRISP-ML(Q): A Machine Learning Process Model with Quality Assurance Methodology

Abstract

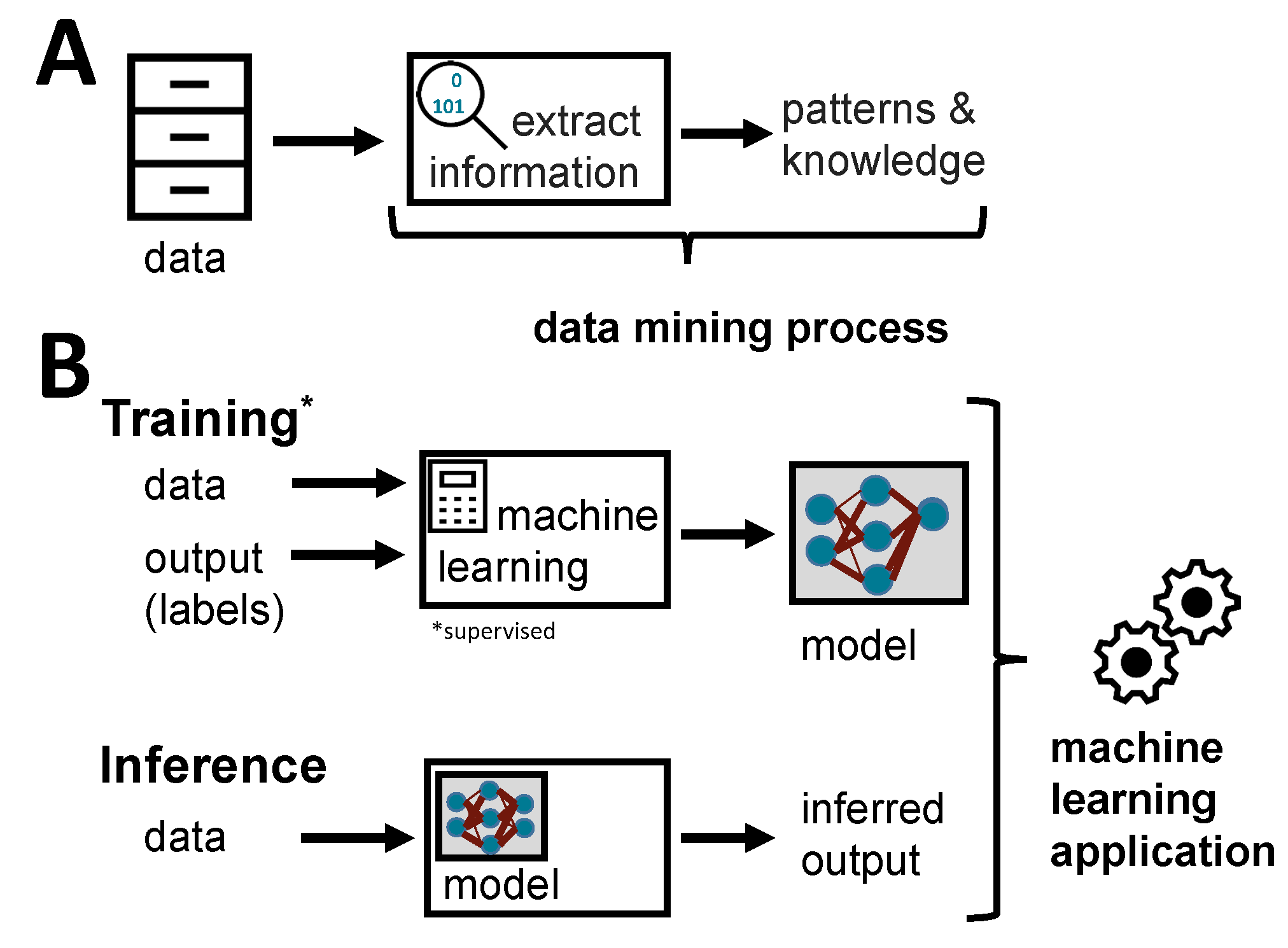

1. Introduction

2. Related Work

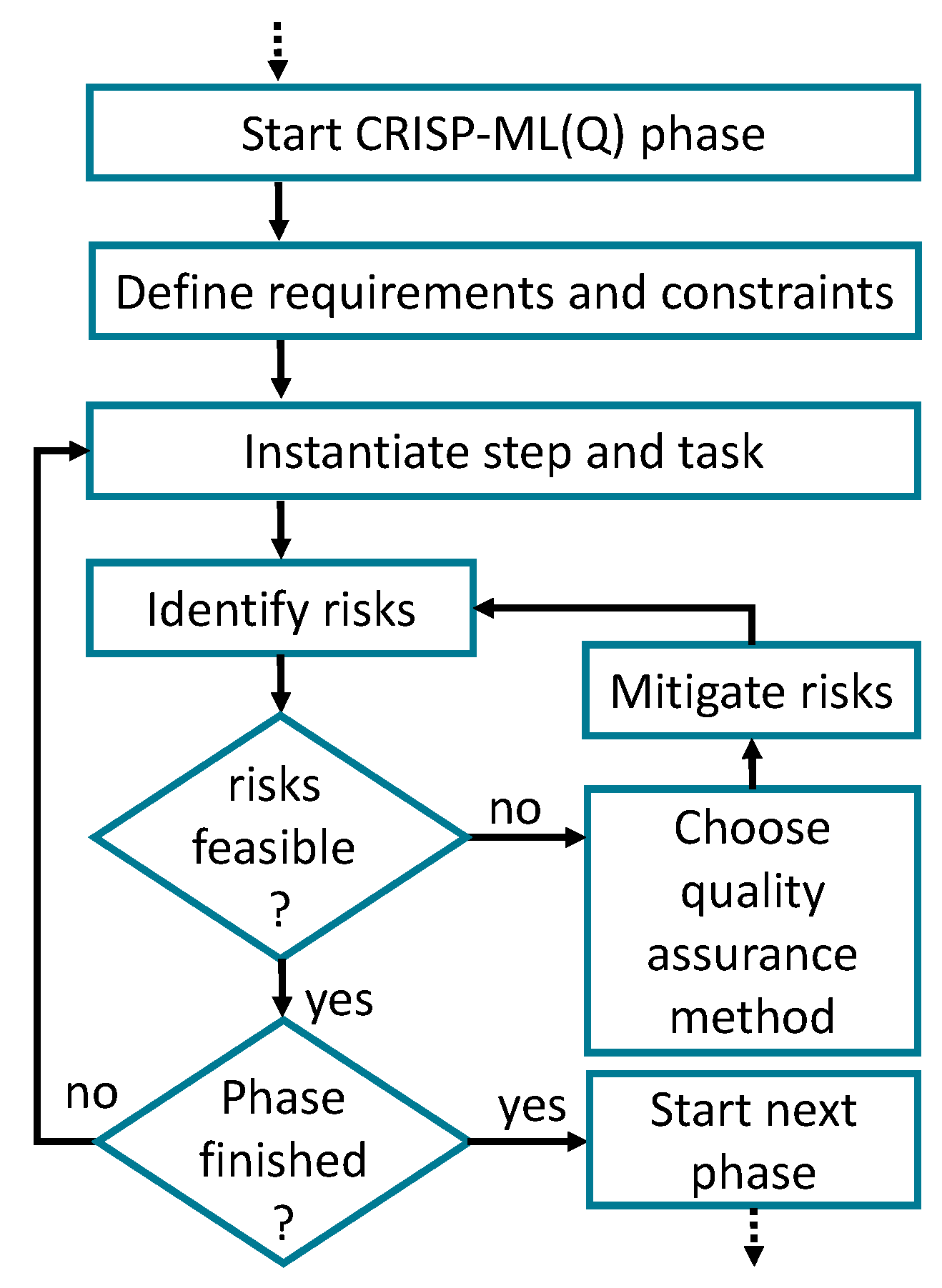

3. Quality Assurance in Machine Learning Projects

3.1. Business and Data Understanding

3.1.1. Define the Scope of the ML Application

3.1.2. Success Criteria

3.1.3. Feasibility

3.1.4. Data Collection

3.1.5. Data Quality Verification

3.1.6. Review of Output Documents

3.2. Data Preparation

3.2.1. Select Data

3.2.2. Clean Data

3.2.3. Construct Data

3.2.4. Standardize Data

3.3. Modeling

Assure Reproducibility

3.4. Evaluation

3.5. Deployment

3.6. Monitoring and Maintenance

- Non-stationary data distribution: Data distributions change over time and result in a stale training set and, thus, the characteristics of the data distribution are represented incorrectly by the training data. Either a shift in the features and/or in the labels are possible. This degrades the performance of the model over time. The frequency of the changes depends on the domain. Data of the stock market are very volatile, whereas the visual properties of elephants will not change much over the next few years.

- Degradation of hardware: The hardware that the model is deployed on and the sensor hardware will age over time. Wear parts in a system will age and friction characteristics of the system might change. Sensors get noisier or fail over time. This will shift the domain of the system and has to be adapted by the model or by retraining it.

- System updates: Updates on the software or hardware of the system can cause a shift in the environment. For example, the units of a signal got changed during an update. Without notifications, the model would use this scaled input to infer false predictions.

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Lee, J.; Bagheri, B.; Kao, H.A. A cyber-physical systems architecture for industry 4.0-based manufacturing systems. Manuf. Lett. 2015, 3, 18–23. [Google Scholar] [CrossRef]

- Brettel, M.; Friederichsen, N.; Keller, M.; Rosenberg, M. How virtualization, decentralization and network building change the manufacturing landscape: An Industry 4.0 Perspective. Int. J. Mech. Ind. Sci. Eng. 2014, 8, 37–44. [Google Scholar]

- Dikmen, M.; Burns, C.M. Autonomous driving in the real world: Experiences with Tesla autopilot and summon. In Proceedings of the 8th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Ann Arbor, MI, USA, 24–26 October 2016; pp. 225–228. [Google Scholar]

- Kourou, K.; Exarchos, T.P.; Exarchos, K.P.; Karamouzis, M.V.; Fotiadis, D.I. Machine learning applications in cancer prognosis and prediction. Comput. Struct. Biotechnol. J. 2015, 13, 8–17. [Google Scholar] [CrossRef] [PubMed]

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115. [Google Scholar] [CrossRef]

- Andrews, W.; Hare, J. Survey Analysis: AI and ML Development Strategies, Motivators and Adoption Challenges; Gartner: Stamford, CT, USA, 2019. [Google Scholar]

- Nimdzi Insights. Artificial Intelligence: Localization Winners, Losers, Heroes, Spectators, and You. Available online: https://www.nimdzi.com/wp-content/uploads/2019/06/Nimdzi-AI-whitepaper.pdf (accessed on 21 April 2021).

- Fischer, L.; Ehrlinger, L.; Geist, V.; Ramler, R.; Sobiezky, F.; Zellinger, W.; Brunner, D.; Kumar, M.; Moser, B. AI System Engineering—Key Challenges and Lessons Learned. Mach. Learn. Knowl. Extr. 2021, 3, 56–83. [Google Scholar] [CrossRef]

- Hamada, K.; Ishikawa, F.; Masuda, S.; Matsuya, M.; Ujita, Y. Guidelines for quality assurance of machine learning-based artificial intelligence. In Proceedings of the SEKE2020: The 32nd International Conference on Software Engineering& Knowledge Engineering, virtual, USA, 9–19 July 2020; pp. 335–341. [Google Scholar]

- Chapman, P.; Clinton, J.; Kerber, R.; Khabaza, T.; Reinartz, T.; Shearer, C.; Wirth, R. CRISP-DM 1.0 Step-by-Step Data Mining Guide. Available online: https://www.kde.cs.uni-kassel.de/wp-content/uploads/lehre/ws2012-13/kdd/files/CRISPWP-0800.pdf (accessed on 21 April 2021).

- Wirth, R.; Hipp, J. CRISP-DM: Towards a standard process model for data mining. In Proceedings of the Fourth International Conference on the Practical Application of Knowledge Discovery and Data Mining, Manchester, UK, 11–13 April 2000; pp. 29–39. [Google Scholar]

- Shearer, C. The CRISP-DM Model: The New Blueprint for Data Mining. J. Data Warehous. 2000, 5, 13–22. [Google Scholar]

- Kurgan, L.; Musilek, P. A survey of Knowledge Discovery and Data Mining process models. Knowl. Eng. Rev. 2006, 21, 1–24. [Google Scholar] [CrossRef]

- Mariscal, G.; Marbán, O.; Fernández, C. A survey of data mining and knowledge discovery process models and methodologies. Knowl. Eng. Rev. 2010, 25, 137–166. [Google Scholar] [CrossRef]

- Kriegel, H.P.; Borgwardt, K.M.; Kröger, P.; Pryakhin, A.; Schubert, M.; Zimek, A. Future trends in data mining. Data Min. Knowl. Discov. 2007, 15, 87–97. [Google Scholar] [CrossRef]

- de Abajo, N.; Diez, A.B.; Lobato, V.; Cuesta, S.R. ANN Quality Diagnostic Models for Packaging Manufacturing: An Industrial Data Mining Case Study. In Proceedings of the Tenth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Seattle, WA, USA, 22–25 August 2004; pp. 799–804. [Google Scholar]

- Gersten, W.; Wirth, R.; Arndt, D. Predictive modeling in automotive direct marketing: Tools, experiences and open issues. In Proceedings of the Sixth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Boston, MA, USA, 20–23 August 2000; pp. 398–406. [Google Scholar]

- Hipp, J.; Lindner, G. Analysing Warranty Claims of Automobiles; An Application Description following the CRISP-DM Data Mining Process. In Proceedings of the Fifth International Computer Science Conference, Hong Kong, China, 13–15 December 1999; pp. 31–40. [Google Scholar]

- IEEE. Std 1074-1997, IEEE Standard for Developing Software Life Cycle Processes; Technical Report; IEEE: New York, NY, USA, 1997. [Google Scholar]

- Marbán, O.; Segovia, J.; Menasalvas, E.; Fernández-Baizán, C. Toward data mining engineering: A software engineering approach. Inf. Syst. 2009, 34, 87–107. [Google Scholar] [CrossRef]

- SAS. SEMMA Data Mining Methodology; Technical Report; SAS Institute: Cary, NC, USA, 2016. [Google Scholar]

- IEEE. Standard for Software Quality Assurance Plans; IEEE Std 730-1998; IEEE: New York, NY, USA, 1998; pp. 1–24. [Google Scholar] [CrossRef]

- de Normalisation, Comite Europeen. EN ISO 9001:2015 Quality Management Systems-Requirements; Technical Report; ISO: Geneva, Switzerland, 2015. [Google Scholar]

- ISO/IEC JTC 1/SC 42 Artificial intelligence. ISO/IEC TR 24029: Artificial Intelligence (AI) — Assessment of the Robustness of Neural Networks; Technical Report; ISO/IEC: Geneva, Switzerland, 2021. [Google Scholar]

- Holzinger, A.; Kieseberg, P.; Weippl, E.; Tjoa, A.M. Current Advances, Trends and Challenges of Machine Learning and Knowledge Extraction: From Machine Learning to Explainable AI. In Machine Learning and Knowledge Extraction; Holzinger, A., Kieseberg, P., Tjoa, A.M., Weippl, E., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 1–8. [Google Scholar]

- Hazelwood, K.; Bird, S.; Brooks, D.; Chintala, S.; Diril, U.; Dzhulgakov, D.; Fawzy, M.; Jia, B.; Jia, Y.; Kalro, A.; et al. Applied machine learning at facebook: A datacenter infrastructure perspective. In Proceedings of the 2018 IEEE International Symposium on High Performance Computer Architecture (HPCA), Vienna, Austria, 24–28 February 2018; pp. 620–629. [Google Scholar]

- Breck, E.; Polyzotis, N.; Roy, S.; Whang, S.E.; Zinkevich, M. Data infrastructure for machine learning. In Proceedings of the SysML Conference, Stanford, CA, USA, 15–16 February 2018. [Google Scholar]

- Catley, C.; Smith, K.P.; McGregor, C.; Tracy, M. Extending CRISP-DM to incorporate temporal data mining of multidimensional medical data streams: A neonatal intensive care unit case study. In Proceedings of the 22nd IEEE International Symposium on Computer-Based Medical Systems, Sao Carlos, SP, Brazil, 22–25 June 2009; pp. 1–5. [Google Scholar]

- Heath, J.; McGregor, C. CRISP-DM0: A method to extend CRISP-DM to support null hypothesis driven confirmatory data mining. In Proceedings of the 1st Advances in Health Informatics Conference, Kitchener, ON, Canada, 28–30 April 2010; pp. 96–101. [Google Scholar]

- Venter, J.; de Waal, A.; Willers, C. Specializing CRISP-DM for evidence mining. In Proceedings of the IFIP International Conference on Digital Forensics, Orlando, FL, USA, 30 January–1 February 2007; pp. 303–315. [Google Scholar]

- Niaksu, O. CRISP Data Mining Methodology Extension for Medical Domain. Balt. J. Mod. Comput. 2015, 3, 92–109. [Google Scholar]

- Huber, S.; Wiemer, H.; Schneider, D.; Ihlenfeldt, S. DMME: Data mining methodology for engineering applications—A holistic extension to the CRISP-DM model. In Proceedings of the 12th CIRP Conference on Intelligent Computation in Manufacturing Engineering, Gulf of Naples, Italy, 18–20 July 2018. [Google Scholar] [CrossRef]

- Wiemer, H.; Drowatzky, L.; Ihlenfeldt, S. Data Mining Methodology for Engineering Applications (DMME)—A Holistic Extension to the CRISP-DM Model. Appl. Sci. 2019, 9, 2407. [Google Scholar] [CrossRef]

- Amershi, S.; Begel, A.; Bird, C.; DeLine, R.; Gall, H.; Kamar, E.; Nagappan, N.; Nushi, B.; Zimmermann, T. Software Engineering for Machine Learning: A Case Study. In Proceedings of the International Conference on Software Engineering (ICSE 2019)-Software Engineering in Practice Track, Montréal, QC, Canada, 25–31 May 2019. [Google Scholar]

- Breck, E.; Cai, S.; Nielsen, E.; Salib, M.; Sculley, D. The ML test score: A rubric for ML production readiness and technical debt reduction. In Proceedings of the 2017 IEEE International Conference on Big Data (Big Data), Boston, MA, USA, 11–14 December 2017; pp. 1123–1132. [Google Scholar]

- Sculley, D.; Holt, G.; Golovin, D.; Davydov, E.; Phillips, T.; Ebner, D.; Chaudhary, V.; Young, M.; Crespo, J.F.; Dennison, D. Hidden technical debt in machine learning systems. In Advances in Neural Information Processing Systems; ACM: New York, NY, USA, 2015; pp. 2503–2511. [Google Scholar]

- Kim, M.; Zimmermann, T.; DeLine, R.; Begel, A. Data Scientists in Software Teams: State of the Art and Challenges. IEEE Trans. Softw. Eng. 2018, 44, 1024–1038. [Google Scholar] [CrossRef]

- de Souza Nascimento, E.; Ahmed, I.; Oliveira, E.; Palheta, M.P.; Steinmacher, I.; Conte, T. Understanding Development Process of Machine Learning Systems: Challenges and Solutions. In Proceedings of the 2019 ACM/IEEE International Symposium on Empirical Software Engineering and Measurement (ESEM), Porto de Galinhas, Brazil, 19–20 September 2019; pp. 1–6. [Google Scholar] [CrossRef]

- Surange, V.G. Implementation of Six Sigma to reduce cost of quality: A case study of automobile sector. J. Fail. Anal. Prev. 2015, 15, 282–294. [Google Scholar] [CrossRef]

- Yang, C.; Letourneau, S.; Zaluski, M.; Scarlett, E. APU FMEA Validation and Its Application to Fault Identification. In Proceedings of the ASME 2010 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Montreal, QC, Canada, 15–18 August 2010; Volume 3. [Google Scholar] [CrossRef]

- AIAG; der Automobilindustrie (VDA), V. FMEA Handbook-Failure Mode and Effects Analysis; AIAG: Southfield, MI, USA, 2019. [Google Scholar]

- Falcini, F.; Lami, G.; Mitidieri Costanza, A. Deep Learning in Automotive Software. IEEE Softw. 2017, 34, 56–63. [Google Scholar] [CrossRef]

- Kuwajima, H.; Yasuoka, H.; Nakae, T.; Open Problems in Engineering and Quality Assurance of Safety Critical Machine Learning Systems. CoRR 2018, abs/1812.03057. Available online: https://arxiv.org/pdf/1904.00001v1.pdf (accessed on 21 April 2021).

- Gunawardana, A.; Shani, G. A Survey of Accuracy Evaluation Metrics of Recommendation Tasks. J. Mach. Learn. Res. 2009, 10, 2935–2962. [Google Scholar]

- Lenarduzzi, V.; Taibi, D. MVP Explained: A Systematic Mapping Study on the Definitions of Minimal Viable Product. In Proceedings of the 2016 42th Euromicro Conference on Software Engineering and Advanced Applications (SEAA), Limassol, Cyprus, 31 August–2 September 2016; pp. 112–119. [Google Scholar] [CrossRef]

- Thakur, A.; Beck, R.; Mostaghim, S.; Großmann, D. Survey into predictive key performance indicator analysis from data mining perspective. In Proceedings of the 2020 25th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Vienna, Austria, 8–11 September 2020; Volume 1, pp. 476–483. [Google Scholar] [CrossRef]

- Ramis Ferrer, B.; Muhammad, U.; Mohammed, W.M.; Martínez Lastra, J.L. Implementing and Visualizing ISO 22400 Key Performance Indicators for Monitoring Discrete Manufacturing Systems. Machines 2018, 6, 39. [Google Scholar] [CrossRef]

- Badawy, M.; El-Aziz, A.A.; Idress, A.M.; Hefny, H.; Hossam, S. A survey on exploring key performance indicators. Future Comput. Inform. J. 2016, 1, 47–52. [Google Scholar] [CrossRef]

- Hoffmann, M.W.; Wildermuth, S.; Gitzel, R.; Boyaci, A.; Gebhardt, J.; Kaul, H.; Amihai, I.; Forg, B.; Suriyah, M.; Leibfried, T.; et al. Integration of Novel Sensors and Machine Learning for Predictive Maintenance in Medium Voltage Switchgear to Enable the Energy and Mobility Revolutions. Sensors 2020, 20, 99. [Google Scholar] [CrossRef]

- Watanabe, Y.; Washizaki, H.; Sakamoto, K.; Saito, D.; Honda, K.; Tsuda, N.; Fukazawa, Y.; Yoshioka, N. Preliminary Systematic Literature Review of Machine Learning System Development Process. arXiv 2019, arXiv:cs.LG/1910.05528. [Google Scholar]

- Rudin, C.; Carlson, D. The Secrets of Machine Learning: Ten Things You Wish You Had Known Earlier to be More Effective at Data Analysis. arXiv 2019, arXiv:cs.LG/1906.01998. [Google Scholar]

- Pudaruth, S. Predicting the price of used cars using machine learning techniques. Int. J. Inf. Comput. Technol 2014, 4, 753–764. [Google Scholar]

- Reed, C.; Kennedy, E.; Silva, S. Responsibility, Autonomy and Accountability: Legal liability for machine learning. Available online: https://ssrn.com/abstract=2853462 (accessed on 21 April 2021).

- Bibal, A.; Lognoul, M.; de Streel, A.; Frénay, B. Legal requirements on explainability in machine learning. Artif. Intell. Law 2020, 1–21. [Google Scholar] [CrossRef]

- Binns, R. Fairness in Machine Learning: Lessons from Political Philosophy. In Proceedings of the 1st Conference on Fairness, Accountability and Transparency, New York, NY, USA, 23–24 February 2018; Friedler, S.A., Wilson, C., Eds.; PMLR: New York, NY, USA; Volume 81, pp. 149–159. [Google Scholar]

- Corbett-Davies, S.; Goel, S. The Measure and Mismeasure of Fairness: A Critical Review of Fair Machine Learning. arXiv 2018, arXiv:cs.CY/1808.00023. [Google Scholar]

- Barredo Arrieta, A.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; García, S.; Gil-López, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, Taxonomies, Opportunities and Challenges toward Responsible AI. arXiv 2019, arXiv:cs.AI/1910.10045. [Google Scholar] [CrossRef]

- McQueen, J.; Meilă, M.; VanderPlas, J.; Zhang, Z. Megaman: Scalable manifold learning in python. J. Mach. Learn. Res. 2016, 17, 5176–5180. [Google Scholar]

- Polyzotis, N.; Roy, S.; Whang, S.E.; Zinkevich, M. Data management challenges in production machine learning. In Proceedings of the 2017 ACM International Conference on Management of Data, Chicago, IL, USA, 14–19 May 2017; pp. 1723–1726. [Google Scholar]

- Schelter, S.; Biessmann, F.; Lange, D.; Rukat, T.; Schmidt, P.; Seufert, S.; Brunelle, P.; Taptunov, A. Unit Testing Data with Deequ. In Proceedings of the 2019 International Conference on Management of Data, Amsterdam, The Netherlands, 30 June–5 July 2019; pp. 1993–1996. [Google Scholar]

- Keogh, E.; Mueen, A. Curse of Dimensionality. In Encyclopedia of Machine Learning and Data Mining; Sammut, C., Webb, G.I., Eds.; Springer US: Boston, MA, USA, 2017; pp. 314–315. [Google Scholar] [CrossRef]

- Bishop, C.M. Pattern recognition and machine learning, 5th ed.; Information Science and Statistics, Springer: Berlin/Heidelberg, Germany, 2007. [Google Scholar]

- Guyon, I.; Elisseeff, A. An Introduction to Variable and Feature Selection. J. Mach. Learn. Res. 2003, 3, 1157–1182. [Google Scholar]

- Braun, M.L.; Buhmann, J.M.; Müller, K.R. On relevant dimensions in kernel feature spaces. J. Mach. Learn. Res. 2008, 9, 1875–1908. [Google Scholar]

- Hira, Z.M.; Gillies, D.F. A review of feature selection and feature extraction methods applied on microarray data. Adv. Bioinform. 2015, 2015, 198363. [Google Scholar] [CrossRef]

- Saeys, Y.; Inza, I.n.; Larrañaga, P. A Review of Feature Selection Techniques in Bioinformatics. Bioinformatics 2007, 23, 2507–2517. [Google Scholar] [CrossRef]

- Chandrashekar, G.; Sahin, F. A Survey on Feature Selection Methods. Comput. Electr. Eng. 2014, 40, 16–28. [Google Scholar] [CrossRef]

- Guyon, I.; Gunn, S.; Nikravesh, M.; Zadeh, L.A. Feature Extraction: Foundations and Applications (Studies in Fuzziness and Soft Computing); Springer: Berlin/Heidelberg, Germany, 2006. [Google Scholar]

- Ambroise, C.; McLachlan, G.J. Selection bias in gene extraction on the basis of microarray gene-expression data. Proc. Natl. Acad. Sci. USA 2002, 99, 6562–6566. [Google Scholar] [CrossRef]

- Cai, J.; Luo, J.; Wang, S.; Yang, S. Feature selection in machine learning: A new perspective. Neurocomputing 2018, 300, 70–79. [Google Scholar] [CrossRef]

- Blum, A.L.; Langley, P. Selection of relevant features and examples in machine learning. Artif. Intell. 1997, 97, 245–271. [Google Scholar] [CrossRef]

- Lapuschkin, S.; Binder, A.; Montavon, G.; Müller, K.R.; Samek, W. Analyzing classifiers: Fisher vectors and deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2912–2920. [Google Scholar]

- Lapuschkin, S.; Wäldchen, S.; Binder, A.; Montavon, G.; Samek, W.; Müller, K.R. Unmasking Clever Hans predictors and assessing what machines really learn. Nat. Commun. 2019, 10, 1096. [Google Scholar] [CrossRef] [PubMed]

- Samek, W.; Montavon, G.; Vedaldi, A.; Hansen, L.K.; Müller, K.R. Explainable AI: Interpreting, Explaining and Visualizing Deep Learning; Springer Nature: Berlin/Heidelberg, Germany, 2019; Volume 11700. [Google Scholar]

- Lawrence, S.; Burns, I.; Back, A.; Tsoi, A.C.; Giles, C.L. Neural network classification and prior class probabilities. In Neural Networks: Tricks of the Trade; Springer: Berlin/Heidelberg, Germany, 1998; pp. 299–313. [Google Scholar]

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic Minority Over-sampling Technique. J. Artif. Intell. Res. 2002, 16, 321–357. [Google Scholar] [CrossRef]

- Batista, G.E.A.P.A.; Prati, R.C.; Monard, M.C. A Study of the Behavior of Several Methods for Balancing Machine Learning Training Data. SIGKDD Explor. Newsl. 2004, 6, 20–29. [Google Scholar] [CrossRef]

- Lemaître, G.; Nogueira, F.; Aridas, C.K. Imbalanced-learn: A Python Toolbox to Tackle the Curse of Imbalanced Datasets in Machine Learning. J. Mach. Learn. Res. 2017, 18, 559–563. [Google Scholar]

- Walker, J.S. A Primer on Wavelets and Their Scientific Applications; CRC Press: Boca Raton, FL, USA, 2002. [Google Scholar]

- Lyons, R.G. Understanding Digital Signal Processing, 2nd ed.; Prentice Hall PTR: Upper Saddle River, NJ, USA, 2004. [Google Scholar]

- Che, Z.; Purushotham, S.; Cho, K.; Sontag, D.; Liu, Y. Recurrent neural networks for multivariate time series with missing values. Sci. Rep. 2018, 8, 6085. [Google Scholar] [CrossRef] [PubMed]

- Biessmann, F.; Salinas, D.; Schelter, S.; Schmidt, P.; Lange, D. Deep Learning for Missing Value Imputationin Tables with Non-Numerical Data. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management, Turin, Italy, 22–26 October 2018; pp. 2017–2025. [Google Scholar]

- Koren, Y.; Bell, R.; Volinsky, C. Matrix Factorization Techniques for Recommender Systems. Computer 2009, 42, 30–37. [Google Scholar] [CrossRef]

- Murray, J.S. Multiple imputation: A review of practical and theoretical findings. Stat. Sci. 2018, 33, 142–159. [Google Scholar] [CrossRef]

- White, I.R.; Royston, P.; Wood, A.M. Multiple imputation using chained equations: Issues and guidance for practice. Stat. Med. 2011, 30, 377–399. [Google Scholar] [CrossRef]

- Azur, M.J.; Stuart, E.A.; Frangakis, C.; Leaf, P.J. Multiple imputation by chained equations: What is it and how does it work? Int. J. Methods Psychiatr. Res. 2011, 20, 40–49. [Google Scholar] [CrossRef]

- Bertsimas, D.; Pawlowski, C.; Zhuo, Y.D. From Predictive Methods to Missing Data Imputation: An Optimization Approach. J. Mach. Learn. Res. 2018, 18, 1–39. [Google Scholar]

- Coates, A.; Ng, A.Y. Learning feature representations with k-means. In Neural Networks: Tricks of the Trade; Springer: Berlin/Heidelberg, Germany, 2012; pp. 561–580. [Google Scholar]

- Schölkopf, B.; Smola, A.; Müller, K.R. Kernel principal component analysis. In Proceedings of the International Conference on Artificial Neural Networks; Springer-Verlag: Berlin/Heidelberg, Germany, 1997; pp. 583–588. [Google Scholar]

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning Internal Representations by Error Propagation; Technical Report; California Univ San Diego La Jolla Inst for Cognitive Science: La Jolla, CA, USA.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016. [Google Scholar]

- Wong, S.C.; Gatt, A.; Stamatescu, V.; McDonnell, M.D. Understanding data augmentation for classification: When to warp? In Proceedings of the 2016 international conference on digital image computing: Techniques and applications (DICTA), Gold Coast, Australia, 30 November– 2 December 2016; pp. 1–6. [Google Scholar]

- Andulkar, M.; Hodapp, J.; Reichling, T.; Reichenbach, M.; Berger, U. Training CNNs from Synthetic Data for Part Handling in Industrial Environments. In Proceedings of the 2018 IEEE 14th International Conference on Automation Science and Engineering (CASE), Munich, Germany, 20–24 August 2018; pp. 624–629. [Google Scholar]

- LeCun, Y.A.; Bottou, L.; Orr, G.B.; Müller, K.R. Efficient backprop. In Neural Networks: Tricks of the Trade; Springer: Berlin/Heidelberg, Germany, 2012; pp. 9–48. [Google Scholar]

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on International Conference on Machine Learning (ICML), Lille, France, 6–11 July 2015; Volume 37, pp. 448–456. [Google Scholar]

- Baylor, D.; Breck, E.; Cheng, H.T.; Fiedel, N.; Foo, C.Y.; Haque, Z.; Haykal, S.; Ispir, M.; Jain, V.; Koc, L.; et al. TFX: A TensorFlow-based production-scale machine learning platform. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; pp. 1387–1395. [Google Scholar]

- Rudin, C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 2019, 1, 206–215. [Google Scholar] [CrossRef]

- Schmidt, P.; Biessmann, F. Quantifying Interpretability and Trust in Machine Learning Systems. arXiv 2019, arXiv:1901.08558. [Google Scholar]

- Marler, R.T.; Arora, J.S. Survey of multi-objective optimization methods for engineering. Struct. Multidiscip. Optim. 2004, 26, 369–395. [Google Scholar] [CrossRef]

- Schölkopf, B.; Smola, A.J. Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond; MIT Press: Cambridge, MA, USA, 2002. [Google Scholar]

- Wolpert, D.H. The Lack of a Priori Distinctions Between Learning Algorithms. Neural Comput. 1996, 8, 1341–1390. [Google Scholar] [CrossRef]

- Müller, K.R.; Mika, S.; Rätsch, G.; Tsuda, K.; Schölkopf, B. An introduction to kernel-based learning algorithms. IEEE Trans. Neural Netw. 2001, 12, 181–201. [Google Scholar] [CrossRef]

- Zhang, J.M.; Harman, M.; Ma, L.; Liu, Y. Machine Learning Testing: Survey, Landscapes and Horizons. IEEE Transactions on Software Engineering 2020, 1-1. [Google Scholar] [CrossRef]

- Hutter, F.; Kotthoff, L.; Vanschoren, J. (Eds.) Automated Machine Learning-Methods, Systems, Challenges; The Springer Series on Challenges in Machine Learning; Springer: Berlin/Heidelberg, Germany, 2019. [Google Scholar] [CrossRef]

- Feurer, M.; Klein, A.; Eggensperger, K.; Springenberg, J.; Blum, M.; Hutter, F. Efficient and Robust Automated Machine Learning. In Advances in Neural Information Processing Systems 28; MIT Press: Cambridge, MA, USA, 2015; pp. 2962–2970. [Google Scholar]

- Zoph, B.; Le, Q.V. Neural architecture search with reinforcement learning. arXiv 2016, arXiv:1611.01578. [Google Scholar]

- Erhan, D.; Bengio, Y.; Courville, A.; Manzagol, P.A.; Vincent, P.; Bengio, S. Why Does Unsupervised Pre-training Help Deep Learning? J. Mach. Learn. Res. 2010, 11, 625–660. [Google Scholar]

- Dreher, D.; Schmidt, M.; Welch, C.; Ourza, S.; Zündorf, S.; Maucher, J.; Peters, S.; Dreizler, A.; Böhm, B.; Hanuschkin, A. Deep Feature Learning of In-Cylinder Flow Fields to Analyze CCVs in an SI-Engine. Int. J. Engine Res. 2020. [Google Scholar] [CrossRef]

- Kingma, D.P.; Mohamed, S.; Jimenez Rezende, D.; Welling, M. Semi-supervised Learning with Deep Generative Models. In Advances in Neural Information Processing Systems 27; Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N.D., Weinberger, K.Q., Eds.; MIT Press: Cambridge, MA, USA, 2014; pp. 3581–3589. [Google Scholar]

- Chapelle, O.; Schlkopf, B.; Zien, A. Semi-Supervised Learning, 1st ed.; The MIT Press: Cambridge, MA, USA, 2010. [Google Scholar]

- Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How transferable are features in deep neural networks? In Advances in Neural Information Processing Systems 27; Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N.D., Weinberger, K.Q., Eds.; MIT Press: Cambridge, MA, USA, 2014; pp. 3320–3328. [Google Scholar]

- Williams, C.K.I.; Seeger, M. Using the Nyström Method to Speed Up Kernel Machines. In Advances in Neural Information Processing Systems 13; Leen, T.K., Dietterich, T.G., Tresp, V., Eds.; MIT Press: Cambridge, MA, USA, 2001; pp. 682–688. [Google Scholar]

- Drineas, P.; Mahoney, M.W. On the Nyström Method for Approximating a Gram Matrix for Improved Kernel-Based Learning. J. Mach. Learn. Res. 2005, 6, 2153–2175. [Google Scholar]

- Cheng, Y.; Wang, D.; Zhou, P.; Zhang, T. A Survey of Model Compression and Acceleration for Deep Neural Networks. CoRR 2017, abs/1710.09282. Available online: https://arxiv.org/pdf/1710.09282.pdf (accessed on 21 April 2021).

- Frankle, J.; Carbin, M. The lottery ticket hypothesis: Finding sparse, trainable neural networks. arXiv 2018, arXiv:1803.03635. [Google Scholar]

- Wiedemann, S.; Kirchhoffer, H.; Matlage, S.; Haase, P.; Marbán, A.; Marinc, T.; Neumann, D.; Nguyen, T.; Osman, A.; Marpe, D.; et al. DeepCABAC: A Universal Compression Algorithm for Deep Neural Networks. IEEE J. Sel. Top. Signal Process. 2020, 14, 700–714. [Google Scholar] [CrossRef]

- Rokach, L. Ensemble-based classifiers. Artif. Intell. Rev. 2010, 33, 1–39. [Google Scholar] [CrossRef]

- Zhou, Z.H.; Wu, J.; Tang, W. Ensembling neural networks: Many could be better than all. Artif. Intell. 2002, 137, 239–263. [Google Scholar] [CrossRef]

- Opitz, D.; Maclin, R. Popular ensemble methods: An empirical study. J. Artif. Intell. Res. 1999, 11, 169–198. [Google Scholar] [CrossRef]

- Lakshminarayanan, B.; Pritzel, A.; Blundell, C. Simple and scalable predictive uncertainty estimation using deep ensembles. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2017; pp. 6402–6413. [Google Scholar]

- Gal, Y.; Ghahramani, Z. Dropout as a Bayesian approximation: Representing model uncertainty in deep learning. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 1050–1059. [Google Scholar]

- Pineau, J. The Machine Learning Reproducibility Checklist. 2019. Available online: https://www.cs.mcgill.ca/~jpineau/ReproducibilityChecklist.pdf (accessed on 21 April 2021).

- Tatman, R.; VanderPlas, J.; Dane, S. A Practical Taxonomy of Reproducibility for Machine Learning Research. Available online: https://openreview.net/forum?id=B1eYYK5QgX (accessed on 21 April 2021).

- Henderson, P.; Islam, R.; Bachman, P.; Pineau, J.; Precup, D.; Meger, D. Deep reinforcement learning that matters. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018. [Google Scholar]

- Sculley, D.; Snoek, J.; Wiltschko, A.; Rahimi, A. Winner’s Curse? On Pace, Progress, and Empirical Rigor. Available online: https://openreview.net/forum?id=rJWF0Fywf (accessed on 21 April 2021).

- Bouthillier, X.; Laurent, C.; Vincent, P. Unreproducible Research is Reproducible. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; Volume 97, pp. 725–734. [Google Scholar]

- Vartak, M.; Subramanyam, H.; Lee, W.E.; Viswanathan, S.; Husnoo, S.; Madden, S.; Zaharia, M. ModelDB: A System for Machine Learning Model Management. In Proceedings of the Workshop on Human-In-the-Loop Data Analytics, San Francisco, CA, USA, 26 June 2016; pp. 14:1–14:3. [Google Scholar] [CrossRef]

- Zhou, Z.Q.; Sun, L. Metamorphic Testing of Driverless Cars. Commun. ACM 2019, 62, 61–67. [Google Scholar] [CrossRef]

- Tian, Y.; Pei, K.; Jana, S.; Ray, B. DeepTest: Automated Testing of Deep-neural-network-driven Autonomous Cars. In Proceedings of the 40th International Conference on Software Engineering, Gothenburg, Sweden, 27 May–3 June 2018; pp. 303–314. [Google Scholar] [CrossRef]

- Pei, K.; Cao, Y.; Yang, J.; Jana, S. DeepXplore: Automated Whitebox Testing of Deep Learning Systems. In Proceedings of the 26th Symposium on Operating Systems Principles, Shanghai, China, 28–31 October 2017; pp. 1–18. [Google Scholar] [CrossRef]

- Chan-Hon-Tong, A. An Algorithm for Generating Invisible Data Poisoning Using Adversarial Noise That Breaks Image Classification Deep Learning. Mach. Learn. Knowl. Extr. 2019, 1, 192–204. [Google Scholar] [CrossRef]

- Chakarov, A.; Nori, A.V.; Rajamani, S.K.; Sen, S.; Vijaykeerthy, D. Debugging Machine Learning Tasks. arXiv 2016, arXiv:1603.07292. [Google Scholar]

- Bach, S.; Binder, A.; Montavon, G.; Klauschen, F.; Müller, K.R.; Samek, W. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLoS ONE 2015, 10, e0130140. [Google Scholar] [CrossRef] [PubMed]

- Baehrens, D.; Schroeter, T.; Harmeling, S.; Kawanabe, M.; Hansen, K.; Müller, K.R. How to explain individual classification decisions. J. Mach. Learn. Res. 2010, 11, 1803–1831. [Google Scholar]

- Arras, L.; Horn, F.; Montavon, G.; Müller, K.R.; Samek, W. “What is relevant in a text document?”: An interpretable machine learning approach. PLoS ONE 2017, 12, e0181142. [Google Scholar] [CrossRef] [PubMed]

- Hois, J.; Theofanou-Fuelbier, D.; Junk, A.J. How to Achieve Explainability and Transparency in Human AI Interaction. In HCI International 2019-Posters; Stephanidis, C., Ed.; Springer International Publishing: Cham, Switzerland, 2019; pp. 177–183. [Google Scholar]

- Schneider, T.; Hois, J.; Rosenstein, A.; Gerlicher, A.; Theofanou-Fülbier, D.; Ghellal, S. ExplAIn Yourself! Transparency for Positive UX in Autonomous Driving. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Denver, US, USA, 6–11 May 2021. CHI ‘21. [Google Scholar] [CrossRef]

- Thrun, M.C.; Ultsch, A.; Breuer, L. Explainable AI Framework for Multivariate Hydrochemical Time Series. Mach. Learn. Knowl. Extr. 2021, 3, 170–204. [Google Scholar] [CrossRef]

- Alber, M.; Lapuschkin, S.; Seegerer, P.; Hägele, M.; Schütt, K.T.; Montavon, G.; Samek, W.; Müller, K.R.; Dähne, S.; Kindermans, P.J. iNNvestigate neural networks! J. Mach. Learn. Res. 2019, 20, 1–8. [Google Scholar]

- Nori, H.; Jenkins, S.; Koch, P.; Caruana, R. InterpretML: A Unified Framework for Machine Learning Interpretability. arXiv 2019, arXiv:cs.LG/1909.09223. [Google Scholar]

- Burkart, N.; Huber, M.F. A Survey on the Explainability of Supervised Machine Learning. J. Artif. Intell. Res. 2021, 70, 245–317. [Google Scholar] [CrossRef]

- Wu, C.J.; Brooks, D.; Chen, K.; Chen, D.; Choudhury, S.; Dukhan, M.; Hazelwood, K.; Isaac, E.; Jia, Y.; Jia, B.; et al. Machine learning at Facebook: Understanding inference at the edge. In Proceedings of the 2019 IEEE International Symposium on High Performance Computer Architecture (HPCA), Washington, DC, USA, 16–20 February 2019; pp. 331–344. [Google Scholar]

- Sehgal, A.; Kehtarnavaz, N. Guidelines and Benchmarks for Deployment of Deep Learning Models on Smartphones as Real-Time Apps. Mach. Learn. Knowl. Extr. 2019, 1, 450–465. [Google Scholar] [CrossRef]

- Christidis, A.; Davies, R.; Moschoyiannis, S. Serving Machine Learning Workloads in Resource Constrained Environments: A Serverless Deployment Example. In Proceedings of the 2019 IEEE 12th Conference on Service-Oriented Computing and Applications (SOCA), Kaohsiung, Taiwan, China, 18–21 November 2019; pp. 55–63. [Google Scholar] [CrossRef]

- Wang, M.; Deng, W. Deep visual domain adaptation: A survey. Neurocomputing 2018, 312, 135–153. [Google Scholar] [CrossRef]

- Sugiyama, M.; Krauledat, M.; Müller, K.R. Covariate shift adaptation by importance weighted cross validation. J. Mach. Learn. Res. 2007, 8, 985–1005. [Google Scholar]

- Heckemann, K.; Gesell, M.; Pfister, T.; Berns, K.; Schneider, K.; Trapp, M. Safe automotive software. In Proceedings of the International Conference on Knowledge-Based and Intelligent Information and Engineering Systems, Kaiserslautern, Germany, 10–12 September 2011; pp. 167–176. [Google Scholar]

- Berkenkamp, F.; Moriconi, R.; Schoellig, A.P.; Krause, A. Safe learning of regions of attraction for uncertain, nonlinear systems with gaussian processes. In Proceedings of the 2016 IEEE 55th Conference on Decision and Control (CDC), Las Vegas, NV, USA, 12–14 December 2016; pp. 4661–4666. [Google Scholar]

- Derakhshan, B.; Mahdiraji, A.R.; Rabl, T.; Markl, V. Continuous Deployment of Machine Learning Pipelines. In Proceedings of the 22nd International Conference on Extending Database Technology (EDBT), Lisbon, Portugal, 26–29 March 2019; pp. 397–408. [Google Scholar]

- Fehling, C.; Leymann, F.; Retter, R.; Schupeck, W.; Arbitter, P. Cloud Computing Patterns: Fundamentals to Design, Build, and Manage Cloud Applications; Springer: Berlin/Heidelberg, Germany, 2014. [Google Scholar] [CrossRef]

- Muthusamy, V.; Slominski, A.; Ishakian, V. Towards Enterprise-Ready AI Deployments Minimizing the Risk of Consuming AI Models in Business Applications. In Proceedings of the 2018 First International Conference on Artificial Intelligence for Industries (AI4I), Laguna Hills, CA, USA, 26–28 September 2018; pp. 108–109. [Google Scholar] [CrossRef]

- Ghanta, S.; Subramanian, S.; Sundararaman, S.; Khermosh, L.; Sridhar, V.; Arteaga, D.; Luo, Q.; Das, D.; Talagala, N. Interpretability and Reproducability in Production Machine Learning Applications. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Miami, FL, USA, 14–17 December 2018; pp. 658–664. [Google Scholar]

- Aptiv; Audi; Baidu; BMW; Continental; Daimler; FCA; here; infineo; intel; et al. Safety First For Automated Driving. 2019. Available online: https://www.daimler.com/dokumente/innovation/sonstiges/safety-first-for-automated-driving.pdf (accessed on 2 July 2019).

| CRISP-ML(Q) | CRISP-DM | Amershi et al. [34] | Breck et al. [35] | |

|---|---|---|---|---|

| Business and Data Understanding | Business Understanding | Requirements | - | |

| Data Understanding | Collection | Data | ||

| Data Preparation | Data Preparation | Cleaning | Infra- structure | |

| Labeling | ||||

| Feature Engineering | ||||

| Modeling | Modeling | Training | Model | |

| Evaluation | Evaluation | Evaluation | - | |

| Deployment | Deployment | Deployment | - | |

| Monitoring & Maintenance | - | Monitoring | Monitoring | |

| Performance | The Model’s Performance on Unseen Data |

|---|---|

| Robustness | Ability of the ML application to maintain its level of performance under defined circumstances (ISO/IEC technical report 24029 [24]) |

| Scalability | The model’s ability to scale to high data volume in the production system. |

| Explainability | The model’s direct or post hoc explainability. |

| Model Complexity | The model’s capacity should suit the data complexity. |

| Resource Demand | The model’s resource demand for deployment. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Studer, S.; Bui, T.B.; Drescher, C.; Hanuschkin, A.; Winkler, L.; Peters, S.; Müller, K.-R. Towards CRISP-ML(Q): A Machine Learning Process Model with Quality Assurance Methodology. Mach. Learn. Knowl. Extr. 2021, 3, 392-413. https://doi.org/10.3390/make3020020

Studer S, Bui TB, Drescher C, Hanuschkin A, Winkler L, Peters S, Müller K-R. Towards CRISP-ML(Q): A Machine Learning Process Model with Quality Assurance Methodology. Machine Learning and Knowledge Extraction. 2021; 3(2):392-413. https://doi.org/10.3390/make3020020

Chicago/Turabian StyleStuder, Stefan, Thanh Binh Bui, Christian Drescher, Alexander Hanuschkin, Ludwig Winkler, Steven Peters, and Klaus-Robert Müller. 2021. "Towards CRISP-ML(Q): A Machine Learning Process Model with Quality Assurance Methodology" Machine Learning and Knowledge Extraction 3, no. 2: 392-413. https://doi.org/10.3390/make3020020

APA StyleStuder, S., Bui, T. B., Drescher, C., Hanuschkin, A., Winkler, L., Peters, S., & Müller, K.-R. (2021). Towards CRISP-ML(Q): A Machine Learning Process Model with Quality Assurance Methodology. Machine Learning and Knowledge Extraction, 3(2), 392-413. https://doi.org/10.3390/make3020020