Confidence Intervals for Class Prevalences under Prior Probability Shift

Abstract

1. Introduction

- Would it be worthwhile to distinguish confidence and prediction intervals for class prevalences and deploy different methods for their estimation? This question is raised against the backdrop that, for instance, Keith and O’Connor [7] talked about estimating confidence intervals but in fact constructed prediction intervals, which are conceptually different [12].

- Would it be worthwhile to base class prevalence estimation on more accurate classifiers? The background for this question is a set of conflicting statements in the literature as to the benefit of using accurate classifiers for prevalence estimation. On the one hand, Forman [2] stated on p. 168: “A major benefit of sophisticated methods for quantification is that a much less accurate classifier can be used to obtain reasonably precise quantification estimates. This enables some applications of machine learning to be deployed where otherwise the raw classification accuracy would be unacceptable or the training effort too great.” On the other hand, as an example of the opposite position, Barranquero et al. [13] commented on p. 595 with respect to prevalence estimation: “We strongly believe that it is also important for the learner to consider the classification performance as well. Our claim is that this aspect is crucial to ensure a minimum level of confidence for the deployed models.”

- Which prevalence estimation methods show the best performance with respect to the construction of as short as possible confidence intervals for class prevalences?

- Do non-simulation approaches to the construction of confidence intervals for class prevalences work?

- The true class prevalences are known and can even be chosen with a view to facilitate obtaining clear answers.

- The setting of the study can be freely modified (e.g., with regard to sample sizes or accuracy of the involved classifiers) to investigate the topics in question more precisely.

- In a simulation study, it is easy to apply an ablation approach to assess the relative impact of factors that influence the performance of methods for estimating confidence intervals.

- The results can be easily replicated.

- Simulation studies are good for delivering counter-examples. A method performing poorly in the study reported in this paper may be considered unlikely to perform much better in complex real-world settings.

- Most findings of the study are suggestive and illustrative only. No firm conclusions can be drawn from them.

- Important features of the problem which only occur in real-world situations might be overlooked.

- The prevalence estimation problem primarily is caused by dataset shift. For capacity reasons, the scope of the simulation study in this paper is restricted to prior probability shift, a special type of dataset shift. In the literature, prior probability shift is known under a number of different names, for instance “target shift” [14], “global drift” [15], or “label shift” [16] (see Moreno-Torres et al. [17] for a categorisation of types of dataset shift).

- Extra efforts to construct prediction intervals instead of confidence intervals for class prevalences appear to be unnecessary.

- “Error Adjusted Bootstrapping” as proposed by Daughton and Paul [11] for the construction of prevalence confidence or prediction intervals may fail in the presence of prior probability shift.

- Deploying more accurate classifiers for class prevalence estimation results in shorter confidence intervals.

- Compared to the other estimation methods considered in this paper, straightforward “adjusted classify and count” methods for prevalence estimation [2] (called “confusion matrix method” in [18]) without any further tuning produce the longest confidence intervals and, hence, given identical coverage, perform worst. Methods based on minimisation of the Hellinger distance [3] (with different numbers of bins) produce much shorter confidence intervals, but sometimes do not guarantee sufficient coverage. The maximum likelihood approach (with bootstrapping for the confidence intervals) and “adjusted probabilistic classify and count” ([19], called there “scaled probability average”) appear to stably produce the shortest confidence intervals among the methods considered in the paper.

- Section 2 describes the conception and technical details of the simulation study, including in Section 2.2 a list of the prevalence estimation methods in scope.

- Section 3 provides some tables with results of the study and comments on the results, in order to explore the questions stated above. The results in Section 3.3 show that certain standard non-simulation approaches cannot take into account estimation uncertainty in the training sample and that bootstrap-based construction of confidence intervals could be used instead.

- Section 4 wraps up and closes the paper.

- In Appendix A, the mathematical details needed for coding the simulation study are listed.

- In Appendix B, the appropriateness for prior probability shift of the approach proposed by Daughton and Paul [11] is investigated.

2. Setting of the Simulation Study

- There is a training sample of observations of features and class labels for m instances. Instances with label 0 belong to the negative class, instances with label 1 belong to the positive class. By assumption, this sample was generated from a joint distribution (the training population distribution) of the feature random variable X and the label (or class) random variable Y.

- There is a test sample of observations of features for n instances. By assumption, each instance has a latent class label, and both the features and the labels were generated from a joint distribution (the test population distribution) of the feature random variable X and the label random variable Y.

2.1. The Model for the Simulation Study

- p is the prevalence of the positive class in the training population.

- m is the size of the training sample. In the case m = ∞, the training sample is considered identical with the training population and learning of the model is unnecessary. In the case of a finite training sample, the number of instances with positive labels is non-random in order to reflect the fact that for model development purposes a pre-defined stratification of the training sample might be desirable and can be achieved by under-sampling of the majority class or by over-sampling of the minority class. then is the size of the training sub-sample with positive labels, and is the size of the training sub-sample with negative labels. Hence, it holds that , and for finite m.

- q is the prevalence of the positive class in the test population.

- n is the size of the test sample. In the test sample, the number of instances with positive labels is random.

- The population distribution underlying the features of the negative-class training sub-sample is always with and . The population distribution underlying the features of the positive-class training sub-sample is with and .

- The population distribution underlying the features of the negative-class test sub-sample is always with and . The population distribution underlying the features of the positive-class test sub-sample is with and .

- The number of simulation runs in all of the experiments is , i.e., -times a training sample and a test sample as specified above are generated and subjected to some estimation procedures.

- The number of bootstrap iterations where needed in any of the interval estimation procedures is always [23].

- All confidence and prediction intervals are constructed at confidence level.
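The sampling scheme described above can be illustrated with a minimal sketch. It assumes one-dimensional normally distributed features per class (the binormal setting) and takes the number of training positives to be m times p, rounded; the function names, the means 0 and 2, and the standard deviation 1 are hypothetical placeholders, not the parameter values used in the study.

```python
import random

def simulate_training_sample(m, p, mu0, sigma0, mu1, sigma1, rng):
    """Training sample with a fixed (non-random) number of positive instances."""
    m1 = round(m * p)   # assumption: number of positives is m * p, rounded
    m0 = m - m1         # remaining instances are negatives
    pos = [rng.gauss(mu1, sigma1) for _ in range(m1)]
    neg = [rng.gauss(mu0, sigma0) for _ in range(m0)]
    return pos, neg

def simulate_test_sample(n, q, mu0, sigma0, mu1, sigma1, rng):
    """Test sample with a binomial (random) number of positive instances."""
    n1 = sum(rng.random() < q for _ in range(n))   # one Binomial(n, q) draw
    features = [rng.gauss(mu1, sigma1) for _ in range(n1)]
    features += [rng.gauss(mu0, sigma0) for _ in range(n - n1)]
    rng.shuffle(features)   # the estimation step must not see the class of origin
    return features, n1

rng = random.Random(0)
# Hypothetical parameter values, chosen only for illustration.
train_pos, train_neg = simulate_training_sample(100, 1 / 3, 0.0, 1.0, 2.0, 1.0, rng)
test_x, n_pos = simulate_test_sample(50, 0.2, 0.0, 1.0, 2.0, 1.0, rng)
```

The realised number of test positives `n_pos` is returned only so that coverage of prediction intervals can be checked afterwards; an estimator would receive `test_x` alone.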

2.2. Methods for Prevalence Estimation Considered in this Paper

- The methods must be Fisher consistent in the sense of Tasche [24]. This criterion excludes, for instance, “classify and count” [2], the “Q-measure” approach [1] and the distance-minimisation approaches based on the Inner Product, Kumar–Hassebrook, Cosine, and Harmonic Mean distances mentioned in [8].

- The methods should enjoy some popularity in the literature.

- Two new methods based on already established methods and designed to minimise the lengths of confidence intervals are introduced and tested.

- ACCp: Adjusted Classify and Count, based on the Bayes classifier that maximises the difference of TPR (true positive rate) and FPR (false positive rate). “p” because if the Bayes classifier is represented by means of the posterior probability of the positive class and a threshold, the threshold needs to be p, the a priori probability (or prevalence) of the positive class in the training population. ACCp is called “method max” in Forman [2].

- ACCv: New version of ACC where the threshold for the classifier is selected in such a way that the variance of the prevalence estimates is minimised among all ACC-type estimators based on classifiers represented by means of the posterior probability of the positive class and some threshold.

- MS: “Median sweep” as proposed by Forman [2].

- APCC: “Adjusted probabilistic classify and count” ([19], there called “scaled probability average”).

- APCCv: New version of APCC where the a priori positive class probability parameter in the posterior positive class probability is selected in such a way that the variance of the prevalence estimates is minimised among all APCC-type estimators based on posterior positive class probabilities where the a priori positive class probability parameter varies between 0 and 1.

- MLinf/MLboot: ML is the maximum likelihood approach to prevalence estimation [26]. Note that the EM (expectation maximisation) approach of Saerens et al. [18] is one way to implement ML. “MLinf” refers to construction of the prevalence confidence interval based on the asymptotic normality of the ML estimator (using the Fisher information for the variance). “MLboot” refers to construction of the prevalence confidence interval solely based on bootstrap sampling.
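As an illustration of the remark that the EM approach is one way to implement ML, the following sketch iterates the EM update for the binary case. It assumes that posterior positive class probabilities under the training prevalence p are available for every test instance; the function name `em_prevalence`, the tolerance, and the toy binormal parameters (means 0 and 2, unit variance) are hypothetical.

```python
import math
import random

def em_prevalence(posteriors, p, tol=1e-10, max_iter=10_000):
    """EM iteration for the test prevalence, started at the training prevalence p.

    posteriors: posterior positive class probabilities of the test instances,
                computed under the training prevalence p (0 < p < 1).
    """
    q = p
    for _ in range(max_iter):
        total = 0.0
        for eta in posteriors:
            # Re-weight the posterior from training prior p to current guess q.
            num = (q / p) * eta
            den = num + ((1 - q) / (1 - p)) * (1 - eta)
            total += num / den
        q_new = total / len(posteriors)
        if abs(q_new - q) < tol:
            return q_new
        q = q_new
    return q

# Toy check: training prevalence 50%, test prevalence 20%.
rng = random.Random(1)
p, q_true = 0.5, 0.2
xs = [rng.gauss(2.0, 1.0) if rng.random() < q_true else rng.gauss(0.0, 1.0)
      for _ in range(2000)]
# For unit-variance normals with means 0 and 2, the log density ratio is 2x - 2.
posteriors = [1 / (1 + math.exp(-(2 * x - 2))) for x in xs]
estimate = em_prevalence(posteriors, p)
```

With a large test sample and a well separated score, `estimate` should land close to the true test prevalence; the sketch does not cover the interval construction.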

2.3. Calculations Performed in the Simulation Study

- Create the training sample: Simulate times from features , …, of positive instances and times from features , …, of negative instances.

- Create the test sample: Simulate the number of positive instances as a binomial random variable with size n and success probability q. Then, simulate times from features , …, of positive instances and times from features , …, of negative instances. The information of whether a feature was sampled from or from is assumed to be unknown in the estimation step. Therefore, the generated features are combined in a single sample , …, .

- Iterate R times the bootstrap procedure: Generate by stratified sampling with replications bootstrap samples , …, of features of positive instances, , …, of features of negative instances from the training subsamples, and , …, of features with unknown labels from the test sample. Calculate, based on the three resulting bootstrap samples, estimates of the positive class prevalence in the test population according to all the estimation methods listed in Section 2.2.

- For each estimation method, the bootstrap procedure from the previous step creates a sample of R estimates of the positive class prevalence. Based on this sample of R estimates, construct confidence intervals at level for the positive class prevalence in the test population.

- For each estimation method, estimates of the positive class prevalence are calculated. From this set of estimates, the following summary results were derived and tabulated:

  - the average of the prevalence estimates;

  - the average absolute deviation of the prevalence estimates from the true prevalence parameter;

  - the percentage of simulation runs with failed prevalence estimates; and

  - the percentage of estimates equal to 0 or 1.

- For each estimation method, confidence intervals at level for the positive class prevalence are produced. From this set of confidence intervals, the following summary results were derived and tabulated:

  - the average length of the confidence intervals; and

  - the percentage of confidence intervals that contain the true prevalence parameter (coverage rate).
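The bootstrap step and the two interval summaries can be sketched as follows. This is a minimal illustration, assuming a percentile-type interval and an estimator passed in as a function; the names `bootstrap_interval`, `summarise`, and `toy_estimator`, as well as the values of R and alpha in the usage lines, are hypothetical.

```python
import random

def percentile(sorted_vals, prob):
    """Empirical quantile by linear interpolation between order statistics."""
    pos = prob * (len(sorted_vals) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (pos - lo) * (sorted_vals[hi] - sorted_vals[lo])

def bootstrap_interval(train_pos, train_neg, test_x, estimator, R, alpha, rng):
    """Percentile interval from R stratified bootstrap replications."""
    estimates = []
    for _ in range(R):
        bp = rng.choices(train_pos, k=len(train_pos))   # resample positives
        bn = rng.choices(train_neg, k=len(train_neg))   # resample negatives
        bt = rng.choices(test_x, k=len(test_x))         # resample test features
        estimates.append(estimator(bp, bn, bt))
    estimates.sort()
    return percentile(estimates, alpha / 2), percentile(estimates, 1 - alpha / 2)

def summarise(intervals, true_value):
    """Average interval length and coverage rate (in percent)."""
    lengths = [hi - lo for lo, hi in intervals]
    covered = [lo <= true_value <= hi for lo, hi in intervals]
    return sum(lengths) / len(lengths), 100.0 * sum(covered) / len(covered)

def toy_estimator(bp, bn, bt):
    """Placeholder estimator: share of test points above the class-mean midpoint."""
    cut = (sum(bp) / len(bp) + sum(bn) / len(bn)) / 2
    return sum(x > cut for x in bt) / len(bt)

rng = random.Random(0)
lo, hi = bootstrap_interval([2.1, 1.8, 2.5], [0.1, -0.3, 0.4],
                            [0.2, 1.9, 0.0, 2.2, -0.1], toy_estimator, 200, 0.1, rng)
```

In a full simulation, `bootstrap_interval` would be called once per simulation run and `summarise` once per estimation method over all runs.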

- “A confidence interval for an unknown quantity may be formally characterized as follows: If one repeatedly calculates such intervals from many independent random samples, of the intervals would, in the long run, correctly include the actual value . Equivalently, one would, in the long run, be correct of the time in claiming that the actual value of is contained within the confidence interval.” ([12] Section 2.2.5)

- “If from many independent pairs of random samples, a prediction interval is computed from the data of the first sample to contain the value(s) of the second sample, of the intervals would, in the long run, correctly bracket the future value(s). Equivalently, one would, in the long run, be correct of the time in claiming that the future value(s) will be contained within the prediction interval.” ([12] Section 2.3.6)

- 4*. For each estimation method, the bootstrap procedure from the previous step creates a sample of R estimates of the positive class prevalence. For each estimate, generate a virtual number of realisations of positive instances by simulating an independent binomial variable with size n and success probability given by the estimate. Divide these virtual numbers by n to obtain (for each estimation method) a sample of relative frequencies of positive labels. Based on this additional size-R sample of relative frequencies, construct prediction intervals at level for the percentage of instances with positive labels in the test sample.

- For each estimation method, virtual relative frequencies of positive labels in the test sample were simulated under the assumption that the estimated positive class prevalence equals the true prevalence. From this set of frequencies, the following summary results are derived and tabulated:

  - the average of the virtual relative frequencies;

  - the average absolute deviation of the virtual relative frequencies from the true prevalence parameter;

  - the percentage of simulation runs with failed prevalence estimates and hence also failed simulations of virtual relative frequencies of positive labels; and

  - the percentage of virtual relative frequencies equal to 0 or 1.

- For each estimation method, prediction intervals at level for the realised relative frequencies of positive labels are produced. From this set of prediction intervals, the following summary results were derived and tabulated:

  - the average length of the prediction intervals; and

  - the percentage of prediction intervals that contain the true relative frequencies of positive labels (coverage rate).
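The binomial construction of prediction intervals can be sketched in a few lines. This is an illustration only, assuming a percentile-type interval over the virtual relative frequencies; the function names and the values used in the usage line are hypothetical.

```python
import random

def percentile(sorted_vals, prob):
    """Empirical quantile by linear interpolation between order statistics."""
    pos = prob * (len(sorted_vals) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (pos - lo) * (sorted_vals[hi] - sorted_vals[lo])

def prediction_interval(bootstrap_estimates, n, alpha, rng):
    """Prediction interval for the relative frequency of positives among n instances."""
    freqs = []
    for q_hat in bootstrap_estimates:
        # Independent Binomial(n, q_hat) draw, simulated as n Bernoulli trials.
        positives = sum(rng.random() < q_hat for _ in range(n))
        freqs.append(positives / n)
    freqs.sort()
    return percentile(freqs, alpha / 2), percentile(freqs, 1 - alpha / 2)

rng = random.Random(0)
# Degenerate input (all bootstrap estimates equal) isolates the binomial noise.
lo, hi = prediction_interval([0.2] * 500, 50, 0.1, rng)
```

Because the binomial draw adds variation on top of the spread of the bootstrap estimates, the resulting interval can never be systematically shorter than the corresponding percentile confidence interval.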

3. Results of the Simulation Study

3.1. Prediction vs. Confidence Intervals

- Top two panels: Simulation of a “benign” situation, with not too much difference of positive class prevalences (33% vs. 20%) in training and test population distributions, and high power of the score underlying the classifiers and distance minimisation approaches. The results suggest “overshooting” by the binomial prediction interval approach, i.e., intervals are so long that coverage is much higher than requested, even reaching 100%. The confidence intervals clearly show sufficient coverage of the true realised percentages of positive labels for all estimation methods. Interval lengths are quite uniform, with only the straight ACC methods ACC50 and ACCp showing distinctly longer intervals. In addition, in terms of average absolute deviation from the true positive class prevalence, the performance is rather uniform. However, it is interesting to see that ACCv which has been designed for minimising confidence interval length among the ACC estimators shows the distinctly worst performance with regard to average absolute deviation.

- Central two panels: Simulation of a rather adverse situation, with very different (67% vs. 20%) positive class prevalences in training and test population distributions and low power of the score underlying the classifiers and distance minimisation approaches. There is still overshooting by the binomial prediction interval approach for all methods but H8. For all methods but H8, sufficient coverage by the confidence intervals is still clearly achieved. H8 coverage of relative positive class frequency is significantly too low with the confidence intervals but still sufficient with the prediction intervals. However, H8 also displays heavy bias of the average relative frequency of positive labels, possibly a consequence of the combined difficulties of there being eight bins for only 50 points (test sample size) and little difference between the densities of the score conditional on the two classes. In terms of interval length performance MLboot is best, closely followed by APCC and Energy. However, even for these methods, confidence interval lengths of more than 47% suggest that the estimation task is rather hopeless.

- Bottom two panels: Similar picture to the central panels, but even more adverse with a small test sample prevalence of 5%. The results are similar, but with much higher proportions of 0% estimates for all methods. H8 now has insufficient coverage with both prediction and confidence intervals, and H4 coverage with the confidence intervals is also insufficient. Note the strong estimation bias suggested by all average frequency estimates, presumably caused by the clipping of negative estimates (i.e., replacing such estimates by zero). Among all these bad estimators, MLboot is clearly best in terms of bias, average absolute deviation and interval lengths.

- General conclusion: For all methods in Section 2.2 but the Hellinger methods, it suffices to construct confidence intervals; there is no need to apply special prediction interval techniques.

- Performance in terms of interval length (with sufficient coverage in all circumstances): MLboot best, followed by APCC and Energy.

3.2. Does Higher Accuracy Help for Shorter Confidence Intervals?

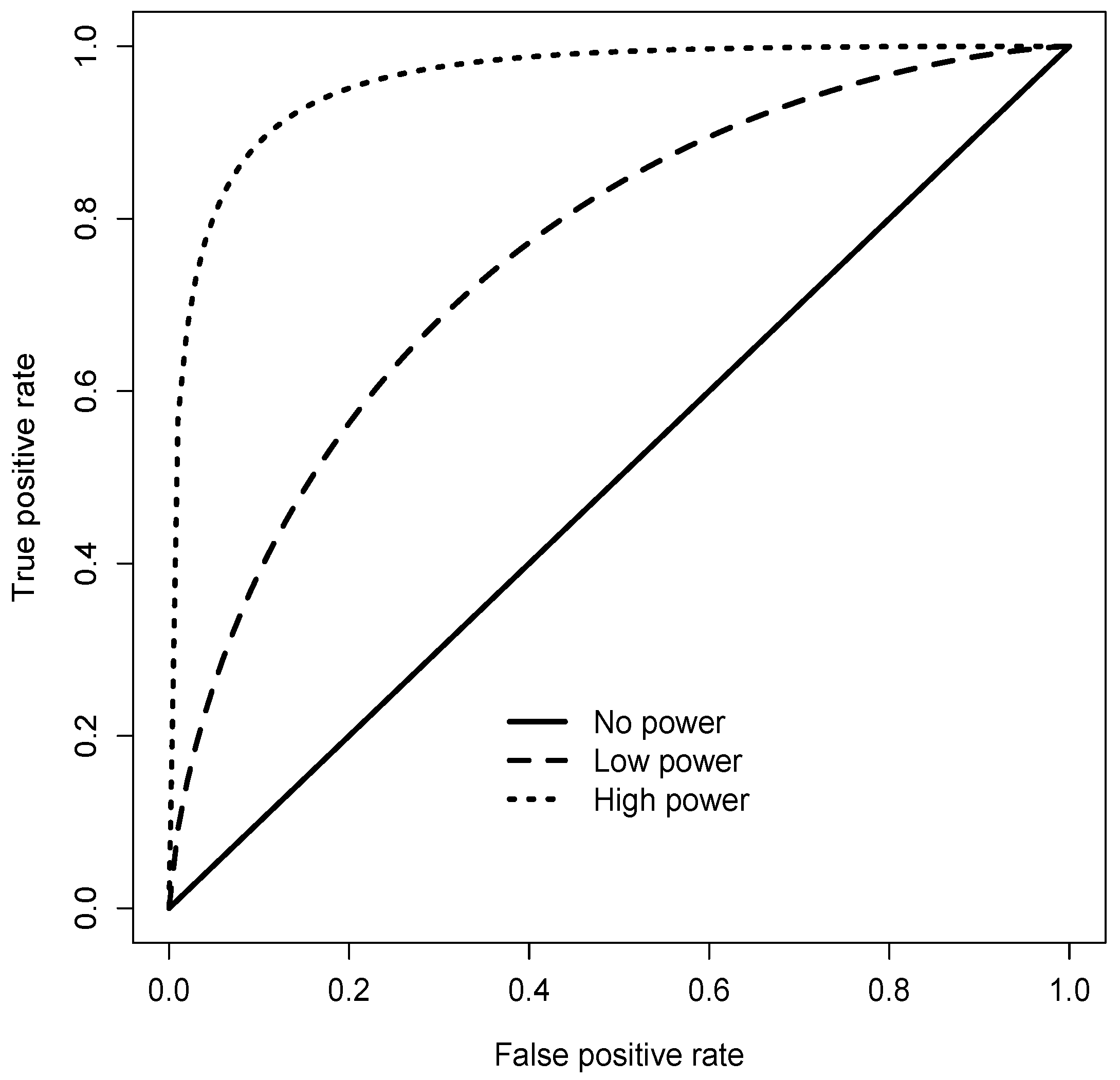

- Top two panels: Simulation of a “benign” situation, with moderate difference of positive class prevalences (50% vs. 20%) in training and test population distributions, no estimation uncertainty on the training sample and a rather large test sample with . The results for all estimation methods suggest that the lengths of the confidence intervals are strongly dependent upon the discriminatory power of the score which is the basic building block of all the methods. Coverage is accurate for the high power situation, whereas there is even slight overshooting of coverage in the low power situation.

- Central two panels: Simulation of a less benign situation, with small test sample size and low true positive class prevalence in the test sample but still without uncertainty on the training sample. There is nonetheless again evidence for the strong dependence of the lengths of the confidence intervals upon the discriminatory power of the score. For all estimation methods, low power leads to strong bias of the prevalence estimates. The percentage of zero estimates jumps between the third and the fourth panel. Hence, decrease of power of the score entails much higher rates of zero estimates. For the maximum likelihood method, the interval length results in both panels show that constructing confidence intervals based on the central limit theorem for maximum likelihood estimators may become unstable for small test sample size and small positive class prevalence.

- Bottom two panels: Simulation of an adverse situation, with small test and training sample sizes and low true positive class prevalence in the test sample. The results show qualitatively very much the same picture as in the central panels. The impact of estimation uncertainty in the training sample which marks the difference to the situation for the central panels, however, is moderate for high power of the score but dramatic for low power of the score. Again, there is a jump of the rate of zero estimates between the two panels differentiated by different levels of discriminatory power. For the Hellinger methods, results of the high power panel suggest a performance issue with respect to the coverage rate. This observation is not confirmed by a repetition of the calculations for Panel 5 with deployment of R-boot.ci method “bca” instead of “perc”; however, the “better” results are accompanied by a high rate of failures of the confidence interval construction. In contrast to MLinf, MLboot (using only bootstrapping for constructing the confidence intervals) performs well, even with relatively low bias for the prevalence estimate in the low power case.

- General conclusion: The results displayed in Table 4 suggest that there should be a clear benefit in terms of shorter confidence intervals when high power scores and classifiers are deployed for prevalence estimation. In addition, the results illustrate the statement on the asymptotic variance of ratio estimators like ACC50, ACCp, ACCv, APCC and APCCv in Corollary 11 of Vaz et al. [9].

- Performance in terms of interval length (with sufficient coverage in all circumstances): Both APCC estimation methods show good and stable performance when compared to all other methods. Energy and MLboot follow closely. The Hellinger methods also produce short confidence intervals but may have insufficient coverage.

3.3. Do Approaches to Confidence Intervals without Monte Carlo Simulations Work?

4. Conclusions

- whether estimation techniques for confidence intervals are appropriate if in practice most of the time prediction intervals are needed; and

- whether the discriminatory power of the soft classifier or score at the basis of a prevalence estimation method matters when it comes to minimising the length of the confidence interval for an estimate.

- the fact that the findings of the paper apply only for problems where it is clear that training and test samples are related by prior probability shift; and

- the general observation that the scope of a simulation study necessarily is rather restricted and therefore findings of such studies can be suggestive and illustrative at best.

- For not too small test sample sizes, such as 50 or more, there is no need to deploy special techniques for prediction intervals.

- It is worthwhile to base prevalence estimation on powerful classifiers or scores because this way the lengths of the confidence intervals can be much reduced. The use of less accurate classifiers may entail confidence intervals so long that the estimates have to be considered worthless.

Funding

Conflicts of Interest

Appendix A. Particulars for the Implementation of the Simulation Study

Appendix A.1. Adjusted Classify and Count (ACC) and Related Prevalence Estimators

- is the proportion of instances in the test population whose labels are predicted positive by the classifier g.

- is the false positive rate (FPR) associated with the classifier g. The FPR equals one minus the true negative rate and, therefore, also one minus the specificity of the classifier g.

- is the true positive rate (TPR) associated with the classifier g. The TPR is also called “recall” or “sensitivity” of g.

- for maximum accuracy (i.e., minimum classification error) which leads to the estimator ACC50 listed in Section 2.2; and

- for maximising the denominator of the right-hand side of Equation (A1), which leads to the estimator ACCp listed in Section 2.2.
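The three quantities listed above combine into the ACC estimator as the ratio of (predicted positive proportion minus FPR) to (TPR minus FPR). The sketch below is a hedged illustration for a classifier given by a score and a threshold; the function names are hypothetical, the clipping to the unit interval follows the treatment of negative estimates mentioned in Section 3.1, and returning `None` for a zero denominator is an assumption about how a failed estimate might be flagged.

```python
def rate_above(scores, threshold):
    """Share of scores strictly above the threshold."""
    return sum(s > threshold for s in scores) / len(scores)

def acc_estimate(train_pos_scores, train_neg_scores, test_scores, threshold):
    """Adjusted classify and count for a threshold classifier on a score."""
    tpr = rate_above(train_pos_scores, threshold)   # true positive rate
    fpr = rate_above(train_neg_scores, threshold)   # false positive rate
    if tpr == fpr:
        return None   # denominator vanishes: estimate fails
    predicted = rate_above(test_scores, threshold)  # predicted positive share
    raw = (predicted - fpr) / (tpr - fpr)
    return min(1.0, max(0.0, raw))                  # clip to [0, 1]

pos = [0.9, 0.8, 0.7, 0.2]   # TPR = 0.75 at threshold 0.5
neg = [0.1, 0.2, 0.3, 0.6]   # FPR = 0.25 at threshold 0.5
test = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1, 0.1, 0.0]   # 40% predicted positive
estimate = acc_estimate(pos, neg, test, 0.5)   # (0.40 - 0.25) / 0.50
```

The smaller the difference between TPR and FPR, the more the sampling noise in the numerator is amplified, which is one way to see why ACCp chooses the threshold that maximises this difference.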

Appendix A.2. Prevalence Estimation by Distance Minimisation

- for all probability measures , to which d is applicable.

- if and only if .

Appendix A.2.1. Prevalence Estimation by Minimising the Hellinger Distance

Appendix A.2.2. Prevalence Estimation by Minimising the Energy Distance

Appendix A.2.3. Prevalence Estimation by Minimising the Kullback–Leibler Distance

- if ; and

- if .

- Method MLboot: Bootstrapping the samples , …, , , …, , and , …, from Section 2.3 and creating a sample of by solving Equation (A25a) for each of the bootstrapping samples.

- Method MLinf: Asymptotic approximation by making use of the central limit theorem for maximum likelihood estimators (see, e.g., Theorem 10.1.12 of [27]). According to this limit theorem, converges for toward a normal distribution with mean 0 and variance v given by Equation (A26a), where q is the true positive class prevalence of the population underlying the test sample. The right-hand side of Equation (A26a) is approximated with an estimate that is based on a sample average, where denotes the solution (unique, if any) of Equation (A25a) in the unit interval and was generated under the test population distribution . In the binormal setting of Section 2.1, the density ratio is given by Equation (3b) if the training sample is infinite and can be derived from the posterior class probabilities obtained by logistic regression if the training sample is finite.
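To make the MLinf idea concrete, the sketch below works with the values of the density ratio (positive-class density over negative-class density) on the test sample. It assumes the standard likelihood of a two-component mixture with known components, so that the variance v is the inverse Fisher information; whether this matches Equations (A25a) and (A26a) term by term is an assumption, and the function names and the clipping of the interval to the unit interval are hypothetical choices.

```python
import math
from statistics import NormalDist

def ml_prevalence(ratios, tol=1e-12):
    """Maximise sum(log(1 + q * (r - 1))) over q in [0, 1] by bisection.

    ratios: strictly positive density ratio values on the test sample.
    The score function below is non-increasing in q, so bisection applies.
    """
    def score(q):
        return sum((r - 1) / (1 + q * (r - 1)) for r in ratios)
    if score(0.0) <= 0:
        return 0.0   # likelihood is maximal at the lower boundary
    if score(1.0) >= 0:
        return 1.0   # likelihood is maximal at the upper boundary
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if score(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def asymptotic_interval(ratios, level):
    """Normal-approximation interval based on the estimated Fisher information."""
    n = len(ratios)
    q_hat = ml_prevalence(ratios)
    # Sample-average estimate of the Fisher information per observation.
    info = sum(((r - 1) / (1 + q_hat * (r - 1))) ** 2 for r in ratios) / n
    if info == 0:
        return q_hat, None   # ratio identically 1: prevalence not identifiable
    z = NormalDist().inv_cdf(0.5 + level / 2)
    half = z * math.sqrt(1 / (info * n))
    return q_hat, (max(0.0, q_hat - half), min(1.0, q_hat + half))

q_hat, interval = asymptotic_interval([0.5, 2.0], 0.9)
```

When the estimate sits at or near the boundary of the unit interval, the normal approximation is unreliable, which is consistent with the instability of MLinf reported for small test samples and small prevalences.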

Appendix B. Analysis of Error Adjusted Bootstrapping

- Apply the classifier g to the bootstrapped features of an instance in the test sample.

- If a positive label is predicted by g, simulate a Bernoulli variable with success probability . If a negative label is predicted by g, simulate a Bernoulli variable with success probability .

- In both cases, if the outcome of the Bernoulli variable is success, count the result as positive class, otherwise as negative class.
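The three steps above can be sketched as follows. The success probabilities are not recoverable from the text here, so the sketch assumes they are the positive predictive value (for predicted positives) and the false omission rate, i.e., one minus the negative predictive value (for predicted negatives), both estimated on the training sample; the function and parameter names are hypothetical.

```python
import random

def error_adjusted_bootstrap(test_predictions, ppv, false_omission_rate, R, rng):
    """Bootstrap sample of positive class frequencies with label re-drawing.

    test_predictions: predicted labels (0 or 1) of the test instances.
    ppv, false_omission_rate: assumed success probabilities for predicted
        positives and predicted negatives, respectively.
    """
    n = len(test_predictions)
    freqs = []
    for _ in range(R):
        resampled = rng.choices(test_predictions, k=n)   # bootstrap the test sample
        positives = 0
        for pred in resampled:
            prob = ppv if pred == 1 else false_omission_rate
            positives += rng.random() < prob             # Bernoulli draw
        freqs.append(positives / n)
    return freqs

rng = random.Random(0)
freqs = error_adjusted_bootstrap([1, 0, 0, 1, 0, 0, 0, 0, 1, 0], 0.8, 0.1, 200, rng)
```

Under this reading, both success probabilities depend on the class prevalence of the sample on which they were estimated, so carrying them over unchanged to a test population with a different prevalence is where the procedure may break down under prior probability shift.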

References

1. Barranquero, J.; González, P.; Díez, J.; del Coz, J. On the study of nearest neighbor algorithms for prevalence estimation in binary problems. Pattern Recognit. 2013, 46, 472–482.

2. Forman, G. Quantifying counts and costs via classification. Data Min. Knowl. Discov. 2008, 17, 164–206.

3. González-Castro, V.; Alaiz-Rodríguez, R.; Alegre, E. Class distribution estimation based on the Hellinger distance. Inf. Sci. 2013, 218, 146–164.

4. Du Plessis, M.; Niu, G.; Sugiyama, M. Class-prior estimation for learning from positive and unlabeled data. Mach. Learn. 2017, 106, 463–492.

5. González, P.; Castaño, A.; Chawla, N.; del Coz, J. A Review on Quantification Learning. ACM Comput. Surv. 2017, 50, 74:1–74:40.

6. Castaño, A.; Morán-Fernández, L.; Alonso, J.; Bolón-Canedo, V.; Alonso-Betanzos, A.; del Coz, J. Análisis de algoritmos de cuantificación basados en ajuste de distribuciones. In IX Simposio de Teoría y Aplicaciones de la Minería de Datos (TAMIDA 2018); Riquelme, J., Troncoso, A., García, S., Eds.; Asociación Española para la Inteligencia Artificial: Granada, Spain, 2018; pp. 913–918.

7. Keith, K.; O’Connor, B. Uncertainty-aware generative models for inferring document class prevalence. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; pp. 4575–4585.

8. Maletzke, A.; Dos Reis, D.; Cherman, E.; Batista, G. DyS: A Framework for Mixture Models in Quantification. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence (AAAI-19), Honolulu, HI, USA, 27 January–1 February 2019.

9. Vaz, A.; Izbicki, R.; Stern, R. Quantification Under Prior Probability Shift: The Ratio Estimator and its Extensions. J. Mach. Learn. Res. 2019, 20, 1–33.

10. Hopkins, D.; King, G. A Method of Automated Nonparametric Content Analysis for Social Science. Am. J. Polit. Sci. 2010, 54, 229–247.

11. Daughton, A.; Paul, M. Constructing Accurate Confidence Intervals when Aggregating Social Media Data for Public Health Monitoring. In Proceedings of the AAAI International Workshop on Health Intelligence (W3PHIAI), Honolulu, HI, USA, 27 January–1 February 2019.

12. Meeker, W.; Hahn, G.; Escobar, L. Statistical Intervals: A Guide for Practitioners and Researchers, 2nd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2017.

13. Barranquero, J.; Díez, J.; del Coz, J. Quantification-oriented learning based on reliable classifiers. Pattern Recognit. 2015, 48, 591–604.

14. Zhang, K.; Schölkopf, B.; Muandet, K.; Wang, Z. Domain Adaptation Under Target and Conditional Shift. In Proceedings of the 30th International Conference on Machine Learning, JMLR.org, ICML’13, Atlanta, GA, USA, 16–21 June 2013; Volume 28, pp. III-819–III-827.

15. Hofer, V.; Krempl, G. Drift mining in data: A framework for addressing drift in classification. Comput. Stat. Data Anal. 2013, 57, 377–391.

16. Lipton, Z.; Wang, Y.X.; Smola, A. Detecting and Correcting for Label Shift with Black Box Predictors. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; Dy, J., Krause, A., Eds.; Cornell University: Ithaca, NY, USA, 2018; Volume 80, pp. 3122–3130.

17. Moreno-Torres, J.; Raeder, T.; Alaiz-Rodriguez, R.; Chawla, N.; Herrera, F. A unifying view on dataset shift in classification. Pattern Recognit. 2012, 45, 521–530.

18. Saerens, M.; Latinne, P.; Decaestecker, C. Adjusting the Outputs of a Classifier to New a Priori Probabilities: A Simple Procedure. Neural Comput. 2001, 14, 21–41.

19. Bella, A.; Ferri, C.; Hernandez-Orallo, J.; Ramírez-Quintana, M. Quantification via probability estimators. In Proceedings of the 2010 IEEE International Conference on Data Mining (ICDM), Sydney, NSW, Australia, 3–17 December 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 737–742.

20. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2014.

21. Kawakubo, H.; du Plessis, M.; Sugiyama, M. Computationally Efficient Class-Prior Estimation under Class Balance Change Using Energy Distance. IEICE Trans. Inf. Syst. 2016, 99, 176–186.

22. Cramer, J. Logit Models From Economics and Other Fields; Cambridge University Press: Cambridge, UK, 2003.

23. Davison, A.; Hinkley, D. Bootstrap Methods and their Application; Cambridge University Press: Cambridge, UK, 1997.

24. Tasche, D. Fisher Consistency for Prior Probability Shift. J. Mach. Learn. Res. 2017, 18, 3338–3369.

25. Gart, J.; Buck, A. Comparison of a screening test and a reference test in epidemiologic studies. II. A probabilistic model for the comparison of diagnostic tests. Am. J. Epidemiol. 1966, 83, 593–602.

26. Peters, C.; Coberly, W. The numerical evaluation of the maximum-likelihood estimate of mixture proportions. Commun. Stat. Theory Methods 1976, 5, 1127–1135.

27. Casella, G.; Berger, R. Statistical Inference, 2nd ed.; Thomson Learning: Pacific Grove, CA, USA, 2002.

28. Kull, M.; Silva Filho, T.; Flach, P. Beyond sigmoids: How to obtain well-calibrated probabilities from binary classifiers with beta calibration. Electron. J. Stat. 2017, 11, 5052–5080.

29. Hofer, V. Adapting a classification rule to local and global shift when only unlabelled data are available. Eur. J. Oper. Res. 2015, 243, 177–189.

30. Frühwirth-Schnatter, S. Finite Mixture and Markov Switching Models: Modeling and Applications to Random Processes; Springer: Berlin, Germany, 2006.

31. Du Plessis, M.; Sugiyama, M. Semi-supervised learning of class balance under class-prior change by distribution matching. Neural Netw. 2014, 50, 110–119.

32. Redner, R.; Walker, H. Mixture densities, maximum likelihood and the EM algorithm. SIAM Rev. 1984, 26, 195–239.

33. Tasche, D. The Law of Total Odds. arXiv 2013, arXiv:1312.0365.

| Row Name | Explanation |
|---|---|
| “Av prev” | Average of the prevalence estimates (for confidence intervals) |
| “Av freq” | Average of the relative frequencies of simulated positive class labels (for prediction intervals) |
| “Av abs dev” | Average of the absolute deviation of the prevalence estimates or the simulated relative frequencies from the true prevalence |
| “Perc fail est” | Percentage of simulation runs with failed prevalence estimates |
| “Av int length” | Average of the confidence or prediction interval lengths |
| “Coverage” | Percentage of confidence intervals containing the true prevalence or of prediction intervals containing the true realised relative frequencies of positive labels |
| “Perc 0 or 1” | Percentage of prevalence estimates or simulated frequencies with value 0 or 1 |
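The row definitions above can be made concrete with a short sketch. The following Python function is only an illustration for the confidence interval case, not the code used in the study: the function name `summarise_runs`, the encoding of a failed estimate as NaN, and the choice to exclude failed runs from all averages except “Perc fail est” are assumptions made here. For prediction intervals, the coverage target would be the realised relative frequency of each run rather than the true prevalence.

```python
import numpy as np

def summarise_runs(estimates, lower, upper, true_prev):
    """Summarise simulation runs for confidence intervals (illustrative sketch).

    All inputs are fractions in [0, 1]; results are reported in percent.
    Assumption: a failed prevalence estimate is encoded as NaN.
    """
    estimates = np.asarray(estimates, dtype=float)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)

    ok = ~np.isnan(estimates)  # runs that produced a usable estimate
    est, lo, hi = estimates[ok], lower[ok], upper[ok]

    covered = (lo <= true_prev) & (true_prev <= hi)
    at_boundary = (est == 0.0) | (est == 1.0)

    return {
        "Av prev": 100 * est.mean(),
        "Av abs dev": 100 * np.abs(est - true_prev).mean(),
        "Perc fail est": 100 * (~ok).mean(),
        # Assumption: the remaining rows are averaged over successful runs only.
        "Av int length": 100 * (hi - lo).mean(),
        "Coverage": 100 * covered.mean(),
        "Perc 0 or 1": 100 * at_boundary.mean(),
    }

# Toy input: four runs, the last of which failed.
stats = summarise_runs(
    estimates=[0.0, 0.25, 0.5, np.nan],
    lower=[0.0, 0.125, 0.25, np.nan],
    upper=[0.25, 0.5, 0.75, np.nan],
    true_prev=0.25,
)
for name, value in stats.items():
    print(f"{name}: {value:.2f}")
```

Whether failed runs should count in the denominators of “Coverage” and “Perc 0 or 1” is a design choice; the sketch excludes them, which may differ from the convention behind the tables below.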

| Prediction Intervals | ACC50 | ACCp | ACCv | MS | APCC | APCCv | H4 | H8 | Energy | MLboot |
|---|---|---|---|---|---|---|---|---|---|---|
| Av freq | 19.26 | 20.70 | 16.02 | 20.72 | 20.42 | 18.74 | 18.92 | 19.72 | 19.76 | 19.38 |
| Av abs dev | 7.50 | 8.82 | 9.02 | 7.60 | 7.58 | 7.58 | 7.40 | 7.24 | 7.28 | 7.50 |
| Perc fail est | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Av int length | 32.20 | 33.30 | 29.98 | 30.36 | 30.04 | 29.68 | 30.32 | 29.86 | 30.00 | 29.88 |
| Coverage | 100.0 | 99.0 | 94.0 | 100.0 | 99.0 | 98.0 | 99.0 | 99.0 | 99.0 | 98.0 |
| Perc 0 or 1 | 1.0 | 2.0 | 6.0 | 1.0 | 2.0 | 2.0 | 4.0 | 1.0 | 3.0 | 1.0 |

| Confidence Intervals | ACC50 | ACCp | ACCv | MS | APCC | APCCv | H4 | H8 | Energy | MLboot |
|---|---|---|---|---|---|---|---|---|---|---|
| Av prev | 20.34 | 21.05 | 17.01 | 20.50 | 20.54 | 20.34 | 20.63 | 20.31 | 20.55 | 20.65 |
| Av abs dev | 6.68 | 7.23 | 7.61 | 6.22 | 6.12 | 6.13 | 6.06 | 5.88 | 6.18 | 6.00 |
| Perc fail est | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Av int length | 28.27 | 28.98 | 26.13 | 25.67 | 25.15 | 24.55 | 25.57 | 25.10 | 25.10 | 24.83 |
| Coverage | 97.0 | 97.0 | 89.0 | 98.0 | 97.0 | 96.0 | 98.0 | 95.0 | 97.0 | 98.0 |
| Perc 0 or 1 | 0.0 | 2.0 | 1.0 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 1.0 |

| Prediction Intervals | ACC50 | ACCp | ACCv | MS | APCC | APCCv | H4 | H8 | Energy | MLboot |
|---|---|---|---|---|---|---|---|---|---|---|
| Av freq | 18.96 | 22.30 | 23.72 | 21.33 | 17.08 | 18.20 | 22.80 | 28.76 | 19.12 | 17.48 |
| Av abs dev | 19.00 | 16.98 | 17.32 | 15.56 | 15.16 | 14.32 | 15.12 | 17.20 | 15.04 | 14.48 |
| Perc fail est | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Av int length | 71.69 | 58.92 | 72.82 | 54.15 | 47.88 | 49.96 | 56.24 | 59.32 | 49.44 | 47.38 |
| Coverage | 95.0 | 94.0 | 99.0 | 95.0 | 92.0 | 94.0 | 92.0 | 87.0 | 93.0 | 91.0 |
| Perc 0 or 1 | 43.0 | 26.0 | 19.0 | 17.2 | 32.0 | 24.0 | 20.0 | 9.0 | 24.0 | 24.0 |

| Confidence Intervals | ACC50 | ACCp | ACCv | MS | APCC | APCCv | H4 | H8 | Energy | MLboot |
|---|---|---|---|---|---|---|---|---|---|---|
| Av prev | 21.36 | 22.90 | 21.16 | 23.61 | 19.25 | 20.93 | 23.65 | 28.81 | 21.26 | 19.59 |
| Av abs dev | 19.28 | 16.45 | 15.21 | 14.60 | 14.63 | 13.91 | 15.64 | 15.53 | 14.56 | 13.37 |
| Perc fail est | 0.0 | 0.0 | 0.0 | 4.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Av int length | 66.65 | 57.89 | 69.97 | 51.98 | 47.20 | 49.07 | 54.25 | 54.26 | 48.45 | 47.08 |
| Coverage | 97.0 | 95.0 | 98.0 | 96.0 | 90.0 | 95.0 | 90.0 | 77.0 | 94.0 | 96.0 |
| Perc 0 or 1 | 36.0 | 24.0 | 22.0 | 15.6 | 27.0 | 22.0 | 19.0 | 7.0 | 22.0 | 16.0 |

| Prediction Intervals | ACC50 | ACCp | ACCv | MS | APCC | APCCv | H4 | H8 | Energy | MLboot |
|---|---|---|---|---|---|---|---|---|---|---|
| Av freq | 13.90 | 10.78 | 12.48 | 12.02 | 10.50 | 10.96 | 13.74 | 19.86 | 11.80 | 8.48 |
| Av abs dev | 13.06 | 10.34 | 11.84 | 10.94 | 10.36 | 10.44 | 12.88 | 16.52 | 11.12 | 8.44 |
| Perc fail est | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Av int length | 62.61 | 44.90 | 59.71 | 44.94 | 39.12 | 41.48 | 46.78 | 52.30 | 41.30 | 35.30 |
| Coverage | 98.0 | 97.0 | 97.0 | 94.0 | 93.0 | 92.0 | 90.0 | 75.0 | 92.0 | 93.0 |
| Perc 0 or 1 | 41.0 | 42.0 | 42.0 | 36.4 | 47.0 | 42.0 | 39.0 | 13.0 | 38.0 | 47.0 |

| Confidence Intervals | ACC50 | ACCp | ACCv | MS | APCC | APCCv | H4 | H8 | Energy | MLboot |
|---|---|---|---|---|---|---|---|---|---|---|
| Av prev | 13.43 | 11.88 | 11.65 | 12.15 | 9.52 | 10.40 | 12.77 | 18.30 | 10.70 | 8.33 |
| Av abs dev | 13.79 | 11.11 | 11.03 | 10.68 | 9.56 | 9.71 | 11.44 | 15.43 | 10.01 | 8.34 |
| Perc fail est | 0.0 | 0.0 | 0.0 | 4.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Av int length | 58.34 | 45.14 | 55.64 | 41.20 | 34.38 | 37.22 | 43.41 | 48.10 | 37.30 | 32.95 |
| Coverage | 98.0 | 93.0 | 96.0 | 93.0 | 89.0 | 91.0 | 83.0 | 62.0 | 94.0 | 92.0 |
| Perc 0 or 1 | 52.0 | 40.0 | 42.0 | 31.2 | 42.0 | 36.0 | 34.0 | 17.0 | 37.0 | 44.0 |

| | ACC50 | predACC50 | ACCp | predACCp | DnPACC50 | DnPACCp |
|---|---|---|---|---|---|---|
| Av prev or freq | 19.97 | 20.22 | 19.49 | 19.78 | 24.32 | 25.40 |
| Av abs dev | 6.19 | 7.70 | 6.56 | 8.54 | 5.68 | 6.80 |
| Perc fail est | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Av int length | 27.91 | 32.84 | 29.28 | 33.90 | 21.68 | 21.78 |
| Coverage | 100.0 | 100.0 | 99.0 | 100.0 | 95.0 | 93.0 |
| Perc 0 or 1 | 0.0 | 0.0 | 1.0 | 2.0 | 0.0 | 0.0 |

| | ACC50 | predACC50 | ACCp | predACCp | DnPACC50 | DnPACCp |
|---|---|---|---|---|---|---|
| Av prev | 19.69 | 19.38 | 19.58 | 20.28 | 38.06 | 39.26 |
| Av abs dev | 9.91 | 9.82 | 9.03 | 10.32 | 18.18 | 19.26 |
| Perc fail est | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Av int length | 32.41 | 36.34 | 30.49 | 34.72 | 26.28 | 26.32 |
| Coverage | 98.0 | 99.0 | 95.0 | 98.0 | 22.0 | 14.0 |
| Perc 0 or 1 | 12.0 | 12.0 | 3.0 | 5.0 | 0.0 | 0.0 |

| | ACC50 | ACCp | ACCv | MS | APCC | APCCv | H4 | H8 | Energy | MLinf |
|---|---|---|---|---|---|---|---|---|---|---|
| Av prev | 20.28 | 20.28 | 19.68 | 20.34 | 20.35 | 20.27 | 20.27 | 20.33 | 20.34 | 20.35 |
| Av abs dev | 1.97 | 1.97 | 1.90 | 1.79 | 1.79 | 1.72 | 1.81 | 1.76 | 1.80 | 1.72 |
| Perc fail est | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Av int length | 8.47 | 8.47 | 8.04 | 7.74 | 7.71 | 7.50 | 7.54 | 7.42 | 7.72 | 7.35 |
| Coverage | 91.0 | 91.0 | 93.0 | 90.0 | 89.0 | 92.0 | 89.0 | 89.0 | 89.0 | 91.0 |
| Perc 0 or 1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| | ACC50 | ACCp | ACCv | MS | APCC | APCCv | H4 | H8 | Energy | MLinf |
|---|---|---|---|---|---|---|---|---|---|---|
| Av prev | 20.71 | 20.71 | 18.60 | 20.74 | 20.53 | 20.00 | 20.47 | 20.47 | 20.66 | 20.41 |
| Av abs dev | 4.68 | 4.68 | 4.21 | 3.64 | 3.37 | 3.23 | 3.65 | 3.30 | 3.39 | 3.19 |
| Perc fail est | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Av int length | 19.08 | 19.08 | 18.47 | 16.70 | 15.73 | 15.15 | 16.14 | 15.50 | 15.91 | 15.05 |
| Coverage | 92.0 | 92.0 | 93.0 | 94.0 | 94.0 | 94.0 | 96.0 | 95.0 | 95.0 | 94.0 |
| Perc 0 or 1 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| | ACC50 | ACCp | ACCv | MS | APCC | APCCv | H4 | H8 | Energy | MLinf |
|---|---|---|---|---|---|---|---|---|---|---|
| Av prev | 4.96 | 5.49 | 2.75 | 4.99 | 5.14 | 4.60 | 5.07 | 4.56 | 5.15 | 5.09 |
| Av abs dev | 3.63 | 4.03 | 3.26 | 3.32 | 3.47 | 2.92 | 3.33 | 2.93 | 3.54 | 3.06 |
| Perc fail est | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Av int length | 16.75 | 17.98 | 14.44 | 12.65 | 12.73 | 10.88 | 12.95 | 12.03 | 12.84 | 16.96 |
| Coverage | 95.0 | 98.0 | 97.0 | 90.0 | 86.0 | 85.0 | 88.5 | 94.4 | 88.0 | 97.0 |
| Perc 0 or 1 | 24.0 | 24.0 | 27.0 | 16.0 | 18.0 | 11.0 | 18.0 | 10.0 | 18.0 | 13.0 |

| | ACC50 | ACCp | ACCv | MS | APCC | APCCv | H4 | H8 | Energy | MLinf |
|---|---|---|---|---|---|---|---|---|---|---|
| Av prev | 8.37 | 9.56 | 2.77 | 8.07 | 7.74 | 6.56 | 7.86 | 7.37 | 7.93 | 7.26 |
| Av abs dev | 8.31 | 9.28 | 5.70 | 7.78 | 7.64 | 7.00 | 7.73 | 7.47 | 7.68 | 7.50 |
| Perc fail est | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Av int length | 38.27 | 35.30 | 29.85 | 27.52 | 25.46 | 24.98 | 27.63 | 25.80 | 26.30 | 47.01 |
| Coverage | 96.0 | 92.0 | 90.0 | 89.0 | 86.0 | 86.0 | 89.0 | 86.3 | 89.0 | 97.0 |
| Perc 0 or 1 | 38.0 | 44.0 | 65.0 | 32.0 | 41.0 | 48.0 | 41.0 | 45.0 | 43.0 | 48.0 |

| | ACC50 | ACCp | ACCv | MS | APCC | APCCv | H4 | H8 | Energy | MLboot |
|---|---|---|---|---|---|---|---|---|---|---|
| Av prev | 5.72 | 6.91 | 3.62 | 5.67 | 5.88 | 5.72 | 5.53 | 5.65 | 5.97 | 5.59 |
| Av abs dev | 4.46 | 5.10 | 3.54 | 4.11 | 4.11 | 3.97 | 4.00 | 3.95 | 4.18 | 3.55 |
| Perc fail est | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Av int length | 16.62 | 18.68 | 11.35 | 14.96 | 14.92 | 13.90 | 14.22 | 13.44 | 15.09 | 13.95 |
| Coverage | 89.0 | 87.0 | 91.0 | 88.0 | 86.0 | 87.0 | 84.8 | 82.0 | 85.0 | 85.0 |
| Perc 0 or 1 | 24.0 | 22.0 | 24.0 | 18.0 | 17.0 | 17.0 | 18.0 | 15.0 | 17.0 | 14.0 |

| | ACC50 | ACCp | ACCv | MS | APCC | APCCv | H4 | H8 | Energy | MLboot |
|---|---|---|---|---|---|---|---|---|---|---|
| Av prev | 10.49 | 11.59 | 6.54 | 10.43 | 8.50 | 8.38 | 10.96 | 12.12 | 9.50 | 8.39 |
| Av abs dev | 10.47 | 10.94 | 6.58 | 9.55 | 8.30 | 7.88 | 10.00 | 10.43 | 8.83 | 7.72 |
| Perc fail est | 0.0 | 0.0 | 0.0 | 4.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Av int length | 52.48 | 46.04 | 40.00 | 36.00 | 32.37 | 31.70 | 39.38 | 39.11 | 32.85 | 32.96 |
| Coverage | 97.0 | 95.0 | 96.0 | 94.0 | 91.0 | 90.0 | 91.0 | 92.0 | 92.0 | 92.0 |
| Perc 0 or 1 | 43.0 | 37.0 | 43.0 | 32.3 | 41.0 | 38.0 | 36.0 | 28.0 | 40.0 | 38.0 |

| No Bootstrap | ACC50 | ACCp | ACCv | MS | MLinf |
|---|---|---|---|---|---|
| Av prev | 20.15 | 20.28 | 19.40 | 20.14 | 20.27 |
| Av abs dev | 2.25 | 2.24 | 2.18 | 2.22 | 2.02 |
| Perc fail est | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Av int length | 8.11 | 8.46 | 8.02 | 8.17 | 7.33 |
| Coverage | 92.0 | 86.0 | 88.0 | 87.0 | 92.0 |
| Perc 0 or 1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| No Bootstrap | ACC50 | ACCp | ACCv | MS | MLinf |
|---|---|---|---|---|---|
| Av prev | 19.95 | 19.48 | 19.01 | 19.76 | 20.06 |
| Av abs dev | 3.23 | 3.88 | 2.98 | 3.35 | 2.57 |
| Perc fail est | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Av int length | 8.13 | 8.47 | 7.60 | 8.16 | 7.22 |
| Coverage | 69.0 | 64.0 | 66.0 | 66.0 | 75.0 |
| Perc 0 or 1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| Bootstrap | ACC50 | ACCp | ACCv | MS | MLboot |
|---|---|---|---|---|---|
| Av prev | 19.79 | 19.94 | 18.63 | 20.18 | 20.34 |
| Av abs dev | 3.11 | 3.38 | 3.17 | 2.79 | 2.67 |
| Perc fail est | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Av int length | 15.13 | 16.96 | 14.18 | 12.94 | 12.07 |
| Coverage | 95.0 | 93.0 | 91.0 | 91.0 | 92.0 |
| Perc 0 or 1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Tasche, D. Confidence Intervals for Class Prevalences under Prior Probability Shift. Mach. Learn. Knowl. Extr. 2019, 1, 805-831. https://doi.org/10.3390/make1030047