Abstract

Point estimation of class prevalences in the presence of dataset shift has been a popular research topic for more than two decades. Less attention has been paid to the construction of confidence and prediction intervals for estimates of class prevalences. One little-considered question is whether, for practical purposes, it is necessary to distinguish confidence and prediction intervals. Another question that has not yet been conclusively answered is whether the discriminatory power of the classifier or score at the basis of an estimation method matters for the accuracy of the estimates of the class prevalences. This paper presents a simulation study aimed at shedding some light on these and other related questions.

1. Introduction

In a prevalence estimation problem, one is presented with a sample of instances (the test sample), each of which belongs to exactly one of a finite number of classes but has not been labelled with the class. The task is to estimate the distribution of the class labels in the sample. In the binary (two-class) case, all instances belong to exactly one of two possible classes and, accordingly, can be labelled either positive or negative. The distribution of the labels is then characterised by the prevalence (i.e., proportion) of the positive labels (“class prevalence” for short) in the test sample. However, the labels are latent at estimation time, so that the class prevalence cannot be determined by simple inspection of the labels. Instead, the class prevalence can only be inferred from the features of the instances in the sample, i.e., from observable covariates of the labels. The interrelationship between features and labels must be learnt in a separate step from a training sample of labelled instances before the class prevalence of the positive labels in the test sample can be estimated.

This whole process is called “supervised prevalence estimation” [1], “quantification” [2], “class distribution estimation” [3] or “class prior estimation” [4] in the literature. See the work of González et al. [5] for a recent overview of the quantification problem and approaches to deal with it. The emergence of further recent papers with new proposals of prevalence estimation methods suggests that the subject is still of high interest for both researchers and practitioners [6,7,8,9].

A variety of different methods for prevalence point estimation has been proposed and a considerable number of comparative studies for such methods has been published in the literature [5]. However, the question of how to construct confidence and prediction intervals for class prevalences seems to have attracted less attention. Hopkins and King [10] routinely provided confidence intervals for their estimates “via standard bootstrapping procedures”, without commenting much on details of the procedures or on any issues encountered with them. Keith and O’Connor [7] proposed and compared a number of methods for constructing such confidence intervals. Some of these methods involve Monte-Carlo simulation and some do not. In addition, Daughton and Paul [11] proposed a new method for constructing bootstrap confidence intervals and compared its results with the confidence intervals based on popular prevalence estimation methods. Vaz et al. [9] introduced the “ratio estimator” for class prevalences and used its asymptotic properties for determining confidence intervals without involving Monte-Carlo techniques.

This paper presents a simulation study that seeks to illustrate some observations from these previous papers on confidence intervals for class prevalences in the binary case and to provide answers to some questions raised by these papers:

- Would it be worthwhile to distinguish confidence and prediction intervals for class prevalences and deploy different methods for their estimation? This question is raised against the backdrop that, for instance, Keith and O’Connor [7] talked about estimating confidence intervals but in fact constructed prediction intervals, which are conceptually different [12].

- Would it be worthwhile to base class prevalence estimation on more accurate classifiers? This question is motivated by conflicting statements in the literature as to the benefit of using accurate classifiers for prevalence estimation. On the one hand, Forman [2] stated on p. 168: “A major benefit of sophisticated methods for quantification is that a much less accurate classifier can be used to obtain reasonably precise quantification estimates. This enables some applications of machine learning to be deployed where otherwise the raw classification accuracy would be unacceptable or the training effort too great.” As an example of the opposite position, on the other hand, Barranquero et al. [13] commented on p. 595 with respect to prevalence estimation: “We strongly believe that it is also important for the learner to consider the classification performance as well. Our claim is that this aspect is crucial to ensure a minimum level of confidence for the deployed models.”

- Which prevalence estimation methods perform best in terms of producing the shortest possible confidence intervals for class prevalences?

- Do non-simulation approaches to the construction of confidence intervals for class prevalences work?

In addition, this paper introduces two new methods for class prevalence estimation that are specifically designed to deliver the shortest possible confidence intervals.

Deploying a simulation study for finding answers to the above questions has some advantages compared to working with real-world data:

- The true class prevalences are known and can even be chosen with a view to obtaining clear answers.

- The setting of the study can be freely modified (e.g., with regard to sample sizes or accuracy of the involved classifiers) to investigate the topics in question more precisely.

- In a simulation study, it is easy to apply an ablation approach to assess the relative impact of factors that influence the performance of methods for estimating confidence intervals.

- The results can be easily replicated.

- Simulation studies are good for delivering counter-examples. A method performing poorly in the study reported in this paper may be considered unlikely to perform much better in complex real-world settings.

Naturally, these advantages are bought at the cost of accepting certain obvious drawbacks:

- Most findings of the study are suggestive and illustrative only. No firm conclusions can be drawn from them.

- Important features of the problem which only occur in real-world situations might be overlooked.

- The prevalence estimation problem is primarily caused by dataset shift. For capacity reasons, the scope of the simulation study in this paper is restricted to prior probability shift, a special type of dataset shift. In the literature, prior probability shift is known under a number of different names, for instance “target shift” [14], “global drift” [15], or “label shift” [16] (see Moreno-Torres et al. [17] for a categorisation of types of dataset shift).

With these qualifications in mind, the main findings of this paper can be summarised as follows:

- Extra efforts to construct prediction intervals instead of confidence intervals for class prevalences appear to be unnecessary.

- “Error Adjusted Bootstrapping” as proposed by Daughton and Paul [11] for the construction of prevalence confidence or prediction intervals may fail in the presence of prior probability shift.

- Deploying more accurate classifiers for class prevalence estimation results in shorter confidence intervals.

- Compared to the other estimation methods considered in this paper, straightforward “adjusted classify and count” methods for prevalence estimation [2] (called “confusion matrix method” in [18]) without any further tuning produce the longest confidence intervals and, hence, given identical coverage, perform worst. Methods based on minimisation of the Hellinger distance [3] (with different numbers of bins) produce much shorter confidence intervals, but sometimes do not guarantee sufficient coverage. The maximum likelihood approach (with bootstrapping for the confidence intervals) and “adjusted probabilistic classify and count” ([19], called there “scaled probability average”) appear to stably produce the shortest confidence intervals among the methods considered in the paper.

In this paper, the term “discriminatory power” is also used instead of “accuracy”; similarly, the adjective “powerful” is used instead of “accurate”.

The paper is organised as follows:

- Section 2 describes the conception and technical details of the simulation study, including in Section 2.2 a list of the prevalence estimation methods in scope.

- Section 3 provides some tables with results of the study and comments on the results, in order to explore the questions stated above. The results in Section 3.3 show that certain standard non-simulation approaches cannot take into account estimation uncertainty in the training sample and that bootstrap-based construction of confidence intervals could be used instead.

- Section 4 wraps up and closes the paper.

- In Appendix A, the mathematical details needed for coding the simulation study are listed.

- In Appendix B, the appropriateness for prior probability shift of the approach proposed by Daughton and Paul [11] is investigated.

The calculations of the simulation study were performed by making use of the statistical software R [20]. The R-scripts utilised can be downloaded from the URL https://www.researchgate.net/profile/Dirk_Tasche under the heading “Rscripts and data for paper Confidence Intervals for Class Prevalences under Prior Probability Shift”.

2. Setting of the Simulation Study

The set-up of the simulation study is intended to reflect the situation that occurs when a prevalence estimation problem as described in Section 1 has to be solved:

- There is a training sample of observations $(x, y)$ of features $x$ and class labels $y$ for $m$ instances. Instances with label $y = 0$ belong to the negative class, instances with label $y = 1$ belong to the positive class. By assumption, this sample was generated from a joint distribution $P$ (the training population distribution) of the feature random variable $X$ and the label (or class) random variable $Y$.

- There is a test sample of observations $x$ of features for $n$ instances. By assumption, each instance has a latent class label $y \in \{0, 1\}$, and both the features and the labels were generated from a joint distribution $Q$ (the test population distribution) of the feature random variable $X$ and the label random variable $Y$.

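The prevalence estimation or quantification problem is then to estimate the prevalence $Q[Y = 1]$ of the positive class labels in the test population. Of course, this is only a problem if there is dataset shift, i.e., if the training population distribution $P$ and the test population distribution $Q$ differ ($P \neq Q$) and, as a likely consequence, $P[Y = 1] \neq Q[Y = 1]$.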

This paper deals only with the situation where the training population distribution $P$ and the test population distribution $Q$ are related by prior probability shift, which means in mathematical terms that

$$Q[X \in A \mid Y = i] = P[X \in A \mid Y = i], \quad i = 0, 1, \tag{1}$$

for all subsets $A$ of the feature space such that $P[X \in A \mid Y = i]$ and $Q[X \in A \mid Y = i]$ are well-defined.

2.1. The Model for the Simulation Study

The classical binormal model with equal variances fits well into the prior probability shift setting for prevalence estimation of this paper. Kawakubo et al. [21] used it as part of their experiments for comparing the performance of prevalence estimation methods. Logistic regression is a natural and optimal approach to the estimation of the binormal model with equal variances ([22] Section 6.1). Hence, when logistic regression is used for the estimation of the model in the simulation study, there is no need to worry about the results being invalidated by the deployment of a sub-optimal regression or classification technique. The binormal model is specified by defining the two class-conditional feature distributions $P[X \in \cdot \mid Y = 0]$ and $P[X \in \cdot \mid Y = 1]$.

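Training population distribution. Both class-conditional feature distributions are normal, with equal variances, i.e.,

$$P[X \in \cdot \mid Y = 0] = \mathcal{N}(\nu, \sigma^2), \qquad P[X \in \cdot \mid Y = 1] = \mathcal{N}(\mu, \sigma^2), \tag{2a}$$

with $\nu < \mu$ and $\sigma > 0$.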

Test population distribution. This is the same as the training population distribution, with $p = P[Y = 1]$ replaced by $q = Q[Y = 1]$, in order to satisfy the assumption in Equation (1) on prior probability shift between training and test times.

For the sake of brevity, in the following, the setting with Equation (2a) for both the training and the test samples is referred to as the “double” binormal setting.

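Given the class-conditional population distributions as specified in Equation (2a), the unconditional training and test population distributions can be represented as

$$P[X \in \cdot] = p\,\mathcal{N}(\mu, \sigma^2) + (1 - p)\,\mathcal{N}(\nu, \sigma^2), \qquad Q[X \in \cdot] = q\,\mathcal{N}(\mu, \sigma^2) + (1 - q)\,\mathcal{N}(\nu, \sigma^2),$$

with $p = P[Y = 1]$ and $q = Q[Y = 1]$ as parameters whose values in the course of the simulation study are selected depending on the purposes of the specific numerical experiments.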

Control parameters. For this paper’s numerical experiments, the values for the parameterisation of the model are selected from the ranges specified in the following list:

- $p$ is the prevalence of the positive class in the training population.

- $m$ is the size of the training sample. In the case $m = \infty$, the training sample is considered identical with the training population and learning of the model is unnecessary. In the case of a finite training sample, the number of instances with positive labels is non-random in order to reflect the fact that, for model development purposes, a pre-defined stratification of the training sample might be desirable; such a stratification can be achieved by under-sampling of the majority class or by over-sampling of the minority class. $m_1$ then is the size of the training sub-sample with positive labels, and $m_0$ is the size of the training sub-sample with negative labels. Hence, $m = m_0 + m_1$ and $m_1 = p\,m$ for finite $m$.

- $q$ is the prevalence of the positive class in the test population.

- $n$ is the size of the test sample. In the test sample, the number of instances with positive labels is random.

- The population distribution underlying the features of the negative-class training sub-sample is always $\mathcal{N}(\nu, \sigma^2)$, with $\nu$ and $\sigma$ fixed throughout the study. The population distribution underlying the features of the positive-class training sub-sample is $\mathcal{N}(\mu, \sigma^2)$, where $\mu > \nu$ is varied to control the discriminatory power of the feature.

- The population distributions underlying the features of the negative-class and positive-class test sub-samples are the same as for the training sub-samples, i.e., $\mathcal{N}(\nu, \sigma^2)$ and $\mathcal{N}(\mu, \sigma^2)$, respectively.

- The number of simulation runs is the same in all experiments; in each run, a training sample and a test sample as specified above are generated and subjected to the estimation procedures.

- The number $R$ of bootstrap iterations, where needed in any of the interval estimation procedures, is the same in all procedures [23].

- All confidence and prediction intervals are constructed at the 90% confidence level.
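The data-generating part of one simulation run can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the R scripts used for the study: the function name `simulate_samples`, the rounding rule `m1 = round(p * m)` and the illustrative values m = 200 and mu = 2.0 are hypothetical, while p = 0.33, q = 0.20 and n = 50 mirror values quoted in Section 3.

```python
import random


def simulate_samples(p, q, m, n, mu, nu=0.0, sigma=1.0, seed=None):
    """One simulation run in the 'double' binormal setting (hypothetical sketch).

    Training sample: a non-random number m1 = round(p * m) of positive features
    from N(mu, sigma^2) and m0 = m - m1 negative features from N(nu, sigma^2).
    Test sample: the number n1 of positives is Binomial(n, q); the features are
    pooled and shuffled because the test labels are latent.
    """
    rng = random.Random(seed)
    m1 = round(p * m)  # assumed rounding rule
    m0 = m - m1
    train_pos = [rng.gauss(mu, sigma) for _ in range(m1)]
    train_neg = [rng.gauss(nu, sigma) for _ in range(m0)]
    # Binomial(n, q) drawn as a sum of n Bernoulli(q) trials (works on any Python 3)
    n1 = sum(rng.random() < q for _ in range(n))
    test = [rng.gauss(mu, sigma) for _ in range(n1)]
    test += [rng.gauss(nu, sigma) for _ in range(n - n1)]
    rng.shuffle(test)  # only the pooled, unlabelled features are kept
    return train_pos, train_neg, test, n1


# Illustrative values only (m and mu are assumptions, not the paper's settings)
train_pos, train_neg, test, n1 = simulate_samples(0.33, 0.20, 200, 50, mu=2.0, seed=42)
print(len(train_pos), len(train_neg), len(test), n1)
```

Keeping the number of training positives fixed while letting the number of test positives vary reflects the distinction drawn above between a stratified training sample and a test sample with random class composition.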

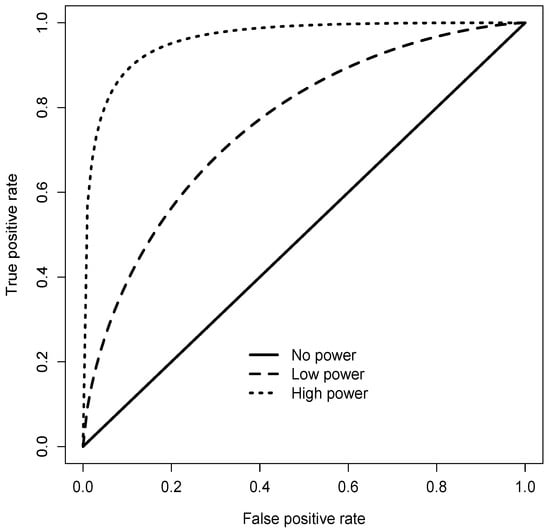

Choosing a value of $\mu$ close to $\nu$ in one of the following simulation experiments reflects a situation where no accurate classifier can be found. This is suggested by the fact that the AUC (area under the curve) of the feature $X$ taken as a soft classifier is $\Phi\big((\mu - \nu)/(\sqrt{2}\,\sigma)\big)$ (where $\Phi$ denotes the standard normal distribution function), which is then close to 0.5. For a large difference $\mu - \nu$, the same soft classifier $X$ is very accurate, with an AUC close to 1. The different performance of the classifier depending on the value of the parameter $\mu$ is also demonstrated in Figure 1 by the ROCs (receiver operating characteristics) corresponding to the two values of $\mu$ used in the study (“low power” and “high power”).

Figure 1.

Receiver operating characteristics (ROCs) for the high power and low power simulation scenarios.
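The closed-form AUC follows from the fact that, for independent positive and negative features $X_1 \sim \mathcal{N}(\mu, \sigma^2)$ and $X_0 \sim \mathcal{N}(\nu, \sigma^2)$, the difference $X_1 - X_0$ is $\mathcal{N}(\mu - \nu, 2\sigma^2)$, so the AUC equals $P[X_1 > X_0]$. The sketch below compares the formula with a Monte Carlo estimate; the function names and the values of mu are illustrative assumptions, not the paper's settings.

```python
import random
from statistics import NormalDist


def binormal_auc(mu, nu=0.0, sigma=1.0):
    """Closed-form AUC of X as a soft classifier, X|Y=1 ~ N(mu, s^2), X|Y=0 ~ N(nu, s^2)."""
    # X1 - X0 ~ N(mu - nu, 2 sigma^2), hence AUC = P[X1 > X0] = Phi((mu - nu) / (sqrt(2) sigma))
    return NormalDist().cdf((mu - nu) / (2 ** 0.5 * sigma))


def empirical_auc(mu, nu=0.0, sigma=1.0, size=20_000, seed=1):
    """Monte Carlo check: fraction of random (positive, negative) pairs ranked correctly."""
    rng = random.Random(seed)
    wins = sum(rng.gauss(mu, sigma) > rng.gauss(nu, sigma) for _ in range(size))
    return wins / size


for mu in (0.5, 2.0):  # illustrative values only
    print(mu, round(binormal_auc(mu), 4), round(empirical_auc(mu), 4))
```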

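For the sake of completeness, it is also noted that the feature-conditional class probability under the training population distribution is given by

$$P[Y = 1 \mid X = x] = \frac{1}{1 + \exp(-a\,x - b)},$$

with $a = \dfrac{\mu - \nu}{\sigma^2}$ and $b = \log\dfrac{p}{1 - p} + \dfrac{\nu^2 - \mu^2}{2\,\sigma^2}$.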
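The logistic form of the training posterior can be checked numerically against Bayes' formula applied to the two normal densities. In this sketch the function names and test points are hypothetical; the parameterisation a = (mu - nu) / sigma^2 and b = log(p / (1 - p)) + (nu^2 - mu^2) / (2 sigma^2) is derived from the binormal model with equal variances.

```python
import math
from statistics import NormalDist


def posterior_logistic(x, p, mu, nu=0.0, sigma=1.0):
    """P[Y = 1 | X = x] via the logistic representation with slope a and intercept b."""
    a = (mu - nu) / sigma**2
    b = math.log(p / (1 - p)) + (nu**2 - mu**2) / (2 * sigma**2)
    return 1.0 / (1.0 + math.exp(-(a * x + b)))


def posterior_bayes(x, p, mu, nu=0.0, sigma=1.0):
    """P[Y = 1 | X = x] directly from Bayes' formula with the class-conditional densities."""
    f1 = NormalDist(mu, sigma).pdf(x)
    f0 = NormalDist(nu, sigma).pdf(x)
    return p * f1 / (p * f1 + (1 - p) * f0)


for x in (-2.0, 0.0, 1.3, 3.0):
    print(x, posterior_logistic(x, 0.33, 2.0), posterior_bayes(x, 0.33, 2.0))
```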

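For the density ratio $R$ under both the training and test population distributions, one obtains

$$R(x) = \frac{\phi_{\mu,\sigma}(x)}{\phi_{\nu,\sigma}(x)} = \exp\left(\frac{(\mu - \nu)\,x}{\sigma^2} + \frac{\nu^2 - \mu^2}{2\,\sigma^2}\right),$$

where $\phi_{\mu,\sigma}$ denotes the density function of the one-dimensional normal distribution with mean $\mu$ and standard deviation $\sigma$.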
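Because prior probability shift leaves the class-conditional densities unchanged, the same density ratio serves the training and the test population, and only the prior in Bayes' formula differs. The following sketch (hypothetical helper names, illustrative parameter values) evaluates the closed-form ratio and turns it into posteriors for two different priors.

```python
import math
from statistics import NormalDist


def density_ratio(x, mu, nu=0.0, sigma=1.0):
    """Closed form of R(x) = phi_{mu,sigma}(x) / phi_{nu,sigma}(x)."""
    return math.exp((mu - nu) * x / sigma**2 + (nu**2 - mu**2) / (2 * sigma**2))


def posterior_from_ratio(r, prior):
    """P[Y = 1 | X = x] in a population with positive prevalence `prior`, given R(x) = r."""
    return prior * r / (prior * r + 1 - prior)


# The same R applies to both populations; only the prior changes (illustrative values).
r = density_ratio(1.5, mu=2.0)
print(posterior_from_ratio(r, 0.33), posterior_from_ratio(r, 0.20))
```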

2.2. Methods for Prevalence Estimation Considered in this Paper

The following criteria were applied for the selection of the methods deployed in the simulation study:

- The methods must be Fisher consistent in the sense of Tasche [24]. This criterion excludes, for instance, “classify and count” [2], the “Q-measure” approach [1] and the distance-minimisation approaches based on the Inner Product, Kumar–Hassebrook, Cosine, and Harmonic Mean distances mentioned in [8].

- The methods should enjoy some popularity in the literature.

- Two new methods based on already established methods and designed to minimise the lengths of confidence intervals are introduced and tested.

According to these criteria, the following prevalence estimation methods were included in the simulation study:

- ACC50: Adjusted Classify and Count (ACC: [2,18,25]), based on the Bayes classifier that maximises accuracy (i.e., minimises the error rate). The number “50” indicates that, when the Bayes classifier is represented by means of the posterior probability of the positive class and a threshold, the threshold has to be 50%.

- ACCp: Adjusted Classify and Count, based on the Bayes classifier that maximises the difference of TPR (true positive rate) and FPR (false positive rate). The suffix “p” indicates that, if the Bayes classifier is represented by means of the posterior probability of the positive class and a threshold, the threshold must be p, the a priori probability (or prevalence) of the positive class in the training population. ACCp is called “method max” in Forman [2].

- ACCv: New version of ACC where the threshold for the classifier is selected in such a way that the variance of the prevalence estimates is minimised among all ACC-type estimators based on classifiers represented by means of the posterior probability of the positive class and some threshold.

- MS: “Median sweep” as proposed by Forman [2].

- APCC: “Adjusted probabilistic classify and count” ([19], there called “scaled probability average”).

- APCCv: New version of APCC where the a priori positive class probability parameter in the posterior positive class probability is selected in such a way that the variance of the prevalence estimates is minimised among all APCC-type estimators based on posterior positive class probabilities where the a priori positive class probability parameter varies between 0 and 1.

- H4: Hellinger distance approach with four bins [3,6].

- H8: Hellinger distance approach with eight bins [3,6].

- Energy: Energy distance approach [6,21].

- MLinf/MLboot: ML is the maximum likelihood approach to prevalence estimation [26]. Note that the EM (expectation maximisation) approach of Saerens et al. [18] is one way to implement ML. “MLinf” refers to construction of the prevalence confidence interval based on the asymptotic normality of the ML estimator (using the Fisher information for the variance). “MLboot” refers to construction of the prevalence confidence interval solely based on bootstrap sampling.
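The Hellinger distance approach (H4/H8) can be illustrated by a small grid search over q. This is a sketch under explicit assumptions, not the implementation described in Appendix A: the bins are taken to be equal-width over the pooled training range of the one-dimensional feature, q is searched on a uniform grid, and the names `histogram` and `hellinger_estimate` are hypothetical.

```python
import math
import random


def histogram(values, edges):
    """Relative bin frequencies; `edges` are sorted interior cut points, outer bins open-ended."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[sum(v > e for e in edges)] += 1  # bin index = number of cut points below v
    return [c / len(values) for c in counts]


def hellinger_estimate(train_pos, train_neg, test, bins=4, grid=1001):
    """Grid-search the q minimising the Hellinger distance between the test histogram
    and the mixture q * H_pos + (1 - q) * H_neg of the training histograms."""
    pooled = train_pos + train_neg
    lo, hi = min(pooled), max(pooled)
    # Assumption: equal-width bins over the pooled training range of the feature
    edges = [lo + (hi - lo) * k / bins for k in range(1, bins)]
    h_pos, h_neg, h_test = (histogram(s, edges) for s in (train_pos, train_neg, test))
    best_q, best_d = 0.0, float("inf")
    for j in range(grid):
        q = j / (grid - 1)
        mix = [q * a + (1 - q) * b for a, b in zip(h_pos, h_neg)]
        # Hellinger distance up to the constant factor 1/sqrt(2), which does not move the minimiser
        d = math.sqrt(sum((math.sqrt(u) - math.sqrt(v)) ** 2 for u, v in zip(mix, h_test)))
        if d < best_d:
            best_q, best_d = q, d
    return best_q


rng = random.Random(0)
train_pos = [rng.gauss(2.0, 1.0) for _ in range(100)]
train_neg = [rng.gauss(0.0, 1.0) for _ in range(100)]
test = [rng.gauss(2.0, 1.0) for _ in range(10)] + [rng.gauss(0.0, 1.0) for _ in range(40)]
print(hellinger_estimate(train_pos, train_neg, test, bins=4),
      hellinger_estimate(train_pos, train_neg, test, bins=8))
```

With only 50 test points, eight bins leave few observations per bin, which is one way to see why H8 can behave erratically for small test samples.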

For the readers’ convenience, the particulars needed to implement the methods in this list are presented in Appendix A. Note that ACC50, ACCp, ACCv, APCC and APCCv are all special cases of the “ratio estimator” discussed by Vaz et al. [9].
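Since ACC50, ACCp and APCC are ratio-type estimators, they can share one code path. The sketch below assumes the form (mean of g on the test sample minus mean of g on the training negatives) divided by (mean of g on the training positives minus mean of g on the training negatives), clipped to [0, 1], with g the indicator of a thresholded posterior for ACC and the posterior itself for APCC. Function names are hypothetical, and the posterior parameters are taken as known rather than fitted by logistic regression.

```python
import math
import random


def ratio_estimate(g, train_pos, train_neg, test):
    """Ratio-type prevalence estimate, clipped to [0, 1]:
    (mean g on test - mean g on negatives) / (mean g on positives - mean g on negatives)."""
    def mean(xs):
        return sum(g(x) for x in xs) / len(xs)

    m_pos, m_neg, m_test = mean(train_pos), mean(train_neg), mean(test)
    if m_pos == m_neg:
        raise ValueError("g does not separate the classes; the estimate is undefined")
    return min(1.0, max(0.0, (m_test - m_neg) / (m_pos - m_neg)))


def make_posterior(p, mu, nu=0.0, sigma=1.0):
    """Training posterior P[Y = 1 | X = x] of the binormal model, parameters assumed known."""
    a = (mu - nu) / sigma**2
    b = math.log(p / (1 - p)) + (nu**2 - mu**2) / (2 * sigma**2)
    return lambda x: 1.0 / (1.0 + math.exp(-(a * x + b)))


def acc(posterior, threshold, train_pos, train_neg, test):
    """ACC: g(x) = 1 if posterior(x) > threshold else 0 (0.5 for ACC50, p for ACCp)."""
    return ratio_estimate(lambda x: float(posterior(x) > threshold), train_pos, train_neg, test)


def apcc(posterior, train_pos, train_neg, test):
    """APCC: g is the posterior probability itself."""
    return ratio_estimate(posterior, train_pos, train_neg, test)


# Usage with illustrative values: training prevalence p = 0.33, test prevalence 0.2
p = 0.33
rng = random.Random(2)
train_pos = [rng.gauss(2.0, 1.0) for _ in range(66)]
train_neg = [rng.gauss(0.0, 1.0) for _ in range(134)]
test = [rng.gauss(2.0, 1.0) for _ in range(10)] + [rng.gauss(0.0, 1.0) for _ in range(40)]
eta = make_posterior(p, 2.0)
print(acc(eta, 0.5, train_pos, train_neg, test), acc(eta, p, train_pos, train_neg, test),
      apcc(eta, train_pos, train_neg, test))
```

The clipping step mirrors the replacement of negative estimates by zero that is mentioned in the discussion of Table 2.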

On the basis of the general asymptotic efficiency of maximum likelihood estimators ([27] Theorem 10.1.12), the maximum likelihood approach for class prevalences is a promising approach for achieving minimum confidence interval lengths. In addition, the ML approach may be considered a representative of the class of entropy-related estimators and, as such, is closely related to the Topsøe approach, which was found to perform very well by Maletzke et al. [8].
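A common way to compute the ML estimate is the EM iteration of Saerens et al. [18], which rescales the training posteriors to a candidate test prior and averages them until the prior stabilises. The sketch below is hypothetical in its names and tuning constants. For an MLinf-type interval it uses the observed information of the mixture log-likelihood, $\sum_i \big((R_i - 1)/(1 + \hat q (R_i - 1))\big)^2$, which is an assumption about how the Fisher information is evaluated; the paper's own recipe is in Appendix A.

```python
import math
import random
from statistics import NormalDist


def em_prevalence(eta_test, p, q_init=0.5, tol=1e-10, max_iter=10_000):
    """ML estimate of the test prevalence via EM, in the style of Saerens et al.

    eta_test: training-population posteriors P[Y = 1 | X = x] at the test features.
    p: positive class prevalence of the training population.
    """
    q = q_init
    for _ in range(max_iter):
        # E-step: adjust each posterior from the training prior p to the current prior q
        w = [(q * e / p) / (q * e / p + (1 - q) * (1 - e) / (1 - p)) for e in eta_test]
        # M-step: the updated prior is the average adjusted posterior
        q_new = sum(w) / len(w)
        if abs(q_new - q) < tol:
            return q_new
        q = q_new
    return q


def ml_fisher_interval(eta_test, p, q_hat, level=0.90):
    """Wald-type interval q_hat -/+ z / sqrt(I) with observed information I (assumption)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    info = 0.0
    for e in eta_test:
        r = e * (1 - p) / ((1 - e) * p)  # density ratio recovered from the posterior
        info += ((r - 1) / (1 + q_hat * (r - 1))) ** 2
    half = z / math.sqrt(info)
    return max(0.0, q_hat - half), min(1.0, q_hat + half)


# Hypothetical usage: known posterior (as for m = infinity), p = 0.33, true q = 0.2
p, mu = 0.33, 2.0
a, b = mu, math.log(p / (1 - p)) - mu**2 / 2  # logistic parameters for nu = 0, sigma = 1
rng = random.Random(7)
test = [rng.gauss(mu, 1.0) if rng.random() < 0.2 else rng.gauss(0.0, 1.0) for _ in range(5000)]
eta = [1.0 / (1.0 + math.exp(-(a * x + b))) for x in test]
q_hat = em_prevalence(eta, p)
print(round(q_hat, 3), ml_fisher_interval(eta, p, q_hat))
```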

2.3. Calculations Performed in the Simulation Study

The calculations performed as part of the simulation study serve the purpose of providing facts for answers to the questions listed in Section 1 “Introduction”.

Calculations for constructing confidence intervals. Iterate the following steps once per simulation run:

- Step 1. Create the training sample: Simulate $m_1$ times from $\mathcal{N}(\mu, \sigma^2)$ to obtain features $x_{1,1}, \ldots, x_{1,m_1}$ of positive instances and $m_0$ times from $\mathcal{N}(\nu, \sigma^2)$ to obtain features $x_{0,1}, \ldots, x_{0,m_0}$ of negative instances.

- Step 2. Create the test sample: Simulate the number $n_1$ of positive instances as a binomial random variable with size $n$ and success probability $q$. Then, simulate $n_1$ times from $\mathcal{N}(\mu, \sigma^2)$ features $z_{1,1}, \ldots, z_{1,n_1}$ of positive instances and $n_0 = n - n_1$ times from $\mathcal{N}(\nu, \sigma^2)$ features $z_{0,1}, \ldots, z_{0,n_0}$ of negative instances. The information of whether a feature was sampled from $\mathcal{N}(\mu, \sigma^2)$ or from $\mathcal{N}(\nu, \sigma^2)$ is assumed to be unknown in the estimation step. Therefore, the generated features are combined in a single sample $z_1, \ldots, z_n$.

- Step 3. Iterate $R$ times the bootstrap procedure: By stratified sampling with replacement, generate bootstrap samples $x^*_{1,1}, \ldots, x^*_{1,m_1}$ of features of positive instances and $x^*_{0,1}, \ldots, x^*_{0,m_0}$ of features of negative instances from the training sub-samples, and $z^*_1, \ldots, z^*_n$ of features with unknown labels from the test sample. Based on the three resulting bootstrap samples, calculate estimates of the positive class prevalence in the test population according to all the estimation methods listed in Section 2.2.

- Step 4. For each estimation method, the bootstrap procedure from the previous step creates a sample of $R$ estimates of the positive class prevalence. Based on this sample of $R$ estimates, construct confidence intervals at the 90% level for the positive class prevalence in the test population.
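The bootstrap steps above can be sketched as a stratified resampling of the three samples followed by a percentile interval. Assumptions: `adjusted_mean` is a hypothetical stand-in estimator (a ratio estimator with g(x) = x) used only to keep the example self-contained, R = 999 is an illustrative choice, and the linear-interpolation quantile is a simplification that need not match the interpolation used by boot.ci with method “perc”.

```python
import random


def adjusted_mean(train_pos, train_neg, test):
    """Hypothetical stand-in estimator: ratio estimator with g(x) = x, clipped to [0, 1]."""
    m1 = sum(train_pos) / len(train_pos)
    m0 = sum(train_neg) / len(train_neg)
    mt = sum(test) / len(test)
    return min(1.0, max(0.0, (mt - m0) / (m1 - m0)))


def empirical_quantile(sorted_vals, prob):
    """Quantile by linear interpolation between order statistics."""
    pos = prob * (len(sorted_vals) - 1)
    i = int(pos)
    if i + 1 >= len(sorted_vals):
        return sorted_vals[-1]
    return sorted_vals[i] + (pos - i) * (sorted_vals[i + 1] - sorted_vals[i])


def bootstrap_percentile_ci(estimator, train_pos, train_neg, test, R=999, level=0.90, seed=None):
    """Steps 3 and 4: stratified bootstrap of the three samples, then a percentile interval."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(R):
        # Resample with replacement within each sub-sample, keeping m1, m0 and n fixed
        bp = rng.choices(train_pos, k=len(train_pos))
        bn = rng.choices(train_neg, k=len(train_neg))
        bt = rng.choices(test, k=len(test))
        estimates.append(estimator(bp, bn, bt))
    estimates.sort()
    alpha = 1.0 - level
    return empirical_quantile(estimates, alpha / 2), empirical_quantile(estimates, 1 - alpha / 2)


rng = random.Random(3)
train_pos = [rng.gauss(2.0, 1.0) for _ in range(66)]
train_neg = [rng.gauss(0.0, 1.0) for _ in range(134)]
test = [rng.gauss(2.0, 1.0) for _ in range(10)] + [rng.gauss(0.0, 1.0) for _ in range(40)]
lo, hi = bootstrap_percentile_ci(adjusted_mean, train_pos, train_neg, test, seed=4)
print(round(lo, 3), round(hi, 3))
```

Resampling the training sub-samples as well as the test sample is what lets the interval reflect estimation uncertainty from the training data, not only from the test data.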

Tabulated results of the simulation algorithm for confidence intervals.

- For each estimation method, one estimate of the positive class prevalence is calculated per simulation run. From this set of estimates, the following summary results were derived and tabulated:
  - the average of the prevalence estimates;
  - the average absolute deviation of the prevalence estimates from the true prevalence parameter;
  - the percentage of simulation runs with failed prevalence estimates; and
  - the percentage of estimates equal to 0 or 1.

- For each estimation method, one 90% confidence interval for the positive class prevalence is produced per simulation run. From this set of confidence intervals, the following summary results were derived and tabulated:
  - the average length of the confidence intervals; and
  - the percentage of confidence intervals that contain the true prevalence parameter (coverage rate).

For the construction of the bootstrap confidence intervals in Step 4 of the list of calculations, the method “perc” ([23] Section 5.3.1) of the function boot.ci of the R-package “boot” was used. More accurate methods for bootstrap confidence intervals are available, but these tend to require more computational time and to be less robust. Given that the performance of “perc” in the setting of this simulation study can be monitored by checking the coverage rates, the loss in performance seems tolerable. In the cases where calculations resulted in coverage rates significantly below 90%, the calculations were repeated with the “bca” method ([23] Section 5.3.2) of boot.ci in order to confirm the results.

Step 1 of the calculations can be omitted in the case $m = \infty$, i.e., when the training sample is identical with the training population distribution. However, in this case, some quantities of relevance for the estimates have to be pre-calculated before entering the loop over the simulation runs. The details for these pre-calculations are provided in Appendix A. In addition, in the case $m = \infty$, for the prevalence estimation methods ACC50, ACCp, ACCv, APCC and APCCv, the bootstrap confidence intervals for the prevalences were replaced by “conservative binomial intervals” ([12] Section 6.2.2), computed with the “exact” method of the R-function binconf. Moreover, as explained in Section 2.2, in the case $m = \infty$, the method MLinf was applied instead of MLboot for the construction of the maximum likelihood confidence interval.
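To our understanding, the conservative binomial interval is the Clopper–Pearson interval, which is what the “exact” method of binconf computes. A stdlib-only sketch solves the two defining binomial tail equations by bisection. The mapping from a binomial interval for the proportion of test instances classified positive to an ACC interval for q, assuming exactly known TPR > FPR when m is infinite, is our illustration of how such an interval could be used, not a description of the paper's scripts; all names are hypothetical.

```python
from math import comb


def binom_cdf(k, n, p):
    """P[X <= k] for X ~ Binomial(n, p); equals 0 for k < 0."""
    return sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k + 1))


def _bisect(f, target, increasing, iters=100):
    """Solve f(p) = target on [0, 1] for a monotone f by bisection."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if (f(mid) < target) == increasing:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(x, n, level=0.90):
    """Conservative ('exact') interval for a binomial proportion with x successes in n trials."""
    alpha = 1.0 - level
    # Lower bound: P[X >= x | p] = alpha / 2, increasing in p
    lower = 0.0 if x == 0 else _bisect(lambda p: 1 - binom_cdf(x - 1, n, p), alpha / 2, True)
    # Upper bound: P[X <= x | p] = alpha / 2, decreasing in p
    upper = 1.0 if x == n else _bisect(lambda p: binom_cdf(x, n, p), alpha / 2, False)
    return lower, upper


def acc_interval_infinite_training(k, n, tpr, fpr, level=0.90):
    """Hypothetical use for ACC with m = infinity: map the interval for the positive
    classification rate through q = (rate - fpr) / (tpr - fpr), clipped to [0, 1]."""
    def to_q(rate):
        return min(1.0, max(0.0, (rate - fpr) / (tpr - fpr)))

    lo, hi = clopper_pearson(k, n, level)
    return to_q(lo), to_q(hi)


print(clopper_pearson(20, 50), acc_interval_infinite_training(20, 50, tpr=0.9, fpr=0.1))
```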

As mentioned in Section 1, one of the purposes of the simulation study was to illustrate the differences between confidence and prediction intervals. Conceptually, the difference may be described by their definitions as given in Meeker et al. [12] (“” as used by Meeker et al. [12] corresponds to “” as used in this paper):

- “A confidence interval for an unknown quantity may be formally characterized as follows: If one repeatedly calculates such intervals from many independent random samples, of the intervals would, in the long run, correctly include the actual value . Equivalently, one would, in the long run, be correct of the time in claiming that the actual value of is contained within the confidence interval.” ([12] Section 2.2.5)

- “If from many independent pairs of random samples, a prediction interval is computed from the data of the first sample to contain the value(s) of the second sample, of the intervals would, in the long run, correctly bracket the future value(s). Equivalently, one would, in the long run, be correct of the time in claiming that the future value(s) will be contained within the prediction interval.” ([12] Section 2.3.6)

To construct prediction intervals instead of confidence intervals in the simulation runs, Step 4 of the calculations was modified as follows:

- Step 4*. For each estimation method, the bootstrap procedure from the previous step creates a sample of $R$ estimates of the positive class prevalence. For each estimate, generate a virtual number of positive instances by simulating an independent binomial variable with size $n$ and success probability given by the estimate. Divide these virtual numbers by $n$ to obtain (for each estimation method) a sample of relative frequencies of positive labels. Based on this additional size-$R$ sample of relative frequencies, construct prediction intervals at the 90% level for the percentage of instances with positive labels in the test sample.

As in the case of the construction of confidence intervals, for the construction of the prediction intervals, again the method “perc” of the function boot.ci of the R-package “boot” was deployed.
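The modified step can be sketched as follows: each bootstrap estimate becomes the success probability of a Binomial(n, estimate) draw, and the percentile interval is taken over the resulting virtual relative frequencies. The bootstrap estimates below are hypothetical numbers, chosen only to show that the binomial layer widens the interval compared with the percentile interval of the estimates themselves; function names are also hypothetical.

```python
import random


def empirical_quantile(sorted_vals, prob):
    """Quantile by linear interpolation between order statistics."""
    pos = prob * (len(sorted_vals) - 1)
    i = int(pos)
    if i + 1 >= len(sorted_vals):
        return sorted_vals[-1]
    return sorted_vals[i] + (pos - i) * (sorted_vals[i + 1] - sorted_vals[i])


def binomial_prediction_interval(boot_estimates, n, level=0.90, seed=None):
    """Turn each bootstrap estimate into a virtual relative frequency Binomial(n, q) / n,
    then take a percentile interval of these frequencies."""
    rng = random.Random(seed)
    freqs = sorted(sum(rng.random() < q for _ in range(n)) / n for q in boot_estimates)
    alpha = 1.0 - level
    return empirical_quantile(freqs, alpha / 2), empirical_quantile(freqs, 1 - alpha / 2)


boot = [0.15 + 0.10 * i / 998 for i in range(999)]  # hypothetical bootstrap estimates
ci = (empirical_quantile(sorted(boot), 0.05), empirical_quantile(sorted(boot), 0.95))
pi = binomial_prediction_interval(boot, n=50, seed=5)
print(ci, pi)
```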

Tabulated results of the simulation algorithm for prediction intervals.

- For each estimation method and each simulation run, a virtual relative frequency of positive labels in the test sample was simulated under the assumption that the estimated positive class prevalence equals the true prevalence. From this set of frequencies, the following summary results are derived and tabulated:
  - the average of the virtual relative frequencies;
  - the average absolute deviation of the virtual relative frequencies from the true prevalence parameter;
  - the percentage of simulation runs with failed prevalence estimates and hence also failed simulations of virtual relative frequencies of positive labels; and
  - the percentage of virtual relative frequencies equal to 0 or 1.

- For each estimation method, one 90% prediction interval for the realised relative frequency of positive labels is produced per simulation run. From this set of prediction intervals, the following summary results were derived and tabulated:
  - the average length of the prediction intervals; and
  - the percentage of prediction intervals that contain the true relative frequencies of positive labels (coverage rate).

3. Results of the Simulation Study

All simulation procedures were performed with a common fixed setting of part of the control parameters (see Section 2.1 for the complete list of control parameters). In each table in the following, the values selected for the remaining control parameters are listed in the captions or within the table bodies.

In all the simulation procedures run for this paper, the method of the R function boot.ci used for determining the statistical intervals (both confidence and prediction) was “perc”. In cases where the coverage found with “perc” is significantly lower than 90% (for the number of simulation runs used, at the 5% significance level, this means lower than 85%), the calculation was repeated with the boot.ci method “bca” for confirmation or correction.
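The 85% figure can be reproduced, under assumptions, by a one-sided test of the observed coverage rate against the nominal 90%, using the normal approximation to the binomial. This section does not state the number of simulation runs or the exact test used; assuming 100 runs, the threshold computed below is about 85.1%. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def undercoverage_threshold(runs, level=0.90, significance=0.05):
    """Coverage rate below which a one-sided test (normal approximation to the binomial)
    rejects 'true coverage >= level' at the given significance level."""
    z = NormalDist().inv_cdf(1 - significance)
    return level - z * math.sqrt(level * (1 - level) / runs)


for runs in (100, 500, 1000):  # the number of runs is an assumption here
    print(runs, round(undercoverage_threshold(runs), 4))
```

The threshold moves towards 90% as the number of runs grows, so the same observed shortfall becomes more significant with more runs.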

The naming of the table rows and table columns has been standardised. Unless mentioned otherwise, the columns always display results for all or some of the prevalence estimation methods listed in Section 2.2. Short explanations of the meaning of the row names are given in Table 1. A more detailed explanation of the row names can be found in Section 2.3.

Table 1.

Explanation of the row names in the result tables in Section 3.

3.1. Prediction vs. Confidence Intervals

In the simulation study performed for this paper, the values of the true positive class prevalences of the test samples—understood in the sense of the a priori positive class prevalences of the populations from which the samples were generated (see Section 2)—are always known. In contrast, when one is working with real-world datasets, there is no way to know with certainty the true positive class prevalences of the test samples. Inevitably, therefore, in studies of prevalence estimation methods on real-world datasets, the performance has to be measured by comparison between the estimates and the relative frequencies of the positive labels observed in the test samples.

This was stated explicitly, for instance, by Keith and O’Connor [7]. In the section “Problem definition” of their paper, the authors said that they estimated “prevalence confidence intervals” with the property that a nominal proportion “of the predicted intervals ought to contain the true value”. For this purpose, Keith and O’Connor [7] defined the “true value” of each group as the true proportion of positive labels in the group. As Keith and O’Connor [7] used “group” as equivalent to sample, and labels were coded 1 for positive and 0 otherwise, it is clear that Keith and O’Connor [7] estimated prediction intervals rather than confidence intervals (see Section 2.3 for the definitions of both types of intervals).

Hence, as asked in Section 1: Would it be worthwhile to distinguish confidence and prediction intervals for class prevalences and deploy different methods for their estimation?

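By assumption (see Section 2), the test sample is interpreted as the feature components of independent, identically distributed random variables $(X_1, Y_1), \ldots, (X_n, Y_n)$ with joint distribution $Q$. While the positive class prevalence in the test population is given by the constant $q = Q[Y = 1]$, the relative frequency of the positive labels in the test sample is represented by the random variable

$$\bar{q}_n = \frac{1}{n} \sum_{i=1}^{n} \mathbf{1}_{\{Y_i = 1\}}, \tag{4}$$

where $\mathbf{1}_{\{Y_i = 1\}} = 1$ if $Y_i = 1$ and $\mathbf{1}_{\{Y_i = 1\}} = 0$ otherwise.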

The simulation procedures for the panels in Table 2 are intended to gauge the impact of using a confidence interval instead of a prediction interval for capturing the relative frequency of positive labels in the test sample as defined in Equation (4). By the law of large numbers, the difference between this relative frequency and q should be small for large n. Therefore, any impact of using a confidence interval where a prediction interval is needed should be most visible for small n.
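The size of the gap between q and the relative frequency in Equation (4) can be quantified: the relative frequency has mean q and standard deviation sqrt(q (1 - q) / n). For q = 0.2 and n = 50, as in Table 2, this is about 0.057, i.e., almost six percentage points, and it shrinks tenfold for n = 5000. The sketch below (hypothetical names) checks the formula by simulation.

```python
import math
import random
import statistics


def relative_frequency_sd(q, n):
    """Standard deviation of the test-sample relative frequency: sqrt(q (1 - q) / n)."""
    return math.sqrt(q * (1 - q) / n)


def simulated_sd(q, n, runs=20_000, seed=11):
    """Monte Carlo standard deviation of Binomial(n, q) / n."""
    rng = random.Random(seed)
    freqs = [sum(rng.random() < q for _ in range(n)) / n for _ in range(runs)]
    return statistics.pstdev(freqs)


for n in (50, 500, 5000):
    print(n, round(relative_frequency_sd(0.2, n), 4))
```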

Table 2.

Illustration of coverage of positive class frequencies in the test sample by means of prediction and confidence intervals. Control parameter for all panels: $n = 50$.

The algorithm devised in this paper for the construction of prediction intervals (see Section 2.3) involves the simulation of binomial random variables whose success probabilities are the prevalence estimates and which are independent of the test samples. This procedure, however, is likely to exaggerate the variance of the relative frequencies of the positive labels, because the prevalence estimates and the test samples are not independent but, by design, should be strongly dependent. The dependence between prevalence estimate and test sample should be stronger when the classifier underlying the estimator is more accurate. This implies that, for prevalence estimation, differences between prediction and confidence intervals should be more discernible when the classifiers deployed are less accurate.

Table 2 shows a number of simulation results, all for test sample size $n = 50$, i.e., for a small test sample:

- Top two panels: Simulation of a “benign” situation, with moderately different positive class prevalences (33% vs. 20%) in the training and test population distributions, and high power of the score underlying the classifiers and distance minimisation approaches. The results suggest “overshooting” by the binomial prediction interval approach, i.e., the intervals are so long that coverage is much higher than requested, even reaching 100%. The confidence intervals clearly show sufficient coverage of the true realised percentages of positive labels for all estimation methods. Interval lengths are quite uniform, with only the straight ACC methods ACC50 and ACCp showing distinctly longer intervals. In terms of average absolute deviation from the true positive class prevalence, the performance is also rather uniform. However, it is interesting to see that ACCv, which has been designed to minimise confidence interval length among the ACC estimators, shows distinctly the worst performance with regard to average absolute deviation.

- Central two panels: Simulation of a rather adverse situation, with very different positive class prevalences (67% vs. 20%) in the training and test population distributions and low power of the score underlying the classifiers and distance minimisation approaches. The binomial prediction interval approach still overshoots for all methods but H8, and for all methods but H8 the confidence intervals still clearly achieve sufficient coverage. For H8, coverage of the relative positive class frequency is significantly too low with the confidence intervals but still sufficient with the prediction intervals. However, H8 also displays heavy bias of the average relative frequency of positive labels, possibly a consequence of the combined difficulties of having eight bins for only 50 points (the test sample size) and little difference between the densities of the score conditional on the two classes. In terms of interval length, MLboot performs best, closely followed by APCC and Energy. However, even for these methods, confidence interval lengths of more than 47% suggest that the estimation task is rather hopeless.

- Bottom two panels: Similar to the central panels, but even more adverse, with a small test sample prevalence of 5%. The results are similar, but the proportions of 0% estimates are much higher for all methods. H8 now has insufficient coverage with both prediction and confidence intervals, and H4 coverage with the confidence intervals is also insufficient. Note the strong estimation bias suggested by all average frequency estimates, presumably caused by the clipping of negative estimates (i.e., replacing such estimates by zero). Among all these poorly performing estimators, MLboot is clearly best in terms of bias, average absolute deviation and interval length.

- General conclusion: For all methods in Section 2.2 except the Hellinger methods, it suffices to construct confidence intervals; there is no need to apply special prediction interval techniques.

- Performance in terms of interval length (with sufficient coverage in all circumstances): MLboot best, followed by APCC and Energy.

Daughton and Paul [11] proposed “Error Adjusted Bootstrapping” as an approach to constructing “confidence intervals” (in fact, prediction intervals) for prevalences and showed by example that its coverage was sufficient. However, the theoretical analysis of “Error Adjusted Bootstrapping” presented in Appendix B suggests that this approach is not appropriate for constructing prediction intervals in the presence of prior probability shift. Indeed, Table 3 demonstrates that “Error Adjusted Bootstrapping” intervals based on the classifiers underlying ACC50 and ACCp (see Section 2.2) achieve sufficient coverage if the difference between the training and test sample prevalences is moderate (33% vs. 20%) but break down if the difference is large (67% vs. 20%).

Table 3.

Illustration of the difference between the binomial approach and the approach by Daughton and Paul [11] to prediction intervals for positive class prevalences. Control parameters for both panels: , , and . Columns “ACC50” and “ACCp” show results for confidence intervals with methods as explained in Section 2.2. All other columns show prediction intervals and average relative frequencies of positive labels. In all columns, coverage refers to containing the realised relative frequency of positive labels in the test sample. “DnPACC50” and “DnPACCp” are determined according to the “error adjusted bootstrapping” proposed by Daughton and Paul [11].

3.2. Does Higher Accuracy Help for Shorter Confidence Intervals?

As mentioned in Section 1, views in the literature differ on whether or not the performance of prevalence estimators is impacted by the discriminatory power of the score underlying the estimation method. Table 4 shows a number of simulation results, for a variety of sets of circumstances, both benign and adverse. The results for high and low power are juxtaposed:

Table 4.

Illustration of length of confidence intervals for different degrees of accuracy (or discriminatory power) of the score or classifier underlying the prevalence estimation methods.

- Top two panels: Simulation of a “benign” situation, with moderate difference of positive class prevalences (50% vs. 20%) in training and test population distributions, no estimation uncertainty on the training sample and a rather large test sample with . The results for all estimation methods suggest that the lengths of the confidence intervals are strongly dependent upon the discriminatory power of the score which is the basic building block of all the methods. Coverage is accurate for the high power situation, whereas there is even slight overshooting of coverage in the low power situation.

- Central two panels: Simulation of a less benign situation, with a small test sample size and a low true positive class prevalence in the test sample, but still without uncertainty on the training sample. There is again evidence of a strong dependence of the lengths of the confidence intervals upon the discriminatory power of the score. For all estimation methods, low power leads to strong bias of the prevalence estimates. The percentage of zero estimates jumps between the third and the fourth panel; hence, a decrease in the power of the score entails much higher rates of zero estimates. For the maximum likelihood method, the interval length results in both panels show that confidence intervals based on the central limit theorem for maximum likelihood estimators may become unstable for small test sample sizes and small positive class prevalences.

- Bottom two panels: Simulation of an adverse situation, with small test and training sample sizes and a low true positive class prevalence in the test sample. The results show qualitatively much the same picture as in the central panels. The impact of estimation uncertainty in the training sample, which distinguishes this situation from that of the central panels, is moderate for high power of the score but dramatic for low power of the score. Again, the rate of zero estimates jumps between the two panels that differ in discriminatory power. For the Hellinger methods, the results of the high power panel suggest a performance issue with respect to the coverage rate. A repetition of the calculations for Panel 5 with method “bca” instead of “perc” of the R function boot.ci does not confirm this observation; however, the “better” results are accompanied by a high rate of failures of the confidence interval construction. In contrast to MLinf, MLboot (which uses only bootstrapping for constructing the confidence intervals) performs well, with relatively low bias of the prevalence estimate even in the low power case.

- General conclusion: The results displayed in Table 4 suggest a clear benefit in terms of shorter confidence intervals when high power scores and classifiers are deployed for prevalence estimation. In addition, the results illustrate the statement on the asymptotic variance of ratio estimators like ACC50, ACCp, ACCv, APCC and APCCv in Corollary 11 of Vaz et al. [9].

- Performance in terms of interval length (with sufficient coverage in all circumstances): Both APCC estimation methods show good and stable performance when compared to all other methods. Energy and MLboot follow closely. The Hellinger methods also produce short confidence intervals but may have insufficient coverage.
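The percentile method (“perc”) of boot.ci mentioned above can be sketched in a few lines. This is an illustration only, not the R implementation: it resamples each supplied sample independently with replacement (mirroring, under that assumption, the bootstrapping of several samples used for MLboot) and does not reproduce the bias-corrected and accelerated (“bca”) method. The function names and arguments are hypothetical.

```python
import random


def _quantile(sorted_values, prob):
    """Linearly interpolated empirical quantile of an already sorted list."""
    pos = prob * (len(sorted_values) - 1)
    lower = int(pos)
    upper = min(lower + 1, len(sorted_values) - 1)
    return sorted_values[lower] + (pos - lower) * (sorted_values[upper] - sorted_values[lower])


def percentile_bootstrap_ci(samples, estimator, n_boot=1000, alpha=0.05, seed=None):
    """Hypothetical helper: resample every sample with replacement, re-apply the
    estimator to the resampled samples, and return empirical quantiles."""
    rng = random.Random(seed)
    replicates = []
    for _ in range(n_boot):
        resampled = [rng.choices(s, k=len(s)) for s in samples]
        replicates.append(estimator(*resampled))
    replicates.sort()
    return _quantile(replicates, alpha / 2), _quantile(replicates, 1 - alpha / 2)


# Usage: interval for the proportion of positives in a labelled sample of size 100.
labels = [1] * 20 + [0] * 80
lo, hi = percentile_bootstrap_ci([labels], lambda s: sum(s) / len(s), seed=0)
```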

3.3. Do Approaches to Confidence Intervals without Monte Carlo Simulations Work?

For the prevalence estimation methods ACC50, ACCp, ACCv, MS, and MLinf, confidence intervals can be constructed without bootstrapping and, therefore, with much less numerical effort. For ACC50, ACCp, ACCv, and MS, conservative binomial intervals computed with the “exact” method of the R function binconf can be deployed ([12] Section 6.2.2). For the maximum likelihood approach, an asymptotically most efficient normal approximation with variance expressed in terms of the Fisher information can be used ([27] Theorem 10.1.12). This approach is denoted by “MLinf” in order to distinguish it from “MLboot”, maximum likelihood estimation combined with bootstrapping for the confidence intervals.
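The “exact” binomial interval is the Clopper–Pearson interval. A minimal sketch of how it could be combined with ACC follows. It assumes an infinite training sample, so that TPR and FPR are known exactly, and it assumes that the interval for the proportion of predicted positives is mapped through the increasing ACC transformation of Equation (A1) and then clipped to [0, 1]. The function names are hypothetical, and bisection on the binomial distribution function stands in for the beta quantiles that a statistics library would normally use.

```python
import math


def _binom_cdf(k, n, p):
    """P[Binomial(n, p) <= k]."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _increasing_root(f, target, iterations=100):
    """Bisection for f(p) = target on [0, 1], assuming f is increasing in p."""
    lo, hi = 0.0, 1.0
    for _ in range(iterations):
        mid = (lo + hi) / 2
        if f(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(k, n, alpha=0.05):
    """Exact (conservative) two-sided interval for a binomial proportion k / n."""
    # Lower bound solves P[X >= k] = alpha / 2; P[X >= k] is increasing in p.
    lower = 0.0 if k == 0 else _increasing_root(lambda p: 1 - _binom_cdf(k - 1, n, p), alpha / 2)
    # Upper bound solves P[X <= k] = alpha / 2, i.e. 1 - P[X <= k] = 1 - alpha / 2.
    upper = 1.0 if k == n else _increasing_root(lambda p: 1 - _binom_cdf(k, n, p), 1 - alpha / 2)
    return lower, upper


def acc_exact_interval(k, n, tpr, fpr, alpha=0.05):
    """Hypothetical helper: map the exact interval for Q[g = 1] through ACC and clip."""
    if tpr <= fpr:
        raise ValueError("ACC requires TPR > FPR")
    lower, upper = clopper_pearson(k, n, alpha)
    to_prevalence = lambda r: min(max((r - fpr) / (tpr - fpr), 0.0), 1.0)
    return to_prevalence(lower), to_prevalence(upper)


# Usage: 12 predicted positives among 50 test instances, TPR = 0.8, FPR = 0.1.
interval = acc_exact_interval(12, 50, tpr=0.8, fpr=0.1)
print(interval)
```

The explicit sum in `_binom_cdf` is only meant for moderate sample sizes; for large n a library implementation of the beta quantile function would be preferable.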

However, examples show that these non-simulation approaches fail, in the sense of producing insufficient coverage rates, if training sample sizes are finite, i.e., if parameters such as the true positive and false positive rates needed for the estimators have to be estimated (e.g., by means of regression) before being plugged in. Table 5, with panels juxtaposing results for infinite and finite training sample sizes, provides such an example.

Table 5.

Illustration of failure of non-simulation approaches to confidence intervals when training sample is finite. Control parameters for all panels: , , , and .

The estimation problem whose results are shown in Table 5 is fairly well-posed, with a large test sample, a high power score underlying the estimation methods and a moderate difference between training and test sample positive class prevalences. Panel 1 shows that without estimation uncertainty on the training sample (infinite sample size) the non-simulation approaches produce confidence intervals with sufficient coverage. In contrast, Panel 2 demonstrates that for all five methods coverage breaks down when estimation uncertainty is introduced into the training sample (finite sample size). According to Panel 3, this issue can be remedied by deploying bootstrapping for the construction of the confidence intervals.

4. Conclusions

The simulation study whose results are reported in this paper was intended to shed some light on certain questions from the literature regarding the construction of confidence or prediction intervals for the prevalence of positive labels in binary quantification problems. In particular, the results of the study should help to answer the following questions:

- whether estimation techniques for confidence intervals are appropriate if in practice most of the time prediction intervals are needed; and

- whether the discriminatory power of the soft classifier or score at the basis of a prevalence estimation method matters when it comes to minimizing the confidence interval for an estimate.

The answers suggested by the results of the simulation study are subject to a number of qualifications. Most prominent among the qualifications are:

- the fact that the findings of the paper apply only for problems where it is clear that training and test samples are related by prior probability shift; and

- the general observation that the scope of a simulation study necessarily is rather restricted and therefore findings of such studies can be suggestive and illustrative at best.

Hence, the findings from the study do not allow firm or general conclusions, and the answers suggested by the simulation study have to be treated with caution:

- For not too small test sample sizes, such as 50 or more, there is no need to deploy special techniques for prediction intervals.

- It is worthwhile to base prevalence estimation on powerful classifiers or scores because this way the lengths of the confidence intervals can be much reduced. The use of less accurate classifiers may entail confidence intervals so long that the estimates have to be considered worthless.

In most of the experiments performed as part of the simulation study, the maximum likelihood approach (method MLboot) to the estimation of the positive class prevalence turned out to deliver on average the shortest confidence intervals. As shown in Appendix A.2.3, the maximum likelihood approach requires that the density ratio or the posterior class probabilities are estimated on the training sample in a previous step. Achieving this with sufficient precision is a notoriously hard problem; note, however, the promising recent progress made on this issue [28]. Not much worse, and in a few cases even superior, was the performance of APCC (Adjusted Probabilistic Classify and Count). In contrast, the performance of the Energy distance and Hellinger distance estimation methods was not outstanding and, in the case of the latter, even insufficient in the sense of not guaranteeing the required coverage rates of the confidence intervals.

Clearly, it would be desirable to extend the scope of the simulation study in order to obtain more robust answers to the questions mentioned above.

One way to do so would be to look at a larger range of prevalence estimation methods. Recent research by Maletzke et al. [8] singled out prevalence estimation methods based on minimising distances related to the Earth Mover’s distance as performing very well and robustly. Earlier research by Hofer [29] had already found that prevalence estimation by minimising the Earth Mover’s distance worked well in the presence of general dataset shift (“local drift”). Hence, it might be worthwhile to compare the performance of such estimators with respect to the length of confidence intervals to that of other estimators such as the ones considered in this paper.

Another way to extend the scope of the simulation study would be to generalise the “double binormal” framework on which the study described in this paper is based. Such an extension could be constructed along the lines described in Section 4 of Tasche [24].

Funding

This research received no external funding.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A. Particulars for the Implementation of the Simulation Study

This appendix presents the mathematical details needed for coding the prevalence estimation methods listed in Section 2.2. In particular, the case of infinite training samples (i.e., where the training sample is actually the training population and the parameters of the model are exactly known) is covered.

Appendix A.1. Adjusted Classify and Count (ACC) and Related Prevalence Estimators

ACC and APCC, as mentioned in Section 2.2, are special cases of the “ratio estimator” of Vaz et al. [9]. From an even more general perspective, they are instances of estimation by the “Method of Moments” ([30], Section 2.4.1 and the references therein). By Theorem 6 of Vaz et al. [9], ratio estimators are Fisher consistent for estimating the positive class prevalence of the test population under prior probability shift.

Adjusted Classify and Count (ACC). In the setting of Section 2, denote the feature space (i.e., the range of values which the feature variable X can take) by $\mathfrak{X}$. Let $g: \mathfrak{X} \to \{0, 1\}$ be a crisp classifier in the sense that if for an instance with features $x$ it holds that $g(x) = 1$, a positive class label is predicted, and if $g(x) = 0$, a negative class label is predicted. With the notation introduced in Section 2, the ACC estimator based on the classifier g of the test population positive class prevalence is given by

$$q_{\mathrm{ACC}} = \frac{Q[g = 1] - P[g = 1 \,|\, Y = 0]}{P[g = 1 \,|\, Y = 1] - P[g = 1 \,|\, Y = 0]}. \tag{A1}$$

Recall that

- $Q[g = 1]$ is the proportion of instances in the test population whose labels are predicted positive by the classifier g.

- $P[g = 1 \,|\, Y = 0]$ is the false positive rate (FPR) associated with the classifier g. The FPR equals $1 - P[g = 0 \,|\, Y = 0]$, i.e., 1 minus the specificity (true negative rate) of the classifier g.

- $P[g = 1 \,|\, Y = 1]$ is the true positive rate (TPR) associated with the classifier g. The TPR is also called “recall” or “sensitivity” of g.

Of course, the ACC estimator of Equation (A1) is defined only if $P[g = 1 \,|\, Y = 1] \neq P[g = 1 \,|\, Y = 0]$, i.e., if g is not completely inaccurate. González et al. [5] gave in Section 6.2 some background information on the history of ACC estimators.

When a threshold $t \in (0, 1)$ is fixed, the soft classifier $s$ gives rise to a crisp classifier $g_t$, defined by

$$g_t(x) = \begin{cases} 1, & \text{if } s(x) > t,\\ 0, & \text{otherwise}. \end{cases} \tag{A2}$$

The classifiers $g_t$ with

$$s(x) = P[Y = 1 \,|\, X = x] \tag{A3}$$

are Bayes classifiers which minimise cost-sensitive Bayes errors (see, for instance, (Tasche [24] Section 2.1)). Thresholds of special interest are:

- $t = 1/2$ for maximum accuracy (i.e., minimum classification error), which leads to the estimator ACC50 listed in Section 2.2; and

- $t = p = P[Y = 1]$ for maximising the denominator of the right-hand side of Equation (A1), which leads to the estimator ACCp listed in Section 2.2.

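For the simulation procedures run for this paper, a sample version of $Q[g_t = 1]$ was used:

$$\widehat{Q}[g_t = 1] = \frac{1}{n} \sum_{i=1}^{n} g_t(x_i), \tag{A4}$$

where $x_1, \ldots, x_n$ denotes a sample generated under the test population distribution $Q$.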
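A minimal sketch of ACC with a thresholded soft classifier, combining the ACC formula (A1) with the sample version (A4), is given below. It assumes that scores are available as plain lists and that TPR and FPR are estimated as empirical rates on a finite labelled training sample (with an infinite training sample they would instead be computed exactly). The function names, the toy data and the clipping to [0, 1] are illustrative choices.

```python
def crisp_classifier(scores, t):
    """Crisp classifier g_t: predict positive (1) iff the score exceeds t."""
    return [1 if s > t else 0 for s in scores]


def empirical_tpr_fpr(train_scores, train_labels, t):
    """Empirical TPR and FPR of g_t on a labelled training sample (labels 1/0)."""
    pos = [s for s, y in zip(train_scores, train_labels) if y == 1]
    neg = [s for s, y in zip(train_scores, train_labels) if y == 0]
    return sum(crisp_classifier(pos, t)) / len(pos), sum(crisp_classifier(neg, t)) / len(neg)


def acc_estimate(test_scores, tpr, fpr, t, clip=True):
    """ACC estimate: (share of predicted positives - FPR) / (TPR - FPR)."""
    if tpr == fpr:
        raise ValueError("ACC is undefined when TPR equals FPR")
    predicted_positive_share = sum(crisp_classifier(test_scores, t)) / len(test_scores)
    q = (predicted_positive_share - fpr) / (tpr - fpr)
    return min(max(q, 0.0), 1.0) if clip else q


# Hypothetical toy data.
train_scores = [0.9, 0.8, 0.6, 0.4, 0.1, 0.2, 0.3, 0.7]
train_labels = [1, 1, 1, 1, 0, 0, 0, 0]
tpr, fpr = empirical_tpr_fpr(train_scores, train_labels, t=0.5)  # 0.75, 0.25
test_scores = [0.9, 0.7, 0.6, 0.55, 0.4, 0.3, 0.2, 0.2, 0.1, 0.05]
q_hat = acc_estimate(test_scores, tpr, fpr, t=0.5)  # (0.4 - 0.25) / 0.5, about 0.3
```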

To deal with the case where in the setting of Section 2.1 with the double binormal model the training sample is infinite, the following formulae were coded for the right-hand side of Equation (A1) with and (A4) (with parameters as in Equation (3a)):

Adjusted Probabilistic Classify and Count (APCC). APCC, called scaled probability average by Bella et al. [19], generalises Equation (A1) by replacing the indicator variable $\mathbf{1}_{\{g = 1\}}$ with a real-valued random variable $h(X)$. If h only takes values in the unit interval, the variable $h(X)$ is a randomized decision classifier (RDC) which may be interpreted as the probability with which the positive label should be assigned. Equation (A1) modified for APCC reads:

$$q_{\mathrm{APCC}} = \frac{E_Q[h(X)] - E_P[h(X) \,|\, Y = 0]}{E_P[h(X) \,|\, Y = 1] - E_P[h(X) \,|\, Y = 0]}. \tag{A6}$$

Bella et al. [19] suggested the choice $h(x) = P[Y = 1 \,|\, X = x]$.

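For the simulation procedures run for this paper, a sample version of $E_Q[h(X)]$ was used:

$$\widehat{E}_Q[h(X)] = \frac{1}{n} \sum_{i=1}^{n} h(x_i), \tag{A7}$$

where $x_1, \ldots, x_n$ denotes a sample generated under the test population distribution $Q$.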
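The APCC estimator with the sample version (A7) can be sketched analogously. The sketch assumes that the values of h (for instance, estimated posterior probabilities of the positive class) are given as lists for a finite labelled training sample and for the test sample, so that the class-conditional expectations are replaced by empirical means; the function names and toy values are hypothetical.

```python
def mean(values):
    return sum(values) / len(values)


def apcc_estimate(test_probs, train_probs, train_labels, clip=True):
    """Hypothetical helper: APCC estimate with h given by the supplied probabilities."""
    e_test = mean(test_probs)  # sample version of E_Q[h(X)]
    e_pos = mean([h for h, y in zip(train_probs, train_labels) if y == 1])
    e_neg = mean([h for h, y in zip(train_probs, train_labels) if y == 0])
    if e_pos == e_neg:
        raise ValueError("APCC is undefined when the class-conditional means coincide")
    q = (e_test - e_neg) / (e_pos - e_neg)
    return min(max(q, 0.0), 1.0) if clip else q


# Class-conditional means 0.7 and 0.3, test mean 0.5: estimate about 0.5.
q_hat = apcc_estimate([0.5, 0.5], [0.8, 0.6, 0.2, 0.4], [1, 1, 0, 0])
```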

To deal with the case where in the setting of Section 2.1 with the double binormal model the training sample is infinite, the following formulae have been coded for the right-hand side of Equation (A6) with and Equation (A7) (with parameters as in Equation (3a)):

Median sweep (MS). Forman [2] proposed to stabilise the prevalence estimates from ACC based on a soft classifier s via Equation (A2), by taking the median of all ACC estimates based on for all thresholds t such that the denominator of the right-hand side of Equation (A1) exceeds 25%. For the purpose of this paper, the base soft classifier is in connection with Equation (A3), and the set of possible thresholds t is restricted to .
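A minimal sketch of Median sweep for a finite labelled training sample is shown below. The threshold grid is a hypothetical stand-in for the restricted threshold set used in the paper, TPR and FPR are estimated as empirical rates, and clipping the median to [0, 1] is an assumption made here.

```python
import statistics


def median_sweep(test_scores, train_scores, train_labels, thresholds, min_gap=0.25):
    """Median of the ACC estimates over all thresholds with TPR - FPR > min_gap."""
    pos = [s for s, y in zip(train_scores, train_labels) if y == 1]
    neg = [s for s, y in zip(train_scores, train_labels) if y == 0]
    estimates = []
    for t in thresholds:
        tpr = sum(s > t for s in pos) / len(pos)
        fpr = sum(s > t for s in neg) / len(neg)
        if tpr - fpr > min_gap:  # denominator of the ACC formula must exceed 25%
            share = sum(s > t for s in test_scores) / len(test_scores)
            estimates.append((share - fpr) / (tpr - fpr))
    if not estimates:
        raise ValueError("no threshold satisfies the denominator condition")
    return min(max(statistics.median(estimates), 0.0), 1.0)  # clip the median


# Usage with hypothetical toy data and the threshold grid 0.05, 0.10, ..., 0.95.
train_scores = [0.9, 0.8, 0.6, 0.4, 0.1, 0.2, 0.3, 0.7]
train_labels = [1, 1, 1, 1, 0, 0, 0, 0]
test_scores = [0.9, 0.7, 0.6, 0.55, 0.4, 0.3, 0.2, 0.2, 0.1, 0.05]
q_hat = median_sweep(test_scores, train_scores, train_labels, [i / 20 for i in range(1, 20)])
```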

Tuning ACC for ACCv. Observe that a main factor impacting the length of a confidence interval for a parameter is the standard deviation of the underlying estimator. This suggests the following approach to choosing a good threshold for the classifier in Equation (A3):

The test population distribution appears in the numerator of Equation (A9) because the confidence interval is calculated for a sample generated from it. The training population distribution is used in the denominator of Equation (A9) because the confidence interval is scaled by the denominator of Equation (A1). See Equation (A5) for the formulae used for the calculations of this paper for Equation (A9) in the setting of Section 2.1. As in the case of MS, for the purpose of this paper, the set of possible thresholds t is restricted to .

Tuning APCC for APCCv. Similar to Equation (A9), the idea is to minimise the variance of the estimator under while controlling the size of the denominator in Equation (A6). For , define

where and are the class-conditional densities of the features. Then, it holds that

A good choice for could be with

For the purpose of this paper, the set of possible parameters in Equation (A10) is restricted to . In the setting of Section 2.1, let a be defined as in Equation (3a) and let

Then, analogously to Equation (A8), in the setting of Section 2.1 the following formulae are obtained for use in the calculations of this paper for Equation (A10):

Appendix A.2. Prevalence Estimation by Distance Minimisation

The idea for prevalence estimation by distance minimisation is to obtain an estimate of $q = Q[Y = 1]$ by solving the following optimisation problem:

$$q^\ast = \arg\min_{0 \le q \le 1} d\big(Q[X \in \cdot\,],\; q\, P[X \in \cdot \,|\, Y = 1] + (1 - q)\, P[X \in \cdot \,|\, Y = 0]\big). \tag{A12}$$

Here, d denotes a distance measure of probability measures with the following two properties:

- $d(\mu_1, \mu_2) \ge 0$ for all probability measures $\mu_1$, $\mu_2$ to which d is applicable.

- $d(\mu_1, \mu_2) = 0$ if and only if $\mu_1 = \mu_2$.

There is no need for d to be a metric (i.e., asymmetric distance measures d with $d(\mu_1, \mu_2) \neq d(\mu_2, \mu_1)$ for some $\mu_1$, $\mu_2$ are permitted). By Property (2), distance minimisation estimators defined by Equation (A12) are Fisher consistent for estimating the positive class prevalence of the test population under prior probability shift. In the following subsections, three approaches to prevalence estimation based on distance minimisation are introduced that have been suggested in the literature and appear to be popular.

Appendix A.2.1. Prevalence Estimation by Minimising the Hellinger Distance

The Hellinger distance (see González-Castro et al. [3] and Castaño et al. [6] for more information on the Hellinger distance approach to prevalence estimation) of two probability measures $\mu_1$, $\mu_2$ on the same domain is defined in measure-theoretic terms by

$$H(\mu_1, \mu_2) = \left(\int \left(\sqrt{\frac{d\mu_1}{d\nu}} - \sqrt{\frac{d\mu_2}{d\nu}}\right)^2 d\nu\right)^{1/2}, \tag{A13}$$

where $\nu$ is any measure on the same domain such that both $\mu_1$ and $\mu_2$ are absolutely continuous with respect to $\nu$. The value of $H(\mu_1, \mu_2)$ does not depend upon the choice of $\nu$.

In practice, the calculation of the Hellinger distance must take into account that most of the time it has to be estimated from sample data. Therefore, the right-hand side of Equation (A13) is discretized by (in the setting of Section 2) decomposing the feature space into a finite number of subsets or bins and evaluating the probability measures whose distance is to be measured on these bins. This leads to the following approximate version of the minimisation problem in Equation (A12):

If the feature space is multi-dimensional, e.g., $\mathfrak{X} = \mathbb{R}^d$ for some $d > 1$, González-Castro et al. [3] also suggested minimising the Hellinger distance separately across all the d dimensions of the feature vector $X = (X_1, \ldots, X_d)$. In this case, the feature space is decomposed component-wise into b bins and Equation (A14) is modified to become

where . For the purposes of this paper, Equation (A14) has been adapted to become

where … is a sample of features of instances generated under the test population distribution and the -terms must be estimated from the training sample if it is finite and can be exactly pre-calculated in the case of an infinite training sample. In the latter case, Equation (A15) has to be modified to reflect the binormal setting of Section 2.1 for the training population distribution:

if for . For this paper, the number b of bins (see Maletzke et al. [8] for critical comments regarding the choice of the number of bins) in Equation (A16a) was chosen to be 4 or 8, and the boundaries of the bins were defined as follows ($\Phi^{-1}$ denotes the inverse of the standard normal distribution function):
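For one-dimensional features and a finite training sample, the binned Hellinger approach can be sketched as a grid search over q. The bin edges are supplied by the caller (the normal-quantile boundaries used in the paper are not reproduced here), and the grid search is an assumed, deliberately simple optimiser; the function names are hypothetical.

```python
import bisect
import math


def bin_shares(values, edges):
    """Relative frequencies of values in the bins defined by sorted inner edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def hellinger_estimate(test_x, train_pos_x, train_neg_x, edges, grid_size=1001):
    """Grid search for the q minimising the binned Hellinger distance between the
    test distribution and the mixture q * positive + (1 - q) * negative."""
    f_test = bin_shares(test_x, edges)
    f_pos = bin_shares(train_pos_x, edges)
    f_neg = bin_shares(train_neg_x, edges)
    best_q, best_dist = 0.0, math.inf
    for j in range(grid_size):
        q = j / (grid_size - 1)
        # Squared Hellinger distance; the square root would not move the minimiser.
        dist = sum(
            (math.sqrt(ft) - math.sqrt(q * fp + (1 - q) * fn)) ** 2
            for ft, fp, fn in zip(f_test, f_pos, f_neg)
        )
        if dist < best_dist:
            best_q, best_dist = q, dist
    return best_q


# Usage: three bins split at 0 and 1; 30% of the test points fall in the positive bin.
q_hat = hellinger_estimate([2.0] * 3 + [-1.0] * 7, [2.0] * 10, [-1.0] * 10, edges=[0.0, 1.0])
```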

Appendix A.2.2. Prevalence Estimation by Minimising the Energy Distance

Kawakubo et al. [21] and Castaño et al. [6] provided background information for the application of the Energy distance approach to prevalence estimation.

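Denote by V and V′, respectively, the projections on the first d components and the last d components of $\mathbb{R}^{2d}$, i.e.,

$$V(x_1, \ldots, x_{2d}) = (x_1, \ldots, x_d), \qquad V'(x_1, \ldots, x_{2d}) = (x_{d+1}, \ldots, x_{2d}).$$

V and V′ are also used to denote the identity mapping on $\mathbb{R}^d$, i.e., $V(x) = x$ and $V'(x) = x$ for $x \in \mathbb{R}^d$.

Let $\mu_1$ and $\mu_2$ be two probability measures on $\mathbb{R}^d$. Then, $\mu_1 \otimes \mu_2$ denotes the product measure of $\mu_1$ and $\mu_2$ on $\mathbb{R}^{2d}$. Hence, $\mu_1 \otimes \mu_2$ is the probability measure on $\mathbb{R}^{2d}$ such that V and V′ are stochastically independent under $\mu_1 \otimes \mu_2$, with V distributed according to $\mu_1$ and V′ according to $\mu_2$.

Denote by $\|x\|$ the Euclidean norm of $x \in \mathbb{R}^d$. Then, the Energy distance of two probability measures $\mu_1$, $\mu_2$ on $\mathbb{R}^d$ with $E_{\mu_1}[\|V\|] < \infty$ and $E_{\mu_2}[\|V\|] < \infty$ can be represented as

$$\mathcal{E}(\mu_1, \mu_2) = 2\, E_{\mu_1 \otimes \mu_2}\big[\|V - V'\|\big] - E_{\mu_1 \otimes \mu_1}\big[\|V - V'\|\big] - E_{\mu_2 \otimes \mu_2}\big[\|V - V'\|\big]. \tag{A17}$$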

Recall that in this section the aim is to estimate class prevalences by solving the optimisation problem in Equation (A12). To do so by means of minimising the Energy distance, fix a function and choose as probability measures and the distributions of under the probability measures and whose distance is minimised in Equation (A12):

for and such that all involved probabilities are well-defined. With this choice for and , it follows from Equation (A17) that

with and . The unique minimising value of q for the right-hand side of Equation (A18) is found to be

The fact that there is a closed-form solution for the estimate in the two-class case is one of the advantages of the Energy distance approach.

When both the training and the test samples are finite, all the sub-terms of A and B in Equation (A19) can be empirically estimated in a straight-forward way. For the semi-finite version of Equation (A19), when the training sample is infinite, the terms and have to be replaced by empirical approximations based on a sample … of features of instances generated under the test population distribution , while the population measures for and , respectively, are kept:
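For one-dimensional features, with h taken as the identity (an assumption made here for simplicity) and both samples finite, the closed-form minimiser can be sketched as follows. Write $e(\mu, \nu)$ for the mean of $|V - V'|$ when V and V′ are drawn independently from the samples for $\mu$ and $\nu$, and $P_1$, $P_0$ for the class-conditional training distributions (notation introduced only for this sketch). Setting the derivative in q of the Energy distance (A17) between the test distribution Q and the mixture $q P_1 + (1 - q) P_0$ to zero gives $q = \frac{e(Q, P_0) - e(Q, P_1) + e(P_1, P_0) - e(P_0, P_0)}{2 e(P_1, P_0) - e(P_1, P_1) - e(P_0, P_0)}$. Because the objective is convex in q, clipping this value to [0, 1] gives the constrained minimiser. This expression is derived here from Equation (A17) and is assumed, not checked, to match Equation (A19) up to notation; the function names are hypothetical.

```python
def mean_abs_diff(a, b):
    """Empirical E[|V - V'|] with V drawn from sample a and V' from sample b."""
    return sum(abs(x - y) for x in a for y in b) / (len(a) * len(b))


def energy_estimate(test_x, train_pos_x, train_neg_x):
    """Closed-form minimiser over [0, 1] of the empirical Energy distance between
    the test sample and the mixture q * positive + (1 - q) * negative."""
    e_t1 = mean_abs_diff(test_x, train_pos_x)
    e_t0 = mean_abs_diff(test_x, train_neg_x)
    e_10 = mean_abs_diff(train_pos_x, train_neg_x)
    e_11 = mean_abs_diff(train_pos_x, train_pos_x)
    e_00 = mean_abs_diff(train_neg_x, train_neg_x)
    denominator = 2 * e_10 - e_11 - e_00  # Energy distance between the two classes
    if denominator <= 0:
        raise ValueError("class-conditional samples are indistinguishable")
    q = (e_t0 - e_t1 + e_10 - e_00) / denominator
    return min(max(q, 0.0), 1.0)  # convex objective, so clipping gives the minimiser


# Usage: positives at 1, negatives at 0, 30% of the test points at 1.
q_hat = energy_estimate([1.0] * 3 + [0.0] * 7, [1.0] * 5, [0.0] * 5)
```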

Accordingly, for the case where in the setting of Section 2.1 with the double binormal model the training sample is infinite, the following formulae have been coded for Equation (A20) and the terms A and B in Equation (A19), with (parameters as in Equation (3a)):

Appendix A.2.3. Prevalence Estimation by Minimising the Kullback–Leibler Distance

In this section, the approach to prevalence estimation based on minimising the Kullback–Leibler distance is presented. Note that the Kullback–Leibler distance is also called Kullback–Leibler divergence (e.g., in [31]). In practical applications, this approach is equivalent to maximum likelihood estimation of the class prevalences. Due to the asymptotic efficiency of maximum likelihood estimators in terms of the variances of the estimates, this approach can serve as an absolute benchmark for what can be achieved in terms of short confidence intervals. Saerens et al. [18] popularised the EM-algorithm version of the approach (a way to obtain the maximum likelihood estimate [32]) in the machine learning community.

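Let $\mu_1$ and $\mu_2$ be two probability measures on the same domain. Assume that both $\mu_1$ and $\mu_2$ are absolutely continuous with respect to a measure $\nu$ on the same domain, with densities

$$f_1 = \frac{d\mu_1}{d\nu} \quad \text{and} \quad f_2 = \frac{d\mu_2}{d\nu},$$

respectively. If the densities $f_1$, $f_2$ are positive, the Kullback–Leibler distance of $\mu_1$ to $\mu_2$ is then defined as

$$\mathrm{KL}(\mu_1 \,\|\, \mu_2) = \int f_1 \log\left(\frac{f_1}{f_2}\right) d\nu. \tag{A22}$$

In contrast to the Hellinger and Energy distances, which are introduced in Appendix A.2.1 and Appendix A.2.2, respectively, the Kullback–Leibler distance is not symmetric in its arguments, i.e., in general $\mathrm{KL}(\mu_1 \,\|\, \mu_2) \neq \mathrm{KL}(\mu_2 \,\|\, \mu_1)$ may occur. In Equation (A22), the lack of symmetry is indicated by separating the arguments not by a comma but by the sign $\|$. However, while $\mathrm{KL}$ is not a metric, it still has the properties $\mathrm{KL}(\mu_1 \,\|\, \mu_2) \ge 0$ and $\mathrm{KL}(\mu_1 \,\|\, \mu_2) = 0$ if and only if $\mu_1 = \mu_2$.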

The choice and leads to a computationally convenient Kullback–Leibler version of the minimisation problem in Equation (A12). One has to assume that there are a measure on the feature space and densities , and such that

This gives the following optimisation problem:

where the density ratio is defined by

and additionally it must hold that . Under fairly general smoothness conditions, the right-hand side of Equation (A23) can be differentiated with respect to q. This gives the following necessary condition for optimality in Equation (A23):

When both the training and the test samples are finite, the right-hand side of Equation (A24) as a function of q can be empirically estimated in a straightforward way.

For the semi-finite setting according to Section 2.1 with an infinite training sample, it can be assumed that the density ratio R is fully known from the specification of the model. In contrast, the test population distribution Q of the features is only known through a sample $x_1, \ldots, x_n$ (or empirical distribution) generated under Q. Replacing the expectation with respect to Q in Equation (A24) with a sample average gives the equation

as an approximate necessary condition for q to minimise the Kullback–Leibler distance in Equation (A23). Solving Equation (A25a) results in an approximation $\widehat{q}$ of the minimiser and therefore of the test population prevalence $Q[Y = 1]$.

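It is not hard to see (see, for instance, Lemma 4.1 of [33]) that Equation (A25a) has a unique solution $\widehat{q}$ with $0 < \widehat{q} < 1$ if and only if

$$\frac{1}{n} \sum_{i=1}^{n} R(x_i) > 1 \quad \text{and} \quad \frac{1}{n} \sum_{i=1}^{n} \frac{1}{R(x_i)} > 1. \tag{A25b}$$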

If Equation (A25b) holds, the solution of Equation (A25a) is if and only if and the solution is if and only if . For the purpose of this paper, clipping is applied when solving Equation (A25a). In the literature on prevalence estimation, clipping is applied routinely (see, e.g., [2]). In general, one should be careful with clipping because the fact that there is no estimate between 0 and 1 could be a sign that the assumption of prior probability shift is violated. This is not an issue in the setting of this paper because prior probability shift is created by the design of the simulation study.

Since, as shown in the proof of Lemma 4.1 of Tasche [33], it is not possible that both and occur, it makes sense to set

- if ; and

- if .

Equation (A25a) also happens to be the first-order condition for the maximum likelihood estimator of the test population prevalence q of the positive class, see Peters and Coberly [26]. A popular method to determine the maximum likelihood estimates for the class prevalences in mixture proportion problems such as the one of this paper is to deploy an Expectation Maximisation (EM) approach [18,32]. However, in the specific semi-finite context of this paper, and more generally in the two-class case, it is more efficient to solve Equation (A25a) directly by an appropriate numerical algorithm (see, for instance, the documentation of the R function “uniroot”).
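In place of uniroot, plain bisection can solve the first-order condition. The sketch below assumes that this condition has the standard form $\frac{1}{n}\sum_{i=1}^{n} \frac{R(x_i) - 1}{1 + q\,(R(x_i) - 1)} = 0$, obtained by differentiating the log-likelihood of the two-component mixture. It takes the density ratio values $R(x_i) > 0$ as given and applies the following clipping rule, which is an assumption made here: the estimate is 0 when the mean of R is at most 1, and 1 when the mean of 1/R is at most 1. The function name is hypothetical.

```python
def ml_prevalence(density_ratios, tol=1e-12):
    """Hypothetical helper: maximum likelihood estimate of the test prevalence from
    density ratio values R(x_i) > 0, clipped to the boundary of the unit interval."""
    n = len(density_ratios)
    mean_r = sum(density_ratios) / n
    mean_inv_r = sum(1 / r for r in density_ratios) / n
    if mean_r <= 1:
        return 0.0  # no interior root: clip to 0
    if mean_inv_r <= 1:
        return 1.0  # no interior root: clip to 1

    def score(q):
        # Decreasing in q; positive at q = 0 and negative at q = 1 in this branch.
        return sum((r - 1) / (1 + q * (r - 1)) for r in density_ratios) / n

    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if score(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


# Usage: ratios 2 and 0.5 give the root q = 0.5.
q_hat = ml_prevalence([2.0, 0.5])
```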

The confidence and prediction intervals based on $\widehat{q}$, as found by solving the empirical version of Equation (A24) or Equation (A25a), are determined with the following two methods:

- Method MLboot: Bootstrapping the samples , …, , , …, , and , …, from Section 2.3 and creating a sample of by solving Equation (A25a) for each of the bootstrapping samples.

- Method MLinf: Asymptotic approximation by making use of the central limit theorem for maximum likelihood estimators (see, e.g., Theorem 10.1.12 of [27]). According to this limit theorem, $\sqrt{n}\,(\widehat{q} - q)$ converges for $n \to \infty$ toward a normal distribution with mean 0 and variance v given by

$$v = \left(E_Q\left[\left(\frac{R(X) - 1}{1 + q\,(R(X) - 1)}\right)^2\right]\right)^{-1}, \tag{A26a}$$

where q is the true positive class prevalence of the population underlying the test sample. The right-hand side of Equation (A26a) is approximated with an estimate that is based on a sample average:

$$\widehat{v} = \left(\frac{1}{n} \sum_{i=1}^{n} \left(\frac{R(x_i) - 1}{1 + \widehat{q}\,(R(x_i) - 1)}\right)^2\right)^{-1}, \tag{A26b}$$

where $\widehat{q}$ denotes the (unique, if any) solution of Equation (A25a) in the unit interval and $x_1, \ldots, x_n$ was generated under the test population distribution $Q$. In the binormal setting of Section 2.1, the density ratio R is given by Equation (3b) if the training sample is infinite and can be derived from the posterior class probabilities obtained by logistic regression if the training sample is finite.
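A sketch of an MLinf-type interval follows. It assumes that the asymptotic variance is the inverse Fisher information of the mixture likelihood, estimated by the sample mean of the squared score $\big((R(x_i) - 1)/(1 + \widehat{q}\,(R(x_i) - 1))\big)^2$, and that the normal interval $\widehat{q} \pm z\sqrt{\widehat{v}/n}$ is clipped to [0, 1]. The function name is hypothetical; `statistics.NormalDist` from the Python standard library supplies the normal quantile.

```python
import math
from statistics import NormalDist


def ml_inf_interval(density_ratios, q_hat, alpha=0.05):
    """Hypothetical helper: normal-approximation interval for the ML prevalence estimate."""
    n = len(density_ratios)
    # Sample mean of the squared score, an estimate of the Fisher information at q_hat.
    info = sum(((r - 1) / (1 + q_hat * (r - 1))) ** 2 for r in density_ratios) / n
    if info == 0:
        raise ValueError("zero Fisher information: the density ratio is constant 1")
    v_hat = 1 / info
    z = NormalDist().inv_cdf(1 - alpha / 2)
    half_width = z * math.sqrt(v_hat / n)
    return max(q_hat - half_width, 0.0), min(q_hat + half_width, 1.0)


# Usage: 100 density ratio values with estimated prevalence 0.5.
lo, hi = ml_inf_interval([2.0, 0.5] * 50, q_hat=0.5)
```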

Appendix B. Analysis of Error Adjusted Bootstrapping

Without explicitly mentioning the notion of prediction intervals, Daughton and Paul [11] considered the problem of how to construct prediction intervals with correct coverage rates for the realised positive class prevalence. Their “error adjusted bootstrapping” approach works for crisp classifiers only.

Let g be a crisp classifier as defined in Appendix A.1. In the notation of that section, it then holds that

$$Q[Y = 1] = Q[Y = 1 \,|\, g = 1]\, Q[g = 1] + Q[Y = 1 \,|\, g = 0]\, Q[g = 0]. \tag{A27}$$

Hence, the event “an instance in the test sample turns out to have a positive label” can be simulated in three steps:

- Apply the classifier g to the bootstrapped features of an instance in the test sample.

- If a positive label is predicted by g, simulate a Bernoulli variable with success probability $Q[Y = 1 \,|\, g = 1]$. If a negative label is predicted by g, simulate a Bernoulli variable with success probability $Q[Y = 1 \,|\, g = 0]$.

- In both cases, if the outcome of the Bernoulli variable is success, count the result as positive class, otherwise as negative class.

By Equation (A27), the probability of the positive class in this experiment equals the prevalence of the positive class in the test population distribution. Repeat the experiment for all the instances in the bootstrapped test sample. Then, the relative frequency of the positive outcomes of the experiments is an approximate realisation of the relative frequency of the positive class labels in the test sample. In the same way as with the binomial approach described in Section 2.3, it can therefore be used to construct a bootstrap prediction interval.
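The three steps above can be sketched as follows. The sketch assumes that the crisp predictions g(x) of the test instances are already computed (bootstrapping the features and then applying g yields the same bootstrapped predictions) and that the two success probabilities are supplied by the caller. In the approach of Daughton and Paul they would be estimated on the training sample, which is the step examined in the remainder of this appendix. Function and parameter names are hypothetical.

```python
import random


def _quantile(sorted_values, prob):
    """Linearly interpolated empirical quantile of an already sorted list."""
    pos = prob * (len(sorted_values) - 1)
    lower = int(pos)
    upper = min(lower + 1, len(sorted_values) - 1)
    return sorted_values[lower] + (pos - lower) * (sorted_values[upper] - sorted_values[lower])


def error_adjusted_bootstrap_interval(test_predictions, prob_pos_if_pred_pos,
                                      prob_pos_if_pred_neg, n_boot=1000, alpha=0.05, seed=None):
    """Bootstrap prediction interval for the realised positive-label frequency.

    test_predictions: crisp predictions g(x) in {0, 1} for the test instances.
    prob_pos_if_pred_pos / prob_pos_if_pred_neg: Bernoulli success probabilities
    used when g predicts a positive / negative label.
    """
    rng = random.Random(seed)
    n = len(test_predictions)
    frequencies = []
    for _ in range(n_boot):
        boot = rng.choices(test_predictions, k=n)  # bootstrap the test sample
        positives = 0
        for g in boot:
            p_success = prob_pos_if_pred_pos if g == 1 else prob_pos_if_pred_neg
            positives += rng.random() < p_success  # one Bernoulli draw per instance
        frequencies.append(positives / n)
    frequencies.sort()
    return _quantile(frequencies, alpha / 2), _quantile(frequencies, 1 - alpha / 2)


# Usage: 30 predicted positives among 100 test instances, error-free predictions.
lo, hi = error_adjusted_bootstrap_interval([1] * 30 + [0] * 70, 1.0, 0.0, seed=3)
```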

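Daughton and Paul [11] noted that this approach worked if the “predictive values” $Q[Y = 1 \,|\, g = 1]$ and $Q[Y = 0 \,|\, g = 0]$ of the test sample ($Q[Y = 1 \,|\, g = 1]$ is also called “precision” in the literature) were the same as in the training sample and hence could be estimated in the training sample:

$$Q[Y = 1 \,|\, g = 1] = P[Y = 1 \,|\, g = 1] \quad \text{and} \quad Q[Y = 0 \,|\, g = 0] = P[Y = 0 \,|\, g = 0]. \tag{A28}$$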

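Unfortunately, Equation (A28) does not hold under prior probability shift, as is implied by the following representation of the precision in terms of TPR, FPR and the training population prevalence p:

$$P[Y = 1 \,|\, g = 1] = \frac{p\, \mathrm{TPR}}{p\, \mathrm{TPR} + (1 - p)\, \mathrm{FPR}}. \tag{A29}$$

Under prior probability shift, TPR and FPR are not changed, but p changes. Hence, by replacing p with q on the right-hand side of Equation (A29), it follows that

$$Q[Y = 1 \,|\, g = 1] = \frac{q\, \mathrm{TPR}}{q\, \mathrm{TPR} + (1 - q)\, \mathrm{FPR}} \neq P[Y = 1 \,|\, g = 1] \quad \text{in general if } q \neq p. \tag{A30}$$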

Therefore, under prior probability shift, the approach by Daughton and Paul [11] is unlikely to work in general. See Table 3 in Section 3.1 for a numerical example. It is unclear whether requiring Equation (A28) to hold defines a type of dataset shift that might occur in the real world.
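The size of the resulting bias can be illustrated with a short calculation. The sketch below assumes a hypothetical classifier with TPR = 0.8 and FPR = 0.1 (unchanged under prior probability shift) and a training prevalence of 0.3. It computes the predictive values at the training prevalence via Equation (A29) and the analogous Bayes formula for the negative predictive value, and then the expected prevalence generated by the three-step simulation when the test prevalence differs. These values are illustrative only and are not those underlying Table 3.

```python
def precision(p, tpr, fpr):
    # Equation (A29): Q[Y=1 | g(X)=1] as a function of prevalence p.
    return p * tpr / (p * tpr + (1 - p) * fpr)


def negative_predictive_value(p, tpr, fpr):
    # Analogous Bayes formula for Q[Y=0 | g(X)=0] (not stated in the text above).
    return (1 - p) * (1 - fpr) / ((1 - p) * (1 - fpr) + p * (1 - tpr))


def simulated_prevalence(p_test, p_train, tpr, fpr):
    """Expected prevalence produced by the three-step simulation when the
    predictive values are taken from the training population (Equation (A28))
    but the test population has prevalence p_test."""
    share_pred_pos = p_test * tpr + (1 - p_test) * fpr  # Q[g(X)=1]
    ppv_train = precision(p_train, tpr, fpr)
    npv_train = negative_predictive_value(p_train, tpr, fpr)
    return share_pred_pos * ppv_train + (1 - share_pred_pos) * (1 - npv_train)


tpr, fpr, p_train = 0.8, 0.1, 0.3  # hypothetical values
for p_test in (0.1, 0.3, 0.5):
    print(p_test, round(simulated_prevalence(p_test, p_train, tpr, fpr), 3))
```

With these hypothetical values, the simulation targets about 0.20 when the true test prevalence is 0.1 and about 0.40 when it is 0.5; only for p = 0.3 does it reproduce the true prevalence, in line with the argument above.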

References

1. Barranquero, J.; González, P.; Díez, J.; del Coz, J. On the study of nearest neighbor algorithms for prevalence estimation in binary problems. Pattern Recognit. 2013, 46, 472–482.

2. Forman, G. Quantifying counts and costs via classification. Data Min. Knowl. Discov. 2008, 17, 164–206.

3. González-Castro, V.; Alaiz-Rodríguez, R.; Alegre, E. Class distribution estimation based on the Hellinger distance. Inf. Sci. 2013, 218, 146–164.

4. Du Plessis, M.; Niu, G.; Sugiyama, M. Class-prior estimation for learning from positive and unlabeled data. Mach. Learn. 2017, 106, 463–492.

5. González, P.; Castaño, A.; Chawla, N.; del Coz, J. A Review on Quantification Learning. ACM Comput. Surv. 2017, 50, 74:1–74:40.

6. Castaño, A.; Morán-Fernández, L.; Alonso, J.; Bolón-Canedo, V.; Alonso-Betanzos, A.; del Coz, J. Análisis de algoritmos de cuantificación basados en ajuste de distribuciones. In IX Simposio de Teoría y Aplicaciones de la Minería de Datos (TAMIDA 2018); Riquelme, J., Troncoso, A., García, S., Eds.; Asociación Española para la Inteligencia Artificial: Granada, Spain, 2018; pp. 913–918.

7. Keith, K.; O’Connor, B. Uncertainty-aware generative models for inferring document class prevalence. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; pp. 4575–4585.

8. Maletzke, A.; Dos Reis, D.; Cherman, E.; Batista, G. DyS: A Framework for Mixture Models in Quantification. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence (AAAI-19), Honolulu, HI, USA, 27 January–1 February 2019.

9. Vaz, A.; Izbicki, R.; Stern, R. Quantification Under Prior Probability Shift: The Ratio Estimator and its Extensions. J. Mach. Learn. Res. 2019, 20, 1–33.

10. Hopkins, D.; King, G. A Method of Automated Nonparametric Content Analysis for Social Science. Am. J. Polit. Sci. 2010, 54, 229–247.

11. Daughton, A.; Paul, M. Constructing Accurate Confidence Intervals when Aggregating Social Media Data for Public Health Monitoring. In Proceedings of the AAAI International Workshop on Health Intelligence (W3PHIAI), Honolulu, HI, USA, 27 January–1 February 2019.

12. Meeker, W.; Hahn, G.; Escobar, L. Statistical Intervals: A Guide for Practitioners and Researchers, 2nd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2017.

13. Barranquero, J.; Díez, J.; del Coz, J. Quantification-oriented learning based on reliable classifiers. Pattern Recognit. 2015, 48, 591–604.

14. Zhang, K.; Schölkopf, B.; Muandet, K.; Wang, Z. Domain Adaptation Under Target and Conditional Shift. In Proceedings of the 30th International Conference on International Conference on Machine Learning, JMLR.org, ICML’13, Atlanta, GA, USA, 16–21 June 2013; Volume 28, pp. III-819–III-827.

15. Hofer, V.; Krempl, G. Drift mining in data: A framework for addressing drift in classification. Comput. Stat. Data Anal. 2013, 57, 377–391.

16. Lipton, Z.; Wang, Y.X.; Smola, A. Detecting and Correcting for Label Shift with Black Box Predictors. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; Dy, J., Krause, A., Eds.; Cornell University: Ithaca, NY, USA, 2018; Volume 80, pp. 3122–3130.

17. Moreno-Torres, J.; Raeder, T.; Alaiz-Rodriguez, R.; Chawla, N.; Herrera, F. A unifying view on dataset shift in classification. Pattern Recognit. 2012, 45, 521–530.

18. Saerens, M.; Latinne, P.; Decaestecker, C. Adjusting the Outputs of a Classifier to New a Priori Probabilities: A Simple Procedure. Neural Comput. 2001, 14, 21–41.

19. Bella, A.; Ferri, C.; Hernandez-Orallo, J.; Ramírez-Quintana, M. Quantification via probability estimators. In Proceedings of the 2010 IEEE International Conference on Data Mining (ICDM), Sydney, NSW, Australia, 3–17 December 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 737–742.

20. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2014.

21. Kawakubo, H.; du Plessis, M.; Sugiyama, M. Computationally Efficient Class-Prior Estimation under Class Balance Change Using Energy Distance. IEICE Trans. Inf. Syst. 2016, 99, 176–186.

22. Cramer, J. Logit Models From Economics and Other Fields; Cambridge University Press: Cambridge, UK, 2003.

23. Davison, A.; Hinkley, D. Bootstrap Methods and their Application; Cambridge University Press: Cambridge, UK, 1997.

24. Tasche, D. Fisher Consistency for Prior Probability Shift. J. Mach. Learn. Res. 2017, 18, 3338–3369.

25. Gart, J.; Buck, A. Comparison of a screening test and a reference test in epidemiologic studies. II. A probabilistic model for the comparison of diagnostic tests. Am. J. Epidemiol. 1966, 83, 593–602.

26. Peters, C.; Coberly, W. The numerical evaluation of the maximum-likelihood estimate of mixture proportions. Commun. Stat. Theory Methods 1976, 5, 1127–1135.

27. Casella, G.; Berger, R. Statistical Inference, 2nd ed.; Thomson Learning: Pacific Grove, CA, USA, 2002.

28. Kull, M.; Silva Filho, T.; Flach, P. Beyond sigmoids: How to obtain well-calibrated probabilities from binary classifiers with beta calibration. Electron. J. Stat. 2017, 11, 5052–5080.

29. Hofer, V. Adapting a classification rule to local and global shift when only unlabelled data are available. Eur. J. Oper. Res. 2015, 243, 177–189.

30. Frühwirth-Schnatter, S. Finite Mixture and Markov Switching Models: Modeling and Applications to Random Processes; Springer: Berlin, Germany, 2006.

31. Du Plessis, M.; Sugiyama, M. Semi-supervised learning of class balance under class-prior change by distribution matching. Neural Netw. 2014, 50, 110–119.

32. Redner, R.; Walker, H. Mixture densities, maximum likelihood and the EM algorithm. SIAM Rev. 1984, 26, 195–239.

33. Tasche, D. The Law of Total Odds. arXiv 2013, arXiv:1312.0365.

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).