1. Introduction

One of the most difficult situations for people affected by hearing loss is undoubtedly a conversational setting with persistent background noise, whether they wear hearing aids (HA) or cochlear implants (CI) [1,2,3].

Improving speech perception in noisy environments is of paramount importance for individuals with hearing loss, as it enables them to effectively communicate and engage in social interactions, enhancing their quality of life and reducing the negative impact of hearing impairment on their daily activities.

As such, one of the most prevalent approaches to solving or, at the very least, alleviating this issue has been to improve the signal-to-noise ratio (SNR), an index that compares the intensity of the signal of interest (in this specific case, an interlocutor speaking to the listener) with the intensity of the background noise that interferes with it. A higher SNR implies clearer and easier communication.
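As a minimal illustration of the SNR definition above, the following sketch computes the SNR in dB in two equivalent ways. The function names and the example levels are assumptions chosen for illustration and are not taken from the study.

```python
import math

def snr_db(signal_level_db: float, noise_level_db: float) -> float:
    """SNR in dB when both levels are already expressed in dB."""
    return signal_level_db - noise_level_db

def snr_db_from_power(signal_power: float, noise_power: float) -> float:
    """SNR in dB from linear power (or intensity) values."""
    return 10.0 * math.log10(signal_power / noise_power)

# Hypothetical example: speech at 70 dB against noise at 65 dB.
print(snr_db(70.0, 65.0))
# Hypothetical example: signal power ten times the noise power.
print(snr_db_from_power(100.0, 10.0))
```

A positive value means the speech is more intense than the noise; a negative value means the noise dominates.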

CI manufacturers focused on increasing the SNR as much as possible in different ways.

The first proposed method of SNR improvement was based on sound pre-processing strategies [4,5,6]. A variety of pre-processing strategies to improve speech in noise have been made available on almost all sound processors by most manufacturers, and several studies have indicated a marked improvement in speech performance in noise with the use of noise-reduction algorithms in cochlear implant users [7,8,9,10].

A variety of pre-processing strategies have been developed by Cochlear Limited since the release of the Nucleus Freedom processor and subsequent models. With the launch of the Nucleus 6 sound processor (CP900), a new pre-processing strategy called SCAN was introduced.

The second solution employed by CI manufacturers to improve speech perception in noisy environments was the use of external accessories [11,12]. The most common solution was the addition of wireless microphones, such as the Mini-Microphone 2 (MiniMic2) and 2+ by Cochlear.

They are intended to be clipped to a speaker’s clothing (using the directional microphone) or placed flat on a table (using the omnidirectional microphone) to stream the talkers’ voices directly into the sound processor.

Therefore, the main difference between SCAN technology and the wireless MiniMic lies in their functions and modes of operation. SCAN is an internal pre-processing strategy integrated into Nucleus sound processors, and it relies on the device’s algorithms to adapt to different listening environments automatically. On the other hand, MiniMic is an external accessory that captures the sound directly from the speaker, bypassing the environmental noise and providing improved speech perception for the CI user.

The purpose of this study was to determine whether, in cochlear implant recipients, speech recognition in noise differed significantly between listening with the SCAN technology alone and listening with the wireless microphone technology of the MiniMic2.

2. Materials and Methods

For this observational prospective study, we enrolled a group of adult subjects with bilateral sensorineural hearing loss who had been using a unilateral cochlear implant for at least 6 months. All the patients had been implanted at our third-level audiologic referral center and underwent regular follow-up at our hospital. Only patients with Cochlear’s proprietary CP900, CP950, and CP1000 sound processors were selected. All participants had prior experience with the SCAN function, and all of them had a bisyllabic word recognition score in noise (SNR +10 dB) of ≥ 60%.

Speech recognition in noise, as measured by the SRT, was assessed using the Matrix Sentence Test [13] in two conditions: with the SCAN function alone and with the MiniMic 2 wireless device alone. After three practice runs, the two tests were run one after the other in random order to avoid order-related bias in the results.

If a subject wore an HA on the non-implanted ear, it was turned off during the evaluation.

The test was administered through a single frontal loudspeaker, 1 m away from the subject; the loudspeaker was both the source of the signal and of the background noise (S0N0) and was connected to a personal computer, from which the Matrix Sentence Test was initiated.

The testing environment was a 4 × 8 m acoustically isolated room with dense sound-absorbing panels on the walls and ceiling. It was characterized by negligible background noise and a very low reverberation time.

The Matrix Sentence Test was developed by HörTech gGmbH of Oldenburg and is today one of the most widely used adaptive speech audiometry tests, especially for evaluating the efficacy of HAs and CIs. One of the main reasons for this success is that the test is currently available in more than twenty languages, which makes results far easier to share and compare internationally.

It works as follows: the subject is required to repeat out loud a sentence made of 5 words randomly selected from a preset list and presented through the loudspeaker together with a masking noise. The test measures the Speech Reception Threshold (SRT) with a precision of ±1 dB.

The sentences randomly generated by this test follow a language-specific grammatical structure; in this study, following Italian grammar, the order is: subject, verb, numeral adjective, object, and qualifying adjective. Each word is randomly selected from a 10-word pool, so that up to 100,000 different but still grammatically correct sentences can be obtained, and the test can be administered repeatedly to the same subject without influencing its result.
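The sentence count follows directly from the structure described above: five word slots with ten alternatives each give 10^5 combinations. The sketch below illustrates this with placeholder tokens; the slot labels and tokens are hypothetical and are not the actual Italian Matrix word lists.

```python
import random

# Placeholder tokens, NOT the real Italian Matrix vocabulary.
SLOTS = ["subject", "verb", "numeral", "object", "adjective"]
WORDS_PER_SLOT = 10
pools = {slot: [f"{slot}_{i}" for i in range(WORDS_PER_SLOT)] for slot in SLOTS}

def count_sentences(pools):
    """Number of distinct sentences: the product of the pool sizes."""
    total = 1
    for words in pools.values():
        total *= len(words)
    return total

def random_sentence(pools, rng):
    """Draw one word per slot, keeping the fixed grammatical order."""
    return [rng.choice(pools[slot]) for slot in SLOTS]

total_sentences = count_sentences(pools)
print(total_sentences)
print(random_sentence(pools, random.Random(0)))
```

Because every slot is drawn independently, any sampled sentence keeps the same grammatical order while its content remains hard to predict.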

The Matrix Sentence Test measures the SRT, which is the SNR at which 50% of the speech is correctly understood by the subject.
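The definition of the SRT as a 50% point can be visualized with a psychometric function. The sketch below assumes a logistic shape, which is a common modeling choice but is not stated in this paper; the SRT of −2 dB and the slope of 0.15 per dB are hypothetical values used only for illustration.

```python
import math

def intelligibility(snr_db, srt_db, slope_per_db=0.15):
    """Proportion of words understood at a given SNR (assumed logistic model).

    slope_per_db is the slope of the curve at the SRT; for a logistic
    function this corresponds to a steepness factor of 4 * slope.
    """
    return 1.0 / (1.0 + math.exp(-4.0 * slope_per_db * (snr_db - srt_db)))

# Hypothetical listener with an SRT of -2 dB SNR.
for snr in (-8.0, -2.0, 4.0):
    print(snr, round(intelligibility(snr, srt_db=-2.0), 2))
```

By construction, the curve returns exactly 0.5 when the SNR equals the SRT, so a lower (more negative) SRT indicates better performance in noise.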

In this test, the noise is presented at a constant level of 65 dB, and the SNR starts at 0 dB.

When the subject correctly understands ≥50% of a sentence, the test automatically decreases the SNR of the following item; conversely, if they understand <50% of it, the test increases the SNR of the upcoming sentence. These adjustments of the signal level are gradual, and they depend on how many and which words were successfully repeated by the subject. Control subjects had SRTs with a mean of −6.7 dB SNR and a standard deviation (SD) of 0.7 dB [13].
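The adaptive rule described above can be sketched as follows. This is a simplified illustration and not the actual algorithm of the Matrix Sentence Test: the proportional step rule, the function names, and the 2 dB maximum step are assumptions. With five words per sentence, the score can never be exactly 50%, so the rule always moves the SNR in one direction or the other.

```python
def next_snr(current_snr_db, words_correct, words_total=5, max_step_db=2.0):
    """Return the SNR for the next sentence (simplified, hypothetical rule)."""
    fraction = words_correct / words_total
    # Scale the step by the distance of the score from 50%.
    # Above 50% -> lower (harder) SNR; below 50% -> higher (easier) SNR.
    step = -max_step_db * (fraction - 0.5) / 0.5
    return current_snr_db + step

def run_track(scores, start_snr_db=0.0):
    """Apply next_snr over a list of per-sentence word scores."""
    track = [start_snr_db]
    for words_correct in scores:
        track.append(next_snr(track[-1], words_correct))
    return track

# Hypothetical scores (words repeated correctly out of 5) for five sentences.
track = run_track([5, 4, 3, 2, 4])
print([round(s, 1) for s in track])
```

In a real adaptive track, the SNR converges toward the level at which the subject repeats about half of the words, which is the SRT.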

In this study, the test’s background noise remained on for the entire duration of the test to prevent the SCAN technology from automatically switching auditory scenes.

A randomized list of 20 sentences generated by the Matrix Sentence Test was administered to the participants, taking approximately 4 min per test, starting with a noise level of 65 dB and an SNR of 0 dB.

Once the first test was completed and the examiner had noted the resulting SRT, the participant repeated the test with 20 new randomized sentences in the other condition: with the MiniMic2 alone if SCAN had been used in the first test, or vice versa.

In this configuration, the MiniMic2 was hung from the loudspeaker support, about 15 cm below the transducer, mimicking the normal position of the device when clipped to a speaker’s clothes.

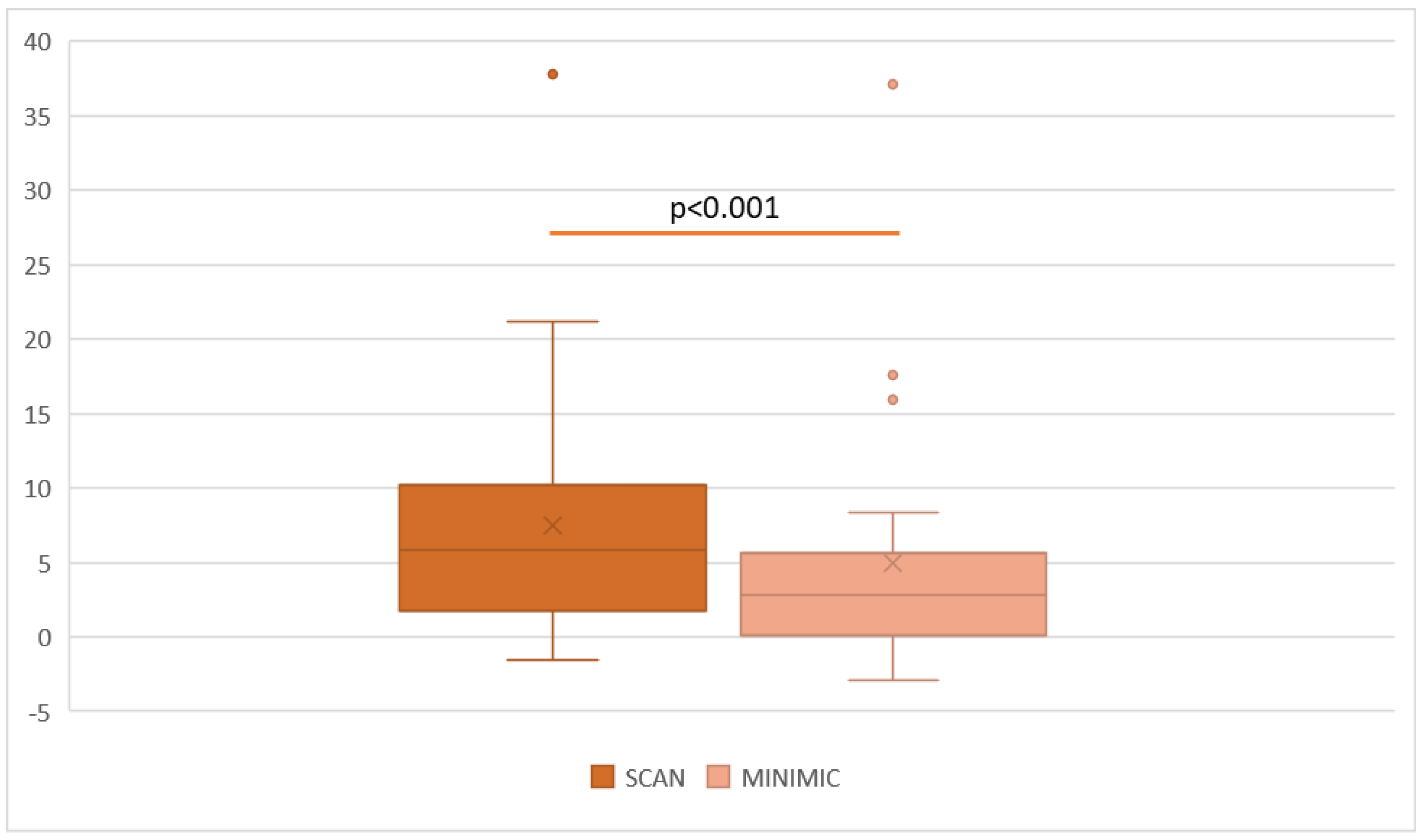

Furthermore, the SRT DELTA value was calculated for each subject as the SRT obtained with SCAN minus the SRT obtained with the MiniMic2.
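The sign convention of the SRT DELTA can be made explicit with a short sketch. The SRT pairs below are hypothetical and are not data from this study; the function name is likewise an assumption.

```python
from statistics import mean

def srt_delta(srt_scan_db, srt_minimic_db):
    """SRT with SCAN minus SRT with MiniMic2, in dB."""
    return srt_scan_db - srt_minimic_db

# Hypothetical (SCAN, MiniMic2) SRT pairs in dB SNR, one pair per subject.
pairs = [(-1.0, -3.5), (0.5, -2.0), (2.0, 0.5)]
deltas = [srt_delta(scan, mic) for scan, mic in pairs]
mean_delta = mean(deltas)
print(deltas, round(mean_delta, 2))
```

Since a lower SRT indicates better speech recognition in noise, a positive SRT DELTA means that the subject performed better with the MiniMic2 than with SCAN.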

The statistical analysis was performed using SPSS 23 software (SPSS, Chicago, IL, USA).

Related-samples comparisons of quantitative variables were analyzed using the Wilcoxon Signed Rank Test or ANOVA. Univariate correlation between quantitative variables was tested with Pearson correlation. p values < 0.05 were considered statistically significant.
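For readers unfamiliar with the related-samples Wilcoxon Signed Rank Test, the sketch below shows its logic in plain Python. It is not the SPSS procedure used in this study: it relies on a normal approximation without continuity or tie corrections, which is only rough for small samples, and the paired SRT values are hypothetical.

```python
import math

def wilcoxon_signed_rank(x, y):
    """Return (W, z, two-sided p) for paired samples x and y.

    Simplified sketch: zero differences are dropped, tied absolute
    differences share their average rank, and the p value comes from
    a normal approximation.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    ordered = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[ordered[j + 1]]) == abs(diffs[ordered[i]]):
            j += 1
        avg_rank = (i + j) / 2.0 + 1.0  # average of the 1-based ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[ordered[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    mean_w = n * (n + 1) / 4.0
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24.0)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return w, z, p

# Hypothetical paired SRTs (dB SNR) for eight subjects.
srt_scan = [-1.0, 0.5, -2.0, 1.5, -0.5, 0.0, -1.5, 2.0]
srt_minimic = [-3.5, -2.0, -4.0, -1.5, -2.5, -3.0, -3.5, -1.0]
w, z, p = wilcoxon_signed_rank(srt_scan, srt_minimic)
print(w, round(z, 2), p < 0.05)
```

In this hypothetical data set every subject improves with the MiniMic2, so the smaller rank sum is zero and the difference is significant at the 0.05 level.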

The IRB approved this study. Written informed consent was obtained from all the participants.

4. Discussion

For the purposes of this discussion, it is useful to recall that the SRT is the SNR at which 50% of speech is correctly understood, while the SNR itself indicates the balance between the speech signal and the background noise, which determines how well speech can be understood in noisy conditions. Both measures are essential in assessing an individual’s ability to communicate effectively in challenging listening environments. Nowadays, speech perception in noise is the main endpoint of cochlear implantation.

With the evolution of processor technology, all manufacturers have introduced effective sound pre-processing algorithms that improve the SRT [7,8,9,10]. On the other hand, few papers have highlighted the role of wireless microphones placed near the sound source in improving the signal-to-noise ratio and, in turn, speech understanding in noisy situations for cochlear implant recipients and even hearing aid users [12,14].

Cochlear Limited, currently one of the leading manufacturers of cochlear implants, has devised a number of solutions to this issue over time.

Their first sound processor, known as the Wearable Sound Processor (WSP I), contained a hardware dual-port directional microphone able to attenuate free-field noise arriving from 180° by 5 dB. In 1997, the Audallion BEAMformer was released, a body-worn processor with a double dual-port directional microphone designed to attenuate noise coming from the back as well as the sides [15,16]. The main issue with this model resided in its hardware configuration, a hurdle that was ultimately overcome by the release of the Nucleus Freedom sound processor [17]. This device had, in addition to one dual-port directional microphone, an omnidirectional microphone; by combining both, the input processing produced a strongly directional and adaptive microphone known as BEAM, which improved Speech Reception Thresholds (SRTs) by between 6 dB and 16 dB [16].

In 2009, the Nucleus 5 sound processor was released, this time with two omnidirectional microphones, a reduced size and, most importantly, the new SmartSound pre-processing system, followed by an updated SmartSound2 (Wolfe et al. [3]). The main feature of many of the technologies introduced in the Nucleus 5 by SmartSound is adaptive and automatic control and optimization, achieved through ASC (Automatic Sensitivity Control), ADRO (Adaptive Dynamic Range Optimization), and Whisper. All of these have been shown to bring significant improvement, as reported by a number of recipients [6,9,18].

With the release of the Nucleus 6 sound processor, a new update to the SmartSound system was released: the SmartSound iQ, which introduced a scene classifier technology called SCAN.

SCAN automatically examines modulation, pitch, and tone in the microphone input signals, and based on the results obtained, it selects the most probable environment the recipient may find themselves in from a specific and limited number of scenes, more precisely, Speech-in-Noise, Speech, Noise, Wind, Quiet, and Music. This selection is made based on rules founded on training data that were compiled during the development of the system. Once the scene is selected, the current program is slowly changed in order to adapt to it in the most comfortable and least abrupt way possible for the recipient.

All of this is conducted using SmartSound technologies such as ADRO, ASC, and Whisper, as well as WNR (Wind Noise Reduction), SNR-NR (Signal-to-Noise Ratio Noise Reduction), and various microphone directionalities, activated dynamically and appropriately as the selected scene requires them to.

In detail, once the most probable acoustic scene has been selected, the SCAN system can independently regulate:

Three directionality algorithms: standard directionality, frontal focus with sound cancellation at 120° (Zoom), dynamic cancellation of lateral or posterior noise (Beam);

Four compression algorithms: ASC, AGC medium and fast, Whisper;

Four noise reduction algorithms: ADRO, Background Noise Reduction (BNR), WNR, SNR-NR.

In an S0N0 condition like the one tested in this paper, the contribution of the directionality algorithms becomes negligible; even so, the automatic and dynamic adjustment of the compression and noise reduction algorithms provided by the SCAN system could still improve speech perception in noise.

Few previous papers have reported the benefits of SCAN technology for CI recipients listening in noise.

Wolfe and colleagues showed that the Nucleus 6 sound processor had the potential to improve speech understanding in noisy environments for both adult and pediatric users of Nucleus cochlear implants when compared to previous generations of sound processors. This improvement was attributed to the automatic activation of the beamforming directional mode in the Nucleus 6, as well as the inclusion of SCAN and SNR-NR [19].

Plasmans and colleagues, in a study conducted on experienced pediatric cochlear implant users, showed that the implementation of noise reduction technologies in the Nucleus 6 sound processor led to significant improvements in speech perception in noisy environments, measured as the percentage of words recognized [20].

Mauger and colleagues, in a study on 21 experienced participants with Cochlear Nucleus CI systems, compared the performances of the participants with the Nucleus 5 sound processor and the newer Nucleus 6 sound processor with SmartSound iQ. Various pre-processing programs, including SCAN technology, were tested in both quiet and noisy environments. The study showed that the Nucleus 6 system’s default program, SCAN, provided better or comparable speech understanding compared to the Nucleus 5 programs [21].

These findings offered evidence supporting the appropriateness of utilizing technologies such as SCAN in the cochlear-implanted population. Moreover, the data suggest that both automatic scene classification and background noise reduction technologies are suitable and beneficial for both experienced pediatric and adult cochlear implant users.

However, those studies acknowledged that the benefit of SCAN and the automatic activation of beamforming may be greater in a controlled laboratory setting than in real-world situations characterized by diffuse noise and high levels of reverberation.

An additional solution to the noisy environment conundrum is the use of wireless microphones, such as the MiniMic2 and 2+ by Cochlear.

The MiniMic2 is a wireless microphone that receives signals from up to 7 m away and uses safe and private digital streaming via 2.4 GHz. It has a directional microphone and can adjust the volume within a range from −24 dB to +12 dB. The MiniMic 2+ has a microphone that acts as directional when positioned vertically or as omnidirectional when placed horizontally, and it makes use of both FM and 2.4 GHz connectivity.

A number of papers provided valuable insights into the benefits of wireless microphone technology and accessories for individuals with cochlear implants, highlighting improved speech perception, listening comfort, and speech intelligibility in various real-life and noisy situations.

One of the primary benefits of wireless microphones for cochlear implant users is the significant improvement in speech intelligibility, especially in noisy situations. In particular, digital adaptive systems (like the MiniMic2) outperformed the previous analog systems, especially in improving speech recognition at higher noise levels [14].

People with hearing loss, whether using HAs or CIs, have limited directional hearing capabilities, which makes it difficult to focus on a specific speaker in a noisy environment. Wireless microphones provide a solution by allowing users to wirelessly connect to a microphone worn by the speaker, isolating their voice from surrounding noise. This direct audio input enhances speech clarity, enabling better comprehension and reducing listening effort for cochlear implant users.

Moreover, wireless microphones also offer increased range and flexibility for cochlear implant users. By transmitting sound wirelessly, the user can maintain optimal hearing performance even when the speaker is physically distant. This functionality is particularly valuable in educational or professional settings, where a speaker may need to move around the room or speak from a distance. Wireless microphones enable seamless communication, bridging the gap between the speaker and the cochlear implant user, regardless of physical proximity.

Finally, while reverberation and background noise can severely hinder the speech-understanding abilities of cochlear implant users, wireless microphones address this issue by providing a direct audio feed from the speaker to the user’s cochlear implant, minimizing the impact of reverberation and background noise.

Other possible mechanisms that may lead to better speech perception results using remote microphones are:

- The capability of wireless microphones to limit the natural loss of high-frequency content that occurs as the distance between the sound source and the listener increases.
- A possibly higher gain when using the remote microphone rather than the processor’s standard microphones.

Limiting the loss of loudness of high-frequency sounds could ease consonant recognition and, therefore, speech perception.

On the other hand, to evaluate the gain differences when using remote microphones, electrodograms should be analyzed, particularly during noise-only presentations. Electrodograms are graphical representations of the electrical stimulation delivered on each electrode of the implant over time, and they can provide insights into how different frequencies are processed by the cochlear implant. Unfortunately, given the design of this study, it was not possible to assess electrodograms to explain the observed differences between the remote microphone and standard processor pickup conditions.

In 2013, Wolfe and colleagues first reported that the new adaptive remote microphone system provided better performance than the older fixed-gain FM system, especially at high noise levels [14].

In 2015, Wolfe and collaborators published the results of a study involving 16 users of Cochlear Nucleus sound processor systems connected with a MiniMic2 wireless microphone. This study found that using the Nucleus 6 sound processor with a wireless remote microphone accessory resulted in significantly improved sentence recognition in quiet conditions compared to using the sound processor alone. This was in contrast with previous studies that showed no improvement with remote microphone technology in quiet conditions, but those studies used simpler speech stimuli, generating a ceiling effect. Moreover, an even broader improvement was found in speech recognition in noise, with gains of 0.40 to 0.60 percentage points at different signal-to-noise ratios. On the basis of these findings, the authors suggested that CI users should have the opportunity to use a remote microphone accessory in noisy environments, although further benefit might be expected from digital remote-microphone systems with adaptive features designed to enhance performance in noise [22].

Razza and colleagues, in 2017, confirmed Wolfe et al.’s findings, showing that two different remote microphone systems both improved the SRT in a cohort of young CI users with Cochlear Nucleus CP910 sound processors. The MiniMic system yielded higher gains in speech performance (SRT gain of −4.76) compared to another very widespread wireless system, the Roger system produced by Phonak (SRT gain of −3.01). The main difference between these two types of wireless microphones is that while the MiniMic system can connect with Nucleus processors without any additional accessories, the Phonak Roger system requires the use of a supplementary shoe receiver. Moreover, MiniMic encodes the signal in a proprietary protocol for wireless radiofrequency transmission on the 2.4 GHz band, while the Roger system applies considerable compression to the sound signal and sends it in very short (160 ms) code packages, broadcast on different radiofrequency bands between 2.400 and 2.4835 GHz. According to Razza et al., those differences are sufficient to explain the disparity in results between the two systems. Additionally, contrary to what was assumed by Wolfe et al., the addition of the NR algorithm did not result in statistically significant further improvements [23].

De Ceulaer and colleagues, in 2017, tested the SRT of 13 adult Nucleus recipients without SCAN, with SCAN, and with MiniMic in a complex test setting. The tested wireless microphones were the MiniMic 2 and the previous model, the MiniMic 1. The authors showed a relevant improvement in SRT with the use of the MiniMic 1 in various test settings and an even more consistent gain with the MiniMic 2. Our findings, even in a simpler setting, seem to confirm De Ceulaer et al.’s results, with a comparable gain in SRT through the use of wireless microphones. In detail, the SRT gain reported by De Ceulaer and colleagues with the source of speech at 1 m, which is the condition most similar to our setting, was 4.7 dB with the MiniMic 1 in comparison to SCAN, somewhat higher than our SRT DELTA of 2.49 dB. It is conceivable that this difference is associated with the different test settings [24].

As shown, our results are in line with the previous literature, which reports a significant gain in SRT with the use of wireless microphone technologies, even when compared with the SCAN pre-processing algorithm.

In conclusion, our study confirms that wireless microphones significantly improve the signal-to-noise ratio, leading to enhanced speech perception and overall communication effectiveness. The mean SRT gain from the use of a remote microphone in our cohort was 2.49 dB.

Limitations of the Study

It is important to acknowledge several limitations associated with this study. First, the sample size was relatively small, which may limit the generalizability of the findings to a larger population.

Another limitation is the potential for confounding variables that were not accounted for in the analysis.

Additionally, the study was conducted in a controlled laboratory setting, which may not fully reflect real-world conditions. The findings should be cautiously extrapolated to everyday environments, which often involve complex and dynamic situations. Furthermore, the testing conducted with the MiniMic2 in our study, specifically in the S0N0 condition where both the signal and the noise were emitted from the same loudspeaker, may not fully reflect the realistic use of the device. In everyday situations, the wireless microphone is typically positioned closer to the desired sound source (such as the speaker’s voice) and farther from the surrounding noise. In our experimental setup, however, the MiniMic2 was placed next to the single source emitting both the signal and the noise, which may have limited the potential improvement in SNR.
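The geometric argument behind this limitation can be illustrated with a simple worked example. The sketch below assumes idealized free-field point sources, for which the level drops by 20·log10 of the distance ratio; the 65 dB source levels and the 0.15 m and 1 m distances are illustrative assumptions, and real rooms, directional microphones, and device gain settings would change the numbers.

```python
import math

def level_at_distance(level_at_1m_db, distance_m):
    """Free-field level of a point source at a given distance (assumption)."""
    return level_at_1m_db - 20.0 * math.log10(distance_m)

def snr_at_mic(speech_1m_db, noise_1m_db, d_speech_m, d_noise_m):
    """SNR at a microphone placed d_speech_m from the talker and d_noise_m from the noise."""
    return (level_at_distance(speech_1m_db, d_speech_m)
            - level_at_distance(noise_1m_db, d_noise_m))

# S0N0: speech and noise share one loudspeaker, so both distances change together.
s0n0_at_listener = snr_at_mic(65.0, 65.0, 1.0, 1.0)
s0n0_near_source = snr_at_mic(65.0, 65.0, 0.15, 0.15)

# Everyday use: microphone close to the talker, noise source still 1 m away.
everyday_near_talker = snr_at_mic(65.0, 65.0, 0.15, 1.0)

print(round(s0n0_at_listener, 1), round(s0n0_near_source, 1))
print(round(everyday_near_talker, 1))
```

Under these idealized assumptions, moving the microphone toward a loudspeaker that emits both speech and noise leaves the SNR unchanged, whereas moving it toward a talker who is separated from the noise source raises the SNR considerably.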

Despite this sub-optimal experimental setting, the use of the MiniMic2 still demonstrated superior performance compared to relying solely on pre-processing techniques. This suggests that even under less-than-ideal conditions, the MiniMic2 was able to provide benefits in terms of improving speech intelligibility and overcoming the limitations of relying solely on pre-processing algorithms.

Lastly, this study focused on a specific population and context, and caution should be exercised when generalizing the results to other populations or settings.

Despite these limitations, the study provides valuable insights into the determination of the best assessment for speech perception in noise in cochlear implant recipients. Further research addressing these limitations is necessary to confirm and extend the findings.