Multimodal Fusion and Dynamic Resource Optimization for Robust Cooperative Localization of Low-Cost UAVs

Highlights

- Proposes a novel collaborative localization algorithm that integrates a cross-modal attention mechanism to fuse vision, radar, and LiDAR data, significantly enhancing robustness in occluded environments and adverse weather.

- Proposes a dynamic resource optimization framework using integer linear programming, enabling real-time allocation of computational and communication resources to prevent node overload and improve system efficiency.

- Demonstrates superior performance in realistic simulations, with significant improvements in positioning accuracy, resource efficiency, and fault recovery, indicating strong potential for complex missions.

- Provides a practical, low-cost system solution validated in complex scenarios, establishing a viable pathway for the engineering deployment of robust UAV swarms.

Abstract

1. Introduction

- Propose a three-level multimodal feature extraction network that incorporates a cross-modal attention (CMA) mechanism to address the poor adaptability of existing methods in fusing heterogeneous sensor data. The hierarchical feature processing architecture handles the differences between sensors as follows: the modality adaptation layer standardizes the dimensions of radar data, LiDAR data, and visual images through format conversion; the shared feature layer employs a switchable backbone network and introduces the CMA mechanism to strengthen complementarity among modalities; and the task-specific layer adjusts modality weights according to scene requirements, resolving problems such as “information mismatch.” Together, these layers reduce multimodal fusion localization errors by 35–40% compared with traditional methods.

- Establish a multi-stage collaborative verification mechanism across multiple drones to ensure data consistency and positioning accuracy. The scheme has three stages. In the result association stage, image feature matching and Ultra-Wideband (UWB) ranging determine whether detections from different drones refer to the same target, eliminating mismatches. In the weighted fusion stage, the trace of each extended Kalman filter (EKF) covariance matrix is computed to assess positioning reliability, and the resulting weights yield the final target coordinates. In the anomaly removal stage, Grubbs’ test eliminates anomalous positioning results, and UWB measurements are used to correct each drone’s attitude, ensuring spatial alignment of data across drones. Compared with a scheme without verification, this mechanism reduces the mean absolute positioning error (mAPE) of multi-drone collaborative positioning by 45%, with an anomaly removal rate exceeding 98%.

- Design a dynamic resource optimization scheme that adapts models and tasks in real time, ensuring continuous and efficient positioning. To address the limited resources of drones, a hybrid offline–online closed-loop mechanism is constructed: in the offline phase, a model library is built; in the real-time phase, distributed sensing monitors hardware load and communication bandwidth, and an Integer Linear Programming (ILP) model schedules resources dynamically, switching to lightweight models under high hardware load, enabling encoding and feature dimensionality reduction when bandwidth is insufficient, and migrating tasks when nodes fail. Compared with traditional solutions, this scheme improves resource utilization by 20–30% and reduces positioning interruption time by more than 50% in node failure scenarios.
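
The cross-modal attention (CMA) mechanism in the first contribution is not specified in detail in this section. The following is a minimal sketch, assuming standard scaled dot-product attention in which visual feature tokens act as queries and tokens from another modality (for example radar or LiDAR) act as keys and values, followed by a residual addition. The function names and the residual fusion step are illustrative assumptions, not the authors' implementation.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_modal_attention(queries: List[Vector], keys: List[Vector],
                          values: List[Vector]) -> List[Vector]:
    """Scaled dot-product attention: each query token from one modality
    (e.g. vision) attends over key/value tokens from another (e.g. radar)."""
    scale = 1.0 / math.sqrt(len(keys[0]))
    attended = []
    for q in queries:
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the other modality's value vectors.
        attended.append([sum(w * v[j] for w, v in zip(weights, values))
                         for j in range(len(values[0]))])
    return attended


def residual_fuse(visual: List[Vector], other: List[Vector]) -> List[Vector]:
    """Hypothetical fusion step: add the attended features back onto the
    visual tokens (assumes both modalities share the same feature size)."""
    attended = cross_modal_attention(visual, other, other)
    return [[a + b for a, b in zip(v, att)] for v, att in zip(visual, attended)]
```

In a trained network, queries, keys, and values would come from learned linear projections, and attention could run in both directions between modalities; the sketch omits these to show only how one modality reweights another's features.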
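
The result association stage of the second contribution combines image feature matching with UWB ranging. A minimal sketch, assuming the position of drone B relative to drone A has already been estimated (for example from UWB ranging) and that both frames share the same orientation, maps B's detections into A's frame and greedily pairs detections that are both spatially close and similar in appearance (cosine similarity of feature vectors). The thresholds `max_dist` and `min_sim` and the greedy matching rule are hypothetical choices, not taken from the paper.

```python
import math
from typing import List, Sequence, Tuple

# A detection is (position in the observing drone's frame, appearance feature vector).
Detection = Tuple[Sequence[float], Sequence[float]]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.hypot(*a) * math.hypot(*b)
    return dot / norm if norm else 0.0


def associate(dets_a: List[Detection], dets_b: List[Detection],
              offset_ab: Sequence[float], max_dist: float = 1.0,
              min_sim: float = 0.8) -> List[Tuple[int, int]]:
    """Greedily pair detections of drone A and drone B that are close in space
    and similar in appearance. offset_ab is B's position in A's frame, assumed
    known (e.g. from UWB ranging) with both frames sharing one orientation."""
    pairs: List[Tuple[int, int]] = []
    used_b = set()
    for i, (pos_a, feat_a) in enumerate(dets_a):
        best_j, best_d = None, max_dist
        for j, (pos_b, feat_b) in enumerate(dets_b):
            if j in used_b:
                continue
            # Express B's detection in A's frame (translation only).
            pos_b_in_a = [p + o for p, o in zip(pos_b, offset_ab)]
            d = math.dist(pos_a, pos_b_in_a)
            if d <= best_d and cosine(feat_a, feat_b) >= min_sim:
                best_j, best_d = j, d
        if best_j is not None:
            used_b.add(best_j)
            pairs.append((i, best_j))
    return pairs
```

Greedy matching keeps the sketch short; an optimal one-to-one assignment (for example the Hungarian algorithm) would avoid order-dependent pairings when several targets are close together.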
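
For the weighted fusion stage, the text states only that the trace of each EKF covariance matrix is used to generate weights. A natural reading, assumed here rather than confirmed by the paper, is to weight each drone's estimate in proportion to 1 / tr(P_i) and normalize the weights to sum to one, so that estimates with smaller uncertainty dominate the fused target position.

```python
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def trace(matrix: Matrix) -> float:
    """Sum of the diagonal entries of a square matrix."""
    return sum(matrix[i][i] for i in range(len(matrix)))


def trace_weighted_fusion(positions: Sequence[Sequence[float]],
                          covariances: Sequence[Matrix]) -> Tuple[List[float], List[float]]:
    """Fuse per-drone target estimates with weights proportional to 1 / tr(P_i),
    so drones with smaller EKF covariance (higher reliability) count more."""
    inverse_traces = [1.0 / trace(P) for P in covariances]
    total = sum(inverse_traces)
    weights = [w / total for w in inverse_traces]
    fused = [sum(w * p[k] for w, p in zip(weights, positions))
             for k in range(len(positions[0]))]
    return fused, weights
```

For example, two drones whose covariance traces are 1.0 and 2.0 receive weights of 2/3 and 1/3, so their estimates at x = 0 m and x = 3 m fuse to x = 1 m.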
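
The anomaly removal stage uses Grubbs’ test, which flags the most extreme value in an approximately normal sample via G = max|x_i - mean| / s (with s the sample standard deviation) and compares G with a tabulated critical value. The sketch below applies the test iteratively to one-dimensional values, such as each drone's estimate of one target coordinate; which quantity the paper tests is not stated here, so that choice is an assumption. The critical values are the standard two-sided table entries for α = 0.05 and n = 3 to 10; larger swarms would need the full table or a t-distribution quantile.

```python
import statistics
from typing import Dict, List, Tuple

# Two-sided Grubbs critical values at alpha = 0.05, keyed by sample size n.
GRUBBS_CRIT_05: Dict[int, float] = {
    3: 1.155, 4: 1.481, 5: 1.715, 6: 1.887,
    7: 2.020, 8: 2.126, 9: 2.215, 10: 2.290,
}


def grubbs_filter(values: List[float]) -> Tuple[List[float], List[float]]:
    """Iteratively remove the most extreme value while Grubbs' statistic
    exceeds the critical value. Returns (kept, removed)."""
    kept = list(values)
    removed: List[float] = []
    while len(kept) >= 3:
        n = len(kept)
        if n not in GRUBBS_CRIT_05:
            raise ValueError(f"no tabulated critical value for n={n}")
        mean = statistics.fmean(kept)
        sd = statistics.stdev(kept)  # sample standard deviation
        if sd == 0.0:
            break  # all values identical: nothing to reject
        suspect = max(kept, key=lambda x: abs(x - mean))
        g = abs(suspect - mean) / sd
        if g <= GRUBBS_CRIT_05[n]:
            break
        kept.remove(suspect)
        removed.append(suspect)
    return kept, removed
```

With six estimates of 10.0, 10.1, 9.9, 10.05, 9.95, and 15.0 m, the first pass gives G ≈ 2.04 > 1.887, so 15.0 is rejected; the remaining five values pass.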
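
The third contribution schedules models and tasks with an ILP model whose exact objective and constraints are not given in this section. The sketch below assumes a simple 0/1 program: each task is assigned to exactly one node and one model from a hypothetical model library, the summed CPU cost on each node must not exceed that node's free CPU, the total bandwidth must not exceed the link budget, and the total accuracy score is maximized. A failed node is modeled as having zero free CPU, which forces its tasks to migrate. For clarity it enumerates every assignment of the binary decision variables instead of calling an ILP solver; the model names and cost figures are invented for illustration.

```python
from itertools import product
from typing import Dict, List, Optional, Tuple

# Hypothetical offline model library:
# name -> (accuracy score, CPU cost in % of one node, bandwidth cost in Mbps).
MODEL_LIBRARY: Dict[str, Tuple[float, float, float]] = {
    "heavy": (0.95, 60.0, 6.0),
    "medium": (0.85, 35.0, 3.0),
    "light": (0.70, 15.0, 1.0),  # e.g. a lightweight detector with encoded features
}

Assignment = Dict[str, Tuple[str, str]]  # task -> (node, model)


def allocate(tasks: List[str], cpu_free: Dict[str, float],
             bandwidth: float) -> Optional[Assignment]:
    """Solve the 0/1 program by exhaustive enumeration.

    Decision: for each task pick exactly one (node, model) pair.
    Constraints: per-node CPU load <= cpu_free[node]; total bandwidth <= budget.
    Objective: maximize the summed accuracy score.
    A failed node can be modeled with cpu_free = 0, forcing task migration.
    Returns None if no feasible assignment exists.
    """
    nodes = list(cpu_free)
    options = [(node, model) for node in nodes for model in MODEL_LIBRARY]
    best: Optional[Assignment] = None
    best_score = float("-inf")
    for choice in product(options, repeat=len(tasks)):
        load = {node: 0.0 for node in nodes}
        used_bw = 0.0
        score = 0.0
        for node, model in choice:
            acc, cpu, mbps = MODEL_LIBRARY[model]
            load[node] += cpu
            used_bw += mbps
            score += acc
        if used_bw > bandwidth or any(load[n] > cpu_free[n] for n in nodes):
            continue  # infeasible assignment
        if score > best_score:
            best, best_score = dict(zip(tasks, choice)), score
    return best
```

With ample resources both tasks get the heavy model; at a 2 Mbps budget both drop to the light model; and with one node at zero free CPU, all tasks move to the surviving node. Exhaustive search grows as (nodes × models)^tasks, so a deployed scheduler would pass the same variables and constraints to an ILP solver.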

2. Related Work

2.1. Cooperative Positioning Technology for Drones

2.1.1. Single Drone Positioning System

2.1.2. Multi-UAV Positioning System

2.2. Multimodal Data Fusion

2.3. Dynamic Resource Optimization

2.3.1. Optimization of Computing Resources

2.3.2. Communication Resource Optimization

3. Research Methods

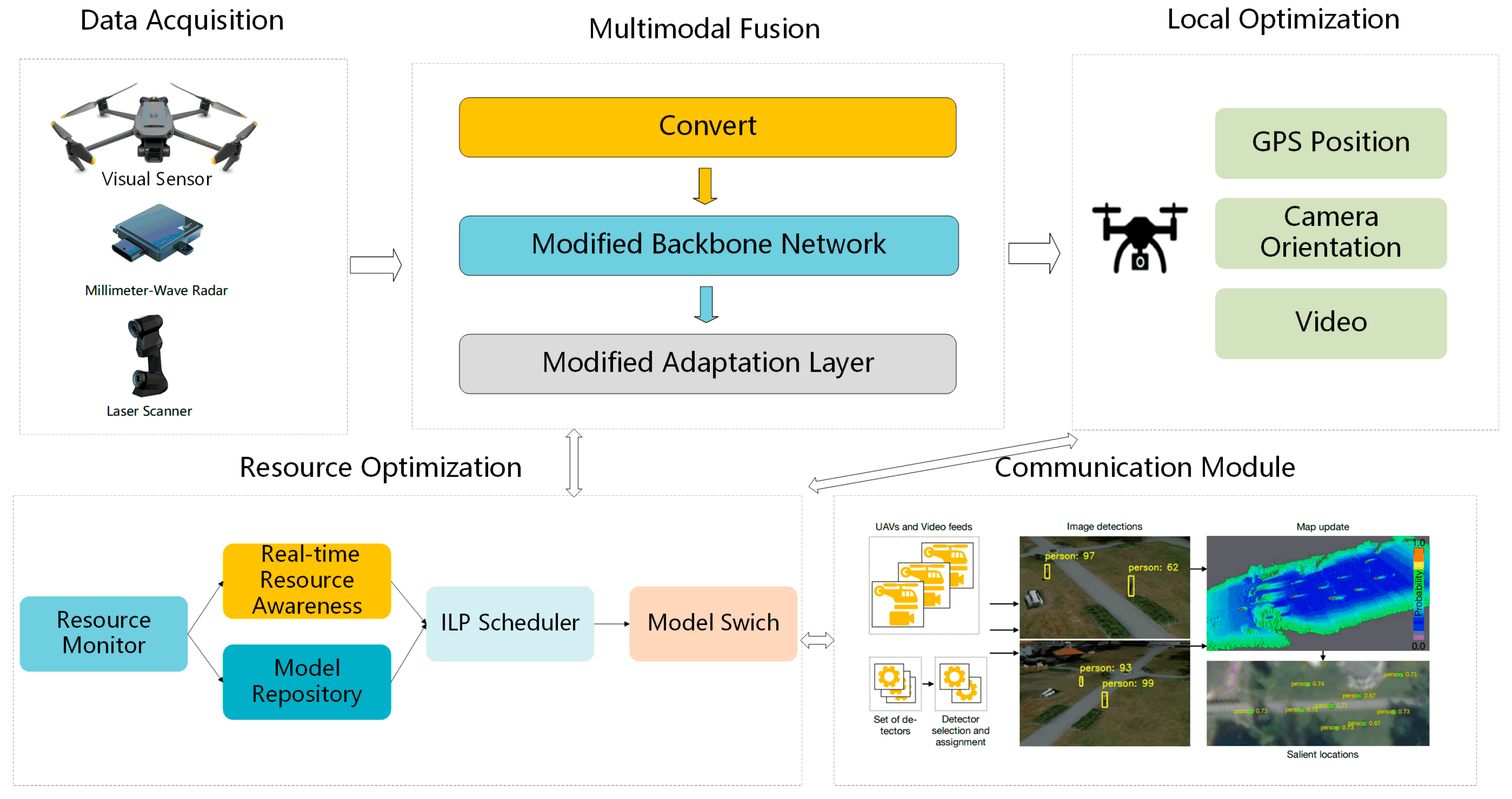

3.1. Overall Framework

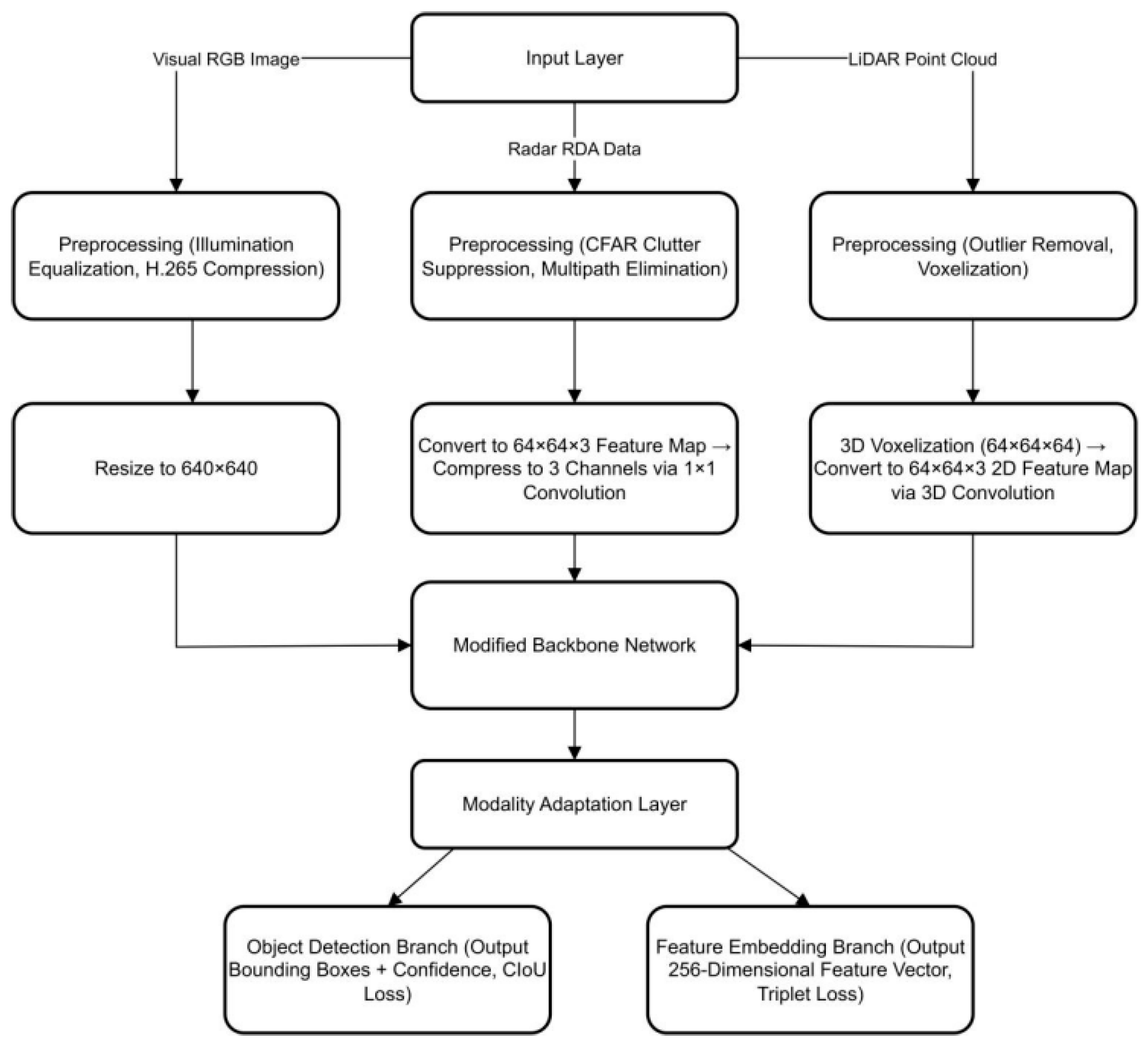

3.2. Multimodal Data Fusion Structure

3.2.1. Data Collection Phase

3.2.2. Preprocessing and Modal Adaptation

3.2.3. Shared Feature Layer

3.3. Dynamic Resource Optimization Allocation Algorithm

3.3.1. Resource Real-Time Awareness Mechanism

3.3.2. Offline Model Library Construction

3.3.3. Online Dynamic Resource Allocation Strategy

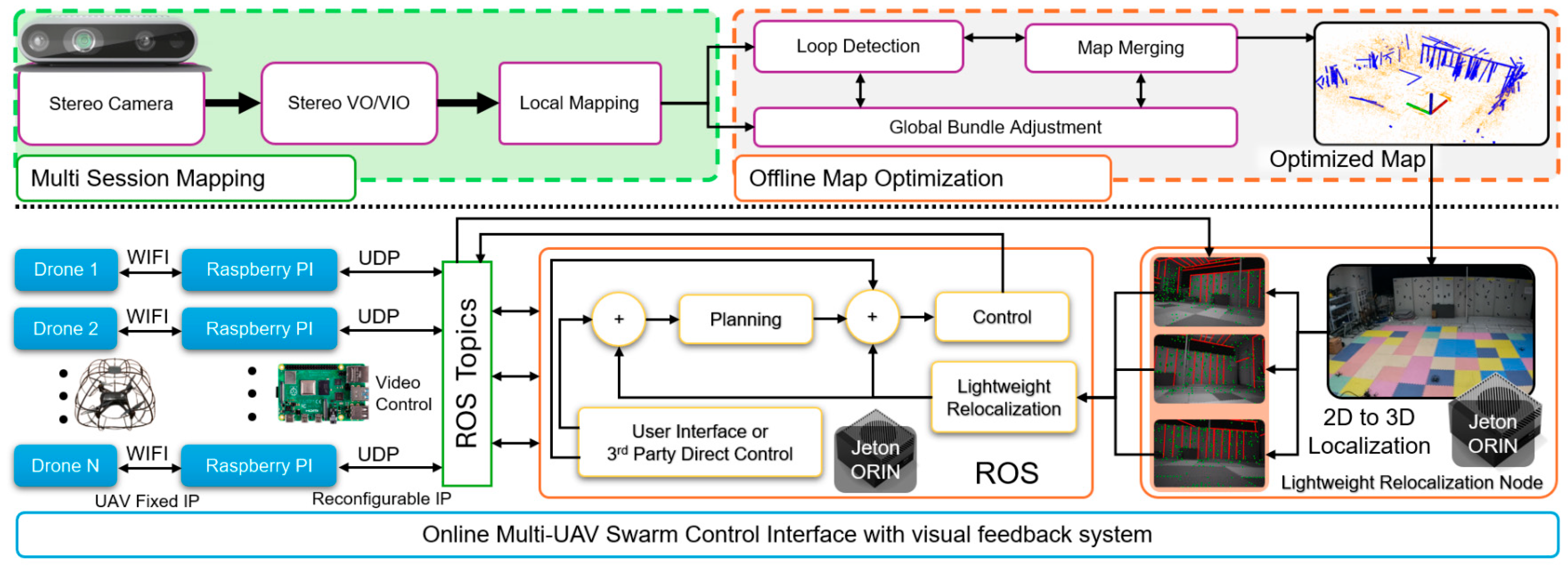

3.4. Optimization of Positioning Results and Collaborative Output

3.4.1. EKF Correction

3.4.2. Multi-Drone Verification

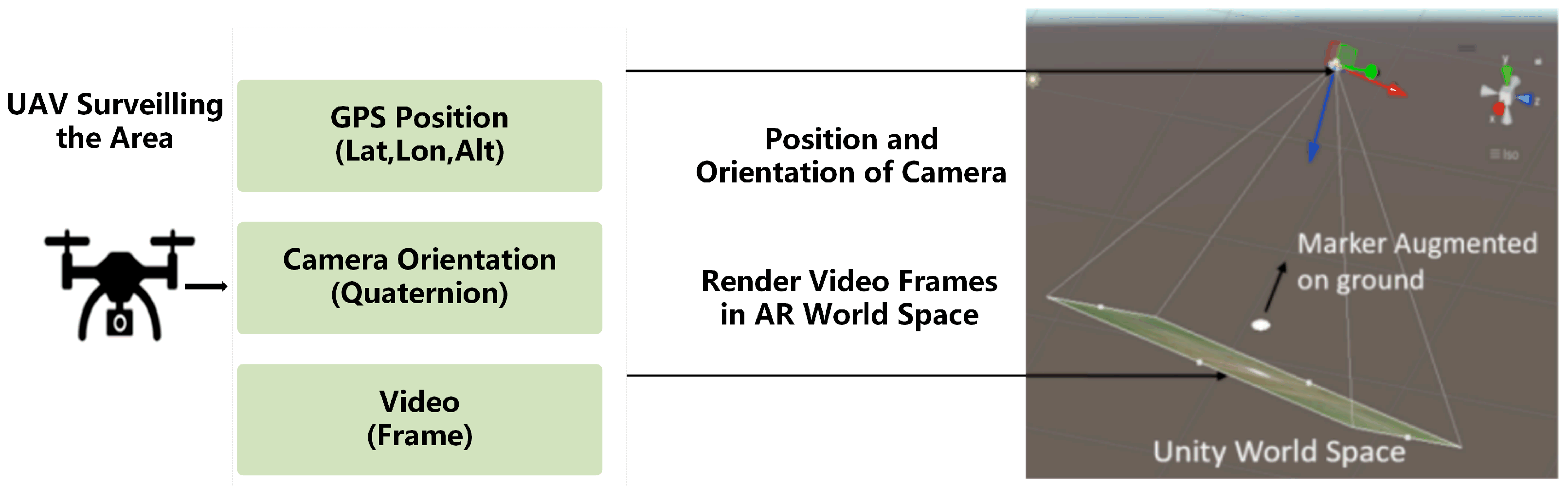

3.4.3. Three-Dimensional Visualization

4. Experimental Design and Results

4.1. Experimental Platform and Environment Design

4.1.1. Hardware System

4.1.2. Software Framework

4.1.3. Experimental Scenario Design

4.2. Experimental Indicators and Evaluation Methods

4.2.1. Positioning Accuracy

4.2.2. Resource Utilization Efficiency

4.2.3. Collaborative Robustness

4.3. Experimental Results and Analysis

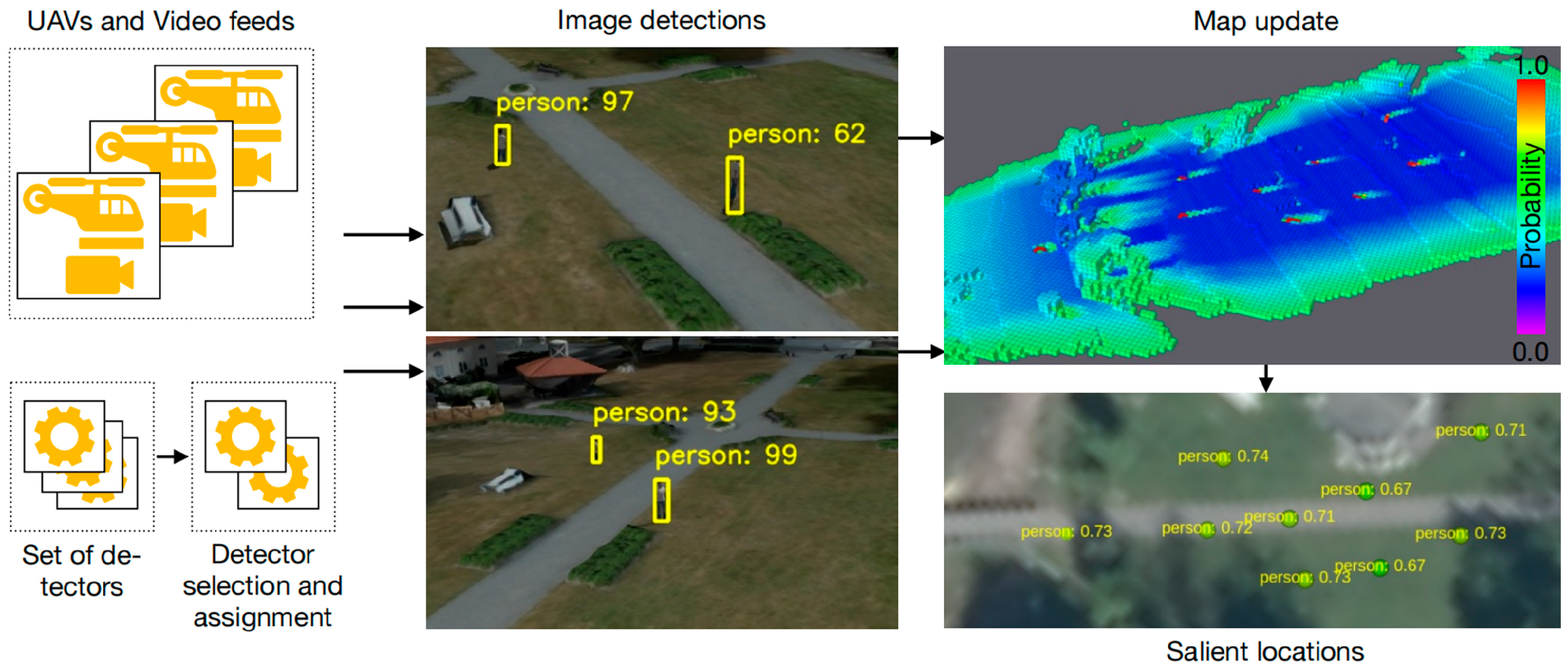

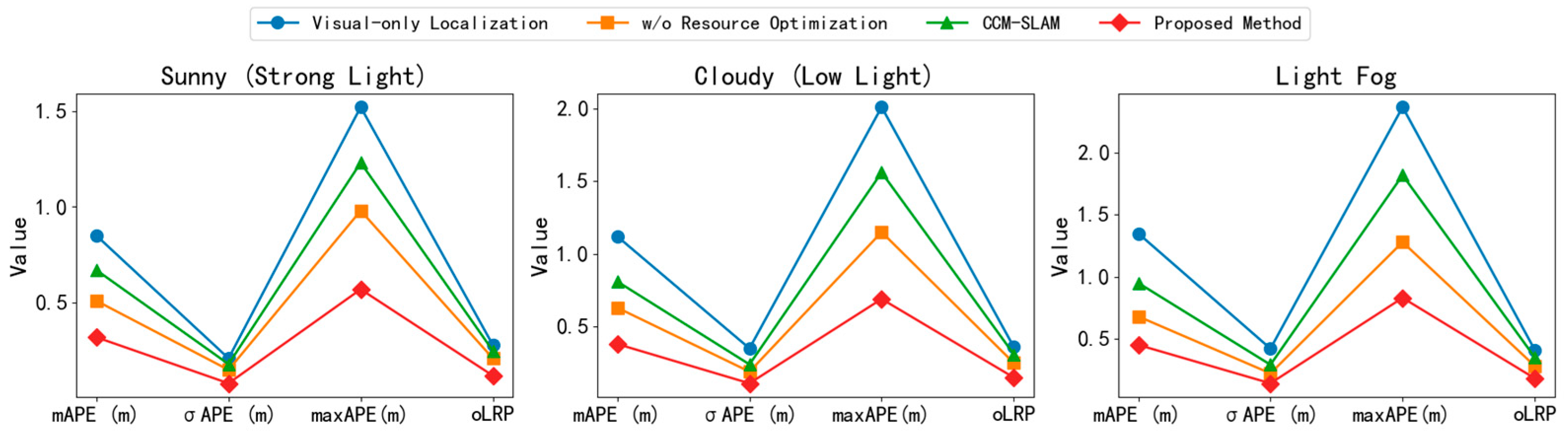

4.3.1. Results of Marine Search and Rescue Scenario Experiments

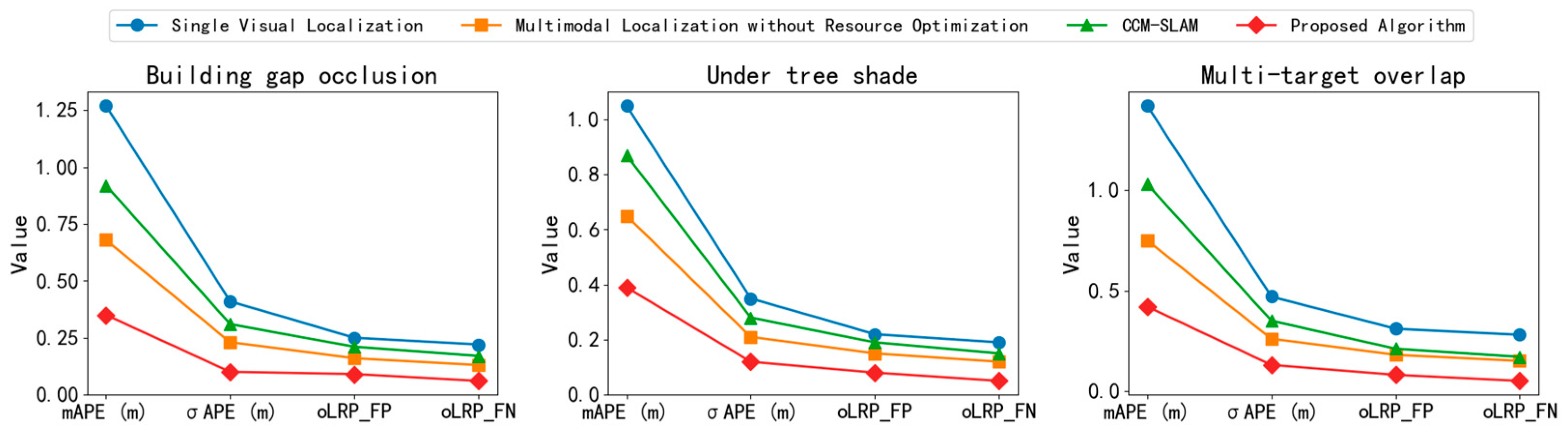

4.3.2. Experimental Results of Urban Occlusion Scenarios

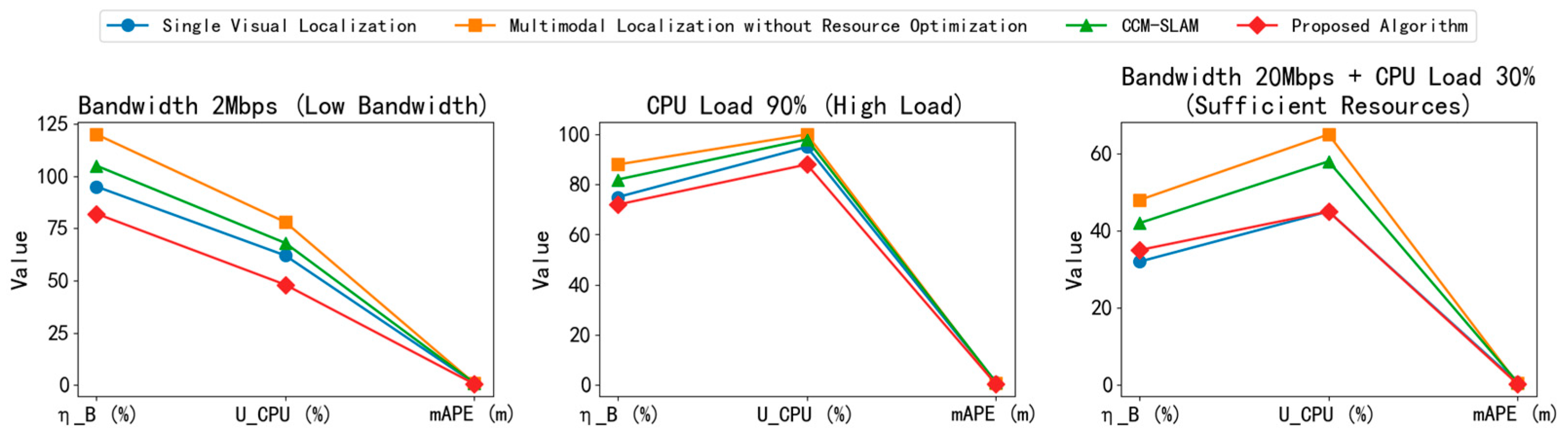

4.3.3. Experimental Results of Resource Dynamic Variation Scenarios

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Bakirci, M.; Ozer, M.M. Post-disaster area monitoring with swarm UAV systems for effective search and rescue. In Proceedings of the 2023 10th International Conference on Recent Advances in Air and Space Technologies (RAST), Istanbul, Turkey, 7–9 June 2023; pp. 1–6.

2. Rudol, P.; Doherty, P.; Wzorek, M.; Sombattheera, C. UAV-Based Human Body Detector Selection and Fusion for Geolocated Saliency Map Generation. arXiv 2024, arXiv:2408.16501.

3. Manley, J.E.; Puzzuoli, D.; Taylor, C.; Stahr, F.; Angus, J. A New Approach to Multi-Domain Ocean Monitoring: Combining UAS with USVs. In Proceedings of the OCEANS 2025 Brest, Brest, France, 16–19 June 2025; pp. 1–5.

4. Akram, W.; Yang, S.; Kuang, H.; He, X.; Din, M.U.; Dong, Y.; Lin, D.; Seneviratne, L.; He, S.; Hussain, I. Long-Range Vision-Based UAV-Assisted Localization for Unmanned Surface Vehicles. arXiv 2024, arXiv:2408.11429.

5. Hildmann, H.; Kovacs, E. Using unmanned aerial vehicles (UAVs) as mobile sensing platforms (MSPs) for disaster response, civil security and public safety. Drones 2019, 3, 59.

6. Liu, X.; Wen, W.; Hsu, L.-T. GLIO: Tightly-coupled GNSS/LiDAR/IMU integration for continuous and drift-free state estimation of intelligent vehicles in urban areas. IEEE Trans. Intell. Veh. 2023, 9, 1412–1422.

7. Zheng, Y.; Li, L.; Lin, W.; Liang, W.; Du, Q.; Han, Z. Resource Allocation Based on Optimal Transport Theory in ISAC-Enabled Multi-UAV Networks. arXiv 2024, arXiv:2410.02122.

8. Bu, S.; Bi, Q.; Dong, Y.; Chen, L.; Zhu, Y.; Wang, X. Collaborative Localization and Mapping for Cluster UAV. In Proceedings of the 4th 2024 International Conference on Autonomous Unmanned Systems, Shenyang, China, 19–21 September 2024; Springer: Singapore, 2025; pp. 313–325.

9. Li, X.; Xu, K.; Liu, F.; Bai, R.; Yuan, S.; Xie, L. AirSwarm: Enabling Cost-Effective Multi-UAV Research with COTS Drones. arXiv 2025, arXiv:2503.06890.

10. Shule, W.; Almansa, C.M.; Queralta, J.P.; Zou, Z.; Westerlund, T. UWB-based localization for multi-UAV systems and collaborative heterogeneous multi-robot systems. Procedia Comput. Sci. 2020, 175, 357–364.

11. Wu, L. Vehicle-based vision–radar fusion for real-time and accurate positioning of clustered UAVs. Nat. Rev. Electr. Eng. 2024, 1, 496.

12. Wang, X.; Peng, Y.; Shen, C. Efficient Feature Fusion for UAV Object Detection. arXiv 2025, arXiv:2501.17983.

13. Irfan, M.; Dalai, S.; Trslic, P.; Riordan, J.; Dooly, G. LSAF-LSTM-Based Self-Adaptive Multi-Sensor Fusion for Robust UAV State Estimation in Challenging Environments. Machines 2025, 13, 130.

14. Peng, C.; Wang, Q.; Zhang, D. Efficient dynamic task offloading and resource allocation in UAV-assisted MEC for large sport event. Sci. Rep. 2025, 15, 11828.

15. Alqefari, S.; Menai, M.E.B. Multi-UAV task assignment in dynamic environments: Current trends and future directions. Drones 2025, 9, 75.

16. Qamar, R.A.; Sarfraz, M.; Rahman, A.; Ghauri, S.A. Multi-criterion multi-UAV task allocation under dynamic conditions. J. King Saud Univ. Comput. Inf. Sci. 2023, 35, 101734.

17. Yang, T.; Wang, S.; Li, X.; Zhang, Y.; Zhao, H.; Liu, J.; Sun, Z.; Zhou, H.; Zhang, C.; Xu, K. LD-SLAM: A Robust and Accurate GNSS-Aided Multi-Map Method for Long-Distance Visual SLAM. Remote Sens. 2023, 15, 4442.

18. Weng, D.; Chen, W.; Ding, M.; Liu, S.; Wang, J. Sidewalk matching: A smartphone-based GNSS positioning technique for pedestrians in urban canyons. Satell. Navig. 2025, 6, 4.

19. Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM3: An accurate open-source library for visual, visual–inertial, and multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890.

20. Ramasubramanian, K.; Ginsburg, B. AWR 1243 sensor: Highly integrated 76–81-GHz radar front-end for emerging ADAS applications. In Texas Instruments White Paper; Texas Instruments: Dallas, TX, USA, 2017.

21. Gao, L.; Xia, X.; Zheng, Z.; Ma, J. GNSS/IMU/LiDAR fusion for vehicle localization in urban driving environments within a consensus framework. Mech. Syst. Signal Process. 2023, 205, 110862.

22. Qu, S.; Cui, J.; Cao, Z.; Qiao, Y.; Men, X.; Fu, Y. Position estimation method for small drones based on the fusion of multisource, multimodal data and digital twins. Electronics 2024, 13, 2218.

23. Kwon, H.; Pack, D.J. A robust mobile target localization method for cooperative unmanned aerial vehicles using sensor fusion quality. J. Intell. Robot. Syst. 2012, 65, 479–493.

24. Guan, W.; Huang, L.; Wen, S.; Yan, Z.; Liang, W.; Yang, C.; Liu, Z. Robot Localization and Navigation Using Visible Light Positioning and SLAM Fusion. J. Light. Technol. 2021, 39, 7040–7051.

25. Yan, Z.; Guan, W.; Wen, S.; Huang, L.; Song, H. Multirobot Cooperative Localization Based on Visible Light Positioning and Odometer. IEEE Trans. Instrum. Meas. 2021, 70, 7004808.

26. Huang, K.; Shi, B.; Li, X.; Li, X.; Huang, S.; Li, Y. Multi-Modal Sensor Fusion for Auto Driving Perception: A Survey. arXiv 2022, arXiv:2202.02703.

27. Li, S.; Chen, S.; Li, X.; Zhou, Y.; Wang, S. Accurate and automatic spatiotemporal calibration for multi-modal sensor system based on continuous-time optimization. Inf. Fusion 2025, 120, 103071.

28. Lai, H.; Yin, P.; Scherer, S. Adafusion: Visual-lidar fusion with adaptive weights for place recognition. IEEE Robot. Autom. Lett. 2022, 7, 12038–12045.

29. Queralta, J.P.; Taipalmaa, J.; Pullinen, B.C.; Sarker, V.K.; Gia, T.N.; Tenhunen, H.; Gabbouj, M.; Raitoharju, J.; Westerlund, T. Collaborative multi-robot search and rescue: Planning, coordination, perception, and active vision. IEEE Access 2020, 8, 1000–1010.

30. Chen, Y.; Inaltekin, H.; Gorlatova, M. AdaptSLAM: Edge-assisted adaptive SLAM with resource constraints via uncertainty minimization. In Proceedings of the IEEE INFOCOM 2023 - IEEE Conference on Computer Communications, New York City, NY, USA, 17–20 May 2023; pp. 1–10.

31. He, Y.; Xie, J.; Hu, G.; Liu, Y.; Luo, X. Joint optimization of communication and mission performance for multi-UAV collaboration network: A multi-agent reinforcement learning method. Ad Hoc Netw. 2024, 164, 103602.

32. Ben Ali, A.J.; Kouroshli, M.; Semenova, S.; Hashemifar, Z.S.; Ko, S.Y.; Dantu, K. Edge-SLAM: Edge-assisted visual simultaneous localization and mapping. ACM Trans. Embed. Comput. Syst. 2022, 22, 1–31.

33. Koubâa, A.; Ammar, A.; Alahdab, M.; Kanhouch, A.; Azar, A.T. Deepbrain: Experimental evaluation of cloud-based computation offloading and edge computing in the internet-of-drones for deep learning applications. Sensors 2020, 20, 5240.

34. Liu, X.; Wen, S.; Zhao, J.; Qiu, T.Z.; Zhang, H. Edge-assisted multi-robot visual-inertial SLAM with efficient communication. IEEE Trans. Autom. Sci. Eng. 2024, 22, 2186–2198.

35. Hu, Y.; Peng, J.; Liu, S.; Ge, J.; Liu, S.; Chen, S. Communication-efficient collaborative perception via information filling with codebook. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 15481–15490.

36. Seçkin, A.Ç.; Karpuz, C.; Özek, A. Feature matching based positioning algorithm for swarm robotics. Comput. Electr. Eng. 2018, 67, 807–818.

| Technology | Accuracy | Range/Coverage | Update Rate | Robustness & Environmental Sensitivity | Scalability |
|---|---|---|---|---|---|
| Visual (Monocular/Stereo VO/SLAM) | Medium-High (cm-dm level) | Short (<10–20 m). Limited by field of view and feature quality. | Medium (10–30 Hz). Limited by computational load of image processing. | Low. Highly sensitive to lighting conditions (low light, glare), textureless environments, and dynamic obstacles. Prone to drift over time. | Medium. Requires significant processing per robot. Inter-robot loop closure can improve scalability but adds communication overhead. |
| UWB (Ultra-Wideband) | Medium (10–30 cm) under good conditions. | Long (up to 100–200 m in LOS). | Very High (100–1000 Hz). Low latency. | Medium-High. Robust to RF interference and multipath in theory, but performance can degrade in dense NLOS (Non-Line-of-Sight) conditions. | High. The system is inherently scalable; adding more tags has minimal impact on infrastructure, though network congestion can occur. |
| LiDAR | Very High (cm-level) | Medium-Long (up to 100–200 m for high-end models). | Medium-High (5–20 Hz for 3D LiDAR). | High. Robust to lighting conditions. Performance can degrade in the presence of smoke, dust, rain, or highly reflective/absorbent surfaces. | Low-Medium. Each robot typically requires its own LiDAR. Inter-robot loop closure is complex. Dense multi-robot environments can cause interference. |
| Sensor Fusion | Very High (cm-level, often drift-free with aiding sensors). | Depends on the primary sensor (e.g., UWB range or visual range). | High. IMU provides high-rate data between primary sensor updates, smoothing the output. | Very High. Mitigates individual sensor weaknesses, e.g., IMU counters visual drift; UWB anchors prevent LiDAR odometry drift. | Medium-High. Depends on the fusion architecture. Centralized fusion is complex; decentralized fusion is more scalable. |

| Environmental Conditions | Algorithm | mAPE (m) | σAPE (m) | maxAPE (m) | oLRP |
|---|---|---|---|---|---|
| Sunny (Strong Light) | Visual-only Localization | 0.85 | 0.21 | 1.52 | 0.28 |
| | Multi-modal Localization (w/o Resource Optimization) | 0.51 | 0.15 | 0.98 | 0.21 |
| | CCM-SLAM | 0.67 | 0.18 | 1.23 | 0.25 |
| | Proposed Method | 0.32 | 0.08 | 0.57 | 0.12 |
| Cloudy (Low Light) | Visual-only Localization | 1.12 | 0.35 | 2.01 | 0.36 |
| | Multi-modal Localization (w/o Resource Optimization) | 0.63 | 0.19 | 1.15 | 0.25 |
| | CCM-SLAM | 0.81 | 0.24 | 1.56 | 0.31 |
| | Proposed Method | 0.38 | 0.11 | 0.69 | 0.15 |
| Light Fog | Visual-only Localization | 1.35 | 0.42 | 2.37 | 0.41 |
| | Multi-modal Localization (w/o Resource Optimization) | 0.68 | 0.22 | 1.28 | 0.28 |
| | CCM-SLAM | 0.95 | 0.29 | 1.82 | 0.35 |
| | Proposed Method | 0.45 | 0.14 | 0.83 | 0.18 |
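
The accuracy tables report mAPE, σAPE, and maxAPE. The section does not define them, so the sketch below assumes that each sample's absolute positioning error is the Euclidean distance between the estimated and ground-truth target positions, and that σAPE is the population standard deviation of those errors. Under those assumptions the three statistics are computed as follows.

```python
import math
import statistics
from typing import Sequence, Tuple

Point = Sequence[float]


def ape_metrics(estimates: Sequence[Point],
                ground_truth: Sequence[Point]) -> Tuple[float, float, float]:
    """Return (mAPE, sigma_APE, maxAPE) from per-sample Euclidean position errors."""
    if len(estimates) != len(ground_truth) or not estimates:
        raise ValueError("need equally long, non-empty sequences")
    errors = [math.dist(e, g) for e, g in zip(estimates, ground_truth)]
    return statistics.fmean(errors), statistics.pstdev(errors), max(errors)
```

If the authors used the sample standard deviation instead, `statistics.stdev` would replace `statistics.pstdev`; the difference is small for long trajectories.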

| Occlusion Type | Algorithm | mAPE (m) | σAPE (m) | oLRP_FP | oLRP_FN |
|---|---|---|---|---|---|
| Building gap occlusion | Single Visual Localization | 1.27 | 0.41 | 0.25 | 0.22 |
| | Multimodal Localization without Resource Optimization | 0.68 | 0.23 | 0.16 | 0.13 |
| | CCM-SLAM | 0.92 | 0.31 | 0.21 | 0.17 |
| | Proposed Algorithm | 0.35 | 0.10 | 0.09 | 0.06 |
| Under tree shade | Single Visual Localization | 1.05 | 0.35 | 0.22 | 0.19 |
| | Multimodal Localization without Resource Optimization | 0.65 | 0.21 | 0.15 | 0.12 |
| | CCM-SLAM | 0.87 | 0.28 | 0.19 | 0.15 |
| | Proposed Algorithm | 0.39 | 0.12 | 0.08 | 0.05 |
| Multi-target overlap | Single Visual Localization | 1.42 | 0.47 | 0.31 | 0.28 |
| | Multimodal Localization without Resource Optimization | 0.75 | 0.26 | 0.18 | 0.15 |
| | CCM-SLAM | 1.03 | 0.35 | 0.21 | 0.17 |
| | Proposed Algorithm | 0.42 | 0.13 | 0.08 | 0.05 |
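
The relative improvements implied by the urban occlusion table can be recomputed directly from its mAPE column using relative reduction = (baseline - proposed) / baseline. The dictionary below simply transcribes that table; the helper names are illustrative.

```python
from typing import Dict

# mAPE values (m) transcribed from the urban occlusion table.
MAPE_URBAN: Dict[str, Dict[str, float]] = {
    "Building gap occlusion": {
        "Single Visual Localization": 1.27,
        "Multimodal Localization without Resource Optimization": 0.68,
        "CCM-SLAM": 0.92,
        "Proposed Algorithm": 0.35,
    },
    "Under tree shade": {
        "Single Visual Localization": 1.05,
        "Multimodal Localization without Resource Optimization": 0.65,
        "CCM-SLAM": 0.87,
        "Proposed Algorithm": 0.39,
    },
    "Multi-target overlap": {
        "Single Visual Localization": 1.42,
        "Multimodal Localization without Resource Optimization": 0.75,
        "CCM-SLAM": 1.03,
        "Proposed Algorithm": 0.42,
    },
}


def relative_reduction(baseline: float, proposed: float) -> float:
    """Fractional error reduction of `proposed` relative to `baseline`."""
    return (baseline - proposed) / baseline


def reductions_percent(table: Dict[str, Dict[str, float]],
                       method: str = "Proposed Algorithm") -> Dict[str, Dict[str, float]]:
    """Percentage mAPE reduction of `method` against every other row, per scenario."""
    return {
        scenario: {name: round(100 * relative_reduction(value, rows[method]), 1)
                   for name, value in rows.items() if name != method}
        for scenario, rows in table.items()
    }
```

For example, against the multimodal baseline without resource optimization, the reduction is 48.5% for building gap occlusion, 40.0% under tree shade, and 44.0% for multi-target overlap.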

| Resource State | Algorithm | η_B (%) | U_CPU (%) | Detector Type | mAPE (m) |
|---|---|---|---|---|---|
| Bandwidth 2 Mbps (Low Bandwidth) | Single Visual Localization | 95 | 62 | YOLOv5s | 0.98 |
| | Multimodal Localization without Resource Optimization | 120 | 78 | Faster R-CNN | 0.89 |
| | CCM-SLAM | 105 | 68 | Monocular SLAM | 1.05 |
| | Proposed Algorithm | 82 | 48 | SSD MobileNet-v2 | 0.51 |
| CPU Load 90% (High Load) | Single Visual Localization | 75 | 95 | YOLOv5n | 1.12 |
| | Multimodal Localization without Resource Optimization | 88 | 100 | Faster R-CNN | 0.76 |
| | CCM-SLAM | 82 | 98 | Monocular SLAM | 1.23 |
| | Proposed Algorithm (Task Migration) | 72 | 88 | YOLOv5s | 0.43 |
| Bandwidth 20 Mbps + CPU Load 30% (Sufficient Resources) | Single Visual Localization | 32 | 45 | YOLOv5l | 0.65 |
| | Multimodal Localization without Resource Optimization | 48 | 65 | Faster R-CNN | 0.41 |
| | CCM-SLAM | 42 | 58 | Monocular SLAM | 0.72 |
| | Proposed Algorithm | 35 | 45 | Faster R-CNN | 0.29 |

| Study/Paper | Core Methodology | Sensor Type(s) | Study Area and Test Environment | Localization Accuracy | Multi-Stage Collaborative Verification |
|---|---|---|---|---|---|
| Li et al. [9] | Hierarchical control & SLAM with COTS hardware. | Vision, IMU | Controlled/Outdoor | Not explicitly quantified (focus on system feasibility) | No |
| Akram et al. [4] | UAV-USV visual data interaction for GNSS-denied localization. | Vision, GNSS | Marine | <1.0 m | No |
| Rudol et al. [2] | Fusion of visual detection results from multiple UAVs. | Vision | Outdoor, partially occluded | Qualitative improvement (40% efficiency gain) | No |
| Typical UWB-based [10] | UWB ranging for relative positioning. | UWB, IMU | GNSS-denied Indoor/Outdoor | ~0.1–0.3 m | No |
| Our Proposed Method | Multimodal fusion with CMA, dynamic resource optimization, and multi-stage verification. | Vision, LiDAR, Radar, UWB, IMU | Marine, Urban Occlusion, Dynamic Resource Scenarios | <0.5 m (mAPE); 45% error reduction vs. baseline | Yes (Data association, EKF-weighted fusion, Grubbs’ test) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, H.; Fu, Y.; Ma, Y.; Zhang, W. Multimodal Fusion and Dynamic Resource Optimization for Robust Cooperative Localization of Low-Cost UAVs. Drones 2025, 9, 820. https://doi.org/10.3390/drones9120820

Liu H, Fu Y, Ma Y, Zhang W. Multimodal Fusion and Dynamic Resource Optimization for Robust Cooperative Localization of Low-Cost UAVs. Drones. 2025; 9(12):820. https://doi.org/10.3390/drones9120820

Chicago/Turabian Style: Liu, Hongfu, Yajing Fu, Yangyang Ma, and Wanpeng Zhang. 2025. "Multimodal Fusion and Dynamic Resource Optimization for Robust Cooperative Localization of Low-Cost UAVs" Drones 9, no. 12: 820. https://doi.org/10.3390/drones9120820

APA Style: Liu, H., Fu, Y., Ma, Y., & Zhang, W. (2025). Multimodal Fusion and Dynamic Resource Optimization for Robust Cooperative Localization of Low-Cost UAVs. Drones, 9(12), 820. https://doi.org/10.3390/drones9120820