1. Introduction

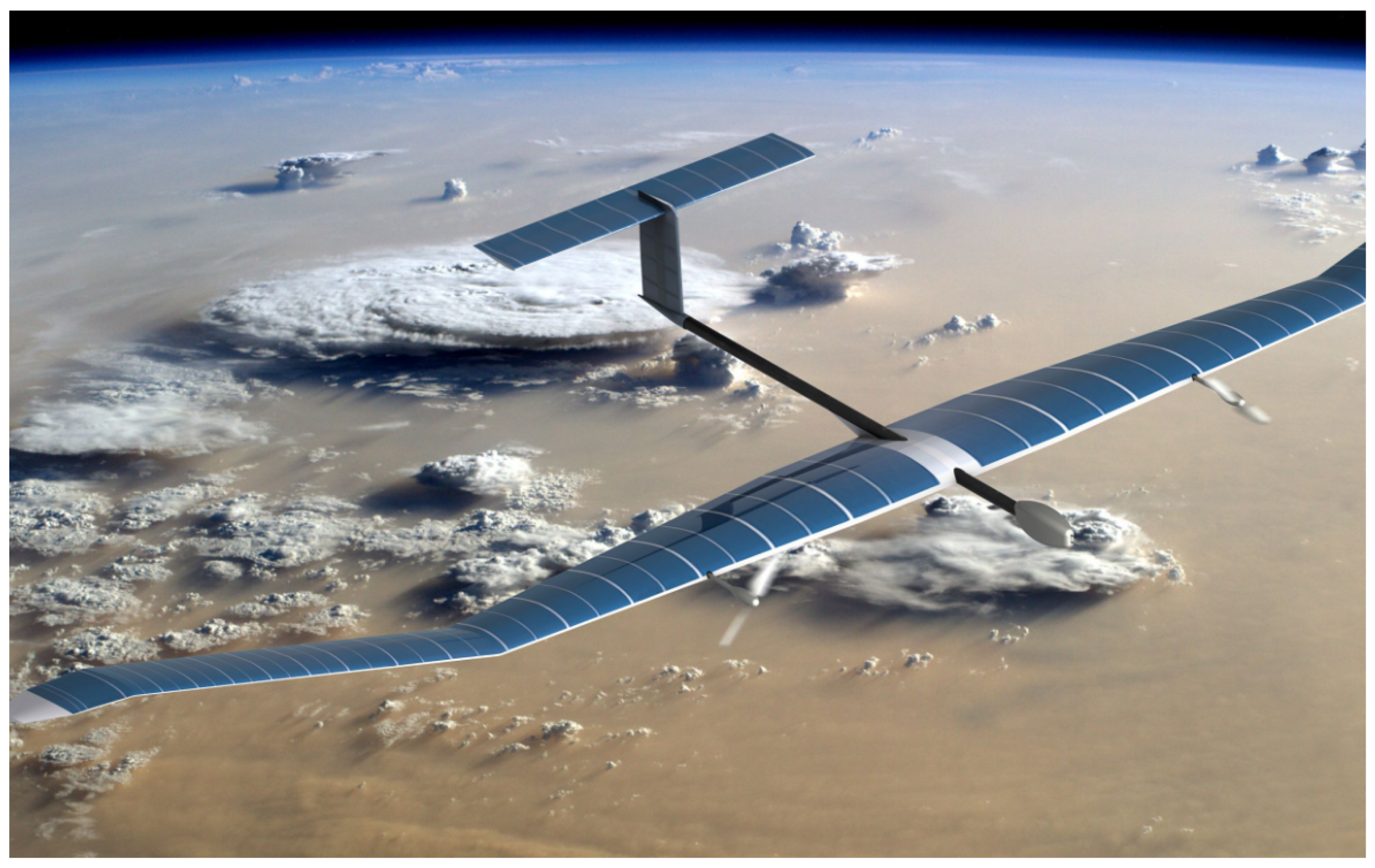

High-altitude solar drones, often referred to as High-Altitude Long-Endurance (HALE) drones, are platforms designed for long-endurance missions in the stratosphere. As shown in Figure 1, their most notable physical characteristic is a very high aspect ratio: the wings are extremely long and narrow to accommodate large-area photovoltaic arrays. This morphology allows them to fly and charge on solar power during the day while relying on batteries for continued flight overnight. As the application of these drones expands, stringent safety assurance becomes paramount. Unlike conventional drones, high-altitude solar drones must continuously account for energy constraints during operation. Therefore, the principal safety risk is not isolated to individual components but emerges from the intricate, dynamic interplay between the Flight Control System (FCS) and the Energy Management System (EMS), referred to here as the flight control–energy coupling. If system defects are not identified in the preliminary design stages, they can precipitate catastrophic failures, incurring remediation costs that are orders of magnitude greater than the cost of early prevention.

While established safety analysis methodologies exist, such as Fault Tree Analysis (FTA) [1] and Failure Mode and Effect Analysis (FMEA) [2], their conventional application has a fundamental limitation. These techniques are typically executed as post-design validation activities, divorced from the formative stages of system architecture. This methodological gap means that safety considerations are not integrated into the design blueprint from its inception, often leading to the late discovery of design-induced vulnerabilities that necessitate costly and disruptive modifications. To address this shortcoming, modern systems engineering advocates adopting safety modeling at the very start of the design process. Specifically, SysML-based safety modeling provides a structured, model-driven framework for a holistic analysis of the system. By formally capturing safety requirements and constraints within the system model itself, this approach aims to ensure that reliability is “designed in” rather than “inspected in,” thereby enhancing dependable future operation. However, applying this paradigm in practice remains a significant challenge: identifying relevant hazards and translating them into the formal semantics of a SysML model is still labor-intensive, relies heavily on the experience of senior safety engineers, and demands a substantial investment of time.

The advent of Large Language Models (LLMs) offers a promising avenue for automating this complex modeling process. However, high-quality modeling cannot be achieved through generic models or simple prompt engineering. Advanced reasoning frameworks like Graph of Thoughts (GoT) [3] are too general-purpose and lack the domain-specific grounding necessary for this nuanced safety field. Similarly, optimization techniques like Direct Preference Optimization (DPO), when focused only on the final output (a practice known as “behavioral alignment”), can produce a correct result without ensuring the underlying reasoning is sound. For high-altitude solar drones, where an AI merely guessing the right answer is unacceptable, this limitation is critical. Our work builds upon these frameworks, but our contribution is a shift from “behavioral imitation” to “thought process alignment”. Unlike conventional methods that often merely fine-tune on expert data to mimic correct answers, our Knowledge-Enhanced Graph of Thoughts (K-EGoT) framework directly addresses the untrustworthy “black box” problem. We introduce the “Safety Rationale”: a verifiable, traceable reasoning step that makes the model’s logic transparent and auditable. The core innovation lies in how we use it: we shift the optimization target of DPO from the final output (behavior) to the quality of the reasoning process itself, compelling the model to internalize expert logic rather than superficial, spurious correlations.

Figure 1. Typical High-Altitude Solar Drones. Reproduced from [4].

We conducted a comprehensive experimental evaluation on a specially constructed dataset centered on real-world operational scenarios of high-altitude solar drones. The results show that our K-EGoT framework, leveraging dynamic exploration to solve complex coupled problems, significantly outperforms the standard prompting and fine-tuning baselines. This result indicates that our “thought process alignment” integrates domain knowledge more effectively than simple behavioral alignment or general-purpose reasoning alone.

The main contributions of this paper are as follows:

We propose a method to enhance the Graph of Thoughts (GoT) framework by introducing a “Safety Rationale,” enabling the deep coupling of general-purpose LLM reasoning with a verifiable, domain-specific drone safety knowledge base.

We demonstrate that using the “Safety Rationale” as the basis for Direct Preference Optimization (DPO) allows for aligning the model’s reasoning process with expert logic, a more robust approach than conventional behavioral alignment.

We construct and release a high-quality evaluation dataset for the flight control–energy coupling safety problem, including a domain knowledge base, which provides a valuable resource for future research. The dataset’s reliability is supported by a high inter-rater reliability score (Fleiss’ Kappa = 0.82).

Through comprehensive experiments, we provide empirical evidence that our knowledge-enhancement approach on a 7B model surpasses standard fine-tuning and prompting baselines on the same model, underscoring the value of verifiable reasoning over general capabilities in the domain of drone safety modeling.

The paper is organized as follows:

Introduction: describes the central role of safety as the applications of high-altitude solar UAVs expand. It points out that traditional safety modeling, which relies on manual expert analysis, struggles to keep pace with rapid development, and that existing large language model (LLM) reasoning frameworks such as Graph of Thoughts (GoT) are too general because they lack domain knowledge. It states that the purpose of this study is to propose the K-EGoT framework to fill these gaps, and briefly introduces the organization of the subsequent sections.

Background and related work: reviews existing safety analysis methods such as Fault Tree Analysis (FTA) and Failure Mode and Effect Analysis (FMEA), which are mainly used for post-design verification and are therefore detached from early system architecture, and traces the evolution of LLM reasoning paradigms from Chain of Thought (CoT) to Tree of Thoughts (ToT) and GoT. It also points out the shortcomings of current LLM applications in processing structured forms such as SysML state machine diagrams, and their lack of the verifiability and traceability required for UAV certification, which motivates the K-EGoT framework.

Intelligent safety modeling method: introduces the K-EGoT framework in detail, including its two core stages: “domain expert model training” (turning the base LLM into a domain expert through supervised fine-tuning and direct preference optimization) and “dynamic safety extension reasoning” (performing safety extension tasks with the trained expert model at the core). It describes the systematic construction of the UAV safety knowledge base (source extraction, classification and structuring, de-duplication and conflict resolution, formalization) and explains the implementation of the training and reasoning pipeline, such as the construction of the mixed-length chain-of-thought dataset and the two-phase optimization based on the “Safety Rationale”.

Experiments and analysis: designs experiments to verify the effectiveness of the K-EGoT framework. It first explains the experimental settings (a high-reliability dataset with expert annotations and a Fleiss’ Kappa of 0.82; two kinds of baselines, prompting-based and fine-tuning-based; all built on Qwen2-7B-Instruct). It then answers the research questions through three kinds of experiments: a performance comparison verifies that K-EGoT (SES 92.7) is significantly better than the baselines; an ablation study quantifies the contributions of GoT reasoning, rationale alignment, and dynamic reasoning; and a qualitative case analysis verifies the quality of the safety extensions and points out limitations in handling conflicting criteria.

Discussion: discusses the implications of the research, emphasizing the effectiveness of combining a general reasoning framework with a domain knowledge base for specialized fields, and the importance for UAV safety of verifying the reasoning process rather than only the result. It analyzes threats to validity (construct validity is mitigated through expert agreement, internal validity is controlled through a unified model and configuration, and external validity is addressed by proposing a roadmap for cross-domain generalization) and explains the value of K-EGoT in automating early safety analysis, reducing costs, and accelerating the development cycle.

Conclusion: summarizes the core contribution of the paper, namely the K-EGoT framework for automated safety modeling of high-altitude solar UAVs and the verification of its advantages; acknowledges current limitations (e.g., difficulties in handling implicit priority conflicts between criteria); and points out future research directions (development of a priority arbitration mechanism and integration of formal verification tools). Finally, author contributions, funding, data availability, and other information are provided, and Appendix A details the knowledge base safety criteria.

3. Intelligent Safety Modeling Method

3.1. Overall Architecture: The Knowledge-Enhanced Graph of Thoughts (K-EGoT) Framework

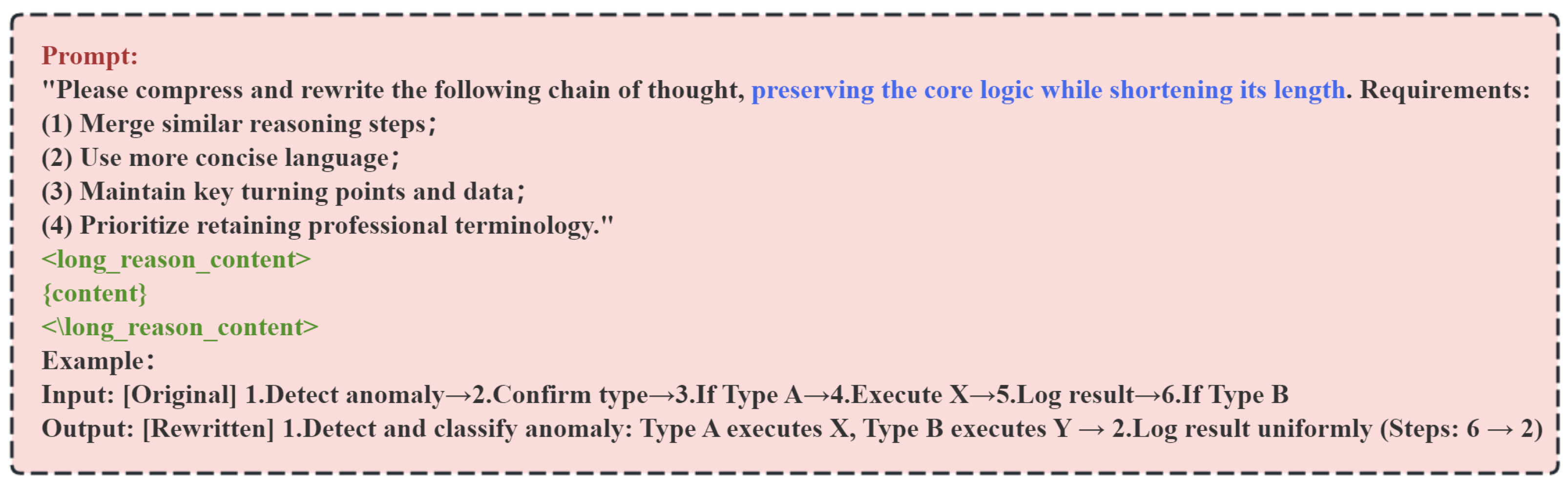

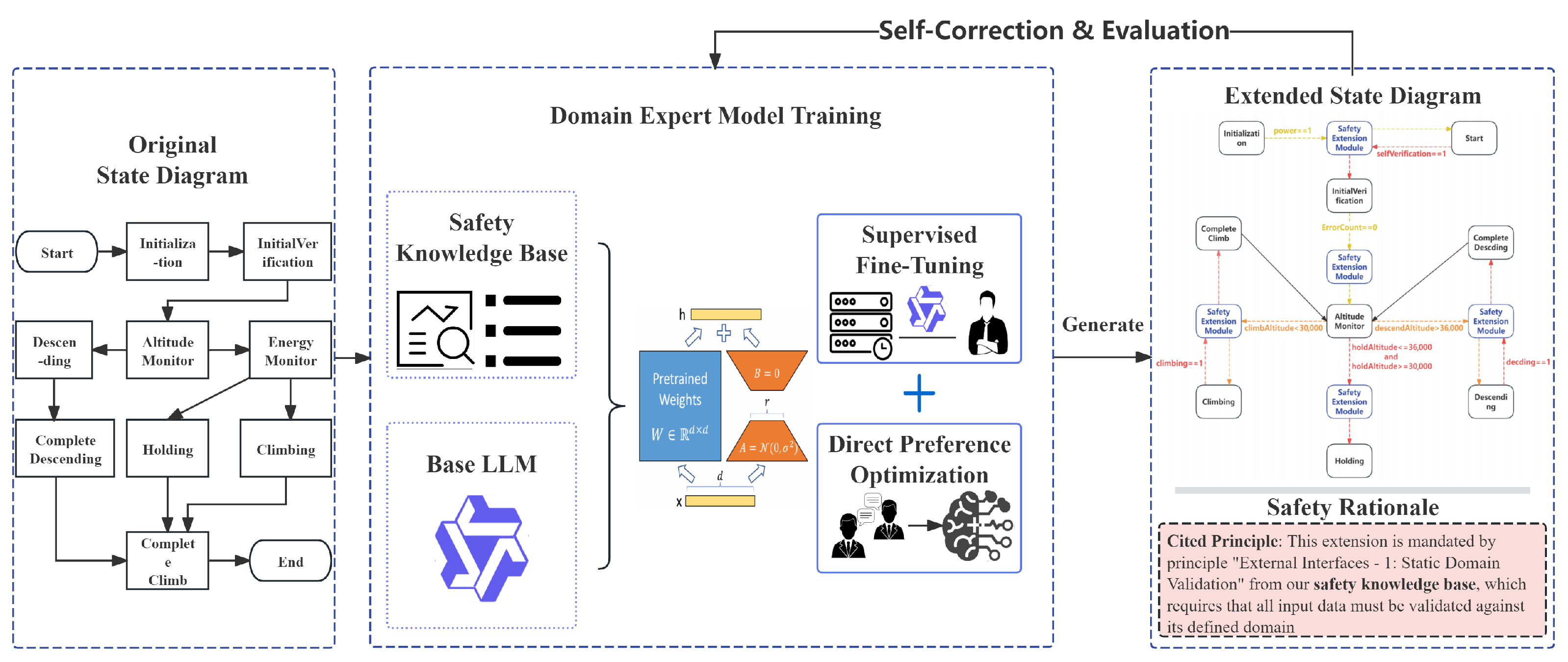

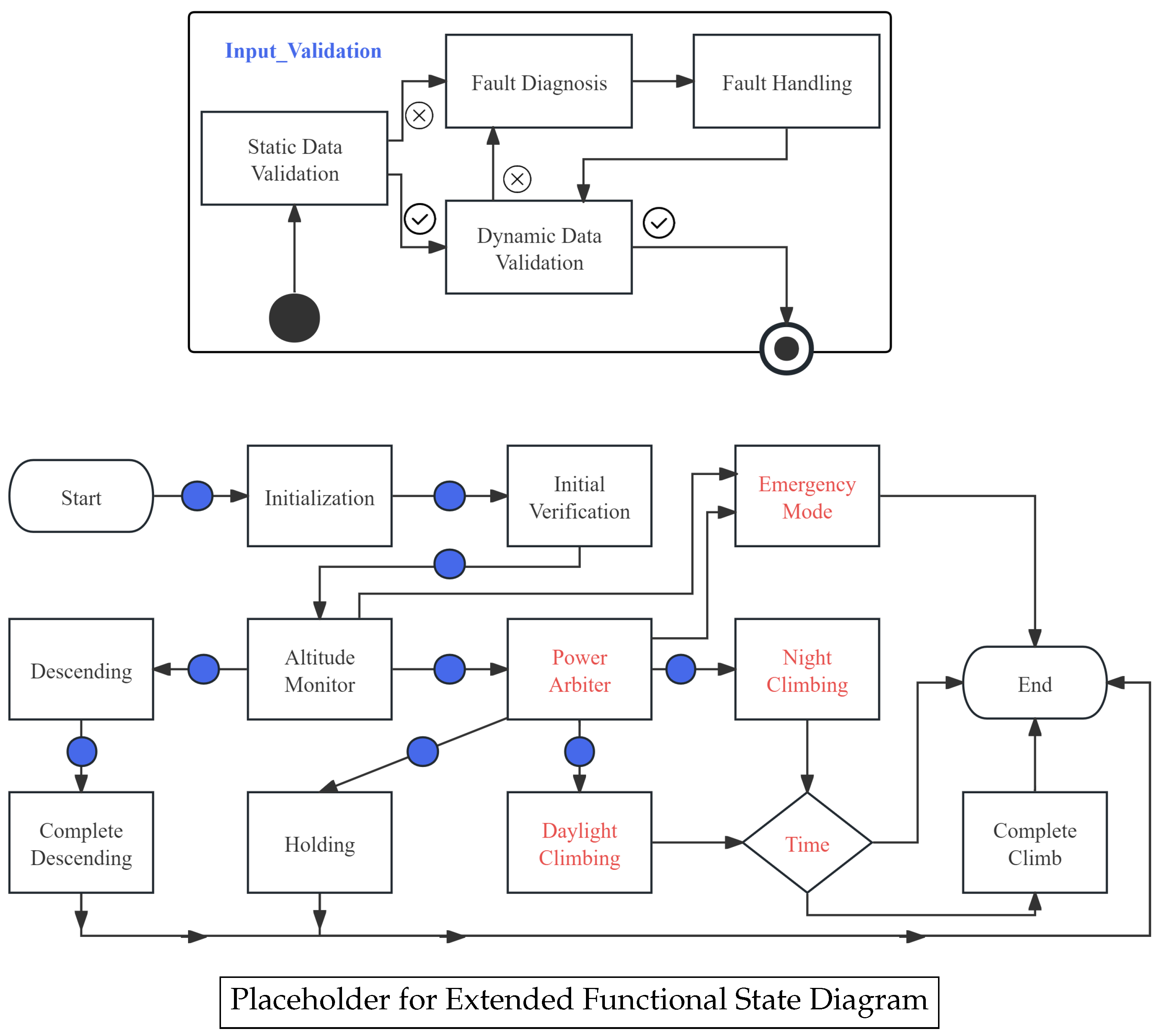

To address the challenge of formally and explicitly injecting implicit safety design criteria into early-stage design models for high-altitude solar drones, this paper proposes a Knowledge-Enhanced Graph of Thoughts (K-EGoT) framework. As shown in Figure 2, this framework aims to deeply integrate the generative reasoning capabilities of Large Language Models (LLMs) with the expert knowledge of the drone safety domain, thereby achieving automated and trustworthy safety extension of SysML state machine diagrams.

The implementation of this framework involves two core phases, clearly embodying the design philosophy of “first train the expert, then let the expert work”.

In Phase 1: Domain Expert Model Training, our goal is to cultivate a general-purpose Base Large Language Model (Base LLM) into a domain expert well-versed in the safety of high-altitude solar drone flight control–energy coupling. This phase begins with two key inputs: our constructed Drone Safety Knowledge Base and a Base LLM (e.g., Qwen2-7B). Subsequently, we adopt a Two-Phase Optimization Strategy: first, we perform basic capability alignment using domain datasets through Supervised Fine-Tuning (SFT); then, through Direct Preference Optimization (DPO), evaluated based on the quality of the “Safety Rationale,” we achieve a robust alignment with expert reasoning processes. The final output of this phase is a deeply domain-specialized Domain Expert Model.

In Phase 2: Dynamic Safety Extension Reasoning, we use the trained Domain Expert Model as the core “execution engine” to perform specific safety extension tasks within a structured graph that supports dynamic self-correction and intelligent exploration. This phase takes an Initial SysML State Machine Diagram as input. The execution engine then runs an internal loop: it generates an extension plan together with a safety rationale, then performs self-correction and evaluation against the knowledge base. This loop iterates through Dynamic Exploration & Optimization until an optimal solution is found. Finally, this phase outputs two key products: an extended safety state machine diagram with endogenous safety semantics, and a corresponding Traceable Safety Rationale.

3.2. Knowledge Base Curation

The foundation of our knowledge-enhancement approach is a verifiable, domain-specific knowledge base. The quality and structure of this knowledge are critical for grounding the LLM’s reasoning. We developed a systematic methodology to curate this knowledge base, transforming broad safety standards into a structured format composed of formalized objects with unique IDs and defined fields.

The curation process involved four key stages:

Source Identification and Extraction: Our process began with identifying authoritative sources, including general aerospace safety standards (e.g., ARP-4761) and internal design documentation for high-altitude solar drones. A team of three domain experts systematically reviewed these documents to extract atomic safety principles, requirements, and failure mode descriptions relevant to software design.

Classification and Structuring: The extracted raw principles were then classified into a structured hierarchy. We developed a five-category taxonomy corresponding to key aspects of the system’s design: (1) External Interfaces, (2) Functional Logic, (3) Functional Hierarchy, (4) States and Modes, and (5) Flight Control–Energy Coupling. Each principle was assigned to one or more categories.

De-duplication and Conflict Resolution: Principles extracted from different sources often had semantic overlaps. Experts collaboratively reviewed the classified principles to merge duplicates and rephrase them into a canonical form. Potential conflicts between principles (e.g., a safety principle requiring power-off of a component versus a functional principle requiring it to be on) were resolved by establishing explicit priority rules and context-dependent applicability notes.

Formalization: Finally, each curated principle was formalized into a machine-readable object with a unique ID, a concise natural-language description, and structured fields (e.g., Hazard, Context, Mitigation Guideline). This formalization is crucial for enabling the automated verification of the metric discussed in Section 4.2.

This rigorous curation process ensures that our knowledge base is not merely a collection of text but a structured and verifiable foundation for our K-EGoT framework. To facilitate a systematic failure mode analysis, the knowledge base is organized into five core categories: External Interfaces, Functional Logic, Functional Hierarchy, States and Modes, and the critical Flight Control–Energy Coupling. Each category contains specific tables of analysis criteria, with some classifications featuring finer subdivisions. For instance, the “Functional Logic” category is further detailed from four perspectives, including control computation and processing logic, whereas “Functional Hierarchy” is analyzed in terms of serial and parallel relationships. This hierarchical structure forms a comprehensive knowledge system designed to guide and validate the AI model’s reasoning process. The detailed safety criteria organized by this methodology are provided in Appendix A and Appendix B. These criteria form the core of our knowledge base, providing verifiable “factual evidence” for subsequent intelligent reasoning. For the complete, structured list of these criteria, categorized as described, please refer to Appendix A (Safety Criteria Knowledge Base).
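To make the formalization step concrete, the following is a minimal Python sketch of what one machine-readable knowledge-base entry could look like. The field names, the `priority` field, the ID format `FCE-001`, and the helper functions are illustrative assumptions and are not taken from Appendix A; only the five categories and the Hazard, Context, and Mitigation Guideline fields come from the text.

```python
from dataclasses import dataclass

# The five categories named in the text.
CATEGORIES = (
    "External Interfaces",
    "Functional Logic",
    "Functional Hierarchy",
    "States and Modes",
    "Flight Control–Energy Coupling",
)


@dataclass(frozen=True)
class SafetyCriterion:
    """One formalized knowledge-base entry (field names are illustrative)."""
    criterion_id: str
    description: str
    hazard: str
    context: str
    mitigation: str
    categories: tuple = ()
    priority: int = 0  # hypothetical: higher value wins when two criteria conflict

    def __post_init__(self):
        unknown = [c for c in self.categories if c not in CATEGORIES]
        if not self.categories or unknown:
            raise ValueError(f"invalid categories: {unknown or 'none given'}")


def build_index(criteria):
    """Index criteria by ID and reject duplicate IDs."""
    index = {}
    for criterion in criteria:
        if criterion.criterion_id in index:
            raise ValueError(f"duplicate ID: {criterion.criterion_id}")
        index[criterion.criterion_id] = criterion
    return index


def cited_ids_are_valid(cited_ids, index):
    """Check that every criterion ID cited by a rationale exists in the index."""
    return all(cid in index for cid in cited_ids)


# Hypothetical entry, loosely based on the constraint quoted in the Discussion.
example = SafetyCriterion(
    criterion_id="FCE-001",
    description="Prohibit climbing during low battery at night.",
    hazard="Battery depletion before sunrise",
    context="Night flight with low state of charge",
    mitigation="Add a guard that blocks the climb transition",
    categories=(CATEGORIES[4],),
    priority=10,
)
index = build_index([example])
```

Because every entry carries a unique ID, a check such as `cited_ids_are_valid(["FCE-001"], index)` can confirm mechanically that a rationale only cites criteria that exist, which is the kind of traceability the structured format is meant to support.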

3.3. K-EGoT Training and Reasoning Pipeline

Having established a domain knowledge base, the K-EGoT framework “activates” this knowledge through an advanced training and reasoning pipeline, enabling it to dynamically guide and optimize the safety modeling process.

3.3.1. Phase 1: Base Model Training Based on Thought Process Alignment

The first phase of the K-EGoT framework is to train a base model with an “expert-level” safety mindset. This phase aims to make the model not only imitate correct outputs but also understand and reproduce the intrinsic logic that safety experts use in their decision-making.

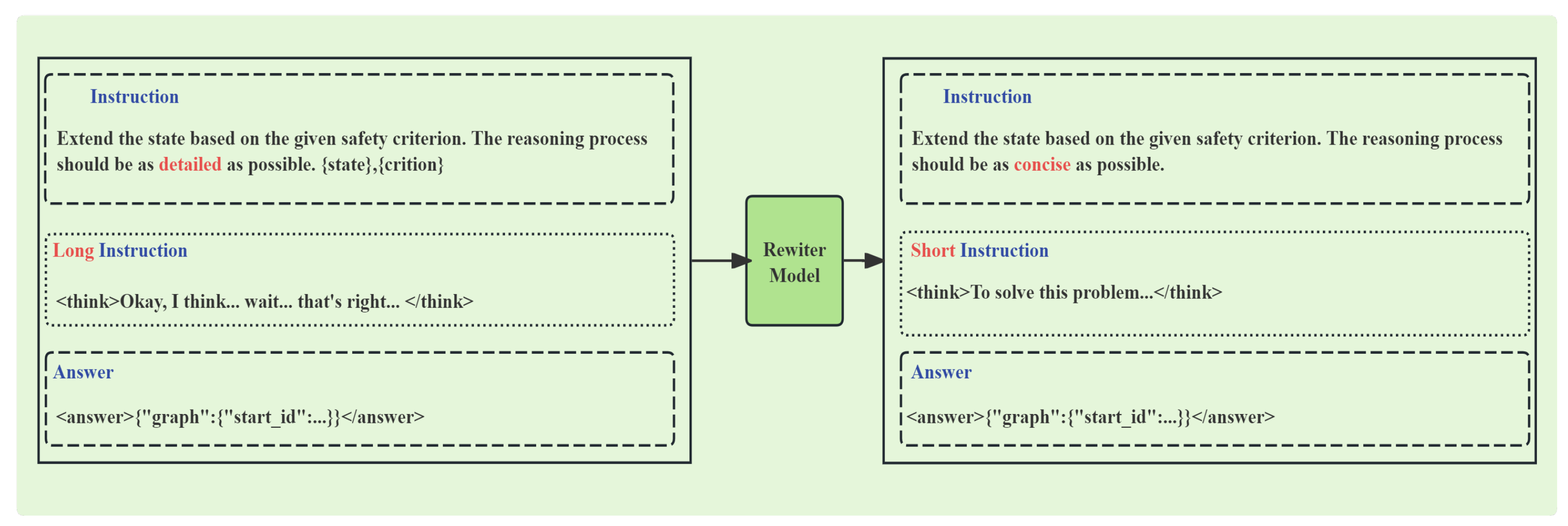

Dataset Construction for High-Altitude Solar Drone Safety Scenarios: To train the LLM to master the specific safety logic of high-altitude solar drones, we created an extended state machine diagram dataset. This dataset is composed of both long and short Chains of Thought (CoT), which provide the foundational reasoning paths for the subsequent “Graph of Thoughts” exploration.

The long-chain data was generated first. We used the DeepSeek-R1 model, providing it with specific scenarios for high-altitude solar drones (e.g., “Design a state transition for a drone from daytime charging cruise to nighttime energy-saving mode, considering the risk of power fluctuations due to cloud cover”). This process created a dataset featuring detailed, step-by-step reasoning and explicit “Safety Rationales”.

Separately, the short-chain data was created using DeepSeek-V3 as a rewriter. This model compressed the lengthy reasoning processes from the long-chain data into concise versions that retained the core logic. By mixing these short reasoning chains with the original long ones during supervised fine-tuning, the model learns both comprehensive reasoning patterns and efficient reasoning shortcuts. This enables it to generate logically rigorous yet concise reasoning.

Figure 3 illustrates this rewriting process, where a rewriter model is prompted to compress a detailed, lengthy chain of thought (“Long Instruction”) into a concise version (“Short Instruction”) that retains the core logic.

When rewriting the Chains of Thought generated by DeepSeek-R1, the prompt template used is shown in Appendix B, which includes explicit constraints such as retaining key steps and prioritizing professional terminology (e.g., SOC, MPPT).

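For each data point in the long-chain dataset $\mathcal{D}_{long}$, the rewriter model is used to convert the long Chain of Thought trajectory $y_i^{long}$ into a shorter one $y_i^{short}$, formally expressed as Equation (1):

$$y_i^{short} = \mathrm{Rewriter}\left(x_i, y_i^{long}\right) \quad (1)$$

where $x_i$ represents the “input scene prompt” corresponding to the i-th data point, that is, the initial task description that triggers the model to generate the reasoning trajectory. Using these rewritten short CoT trajectories, a short reasoning chain dataset $\mathcal{D}_{short}$ is constructed. Finally, the long and short datasets are randomly merged to create a new mixed dataset $\mathcal{D}_{mix}$, as in Equation (2):

$$\mathcal{D}_{mix} = \mathcal{D}_{long} \cup \mathcal{D}_{short} \quad (2)$$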

Figure 4 provides an example of the final data structure, contrasting the “long think” tag in the long-CoT dataset (left) with the “short think” tag in the short-CoT dataset (right).
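The construction of the mixed dataset can be sketched as follows. This is a minimal illustration under stated assumptions: `compress_stub` is a hypothetical stand-in for the rewriter LLM (it simply keeps the first and last reasoning step), the record layout is invented for the example, and only the “long think” and “short think” tags come from the text.

```python
import random


def compress_stub(prompt, long_cot):
    """Stand-in for the rewriter LLM: keep only the first and last step."""
    steps = [s.strip() for s in long_cot.split(".") if s.strip()]
    kept = steps if len(steps) <= 2 else [steps[0], steps[-1]]
    return ". ".join(kept) + "."


def build_mixed_dataset(long_data, rewriter=compress_stub, seed=0):
    """Pair each long CoT with a rewritten short CoT, then shuffle both together."""
    long_tagged = [
        {"prompt": p, "cot": c, "tag": "long think"} for p, c in long_data
    ]
    short_tagged = [
        {"prompt": p, "cot": rewriter(p, c), "tag": "short think"}
        for p, c in long_data
    ]
    mixed = long_tagged + short_tagged
    random.Random(seed).shuffle(mixed)  # random merge of the two datasets
    return mixed


long_data = [
    ("Day-to-night transition under cloud cover",
     "Check SOC. Estimate cloud-induced power drop. Enter energy-saving mode."),
    ("Low-battery handling at night",
     "Check SOC. Block climb."),
]
mixed = build_mixed_dataset(long_data)
```

Each scenario prompt therefore appears twice in the mixed set, once with its full reasoning chain and once with the compressed one, so supervised fine-tuning sees both styles for the same task.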

Two-Phase Model Optimization based on “Safety Rationale”: (1) Supervised Fine-Tuning (SFT) Cold-Start Phase: The LLM is fine-tuned using the mixed dataset $\mathcal{D}_{mix}$ to stimulate its reasoning capabilities for the high-altitude solar drone flight control and energy management domain. The optimization objective for Mixture SFT, denoted as M, is formulated in Equations (3)–(5), where $y_i$ represents the target output corresponding to the i-th data point and $x_i$ represents the input scene prompt for the i-th data point. This mixed approach ensures the model learns both the comprehensive flight control–energy coupling reasoning patterns from the long Chains of Thought and the efficient reasoning methods demonstrated by the short ones.

(2) Thought Process Alignment Phase based on Direct Preference Optimization (DPO): After the SFT cold-start, the DPO training phase begins. The core of this phase is to achieve thought process alignment. The positive and negative sample pairs we construct are judged not only by the correctness of the final model code but, more importantly, by the logicality and completeness of the accompanying “Safety Rationale”. For example:

Positive Sample ($y^{+}$): The extension plan is correct, and its “Safety Rationale” clearly references knowledge base criteria with a complete logical chain.

Negative Sample ($y^{-}$): The extension plan may be coincidentally correct, but its “Safety Rationale” is logically confused, far-fetched, or fails to cite the most relevant criteria.

The optimization objective function for DPO can be expressed as Equation (6):

$$\mathcal{L}_{DPO}(\theta) = -\sum_{x \in \mathcal{D}} \log \frac{\sum_{y^{+} \in Y^{+}(x)} P_{\theta}(y^{+} \mid x)}{\sum_{y^{+} \in Y^{+}(x)} P_{\theta}(y^{+} \mid x) + \sum_{y^{-} \in Y^{-}(x)} P_{\theta}(y^{-} \mid x)} \quad (6)$$

where $\mathcal{L}_{DPO}(\theta)$ denotes the Direct Preference Optimization (DPO) loss function and $\theta$ represents the learnable parameters of the domain expert model. A smaller loss value indicates that the model is more inclined to generate outputs preferred by experts.

$\mathcal{D}$ is the DPO training dataset, composed of “input-positive/negative sample pairs” for the safety modeling scenario of high-altitude solar-powered unmanned aerial vehicles (UAVs).

x stands for a single input sample in this dataset.

$Y^{+}(x)$ is the set of positive samples corresponding to input x; positive samples must satisfy the criteria of “correct SysML extension scheme, complete logical safety basis, and citation of knowledge base standards”.

$Y^{-}(x)$ is the set of negative samples corresponding to input x; negative samples are characterized by “even if the extension scheme is coincidentally correct, the safety basis still has logical confusion or fails to cite key knowledge base standards”.

$P_{\theta}(y^{+} \mid x)$ and $P_{\theta}(y^{-} \mid x)$ respectively represent the probabilities that the model, with parameters $\theta$, generates a positive sample $y^{+}$ and a negative sample $y^{-}$ given input x. Through the cross-entropy loss, the formula forces the model to maximize the “proportion of probabilities for generating positive samples”: the numerator is the sum of generation probabilities of all positive samples, while the denominator is the sum of generation probabilities of both positive and negative samples. The logarithm of this ratio is taken, a negative sign is added, and then the sum is calculated by iterating over all input samples in $\mathcal{D}$. Ultimately, this aligns the model’s reasoning process with the expert’s safety logic.

In this way, we deeply integrate the domain knowledge of flight control–energy coupling into the DPO training process, strengthening the model’s safety awareness and making it more inclined to generate extension plans that comply with the safety specifications for high-altitude solar drones.
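The preference loss described above, with the probability mass of positive samples in the numerator and the mass of positive plus negative samples in the denominator, can be computed as in the following sketch. It assumes sequence-level log-probabilities are already available and works in log space for numerical stability; the function names are hypothetical. Note that this follows the ratio-of-sums form described in this section, whereas the commonly used DPO objective compares log-probability ratios against a frozen reference model.

```python
import math


def logsumexp(values):
    """Numerically stable log(sum(exp(v) for v in values))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def rationale_preference_loss(batch):
    """Sum over inputs of -log( P(positives) / (P(positives) + P(negatives)) ).

    batch: list of (pos_logps, neg_logps), where each element is a list of
    sequence log-probabilities under the current model for one input x.
    """
    total = 0.0
    for pos_logps, neg_logps in batch:
        log_numerator = logsumexp(pos_logps)
        log_denominator = logsumexp(list(pos_logps) + list(neg_logps))
        total += -(log_numerator - log_denominator)
    return total


# One input with a single positive (p = 0.3) and a single negative (p = 0.1):
# the ratio is 0.3 / 0.4 = 0.75, so the loss is -log(0.75).
loss = rationale_preference_loss([([math.log(0.3)], [math.log(0.1)])])
```

Raising the probability of a well-grounded rationale, or lowering that of a poorly grounded one, moves the ratio toward 1 and the loss toward 0.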

3.3.2. Phase 2: Dynamic Safety Extension Reasoning Based on K-EGoT

Once a deeply domain-aligned expert model is available, the second phase of K-EGoT—dynamic reasoning—begins. This phase uses the trained model as an “execution engine” to perform the actual state machine diagram safety extension within a structured, self-correcting graph. The process, which achieves efficient and reliable exploration of complex safety problems through structured node interactions and dynamic parameter adjustments, is formally described in Algorithm 1.

The reasoning process of K-EGoT consists of three types of logical nodes that work together to form an iterative self-optimization loop:

Answering Node: This node is executed by our trained expert model, $M_{\theta}$. It receives the current SysML model fragment $m_p$ and an aggregated prompt $p_p$ from its parent node $v_p$. It then generates a set of candidate extensions, where each candidate consists of a new model fragment $m_i$ and a corresponding, knowledge-base-traceable “Safety Rationale” $r_i$. This stochastic generation process can be formalized as sampling from the expert model’s probability distribution, conditioned on the input and controlled by a dynamic exploration factor $\tau$, as in Equation (7):

$$(m_i, r_i) \sim P_{\theta}\left(\cdot \mid m_p, p_p; \tau\right) \quad (7)$$

Evaluation Node: This node is also executed by our expert model. It receives an output pair $(m_i, r_i)$ from an Answering Node and performs a domain-specific evaluation. Its task is to assess the quality of the “Safety Rationale” $r_i$ against the domain knowledge base $\mathcal{K}$. The evaluation yields a normalized score $s_i$ and a meta-rationale, the “Evaluation Rationale” $e_i$. The score is a function of the rationale’s logical coherence and its grounding in the knowledge base, as in Equation (8):

$$s_i = \alpha \cdot \mathrm{Coherence}(r_i) + (1 - \alpha) \cdot \mathrm{Grounding}(r_i, \mathcal{K}) \quad (8)$$

where $\alpha$ is a weighting factor.

Aggregate Rationale Node: This node is responsible for synthesizing information to guide future reasoning steps. It fuses the prompt $p_p$ that led to the current solution with the generated rationale $r_i$ and the evaluation rationale $e_i$. The function $\mathrm{Agg}$ serves as the aggregation mechanism: it systematically combines the input elements ($p_p$, $r_i$, and $e_i$) to identify their respective strengths and weaknesses. Through this integration, $\mathrm{Agg}$ produces a more precise and informative aggregated prompt $p_i$, which embeds corrective instructions or refined guidance for the subsequent layer of Answering Nodes. In essence, $\mathrm{Agg}$ ensures that prior reasoning (encoded in $p_p$), newly generated insights ($r_i$), and evaluative feedback ($e_i$) are harmonized into a cohesive and improved prompt, enabling more effective reasoning in later stages, as in Equation (9):

$$p_i = \mathrm{Agg}\left(p_p, r_i, e_i\right) \quad (9)$$

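| Algorithm 1 K-EGoT Dynamic Safety Extension Reasoning |
| --- |
| 1: Input: Initial SysML model $m_0$, Knowledge Base $\mathcal{K}$, Expert Model $M_{\theta}$, Max iterations $T$, Exploration factor range $[\tau_{min}, \tau_{max}]$, Score threshold $s_{th}$, Transition sharpness factor $\beta$. |
| 2: Output: Optimized safety-extended model $m^{*}$. |
| 3: Initialize thought graph $G = (V, E)$ with a root node $v_0$ where $m(v_0) = m_0$, $p(v_0) = \emptyset$. |
| 4: Initialize best score $s^{*} \leftarrow 0$, best model $m^{*} \leftarrow m_0$. |
| 5: Initialize exploration factor $\tau \leftarrow \tau_{max}$. |
| 6: for $t = 1$ to $T$ do |
| 7: Select a leaf node $v_p$ from G for expansion based on a selection policy $\pi$. |
| 8: // Answering Node: Generate k candidate extensions |
| 9: for $i = 1$ to k do |
| 10: $(m_i, r_i) \sim P_{\theta}(\cdot \mid m_p, p_p; \tau)$ |
| 11: end for |
| 12: // Evaluation Node: Score each candidate |
| 13: for $i = 1$ to k do |
| 14: $(s_i, e_i) \leftarrow \mathrm{Evaluate}(m_i, r_i, \mathcal{K})$ |
| 15: if $s_i > s^{*}$ then |
| 16: $s^{*} \leftarrow s_i$, $m^{*} \leftarrow m_i$ |
| 17: end if |
| 18: end for |
| 19: // Aggregate Rationale Node: Create new nodes and update graph |
| 20: for $i = 1$ to k do |
| 21: Create new node $v_i$ in V. |
| 22: $m(v_i) \leftarrow m_i$, $r(v_i) \leftarrow r_i$, $s(v_i) \leftarrow s_i$. |
| 23: $p(v_i) \leftarrow \mathrm{Agg}(p_p, r_i, e_i)$. |
| 24: Add edge $(v_p, v_i)$ to E. |
| 25: end for |
| 26: // Dynamic Exploration Control: Update exploration factor for next iteration |
| 27: $\tau \leftarrow \tau_{min} + (\tau_{max} - \tau_{min}) \cdot \sigma(-\beta (s_{best} - s_{th}))$, where $s_{best} = \max_i s_i$ |
| 28: end for |
| 29: return $m^{*}$ |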
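The control flow of Algorithm 1 can be sketched in Python as below. This is a toy illustration, not the authors’ implementation: the expert model is replaced by deterministic stubs (`make_stubs`), the selection policy is assumed to be greedy on node score, the SysML fragment is a plain string, and the exploration factor update is passed in as a callable (`update_factor`) with a simple step rule used as a stand-in. All of these names and choices are assumptions.

```python
import random
from dataclasses import dataclass, field


@dataclass(eq=False)
class Node:
    model: str    # SysML fragment, represented as plain text here
    prompt: str   # aggregated prompt passed to the next answering step
    score: float = 0.0
    children: list = field(default_factory=list)


def kegot_search(initial_model, generate, evaluate, aggregate, update_factor,
                 max_iters=5, k=3, initial_factor=1.0):
    root = Node(initial_model, prompt="")
    leaves = [root]
    best_score, best_model = 0.0, initial_model
    factor = initial_factor
    for _ in range(max_iters):
        # Selection policy (assumption): expand the highest-scoring leaf.
        parent = max(leaves, key=lambda n: n.score)
        leaves.remove(parent)
        # Answering Node: generate k candidate (model, rationale) pairs.
        candidates = [generate(parent.model, parent.prompt, factor) for _ in range(k)]
        # Evaluation Node: score each candidate and track the global best.
        scored = [(m, r) + evaluate(m, r) for m, r in candidates]
        for model, _, score, _ in scored:
            if score > best_score:
                best_score, best_model = score, model
        # Aggregate Rationale Node: create child nodes with refined prompts.
        for model, rationale, score, eval_rationale in scored:
            child = Node(model, aggregate(parent.prompt, rationale, eval_rationale), score)
            parent.children.append(child)
            leaves.append(child)
        # Dynamic exploration control for the next iteration.
        factor = update_factor(max(s for _, _, s, _ in scored), factor)
    return best_model, best_score


REQUIRED = ("SOC check", "night climb guard", "cloud-cover fallback")


def make_stubs(seed=0):
    """Toy stand-ins for the expert model; a guard is just a text label."""
    rng = random.Random(seed)

    def generate(model, prompt, factor):
        missing = [g for g in REQUIRED if g not in model]
        if not missing:
            return model, "all guards already present"
        # High factor: pick any guard at random; low factor: pick the first missing one.
        guard = rng.choice(REQUIRED) if rng.random() < factor else missing[0]
        new_model = model if guard in model else f"{model}; {guard}"
        return new_model, f"adds {guard}"

    def evaluate(model, rationale):
        missing = [g for g in REQUIRED if g not in model]
        score = (len(REQUIRED) - len(missing)) / len(REQUIRED)
        return score, f"missing: {missing}"

    def aggregate(prompt, rationale, eval_rationale):
        return f"{prompt} | {rationale} | {eval_rationale}".lstrip(" |")

    return generate, evaluate, aggregate


generate, evaluate, aggregate = make_stubs()
step_rule = lambda best, current: 0.2 if best >= 0.7 else 1.0  # stand-in update rule
best_model, best_score = kegot_search(
    "Cruise -> NightMode", generate, evaluate, aggregate, step_rule
)
```

Keeping generation, evaluation, aggregation, and the factor update as separate callables mirrors the three node types in the text and makes it easy to swap the stubs for calls to a real model.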

Dynamic Control of Exploration and Exploitation: One of the most critical mechanisms of the K-EGoT framework is the use of the score produced by the Evaluation Node to dynamically control the generation Exploration Factor ($\tau$) of subsequent nodes, thereby achieving an intelligent balance between the breadth (exploration) and depth (exploitation) of the search.

High Score → Low Exploration Factor (Exploitation): If an extension plan receives a high score, indicating a credible reasoning path, the framework reduces the exploration factor to encourage more deterministic, high-fidelity refinement along that path.

Low Score → High Exploration Factor (Exploration): If a plan scores poorly, suggesting a flawed path, the framework increases the exploration factor to promote diversity and encourage the model to escape local minima by exploring novel solutions.

This self-adaptive mechanism is governed by an exploration factor update rule that adjusts $\tau$ for the next iteration based on the best score, $s_{best}$, achieved in the current iteration. We model this relationship using a shifted sigmoid function, ensuring smooth transitions between exploration and exploitation, as in Equation (10):

$$\tau_{next} = \tau_{min} + (\tau_{max} - \tau_{min}) \cdot \sigma\left(-\beta \left(s_{best} - s_{th}\right)\right) \quad (10)$$

where $\sigma(\cdot)$ is the logistic sigmoid function; $\beta$ is a scaling factor controlling the sharpness of the transition; $s_{th}$ is a predefined score threshold that demarcates “good” from “poor” solutions; $\tau_{min}$ is the minimum exploration factor, at which the model prioritizes generating schemes based on validated safety logic to reduce randomness; and $\tau_{max}$ is the maximum exploration factor, at which the model generates more diverse candidate solutions to cover potential undiscovered risks.
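The behavior of the update rule can be checked numerically with a short sketch. The form below is one shifted-sigmoid mapping that satisfies the properties stated in the text (high score gives a low factor, low score gives a high factor, smooth transition around the threshold); the default values for the range, threshold, and sharpness are illustrative assumptions and are not the paper’s settings.

```python
import math


def sigmoid(z):
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-z))


def update_exploration_factor(best_score, f_min=0.2, f_max=1.0,
                              threshold=0.7, sharpness=10.0):
    """Map the best score of an iteration to the next exploration factor.

    Scores well above `threshold` push the factor toward f_min (exploitation);
    scores well below it push the factor toward f_max (exploration).
    """
    gate = sigmoid(-sharpness * (best_score - threshold))
    return f_min + (f_max - f_min) * gate


# Factor for a poor, a borderline, and a good score.
factors = [update_exploration_factor(s) for s in (0.3, 0.7, 0.95)]
```

At the threshold the factor sits exactly halfway between the two bounds; a larger sharpness value makes the switch between exploration and exploitation more abrupt.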

5. Discussion

Our research demonstrates that enhancing advanced reasoning frameworks like GoT with a verifiable knowledge base is a highly effective strategy for specialized domains. The results show that a smaller model (7B parameters), when deeply aligned with expert reasoning patterns, can significantly outperform prompting-based approaches on the same hardware. The core insight is that for drone systems, verifying the process of reasoning via the “Safety Rationale” is more crucial than just verifying the final product. This approach turns the opaque reasoning of LLMs into a transparent, auditable trail, a critical step towards building trustworthy AI for safety engineering. Our work provides a new tool for engineers to automate early-stage safety analysis, potentially reducing costs and helping teams find critical design flaws much earlier in the development lifecycle.

The practical value of the K-EGoT framework is mainly reflected in three aspects. First, it addresses the limitation of traditional safety analysis as a post-design verification activity, facilitates the integration of safety considerations from the initial stage of the design blueprint, and can help reduce the likelihood of costly design rework at later stages. Second, the audit trail generated by the Safety Rationale mechanism aligns with the strict transparency requirements of aviation safety certification, supporting the feasibility of AI-assisted safety engineering in the regulated aerospace field. Finally, the success of the framework on a 7B model indicates that professional safety modeling does not necessarily require a large-scale foundation model, which is particularly relevant for engineering teams with limited computing resources. Case studies have shown that the system can automatically identify key safety constraints such as “prohibit climbing during low battery at night” and generate an engineer-verifiable decision basis through structured knowledge referencing.

Construct Validity: A potential threat is the subjectivity of our “gold standard” expert-annotated dataset. To mitigate this, we employed a rigorous curation process involving multiple experts and adjudication. We quantified the reliability of this process by reporting a high inter-rater reliability score (Fleiss’ Kappa = 0.82), which indicates strong agreement among our experts and provides a defense against claims of excessive subjectivity.

Internal Validity: The superior performance of our method could be attributed to implementation details rather than the approach itself. We controlled for this by using the exact same base model (Qwen2-7B) and training configuration for all fine-tuning baselines, ensuring that the only significant variable was the fine-tuning strategy itself.

External Validity: Our study is specific to the domain of high-altitude solar drones. To address this, we propose a concrete Generalization Roadmap rather than making broad claims. We believe the K-EGoT philosophy is transferable. For the autonomous driving domain, for instance, a transfer would involve: (1) curating a knowledge base from standards like ISO 26262 [26]; (2) creating a preference dataset focused on critical decision points (e.g., intersection negotiation scenarios); and (3) fine-tuning an expert model for that domain using our rationale-centric alignment method. This constitutes a core direction for our future work.

Although the current achievements are significant, the research has also revealed several directions that require further investigation. The primary challenge is to develop more refined priority arbitration mechanisms to address implicit, context-dependent priority issues between safety criteria, such as conflicts between energy conservation and task objectives. Second, the framework output needs to be integrated with a formal verification tool chain so that the safety properties of the generated model can be proven mathematically, which is a necessary step for industrial application. In terms of cross-domain generalization, the methodology can be adapted to fields such as autonomous driving, but this requires rebuilding the knowledge base from standards such as ISO 26262 and re-aligning the model to the new domain. From the perspective of system evolution, modeling could be extended from the design phase to runtime safety monitoring, building a safety assurance system that covers the entire lifecycle of unmanned aerial vehicles. Finally, developing effective human-machine collaboration interfaces, in which the generated evidence efficiently supports engineers in reviewing and refining AI-generated models, will be important for achieving human-machine collaborative safety engineering.