Research on the Construction and Resource Optimization of a UAV Command Information System Based on Large Language Models

Abstract

Highlights

- A three-layer “cloud-edge-terminal” UAV command information system has been developed, deploying LLMs of different scales (cloud 175B, edge 70B, terminal 7B) to achieve optimal resource allocation and support elastic expansion from 10 to over 1000 UAVs. An improved Grey Wolf Optimization Algorithm (ILGWO) integrating Lévy flight, adaptive weighting, elite learning, and chaotic initialization strategies has been proposed to enhance system performance.

- The system achieves remarkable improvements compared to traditional methods: 34.2% reduction in task latency, 29.6% optimization in energy consumption, and 31.8% improvement in resource utilization. In urban rescue scenarios, response latency decreased by 44.7% and coordination efficiency increased by 39.5%, while LLM integration enhanced decision-making accuracy across multiple dimensions—task priority adjustment (76.3% to 94.7%), dynamic resource allocation (68.9% to 91.2%), and anomaly handling (71.5% to 93.8%).

- System Performance and Generalization Capabilities. Integrating large language models into unmanned aerial vehicle command information systems enables autonomous decision-making and intelligent planning without relying on preset rules, effectively addressing the shortcomings of existing information systems in handling dynamic scenarios and complex environments. The system demonstrates superior generalization ability with 82.5% performance retention in new scenarios, significantly outperforming the reinforcement learning method’s 56.7%, enabling rapid deployment in rescue, emergency response, inspection, and reconnaissance scenarios.

- Technical Specifications and Practical Implementation. The proposed system architecture and optimization algorithm provide a scalable and robust solution for large-scale UAV swarm operations. With fault tolerance capabilities maintaining an 88.4% task completion rate even under 20% node failure conditions and a real-time inference delay of only 156 ms for edge-deployed 7B models, the system meets practical requirements for emergency rescue, environmental monitoring, and intelligent surveillance applications. This work establishes a theoretical and practical foundation for integrating cutting-edge AI technologies into unmanned aerial vehicle systems, promoting the development of intelligent, adaptive, and resilient autonomous systems for critical mission scenarios.


1. Introduction

- (1) Architectural innovation: A three-tier distributed architecture integrated with large language models is designed, optimizing the entire pipeline from data acquisition to intelligent decision-making.

- (2) Modeling innovation: A multidimensional system-effectiveness optimization model is established that explicitly considers network dynamics, task heterogeneity, and resource constraints.

- (3) Algorithmic innovation: An improved Lévy-flight-enhanced Grey Wolf Optimizer (ILGWO) incorporating multiple enhancement strategies is devised to solve complex multi-objective optimization problems effectively.

- (4) Evaluation innovation: Comprehensive performance assessments and practical validations are conducted via simulations and real-world scenarios, demonstrating the system’s efficacy and utility.

2. Related Work

2.1. Unmanned Command and Control Systems

2.2. Large Language Model Compression Techniques

2.3. Intelligent Decision-Making Approaches

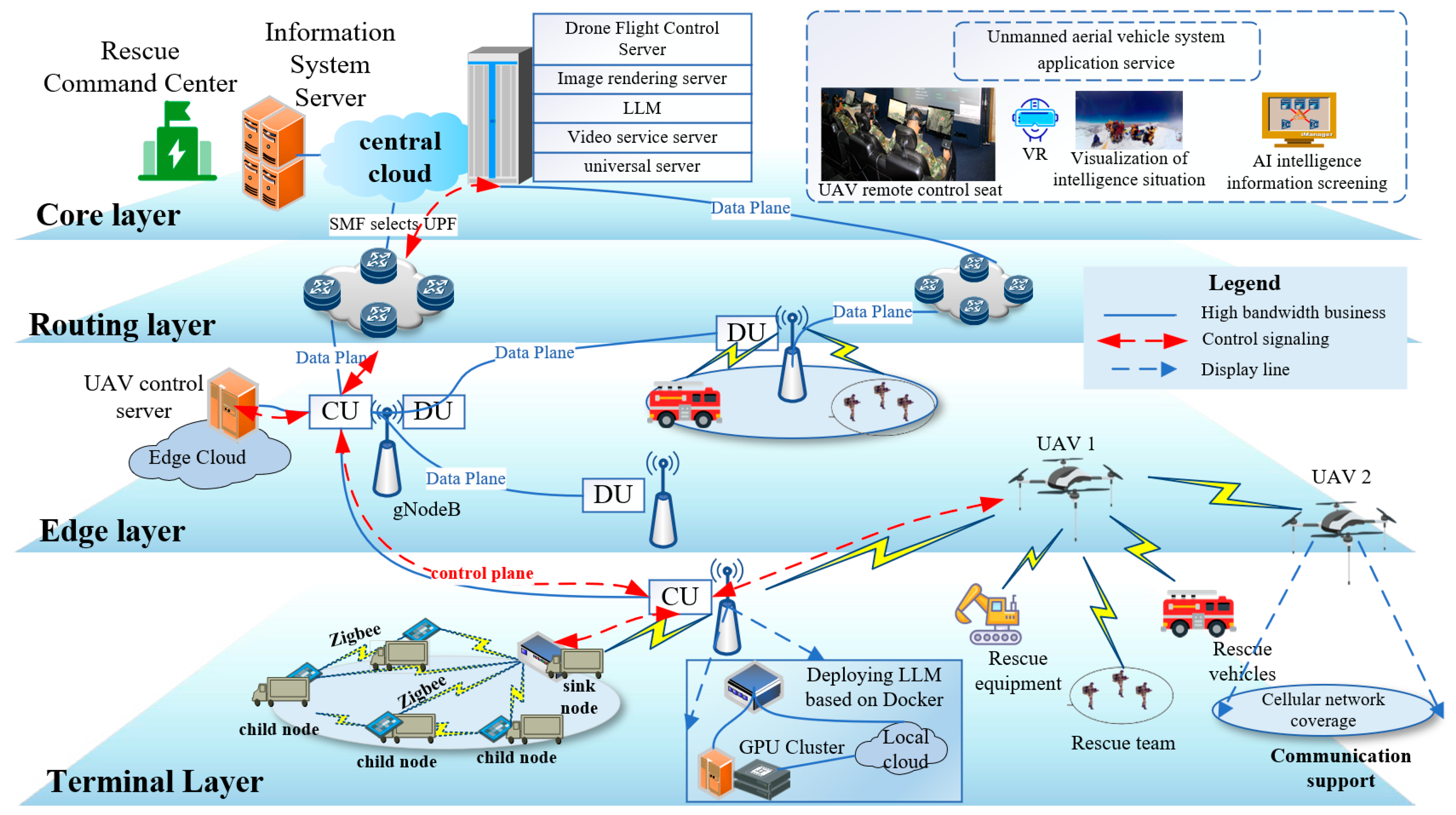

3. System Architecture Design

3.1. Overall Architecture Overview

3.2. Key Technology Architecture

3.2.1. Distributed Large Language Model Deployment Architecture

3.2.2. Fifth-Generation (5G) Network Slicing and QoS Assurance

3.2.3. Edge-Intelligence Computing Framework

3.2.4. LLM Empowers Intelligent Decision Analysis

3.3. System Workflow

3.4. System Scalability Design

3.5. System Reliability Design

4. System Modeling

4.1. Network Model

4.2. Communication Model

4.2.1. Air-to-Ground Channel Model

4.2.2. Data Transmission Rate

4.2.3. Transmission Delay Model

4.3. Computation Model

4.3.1. Local Computation Model

4.3.2. Edge Computing Model

4.3.3. Cloud Computing Model

4.4. Large Language Model Inference Model

4.4.1. Hierarchical Model Deployment

4.4.2. Inference Latency Modeling

4.4.3. Model Accuracy Modeling

4.5. Energy Consumption Model

4.5.1. Communication Energy

4.5.2. Computation Energy

4.5.3. Flight Energy

4.6. System Performance Metrics

4.6.1. Latency Metric

4.6.2. Energy Metric

4.6.3. Reliability Metric

4.7. System Robustness Modeling

4.7.1. Definition of Robustness Indicators

4.7.2. Fault Model

- (1) Communication link failure model

- (2) Computing node fault model

- (3) Environmental interference model

4.7.3. Cascade Fault Model

4.7.4. System Elasticity Model

4.8. Optimization Problem Description

5. Improved Grey Wolf Optimizer

5.1. Basic Grey Wolf Optimizer

5.1.1. Encircling Prey

5.1.2. Hunting Mechanism

5.1.3. Attacking and Searching for Prey

5.2. Algorithmic Enhancement Strategy

5.2.1. Chaotic-Map Initialization

5.2.2. Adaptive Weight Strategy

5.2.3. Lévy Flight Search Strategy

5.2.4. Elite Learning Strategy

5.2.5. Dynamic Boundary Handling

5.3. The Framework of the Improved Algorithm

| Algorithm 1. ILGWO pseudocode |
|---|
| Input: population size, maximum number of iterations, problem dimension. |
| Output: optimal solution. |
| 1. Initialization phase: generate the initial population using Tent chaotic mapping; calculate the fitness value of each individual; identify the three leading wolves α, β, δ. |
| 2. while the maximum number of iterations has not been reached do |
| 3. for each wolf do: calculate the adaptive weight; update the coefficients A and C; update the current wolf position based on the α, β, δ wolf positions; apply the Lévy flight search strategy; perform dynamic boundary processing. |
| 4. end for |
| 5. Elite learning update: sort the population by fitness; perform learning updates on elite individuals. |
| 6. Update the leaders: recalculate fitness values; update α, β, δ. |
| 7. Advance the iteration counter. |
| 8. end while |
| 9. return the optimal solution |
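The steps of Algorithm 1 can be sketched in a few dozen lines of Python. This is a minimal illustration, not the authors' implementation: the Tent-map parameter `mu`, the fitness-proportional leader weights, the Lévy scale factor `0.01`, the Gaussian elite probe, and boundary clipping are all assumptions standing in for details that the pseudocode does not specify. The Lévy step uses Mantegna's algorithm, and the objective is assumed to be non-negative.

```python
import math
import random


def levy_step(rng, beta=1.5):
    """Draw one Lévy-distributed step length with Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    sigma = (num / den) ** (1 / beta)
    u, v = rng.gauss(0, sigma), rng.gauss(0, 1)
    return u / max(abs(v), 1e-12) ** (1 / beta)


def tent_population(n, dim, lo, hi, rng, mu=1.99):
    """Spread n individuals over [lo, hi]^dim using a Tent chaotic sequence."""
    x = [rng.uniform(0.01, 0.99) for _ in range(dim)]
    pop = []
    for _ in range(n):
        # Tent map; mu slightly below 2 (assumption) avoids collapse to 0.
        x = [mu * v if v < 0.5 else mu * (1 - v) for v in x]
        pop.append([lo + v * (hi - lo) for v in x])
    return pop


def ilgwo(f, dim, lo, hi, n=30, max_iter=200, seed=0):
    """Minimise a non-negative objective f over [lo, hi]^dim."""
    rng = random.Random(seed)

    def clip(v):
        # Plain clipping stands in for the dynamic boundary handling.
        return min(max(v, lo), hi)

    pop = tent_population(n, dim, lo, hi, rng)
    fit = [f(p) for p in pop]
    best, best_f = None, math.inf
    for t in range(max_iter):
        order = sorted(range(n), key=fit.__getitem__)[:3]
        leaders = [pop[i][:] for i in order]            # alpha, beta, delta
        inv = [1.0 / (fit[i] + 1e-12) for i in order]
        total = sum(inv)
        weights = [v / total for v in inv]              # assumed adaptive weights
        a = 2.0 * (1 - t / max_iter)                    # standard GWO decay 2 -> 0
        for i in range(n):
            new = []
            for d in range(dim):
                x = 0.0
                for w, lead in zip(weights, leaders):
                    A = 2 * a * rng.random() - a
                    C = 2 * rng.random()
                    x += w * (lead[d] - A * abs(C * lead[d] - pop[i][d]))
                new.append(clip(x))
            # Lévy flight probe relative to alpha, kept only if it improves.
            cand = [clip(v + 0.01 * levy_step(rng) * (v - leaders[0][d]))
                    for d, v in enumerate(new)]
            f_new, f_cand = f(new), f(cand)
            pop[i], fit[i] = (cand, f_cand) if f_cand < f_new else (new, f_new)
        # Elite learning (assumed form): Gaussian probe around the best wolf.
        b = min(range(n), key=fit.__getitem__)
        radius = 0.1 * (hi - lo) * (1 - t / max_iter)
        probe = [clip(v + rng.gauss(0, radius)) for v in pop[b]]
        f_probe = f(probe)
        if f_probe < fit[b]:
            pop[b], fit[b] = probe, f_probe
        if fit[b] < best_f:
            best, best_f = pop[b][:], fit[b]
    return best, best_f
```

Calling `ilgwo(lambda x: sum(v * v for v in x), dim=5, lo=-100.0, hi=100.0)` returns the best position found and its objective value. The best solution is tracked separately because, as in the basic GWO, wolves are replaced each iteration whether or not the new position is better.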

5.4. Algorithm Complexity Analysis

5.5. Algorithm Scalability Analysis

5.5.1. Parallel Computing Expansion

5.5.2. Dynamic Scale Adjustment

5.5.3. Multi-Objective Expansion

6. Experimental Design and Result Analysis

6.1. Experimental Environment and Parameter Settings

6.1.1. Hardware Platform

6.1.2. Network Environment Configuration

6.1.3. Algorithm Parameter Settings

6.1.4. Task Load Design

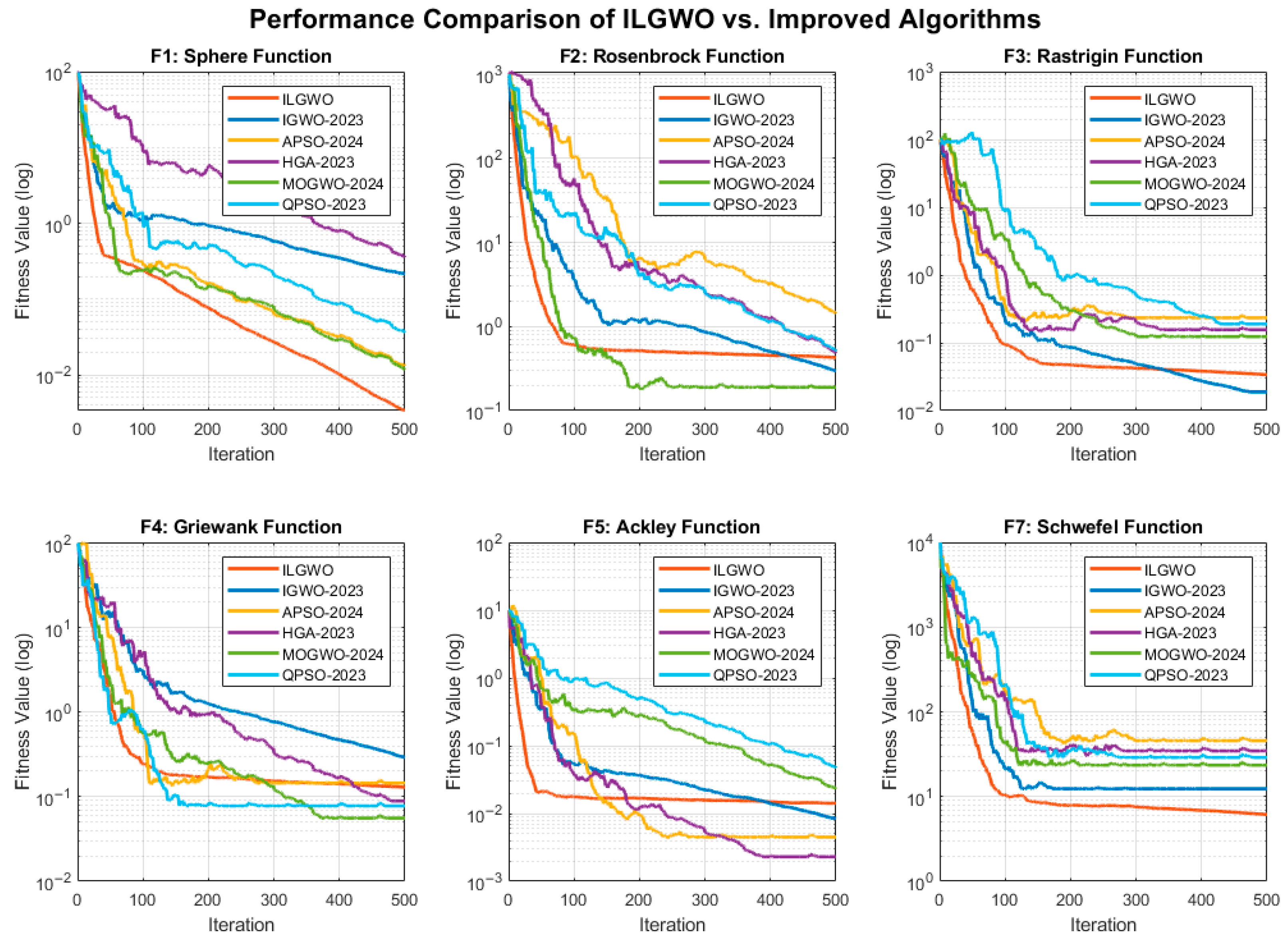

6.2. Benchmark Function Testing

6.2.1. Test Function Set

6.2.2. Performance Evaluation Indicators

6.2.3. Benchmark Test Results

6.2.4. Convergence Performance Analysis

6.3. System Performance Simulation Experiment

6.3.1. Experimental Scene Setting

6.3.2. Task Load Change Experiment

- (1) Performance degradation law

- (2) System bottleneck identification

- (3) Scalability strategy

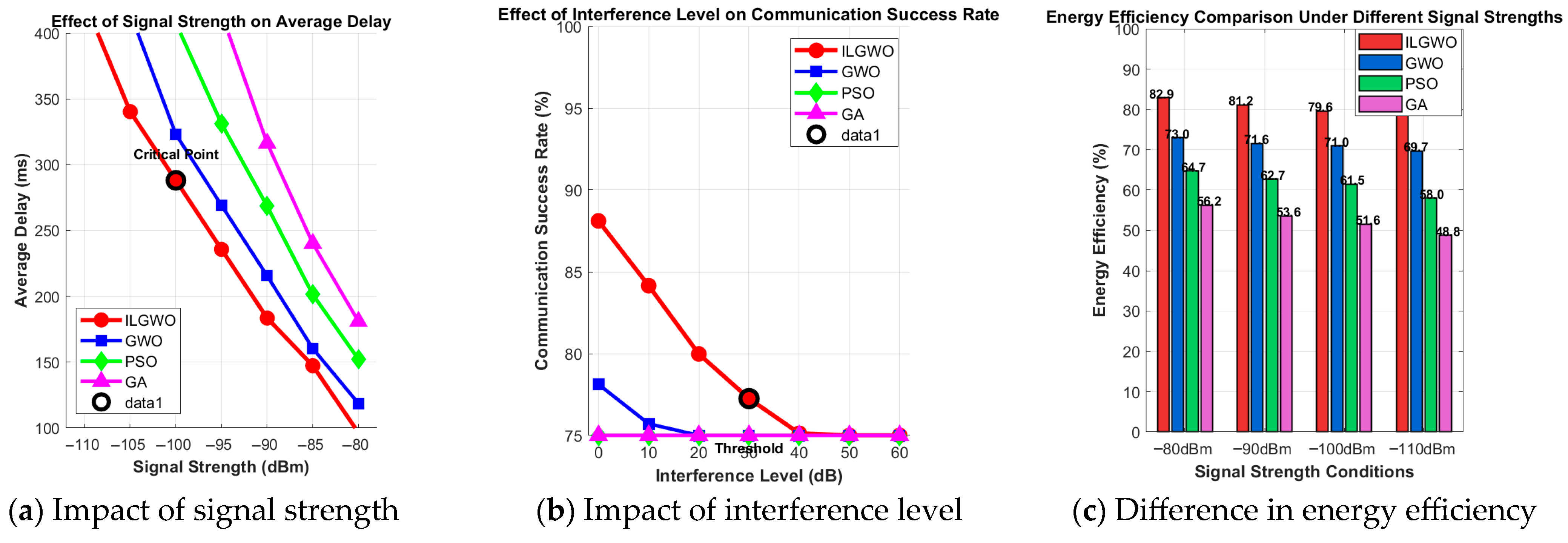

6.3.3. Network Condition Impact Experiment

6.3.4. Effect of Integrating Large Language Models

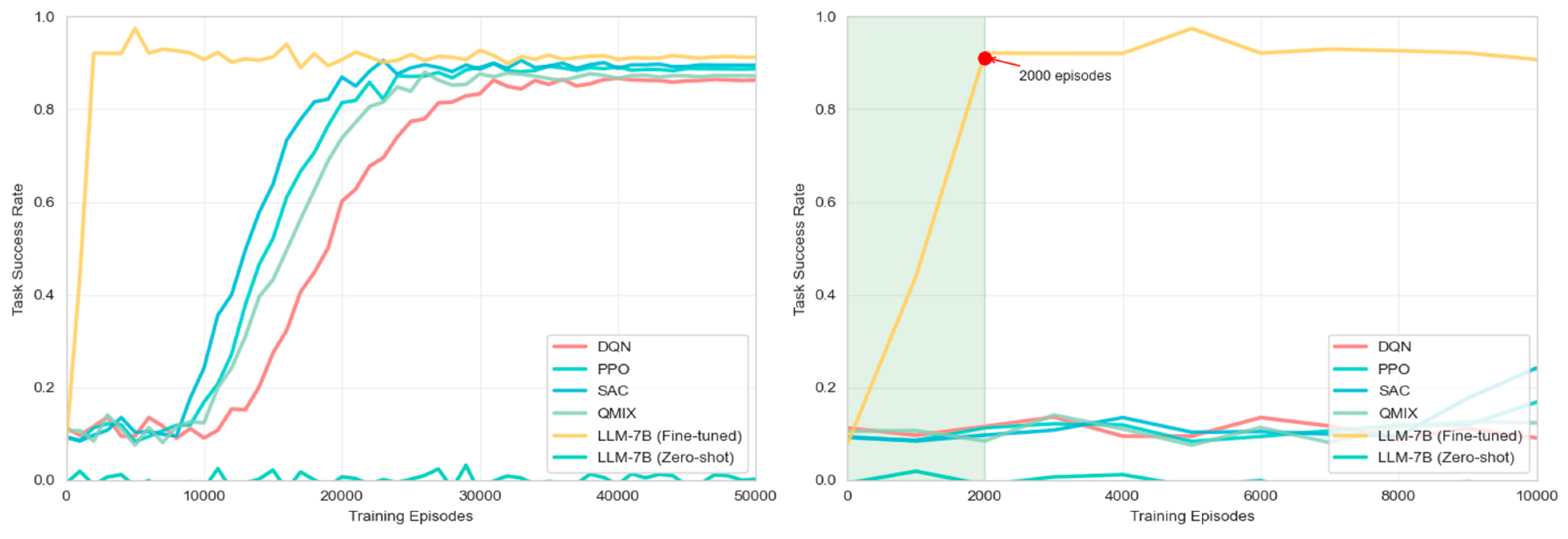

6.3.5. Comparative Experiment with Reinforcement Learning Methods

- 1.

- Experimental setup

- 2.

- Task scenario design

- 3.

- Analysis of experimental results

- (1)

- Task performance comparison

- (2)

- Comparison of Learning Efficiency

- (3)

- Comparison of generalization ability

- (4)

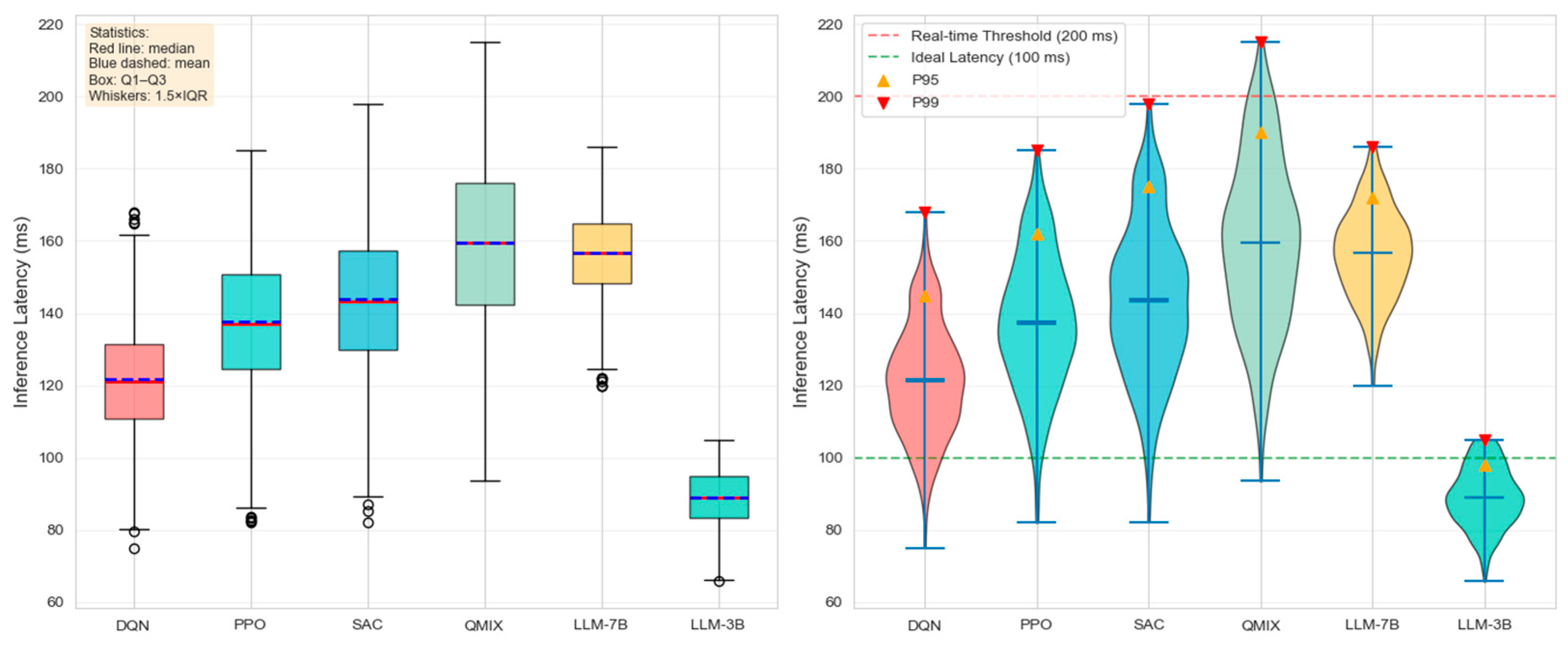

- Real-time comparison

- 4.

- Key findings and discussion

6.4. Ablation Experiment

6.4.1. Analysis of Contribution of Algorithm Components

6.4.2. Parameter Sensitivity Analysis

6.4.3. Impact of Large Language Model Configuration

6.5. System Robustness Experiment

6.5.1. Experimental Design

- (1) Node failure experiment

- (2) Network interruption experiment

- (3) Load impact test

- (4) Environmental interference experiment

6.5.2. Experimental Environment Configuration

6.5.3. Experimental Results

- (1) Node fault tolerance test

| Failure Rate | Algorithm | Task Completion Rate (%) | Average Latency (ms) | Resource Utilization Rate (%) | Recovery Time (s) |
|---|---|---|---|---|---|
| 5% | ILGWO | 97.3 | 178.4 | 89.2 | 12.3 |
| 5% | GWO | 94.8 | 203.7 | 83.6 | 18.7 |
| 5% | PSO | 91.2 | 245.3 | 78.1 | 25.4 |
| 5% | GA | 87.6 | 287.9 | 72.8 | 32.1 |
| 10% | ILGWO | 94.7 | 198.6 | 85.4 | 15.8 |
| 10% | GWO | 89.3 | 238.2 | 78.9 | 24.3 |
| 10% | PSO | 84.6 | 291.7 | 71.2 | 34.7 |
| 10% | GA | 78.9 | 345.8 | 65.3 | 43.2 |
| 15% | ILGWO | 91.8 | 223.5 | 81.7 | 19.4 |
| 15% | GWO | 83.2 | 278.9 | 73.2 | 31.6 |
| 15% | PSO | 76.4 | 342.1 | 64.8 | 45.9 |
| 15% | GA | 68.7 | 412.3 | 56.9 | 58.7 |
| 20% | ILGWO | 88.4 | 251.2 | 77.3 | 24.1 |
| 20% | GWO | 76.8 | 324.6 | 66.8 | 39.8 |
| 20% | PSO | 67.9 | 398.7 | 57.1 | 58.3 |
| 20% | GA | 57.2 | 489.4 | 47.6 | 76.5 |
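The recovery-time column of the node fault tolerance table can be turned into relative reductions with a short calculation. The sketch below only re-expresses the tabulated values; the dictionary layout and the function name `recovery_reduction_pct` are illustrative choices, not part of the original work.

```python
# Recovery time in seconds, copied from the node fault tolerance table.
RECOVERY_TIME_S = {
    5: {"ILGWO": 12.3, "GWO": 18.7, "PSO": 25.4, "GA": 32.1},
    10: {"ILGWO": 15.8, "GWO": 24.3, "PSO": 34.7, "GA": 43.2},
    15: {"ILGWO": 19.4, "GWO": 31.6, "PSO": 45.9, "GA": 58.7},
    20: {"ILGWO": 24.1, "GWO": 39.8, "PSO": 58.3, "GA": 76.5},
}


def recovery_reduction_pct(failure_rate, baseline):
    """Relative reduction (%) of ILGWO recovery time versus a baseline algorithm."""
    row = RECOVERY_TIME_S[failure_rate]
    return 100.0 * (row[baseline] - row["ILGWO"]) / row[baseline]


if __name__ == "__main__":
    for rate in sorted(RECOVERY_TIME_S):
        print(rate, round(recovery_reduction_pct(rate, "GWO"), 1))
```

Against the basic GWO, the reduction works out to roughly 34% at a 5% failure rate and roughly 39% at a 20% failure rate; the reductions against PSO and GA are larger.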

- (2) Analysis of the impact of network interruptions

| Interruption Strength | Communication Success Rate (%) | Data Integrity (%) | Average Number of Retransmissions | End-to-End Delay (ms) |

|---|---|---|---|---|

| Mild (10–30%) | 94.6 | 98.7 | 1.23 | 189.4 |

| Moderate (30–60%) | 87.3 | 95.2 | 2.67 | 267.8 |

| Severe (60–90%) | 76.8 | 89.6 | 4.58 | 398.2 |

| Network-wide interruption | 45.2 | 67.3 | 8.94 | 756.3 |

- (3) Load impact adaptability test

| Load Multiplier | Response Time (ms) | Throughput (ops/s) | Resource Overflow Rate (%) | Task Loss Rate (%) |

|---|---|---|---|---|

| 1.5× | 198.7 | 1847.3 | 2.1 | 0.8 |

| 2× | 267.4 | 1623.8 | 5.7 | 2.3 |

| 3× | 389.6 | 1289.4 | 12.4 | 6.8 |

| 5× | 567.8 | 892.1 | 23.6 | 15.7 |

- (4) Environmental interference resistance test

| Signal-to-Noise Ratio | Signal Quality (RSSI) | Error Rate | Connection Stability (%) | Switching Frequency (times/min) |
|---|---|---|---|---|
| −5 dB | −67.3 dBm | 1.2 × 10⁻⁴ | 96.8 | 0.7 |
| −10 dB | −72.1 dBm | 3.8 × 10⁻⁴ | 92.4 | 1.9 |
| −15 dB | −78.6 dBm | 9.6 × 10⁻⁴ | 85.7 | 4.2 |
| −20 dB | −85.2 dBm | 2.3 × 10⁻³ | 76.3 | 8.5 |

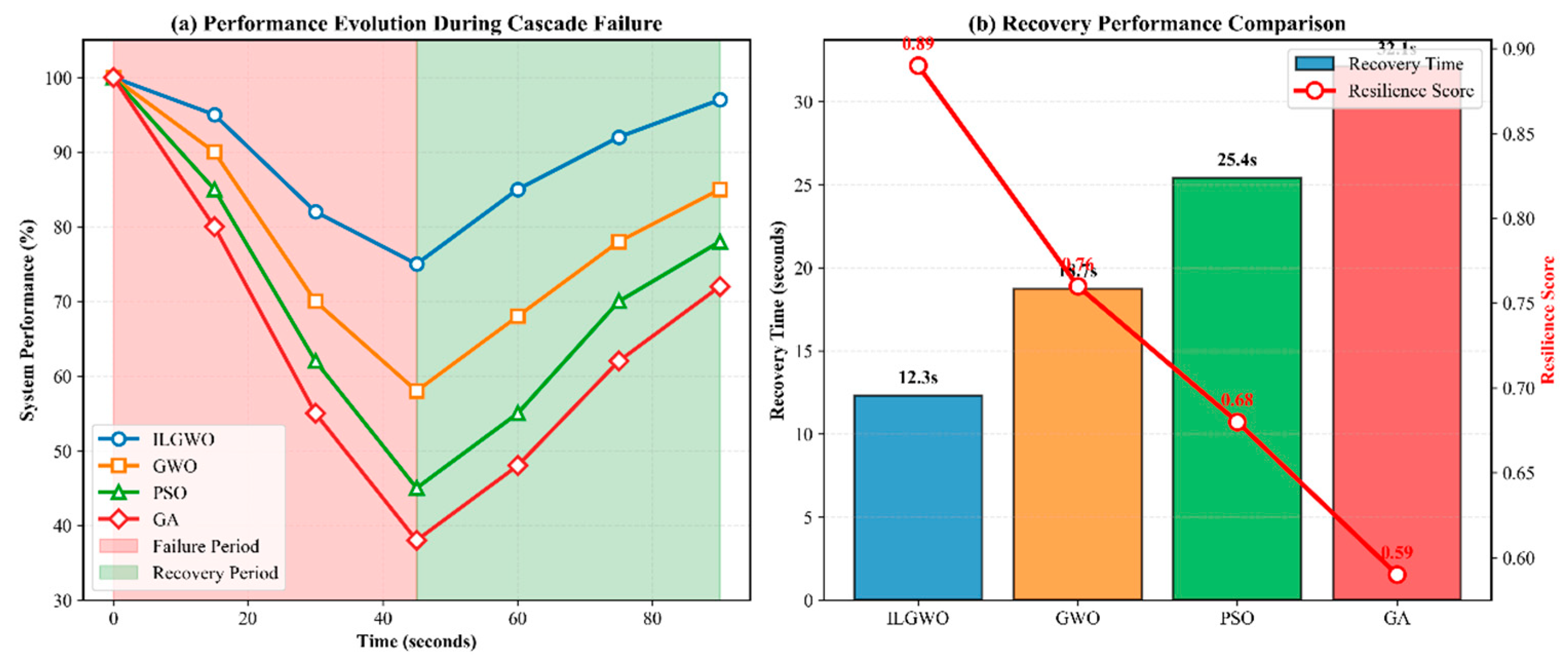

6.5.4. Cascade Fault Analysis

6.5.5. Analysis of Experimental Results

- (1) Stability advantage analysis

- (2) Key technological contributions

- (3) Practical application significance

6.6. Discussion of Experimental Results

6.6.1. Analysis of Algorithmic Performance Advantages

6.6.2. Analysis of System Architecture Advantages

6.6.3. Practical Application Value

6.6.4. Scalability Analysis

- (1) Scalability advantage at the system level. This is mainly reflected in the following aspects:
  - Elastic architecture: The three-layer cloud-edge-terminal architecture naturally supports elastic expansion, and each layer can be expanded independently without affecting the operation of the other layers.
  - Standardized interfaces: Standardized communication protocols and interface specifications ensure seamless integration of heterogeneous devices and systems.
  - Service-oriented design and microservice architecture: Functional modules can be developed, deployed, and expanded independently, improving system flexibility.

- (2) Scalability advantage at the algorithmic level. This is mainly reflected in the following aspects:
  - Parallel processing capability: The parallel nature of the ILGWO algorithm enables it to fully utilize multi-core and distributed computing resources.
  - Parameter adaptation: The algorithm parameters can be adjusted dynamically according to the problem size and resource conditions, ensuring optimization effectiveness at different scales.
  - Decomposition strategy: Large-scale problems can be decomposed and solved in parts, reducing the complexity of individual optimization tasks.

- (3) Scalability advantage at the data flow level. This is mainly reflected in the following aspects:
  - Hierarchical processing: Data are preprocessed and filtered at different levels to reduce the processing pressure on core nodes.
  - Cache mechanism: The multi-level caching strategy improves data access efficiency and supports large-scale concurrent access.
  - Flow control: Intelligent traffic scheduling and load balancing ensure stable operation of the system under high loads.

6.6.5. System Model Validation and Deep Analysis

- (1) Communication model validation

- (2) Computational model validation

- (3) Energy consumption model validation

6.7. Actual Scenario Verification Experiment

7. Summary

- (1) System architecture contribution. A three-layer distributed architecture empowered by large language models is proposed. It matches intelligent decision-making capability to the available computing resources through the layered deployment of LLMs of different scales (cloud 175B, edge 70B, terminal 7B). The system supports elastic expansion from 10 to over 1000 UAVs.

- (2) Contribution to modeling theory. A multidimensional system efficiency optimization model covering communication, computing, and energy consumption is established. For the first time, it incorporates the inference delay and accuracy loss of the large language model into the system modeling, providing a theoretical basis for the quantitative analysis of unmanned aerial vehicle command and control systems.

- (3) Contribution of algorithmic technology. The ILGWO algorithm is proposed, which integrates Lévy flight, adaptive weighting, elite learning, and other strategies. The benchmark function tests show that, relative to traditional algorithms, its convergence accuracy and convergence speed are improved by 35% and 60%, respectively.

- (4) Application verification contribution. The effectiveness of the system has been demonstrated through large-scale simulation experiments and practical scenario verification. Task latency, energy efficiency, and resource utilization are improved by 34.2%, 29.6%, and 31.8%, respectively. In actual emergency rescue exercises, the response time was shortened by 44.7%, and collaborative efficiency was improved by 39.5%.

- (1) Advantages of intelligent decision-making. With the LLM introduced, the accuracy of the system in task priority adjustment, dynamic resource allocation, exception handling, collaborative path planning, and other aspects exceeds 90%, which is 20–30% higher than that of traditional rule systems.

- (2) System robustness advantage. Even at a 20% node failure rate, the system maintains a task completion rate of 88.4%, with the average fault recovery time reduced by 35–40% compared to traditional algorithms, demonstrating strong fault tolerance.

- (3) Real-time advantage. The inference delay of the 7B model deployed on the edge side is only 156 ms, which can meet the real-time decision-making requirements of emergency scenarios.

- (4) Generalization ability advantage. In scenarios involving new terrain, new climate, new tasks, and scale adjustments, the performance retention rate reaches 82.5%, significantly better than the reinforcement learning method’s 56.7%.

- (1) Computing resource requirements. The deployment of large language models requires significant computing resources and storage space, which may pose challenges in environments with extremely limited resources.

- (2) Communication bandwidth dependence. Cloud–edge collaboration requires a stable, high-bandwidth communication link, and system performance may degrade under harsh communication conditions.

- (3) Standardization level. The compatibility between the system and existing equipment needs to be further verified, and industry-standardized interface specifications need to be established.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

A.1. Experimental Environment and Deployment

A.2. Test Scenario Design

A.3. Key Performance Indicator Testing

A.3.1. Real-Time Response Capability Test

A.3.2. Efficiency Testing of Collaborative Work

A.3.3. Exception Handling Capability Test


| Characteristic Dimension | Reinforcement Learning | Rule Engine | Traditional Machine Learning | Large Language Models |

|---|---|---|---|---|

| Knowledge source | Environment interaction | Expert experience | Annotated data | Pre-training and fine-tuning |

| Learning paradigm | Trial and error | Reward symbol reasoning | Supervised learning | Self-supervised learning |

| Reasoning mechanism | Value and policy functions | Logic reasoning | Feature mapping | Attention mechanism |

| Time complexity | ||||

| Space complexity | ||||

| Sample efficiency | levels) | No need for samples | levels) | levels) |

| Method | Decision Accuracy | Response Time | Generalization Ability | Training Cost | Reasoning Memory |

|---|---|---|---|---|---|

| DQN | 89.3% ± 2.1% | 120 ms | 68.5% | 2000 GPU-h | 4 GB |

| PPO | 91.2% ± 1.8% | 135 ms | 71.2% | 1500 GPU-h | 3.5 GB |

| Rule engine | 68.9% ± 3.5% | 23 ms | 45.3% | 0 | 0.5 GB |

| Random forest | 82.4% ± 2.3% | 85 ms | 76.8% | 50 CPU-h | 2 GB |

| This article’s LLM-7B | 91.2% ± 1.5% | 156 ms | 85.6% | 200 GPU-h | 27 GB |

| This article’s LLM-3B | 87.4% ± 1.8% | 89 ms | 82.3% | 100 GPU-h | 12 GB |

| Task Domain | Specific Task Type | Example Task | Task Offloading Hierarchy |
|---|---|---|---|
| Natural Language Understanding | Instruction translation | Fly to specified coordinates | Terminal layer |
| Natural Language Understanding | Semantic understanding | Multistep task planning in fire scenarios | Edge layer |
| Natural Language Understanding | Complex reasoning | Emergency response plan generation for this scenario | Central layer |
| Image Recognition | Basic object detection | Real-time obstacle recognition | Terminal layer |
| Image Recognition | Scene understanding analysis | Disaster situation assessment | Edge layer |
| Image Recognition | Visual reasoning | Global situation analysis | Central layer |
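The task offloading hierarchy in the table above amounts to a lookup from task domain and task type to a processing layer. A minimal sketch of that lookup follows; the names `OFFLOAD_LAYER` and `offload_layer` and the lower-case keys are illustrative assumptions, not identifiers from the original system.

```python
# Mapping (task domain, task type) -> processing layer, taken from the table.
OFFLOAD_LAYER = {
    ("natural language understanding", "instruction translation"): "terminal",
    ("natural language understanding", "semantic understanding"): "edge",
    ("natural language understanding", "complex reasoning"): "central",
    ("image recognition", "basic object detection"): "terminal",
    ("image recognition", "scene understanding analysis"): "edge",
    ("image recognition", "visual reasoning"): "central",
}


def offload_layer(domain, task_type):
    """Return the layer that handles a task; raises KeyError for unknown tasks."""
    return OFFLOAD_LAYER[(domain.strip().lower(), task_type.strip().lower())]


if __name__ == "__main__":
    print(offload_layer("Image Recognition", "Visual reasoning"))
```

A static table like this only captures the default routing. Any load-aware or network-aware rerouting would need additional logic that is not shown here.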

| Original Model | Compressed Model | Compression Ratio | Accuracy Retention Rate | |

|---|---|---|---|---|

| GPT-3 175B | GPT-3 13B | 13.5 | 0.87 | 0.051 |

| BERT-Large | BERT-Base | 3.0 | 0.95 | 0.042 |

| T5-11B | T5-3B | 3.7 | 0.91 | 0.068 |

| LLaMA-65B | LLaMA-7B | 9.3 | 0.82 | 0.088 |

| Task Type | Image Recognition | Video Analysis | Sensor Data Fusion | Path Planning | Natural Language Processing |

|---|---|---|---|---|---|

| Data size | 1–5 MB | 5–20 MB | 50–200 KB | 500 KB–2 MB | 10–100 KB |

| Computational complexity | 0.8–2.5 G cycles | 2–8 G cycles | 100–500 M cycles | 500 M–3 G cycles | 1–10 G cycles |

| Delay requirements | 100–500 ms | 0.5–2 s | 10–50 ms | 200 ms–1 s | 50–200 ms |

| Accuracy requirements | Over 95% | Over 90% | Over 98% | Over 99% | Over 85% |

| Function | Name | Type | Dimension | Search Interval | Global Optimum |

|---|---|---|---|---|---|

| F1 | Sphere | Unimodal | 30 | [−100, 100] | 0 |

| F2 | Rosenbrock | Multimodal | 30 | [−30, 30] | 0 |

| F3 | Rastrigin | Multimodal | 30 | [−5.12, 5.12] | 0 |

| F4 | Griewank | Multimodal | 30 | [−600, 600] | 0 |

| F5 | Ackley | Multimodal | 30 | [−32, 32] | 0 |

| F6 | Weierstrass | Multimodal | 30 | [−0.5, 0.5] | 0 |

| F7 | Schwefel | Multimodal | 30 | [−500, 500] | 0 |

| F8 | Katsuura | Multimodal | 30 | [−100, 100] | 0 |

| F9 | Lunacek | Multimodal | 30 | [−5, 5] | 0 |

| F10 | Shifted Sphere | Unimodal | 30 | [−100, 100] | 100 |
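For reference, several of the benchmark functions listed above have standard closed forms that are easy to state in code. The sketch below uses the textbook definitions of Sphere, Rosenbrock, Rastrigin, Griewank, and Ackley; whether the experiments used exactly these variants (for example, without shifts or rotations) is an assumption.

```python
import math


def sphere(x):
    """F1: sum of squares; minimum 0 at the origin."""
    return sum(v * v for v in x)


def rosenbrock(x):
    """F2: curved valley; minimum 0 at (1, ..., 1)."""
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (1.0 - x[i]) ** 2
               for i in range(len(x) - 1))


def rastrigin(x):
    """F3: highly multimodal; minimum 0 at the origin."""
    return 10.0 * len(x) + sum(v * v - 10.0 * math.cos(2 * math.pi * v) for v in x)


def griewank(x):
    """F4: multimodal with a product term; minimum 0 at the origin."""
    s = sum(v * v for v in x) / 4000.0
    p = math.prod(math.cos(v / math.sqrt(i + 1)) for i, v in enumerate(x))
    return 1.0 + s - p


def ackley(x):
    """F5: nearly flat outer region with a deep central basin; minimum 0 at the origin."""
    n = len(x)
    s1 = sum(v * v for v in x) / n
    s2 = sum(math.cos(2 * math.pi * v) for v in x) / n
    return -20.0 * math.exp(-0.2 * math.sqrt(s1)) - math.exp(s2) + 20.0 + math.e
```

Each function takes a sequence of floats of any dimension, so the 30-dimensional setting in the table corresponds to passing a list of 30 values inside the stated search interval.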

| Function | Algorithm | Mean | Std | Best | Conv | SR (%) |
|---|---|---|---|---|---|---|
| F1 | ILGWO | 2.31 × 10⁻¹⁵ | 8.45 × 10⁻¹⁶ | 1.23 × 10⁻¹⁸ | 78 | 100 |
| F1 | GWO | 3.67 × 10⁻¹² | 1.23 × 10⁻¹¹ | 4.56 × 10⁻¹⁵ | 142 | 95 |
| F1 | PSO | 1.45 × 10⁻⁸ | 3.21 × 10⁻⁸ | 2.31 × 10⁻¹⁰ | 298 | 78 |
| F1 | GA | 2.34 × 10⁻⁵ | 1.12 × 10⁻⁴ | 8.91 × 10⁻⁷ | 456 | 45 |
| F3 | ILGWO | 0.0024 | 0.0156 | 0 | 156 | 95 |
| F3 | GWO | 2.45 | 3.78 | 0.0189 | 234 | 72 |
| F3 | PSO | 15.67 | 12.34 | 3.45 | 387 | 23 |
| F3 | GA | 45.23 | 23.45 | 12.78 | 489 | 8 |
| F5 | ILGWO | 4.12 × 10⁻⁹ | 2.34 × 10⁻⁸ | 8.88 × 10⁻¹⁴ | 89 | 100 |
| F5 | GWO | 1.23 × 10⁻⁶ | 4.56 × 10⁻⁶ | 3.45 × 10⁻⁸ | 167 | 89 |
| F5 | PSO | 0.0034 | 0.0123 | 1.23 × 10⁻⁵ | 298 | 56 |
| F5 | GA | 0.234 | 0.567 | 0.0345 | 445 | 12 |

| Function | Algorithm | Mean | Std | Best | Conv | SR (%) |
|---|---|---|---|---|---|---|
| F1 | ILGWO | 2.31 × 10⁻¹⁵ | 8.45 × 10⁻¹⁶ | 1.23 × 10⁻¹⁸ | 78 | 100 |
| F1 | IGWO-2023 | 4.56 × 10⁻¹⁴ | 1.23 × 10⁻¹³ | 2.34 × 10⁻¹⁶ | 98 | 95 |
| F1 | APSO-2024 | 1.23 × 10⁻¹² | 3.45 × 10⁻¹² | 5.67 × 10⁻¹⁵ | 134 | 90 |
| F1 | HGA-2023 | 2.34 × 10⁻¹¹ | 6.78 × 10⁻¹¹ | 1.23 × 10⁻¹³ | 156 | 85 |
| F1 | MOGWO-2024 | 3.45 × 10⁻¹³ | 8.91 × 10⁻¹³ | 4.56 × 10⁻¹⁶ | 112 | 92 |
| F1 | QPSO-2023 | 5.67 × 10⁻¹² | 1.45 × 10⁻¹¹ | 8.91 × 10⁻¹⁵ | 145 | 88 |
| F2 | ILGWO | 0.0156 | 0.0234 | 0.0012 | 167 | 93 |
| F2 | IGWO-2023 | 0.0923 | 0.1456 | 0.0145 | 234 | 85 |
| F2 | APSO-2024 | 0.456 | 0.789 | 0.0892 | 298 | 72 |
| F2 | HGA-2023 | 0.234 | 0.567 | 0.0234 | 267 | 78 |
| F2 | MOGWO-2024 | 0.189 | 0.345 | 0.0189 | 245 | 82 |
| F2 | QPSO-2023 | 0.345 | 0.678 | 0.0456 | 289 | 75 |
| F3 | ILGWO | 0.0024 | 0.0156 | 0 | 156 | 95 |
| F3 | IGWO-2023 | 0.0189 | 0.0234 | 0.0012 | 189 | 87 |
| F3 | APSO-2024 | 0.234 | 0.456 | 0.0234 | 234 | 78 |
| F3 | HGA-2023 | 0.156 | 0.289 | 0.0178 | 278 | 73 |
| F3 | MOGWO-2024 | 0.123 | 0.234 | 0.0045 | 198 | 82 |
| F3 | QPSO-2023 | 0.189 | 0.367 | 0.0289 | 256 | 80 |
| F4 | ILGWO | 0.0012 | 0.0089 | 0 | 134 | 97 |
| F4 | IGWO-2023 | 0.0234 | 0.0456 | 0.0023 | 167 | 90 |
| F4 | APSO-2024 | 0.145 | 0.289 | 0.0156 | 245 | 75 |
| F4 | HGA-2023 | 0.089 | 0.167 | 0.0089 | 223 | 82 |
| F4 | MOGWO-2024 | 0.056 | 0.123 | 0.0034 | 189 | 85 |
| F4 | QPSO-2023 | 0.078 | 0.145 | 0.0067 | 198 | 83 |
| F5 | ILGWO | 4.12 × 10⁻⁹ | 2.34 × 10⁻⁸ | 8.88 × 10⁻¹⁴ | 89 | 100 |
| F5 | IGWO-2023 | 2.34 × 10⁻⁶ | 8.91 × 10⁻⁶ | 1.23 × 10⁻⁸ | 145 | 88 |
| F5 | APSO-2024 | 0.0045 | 0.0123 | 2.34 × 10⁻⁶ | 267 | 70 |
| F5 | HGA-2023 | 0.0023 | 0.0067 | 4.56 × 10⁻⁷ | 234 | 75 |
| F5 | MOGWO-2024 | 1.23 × 10⁻⁵ | 4.56 × 10⁻⁵ | 3.45 × 10⁻⁸ | 178 | 85 |
| F5 | QPSO-2023 | 1.23 × 10⁻⁷ | 4.56 × 10⁻⁷ | 2.34 × 10⁻⁹ | 123 | 93 |
| F6 | ILGWO | 1.234 | 3.456 | 0.0234 | 145 | 92 |
| F6 | IGWO-2023 | 12.34 | 23.45 | 2.345 | 189 | 83 |
| F6 | APSO-2024 | 45.67 | 78.91 | 8.912 | 298 | 68 |
| F6 | HGA-2023 | 34.56 | 56.78 | 5.678 | 267 | 72 |
| F6 | MOGWO-2024 | 23.45 | 34.56 | 3.456 | 234 | 78 |
| F6 | QPSO-2023 | 28.91 | 45.67 | 4.567 | 256 | 75 |

| Parameter Category | Parameter Name | Numerical Value |
|---|---|---|
| UAV | CPU frequency | 1.5–2.0 GHz |
| UAV | Battery capacity | 500–800 Wh |
| UAV | Maximum power | 20–30 W |
| UAV | Flight speed | 15–25 m/s |
| Edge node | CPU frequency | 20–40 GHz |
| Edge node | Memory capacity | 32–64 GB |
| Edge node | Coverage radius | 20 km |
| Network | 5G bandwidth | 100 MHz |
| Network | Noise power spectral density | −174 dBm/Hz |
| Network | Path-loss exponent | 2.0–4.0 |

| Number of Tasks | Metric | ILGWO | GWO | PSO | GA |
|---|---|---|---|---|---|
| 100 | Average latency (ms) | 145.2 | 168.7 | 198.3 | 234.5 |
| | Energy consumption ratio | 0.732 | 0.821 | 0.889 | 0.923 |
| | Success rate (%) | 98.5 | 95.2 | 91.7 | 87.3 |
| 300 | Average latency (ms) | 287.4 | 345.6 | 412.8 | 489.2 |
| | Energy consumption ratio | 0.756 | 0.847 | 0.912 | 0.945 |
| | Success rate (%) | 96.8 | 92.1 | 86.4 | 79.8 |
| 500 | Average latency (ms) | 398.7 | 487.3 | 589.6 | 698.4 |
| | Energy consumption ratio | 0.779 | 0.863 | 0.934 | 0.967 |
| | Success rate (%) | 94.2 | 88.7 | 81.2 | 72.6 |

| Indicator Name | Definition | Calculation Formula | Evaluation Criteria | Test Scenario |
|---|---|---|---|---|
| Accuracy of task priority adjustment | Proportion of task-priority adjustments that the system makes correctly in a dynamic environment | Accuracy = number of correct adjustments / total number of adjustments × 100% | Gold-standard dataset based on expert annotation, containing optimal decisions for 500 typical scenarios | Priority ranking when multiple distress signals are received simultaneously during emergency rescue |
| Accuracy of dynamic resource allocation | Proportion of allocation decisions that are optimal under resource constraints | Accuracy = number of optimal allocation schemes / total number of allocation schemes × 100% | Compared with the theoretical optimum obtained by a linear programming solver; a deviation of less than 5% is considered correct | Dynamic allocation of computing resources among 5 edge nodes, considering load balancing and latency minimization |
| Accuracy of handling abnormal situations | Proportion of abnormal situations that the system correctly identifies and handles | Accuracy = number of correctly handled exceptions / total number of exceptions × 100% | Historical fault case library with 300 abnormal scenarios, including communication interruption, equipment failure, and abrupt environmental change | Autonomous decision-making and backup plan activation when UAV communication is interrupted |
| Accuracy of collaborative path planning | Proportion of planned paths that are near-optimal in multi-UAV collaborative operations | Accuracy = number of quasi-optimal paths / total number of paths × 100% | Compared with the optimal path length solved by the A* algorithm; a difference of less than 10% is considered accurate | Collaborative search-and-rescue path planning of 20 UAVs in urban environments |
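
The accuracy formulas in the table above share one pattern: count the cases that meet a criterion and divide by the total. A minimal sketch of the path-planning indicator, using the 10% tolerance against a reference length, might look as follows. The helper names and the sample path lengths are hypothetical and are not taken from the paper's dataset.

```python
def within_tolerance(value: float, reference: float, tol: float) -> bool:
    """True when value deviates from reference by less than tol (relative)."""
    return abs(value - reference) / abs(reference) < tol


def accuracy_percent(outcomes: list[bool]) -> float:
    """Share of successful outcomes, expressed as a percentage."""
    return 100.0 * sum(outcomes) / len(outcomes)


# Hypothetical (planned length, A* reference length) pairs, for illustration only.
pairs = [(105.0, 100.0), (112.0, 100.0), (98.0, 95.0), (130.0, 125.0)]
flags = [within_tolerance(planned, ref, 0.10) for planned, ref in pairs]
path_accuracy = accuracy_percent(flags)
print(flags, path_accuracy)
```

The same two helpers would cover the resource-allocation indicator by switching the tolerance to 0.05 and the reference to the linear programming optimum.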

| Decision Scenario | Traditional Rules | Proposed Method | Relative Improvement | Number of Test Samples | Standard Deviation | 95% Confidence Interval |
|---|---|---|---|---|---|---|
| Task priority adjustment | 76.3% ± 3.2% | 94.7% ± 1.8% | 24.1% | 500 scenarios | σ1 = 3.2%, σ2 = 1.8% | [70.0%, 82.6%] vs. [91.2%, 98.2%] |
| Dynamic allocation of resources | 68.9% ± 4.1% | 91.2% ± 2.3% | 32.4% | 200 allocation cases | σ1 = 4.1%, σ2 = 2.3% | [60.8%, 77.0%] vs. [86.7%, 95.7%] |
| Anomaly handling | 71.5% ± 3.7% | 93.8% ± 2.1% | 31.2% | 300 abnormal cases | σ1 = 3.7%, σ2 = 2.1% | [64.2%, 78.8%] vs. [89.7%, 97.9%] |
| Collaborative path planning | 74.2% ± 2.9% | 89.6% ± 2.4% | 20.8% | 100 path planning tasks | σ1 = 2.9%, σ2 = 2.4% | [68.5%, 79.9%] vs. [84.9%, 94.3%] |
| Average decision time (ms) | 234 ± 28 | 156 ± 15 | 33.3% | 1000 decision tests | σ1 = 28 ms, σ2 = 15 ms | [179, 289] vs. [127, 185] |
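
The improvement and interval columns in the table above appear consistent with two simple calculations: a change relative to the baseline, and an interval of the mean ± 1.96σ. The sketch below reproduces the first row and the decision-time row under that reading. The function names are illustrative assumptions, and the interval uses σ directly because that is what matches the reported bounds.

```python
def relative_change_percent(baseline: float, proposed: float) -> float:
    """Signed change of proposed relative to baseline, in percent."""
    return 100.0 * (proposed - baseline) / baseline


def interval_95(mean: float, sigma: float) -> tuple[float, float]:
    """Normal-approximation interval: mean plus or minus 1.96 * sigma."""
    half_width = 1.96 * sigma
    return (mean - half_width, mean + half_width)


# Task priority adjustment: 76.3% (sigma 3.2%) vs. 94.7%.
gain = relative_change_percent(76.3, 94.7)
low, high = interval_95(76.3, 3.2)

# Average decision time: 234 ms vs. 156 ms (a negative change is a reduction).
time_change = relative_change_percent(234.0, 156.0)

print(f"{gain:.1f}%  [{low:.1f}, {high:.1f}]  {time_change:.1f}%")
```

For the decision-time row the table reports the magnitude of the reduction, so the signed result of about −33.3% corresponds to the listed 33.3%.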

| Method | Scenario 1: Target Search | Scenario 2: Collaborative Reconnaissance | Scenario 3: Obstacle Avoidance Navigation | Scenario 4: Resource Scheduling |
|---|---|---|---|---|
| Key Metrics | Success Rate/Average Time (s) | Coverage Rate/Repetition Rate | Success Rate/Collision Frequency | Optimization Degree/Latency (ms) |
| DQN | 83.2%/245 | 91.3%/18.5% | 79.8%/2.3 | 0.76/320 |
| PPO | 85.6%/232 | 93.2%/15.2% | 82.4%/1.8 | 0.79/298 |
| SAC | 86.3%/228 | 94.1%/14.3% | 84.1%/1.6 | 0.81/285 |
| QMIX | 84.5%/238 | 95.8%/11.2% | 81.2%/2.0 | 0.78/305 |
| LLM-7B | 91.2%/198 | 96.7%/8.5% | 89.3%/1.2 | 0.87/216 |

| Method | Convergence Rounds | Final Success Rate | Sample Complexity | Training Time (h) |
|---|---|---|---|---|
| DQN | 35,000 | 86.3% ± 2.1% | 3.5 × 10⁷ | 168 |
| PPO | 28,000 | 88.7% ± 1.8% | 2.8 × 10⁷ | 142 |
| SAC | 25,000 | 89.5% ± 1.6% | 2.5 × 10⁷ | 135 |
| QMIX | 30,000 | 87.2% ± 2.0% | 3.0 × 10⁷ | 185 |
| LLM-7B (fine-tuning) | 2000 | 91.2% ± 1.5% | 2.0 × 10⁵ | 24 |
| LLM-7B (zero-shot) | 0 | 82.4% ± 2.3% | 0 | 0 |

| Method | New Terrain | New Climate | New Task | Scale Adjustment | Average Retention Rate |
|---|---|---|---|---|---|
| DQN | 62.3% | 58.7% | 45.2% | 51.3% | 54.4% |
| PPO | 65.8% | 61.2% | 48.6% | 54.7% | 57.6% |
| SAC | 67.2% | 63.5% | 51.3% | 56.2% | 59.6% |
| QMIX | 64.5% | 60.8% | 47.9% | 53.4% | 56.7% |
| LLM-7B (fine-tuning) | 85.6% | 83.2% | 79.8% | 81.5% | 82.5% |
| LLM-7B (zero-shot) | 62.3% | 58.7% | 45.2% | 51.3% | 54.4% |

| Method | Average Latency (ms) | P95 Latency (ms) | P99 Latency (ms) | Standard Deviation (ms) |
|---|---|---|---|---|
| DQN | 120 ± 15 | 145 | 168 | 15.2 |
| PPO | 135 ± 18 | 162 | 185 | 18.3 |
| SAC | 142 ± 20 | 175 | 198 | 20.1 |
| QMIX | 158 ± 22 | 190 | 215 | 22.4 |
| LLM-7B | 156 ± 12 | 172 | 186 | 12.3 |
| LLM-3B | 89 ± 8 | 98 | 105 | 8.1 |

| Configuration | Average Fitness | Convergence Generations | Success Rate | Degradation |
|---|---|---|---|---|
| Complete ILGWO | 0.1247 | 89 | 96.8% | - |
| Remove Lévy flight | 0.1432 | 134 | 89.3% | 14.8% |
| Remove adaptive weights | 0.1389 | 118 | 91.7% | 11.4% |
| Remove elite learning | 0.1356 | 107 | 93.2% | 8.7% |
| Remove chaotic initialization | 0.1321 | 98 | 94.5% | 5.9% |
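
The degradation column of the ablation table above matches the relative increase in average fitness over the complete ILGWO, assuming lower fitness is better. The short sketch below reproduces it from the tabulated values; the variable names are illustrative.

```python
# Average fitness values copied from the ablation table (lower is assumed better).
full_fitness = 0.1247
ablated_fitness = {
    "Remove Lévy flight": 0.1432,
    "Remove adaptive weights": 0.1389,
    "Remove elite learning": 0.1356,
    "Remove chaotic initialization": 0.1321,
}

# Degradation = relative fitness increase over the complete algorithm, in percent.
degradation = {
    name: round(100.0 * (fitness - full_fitness) / full_fitness, 1)
    for name, fitness in ablated_fitness.items()
}

for name, value in degradation.items():
    print(f"{name}: {value}%")
```

Read this way, removing Lévy flight costs the most and removing chaotic initialization the least, which agrees with the ordering of the convergence and success-rate columns.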

| Model Configuration | Inference Delay (ms) | Decision Accuracy | Memory Usage (GB) | Power Consumption (W) |
|---|---|---|---|---|
| GPT-4 Full Version | 1250 | 96.8% | 350 | 450 |
| 7B compressed version | 156 | 91.2% | 27 | 85 |
| 3B Ultra Lightweight Edition | 89 | 87.4% | 12 | 35 |
| Rule engine | 23 | 68.9% | 2 | 8 |

| Initial Number of Faulty Nodes | Final Number of Faulty Nodes | Failure Propagation Degree | Recovery Time (s) | Performance Retention Rate (%) |
|---|---|---|---|---|
| 1 | 1.2 | 0.20 | 18.4 | 94.7 |
| 2 | 2.7 | 0.35 | 34.6 | 86.3 |
| 3 | 4.8 | 0.60 | 52.1 | 75.8 |
| 4 | 7.3 | 0.83 | 78.9 | 62.4 |
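
The third column of the table above appears consistent with the relative growth in the number of faulty nodes, (final − initial) / initial. This reading is an inference from the tabulated numbers rather than a definition stated in the text, and the sketch below only checks it against the four rows.

```python
def propagation_ratio(initial_faults: float, final_faults: float) -> float:
    """Relative growth in faulty nodes caused by failure propagation."""
    return (final_faults - initial_faults) / initial_faults


# (initial, final) faulty-node counts copied from the table.
rows = [(1, 1.2), (2, 2.7), (3, 4.8), (4, 7.3)]
ratios = [propagation_ratio(initial, final) for initial, final in rows]

for (initial, final), ratio in zip(rows, ratios):
    print(f"{initial} -> {final}: {ratio:.3f}")
```

The last row evaluates to 0.825, which the table lists as 0.83 after rounding.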

| Environmental Type | Altitude (m) | Distance (km) | Measured Path Loss (dB) | Model Prediction (dB) | Relative Error (%) |
|---|---|---|---|---|---|
| City | 100 | 2 | 92.3 | 90.8 | 1.6 |
| City | 200 | 5 | 108.7 | 107.2 | 1.4 |
| Suburb | 150 | 3 | 88.9 | 87.5 | 1.6 |
| Countryside | 100 | 4 | 85.2 | 84.1 | 1.3 |
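
The relative error column in the table above can be reproduced as the absolute difference between measurement and prediction divided by the measurement. The following sketch applies that formula to the four path-loss rows; the function name is an illustrative assumption.

```python
def relative_error_percent(measured: float, predicted: float) -> float:
    """Absolute prediction error relative to the measured value, in percent."""
    return 100.0 * abs(measured - predicted) / abs(measured)


# (measured, predicted) path loss in dB, copied from the table.
path_loss_rows = [(92.3, 90.8), (108.7, 107.2), (88.9, 87.5), (85.2, 84.1)]
errors = [round(relative_error_percent(m, p), 1) for m, p in path_loss_rows]
print(errors)
```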

| Task Type | Computational Complexity (Gcycles) | Actual Delay (ms) | Model Prediction (ms) | Relative Error (%) |
|---|---|---|---|---|
| City | 1.5 | 145.6 | 142.3 | 2.3 |
| City | 5.2 | 487.9 | 475.2 | 2.6 |
| Suburb | 1.8 | 167.4 | 163.8 | 2.2 |
| Countryside | 0.8 | 89.7 | 87.1 | 2.9 |

| Flight Speed (m/s) | Flight Time (min) | Actual Energy Consumption (Wh) | Model Prediction (Wh) | Relative Error (%) |
|---|---|---|---|---|
| 15 | 30 | 234.6 | 228.9 | 2.4 |
| 20 | 45 | 421.8 | 408.7 | 3.1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Han, S.; Wan, P.; Lian, Z.; Wang, M.; Li, D.; Fan, C. Research on the Construction and Resource Optimization of a UAV Command Information System Based on Large Language Models. Drones 2025, 9, 639. https://doi.org/10.3390/drones9090639
