Multi-Level Contextual and Semantic Information Aggregation Network for Small Object Detection in UAV Aerial Images

Abstract

1. Introduction

2. Related Works

2.1. Attention and Selection Mechanisms

2.2. Multi-Scale Feature Fusion

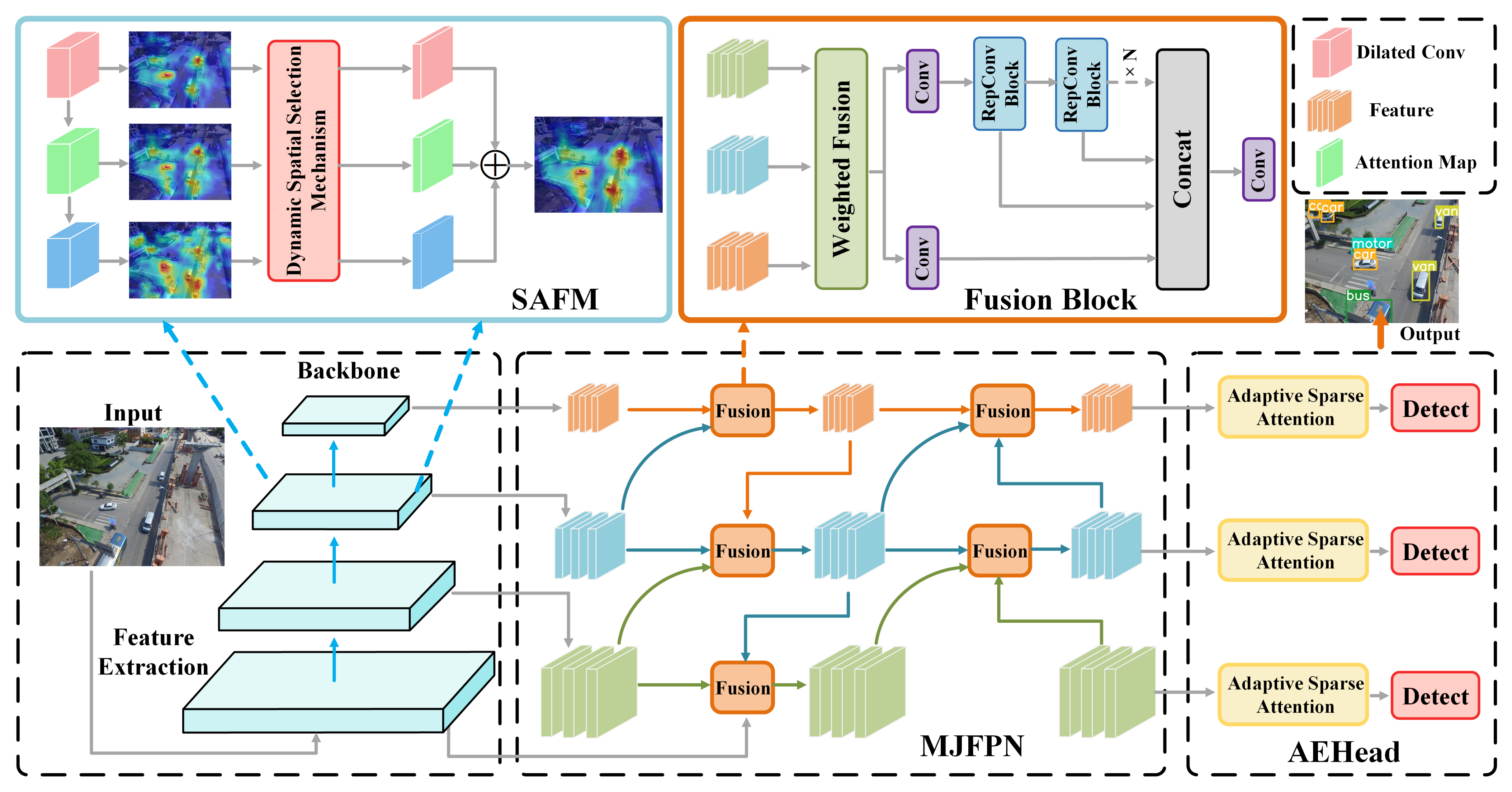

3. The Method

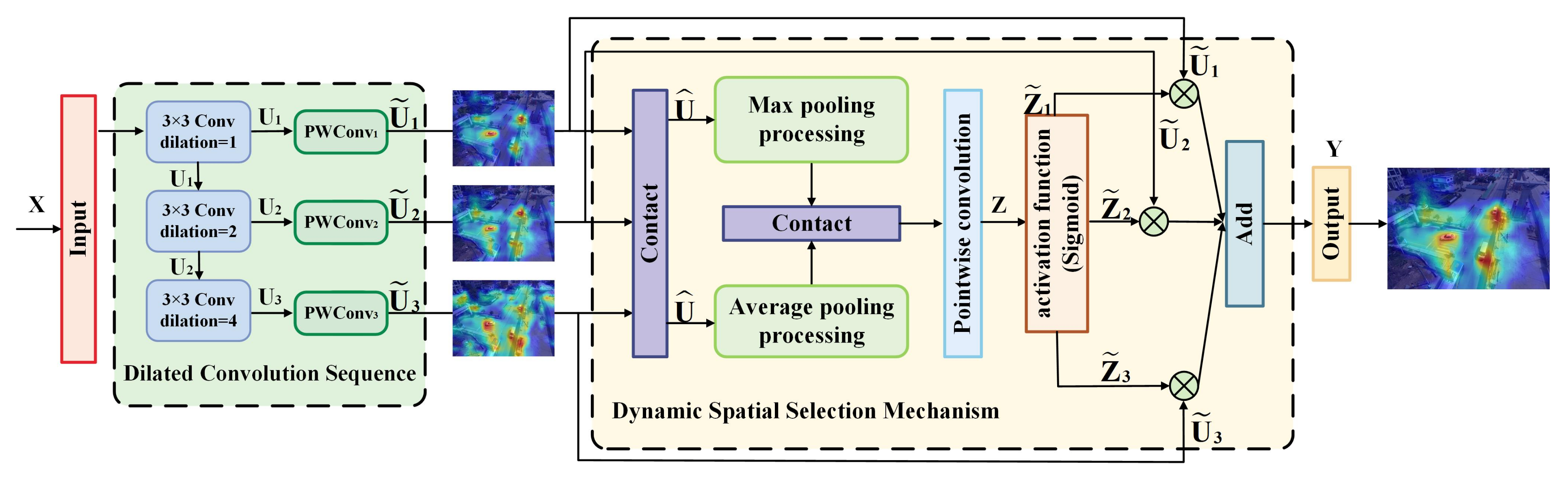

3.1. The Spatially Aware Feature Selection Module (SAFM)

3.2. The Multi-Level Joint Feature Pyramid Network (MJFPN)

3.3. The Attention-Enhanced Head (AEHead)

4. Experiments

4.1. The Datasets

4.2. The Evaluation Metrics

4.3. The Implementation Details

4.4. The Ablation Study

4.4.1. Evaluation of Different Components

4.4.2. Evaluation of the Spatially Aware Feature Selection Module

4.4.3. Evaluation of the Multi-Level Joint Feature Pyramid Network

4.4.4. Evaluation of the Sparse Attention Mechanism

4.5. Comparison of Results on Different Datasets

4.5.1. Results on VisDrone

4.5.2. Results on UAVDT

4.5.3. Results on MS COCO

4.5.4. Results on DOTA

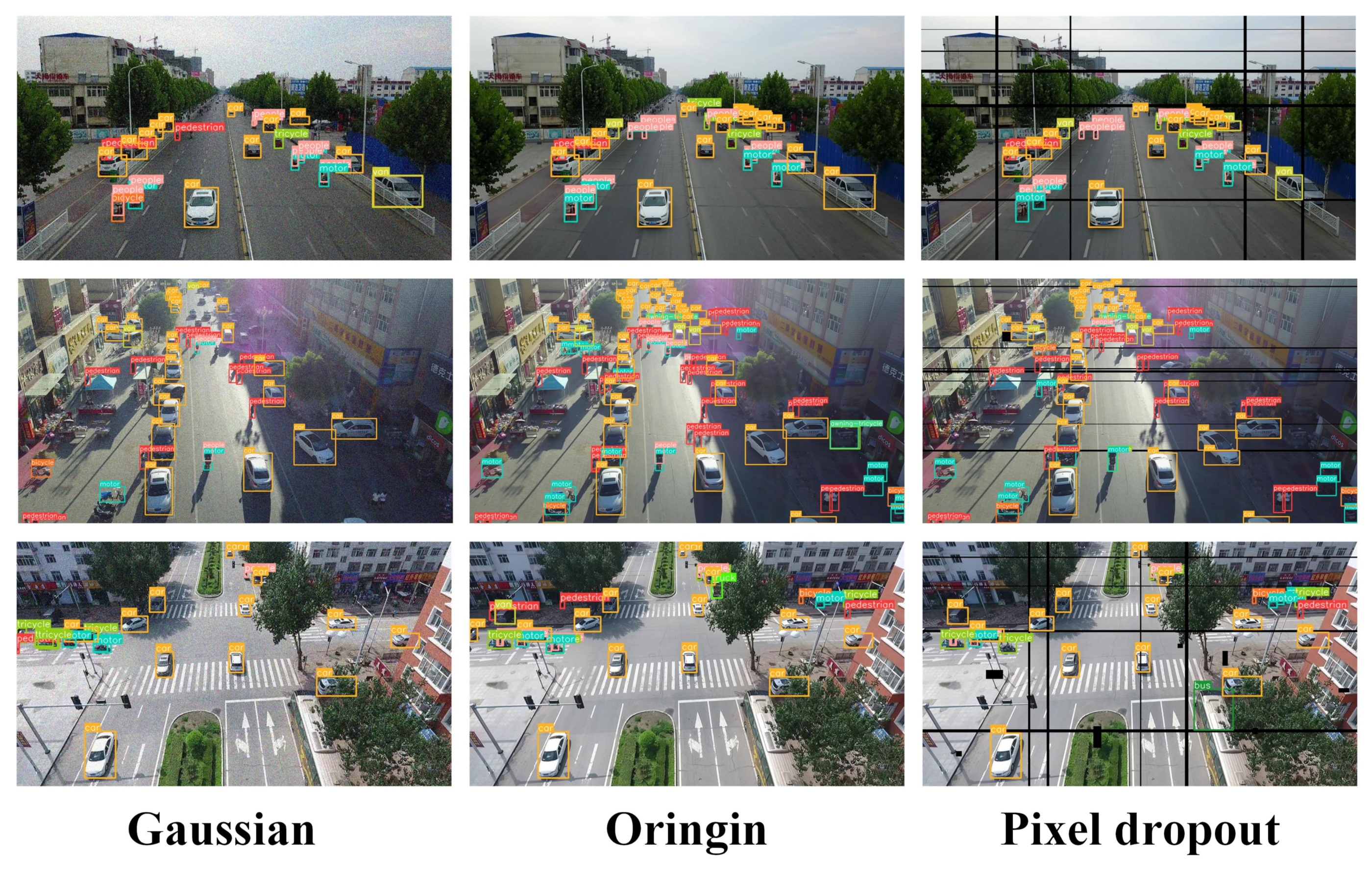

4.5.5. Results on Simulated Degradation Phenomena

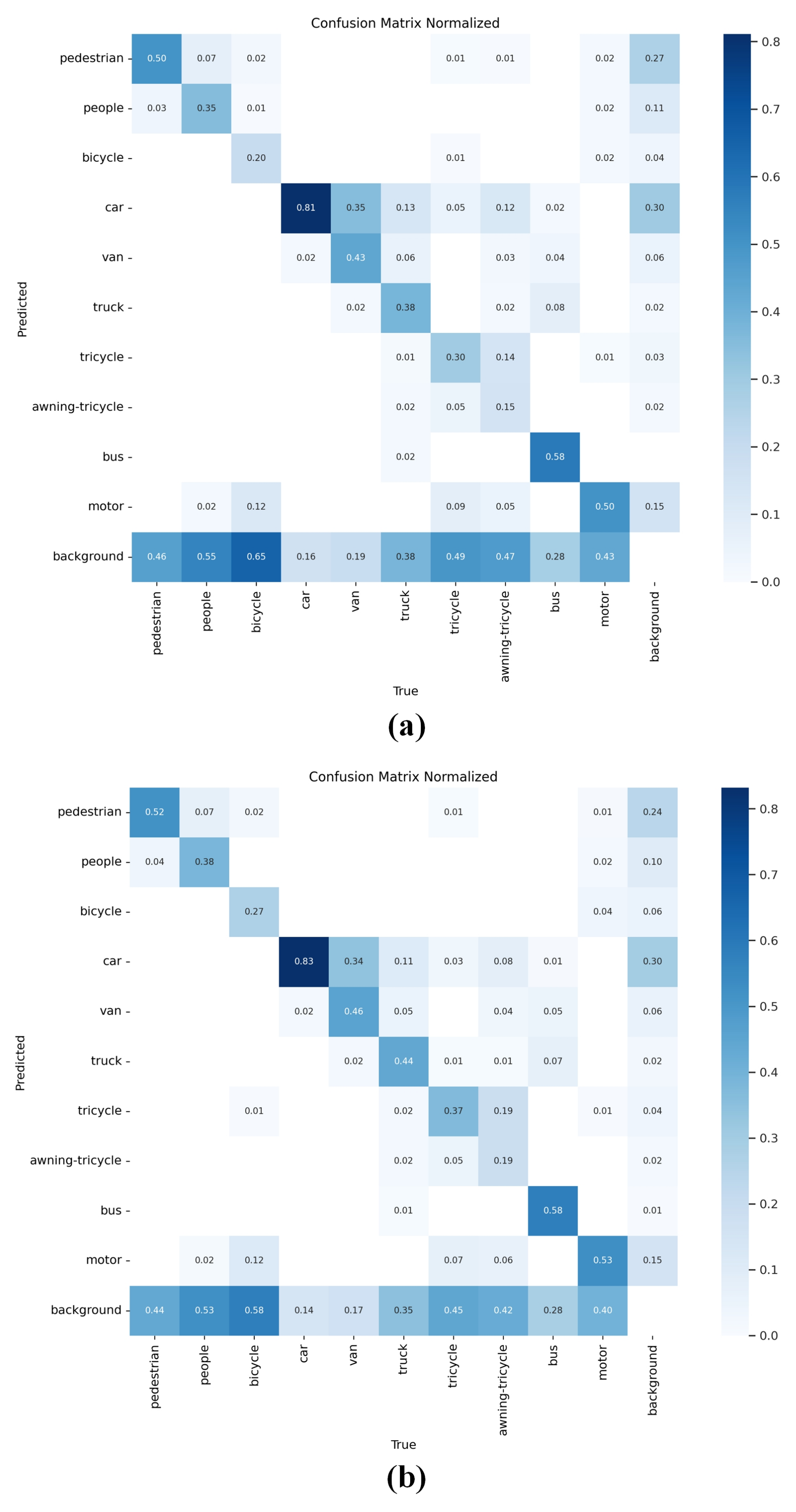

5. Visual Analysis

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Li, B.; Yang, Z.P.; Chen, D.Q.; Liang, S.Y.; Ma, H. Maneuvering target tracking of UAV based on MN-DDPG and transfer learning. Def. Technol. 2021, 17, 457–466.

2. Huang, J.; Yujie, C.; Guipeng, X.; Shuangxia, B.; Li, B.; Wang, G.; Evgeny, N. GTrXL-SAC-Based Path Planning and Obstacle-Aware Control Decision-Making for UAV Autonomous Control. Drones 2025, 9, 275.

3. Zhang, H.; Wang, L.; Tian, T.; Yin, J. A review of unmanned aerial vehicle low-altitude remote sensing (UAV-LARS) use in agricultural monitoring in China. Remote Sens. 2021, 13, 1221.

4. Wang, Y.; Bashir, S.M.A.; Khan, M.; Ullah, Q.; Wang, R.; Song, Y.; Guo, Z.; Niu, Y. Remote sensing image super-resolution and object detection: Benchmark and state of the art. Expert Syst. Appl. 2022, 197, 116793.

5. Wan, K.; Wu, D.; Li, B.; Gao, X.; Hu, Z.; Chen, D. ME-MADDPG: An efficient learning-based motion planning method for multiple agents in complex environments. Int. J. Intell. Syst. 2022, 37, 2393–2427.

6. Geng, J.; Song, S.; Jiang, W. Dual-path feature aware network for remote sensing image semantic segmentation. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 3674–3686.

7. He, Y.; Li, J. TSRes-YOLO: An accurate and fast cascaded detector for waste collection and transportation supervision. Eng. Appl. Artif. Intell. 2023, 126, 106997.

8. Feng, Q.; Li, B.; Liu, X.; Gao, X.; Wan, K. Low-high frequency network for spatial–temporal traffic flow forecasting. Eng. Appl. Artif. Intell. 2025, 158, 111304.

9. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

10. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

11. Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6154–6162.

12. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

13. Yin, Y.; Li, H.; Fu, W. Faster-YOLO: An accurate and faster object detection method. Digit. Signal Process. 2020, 102, 102756.

14. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-time end-to-end object detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

15. Wang, C.Y.; Yeh, I.H.; Mark Liao, H.Y. YOLOv9: Learning what you want to learn using programmable gradient information. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 1–21.

16. Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully convolutional one-stage object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9627–9636.

17. Ran, Q.; Wang, Q.; Zhao, B.; Wu, Y.; Pu, S.; Li, Z. Lightweight oriented object detection using multiscale context and enhanced channel attention in remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 5786–5795.

18. Zhang, L.; Zhang, L. Artificial intelligence for remote sensing data analysis: A review of challenges and opportunities. IEEE Geosci. Remote Sens. Mag. 2022, 10, 270–294.

19. Laliberte, A.S.; Rango, A. Texture and scale in object-based analysis of subdecimeter resolution unmanned aerial vehicle (UAV) imagery. IEEE Trans. Geosci. Remote Sens. 2009, 47, 761–770.

20. Song, C.; Zhang, X.; She, Y.; Li, B.; Zhang, Q. Trajectory Planning for UAV Swarm Tracking Moving Target Based on an Improved Model Predictive Control Fusion Algorithm. IEEE Internet Things J. 2025, 12, 19354–19369.

21. He, G.; Li, F.; Wang, Q.; Bai, Z.; Xu, Y. A hierarchical sampling based triplet network for fine-grained image classification. Pattern Recognit. 2021, 115, 107889.

22. Liu, L.; Xia, Z.; Zhang, X.; Peng, J.; Feng, X.; Zhao, G. Information-enhanced network for noncontact heart rate estimation from facial videos. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 2136–2150.

23. Jiang, Y.; Xie, S.; Xie, X.; Cui, Y.; Tang, H. Emotion recognition via multiscale feature fusion network and attention mechanism. IEEE Sens. J. 2023, 23, 10790–10800.

24. Zhou, L.; Zhao, S.; Wan, Z.; Liu, Y.; Wang, Y.; Zuo, X. MFEFNet: A multi-scale feature information extraction and fusion network for multi-scale object detection in UAV aerial images. Drones 2024, 8, 186.

25. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

26. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

27. Li, H.; Li, Y.; Xiao, L.; Zhang, Y.; Cao, L.; Wu, D. RLRD-YOLO: An Improved YOLOv8 Algorithm for Small Object Detection from an Unmanned Aerial Vehicle (UAV) Perspective. Drones 2025, 9, 293.

28. Li, X.; Wang, W.; Hu, X.; Yang, J. Selective kernel networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 510–519.

29. Zhang, H.; Wu, C.; Zhang, Z.; Zhu, Y.; Lin, H.; Zhang, Z.; Sun, Y.; He, T.; Mueller, J.; Manmatha, R.; et al. ResNeSt: Split-attention networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2736–2746.

30. Liu, J.J.; Hou, Q.; Cheng, M.M.; Wang, C.; Feng, J. Improving convolutional networks with self-calibrated convolutions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10096–10105.

31. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

32. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

33. Li, Y.; Mao, H.; Girshick, R.; He, K. Exploring plain vision transformer backbones for object detection. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 280–296.

34. Mao, Y.; Zhang, J.; Wan, Z.; Tian, X.; Li, A.; Lv, Y.; Dai, Y. Generative transformer for accurate and reliable salient object detection. IEEE Trans. Circuits Syst. Video Technol. 2024, 35, 1041–1054.

35. Boroujeni, S.P.H.; Razi, A.; Khoshdel, S.; Afghah, F.; Coen, J.L.; O’Neill, L.; Fule, P.; Watts, A.; Kokolakis, N.M.T.; Vamvoudakis, K.G. A comprehensive survey of research towards AI-enabled unmanned aerial systems in pre-, active-, and post-wildfire management. Inf. Fusion 2024, 108, 102369.

36. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 10012–10022.

37. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Online, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229.

38. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs beat YOLOs on real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 16965–16974.

39. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

40. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

41. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10781–10790.

42. Ghiasi, G.; Lin, T.Y.; Le, Q.V. NAS-FPN: Learning scalable feature pyramid architecture for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7036–7045.

43. Liu, H.I.; Tseng, Y.W.; Chang, K.C.; Wang, P.J.; Shuai, H.H.; Cheng, W.H. A denoising FPN with transformer R-CNN for tiny object detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4704415.

44. Liu, X.; Leng, C.; Niu, X.; Pei, Z.; Cheng, I.; Basu, A. Find small objects in UAV images by feature mining and attention. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6517905.

45. Li, X.; Xie, Z.; Deng, X.; Wu, Y.; Pi, Y. Traffic sign detection based on improved Faster R-CNN for autonomous driving. J. Supercomput. 2022, 78, 7982–8002.

46. Jobaer, S.; Tang, X.S.; Zhang, Y. A deep neural network for small object detection in complex environments with unmanned aerial vehicle imagery. Eng. Appl. Artif. Intell. 2025, 148, 110466.

47. Wang, C.Y.; Liao, H.Y.M.; Yeh, I.H. Designing network design strategies through gradient path analysis. arXiv 2022, arXiv:2211.04800.

48. Ren, S.; Zhou, D.; He, S.; Feng, J.; Wang, X. Shunted self-attention via multi-scale token aggregation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10853–10862.

49. Zhu, P.; Wen, L.; Du, D.; Bian, X.; Fan, H.; Hu, Q.; Ling, H. Detection and tracking meet drones challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7380–7399.

50. Yu, H.; Li, G.; Zhang, W.; Huang, Q.; Du, D.; Tian, Q.; Sebe, N. The unmanned aerial vehicle benchmark: Object detection, tracking and baseline. Int. J. Comput. Vis. 2020, 128, 1141–1159.

51. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

52. Xia, G.S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A large-scale dataset for object detection in aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3974–3983.

53. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

54. Varghese, R.; Sambath, M. YOLOv8: A novel object detection algorithm with enhanced performance and robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems, Kuala Lumpur, Malaysia, 10–12 July 2024; pp. 1–6.

55. Chen, L.; Liu, C.; Li, W.; Xu, Q.; Deng, H. DTSSNet: Dynamic training sample selection network for UAV object detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–16.

56. Yang, F.; Fan, H.; Chu, P.; Blasch, E.; Ling, H. Clustered object detection in aerial images. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8311–8320.

57. Du, B.; Huang, Y.; Chen, J.; Huang, D. Adaptive sparse convolutional networks with global context enhancement for faster object detection on drone images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 13435–13444.

58. Duan, C.; Wei, Z.; Zhang, C.; Qu, S.; Wang, H. Coarse-grained density map guided object detection in aerial images. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 2789–2798.

59. Zhou, L.; Liu, Z.; Zhao, H.; Hou, Y.E.; Liu, Y.; Zuo, X.; Dang, L. A multi-scale object detector based on coordinate and global information aggregation for UAV aerial images. Remote Sens. 2023, 15, 3468.

60. Lin, H.; Zhou, J.; Gan, Y.; Vong, C.M.; Liu, Q. Novel up-scale feature aggregation for object detection in aerial images. Neurocomputing 2020, 411, 364–374.

61. Yang, C.; Huang, Z.; Wang, N. QueryDet: Cascaded Sparse Query for Accelerating High-Resolution Small Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 13668–13677.

62. Li, C.; Yang, T.; Zhu, S.; Chen, C.; Guan, S. Density Map Guided Object Detection in Aerial Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020.

63. Zhang, H.; Liu, K.; Gan, Z.; Zhu, G.N. UAV-DETR: Efficient end-to-end object detection for unmanned aerial vehicle imagery. arXiv 2025, arXiv:2501.01855.

64. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159.

65. Liu, C.; Gao, G.; Huang, Z.; Hu, Z.; Liu, Q.; Wang, Y. YOLC: You only look clusters for tiny object detection in aerial images. IEEE Trans. Intell. Transp. Syst. 2024, 25, 13863–13875.

66. Roh, B.; Shin, J.; Shin, W.; Kim, S. Sparse DETR: Efficient end-to-end object detection with learnable sparsity. arXiv 2021, arXiv:2111.14330.

67. Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet: Keypoint triplets for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6569–6578.

68. Wei, Z.; Duan, C.; Song, X.; Tian, Y.; Wang, H. AMRNet: Chips augmentation in aerial images object detection. arXiv 2020, arXiv:2009.07168.

69. Deng, S.; Li, S.; Xie, K.; Song, W.; Liao, X.; Hao, A.; Qin, H. A global-local self-adaptive network for drone-view object detection. IEEE Trans. Image Process. 2020, 30, 1556–1569.

70. Meethal, A.; Granger, E.; Pedersoli, M. Cascaded zoom-in detector for high resolution aerial images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 2046–2055.

71. Song, G.; Du, H.; Zhang, X.; Bao, F.; Zhang, Y. Small object detection in unmanned aerial vehicle images using multi-scale hybrid attention. Eng. Appl. Artif. Intell. 2024, 128, 107455.

72. Zhu, C.; He, Y.; Savvides, M. Feature selective anchor-free module for single-shot object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 840–849.

73. Gao, P.; Zheng, M.; Wang, X.; Dai, J.; Li, H. Fast convergence of DETR with spatially modulated co-attention. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3621–3630.

74. Liu, Z.; Gao, G.; Sun, L.; Fang, Z. HRDNet: High-Resolution Detection Network for Small Objects. In Proceedings of the 2021 IEEE International Conference on Multimedia and Expo, Shenzhen, China, 5–9 July 2021; pp. 1–6.

75. Yang, X.; Yang, J.; Yan, J.; Zhang, Y.; Zhang, T.; Guo, Z.; Sun, X.; Fu, K. SCRDet: Towards more robust detection for small, cluttered and rotated objects. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8232–8241.

76. Yang, X.; Yan, J.; Liao, W.; Yang, X.; Tang, J.; He, T. SCRDet++: Detecting small, cluttered and rotated objects via instance-level feature denoising and rotation loss smoothing. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 2384–2399.

77. Ding, J.; Xue, N.; Long, Y.; Xia, G.S.; Lu, Q. Learning RoI transformer for oriented object detection in aerial images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2849–2858.

78. Dai, L.; Liu, H.; Tang, H.; Wu, Z.; Song, P. AO2-DETR: Arbitrary-oriented object detection transformer. IEEE Trans. Circuits Syst. Video Technol. 2022, 33, 2342–2356.

79. Zeng, Y.; Chen, Y.; Yang, X.; Li, Q.; Yan, J. ARS-DETR: Aspect ratio-sensitive detection transformer for aerial oriented object detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5610315.

80. Yang, X.; Yan, J.; Feng, Z.; He, T. R3Det: Refined single-stage detector with feature refinement for rotating object. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 3163–3171.

81. Xu, Y.; Fu, M.; Wang, Q.; Wang, Y.; Chen, K.; Xia, G.S.; Bai, X. Gliding vertex on the horizontal bounding box for multi-oriented object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 1452–1459.

82. Zhang, G.; Lu, S.; Zhang, W. CAD-Net: A context-aware detection network for objects in remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 10015–10024.

83. Xie, X.; Cheng, G.; Wang, J.; Yao, X.; Han, J. Oriented R-CNN for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3520–3529.

| With SAFM? | With MJFPN? | With AEHead? | mAP | mAP50 | mAP75 |
|---|---|---|---|---|---|
| ✗ | ✗ | ✗ | 29.4 | 47.7 | 30.7 |
| ✓ | ✗ | ✗ | 31.1 | 50.1 | 32.2 |
| ✗ | ✓ | ✗ | 30.2 | 49.4 | 31.3 |
| ✗ | ✗ | ✓ | 29.8 | 48.4 | 31.0 |
| ✓ | ✓ | ✗ | 31.9 | 51.6 | 33.4 |
| ✓ | ✗ | ✓ | 31.5 | 50.9 | 32.4 |
| ✗ | ✓ | ✓ | 31.4 | 51.4 | 32.9 |
| ✓ | ✓ | ✓ | 32.8 | 52.2 | 34.1 |

| Kernel Size and Dilation (k, d) | Receptive Fields in Sequence | mAP |
|---|---|---|
| (3, 1), (3, 2) | 3 × 3, 7 × 7 | 32.3 |
| (3, 1), (5, 2) | 3 × 3, 11 × 11 | 32.6 |
| (5, 1), (5, 2) | 5 × 5, 13 × 13 | 32.4 |
| (3, 1), (3, 2), (3, 4) | 3 × 3, 7 × 7, 15 × 15 | 32.8 |
| (5, 1), (5, 2), (5, 4) | 5 × 5, 13 × 13, 29 × 29 | 32.1 |
| (3, 1), (5, 2), (7, 4) | 3 × 3, 11 × 11, 35 × 35 | 32.0 |
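The receptive-field column can be checked by hand. Assuming the dilated convolutions are applied one after another with stride 1 (the "in sequence" wording suggests this, but the module definition is not reproduced here), a k × k kernel with dilation d spans d(k − 1) + 1 pixels, and each layer widens the receptive field by d(k − 1). The helper names below are illustrative.

```python
from typing import List, Tuple


def effective_kernel(k: int, d: int) -> int:
    """Span (in pixels) of one k x k convolution with dilation d."""
    return d * (k - 1) + 1


def stacked_receptive_field(layers: List[Tuple[int, int]]) -> List[int]:
    """Receptive field after each stride-1 dilated conv applied in sequence.

    With stride 1 everywhere, every layer adds (effective_kernel - 1) pixels.
    """
    rf = 1
    fields = []
    for k, d in layers:
        rf += effective_kernel(k, d) - 1
        fields.append(rf)
    return fields


# (k, d) configurations from the kernel-size ablation table.
configs = [
    [(3, 1), (3, 2)],
    [(3, 1), (5, 2)],
    [(5, 1), (5, 2)],
    [(3, 1), (3, 2), (3, 4)],
    [(5, 1), (5, 2), (5, 4)],
    [(3, 1), (5, 2), (7, 4)],
]
for cfg in configs:
    print(cfg, stacked_receptive_field(cfg))
```

Under this stride-1 assumption the printed values reproduce every entry of the receptive-field column, e.g. [3, 7, 15] for the best-scoring (3, 1), (3, 2), (3, 4) configuration.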

| With MLC? | With WF? | With FAN? | mAP |
|---|---|---|---|
| ✗ | ✗ | ✗ | 31.5 |
| ✓ | ✗ | ✗ | 31.8 |
| ✗ | ✓ | ✗ | 32.0 |
| ✓ | ✓ | ✗ | 32.2 |
| ✓ | ✓ | ✓ | 32.8 |
| ✗ | ✓ | ✓ | 32.4 |

| Number of Regions (N) | Top-k (k) | mAP | Time (ms) |
|---|---|---|---|
| N = 5 | k = 4 | 32.4 | 3.43 |
| N = 7 | k = 4 | 32.8 | 3.20 |
| N = 9 | k = 4 | 32.6 | 3.13 |
| N = 7 | k = 2 | 32.3 | 3.09 |
| N = 7 | k = 6 | 33.0 | 3.42 |
| N = 7 | k = 8 | 32.6 | 3.60 |
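The N and k columns read like a region-routed sparse attention: the feature map is split into N × N regions and each query region attends only to the k regions most related to it, in the spirit of bi-level routing attention. Assuming that design (not confirmed by the table itself), a single-head NumPy sketch with identity projections and mean-pooled routing looks like this; `region_topk_attention` and its routing rule are illustrative, not the paper's code.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def region_topk_attention(x: np.ndarray, n: int, k: int):
    """Hypothetical single-head, region-routed sparse attention.

    x: feature map (H, W, C); H and W must be divisible by n.
    n: regions per side, giving n * n regions.
    k: number of routed key/value regions per query region.
    Returns the output map (H, W, C) and routing indices (n * n, k).
    """
    h, w, c = x.shape
    rh, rw = h // n, w // n
    # (H, W, C) -> (n*n regions, rh*rw tokens per region, C)
    regions = (x.reshape(n, rh, n, rw, c)
                .transpose(0, 2, 1, 3, 4)
                .reshape(n * n, rh * rw, c))
    # Identity projections keep the sketch short; a real layer learns Wq, Wk, Wv.
    q = kv = regions
    # Region-to-region affinity from mean-pooled region descriptors.
    affinity = q.mean(axis=1) @ kv.mean(axis=1).T        # (n*n, n*n)
    routes = np.argsort(-affinity, axis=1)[:, :k]        # top-k regions per query region
    out = np.empty_like(regions)
    for r in range(n * n):
        # Each query region attends only to tokens inside its k routed regions.
        keys = kv[routes[r]].reshape(-1, c)               # (k*rh*rw, C)
        attn = softmax(q[r] @ keys.T / np.sqrt(c))        # (rh*rw, k*rh*rw)
        out[r] = attn @ keys
    # (n*n, rh*rw, C) -> (H, W, C)
    out = (out.reshape(n, n, rh, rw, c)
              .transpose(0, 2, 1, 3, 4)
              .reshape(h, w, c))
    return out, routes


rng = np.random.default_rng(0)
feat = rng.standard_normal((28, 28, 16))
y, routes = region_topk_attention(feat, n=7, k=4)
print(y.shape, routes.shape)  # (28, 28, 16) (49, 4)
```

Each query region scores against k · (H/N) · (W/N) tokens instead of all H · W, so the cost grows roughly linearly with k. This is consistent with the time column rising from 3.09 ms (k = 2) to 3.60 ms (k = 8) at N = 7. Setting k = N² recovers dense attention.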

| Attention Heads | Kernel Size | mAP |
|---|---|---|
| 2 | 3 × 3 | 32.2 |
| 4 | 3 × 3 | 32.5 |
| 8 | 3 × 3 | 32.8 |
| 16 | 3 × 3 | 32.7 |
| 8 | 5 × 5 | 32.5 |

| Methods | Backbone | mAP | mAP50 | mAP75 | mAPsmall | mAPmedium | mAPlarge |
|---|---|---|---|---|---|---|---|
| RetinaNet [53] | ResNet50 | 19.4 | 35.9 | 18.5 | 14.1 | 29.5 | 33.7 |
| FRCNN [9] | ResNet50 | 21.5 | 40.0 | 20.6 | 15.4 | 34.6 | 37.1 |
| YOLOv8 [54] | CSPDarknet | 23.9 | 39.8 | 24.4 | 14.4 | 32.3 | 34.2 |
| DTSSNet [55] | MobileNetV2 | 25.5 | 41.1 | 26.9 | 18.6 | 34.3 | 41.2 |
| ClusDet [56] | ResNet50 | 26.7 | 50.6 | 24.7 | 17.6 | 38.9 | 51.4 |
| CEASC [57] | ResNet18 | 28.7 | 50.7 | 28.4 | – | – | – |
| CDMNet [58] | ResNeXt101 | 29.7 | 50.0 | 30.9 | 21.2 | 41.8 | 42.9 |
| CGMDet [59] | CSPDarknet | 29.3 | 50.9 | 29.4 | 20.2 | 40.6 | 47.4 |
| HawkNet [60] | ResNet50 | 25.6 | 44.3 | 25.8 | 19.9 | 36.0 | 39.1 |
| QueryDet [61] | CSPDarknet | 28.3 | 48.1 | 28.8 | – | – | – |
| DMNet [62] | ResNet101 | 28.5 | 48.1 | 29.4 | 20.0 | 39.7 | 57.1 |
| UAV-DETR [63] | ResNet50 | 31.5 | 51.1 | – | – | – | – |
| RT-DETR [38] | ResNet50 | 28.4 | 47.0 | – | – | – | – |
| D-DETR [64] | ResNet50 | 27.1 | 42.2 | – | – | – | – |
| YOLC [65] | ResNet101 | 28.9 | 51.4 | 28.3 | 20.1 | 41.6 | 47.3 |
| MCSA-Net | CSPDarknet | 32.8 | 52.2 | 34.1 | 21.4 | 43.7 | 48.4 |
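The mAP, mAP50, mAP75 and mAPsmall/mAPmedium/mAPlarge columns follow the naming of COCO-style evaluation. Assuming the standard COCO definitions, mAP averages AP over ten IoU thresholds (0.50 to 0.95 in steps of 0.05), mAP50 and mAP75 each use a single threshold, and the size buckets split objects at areas of 32² and 96² pixels. The sketch below illustrates only those conventions. The official pycocotools evaluator applies the size ranges to each annotation's `area` field and handles crowd regions and detection limits; here box area is used as a simplifying assumption.

```python
import numpy as np

# COCO-style IoU thresholds 0.50, 0.55, ..., 0.95; "mAP" averages AP over all ten,
# while "mAP50" and "mAP75" use a single threshold each.
IOU_THRESHOLDS = np.linspace(0.5, 0.95, 10)


def box_iou(a, b) -> float:
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def thresholds_passed(iou: float) -> int:
    """How many of the ten thresholds would count this match as a true positive."""
    return int(np.sum(iou >= IOU_THRESHOLDS))


def size_bucket(box) -> str:
    """Size category behind mAPsmall / mAPmedium / mAPlarge (box area in pixels^2)."""
    area = (box[2] - box[0]) * (box[3] - box[1])
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"


iou = box_iou((0, 0, 10, 10), (0, 0, 10, 7.7))
print(round(iou, 2), thresholds_passed(iou))  # 0.77 6
print(size_bucket((0, 0, 20, 20)), size_bucket((0, 0, 50, 50)), size_bucket((0, 0, 100, 100)))
# small medium large
```

A match with IoU 0.77 is a true positive for mAP50 and mAP75 but only for six of the ten thresholds behind mAP. This is why mAP is always the lowest of the three accuracy columns.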

| Model | Parameters (M) | FLOPs (G) | mAP | mAP50 |
|---|---|---|---|---|
| Sparse DETR [66] | 40.9 | 121.0 | 27.3 | 42.5 |
| RT-DETR-R18 [38] | 20.0 | 60.0 | 26.7 | 44.6 |
| RT-DETR-R50 [38] | 42.0 | 136.0 | 28.4 | 47.0 |
| UAV-DETR-R18 [63] | 20.0 | 77.0 | 29.8 | 48.8 |
| UAV-DETR-R50 [63] | 42.0 | 170.0 | 31.5 | 51.1 |
| MCSA-Net | 14.1 | 85.5 | 32.8 | 52.2 |

| Model | mAP | mAP50 | FPS | Parameters (M) | FLOPs (G) |
|---|---|---|---|---|---|
| YOLOv5n | 22.8 | 38.3 | 32.1 | 2.5 | 7.1 |
| YOLOv5s | 28.4 | 46.3 | 25.7 | 9.0 | 23.9 |
| YOLOv8n | 23.9 | 39.8 | 31.5 | 3.0 | 8.1 |
| YOLOv8s | 29.4 | 47.7 | 24.3 | 11.1 | 28.5 |
| MCSA-Net | 32.8 | 52.2 | 10.6 | 14.1 | 85.5 |

| Methods | Backbone | mAP50 | mAP | mAP75 | mAPsmall | mAPmedium | mAPlarge |
|---|---|---|---|---|---|---|---|
| DMNet [62] | ResNet50 | 24.6 | 14.7 | 16.3 | 9.3 | 26.2 | 35.2 |
| ClusDet [56] | ResNet50 | 26.5 | 13.7 | 12.5 | 9.1 | 25.1 | 31.2 |
| YOLOv8 [54] | CSPDarknet | 28.2 | 16.2 | 16.8 | 10.7 | 28.1 | 25.3 |
| FRCNN [9] | ResNet50 | 23.4 | 11.0 | 8.4 | 8.1 | 20.2 | 26.5 |
| CenterNet [67] | Hourglass104 | 26.7 | 13.2 | 11.8 | 7.8 | 26.6 | 13.9 |
| CEASC [57] | ResNet18 | 30.9 | 17.1 | 17.8 | – | – | – |
| AMRNet [68] | ResNet50 | 30.4 | 18.2 | 19.8 | 10.3 | 31.3 | 33.5 |
| GLSAN [69] | ResNet50 | 28.1 | 17.0 | 18.8 | – | – | – |
| CZDDet [70] | ResNet50 | 35.5 | 19.3 | 21.3 | 13.0 | 31.3 | 36.0 |
| QueryDet [61] | ResNet50 | 36.1 | 18.9 | 20.6 | 12.9 | 30.9 | 25.6 |
| CDMNet [58] | ResNet50 | 35.5 | 20.7 | 22.4 | 13.9 | 33.5 | 19.8 |
| MHA-YOLO [71] | CSPDarknet | 37.2 | 21.9 | 21.5 | 14.3 | 34.2 | 37.2 |
| MCSA-Net | CSPDarknet | 35.7 | 23.6 | 28.0 | 20.2 | 29.3 | 28.9 |

| Method | mAP (Origin) | mAP (Night) | mAP (Blur) |
|---|---|---|---|
| Baseline | 29.4 | 25.2 (↓ 4.2) | 18.9 (↓ 10.5) |
| MCSA-Net | 35.7 | 32.1 (↓ 3.6) | 25.6 (↓ 10.1) |
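The ↓ figures are absolute differences in mAP points. Because the two models start from different Origin scores, the drop relative to each model's own Origin score is a complementary way to compare robustness. The short script below is only an arithmetic aid built on the table's numbers, not part of the paper's evaluation.

```python
# mAP values copied from the Night/Blur degradation table.
results = {
    "Baseline": {"Origin": 29.4, "Night": 25.2, "Blur": 18.9},
    "MCSA-Net": {"Origin": 35.7, "Night": 32.1, "Blur": 25.6},
}


def drops(row: dict) -> dict:
    """Absolute drop (mAP points) and relative drop (% of Origin) per condition."""
    base = row["Origin"]
    return {
        cond: (round(base - value, 1), round(100 * (base - value) / base, 1))
        for cond, value in row.items()
        if cond != "Origin"
    }


for name, row in results.items():
    print(name, drops(row))
```

The absolute drops match the ↓ values in the table. In relative terms, MCSA-Net loses about 10.1% of its Origin mAP under Night and 28.3% under Blur, versus 14.3% and 35.7% for the baseline.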

| Methods | Backbone | mAP | mAP50 | mAP75 | mAPsmall | mAPmedium | mAPlarge |
|---|---|---|---|---|---|---|---|
| RetinaNet [53] | ResNeXt101 | 40.8 | 61.1 | 44.1 | 24.1 | 44.2 | 51.2 |
| FRCNN [9] | ResNet101 | 34.9 | 55.7 | 37.4 | 15.6 | 38.7 | 50.9 |
| FCOS [16] | ResNeXt101 | 44.7 | 64.1 | 48.4 | 27.6 | 47.5 | 55.6 |
| QueryDet [61] | ResNet50 | 39.3 | 59.6 | 41.9 | 24.9 | 42.3 | 51.1 |
| YOLOv8 [54] | CSPDarknet | 41.3 | 59.3 | 49.5 | 26.7 | 38.5 | 47.8 |
| FSAF [72] | ResNeXt101 | 42.9 | 63.8 | 46.3 | 26.6 | 46.2 | 52.7 |
| SMCA-DETR [73] | ResNet50 | 45.6 | 65.5 | 49.1 | 25.9 | 49.3 | 62.6 |
| D-DETR [64] | ResNet50 | 46.2 | 65.2 | 50.0 | 28.8 | 49.2 | 61.7 |
| HRDNet [74] | ResNet101 | 47.4 | 66.9 | 51.8 | 32.1 | 50.5 | 55.8 |
| MCSA-Net | CSPDarknet | 48.3 | 68.7 | 52.6 | 34.5 | 51.2 | 55.6 |

| Methods | PL | BD | BR | GTF | SV | LV | SH | TC | BC | ST | SBF | RA | HA | SP | HC | mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SCRDet [75] | 89.98 | 80.65 | 52.09 | 68.36 | 68.36 | 60.32 | 72.41 | 90.85 | 87.94 | 86.86 | 65.02 | 66.68 | 66.25 | 68.24 | 65.21 | 72.67 |
| SCRDet++ [76] | 90.01 | 82.32 | 61.94 | 68.62 | 69.92 | 81.17 | 78.83 | 90.86 | 86.32 | 85.10 | 65.10 | 61.12 | 77.69 | 80.68 | 64.25 | 76.24 |
| RoI-Trans [77] | 88.64 | 78.52 | 43.44 | 75.92 | 68.81 | 73.68 | 83.59 | 90.74 | 77.27 | 81.46 | 58.39 | 53.54 | 62.83 | 58.93 | 47.67 | 69.56 |
| AO2-DETR [78] | 87.99 | 79.46 | 45.74 | 66.64 | 78.90 | 73.90 | 73.30 | 90.40 | 80.55 | 85.89 | 55.19 | 63.63 | 51.83 | 70.15 | 60.04 | 70.91 |
| ARS-DETR [79] | 86.61 | 77.26 | 48.84 | 66.76 | 78.38 | 78.96 | 87.40 | 90.61 | 82.76 | 82.19 | 54.02 | 62.61 | 72.64 | 72.80 | 64.96 | 73.79 |
| R3Det [80] | 89.80 | 83.77 | 48.11 | 66.77 | 78.76 | 83.27 | 87.84 | 90.82 | 85.38 | 85.51 | 65.67 | 62.68 | 67.53 | 78.56 | 72.62 | 76.47 |
| Gliding Vertex [81] | 89.64 | 85.00 | 52.26 | 77.34 | 73.01 | 73.14 | 86.82 | 90.74 | 79.02 | 86.81 | 59.55 | 70.91 | 72.94 | 70.68 | 57.32 | 75.02 |
| CADNet [82] | 87.80 | 82.40 | 49.40 | 73.50 | 71.10 | 63.50 | 76.60 | 90.90 | 79.20 | 73.30 | 48.40 | 60.90 | 62.00 | 67.00 | 62.20 | 69.90 |
| O-RCNN [83] | 89.46 | 82.12 | 54.78 | 70.86 | 78.93 | 83.00 | 88.20 | 90.90 | 87.50 | 84.68 | 63.97 | 67.69 | 74.94 | 64.84 | 52.28 | 75.87 |
| MCSA-Net | 90.55 | 81.91 | 48.54 | 72.58 | 79.57 | 78.63 | 87.81 | 90.04 | 88.19 | 88.64 | 58.64 | 68.36 | 77.66 | 81.81 | 67.36 | 77.35 |

Category abbreviations: PL, plane; BD, baseball diamond; BR, bridge; GTF, ground track field; SV, small vehicle; LV, large vehicle; SH, ship; TC, tennis court; BC, basketball court; ST, storage tank; SBF, soccer-ball field; RA, roundabout; HA, harbor; SP, swimming pool; HC, helicopter.
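On DOTA the mAP column is the unweighted mean of the fifteen per-class APs, so it can be recomputed directly from a row. The snippet below does this for the MCSA-Net row; the constant names are illustrative.

```python
# Per-class AP for MCSA-Net, copied from the DOTA table (column order as in the header).
CLASSES = ["PL", "BD", "BR", "GTF", "SV", "LV", "SH", "TC",
           "BC", "ST", "SBF", "RA", "HA", "SP", "HC"]
MCSA_NET_AP = [90.55, 81.91, 48.54, 72.58, 79.57, 78.63, 87.81, 90.04,
               88.19, 88.64, 58.64, 68.36, 77.66, 81.81, 67.36]


def dota_map(per_class_ap):
    """mAP as the unweighted mean of the per-class APs."""
    if len(per_class_ap) != len(CLASSES):
        raise ValueError("expected one AP value per DOTA category")
    return sum(per_class_ap) / len(per_class_ap)


print(round(dota_map(MCSA_NET_AP), 2))  # 77.35
print(min(zip(MCSA_NET_AP, CLASSES)))   # (48.54, 'BR')
```

Because every class carries equal weight, a weak category such as bridge (48.54) pulls the mean down as much as a strong one like plane (90.55) pushes it up.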

| Noise Type | MCSA-Net mAP | MCSA-Net mAP50 | MCSA-Net mAP75 | YOLOv8s mAP | YOLOv8s mAP50 | YOLOv8s mAP75 |
|---|---|---|---|---|---|---|
| Origin | 32.8 | 52.2 | 34.1 | 29.4 | 47.7 | 30.7 |
| Pixel dropout | 28.8 | 47.2 | 29.7 | 25.4 | 42.6 | 25.9 |
| Gaussian noise | 21.8 | 36.4 | 22.4 | 17.4 | 29.5 | 17.6 |
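The table does not state how the pixel-dropout and Gaussian-noise corruptions were generated or how strong they were. A common way to simulate them is sketched below. The function names, the dropout probability p = 0.1 and the noise level sigma = 25 are hypothetical choices, not the paper's settings.

```python
import numpy as np


def pixel_dropout(img: np.ndarray, p: float, rng: np.random.Generator) -> np.ndarray:
    """Set each pixel (all channels) to zero independently with probability p."""
    keep = rng.random(img.shape[:2]) >= p
    return img * keep[..., None].astype(img.dtype)


def gaussian_noise(img: np.ndarray, sigma: float, rng: np.random.Generator) -> np.ndarray:
    """Add zero-mean Gaussian noise (std sigma, in 0-255 units) and clip to uint8."""
    noisy = img.astype(np.float32) + rng.normal(0.0, sigma, img.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)


rng = np.random.default_rng(0)
img = np.full((64, 64, 3), 200, dtype=np.uint8)  # stand-in for a real aerial image
dropped = pixel_dropout(img, p=0.1, rng=rng)       # hypothetical p
noisy = gaussian_noise(img, sigma=25.0, rng=rng)   # hypothetical sigma
print((dropped[..., 0] == 0).mean(), noisy.std())
```

Read against the table, pixel dropout costs both models the same 4.0 mAP points (32.8 to 28.8 and 29.4 to 25.4). Gaussian noise costs MCSA-Net 11.0 points and YOLOv8s 12.0 points.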

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, Z.; He, G.; Hu, Y. Multi-Level Contextual and Semantic Information Aggregation Network for Small Object Detection in UAV Aerial Images. Drones 2025, 9, 610. https://doi.org/10.3390/drones9090610
