RAEM-SLAM: A Robust Adaptive End-to-End Monocular SLAM Framework for AUVs in Underwater Environments

Abstract

Highlights

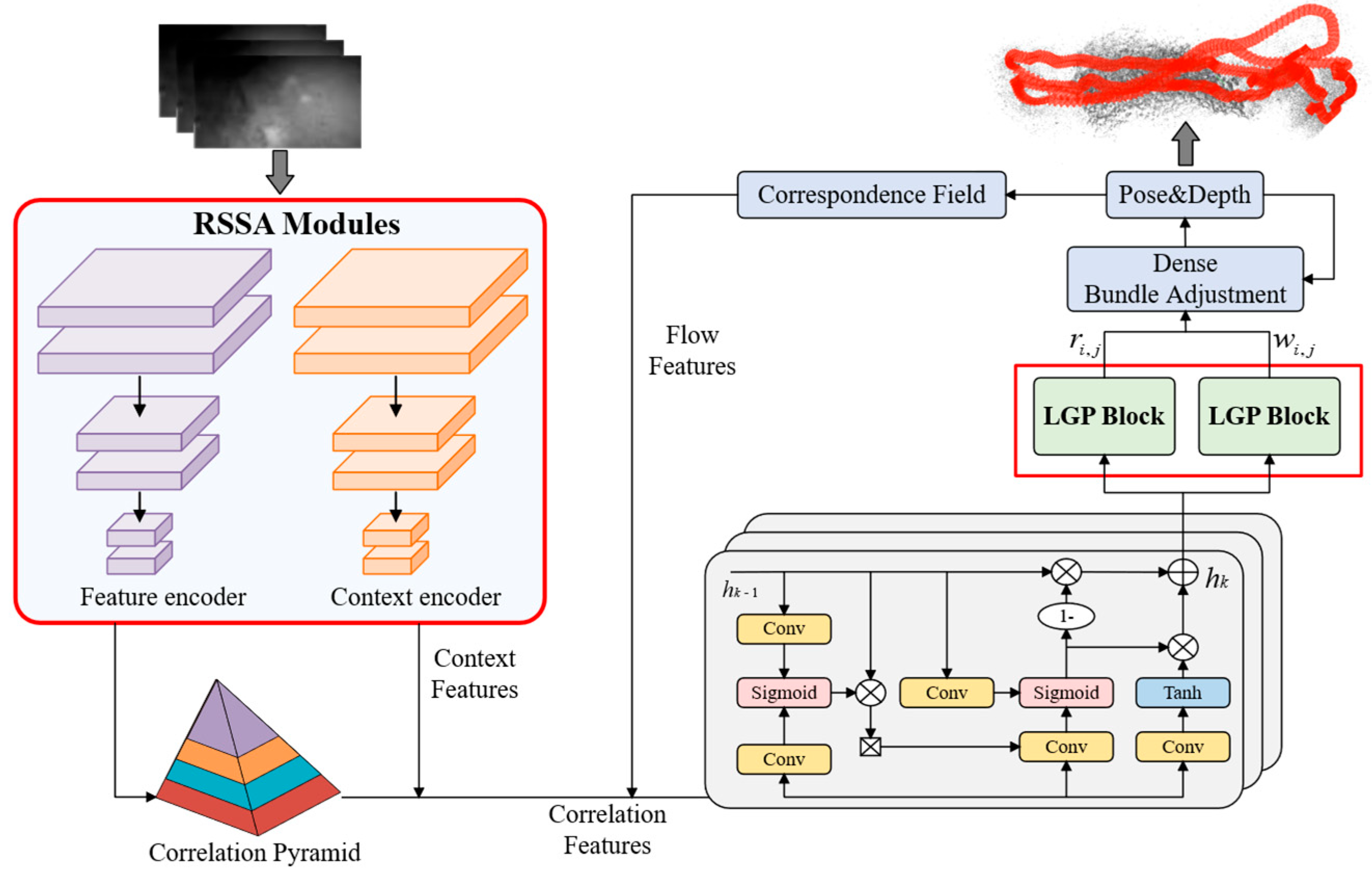

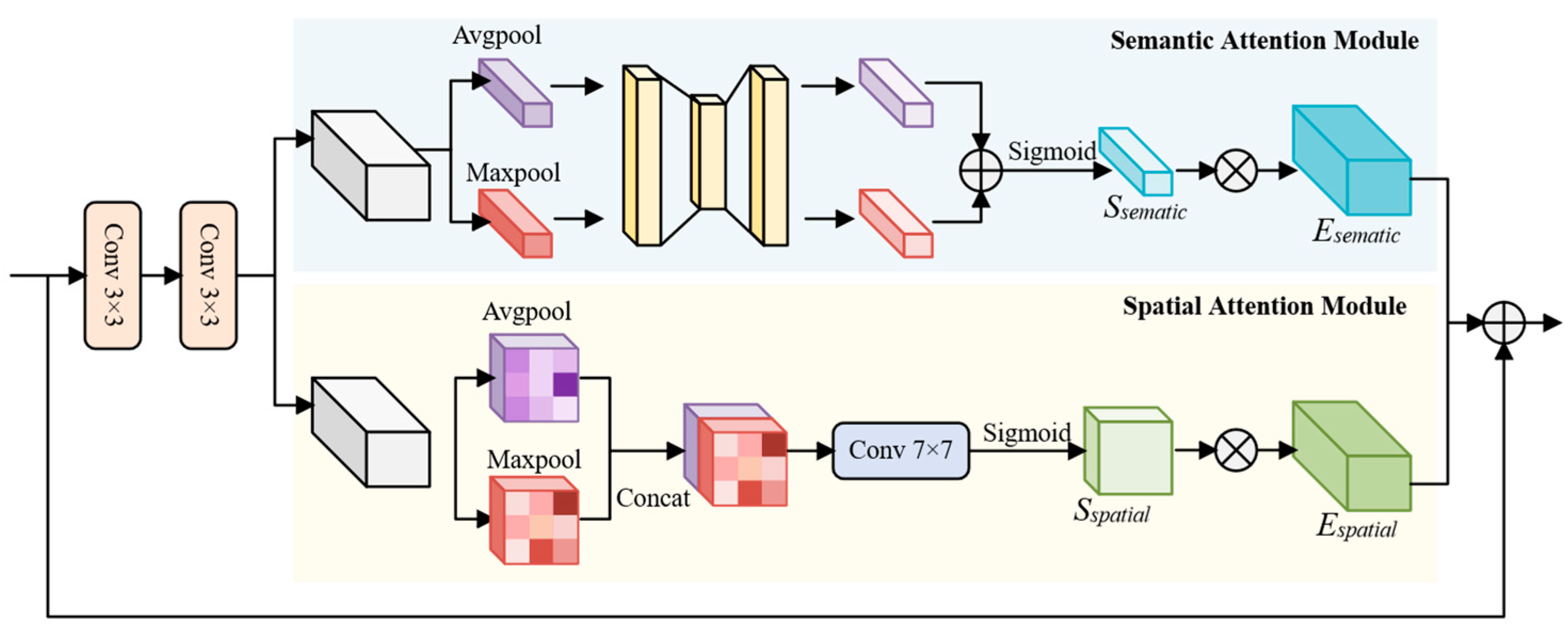

- We proposed RAEM-SLAM, a robust adaptive end-to-end monocular SLAM framework for AUVs in underwater environments. It integrates novel Residual Semantic–Spatial Attention Modules (RSSA), which make the learned features more robust to poor illumination and dynamic interference, and a Local–Global Perception Block (LGP) for multi-scale motion perception.

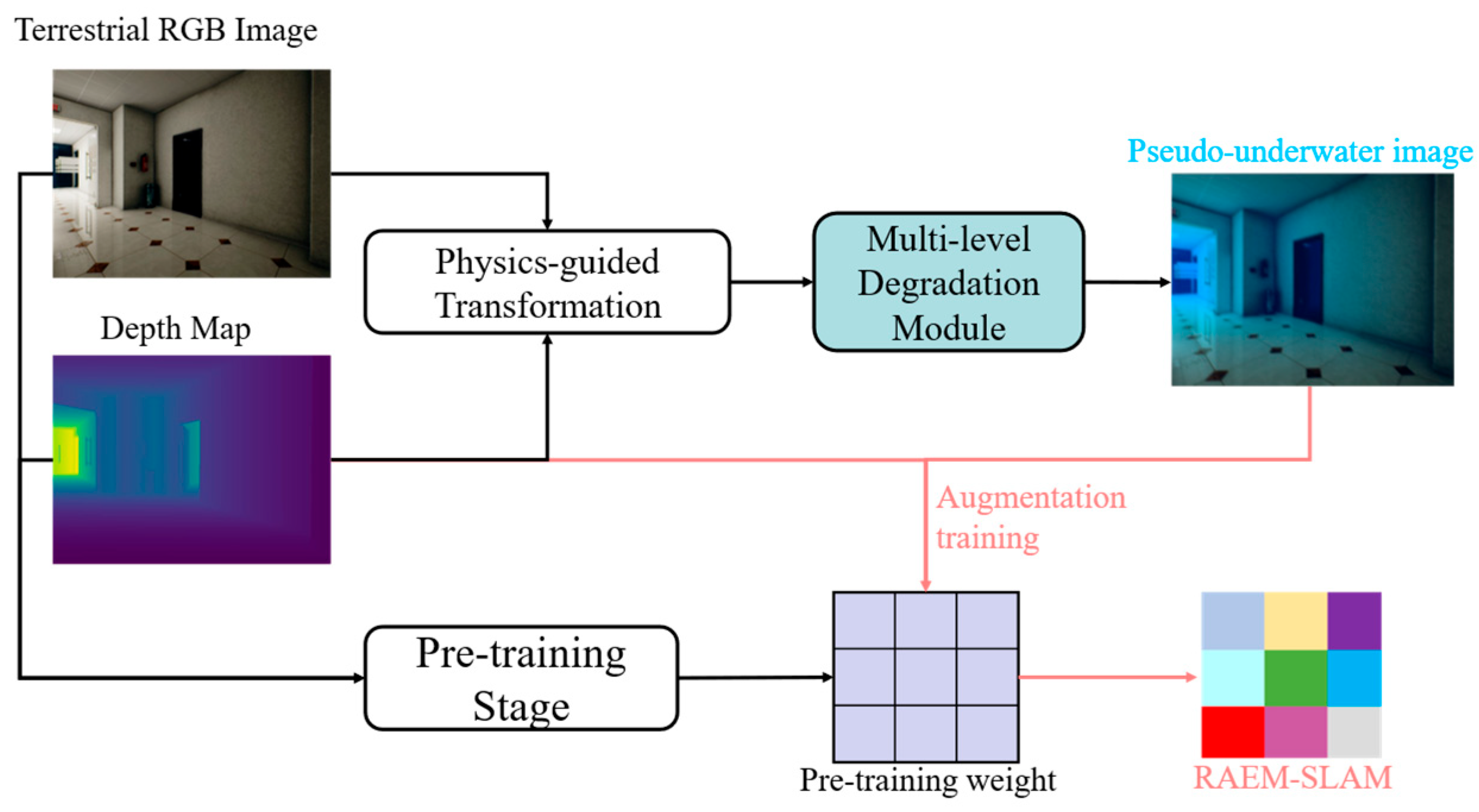

- We designed a Physics-guided Underwater Adaptive Augmentation (PUAA) method that dynamically converts terrestrial datasets into pseudo-underwater images by simulating light attenuation, scattering, and noise, bridging the domain gap for effective training.

- RAEM-SLAM, which integrates RSSA and LGP, enables accurate real-time trajectory estimation and mapping for AUVs in GPS-denied underwater environments, advancing autonomous AUV exploration of unknown regions (e.g., deep-sea archaeology and resource exploitation).

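- PUAA significantly improves the adaptability and robustness of the system in complex underwater environments through realistic domain transformation; in the ablation study, removing PUAA raises the average error over the ten test sequences from 0.084 to 0.303.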
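The highlights describe PUAA only qualitatively. A minimal sketch of the kind of physics-based conversion they refer to is shown below, assuming the common simplified underwater image-formation model: each colour channel of the clean image is attenuated exponentially with range, a veiling (backscatter) light is added, and sensor noise is applied. The coefficient values, the function name `pseudo_underwater`, and the requirement of a per-pixel depth map (synthetic datasets such as TartanAir provide one) are assumptions for illustration, not the authors' PUAA settings. The reference list cites Jerlov water types, so a faithful version would presumably draw its coefficients from such tables and randomise them per sample.

```python
import numpy as np

# Hypothetical per-channel attenuation coefficients (1/m) for R, G, B.
# Red is attenuated most strongly in water; these are illustrative
# placeholders, not the coefficients used by PUAA.
BETA_RGB = np.array([0.60, 0.12, 0.08])
# Hypothetical veiling-light (backscatter) colour, bluish-green.
BACKLIGHT_RGB = np.array([0.05, 0.35, 0.45])


def pseudo_underwater(image, depth, beta=BETA_RGB, backlight=BACKLIGHT_RGB,
                      noise_std=0.01, rng=None):
    """Convert a terrestrial RGB image into a pseudo-underwater one.

    image: float array (H, W, 3) with values in [0, 1]
    depth: float array (H, W), scene range in metres
    Simplified image-formation model (an assumption, see lead-in):
        I = J * t + B * (1 - t),  with transmission t = exp(-beta * d),
    followed by additive Gaussian sensor noise and clipping to [0, 1].
    """
    rng = np.random.default_rng() if rng is None else rng
    # Per-pixel, per-channel transmission: (H, W, 1) * (3,) -> (H, W, 3)
    t = np.exp(-depth[..., None] * beta)
    attenuated = image * t                 # direct signal, attenuated with range
    backscatter = backlight * (1.0 - t)    # veiling light added by scattering
    noise = rng.normal(0.0, noise_std, image.shape)
    return np.clip(attenuated + backscatter + noise, 0.0, 1.0)
```

Drawing `beta`, `backlight`, and `noise_std` at random for every training sample is one way to make such an augmentation "dynamic". With `noise_std=0.0` and zero depth the function returns the input unchanged, while distant white surfaces turn blue-green because the red channel decays fastest.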


1. Introduction

- We propose RAEM-SLAM, a robust adaptive end-to-end monocular SLAM framework specifically designed for AUVs in underwater environments.

- We propose a physics-guided underwater adaptive augmentation method that dynamically transforms terrestrial scene datasets into physically realistic pseudo-underwater images for training RAEM-SLAM, enhancing its adaptability in complex underwater environments.

- We design and integrate a Residual Semantic–Spatial Attention Module into the feature extraction network to enhance the accuracy and stability of feature learning in underwater scenes.

- We embed a Local–Global Perception Block in the state update stage. This block fuses multi-scale local details with global information to strengthen the system's perception of AUV motion, further improving trajectory estimation accuracy.
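The contributions above describe RSSA and LGP only at block level. The NumPy sketch below illustrates two generic ideas they appear to build on: a spatial attention map applied through a residual connection (`out = x + x * a`), and a fusion of multi-scale local context with global self-attention. It is not the authors' architecture. The fixed scalar weights, the box filters standing in for learned multi-scale convolutions, the identity query/key/value projections, and all function names are simplifying assumptions.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def residual_spatial_attention(feat, w_mean=1.0, w_max=1.0, bias=0.0):
    """CBAM-style spatial attention with a residual connection (illustrative).

    feat: (H, W, C). A per-pixel weight map is computed from the channel-wise
    mean and max (a 1x1 "conv" with hypothetical scalar weights); the
    re-weighted features are added back to the input: out = x + x * a.
    """
    desc = w_mean * feat.mean(axis=-1) + w_max * feat.max(axis=-1) + bias
    attn = sigmoid(desc)[..., None]          # (H, W, 1), values in (0, 1)
    return feat + feat * attn


def avg_pool_same(feat, k):
    """k x k box filter (k odd) with edge padding; keeps the (H, W, C) shape."""
    pad = k // 2
    padded = np.pad(feat, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    h, w, _ = feat.shape
    out = np.zeros_like(feat, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)


def global_self_attention(feat):
    """Single-head scaled dot-product attention over all H*W positions
    (identity Q/K/V projections for brevity)."""
    h, w, c = feat.shape
    tokens = feat.reshape(h * w, c)
    scores = tokens @ tokens.T / np.sqrt(c)
    scores -= scores.max(axis=-1, keepdims=True)   # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # softmax over positions
    return (weights @ tokens).reshape(h, w, c)


def local_global_fusion(feat, scales=(1, 3, 5)):
    """Residual sum of the input, averaged multi-scale local context and global context."""
    local = sum(avg_pool_same(feat, k) for k in scales) / len(scales)
    return feat + local + global_self_attention(feat)
```

In a trainable module the scalar weights and box filters would be replaced by learned convolutions and the attention by learned projections. The residual form keeps the original features available even where the attention weights are small.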

2. Related Work

2.1. Deep Learning in Visual SLAM

2.2. Underwater Visual SLAM

2.3. Semantic–Positional Feature Fusion

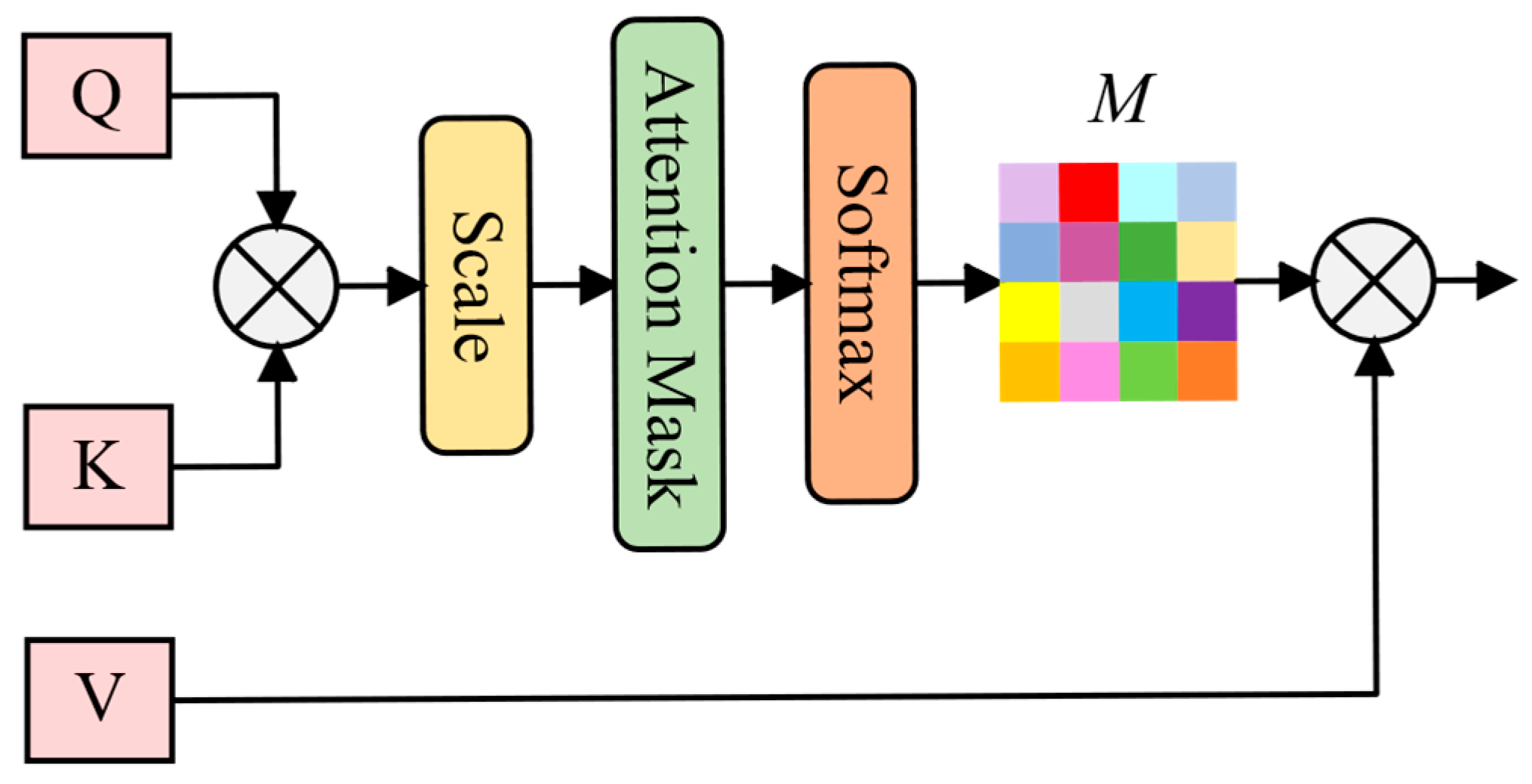

2.4. Local–Global Feature Fusion

3. Method

3.1. Overall Architecture

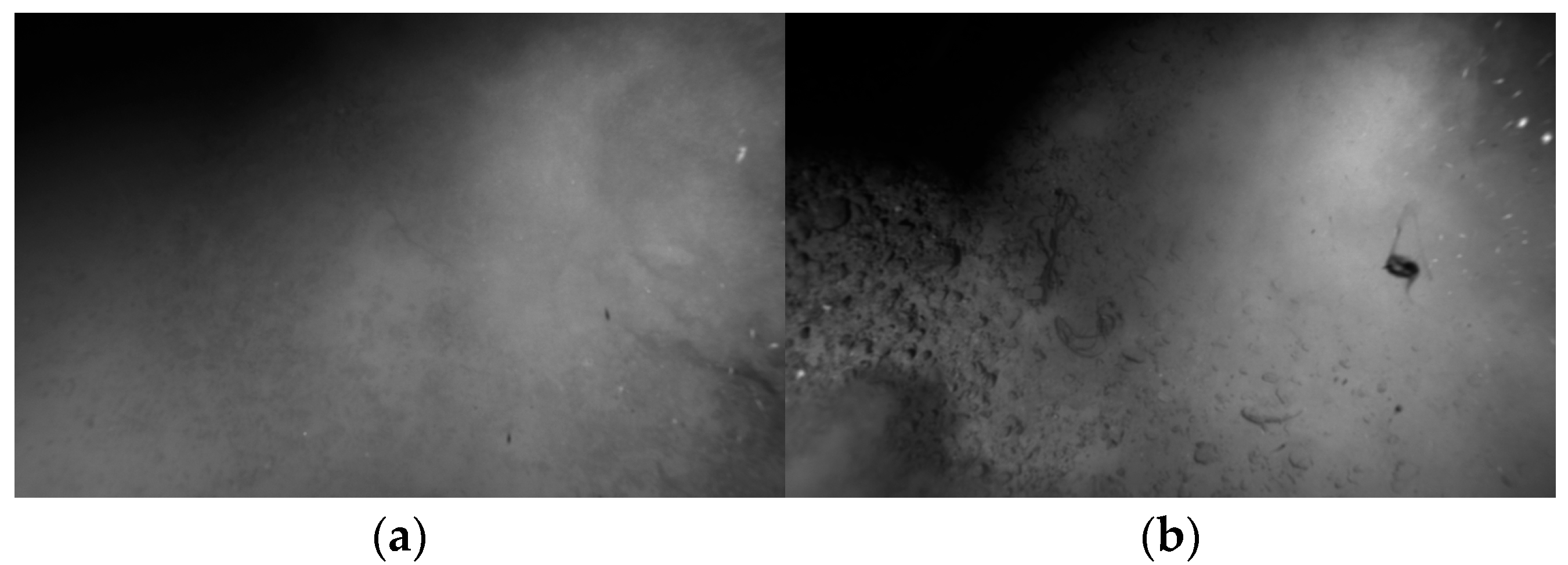

3.2. Physics-Guided Underwater Adaptive Augmentation

3.3. Residual Semantic–Spatial Attention Module

3.4. Local–Global Perception Block

3.4.1. Multi-Scale Local Perception

3.4.2. Global Perception Block

3.5. Loss Function

4. Experiments

4.1. Evaluation Metrics

4.2. Implementation Details

4.3. Ablation Study and Computational Efficiency

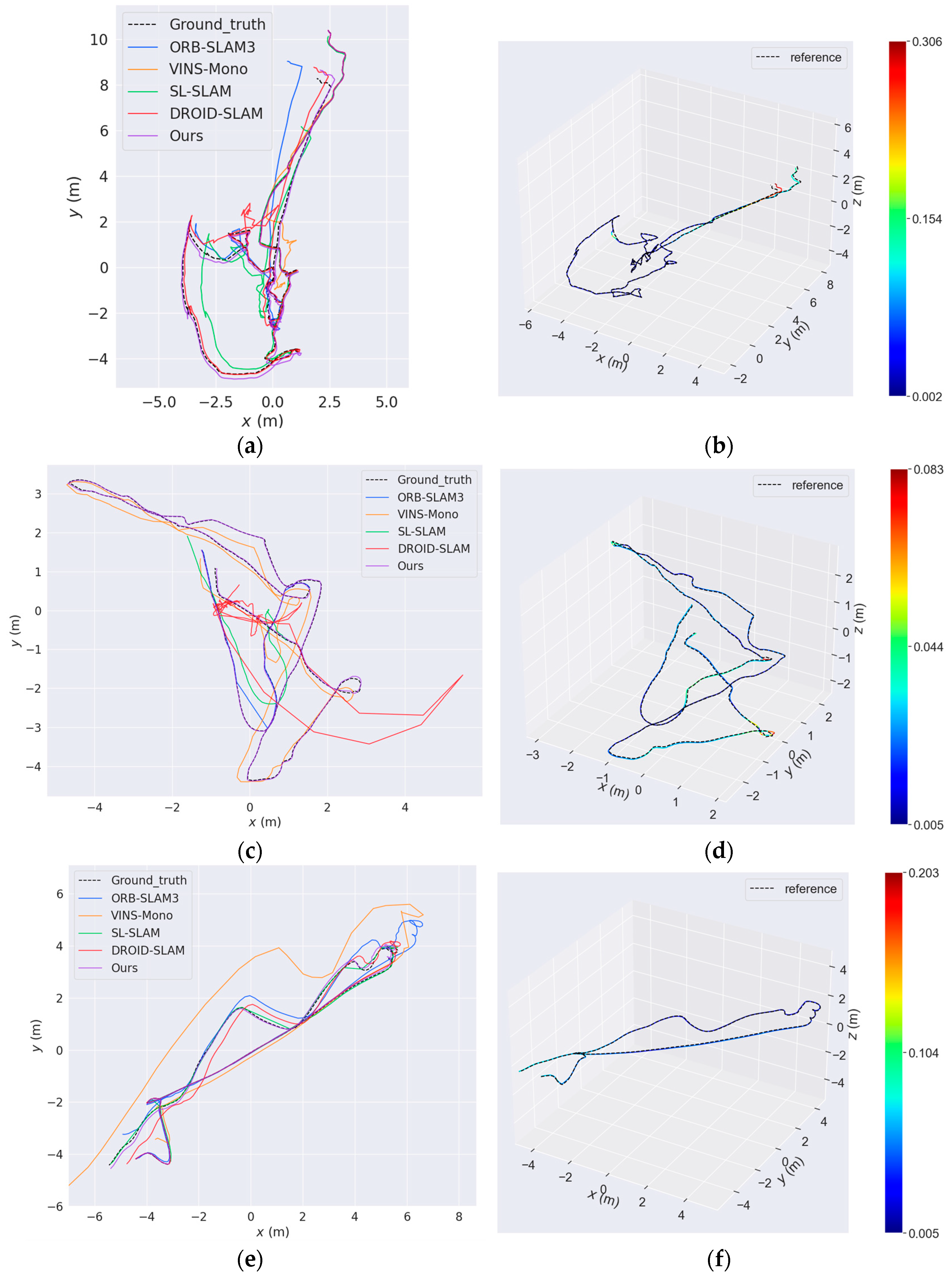

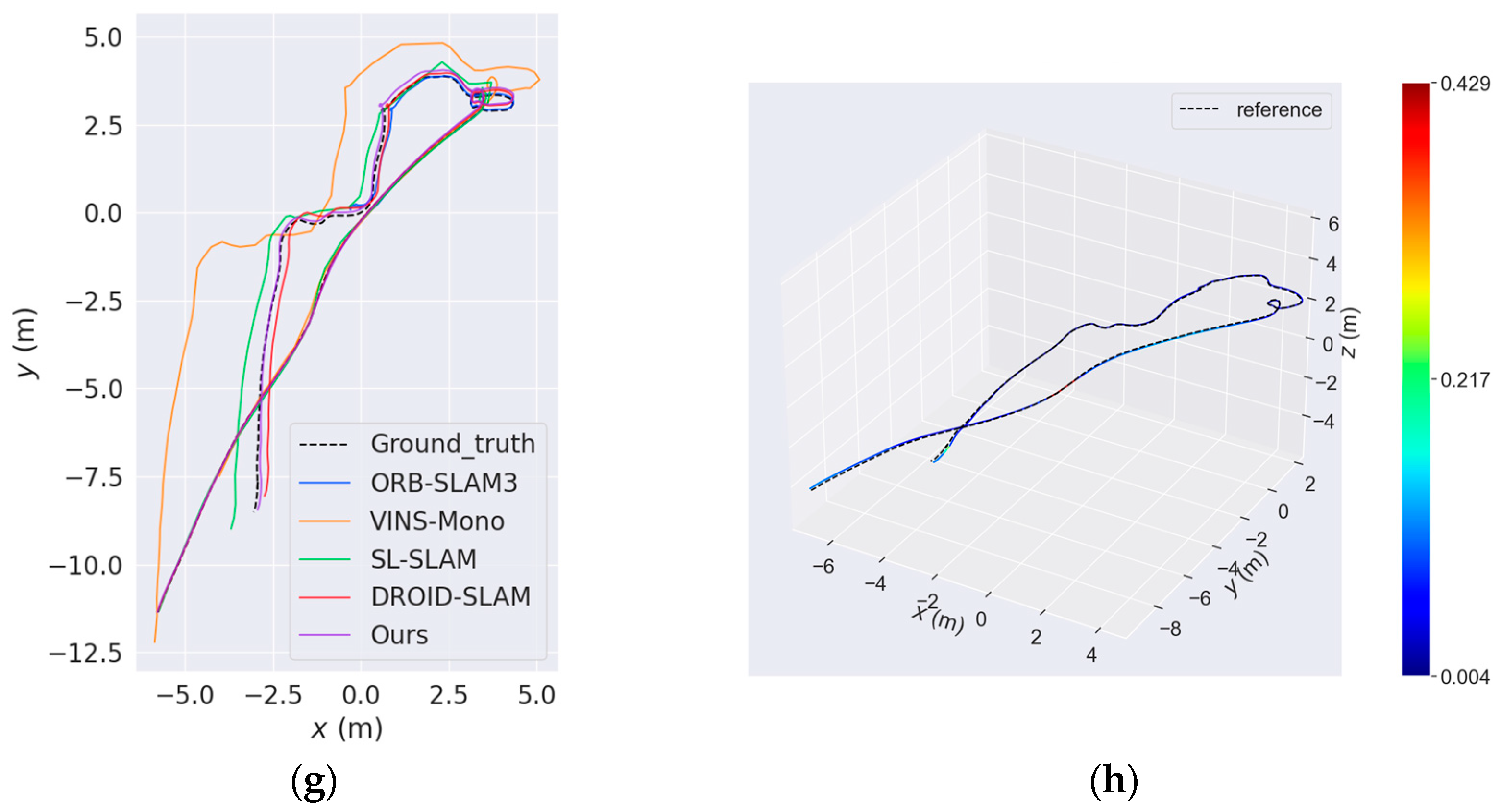

4.4. Comparative Experiment

4.4.1. Land Scene

4.4.2. Underwater Scene

- AQUALOC dataset

- 2.

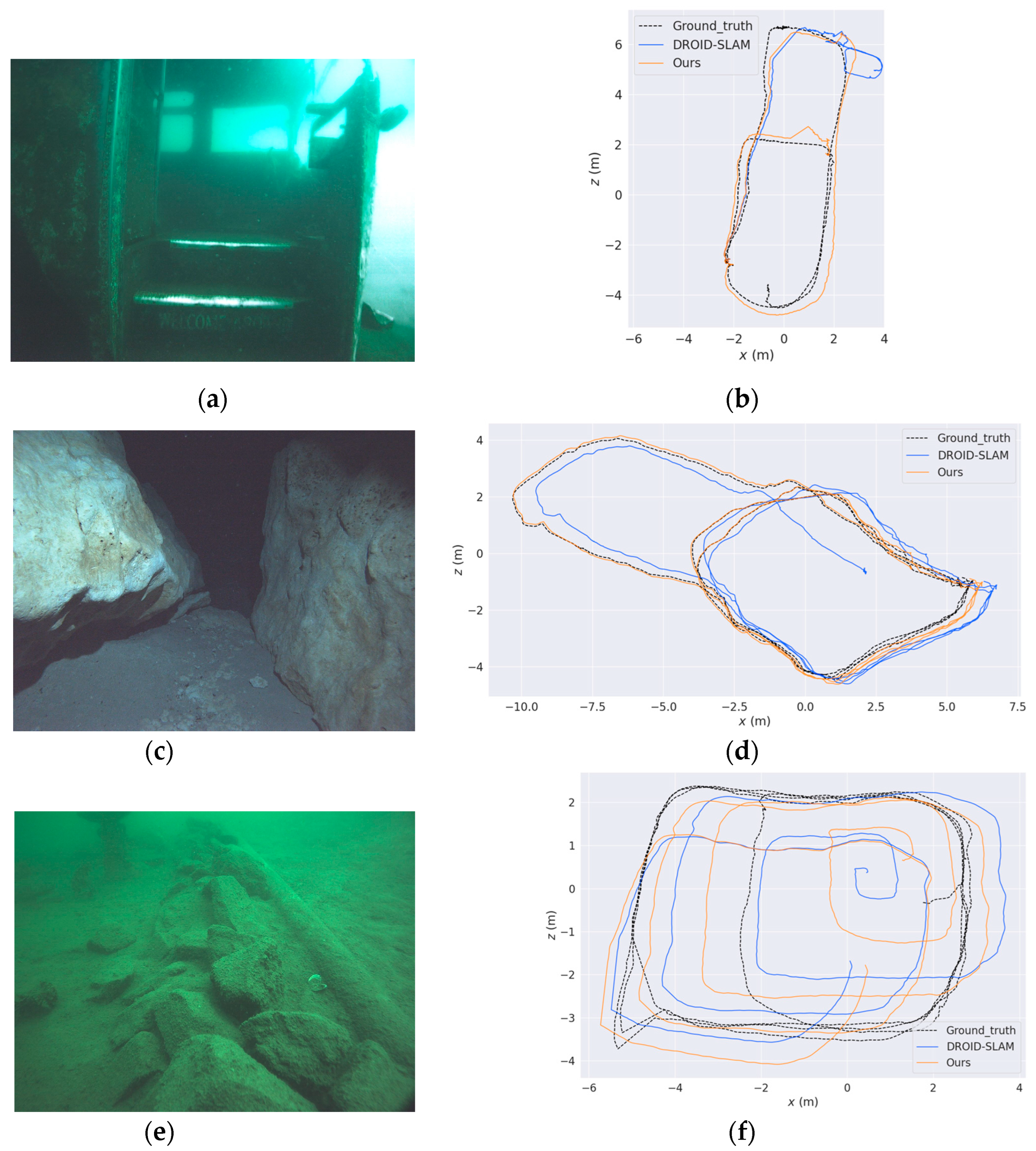

- AFRL dataset

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Palomer, A.; Ridao, P.; Ribas, D. Inspection of an Underwater Structure Using Point-cloud SLAM with an AUV and a Laser Scanner. J. Field Robot. 2019, 36, 1333–1344.

2. Nauert, F.; Kampmann, P. Inspection and Maintenance of Industrial Infrastructure with Autonomous Underwater Robots. Front. Robot. AI 2023, 10, 1240276.

3. Allotta, B.; Costanzi, R.; Ridolfi, A.; Colombo, C.; Bellavia, F.; Fanfani, M.; Pazzaglia, F.; Salvetti, O.; Moroni, D.; Pascali, M.A.; et al. The ARROWS Project: Adapting and Developing Robotics Technologies for Underwater Archaeology. IFAC-Pap. 2015, 48, 194–199.

4. González-García, J.; Gómez-Espinosa, A.; Cuan-Urquizo, E.; García-Valdovinos, L.G.; Salgado-Jiménez, T.; Cabello, J.A.E. Autonomous Underwater Vehicles: Localization, Navigation, and Communication for Collaborative Missions. Appl. Sci. 2020, 10, 1256.

5. Zhang, B.; Ji, D.; Liu, S.; Zhu, X.; Xu, W. Autonomous Underwater Vehicle Navigation: A Review. Ocean. Eng. 2023, 273, 113861.

6. Civera, J.; Grasa, O.G.; Davison, A.J.; Montiel, J.M.M. 1-Point RANSAC for Extended Kalman Filtering: Application to Real-time Structure from Motion and Visual Odometry. J. Field Robot. 2010, 27, 609–631.

7. Klein, G.; Murray, D. Parallel Tracking and Mapping for Small AR Workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13 November 2007; pp. 225–234.

8. Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163.

9. Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-Scale Direct Monocular SLAM. In Proceedings of the Computer Vision—ECCV 2014, 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 834–849.

10. Engel, J.; Koltun, V.; Cremers, D. Direct Sparse Odometry. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 611–625.

11. Köser, K.; Frese, U. Challenges in Underwater Visual Navigation and SLAM. In AI Technology for Underwater Robots; Kirchner, F., Straube, S., Kühn, D., Hoyer, N., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 125–135. ISBN 978-3-030-30683-0.

12. Quattrini Li, A.; Coskun, A.; Doherty, S.M.; Ghasemlou, S.; Jagtap, A.S.; Modasshir, M.; Rahman, S.; Singh, A.; Xanthidis, M.; O’Kane, J.M.; et al. Experimental Comparison of Open Source Vision-Based State Estimation Algorithms. In Proceedings of the 2016 International Symposium on Experimental Robotics, Tokyo, Japan, 3–6 October 2016; Kulić, D., Nakamura, Y., Khatib, O., Venture, G., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 775–786.

13. Joshi, B.; Rahman, S.; Kalaitzakis, M.; Cain, B.; Johnson, J.; Xanthidis, M.; Karapetyan, N.; Hernandez, A.; Li, A.Q.; Vitzilaios, N.; et al. Experimental Comparison of Open Source Visual-Inertial-Based State Estimation Algorithms in the Underwater Domain. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019.

14. Tang, J.; Ericson, L.; Folkesson, J.; Jensfelt, P. GCNv2: Efficient Correspondence Prediction for Real-Time SLAM. IEEE Robot. Autom. Lett. 2019, 4, 3505–3512.

15. Luo, H.; Liu, Y.; Guo, C.; Li, Z.; Song, W. SuperVINS: A Real-Time Visual-Inertial SLAM Framework for Challenging Imaging Conditions. IEEE Sens. J. 2025, 25, 26042–26050.

16. Wang, Y.; Xu, B.; Fan, W.; Xiang, C. A Robust and Efficient Loop Closure Detection Approach for Hybrid Ground/Aerial Vehicles. Drones 2023, 7, 135.

17. Chen, C.; Wang, B.; Lu, C.X.; Trigoni, N.; Markham, A. Deep Learning for Visual Localization and Mapping: A Survey. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 1–21.

18. Teed, Z.; Deng, J. DROID-SLAM: Deep Visual SLAM for Monocular, Stereo, and RGB-D Cameras. Adv. Neural Inf. Process. Syst. 2021, 34, 16558–16569.

19. Zhu, Z.; Peng, S.; Larsson, V.; Xu, W.; Bao, H.; Cui, Z.; Oswald, M.R.; Pollefeys, M. NICE-SLAM: Neural Implicit Scalable Encoding for SLAM. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 12776–12786.

20. Favorskaya, M.N. Deep Learning for Visual SLAM: The State-of-the-Art and Future Trends. Electronics 2023, 12, 2006.

21. Qi, Q.; Li, K.; Zheng, H.; Gao, X.; Hou, G.; Sun, K. SGUIE-Net: Semantic Attention Guided Underwater Image Enhancement with Multi-Scale Perception. IEEE Trans. Image Process. 2022, 31, 6816–6830.

22. Chen, T.; Wang, N.; Chen, Y.; Kong, X.; Lin, Y.; Zhao, H.; Karimi, H.R. Semantic Attention and Relative Scene Depth-Guided Network for Underwater Image Enhancement. Eng. Appl. Artif. Intell. 2023, 123, 106532.

23. Liang, J.; Xu, F.; Yu, S. A Multi-Scale Semantic Attention Representation for Multi-Label Image Recognition with Graph Networks. Neurocomputing 2022, 491, 14–23.

24. Qi, L.; Qin, X.; Gao, F.; Dong, J.; Gao, X. SAWU-Net: Spatial Attention Weighted Unmixing Network for Hyperspectral Images. IEEE Geosci. Remote Sens. Lett. 2023, 20, 5505205.

25. Zhang, X.; Liu, C.; Yang, D.; Song, T.; Ye, Y.; Li, K.; Song, Y. RFAConv: Innovating Spatial Attention and Standard Convolutional Operation. arXiv 2023, arXiv:2304.03198.

26. Bai, W.; Zhang, Y.; Wang, L.; Liu, W.; Hu, J.; Huang, G. SADGFeat: Learning Local Features with Layer Spatial Attention and Domain Generalization. Image Vis. Comput. 2024, 146, 105033.

27. Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

28. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

29. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

30. Chen, L.-C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking Atrous Convolution for Semantic Image Segmentation. arXiv 2017, arXiv:1706.05587.

31. Li, Y.; Chen, Y.; Wang, N.; Zhang, Z.-X. Scale-Aware Trident Networks for Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6053–6062.

32. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates, Inc.: New York, NY, USA, 2017; Volume 30.

33. Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. TransUNet: Transformers Make Strong Encoders for Medical Image Segmentation. arXiv 2021, arXiv:2102.04306.

34. Kim, H.; Yim, C. Swin Transformer Fusion Network for Image Quality Assessment. IEEE Access 2024, 12, 57741–57754.

35. Li, D.; Shi, X.; Long, Q.; Liu, S.; Yang, W.; Wang, F.; Wei, Q.; Qiao, F. DXSLAM: A Robust and Efficient Visual SLAM System with Deep Features. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020; pp. 4958–4965.

36. Bruno, H.M.S.; Colombini, E.L. LIFT-SLAM: A Deep-Learning Feature-Based Monocular Visual SLAM Method. Neurocomputing 2021, 455, 97–110.

37. DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperPoint: Self-Supervised Interest Point Detection and Description. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 337–33712.

38. Lindenberger, P.; Sarlin, P.-E.; Pollefeys, M. LightGlue: Local Feature Matching at Light Speed. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1 October 2023; pp. 17581–17592.

39. Peng, Q.; Xiang, Z.; Fan, Y.; Zhao, T.; Zhao, X. RWT-SLAM: Robust Visual SLAM for Highly Weak-Textured Environments. arXiv 2022, arXiv:2207.03539.

40. Xiao, Z.; Li, S. A Real-Time, Robust and Versatile Visual-SLAM Framework Based on Deep Learning Networks. arXiv 2024, arXiv:2405.03413.

41. Zhao, Z.; Wu, C. Light-SLAM: A Robust Deep-Learning Visual SLAM System Based on LightGlue under Challenging Lighting Conditions. IEEE Trans. Intell. Transp. Syst. 2025, 26, 9918–9931.

42. Shi, Y.; Li, R.; Shi, Y.; Liang, S. A Robust and Lightweight Loop Closure Detection Approach for Challenging Environments. Drones 2024, 8, 322.

43. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An Efficient Alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6 November 2011; pp. 2564–2571.

44. Wang, S.; Clark, R.; Wen, H.; Trigoni, N. DeepVO: Towards End-to-End Visual Odometry with Deep Recurrent Convolutional Neural Networks. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 2043–2050.

45. Li, R.; Wang, S.; Long, Z.; Gu, D. UnDeepVO: Monocular Visual Odometry Through Unsupervised Deep Learning. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 7286–7291.

46. Zacchini, L.; Bucci, A.; Franchi, M.; Costanzi, R.; Ridolfi, A. Mono Visual Odometry for Autonomous Underwater Vehicles Navigation. In Proceedings of the OCEANS 2019—Marseille, Marseille, France, 17–20 June 2019; pp. 1–5.

47. Ferrera, M.; Moras, J.; Trouvé-Peloux, P.; Creuze, V. Real-Time Monocular Visual Odometry for Turbid and Dynamic Underwater Environments. Sensors 2019, 19, 687.

48. Leonardi, M.; Stahl, A.; Brekke, E.F.; Ludvigsen, M. UVS: Underwater Visual SLAM—A Robust Monocular Visual SLAM System for Lifelong Underwater Operations. Auton. Robot. 2023, 47, 1367–1385.

49. Yang, J.; Gong, M.; Nair, G.; Lee, J.H.; Monty, J.; Pu, Y. Knowledge Distillation for Feature Extraction in Underwater VSLAM. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May 2023; pp. 5163–5169.

50. Burguera, A.; Bonin-Font, F.; Font, E.G.; Torres, A.M. Combining Deep Learning and Robust Estimation for Outlier-Resilient Underwater Visual Graph SLAM. J. Mar. Sci. Eng. 2022, 10, 511.

51. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.

52. Prudviraj, J.; Vishnu, C.; Mohan, C.K. M-FFN: Multi-Scale Feature Fusion Network for Image Captioning. Appl. Intell. 2022, 52, 14711–14723.

53. Wu, Y.; Yao, Q.; Fan, X.; Gong, M.; Ma, W.; Miao, Q. PANet: A Point-Attention Based Multi-Scale Feature Fusion Network for Point Cloud Registration. IEEE Trans. Instrum. Meas. 2023, 72, 1–13.

54. Wang, R.; Lei, T.; Cui, R.; Zhang, B.; Meng, H.; Nandi, A.K. Medical Image Segmentation Using Deep Learning: A Survey. IET Image Process. 2022, 16, 1243–1267.

55. Liu, J.; Yang, H.; Zhou, H.-Y.; Yu, L.; Liang, Y.; Yu, Y.; Zhang, S.; Zheng, H.; Wang, S. Swin-UMamba†: Adapting Mamba-Based Vision Foundation Models for Medical Image Segmentation. IEEE Trans. Med. Imaging 2024, 2512913.

56. Narasimhan, S.G.; Nayar, S.K. Vision and the Atmosphere. Int. J. Comput. Vis. 2002, 48, 233–254.

57. Jerlov, N.G. Optical Oceanography; Elsevier Oceanography Series; Elsevier: Amsterdam, The Netherlands, 1968; Volume 5, ISBN 978-0-444-40320-9.

58. Solonenko, M.G.; Mobley, C.D. Inherent Optical Properties of Jerlov Water Types. Appl. Opt. 2015, 54, 5392.

59. Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A Benchmark for the Evaluation of RGB-D SLAM Systems. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 573–580.

60. Wang, W.; Zhu, D.; Wang, X.; Hu, Y.; Qiu, Y.; Wang, C.; Hu, Y.; Kapoor, A.; Scherer, S. TartanAir: A Dataset to Push the Limits of Visual SLAM. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020.

61. Burri, M.; Nikolic, J.; Gohl, P.; Schneider, T.; Rehder, J.; Omari, S.; Achtelik, M.W.; Siegwart, R. The EuRoC Micro Aerial Vehicle Datasets. Int. J. Robot. Res. 2016, 35, 1157–1163.

62. Forster, C.; Zhang, Z.; Gassner, M.; Werlberger, M.; Scaramuzza, D. SVO: Semidirect Visual Odometry for Monocular and Multicamera Systems. IEEE Trans. Robot. 2017, 33, 249–265.

63. Zubizarreta, J.; Aguinaga, I.; Montiel, J.M.M. Direct Sparse Mapping. IEEE Trans. Robot. 2020, 36, 1363–1370.

64. Campos, C.; Elvira, R.; Rodriguez, J.J.G.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual–Inertial, and Multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890.

65. Czarnowski, J.; Laidlow, T.; Clark, R.; Davison, A.J. DeepFactors: Real-Time Probabilistic Dense Monocular SLAM. IEEE Robot. Autom. Lett. 2020, 5, 721–728.

66. Teed, Z.; Deng, J. DeepV2D: Video to Depth with Differentiable Structure from Motion. arXiv 2020, arXiv:1812.04605.

67. Wang, W.; Hu, Y.; Scherer, S. TartanVO: A Generalizable Learning-Based VO. In Proceedings of the 2020 Conference on Robot Learning, Online, 16–18 November 2020.

68. Ferrera, M.; Creuze, V.; Moras, J.; Trouvé-Peloux, P. AQUALOC: An Underwater Dataset for Visual–Inertial–Pressure Localization. Int. J. Robot. Res. 2019, 38, 1549–1559.

69. Rahman, S.; Li, A.Q.; Rekleitis, I. SVIn2: An Underwater SLAM System Using Sonar, Visual, Inertial, and Depth Sensor. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 1861–1868.

70. Schonberger, J.L.; Frahm, J.-M. Structure-from-Motion Revisited. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4104–4113.

71. Qin, T.; Li, P.; Shen, S. VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator. IEEE Trans. Robot. 2018, 34, 1004–1020.

| Variant | Sequence 1 | Sequence 2 | Sequence 3 | Sequence 4 | Sequence 5 | Sequence 6 | Sequence 7 | Sequence 8 | Sequence 9 | Sequence 10 | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|
| No PUAA | 0.092 | 1.950 | 0.026 | 0.356 | 0.072 | 0.073 | 0.070 | 0.075 | 0.229 | 0.082 | 0.303 |
| No RSSA | 0.214 | 0.040 | 0.087 | 0.785 | 0.058 | 0.088 | 0.119 | 0.055 | 0.249 | 0.113 | 0.181 |
| No LGP | 0.217 | 0.086 | 0.039 | 0.160 | 0.168 | 0.152 | 0.081 | 0.049 | 0.251 | 0.065 | 0.127 |
| RAEM | 0.076 | 0.026 | 0.023 | 0.183 | 0.046 | 0.071 | 0.078 | 0.047 | 0.232 | 0.062 | 0.084 |

| Type | Method | MH01 | MH02 | MH03 | MH04 | MH05 | V101 | V102 | V103 | V201 | V202 | V203 | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Traditional | ORB-SLAM [8] | 0.071 | 0.067 | 0.071 | 0.082 | 0.060 | 0.015 | 0.020 | - | 0.021 | 0.018 | - | - |
| | DSO [10] | 0.046 | 0.046 | 0.172 | 3.810 | 0.110 | 0.089 | 0.107 | 0.903 | 0.044 | 0.132 | 1.152 | 0.601 |
| | SVO [62] | 0.100 | 0.120 | 0.410 | 0.430 | 0.300 | 0.070 | 0.210 | - | 0.110 | 0.110 | 1.080 | - |
| | DSM [63] | 0.039 | 0.036 | 0.055 | 0.057 | 0.067 | 0.095 | 0.059 | 0.076 | 0.056 | 0.057 | 0.784 | 0.126 |
| | ORB-SLAM3 [64] | 0.016 | 0.027 | 0.028 | 0.138 | 0.072 | 0.033 | 0.015 | 0.033 | 0.023 | 0.029 | - | - |
| Deep Learning | DeepFactors [65] | 1.587 | 1.479 | 3.139 | 5.331 | 4.002 | 1.520 | 0.679 | 0.900 | 0.876 | 1.905 | 1.021 | 2.040 |
| | DeepV2D [66] | 0.739 | 1.144 | 0.752 | 1.492 | 1.567 | 0.981 | 0.801 | 1.570 | 0.290 | 2.202 | 2.743 | 1.298 |
| | DeepV2D (TartanAir) [66] | 1.614 | 1.492 | 1.635 | 1.775 | 1.013 | 0.717 | 0.695 | 1.483 | 0.839 | 1.052 | 0.591 | 1.173 |
| | TartanVO [67] | 0.639 | 0.325 | 0.550 | 1.153 | 1.021 | 0.447 | 0.389 | 0.622 | 0.433 | 0.749 | 1.152 | 0.680 |
| | DROID(Mono) [18] | 0.041 | 0.016 | 0.027 | 0.048 | 0.052 | 0.035 | 0.016 | 0.023 | 0.021 | 0.014 | 0.026 | 0.029 |
| | RAEM-SLAM | 0.013 | 0.020 | 0.022 | 0.031 | 0.038 | 0.026 | 0.026 | 0.019 | 0.019 | 0.014 | 0.012 | 0.022 |
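The EuRoC results above do not restate their metric in this section. Assuming it is the absolute trajectory error (ATE) RMSE, typically reported in metres, computed after a similarity (Sim(3)) alignment, which is the usual protocol for monocular methods because their scale is unobservable, the metric can be sketched with Umeyama's closed-form alignment as below. Timestamp association between estimated and ground-truth poses is assumed to be done already, and the function names are illustrative.

```python
import numpy as np


def umeyama_sim3(src, dst):
    """Closed-form similarity (s, R, t) minimising sum ||dst_i - (s R src_i + t)||^2.

    src, dst: (N, 3) arrays of corresponding positions (Umeyama's method).
    """
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    xs, xd = src - mu_s, dst - mu_d
    cov = xd.T @ xs / len(src)                # 3x3 cross-covariance
    U, D, Vt = np.linalg.svd(cov)
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[2, 2] = -1.0                        # enforce a proper rotation, not a reflection
    R = U @ S @ Vt
    var_s = (xs ** 2).sum() / len(src)        # variance of the source points
    s = np.trace(np.diag(D) @ S) / var_s
    t = mu_d - s * R @ mu_s
    return s, R, t


def ate_rmse(est, gt):
    """ATE RMSE of estimated positions after Sim(3) alignment to ground truth."""
    s, R, t = umeyama_sim3(est, gt)
    aligned = (s * (R @ est.T)).T + t
    return float(np.sqrt(np.mean(np.sum((aligned - gt) ** 2, axis=1))))
```

Because the scale `s` is estimated jointly with `R` and `t`, a monocular trajectory that is correct up to an arbitrary scale yields an ATE close to zero, which is why this alignment is the standard choice when comparing monocular systems.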

| Method | Sequence 1 | Sequence 2 | Sequence 3 | Sequence 4 | Sequence 5 | Sequence 6 | Sequence 7 | Sequence 8 | Sequence 9 | Sequence 10 | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ORB-SLAM3(Mono) [64] | - | - | - | - | 0.274 | - | 0.581 | 0.101 | 0.332 | 0.301 | - |
| VINS-Mono [71] | - | 0.672 | 0.592 | 4.349 | 3.175 | 0.993 | 3.180 | - | - | 1.912 | - |
| SL-SLAM(Mono) [40] | 0.727 | - | - | - | 0.070 | 0.305 | 0.166 | 0.045 | 0.325 | 0.257 | - |
| DROID(Mono) [18] | 0.465 | 2.621 | 0.042 | 0.902 | 0.212 | 0.161 | 0.091 | 0.051 | 0.234 | 0.077 | 0.486 |
| RAEM-SLAM | 0.076 | 0.026 | 0.023 | 0.183 | 0.046 | 0.071 | 0.078 | 0.047 | 0.232 | 0.062 | 0.084 |
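In both trajectory tables, an average is reported only when a method returns a result on every sequence; any "-" entry makes the Avg entry "-". Assuming that convention (the reported values are consistent with it: for example, the DROID(Mono) AQUALOC average is the plain mean of its ten per-sequence errors), the Avg column can be reproduced as follows. `table_average` is a hypothetical helper, and `None` stands for "-".

```python
from statistics import mean
from typing import Optional, Sequence


def table_average(errors: Sequence[Optional[float]]) -> Optional[float]:
    """Mean error over sequences rounded to 3 decimals, or None if any sequence failed."""
    if any(e is None for e in errors):
        return None
    return round(mean(errors), 3)


# AQUALOC rows copied from the table above; None stands for "-".
droid = [0.465, 2.621, 0.042, 0.902, 0.212, 0.161, 0.091, 0.051, 0.234, 0.077]
raem = [0.076, 0.026, 0.023, 0.183, 0.046, 0.071, 0.078, 0.047, 0.232, 0.062]
orb3 = [None, None, None, None, 0.274, None, 0.581, 0.101, 0.332, 0.301]

print(table_average(droid), table_average(raem), table_average(orb3))
# 0.486 0.084 None
```

Treating a failed sequence as undefined, rather than skipping it, keeps the averages comparable: a method cannot lower its mean by failing on the hardest sequences.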

| Scene | DROID-SLAM | RAEM-SLAM (Ours) | Error Reduction |
|---|---|---|---|
| Submerged Bus | 2.386 | 1.198 | 49.8% |
| Cave | 1.185 | 0.458 | 61.4% |
| Fake Cemetery | 2.285 | 1.666 | 27.1% |
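The Error Reduction column is the relative decrease of RAEM-SLAM's error with respect to DROID-SLAM, (DROID - RAEM) / DROID. Recomputing it from the two error columns reproduces the reported percentages; `error_reduction` is an illustrative helper name.

```python
def error_reduction(baseline: float, ours: float) -> float:
    """Relative error reduction in percent: (baseline - ours) / baseline * 100."""
    return (baseline - ours) / baseline * 100.0


# (DROID-SLAM, RAEM-SLAM) errors copied from the AFRL table above.
afrl = {
    "Submerged Bus": (2.386, 1.198),
    "Cave": (1.185, 0.458),
    "Fake Cemetery": (2.285, 1.666),
}
for scene, (droid, raem) in afrl.items():
    print(f"{scene}: {error_reduction(droid, raem):.1f}%")
# Submerged Bus: 49.8%
# Cave: 61.4%
# Fake Cemetery: 27.1%
```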

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, Y.; Li, Y.; Luo, W.; Ding, X. RAEM-SLAM: A Robust Adaptive End-to-End Monocular SLAM Framework for AUVs in Underwater Environments. Drones 2025, 9, 579. https://doi.org/10.3390/drones9080579

Wu Y, Li Y, Luo W, Ding X. RAEM-SLAM: A Robust Adaptive End-to-End Monocular SLAM Framework for AUVs in Underwater Environments. Drones. 2025; 9(8):579. https://doi.org/10.3390/drones9080579

Chicago/Turabian Style: Wu, Yekai, Yongjie Li, Wenda Luo, and Xin Ding. 2025. "RAEM-SLAM: A Robust Adaptive End-to-End Monocular SLAM Framework for AUVs in Underwater Environments" Drones 9, no. 8: 579. https://doi.org/10.3390/drones9080579

APA Style: Wu, Y., Li, Y., Luo, W., & Ding, X. (2025). RAEM-SLAM: A Robust Adaptive End-to-End Monocular SLAM Framework for AUVs in Underwater Environments. Drones, 9(8), 579. https://doi.org/10.3390/drones9080579