Open-Vocabulary Object Detection in UAV Imagery: A Review and Future Perspectives

Abstract

1. Introduction

- Emergency rescue: After disasters, rescue teams can tell UAVs to look for important things like “people needing help”, “temporary shelters”, “red cross signs”, or “damaged buildings”. With OVOD, the UAV can understand these new instructions immediately without extra training.

- Wildlife protection: Conservationists can use UAVs to watch large natural areas for problems like hunting or logging. They can give commands like “find hunter camps”, “look for hurt elephants”, or “spot illegal cutting tools”, even if these things are not in the original training data.

- Smart cities: City officials can use UAVs with OVOD to check urban areas for problems. The system can understand commands like “find cars parked illegally near fire hydrants”, “look for big road holes”, or “find fallen branches blocking sidewalks”.

- To the best of our knowledge, this is the first survey to provide a systematic taxonomy of OVOD methods specifically for the UAV aerial imagery domain. We categorize existing studies based on their fundamental learning paradigms, which allows us to critically analyze the inherent trade-offs of each approach in the face of UAV-specific challenges.

- We go beyond separate discussions of OVOD and UAV vision by being the first to systematically align and confront the core principles of OVOD with the unique constraints of aerial scenes. This synthesis allows us to identify and clearly articulate a set of open research problems that exist specifically at the intersection of these two fields.

- Based on our in-depth analysis of the current landscape and open challenges, we chart a clear and structured roadmap for the future. We outline six pivotal research directions, from lightweight models and multi-modal fusion to ethical considerations, and discuss their interdependencies, providing a valuable guide for researchers and practitioners.

2. Background

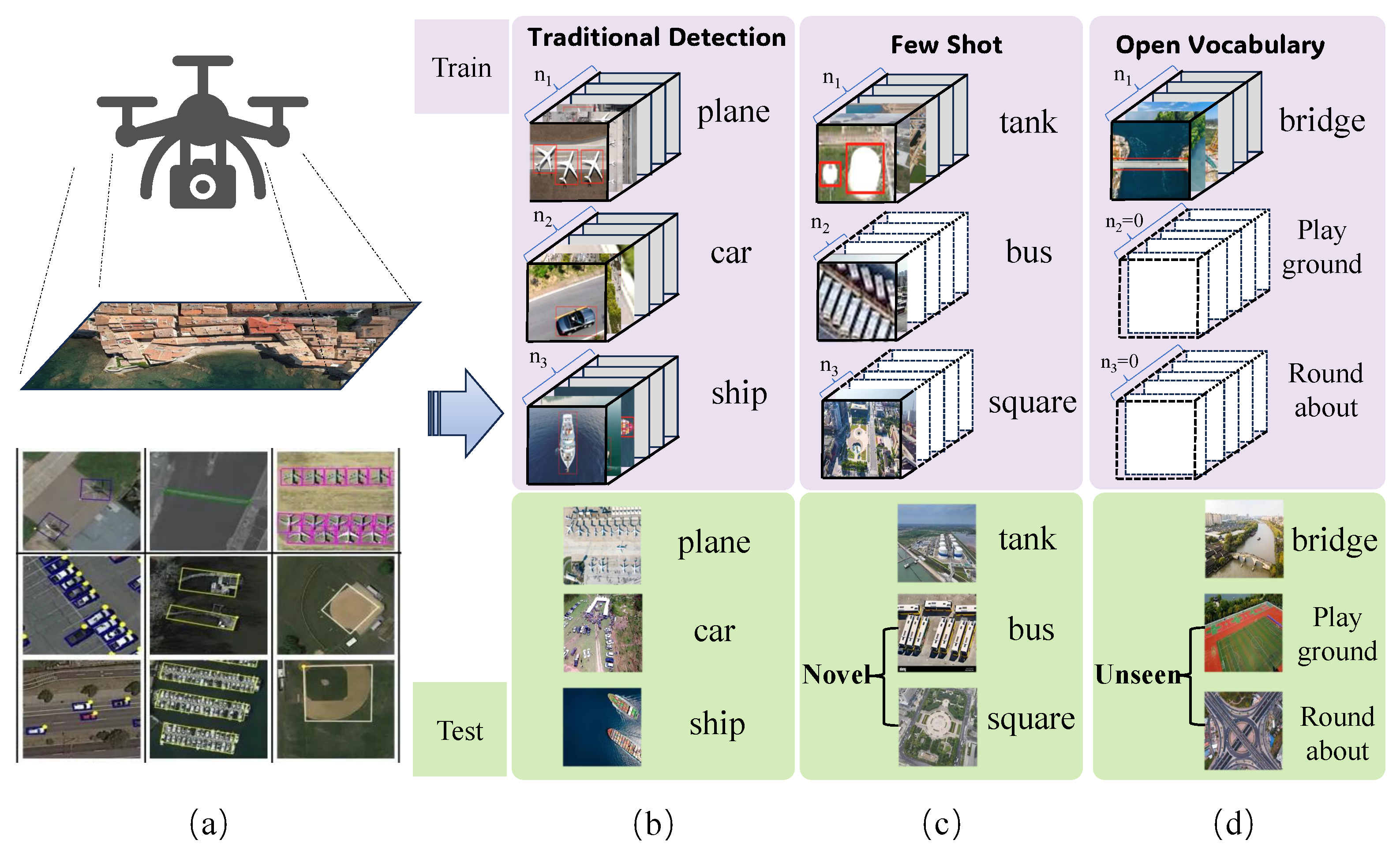

2.1. Traditional Object Detection

- R-CNN [24] was the progenitor of this approach, pioneering the use of a deep convolutional network for feature extraction on region proposals generated by an external algorithm like Selective Search. While groundbreaking, its multi-stage training and slow inference limited its practical application.

- Fast R-CNN [25] significantly improved speed and efficiency by sharing the convolutional feature computation across all proposals on an image. It introduced the RoIPool layer to extract fixed-size feature maps from variable-sized RoIs, enabling an end-to-end training process for the classification stage.

- Faster R-CNN [26] represented a major leap by integrating the proposal generation step directly into the network. It introduced the Region Proposal Network (RPN), a fully convolutional network that shares features with the detection network, allowing for nearly cost-free, data-driven region proposals and enabling a unified, end-to-end trainable detection framework. Due to its robustness and high accuracy, Faster R-CNN became a dominant baseline for numerous aerial object detection tasks.

- Mask R-CNN [27] extended Faster R-CNN by adding a parallel branch for predicting a pixel-level object mask in addition to the bounding box. It also introduced the RoIAlign layer, which replaced RoIPool to more precisely align the extracted features with the input, leading to significant gains in both detection and instance segmentation accuracy.

- YOLO [11,28,29] was the first model to frame object detection as a single regression problem, directly predicting bounding box coordinates and class probabilities from full images in one evaluation. Its architecture enabled unprecedented real-time performance, fundamentally shifting the research landscape.

- SSD [30] introduced the concept of using multiple feature maps from different layers of a network to detect objects at various scales. By making predictions from both deep (low-resolution) and shallow (high-resolution) feature maps, SSD significantly improved the detection of small objects, a pervasive challenge in UAV imagery.

- RetinaNet [31] identified the extreme imbalance between the foreground and background classes during training as a primary obstacle to the precision of a one-stage detector. It introduced Focal Loss [32], a novel loss function that dynamically adjusts the cross-entropy loss to down-weight the contribution of easy, well-classified examples, thereby focusing the training on a sparse set of hard-to-detect objects.
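
The down-weighting behaviour described for Focal Loss can be illustrated with a minimal sketch. It assumes the commonly used binary form FL(p_t) = -alpha_t (1 - p_t)^gamma log(p_t); the function names and the example probabilities are illustrative and do not come from any specific detector implementation.

```python
import math

def cross_entropy(p, y):
    """Standard binary cross-entropy for one prediction.
    p: predicted foreground probability (0 < p < 1); y: 1 = foreground, 0 = background."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(p_t)

def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Binary focal loss: cross-entropy scaled by alpha_t * (1 - p_t) ** gamma.
    gamma and alpha are hyperparameters; the defaults here are assumed, common choices."""
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return alpha_t * (1.0 - p_t) ** gamma * cross_entropy(p, y)

# Ratio of focal loss to cross-entropy for an easy and a hard foreground example.
easy_ratio = focal_loss(0.95, 1) / cross_entropy(0.95, 1)  # well classified
hard_ratio = focal_loss(0.10, 1) / cross_entropy(0.10, 1)  # poorly classified
print(f"easy example keeps {easy_ratio:.6f} of its cross-entropy loss")
print(f"hard example keeps {hard_ratio:.4f} of its cross-entropy loss")
```

Under these assumed settings, the well-classified example retains a far smaller fraction of its loss than the hard one, which is the mechanism that shifts training toward hard-to-detect objects.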

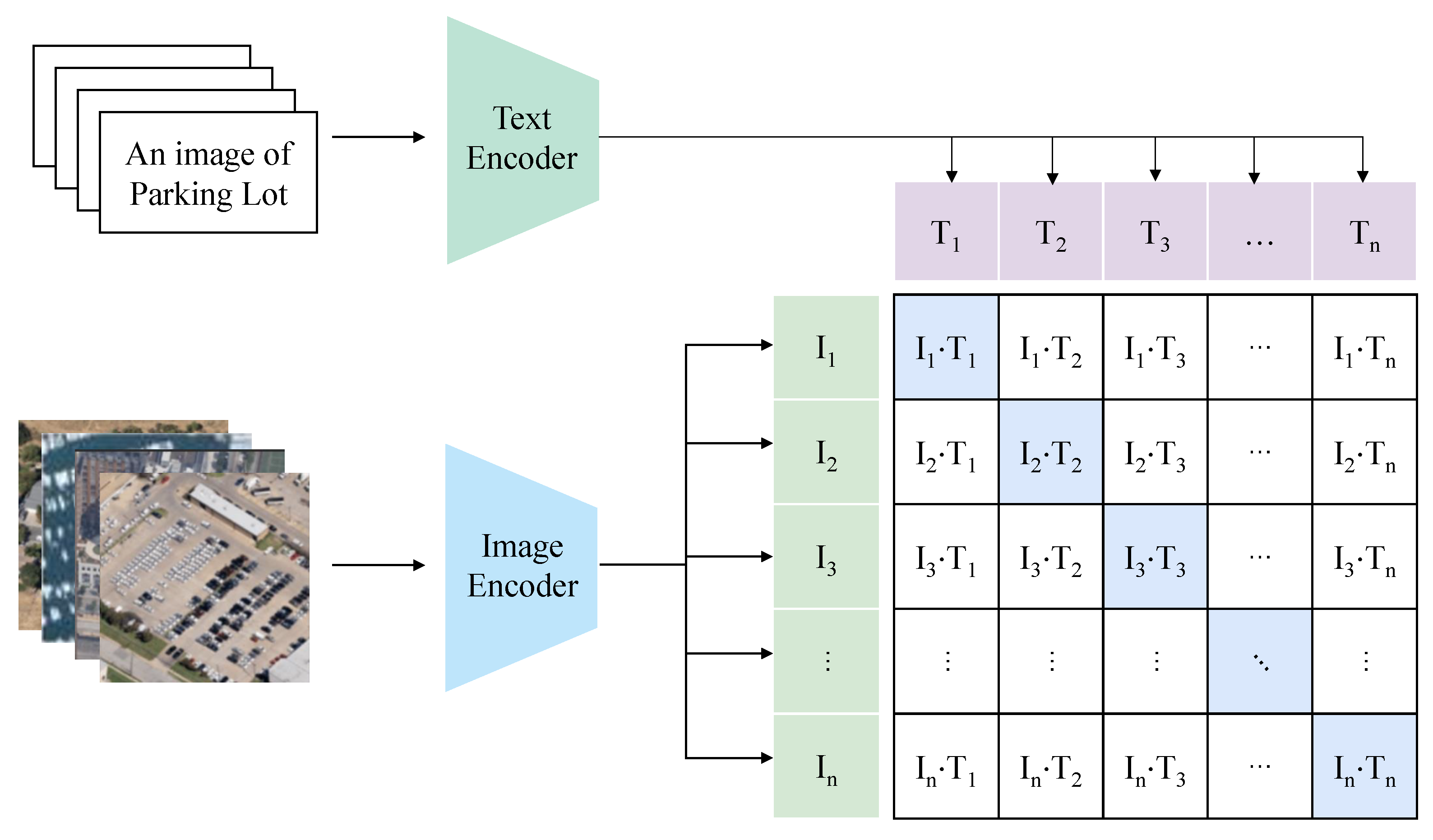

2.2. Fundamentals of Open-Vocabulary Object Detection

2.3. Unique Characteristics of UAV Imagery

- Small objects: This is perhaps the most frequently cited challenge in aerial imaging. Due to high flight altitudes and wide-angle lenses, objects of interest (e.g., people, vehicles) often occupy a minuscule portion of the image, sometimes spanning only a few dozen or even a handful of pixels. For traditional detectors, this leads to the loss of distinguishing features after successive down-sampling operations in deep neural networks. For OVOD, the challenge is even more nuanced: the low-resolution visual features extracted from such small objects may be too coarse and ambiguous to align reliably with a rich textual description in the VLM’s embedding space. The visual signature of a 10x10-pixel “person” is weak and could be easily confused with other small, vertical structures, making a confident visual–linguistic match difficult.

- High density and cluttered backgrounds: UAV platforms are often used to monitor crowded scenes like parking lots, public squares, or disaster sites. This results in images containing a high density of objects, often with significant occlusion. Differentiating between individual instances in a dense crowd or a packed car park is a severe test for any detector’s localization capabilities. Furthermore, aerial scenes are rife with complex and cluttered backgrounds—rooftop paraphernalia, varied terrain, foliage, and urban infrastructure. For an OVOD system, this clutter introduces a high number of “distractors”. The visual features of background elements (e.g., a complex pattern of shadows, an air conditioning unit) might accidentally have a high similarity score with one of the text prompts, leading to false positives.

- Varying viewpoints: The vast majority of images used to train VLMs like CLIP are taken from ground level, depicting objects from a frontal or near-frontal perspective. However, UAVs primarily capture imagery from nadir (top-down) or oblique viewpoints. This creates a significant domain gap. The visual appearance of a “car” or a “person” from directly above is drastically different from its appearance in a typical photograph. An OVOD model relying on CLIP’s pretrained knowledge may struggle because its internal concept of “car” is strongly tied to side views, not roof views. This viewpoint disparity can weaken the visual–linguistic alignment that is the cornerstone of OVOD’s success.

- Varying scales: The operational nature of UAVs, which can dynamically change their flight altitude, introduces extreme variations in object scale—often within the same mission or video sequence. An object that appears large when the UAV is flying low can become a tiny speck as it ascends. This multi-scale challenge requires a detector to be robustly invariant to scale. For OVOD, this means the visual encoder must produce consistent embeddings for the same object category across a wide range of resolutions, a non-trivial requirement that pushes the limits of standard VLM backbones.

- Challenging imaging conditions: UAV operations are not confined to perfect, sunny days. The quality of captured imagery can be significantly degraded by a variety of factors. Illumination changes (e.g., harsh sunlight creating deep shadows, or low-light conditions at dawn/dusk) can obscure object details. Adverse weather like rain, fog, or haze can reduce contrast and introduce artifacts. Finally, motion blur, caused by the UAV’s movement or a low shutter speed, can smear features. Each of these factors introduces noise and corrupts the input to the visual encoder, weakening the feature representations and making the subsequent alignment with clean text embeddings less reliable and more prone to error.
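
To make the notion of visual–linguistic alignment concrete, the following sketch scores a region embedding against a set of text-prompt embeddings using cosine similarity followed by a softmax. The three-dimensional vectors, the prompt set, and the temperature value are toy assumptions chosen for readability; real VLM embeddings have hundreds of dimensions and are produced by learned encoders.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def classify_region(region_emb, text_embs, temperature=0.1):
    """Return (best_label, probabilities) for one region.
    text_embs maps each prompt to its (hypothetical) text embedding."""
    labels = list(text_embs)
    logits = [cosine(region_emb, text_embs[l]) / temperature for l in labels]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = {l: e / total for l, e in zip(labels, exps)}
    return max(probs, key=probs.get), probs

# Toy text embeddings for three prompts (illustrative values only).
text_embs = {
    "person": [0.9, 0.1, 0.0],
    "car": [0.1, 0.9, 0.0],
    "background": [0.0, 0.1, 0.9],
}
clear_region = [0.8, 0.2, 0.1]     # distinctive features, e.g., a large object
blurry_region = [0.5, 0.45, 0.4]   # washed-out features, e.g., a tiny or blurred object

clear_label, clear_probs = classify_region(clear_region, text_embs)
blurry_label, blurry_probs = classify_region(blurry_region, text_embs)
print(clear_label, round(clear_probs[clear_label], 3))
print(blurry_label, round(blurry_probs[blurry_label], 3))
```

In this toy setting both regions are assigned the same label, but the degraded region receives a much lower probability, mirroring how small objects, clutter, and poor imaging conditions erode the confidence of the match.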

3. Methodology: OVOD for UAV Aerial Images

3.1. Pseudo-Labeling-Based Methods

3.2. CLIP-Driven Integration Methods

3.3. Summary and Comparison

4. Datasets and Evaluation Metrics

4.1. General OVOD Datasets

4.2. UAV-Specific Object Detection Datasets

- First, we observe a dramatic increase in category diversity. While conventional remote sensing datasets typically contain several dozen classes at most, open-vocabulary datasets like ORSD+ [51] and LAE-1M [50] push this to hundreds or even over a thousand categories, reflecting a significant step towards capturing real-world semantic diversity.

- Second, this leap in scale is enabled by a fundamental change in data creation methodology. Rather than relying solely on manual annotation, each of these pioneering datasets is constructed using custom-designed annotation engines. These engines are critically dependent on the powerful zero-shot and text-generation capabilities of modern VLMs, to not only scale up instance numbers but also to increase the semantic richness of the supervision, thus laying the groundwork for a new generation of foundation models for Earth observation.

4.3. Evaluation Metrics

- Average precision. The cornerstone of object detection evaluation is average precision (AP). AP provides a single-figure measure that summarizes the quality of a detector by considering both how many of its predictions are correct (precision) and how many of the relevant objects it finds (recall). To understand AP, we must first define its components: precision and recall. For a given object class, precision measures the fraction of correct predictions among all predictions made for that class. Recall measures the fraction of ground-truth instances of that class that are correctly detected. These two metrics can be formulated as follows:

  $$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN},$$

  where true positives (TPs) are correctly detected objects, false positives (FPs) are incorrect detections, and false negatives (FNs) are missed ground-truth objects. A detection is typically considered a true positive if its intersection over union (IoU) with a ground-truth box is above a certain threshold. The IoU threshold is a critical hyperparameter that defines the required strictness of spatial accuracy. A widely used threshold is 0.5 (or 50%). Metrics reported with this threshold are often denoted as $\text{AP}_{50}$ or mAP@0.5.

- Precision–recall. An ideal detector would achieve high precision and high recall simultaneously. However, there is often a trade-off: to increase recall (find more objects), a model may lower its confidence threshold, which can lead to more false positives and thus lower precision. The precision–recall (PR) curve visualizes this trade-off by plotting precision against recall for various confidence thresholds.

- Calculating average precision. AP is conceptually defined as the area under the PR curve. To create a more stable and representative metric, modern evaluation protocols [88,89] employ an interpolation method. The precision at any given recall level is set to the maximum precision achieved at any recall level greater than or equal to it. This creates a monotonically non-increasing PR curve, and the AP is the area under this interpolated curve.

- Mean average precision. Mean average precision (mAP), the mean of the per-class AP values over a set of classes, is the primary metric for object detection. In OVOD, it is calculated separately for the base and novel class sets. $\text{mAP}_{\text{base}}$: This metric is computed over the set of base classes. It quantifies the model’s ability to retain its detection performance on the classes it was explicitly trained on. A high $\text{mAP}_{\text{base}}$ indicates that the model has not suffered from “catastrophic forgetting” while learning to generalize. $\text{mAP}_{\text{novel}}$: This is the most critical metric for OVOD. It is computed exclusively over the set of novel classes. It directly measures the model’s generalization power, that is, its ability to locate and classify objects it has never seen before. A high $\text{mAP}_{\text{novel}}$ signifies effective knowledge transfer from the seen to the unseen.

- Harmonic mean. To provide a single, balanced score that reflects a model’s overall OVOD capability, the harmonic mean (HM) of the base and novel mAP scores is widely used. It is calculated as

  $$\text{HM} = \frac{2 \cdot \text{mAP}_{\text{base}} \cdot \text{mAP}_{\text{novel}}}{\text{mAP}_{\text{base}} + \text{mAP}_{\text{novel}}}.$$

  The HM is more informative than a simple arithmetic mean because it heavily penalizes models that exhibit a large disparity between base- and novel-class performance. For instance, a model that achieves a very high $\text{mAP}_{\text{base}}$ but a near-zero $\text{mAP}_{\text{novel}}$ will receive a very low HM score. This metric thus encourages the development of models that achieve a strong balance between retaining knowledge of seen classes and successfully generalizing to new ones, which is the central goal of open-vocabulary object detection.
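
The metrics above can be tied together in a short sketch. It assumes axis-aligned boxes given as (x1, y1, x2, y2), all-point interpolation for AP, and detections that have already been matched to ground truth at a chosen IoU threshold; all function names and the example numbers are illustrative rather than taken from a particular benchmark toolkit.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def average_precision(detections, num_gt):
    """All-point interpolated AP for one class.
    detections: list of (confidence, is_true_positive) pairs, where the
    true-positive flag is assumed to come from a prior IoU-based matching step.
    num_gt: number of ground-truth instances of the class."""
    if num_gt == 0:
        return 0.0
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in sorted(detections, key=lambda d: d[0], reverse=True):
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Interpolation: precision at a recall level becomes the maximum
    # precision observed at any recall level greater than or equal to it.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p  # area of one step under the curve
        prev_recall = r
    return ap

def mean_ap(ap_per_class):
    """mAP over a class set: the mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)

def harmonic_mean(map_base, map_novel):
    """Harmonic mean of base and novel mAP; defined as 0 when both are 0."""
    total = map_base + map_novel
    return 2.0 * map_base * map_novel / total if total > 0 else 0.0

overlap = iou((0, 0, 10, 10), (0, 0, 10, 5))
ap = average_precision([(0.9, True), (0.8, False), (0.7, True), (0.6, False)], num_gt=2)
balanced = harmonic_mean(0.4, 0.4)
lopsided = harmonic_mean(0.6, 0.2)
print(overlap, round(ap, 4), balanced, round(lopsided, 4))
```

The last two values illustrate the penalty on imbalance: both hypothetical models have the same arithmetic mean of 0.4, yet the lopsided one receives a lower harmonic mean.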

5. Challenges and Open Issues

5.1. Limitations of UAV Scenarios

5.1.1. The Domain Gap

- Domain adaptation for VLMs: How can we adapt pretrained VLMs to the aerial domain without compromising their open-vocabulary capabilities? Fine-tuning on limited aerial data risks overfitting and catastrophic forgetting of the vast knowledge learned during pretraining. Research into parameter-efficient fine-tuning (PEFT) techniques [96,97], such as adapters [98] and prompt tuning [99], is a promising direction.

- Synthetic data generation: Can we leverage simulation engines to generate large-scale, photorealistic aerial datasets with precise, multi-perspective annotations? This could help bridge the domain gap by exposing the VLM to top-down views during a secondary pretraining or fine-tuning stage.

- Viewpoint-invariant feature learning: Developing novel network architectures or training strategies that encourage the learning of viewpoint-invariant object representations is a key research goal. This might involve contrastive learning objectives that pull representations of the same object from different viewpoints closer in the embedding space.
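
One possible form of such a contrastive objective is an InfoNCE-style loss, sketched below under simplifying assumptions: a single anchor embedding, one positive (the same object seen from another viewpoint), and a list of negatives (other objects). The two-dimensional vectors and the temperature are toy values, and the function name is hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE-style loss: negative log-probability of the positive among all candidates.
    The loss is low when the anchor is closer to the positive than to every negative."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    m = max(logits)  # log-sum-exp with max subtraction for stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[0]

ground_view = [1.0, 0.0]             # embedding of an object from a ground-level view
other_object = [[0.0, 1.0]]          # embedding of a different object
aerial_aligned = [0.9, 0.1]          # aerial view mapped close to the ground view
aerial_misaligned = [0.1, 0.9]       # aerial view mapped close to the wrong object

loss_aligned = info_nce(ground_view, aerial_aligned, other_object)
loss_misaligned = info_nce(ground_view, aerial_misaligned, other_object)
print(round(loss_aligned, 5), round(loss_misaligned, 5))
```

Minimizing a loss of this kind pushes the aerial-view embedding toward its ground-view counterpart and away from other objects, which is the behaviour the bullet above describes.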

5.1.2. Small-Object Detection

- High-resolution OVOD architectures: Designing OVOD models that can process high-resolution imagery efficiently and maintain fine-grained spatial detail throughout the network is crucial. This may involve exploring novel multi-scale backbones or attention mechanisms tailored for small-object feature preservation.

- Context-enhanced alignment: For small objects, surrounding context often provides critical clues. How can we design models that explicitly leverage contextual information (e.g., a “boat” is on “water”) to aid the visual–semantic alignment for the small object itself? This could involve graph-based reasoning or attention mechanisms that correlate object proposals with their environmental context.

- Super-resolution as a pre-processing step: Investigating the use of generative super-resolution techniques to “hallucinate” details for small objects before feature extraction could be a viable, albeit computationally expensive, strategy. The challenge is to ensure the generated details are faithful and do not introduce misleading artifacts.

5.1.3. Fine-Grained Recognition

- Hierarchical and attribute-based prompting: Instead of a single class name, can we use more descriptive, attribute-based prompts (e.g., “a long vehicle with a flat roof”, “a small four-wheeled vehicle”)? Developing methods that can parse and reason about such compositional queries is a key research area. Hierarchical prompting (e.g., querying for “vehicle” first, then refining to “truck” or “bus”) could also be a viable strategy.

- Injecting domain-specific knowledge: Can we explicitly inject domain knowledge into the model? For example, a knowledge graph could inform the model that “buses are typically longer than SUVs” or that “fishing boats are found near coastlines”. Integrating this symbolic knowledge with the model’s learned representations could significantly improve fine-grained accuracy.

- Few-shot fine-grained learning: In many applications, an operator may want to find a new, specific type of object. This requires the model to learn a new fine-grained category from just one or a few visual examples, a challenging few-shot learning problem within the OVOD context.

5.1.4. The Trade-Off Between Efficiency and Performance

- Model compression for OVOD: Research is urgently needed in adapting model compression techniques for OVOD models. These techniques include the following:
  - Quantization: Reducing the precision of model weights to decrease memory usage and accelerate computation on compatible hardware.
  - Pruning: Removing redundant weights or network structures to create a smaller, sparser model.
  - Knowledge distillation: Training a small, efficient “student” model to mimic the output of a large, high-performance “teacher” VLM. The key challenge is how to effectively distill the rich and open-vocabulary knowledge.

- Efficient OVOD architectures: There is a need for the design of novel, lightweight network architectures specifically for edge-based OVOD. This might involve hybrid CNN-Transformer models or architectures that are optimized for the specific hardware accelerators found on UAVs.

- Cloud–edge collaborative systems: An alternative approach is a hybrid system where the UAV performs lightweight, onboard pre-processing (e.g., detecting potential regions of interest) and transmits only relevant data to a more powerful ground station or cloud server for full OVOD analysis. The primary challenge here is managing communication latency and bandwidth.
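
As a concrete illustration of the quantization idea mentioned above, the sketch below applies symmetric per-tensor 8-bit quantization to a small list of weights. This is only one of several possible schemes, and the function names and weight values are assumptions made for the example.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of float weights to integers in [-127, 127].
    Returns the integer codes and the scale needed to map them back to floats."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale

def dequantize(codes, scale):
    """Map integer codes back to approximate float weights."""
    return [c * scale for c in codes]

weights = [0.5, -1.27, 0.003, 1.0]   # illustrative weight values
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, max_error)
```

Each weight is stored in one byte instead of four, at the cost of a rounding error bounded by half the scale; very small weights, such as 0.003 here, collapse to zero, which hints at why accuracy can degrade after quantization.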

5.2. Limitations of Text-Prompting Methods

5.2.1. Ambiguity and Robustness of Text Prompts

- Automated prompt engineering and refinement: Can we develop methods that automatically generate or refine text prompts to be optimal for a given task or dataset? This could involve learning a mapping from a user’s high-level intent to a set of effective, low-level prompts.

- Learning from multiple prompts: Instead of relying on a single prompt, models could be designed to leverage a set of synonymous or related prompts to produce more robust and reliable detections.

- Abstract and compositional reasoning: A major leap forward would be the development of OVOD systems that can handle abstract queries by decomposing them into recognizable visual components. For example, a “dangerous situation” on a highway might be decomposed into “overturned car”, “traffic jam”, and “emergency vehicles”.
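
One simple way to learn from multiple prompts is prompt ensembling: the embeddings of several synonymous prompts are normalized, averaged, and re-normalized into a single class embedding. The sketch below assumes the text embeddings are already available; the three-dimensional vectors and the prompts named in the comments are toy stand-ins for the output of a real text encoder.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def ensemble_embedding(prompt_embeddings):
    """Average the unit-normalized prompt embeddings and re-normalize the mean."""
    normed = [l2_normalize(e) for e in prompt_embeddings]
    dim = len(normed[0])
    mean = [sum(e[i] for e in normed) / len(normed) for i in range(dim)]
    return l2_normalize(mean)

def score(region_emb, text_emb):
    """Cosine similarity between a region embedding and a text embedding."""
    r, t = l2_normalize(region_emb), l2_normalize(text_emb)
    return sum(a * b for a, b in zip(r, t))

# Hypothetical embeddings for three phrasings of the same concept.
prompt_embs = [
    [1.0, 0.0, 0.2],   # e.g., "a car"
    [0.6, 0.6, 0.1],   # e.g., "an aerial photo of a car"
    [0.7, 0.5, 0.3],   # e.g., "a vehicle seen from above"
]
region = [0.7, 0.5, 0.2]  # toy embedding of a detected region

single_scores = [score(region, e) for e in prompt_embs]
ensemble = ensemble_embedding(prompt_embs)
ensemble_score = score(region, ensemble)
print([round(s, 4) for s in single_scores], round(ensemble_score, 4))
```

In this toy case the ensemble score is well above that of the worst single prompt, so the detection depends less on which particular phrasing the operator happened to choose.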

5.2.2. The Lack of Benchmarks

5.3. Ethical, Privacy, and Bias Challenges

- Privacy erosion and intrusive surveillance: Traditional detectors identify categories. OVOD can interpret and log specific, nuanced activities. This qualitative leap in surveillance capability poses an unprecedented threat to personal privacy and practical obscurity, creating a pressing need for research into privacy-preserving OVOD architectures and ethical-by-design principles.

- Algorithmic bias and fairness: OVOD models inherit societal biases from their web-scale training data. In aerial imagery, this can lead to discriminatory outcomes. For example, a system might exhibit lower accuracy for certain demographic groups or misinterpret cultural objects and activities, posing significant fairness risks in applications like law enforcement or disaster response. Developing methods to audit and mitigate these biases in the aerial domain is a critical, unresolved problem.

- Potential for misuse and accountability: The ease of describing any object for detection lowers the barrier for malicious applications, from unauthorized tracking to oppressive monitoring. This creates an urgent need for robust safeguards, access control, and transparent, explainable models to ensure accountability when systems fail or are misused.

6. Future Perspectives and Directions

6.1. Domain Adaptation for UAV-OVOD

6.2. Lightweight and Efficient OVOD Models

- Quantization: This technique systematically reduces the numerical precision of model weights and activations [115]. Post-training quantization and quantization-aware training tailored for OVOD models need to be investigated to minimize the accuracy loss.

- Knowledge distillation: This is a particularly powerful paradigm for OVOD [116]. A large, high-performance “teacher” model can be used to train a small, efficient “student” model. The key research question is what knowledge to distill. Beyond simply matching the final detection outputs, the student could be trained to mimic the teacher’s intermediate region–text feature alignments, thereby learning the rich cross-modal relationships that enable open-vocabulary recognition.
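
A minimal sketch of one distillation signal is given below. It assumes the teacher and the student each produce a vector of region–text similarity logits for the same region and prompt set, and it measures their disagreement with a temperature-softened KL divergence. The logit values, the temperature, and the function names are illustrative; practical systems often combine several such terms.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax with max subtraction for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) between the softened distributions over text prompts."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Region-text similarity logits over three prompts (illustrative values).
teacher = [4.0, 1.0, 0.5]
student_close = [3.8, 1.1, 0.6]   # student that roughly agrees with the teacher
student_far = [0.5, 4.0, 1.0]     # student that prefers a different prompt

loss_close = distillation_loss(teacher, student_close)
loss_far = distillation_loss(teacher, student_far)
print(round(loss_close, 5), round(loss_far, 5))
```

The loss is zero only when the student reproduces the teacher's distribution over prompts, so minimizing it transfers the relative similarities between a region and all prompts rather than just the top prediction.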

6.3. Multi-Modal Data Fusion

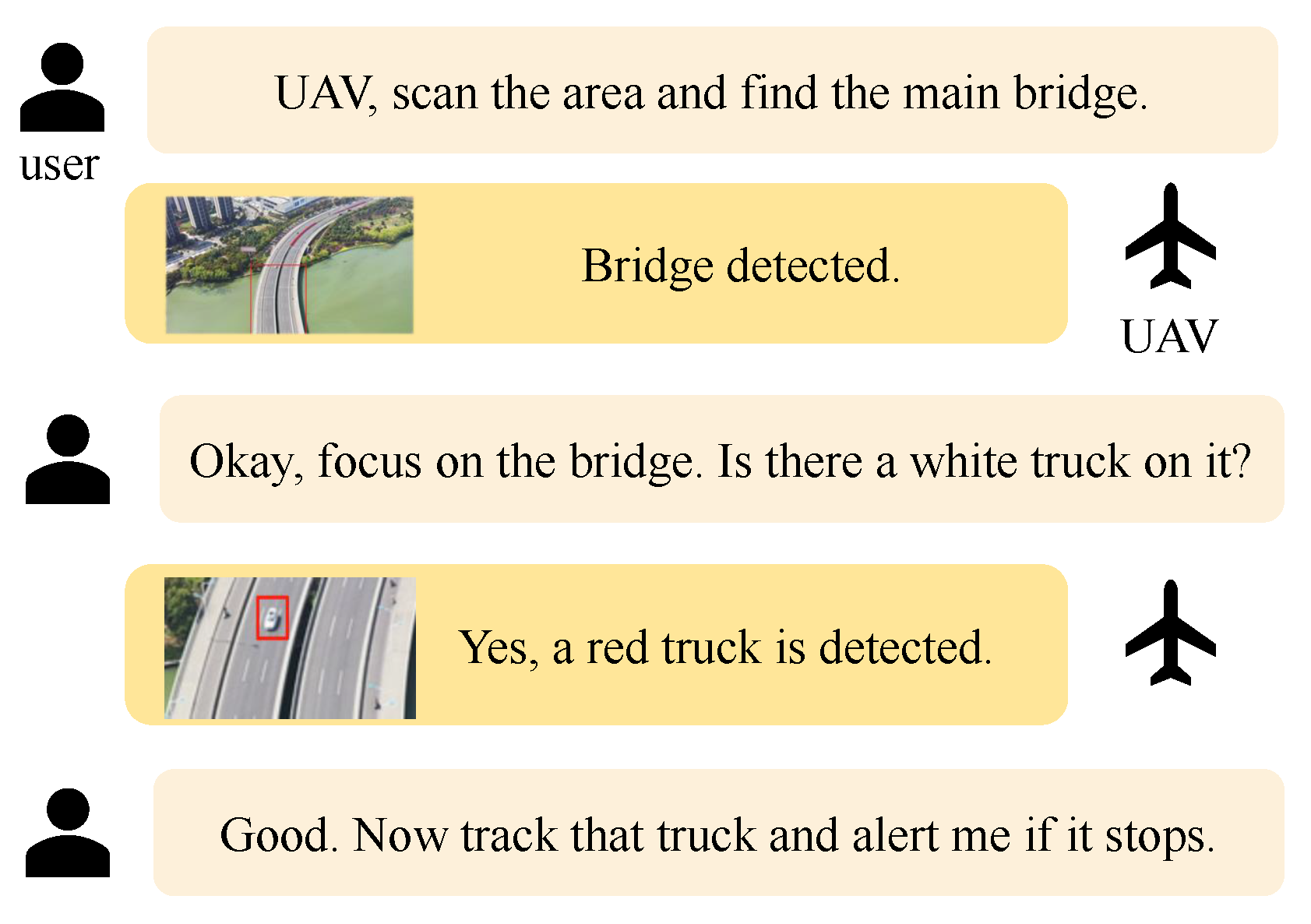

6.4. Interactive and Conversational Detection

6.5. Building Large-Scale UAV-OVOD Benchmarks

- Scale and diversity: It must contain tens of thousands of images from diverse geographical locations, altitudes, times of day, and weather conditions.

- Expansive and hierarchical vocabulary: The vocabulary should encompass hundreds or even thousands of object classes, from common categories to rare instances and fine-grained sub-categories.

- Rich annotations: Crucially, annotations must go beyond simple bounding boxes. Each object should be annotated with a tight bounding box and/or a segmentation mask, attribute labels, and multiple free-form textual descriptions that capture its appearance and context.

6.6. Integration with Downstream Tasks

- Open-vocabulary tracking (OVT): Extending the “detect-by-description” capability to “track-by-description”. An operator could initiate tracking by simply describing the target, and the system would maintain a persistent track of that specific object across time and viewpoint changes.

- Open-vocabulary segmentation (OVS): A natural and crucial evolution from object detection is to move beyond bounding boxes and provide pixel-level masks for any described object or region. This task is helpful for applications requiring precise area measurement, fine-grained damage assessment, or land cover analysis. Far from being a mere future prospect, OVS for aerial imagery is an active and emerging research front, with several pioneering works [119,120,121] already laying the groundwork.

- UAV-based visual question answering (VQA) and scene captioning: Enabling an operator to ask complex questions about the aerial scene or receive dense, language-based summaries of the dynamic environment.

Author Contributions

Funding

Conflicts of Interest

Correction Statement

References

- Javed, S.; Hassan, A.; Ahmad, R.; Ahmed, W.; Ahmed, R.; Saadat, A.; Guizani, M. State-of-the-art and future research challenges in uav swarms. IEEE Internet Things J. 2024, 11, 19023–19045.

- Mao, K.; Zhu, Q.; Wang, C.X.; Ye, X.; Gomez-Ponce, J.; Cai, X.; Miao, Y.; Cui, Z.; Wu, Q.; Fan, W. A survey on channel sounding technologies and measurements for UAV-assisted communications. IEEE Trans. Instrum. Meas. 2024, 73, 8004624.

- Sadgrove, E.J.; Falzon, G.; Miron, D.; Lamb, D.W. Real-time object detection in agricultural/remote environments using the multiple-expert colour feature extreme learning machine (MEC-ELM). Comput. Ind. 2018, 98, 183–191.

- Chaturvedi, V.; de Vries, W.T. Machine learning algorithms for urban land use planning: A review. Urban Sci. 2021, 5, 68.

- Fang, Z.; Savkin, A.V. Strategies for optimized uav surveillance in various tasks and scenarios: A review. Drones 2024, 8, 193.

- Albahri, A.; Khaleel, Y.L.; Habeeb, M.A.; Ismael, R.D.; Hameed, Q.A.; Deveci, M.; Homod, R.Z.; Albahri, O.; Alamoodi, A.; Alzubaidi, L. A systematic review of trustworthy artificial intelligence applications in natural disasters. Comput. Electr. Eng. 2024, 118, 109409.

- Reilly, V.; Idrees, H.; Shah, M. Detection and tracking of large number of targets in wide area surveillance. In Proceedings of the Computer Vision–ECCV 2010: 11th European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010; Proceedings, Part III 11. Springer: Berlin/Heidelberg, Germany, 2010; pp. 186–199.

- Sy Nguyen, V.; Jung, J.; Jung, S.; Joe, S.; Kim, B. Deployable Hook Retrieval System for UAV Rescue and Delivery. IEEE Access 2021, 9, 74632–74645.

- Wang, D.; Li, W.; Liu, X.; Li, N.; Zhang, C. UAV environmental perception and autonomous obstacle avoidance: A deep learning and depth camera combined solution. Comput. Electron. Agric. 2020, 175, 105523.

- Nelson, J.R.; Grubesic, T.H.; Wallace, D.; Chamberlain, A.W. The view from above: A survey of the public’s perception of unmanned aerial vehicles and privacy. J. Urban Technol. 2019, 26, 83–105.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Yi, Z.F.; Frederick, H.; Mendoza, R.L.; Avery, R.; Goodman, L. AI Mapping Risks to Wildlife in Tanzania: Rapid scanning aerial images to flag the changing frontier of human-wildlife proximity. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 5299–5302.

- Zhao, T.; Nevatia, R. Car detection in low resolution aerial images. Image Vis. Comput. 2003, 21, 693–703.

- Han, K.; Huang, X.; Li, Y.; Vaze, S.; Li, J.; Jia, X. What’s in a Name? Beyond Class Indices for Image Recognition. arXiv 2024, arXiv:2304.02364.

- Vaze, S.; Han, K.; Vedaldi, A.; Zisserman, A. Open-set recognition: A good closed-set classifier is all you need? In Proceedings of the International Conference on Learning Representations, Virtual, 25–29 April 2022.

- Bansal, A.; Sikka, K.; Sharma, G.; Chellappa, R.; Divakaran, A. Zero-Shot Object Detection. arXiv 2018, arXiv:1804.04340.

- Zhao, H.; Puig, X.; Zhou, B.; Fidler, S.; Torralba, A. Open Vocabulary Scene Parsing. arXiv 2017, arXiv:1703.08769.

- Zareian, A.; Rosa, K.D.; Hu, D.H.; Chang, S.F. Open-Vocabulary Object Detection Using Captions. arXiv 2021, arXiv:2011.10678.

- Gu, X.; Lin, T.Y.; Kuo, W.; Cui, Y. Open-vocabulary Object Detection via Vision and Language Knowledge Distillation. arXiv 2022, arXiv:2104.13921.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. arXiv 2021, arXiv:2103.00020.

- Soviany, P.; Ionescu, R.T. Optimizing the Trade-Off between Single-Stage and Two-Stage Deep Object Detectors using Image Difficulty Prediction. In Proceedings of the 2018 20th International Symposium on Symbolic and Numeric Algorithms for Scientific Computing (SYNASC), Timisoara, Romania, 20–23 September 2018; pp. 209–214.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition; 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; Cortes, C., Lawrence, N., Lee, D., Sugiyama, M., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2015; Volume 28.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2016, arXiv:1506.02640.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision—ECCV 2016; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. arXiv 2018, arXiv:1708.02002.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Paris, France, 4–6 October 2017.

- Gong, Y.; Yu, X.; Ding, Y.; Peng, X.; Zhao, J.; Han, Z. Effective Fusion Factor in FPN for Tiny Object Detection. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2021; pp. 1159–1167.

- Yang, F.; Fan, H.; Chu, P.; Blasch, E.; Ling, H. Clustered object detection in aerial images. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8311–8320.

- Wang, J.; Yang, W.; Guo, H.; Zhang, R.; Xia, G.S. Tiny object detection in aerial images. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 3791–3798.

- Li, C.; Yang, T.; Zhu, S.; Chen, C.; Guan, S. Density Map Guided Object Detection in Aerial Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Seattle, WA, USA, 13–19 June 2020.

- Du, B.; Huang, Y.; Chen, J.; Huang, D. Adaptive Sparse Convolutional Networks With Global Context Enhancement for Faster Object Detection on Drone Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 13435–13444.

- Ding, J.; Xue, N.; Long, Y.; Xia, G.S.; Lu, Q. Learning RoI Transformer for Oriented Object Detection in Aerial Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Geng, C.; Huang, S.j.; Chen, S. Recent advances in open set recognition: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3614–3631.

- Yang, H.M.; Zhang, X.Y.; Yin, F.; Yang, Q.; Liu, C.L. Convolutional prototype network for open set recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 2358–2370.

- Reis, D.; Kupec, J.; Hong, J.; Daoudi, A. Real-time flying object detection with YOLOv8. arXiv 2023, arXiv:2305.09972.

- Varghese, R.; Sambath, M. Yolov8: A novel object detection algorithm with enhanced performance and robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

- Minderer, M.; Gritsenko, A.; Stone, A.; Neumann, M.; Weissenborn, D.; Dosovitskiy, A.; Mahendran, A.; Arnab, A.; Dehghani, M.; Shen, Z.; et al. Simple open-vocabulary object detection. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 728–755.

- Du, Y.; Wei, F.; Zhang, Z.; Shi, M.; Gao, Y.; Li, G. Learning to prompt for open-vocabulary object detection with vision-language model. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 14084–14093.

- Rasheed, H.; Maaz, M.; Khattak, M.U.; Khan, S.; Khan, F.S. Bridging the Gap between Object and Image-level Representations for Open-Vocabulary Detection. arXiv 2022, arXiv:2207.03482.

- Zhong, Y.; Yang, J.; Zhang, P.; Li, C.; Codella, N.; Li, L.H.; Zhou, L.; Dai, X.; Yuan, L.; Li, Y.; et al. RegionCLIP: Region-based Language-Image Pretraining. arXiv 2021, arXiv:2112.09106.

- Li, Y.; Guo, W.; Yang, X.; Liao, N.; He, D.; Zhou, J.; Yu, W. Toward open vocabulary aerial object detection with clip-activated student-teacher learning. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 431–448.

- Saini, N.; Dubey, A.; Das, D.; Chattopadhyay, C. Advancing open-set object detection in remote sensing using multimodal large language model. In Proceedings of the Winter Conference on Applications of Computer Vision, Tucson, AZ, USA, 28 February–4 March 2025; pp. 451–458.

- Wei, G.; Yuan, X.; Liu, Y.; Shang, Z.; Yao, K.; Li, C.; Yan, Q.; Zhao, C.; Zhang, H.; Xiao, R. OVA-DETR: Open vocabulary aerial object detection using image-text alignment and fusion. arXiv 2024, arXiv:2408.12246.

- Pan, J.; Liu, Y.; Fu, Y.; Ma, M.; Li, J.; Paudel, D.P.; Van Gool, L.; Huang, X. Locate anything on earth: Advancing open-vocabulary object detection for remote sensing community. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 6281–6289.

- Huang, Z.; Feng, Y.; Yang, S.; Liu, Z.; Liu, Q.; Wang, Y. Openrsd: Towards open-prompts for object detection in remote sensing images. arXiv 2025, arXiv:2503.06146.

- Xie, J.; Wang, G.; Zhang, T.; Sun, Y.; Chen, H.; Zhuang, Y.; Li, J. LLaMA-Unidetector: An LLaMA-Based Universal Framework for Open-Vocabulary Object Detection in Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4409318.

- Zang, Z.; Lin, C.; Tang, C.; Wang, T.; Lv, J. Zero-shot aerial object detection with visual description regularization. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 6926–6934.

- Liu, F.; Chen, D.; Guan, Z.; Zhou, X.; Zhu, J.; Ye, Q.; Fu, L.; Zhou, J. RemoteCLIP: A Vision Language Foundation Model for Remote Sensing. arXiv 2024, arXiv:2306.11029.

- Anil, R.; Dai, A.M.; Firat, O.; Johnson, M.; Lepikhin, D.; Passos, A.; Shakeri, S.; Taropa, E.; Bailey, P.; Chen, Z.; et al. PaLM 2 Technical Report. arXiv 2023, arXiv:2305.10403.

- Touvron, H.; Martin, L.; Stone, K.; Albert, P.; Almahairi, A.; Babaei, Y.; Bashlykov, N.; Batra, S.; Bhargava, P.; Bhosale, S.; et al. Llama 2: Open Foundation and Fine-Tuned Chat Models. arXiv 2023, arXiv:2307.09288.

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-time Object Detection. arXiv 2024, arXiv:2304.08069.

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and Efficient Foundation Language Models. arXiv 2023, arXiv:2302.13971.

- Nilsen, P. Making sense of implementation theories, models, and frameworks. In Implementation Science 3.0; Springer: Berlin/Heidelberg, Germany, 2020; pp. 53–79.

- Venable, J.; Pries-Heje, J.; Baskerville, R. FEDS: A framework for evaluation in design science research. Eur. J. Inf. Syst. 2016, 25, 77–89.

- Lin, T.Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common Objects in Context. arXiv 2015, arXiv:1405.0312.

- Gupta, A.; Dollár, P.; Girshick, R. LVIS: A Dataset for Large Vocabulary Instance Segmentation. arXiv 2019, arXiv:1908.03195.

- Wang, J.; Zhang, P.; Chu, T.; Cao, Y.; Zhou, Y.; Wu, T.; Wang, B.; He, C.; Lin, D. V3Det: Vast Vocabulary Visual Detection Dataset. arXiv 2023, arXiv:2304.03752.

- Yao, Y.; Liu, P.; Zhao, T.; Zhang, Q.; Liao, J.; Fang, C.; Lee, K.; Wang, Q. How to Evaluate the Generalization of Detection? A Benchmark for Comprehensive Open-Vocabulary Detection. arXiv 2023, arXiv:2308.13177.

- Zhu, H.; Chen, X.; Dai, W.; Fu, K.; Ye, Q.; Jiao, J. Orientation robust object detection in aerial images using deep convolutional neural network. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 3735–3739.

- Long, Y.; Gong, Y.; Xiao, Z.; Liu, Q. Accurate Object Localization in Remote Sensing Images Based on Convolutional Neural Networks. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2486–2498.

- Liu, Z.; Yuan, L.; Weng, L.; Yang, Y. A high resolution optical satellite image dataset for ship recognition and some new baselines. In Proceedings of the International Conference on Pattern Recognition Applications and Methods, Porto, Portugal, 24–26 February 2017; SciTePress: Setúbal, Portugal, 2017; Volume 2, pp. 324–331.

- Cheng, G.; Han, J.; Zhou, P.; Guo, L. Multi-class geospatial object detection and geographic image classification based on collection of part detectors. ISPRS J. Photogramm. Remote Sens. 2014, 98, 119–132.

- Zou, Z.; Shi, Z. Random Access Memories: A New Paradigm for Target Detection in High Resolution Aerial Remote Sensing Images. IEEE Trans. Image Process. 2018, 27, 1100–1111.

- Xia, G.S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A large-scale dataset for object detection in aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3974–3983.

- Zhang, Y.; Yuan, Y.; Feng, Y.; Lu, X. Hierarchical and Robust Convolutional Neural Network for Very High-Resolution Remote Sensing Object Detection. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5535–5548.

- Haroon, M.; Shahzad, M.; Fraz, M.M. Multisized Object Detection Using Spaceborne Optical Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 3032–3046.

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.

- Cheng, G.; Wang, J.; Li, K.; Xie, X.; Lang, C.; Yao, Y.; Han, J. Anchor-free oriented proposal generator for object detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5625411.

- Ding, J.; Xue, N.; Xia, G.S.; Bai, X.; Yang, W.; Yang, M.Y.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; et al. Object detection in aerial images: A large-scale benchmark and challenges. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7778–7796.

- Lam, D.; Kuzma, R.; McGee, K.; Dooley, S.; Laielli, M.; Klaric, M.; Bulatov, Y.; McCord, B. xview: Objects in context in overhead imagery. arXiv 2018, arXiv:1802.07856.

- Bansal, A.; Sikka, K.; Sharma, G.; Chellappa, R.; Divakaran, A. Zero-shot object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 384–400.

- Sun, X.; Wang, P.; Yan, Z.; Xu, F.; Wang, R.; Diao, W.; Chen, J.; Li, J.; Feng, Y.; Xu, T.; et al. FAIR1M: A benchmark dataset for fine-grained object recognition in high-resolution remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2022, 184, 116–130.

- Li, Y.; Luo, J.; Zhang, Y.; Tan, Y.; Yu, J.G.; Bai, S. Learning to holistically detect bridges from large-size vhr remote sensing imagery. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 11507–11523.

- Cheng, G.; Yuan, X.; Yao, X.; Yan, K.; Zeng, Q.; Xie, X.; Han, J. Towards Large-Scale Small Object Detection: Survey and Benchmarks. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 13467–13488.

- Sun, Y.; Feng, S.; Li, X.; Ye, Y.; Kang, J.; Huang, X. Visual Grounding in Remote Sensing Images. In Proceedings of the 30th ACM International Conference on Multimedia (MM ’22), Lisboa, Portugal, 10–14 October 2022; pp. 404–412.

- Li, K.; Wang, D.; Xu, H.; Zhong, H.; Wang, C. Language-Guided Progressive Attention for Visual Grounding in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5631413.

- Zhan, Y.; Xiong, Z.; Yuan, Y. RSVG: Exploring Data and Models for Visual Grounding on Remote Sensing Data. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5604513.

- Wei, G.; Liu, Y.; Yuan, X.; Xue, X.; Guo, L.; Yang, Y.; Zhao, C.; Bai, Z.; Zhang, H.; Xiao, R. From Word to Sentence: A Large-Scale Multi-Instance Dataset for Open-Set Aerial Detection. arXiv 2025, arXiv:2505.03334.

- Li, Y.; Wang, L.; Wang, T.; Yang, X.; Luo, J.; Wang, Q.; Deng, Y.; Wang, W.; Sun, X.; Li, H.; et al. Star: A first-ever dataset and a large-scale benchmark for scene graph generation in large-size satellite imagery. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 1832–1849.

- Zheng, L.; Shen, L.; Tian, L.; Wang, S.; Wang, J.; Tian, Q. Scalable Person Re-Identification: A Benchmark. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–15 December 2015.

- Lin, C.Y. ROUGE: A Package for Automatic Evaluation of Summaries. In Proceedings of the Text Summarization Branches Out, Barcelona, Spain, 25–26 July 2004; pp. 74–81.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision—ECCV, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014; pp. 740–755.

- Leng, J.; Ye, Y.; Mo, M.; Gao, C.; Gan, J.; Xiao, B.; Gao, X. Recent Advances for Aerial Object Detection: A Survey. ACM Comput. Surv. 2024, 56, 1–36.

- Zhu, P.; Wen, L.; Du, D.; Bian, X.; Fan, H.; Hu, Q.; Ling, H. Detection and Tracking Meet Drones Challenge. arXiv 2021, arXiv:2001.06303.

- Cazzato, D.; Cimarelli, C.; Sanchez-Lopez, J.L.; Voos, H.; Leo, M. A Survey of Computer Vision Methods for 2D Object Detection from Unmanned Aerial Vehicles. J. Imaging 2020, 6, 78.

- Mueller, M.; Smith, N.; Ghanem, B. A Benchmark and Simulator for UAV Tracking. In Proceedings of the Computer Vision—ECCV, Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; pp. 445–461.

- Kalra, I.; Singh, M.; Nagpal, S.; Singh, R.; Vatsa, M.; Sujit, P.B. DroneSURF: Benchmark Dataset for Drone-based Face Recognition. In Proceedings of the 2019 14th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2019), Lille, France, 14–18 May 2019; IEEE Press: Piscataway, NJ, USA, 2019; pp. 1–7.

- Du, D.; Qi, Y.; Yu, H.; Yang, Y.; Duan, K.; Li, G.; Zhang, W.; Huang, Q.; Tian, Q. The Unmanned Aerial Vehicle Benchmark: Object Detection and Tracking. arXiv 2018, arXiv:1804.00518.

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. arXiv 2021, arXiv:2106.09685.

- Li, X.L.; Liang, P. Prefix-Tuning: Optimizing Continuous Prompts for Generation. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online, 1–6 August 2021; Zong, C., Xia, F., Li, W., Navigli, R., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 4582–4597.

- Hu, Z.; Wang, L.; Lan, Y.; Xu, W.; Lim, E.P.; Bing, L.; Xu, X.; Poria, S.; Lee, R.K.W. LLM-Adapters: An Adapter Family for Parameter-Efficient Fine-Tuning of Large Language Models. arXiv 2023, arXiv:2304.01933.

- Lester, B.; Al-Rfou, R.; Constant, N. The Power of Scale for Parameter-Efficient Prompt Tuning. arXiv 2021, arXiv:2104.08691.

- Shi, T.; Gong, J.; Hu, J.; Zhi, X.; Zhang, W.; Zhang, Y.; Zhang, P.; Bao, G. Feature-Enhanced CenterNet for Small Object Detection in Remote Sensing Images. Remote Sens. 2022, 14, 5488.

- Wang, J.; Xu, C.; Yang, W.; Yu, L. A Normalized Gaussian Wasserstein Distance for Tiny Object Detection. arXiv 2022, arXiv:2110.13389.

- Jing, R.; Zhang, W.; Li, Y.; Li, W.; Liu, Y. Feature aggregation network for small object detection. Expert Syst. Appl. 2024, 255, 124686.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. arXiv 2017, arXiv:1612.03144.

- Ma, Z.; Zhou, L.; Wu, D.; Zhang, X. A small object detection method with context information for high altitude images. Pattern Recognit. Lett. 2025, 188, 22–28.

- Zhang, R.; Xie, C.; Deng, L. A fine-grained object detection model for aerial images based on yolov5 deep neural network. Chin. J. Electron. 2023, 32, 51–63.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2023, arXiv:1706.03762.

- Wang, S.; Li, B.Z.; Khabsa, M.; Fang, H.; Ma, H. Linformer: Self-Attention with Linear Complexity. arXiv 2020, arXiv:2006.04768.

- Choromanski, K.; Likhosherstov, V.; Dohan, D.; Song, X.; Gane, A.; Sarlos, T.; Hawkins, P.; Davis, J.; Mohiuddin, A.; Kaiser, L.; et al. Rethinking Attention with Performers. arXiv 2022, arXiv:2009.14794.

- Kitaev, N.; Kaiser, Ł.; Levskaya, A. Reformer: The Efficient Transformer. arXiv 2020, arXiv:2001.04451.

- Manhardt, F.; Arroyo, D.M.; Rupprecht, C.; Busam, B.; Birdal, T.; Navab, N.; Tombari, F. Explaining the ambiguity of object detection and 6d pose from visual data. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6841–6850.

- He, H.; Ding, J.; Xu, B.; Xia, G.S. On the robustness of object detection models on aerial images. IEEE Trans. Geosci. Remote Sens. 2024, 63, 5600512.

- Yue, M.; Zhang, L.; Huang, J.; Zhang, H. Lightweight and efficient tiny-object detection based on improved YOLOv8n for UAV aerial images. Drones 2024, 8, 276.

- Hu, M.; Li, Z.; Yu, J.; Wan, X.; Tan, H.; Lin, Z. Efficient-lightweight YOLO: Improving small object detection in YOLO for aerial images. Sensors 2023, 23, 6423.

- Plastiras, G.; Siddiqui, S.; Kyrkou, C.; Theocharides, T. Efficient embedded deep neural-network-based object detection via joint quantization and tiling. In Proceedings of the 2020 2nd IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), Genova, Italy, 31 August–2 September 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 6–10.

- Yang, Y.; Sun, X.; Diao, W.; Li, H.; Wu, Y.; Li, X.; Fu, K. Adaptive knowledge distillation for lightweight remote sensing object detectors optimizing. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5623715.

- Zhao, P.; Yuan, G.; Cai, Y.; Niu, W.; Liu, Q.; Wen, W.; Ren, B.; Wang, Y.; Lin, X. Neural pruning search for real-time object detection of autonomous vehicles. In Proceedings of the 2021 58th ACM/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 5–9 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 835–840.

- Wang, Y.; Yang, Y.; Zhao, X. Object detection using clustering algorithm adaptive searching regions in aerial images. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 651–664.

- Cao, Q.; Chen, Y.; Ma, C.; Yang, X. Open-Vocabulary Remote Sensing Image Semantic Segmentation. arXiv 2024, arXiv:2409.07683.

- Li, K.; Liu, R.; Cao, X.; Bai, X.; Zhou, F.; Meng, D.; Wang, Z. SegEarth-OV: Towards Training-Free Open-Vocabulary Segmentation for Remote Sensing Images. arXiv 2024, arXiv:2410.01768.

- Ye, C.; Zhuge, Y.; Zhang, P. Towards Open-Vocabulary Remote Sensing Image Semantic Segmentation. arXiv 2024, arXiv:2412.19492.

| Model | DIOR | | | DOTA v1.0 | | |
|---|---|---|---|---|---|---|
| mAP-based Evaluation | | | | | | |
| | Base mAP | Novel mAP | HM | Base mAP | Novel mAP | HM |
| DescReg | 68.7 | 7.9 | 14.2 | 68.7 | 4.7 | 8.8 |
| CastDet | 51.3 | 24.3 | 33.0 | 60.6 | 36.0 | 45.1 |
| OVA-DETR | 79.6 | 26.1 | 39.3 | 75.5 | 23.7 | 36.1 |
| -based Evaluation | | | | | | |
| LAE-DINO | 85.5 | | | – | | |
| OPEN-RSD | – | | | 77.7 | | |
| LLaMA-Unidetector | 51.38 | | | 50.22 | | |
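The HM column in the mAP-based rows of the table above is not defined in this part of the text. Assuming it denotes the harmonic mean of base-class and novel-class mAP (a common convention in open-vocabulary detection, and consistent with the tabulated numbers), a minimal sketch of the calculation is given below. The function name `harmonic_mean` and the dictionary `dior_scores` are illustrative and do not come from the original article.

```python
def harmonic_mean(base_map: float, novel_map: float) -> float:
    """Harmonic mean of base-class and novel-class mAP (both in percent)."""
    total = base_map + novel_map
    if total == 0:
        return 0.0  # avoid division by zero when both scores are zero
    return 2.0 * base_map * novel_map / total


# (Base mAP, Novel mAP) pairs copied from the DIOR columns of the table above.
dior_scores = {
    "DescReg": (68.7, 7.9),
    "CastDet": (51.3, 24.3),
    "OVA-DETR": (79.6, 26.1),
}

for model, (base, novel) in dior_scores.items():
    print(f"{model}: HM = {harmonic_mean(base, novel):.1f}")
```

Under this assumption the sketch gives 14.2, 33.0, and 39.3 for the three DIOR rows, matching the reported HM values. Because the harmonic mean is dominated by the smaller operand, a detector with strong base-class accuracy but weak novel-class accuracy (such as DescReg here) still receives a low HM.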

| Domain | Dataset | Classes | Images | Instances | Annotation Method |
|---|---|---|---|---|---|
| General scene | COCO [61] | 80 | 328 K | 2.5 M | - |
| | LVIS [62] | 1200 | 164 K | 2.2 M | - |
| Remote Sensing | UCAS-AOD [65] | 2 | 2420 | 14,596 | HBB |
| | RSOD [66] | 4 | 3644 | 22,221 | OBB |
| | HRSC [67] | 19 | 1061 | 2976 | OBB |
| | NWPU-VHR [68] | 10 | 800 | 3651 | HBB |
| | LEVIR [69] | 3 | 21,952 | 11,028 | HBB |
| | DOTA v1.0 [70] | 15 | 2806 | 188,282 | OBB |
| | HRRSD [71] | 13 | 21,761 | 55,740 | HBB |
| | SIMD [72] | 15 | 5000 | 45,096 | HBB |
| | DIOR [73] | 20 | 23,463 | 190,288 | HBB |
| | DIOR-R [74] | 20 | 23,463 | 192,512 | OBB |
| | DOTA v2.0 [75] | 18 | 11,268 | 1,973,658 | OBB |
| | xView [76] | 60 | 1127 | >1 M | HBB |
| | VisDrone [77] | 10 | 29,040 | 740,419 | HBB |
| | FAIR1M [78] | 37 | 15,266 | >1 M | OBB |
| | GLH-Bridge [79] | 1 | 6000 | 59,737 | ALL |
| | SODA [80] | 9 | 31,798 | 1,008,346 | HBB |
| | RSVG [81] | - | 4239 | 7933 | HBB |
| | OPT-RSVG [82] | 14 | 25,452 | 48,952 | HBB |
| | DIOR-RSVG [83] | 20 | 17,402 | 38,320 | HBB |
| Open vocabulary | MI-OAD [84] | 100 | 163,023 | 2 M | HBB |
| | ORSD+ [51] | 200 | 474,058 | - | ALL |
| | LAE-1M [50] | 1600 | - | 1 M | HBB |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhou, Y.; Li, J.; Ou, C.; Yan, D.; Zhang, H.; Xue, X. Open-Vocabulary Object Detection in UAV Imagery: A Review and Future Perspectives. Drones 2025, 9, 557. https://doi.org/10.3390/drones9080557