Aerial Autonomy Under Adversity: Advances in Obstacle and Aircraft Detection Techniques for Unmanned Aerial Vehicles

Abstract

1. Introduction

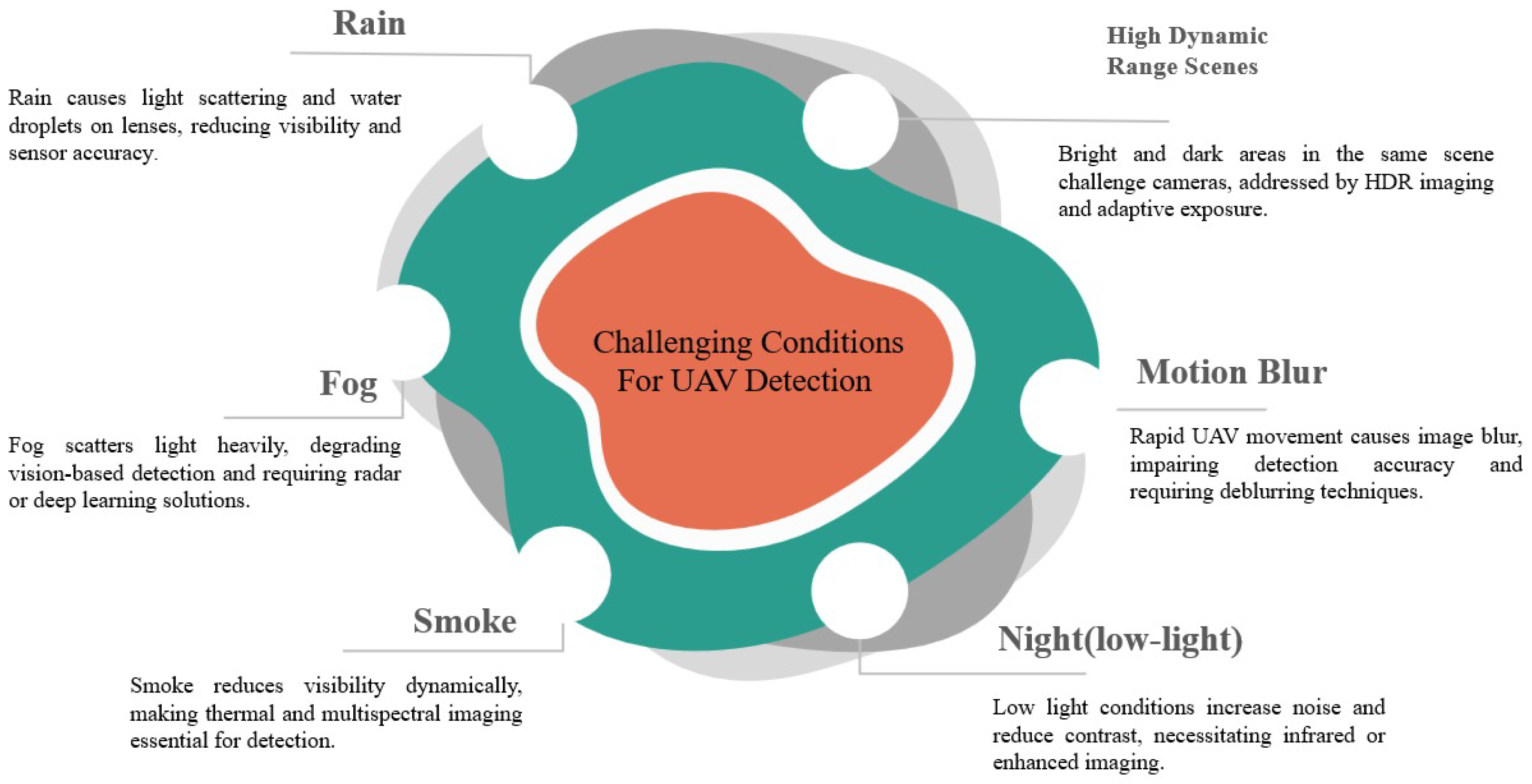

2. Environmental Effects on Sensor Reliability

2.1. Impact of Rain on UAV Detection

2.2. Fog-Induced Detection Challenges

2.3. Smoke as a Detection Barrier

2.4. Low-Light and Nighttime Detection

2.5. Motion Blur in High-Speed UAVs

2.6. High Dynamic Range Scenes

3. Real-Time Perception and Obstacle Detection Challenges

3.1. Detecting Wires, Cables, and Thin Branches

3.2. Real-Time Tracking of Moving Objects

3.3. Handling Dense Stationary Environments

3.4. Robust Detection in Harsh Weather Conditions

3.5. Fast Motion Causing Blur and Image Distortion

3.6. Limited Computing Power on UAVs

4. Sensors Used for Detection

4.1. Visual and Thermal Imaging for Obstacle Detection

4.2. High-Resolution 3D Mapping with Laser Pulses

4.3. Robust Detection in Adverse Weather Conditions

4.4. Ultrasonic Sensing for Short-Range Detection

5. Obstacle and Aircraft Detection Methods

5.1. Classical Methods

5.2. Modern Deep Learning Methods

5.3. Obstacle Detection Methods

5.4. Aircraft Detection Methods

5.5. Laser-Based Visual Identification Techniques for Multi-UAV Detection

6. Standard Benchmark Datasets

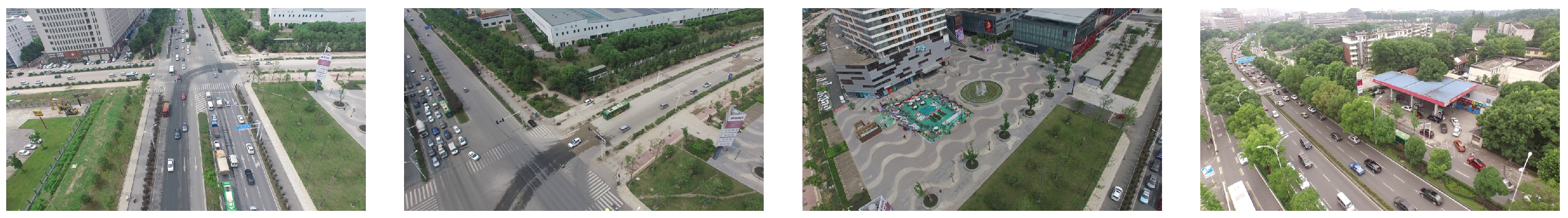

6.1. High-Resolution Urban UAV Video Dataset

6.2. Diverse Urban Object Detection Dataset

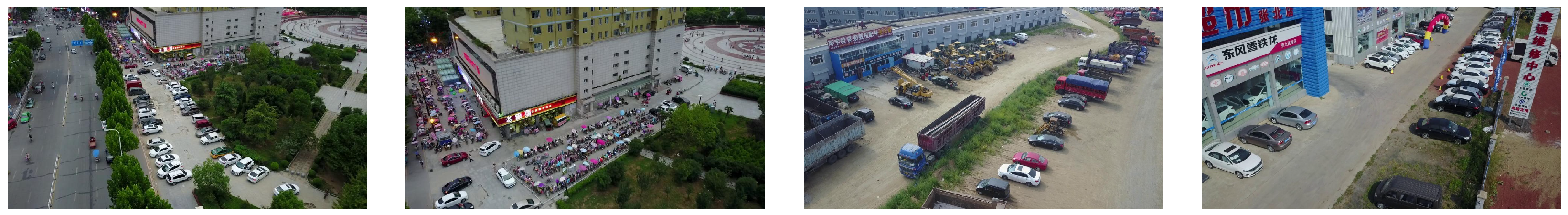

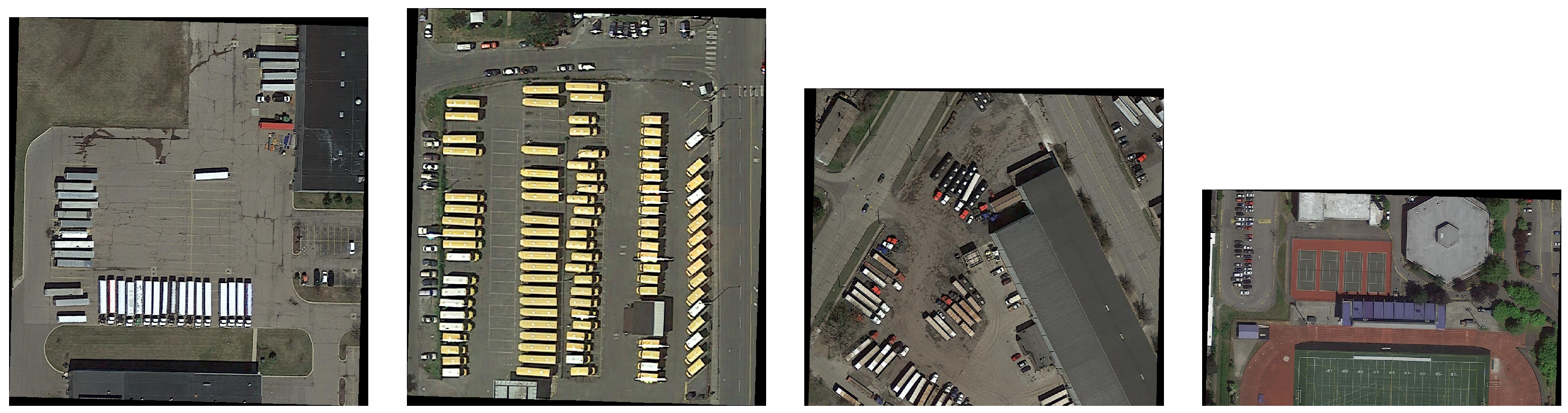

6.3. Large-Scale Aerial Object Detection Dataset

6.4. Synthetic Fog for Adverse Weather Evaluation

7. Performance Evaluation Metrics

- Mean Average Precision (mAP): Mean Average Precision is one of the most widely used metrics in object detection. It measures how well a detection model recognizes objects with both high precision and high recall. For each class, the Average Precision (AP) is the area under the precision-recall curve, and mAP is the mean of the AP values across all classes [107]. In UAV applications, mAP gives a comprehensive view of the model’s ability to correctly detect obstacles such as wires, vehicles, or aircraft under different conditions, such as rain or fog. A high mAP indicates consistent and accurate detection across multiple object types and scenarios [108]:

  $$\mathrm{mAP} = \frac{1}{N} \sum_{i=1}^{N} \mathrm{AP}_i$$

  where $N$ is the number of classes and $\mathrm{AP}_i$ is the Average Precision for class $i$.

- Precision and Recall: Precision measures how many of the detected objects are correct, while recall measures how many of the objects actually present are detected. In UAV obstacle detection, high precision means fewer false alarms (e.g., detecting a shadow as an obstacle), and high recall means fewer missed detections (e.g., not detecting a thin wire) [109]. These metrics are especially important in safety-critical applications like midair collision avoidance, where missing an obstacle (low recall) or detecting a non-existent one (low precision) could lead to serious consequences [110]:

  $$\mathrm{Precision} = \frac{TP}{TP + FP}$$

  where $TP$ is the number of true positives and $FP$ is the number of false positives.

  $$\mathrm{Recall} = \frac{TP}{TP + FN}$$

  where $FN$ is the number of false negatives.

- F1 Score: The F1 Score is the harmonic mean of precision and recall. It provides a single metric that balances both values, making it particularly useful when there is an uneven trade-off between precision and recall [111]. In UAV detection systems, the F1 Score is a helpful indicator of how well a technique performs overall, specifically in cluttered environments or low-visibility conditions where false positives and false negatives are likely [112]:

  $$F_1 = 2 \cdot \frac{\mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

  This balances both precision and recall.

- Robustness under Degraded Conditions: Many models that perform well in ideal scenarios degrade significantly in real-world environments. Robustness is evaluated by testing the model on datasets specifically designed to simulate or contain real-world degradation. It is a key requirement for UAVs operating in unpredictable outdoor conditions [115].

To evaluate the effectiveness of object detection systems in UAV applications, several key performance metrics are employed. These include precision, recall, F1 score, latency, and robustness, which collectively help assess detection accuracy and real-time responsiveness. An overview of these performance metrics is shown in Figure 9.

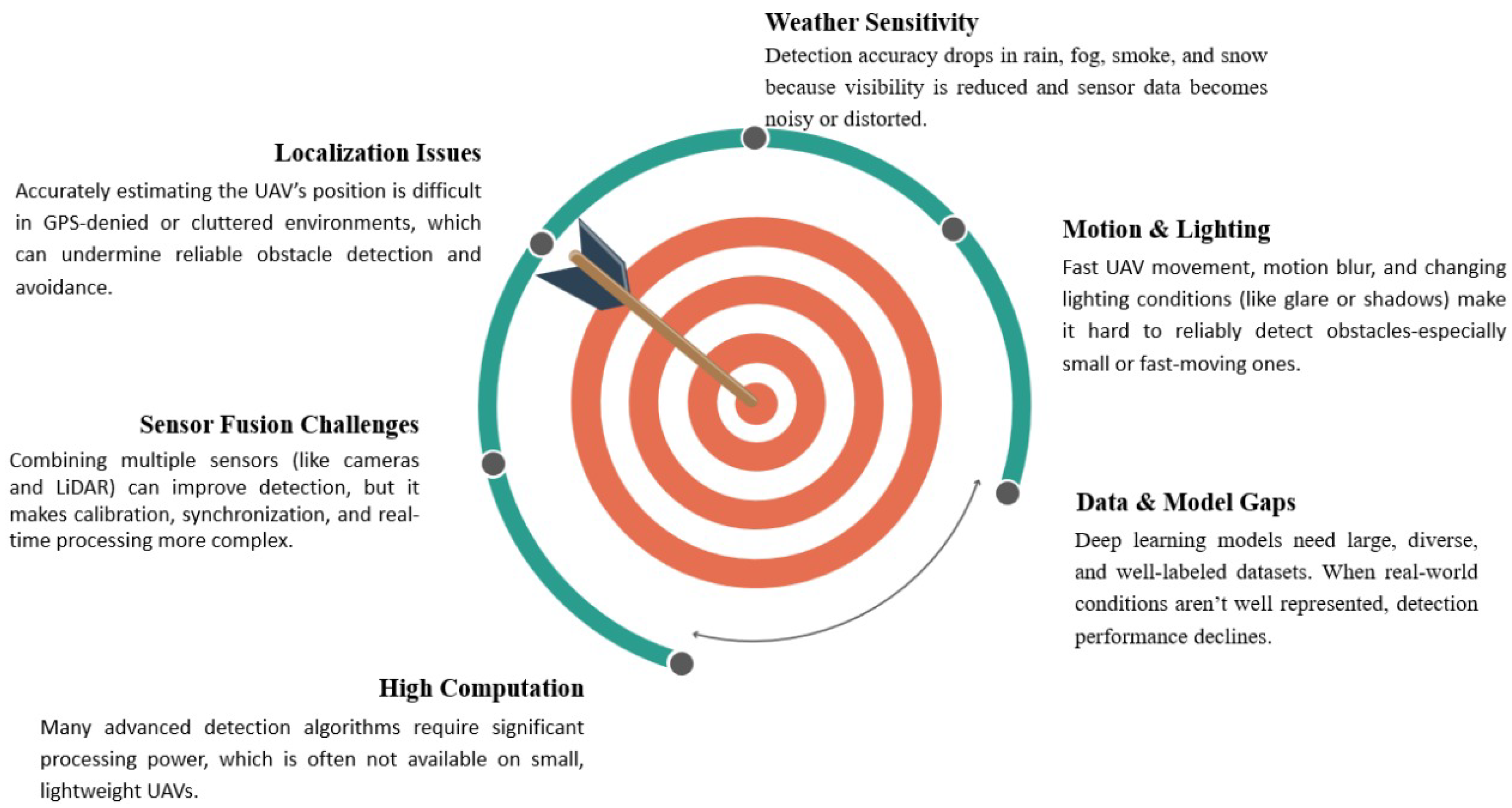
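The precision, recall, F1, and mAP definitions in this section can be illustrated with a short Python sketch. It is a minimal illustration and not the evaluation code used in the surveyed works. It assumes that each detection has already been matched against the ground truth (for example by an IoU threshold, which is not shown) and labelled as a true or false positive. The function names are hypothetical, and the all-point interpolation used for the area under the precision-recall curve is only one of several common conventions.

```python
from typing import Sequence


def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Return (precision, recall, F1) from raw detection counts."""
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom > 0 else 0.0
    return precision, recall, f1


def average_precision(
    scores: Sequence[float],
    is_true_positive: Sequence[bool],
    num_ground_truth: int,
) -> float:
    """Area under the precision-recall curve for one class.

    Assumption: detections were already matched to ground truth, so
    is_true_positive[i] says whether detection i is correct.
    """
    if num_ground_truth == 0 or len(scores) == 0:
        return 0.0
    # Rank detections from most to least confident.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls: list[float] = []
    precisions: list[float] = []
    for i in order:
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Interpolate: precision at each point becomes the max precision to its right.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum rectangle areas over each increase in recall.
    ap = 0.0
    prev_recall = 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(ap_per_class: Sequence[float]) -> float:
    """mAP = (1/N) * sum of AP_i over the N classes."""
    if len(ap_per_class) == 0:
        raise ValueError("at least one class is required")
    return sum(ap_per_class) / len(ap_per_class)
```

For example, `precision_recall_f1(8, 2, 4)` corresponds to 8 correct detections, 2 false alarms, and 4 missed obstacles. A per-class call to `average_precision` followed by `mean_average_precision` mirrors the mAP formula given above.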

8. Limitations of Current Detection Approaches

9. Future Directions

- Real-time Processing under Low Visibility: Adverse weather, such as fog, smoke, and heavy rain, significantly degrades sensor inputs. Future systems must improve computational efficiency to allow quick and dependable detection under such low-visibility conditions.

- Lightweight Deep Learning Models: Deep neural networks deliver high accuracy but are usually computationally expensive. There is a need for optimized, resource-efficient models that maintain high detection accuracy while working within the limited memory and power budgets of UAV platforms.

- Event-based Vision Processing: Event cameras offer important benefits in high-speed or low-light scenarios by capturing only the changes in a scene. However, existing algorithms for processing such data are immature. Further work is required to design robust algorithms and integrate them effectively with UAV systems for dynamic obstacle and aircraft detection.

- Adaptive Multi-Sensor Fusion: Combining inputs from RGB, LiDAR, radar, and event cameras can enhance detection robustness. Future strategies should dynamically adjust sensor weighting based on the environment (e.g., increasing radar weight in fog). However, challenges in sensor calibration and data synchronization remain.

- Zero-Shot Detection: This method enables UAVs to detect previously unseen object classes during deployment without having been trained on them. It holds great promise for unpredictable scenarios, such as natural disasters or emerging threats. Future work must concentrate on improving generalization capabilities and semantic understanding.

- Human-Machine Interfaces for UAV Control: Intuitive control systems, such as gesture-based interaction (e.g., wearable gloves), can improve responsiveness in real time, particularly in field operations. Such systems lower cognitive load and permit seamless human-drone collaboration.
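The adaptive multi-sensor fusion direction listed above (raising the radar weight in fog) can be sketched as a condition-dependent weighted average of per-sensor detection confidences. This is a minimal sketch under stated assumptions, not a method taken from the surveyed literature. The reliability table, its numeric values, and the function names are hypothetical and chosen only for illustration. The sketch also assumes that the sensors are already calibrated and time-synchronized, which the text identifies as an open challenge.

```python
from typing import Iterable, Mapping

# Hypothetical reliability priors per condition and sensor.
# The values are illustrative assumptions, not measured data.
RELIABILITY: dict[str, dict[str, float]] = {
    "clear": {"rgb": 1.0, "lidar": 1.0, "radar": 0.6, "event": 0.8},
    "fog": {"rgb": 0.2, "lidar": 0.4, "radar": 1.0, "event": 0.3},
}


def fusion_weights(condition: str, sensors: Iterable[str]) -> dict[str, float]:
    """Normalize the reliability priors of the available sensors to sum to 1."""
    priors = RELIABILITY[condition]
    raw = {name: priors[name] for name in sensors}
    total = sum(raw.values())
    if total <= 0:
        raise ValueError("no usable sensor for this condition")
    return {name: value / total for name, value in raw.items()}


def fuse_confidence(detections: Mapping[str, float], condition: str) -> float:
    """Weighted average of per-sensor confidences for one candidate obstacle."""
    weights = fusion_weights(condition, detections.keys())
    return sum(weights[name] * conf for name, conf in detections.items())


# Radar reports a likely obstacle while the camera sees almost nothing.
readings = {"rgb": 0.1, "radar": 0.9}
fused_in_fog = fuse_confidence(readings, "fog")
fused_in_clear = fuse_confidence(readings, "clear")
```

With these illustrative priors, the same pair of readings yields a higher fused confidence in fog than in clear weather, because the radar reading dominates once the camera is down-weighted. A deployed system would need to estimate the condition and the priors from data instead of a fixed table.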

10. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Priya, S.S.; Sanjana, P.S.; Yanamala, R.M.R.; Raj, R.D.A.; Pallakonda, A.; Napoli, C.; Randieri, C. Flight-Safe Inference: SVD-Compressed LSTM Acceleration for Real-Time UAV Engine Monitoring Using Custom FPGA Hardware Architecture. Drones 2025, 9, 494. [Google Scholar] [CrossRef]

- Yeddula, L.R.; Pallakonda, A.; Raj, R.D.A.; Yanamala, R.M.R.; Prakasha, K.K.; Kumar, M.S. YOLOv8n-GBE: A Hybrid YOLOv8n Model with Ghost Convolutions and BiFPN-ECA Attention for Solar PV Defect Localization. IEEE Access 2025, 13, 114012–114028. [Google Scholar] [CrossRef]

- Fiorio, M.; Galatolo, R.; Di Rito, G. Development and Experimental Validation of a Sense-and-Avoid System for a Mini-UAV. Drones 2025, 9, 96. [Google Scholar] [CrossRef]

- Fasano, G.; Opromolla, R. Analytical Framework for Sensing Requirements Definition in Non-Cooperative UAS Sense and Avoid. Drones 2023, 7, 621. [Google Scholar] [CrossRef]

- Gandhi, T.; Yang, M.-T.; Kasturi, R.; Camps, O.; Coraor, L.; McCandless, J. Detection of obstacles in the flight path of an aircraft. IEEE Trans. Aerosp. Electron. Syst. 2003, 39, 176–191. [Google Scholar] [CrossRef]

- Ameli, Z.; Aremanda, Y.; Friess, W.A.; Landis, E.N. Impact of UAV Hardware Options on Bridge Inspection Mission Capabilities. Drones 2022, 6, 64. [Google Scholar] [CrossRef]

- Merei, A.; Mcheick, H.; Ghaddar, A.; Rebaine, D. A Survey on Obstacle Detection and Avoidance Methods for UAVs. Drones 2025, 9, 203. [Google Scholar] [CrossRef]

- Ojdanić, D.; Gräf, B.; Sinn, A.; Yoo, H.W.; Schitter, G. Camera-guided real-time laser ranging for multi-UAV distance measurement. Appl. Opt. 2022, 61, 9233–9240. [Google Scholar] [CrossRef]

- Sumi, Y.; Kim, B.K.; Ogure, T.; Kodama, M.; Sakai, N.; Kobayashi, M. Impact of Rainfall on the Detection Performance of Non-Contact Safety Sensors for UAVs/UGVs. Sensors 2024, 24, 2713. [Google Scholar] [CrossRef]

- Singh, P.; Gupta, K.; Jain, A.K.; Jain, A.; Jain, A. Vision-based UAV detection in complex backgrounds and rainy conditions. In Proceedings of the 2024 2nd International Conference on Disruptive Technologies (ICDT), Greater Noida, India, 15–16 March 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1097–1102. [Google Scholar]

- Aswini, N.; Kumar, E.K.; Uma, S.V. UAV and obstacle sensing techniques—A perspective. Int. J. Intell. Unmanned Syst. 2018, 6, 32–46. [Google Scholar] [CrossRef]

- Fasano, G.; Accardo, D.; Tirri, A.E.; Moccia, A.; De Lellis, E. Sky region obstacle detection and tracking for vision-based UAS sense and avoid. J. Intell. Robot. Syst. 2016, 84, 121–144. [Google Scholar] [CrossRef]

- Esfahlani, S.S. Mixed reality and remote sensing application of unmanned aerial vehicle in fire and smoke detection. J. Ind. Inf. Integr. 2019, 15, 42–49. [Google Scholar] [CrossRef]

- Chao, P.-Y.; Hsu, W.-C.; Chen, W.-Y. Design of Automatic Correction System for UAV’s Smoke Trajectory Angle Based on KNN Algorithm. Electronics 2022, 11, 3587. [Google Scholar] [CrossRef]

- Loffi, J.M.; Wallace, R.J.; Vance, S.M.; Jacob, J.; Dunlap, J.C.; Mitchell, T.A.; Johnson, D.C. Pilot Visual Detection of Small Unmanned Aircraft on Final Approach during Nighttime Conditions. Int. J. Aviat. Aeronaut. Aerosp. 2021, 8, 11. [Google Scholar] [CrossRef]

- Wang, Z.; Zhao, D.; Cao, Y. Visual navigation algorithm for night landing of fixed-wing unmanned aerial vehicle. Aerospace 2022, 9, 615. [Google Scholar] [CrossRef]

- Zhan, Q.; Zhou, Y.; Zhang, J.; Sun, C.; Shen, R.; He, B. A novel method for measuring center-axis velocity of unmanned aerial vehicles through synthetic motion blur images. Auton. Intell. Syst. 2024, 4, 16. [Google Scholar] [CrossRef]

- Ruf, B.; Monka, S.; Kollmann, M.; Grinberg, M. Real-Time on-Board Obstacle Avoidance for UAVS Based on Embedded Stereo Vision. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, XLII-1, 363–370. [Google Scholar] [CrossRef]

- Wang, Z.; Zhao, D.; Cao, Y. Image Quality enhancement with applications to unmanned aerial vehicle obstacle detection. Aerospace 2022, 9, 829. [Google Scholar] [CrossRef]

- Li, J.; Xiong, X.; Yan, Y.; Yang, Y. A survey of indoor uav obstacle avoidance research. IEEE Access 2023, 11, 51861–51891. [Google Scholar] [CrossRef]

- Stambler, A.; Sherwin, G.; Rowe, P. Detection and reconstruction of wires using cameras for aircraft safety systems. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 697–703. [Google Scholar]

- Junior, V.A.A.; Cugnasca, P.S. Detecting cables and power lines in Small-UAS (Unmanned Aircraft Systems) images through deep learning. In Proceedings of the 2021 IEEE/AIAA 40th Digital Avionics Systems Conference (DASC), San Antonio, TX, USA, 3–7 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–7. [Google Scholar]

- Zhou, C.; Yang, J.; Zhao, C.; Hua, G. Accurate thin-structure obstacle detection for autonomous mobile robots. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 1–10. [Google Scholar]

- Shin, H.-S.; Tsourdos, A.; White, B.; Shanmugavel, M.; Tahk, M.-J. UAV conflict detection and resolution for static and dynamic obstacles. In Proceedings of the AIAA Guidance, Navigation and Control Conference and Exhibit, Honolulu, HI, USA, 18–21 August 2008; p. 6521. [Google Scholar]

- Chen, H.; Lu, P. Real-time identification and avoidance of simultaneous static and dynamic obstacles on point cloud for UAVs navigation. Robot. Auton. Syst. 2022, 154, 104124. [Google Scholar] [CrossRef]

- Lim, C.; Li, B.; Ng, E.M.; Liu, X.; Low, K.H. Three-dimensional (3D) dynamic obstacle perception in a detect-and-avoid framework for unmanned aerial vehicles. In Proceedings of the 2019 International Conference on Unmanned Aircraft Systems (ICUAS), Atlanta, GA, USA, 11–14 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 996–1004. [Google Scholar]

- Saunders, J.; Call, B.; Curtis, A.; Beard, R.; McLain, T. Static and dynamic obstacle avoidance in miniature air vehicles. In Proceedings of the Infotech@ Aerospace, Arlington, VA, USA, 26–29 September 2005; p. 6950. [Google Scholar]

- Aldao, E.; González-deSantos, L.M.; Michinel, H.; Higinio, G.-J. UAV obstacle avoidance algorithm to navigate in dynamic building environments. Drones 2022, 6, 16. [Google Scholar] [CrossRef]

- Sandino, J.; Vanegas, F.; Maire, F.; Caccetta, P.; Sanderson, C.; Gonzalez, F. UAV framework for autonomous onboard navigation and people/object detection in cluttered indoor environments. Remote Sens. 2020, 12, 3386. [Google Scholar] [CrossRef]

- Govindaraju, V.; Leng, G.; Qian, Z. Visibility-based UAV path planning for surveillance in cluttered environments. In Proceedings of the 2014 IEEE International Symposium on Safety, Security, Rescue Robotics, Toyako, Japan, 27–30 October 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 1–6. [Google Scholar]

- Gageik, N.; Benz, P.; Montenegro, S. Obstacle detection and collision avoidance for a UAV with complementary low-cost sensors. IEEE Access 2015, 3, 599–609. [Google Scholar] [CrossRef]

- Stambler, A.; Spiker, S.; Bergerman, M.; Singh, S. Toward autonomous rotorcraft flight in degraded visual environments: Experiments and lessons learned. In Proceedings of the Degraded Visual Environments: Enhanced, Synthetic, and External Vision Solutions, Baltimore, MD, USA, 19–20 April 2016; SPIE: Bellingham, WA, USA, 2016; Volume 9839, pp. 19–30. [Google Scholar]

- Sieberth, T.; Wackrow, R.; Chandler, J.H. Automatic detection of blurred images in UAV image sets. ISPRS J. Photogramm. Remote Sens. 2016, 122, 1–16. [Google Scholar] [CrossRef]

- Mao, Y.; Chen, M.; Wei, X.; Chen, B. Obstacle recognition and avoidance for UAVs under resource-constrained environments. IEEE Access 2020, 8, 169408–169422. [Google Scholar] [CrossRef]

- Jaffari, R.; Hashmani, M.A.; Reyes-Aldasoro, C.C.; Aziz, N.; Rizvi, S.S.H. Deep learning object detection techniques for thin objects in computer vision: An experimental investigation. In Proceedings of the 2021 7th International Conference on Control, Automation and Robotics (ICCAR), Singapore, 23–26 April 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 295–302. [Google Scholar]

- Baykara, H.C.; Bıyık, E.; Gül, G.; Onural, D.; Öztürk, A.S.; Yıldız, I. Tracking and classification of multiple moving objects in UAV videos. In Proceedings of the 2017 IEEE 29th International Conference on Tools with Artificial Intelligence (ICTAI), Boston, MA, USA, 6–8 November 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 945–950. [Google Scholar]

- Petrlík, M.; Báča, T.; Heřt, D.; Vrba, M.; Krajník, T.; Saska, M. A robust UAV system for operations in a constrained environment. IEEE Robot. Autom. Lett. 2020, 5, 2169–2176. [Google Scholar] [CrossRef]

- Bello, A.B.; Navarro, F.; Raposo, J.; Miranda, M.; Zazo, A.; Álvarez, M. Fixed-wing UAV flight operation under harsh weather conditions: A case study in Livingston island glaciers, Antarctica. Drones 2022, 6, 384. [Google Scholar] [CrossRef]

- Oktay, T.; Celik, H.; Turkmen, I. Maximizing autonomous performance of fixed-wing unmanned aerial vehicle to reduce motion blur in taken images. Proc. Inst. Mech. Eng. Part I J. Syst. Control Eng. 2018, 232, 857–868. [Google Scholar] [CrossRef]

- Eom, S.; Lee, H.; Park, J.; Lee, I. UAV-aided wireless communication designs with propulsion energy limitations. IEEE Trans. Veh. Technol. 2019, 69, 651–662. [Google Scholar] [CrossRef]

- Ben Miled, M.; Zeng, Q.; Liu, Y. Discussion on event-based cameras for dynamic obstacles recognition and detection for UAVs in outdoor environments. In UKRAS22 Conference “Robotics for Unconstrained Environments” Proceedings; EPSRC UK-RAS Network: Manchester, UK, 2022. [Google Scholar]

- Gallego, G.; Delbruck, T.; Orchard, G.; Bartolozzi, C.; Taba, B.; Censi, A.; Leutenegger, S.; Davison, A.; Conradt, J.; Daniilidis, K.; et al. Event-based Vision: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 169–190. [Google Scholar] [CrossRef]

- Dinaux, R.M. Obstacle Detection and Avoidance onboard an MAV using a Monocular Event-Based Camera. Master’s Thesis, Delft University of Technology, Delft, The Netherlands, 2021. [Google Scholar]

- Moffatt, A.; Platt, E.; Mondragon, B.; Kwok, A.; Uryeu, D.; Bhandari, S. Obstacle detection and avoidance system for small UAVs using a LiDAR. In Proceedings of the 2020 International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 1–4 September 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 633–640. [Google Scholar]

- Aldao, E.; Santos, L.M.G.-D.; González-Jorge, H. LiDAR based detect and avoid system for UAV navigation in UAM corridors. Drones 2022, 6, 185. [Google Scholar] [CrossRef]

- Zheng, L.; Zhang, P.; Tan, J.; Li, F. The obstacle detection method of uav based on 2D lidar. IEEE Access 2019, 7, 163437–163448. [Google Scholar] [CrossRef]

- Pao, W.Y.; Howorth, J.; Li, L.; Agelin-Chaab, M.; Roy, L.; Knutzen, J.; Baltazar-y-Jimenez, A.; Muenker, K. Investigation of automotive LiDAR vision in rain from material and optical perspectives. Sensors 2024, 24, 2997. [Google Scholar] [CrossRef] [PubMed]

- Forlenza, L.; Fasano, G.; Accardo, D.; Moccia, A.; Rispoli, A. Integrated obstacle detection system based on radar and optical sensors. In Proceedings of the AIAA Infotech@ Aerospace 2010, Atlanta, Georgia, 20–22 April 2010. AIAA 2010-3421. [Google Scholar]

- Papa, U.; Del Core, G.; Giordano, G.; Ponte, S. Obstacle detection and ranging sensor integration for a small unmanned aircraft system. In Proceedings of the 2017 IEEE International Workshop on Metrology for AeroSpace (MetroAeroSpace), Padua, Italy, 21–23 June 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 571–577. [Google Scholar]

- Poitevin, P.; Pelletier, M.; Lamontagne, P. Challenges in detecting UAS with radar. In Proceedings of the 2017 International Carnahan Conference on Security Technology (ICCST), Madrid, Spain, 23–26 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6. [Google Scholar]

- Gibbs, G.; Jia, H.; Madani, I. Obstacle detection with ultrasonic sensors and signal analysis metrics. Transp. Res. Procedia 2017, 28, 173–182. [Google Scholar] [CrossRef]

- Cazzato, D.; Cimarelli, C.; Sanchez-Lopez, J.L.; Voos, H.; Leo, M. A survey of computer vision methods for 2d object detection from unmanned aerial vehicles. J. Imaging 2020, 6, 78. [Google Scholar] [CrossRef] [PubMed]

- Vrba, M.; Walter, V.; Pritzl, V.; Pliska, M.; Báča, T.; Spurný, V.; Heřt, D.; Saska, M. On onboard LiDAR-based flying object detection. IEEE Trans. Robot. 2024, 41, 593–611. [Google Scholar] [CrossRef]

- Moses, A.; Rutherford, M.J.; Valavanis, K.P. Radar-based detection and identification for miniature air vehicles. In Proceedings of the 2011 IEEE International Conference on Control Applications (CCA), Denver, CO, USA, 28–30 September 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 933–940. [Google Scholar]

- Papa, U.; Core, G.D. Design of sonar sensor model for safe landing of an UAV. In Proceedings of the 2015 IEEE Metrology for Aerospace (MetroAeroSpace), Benevento, Italy, 4–5 June 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 346–350. [Google Scholar]

- Vera-Yanez, D.; Pereira, A.; Rodrigues, N.; Molina, J.P.; García, A.S.; Fernández-Caballero, A. Optical flow-based obstacle detection for mid-air collision avoidance. Sensors 2024, 24, 3016. [Google Scholar] [CrossRef]

- Thomas, B. Optical flow: Traditional approaches. In Computer Vision: A Reference Guide; Springer: Berlin/Heidelberg, Germany, 2021; pp. 921–925. [Google Scholar]

- Braillon, C.; Pradalier, C.; Crowley, J.L.; Laugier, C. Real-time moving obstacle detection using optical flow models. In Proceedings of the 2006 IEEE Intelligent Vehicles Symposium, Meguro-Ku, Japan, 13–15 June 2006; IEEE: Piscataway, NJ, USA, 2006; pp. 466–471. [Google Scholar]

- Collins, B.M.; Kornhauser, A.L. Stereo Vision for Obstacle Detection in Autonomous Navigation; DARPA Grand Challenge; Princeton University Technical Paper; Princeton University: Princeton, NJ, USA, 2006; pp. 255–264. [Google Scholar]

- Bernini, N.; Bertozzi, M.; Castangia, L.; Patander, M.; Sabbatelli, M. Real-time obstacle detection using stereo vision for autonomous ground vehicles: A survey. In Proceedings of the 17th International IEEE Conference on Intelligent Transportation Systems (ITSC), Qingdao, China, 8–11 October 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 873–878. [Google Scholar]

- Riabko, A.; Averyanova, Y. Comparative analysis of SIFT and SURF methods for local feature detection in satellite imagery. In Proceedings of the CEUR Workshop Proceedings, CMSE 2024, Kyiv, Ukraine, 17 June 2024; Volume 3732, pp. 21–31. [Google Scholar]

- Hussey, T.B. Surface Defect Detection in Aircraft Skin & Visual Navigation based on Forced Feature Selection through Segmentation. 2021. Available online: https://apps.dtic.mil/sti/trecms/pdf/AD1132718.pdf (accessed on 16 June 2005).

- Tsapparellas, K.; Jelev, N.; Waters, J.; Brunswicker, S.; Mihaylova, L.S. Vision-based runway detection and landing for unmanned aerial vehicle enhanced autonomy. In Proceedings of the 2023 IEEE International Conference on Mechatronics and Automation (ICMA), Harbin, China, 6–9 August 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 239–246. [Google Scholar]

- Kalsotra, R.; Arora, S. Background subtraction for moving object detection: Explorations of recent developments and challenges. Vis. Comput. 2022, 38, 4151–4178. [Google Scholar] [CrossRef]

- Bilous, N.; Malko, V.; Frohme, M.; Nechyporenko, A. Comparison of CNN-Based Architectures for Detection of Different Object Classes. AI 2024, 5, 113. [Google Scholar] [CrossRef]

- Tian, Y. Effective Image Enhancement and Fast Object Detection for Improved UAV Applications. Master’s Thesis, University of Strathclyde, Glasgow, UK, 2023. [Google Scholar]

- Kim, J.; Cho, J. Rgdinet: Efficient onboard object detection with faster r-cnn for air-to-ground surveillance. Sensors 2021, 21, 1677. [Google Scholar] [CrossRef]

- Ramesh, G.; Jeswin, Y.; Divith, R.R.; Suhaag, B.R.; Kiran Raj, K.M. Real Time Object Detection and Tracking Using SSD Mobilenetv2 on Jetbot GPU. In Proceedings of the 2024 IEEE International Conference on Distributed Computing, VLSI, Electrical Circuits and Robotics (DISCOVER), Mangalore, India, 18–19 October 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 255–260. [Google Scholar]

- Turay, T.; Vladimirova, T. Toward performing image classification and object detection with convolutional neural networks in autonomous driving systems: A survey. IEEE Access 2022, 10, 14076–14119. [Google Scholar] [CrossRef]

- Liu, S.; Li, Y.; Qu, J.; Wu, R. Airport UAV and birds detection based on deformable DETR. J. Phys. Conf. Ser. 2022, 2253, 012024. [Google Scholar]

- Şengül, F.; Adem, K. Detection of Military Aircraft Using YOLO and Transformer-Based Object Detection Models in Complex Environments. BilişIm Teknol. Derg. 2025, 18, 85–97. [Google Scholar] [CrossRef]

- Zheng, X.; Liu, Y.; Lu, Y.; Hua, T.; Pan, T.; Zhang, W.; Tao, D.; Wang, L. Deep learning for event-based vision: A comprehensive survey and benchmarks. arXiv 2023, arXiv:2302.08890. [Google Scholar]

- Sun, H. Moving Objects Detection and Tracking Using Hybrid Event-Based and Frame-Based Vision for Autonomous Driving. Ph.D. Dissertation, École centrale de Nantes, Nantes, France, 2023. [Google Scholar]

- Thakur, A.; Mishra, S.K. An in-depth evaluation of deep learning-enabled adaptive approaches for detecting obstacles using sensor-fused data in autonomous vehicles. Eng. Appl. Artif. Intell. 2024, 133, 108550. [Google Scholar] [CrossRef]

- Alaba, S.; Gurbuz, A.; Ball, J. A comprehensive survey of deep learning multisensor fusion-based 3d object detection for autonomous driving: Methods, challenges, open issues, and future directions. TechRxiv 2022. [Google Scholar] [CrossRef]

- Zhang, J. An aircraft image detection and tracking method based on improved optical flow method. In Proceedings of the 2020 Chinese Automation Congress (CAC), Shanghai, China, 6–8 November 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 2512–2516. [Google Scholar]

- Bota, S.; Nedevschi, S.; Konig, M. A framework for object detection, tracking and classification in urban traffic scenarios using stereovision. In Proceedings of the 2009 IEEE 5th International Conference on Intelligent Computer Communication and Processing, Cluj-Napoca, Romania, 27–29 August 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 153–156. [Google Scholar]

- Garcia-Garcia, B.; Bouwmans, T.; Silva, A.J.R. Background subtraction in real applications: Challenges, current models and future directions. Comput. Sci. Rev. 2020, 35, 100204. [Google Scholar] [CrossRef]

- Azam, B.; Khan, M.J.; Bhatti, F.A.; Maud, A.R.M.; Hussain, S.F.; Hashmi, A.J.; Khurshid, K. Aircraft detection in satellite imagery using deep learning-based object detectors. Microprocess. Microsyst. 2022, 94, 104630. [Google Scholar] [CrossRef]

- Alganci, U.; Soydas, M.; Sertel, E. Comparative research on deep learning approaches for airplane detection from very high-resolution satellite images. Remote Sens. 2020, 12, 458. [Google Scholar] [CrossRef]

- Jiang, Z.P.; Wang, Z.Q.; Zhang, Y.S.; Yu, Y.; Cheng, B.B.; Zhao, L.H.; Zhang, M.W. A vehicle object detection algorithm in UAV video stream based on improved Deformable DETR. Comput. Eng. Sci. 2024, 46, 91. [Google Scholar]

- Mitrokhin, A.; Fermüller, C.; Parameshwara, C.; Aloimonos, Y. Event-based moving object detection and tracking. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–9. [Google Scholar]

- Lee, S.; Seo, S.-W. Sensor fusion for aircraft detection at airport ramps using conditional random fields. IEEE Trans. Intell. Transp. Syst. 2022, 23, 18100–18112. [Google Scholar] [CrossRef]

- Ruz, J.J.; Pajares, G.; de la Cruz, J.M.; Arevalo, O. UAV Trajectory Planning for Static and Dynamic Environments; INTECH Open Access Publisher: London, UK, 2009. [Google Scholar]

- Fasano, G.; Accardo, D.; Moccia, A.; Moroney, D. Sense and avoid for unmanned aircraft systems. IEEE Aerosp. Electron. Syst. Mag. 2016, 31, 82–110. [Google Scholar] [CrossRef]

- Li, X.; Li, X.; Pan, H. Multi-scale vehicle detection in high-resolution aerial images with context information. IEEE Access 2020, 8, 208643–208657. [Google Scholar] [CrossRef]

- Hwang, S.; Lee, J.; Shin, H.; Cho, S.; Shim, D.H. Aircraft detection using deep convolutional neural network in small unmanned aircraft systems. In Proceedings of the 2018 AIAA Information Systems-AIAA Infotech@ Aerospace, Kissimmee, FL, USA, 8–12 January 2018; p. 2137. [Google Scholar]

- Xia, G.-S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A large-scale dataset for object detection in aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3974–3983. [Google Scholar]

- Al-Absi, M.A.; Fu, R.; Kim, K.-H.; Lee, Y.-S.; Al-Absi, A.A.; Lee, H.-J. Tracking unmanned aerial vehicles based on the Kalman filter considering uncertainty and error aware. Electronics 2021, 10, 3067. [Google Scholar] [CrossRef]

- Wang, T.; Chen, B.; Wang, N.; Ji, Y.; Li, H.; Zhang, M. Zero-shot obstacle detection using panoramic vision in farmland. J. Field Robot. 2024, 41, 2169–2183. [Google Scholar] [CrossRef]

- Ming, R.; Zhou, Z.; Lyu, Z.; Luo, X.; Zi, L.; Song, C.; Zang, Y.; Liu, W.; Jiang, R. Laser tracking leader-follower automatic cooperative navigation system for UAVs. Int. J. Agric. Biol. Eng. 2022, 15, 165–176. [Google Scholar] [CrossRef]

- Zou, C.; Li, L.; Cai, G.; Lin, R. Fixed-point landing method for unmanned aerial vehicles using multi-sensor pattern detection. Unmanned Syst. 2024, 12, 173–182. [Google Scholar] [CrossRef]

- Loureiro, G.; Dias, A.; Martins, A.; Almeida, J. Emergency landing spot detection algorithm for unmanned aerial vehicles. Remote Sens. 2021, 13, 1930. [Google Scholar] [CrossRef]

- Abdulridha, J.; Ampatzidis, Y.; Qureshi, J.; Roberts, P. Laboratory and UAV-based identification and classification of tomato yellow leaf curl, bacterial spot, and target spot diseases in tomato utilizing hyperspectral imaging and machine learning. Remote Sens. 2020, 12, 2732. [Google Scholar] [CrossRef]

- Lyu, Y.; Vosselman, G.; Xia, G.S.; Yilmaz, A.; Yang, M.Y. UAVid: A semantic segmentation dataset for UAV imagery. ISPRS J. Photogramm. Remote Sens. 2020, 165, 108–119. [Google Scholar] [CrossRef]

- Lyu, Y.; Vosselman, G.; Xia, G.; Yilmaz, A.; Yang, M.Y. The uavid dataset for video semantic segmentation. arXiv 2018, arXiv:1810.10438. [Google Scholar]

- Zeng, Y.; Duan, Q.; Chen, X.; Peng, D.; Mao, Y.; Yang, K. UAVData: A dataset for unmanned aerial vehicle detection. Soft Comput. 2021, 25, 5385–5393. [Google Scholar] [CrossRef]

- Cao, Y.; He, Z.; Wang, L.; Wang, W.; Yuan, Y.; Zhang, D.; Zhang, J.; Zhu, P.; Van Gool, L.; Han, J.; et al. VisDrone-DET2021: The vision meets drone object detection challenge results. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 2847–2854. [Google Scholar]

- Muzammul, M.; Algarni, A.; Ghadi, Y.Y.; Assam, M. Enhancing UAV aerial image analysis: Integrating advanced SAHI techniques with real-time detection models on the VisDrone dataset. IEEE Access 2024, 12, 21621–21633. [Google Scholar] [CrossRef]

- Vo, N.D.; Ngo, H.G.; Le, T.; Nguyen, Q.; Doan, D.D.; Nguyen, B.; Le-Huu, D.; Le-Duy, N.; Tran, H.; Nguyen, K.D.; et al. Aerial Data Exploration: An in-Depth Study From Horizontal to Oriented Viewpoint. IEEE Access 2024, 12, 37799–37824.

- Ding, J.; Xue, N.; Xia, G.S.; Bai, X.; Yang, W.; Yang, M.Y.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; et al. Object detection in aerial images: A large-scale benchmark and challenges. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7778–7796.

- Gao, Q.; Shen, X.; Niu, W. Large-scale synthetic urban dataset for aerial scene understanding. IEEE Access 2020, 8, 42131–42140.

- Tran, M.T.; Tran, B.V.; Vo, N.D.; Nguyen, K. UIT-DroneFog: Toward high-performance object detection via high-quality aerial foggy dataset. In Proceedings of the 2021 8th NAFOSTED Conference on Information and Computer Science (NICS), Hanoi, Vietnam, 21–22 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 290–295.

- Sakaridis, C.; Dai, D.; Van Gool, L. Semantic foggy scene understanding with synthetic data. Int. J. Comput. Vis. 2018, 126, 973–992.

- Binas, J.; Neil, D.; Liu, S.C.; Delbruck, T. DDD17: End-to-end DAVIS driving dataset. arXiv 2017, arXiv:1711.01458.

- Fang, W.; Zhang, G.; Zheng, Y.; Chen, Y. Multi-task learning for UAV aerial object detection in foggy weather condition. Remote Sens. 2023, 15, 4617.

- Henderson, P.; Ferrari, V. End-to-end training of object class detectors for mean average precision. In Proceedings of the Computer Vision–ACCV 2016: 13th Asian Conference on Computer Vision, Taipei, Taiwan, 20–24 November 2016; Revised Selected Papers, Part V 13. Springer: Berlin/Heidelberg, Germany, 2017; pp. 198–213.

- Sobti, A.; Arora, C.; Balakrishnan, M. Object detection in real-time systems: Going beyond precision. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1020–1028.

- Carrio, A.; Lin, Y.; Saripalli, S.; Campoy, P. Obstacle detection system for small UAVs using ADS-B and thermal imaging. J. Intell. Robot. Syst. 2017, 88, 583–595.

- Ali, W. Deep Learning-Based Obstacle-Avoiding Autonomous UAV for GPS-Denied Structures. Master’s Thesis, University of Manitoba, Winnipeg, MB, Canada, 2023.

- Li, Y.; Li, M.; Qi, J.; Zhou, D.; Zou, Z.; Liu, K. Detection of typical obstacles in orchards based on deep convolutional neural network. Comput. Electron. Agric. 2021, 181, 105932.

- He, Y.; Liu, Z. A feature fusion method to improve the driving obstacle detection under foggy weather. IEEE Trans. Transp. Electrif. 2021, 7, 2505–2515.

- Falanga, D.; Kim, S.; Scaramuzza, D. How fast is too fast? The role of perception latency in high-speed sense and avoid. IEEE Robot. Autom. Lett. 2019, 4, 1884–1891.

- Garnett, N.; Silberstein, S.; Oron, S.; Fetaya, E.; Verner, U.; Ayash, A.; Goldner, V.; Cohen, R.; Horn, K.; Levi, D. Real-time category-based and general obstacle detection for autonomous driving. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 198–205.

- Fang, Z.; Yang, S.; Jain, S.; Dubey, G.; Roth, S.; Maeta, S.; Nuske, S.; Zhang, Y.; Scherer, S. Robust autonomous flight in constrained and visually degraded shipboard environments. J. Field Robot. 2017, 34, 25–52.

- Martínez-Martín, E.; del Pobil, A.P. Motion detection in static backgrounds. In Robust Motion Detection in Real-Life Scenarios; Springer: London, UK, 2012; pp. 5–42.

- Paek, D.-H.; Kong, S.-H.; Wijaya, K.T. K-Radar: 4D radar object detection for autonomous driving in various weather conditions. Adv. Neural Inf. Process. Syst. 2022, 35, 3819–3829.

- Dell’Olmo, P.V.; Kuznetsov, O.; Frontoni, E.; Arnesano, M.; Napoli, C.; Randieri, C. Dataset Dependency in CNN-Based Copy-Move Forgery Detection: A Multi-Dataset Comparative Analysis. Mach. Learn. Knowl. Extr. 2025, 7, 54.

- Randieri, C.; Perrotta, A.; Puglisi, A.; Bocci, M.G.; Napoli, C. CNN-Based Framework for Classifying COVID-19, Pneumonia, and Normal Chest X-Rays. Big Data Cogn. Comput. 2025, 9, 186.

- Lee, J.; Wang, J.; Crandall, D.; Šabanović, S.; Fox, G. Real-time, cloud-based object detection for unmanned aerial vehicles. In Proceedings of the 2017 First IEEE International Conference on Robotic Computing (IRC), Taichung, Taiwan, 10–12 April 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 36–43.

- Shi, C.; Lai, G.; Yu, Y.; Bellone, M.; Lippiello, V. Real-time multi-modal active vision for object detection on UAVs equipped with limited field of view LiDAR and camera. IEEE Robot. Autom. Lett. 2023, 8, 6571–6578.

| Challenge | Description | Impact on UAV Operation | Detection Requirements |
|---|---|---|---|
| Thin Object Detection [35] | Difficulty in identifying small, low-contrast objects like wires, cables, and branches, especially in complex backgrounds. | High risk of collisions during low-altitude or urban flights. | High-resolution sensing, edge-enhancement algorithms, adaptive thresholding. |
| Real-Time Tracking of Moving Objects [36] | Obstacles like vehicles, humans, animals, and other UAVs constantly change position. | Requires fast decision-making in dynamic environments such as disaster zones or airports. | Temporal tracking, motion prediction, multi-frame data fusion. |
| Dense Stationary Environments [37] | Urban and forested areas present cluttered scenes with many static obstacles. | Poor detection may lead to inefficient routes or mid-air collisions. | Context-aware detection, semantic segmentation, spatial filtering. |
| Harsh Weather Conditions [38] | Rain, fog, smoke, and dust reduce visibility and sensor performance. | Degraded perception, increased false detections or failures. | Sensor fusion (e.g., radar + camera), robust feature extraction, weather-resilient models. |
| Motion Blur at High Speed [39] | Sudden movements or high-speed navigation cause image distortion and blur. | Reduces detection accuracy, especially in fast missions (e.g., surveillance, delivery). | Fast shutter sensors, motion-deblurring algorithms, event-based cameras. |
| Limited Onboard Computing Power [40] | UAVs have constraints on weight, power, and processing hardware. | Real-time detection becomes difficult without lightweight models. | Efficient algorithms, model pruning, hardware acceleration (e.g., TPU/FPGA). |

| Sensor Type | Working Principle | Advantage in Bad Conditions | Disadvantage in Bad Conditions | Best for Challenging Conditions |
|---|---|---|---|---|
| RGB Camera [52] | Uses visible light (RGB), infrared (IR) for heat signatures, or detects changes in scenes (event-based). | High-resolution images, low cost; works well in low light and detects heat (with IR). | Struggles in fog, rain, smoke, and low light; poor in heavy rain, fog, and smoke. | Night-time detection with IR aid; thermal detection. |
| LiDAR [53] | Emits laser pulses to construct a 3D map of the surroundings based on light return time. | Accurate depth; operates in low light. | Affected by rain, fog, and smoke. | 3D environment mapping. |
| Radar [54] | Emits radio waves and detects objects by measuring the reflected signals. | Works well in rain, fog, and smoke. | Low resolution; suffers from clutter in urban areas. | Long-range detection in poor visibility. |
| Sonar [55] | Uses sound waves to detect objects by measuring their reflections. | Short-range detection, good in low light. | Limited range, impacted by ambient noise. | Close-range obstacle detection. |
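
The LiDAR and sonar rows both describe ranging from the return time of an emitted pulse. A minimal sketch of that time-of-flight calculation follows. The function name, the propagation speeds, and the example delays are illustrative assumptions, not values taken from the cited works.

```python
# Illustrative constants (assumptions): real propagation speeds vary with
# the medium, temperature, and humidity.
SPEED_OF_LIGHT_M_S = 299_792_458.0  # vacuum value, a close approximation in air
SPEED_OF_SOUND_M_S = 343.0          # dry air at roughly 20 degrees Celsius


def round_trip_range(echo_delay_s: float, wave_speed_m_s: float) -> float:
    """Return the one-way distance in metres for a measured round-trip delay."""
    if echo_delay_s < 0:
        raise ValueError("echo delay must be non-negative")
    # The pulse travels to the target and back, so halve the path length.
    return wave_speed_m_s * echo_delay_s / 2.0


# Hypothetical echo delays chosen only to show the scale of each sensor.
lidar_range_m = round_trip_range(200e-9, SPEED_OF_LIGHT_M_S)  # about 30 m
sonar_range_m = round_trip_range(0.01, SPEED_OF_SOUND_M_S)    # about 1.7 m
```

The nanosecond-scale delay for LiDAR versus the millisecond-scale delay for sonar hints at why the two sensors need very different timing electronics and suit different ranges.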

| Method | Type | Strengths | Limitations in Challenging Conditions | Typical Use Cases |
|---|---|---|---|---|
| Optical Flow [76] | Classical | Estimates motion; useful for dynamic obstacle detection. | Sensitive to rain, fog, motion blur; errors in poor lighting. | Motion tracking, obstacle avoidance. |
| Stereo Vision [77] | Classical | Depth estimation; emulates human binocular vision. | Poor performance in fog/smoke; low accuracy in textureless scenes. | 3D mapping, distance measurement. |
| Feature Tracking (SIFT, SURF) [61] | Classical | Reliable on textured surfaces; rotation/scale invariant. | Fails under blur, low contrast, rain/fog distortion. | Object tracking, navigation. |
| Background Subtraction [78] | Classical | Simple and fast for detecting moving objects. | Unreliable under lighting changes, fog, rain; not robust in dynamic scenes. | Surveillance, simple obstacle detection. |
| YOLO, Faster R-CNN, SSD [79,80] | Deep Learning | Real-time, high accuracy; multiple object detection. | Needs diverse training data; performance drops in unseen bad conditions. | Aircraft and obstacle detection, real-time UAV vision. |
| DETR, Deformable DETR [81] | Deep Learning | Good for cluttered scenes; models long-range dependencies. | Computationally costly; limited on UAV hardware. | Complex object detection, multi-target tracking. |
| Event-based Vision Networks [82] | Deep Learning | Effective in rapid movement and low light; low latency. | Needs event cameras; limited datasets and hardware support. | High-speed UAV navigation, blur-resistant detection. |
| Sensor Fusion (Camera + LiDAR) [83] | Deep Learning | Combines depth and texture; improves detection in fog/rain. | Complex calibration; high computational load. | Robust UAV detection in bad weather. |
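
To make the background subtraction row concrete, the sketch below uses plain frame differencing in NumPy, one simple variant of the technique rather than the specific method of [78]. The function name, the threshold, and the synthetic 6×6 frames are assumptions chosen for illustration.

```python
import numpy as np


def moving_pixel_mask(background, frame, threshold=25):
    """Flag pixels whose intensity differs from the background by more than threshold."""
    # Cast to a signed type first so the uint8 subtraction cannot wrap around.
    diff = np.abs(frame.astype(np.int16) - background.astype(np.int16))
    return diff > threshold


# Synthetic grayscale scene: a flat background and a small bright object.
background = np.full((6, 6), 100, dtype=np.uint8)
frame = background.copy()
frame[2:4, 2:4] = 200
object_mask = moving_pixel_mask(background, frame)

# The same scene with a uniform brightness shift and no object at all.
brighter = np.clip(background.astype(np.int16) + 40, 0, 255).astype(np.uint8)
lighting_mask = moving_pixel_mask(background, brighter)
```

In this toy case `object_mask` marks only the four object pixels, while `lighting_mask` marks every pixel as moving. That second result is the lighting sensitivity listed in the table, and a moving UAV camera produces a similar effect because the whole background shifts between frames.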

| Detection Type | Sensors Used | Algorithms/Models | Limitations in Challenging Conditions | Typical Use Cases |
|---|---|---|---|---|
| Obstacle Detection | LiDAR, RGB/stereo cameras, ultrasonic, event cameras | SLAM, YOLO, optical flow, semantic segmentation [76,77,79] | Sensitive to fog, rain, thin objects, motion blur; short-range limitations | Urban navigation, low-altitude flight, indoor mapping, precision landing |
| Aircraft Detection | Radar, infrared, thermal, long-range vision, ADS-B | Kalman filter, tracking networks, CNN/DETR models [90] | Issues with long-range accuracy, small object visibility, dynamic flight paths | Airspace monitoring, collision avoidance, multi-UAV coordination |
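
The aircraft detection row lists the Kalman filter among its tracking tools. As a rough illustration, the sketch below tracks a single coordinate with a constant-velocity model. The state layout, the noise variances, and the synthetic measurements are all assumptions; a real tracker would use at least three spatial dimensions and noise values tuned to the sensor.

```python
import numpy as np


def kalman_track(measurements, dt=1.0, process_var=1e-3, meas_var=0.25):
    """Track position and velocity along one axis from position-only measurements."""
    F = np.array([[1.0, dt], [0.0, 1.0]])  # constant-velocity state transition
    H = np.array([[1.0, 0.0]])             # only position is observed
    Q = process_var * np.eye(2)            # process noise (assumed value)
    R = np.array([[meas_var]])             # measurement noise (assumed value)
    x = np.array([[float(measurements[0])], [0.0]])  # initial position, zero velocity
    P = np.eye(2) * 10.0                   # large initial uncertainty
    estimates = []
    for z in measurements:
        # Predict the state forward by one time step.
        x = F @ x
        P = F @ P @ F.T + Q
        # Correct the prediction with the new measurement.
        innovation = np.array([[float(z)]]) - H @ x
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)
        x = x + K @ innovation
        P = (np.eye(2) - K @ H) @ P
        estimates.append((float(x[0, 0]), float(x[1, 0])))
    return estimates


# Noise-free synthetic target moving at 2 units per step.
positions = [2.0 * k for k in range(20)]
track = kalman_track(positions)
final_position, final_velocity = track[-1]
```

Although velocity is never measured directly, the filter infers it from successive positions, which is what allows short-horizon prediction of an intruder's path for collision avoidance.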

| Dataset | Environment | Weather/Lighting | Type | Annotations | Key Applications |
|---|---|---|---|---|---|
| UAVid [97] | Urban (real-world) | Mostly clear, daylight | Real-world | Semantic segmentation | Urban scene understanding, object detection |
| VisDrone [98] | Urban, crowded scenes | Varies (clear, overcast, etc.) | Real-world | Bounding boxes, object class, tracking | Object detection, tracking, crowd analysis |
| DOTA [88] | Aerial/satellite images | Clear (daylight scenes) | Real-world | Oriented bounding boxes | Aircraft/vehicle detection from aerial views |
| Foggy Cityscapes [106] | Urban (synthetic fog) | Foggy conditions (simulated) | Synthetic | Pixel-level segmentation | Adverse weather evaluation, semantic segmentation |

| Method | Sensors Used | Conditions Handled | Strengths | Weaknesses |
|---|---|---|---|---|
| Optical Flow [76] | RGB Camera | Limited fog, moderate motion blur | Simple, lightweight | Fails in poor lighting, sensitive to noise |
| Stereo Vision [77] | Dual RGB Cameras | Good in daylight, not in fog/rain/night | Depth estimation | High computation, unreliable in bad weather |
| Feature Tracking (SIFT, SURF) [61] | RGB Camera | Clear, moderately cluttered scenes | Robust to scale/rotation | Fails in low-texture, fog, smoke |
| Motion Detection [116] | RGB Camera | Static backgrounds, low clutter | Fast, low resource | Not effective in UAV motion/dynamic scenes |
| YOLO/Faster R-CNN/SSD [79,80] | RGB/IR Camera | Some fog, night with IR | High accuracy, fast inference | Needs large dataset, struggles in dense fog/rain |
| Deformable DETR [81] | RGB Camera | Clutter, blur, partial occlusion | Captures global context | Heavy model, higher latency |
| Event-based Neural Networks [82] | Event Camera | Fast motion, low light, blur | Excellent for motion blur, low latency | Expensive hardware, limited datasets |
| Sensor Fusion (Camera+LiDAR) [83] | RGB/IR Camera + LiDAR | Fog, clutter, occlusion | Combines depth/image, robust | Complex calibration, degraded in heavy rain |
| Radar-based Detection [117] | Radar (Camera) | Rain, fog, smoke | Works in harsh weather, long range | Low spatial resolution |
| Sonar-based Detection [55] | Sonar | Short-range, indoor/low-speed | Lightweight, noise resistant | Limited range/accuracy |
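
The stereo vision row credits the method with depth estimation. Under the standard rectified pinhole model, depth follows Z = f·B/d, where f is the focal length in pixels, B the camera baseline, and d the disparity. The sketch below applies that relation. The focal length, baseline, and disparities are hypothetical values, and the function name is illustrative.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in metres from the rectified-stereo relation Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, 12 cm baseline.
FOCAL_PX = 700.0
BASELINE_M = 0.12

near_m = depth_from_disparity(FOCAL_PX, BASELINE_M, 42.0)        # about 2 m
far_m = depth_from_disparity(FOCAL_PX, BASELINE_M, 2.0)          # about 42 m
far_m_one_px_off = depth_from_disparity(FOCAL_PX, BASELINE_M, 1.0)  # about 84 m
```

A one-pixel matching error at long range doubles the estimated depth in this example. This is one plausible reason stereo becomes unreliable when fog, rain, or low texture makes pixel matching noisy, as the table notes.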

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Randieri, C.; Ganesh, S.V.; Raj, R.D.A.; Yanamala, R.M.R.; Pallakonda, A.; Napoli, C. Aerial Autonomy Under Adversity: Advances in Obstacle and Aircraft Detection Techniques for Unmanned Aerial Vehicles. Drones 2025, 9, 549. https://doi.org/10.3390/drones9080549
