Fast Detection of Plants in Soybean Fields Using UAVs, YOLOv8x Framework, and Image Segmentation

Abstract

1. Introduction

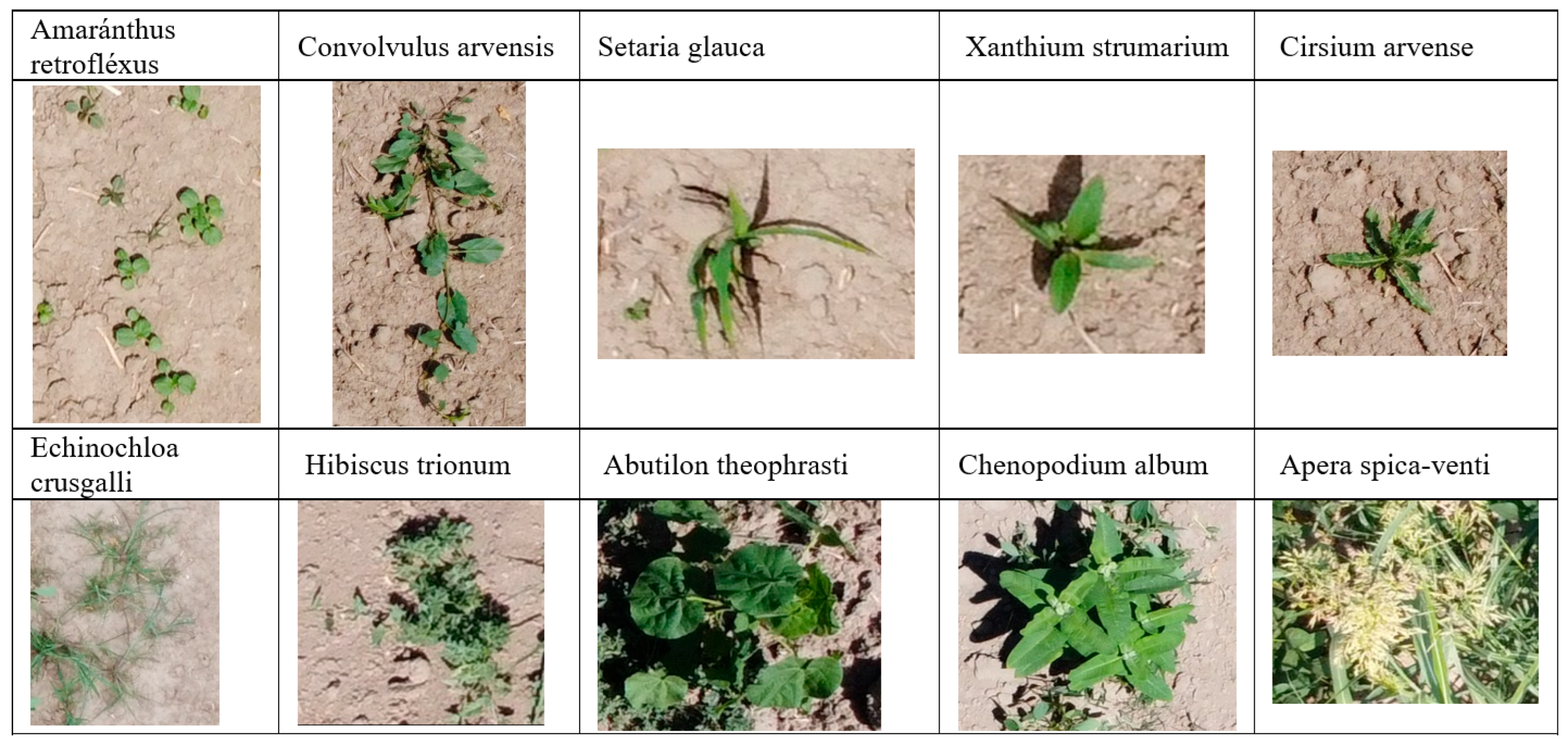

- A new dataset of soybean field images was developed; it contains annotations not only of soybean plants but also of seven common weed species found in agricultural fields in Central Asia.

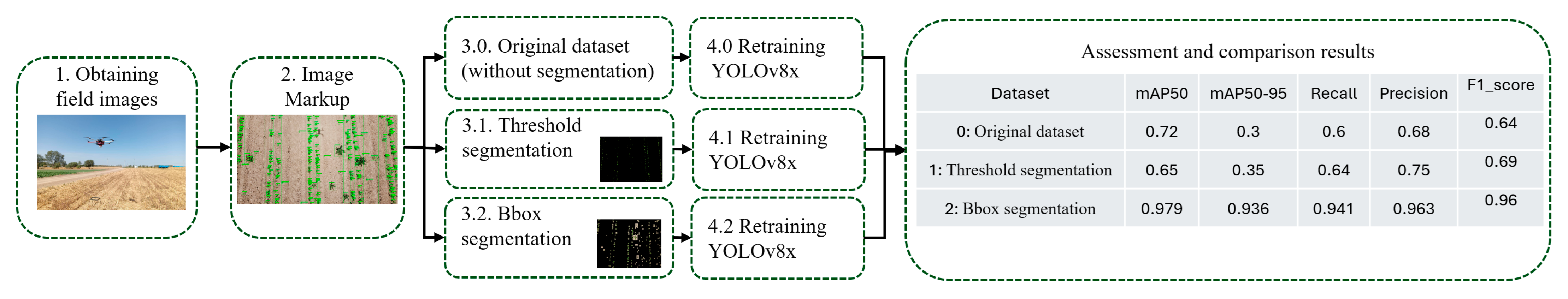

- A method for preprocessing the training data using Bbox segmentation is proposed; it substantially improves both classification and localization accuracy. With this approach, the F1 score increased from 0.64 to 0.959 and mAP@0.5 from 0.72 to 0.979.

- The highest classification accuracy reported to date for soybean recognition from UAV images was achieved with the YOLOv8x algorithm (F1 score = 0.984).

- The proposed model detects plants considerably faster than previously described approaches, with a 13-fold speedup over results reported in the literature. The trained model processes one image in 20.9 ms and can run detection on a video stream at 47 frames per second, which makes real-time plant detection possible.

- The second section describes the materials and methods in detail, including the procedures for data collection, image labeling, and object detection.

- The third section presents the results of the experiments conducted using the proposed approach.

- The fourth section analyzes and discusses the results, focusing on their comparative evaluation and interpretation.

- The conclusion summarizes the results of the study and identifies possible directions for future research.
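The real-time claim among the contributions above follows from simple arithmetic: sequential throughput is the reciprocal of per-image latency. The sketch below is illustrative, not the authors' benchmark code; it assumes the 20.9 ms figure covers all per-frame work and that frames are processed one at a time. Under those assumptions the model sustains about 47.8 frames per second, which keeps up with the camera's 24/25/30 fps video modes (Appendix B) but not with its 48/50/60 fps modes.

```python
def max_fps(latency_ms: float) -> float:
    """Highest sustainable frame rate when each frame takes `latency_ms` to process."""
    return 1000.0 / latency_ms


def keeps_up(latency_ms: float, video_fps: float) -> bool:
    """True if one frame is processed within the frame interval of the video stream."""
    return latency_ms <= 1000.0 / video_fps


if __name__ == "__main__":
    latency = 20.9  # ms per image, as reported in the text
    print(f"{max_fps(latency):.1f} fps")  # 47.8 fps
    for fps in (24, 25, 30, 48, 50, 60):  # video frame rates listed in Appendix B
        print(fps, "fps:", "real time" if keeps_up(latency, fps) else "too slow")
```

Any extra per-frame work (decoding, resizing, drawing results) would lower the sustainable rate, so the margin at 30 fps (33.3 ms per frame) is the more relevant figure in practice.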

2. Materials and Methods

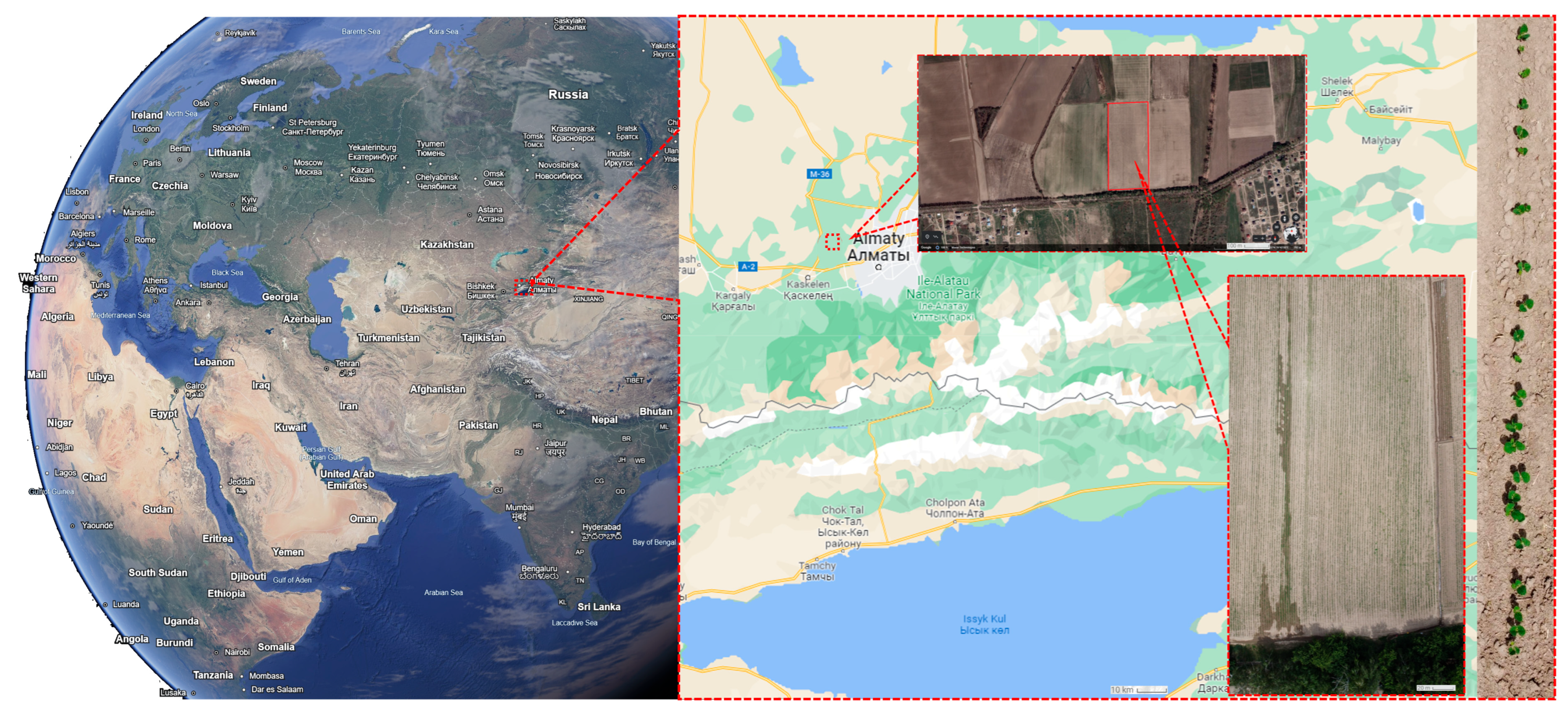

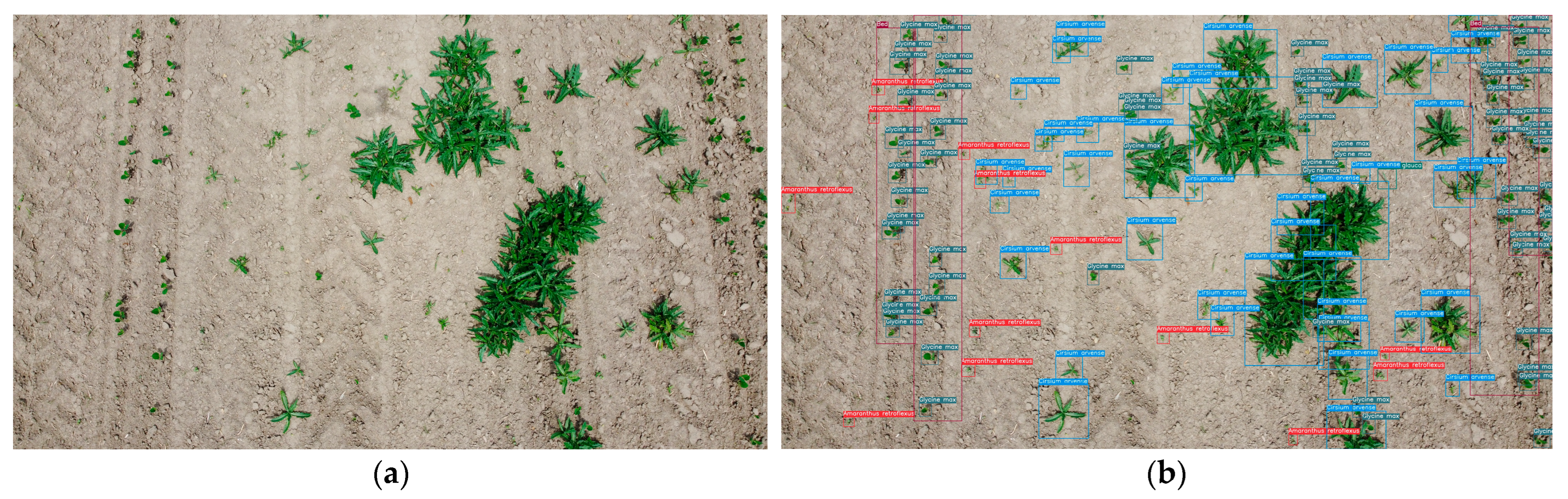

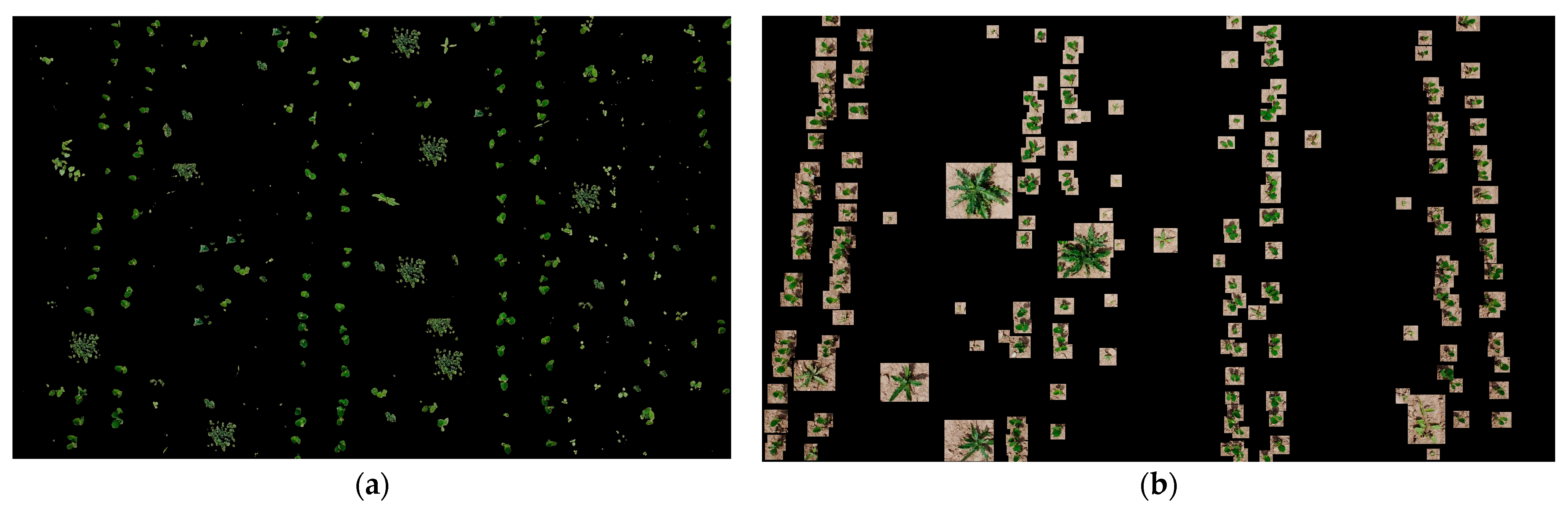

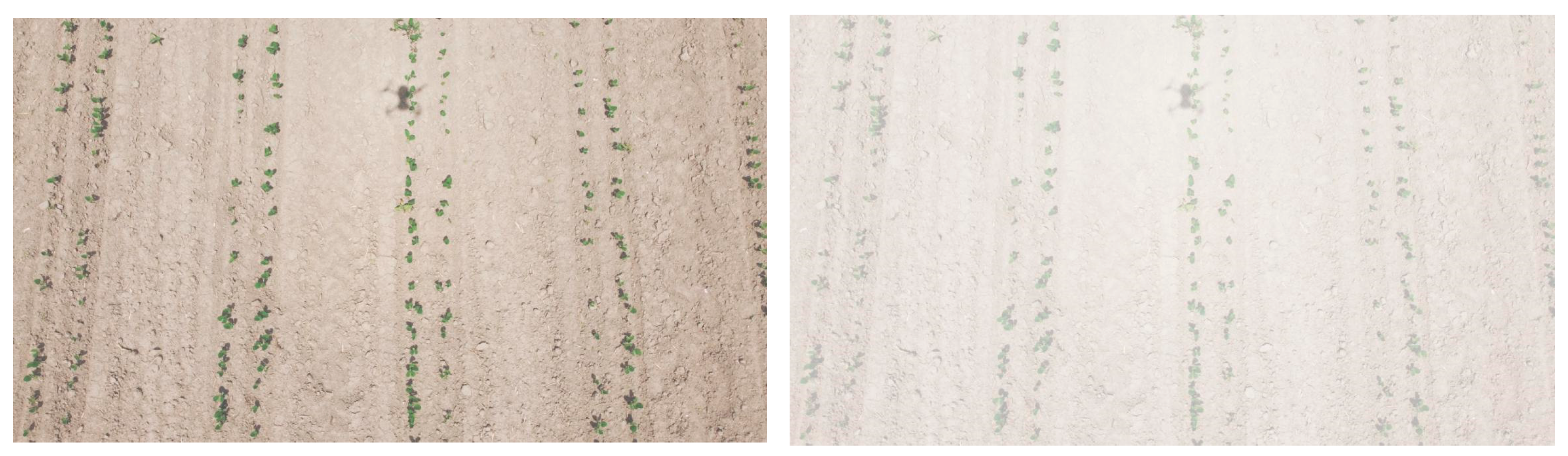

- Field images were obtained during routine experimental flights over soybean fields. The flights were conducted with a commercially available DJI Mavic Mini 2 UAV (SZ DJI Technology Co., Ltd., Shenzhen, China) equipped with a 4K RGB camera (1/2.3″ CMOS sensor, 12 MP effective, 83° FOV, images of 4000 × 2250 pixels) at altitudes of 4 to 10 m (see Figure 4a).
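Altitude, field of view, and image width together determine how much ground one pixel covers (the ground sampling distance, GSD), which limits how small a seedling can be resolved. The sketch below is not from the paper; it assumes a pinhole camera pointing straight down, that the 83° FOV is the diagonal of the full 4:3 frame (4000 × 3000), and that the 16:9 mode (4000 × 2250) crops the frame vertically without changing its width. Under these assumptions the GSD is roughly 1.4 mm per pixel at 4 m and 3.5 mm per pixel at 10 m.

```python
import math

FULL_W_PX, FULL_H_PX = 4000, 3000  # full 4:3 frame (Appendix B)
DIAG_FOV_DEG = 83.0                # assumption: diagonal FOV of the full 4:3 frame


def tan_half_hfov() -> float:
    """tan(HFOV / 2) derived from the diagonal FOV under a pinhole-camera model."""
    diag_px = math.hypot(FULL_W_PX, FULL_H_PX)  # 5000 px
    return math.tan(math.radians(DIAG_FOV_DEG / 2)) * FULL_W_PX / diag_px


def footprint_m(altitude_m: float, img_w_px: int = 4000, img_h_px: int = 2250):
    """Ground width and height (m) of one nadir image.

    The 16:9 frame is assumed to be a vertical crop of the 4:3 frame,
    so its ground width equals that of the full frame.
    """
    width = 2 * altitude_m * tan_half_hfov()
    return width, width * img_h_px / img_w_px  # square pixels assumed


def gsd_mm(altitude_m: float, img_w_px: int = 4000) -> float:
    """Ground sampling distance: ground length covered by one pixel, in millimetres."""
    width, _ = footprint_m(altitude_m)
    return 1000 * width / img_w_px


if __name__ == "__main__":
    for h in (4, 10):  # altitude range used in the survey
        w, d = footprint_m(h)
        print(f"{h:>2} m: {w:.2f} m x {d:.2f} m on the ground, GSD {gsd_mm(h):.2f} mm/px")
```

Because the GSD grows linearly with altitude, flying at 10 m instead of 4 m makes each plant span 2.5 times fewer pixels in each direction.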

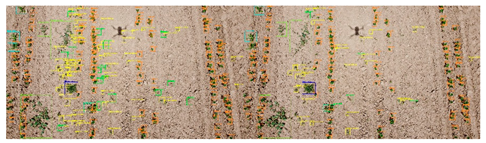

- Image labeling was performed in CVAT: most plants in the images were enclosed in bounding boxes and annotated with one of 10 weed and soybean classes (see Figure 4b). The annotated images were split in an 80/20 ratio into a training set (300 images) and a test set (76 images).
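YOLOv8 trains on plain-text labels with one line per box: a class index followed by the box centre, width, and height, all normalized by the image size. The sketch below shows that conversion and a reproducible 80/20 split. It assumes boxes are available as pixel corner coordinates (as in a CVAT export); the function names, file names, and box coordinates are hypothetical. It is not the authors' code, but with floor rounding it reproduces the 300/76 split of 376 images.

```python
import random


def to_yolo_line(cls_id: int, x_min: float, y_min: float, x_max: float, y_max: float,
                 img_w: int, img_h: int) -> str:
    """Convert a pixel-corner box into a YOLO label line: class, centre x/y, width,
    height, with coordinates normalised by the image size."""
    cx = (x_min + x_max) / 2 / img_w
    cy = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{cls_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


def split_train_test(image_names, train_fraction: float = 0.8, seed: int = 0):
    """Reproducibly shuffle image names and split them into training and test lists."""
    names = sorted(image_names)                 # sort first so the result ignores input order
    random.Random(seed).shuffle(names)
    n_train = int(train_fraction * len(names))  # floor, so 376 images -> 300 / 76
    return names[:n_train], names[n_train:]


if __name__ == "__main__":
    # Class 1 is Glycine max in the class table; the box coordinates are made up.
    print(to_yolo_line(1, 100, 200, 300, 400, 4000, 2250))
    # -> 1 0.050000 0.133333 0.050000 0.088889
    train, test = split_train_test([f"img_{i:03d}.jpg" for i in range(376)])
    print(len(train), len(test))  # 300 76
```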

- Segmentation. The purpose of segmentation is to remove the background, together with unlabeled objects, from each image. Two approaches were used for this purpose.

- 3.1.

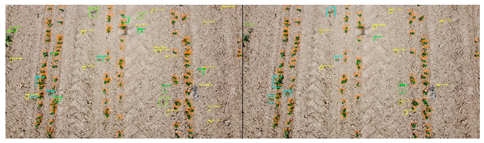

- The first approach implemented in the experiment 1 provided threshold segmentation of plant images using the plantcv library [23]. In the process of threshold segmentation, each pixel of the image is compared with a given brightness threshold selected during the preliminary experiments in the range from 0 to 255. The best result is obtained at the threshold equal to 144. The brightness of pixels with a value lower than the threshold is set to 0. Pixels with a brightness higher than the threshold are not changed (see Figure 5a).
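The thresholding rule described in 3.1 can be sketched in a few lines of NumPy. This is a re-implementation of the rule as stated, not PlantCV's API. The text does not specify how pixel brightness is computed, so the sketch assumes the ITU-R BT.601 luma of an 8-bit RGB image and keeps pixels exactly at the threshold.

```python
import numpy as np


def threshold_segment(rgb: np.ndarray, threshold: int = 144) -> np.ndarray:
    """Set pixels darker than `threshold` to 0 and leave the remaining pixels unchanged.

    Assumption: brightness is the BT.601 luma of an 8-bit RGB image, and pixels
    exactly at the threshold are kept.
    """
    rgb = np.asarray(rgb)
    luma = 0.299 * rgb[..., 0] + 0.587 * rgb[..., 1] + 0.114 * rgb[..., 2]
    keep = luma >= threshold                           # boolean H x W mask
    return np.where(keep[..., None], rgb, 0).astype(rgb.dtype)
```

Applied to an H × W × 3 `uint8` array, the function returns an array of the same shape and type in which every pixel below the brightness threshold is black.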

- 3.2.

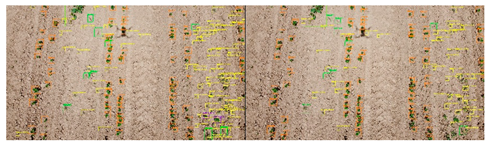

- The second segmentation option (experiment 2 or Bbox segmentation) involved removing all unlabeled plant and background images outside the bounding box (Bbox) (see Figure 5b).
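Bbox segmentation can be sketched as a union-of-boxes mask. The sketch assumes labels in the normalized YOLO convention (centre x, centre y, width, height); pixels inside at least one labeled box are kept and all others are set to zero. The function name is hypothetical, and the authors' implementation may differ, for example in how box edges are rounded to pixels.

```python
import numpy as np


def bbox_segment(img: np.ndarray, boxes_yolo) -> np.ndarray:
    """Keep only pixels inside at least one labelled box; zero everything else.

    `boxes_yolo` holds (cx, cy, w, h) tuples normalised to [0, 1] (YOLO convention).
    """
    img = np.asarray(img)
    h_px, w_px = img.shape[:2]
    mask = np.zeros((h_px, w_px), dtype=bool)
    for cx, cy, w, h in boxes_yolo:
        # Convert the normalised box to pixel bounds, clipped to the image.
        x0 = max(int(round((cx - w / 2) * w_px)), 0)
        x1 = min(int(round((cx + w / 2) * w_px)), w_px)
        y0 = max(int(round((cy - h / 2) * h_px)), 0)
        y1 = min(int(round((cy + h / 2) * h_px)), h_px)
        mask[y0:y1, x0:x1] = True  # union of all boxes
    out = np.zeros_like(img)
    out[mask] = img[mask]
    return out
```

Note that background pixels lying inside a box are kept, so the model still sees some soil around each plant.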

- YOLOv8x retraining and evaluation of the results. The YOLOv8x model, which has 70–80 million trainable parameters [24], was used in the experiments. It was pre-trained on the COCO (Common Objects in Context) dataset, which contains objects of 80 classes ranging from people and cars to toothbrushes [25,26]. Retraining was performed independently on three datasets: the dataset for experiment 1 (threshold segmentation), the dataset for experiment 2 (Bbox segmentation), and the original dataset without segmentation; the same set of test images was used to evaluate all three. To improve the generalization ability of the model and its quality metrics, the training set was extended using the imgaug image augmentation library [27]. A total of 1000 augmented images were added and used for training in experiments 1 and 2. The augmentation parameters are described in Appendix C.
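One retraining run could be set up with the Ultralytics Python package, which distributes YOLOv8, roughly as follows. This is a minimal sketch: the paper's training settings are not given here, so the epochs, image size, and directory layout are placeholders. Class IDs follow the class table in this article. The `ultralytics` import sits inside `retrain` so that the dataset-file helper works without the package installed.

```python
# Class IDs and names as listed in the class table of this article.
CLASS_NAMES = [
    "Bed", "Glycine max", "Amaranthus retroflexus", "Convolvulus arvensis",
    "Setaria glauca", "Xanthium strumarium", "Cirsium arvense",
    "Echinochloa crusgalli", "Hibiscus trionum", "Abutilon theophrasti",
    "Chenopodium album", "Apera spica-venti",
]


def data_yaml(root: str, train_dir: str = "images/train", val_dir: str = "images/test") -> str:
    """Text of an Ultralytics dataset file for one dataset variant.

    The directory layout (root/images/train, root/images/test) is hypothetical.
    """
    lines = [f"path: {root}", f"train: {train_dir}", f"val: {val_dir}", "names:"]
    lines += [f'  {i}: "{name}"' for i, name in enumerate(CLASS_NAMES)]
    return "\n".join(lines) + "\n"


def retrain(yaml_path: str, epochs: int = 100, imgsz: int = 640):
    """Fine-tune COCO-pretrained YOLOv8x on one dataset variant and validate it.

    Epochs and image size are placeholders, not the authors' settings.
    """
    from ultralytics import YOLO  # lazy import: needs `pip install ultralytics`

    model = YOLO("yolov8x.pt")    # COCO-pretrained weights, downloaded on first use
    model.train(data=yaml_path, epochs=epochs, imgsz=imgsz)
    return model.val()            # precision, recall, mAP50, mAP50-95 on the `val` split
```

Writing one such file per dataset variant (original, threshold-segmented, Bbox-segmented) and calling `retrain` on each would mirror the three independent retraining runs described above.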

3. Results

4. Discussion

- The datasets are imbalanced: they contain many more instances of the cultivated plant (soybean) than of weeds. As a result, weed classification quality is considerably lower than soybean detection quality.

- All experiments were performed only at the first stage of soybean growth, when plants are easy to identify and overlap little. Expanding the dataset with field images from later growth stages would increase the practical applicability of the dataset and of the corresponding detection method.

- The fields were photographed from a low altitude (4–10 m), which improves image quality but substantially increases the flight time required to survey a field.
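Low altitude lengthens flights because the ground swath covered per pass grows linearly with altitude, so the number of passes over a field, and hence the path length, grows roughly as 1/altitude. The sketch below is an illustrative estimate, not from the paper. It assumes a nadir pinhole camera whose horizontal FOV is derived from the 83° diagonal FOV on a 4:3 frame, a back-and-forth (lawnmower) flight pattern, a hypothetical 30% side overlap, and constant ground speed, and it ignores turns and hovering for photos.

```python
import math

# Assumption: tan(HFOV/2) for an 83° diagonal FOV on a 4:3 frame (pinhole model).
TAN_HALF_HFOV = 0.8 * math.tan(math.radians(83.0 / 2))


def survey_time_min(field_w_m: float, field_l_m: float, altitude_m: float,
                    speed_m_s: float, side_overlap: float = 0.3) -> float:
    """Rough flight time (min) to cover a rectangular field with parallel passes.

    Passes run along the field length at constant ground speed; turns, acceleration,
    take-off, landing and hovering for photos are ignored.
    """
    swath = 2 * altitude_m * TAN_HALF_HFOV * (1 - side_overlap)  # new ground per pass
    passes = math.ceil(field_w_m / swath)
    path_m = passes * field_l_m + (passes - 1) * swath  # passes plus moves between them
    return path_m / speed_m_s / 60


if __name__ == "__main__":
    # Hypothetical 100 m x 100 m (1 ha) field flown at 3 m/s.
    for h in (4, 10):
        print(f"{h:>2} m: {survey_time_min(100, 100, h, 3.0):.1f} min")
```

For the hypothetical 1 ha field at 3 m/s, this gives about 15 min at 4 m versus about 6.7 min at 10 m; for comparison, Appendix B lists a maximum flight time of 31 min.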

5. Conclusions

- Improve the class balance of the datasets by adding more images of weeds.

- Analyze plant detection at later phases of soybean development.

- Analyze the dependence of detection quality on the UAV flight altitude and shooting conditions.

- Expand the set of annotated images by surveying fields in different regions of the country.

- Apply the described approach to the detection of other cultivated plants growing in Kazakhstan.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Abbreviated List of the Main Phases of Soybean Development

| No. | Growth Stage | Time After Sowing |
|---|---|---|
| 1 | Seedlings | End of week 1 to beginning of week 2 |
| 2 | True trifoliate leaf (trefoil) | 3–4 weeks |
| 3 | 2–5 trefoils | 5–10 weeks |
| 4 | Branching | 11 weeks |
| 5 | Flowering | 12–16 weeks |
| 6 | Pod formation | 13–14 to 17–18 weeks |
| 7 | Seed filling | 17–20 weeks |
| 8 | Ripening | 21–23 weeks |

Appendix B. DJI Mavic Mini 2 Drone Specifications

| Parameter | Value |
|---|---|
| Takeoff weight | <249 g |
| Diagonal size (without propellers) | 213 mm |
| Maximum ascent speed | 5 m/s (S mode); 3 m/s (N mode); 2 m/s (C mode) |
| Maximum descent speed | 3.5 m/s (S mode); 3 m/s (N mode); 1.5 m/s (C mode) |
| Maximum flight speed | 16 m/s (S mode); 10 m/s (N mode); 6 m/s (C mode) |
| Maximum tilt angle | 40° (S mode); 25° (N mode); 25° (C mode) (up to 40° in strong wind) |
| Maximum angular velocity | 130°/s (S mode); 60°/s (N mode); 30°/s (C mode) (can be set to 250°/s in the DJI Fly app) |
| Maximum flight altitude | 2000–4000 m |
| Maximum wind speed resistance | 8.5–10.5 m/s (up to Beaufort 5) |
| Maximum flight time | 31 min (at 4.7 m/s in calm weather) |
| Operating temperature range | 0 to 40 °C |
| Positioning accuracy, vertical | ±0.1 m (visual positioning), ±0.5 m (satellite positioning) |
| Positioning accuracy, horizontal | ±0.3 m (visual positioning), ±1.5 m (satellite positioning) |
| Operating frequency | 2.4–2.4835 GHz |

| Parameter | Value |
|---|---|
| Effective number of pixels | 12 MP |
| Sensor type | CMOS, 1/2.3″ size |
| Lens | Field of view: 83°, equivalent to 24 mm (35 mm format); aperture: f/2.8; focus: 1 m to infinity |
| Shutter speed | Electronic shutter, 4–1/8000 s |
| ISO | Video: 100–3200 (auto), 100–3200 (manual); photo: 100–3200 (auto), 100–3200 (manual) |
| Image size | 4:3: 4000 × 3000; 16:9: 4000 × 2250 |
| Supported file systems | FAT32 (up to 32 GB); exFAT (over 32 GB) |
| Maximum video bitrate | 100 Mbps |
| Supported file formats | Photo: JPEG/DNG (RAW); video: MP4 (H.264/MPEG-4 AVC) |
| Supported memory cards | UHS-I Speed Class 3 or higher |
| Video resolution | 4K: 3840 × 2160 at 24/25/30 fps; 2.7K: 2720 × 1530 at 24/25/30/48/50/60 fps; FHD: 1920 × 1080 at 24/25/30/48/50/60 fps |

Appendix C. Image Augmentation Parameters

- Scaling: slight enlargement or reduction of the image within the range 90% to 110%. This makes the model more robust to changes in object size.

- Horizontal reflection (Fliplr): with 50% probability, the image is mirrored horizontally.

- Vertical reflection (Flipud): with 50% probability, the image is mirrored vertically.

- Rotation: random rotation of the image within the range −15° to +15°. This simulates camera tilt or imperfect shooting.

- Gaussian blur: adds blur of varying intensity (from none to moderate), making the model more robust to blurred images.

- Brightness/contrast change (Multiply): multiplies pixel values by a random factor from 0.8 to 1.2, making the model more robust to changes in lighting.
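When augmenting detection data, the bounding boxes must be transformed together with the pixels. imgaug supports this: augmenters such as `iaa.Fliplr`, `iaa.Flipud`, `iaa.Affine`, `iaa.GaussianBlur`, and `iaa.Multiply` can be applied jointly to an image and a `BoundingBoxesOnImage` object. The NumPy sketch below is not the authors' pipeline; it only illustrates the principle for the flip and brightness operations listed above, assuming boxes in the normalized YOLO format. Scaling, rotation, and blur are omitted.

```python
import numpy as np


def augment(img, boxes, rng):
    """Randomly flip an image and scale its brightness, keeping YOLO boxes consistent.

    img:   H x W x 3 uint8 array.
    boxes: N x 5 array-like of (class, cx, cy, w, h), coordinates normalised to [0, 1].
    rng:   object with random() and uniform(low, high), e.g. numpy.random.default_rng().
    """
    img = np.asarray(img)
    boxes = np.array(boxes, dtype=float).reshape(-1, 5)  # copy; caller's boxes untouched
    if rng.random() < 0.5:               # Fliplr, probability 0.5
        img = img[:, ::-1]
        boxes[:, 1] = 1.0 - boxes[:, 1]  # mirror box centres horizontally
    if rng.random() < 0.5:               # Flipud, probability 0.5
        img = img[::-1, :]
        boxes[:, 2] = 1.0 - boxes[:, 2]  # mirror box centres vertically
    factor = rng.uniform(0.8, 1.2)       # Multiply: brightness factor in [0.8, 1.2]
    img = np.clip(img.astype(np.float32) * factor, 0, 255).astype(np.uint8)
    return img, boxes                    # box widths and heights are unchanged by flips
```

A typical call is `aug_img, aug_boxes = augment(image, labels, np.random.default_rng(42))`; passing a seeded generator makes the augmented set reproducible.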

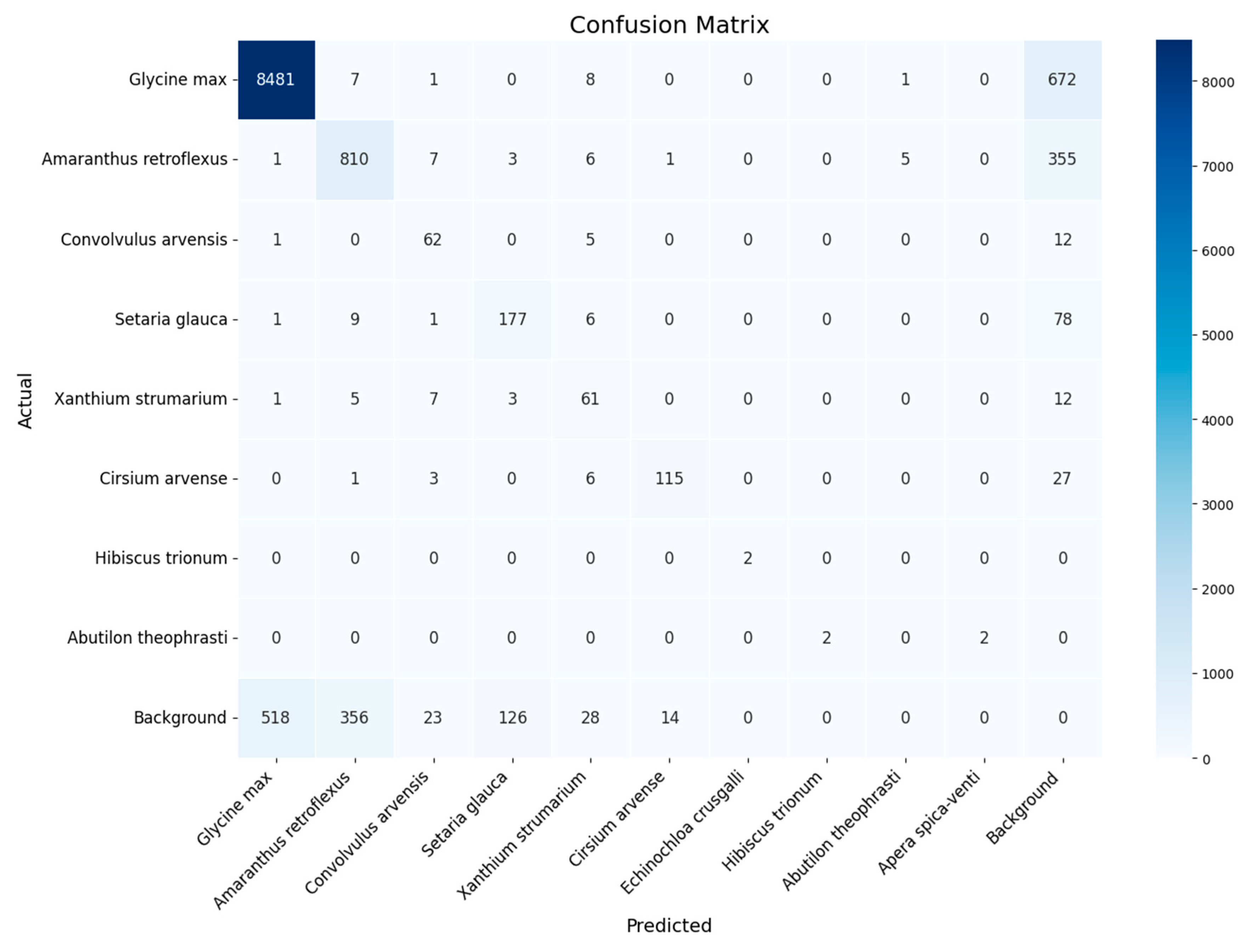

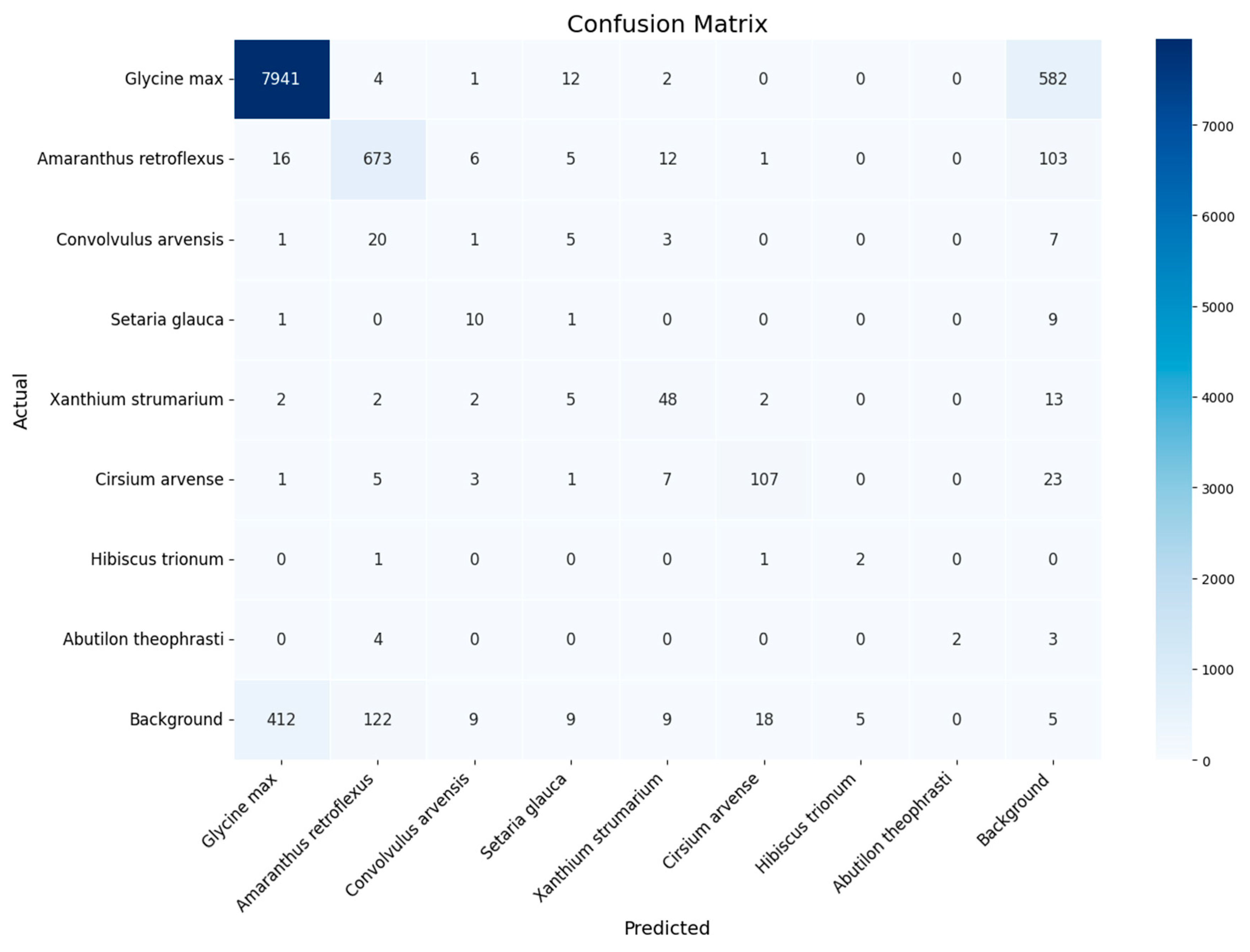

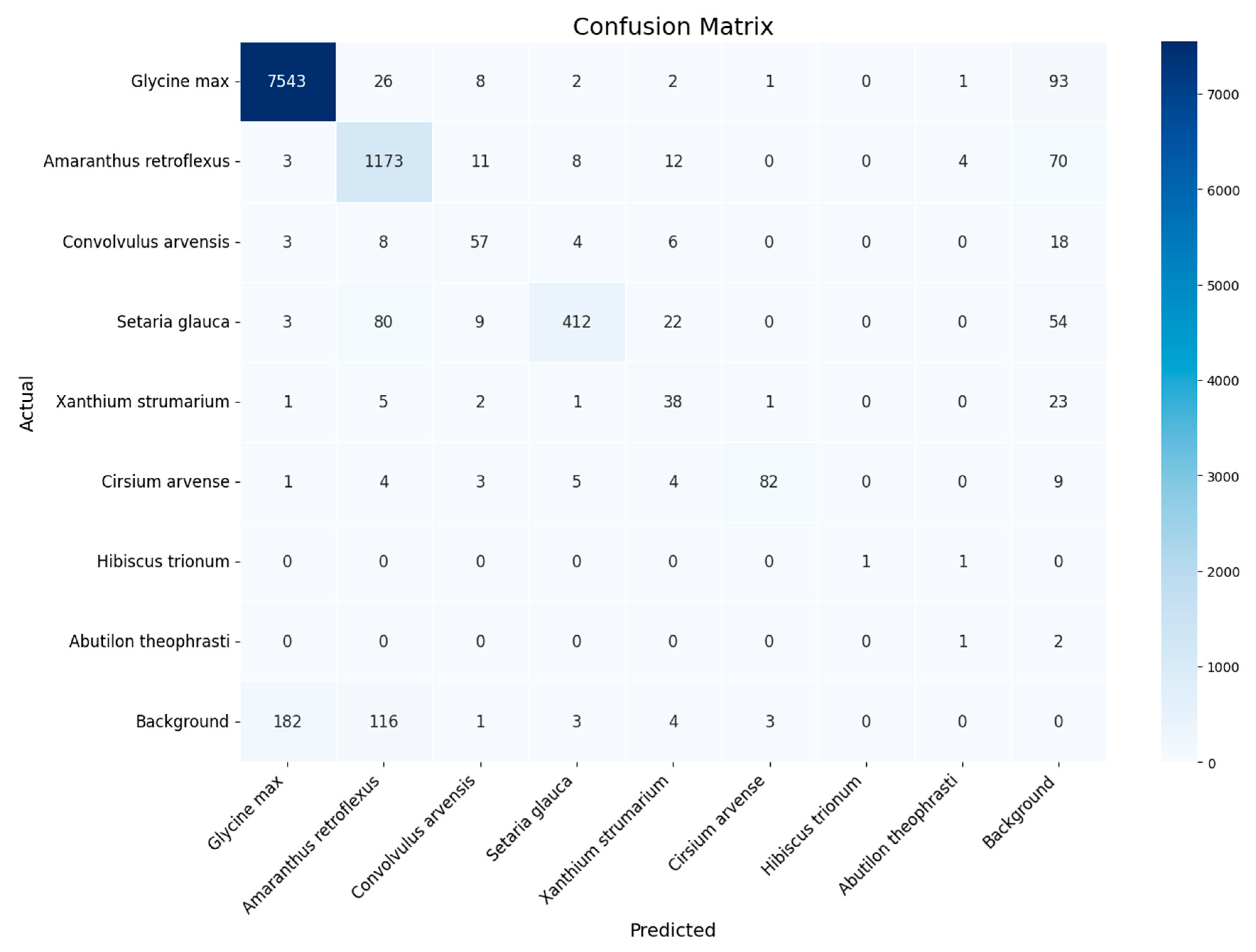

Appendix D. Classification Quality for Each Class Across All Experiments

| Class | Original Dataset (0) | Threshold Segmentation (1) | Bbox Segmentation and k-Fold Cross-Validation, k = 10 (2) |
|---|---|---|---|
| Glycine max | 0.933 | 0.939 | 0.984 |
| Amaranthus retroflexus | 0.638 | 0.817 | 0.942 |
| Convolvulus arvensis | 0.240 | 0.029 | 0.960 |
| Setaria glauca | 0.741 | 0.034 | 0.972 |
| Xanthium strumarium | 0.397 | 0.619 | 0.896 |
| Cirsium arvense | 0.777 | 0.775 | 0.964 |
| Hibiscus trionum | 0.000 | 0.364 | 0.582 |
| Abutilon theophrasti | 0.000 | 0.364 | 0.682 |
| Macro F1 | 0.341 | 0.439 | 0.873 |
| Micro F1 | 0.807 | 0.858 | 0.959 |

Appendix E. Classification Errors

| No. | Filename | F1 Score (Micro) | Field Image (Right: Expert Annotation; Left: Automatic Detection) |
|---|---|---|---|
| 1 | DJI_0497.jpg | 0.6 | |
| 2 | DJI_0489.jpg | 0.791 | |
| 3 | DJI_0502.jpg | 0.8 | |
| 4 | DJI_0214.jpg | 0.804 | |
| 5 | DJI_0316.jpg | 0.813 | |
| 6 | DJI_0367.jpg | 0.827 | |
| 7 | DJI_0490.jpg | 0.831 | |
| 8 | DJI_0182.jpg | 0.834 | |
| 9 | DJI_0370.jpg | 0.836 | |
| 10 | DJI_0232.jpg | 0.836 | |

References

- [1] Dwivedi, A. Precision Agriculture; Parmar Publishers & Distributors: Pune, India, 2017; Volume 5, pp. 83–105.

- [2] Kashyap, B.; Kumar, R. Sensing Methodologies in Agriculture for Soil Moisture and Nutrient Monitoring. IEEE Access 2021, 9, 14095–14121.

- [3] Mukhamediev, R.I.; Symagulov, A.; Kuchin, Y.; Zaitseva, E.; Bekbotayeva, A.; Yakunin, K.; Assanov, I.; Levashenko, V.; Popova, Y.; Akzhalova, A.; et al. Review of Some Applications of Unmanned Aerial Vehicles Technology in the Resource-Rich Country. Appl. Sci. 2021, 11, 10171.

- [4] Albrekht, V.; Mukhamediev, R.I.; Popova, Y.; Muhamedijeva, E.; Botaibekov, A. Top2Vec Topic Modeling to Analyze the Dynamics of Publication Activity Related to Environmental Monitoring Using Unmanned Aerial Vehicles. Publications 2025, 13, 15.

- [5] Oxenenko, A.; Yerimbetova, A.; Kuanaev, A.; Mukhamediyev, R.; Kuchin, Y. Technical means of remote monitoring using unmanned aerial platforms. Phys. Math. Ser. 2024, 3, 152–173. (In Russian)

- [6] Masi, M.; Di Pasquale, J.; Vecchio, Y.; Capitanio, F. Precision Farming: Barriers of Variable Rate Technology Adoption in Italy. Land 2023, 12, 1084.

- [7] Ferreira, A.; Freitas, D.; Silva, G.; Pistori, H.; Folhes, M. Weed Detection in Soybean Crops Using ConvNets. Comput. Electron. Agric. 2017, 143, 314–324.

- [8] Peteinatos, G.; Reichel, P.; Karouta, J.; Andújar, D.; Gerhards, R. Weed Identification in Maize, Sunflower, and Potatoes with the Aid of Convolutional Neural Networks. Remote Sens. 2020, 12, 4185.

- [9] Asad, M.; Bais, A. Weed Detection in Canola Fields Using Maximum Likelihood Classification and Deep Convolutional Neural Network. Inf. Process. Agric. 2020, 7, 535–545.

- [10] Quan, L.; Feng, H.; Lv, Y.; Wang, Q.; Zhang, C.; Liu, J.; Yuan, Z. Maize Seedling Detection under Different Growth Stages and Complex Field Environments Based on an Improved Faster R–CNN. Biosyst. Eng. 2019, 184, 1–23.

- [11] Suh, H.; IJsselmuiden, J.; Hofstee, J.; van Henten, E. Transfer Learning for the Classification of Sugar Beet and Volunteer Potato under Field Conditions. Biosyst. Eng. 2018, 174, 50–65.

- [12] Chechliński, Ł.; Siemiątkowska, B.; Majewski, M. A System for Weeds and Crops Identification—Reaching over 10 FPS on Raspberry Pi with the Usage of MobileNets, DenseNet and Custom Modifications. Sensors 2019, 19, 3787.

- [13] Umar, M.; Altaf, S.; Ahmad, S.; Mahmoud, H.; Mohamed, A.S.N.; Ayub, R. Precision Agriculture Through Deep Learning: Tomato Plant Multiple Diseases Recognition with CNN and Improved YOLOv7. IEEE Access 2024, 12, 49167–49183.

- [14] Osman, Y.; Dennis, R.; Elgazzar, K. Yield Estimation and Visualization Solution for Precision Agriculture. Sensors 2021, 21, 6657.

- [15] Symagulov, A.; Kuchin, Y.; Yakunin, K.; Murzakhmetov, S.; Yelis, M.; Oxenenko, A.; Assanov, I.; Bastaubayeva, S.; Tabynbaeva, L.; Rabčan, J.; et al. Recognition of Soybean Crops and Weeds with YOLO v4 and UAV. In Proceedings of the International Conference on Internet and Modern Society, St. Petersburg, Russia, 23–25 June 2022; Springer Nature: Cham, Switzerland, 2022; pp. 3–14.

- [16] Dang, F.; Chen, D.; Lu, Y.; Li, Z. YOLOWeeds: A Novel Benchmark of YOLO Object Detectors for Multi-Class Weed Detection in Cotton Production Systems. Comput. Electron. Agric. 2023, 205, 107655.

- [17] Sunil, G.C.; Upadhyay, A.; Zhang, Y.; Howatt, K.; Peters, T.; Ostlie, M.; Aderholdt, W.; Sun, X. Field-Based Multispecies Weed and Crop Detection Using Ground Robots and Advanced YOLO Models: A Data and Model-Centric Approach. Smart Agric. Technol. 2024, 9, 100538.

- [18] Kavitha, S.; Gangambika, G.; Padmini, K.; Supriya, H.S.; Rallapalli, S.; Sowmya, K. Automatic Weed Detection Using CCOA Based YOLO Network in Soybean Field. In Proceedings of the 2024 Second International Conference on Data Science and Information System (ICDSIS), Hassan, India, 17–18 May 2024; IEEE: New York, NY, USA, 2024; pp. 1–8.

- [19] Tetila, E.C.; Moro, B.L.; Astolfi, G.; da Costa, A.B.; Amorim, W.P.; de Souza Belete, N.A.; Pistori, H.; Barbedo, J.G.A. Real-Time Detection of Weeds by Species in Soybean Using UAV Images. Crop Prot. 2024, 184, 106846.

- [20] Li, J.; Zhang, W.; Zhou, H.; Yu, C.; Li, Q. Weed Detection in Soybean Fields Using Improved YOLOv7 and Evaluating Herbicide Reduction Efficacy. Front. Plant Sci. 2024, 14, 1284338.

- [21] YOLOv8 Label Format: A Step-by-Step Guide. Available online: https://yolov8.org/yolov8-label-format/ (accessed on 9 April 2025).

- [22] CVAT. Available online: https://www.cvat.ai/ (accessed on 9 April 2025).

- [23] PlantCV: Plant Computer Vision. Available online: https://plantcv.org/ (accessed on 9 April 2025).

- [24] Explanation of All of YOLO Series Part 11. Available online: https://zenn.dev/yuto_mo/articles/14a87a0db17dfa (accessed on 9 April 2025).

- [25] COCO Dataset. Available online: https://docs.ultralytics.com/ru/datasets/detect/coco/ (accessed on 9 April 2025).

- [26] coco.yaml File. Available online: https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco.yaml (accessed on 9 April 2025).

- [27] imgaug Documentation. Available online: https://imgaug.readthedocs.io/en/latest/ (accessed on 9 April 2025).

- [28] Mukhamediyev, R.; Amirgaliyev, E. Introduction to Machine Learning; Litres: Almaty, Kazakhstan, 2022; ISBN 978-601-08-1177-5. (In Russian)

- [29] YOLO Performance Metrics. Available online: https://docs.ultralytics.com/ru/guides/yolo-performance-metrics/#object-detection-metrics (accessed on 9 April 2025).

- [30] Bouguettaya, A.; Zarzour, H.; Kechida, A.; Taberkit, A.M. A survey on deep learning-based identification of plant and crop diseases from UAV-based aerial images. Clust. Comput. 2023, 26, 1297–1317.

- [31] Zhang, H.; Wang, B.; Tang, Z.; Xue, J.; Chen, R.; Kan, H.; Lu, S.; Feng, L.; He, Y.; Yi, S. A rapid field crop data collection method for complexity cropping patterns using UAV and YOLOv3. Front. Earth Sci. 2024, 18, 242–255.

- [32] Nnadozie, E.C.; Casaseca-de-la-Higuera, P.; Iloanusi, O.; Ani, O.; Alberola-López, C. Simplifying YOLOv5 for deployment in a real crop monitoring setting. Multimed. Tools Appl. 2024, 83, 50197–50223.

- [33] Sonawane, S.; Patil, N.N. Performance Evaluation of Modified YOLOv5 Object Detectors for Crop-Weed Classification and Detection in Agriculture Images. SN Comput. Sci. 2025, 6, 126.

- [34] Pikun, W.; Ling, W.; Jiangxin, Q.; Jiashuai, D. Unmanned aerial vehicles object detection based on image haze removal under sea fog conditions. IET Image Process. 2022, 16, 2709–2721.

- [35] Liu, Y.; Wang, X.; Hu, E.; Wang, A.; Shiri, B.; Lin, W. VNDHR: Variational single nighttime image Dehazing for enhancing visibility in intelligent transportation systems via hybrid regularization. IEEE Trans. Intell. Transp. Syst. 2025, 26, 10189–10203.

- [36] Consumer Drones Comparison. Available online: https://www.dji.com/products/comparison-consumer-drones?from=store-product-page-comparison (accessed on 9 April 2025).

- [37] Support for DJI Mini 2. Available online: https://www.dji.com/global/support/product/mini-2 (accessed on 9 April 2025).

| Metric | Formula | Explanation |
|---|---|---|
| Classification indicators [28] | | |
| Precision | $P = \frac{TP}{TP + FP}$, where true positives (TP) and true negatives (TN) are cases in which the classifier is correct, and false negatives (FN) and false positives (FP) are misclassifications | Proportion of true positive predictions among all predicted positive cases |
| Recall | $R = \frac{TP}{TP + FN}$ | Proportion of true positive predictions among all actual positive cases |
| F1 score | $F1 = \frac{2PR}{P + R}$ | Harmonic mean of precision and recall |
| Localization indicators | | |
| IoU | $IoU = \frac{\lvert B_{p} \cap B_{gt} \rvert}{\lvert B_{p} \cup B_{gt} \rvert}$, where $B_{p}$ is the predicted and $B_{gt}$ the ground-truth bounding box | Measures the overlap between the predicted bounding box (usually a rectangle, Bbox) and the true bounding box; it plays an important role in assessing the accuracy of object localization [29] |
| AP | $AP = \int_{0}^{1} P(R)\,dR$, where $P(R)$ is precision as a function of recall | Average precision for one class, calculated as the area under the precision–recall curve |
| mAP50 | $mAP_{50} = \frac{1}{N}\sum_{i=1}^{N} AP_{i}^{50}$, where $N$ is the total number of classes and $AP_{i}^{50}$ is the average precision for class $i$ at IoU = 0.5 | Mean AP across all classes, used to estimate the overall performance of the model |
| mAP50–95 | $mAP_{50\text{–}95} = \frac{1}{N}\sum_{i=1}^{N} \frac{1}{10}\sum_{j=0}^{9} AP_{i}^{IoU = 0.5 + 0.05j}$, where $N$ is the total number of classes and $j$ indexes the IoU thresholds 0.5, 0.55, 0.6, …, 0.95 | Mean AP averaged over IoU thresholds from 0.5 to 0.95 in steps of 0.05 |
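The definitions in the metrics table can be sketched in plain Python. The helper names are hypothetical; boxes are assumed to be in pixel corner coordinates, and TP/FP/FN are assumed to have already been counted by matching predictions to ground truth at a chosen IoU threshold (e.g., 0.5 for mAP50). The AP function uses a monotone precision envelope; library implementations, including Ultralytics, may interpolate the curve differently, so values can differ slightly. The micro/macro distinction matters for this dataset: micro-F1 pools counts over all instances and is dominated by the abundant soybean class, whereas macro-F1 weights every class equally, so rare weed classes pull it down (compare the Macro F1 and Micro F1 rows in Appendix D).

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def prf(tp, fp, fn):
    """Precision, recall and F1 from detection counts (0.0 where undefined)."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


def micro_macro_f1(per_class):
    """per_class maps class name -> (tp, fp, fn).

    Micro-F1 pools the counts of all classes; macro-F1 averages per-class F1 values.
    """
    tp = sum(c[0] for c in per_class.values())
    fp = sum(c[1] for c in per_class.values())
    fn = sum(c[2] for c in per_class.values())
    micro = prf(tp, fp, fn)[2]
    macro = sum(prf(*c)[2] for c in per_class.values()) / len(per_class)
    return micro, macro


def average_precision(recall, precision):
    """Area under the precision-recall curve using the precision envelope.

    `recall` is ascending and `precision` holds the matching values, obtained by ranking
    one class's detections by confidence. mAP50 is the mean of this value over classes
    at IoU 0.5; mAP50-95 also averages over IoU thresholds 0.50, 0.55, ..., 0.95.
    """
    r = [0.0] + list(recall) + [1.0]
    p = [1.0] + list(precision) + [0.0]
    for i in range(len(p) - 2, -1, -1):  # make precision non-increasing in recall
        p[i] = max(p[i], p[i + 1])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))
```

With hypothetical counts, a frequent class (90 TP, 5 FP, 5 FN) and a rare class (1 TP, 2 FP, 4 FN) give a micro-F1 of about 0.92 but a macro-F1 of only about 0.60.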

| Class ID: Name | Common Name | Original Dataset (0) | Threshold Segmentation (1) | Bbox Segmentation (2) |
|---|---|---|---|---|
| 0: Bed | Bed | 2458 | 0 | 0 |
| 1: Glycine max | Soybean | 42,282 | 41,368 | 42,282 |
| 2: Amaranthus retroflexus | Redroot pigweed | 6155 | 3840 | 6155 |
| 3: Convolvulus arvensis | Field bindweed | 385 | 304 | 385 |
| 4: Setaria glauca | Yellow foxtail | 1897 | 149 | 1897 |
| 5: Xanthium strumarium | Common cocklebur | 513 | 439 | 513 |
| 6: Cirsium arvense | Creeping thistle | 532 | 516 | 532 |
| 7: Echinochloa crusgalli | Barnyard grass | 0 | 0 | 0 |
| 8: Hibiscus trionum | Flower-of-an-hour | 15 | 15 | 15 |
| 9: Abutilon theophrasti | Velvetleaf | 27 | 27 | 27 |
| 10: Chenopodium album | Lamb's quarters (white goosefoot) | 0 | 0 | 0 |
| 11: Apera spica-venti | Loose silky-bent (common windgrass) | 0 | 0 | 0 |
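The class imbalance discussed in Section 4 can be quantified from the per-class counts in the table above (original dataset, column 0), which presumably count labeled objects. The sketch below copies those counts and omits classes with zero objects; the function names are hypothetical. It shows that about 78% of labeled objects are soybean and about 18% are weeds (the remainder are beds), and that the most frequent class outnumbers the rarest roughly 2800 to 1.

```python
# Per-class counts for the original dataset, copied from column 0 of the class table
# (classes with zero objects are omitted).
COUNTS = {
    "Bed": 2458,
    "Glycine max": 42282,
    "Amaranthus retroflexus": 6155,
    "Convolvulus arvensis": 385,
    "Setaria glauca": 1897,
    "Xanthium strumarium": 513,
    "Cirsium arvense": 532,
    "Hibiscus trionum": 15,
    "Abutilon theophrasti": 27,
}


def class_shares(counts):
    """Fraction of all labelled objects that belongs to each class."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


def imbalance_ratio(counts):
    """Ratio between the most and the least frequent non-empty class."""
    nonzero = [n for n in counts.values() if n > 0]
    return max(nonzero) / min(nonzero)


if __name__ == "__main__":
    shares = class_shares(COUNTS)
    print(f"Glycine max share: {shares['Glycine max']:.1%}")   # 77.9%
    print(f"imbalance ratio: {imbalance_ratio(COUNTS):.0f}:1")  # 2819:1
```

The two rarest classes (Hibiscus trionum and Abutilon theophrasti, with 15 and 27 objects) are also the ones with the lowest per-class F1 in Appendix D.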

| Training Dataset | mAP50 | mAP50–95 | Recall | Precision | F1 Score (Micro) | F1 Score (Macro) | F1 Score (Glycine max) |
|---|---|---|---|---|---|---|---|
| Original dataset | 0.72 | 0.3 | 0.599 | 0.677 | 0.6356 | 0.341 | 0.933 |
| Experiment 1 (threshold segmentation) | 0.65 | 0.348 | 0.639 | 0.749 | 0.6896 | 0.439 | 0.939 |
| Experiment 2 (Bbox segmentation and k-fold cross-validation, k = 10) | 0.979 | 0.936 | 0.941 | 0.963 | 0.959 | 0.873 | 0.984 |
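Experiment 2 is reported with 10-fold cross-validation, but how the folds were formed is not described here. The sketch below shows a generic, reproducible k-fold index split; applying it to the 300 training images is an assumption. In such a scheme, each fold's training part would be used to retrain the model and its held-out part to evaluate it, with the k scores then averaged.

```python
import random


def k_fold_indices(n_items: int, k: int = 10, seed: int = 0):
    """Yield (train_idx, val_idx) for each of k folds; every item is validated exactly once."""
    idx = list(range(n_items))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]  # k nearly equal, disjoint folds
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


if __name__ == "__main__":
    for fold, (train, val) in enumerate(k_fold_indices(300)):  # 300 training images
        print(f"fold {fold}: {len(train)} train / {len(val)} validation")
```

With 300 images and k = 10, every fold holds out 30 images and trains on the remaining 270.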

| Opacity Level | F1 Score (Micro) |
|---|---|
| 0 | 0.959 |
| 0.1 | 0.85 |
| 0.2 | 0.852 |
| 0.3 | 0.853 |
| 0.4 | 0.828 |
| 0.5 | 0.841 |
| 0.6 | 0.805 |
| 0.7 | 0.78 |
| 0.8 | 0.635 |
| 0.9 | 0.272 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mukhamediev, R.I.; Smurygin, V.; Symagulov, A.; Kuchin, Y.; Popova, Y.; Abdoldina, F.; Tabynbayeva, L.; Gopejenko, V.; Oxenenko, A. Fast Detection of Plants in Soybean Fields Using UAVs, YOLOv8x Framework, and Image Segmentation. Drones 2025, 9, 547. https://doi.org/10.3390/drones9080547
